3

Standards for Finding and Assessing Individual Studies

Abstract: This chapter addresses the identification, screening, data collection, and appraisal of the individual studies that make up a systematic review’s (SR’s) body of evidence. The committee recommends six related standards. The search should be comprehensive and include both published and unpublished research. The potential for bias to enter the selection process is significant and well documented. Without appropriate measures to counter the biased reporting of primary evidence from clinical trials and observational studies, SRs will reflect and possibly exacerbate existing distortions in the biomedical literature. The review team should document the search process and keep track of the decisions that are made for each article. Quality assurance and control are critical during data collection and extraction because of the substantial potential for errors. At least two review team members, working independently, should screen and select studies and extract quantitative and other critical data from included studies. Each eligible study should be systematically appraised for risk of bias; relevance to the study’s populations, interventions, and outcomes measures; and fidelity of the implementation of the interventions.

The search for evidence and critical assessment of the individual studies identified are the core of a systematic review (SR).

These SR steps require meticulous execution and documentation to minimize the risk of a biased synthesis of evidence. Current practice falls short of recommended guidance and thus results in a meaningful proportion of reviews that are of poor quality (Golder et al., 2008; Moher et al., 2007a; Yoshii et al., 2009). An extensive literature documents that many SRs provide scant, if any, documentation of their search and screening methods. SRs often fail to acknowledge or address the risk of reporting biases, neglect to appraise the quality of individual studies included in the review, and are subject to errors during data extraction and the meta-analysis (Cooper et al., 2006; Delaney et al., 2007; Edwards et al., 2002; Golder et al., 2008; Gøtzsche et al., 2007; Horton et al., 2010; Jones et al., 2005; Lundh et al., 2009; Moher et al., 2007a; Roundtree et al., 2008; Tramer et al., 1997). The conduct of the search for and selection of evidence may have serious implications for patients’ and clinicians’ decisions. An SR might lead to the wrong conclusions and, ultimately, the wrong clinical recommendations, if relevant data are missed, errors are uncorrected, or unreliable research is used (Dickersin, 1990; Dwan et al., 2008; Glanville et al., 2006; Gluud, 2006; Kirkham et al., 2010; Turner et al., 2008).

In this chapter, the committee recommends methodological standards for the steps involved in identifying and assessing the individual studies that make up an SR’s body of evidence: planning and conducting the search for studies, screening and selecting studies, managing data collection from eligible studies, and assessing the quality of individual studies. The committee focused on steps to minimize bias and to promote scientifically rigorous SRs based on evidence (when available), expert guidance, and thoughtful reasoning. The recommended standards set a high bar that will be challenging for many SR teams. However, the available evidence does not suggest that it is safe to cut corners if resources are limited. These best practices should be thoughtfully considered by anyone conducting an SR. It is especially important that the SR is transparent in reporting what methods were used and why.

Each standard consists of two parts: first, a brief statement describing the related SR step and, second, one or more elements of performance that are fundamental to carrying out the step. Box 3-1 lists all of the chapter’s recommended standards.

Note that, as throughout this report, the chapter’s references to “expert guidance” refer to the published methodological advice of the Agency for Healthcare Research and Quality (AHRQ) Effective Health Care Program, the Centre for Reviews and Dissemination (CRD) (University of York), and the Cochrane Collaboration.

Appendix E contains a detailed summary of expert guidance on this chapter’s topics.

THE SEARCH PROCESS

When healthcare decision makers turn to SRs to learn the potential benefits and harms of alternative health care therapies, it is with the expectation that the SR will provide a complete picture of all that is known about an intervention. Research is relevant to individual decision making, whether it reveals benefits, harms, or lack of effectiveness of a health intervention. Thus, the overarching objective of the SR search for evidence is to identify all the studies (and all the relevant data from the studies) that may pertain to the research question and analytic framework. The task is a challenging one. Hundreds of thousands of research articles are indexed in bibliographic databases each year. Yet despite the enormous volume of published research, a substantial proportion of effectiveness data are never published or are not easy to access. For example, approximately 50 percent of studies appearing as conference abstracts are never fully published (Scherer et al., 2007), and some studies are not even reported as conference abstracts. Even when there are published reports of effectiveness studies, the studies often report only a subset of the relevant data. Furthermore, it is well documented that the data reported may not represent all the findings on an intervention’s effectiveness because of pervasive reporting bias in the biomedical literature. Moreover, crucial information from the studies is often difficult to locate because it is kept in researchers’ files, government agency records, or manufacturers’ proprietary records.

The following overview further describes the context for the SR search process: the nature of the reporting bias in the biomedical literature; key sources of information on comparative effectiveness; and expert guidance on how to plan and conduct the search. The committee’s related standards are presented at the end of the section.

Planning the Search

The search strategy should be an integral component of the research protocol that specifies procedures for finding the evidence directly relevant to the SR (see Chapter 2 for the committee’s recommended standards for establishing the research protocol). Items described in the protocol include, but are not limited to, the study question; the criteria for a study’s inclusion in the review (including language and year of report, publication status, and study design restrictions, if any); the databases, journals, and other sources to be searched for evidence; and the search strategy (e.g., sequence of database thesaurus terms, text words, methods of handsearching).

Standard 3.4 Document the search

Standard 3.5 Manage data collection

Standard 3.6 Critically appraise each study

Expertise in Searching

A librarian or other qualified information specialist with training or experience in conducting SRs should work with the SR team to design the search strategy to ensure appropriate translation of the research question into search concepts, correct choice of Boolean operators and line numbers, appropriate translation of the search strategy for each database, relevant subject headings, and appropriate application and spelling of terms (Sampson and McGowan, 2006). The Cochrane Collaboration includes an Information Retrieval Methods Group that provides a valuable resource for information specialists seeking a professional group with learning opportunities (for more information on the group, go to http://irmg.cochrane.org/).

Expert guidance recommends that an experienced librarian or information specialist with training in SR search methods should also be involved in performing the search (CRD, 2009; Lefebvre et al., 2008; McGowan and Sampson, 2005; Relevo and Balshem, 2011). Navigating through the various sources of research data and publications is a complex task that requires experience with a wide range of bibliographic databases and electronic information sources, and substantial resources (CRD, 2009; Lefebvre et al., 2008; Relevo and Balshem, 2011).

Ensuring an Accurate Search

An analysis of SRs published in the Cochrane Database of Systematic Reviews found that 90.5 percent of the MEDLINE searches contained at least one search error (Sampson and McGowan, 2006). Errors included spelling errors, the omission of spelling variants and truncations, the use of incorrect Boolean operators and line numbers, inadequate translation of the search strategy for different databases, misuse of MeSH and free-text terms, unwarranted explosion of MeSH terms, and redundancy in search terms. Common sense suggests that these errors affect the accuracy and overall quality of SRs. AHRQ and CRD SR experts recommend peer review of the electronic search strategy to identify and prevent these errors from occurring (CRD, 2009; Relevo and Balshem, 2011). The peer reviewer should be independent from the review team in order to provide an unbiased and scientifically rigorous review, and should have expertise in information retrieval and SRs. In addition, the peer review process should take place prior to the search process, rather than in conjunction with the peer review of the final report, because the search process will provide the data that are synthesized and analyzed in the SR.

Sampson and colleagues (2009) recently surveyed individuals experienced in SR searching and identified aspects of the search process that experts agree are likely to have a large impact on the sensitivity and precision of a search: accurate translation of each research question into search concepts; correct choice of Boolean and proximity operators; absence of spelling errors; correct line numbers and combination of line numbers; accurate adaptation of the search strategy for each database; and inclusion of relevant subject headings. Then they developed practice guidelines for peer review of electronic search strategies. For example, to identify spelling errors in the search they recommended that long strings of terms be broken into discrete search statements in order to make null or misspelled terms more obvious and easier to detect. They also recommended cutting and pasting the search into a spell checker. As these guidelines and others are implemented, future research needs to be conducted to validate that peer review does improve the search quality.
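The recommendation to break long strings of terms into discrete search statements can be illustrated with a minimal Python sketch. The function names, the sample query, and the reference vocabulary below are hypothetical and are not drawn from the Sampson guidelines; the vocabulary lookup merely stands in for the spell checker mentioned above.

```python
import re


def split_search_string(query: str) -> list[str]:
    """Split an OR-joined string of terms into one term per statement."""
    parts = re.split(r"\s+OR\s+", query.strip(), flags=re.IGNORECASE)
    # Drop the enclosing parentheses left on the first and last terms.
    return [p.strip().strip("()").strip() for p in parts if p.strip()]


def number_statements(terms: list[str]) -> list[str]:
    """Give each term its own numbered line, as in a line-by-line search history."""
    return [f"{i}. {term}" for i, term in enumerate(terms, start=1)]


def flag_unknown_terms(terms: list[str], vocabulary: list[str]) -> list[str]:
    """Return terms absent from a reference vocabulary (possible misspellings)."""
    known = {word.lower() for word in vocabulary}
    return [term for term in terms if term.lower() not in known]


# Hypothetical search string containing one misspelled term.
query = "(reboxetine OR fluoxetine OR antidepresant OR placebo)"
# Hypothetical reference vocabulary standing in for a spell checker.
vocabulary = ["reboxetine", "fluoxetine", "antidepressant", "placebo"]

terms = split_search_string(query)
for line in number_statements(terms):
    print(line)
print("Check spelling of:", flag_unknown_terms(terms, vocabulary))
```

Listing one term per numbered statement makes a term that retrieves nothing, or that is misspelled, stand out on its own line rather than being hidden inside a long string.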

Reporting Bias

Reporting biases (Song et al., 2010), particularly publication bias (Dickersin, 1990; Hopewell et al., 2009a) and selective reporting of trial outcomes and analyses (Chan et al., 2004a, 2004b; Dwan et al., 2008; Gluud, 2006; Hopewell et al., 2008; Turner et al., 2008; Vedula et al., 2009), present the greatest obstacle to obtaining a complete collection of relevant information on the effectiveness of healthcare interventions. Reporting biases have been identified across many health fields and interventions, including treatment, prevention, and diagnosis. For example, McGauran and colleagues (2010) identified instances of reporting bias spanning 40 indications and 50 different pharmacological, surgical, diagnostic, and preventive interventions, as well as selective reporting of study data and efforts by manufacturers to suppress publication. Furthermore, the potential for reporting bias exists across the entire research continuum: from before completion of the study (e.g., investigators’ decisions to register a trial or to report only a selection of trial outcomes), to reporting in conference abstracts, selection of a journal for submission, and submission of the manuscript to a journal or other resource, to editorial review and acceptance.

The following describes the various ways in which reporting of research findings may be biased. Table 3-1 provides definitions of the types of reporting biases.

TABLE 3-1 Types of Reporting Biases

| Type of Reporting Bias | Definition |
|---|---|
| Publication bias | The publication or nonpublication of research findings, depending on the nature and direction of the results |
| Selective outcome reporting bias | The selective reporting of some outcomes but not others, depending on the nature and direction of the results |
| Time-lag bias | The rapid or delayed publication of research findings, depending on the nature and direction of the results |
| Location bias | The publication of research findings in journals with different ease of access or levels of indexing in standard databases, depending on the nature and direction of the results |
| Language bias | The publication of research findings in a particular language, depending on the nature and direction of the results |
| Multiple (duplicate) publications | The multiple or singular publication of research findings, depending on the nature and direction of the results |
| Citation bias | The citation or noncitation of research findings, depending on the nature and direction of the results |

SOURCE: Sterne et al. (2008).

Publication Bias

The term publication bias refers to the likelihood that publication of research findings depends on the nature and direction of a study’s results. More than two decades of research have shown that positive findings are more likely to be published than null or negative results. At least four SRs have assessed the association between study results and publication of findings (Song et al., 2009). These investigations plus additional individual studies indicate a strong association between statistically significant or positive results and likelihood of publication (Dickersin and Chalmers, 2010).

Investigators (not journal editors) are believed to be the major reason for failure to publish research findings (Dickersin and Min, 1993; Dickersin et al., 1992). Studies examining the influence of editors on acceptance of submitted manuscripts have not found an association between results and publication (Dickersin et al., 2007; Lynch et al., 2007; Okike et al., 2008; Olson et al., 2002).

Selective Outcome Reporting Bias

To avert problems introduced by post hoc selection of study outcomes, a randomized controlled trial’s (RCT’s) primary outcome should be stated in the research protocol a priori, before the study begins (Kirkham et al., 2010). Statistical testing of the effect of an intervention on multiple possible outcomes in a study can lead to a greater probability of statistically significant results obtained by chance. When primary or other outcomes of a study are selected and reported post hoc (i.e., after statistical testing), the reader should be aware that the published results for the “primary outcome” may be only a subset of relevant findings, and may be selectively reported because they are statistically significant.
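The effect of testing multiple outcomes can be made concrete with a short calculation. The sketch below assumes that the outcomes are statistically independent and that each is tested at the same significance level; both are simplifying assumptions made here for illustration and are not stated in the text, and the function name is hypothetical.

```python
def chance_of_false_positive(num_outcomes: int, alpha: float = 0.05) -> float:
    """Probability that at least one of several independent tests is
    statistically significant by chance alone when no true effect exists."""
    if num_outcomes < 0:
        raise ValueError("num_outcomes must be non-negative")
    # Each test avoids a false positive with probability (1 - alpha);
    # under independence, all of them do so with probability (1 - alpha) ** k.
    return 1.0 - (1.0 - alpha) ** num_outcomes


for k in (1, 5, 10, 20):
    print(f"{k:>2} outcomes: {chance_of_false_positive(k):.3f}")
```

Under these assumptions, the chance of at least one spurious significant result rises from 5 percent with a single outcome to roughly 40 percent with 10 outcomes, which is why a primary outcome chosen after testing deserves caution.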

Outcome reporting bias refers to the selective reporting of some outcomes but not others because of the nature and direction of the results. This can happen when investigators rely on hypothesis testing to prioritize research based on the statistical significance of an association. In the extreme, if only positive outcomes are selectively reported, we would not know that an intervention is ineffective for an important outcome, even if it had been tested frequently (Chan and Altman, 2005; Chan et al., 2004a,b; Dwan et al., 2008; Turner et al., 2008; Vedula et al., 2009).

Recent research on selective outcome reporting bias has focused on industry-funded trials, in part because internal company documents may be available, and in part because of evidence of biased reporting that favors their test interventions (Golder and Loke, 2008; Jorgensen et al., 2008; Lexchin et al., 2003; Nassir Ghaemi et al., 2008; Ross et al., 2009; Sismondo, 2008; Vedula et al., 2009).

Mathieu and colleagues (2009) found substantial evidence of selective outcome reporting. The researchers reviewed 323 RCTs with results published in high-impact journals in 2008. They found that only 147 had been registered before the end of the trial with the primary outcome specified. Of these 147, 46 (31 percent) were published with different primary outcomes than were registered, with 22 introducing a new primary outcome. In 23 of the 46 discrepancies, the influence of the discrepancy could not be determined. Among the remaining 23 discrepancies, 19 favored a statistically significant result (i.e., a new statistically significant primary outcome was introduced in the published article, or a nonsignificant primary outcome was omitted or not defined as primary in the published article).

In a study of 110 trials published in high-impact journals between September 2006 and February 2007 and also registered in a trial registry, Ewart and colleagues found that in 34 cases (31 percent) the primary outcome had changed (10 by addition of a new primary outcome; 3 by promotion from a secondary outcome; 20 by deletion of a primary outcome; and 6 by demotion to a secondary outcome); and in 77 cases (70 percent) the secondary outcome changed (54 by addition of a new secondary outcome; 5 by demotion from a primary outcome; 48 by deletion; 3 by promotion to a primary outcome) (Ewart et al., 2009).

Acquiring unpublished data from industry can be challenging. However, when available, unpublished data can change an SR’s conclusions about the benefits and harms of treatment. A review by Eyding and colleagues demonstrates both the challenge of acquiring all relevant data from a manufacturer and how acquisition of those data can change the conclusion of an SR (Eyding et al., 2010). In their SR, which included both published and unpublished data acquired from the drug manufacturer, Eyding and colleagues found that published data overestimated the benefit of the antidepressant reboxetine over placebo by up to 115 percent and over selective serotonin reuptake inhibitors (SSRIs) by up to 23 percent. The addition of unpublished data changed the superiority of reboxetine vs. placebo to a nonsignificant difference and the nonsignificant difference between reboxetine and SSRIs to inferiority for reboxetine. For both patients with adverse events and rates of withdrawals from adverse events, inclusion of unpublished data changed the nonsignificant difference between reboxetine and placebo to inferiority of reboxetine; for rates of withdrawals for adverse events, inclusion of unpublished data also changed the nonsignificant difference between reboxetine and fluoxetine to an inferiority of fluoxetine.

Although there are many studies documenting the problem of publication bias and selective outcome reporting bias, few studies have examined the effect of such bias on SR findings. One recent study by Kirkham and colleagues assessed the impact of outcome reporting bias in individual trials on 81 SRs published in 2006 and 2007 by Cochrane review groups (Kirkham et al., 2010). More than one third of the reviews (34 percent) included at least one RCT with suspected outcome reporting bias. The authors assessed the potential impact of the bias and found that meta-analyses omitting trials with presumed selective outcome reporting for the primary outcome could overestimate the treatment effect. They also concluded that trials should not be excluded from SRs simply because outcome data appear to be missing when in fact the missing data may be due to selective outcome reporting. The authors suggest that in such cases the trialists should be asked to provide the outcome data that were analyzed, but not reported.

Time-lag Bias

In an SR of the literature, Hopewell and her colleagues (2009a) found that trials with positive results (statistically significant in favor of the experimental arm) were published about a year sooner than trials with null or negative results (not statistically significant or statistically significant in favor of the control arm). This has implications for both systematic review teams and patients. If positive findings are more likely to be available during the search process, then SRs may provide a biased view of current knowledge. The limited evidence available implies that publication delays may be caused by the investigator rather than by journal editors (Dickersin et al., 2002b; Ioannidis et al., 1997, 1998).

Location Bias

The location of published research findings in journals with different ease of access or levels of indexing is also correlated with the nature and direction of results. For example, in a Cochrane methodology review, Hopewell and colleagues identified five studies that assessed the impact of including trials published in the grey literature in an SR (Hopewell et al., 2009a). The studies found that trials in the published literature tend to be larger and show an overall larger treatment effect than those trials found in the grey literature (primarily abstracts and unpublished data, such as data from trial registries, “file drawer data,” and data from individual trialists).

The researchers suggest that, by excluding grey literature, an SR or meta-analysis is likely to artificially inflate the benefits of a health care intervention.

Language Bias

As in other types of reporting bias, language bias refers to the publication of research findings in certain languages, depending on the nature and direction of the findings. For example, some evidence shows that investigators in Germany may choose to publish their negative RCT findings in non-English language journals and their positive RCT findings in English-language journals (Egger and Zellweger-Zahner, 1997; Heres et al., 2004). However, there is no definitive evidence on the impact of excluding articles in languages other than English (LOE), nor is there evidence that non-English language articles are of lower quality (Moher et al., 1996); the differences observed appear to be minor (Moher et al., 2003).

Some studies suggest that, depending on clinical specialty or disease, excluding research in LOE may not bias SR findings (Egger et al., 2003; Gregoire et al., 1995; Moher et al., 2000, 2003; Morrison et al., 2009). In a recent SR, Morrison and colleagues examined the impact on estimates of treatment effect when RCTs published in LOE are excluded (Morrison et al., 2009). The researchers identified five eligible reports (describing three unique studies) that assessed the impact of excluding articles in LOE on the results of a meta-analysis. None of the five reports found major differences between English-only meta-analyses and meta-analyses that included trials in LOE (Egger et al., 2003; Jüni et al., 2002; Moher et al., 2000, 2003; Pham et al., 2005; Schulz et al., 1995).

Many SRs do not include articles in LOE, probably because of the time and cost involved in obtaining and translating them. The committee recommends that the SR team consider whether the topic of the review might require searching for studies not published in English.

Multiple (Duplicate) Publication Bias

Investigators sometimes publish the same findings multiple times, either overtly or in what appears to be a covert manner. When two or more articles are identical, this constitutes plagiarism. When the articles are not identical, the systematic review team has difficulty discerning whether the articles are describing the findings from the same or different studies. von Elm and colleagues described four situations that may suggest duplicate publication; these include articles with the following features: (1) identical samples and outcomes; (2) identical samples and different outcomes; (3) samples that are larger or smaller, yet with identical outcomes; and (4) different samples and different outcomes (von Elm et al., 2004). The World Association of Medical Editors (WAME, 2010) and the International Committee of Medical Journal Editors (ICMJE, 2010) have condemned duplicate or multiple publication when there is no clear indication that the article has been published before.

Von Elm and colleagues (2004) identified 141 SRs in anesthesia and analgesia that included 56 studies that had been published two or more times. Little overlap occurred among authors on the duplicate publications, with no cross-referencing of the articles. Of the duplicates, 33 percent were funded by the pharmaceutical industry. Most of the duplicate articles (63 percent) were published in journal supplements soon after the “main” article. Positive results appear to be published more often in duplicate, which can lead to overestimates of a treatment effect if the data are double counted (Tramer et al., 1997).
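The way double counting can inflate a pooled estimate is illustrated by the following Python sketch. It uses a simple fixed-effect, inverse-variance pooled estimate, which is one common meta-analytic approach chosen here for illustration; the effect sizes and standard errors are hypothetical and are not taken from any study cited in this chapter.

```python
def pooled_effect(studies: list[tuple[float, float]]) -> float:
    """Fixed-effect inverse-variance pooled estimate.

    Each study is an (effect_estimate, standard_error) pair.
    """
    weights = [1.0 / (se ** 2) for _, se in studies]
    weighted_sum = sum(w * effect for w, (effect, _) in zip(weights, studies))
    return weighted_sum / sum(weights)


# Hypothetical data: one positive trial and two trials with little or no effect.
unique_studies = [(0.50, 0.20), (0.10, 0.20), (0.00, 0.20)]

# The positive trial is published twice and mistakenly counted as two studies.
with_duplicate = unique_studies + [(0.50, 0.20)]

print(f"Pooled effect, each study counted once: {pooled_effect(unique_studies):.3f}")
print(f"Pooled effect, positive trial counted twice: {pooled_effect(with_duplicate):.3f}")
```

In this hypothetical case the duplicated positive trial pulls the pooled estimate upward, which is the kind of overestimate a review team guards against by matching reports to unique studies before synthesis.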

Citation Bias

Searches of online databases of cited articles are one way to identify research that has been cited in the references of published articles. However, many studies show that, across a broad array of topics, authors tend to cite selectively only the positive results of other studies (omitting the negative or null findings) (Gøtzsche, 1987; Kjaergard and Als-Nielsen, 2002; Nieminen et al., 2007; Ravnskov, 1992, 1995; Schmidt and Gøtzsche, 2005). Selective pooling of results, that is, when the authors perform a meta-analysis of studies they have selected without a systematic search for all evidence, could be considered both a non-SR and a form of citation bias. Because a selective meta-analysis or pooling does not reflect the true state of research evidence, it is prone to selection bias and may even reflect what the authors want us to know, rather than the totality of knowledge.

Addressing Reporting Bias

Reporting bias clearly presents a fundamental obstacle to the scientific integrity of SRs on the effectiveness of healthcare interventions. However, at this juncture, important, unresolved questions remain on how to overcome the problem. No empirically based techniques have been developed that can predict which topics or research questions are most vulnerable to reporting bias. Nor can one determine when reporting bias will lead to an “incorrect” conclusion about the effectiveness of an intervention. Moreover, researchers have not yet developed a low-cost, effective approach to identifying a complete, unbiased literature for SRs of comparative effectiveness research (CER).

SR experts recommend a prespecified, systematic approach to the search for evidence that includes not only easy-to-access bibliographic databases, but also other information sources that contain grey literature, particularly trial data, and other unpublished reports. The search should be comprehensive and include both published and unpublished research. The evidence on reporting bias (described above) is persuasive. Without appropriate measures to counter the biased reporting of primary evidence from clinical trials and observational studies, SRs may only reflect—and could even exacerbate—existing distortions in the biomedical literature. The implications of developing clinical guidance from incomplete or biased knowledge may be serious (Moore, 1995; Thompson et al., 2008). Yet, many SRs fail to address the risk of bias during the search process.

Expert guidance also suggests that the SR team contact the researchers and sponsors of primary research to clarify unclear reports or to obtain unpublished data that are relevant to the SR. See Table 3-2 for key techniques and information sources recommended by AHRQ, CRD, and the Cochrane Collaboration. Appendix E provides further details on expert guidance.

Key Information Sources

Despite the imperative to conduct an unbiased search, many SRs use abbreviated methods to search for the evidence, often because of resource limitations. A common error is to rely solely on a limited number of bibliographic databases. Large databases, such as MEDLINE and Embase (Box 3-2), are relatively easy to use, but they often lack research findings that are essential to answering questions of comparative effectiveness (CRD, 2009; Hopewell et al., 2009b; Lefebvre et al., 2008; Scherer et al., 2007; Song et al., 2010). The appropriate sources of information for an SR depend on the research question, analytic framework, patient outcomes of interest, study population, research design (e.g., trial data vs. observational data), likelihood of publication, authors, and other factors (Egger et al., 2003; Hartling et al., 2005; Helmer et al., 2001; Lemeshow et al., 2005). Relevant research findings may reside in large, well-known bibliographic databases, subject-specific or regional databases, or in the grey literature.

TABLE 3-2 Expert Suggestions for Conducting the Search Process and Addressing Reporting Bias

Expertise required for the search:

• Work with a librarian or other information specialist with SR training to plan the search strategy

• Use an independent librarian or other information specialist to peer review the search strategy

Search:

• Bibliographic databases

• Citation indexes

• Databases of unpublished and ongoing studies

• Grey-literature databases

• Handsearch selected journals and conference abstracts

• Literature cited by eligible studies

• Regional bibliographic databases

• Studies reported in languages other than English

• Subject-specific databases

• Web/Internet

Contact:

• Researchers to clarify study eligibility, study characteristics, and risk of bias

• Study sponsors and researchers to submit unpublished data

NOTE: See Appendix E for further details on guidance for searching for evidence from AHRQ, CRD, and Cochrane Collaboration.

BOX 3-2 Bibliographic Databases

Regional Databases

SOURCES: BIREME (2010); Cochrane Collaboration (2010a); CRD (2010); Dickersin et al. (2002a); Embase (2010); National Library of Medicine (2008); WHO (2006).

The following summarizes the available evidence on the utility of key data sources (such as bibliographic databases, grey literature, trial registries, and authors or sponsors of relevant research), primarily for searching for results from RCTs. While considerable research has been done to date on finding relevant randomized trials (Dickersin et al., 1985; Dickersin et al., 1994; McKibbon et al., 2009; Royle and Milne, 2003; Royle and Waugh, 2003), less work has been done on methods for identifying qualitative (Flemming and Briggs, 2007) and observational data for a given topic (Booth, 2006; Furlan et al., 2006; Kuper et al., 2006; Lemeshow et al., 2005). The few electronic search strategies that have been evaluated to identify studies of harms, for example, suggest that further methodological research is needed to find an efficient balance between sensitivity and precision in conducting electronic searches (Golder and Loke, 2009).
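The trade-off between sensitivity and precision can be expressed with a short Python sketch. It uses the standard information-retrieval definitions (sensitivity as the share of all relevant reports that the search retrieves, precision as the share of retrieved reports that are relevant), which are assumed here rather than quoted from the text; the record identifiers are hypothetical.

```python
def search_performance(retrieved: set[str], relevant: set[str]) -> tuple[float, float]:
    """Return (sensitivity, precision) for one search.

    retrieved: identifiers of the records the search returned.
    relevant:  identifiers of all records known to be relevant.
    """
    hits = retrieved & relevant
    sensitivity = len(hits) / len(relevant) if relevant else 0.0
    precision = len(hits) / len(retrieved) if retrieved else 0.0
    return sensitivity, precision


# Hypothetical example: four records retrieved, three relevant records exist.
retrieved = {"rec-a", "rec-b", "rec-c", "rec-d"}
relevant = {"rec-a", "rec-b", "rec-e"}

sensitivity, precision = search_performance(retrieved, relevant)
print(f"Sensitivity: {sensitivity:.2f}  Precision: {precision:.2f}")
```

A broader search usually raises sensitivity at the cost of precision, because more irrelevant records must be screened for each relevant one found.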

Less is known about the consequences of including studies missed in these searches. For example, one SR of the literature on search methods found that adverse effects information was included more frequently in unpublished sources, but also concluded that there was insufficient evidence to determine how including unpublished studies affects an SR’s pooled risk estimates of adverse effects (Golder and Loke, 2010). Nevertheless, one must assume that the consequences of missing relevant articles may be clinically significant, especially if the search fails to identify data that might alter conclusions about the risks and benefits of an intervention.

Bibliographic Databases

Unfortunately, little empirical evidence is available to guide the development of an SR bibliographic search strategy. As a result, the researcher has to scrutinize a large volume of articles to identify the relatively small proportion that are relevant to the research question under consideration. At present, no one database or information source is sufficient to ensure an unbiased, balanced picture of what is known about the effectiveness, harms, and benefits of health interventions (Betran et al., 2005; Crumley et al., 2005; Royle et al., 2005; Tricco et al., 2008). Betran and colleagues, for example, assessed the utility of different databases for identifying studies for a World Health Organization (WHO) SR of maternal morbidity and mortality (Betran et al., 2005). After screening more than 64,000 different citations, they identified 2,093 potentially eligible studies. Several databases were sources of research not found elsewhere; 20 percent of citations were found only in MEDLINE, 7.4 percent in Embase, and 5.6 percent in LILACS and other topic-specific databases.
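The idea of citations found in only one database can be sketched with set operations in Python. This is an illustration of the general approach, not the method used by Betran and colleagues; the function name and the study identifiers are hypothetical.

```python
def unique_yield(results: dict[str, set[str]]) -> dict[str, set[str]]:
    """For each database, return the citations that no other database returned."""
    unique = {}
    for name, citations in results.items():
        # Pool every citation found by the other databases.
        found_elsewhere = set().union(
            *(ids for other, ids in results.items() if other != name)
        )
        unique[name] = citations - found_elsewhere
    return unique


# Hypothetical search results, keyed by database name.
results = {
    "MEDLINE": {"study-1", "study-2", "study-3"},
    "Embase": {"study-2", "study-4"},
    "LILACS": {"study-3", "study-5"},
}

for database, citations in unique_yield(results).items():
    print(f"{database}: {sorted(citations)} found nowhere else")
```

A nonempty unique yield for a database is a signal that dropping it from the search would lose citations, which is the pattern the figures above describe.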

Specialized databases. Depending on the subject of the SR, specialized topical databases such as POPLINE and PsycINFO may provide research findings not available in other databases (Box 3-3). POPLINE is a specialized database of abstracts of scientific articles, reports, books, and unpublished reports in the field of population, family planning, and related health issues. PsycINFO, a database of psychological literature, contains journal articles, book chapters, books, technical reports, and dissertations related to behavioral health interventions.

BOX 3-3 Subject-Specific Databases

SOURCES: APA (2010); Campbell Collaboration (2000); EBSCO Publishing (2010); Knowledge for Health (2010).

Citation indexes. Scopus, Web of Science, and other citation indexes are valuable for finding cited reports from journals, trade publications, book series, and conference papers from the scientific, technical, medical, social sciences, and arts and humanities fields (Bakkalbasi et al., 2006; Chapman et al., 2010; Falagas et al., 2008; ISI Web of Knowledge, 2009; Kuper et al., 2006; Scopus, 2010). Searching the citations of previous SRs on the same topic could be particularly fruitful.

Grey literature. Grey literature includes trial registries (discussed below), conference abstracts, books, dissertations, monographs, and reports held by the Food and Drug Administration (FDA) and other government agencies, academics, business, and industry.

BOX 3-4 Grey-Literature Databases

SOURCES: New York Academy of Medicine (2010); Online Computer Library Center (2010); OpenSIGLE (2010); ProQuest (2010).

Grey-literature databases, such as those described in Box 3-4, are important sources for technical or research reports, doctoral dissertations, conference papers, and other research.

Handsearching. In handsearching, researchers manually examine, page by page, each article, abstract, editorial, letter to the editor, or other item in journals to identify reports of RCTs or other relevant evidence (Hopewell et al., 2009b). No empirical research shows how an SR’s conclusions might be affected by adding trials identified through a handsearch. However, for some CER topics and circumstances, handsearching may be important (CRD, 2009; Hopewell et al., 2009a; Lefebvre et al., 2008; Relevo and Balshem, 2011). The first or only appearance of a trial report, for example, may be in the nonindexed portions of a journal.

Contributors to the Cochrane Collaboration have handsearched thousands of journals and conference abstracts to identify controlled clinical trials and studies that may be eligible for Cochrane reviews (Dickersin et al., 2002a). One can identify which journals, abstracts, and years have been or are being searched by consulting the publicly available Cochrane Master List of Journals Being Searched (footnote 6). If a subject area has been well covered by Cochrane, then it is probably reasonable to forgo handsearching and to rely on the Cochrane Central Register of Controlled Trials (CENTRAL), which should contain the identified articles and abstracts. It is always advisable to check with the relevant Cochrane review group to confirm the journals and conference abstracts that have been searched and how they are indexed in CENTRAL. The CENTRAL database is available to all subscribers to the Cochrane Library. For eye trials, for example, numerous years of journals and conference abstracts have been searched, and included citations have been MeSH coded if they were from a source not indexed on MEDLINE. Because of the comprehensive searching and indexing available for the eyes and vision field, one would not need to search beyond CENTRAL.

Clinical Trials Data

Clinical trials produce essential data for SRs on the therapeutic effectiveness and adverse effects of health care interventions. However, the findings for a substantial number of clinical trials are never published (Bennett and Jull, 2003; Hopewell et al., 2009b; MacLean et al., 2003; Mathieu et al., 2009; McAuley et al., 2000; Savoie et al., 2003; Turner et al., 2008). Thus, the search for trial data should include trial registries (ClinicalTrials.gov, Clinical Study Results, Current Controlled Trials, and WHO International Clinical Trials Registry), FDA medical and statistical review records (MacLean et al., 2003; Turner et al., 2008), conference abstracts (Hopewell et al., 2009b; McAuley et al., 2000), non-English literature, and outreach to investigators (CRD, 2009; Golder et al., 2010; Hopewell et al., 2009b; Lefebvre et al., 2008; Miller, 2010; O’Connor, 2009; Relevo and Balshem, 2011; Song et al., 2010).

Trial registries. Trial registries have the potential to address the effects of reporting bias if they provide complete data on both ongoing and completed trials (Boissel, 1993; Dickersin, 1988; Dickersin and Rennie, 2003; Hirsch, 2008; NLM, 2009; Ross et al., 2009; Savoie et al., 2003; Song et al., 2010; WHO, 2010; Wood, 2009). One can access a large proportion of international trials registries using the WHO International Clinical Trials Registry Platform (WHO, 2010).

6 Available at http://uscc.cochrane.org/en/newPage1.html.

ClinicalTrials.gov is the most comprehensive public registry. It was established in 2000 by the National Library of Medicine as required by the FDA Modernization Act of 1997 (footnote 7) (NLM, 2009). At its start, ClinicalTrials.gov had minimal utility for SRs because the required data were quite limited, industry compliance with the mandate was poor, and government enforcement of sponsors’ obligation to submit complete data was lax (Zarin, 2005). The International Committee of Medical Journal Editors (ICMJE), among others, spurred trial registration overall by requiring authors to register trials in a public trials registry at or before the beginning of patient enrollment as a precondition for publication in member journals (DeAngelis et al., 2004). The implementation of this policy is associated with a 73 percent increase in worldwide trial registrations at ClinicalTrials.gov for all intervention types (Zarin et al., 2005).

The FDA Amendments Act of 2007 (footnote 8) significantly expanded the potential depth and breadth of the ClinicalTrials.gov registry. The act mandates that sponsors of any ongoing clinical trial involving a drug, biological product, or device approved for marketing by the FDA not only register the trial (footnote 9), but also submit data on the trial’s research protocol and study results, including adverse events (footnote 10). As of October 2010, 2,300 results records were available. Much of the required data have not yet been submitted (Miller, 2010), and Congress has allowed sponsors to delay posting of results data until after the product is granted FDA approval. New regulations governing the scope and timing of results posting are pending (Wood, 2009).

Data gathered as part of the FDA approval process. The FDA requires sponsors to submit extensive data about efficacy and safety as part of the New Drug Application (NDA) process. FDA analysts (statisticians, physicians, pharmacologists, and chemists) examine and analyze these data.

Although the material submitted by the sponsor is confidential, under the Freedom of Information Act, the FDA is required to make its analysts’ reports public after redacting proprietary or sensitive information. Since 1998, selected, redacted copies of reports prepared by FDA analysts have been publicly available (see Drugs@FDA; footnote 11). When available, these are useful for obtaining clinical trials data, especially when studies are not otherwise reported (footnotes 12 and 13). For example, as part of an SR of complications from nonsteroidal anti-inflammatory drugs (NSAIDs), MacLean and colleagues identified trials using the FDA repository. They compared two groups of studies meeting inclusion criteria for the SR: published reports of trials and studies included in submissions to the FDA. They identified 20 published studies on the topic and 37 studies submitted to the FDA that met their inclusion criteria. Only one study was in both the published and FDA groups (i.e., only 1 of 37 studies submitted to the FDA was published) (MacLean et al., 2003). The authors found no meaningful differences in the information reported in the FDA report and the published report on sample size, gender distribution, indication for drug use, and components of study methodological quality. This indicates that, at least in this case, there is no reason to omit unpublished research from an SR for reasons of study quality.

7 Public Law 105-115 sec. 113.

8 Public Law 110-85.

9 Phase I trials are excluded.

10 Required data include demographic and baseline characteristics of the patients, the number of patients lost to follow-up, the number excluded from the analysis, and the primary and secondary outcomes measures (including a table of values with appropriate tests of the statistical significance of the values) (Miller, 2010).

Several studies have demonstrated that the FDA repository provides opportunities for finding out about unpublished trials, and that reporting biases exist such that unpublished studies are associated more often with negative findings. Lee and colleagues examined 909 trials supporting 90 approved drugs in FDA reviews and found that 43 percent (394 of 909) had been published 5 years after approval and that positive results were associated with publication (Lee et al., 2008).

Rising and colleagues (2008) conducted a study of all efficacy trials found in approved NDAs for new molecular entities from 2001 to 2002 and all published clinical trials corresponding to trials within those NDAs. The authors found that trials in NDAs with favorable primary outcomes were nearly five times more likely to be published than trials with unfavorable primary outcomes. In addition, for those 99 cases in which conclusions were provided in both the NDA and the published paper, in 9 (9 percent) the conclusion was different in the NDA and the publication, and all changes favored the test drug. Published papers included more outcomes favoring the test drug than the NDAs. The authors also found that, excluding outcomes with unknown significance, 43 outcomes in the NDAs did not favor the test drug (35 were nonsignificant and 8 favored the comparator). Of these, 20 (47 percent) were not included in the published papers; of the 23 that were published, 5 changed between the NDA-reported outcome and the published outcome, with 4 changed to favor the test drug in the published results.

11 Available at http://www.accessdata.fda.gov/scripts/cder/drugsatfda/.

12 NDA data were not easily accessed at the time of the MacLean study; the investigators had to collect the data through a Freedom of Information Act request.

13 NDAs are available at http://www.accessdata.fda.gov/scripts/cder/drugsatfda/index.cfm?fuseaction=Search.Search_Drug_Name.

Turner and colleagues (2008) examined FDA submissions for 12 antidepressants and identified 74 clinical trials, of which 31 percent had not been reported. The researchers compared FDA review data of each drug’s effects with the published trial data. They found that the published data suggested that 94 percent of the antidepressant trials were positive. In contrast, the FDA data indicated that only 51 percent of trials were positive. Moreover, when meta-analyses were conducted with and without the FDA data, the researchers found that the published reports overstated the effect size by 11 to 69 percent for the individual drugs. Overall, studies judged positive by the FDA were 12 times as likely to be published in a way that agreed with the FDA as studies not judged positive by the FDA.

FDA material can also be useful for detecting selective outcome reporting bias and selective analysis bias. For example, Turner and colleagues (2008) found that the conclusions for 11 of 57 published trials did not agree between the FDA review and the publication. In some cases, the journal publication reported different p values than the FDA report of the same study, reflecting preferential reporting of comparisons or analyses that had statistically significant p values.

The main limitation of the FDA files is that they may remain unavailable for several years after a drug is approved. Data on older drugs within a class are often missing. For example, of the 9 atypical antipsychotic drugs marketed in the United States in 2010, the FDA material is available for 7 of them. FDA reviews are not available for the 2 oldest drugs—clozapine (approved in 1989) and risperidone (approved in 1993) (McDonagh et al., 2010).

Contacting Authors and Study Sponsors for Missing Data

As noted earlier in the chapter, more than half of all trial findings may never be published (Hopewell et al., 2009b; Song et al., 2009). Even when a published report on a trial is available, key data are often missing. When published reports do not contain the information needed for the SR (e.g., for the assessment of bias, description of study characteristics), the SR team should contact the author to clarify and obtain missing data and to clear up any other uncertainties such as possible duplicate publication (CRD, 2009; Glasziou et al., 2008; Higgins and Deeks, 2008; Relevo and Balshem, 2011). Several studies have documented that collecting some, if not all, data needed for a meta-analysis is feasible by directly contacting the relevant authors and principal investigators (Devereaux et al., 2004; Kelley et al., 2004; Kirkham et al., 2010; Song et al., 2010). For example, in a study assessing outcome reporting bias in Cochrane SRs, Kirkham and colleagues (2010) e-mailed the authors of the RCTs that were included in the SRs to clarify whether a trial measured the SR’s primary outcome. The researchers were able to obtain missing trial data from more than a third of the authors contacted (39 percent). Of these, 60 percent responded within a day and the remainder within 3 weeks.

Updating Searches

When patients, clinicians, clinical practice guideline (CPG) developers, and others look for SRs to guide their decisions, they hope to find the most current information available. However, in the Rising study described earlier, the researchers found that 23 percent of the efficacy trials submitted to the FDA for new molecular entities from 2001–2002 were still not published 5 years after FDA approval (Rising et al., 2008). Moher and colleagues (2007b) cite a compelling example—treatment of traumatic brain injury (TBI)—of how an updated SR can change beliefs about the risks and benefits of an intervention. Corticosteroids had been used routinely over three decades for TBI when a new clinical trial suggested that patients who had TBI and were treated with corticosteroids were at higher risk of death compared with placebo (CRASH Trial Collaborators, 2004). When Alderson and Roberts incorporated the new trial data in an update of an earlier SR on the topic, findings about mortality risk dramatically reversed—leading to the conclusion that steroids should no longer be routinely used in patients with TBI (Alderson and Roberts, 2005).

Two opportunities are available for updating the search and the SR. The first opportunity for updating is just before the review’s initial publication. Because a meaningful amount of time is likely to have elapsed since the initial search, SRs are at risk of being outdated even before they are finalized (Shojania et al., 2007). Among a cohort of SRs on the effectiveness of drugs, devices, or procedures published between 1995 and 2005 and indexed in the ACP Journal Club database (footnote 14), on average more than 1 year (61 weeks) elapsed between the final search and publication and 74 weeks elapsed between the final search and indexing in MEDLINE (when findings are more easily accessible) (Sampson et al., 2008). AHRQ requires Evidence-Based Practice Centers (EPCs) to update SR searches at the time of peer review (footnote 15). CRD and the Cochrane Collaboration recommend that the search be updated before the final analysis but do not specify an exact time period (CRD, 2009; Higgins et al., 2008).

14 The ACP Journal Club, once a stand-alone bimonthly journal, is now a monthly feature of the Annals of Internal Medicine. The club’s purpose is to feature structured abstracts (with commentaries from clinical experts) of the best original and review articles in internal medicine and other specialties. For more information go to www.acpjc.org.

The second opportunity for updating is post-publication, and occurs periodically over time, to ensure a review is kept up-to-date. In examining how often reviews need updating, Shojania and colleagues (2007) followed 100 meta-analyses, published between 1995 and 2005 and indexed in the ACP Journal Club, of the comparative effectiveness of drugs, devices, or procedures. Within 5.5 years, half of the reviews had new evidence that would have substantively changed conclusions about effectiveness, and within 2 years nearly 25 percent had such evidence.

Updating also provides an opportunity to identify and incorporate studies with negative findings that may have taken longer to be published than those with positive findings (Hopewell et al., 2009b) and larger scale confirmatory trials that can appear in publications after smaller trials (Song et al., 2010).

According to the Cochrane Handbook, an SR may be out-of-date under the following scenarios:

- A change is needed in the research question or selection criteria for studies. For example, a new intervention (e.g., a newly marketed drug within a class) or a new outcome of the interventions may have been identified since the last update;
- New studies are available;
- Methods are out-of-date; or
- Factual statements in the introduction and discussion sections of the review are not up-to-date.

Identifying reasons to change the research question and searching for new studies are the initial steps in updating. If the questions are still up-to-date, and searches do not identify relevant new studies, the SR can be considered up-to-date (Moher and Tsertsvadze, 2006). If new studies are identified, then their results must be incorporated into the existing SR.
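The four scenarios listed above amount to a simple checklist. The sketch below is one way a review team might record such a check; it is an illustration rather than a Cochrane tool, and the class, field names, and reason strings are hypothetical.

```python
from dataclasses import dataclass


@dataclass
class UpdateCheck:
    """Findings from a periodic check of a published SR (hypothetical fields)."""
    question_or_criteria_changed: bool = False
    new_studies_found: bool = False
    methods_out_of_date: bool = False
    facts_out_of_date: bool = False


def reasons_to_update(check):
    """Return the out-of-date scenarios that apply; an empty list means none do."""
    reasons = []
    if check.question_or_criteria_changed:
        reasons.append("revise research question or selection criteria")
    if check.new_studies_found:
        reasons.append("incorporate new studies")
    if check.methods_out_of_date:
        reasons.append("update methods")
    if check.facts_out_of_date:
        reasons.append("correct factual statements")
    return reasons


# A review with no flagged scenario, and one with two.
current = reasons_to_update(UpdateCheck())
stale = reasons_to_update(UpdateCheck(new_studies_found=True, facts_out_of_date=True))
print(current)
print(stale)
```

Recording the individual reasons, rather than a single yes or no, keeps a trail of why an update was or was not started.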

A typical approach to updating is to consider the need to update the research question and conduct a new literature search every 2 years. Because some reviews become out-of-date sooner than this, several recent investigations have developed and tested strategies to identify SRs that need updating earlier (Barrowman et al., 2003; Garritty et al., 2009; Higgins et al., 2008; Louden et al., 2008; Sutton et al., 2009; Voisin et al., 2008). These strategies use the findings that some fields move faster than others; large studies are more likely to change conclusions than small ones; and both literature scans and consultation with experts can help identify the need for an update. In the best available study of an updating strategy, Shojania and colleagues sought signals that an update would be needed sooner rather than later after publication of an SR (Shojania et al., 2007). Fifty-seven percent of reviews had one or more of these signals for updating. Cardiovascular medicine, heterogeneity in the original review, and publication of a new trial larger than the previous largest trial were associated with shorter survival times, while inclusion of more than 13 studies in the original review was associated with increased time before an update was needed. In 23 cases the signal occurred within 2 years of publication. The median survival of a review without any signal that an update was needed was 5.5 years.

RECOMMENDED STANDARDS FOR THE SEARCH PROCESS

The committee recommends the following standards and elements of performance for identifying the body of evidence for an SR:

Standard 3.1—Conduct a comprehensive systematic search for evidence

Required elements:

3.1.1 Work with a librarian or other information specialist trained in performing systematic reviews to plan the search strategy

3.1.2 Design the search strategy to address each key research question

3.1.3 Use an independent librarian or other information specialist to peer review the search strategy

3.1.4 Search bibliographic databases

3.1.5 Search citation indexes

3.1.6 Search literature cited by eligible studies

3.1.7 Update the search at intervals appropriate to the pace of generation of new information for the research question being addressed

3.1.8 Search subject-specific databases if other databases are unlikely to provide all relevant evidence

3.1.9 Search regional bibliographic databases if other databases are unlikely to provide all relevant evidence

Standard 3.2—Take action to address reporting biases of research results

Required elements:

3.2.1 Search grey-literature databases, clinical trial registries, and other sources of unpublished information about studies

3.2.2 Invite researchers to clarify information related to study eligibility, study characteristics, and risk of bias

3.2.3 Invite all study sponsors to submit unpublished data, including unreported outcomes, for possible inclusion in the systematic review

3.2.4 Handsearch selected journals and conference abstracts

3.2.5 Conduct a web search

3.2.6 Search for studies reported in languages other than English if appropriate

Rationale

In summary, little evidence directly addresses the influence of each search step on the final outcome of the SR (Tricco et al., 2008). Moreover, the SR team cannot judge in advance whether reporting bias will be a threat to any given review. However, evidence shows the risks of conducting a nonsystematic, incomplete search. Relying solely on mainstream databases and published reports may misinform clinical decisions. Thus, the search should include sources of unpublished data, including grey-literature databases, trial registries, and FDA submissions such as NDAs.

The search to identify a body of evidence on comparative effectiveness must be systematic, prespecified, and include an array of information sources that can provide both published and unpublished research data. The essence of CER and patient-centered health care is an accurate and fair accounting of the evidence in the research literature on the effectiveness and potential benefits and harms of health care interventions (IOM, 2008, 2009). Informed health care decision making by consumers, patients, clinicians, and others, demands unbiased and comprehensive information. Developers of clinical practice guidelines cannot produce sound advice without it.

SRs are most useful when they are up-to-date. Assuming a field is active, initial searches should be updated when the SR is finalized for publication, and studies ongoing at the time the review was undertaken should be checked for availability of results. In addition, noting ongoing trials (such as those identified by searching trials registries) is important for alerting SR readers to when new information can be expected.

Some of the expert search methods that the committee endorses are resource intensive and time consuming. The committee is not suggesting an exhaustive search using all possible methods and all available sources of unpublished studies and grey literature. For each SR, the researcher must determine how best to identify a comprehensive and unbiased set of the relevant studies that might be included in the review. The review team should consider what information sources are appropriate given the topic of the review and review those sources. Conference abstracts and proceedings will rarely provide useful unpublished data but they may alert the reviewer to otherwise unpublished trials. In the case of drug studies, FDA reviews and trial registries are likely sources of unpublished data that, when included, may change an SR’s outcomes and conclusions from a review relying only on published data. Searches of these sources and requests to manufacturers should always be conducted. With the growing body of SRs being performed on behalf of state and federal agencies, those reviews should also be considered as a potential source of otherwise unpublished data and a search for such reports is also warranted. The increased burden on reviewers, particularly with regard to the inclusion of FDA reviews, will likely decrease over time as reviewers gain experience in using those sources and in more efficiently and effectively abstracting the relevant data. The protection against potential bias brought about by inclusion of these data sources makes the development of that expertise critical.

The search process is also likely to become less resource intensive as specialized databases of comprehensive article collections used in previous SRs are developed, or automated search and retrieval methods are tested and implemented.

SCREENING AND SELECTING STUDIES

Selecting which studies should be included in the SR is a multistep, labor-intensive process. EPC staff have estimated that the SR search, review of abstracts, and retrieval and review of selected full-text papers take an average of 332 hours (Cohen et al., 2008). If the search is conducted appropriately, it is likely to yield hundreds, if not thousands, of potential studies (typically in the form of citations and abstracts). The next step, the focus of this section of the chapter, is to screen the collected studies to determine which ones are actually relevant to the research question under consideration.

The screening and selection process requires careful, sometimes subjective, judgments and meticulous documentation. Decisions on which studies are relevant to the research question and analytic framework are among the most significant judgments made during the course of an SR. If the study inclusion criteria are too narrow, critical data may be missed. If the inclusion criteria are too broad, irrelevant studies may overburden the process.

The following overview summarizes the available evidence on how to best screen, select, and document this critical phase of an SR. The focus is on unbiased selection of studies, inclusion of observational studies, and documentation of the process. The committee’s related standards are presented at the end of the section.

See Table 3-3 for steps recommended by AHRQ, CRD, and the Cochrane Collaboration for screening publications and extracting data from eligible studies. Appendix E provides additional details.

Ensuring an Unbiased Selection of Studies

Use Prespecified Inclusion and Exclusion Criteria

TABLE 3-3 Expert Suggestions for Screening Publications and Extracting Data from Eligible Studies

| | AHRQ | CRD | Cochrane |
|---|---|---|---|
| Use two or more members of the review team, working independently, to screen studies | | | |
| Train screeners | | | |
| Use two or more researchers, working independently, to extract data from each study | | | |
| Use standard data extraction forms developed for the specific systematic review | | | |
| Pilot-test the data extraction forms and process | | | |

NOTE: See Appendix E for further details on guidance on screening and extracting data from AHRQ, CRD, and the Cochrane Collaboration.

Using prespecified inclusion and exclusion criteria to choose studies is the best way to minimize the risk of researcher biases influencing the ultimate results of the SR (CRD, 2009; Higgins and Deeks, 2008; Liberati et al., 2009; Silagy et al., 2002). The SR research protocol should make explicit which studies to include or exclude based on the patient population and patient outcomes of interest, the health care intervention and comparators, clinical settings (if relevant), and study designs (e.g., randomized vs. observational research) that are appropriate for the research question. Only studies that meet all of the inclusion criteria and none of the exclusion criteria should be included in the SR. Box 3-5 provides an example of selection criteria from a recent EPC research protocol for an SR of therapies for children with an autism spectrum disorder.
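The selection rule described above (a study must meet every inclusion criterion and trigger no exclusion criterion) can be written down explicitly, which helps make screening decisions reproducible. The sketch below is a minimal illustration; the criteria, field names, and studies are hypothetical and are not taken from the EPC protocol.

```python
def is_eligible(study, inclusion, exclusion):
    """True only if the study meets all inclusion and no exclusion criteria."""
    meets_all = all(rule(study) for rule in inclusion)
    hits_any = any(rule(study) for rule in exclusion)
    return meets_all and not hits_any


# Hypothetical prespecified criteria, each a function of one study record.
inclusion = [
    lambda s: s["min_age"] >= 2 and s["max_age"] <= 12,  # population of interest
    lambda s: s["design"] in {"rct", "cohort"},          # acceptable designs
]
exclusion = [
    lambda s: s["n_participants"] < 10,                  # too small to inform
]

studies = [
    {"id": "S1", "min_age": 3, "max_age": 10, "design": "rct", "n_participants": 60},
    {"id": "S2", "min_age": 3, "max_age": 17, "design": "rct", "n_participants": 80},
    {"id": "S3", "min_age": 2, "max_age": 8, "design": "cohort", "n_participants": 6},
]

included = [s["id"] for s in studies if is_eligible(s, inclusion, exclusion)]
print(included)
```

Here S2 fails an inclusion criterion (age range) and S3 triggers the exclusion criterion (sample size), so only S1 is retained.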

Although little empirical evidence informs the development of the screening criteria, numerous studies have shown that, too often, SRs allow excessive subjectivity into the screening process (Cooper et al., 2006; Delaney et al., 2007; Dixon et al., 2005; Edwards et al., 2002; Linde and Willich, 2003; Lundh et al., 2009; Mrkobrada et al., 2008; Peinemann et al., 2008; Thompson et al., 2008). Mrkobrada and colleagues, for example, assessed the quality of all the nephrology-related SRs published in 2005 (Mrkobrada et al., 2008). Of the 90 SRs, 51 did not report efforts to minimize bias during the selection process, such as using prespecified inclusion criteria and having more than one person select eligible studies. An assessment of critical care meta-analyses published between 1994 and 2003 yielded similar findings. Delaney and colleagues (2007) examined 139 meta-analyses related to critical care medicine in journals or the Cochrane Database of Systematic Reviews. They found that a substantial proportion of the papers did not address potential biases in the selection of studies: 14 of the 36 Cochrane reviews (39 percent) and 69 of the 92 journal articles (75 percent).

Reviewing the full-text papers for all citations identified in the original search is time consuming and expensive. Expert guidance therefore accepts a two-stage approach to screening citations for inclusion in an SR as a way to minimize bias and produce quality work (CRD, 2009; Higgins and Deeks, 2008). The first step is to screen the titles and abstracts against the inclusion criteria. The second step is to screen the full-text papers passing the first screen. Selecting studies based solely on the titles and abstracts requires judgment and experience with the literature (Cooper et al., 2006; Dixon et al., 2005; Liberati et al., 2009).

Minimize Subjectivity

Even when the selection criteria are prespecified and explicit, decisions on including particular studies can be subjective. AHRQ, CRD, and the Cochrane Collaboration recommend that more than one individual independently screen and select studies in order to minimize bias and human error and to help ensure that the selection process is reproducible (Table 3-3) (CRD, 2009; Higgins and Deeks, 2008; Khan, 2001; Relevo and Balshem, 2011). Although doubling the number of screeners is costly, the committee agrees that the additional expense is justified because of the extent of errors and bias that occur when only one individual does the screening. Without two screeners, SRs may miss relevant data that might affect conclusions about the effectiveness of an intervention. Edwards and colleagues (2002), for example, found that using two reviewers may reduce the likelihood that relevant studies are discarded. The researchers increased the number of eligible trials by up to 32 percent (depending on the reviewer).

BOX 3-5 Study Selection Criteria for a Systematic Review of Therapies for Children with Autism Spectrum Disorders (ASD)

Review questions: Among children ages 2–12 with ASD, what are the short- and long-term effects of available behavioral, educational, family, medical, allied health, or complementary or alternative treatment approaches? Specifically,

SOURCE: Adapted from the AHRQ EPC Research Protocol, Therapies for Children with ASD (AHRQ EHC, 2009).

Experience, screener training, and pilot-testing of screening criteria are key to an accurate search and selection process. The Cochrane Collaboration recommends that screeners be trained by pilot testing the eligibility criteria on a sample of studies and assessing reliability (Higgins and Deeks, 2008), and certain Cochrane groups require that screeners take the Cochrane online training for handsearchers and pass a test on identification of clinical trials before they become involved (Cochrane Collaboration, 2010b).
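One way to assess reliability during pilot testing is to have two screeners independently judge the same sample of citations and then measure their agreement. The sketch below uses Cohen's kappa, a common chance-corrected agreement statistic; the choice of kappa is an assumption made here for illustration (the guidance cited above does not prescribe a statistic), and the decisions are made-up data.

```python
def cohens_kappa(a, b):
    """Cohen's kappa for two screeners' decisions on the same citations."""
    if len(a) != len(b) or not a:
        raise ValueError("decision lists must be non-empty and of equal length")
    n = len(a)
    # Observed proportion of citations on which the screeners agree.
    observed = sum(x == y for x, y in zip(a, b)) / n
    # Agreement expected by chance, from each screener's label frequencies.
    labels = set(a) | set(b)
    expected = sum((a.count(lab) / n) * (b.count(lab) / n) for lab in labels)
    if expected == 1.0:
        return 1.0  # both screeners used one identical label throughout
    return (observed - expected) / (1 - expected)


# Hypothetical pilot sample of 10 citations screened independently.
screener_1 = ["include", "exclude", "exclude", "include", "exclude",
              "exclude", "include", "exclude", "exclude", "exclude"]
screener_2 = ["include", "exclude", "include", "include", "exclude",
              "exclude", "exclude", "exclude", "exclude", "exclude"]

kappa = cohens_kappa(screener_1, screener_2)
# Positions where the screeners disagree and need to reconcile.
disagreements = [i for i, (x, y) in enumerate(zip(screener_1, screener_2)) if x != y]
print(f"kappa={kappa:.2f}, disagreements at positions {disagreements}")
```

Low agreement in a pilot sample suggests that the eligibility criteria are ambiguous or that screeners need further training before full screening begins.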

Use Observational Studies, as Appropriate

In CER, observational studies should be considered complementary to RCTs (Dreyer and Garner, 2009; Perlin and Kupersmith, 2007). Both can provide useful information for decision makers. Observational studies are critical for evaluating the harms of interventions (Chou and Helfand, 2005). RCTs often lack prespecified hypotheses regarding harms; are not adequately powered to detect serious, but uncommon events (Vandenbroucke, 2004); or exclude patients who are more susceptible to adverse events (Rothwell, 2005). Well-conducted, observational evaluations of harms, particularly those based on large registries of patients seen in actual practice, can help to validate estimates of the severity and frequency of adverse events derived from RCTs, identify subgroups of patients at higher or lower susceptibility, and detect important harms not identified in RCTs (Chou et al., 2010).

The proper role of observational studies in evaluating the benefits of interventions is less clear. RCTs are the gold standard for determining efficacy and effectiveness. For this reason they are the preferred starting place for determining intervention effectiveness. Even if they are available, however, trials may not provide data on outcomes that are important to patients, clinicians, and developers of CPGs. When faced with treatment choices, decision makers want to know who is most likely to benefit from a treatment and what the potential tradeoffs are. Some trials are designed to fulfill regulatory requirements (e.g., for FDA approval) rather than to inform everyday treatment decisions, and these studies may address narrow patient populations and intervention options. For example, study populations may not represent the population affected by the condition of interest; patients could be younger or not as ill (Norris et al., 2010). As a result, a trial may leave unanswered certain important questions about the treatment’s effects in different clinical settings and for different types of patients (Nallamothu et al., 2008).

Thus, although RCTs are subject to less bias, when the available RCTs do not examine how an intervention works in everyday practice or evaluate patient-important outcomes, observational studies may provide the evidence needed to address the SR team’s questions. Deciding to extend eligibility of study designs to observational studies represents a fundamental challenge because the suitability of observational studies for assessment of effectiveness depends heavily on a number of clinical and contextual factors. The likelihood of selection bias, recall bias, and other biases is so high in certain clinical situations that no observational study could address the question with an acceptable risk of bias (Norris et al., 2010).

An important note is that in CER, observational studies of benefits are intended to complement, rather than substitute for, RCTs. Most literature about observational studies of effectiveness has examined whether observational studies can be relied on to make judgments about effectiveness when there are no high-quality RCTs on the same research question (Concato et al., 2000; Deeks et al., 2003; Shikata et al., 2006). The committee did not find evidence to support a recommendation about substituting observational data in the absence of data from RCTs. Reasonable criteria for relying on observational studies in the absence of RCT data have been proposed (Glasziou et al., 2007), but little empiric data support these criteria.

The decision to include or exclude observational studies in an SR should be justifiable, explicit, and well documented (Atkins, 2007; Chambers et al., 2009; Chou et al., 2010; CRD, 2009; Goldsmith et al., 2007). Once this decision has been made, authors of SRs of CER should search for observational research, such as cohort and case-control studies, to supplement RCT findings. Less is known about searching for observational studies than for RCTs (Golder and Loke, 2009; Kuper et al., 2006; Wieland and Dickersin, 2005; Wilczynski et al., 2004). The SR team should work closely with a librarian with training and experience in this area and should consider peer review of the search strategy (Sampson et al., 2009).

Documenting the Screening and Selection Process

SRs rarely document the screening and selection process in a way that would allow anyone either to replicate it or to appraise the appropriateness of the selected studies (Golder et al., 2008; Moher et al., 2007a). In light of the subjective nature of study selection and the large volume of possible citations, the importance of maintaining a detailed account of study selection cannot be overstated. Yet, years after reporting guidelines have been disseminated and updated, documentation remains inadequate in most published SRs (Liberati et al., 2009).

Clearly, the search, screening, and selection process is complex and highly technical. The effort required in keeping track of citations, search strategies, full-text articles, and study data is daunting. Experts recommend using reference management software, such as EndNote, RefWorks, or RevMan, to document the process and keep track of the decisions that are made for each article (Cochrane IMS, 2010; CRD, 2009; Elamin et al., 2009; Hernandez et al., 2008; Lefebvre et al., 2008; RefWorks, 2009; Relevo and Balshem, 2011; Thomson Reuters, 2010). Documentation should occur in real time—not retrospectively, but as the search, screening, and selection are carried out. This will help ensure accurate recordkeeping and adherence to protocol.

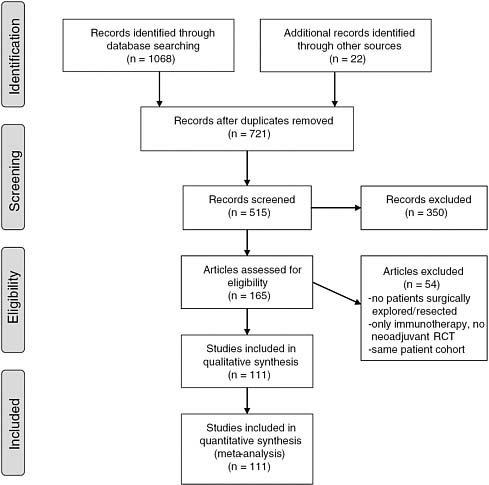

The SR final report should include a flow chart that shows the number of studies that remain after each stage of the selection process.16 Figure 3-1 provides an example of an annotated flow chart. The flow chart documents the number of records identified through electronic databases searched, whether additional records were identified through other sources, and the reasons for excluding articles. Maintaining a record of excluded as well as selected articles is important.
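The counts that feed such a flow chart can be derived directly from a disposition log kept during screening. The following is a minimal sketch under stated assumptions: the record layout, stage names, and exclusion reasons are hypothetical and are not taken from any of the tools or guidance cited above.

```python
from collections import Counter

# Hypothetical screening stages, applied in order.
STAGES = ["title_abstract", "full_text"]


def flow_counts(records):
    """Tally records identified, remaining after each stage, and exclusion reasons.

    Each record is a dict with a "source" key and, for each stage, either
    None (passed the stage) or a string giving the reason for exclusion.
    """
    counts = {
        "identified": len(records),
        "by_source": dict(Counter(r["source"] for r in records)),
    }
    exclusion_reasons = Counter()
    remaining = records
    for stage in STAGES:
        kept = []
        for record in remaining:
            reason = record.get(stage)
            if reason is None:
                kept.append(record)
            else:
                exclusion_reasons[(stage, reason)] += 1
        counts[f"after_{stage}"] = len(kept)
        remaining = kept
    counts["exclusion_reasons"] = dict(exclusion_reasons)
    return counts


# Hypothetical log of five records.
records = [
    {"id": "r1", "source": "database", "title_abstract": None, "full_text": None},
    {"id": "r2", "source": "database", "title_abstract": "wrong population"},
    {"id": "r3", "source": "database", "title_abstract": None, "full_text": "no comparator"},
    {"id": "r4", "source": "handsearch", "title_abstract": None, "full_text": None},
    {"id": "r5", "source": "database", "title_abstract": "wrong population"},
]

summary = flow_counts(records)
```

Because every excluded record keeps its stage and reason, the same log serves both the flow chart and the list of excluded articles.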

RECOMMENDED STANDARDS FOR SCREENING AND SELECTING STUDIES

The committee recommends the following standards for screening and selecting studies for an SR:

Standard 3.3—Screen and select studies

Required elements:

3.3.1 Include or exclude studies based on the protocol’s prespecified criteria

3.3.2 Use observational studies in addition to randomized clinical trials to evaluate harms of interventions

3.3.3 Use two or more members of the review team, working independently, to screen and select studies

3.3.4 Train screeners using written documentation; test and retest screeners to improve accuracy and consistency

3.3.5 Use one of two strategies to select studies: (1) read all full-text articles identified in the search or (2) screen titles and abstracts of all articles and then read the full-text of articles identified in initial screening

3.3.6 Taking account of the risk of bias, consider using observational studies to address gaps in the evidence from randomized clinical trials on the benefits of interventions

16 See Chapter 5 for a complete review of SR reporting issues.

FIGURE 3-1 Example of a flow chart.

SOURCE: Gillen et al. (2010).

Standard 3.4—Document the search

Required elements:

3.4.1 Provide a line-by-line description of the search strategy, including the date of search for each database, web browser, etc.

3.4.2 Document the disposition of each report identified including reasons for their exclusion if appropriate

Rationale

The primary purpose of CER is to generate reliable, scientific information to guide the real-world choices of patients, clinicians, developers of clinical practice guidelines, and others. The committee recommends the above standards and performance elements to address the pervasive problems of bias, errors, and inadequate documentation of the study selection process in SRs. While the evidence base for these standards is sparse, these common-sense standards draw from the expert guidance of AHRQ, CRD, and the Cochrane Collaboration. The recommended performance elements will help ensure scientific rigor and promote transparency—key committee criteria for judging possible SR standards.

The potential for bias to enter the selection process is significant and well documented. SR experts recommend a number of techniques and information sources that can help protect against an incomplete and biased collection of evidence. For example, the selection of studies to include in an SR should be prespecified in the research protocol. The research team must balance the imperative for a thorough search with constraints on time and resources. However, using only one screener does not sufficiently protect against a biased selection of studies. Experts agree that using two screeners can reduce error and subjectivity. Although the associated cost may be substantial, and representatives of several SR organizations did tell the committee and IOM staff that dual screening is too costly, the committee concludes that SRs may not be reliable without two screeners. A two-step process will save the time and expense of obtaining full-text articles until after initial screening of citations and abstracts.

Observational studies are important inputs for SRs of comparative effectiveness. The plan for using observational research should be clearly outlined in the protocol along with other selection criteria. Many CER questions cannot be fully answered without observational data on the potential harms, benefits, and long-term effects. In many instances, trial findings are not generalizable to individual patients. Neither experimental nor observational research should be used in an SR without strict methodological scrutiny.

Finally, detailed documentation of methods is essential to scientific inquiry. It is imperative in SRs. Study methods should be reported in sufficient detail so that searches can be replicated and appraised.

MANAGING DATA COLLECTION

Many but not all SRs on the comparative effectiveness of health interventions include a quantitative synthesis (meta-analysis) of the findings of RCTs. Whether or not a quantitative or qualitative synthesis is planned, the assessment of what is known about an intervention’s effectiveness should begin with a clear and systematic description of the included studies (CRD, 2009; Deeks et al., 2008). This requires extracting both qualitative and quantitative data from each study, then summarizing the details on each study’s methods, participants, setting, context, interventions, outcomes, results, publications, and investigators. Data extraction refers to the process that researchers use to collect and transcribe the data from each individual study. Which data are extracted depends on the research question, types of data that are available, and whether meta-analysis is appropriate.17 Box 3-6 lists the types of data that are often collected.

The first part of this chapter focused on key methodological judgments regarding the search for and selection of all relevant high-quality evidence pertinent to a research question. Data collection is just as integral to ensuring an accurate and fair accounting of what is known about the effectiveness of a health care intervention. Quality assurance and control are especially important because of the substantial potential for errors in data handling (Gøtzsche et al., 2007). The following section focuses on how standards can help minimize common mistakes during data extraction and concludes with the committee’s recommended standard and performance elements for managing data collection.

Preventing Errors

17 Qualitative and quantitative synthesis methods are the subject of Chapter 4.

BOX 3-6 Types of Data Extracted from Individual Studies

General Information

Study Characteristics

Participant Characteristics

Intervention and Setting

Outcome Data/Results

SOURCE: CRD (2009).

Data extraction errors are common and have been documented in numerous studies (Buscemi et al., 2006; Gøtzsche et al., 2007; Horton et al., 2010; Jones et al., 2005; Tramer et al., 1997). Gøtzsche and colleagues, for example, examined 27 meta-analyses published in 2004 on a variety of topics, including the effectiveness of acetaminophen for pain in patients with osteoarthritis, antidepressants for mood in trials with active placebos, physical and chemical methods to reduce asthma symptoms from house dust-mite allergens,