5

The Role of Simulations and Games in Science Assessment

As outlined in previous chapters, simulations and games can increase students’ motivation for science learning, deepen their understanding of important science concepts, improve their science process skills, and advance other important learning goals. However, the rapid development of simulations and games for science learning has outpaced their grounding in theory and research on learning and assessment.

This chapter focuses on assessment of the learning outcomes of simulations and games and their potential to both assess and support student science learning. The first section uses the lens of contemporary assessment theory to identify weaknesses in the assessment of student learning resulting from interaction with simulations and games, as well as weaknesses of science assessment more generally. The next section focuses on the opportunities offered by simulations for enhanced assessment of science learning. The third section discusses similar opportunities for enhanced assessment offered by games. The fourth section describes social and technical challenges to using simulations and games to assess science learning and the research and development needed to address these challenges. The final section presents conclusions.

MEASUREMENT SCIENCE AND SCIENCE ASSESSMENT

The past two decades have seen rapid advances in the cognitive and measurement sciences and an increased awareness of their complementary strengths in understanding and appraising student learning. Knowing What Students Know: The Science and Design of Educational Assessment, a National Research Council report (2001), conceptualized the implications of the integration of these advances for assessment in the form of an “assessment triangle.” This symbol represents the critical idea that assessment is a highly principled process of reasoning from evidence in which one attempts to infer what students know from their responses to carefully constructed and selected sets of tasks or performances. One corner of the triangle represents cognition (theory and data about how students learn), the second corner represents observations (the tasks students might perform to demonstrate their learning), and the third corner represents interpretation (the methods used to draw inferences from the observations). The study committee emphasized that the three elements of the triangle must be closely interrelated for assessment to be valid and informative.

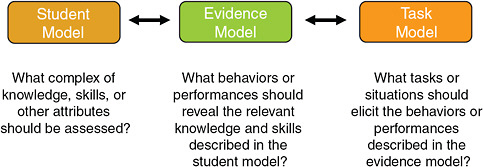

Mislevy et al. (2003) extended this model in a framework known as evidence-centered design (ECD). This framework relates (1) the learning goals, as specified in a model of student cognition; (2) an evidence model specifying the student responses or performances that would represent the desired learning outcomes; and (3) a task model with specific types of questions or tasks designed to elicit the behaviors or performances identified in the evidence model (Messick, 1994). The assessment triangle and ECD frameworks can be used in a variety of ways, including evaluating the quality and validity of particular assessments that have been used to appraise student learning for research or instructional purposes and guiding the design of new assessments. Examples of both applications are described below.
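To make the three ECD models concrete, the sketch below represents them as simple Python data structures and checks for the kinds of alignment gaps discussed in this chapter: learning goals that no evidence addresses, and evidence that no task elicits. The class names, fields, and the `alignment_gaps` helper are hypothetical illustrations, not part of any published ECD toolkit.

```python
from dataclasses import dataclass


@dataclass
class StudentModel:
    # Learning goals (proficiencies) the assessment should support inferences about.
    proficiencies: list[str]


@dataclass
class EvidenceModel:
    # Maps each observable behavior to the proficiency it provides evidence about.
    observable_to_proficiency: dict[str, str]


@dataclass
class TaskModel:
    name: str
    # Observable behaviors this task is designed to elicit.
    elicits: list[str]


def alignment_gaps(student: StudentModel, evidence: EvidenceModel,
                   tasks: list[TaskModel]) -> dict[str, list[str]]:
    """Report learning goals with no evidence and evidence that no task elicits."""
    covered = set(evidence.observable_to_proficiency.values())
    elicited = {obs for task in tasks for obs in task.elicits}
    return {
        "proficiencies_without_evidence":
            [p for p in student.proficiencies if p not in covered],
        "observables_not_elicited":
            sorted(o for o in evidence.observable_to_proficiency if o not in elicited),
    }


# Hypothetical example: evidence for prediction is specified, but no task elicits it.
student = StudentModel(["ecosystem_roles", "inquiry_prediction"])
evidence = EvidenceModel({"correct_food_web_links": "ecosystem_roles",
                          "prediction_matches_outcome": "inquiry_prediction"})
tasks = [TaskModel("draw_food_web", ["correct_food_web_links"])]
print(alignment_gaps(student, evidence, tasks))
# {'proficiencies_without_evidence': [], 'observables_not_elicited': ['prediction_matches_outcome']}
```

Keeping the three models as separate structures mirrors the framework's point that each must be specified explicitly before the links among them can be checked.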

Limitations of Assessments Used to Evaluate Learning with Simulations and Games

Quellmalz, Timms, and Schneider (2009) used ECD (see Figure 5-1) as a framework to evaluate assessment practices used in recent research on science simulations. The authors reviewed 79 articles that investigated the use of simulations in grades 6-12 and included reports of measured learning outcomes, drawing on a study by Scalise et al. (2009).

The authors found that the assessments included in the research on student learning outcomes rarely reflected the integrated elements of this framework. The studies tended not to describe in detail the learning outcomes targeted by the simulation (the student model), how tasks were designed to provide evidence related to this model (the task and evidence models), or the approach used to interpret the evidence and reach conclusions about student performance (the interpretation component of the assessment triangle). The lack of attention to the desired learning outcomes led to a lack of alignment between the assessment tasks used and the capabilities of simulations. Simulations often engage students in science processes in virtual environments, presenting them with interactive tasks that yield rich streams of data. Although these data could provide evidence of science process skills and other science learning goals that are difficult to measure via conventional items and tests, such data were rarely used for assessment purposes. Instead, most of the studies used paper and pencil tests to measure only one science learning goal: conceptual understanding.

FIGURE 5-1 Evidence-centered design of assessments.

SOURCE: Quellmalz et al. (2009). Reprinted with permission.

The lack of description of the desired learning goals and how the tasks were related to these goals made it impossible to evaluate the depth of conceptual understanding or the nature of science process skills measured in the studies of simulations (Quellmalz, Timms, and Schneider, 2009). In addition, the limited descriptions of the assessment items and data on item and task quality made it impossible to evaluate the technical quality of the assessment items or their validity for drawing inferences about the efficacy of simulations to enhance student learning. Finally, the studies did not always describe how the assessment results could be used by researchers, teachers, or other potential users and for which user group the results might be most appropriate.

When Quellmalz, Timms, and Schneider (2009) applied the ECD framework to evaluate assessments of student outcomes in recent studies of games, they concluded that research on how to effectively assess the learning outcomes of playing games is still in its infancy. As was the case with simulations, the studies often failed to specify the desired learning outcomes or how assessment tasks and items had been designed to measure these outcomes. Furthermore, game developers and researchers rarely tapped the capacity of the technology to embed assessment and learning in game play.

In an education system driven by standards and external, large-scale assessments, simulations and games are unlikely to be more widely used until their capacity to advance science learning goals can be demonstrated via assessment results. Such results, in turn, will require alternate forms of evidence and improved assessment methods. At the same time, improved assessment methods that draw on the capabilities of simulations and games to measure important student learning outcomes have potential to address some of the major weaknesses of current science assessment, as discussed below.

Limitations of Assessments Used to Evaluate Science Learning

Most large-scale science assessment programs operated by states and school districts are largely incapable of measuring the multiple science learning goals that simulations and games support. The states administer summative assessments to measure student science achievement. These assessments reflect current state science standards, which frequently give greater weight to conceptual understanding than to other learning goals and typically include long lists of science topics that students are expected to master each year¹ (Duschl, 2004; National Research Council, 2006). Although science standards in the majority of states also address science processes and understanding of the nature of science, they do not always explicitly describe the performances associated with meeting these learning goals, making it difficult to align assessments with these elements of the standards (National Research Council, 2006).

Most large-scale science assessments use paper and pencil formats and are composed primarily of selected-response (multiple-choice) tasks, making them well suited to testing student knowledge of the many content topics included in state science standards. Although they can provide a snapshot of some science process skills, they do not adequately measure others, such as formulating scientific explanations or communicating scientific understanding (Quellmalz et al., 2005). They cannot assess students’ ability to design and execute all of the steps involved in carrying out a scientific investigation (National Research Council, 2006). A few states have developed standardized classroom assessments of science process skills, providing uniform kits of materials that students use to carry out hands-on laboratory tasks; this approach has also been used in the National Assessment of Educational Progress (NAEP) science test. However, because administering and scoring the hands-on tasks can be cumbersome and expensive, this approach is rarely used in state achievement tests (National Research Council, 2005b).

Another problem of current science assessment is its lack of coherence as a system (National Research Council, 2005b, 2006). While states and school districts use summative assessments to evaluate overall levels of student science achievement, teachers use formative assessments to provide diagnostic feedback during instruction, so that teaching and learning can be adapted to meet student needs. In most states and school districts, these different types and levels of science assessment are designed and administered separately. Often they are not well aligned with each other, nor are they linked closely with curriculum and instruction to advance the science learning goals specified in state science standards. As a result, the multiple forms and levels of assessment results can yield conflicting or incomplete information about student science learning (National Research Council, 2006).

Despite repeated calls for improvement (National Research Council, 2005b, 2006, 2007), science assessment has been slow to change. Simulations and games offer new possibilities for improvement in the assessment of critical forms of knowledge and skill that are deemed to be important targets for science learning (National Research Council, 2007). As such, both science learning and assessment stand to benefit from tapping the possibilities offered by simulations and games.

ASSESSMENT OPPORTUNITIES IN SIMULATIONS

New Paradigms in Large-Scale Summative Assessment

A new generation of assessments is attempting to break the mold of traditional, large-scale summative testing practices through the use of current technology and media (Quellmalz, Timms, and Schneider, 2009). Simulations are being designed to measure deep conceptual understanding and science process skills that are difficult to assess using paper and pencil tests or hands-on laboratory tasks. This new paradigm in assessment design and use aims to align summative assessment more closely to the processes and contexts of learning and instruction, particularly in science (Quellmalz and Pellegrino, 2009).

By allowing learners to interact with representations of phenomena, simulations expand the range of situations that can be used to provide interesting and challenging problems to be solved. This, in turn, allows testing of conceptual understanding and science process skills that are not tested well, or at all, in a static format. Simulations also support adaptive testing, which adjusts the items or tasks presented on the basis of the learner’s responses, and they can log learners’ problem-solving sequences as they investigate scientific phenomena. Finally, because simulations use multiple modalities to represent science systems and to elicit student responses, English language learners, students with disabilities, and low-performing students may be better able to demonstrate their knowledge and skills through simulations than when responding to text-laden print tests (Kopriva, Gabel, and Bauman, 2009).
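As a rough illustration of adaptive testing combined with logging, the following sketch uses a simple up/down rule: the next task is one difficulty level harder after a correct response and one level easier after an error, and every response is logged. The number of levels, the starting level, and the `AdaptiveSession` class are assumptions for illustration; operational adaptive tests typically select items with item response theory models rather than a fixed step rule.

```python
from dataclasses import dataclass, field


@dataclass
class AdaptiveSession:
    """Hypothetical up/down rule: harder task after a correct answer, easier after an error."""
    levels: int = 5   # number of difficulty levels (assumed)
    level: int = 2    # starting level, 0-indexed (assumed)
    log: list[tuple[int, bool]] = field(default_factory=list)

    def record(self, correct: bool) -> int:
        # Keep the (level, outcome) trace, like a simulation's problem-solving log.
        self.log.append((self.level, correct))
        if correct:
            self.level = min(self.level + 1, self.levels - 1)
        else:
            self.level = max(self.level - 1, 0)
        return self.level  # difficulty level of the next task


session = AdaptiveSession()
next_levels = [session.record(c) for c in [True, True, True, False]]
print(next_levels)  # [3, 4, 4, 3]
```

Here a student who answers three tasks correctly and then misses one moves up to the top level and back down one step, while the log preserves the full sequence of levels and outcomes for later analysis.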

The use of short simulation scenarios in large-scale summative assessments is increasing in national, international, and state science testing programs (see Box 5-1). These examples demonstrate the capacity of simulations to generate evidence of students’ summative science achievement levels, including measures of science process skills and other science learning goals seldom tapped in paper-based tests (Quellmalz and Pellegrino, 2009).

New Paradigms in Integrating Assessment with Instruction

Formative assessments are intended to measure student progress during instruction, providing timely feedback to support learning. Simulations are well suited to the data collection, complex analysis, and individualized feedback needed for formative assessment (Brown, Hinze, and Pellegrino, 2008). They can be used to collect evidence related to students’ inquiry approaches and strategies, reflected in the features of the virtual laboratory tools they manipulate, the information they select, the sequence and number of trials they attempt, and the time they allocate to different activities. Simulations can also provide adaptive tasks, reflecting student responses, as well as immediate, individualized feedback and customized, graduated coaching. Technology can be used to overcome constraints to the systematic use of formative assessment in the classroom, allowing measurement of skills and deep understandings in a feasible and cost-effective manner (Quellmalz and Haertel, 2004).
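The kinds of evidence listed above (tools manipulated, information selected, sequence and number of trials, and time allocation) can be derived from a time-stamped log of student actions. The sketch below assumes a hypothetical log format of `(timestamp, activity, detail)` tuples with activity names such as `use_tool` and `run_trial`; real simulations record their own event schemas, and the feature definitions here are illustrative only.

```python
from collections import Counter, defaultdict

# Hypothetical log format: (timestamp in seconds, activity, detail).
Event = tuple[float, str, str]


def inquiry_features(events: list[Event]) -> dict:
    """Summarize a simulation log into simple formative-assessment features."""
    events = sorted(events)  # order by timestamp
    tools = Counter(detail for _, activity, detail in events if activity == "use_tool")
    trials = sum(1 for _, activity, _ in events if activity == "run_trial")
    # Time allocated to an activity = gap until the next event;
    # the final event contributes no time because its end is unknown.
    time_by_activity = defaultdict(float)
    for (t, activity, _), (t_next, _, _) in zip(events, events[1:]):
        time_by_activity[activity] += t_next - t
    return {
        "tools_used": dict(tools),
        "trials": trials,
        "sequence": [activity for _, activity, _ in events],
        "time_by_activity": dict(time_by_activity),
    }


log = [(0, "read_info", "habitat"), (10, "use_tool", "thermometer"),
       (25, "run_trial", "trial 1"), (40, "run_trial", "trial 2"),
       (70, "submit", "answer")]
print(inquiry_features(log)["time_by_activity"])
# {'read_info': 10.0, 'use_tool': 15.0, 'run_trial': 45.0}
```

Features like these are raw material; interpreting them still requires an evidence model that says which patterns indicate more or less effective inquiry.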

Reflecting their potential to support both formative and summative assessment, simulations and games offer the possibility of designing digital and mixed media curricula that integrate assessment with instruction.

An Example of an Integrated Science Learning Environment

SimScientists is an ongoing program of research and development focusing on the use of simulations as environments for formative and summative assessment and as curriculum modules to supplement science instruction (Quellmalz et al., 2008). One of these projects, Calipers II, provides an example of this type of integrated digital learning environment (Quellmalz, Timms, and Buckley, in press). It is a simulation-based curriculum unit that embeds a sequence of assessments designed to measure student understanding of components of an ecosystem and roles of organisms in it, interactions in the ecosystem, and the emergent behaviors that result from these interactions (Buckley et al., 2009).

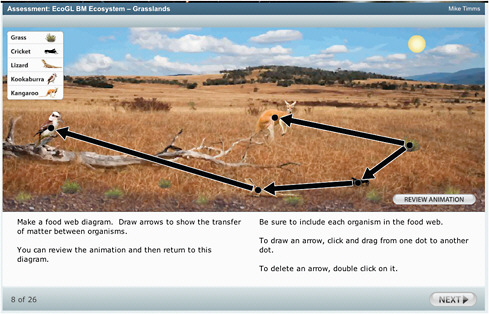

The summative assessment is designed to provide evidence of middle school students’ understanding of ecosystems and inquiry practices after completion of the curriculum unit on ecosystems. Students are presented with the overarching problem of preparing a report describing an Australian grassland ecosystem for an interpretive center. Working with simulations, they investigate the roles and relationships of the animals, birds, insects, and grass in the ecosystem by observing animations of the interactions of these

|

BOX 5-1 Technology-Based Science Assessment in Large-Scale Assessment Programs Information and communications technologies expand the range of knowledge and cognitive skills that can be assessed beyond what is measured in conventional paper and pencil tests. The computer’s ability to capture student inputs while he or she is performing complex, interactive tasks permits the collection of evidence of such processes as problem solving and strategy use as reflected by the information selected, numbers of attempts, and time allocation. Such data can be combined with statistical and measurement algorithms to extract patterns associated with varying levels of expertise. In addition, technology can be used for adaptive testing that integrates diagnosis of errors with student and teacher feedback. Propelled by these trends, technology-based science tests are increasingly appearing in state, national, and international testing programs. The area of science assessment is perhaps leading the way in exploring the presentation and interpretation of complex, multifaceted problem types and assessment approaches. In 2006 and 2009, the Programme for International Student Assessment pilot-tested the Computer-Based Assessment of Science (CBAS), designed to measure science knowledge and inquiry processes not assessed in paper-based test booklets. CBAS tasks include scenario-based item and task sets, such as investigations of the temperature and pressure settings for a simulated nuclear reactor. The 2009 National Assessment of Educational Progress (NAEP) science test included Interactive Computer Tasks designed to test students’ ability to engage in science inquiry practices. These simulation-based tasks measure scientific understanding and inquiry skills more accurately than do paper and pencil tests. 
The 2012 NAEP Technological Literacy Assessment will include simulations designed to assess how well students can use information and communications technology tools and their ability to engage in the engineering design process. At the state level, Minnesota has an online science test with tasks engaging students in simulated laboratory experiments or investigations of such phenomena as weather and the solar system. Bennett et al. (2007) pioneered the design of simulation-based assessment tasks that were included in the 2009 NAEP science test. In one such task, the students were presented with a scenario involving a helium balloon and asked to determine how different payload masses affect the altitude of the balloon. They could design a virtual experiment, manipulate parameters, run their experiment, record their data, and graph the results. The students could obtain various types of data and plot their relationships before reaching a conclusion and typing in a final response. SOURCE: Adapted from Quellmalz and Pellegrino (2009). |

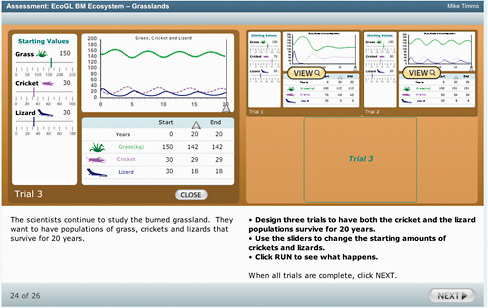

organisms. In one task, students draw a food web representing interactions among the organisms in the ecosystem (see Figure 5-2). Students then conduct investigations with the simulation to predict, observe, and explain what happens to population levels when the numbers of particular organisms are varied (see Figure 5-3). In a culminating task, students present their findings about the grasslands ecosystem.

To assess transfer of learning, the curriculum unit engages students with a companion simulation focusing on a different ecosystem (a mountain lake). Formative assessment tasks embedded in this simulation identify the types of errors individual students make, and the system follows up with feedback and graduated coaching. The levels of feedback and coaching progress from notifying the student that an error has occurred and asking him or her to try again, to showing results of investigations that met the specifications.
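A minimal sketch of how an embedded task might classify errors and escalate coaching, assuming a food web is stored as a set of directed (prey, predator) links and compared with a reference web. The error categories, the `food_web_errors` and `coaching_message` names, and the wording of the middle coaching level are hypothetical; only the first and last levels paraphrase the progression described above.

```python
# Hypothetical representation: a food web is a set of (prey, predator) links.
Link = tuple[str, str]


def food_web_errors(student: set[Link], reference: set[Link]) -> dict[str, set[Link]]:
    """Classify differences between a student's food web and a reference web."""
    missing = reference - student
    extra = student - reference
    # A link drawn in the wrong direction appears as an extra link whose reverse is missing.
    reversed_links = {(a, b) for (a, b) in extra if (b, a) in missing}
    return {
        "reversed": reversed_links,
        "missing": missing - {(b, a) for (a, b) in reversed_links},
        "extra": extra - reversed_links,
    }


# Assumed coaching ladder, from least to most support.
COACHING_LEVELS = [
    "An error has occurred. Please try again.",
    "Hint: review the type of error identified in your work.",  # hypothetical middle level
    "Here are the results of an investigation that met the specifications.",
]


def coaching_message(failed_attempts: int) -> str:
    """Escalate support with each failed attempt, capping at the most supportive level."""
    index = min(max(failed_attempts - 1, 0), len(COACHING_LEVELS) - 1)
    return COACHING_LEVELS[index]


reference = {("grass", "grasshopper"), ("grasshopper", "bird")}
drawn = {("grasshopper", "grass"), ("grasshopper", "bird"), ("grass", "bird")}
print(food_web_errors(drawn, reference)["reversed"])  # {('grasshopper', 'grass')}
```

Distinguishing a reversed arrow from a missing or extra link is what lets the feedback target the specific misconception rather than simply marking the response wrong.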

The new curriculum unit shows promise in addressing two weaknesses of current science assessment. First, it assesses science process skills as well as other learning goals beyond the science content emphasized in current science tests. Second, it is designed to increase coherence in assessment systems. The researchers are collaborating with several state departments of education to integrate the assessments into classroom-level formative assessment and district- and state-level summative assessment. The goal is to create balanced systems in which district, classroom, and state tests are nested, mutually informed, and aligned.

FIGURE 5-2 Screenshot of SimScientists Ecosystems Benchmark Assessment showing a food web diagram produced by a student.

SOURCE: Quellmalz, Timms, and Schneider (2009). Reprinted with permission.

FIGURE 5-3 Screenshot of SimScientists Ecosystems Benchmark Assessment showing a student’s investigations with the interactive population model.

SOURCE: Quellmalz, Timms, and Schneider (2009). Reprinted with permission.

An Example of an Integrated Environment for Problem Solving

Another example of assessment embedded in a simulation-based learning environment illustrates how the resulting data on student learning can be made useful and accessible in the classroom (Stevens, Beal, and Sprang, 2009). Interactive Multimedia Exercises (IMMEX) is an online library of science simulations that incorporate assessment of students’ problem-solving performance, progress, and retention. Each problem set presents authentic real-world situations that require complex thinking. Originally created for use in medical school, IMMEX has been used to develop and assess science problem solving among middle, high school, and undergraduate science students as well as medical students.

One IMMEX problem set, Hazmat, asks students to use multiple chemical and physical tests to identify an unknown toxic spill. The learning environment randomly presents 39 different problem cases that require students to identify an unknown compound and tracks their actions and strategies as they gather information and solve the problems. Simple measures provide information on whether students solved the problem and the time required to reach the solution. More sophisticated measures assess students’ strategies as they navigate the problem-solving tasks, and the two types of measures are combined to create learning trajectories.
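One way to picture how simple measures and strategy measures might be combined into a learning trajectory is sketched below. IMMEX’s actual strategy-modeling methods are not described here, so the rule-based labels (`exhaustive`, `targeted`, `guessing`), the 0.75 threshold, and the `Attempt` fields are assumptions chosen only for illustration.

```python
from dataclasses import dataclass


@dataclass
class Attempt:
    solved: bool              # simple measure: was the compound identified?
    minutes: float            # simple measure: time to reach a solution
    tests_ordered: list[str]  # raw actions used to infer a strategy


def label_strategy(attempt: Attempt, total_tests: int) -> str:
    """Hypothetical rule: label a strategy by how much of the available data was gathered."""
    share = len(set(attempt.tests_ordered)) / total_tests
    if share >= 0.75:
        return "exhaustive"  # ordered most of the available tests
    if attempt.solved:
        return "targeted"    # few tests, correct identification
    return "guessing"        # few tests, incorrect identification


def trajectory(attempts: list[Attempt], total_tests: int) -> list[tuple[int, bool, float, str]]:
    """Pair the simple measures (solved, time) with a strategy label for each attempt."""
    return [(n, a.solved, a.minutes, label_strategy(a, total_tests))
            for n, a in enumerate(attempts, start=1)]


all_tests = [f"test{i}" for i in range(8)]
attempts = [Attempt(False, 20, all_tests), Attempt(True, 12, all_tests),
            Attempt(True, 5, ["flame", "litmus"])]
for step in trajectory(attempts, total_tests=10):
    print(step)
# (1, False, 20, 'exhaustive')
# (2, True, 12, 'exhaustive')
# (3, True, 5, 'targeted')
```

Read in order, such a trajectory shows a student moving from gathering nearly all available data toward a faster, more targeted approach, which neither the solved/unsolved measure nor the time measure would reveal alone.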

As students of various ages work in IMMEX, they typically develop a consistent strategy after they have encountered a particular problem approximately four times (Cooper and Stevens, 2008). They tend to persist in this strategy over time, and their strategies are highly influenced by the teacher’s model of problem solving. To help teachers intervene quickly and assist students in developing efficient, effective problem-solving strategies, IMMEX developers have created an online “digital dashboard.” It provides whole-class information so that the teacher can compare progress across classes, and it also graphically displays the distribution of individual student performances in each class. The teacher may respond to the information by providing differentiated instruction to individual students, groups of students, or entire classes before asking them to continue solving problems. This example illustrates the potential of simulations to facilitate formative assessment by rapidly providing feedback that teachers can use to tailor instruction to meet individual learning needs.
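The observation that students settle on a consistent strategy after about four encounters suggests a simple check a dashboard could run. In the sketch below, a strategy counts as stable once the same label appears on `run` consecutive attempts (an assumed definition), and a class-level summary counts students by their latest strategy label. Both functions are hypothetical and are not taken from the IMMEX dashboard.

```python
from collections import Counter
from typing import Optional


def stabilization_point(strategies: list[str], run: int = 3) -> Optional[int]:
    """Return the 1-based attempt at which a strategy begins repeating `run` times
    in a row (an assumed definition of "consistent"), or None if it never does."""
    if run < 2:
        raise ValueError("run must be at least 2")
    streak = 1
    for i in range(1, len(strategies)):
        streak = streak + 1 if strategies[i] == strategies[i - 1] else 1
        if streak >= run:
            return i - run + 2  # 0-based start of the run, converted to 1-based
    return None


def class_distribution(latest_strategy: dict[str, str]) -> dict[str, int]:
    """Count students by their most recent strategy label, as a dashboard view might."""
    return dict(Counter(latest_strategy.values()))


history = ["guessing", "exhaustive", "targeted", "targeted", "targeted"]
print(stabilization_point(history))  # 3
print(class_distribution({"s1": "targeted", "s2": "guessing", "s3": "targeted"}))
# {'targeted': 2, 'guessing': 1}
```

Because students tend to persist in whatever strategy they settle on, flagging the point of stabilization early is what would give a teacher the chance to intervene before an inefficient strategy becomes entrenched.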

ASSESSMENT OPPORTUNITIES IN GAMES

Although assessment of the learning outcomes of games is still at an early stage, work is under way to embed assessment in games in ways that support both assessment and learning. Quellmalz, Timms, and Schneider (2009) illustrate both the weaknesses of current assessment methods and these new opportunities by examining three games designed for science learning: Quest Atlantis: Taiga Park, River City, and Crystal Island. None of these games currently incorporates assessments of learning as core game play elements, but researchers are beginning to conceptualize, develop, and integrate dynamic assessment tasks in each one. The three games, which take a similar approach to immersing learners in simulated investigations, are not meant to represent the entire field of serious science games.

In Quest Atlantis: Taiga Park, students engage with virtual characters and data in order to evaluate competing explanations for declining fish populations in the Taiga River. Currently, assessment of learning in the game is undertaken by classroom teachers who score the written mission reports submitted by students (Hickey, Ingram-Goble, and Jameson, 2009). Shute et al. (2009) propose to develop “stealth” assessment in Taiga Park, embedding performance tasks so seamlessly within game play that they are not noticed by the student playing the game. The proposed approach, which would allow monitoring of student progress and drive automated feedback to students, requires much further research, development, and validation.

In the game-based curriculum unit River City, students conduct virtual investigations to identify the cause of an illness and recommend strategies to combat it (Nelson, 2007; see Chapter 3). Most assessment is undertaken by teachers, who use rubrics to score each student’s final written product: a recommendation letter to the mayor of River City. River City also engages students in self-assessment, as teams compare their research findings with those of other teams in their class. The game also incorporates some digital assessment, implemented in an embedded individualized guidance system that uses interaction histories to offer real-time, customized support for students’ investigations. Nelson (2007) found a statistically significant positive relationship between levels of use of the guidance system and students’ gain scores on a test of content knowledge. On average, boys used the guidance system less than girls did and performed more poorly.

Crystal Island is a narrative-centered learning environment built on a commercial game platform. In this virtual world, students play the role of Alyx, the protagonist who is trying to discover the identity and source of an unidentified infectious disease. Students move their avatar around the island, manipulating objects, taking notes, viewing posters, operating lab equipment, and talking with nonplayer characters to gather clues about the disease’s source. To progress through the mystery, students must form questions, generate hypotheses, collect data, and test their hypotheses. Students encounter five different problems related to diseases and finally select an appropriate treatment plan for the sickened researchers.

Assessment in Crystal Island is evolving. Currently it is mainly embedded in the reaction of in-game characters to the student’s avatar. Researchers have been gradually building pedagogical agents into the game that attempt to gauge the student’s emotional state while learning (anger, anxiety, boredom, confusion, delight, excitement, flow, frustration, sadness, fear) and react with appropriate empathy to support the student’s problem-solving activities (McQuiggan, Robison, and Lester, 2008; Robison, McQuiggan, and Lester, 2009). Students playing this game take notes as they navigate through the virtual world, trying to identify the cause of a disease. Researchers scored these notes, using rubrics to place each student’s notes into one of five categories representing progressively higher levels of science content knowledge and inquiry skills (McQuiggan, Robison, and Lester, 2008). For example, students whose notes included a hypothesis about the problem performed better on the posttests of content knowledge, so these notes were placed in a higher category than notes that did not include a hypothesis. Although the scoring process was time-consuming, it illuminated the importance of scaffolding students in their efforts to generate hypotheses.

McQuiggan, Robison, and Lester (2008) investigated whether machine learning techniques could be applied to create measurement models that use information from student notes to successfully predict the note-taking categories as judged by human scorers. Their research indicates that Bayes nets and other methods (discussed further below) could be applied to score student notes in real time. Application of such methods would reduce the costs of a scoring system that provides evidence of students’ conceptual understanding and science process skills, skills that are difficult to measure using paper and pencil tests.
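To illustrate the general idea of predicting human-assigned note categories from note text, the sketch below uses a multinomial naive Bayes classifier, a very simple Bayesian network in which words are assumed independent given the category. This is a swapped-in simplification, not the models McQuiggan, Robison, and Lester (2008) actually used; the whitespace tokenization, the add-one smoothing, and the tiny training set are likewise assumptions.

```python
import math
from collections import Counter, defaultdict


class NaiveBayesNoteScorer:
    """Multinomial naive Bayes over note words, with add-one (Laplace) smoothing."""

    def fit(self, notes: list[str], categories: list[int]) -> "NaiveBayesNoteScorer":
        self.class_counts = Counter(categories)
        self.word_counts = defaultdict(Counter)
        for note, category in zip(notes, categories):
            self.word_counts[category].update(note.lower().split())
        self.vocab = {w for counts in self.word_counts.values() for w in counts}
        return self

    def predict(self, note: str) -> int:
        # Words never seen in training are ignored.
        words = [w for w in note.lower().split() if w in self.vocab]
        total = sum(self.class_counts.values())
        best_category, best_score = None, -math.inf
        for category, n_notes in self.class_counts.items():
            counts = self.word_counts[category]
            denom = sum(counts.values()) + len(self.vocab)
            # Log prior plus summed log likelihoods of the note's words.
            score = math.log(n_notes / total)
            score += sum(math.log((counts[w] + 1) / denom) for w in words)
            if score > best_score:
                best_category, best_score = category, score
        return best_category


# Hypothetical human-scored notes: category 2 notes state a hypothesis, category 1 notes do not.
notes = ["hypothesis the water is contaminated",
         "i think the hypothesis is bacteria in food",
         "saw a poster", "talked to nurse"]
model = NaiveBayesNoteScorer().fit(notes, [2, 2, 1, 1])
print(model.predict("my hypothesis is contaminated food"))  # 2
```

In practice such a model would be trained on a large set of notes already scored by human raters with the rubric, and its predictions checked against held-out human scores before being used to score notes in real time.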

These three examples suggest that researchers and game developers are making some progress toward improved assessment of student learning as a result of game play activity, as well as assessment within game play to support better overall student learning. In their proposal to use evidence-centered design as a framework for assessment design in Taiga Park, Shute et al. (2009) recognize the importance of clearly specifying desired learning outcomes and designing assessment tasks to provide evidence related to these outcomes. Nelson (2007) provides evidence that carefully designed embedded assessment in River City supports development of conceptual understanding. And the work on Crystal Island shows the potential of carefully designed assessment methods (in this case, scoring of student notes) to yield information that can inform design of online learning environments to support development of science process skills. The work also shows the potential of new measurement methods to draw inferences about student science learning from patterns derived from the extensive data generated by students’ interactions with the characters, contexts, and scenarios that are found in games (McQuiggan, Robison, and Lester, 2008).

Another example of current efforts to integrate learning and assessment is provided by the Cisco Networking Academy, a global education program that teaches students how to design, build, troubleshoot, and secure computer networks. The academy’s online training curriculum uses simulations and games. Behrens (2009) notes that, historically, the developers of the training curriculum created content that was loaded into a media shell for students to navigate. The software architecture of the curriculum was separate from that of the assessment system, even though the curriculum included embedded quizzes and simulation software (Frezzo, Behrens, and Mislevy, in press). More recently, the developers have begun to transfer performance data from the simulation activities in the curriculum to a business intelligence dashboard that would help instructors and students make sense of the large amount of performance data that is generated by the students’ interactions with the simulation. Current research and development aims to make assessment a ubiquitous, unobtrusive element that supports learning in the digital learning environment.

SOCIAL AND TECHNICAL CHALLENGES

Social Challenges

The costs of new forms of assessment embedded in simulations and games could present a challenge to their wider use. Selected-response items, like those used in current large-scale science tests, can be scored by computer and are relatively inexpensive (National Research Council, 2002). Tests incorporating open-ended items that must be scored by humans are much more expensive to develop and score (Hamilton, 2003), although progress is being made in machine scoring of more complex test items. The states might be able to reduce the costs of new types of assessments by sharing assessment and task designs as well as data and reporting infrastructure. The current development of state assessment consortia, in response to the U.S. Department of Education’s Race to the Top initiative to develop a new generation of high-stakes assessments, offers a vehicle for sharing the costs of all types of assessments, including ones designed to be used in simulation or gaming environments.

Another challenge is related to the role of the teacher. As mentioned previously, the teacher plays an important role in both supporting and assessing learning through simulations and games. While assessments embedded in simulations and games can provide timely, useful information to guide instruction, the extent to which a teacher uses this information may strongly influence how much learning takes place. If assessment were more widely incorporated in simulations and games, a large-scale teacher professional development effort would be needed to support and assist teachers in making use of the new information on individual students’ progress. Teachers could be provided with instruction and practice related to how to use simulations and games for teaching as well as for aligned assessment purposes. At the same time, developers would need to consider how to make the assessment information most useful for teachers—as the developers of IMMEX have done in creating the online digital dashboard. The Cisco Networking Academy includes a comprehensive assessment authoring interface that allows instructors both to use simulation-based assessment and to customize or create their own assessment items.

Technical Challenges and Emerging Solutions

Perhaps the most important technical challenge to embedding assessment in simulations and games is how to make use of the rich stream of data and complex patterns generated as learners interact with these technologies to reliably and validly interpret their learning. Simulations and games engage learners in complex tasks. As defined by Williamson, Bejar, and Mislevy (2006), complex tasks have four characteristics:

- Completion of the task requires the student to undergo multiple, nontrivial, domain-relevant steps or cognitive processes. For example, as shown in Figures 5-2 and 5-3, students in the SimScientists assessment first observe a simulated ecosystem, noting the behaviors of the organisms, then construct a food web to represent their observations, and finally use a population model tool to vary the number of organisms in the ecosystem and observe outcomes over time.

- Multiple elements, or features, of each task performance are captured and considered to determine the summative performance or provide diagnostic feedback. Simulations and games are able to do this, capturing a wide range of student responses and actions, from standard multiple-choice tasks and short written responses to actions like gathering quantitative evidence on fish, water, and sediment in a lake (Squire and Jan, 2007).

- There is a high degree of potential variability in the types of data provided for each task, reflecting the relatively unconstrained learning activities in simulations and games. For example, some simulations record the time a student takes to perform a task, but more or less time spent does not necessarily reflect more or less effective performance. Unless it is considered together with other variables describing task performance, time is difficult to interpret.

- The measurement of the adequacy of task solutions requires the task features to be considered as an interdependent set, rather than as conditionally independent. Simulations and games can mimic real-world scenarios and thereby make the assessment more authentic, which in turn could enhance its validity. At the same time, however, the use of these complex tasks reduces the number of measures that can be included in any one test, thereby reducing reliability as typically construed in large-scale testing contexts.
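Two standard psychometric relations help explain the fourth characteristic. Most IRT models assume local (conditional) independence: given ability θ, responses to the J items are independent, so the probability of a whole response pattern is the product of the item probabilities. Linked steps within one simulation task, such as a food web whose quality shapes a later population-model prediction, violate that factorization. The Spearman-Brown formula shows how reliability ρ changes when a test is lengthened or shortened by a factor n, assuming parallel items; the 40-item test with reliability 0.80 used here is a hypothetical illustration.

```latex
P(X_1 = x_1, \ldots, X_J = x_J \mid \theta) = \prod_{j=1}^{J} P(X_j = x_j \mid \theta)

\rho_{\text{new}} = \frac{n\rho}{1 + (n - 1)\rho},
\qquad n = \frac{10}{40} = 0.25,\quad \rho = 0.80
\;\Rightarrow\;
\rho_{\text{new}} = \frac{0.25 \times 0.80}{1 - 0.75 \times 0.80} = \frac{0.20}{0.40} = 0.50
```

Under these assumptions, cutting the test to a quarter of its length lowers reliability from 0.80 to 0.50.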

As illustrated by these four characteristics, engaging students in complex tasks yields diverse sequences of student behaviors and performances. Assessment requires drawing inferences in real time about student learning from these diverse behaviors and performances. However, most conventional psychometric theory and methods are not well suited for such modeling and interpretation. To overcome these limitations, researchers are pursuing a variety of applications of current methods, such as item response theory (IRT), while also exploring new methods better suited to modeling assessment data derived from complex tasks. Such new methods can accommodate uncertainty about the current state of the learner, model patterns of student behavior, and be used to provide the basis for immediate feedback during task performance (Quellmalz, Timms, and Schneider, 2009).

IRT is one existing method often used for conventional large-scale tests that shows promise for application to assessment of learning with games and simulations. IRT models place estimates of student ability and item difficulty on the same linear scale, so that the difference between a student’s ability estimate and the item difficulty can be used to interpret student performance. This method could be useful in determining how much help students need when solving problems in an intelligent learning environment, by measuring the gap between item difficulty and current learner ability (Timms, 2007). In a study of IMMEX, Stevens, Beal, and Sprang (2009) used IRT analysis to distinguish weaker from stronger problem solvers among 1,650 chemistry students using the Hazmat problem set. The IRT analysis informed further research in which the authors compared the different learning strategies of weaker and stronger problem solvers in several different classrooms and tested interventions designed to improve students’ problem solving.
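The idea of placing ability and difficulty on one scale can be sketched with the simplest IRT model, the one-parameter logistic (Rasch) model. This is a minimal sketch, not the model used in the IMMEX study: item difficulties are assumed to be already calibrated, ability is estimated by Newton-Raphson maximum likelihood, and the thresholds in the hypothetical `hint_level` rule are illustrative only.

```python
import math
from typing import Sequence


def p_correct(theta: float, b: float) -> float:
    """Rasch (1PL) probability that a learner of ability theta solves an item of
    difficulty b. Both sit on one logit scale, so only their difference matters."""
    return 1.0 / (1.0 + math.exp(-(theta - b)))


def estimate_ability(responses: Sequence[int], difficulties: Sequence[float],
                     max_iter: int = 50, tol: float = 1e-10) -> float:
    """Maximum-likelihood ability estimate by Newton-Raphson, treating item
    difficulties as known. responses are 0/1 scores aligned with difficulties."""
    if all(responses) or not any(responses):
        raise ValueError("ML estimate is unbounded for all-correct or all-incorrect patterns")
    theta = 0.0
    for _ in range(max_iter):
        probs = [p_correct(theta, b) for b in difficulties]
        gradient = sum(x - p for x, p in zip(responses, probs))  # d logL / d theta
        information = sum(p * (1.0 - p) for p in probs)          # -d2 logL / d theta2
        step = gradient / information
        theta += step
        if abs(step) < tol:
            break
    return theta


def hint_level(theta: float, b: float) -> str:
    """Hypothetical scaffolding rule keyed to how far an item sits above the learner."""
    gap = b - theta
    if gap <= 0.0:
        return "none"
    if gap <= 1.0:
        return "light"
    return "detailed"
```

For example, a learner who answers two of three items of difficulty 0 correctly receives an ability estimate of ln 2 ≈ 0.69; an item of difficulty 1.5 then sits about 0.8 logits above that estimate, so the hypothetical rule offers light help.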

Researchers are also applying and testing machine learning methods² to allow computers to infer behavior patterns based on the large amounts of data generated by students’ interactions with simulations and games. One promising method is the Bayes net (also called a Bayesian network). The use of Bayes nets in assessment, including assessment in simulations and games, has grown (Martin and VanLehn, 1995; Mislevy and Gitomer, 1996). For example, Bayes nets are used to score the ecosystems benchmark assessments in SimScientists, and Cisco Networking Academy staff have used this method to assess examinees’ ability to design and troubleshoot computer networks (Behrens et al., 2008).
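A Bayes net represents proficiencies as unobserved variables and task outcomes as observed variables, linked by conditional probability tables. The sketch below uses the smallest useful structure: one binary proficiency node whose task-outcome children depend only on it, updated by Bayes’ rule. The task names and all probabilities are hypothetical; operational networks such as those used in SimScientists or by Cisco are typically larger, with several linked proficiency variables.

```python
from typing import Dict, Tuple

# Hypothetical conditional probability tables:
# task -> (P(success | mastered), P(success | not mastered))
CPTS: Dict[str, Tuple[float, float]] = {
    "build_food_web": (0.85, 0.25),
    "predict_population": (0.75, 0.30),
}


def posterior_mastery(prior: float, observations: Dict[str, bool],
                      cpts: Dict[str, Tuple[float, float]]) -> float:
    """Return P(mastered | observed task outcomes) by enumerating both states of
    the proficiency node; outcomes are independent given that node."""
    joint_mastered = prior
    joint_not = 1.0 - prior
    for task, success in observations.items():
        p_if_mastered, p_if_not = cpts[task]
        joint_mastered *= p_if_mastered if success else 1.0 - p_if_mastered
        joint_not *= p_if_not if success else 1.0 - p_if_not
    return joint_mastered / (joint_mastered + joint_not)
```

With a prior of 0.5, a successful food web raises the mastery estimate to about 0.77; a subsequent failed population prediction pulls it back to about 0.55. Each new observation simply multiplies in another likelihood term, which is what lets such a model update as evidence streams in during play.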

Another promising machine learning method is the Artificial Neural Network (ANN). The detailed assessments of the quality of student problem solving in IMMEX are enabled by ANN, together with other techniques (Stevens, Beal, and Sprang, 2009).
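The simplest artificial neural network is a single-layer perceptron, which learns weights that separate two classes of examples. The sketch below trains one to label problem-solving performances as efficient or inefficient from two hypothetical features: the share of relevant tests and the share of irrelevant tests a student ordered. It illustrates the general idea only; it does not reproduce the networks used with IMMEX, and the features and data are hypothetical.

```python
from typing import List, Sequence, Tuple


def train_perceptron(samples: Sequence[Sequence[float]], labels: Sequence[int],
                     epochs: int = 1000, lr: float = 1.0) -> Tuple[List[float], float]:
    """Classic perceptron learning rule. labels are +1 or -1; weights change
    only when a sample falls on the wrong side of (or on) the boundary."""
    w = [0.0] * len(samples[0])
    b = 0.0
    for _ in range(epochs):
        mistakes = 0
        for x, y in zip(samples, labels):
            activation = sum(wi * xi for wi, xi in zip(w, x)) + b
            if y * activation <= 0:
                w = [wi + lr * y * xi for wi, xi in zip(w, x)]
                b += lr * y
                mistakes += 1
        if mistakes == 0:  # every training sample is now classified correctly
            break
    return w, b


def predict(w: Sequence[float], b: float, x: Sequence[float]) -> int:
    return 1 if sum(wi * xi for wi, xi in zip(w, x)) + b > 0 else -1


# Hypothetical features: [share of relevant tests ordered, share of irrelevant tests ordered]
SAMPLES = [[0.9, 0.1], [0.8, 0.2], [0.85, 0.05], [0.3, 0.7], [0.2, 0.9], [0.4, 0.6]]
LABELS = [1, 1, 1, -1, -1, -1]  # +1 = efficient strategy, -1 = inefficient strategy
```

Because these toy data are linearly separable, the perceptron is guaranteed to converge. Real performance data are rarely that tidy, which is one reason practical systems use multilayer or other network architectures alongside other techniques.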

In addition to machine learning methods, developers sometimes use simpler, rule-based methods to provide immediate assessment and feedback in response to student actions in the simulation or game. Rule-based methods apply explicit logic to decide how to interpret a student action. A simple example would be a multiple-choice question in which the distracters (wrong answer choices) are derived from known misconceptions about the content being assessed. An incorrect response that reveals a misconception could then be diagnosed by rule, and immediate action, such as coaching, could be taken.
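A rule-based diagnosis of this kind needs little more than a lookup table from each answer choice to the misconception it was written to reveal, plus a coaching message for each misconception. The item, misconception labels, and messages below are hypothetical examples.

```python
from dataclasses import dataclass
from typing import Dict, Optional


@dataclass(frozen=True)
class Diagnosis:
    correct: bool
    misconception: Optional[str]
    feedback: str


# Hypothetical food-web item; each distracter is written to expose one misconception.
ITEM = {
    "stem": "In a food web, what does an arrow from the algae to the snail show?",
    "options": {
        "A": "The algae eat the snail.",
        "B": "Energy passes from the algae to the snail when the snail eats the algae.",
        "C": "The algae and the snail live in the same part of the lake.",
    },
    "key": "B",
    "distracters": {"A": "reversed_arrow", "C": "arrow_as_location"},
}

# Hypothetical coaching messages, one per misconception label.
COACHING: Dict[str, str] = {
    "reversed_arrow": "Food-web arrows point the way energy moves: from the organism "
                      "that is eaten to the one that eats it.",
    "arrow_as_location": "Food-web arrows show who eats whom, not where organisms live.",
}


def diagnose(choice: str) -> Diagnosis:
    """Rule: the key is correct; a keyed distracter maps to its misconception and coaching."""
    if choice == ITEM["key"]:
        return Diagnosis(True, None, "Correct.")
    misconception = ITEM["distracters"].get(choice)
    if misconception is None:  # not one of the listed options
        return Diagnosis(False, None, "Please choose one of the listed options.")
    return Diagnosis(False, misconception, COACHING[misconception])
```

Calling `diagnose("A")` returns a `Diagnosis` whose misconception is `"reversed_arrow"` and whose feedback is the matching coaching text, so the response can trigger coaching immediately.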

Research and Development Needs

Applications of the new methods described in this chapter offer promise to strengthen assessment of the learning outcomes of simulations and games and to seamlessly embed assessment in them in ways that support science teaching and learning. Wider use of the ECD framework would encourage researchers, measurement specialists, and developers to explicitly describe the intended learning goals of a simulation or game and how tasks and items were designed to measure those goals. This, in turn, could support an increased focus on science process skills and other learning outcomes that are often targeted by simulations and games but have rarely been measured to date, strengthening the field of science assessment. Behrens (2009) cautions that, without greater clarity about intended learning outcomes, designers may add complex features to simulations and games that have no purpose. He suggests that the “physical” modeling of a game or simulation will need to evolve simultaneously with modeling of the motivation and thinking of the learner.
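Evidence-centered design (ECD) makes this explicit by separating a student model (the proficiencies to be inferred), task models (what learners do), and evidence models (which observable features of a performance bear on which proficiencies). The sketch below is a deliberately simplified data structure, not an ECD toolkit. It records which observables in each task are linked to which proficiency, then flags learning goals that no task measures and logged features that serve no stated goal, the situation Behrens warns against. All names are hypothetical, loosely modeled on the SimScientists ecosystem tasks described earlier.

```python
from dataclasses import dataclass, field
from typing import Dict, List, Optional, Set


@dataclass
class Task:
    """Task model plus a simplified evidence model for one task."""
    name: str
    # observable feature -> proficiency it is evidence for (None = not linked to any goal)
    observables: Dict[str, Optional[str]] = field(default_factory=dict)


@dataclass
class AssessmentDesign:
    proficiencies: Set[str]  # student model: the intended learning goals
    tasks: List[Task]

    def unmeasured_goals(self) -> Set[str]:
        """Proficiencies that no observable provides evidence for."""
        linked = {p for t in self.tasks for p in t.observables.values() if p is not None}
        return self.proficiencies - linked

    def unlinked_observables(self) -> List[str]:
        """Logged features that are not tied to any stated proficiency."""
        return [
            f"{t.name}.{obs}"
            for t in self.tasks
            for obs, prof in t.observables.items()
            if prof not in self.proficiencies
        ]


# Hypothetical design for an ecosystem simulation.
DESIGN = AssessmentDesign(
    proficiencies={"identify_roles", "model_population_change", "plan_investigation"},
    tasks=[
        Task("observe_ecosystem", {"behaviors_noted": "identify_roles", "seconds_on_screen": None}),
        Task("build_food_web", {"arrows_correct": "identify_roles"}),
        Task("population_model", {"prediction_accuracy": "model_population_change"}),
    ],
)
```

Here `DESIGN.unmeasured_goals()` reports that `plan_investigation` has no supporting evidence, and `DESIGN.unlinked_observables()` flags `observe_ecosystem.seconds_on_screen` as a feature that is logged without a stated purpose.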

Perhaps the greatest technical challenge to embedding assessment of learning into simulations and games lies in drawing inferences from the large amount of data created by student interactions with these learning environments. Further research is needed in machine learning and probability-based test development methods and their application. Such research can help to realize these technologies’ potential to seamlessly integrate learning and assessment into engaging, motivating learning environments. Research and development projects related to games would be most effective if they coordinated assessment research with game design research. Such projects could help realize the potential of assessments to motivate and direct the learner to specific experiences in the game that are appropriate to individual science learning needs.

Continued research and development is critical to improve assessment of the learning outcomes of simulations and games. Improved assessments are needed for research purposes—to more clearly demonstrate the effectiveness of simulations and games to advance various science learning goals—and for teaching and learning. Continued research and development of promising approaches that embed assessment and learning scaffolds directly into simulations and games holds promise to strengthen science assessment and support science learning. Recognizing the need for further research to fulfill this promise, the U.S. Department of Education’s draft National Education Technology Plan (2010, p. xiii) calls on states, districts, the federal government, and other educational stakeholders to:

Conduct research and development that explore how gaming technology, simulations, collaboration environments, and virtual worlds can be used in assessments to engage and motivate learners and to assess complex skills and performances embedded in standards.

CONCLUSIONS

The rapid development of simulations and games for science learning has outpaced their grounding in theory and research on learning and assessment. Recent research on simulations uses assessments that are not well aligned with the capacity of these technologies to advance multiple science learning goals. More generally, state and district science assessment programs are largely incapable of measuring the multiple science learning goals that simulations and games support. However, a new generation of assessments is attempting to use technology to break the mold of traditional, large-scale summative testing practices. Science assessment is leading the way in exploring the presentation and interpretation of complex, multifaceted problem types and assessment approaches.

Conclusion: Games and simulations hold enormous promise as a means for measuring important aspects of science learning that have otherwise proven challenging to assess in both large-scale and classroom testing contexts. Current work provides examples of simulations used for both formative and summative assessment, in classrooms and in large-scale testing programs such as NAEP and PISA.

In an education system driven by standards and external, large-scale assessments, simulations and games are unlikely to be more widely used until assessment results can demonstrate their capacity to advance multiple science learning goals. This chapter describes current work that aims to produce such summative assessment results by embedding assessment in game play. These examples suggest that it is valuable to specify the desired learning outcomes of a game clearly, so that assessment tasks can be designed to provide evidence aligned with those outcomes. They also illustrate the potential of new measurement methods to draw inferences about student science learning from the extensive data generated by students’ interactions with games, for both summative and formative assessment.

Conclusion: Games will not be useful as alternative environments for formative and summative assessment until assessment tasks can be embedded effectively and unobtrusively into them. Three design principles may aid this process. First, it is important to establish learning goals at the outset of game design, to ensure that the game play supports these goals. Second, the design should include assessment of performance at key points in the game and use the resulting information to move the player to the most appropriate level of the game to support individual learning. In this way, game play, assessment, and learning are intertwined. Third, the extensive data generated by a learner’s interaction with the game should be used for summative as well as formative purposes, to measure the extent to which a student has advanced in the targeted science learning goals because of game play.
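The second and third design principles can be sketched together: a checkpoint score decides which level the player moves to next, and the same log of checkpoint results is kept for a summative summary. The thresholds, level numbers, and summary rule below are hypothetical choices for illustration; a real game would base them on its own learning goals and calibration data.

```python
from typing import List, Sequence, Tuple

ADVANCE_AT = 0.8       # hypothetical: move up a level at or above this checkpoint score
REMEDIATE_BELOW = 0.5  # hypothetical: move back a level below this score


def next_level(level: int, score: float, max_level: int) -> int:
    """Formative use: route the player based on one checkpoint score."""
    if score >= ADVANCE_AT:
        return min(level + 1, max_level)
    if score < REMEDIATE_BELOW:
        return max(level - 1, 1)
    return level  # in between: stay at this level for more practice


def play_session(scores: Sequence[float], start: int = 1,
                 max_level: int = 5) -> Tuple[int, List[Tuple[int, float]]]:
    """Apply checkpoint scores in order; return the final level and a (level, score) log."""
    level = start
    log: List[Tuple[int, float]] = []
    for score in scores:
        log.append((level, score))
        level = next_level(level, score, max_level)
    return level, log


def highest_level_passed(log: Sequence[Tuple[int, float]]) -> int:
    """Summative use of the same log: highest level whose checkpoint was passed (0 if none)."""
    passed = [level for level, score in log if score >= ADVANCE_AT]
    return max(passed, default=0)
```

For checkpoint scores of 0.9, 0.9, 0.4, and 0.85, the player moves from level 1 up to 3, drops back to 2 after the weak score, and returns to 3; the summative summary credits level 2 as the highest level actually passed.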

Research on how to effectively assess the learning outcomes of playing games is still in its infancy. Investigators are beginning to explore how best to embed assessment in games in ways that support both assessment and learning.

Conclusion: Although games offer an opportunity to enhance students’ learning of complex science principles, research on how to effectively assess their learning and use that information in game environments to impact the learning process is still in its infancy.

Continued research and development is critical to improve assessment of the learning outcomes of simulations and games. Improved assessments are needed for research purposes—to more clearly demonstrate the effectiveness of simulations and games to advance various science learning goals—and to support improvements in teaching and learning.

Conclusion: Much further research and development is needed to improve assessment of the science learning outcomes of simulations and games and realize their potential to strengthen science assessment more generally and support science learning.