Using Computerized Text Analysis to Assess Threatening Communications and Behavior

Cindy K. Chung and James W. Pennebaker

Understanding the psychology of threats requires expertise across multiple domains. Not only must the actions, words, thoughts, emotions, and behaviors of the person making a threat be examined, but the world of the recipient of the threats also needs to be understood. The problem is more complex when considering that threats can be made by individuals or groups and can be directed toward individuals or groups. A threat, then, can occur across any domain and on multiple levels and must be understood within the social context in which it occurs.

Within the field of psychology, most research on threats has focused on the nonverbal correlates of aggression. In the animal literature, for example, considerable attention has been paid to behaviors that signify dominance or submission. Various species of birds, fish, and mammals often change their appearance by becoming larger when threatening others. Dominance and corresponding threat displays have also been found in vocalization, gaze, and even smell signals (e.g., Buss, 2005). In the literature on humans, an impressive number of studies have analyzed threatening behaviors by studying posture, facial expression, tone of voice, and an array of biological changes (Hall et al., 2005).

The authors wish to acknowledge funding from the Army Research Institute (W91WAW-07-C-0029), CIFA (DOD H9c104-07-C-0014), NSF (NSF-NSCC-090482), DIA (HHM-402-10-C-0100), and START (DHS Z934002). They would also like to thank Douglas H. Harris, Cherie Chauvin, and Amanda Schreier for their helpful comments in the preparation of the manuscript.

Although nonverbal features of threats are clearly important, many of the most dangerous threats between people are conveyed using language. Whether among individuals, groups, or entire nations, early threats often involve one or more people using words to warn others. Despite the obvious importance of natural language as the delivery system of threats, very few social scientists have been able to devise simple systems to identify or calibrate language-based threats. Only recently, with the advent of computer technology and the availability of large language-based datasets, have scientists been able to start to identify and understand threatening communications and responses to them through the study of words (Cohn et al., 2001; Pennebaker and Chung, 2005, 2008; Smith, 2004, 2008; Smith et al., 2008).

This paper provides a general overview of computerized language assessment strategies relevant to the detection and assessment of word-based threats. It is important to appreciate that this work is in its infancy. Consequently, there are no agreed-on methods or theories that have defined the field. Indeed, the “field” is currently made up of a small group of laboratories generally working independently with very different backgrounds and research goals. The current review explores threats from a decidedly social-psychological perspective. As such, the emphasis is on the ways in which word use can reveal important features of a threatening message and also the psychological nature of the speaker and the target of the threatening communication.

Whereas traditional language analyses have emphasized the content of a threatening communication (i.e., what the speaker explicitly says), this review focuses on the language style of the message, especially those words that people cannot readily manipulate (for a review, see Chung and Pennebaker, 2007). This is especially helpful in the area of assessing threatening communications and actual behavior because subtle markers of language style (e.g., use of pronouns or articles) can reveal behavioral intent that the speaker may be trying to withhold from the target. Finally, this paper discusses methods that have the goal of automated analyses and largely draws on word count approaches, which are increasingly being used in the social sciences. Computerized tools are especially helpful for establishing a high standard of reliability in any given analysis and for real-time or close to real-time assessment of threatening communications, so that our analyses might one day lead to interventions as opposed to just retrospective case studies.

This paper also briefly describes common automated methods available to study language content and language style. Next, a classification scheme for different types of threats is presented that serves as the organizing principle for this review. The next section summarizes empirical research that has been conducted to assess intent and actual behaviors in contexts of varying stakes using text analysis. The review concludes with a discussion of the gaps where research is desperately needed across various fields, along with our perspective on how to improve predictions and an emphasis on how various models should be built and applied.

TEXT ANALYSIS METHODS

Features of language or word use can be counted and statistically analyzed in multiple ways. The existing approaches can be categorized into three broad methodologies: (1) judge-based thematic content analysis, (2) computerized word pattern analysis, and (3) word count strategies. All are valid approaches to understanding threatening communications and can potentially yield complementary results for both academic and nonacademic investigators. While it is beyond the scope of this paper to review each approach in detail, an overview is given below. Then the discussion focuses on word count strategies, which serve as the basis for the remainder of the review.

Judge-Based Thematic Content Analysis

Qualitative approaches use an expert or a group of judges to systematically rate particular texts along various themes. Such approaches have explored the subjective or psychological meaning of language within a phrase or sentence (e.g., Semin et al., 1995), conversational turn (e.g., Tannen, 1993), or an entire narrative (e.g., McAdams, 2001). Thematic content analyses have been widely applied for studying a variety of psychological phenomena, such as motive imagery (e.g., Atkinson and McClelland, 1948; Heckhausen, 1963; Winter, 1991), explanatory styles (Peterson, 1992), cognitive complexity (Suedfeld et al., 1992), psychiatric syndromes (Gottschalk et al., 1997), and goal structures (Stein and Albro, 1997).

Several problems exist with qualitative approaches to text analysis. Judge-based coding requires elaborate coding schemes, along with multiple trained raters. The reliability of judges’ ratings must be assessed and reevaluated early in the process through extensive discussions. Consideration of time and effort has limited analyses of this kind to small numbers of individuals per analysis. For the analysis of completely open-ended text, for example, when a series of very different threatening communications are assessed for the probability of leading to actual threatening behaviors, the coding schemes developed in judge-based thematic content analysis may not be applicable or particularly relevant to any new threat or document.

As a side note, the authors have spoken with and read about a number of “expert” language analysts who often market their own language analysis methods. Some of these approaches claim to reliably assess deception, author identification, or other intelligence-relevant dimensions. Often, it is claimed that the various methods have accuracy rates of more than 90 to 95 percent. To our knowledge, no human-based judge system has ever been independently assessed by a separate laboratory or been tested outside of experimentally produced and manipulated stimuli. Given the current state of knowledge, it is inconceivable that any language assessment method, whether by human judges or the best computers in the world, could reliably detect real-world deception or other psychological quality at rates greater than 80 percent, even in highly controlled datasets. This issue will be discussed in greater detail later.

Computerized Word Pattern Analysis

Rather than exploring text “top down” within the context of previously defined psychological content dimensions, word pattern strategies mathematically detect “bottom up” how words covary across large samples of text (Foltz, 1996; Popping, 2000) or the degree to which words overlap within texts (e.g., Graesser et al., 2004). One particularly promising strategy is Latent Semantic Analysis (LSA; see, e.g., Landauer and Dumais, 1997), which is a method used to learn how writing samples are similar to one another based on how words are used together across documents. For example, LSA has been used to detect whether or not a student essay has hit all the major points covered in a textbook or the degree to which a student essay is similar to a group of essays previously graded with top grades on the same topic (e.g., Landauer et al., 1998).

Not only can word pattern analyses detect the similarity of groups of text, they can also be used to extract the underlying topics of text samples (see Steyvers and Griffiths, 2007). One example of a topic modeling approach in the social sciences is the Meaning Extraction Method (MEM; Chung and Pennebaker, 2008). MEM finds clusters of words that tend to co-occur in a corpus. The clusters tend to form coherent themes that have been shown to produce valid dimensions for a variety of corpora. For example, Pennebaker and Chung (2008) found MEM-derived word factors of al-Qaeda statements and interviews that differentially peaked during the times when those topics were most salient to al-Qaeda’s missions. MEM-derived factors have been shown to hold content validity across multiple domains. Since the MEM does not require a predefined dictionary (only characters separated by spaces), and translation occurs only at the very end of the process, MEM has served as an unbiased way to examine psychological constructs across multiple languages (e.g., Ramirez-Esparza et al., 2008, in press; Wolf et al., 2010a, 2010b).
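The idea of finding words that tend to co-occur in a corpus can be illustrated with a minimal sketch. It is not the MEM procedure itself: it covers only a plausible first step (recording whether each word appears in each text and correlating those presence patterns) and omits the step that groups words into themes. The function names, the toy corpus, and the 0.5 threshold are all assumptions made for illustration.

```python
import re
from itertools import combinations
from math import sqrt

def binary_matrix(documents, vocabulary):
    """One row per document; 1 if the word occurs in it, else 0."""
    rows = []
    for doc in documents:
        present = set(re.findall(r"[a-z']+", doc.lower()))
        rows.append([1 if word in present else 0 for word in vocabulary])
    return rows

def phi(x, y):
    """Pearson correlation of two 0/1 columns (the phi coefficient)."""
    n = len(x)
    mx, my = sum(x) / n, sum(y) / n
    cov = sum((a - mx) * (b - my) for a, b in zip(x, y))
    var_x = sum((a - mx) ** 2 for a in x)
    var_y = sum((b - my) ** 2 for b in y)
    if var_x == 0 or var_y == 0:
        return 0.0  # a word present in all or no documents carries no signal
    return cov / sqrt(var_x * var_y)

def cooccurrence_pairs(documents, vocabulary, threshold=0.5):
    """Word pairs whose presence patterns correlate at or above threshold.

    The threshold is a hypothetical cutoff chosen for this example.
    """
    if not documents:
        return []
    columns = list(zip(*binary_matrix(documents, vocabulary)))
    pairs = []
    for i, j in combinations(range(len(vocabulary)), 2):
        r = phi(columns[i], columns[j])
        if r >= threshold:
            pairs.append((vocabulary[i], vocabulary[j], round(r, 2)))
    return pairs

# Toy corpus (invented for illustration only).
docs = ["money and bank", "bank lends money", "family at home", "home with family"]
vocab = ["money", "bank", "family", "home"]
pairs = cooccurrence_pairs(docs, vocab)
```

On this toy corpus the pairs that survive the cutoff are money/bank and family/home. With only four short texts the correlations are trivially perfect, which is one reason such methods need large corpora before the patterns can be trusted.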

Word pattern analyses are generally statistically based and therefore require large corpora to identify reliable word patterns (e.g., Biber et al., 1998). Some word pattern tools feature modules developed from discourse processing, linguistics, and communication theories (e.g., Crawdad Technologies¹; Graesser et al., 2004), representing a combination of top-down and bottom-up processing capabilities. Overall, word pattern approaches can capture high-level features of language in order to assess commonalities within a large group of texts.

Word Count Strategies

The third general methodology focuses on word count strategies. These strategies are based on the assumption that the words people use convey psychological information over and above their literal meaning and independent of their semantic context. Word count approaches typically rely on a set of dictionaries with precategorized terms. The categories can be grammatical categories (e.g., adverbs, pronouns, prepositions, verbs) or psychological categories (e.g., positive emotions, cognitive words, social words). While grammatical categories are fixed (i.e., entries belong in one or multiple known categories), psychological categories are formed by judges’ ratings on whether or not each word belongs in a category. Computerized software can then be programmed to categorize words appearing in text according to the dictionary that it references. Accordingly, these programs typically allow for the use of new, user-defined dictionaries, enabling broader or more specific sampling of word categories.
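A word count approach of this kind can be sketched in a few lines. The sketch below is an illustration under stated assumptions, not the implementation of any particular tool: the three categories, their entries, and the function names are hypothetical, and the trailing-asterisk convention for word stems is simply one convenient way to let an entry match several word forms.

```python
import re
from collections import Counter

# Hypothetical mini-dictionary; real dictionaries hold far more entries,
# each assigned to its category by judges.
# A trailing "*" marks a word stem that matches any continuation.
DICTIONARY = {
    "pronoun": ["i", "me", "my", "we", "you", "they", "it"],
    "posemo": ["happy", "good", "love*"],
    "cogmech": ["understand*", "realiz*", "should", "maybe"],
}

def tokenize(text):
    """Lowercase the text and split it into word tokens (apostrophes kept)."""
    return re.findall(r"[a-z']+", text.lower())

def matches(token, entry):
    """True if token equals the entry, or begins with it for a stem entry."""
    if entry.endswith("*"):
        return token.startswith(entry[:-1])
    return token == entry

def category_percentages(text, dictionary=DICTIONARY):
    """Return each category's share of all tokens, as a percentage."""
    tokens = tokenize(text)
    counts = Counter()
    for token in tokens:
        for category, entries in dictionary.items():
            if any(matches(token, entry) for entry in entries):
                counts[category] += 1
    total = len(tokens) or 1  # avoid division by zero on empty text
    return {category: 100.0 * counts[category] / total for category in dictionary}

result = category_percentages("I love my dog and maybe they understand me")
```

Passing a different `dictionary` argument is the sketch's counterpart to a user-defined dictionary: the counting logic stays fixed while the categories being sampled change.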

Today, there is an ever-increasing number of applications of word count analyses in clinical psychology (e.g., Gottschalk, 1997), criminology and forensic psychology (e.g., Adams, 2002, 2004), cultural and cross-language studies (e.g., Tsai et al., 2004), and personality assessments (e.g., Pennebaker and King, 1999; Mehl et al., 2006). An increasingly popular tool used for text analysis in psychology is Linguistic Inquiry and Word Count (LIWC; Pennebaker et al., 2007). LIWC is a computerized word counting tool that searches for approximately 4,000 words and word stems and categorizes them into grammatical (e.g., articles, numbers, pronouns), psychological (e.g., cognitive, emotions, social), or content (e.g., achievement, death, home) categories. Results are reported as a percentage of words in a given text file, indicating the degree to which a particular category was used. The words in LIWC categories have previously been validated by independent judges, and use of the categories within texts has been shown to be a reliable marker for a number of psychologically meaningful constructs (Pennebaker et al., 2003; Tausczik and Pennebaker, 2010).

1 Find Crawdad text analysis software at http://www.crawdadtech.com, Crawdad Technologies LLC [April 2010].

Using LIWC, word counts have been shown to have modest yet reliable links to personality and demographics. For example, one study across 14,000 texts of varying genres found that women tend to use more personal pronouns and social words than men and that men tend to use more articles and numbers and fewer verbs (Newman et al., 2008). Together, these findings suggest that women are more socially oriented and that men tend to focus more on objects. Word count tools have effectively uncovered psychological states from spoken language (e.g., Mehl et al., 2006), in published literature (e.g., Pennebaker and Stone, 2003), and in computer-mediated communications (e.g., Chung et al., 2008; Oberlander and Gill, 2006). There is also evidence that word counts are diagnostic of various psychiatric disorders and can reflect specific psychotic symptoms (Junghaenel et al., 2008; Oxman et al., 1982). For example, Junghaenel and colleagues found that psychotic patients tend to use fewer cognitive mechanism and communication words than do people who are not suffering from a mental disorder, reflecting psychotic patients’ tendencies to avoid in-depth processing and their general disconnect from social bonds. Taken together, these studies provide evidence that word use reflects the thoughts and behaviors that characterize psychological states and that word counts provide meaningful measures of them.

LANGUAGE CONTENT VERSUS LANGUAGE STYLE

Most early content analysis approaches by both humans and computers focused on words related to specific themes. By analyzing an open-ended interview, a human or computer can detect theme-related words such as family, health, illness, and money. Generally, these words are nouns and regular verbs. Nouns and regular verbs are “content heavy” in that they define the primary categories and actions dictated by the speaker or writer. It makes sense; to have a conversation, it is important to know what people are talking about.

However, there is much more to communication than content. Humans are also highly attentive to the ways in which people convey a message. Just as there is linguistic content, there is also linguistic style—how people put their words together to create a message. What accounts for “style”? Consider the ways by which three different people might summarize how they feel about ice cream:

Person A: I’d have to say that I like ice cream.

Person B: The experience of eating a scoop of ice cream is certainly quite satisfactory.

Person C: Yummy. Good stuff.

The three people differ in their use of pronouns, large versus small words, verbosity, and other dimensions. We can begin to detect linguistic style by paying attention to “junk words,” those words that do not convey much in the way of content (for a review, see Chung and Pennebaker, 2007; Pennebaker et al., 2003). These junk words, usually referred to as function words, serve as the cement that holds the content words together. In English, function words include pronouns (e.g., I, they, it), prepositions (e.g., with, to, for), articles (e.g., a, an, the), conjunctions (e.g., and, because, or), auxiliary verbs (e.g., is, have, will), and a limited number of other words. Although there are fewer than 200 common function words, they account for over half of the words used in everyday speech.
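The stylistic differences among the three statements can be made concrete with a small sketch. The function word list below is an assumed subset built mostly from the examples just given (a full list would hold close to 200 entries), and the profile measures and function name are hypothetical choices for illustration.

```python
import re

# Small illustrative subset of English function words (an assumption).
FUNCTION_WORDS = {
    "i", "i'd", "they", "it", "that", "with", "to", "for", "of",
    "a", "an", "the", "and", "because", "or", "is", "have", "will",
}

def style_profile(text):
    """Word count, function word percentage, and mean word length of a text."""
    normalized = text.lower().replace("\u2019", "'")  # straighten curly apostrophes
    tokens = re.findall(r"[a-z']+", normalized)
    n = len(tokens)
    function_count = sum(1 for token in tokens if token in FUNCTION_WORDS)
    return {
        "words": n,
        "function_word_pct": 100.0 * function_count / n if n else 0.0,
        "mean_word_length": sum(len(token) for token in tokens) / n if n else 0.0,
    }

statements = {
    "A": "I'd have to say that I like ice cream.",
    "B": "The experience of eating a scoop of ice cream is certainly quite satisfactory.",
    "C": "Yummy. Good stuff.",
}
profiles = {person: style_profile(text) for person, text in statements.items()}
```

With this word list, Person A's statement is more than half function words, Person B's is longer and built from longer words, and Person C's contains no function words at all. Samples this short cannot support psychological inference; the point is only that these dimensions are easy to count.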

Function words are virtually invisible in daily reading and speech. Even most language experts could not tell if the past few paragraphs have used a high or low percentage of pronouns or articles. People are reliable in their use of function words across contexts and over time. Although most everyone uses far more pronouns in informal settings than in formal ones, the highest pronoun use in informal contexts tends to be by the same people who use pronouns at high rates in formal contexts (Pennebaker and King, 1999). Analyzing function words at the paragraph, page, or broader text level completely ignores context. The ultimate difference between the current approach and more traditional linguistic strategies is that function words tell us about the psychology of the writer/speaker rather than what is explicitly being communicated.

Given that function words are so difficult to control, examining the use of these words in natural language samples has provided a nonreactive way to explore social and personality processes. Much like other implicit measures used in experimental laboratory studies in psychology, the authors or speakers examined often are not aware of the dependent variable under investigation (Fazio and Olson, 2003). In fact, most of the language samples from word count studies come from sources in which natural language is recorded for purposes other than linguistic analysis and therefore have the advantage of being more externally valid than the majority of studies involving implicit measures. For this reason, function words are particularly useful in uncovering the relationship between intent and actual behaviors as they occur outside the laboratory.

CLASSIFICATION SCHEME FOR THREATS

One of the difficulties in examining threatening communications and actual behaviors is that researchers typically do not have access to a large group of similar documents on threats and subsequent behaviors. In addition, threats differ tremendously in form, type, and actual intent. Also, situational features across multiple threats cannot be cleanly or confidently classified into discrete categories in order to generalize to new threats. Many of these difficulties in research on threatening communications overlap with the difficulties in research on deception, for which empirical and naturalistic research has made considerable progress through the use of computerized text analyses (for a review, see Hancock et al., 2008).

Comparison with Features of Research on Deception

Deception has been defined as “a successful or [an] unsuccessful deliberate attempt, without forewarning, to create in another a belief … the communicator considers … untrue” (Vrij, 2000, p. 6; see also Vrij, 2008). This commonly accepted definition of deception notes several features that could be used to succinctly define threatening communications within the task of predicting behaviors (see Table 1-1). Specifically, Vrij’s definition includes information about outcome, intent, timing, social features and goals, and a psychological interpretation of the actor. Threatening communications can be compared along all of these features.

TABLE 1-1 Comparison of Features in Deceptive Versus Threatening Communications

| Features | Deception | Threats |
|---|---|---|
| Outcome | Successful/unsuccessful | Fulfilled/unfulfilled |
| Intent | Deliberate | Deliberate/not deliberate |
| Timing | Without forewarning | With/without forewarning |
| Social features/goals | Create belief in another | Communicate possibility of harm/no harm |
| Psychology of actor | Communicator considers communication to be untrue | Communicator considers threat to be untrue (i.e., has no intent to substantiate the threat) or true (i.e., has real intent to substantiate the threat) |

SOURCE: Defining features of deception from Vrij (2000, 2008).

A threatening communication will likely carry the language cues used in deception if the communicator knows that the message is false (i.e., has no intent to substantiate the threat). This situation is akin to “bluffing,” when a threat is made to achieve some goal(s) by creating in another a belief that the threat is real, when the communicator is aware that it is not. This suggests that, for text analysis of threatening communications, language cues that have reliably been found to signal deception can be used to classify this type of threat as being less likely to be fulfilled. When the communicator knows that the threat is true (i.e., has real intent to substantiate the threat), language cues that have reliably been found to signal honesty can be used to classify this type of threat as being more likely to be fulfilled.

The distinction between deception and threatening communications regarding timing is also an important point. Most language samples of deception come from retrospective accounts of some event. With language samples of threatening communications, often the threatening message is revisited after the act. However, a threat, by definition, is received before the act of harm, and so the language samples analyzed to investigate threats versus deceptive messages typically come from different time points. With some threats there is the possibility of intervention.

These features permit classification of four different types of threats (see Table 1-2). Threats that might have the language features of deception are bluffs and latent threats. Threats that might have the language features of honesty are real threats and nonthreats. Briefly described, a real threat is made known to the target before the harm occurs, with real intent to carry through on the threat. An example would be President George H. W. Bush’s threat to Saddam Hussein to leave Kuwait or a coalition attack would follow. In this case, the threat was directly communicated beforehand and was followed by the promised action.

TABLE 1-2 Classification Scheme and Features of Threats

A bluff is a threat that is made known to the target but with no intent to act on the threat. Multiple examples can be found in the speeches of Saddam Hussein, who explicitly stated and implicitly suggested that his army had the capability of inflicting mass casualties on coalition forces prior to both the Persian Gulf War of 1991 and the more recent war beginning in 2003. Latent threats are those that are concealed from the target before the harm occurs, with real intent to carry through on the threat. An example might be the case of Bernard Madoff, who was recently imprisoned for masterminding a Ponzi scheme that bankrupted hundreds of innocent investors. Many people invested their money with Madoff under his guise of a trusted financier. In this case, no threat was communicated, but his communications with victims likely would have shown linguistic markers of deception.

Nonthreats are communications from people who have no intent to harm. Indeed, nonthreats can be considered control communications in the sense that the speaker speaks honestly about events, actions, or intentions that the speaker believes to be nonthreatening.

Nonthreats, like all other forms of threat communication, carry with them another potentially vexing dimension: the role of the listener or target of the communication. Table 1-2 is based on the speaker’s intent and behaviors, not the listener’s. It is possible that a speaker can issue a true threat but that the listener perceives it as a bluff. By the same token, latent threats and nonthreats can variously be interpreted in both benign and threatening ways. Failing to detect a real threat, or falsely perceiving a threat in a true nonthreat, may say as much about the perceiver as about the message itself. For example, Saddam Hussein’s apparent failure to appreciate coalition threats in both 1991 and 2003 very likely reflected something about his own ways of seeing the world.

Just as there are likely personality dimensions of people who deny or fail to appreciate real threats, a long tradition in psychology has been interested in the opposite pattern: the belief that a real threat exists when actually one does not. Dozens of examples of this can be seen in American politics, especially among those on the extreme left and right. During the George W. Bush years, many far-left pundits were convinced that the administration was planning to do away with the First Amendment. Currently, many right-wing voices claim that the Obama administration wants to outlaw all guns, resulting in record sales of firearms and ammunition.

From a linguistic perspective it is important that researchers explore the natural language use of both communicators and perceivers. For example, Hancock et al. (2008) have shown linguistic changes on the part of a listener who is being deceived, demonstrating that deception might be better detected and understood by considering the greater social dynamics in which it takes place (see also Burgoon and Buller, 2008, for a review of Interpersonal Deception Theory). Situations are dynamic, and there are possibilities that real threats could be revoked or unsuccessfully attempted or that bluffs might be carried out under pressure. However, the key feature in language analyses is that an attempt is made to understand the psychology or deep-structure processes underlying the threats. In this regard, the personality or psychological states of both speakers and targets can be assessed in order to better understand the nature, probability, and evolving dynamics of a given threat.

REVIEW OF EMPIRICAL RESEARCH ON COMMUNICATED INTENT AND ACTUAL BEHAVIORS USING TEXT ANALYSIS

To distinguish between real threats and bluffs, or between latent threats and nonthreats, the first step is to assess whether or not a given communication is deceptive. To detect deception, computerized text analysis methods have been applied to natural language samples in both experimental laboratory tests and a limited number of real-world settings.

Typical lab studies induce people to either tell the truth or lie. Across several experiments with college students, researchers have accurately classified deceptive communications at a rate of approximately 67 percent (Hancock et al., 2008; Newman et al., 2003; Zhou et al., 2004). Similar rates have been found for classifying truthful and deceptive statements in similar experimental tests among prison inmates (Bond and Lee, 2005). The language dimensions that most consistently identify truth telling have included higher use of first-person singular pronouns, exclusive words (e.g., but, without, except), and positive emotion words, along with lower rates of negative emotion words. Note that the patterns of effects vary somewhat depending on the experimental paradigm.

Correlational real-world studies have found similar patterns. In an unpublished analysis by the second author and Denise Huddle of the courtroom testimony of over 40 people convicted of felonies, those who were later exonerated (approximately half of the sample) showed similar patterns of language markers of truth telling, such as much higher rates of first-person singular pronoun use. A more controversial but interesting real-world example of classifying false and true statements is in the investigation of claims made by Bush administration officials in citing the reasons for the Iraq war. Specifically, Hancock and colleagues (unpublished) examined false statements (e.g., claims that Iraq had weapons of mass destruction or direct links to al-Qaeda) and nonfalse statements (e.g., that Hussein had gassed Iraqis) for words previously found to be associated with deceptive statements. It was found that the statements that had been classified as false contained significantly fewer first-person singular (e.g., I, me, my) words and exclusive words (e.g., but, except, without) but more negative emotion words (e.g., angry, hate, terror) and action verbs (e.g., lift, push, run).

Across the various deception studies, the relative rates of word use signaled the underlying psychology of deception. Deception involves less ownership of a story (i.e., fewer first-person singular pronouns) and less complexity (i.e., fewer exclusive words), along with more emotional leakage (i.e., more negative emotion words) and more focus on actions as opposed to intent (i.e., more action verbs). Based on the use of these words, approximately 77 percent of the statements made by the Bush administration were correctly classified as either false or not false. Note that these numbers are likely inflated, since estimates of the veracity of statements are dependent on the selection of the statements themselves, as opposed to a broader analysis of all statements made by the Bush administration.
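The direction of these four markers can be combined into a rough composite, sketched below. This is not the model used in the studies cited: the word lists contain only the example words quoted above, the equal weighting is a hypothetical simplification (published work fits statistical classifiers to labeled samples), and the names `MARKERS`, `DIRECTION`, `marker_rates`, and `deception_index` are invented for this illustration.

```python
import re

# Example words are those quoted in the text; the lists are far from complete.
MARKERS = {
    "first_person_singular": {"i", "me", "my"},
    "exclusive": {"but", "except", "without"},
    "negative_emotion": {"angry", "hate", "terror"},
    "action_verbs": {"lift", "push", "run"},
}

# +1: reported as more frequent in false statements; -1: less frequent.
DIRECTION = {
    "first_person_singular": -1,
    "exclusive": -1,
    "negative_emotion": +1,
    "action_verbs": +1,
}

def marker_rates(text):
    """Percentage of tokens falling in each marker category."""
    tokens = re.findall(r"[a-z']+", text.lower())
    total = len(tokens) or 1  # avoid division by zero on empty text
    return {
        name: 100.0 * sum(token in words for token in tokens) / total
        for name, words in MARKERS.items()
    }

def deception_index(text):
    """Unweighted, hypothetical composite; higher means more deception-like markers."""
    rates = marker_rates(text)
    return sum(DIRECTION[name] * rate for name, rate in rates.items())

# Invented sentences, used only to show the direction of the score.
owned = deception_index("I saw it but I did not run")
distanced = deception_index("They hate us and push terror")
```

The first sentence scores lower than the second because it contains first-person singular and exclusive words. A score of this kind only orders texts relative to one another; it carries no calibrated probability, and any cutoff would have to be estimated from labeled data.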

It is important to note that the strength of the language model is that it has been applied to a wide variety of natural language samples from low- to high-stakes situations. The degree to which language markers of deception were more pronounced in high-stakes situations relative to low-stakes situations is encouraging. Being able to classify the veracity of high-stakes communications with greater confidence could lead to more efficient allocation of resources for interventions.

Real Threats

A real threat is one that is believed to be true by a speaker or writer, and so linguistic markers of honesty would likely appear in a threatening communication. The next step, then, would be to assess the likelihood of actual behavior. One area in which text analyses have informed psychologists of future behavior is in the written literature and letters of suicidal and nonsuicidal individuals. In one study, Stirman and Pennebaker (2001) analyzed the published works of poets who committed suicide and poets who had not attempted suicide. Poets who committed suicide had used first-person singular pronouns at higher rates in their published poetry than those who did not commit suicide. Poets who committed suicide also used fewer first-person plural pronouns later in their career than did poets who did not commit suicide. Overall, the language used by suicidal poets showed that they were focused more on themselves and were less socially integrated in later life than were nonsuicidal poets. Surprisingly, there were no significant differences in the use of positive and negative emotion words between the two groups and only a marginal effect of greater death-related words used by the poets who committed suicide. Similar effects have been found in later case studies of suicide blogs, letters, and notes (Hui et al., 2009; Stone and Pennebaker, 2004). These results highlight the importance of linguistic style markers (assessed by word count tools) as potentially more psychologically revealing than content words (which would more likely be the focus of judge-based thematic coding).

Stated intentions are not necessarily threats. One area in which follow-through of stated intentions has been studied is in clinical psychology. In psychotherapy, patients typically state an intention to change maladaptive thoughts and behaviors. Mergenthaler (1996) used word counts to identify word categories that characterize key moments in therapy sessions in order to provide an adequate theory of change. He found that key moments of progress are characterized by the co-occurrence of emotion terms and abstractions (i.e., abstract nouns that characterize the intention to reason further about that term) in a case study and in a sample of improved versus nonimproved patients. These findings suggest that being able to express emotions in a distanced and abstract way is important for therapeutic improvements.

The text analysis programs used by these clinicians, such as Bucci’s Discourse Attribute Analysis Program (Bucci and Maskit, 2005), are similar to LIWC in that they use a word count approach and many of their dictionary categories are both grammatical and empirically derived. However, the grammatical categories for the clinical dictionaries are broad (i.e., they throw all function words into a single category), and their empirically derived categories are based on psychoanalytic theories and clinical observations. The advantage of all word count tools for the analysis of therapeutic text is that word counts tend to be a less biased measure of therapeutic improvements than clinicians’ self-reports (Bucci and Maskit, 2007). In addition, word counts can be assessed at the turn level, by conversations over time, and for the overall total of all interactions, making word count approaches a powerful tool for assessing follow-through of stated intentions.

Another area in which follow-through of stated intentions has been examined is in weight loss blogs (Chung and Pennebaker, unpublished). Diet blogs were processed using LIWC and assessed for blogging rates and social support. One finding was that cognitive mechanism words (e.g., understand, realize, should, maybe) were predictive of quitting the diet blog early and of gaining weight instead of losing weight. This finding was consistent with previous literature which found that attempts at changing self-control behaviors typically fail if an individual is stuck at the precontemplation or contemplation phase of self-change (Prochaska et al., 1992, 1995). Instead, writing in a personal narrative style and actively seeking out social support were predictors of weight loss. Use of cognitive mechanism words, then, can signal flexibility in thinking, and perhaps less resolve, or coming to terms with failure. Since the blogs tracked everyday thoughts and behaviors in a naturalistic environment (i.e., not in a laboratory or clinical study) and were not retrospective reports of the entire self-change process after success or failure, the findings were likely more reflective of the various stages of self-change, instead of a description of a memory of the change. Accounts of a narrative recorded during the time in which an event happened or prospectively instead of simply retrospectively are important in generalizing findings from language studies to threat detection.

Insight into the nature of threatening communications can come from the study of terrorist organizations and their communications, as interviews with world terrorists are rare (Post et al., 2009). Note that not all communications by terrorist organizations are threats. However, comparing the natural language of violent and nonviolent groups can tell us about the psychology of groups that will act on their threats (Post et al., 2002). In one study, public statements made by Osama bin Laden and Ayman al-Zawahiri from 1988 through 2006 were examined using both computerized word pattern and word count analyses (Pennebaker and Chung, 2008). Initially, the 58 translated al-Qaeda texts were compared with those of other terrorist groups from a corpus created by Smith (2004). The al-Qaeda texts contained far more hostility as evidenced by their greater use of anger words and third-person plural pronouns.

As for the individual leaders’ use of language over time, bin Laden evidenced an increase in his use of positive emotion words as well as negative emotion words, especially anger words. He also showed higher rates of exclusive words (e.g., but, except, exclude, without) over the past decade, which often marks cognitive complexity in thinking. On the other hand, al-Zawahiri’s statements tended to be slightly more positive, significantly less negative, and less cognitively complex than those of bin Laden. He evidenced a surprising shift in his use of first-person singular pronouns from 2004 to 2006. This was interpreted as indicating greater insecurity, feelings of threat, and perhaps a shift in his relationship with bin Laden. The word count strategy, then, allowed for a close examination of the psychology of the leaders in a way that otherwise would not have been possible.

While much of the above review has been focused on word count approaches, it is worth noting the judge-based thematic analysis approach of Smith et al. (2008) in studying the language of violent and nonviolent groups. Specifically, instead of having a computer count a set of target words, these researchers had trained coders manually interpret and rate the communications of two terrorist groups (central al-Qaeda and al-Qaeda in the Arabian Peninsula) and two comparison groups that did not engage in terrorist violence (Hizb ut-Tahrir and the Movement for Islamic Reform in Arabia). Among some of the complex coding constructs examined were dominance values, which included any statements where subjects were judged to have or want power over others (see Smith, 2003; White, 1951), and affiliation motives, which included statements where subjects were judged to have a concern with establishing, maintaining, or restoring friendly relations with others (see Winter, 1994).

The results from their analyses and the results from previous studies on terrorist and matched control groups (Smith, 2004, 2008) showed some consistent findings. Specifically, the violent groups’ communications contained more references to morality, religion/culture, and aggression/dominance. The violent terrorist groups expressed less integrative complexity, more power motive imagery, and more in-group affiliation motive imagery than did the nonviolent groups. These effects were present in the language of violent terrorist groups even before they had engaged in terrorist acts, suggesting that these dimensions could potentially predict the likelihood of violence by a group (Smith, 2004). Further research is needed in order to assess whether these judge-based dimensions will be found at higher rates within a single real threat versus nonthreats from within an organization. In addition, this research would be more suitable for real-time or close to real-time analyses if the judge-based dimensions that are coded at high intercoder reliability rates could reliably be detected using computerized word pattern or word count indices.

Bluffs

Unlike a real threat, a bluff might contain markers of deception since it is one that is believed by the writer or speaker to be false. Although there are many instances of psychologists using deception in laboratory studies to experimentally manipulate states of anxiety, parents threatening to take naughty children to the police, and people threatening to leave their lovers, relatively few studies have examined the word patterns of bluffs.

Although an arguable form of bluffing, several studies have examined the psychology of people who attempt suicide versus those who complete suicide. Some researchers have argued that those who attempt but do not complete suicide have a different motivation—one that is focused on attracting the attention of others (e.g., Farberow and Shneidman, 1961).

If failed attempts at suicide are considered a form of bluffing, it might be thought that the suicide notes of attempters would be different from those of completers. A recent LIWC analysis of notes from 20 attempters and 20 completers found that there were, indeed, significant differences in word use between the two groups. Specifically, completers made more references to positive emotions and social connections and fewer references to death and religion than did attempters. The attempters (or, perhaps, bluffers) appeared to focus more on the suicidal act itself rather than the long-term implications for themselves and others (Handelman and Lester, 2007).

Latent Threats

A latent threat refers to the explicit planning of an aggressive action while at the same time concealing the planned action from the target. In Godfather terms this could be an example of keeping one’s friends close but one’s enemies closer. History, of course, is littered with examples of latent threats, from overtures by Hitler to England and Russia, the Spanish with the Aztecs, and probably most world leaders who have made a decision to go to war. Hogenraad (2003, 2005) and his colleagues (Hogenraad and Garagozav, 2008), for example, used a computer-based motive dictionary (motives that are typically assessed through judge-based thematic coding) to assess the language of leaders during periods of rising conflict. Interestingly, the same pattern of results was found across multiple real-world situations (e.g., in a commented chronology of events leading up to World War II, in Robert F. Kennedy’s memoirs of events before the Cuban missile crisis, and in President Saakashvili’s speeches during Georgia’s recent conflicts with the Russian Federation) and in published fiction (e.g., William Golding’s Lord of the Flies and Tolstoy’s War and Peace). It was found that the discrepancy between power and affiliation motives becomes greater as leaders approach wars. Specifically, power motives are identified by words such as ambition, conservatism, invade, legitimate, and recommend, and they increase in times before war. Affiliation motives are identified by such words as courteous, dad, indifference, mate, and thoughtful and decrease in relation to power motive words before times of war. These results are generally consistent with the findings of Smith et al. (2008) for violent terrorist and nonviolent groups.
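The power-affiliation discrepancy can be illustrated with a short Python sketch. This is not Hogenraad's motive dictionary or scoring procedure. The two word sets contain only the example words quoted above, and the scoring rule (power rate minus affiliation rate, in percentage points of total words) is an assumption made for illustration. The function names are hypothetical.

```python
import re

# Only the example words quoted in the text; a real motive dictionary is far larger.
POWER_WORDS = {"ambition", "conservatism", "invade", "legitimate", "recommend"}
AFFILIATION_WORDS = {"courteous", "dad", "indifference", "mate", "thoughtful"}


def motive_discrepancy(text):
    """Return the power rate minus the affiliation rate, in percentage points."""
    tokens = re.findall(r"[a-z]+", text.lower())
    if not tokens:
        return 0.0
    power = sum(token in POWER_WORDS for token in tokens)
    affiliation = sum(token in AFFILIATION_WORDS for token in tokens)
    return 100.0 * (power - affiliation) / len(tokens)


def discrepancy_series(labeled_texts):
    """Score (label, text) pairs that are already in chronological order."""
    return [(label, motive_discrepancy(text)) for label, text in labeled_texts]
```

Under these assumptions, a series that rises over successive speeches would correspond to the widening gap between power and affiliation language described above.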

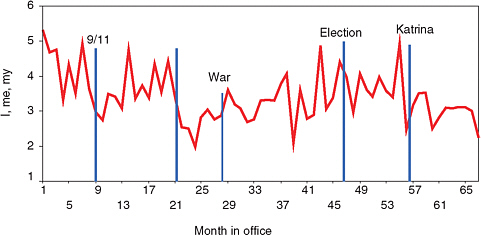

An example of a latent threat is that of President George W. Bush’s use of first-person singular pronouns during his (over 600) formal and informal press meetings over the course of his presidency (see Figure 1-1). Note that only press interactions in which he was speaking “off the cuff” rather than reading prepared remarks were analyzed. As can be seen in Figure 1-1, there was a large drop in use of the word I immediately after

FIGURE 1-1 President George W. Bush’s use of first-person singular pronouns during his time in office.

9/11 and again with Hurricane Katrina. Most striking were the drops that began in August 2002, just prior to Senate authorization of the use of force in Iraq. Perhaps this was when President Bush formally decided to go to war in Iraq, an event that caused attention to shift away from himself and on to a task instead. Similarly, the decision to go to war demands a certain degree of secrecy and deception. A leader who does not want to alert the enemy of his intentions must, by definition, talk in a more measured way so as not to reveal hostile intent. Similar observations have been made by other researchers concerning the language that leaders use in planning for wars.2 If true, one can begin to appreciate how word counts can betray intentions and future actions.

Analysis of the natural language of these political leaders highlights the ability of computerized word counts to reveal how people are attending and responding to their personal upheavals, relationship changes, and world events. A within-subject text analysis of public speeches over time bypassed the difficulties in traditional self-reports (i.e., personally seeking out these leaders in their top-secret hideouts to ask them to fill out questionnaires with minimal response biases).
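A within-subject analysis over time of the kind described here can be sketched as a pooled first-person singular rate per time period. The following Python sketch is illustrative only: the pronoun list is an assumption rather than the LIWC category, the function name is hypothetical, and the period labels are whatever the analyst supplies (for example, year and month strings).

```python
import re
from collections import defaultdict

# Illustrative pronoun list; not the LIWC first-person singular category.
FIRST_PERSON_SINGULAR = {"i", "me", "my", "mine", "myself"}


def i_rate_by_period(records):
    """Pool all texts in each period and return the percentage of words
    that are first-person singular, keyed by period in sorted order."""
    hits = defaultdict(int)
    totals = defaultdict(int)
    for period, text in records:
        # Keep contractions together, then reduce "i'm" or "i've" to "i".
        raw = re.findall(r"[a-z]+(?:'[a-z]+)?", text.lower())
        tokens = [token.split("'")[0] for token in raw]
        totals[period] += len(tokens)
        hits[period] += sum(token in FIRST_PERSON_SINGULAR for token in tokens)
    return {
        period: 100.0 * hits[period] / totals[period]
        for period in sorted(totals)
        if totals[period] > 0
    }
```

Pooling words within a period before dividing gives longer transcripts proportionally more weight, which is one reasonable choice; averaging per-transcript rates would be another.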

Nonthreats and the Nature of Genres

As noted earlier, text analysis of nonthreats is conceptually similar to the study of a control group. In other words, most studies on threats have relied on a nonthreat control group. Such a group assumes a reasonable degree of honesty in the communication stream. Ironically, any discussion of a nonthreat group raises a series of additional questions about the defining feature of honest communications.

People use language differently depending on the context of their speech. A person talking with a close friend uses language differently than when making an address to a nation. Communicating to a hostile audience typically involves a different set of words and sentence constructions than when speaking to admirers. Any computer analysis of threatening communication must take into account the context. For example, there is reason to believe that, for some people, honest formal speech can look remarkably similar to dishonest more informal language.

A comparison of word use as a function of genres was first reported in a pioneering study by Biber (1988). Using a factor analytic approach to word use, he found that different forms of writing (e.g., news stories, romance novels, telephone conversations) each had their own unique linguistic fingerprint. The present authors have amassed hundreds of thousands of texts spanning dozens of genres and found striking differences that reflect genre, the demographics of the speaker (e.g., age, sex, social class), the relationship between speaker and listener, and so forth (see Pennebaker et al., 2003; Chung and Pennebaker, 2007).

FUTURE DIRECTIONS

The use of text analysis to understand the psychology of threatening communications is just beginning. A small but growing number of experts in social/cognitive/personality psychology, communications, linguistics, computational linguistics, and computer science have only recently begun to realize the importance of linking relatively low level language use to much broader culture-relevant phenomena. Indeed, there is a sense that we are on the threshold of a paradigm shift in the social sciences. As can be gleaned from this review, very little research, to date, has focused specifically on computerized text analysis of threats. Several challenges and suggestions for future research are outlined below.

Practical and Structural Hurdles

For language research to reach the next level, a number of practical questions must be addressed. Many of these call for greater cooperation between researchers and practitioners in academia, corporations, and forensic field agents.

Cross-Discipline Cooperation

Cross-disciplinary and cross-institution research can lead to an exponential growth in understanding and predicting threatening behaviors. The ability to address socially relevant questions using large data banks will require the close cooperation of psychologists, computer scientists, and computational linguists in academia and in private companies. The ability to find, retrieve, store, and analyze large and quickly changing datasets requires expertise across multiple domains.

Creation of Shared-Text Databases

A pressing practical issue that must be addressed is access to data. First, by increasing access to data from naturally occurring threats from forensic investigations and across laboratories, researchers can start to build a more complete picture of threatening communications and compare text analytic methods in terms of their efficacy for assessing threat features. A consortium or text bank would have to include transcribed threats of all genres, across various modalities, of varying stakes, and in multiple languages, along with annotations for common features with other corpora in the text bank. In addition, the text bank would need to include language samples from nonthreats, such as transcripts for spoken language in everyday life (e.g., Mehl et al., 2001). The text bank could potentially be updated with annotations from study results, such as classification rates, psychological characteristics, and new findings as they are produced.

The collection and maintenance of a large threat-related data bank must include data from four very different types of sources. The first is an Internet base made up of hundreds of thousands of blogs and other frequently changing text samples. As potential threats appear in different parts of the world, a database that reflects the thoughts and feelings of a large group of people can be invaluable in learning the degree to which any socially shared upheaval is reflecting and/or leading a societal shift in attention or attitudes. Comparable publicly available databases should also be built for newspapers, letters to the editor, and so forth. These databases should be updated in near real time.

A second database should be a threat communications base. Some of the contents might be classified, but most should be open source. Such a database would include speeches, letters, historical communications, and position statements of leaders and formal and informal institutions. The database should include a corpus of individual-level threats (ransom notes, telephone transcripts, television interview transcripts) that can provide insight into how people threaten others. Such a database should also provide transcripts and background information on the people or groups being threatened.

A third database should include natural language samples. To date, very few real-world samples of people talking actually exist. The closest are transcribed telephone calls (as part of the Linguistic Data Consortium; see, e.g., Liberman, 2009), which are recorded in controlled laboratory settings. One strategy that has promise concerns use of the Electronically Activated Recorder (EAR; see, e.g., Mehl et al., 2001), a digital recorder that can record for 30 seconds every 12 to 13 minutes for several days. Mehl and others have now amassed daily recordings of hundreds of people, all of which have been transcribed. Using technologies such as the EAR, we can ultimately get natural instances of a broad range of human interactions, including those that involve threats.

Finally, threat-related experimental laboratory studies must be run and archived. A significant concern of the large-database approach to linking language with threatening communications is that it is ultimately correlational. That is, we can see how events can influence language changes. Similarly, we can determine how the language of one person may ultimately predict behaviors. The problem is that this approach is generally unable to determine if language is a causal variable. A president, for example, might use the pronoun I less often before going to war. However, the drop in I is not the reason or causal agent for the war. Curious social scientists will want to know what the drops signify. Such questions are most efficiently answered with laboratory experiments. An experimental laboratory study database could include both language samples from studies and any other data collected from the studies for further annotations.

Beyond the Words: Personality, Social Relationships, and Mental Health

On the surface, it might seem that the study of threatening communications should focus most heavily on the communications themselves. Indeed, it is important to know about the components of written or spoken threats. However, threats are made by individuals to other individuals within particular social contexts. It is critical that any language analyses of threatening communications explore the individual differences of the threateners and the threatened within the social context of the interactions.

To better assess the relationships between personality and language, individuals of all ages, socioeconomic status, and cultures must be sampled, along with any language samples that can be acquired from political leaders (e.g., Hart, 1984; Post, 2005; Suedfeld, 1994; Slatcher et al., 2007). Researchers should be encouraged to track threats that occur in everyday life. It is known from over 20 years of research studies conducted worldwide that, when asked to write about their deepest thoughts and feelings about a traumatic event, participants are often very willing to disclose vivid and personal details about highly stigmatizing traumas, such as disfigurement, the death of a loved one, incest, and rape (for a review, see Pennebaker and Chung, 2007). The ability to collect naturalistic evidence of long-term secrets and deception, then, is promising. Studies might come, for example, from e-mail records from individuals in the community who had kept a secret from a spouse, a lover, friends, or their boss that implied various levels of harm. Academics might also explore threats across various modalities in order to tap how a particular community or population experiences widespread threats, for example, from blogs, newspaper articles, or telephone calls (see Cohn et al., 2001, and Pennebaker and Chung, 2005).

There has been much research showing that the odds of violent or approach behavior increase when mental illness is present (Dietz et al., 1991; Douglas et al., 2009; Fazel et al., 2009; James et al., 2007, 2008, 2009; Mullen et al., 2008; Warren et al., 2007). Once empirical research has reliably identified the linguistic features of mental illness, future research can investigate the degree to which threats are communicated by individuals with various mental illnesses and disorders.

Culture and Language

Threats can come from individuals and groups of varying languages and cultures. The ability to assess a threatening communication in the same language it was produced is important because there are no perfect translations. Such communications must also be assessed within the context of cultural experts. Below, a text analytic approach is described that the present authors are developing to assess the psychology of speakers from other cultures and determine which features are lost or gained in translations.

The use of LIWC in psychological studies has extended beyond the United States, where it was originally developed. This has been made possible because the software includes a feature for the user to select an external dictionary to reference when analyzing text files. This feature, along with the ability of LIWC2007 to process Unicode text, has enabled processing of texts in many other languages. Currently, there are validated dictionaries available in Spanish (Ramirez-Esparza et al., 2007), German (Wolf et al., 2008), Dutch, Norwegian, Italian, and Korean. Versions in Chinese, French, Hungarian, Russian, and Turkish are in various phases of development. Note that each of these dictionaries has been developed using the LIWC2001 (Pennebaker et al., 2001) or LIWC2007 default dictionary categorization scheme. That is, words in other languages have been forced into the English language categorization scheme used in the LIWC2001 or LIWC2007 default dictionaries.

During the Arabic translation of the English default dictionary, the present authors and their colleagues also began to develop the first LIWC dictionary that was categorized entirely according to a foreign language’s grammatical scheme. Specifically, we created a LIWC dictionary according to an Arabic grammatical scheme (Hayeri et al., unpublished). Next, the dictionary was translated into English to make an English version of LIWC that would impose an Arabic categorization scheme onto English texts. Because each language affords somewhat different types of information, we should be able to see how the two languages provide different insights into the same texts. These sets of dictionaries have many potential applications in cross-cultural psychology, computational linguistics, and forensic psychology.

In the case of cross-cultural psychology, demographic or psychological characteristics could be assessed in translations or in documents for which the original language is unknown. With further validation work for language style markers for psychological features such as deception or psychopathy across translations, researchers who are familiar with only the English language could conduct analyses of foreign language texts using translations into English. News articles from a given Middle Eastern region could be assessed for demographic or psychological characteristics, without full proficiency in the original language of the article. Of course, having cultural experts on hand to understand the greater social context of any communication in which it originally occurred is important, but text analyses can sometimes offer an unbiased look at a given text.

In the case of computational linguistics, finding out whether, for example, language markers of sex differences are maintained in translations between Arabic and English could aid in investigations of author identification for translated documents. Note that computerized word patterning methods have already been successfully applied to authorship identification and characterization for Arabic and English extremist-group Web forums (Abbasi and Chen, 2005).

In the case of forensic psychology, if a translated text is presented to a researcher, it might be difficult to assess the demographic or psychological characteristics of the author if the author’s original text or language skills are unavailable or inaccessible. In another example, consider the case where some documents are available for a given subject in Arabic, while other documents are available only as English language translations. By using the set of Arabic LIWC dictionaries, it would be possible to assess certain features of language style in both texts and treat them as equivalent or be cautious in doing so in order to maximize the use of all available documents without translations. In forensic investigations this may be the case: Captured or overheard communications may be available in a given language, but more public communications might be more readily available in English. Having the set of dictionaries to combine the language samples could maximize the degree to which the results are reliable and representative of communications from a particular individual or group.

Clearly, more validation work is required to assess the use of the Arabic LIWC dictionaries for cross-language investigations. However, the approach laid out here can help in beginning to see the world through Arabic and English eyes using a simple word counting program for assessing language style. Although it is currently rare, some multidisciplinary labs have come together using a rapprochement of text analytic techniques to provide a more complete psychological profile in cross-language investigations (Graesser et al., 2009; Hancock et al., 2010). Future multidisciplinary research in cross-language investigations is encouraged.

Automated Classifiers: Social Language Processing

The language features reported here have been shown to be predictors for a variety of behaviors. However, in assessing the value of the findings and determining how they can be applied in future investigations of actual cases of predicting behaviors, a larger framework for systematically applying language techniques to behaviors is needed. The following section describes an interdisciplinary paradigm that seeks to build an automated classifier for predicting behaviors from natural language. Specifically, Hancock et al.’s 2010 Social Language Processing (SLP) paradigm, which represents a rapprochement of tools, techniques, and theories from computational linguistics, communications, discourse processing, and social and personality psychology, is described.

SLP has only recently been introduced by Hancock et al. (2010) as a paradigm for predicting behaviors from language. Broadly, it consists of three stages: (1) linguistic feature identification, (2) linguistic feature extraction, and (3) statistical classifier development. The first stage, linguistic feature identification, involves finding theoretically or empirically known grammatical or psychological features of language at the word or phrase level that might be associated with the behavior or construct in question. Hancock et al. (2010) gave the example of first-person singular pronouns, or I, in the case of deception, since liars tend to divert focus from themselves in a lie. In the second stage, linguistic feature extraction, texts whose social features are known are examined for the language feature from stage 1. In the case of deception, court transcripts (described earlier), laboratory studies (e.g., Newman et al., 2003), and political speeches (e.g., Hancock et al., unpublished) known to include deceptive and nondeceptive texts have been assessed for rates of first-person singular pronoun use; it has been shown that lies are indeed associated with a decrease in I usage (for a review, see Hancock et al., 2008).

Finally, in the third stage, classifier development, a series of stages is used to classify texts according to the social construct in question and to automatically and inductively assess texts for additional language features that might improve classifier performance. Again, returning to the case of deception, the classifiers would be run on documents known to be deceptive or not, additional features that predict deception could be assessed, and then future documents of unknown verity could be assessed using the same classifier for the probability that the new document is deceptive or not.
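The three stages can be sketched in a few lines of Python. This is a deliberately reduced illustration, not the classifier of Hancock et al. (2010). It uses a single feature (the first-person singular rate), it substitutes a plain logistic regression fitted by gradient descent for whatever statistical classifier a real system would use, and it omits the inductive search for additional features. The pronoun list, function names, learning rate, and number of epochs are all assumptions made for illustration.

```python
import math
import re

# Stage 1: feature identification. The chapter's example is first-person
# singular pronoun use; this short list is illustrative, not LIWC's.
FIRST_PERSON_SINGULAR = {"i", "me", "my", "mine", "myself"}


def extract_feature(text):
    """Stage 2: feature extraction. Percent of words that are first-person singular."""
    raw = re.findall(r"[a-z]+(?:'[a-z]+)?", text.lower())
    tokens = [token.split("'")[0] for token in raw]  # "i'm" counts as "i"
    if not tokens:
        return 0.0
    return 100.0 * sum(t in FIRST_PERSON_SINGULAR for t in tokens) / len(tokens)


def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))


def train_logistic(features, labels, lr=0.001, epochs=5000):
    """Stage 3: fit P(label = 1 | x) = sigmoid(w * x + b) by batch gradient descent.
    Labels are 1 for texts known to be deceptive and 0 for texts known to be truthful."""
    w, b = 0.0, 0.0
    n = len(features)
    for _ in range(epochs):
        grad_w = grad_b = 0.0
        for x, y in zip(features, labels):
            error = sigmoid(w * x + b) - y
            grad_w += error * x
            grad_b += error
        w -= lr * grad_w / n
        b -= lr * grad_b / n
    return w, b


def predict_probability(text, w, b):
    """Probability, under the fitted model, that a new text belongs to class 1."""
    return sigmoid(w * extract_feature(text) + b)
```

The output is a probability rather than a verdict, and with a single feature and a handful of training texts it would be far too noisy for any practical use.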

Note that there are several features of SLP that make it a suitable approach to be developed and applied for investigations of threatening communications and actual behavior. First, SLP is empirically based. That is, SLP draws from theories and case studies and from previous and continuing research on large numbers of texts.

Second, SLP learns. Each of SLP’s stages can inform the others and be recursive, meaning that the classifier for each construct can be continually improved for accuracy and detection of features with additional data. For example, in the third stage, classifier development, the unsupervised machine learning techniques for inductively identifying linguistic features associated with a given construct can be especially helpful in examining communications in another language for which linguistic features signaling a social or psychological construct are as yet unknown (Hancock et al., 2010).

Third, SLP is probabilistic. In the prediction of behaviors, only probabilistic, not absolute, predictions can be made. To say that there are only a few features that predict a particular behavior and that these are completely and accurately conveyed through a set of known language features would oversimplify the complexities of human behavior and the ways in which natural language is produced. In the case of deception, human judges can barely assess deception above chance levels regardless of expertise or confidence in making such assessments (Newman et al., 2003; Vrij, 2008). Word counts have detected deception at rates that are much higher than chance (approximately 67 to 77 percent). Even these rates should not be taken too seriously. Most laboratory-based deception studies are conducted in a gamelike atmosphere, where the experimenters maintain tight control over the information and setting and rely on participants who are typically quite similar to one another and where a ground truth of 50 percent is known. The regularities found in deceptive communications even within highly curated datasets are probabilistic and with considerable error, requiring even greater caution when identifying deception in the real world. Any models of predicting behaviors must be evaluated by the rates at which they can accurately classify behaviors above chance occurrence (i.e., 50 percent) in more complicated real-world settings.
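Whether a classification rate exceeds chance can be checked with an exact binomial tail probability. The Python sketch below is one simple way to do so, not a method reported in the studies cited above. The function names are hypothetical, and the default chance level of 0.5 assumes a balanced set of documents, as in the laboratory designs described here.

```python
from math import comb


def accuracy(predictions, truths):
    """Proportion of predictions that match the known labels."""
    if len(predictions) != len(truths) or not truths:
        raise ValueError("predictions and truths must be non-empty and equal in length")
    return sum(p == t for p, t in zip(predictions, truths)) / len(truths)


def p_value_above_chance(n_correct, n_total, chance=0.5):
    """One-sided exact binomial probability of getting at least n_correct
    documents right out of n_total by guessing at the given chance rate."""
    return sum(
        comb(n_total, k) * chance**k * (1 - chance) ** (n_total - k)
        for k in range(n_correct, n_total + 1)
    )
```

For instance, `p_value_above_chance(67, 100)` gives the probability of classifying at least 67 of 100 balanced documents correctly by guessing alone. Outside the laboratory the two classes are rarely balanced, so the `chance` argument would need to reflect the actual base rate rather than 0.5.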

Finally, SLP is deliverable. That is, the tools and techniques that SLP uses to identify and to assess linguistic features for a given construct are the same tools and techniques that would be applied to a new document. These tools and techniques are mostly free and publicly available or can be purchased online for a couple hundred dollars. The techniques require some programming skills, but these steps can be made into a Windows-based program by which most any layperson could upload a document and the computer would display a number associated with the probability that the document is either x or y (e.g., likely to lead to actual behavior or not). This is especially important for real-time or close to real-time investigations of threatening communications for which interventions are needed immediately. Note, again, that the ultimate contribution of text analyses of threatening communications will come from the degree to which text analysis informs us about the underlying psychology of the actors.

CONCLUSION

There has been little work so far on computerized text analysis of threatening communications. Nevertheless, several studies across several disciplines have demonstrated that word use is reliably linked to psychological states and that the underlying psychology of a speaker or author can be revealed through text analysis. With continued research on the basic relationships between natural language use and psychological states, a shared open-source or consortium-style text bank on threatening communications, and a multidisciplinary effort in building models to assess the probability of harm arising from threats, much progress can be made. In assessing a threatening communication it is important to understand the psychology of the threat’s deliverer and receiver, especially in light of the culture in which it occurs. Our responses to threats, then, can be better informed, as threatening communications dynamically unfold. With the use of text analytic tools, quick and reliable assessments and interventions may be possible.

REFERENCES

Abbasi, A., and H. Chen. 2005. Applying authorship analysis to extremist-group Web forum messages. IEEE Intelligent Systems, 20(5):67-75.

Adams, S.H. 2002. Communication Under Stress: Indicators of Veracity and Deception in Written Narratives. Unpublished doctoral dissertation, Virginia Polytechnic Institute and State University, Blacksburg.

Adams, S. 2004. Statement analysis: Beyond the words. FBI Law Enforcement Bulletin, 73(April):22-23.

Atkinson, J.W., and D.C. McClelland. 1948. The projective expression of needs. II. The effect of different intensities of hunger drive on Thematic Apperception. Journal of Experimental Psychology, 38(6):643-658.

Biber, D. 1988. Variation Across Speech and Writing. Cambridge, UK: Cambridge University Press.

Biber, D., S. Conrad, and R. Reppen. 1998. Corpus Linguistics: Investigating Language Structure and Use. Cambridge, UK: Cambridge University Press.

Bond, G.D., and A.Y. Lee. 2005. Language of lies in prison: Linguistic classification of prisoners’ truthful and deceptive natural language. Applied Cognitive Psychology, 19(3):313-329.

Bucci, W., and B. Maskit. 2005. Building a weighted dictionary for referential activity. In Y. Qu, J.G. Shanahan, and J. Wiebe, eds., Computing Attitude and Affect in Text (pp. 49-60). Dordrecht, The Netherlands: Springer.

Bucci, W., and B. Maskit. 2007. Beneath the surface of the therapeutic interaction: The psychoanalytic method in modern dress. Journal of the American Psychoanalytic Association, 55:1355-1397.

Burgoon, J.K., and D.B. Buller. 2008. Interpersonal deception theory. In L.A. Baxter and D.O. Braithwaite, eds., Engaging Theories in Interpersonal Communication: Multiple Perspectives (pp. 227-239). Thousand Oaks, CA: Sage Publications.

Buss, D.M., ed. 2005. The Handbook of Evolutionary Psychology. Hoboken, NJ: John Wiley and Sons.

Chung, C.K., and J.W. Pennebaker. 2007. The psychological functions of function words. In K. Fiedler, ed., Social Communication (pp. 343-359). New York: Psychology Press.

Chung, C.K., and J.W. Pennebaker. 2008. Revealing dimensions of thinking in open-ended self-descriptions: An automated meaning extraction method for natural language. Journal of Research in Personality, 42:96-132.

Chung, C.K., and J.W. Pennebaker. Unpublished. Predicting weight loss in blogs using computerized text analysis. Derived from Ph.D. thesis of the same name, by C.K. Chung. Available: http://repositories.lib.utexas.edu/bitstream/handle/2152/6541/chungc16811.pdf?sequence=2 [accessed October 2010].

Chung, C.K., C. Jones, A. Liu, and J.W. Pennebaker. 2008. Predicting success and failure in weight loss blogs through natural language use. Proceedings of the International Conference on Weblogs and Social Media (ICWSM 2008), pp. 180-181.

Cohn, M.A., M.R. Mehl, and J.W. Pennebaker. 2001. Linguistic markers of psychological change surrounding September 11, 2001. Psychological Science, 15:687-693.

Dietz, P.E., D.B. Matthews, C. Van Duyne, D.A. Martell, C.D.H. Parry, T. Stewart, J. Warren, and J.D. Crowder. 1991. Threatening and otherwise inappropriate letters to Hollywood celebrities. Journal of Forensic Sciences, 36:185-209.

Douglas, K.S., L.S. Guy, and S.D. Hart. 2009. Psychosis as a risk factor for violence to others: A meta-analysis. Psychological Bulletin, 135(5):679-706.

Farberow, N.L., and E.S. Shneidman, eds. 1961. The Cry for Help. New York: McGraw-Hill.

Fazel, S., G. Gulati, L. Linsell, J.R. Geddes, and M. Grann. 2009. Schizophrenia and violence: Systematic review and meta-analysis. PLoS Med, 6(8):1-15, article number e1000120.

Fazio, R.H., and M.A. Olson. 2003. Implicit measures in social cognition research: Their meaning and use. Annual Review of Psychology, 54:297-327.

Foltz, P.W. 1996. Latent semantic analysis for text-based research. Behavior Research Methods, Instruments, and Computers, 28(2):197-202.

Gottschalk, L.A. 1997. The unobtrusive measurement of psychological states and traits. In C.W. Roberts, ed., Text Analysis for the Social Sciences: Methods for Drawing Statistical Inferences from Texts and Transcripts (pp. 117-129). Mahwah, NJ: Erlbaum.

Gottschalk, L.A., M.K. Stein, and D.H. Shapiro. 1997. The application of computerized content analysis of speech to the diagnostic process in a psychiatric outpatient clinic. Journal of Clinical Psychology, 53(5):427-441.

Graesser, A.C., D.S. McNamara, M.M. Louwerse, and Z. Cai. 2004. Coh-Metrix: Analysis of text on cohesion and language. Behavior Research Methods, Instruments, and Computers, 36:193-202.

Graesser, A.C., L. Han, M. Jeon, J. Myers, J. Kaltner, Z. Cai, P. McCarthy, L. Shala, M. Louwerse, X. Hu, V. Rus, D. McNamara, J. Hancock, C. Chung, and J. Pennebaker. 2009. Cohesion and classification of speech acts in Arabic discourse. Paper presented at the Society for Text and Discourse, Rotterdam, The Netherlands.

Hall, J.A., E.J. Coats, and L. Smith LeBeau. 2005. Nonverbal behavior and the vertical dimension of social relations: A meta-analysis. Psychological Bulletin, 131(6):898-924.

Hancock, J.T., L. Curry, S. Goorha, and M.T. Woodworth. 2008. On lying and being lied to: A linguistic analysis of deception. Discourse Processes, 45(1):1-23.

Hancock, J.T., D.I. Beaver, C.K. Chung, J. Frazee, J.W. Pennebaker, A.C. Graesser, and Z. Cai. 2010. Social language processing: A framework for analyzing the communication of terrorists and authoritarian regimes. Behavioral Sciences of Terrorism and Political Aggression, Special Issue: Memory and Terrorism, 2:108-132.

Hancock, J.T., N.N. Bazarova, and D. Markowitz. Unpublished. A linguistic analysis of Bush administration statements on Iraq. J.T. Hancock, Department of Communication, Cornell University.

Handelman, L.D., and D. Lester. 2007. The content of suicide notes from attempters and completers. Crisis, 28(2):102-104.

Hart, R.P. 1984. Verbal Style and the Presidency: A Computer-Based Analysis. New York: Academic Press.

Hayeri, N., C.K. Chung, and J.W. Pennebaker. Unpublished. Computer-based text analysis across cultures: Viewing language samples through English and Arabic eyes. C.K. Chung and J.W. Pennebaker, Department of Psychology, University of Texas, Austin.

Heckhausen, H. 1963. Eine Rahmentheorie der Motivation in zehn Thesen [A theoretical framework of motivation in 10 theses]. Zeitschrift für Experimentelle und Angewandte Psychologie, 10:604-626.

Hogenraad, R. 2003. The words that predict the outbreak of wars. Empirical Studies of the Arts, 21:5-20.

Hogenraad, R. 2005. What the words of war can tell us about the risk of war. Peace and Conflict: Journal of Peace Psychology, 11(2):137-151.

Hogenraad, R., and R. Garagozov. 2008. The Age of Divergence: Georgia and the Lost Certainties of the West. Report to the Sixth General Meeting, World Public Forum, “Dialogue of Civilizations,” Rhodes, Greece.

Hui, N.H.H., V.W.K. Tang, G.H.H. Wu, and B.C.P. Lam. 2009. ON-line to OFF-life? Linguistic comparison of suicide completer and attempter’s online diaries. Paper presented at the International Conference on Psychology in Modern Cities, Hong Kong.

James, D.V., P.E. Mullen, J.R. Meloy, M.T. Pathe, F.R. Farnham, L. Preston, and B. Darnley. 2007. The role of mental disorder in attacks on European politicians, 1990-2004. Acta Psychiatrica Scandinavica, 116:334-344.

James, D.V., P.E. Mullen, M.T. Pathe, J.R. Meloy, F.R. Farnham, L. Preston, and B. Darnley. 2008. Attacks on the British Royal Family: The role of psychotic illness. Journal of the American Academy of Psychiatry and the Law, 36(1):59-67.

James, D.V., P.E. Mullen, M.T. Pathe, J.R. Meloy, L.F. Preston, B. Darnley, and F.R. Farnham. 2009. Stalkers and harassers of royalty: The role of mental illness and motivation. Psychological Medicine, 39(9):1479-1490.

Junghaenel, D.U., J.M. Smyth, and L. Santner. 2008. Linguistic dimensions of psychopathology: A quantitative analysis. Journal of Social and Clinical Psychology, 27(1):36-55.

Landauer, T.K., and S.T. Dumais. 1997. A solution to Plato’s problem: The latent semantic analysis theory of acquisition, induction, and representation of knowledge. Psychological Review, 104(2):211-240.

Landauer, T.K., P.W. Foltz, and D. Laham. 1998. An introduction to latent semantic analysis. Discourse Processes, 25(2-3):259-284.

Liberman, M. 2009. The Linguistic Data Consortium. Philadelphia: University of Pennsylvania. Available: http://www.ldc.upenn.edu [accessed April 2010].

McAdams, D.P. 2001. The psychology of life stories. Review of General Psychology, 5(2):100-122.

Mehl, M., J.W. Pennebaker, D.M. Crow, J. Dabbs, and J. Price. 2001. The Electronically Activated Recorder (EAR): A device for sampling naturalistic daily activities and conversations. Behavior Research Methods, Instruments, and Computers, 33(4):517-523.

Mehl, M.R., S.D. Gosling, and J.W. Pennebaker. 2006. Personality in its natural habitat: Manifestations and implicit folk theories of personality in daily life. Journal of Personality and Social Psychology, 90:862-877.

Mergenthaler, E. 1996. Emotion-abstraction patterns in verbatim protocols: A new way of describing psychotherapeutic processes. Journal of Consulting and Clinical Psychology, 64(6):1306-1315.

Mullen, P.E., D.V. James, J.R. Meloy, M.T. Pathe, F.R. Farnham, L. Preston, and B. Darnley. 2008. The role of psychotic illness in attacks on public figures. In J.R. Meloy, L. Sheridan, and J. Hoffman, eds., Stalking, Threatening and Attacking Public Figures (pp. 55-82). New York, NY: Oxford University Press.

Newman, M.L., J.W. Pennebaker, D.S. Berry, and J.M. Richards. 2003. Lying words: Predicting deception from linguistic style. Personality and Social Psychology Bulletin, 29(5):665-675.

Newman, M.L., C.J. Groom, L.D. Handelman, and J.W. Pennebaker. 2008. Gender differences in language use: An analysis of 14,000 text samples. Discourse Processes, 45:211-246.

Oberlander, J., and A.J. Gill. 2006. Language with character: A stratified corpus comparison of individual differences in e-mail communication. Discourse Processes, 42(3):239-270.

Oxman, T.E., S.D. Rosenberg, P.P. Schnurr, and G.J. Tucker. 1982. Diagnostic classification through content analysis of patients’ speech. American Journal of Psychiatry, 145:464-468.

Pennebaker, J.W., and C.K. Chung. 2005. Tracking the social dynamics of responses to terrorism: Language, behavior, and the Internet. In S. Wessely and V.N. Krasnov, eds., Psychological Responses to the New Terrorism: A NATO Russia Dialogue (pp. 159-170). Amsterdam, The Netherlands: IOS Press.

Pennebaker, J.W., and C.K. Chung. 2007. Expressive writing, emotional upheavals, and health. In H. Friedman and R. Silver, eds., Handbook of Health Psychology (pp. 263-284). New York: Oxford University Press.

Pennebaker, J.W., and C.K. Chung. 2008. Computerized text analysis of al-Qaeda statements. In K. Krippendorff and M. Bock, eds., A Content Analysis Reader (pp. 453-466). Thousand Oaks, CA: Sage.

Pennebaker, J.W., and L.A. King. 1999. Linguistic styles: Language use as an individual difference. Journal of Personality and Social Psychology, 77(6):1296-1312.

Pennebaker, J.W., and L.D. Stone. 2003. Words of wisdom: Language use over the lifespan. Journal of Personality and Social Psychology, 85:291-301.

Pennebaker, J.W., M.E. Francis, and R.J. Booth. 2001. Linguistic Inquiry and Word Count: LIWC 2001. Mahwah, NJ: Erlbaum Publishers.

Pennebaker, J.W., M.R. Mehl, and K. Niederhoffer. 2003. Psychological aspects of natural language use: Our words, our selves. Annual Review of Psychology, 54:547-577.

Pennebaker, J.W., R.J. Booth, and M.E. Francis. 2007. Linguistic Inquiry and Word Count (LIWC2007). Available: http://www.liwc.net [accessed April 2010].

Peterson, C. 1992. Explanatory style: Motivation and personality. In C.P. Smith, J.W. Atkinson, D.C. McClelland, and J. Veroff, eds., Handbook of Thematic Content Analysis (pp. 376-382). New York: Cambridge University Press.

Popping, R. 2000. Computer-Assisted Text Analysis. Thousand Oaks, CA: Sage Publications.

Post, J.M. 2005. The Psychological Assessment of Political Leaders: With Profiles of Saddam Hussein and Bill Clinton. Ann Arbor, MI: University of Michigan Press.

Post, J.M., K.G. Ruby, and E.D. Shaw. 2002. The radical group in context: 1. An integrated framework for the analysis of group risk for terrorism. Studies in Conflict and Terrorism, 25(2):73-100.

Post, J.M., E. Sprinzak, and L.M. Denny. 2009. The terrorists in their own words: Interviews with incarcerated Middle Eastern terrorists. In J. Victoroff and A.W. Kruglanski, eds., Psychology of Terrorism: Classic and Contemporary Insights (pp. 109-117). New York: Psychology Press.

Prochaska, J.O., C.C. DiClemente, and J.C. Norcross. 1992. In search of how people change: Applications to addictive behaviors. American Psychologist, 47(9):1102-1114.

Prochaska, J.O., J.C. Norcross, and C.C. DiClemente. 1995. Changing for Good. New York: Avon.

Ramirez-Esparza, N., J.W. Pennebaker, A.F. Garcia, and R. Suria. 2007. La psicología del uso de las palabras: Un programa de computadora que analiza textos en Español [The psychology of word use: A computer program that analyzes text in Spanish]. Revista Mexicana de Psicología, 24:85-99.

Ramirez-Esparza, N., C.K. Chung, E. Kacewicz, and J.W. Pennebaker. 2008. The psychology of word use in depression forums in English and in Spanish: Testing two text analytic approaches. Proceedings of the 2008 International Conference on Weblogs and Social Media, pp. 102-108, Menlo Park, CA: The AAAI Press.