Reference Guide on Neuroscience

Henry T. Greely, J.D., is Deane F. and Kate Edelman Johnson Professor of Law, Professor, by courtesy, of Genetics, and Director of the Center for Law and the Biosciences, Stanford University, Stanford, California.

Anthony D. Wagner, Ph.D., is Associate Professor of Psychology and Neuroscience, Stanford University, Stanford, California.

CONTENTS

C. Some Aspects of How the Brain Works

III. Some Common Neuroscience Techniques

3. MRI—structural and functional

2. Transcranial magnetic stimulation (TMS)

3. Deep brain stimulation (DBS)

4. Implanted microelectrode arrays

IV. Issues in Interpreting Study Results

B. Problems in Experimental Design

C. The Number and Diversity of Subjects

D. Applying Group Averages to Individuals

V. Questions About the Admissibility and the Creation of Neuroscience Evidence

2. Rule 702 and the admissibility of scientific evidence

4. Other potentially relevant evidentiary issues

B. Constitutional and Other Substantive Rules

1. Possible rights against neuroscience evidence

2. Possible rights to the creation or use of neuroscience evidence

VI. Examples of the Possible Uses of Neuroscience in the Courts

1. Issues involved in the use of fMRI-based lie detection in litigation

2. Two cases involving fMRI-based lie detection

Science’s understanding of the human brain is increasing exponentially. We know almost infinitely more than we did 30 years ago; however, we know almost nothing compared with what we are likely to know 30 years from now. The results of advances in understanding human brains—and of the minds they generate—are already beginning to appear in courtrooms. If, as neuroscience indicates, our mental states are produced by physical states of our brain, our increased ability to discern those physical states will have huge implications for the law. Lawyers already are introducing neuroimaging evidence as relevant to questions of individual responsibility, such as claims of insanity or diminished responsibility, either on issues of liability or of sentencing. In May 2010, parties in two cases sought to introduce neuroimaging in court as evidence of honesty; we are also beginning to see efforts to use it to prove that a person is in pain. These and other uses of neuroscience are almost certain to increase with our growing knowledge of the human brain as well as continued technological advances in accurately and precisely measuring the brain. This chapter strives to give judges some background knowledge about neuroscience and the strengths and weaknesses of its possible applications in litigation in order to help them become better prepared for these cases.1

The chapter begins with a brief overview of the structure and function of the human brain. It then describes some of the tools neuroscientists use to understand the brain—tools likely to produce findings that parties will seek to introduce in court. Next, it discusses a number of fundamental issues that must be considered when interpreting neuroscientific findings. Finally, after discussing, in general, the issues raised by neuroscience-based evidence, the chapter concludes by analyzing a few illustrative situations in which neuroscientific evidence is likely to appear in court in the future.

This abbreviated and simplified discussion of the human brain describes the cellular basis of the nervous system, the structure of the brain, and finally our current understanding of how the brain works. More detailed, but still accessible, information about the human brain can be found in academic textbooks and in popular books for general audiences.2

1. The Law and Neuroscience Project, funded by the John D. and Catherine T. MacArthur Foundation, is preparing a book about law and neuroscience for judges, which should be available by 2011. A Primer on Neuroscience (Stephen Morse & Adina Roskies eds., forthcoming 2011). The Project has already published a pamphlet written by neuroscientists for judges, with brief discussions of issues relevant to law and neuroscience. A Judge’s Guide to Neuroscience: A Concise Introduction (M.S. Gazzaniga & J.S. Rakoff eds., 2010). One early book on a broad range of issues in law and neuroscience also deserves mention: Neuroscience and the Law: Brain, Mind, and the Scales of Justice (Brent Garland ed., 2004).

Like most of the human body, the nervous system is made up of cells. Adult humans contain somewhere between 50 trillion and 100 trillion human cells. Each of those cells is both individually alive and part of a larger living organism.

Each cell in the body (with rare exceptions) contains each person’s entire complement of human genes—his or her genome. The genes, found on very long molecules of deoxyribonucleic acid (DNA) that make up a human’s 46 chromosomes, work by leading the cells to make other molecules, notably proteins and ribonucleic acid (RNA). We now believe that there are about 23,000 human genes. Cells are different from each other not because they contain different genes but because they turn on and off different sets of genes. All human cells seem to use the same group of several thousand “housekeeping” genes that run the cell’s basic machinery, but skin cells, kidney cells, and brain cells differ in which other genes they use. Scientists count different numbers of “types” of human cells, with estimates ranging from a few hundred to a few thousand (depending largely on how narrowly or broadly one defines a cell type).

The most important cells in the nervous system are called neurons. Neurons pass messages from one neuron to another in a complex way that appears to be responsible for brain function, conscious or otherwise.

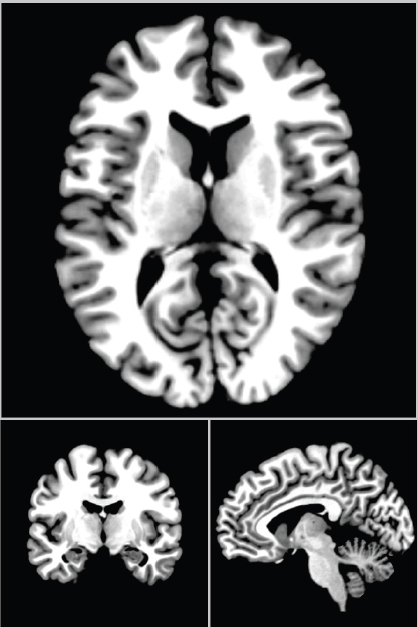

Neurons (Figure 1) come in many sizes, shapes, and subtypes (with their own names), but they generally have three features: a cell body (or “soma”), short extensions called dendrites, and a longer extension called an axon. The cell body contains the nucleus of the cell, which in turn contains the 46 chromosomes with the cell’s DNA. The dendrites and axons both reach out to make connections with other neurons. The dendrites generally receive information from other neurons; the axons send information.

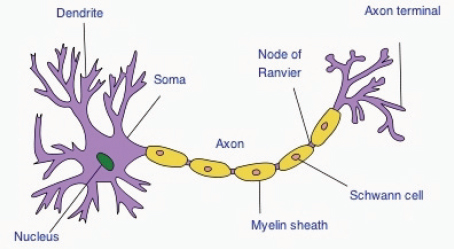

Communication between neurons occurs at areas called synapses (Figure 2), where two neurons almost meet. At a synapse, the two neurons will come within less than a micrometer (a millionth of a meter) of each other, with the presynaptic side, on the axon, separated from the postsynaptic side, on the dendrite, by a gap called the synaptic cleft. At synapses, when the axon (on the presynaptic side) “fires” (becomes active) it releases molecules, known as neurotransmitters, into the synaptic cleft. Some of those molecules are picked up by special receptors on the dendrite that is on the postsynaptic side of the cleft. More than 100 different neurotransmitters have been identified; among the best known are dopamine, serotonin, glutamate, and acetylcholine.

2. The Society for Neuroscience, the very large scholarly society that covers a wide range of brain science, has published a brief and useful primer about the human brain called Brain Facts. The most recent edition, published in 2008, is available free at www.sfn.org/index.aspx?pagename=brainfacts.

Some particularly interesting books about various aspects of the brain written for a popular audience include Oliver W. Sacks, The Man Who Mistook His Wife for a Hat and Other Clinical Tales (1990); Antonio R. Damasio, Descartes’ Error: Emotion, Reason, and the Human Brain (1994); Daniel L. Schacter, Searching for Memory: The Brain, the Mind, and the Past (1996); Joseph E. LeDoux, The Emotional Brain: The Mysterious Underpinnings of Emotional Life (1996); Christopher D. Frith, Making Up the Mind: How the Brain Creates Our Mental World (2007); and Sandra Aamodt & Sam Wang, Welcome to Your Brain: Why You Lose Your Car Keys But Never Forget How to Drive and Other Puzzles of Everyday Life (2008).

Figure 1. Schematic of the typical structure of a neuron.

Source: Quasar Jarosz at en.wikipedia.

At the postsynaptic side of the cleft, neurotransmitters binding to the receptors can have a wide range of effects. Sometimes they cause the receiving (postsynaptic) neuron to “fire,” sometimes they suppress (inhibit) the postsynaptic neuron from firing, and sometimes they seem to do neither. The response of the receiving neuron is a complicated summation of the various messages it receives from multiple neurons that converge, through synapses, on its dendrites.
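The idea of summation can be made concrete with a toy calculation. The sketch below is only an illustration under a strong simplifying assumption: each incoming synapse is given a single number (positive for excitatory, negative for inhibitory), and the receiving neuron "fires" when the total from the active inputs reaches a threshold. The function name, the weights, and the threshold are all hypothetical; real neurons integrate their inputs over time and space in far more complicated ways.

```python
def postsynaptic_response(inputs, threshold=1.0):
    """Toy model: add up the signed contributions of the inputs that fired.

    inputs    -- list of (fired, weight) pairs; weight > 0 is excitatory,
                 weight < 0 is inhibitory (hypothetical values)
    threshold -- total input needed for the receiving neuron to fire
    Returns (fires, total).
    """
    total = sum(weight for fired, weight in inputs if fired)
    return total >= threshold, total


# Two excitatory inputs firing together are enough to reach the threshold.
excitatory_only = [(True, 0.75), (True, 0.75), (False, -1.0)]
print(postsynaptic_response(excitatory_only))

# The same excitatory inputs, plus an active inhibitory input, are not.
with_inhibition = [(True, 0.75), (True, 0.75), (True, -1.0)]
print(postsynaptic_response(with_inhibition))
```

In this toy version the first case fires and the second does not, which mirrors the point in the text: whether the receiving neuron fires depends on the combined effect of all the messages arriving at its dendrites, not on any single input.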

A neuron that does fire does so by generating an electrical current that flows down (away from the cell body) the length of its axon. We normally think of electrical current as flowing in things like copper wiring. In that case, free electrons move down the wire. The electrical currents of neurons are more complicated. Molecules with a positive or negative electrical charge (ions) move through the neuron’s membrane and create differences in the electrical charge between the inside and outside of the neuron, with the current traveling along the axon, rather like a fire brigade passing buckets of water in only one direction down the line. Firing occurs in milliseconds. This process of moving ions in and out of the cell membrane requires that the cell use large amounts of energy. When the current reaches the end of the axon, it may or may not cause the axon to release neurotransmitters into the synaptic cleft. This complicated part-electrical, part-chemical system is how information passes from one neuron to another.

Figure 2. Synapse. Communication between neurons occurs at the synapse, where the sending (presynaptic) and receiving (postsynaptic) neurons meet. When the presynaptic neuron fires, it releases neurotransmitters into the synaptic cleft, which bind to receptors on the postsynaptic neuron.

Source: From Carlson. Carlson, Neil R. Foundations of Physiological Psychology (with Neuroscience Animations and Student Study Guide CD-ROM), 6th. © 2005. Printed and electronically reproduced by permission of Pearson Education, Inc., Upper Saddle River, New Jersey.

The axons of human neurons are all microscopically narrow, but they vary enormously in length. Some are micrometers long; others, such as those of neurons running from the base of the spinal cord to the toes, are several feet long. Longer axons tend to be coated with a fatty substance called myelin. Myelin helps insulate the axon and thus increases the strength and efficiency of the electrical signal, much like the insulation wrapped around a copper wire. (The destruction of this myelin sheathing underlies the symptoms of multiple sclerosis.) Axons coated with myelin appear white; thus areas of the nervous system that have many myelin-coated axons are referred to as “white matter.” Cell bodies, by contrast, look gray, and so areas with many cell bodies and relatively few axons make up our “gray matter.” White matter can roughly be thought of as the wiring that connects gray matter to the rest of the body or to other areas of gray matter.

What we call nerves are really bundles of neurons. For example, we all have nerves that run down our arms to our fingers. Some of those nerves consist of neurons that pass messages from the fingers, up the arm, to other neurons in the spinal cord that then pass the messages on to the brain, where they are analyzed and experienced. This is how we feel things with our fingers. Other nerves are bundles of neurons that pass messages from the brain through the spinal cord to nerves that run down the arms to the fingers, telling them when and how to move.

Neurons can connect with other neurons or with other kinds of cells. Neurons that control body movements ultimately connect to muscle cells—these are called motor neurons. Neurons that feed information into the brain start with specialized sensory cells (i.e., cells specialized for detecting different types of stimuli—light, touch, heat, pain, and more) that fire in response to the appropriate stimulus. Their firings ultimately lead, directly or through other neurons, into the brain. These are sensory neurons. These neurons send information only in one direction—motor neurons ultimately from the brain, sensory neurons to the brain. The paralysis caused by, for example, severe damage to the spinal cord both prevents the legs from receiving messages to move that would come from the brain through the motor neurons and keeps the brain from receiving messages from sensory neurons in the legs about what the legs are experiencing. The break in the spinal cord prevents the messages from getting through, just as a break in a local telephone line will keep two parties from connecting. (There are, unfortunately, not yet any human equivalents to wireless service.)

Estimates of the number of cells in a human brain vary widely, from a few hundred billion to several trillion. These cells include those that make up blood vessels and various connective tissues in the brain, but most of them are specialized brain cells. About 80 billion to 100 billion of these brain cells are neurons; the other cells (and the source of most of the uncertainty about the number of cells) are principally another class of cells referred to generally as glial cells. Glial cells play many important roles in the brain, including, for example, producing and maintaining the myelin sheaths that insulate axons and serving as a special immune system for the brain. The full importance of glial cells is still being discovered; emerging data suggest that they may play a larger role in mental processes than as “support staff.” At this point, however, we concentrate on neurons, the brain structures they form, and how those structures work.

Anatomists refer to the brain, the spinal cord, and a few other nerves directly connecting to the brain as the central nervous system. All the other nerves are part of the peripheral nervous system. This reference guide does not focus on the peripheral nervous system, despite its importance in, for example, assessing some aspects of personal injuries. We also, less fairly, ignore the central nervous system other than the brain, even though the spinal cord, in particular, plays an important role in modulating messages going into and coming out of the brain.

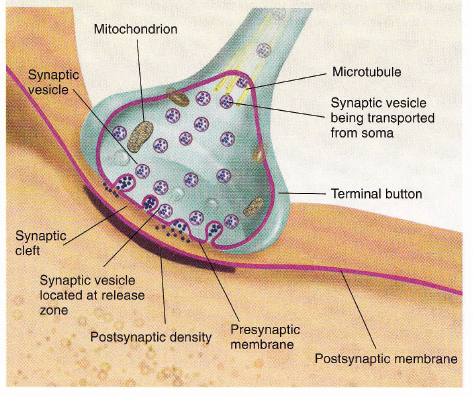

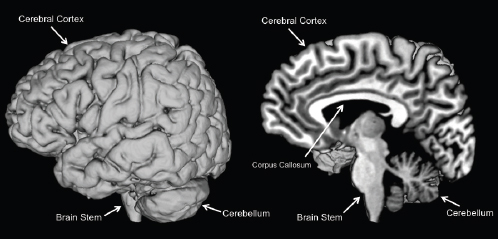

The average adult human brain (Figure 3) weighs about 3 pounds and fills a volume of about 1300 cubic centimeters. If liquid, it would almost fill two standard wine bottles with a little space left over. Living brains have a consistency about like that of gelatin. Despite the softness of brains, they are made up of regular shapes and structures that are generally consistent from person to person. Just as every nondamaged or nondeformed human face has two eyes, two ears, one nose, and one mouth with standard numbers of various kinds of teeth, every normal brain has the same set of identifiable structures, both large and small.

Figure 3. Lateral (left) and mid-sagittal (right) views of the human brain.

Source: Courtesy of Anthony Wagner.

Neuroscientists have long worked to describe and define particular regions of the brain. In some ways this is like describing parcels of land in property documents, and, like property descriptions, several different methods are used. At the largest scale, the brain is often divided into three parts: the brain stem, the cerebellum, and the cerebrum.3

The brain stem is found near the bottom of the brain and is, in some ways, effectively an extension of the spinal cord. Its various parts play crucial roles in controlling the body’s autonomic functioning, such as heart rate and digestion. The brain stem also contains important regions that regulate processing in the cerebrum. For example, the substantia nigra and ventral tegmental area in the brain stem consist of critical neurons that generate the neurotransmitter dopamine. While the substantia nigra is crucial for motor control, the ventral tegmental area is important for learning about rewards. The loss of neurons in the substantia nigra is at the core of the movement problems of Parkinson’s disease.

The cerebellum, which is about the size and shape of a squashed tennis ball, is tucked away in the back of the skull. It plays a major role in fine motor control and seems to keep a library of learned motor skills, such as riding a bicycle. It was long thought that damage to the cerebellum had little to no effect on a person’s personality or cognitive abilities, but resulted primarily in unsteady gait, difficulty in making precise movements, and problems in learning movements. More recent studies of patients with cerebellar damage and functional brain imaging studies of healthy individuals indicate that the cerebellum also plays a role in more cognitive functions, including supporting aspects of working memory, attention, and language.

The cerebrum is the largest part of the human brain, making up about 85% of its volume. The cerebrum is found at the front, top, and much of the back of the human brain. The human brain differs from the brains of other mammals mainly because it has a vastly enlarged cerebrum.

There are several different ways to identify parts of, or locations in, the cerebrum. First, the cerebrum is divided into two hemispheres—the famous left and right brain. These two hemispheres are connected by tracts of white matter—of axons—most notably the large connection called the corpus callosum. Oddly, the right hemisphere of the brain generally receives messages from and controls the movements of the left side of the body, while the left hemisphere receives messages from and controls the movements of the right side of the body.

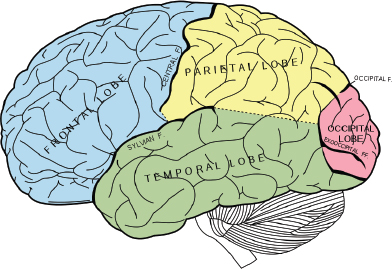

Each hemisphere of the cerebrum is divided into four lobes (Figure 4): the frontal lobe in the front of the cerebrum (behind the forehead), the parietal lobe at the top and toward the back, the temporal lobe on the side (just behind and above the ears), and the occipital lobe at the back. Thus, one could describe a particular region as lying in the left frontal lobe—the frontal lobe of the left hemisphere.

3. The brain also is sometimes divided into the forebrain, midbrain, and hindbrain. This classification is useful for some purposes, particularly in describing the history and development of the vertebrate brain, but it does not entirely correspond to the categorization of cerebrum, brain stem, and cerebellum, and it is not used in this reference guide.

Figure 4. Lobes of a hemisphere. Each hemisphere of the brain consists of four lobes—the frontal, parietal, temporal, and occipital lobes.

Source: http://commons.wikimedia.org/wiki/File:Gray728.svg. This image is in the public domain because its copyright has expired. This applies worldwide.

The surface of the cerebrum consists of the cortex, which is a sheet of gray matter a few millimeters thick. The cortex is not a smooth sheet in humans, but rather is heavily folded with valleys, called sulci (“sulcus” in the singular), and bulges, called gyri (“gyrus”). The sulci and gyri have their own names, and so a location can be described as in the inferior frontal gyrus in the left frontal lobe. These folds allow the surface area of the cortex, as well as the total volume of the cortex, to be much greater than in other mammals, while still allowing it to fit inside our skulls, similar to the way the many folds of a car’s radiator give it a very large surface area (for radiating away heat) in a relatively small space.

The cerebral cortex is extraordinarily large in humans compared with other species and is clearly centrally involved in much of what makes our brains special, but the cerebrum contains many other important subcortical structures that we share with other vertebrates. Some of the more important areas include the thalamus, the hypothalamus, the basal ganglia, and the amygdala. These areas all connect widely, with the cortex, with each other, and with other parts of the brain to form complex networks.

The functions of all these areas are many, complex, and not fully understood, but some facts are known. The thalamus seems to act as a main relay that carries information to and from the cerebral cortex, particularly for vision, hearing, touch, and proprioception (one’s sense of the position of the parts of one’s body). It also is, importantly, involved in sleep, wakefulness, and consciousness. The hypothalamus has a wide range of functions, including the regulation of body temperature, hunger, thirst, and fatigue. The basal ganglia are a group of regions in the brain that are involved in motor control and learning, among other things. They seem to be strongly involved in selecting movements, as well as in learning through reinforcement (as a result of rewards). The amygdala appears to be important in emotional processing, including how we attach emotional significance to particular stimuli.

In addition, many other parts of the brain, in the cortex or elsewhere, have their own special names, usually with Latin or Greek roots that may or may not seem descriptive today. The hippocampus, for example, is named for the Greek word for seahorse. For most of us, these names will have no obvious rhyme or reason, but merely must be learned as particular structures in the brain—the superior colliculus, the tegmentum, the globus pallidus, the substantia nigra, the cingulate cortex, and more. All of these structures come in pairs, with one in the left hemisphere and one in the right hemisphere; only the pineal gland is unpaired. Brain atlases include scores of names for particular structures or regions in the brain and detailed information about the structures or regions.

Some of these smaller structures may have special importance to human behavior. The nucleus accumbens, for example, is a small subcortical region in each hemisphere of the cerebrum that appears important for reward processing and motivation. In experiments with rats that received stimulation of this region in return for pressing a lever, the rats would press the lever almost to the exclusion of any other behavior, including eating. The nucleus accumbens in humans appears linked to appetitive motivation, responding in anticipation of primary rewards (such as pleasure from food and sex) and secondary rewards (such as money). Through interactions with the orbital frontal cortex and dopamine-generating neurons in the midbrain (including the ventral tegmental area), the nucleus accumbens is considered part of a “reward network.” With a hypothesized role in addictive behavior and in reward computations, more broadly, this putative reward network is a topic of considerable ongoing research.

All of these various locations, whether defined broadly by area or by the names of specific structures, can be further subdivided using directions: front and back, up and down, toward the middle, or toward the sides. Unfortunately, the directions often are not expressed in a straightforward manner, and several different terminological conventions exist. Locations toward the front or back of the brain can be referred to as either anterior or posterior or as rostral or caudal (literally, toward the nose, or beak, or the tail). Locations toward the bottom or top of the brain are termed inferior or superior or, alternatively, as ventral or dorsal (toward the stomach or toward the back). A location toward the middle of the brain is called medial; one toward the side is called lateral. Thus, different locations could be described, for example, as in the left anterior cingulate cortex, in the dorsal medial (or sometimes dorsomedial) prefrontal cortex, or in the posterior hypothalamus.

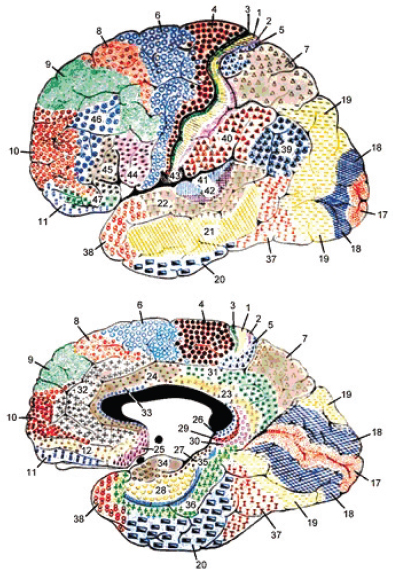

Finally, one other method often is used, a method created by Korbinian Brodmann in 1909. Brodmann, a neuroanatomist, divided the brain into about 50 different areas or regions (Figure 5). Each region was defined on the basis of the kinds of neurons found there and how those neurons are organized. A location described by Brodmann area may or may not correspond closely with a structural location. Other organizational schemes exist, but Brodmann’s remains the most widely used to describe the approximate locations of findings in modern human brain imaging studies.

Figure 5. Brodmann’s areas. Brodmann divided the cortex into different areas based on the cell types and how they were organized.

Source: Prof. Mark Dubin, University of Colorado.

C. Some Aspects of How the Brain Works

Most of neuroscience is dedicated to finding out how the brain works, but although much has been learned, considerably more remains unknown. We could use many different ways to describe what is known about how the brain works. This section discusses a few important aspects of brain function and makes several general points about the localization and distribution of functions, as well as brain plasticity, before commenting on the effects of hormones and other chemical influences on the brain.

Some brain functions are localized in, or especially dependent on, particular regions of the brain. This has been known for many years as a result of studies of people who, through traumatic injury, stroke, or cancer, have lost, or lost the use of, particular regions of their brains. For example, in the 1860s, French anatomist Paul Broca discovered through autopsies of patients that damage to a region in the left inferior frontal lobe (now known as Broca’s area) caused an inability to speak. It is now known that some functions cannot normally be performed when particular brain areas are damaged or missing. The visual cortex, located at the back of the brain in the occipital lobes, is as necessary for vision as the eyes are; the hippocampus is necessary for the creation of many kinds of memory; and the motor cortex is necessary for voluntary movements. The motor cortex and the parallel somatosensory cortex, which is essential for processing sensory information such as the sense of touch from the body, are further subdivided, with particular regions necessary for causing motion or sensing feelings from the legs, arms, fingers, face, and so on. Other brain regions also will be involved in these actions or sensations, but these regions are necessary to them.

At the same time, the fact that a region is necessary to a particular class of sensations, behaviors, or cognition does not mean either that it is not involved in other brain functions or that other brain regions do not also contribute to these particular abilities. The amygdala, for example, is involved in our feelings of fear, but it is also involved broadly in emotional reactions, both positive and negative. It also modulates learning, memory, and even sensory perception. Although some functions are localized, others are widely distributed. For example, the visual cortex is essential to vision, but actual visual perception involves many parts of the brain in addition to the occipital lobes. Memories appear to be stored over much of the cortex. Networks of brain regions participate in many of these functions.

For example, if you touch something very hot with your left index finger, your spinal cord, through a reflex loop, will cause you to pull your finger back very quickly. Then the part of your right somatosensory cortex devoted to the index finger will be involved in receiving and initially interpreting the sensation. Other areas of your brain will recognize the stimulus as painful, your motor regions will be involved in waving your hand back and forth or bringing your finger to your mouth, widespread parts of your cortex may lead to your remembering other instances of burning yourself, and your hippocampus may play a role in making a new long-term memory of this incident. There is no brain region “for” burning your finger; many regions, both specific and general, contribute to the brain’s response.

In addition, brains are at least somewhat “plastic” or changeable on both small and large scales. Anyone who can see has a working visual cortex, and it is always located in the back of the brain (in the occipital lobe), but its exact borders will vary slightly from person to person. In other cases, the brain may adjust and change in response to a person’s behavior or changes in that person’s anatomy. For example, a right-handed violinist may develop an enlarged brain region for controlling the fingers of the left hand, used in fingering the violin. If a person loses an arm to amputation, the parts of the motor and somatosensory cortices that had dealt with that arm may be “taken over” by other body parts. In some cases, this brain plasticity can be extreme. A young child who has lost an entire hemisphere of his or her brain may grow up to have normal or nearly normal functionality as the remaining hemisphere takes on the tasks of the missing hemisphere. Unfortunately, the possibilities of this kind of extreme plasticity do diminish with age, but rehabilitation after stroke in adults sometimes does show changes in the brain functions undertaken by particular brain regions.

The picture of the brain as a set of interconnected neurons that fire in networks or patterns in response to stimuli is useful but not complete. In addition to neuron firings, other factors affect how the brain works, particularly chemical factors.

Some of these are hormones, generated by the body either inside or outside the brain. They can affect how the brain functions, as well as how it develops. Sex hormones such as estrogen and testosterone can have both short-term and long-term effects on the brain. So can other hormones, such as cortisol, associated with stress, and oxytocin, associated with, among other things, trust and bonding. Endorphins, chemicals secreted by the pituitary gland in the brain, are associated with pain relief and a sense of well-being. Still other chemicals, brought in from outside the body, can have major effects on the brain, both in the short term and the long term. Examples include alcohol, caffeine, nicotine, morphine, and cocaine. These can trigger very specific brain reactions or can have broad effects.

III. Some Common Neuroscience Techniques

Neuroscientists use many techniques to study the brain. Some of them have been used for centuries, such as autopsies and the observation of patients with brain damage. Some, such as the intentional destruction of parts of the brain, can be used ethically only in research on nonhuman animals. Of course, research with nonhuman animals, although often helpful in understanding human brains, is of less value when examining behaviors that are uniquely developed among humans. The current revolution in neuroscience is largely the result of a revolution in the tools available to neuroscientists, as new methods have been developed to image and to intervene in living brains. These methods, particularly the imaging methods that allow more precise measurements of human brain structure and function in living people, are giving rise to increasing efforts to introduce neuroscientific evidence in court.

This section of this chapter focuses on several kinds of neuroimaging—computerized axial tomography (CAT) scans, positron emission tomography (PET) scans, single photon emission computed tomography (SPECT) scans, and magnetic resonance imaging (MRI), as well as an older method, electroencephalography (EEG), and its close relative, magnetoencephalography (MEG). Some of these methods show the structure of the brain, others show the brain’s functioning, and some do both. These are not the only important neuroscience techniques; several others are discussed briefly at the end of this section. Genetic analysis provides yet another technique for increasing our understanding of human brains and behaviors, but this chapter does not deal with the possible applications of human genetics to understanding behavior.

Traditional imaging technologies have not been very helpful in studying the brain. X-ray images are the shadows cast by dense objects. Not only is the brain surrounded by our very dense skulls, but there are no dense objects inside the brain to cast these shadows. Although a few features of the brain or its blood vessels could be seen through methods that involved the injection of air into some of the spaces in the brain or of contrast media into the blood, these provided limited information. The opportunity to see inside a living brain itself only goes back to about the 1970s, with the development of CAT scans. This ability has since exploded with the development of several new techniques, three of which, with CAT, are discussed on the following pages.

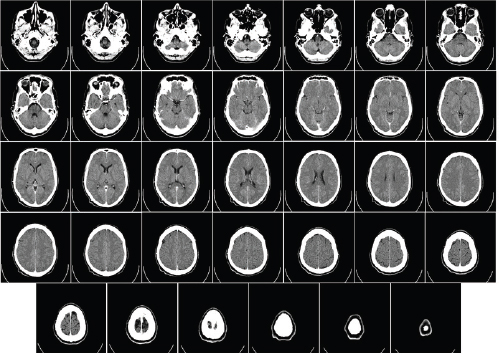

The CAT scan is a multidimensional, computer-assisted X-ray machine. Instead of taking one X ray from a fixed location, in a CAT scan both the X-ray source and (180 degrees opposite the source) the X-ray detectors rotate around the person being scanned. Rather than exposing negatives to make “pictures” of dense objects, as in traditional X rays, the X-ray detectors produce data for computer analysis. A complete modern CAT scan includes data sufficient to reconstruct the scanned object in three dimensions. Computerized algorithms can then be used to produce an image of any particular slice through the object. The multiple angles and computer analysis make it possible to pick out the relatively small density differences within the brain that traditional X-ray technology could not distinguish and to use them to produce images of the soft tissue (Figure 6).

Figure 6. CAT scan depicting axial sections of the human brain. The ventral-most (bottom) surface of the brain is at upper left and the dorsal-most (top) surface is at the lower right.

Source: http://en.wikipedia.org/wiki/File:CT_of_brain_of_Mikael_H%C3%A4ggstr%C3%B6m_large.png. Image in the public domain.
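The multi-angle reconstruction described above can be illustrated with a deliberately tiny sketch. It is not how a clinical scanner works: it assumes a hypothetical 4-by-4 grid of densities, only two viewing angles (90 degrees apart), and the simplest possible "unfiltered back-projection," in which each view is smeared back across the grid and the views are added. The names `project`, `back_project`, and `phantom` are illustrative inventions.

```python
def project(grid):
    """Return two 'views' of the grid, 90 degrees apart: row sums and column sums."""
    rows = [sum(row) for row in grid]
    cols = [sum(col) for col in zip(*grid)]
    return rows, cols


def back_project(rows, cols):
    """Smear each view back across the grid and add the two (unfiltered)."""
    return [[r + c for c in cols] for r in rows]


# Hypothetical object: uniform soft tissue (1) with one slightly denser spot (3).
phantom = [
    [1, 1, 1, 1],
    [1, 1, 3, 1],
    [1, 1, 1, 1],
    [1, 1, 1, 1],
]

rows, cols = project(phantom)
estimate = back_project(rows, cols)

# Location of the largest value in the reconstructed estimate.
peak = max(
    (value, (i, j))
    for i, row in enumerate(estimate)
    for j, value in enumerate(row)
)[1]
print(peak)
```

Neither view alone locates the dense spot, but the two views combined single out one cell. Real scanners use many more angles and more sophisticated algorithms to resolve much smaller density differences.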

The CAT scan provides a structural image of the brain. It is useful for showing some kinds of structural abnormalities, but it provides no direct information about the brain’s functioning. A CAT scan brain image is not as precise as the image produced from an MRI, but because the procedure is both quick and (relatively) inexpensive, CAT scanners are common in hospitals. Medically, brain CAT scans are used mainly to look for bleeding or swelling inside the brain, although they also will record sizeable tumors or other large structural abnormalities. For neuroscience, the great advantage of the CAT scan was its ability, for the first time, to reveal some details inside the skull, an ability that has been largely superseded for research by MRI. CAT scans have been used in courts to argue that structural changes in the brain, shown on the CAT scan, are evidence of insanity or other mental impairments. Perhaps their most notable use was in 1982 in the trial of John Hinckley for the attempted assassination of President Ronald Reagan. A CAT scan of Hinckley’s brain that showed widened sulci (the “valleys” in the surface of the brain) was introduced into evidence to show that Hinckley suffered from organic brain damage in the form of shrinkage of his brain.4

Traditional X-ray machines and their more sophisticated descendant, the CAT scan, project X rays through the skull and create images based on how much of the X rays are blocked or absorbed. PET scans and SPECT scans operate very differently. In these methods, a substance that emits radiation is introduced into the body. That radiation then is detected from outside the body in a way that can determine the location of the radiation source. These scans generally are not used for determining the brain’s structure, but for understanding how it is functioning. They are particularly good at measuring one aspect of brain structure—the density of particular receptors, such as those for dopamine, at synapses in some areas of the brain, such as the frontal lobes.

Radioactive decay of atoms can take several forms, producing alpha, beta, or gamma radiation. PET scanners take advantage of isotopes of atoms that decay by giving off positive beta radiation. Beta decay usually involves the emission of an electron; positive beta decay involves the emission of a positron, the positively charged antimatter equivalent of an electron. When positrons (antimatter) meet electrons (matter), the two particles are annihilated and converted into two photons of gamma radiation with a known energy (511,000 electron volts) that follow directly opposite paths from the site of the annihilation. Inside the body, the collision between the positron and electron and the consequent production of the gamma radiation photons takes place within a short distance (a millimeter or two) of the site of the initial radioactive decay that produced the positron.

4. The effects of this evidence on the verdict are unclear. See Lincoln Caplan, The Insanity Defense and the Trial of John W. Hinckley, Jr. (1984) for a discussion of the case and its consequences for the law.

PET scans, therefore, start with the introduction into a person’s body of a radioactive tracer that decays by giving off a positron. One common tracer is fluorodeoxyglucose (FDG), a molecule that is almost identical to the simple sugar, glucose, except that one of the hydroxyl (oxygen-hydrogen) groups in glucose is replaced by an atom of fluorine-18, an isotope of the element fluorine with nine protons and nine neutrons. Fluorine normally found in nature is fluorine-19, with nine protons and ten neutrons, and is stable. Fluorine-18 is very unstable and decays quickly through positive beta decay: about half of the fluorine-18 atoms in any sample decay every 110 minutes (its half-life). The body treats FDG as though it were glucose, and so the FDG is concentrated where the body needs the energy supplied by glucose. A major clinical use of PET scans derives from the fact that tumor cells use energy, and hence glucose, at much higher rates than normal cells.

After giving the FDG time to become concentrated in the body, which usually takes about an hour, the person is put inside the scanner itself. There, the person is entirely surrounded by a very sensitive radiation detector, tuned to respond to gamma radiation of the energy produced by annihilated positrons. When two “hits” are detected by two sensors at about the same time, the source is known to be located on a line connecting the two. Very small differences in the timing of when the radiation is detected can help determine where along that line the annihilation took place. In this way, as more gamma radiation from the decaying FDG is detected, the general location of the FDG within the body can be determined and, as a result, tissue that is using a lot of glucose, such as a tumor, can be located.
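The timing argument in the preceding paragraph reduces to simple arithmetic, sketched here under stated assumptions. Because the two photons leave the annihilation site in opposite directions at the speed of light, a difference in arrival time of Δt places the event a distance of c × Δt / 2 from the midpoint of the line between the two detectors, on the side of the detector that was hit first. The function name and the 0.5-nanosecond example value are hypothetical and are not taken from any particular scanner.

```python
SPEED_OF_LIGHT_MM_PER_NS = 299.792458  # millimeters traveled per nanosecond


def offset_from_midpoint_mm(arrival_difference_ns):
    """Distance of the annihilation from the midpoint of the detector line.

    The offset is toward whichever detector registered its photon first.
    """
    return SPEED_OF_LIGHT_MM_PER_NS * arrival_difference_ns / 2.0


# Hypothetical example: one photon arrives half a nanosecond before the other.
offset = offset_from_midpoint_mm(0.5)
print(f"{offset:.1f} mm from the midpoint")
```

Even a timing difference of half a billionth of a second corresponds to several centimeters, which suggests why timing alone yields only a general location and why many detected events must be combined.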

In neuroscience research, PET scans also can be taken using different molecules that bind more specifically to particular tissues or cells. Some of these more specific ligands use fluorine-18, but others use a different radioactive tracer that also decays by emitting a positron—oxygen-15. This can be used to determine what parts of the brain are using more or less oxygen. Oxygen-15, however, has a much shorter half-life (2 minutes) and so is more difficult and expensive to use than FDG. Similarly, carbon-11, with a half-life of 20 minutes, also can be used. Carbon-11 atoms can be introduced into various molecules that bind to important receptors in the brain, such as receptors for dopamine, serotonin, or opioids. This allows the study of the distribution and function of these receptors, both in healthy people and in people with various mental illnesses or neurological diseases.
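The practical consequence of these different half-lives can be worked out with the standard half-life relationship: the fraction of a tracer remaining after a given time is one-half raised to the power of (elapsed time / half-life). The sketch below uses the half-lives quoted in the text; the function name and the one-hour comparison point are illustrative choices.

```python
# Half-lives in minutes, as given in the text.
HALF_LIFE_MIN = {
    "fluorine-18": 110.0,
    "carbon-11": 20.0,
    "oxygen-15": 2.0,
}


def fraction_remaining(half_life_min, elapsed_min):
    """Fraction of the original radioactive atoms that have not yet decayed."""
    return 0.5 ** (elapsed_min / half_life_min)


# Compare the three tracers one hour after they are produced.
for tracer, half_life in HALF_LIFE_MIN.items():
    print(f"{tracer}: {fraction_remaining(half_life, 60.0):.2e} remaining")
```

After one hour, roughly two-thirds of the fluorine-18 remains and exactly one-eighth of the carbon-11, but only about one-billionth of the oxygen-15. This helps explain why the shorter-lived tracers must be produced close to the scanner and used almost immediately.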

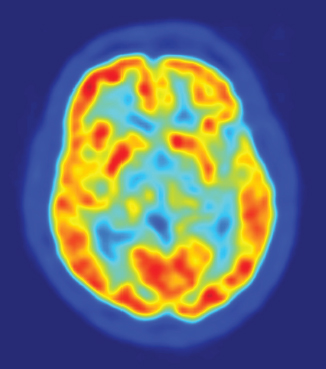

The result of a PET scan is a record of the locations of positron decay events in the brain. Computer visualization tools can then create cross-sectional images of the brain, showing higher and lower rates of decay, with differences in magnitude typically depicted through the use of different colors (Figure 7).

PET scans are excellent for showing the location of various receptors in normal and abnormal brains. PET scans are also very good for showing areas of different glucose use and, hence, of different levels of metabolism. This can be very useful, for example, in detecting some kinds of brain damage, such as the damage that occurs with Alzheimer’s disease, where certain regions of the brain become abnormally inactive, or in brain regions that have been damaged by a stroke.

Figure 7. PET scan depicting an axial section of the human brain.

Source: http://en.wikipedia.org/wiki/Positron_emission_tomography. Image in the public domain.

In addition, the comparison (subtraction) of two PET scan measurements, one scan when a person is engaged in a task that is thought to require particular brain functions and a second control (or baseline) scan that is not thought to require these functions, allows researchers indirectly to measure brain function. PET scans were initially used in this way in research to show what areas of the brain were used when people experienced various stimuli or performed particular tasks. PET has been substantially superseded for this purpose by functional MRI, which is less expensive, does not involve radiation exposure, provides better spatial resolution, and allows a longer period of testing.
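The subtraction logic can be sketched in a few lines. The example assumes two hypothetical scans of the same slice, each reduced to a small grid of tracer counts; real analyses involve many more measurements, alignment of the images, and statistical testing, none of which is shown here.

```python
def subtract_scans(task_scan, baseline_scan):
    """Subtract the baseline scan from the task scan, location by location."""
    return [
        [task - base for task, base in zip(task_row, base_row)]
        for task_row, base_row in zip(task_scan, baseline_scan)
    ]


# Hypothetical counts for a 2-by-2 patch of one brain slice.
baseline = [[5, 4], [6, 5]]
task = [[5, 7], [6, 9]]

difference = subtract_scans(task, baseline)
print(difference)
```

Locations where the difference is near zero were equally active in both conditions; locations with a large positive difference are candidates for involvement in the task.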

SPECT scans are similar to PET scans. Each can produce a three-dimensional model of the brain and display images of any cross section through the brain. Like PET scans, they require the injection of a radioactive tracer material; unlike PET scans, the radioactive tracer in SPECT directly emits gamma radiation rather than emitting positrons. These kinds of tracers are more stable, more accessible, and much cheaper than the positron-emitting tracers needed for PET scans. With a PET scan, the gamma detector entirely surrounds the person; with a SPECT scan, one to three gamma detectors are rotated around the body over about 15 to 20 minutes. As with PET scans, the SPECT tracers can be used to measure brain metabolism or to attach to specific molecular receptors in the brain. The spatial resolution of a SPECT scan, however, is poorer than with a PET scan, with an uncertainty of about 1 cm.

Both PET and SPECT scans are most useful if coupled with good structural images. Contemporary PET and SPECT scanners often include a simultaneous CAT scan; there is some experimental work aimed at providing simultaneous PET and MRI scans.

3. MRI—structural and functional

MRI was developed in the 1970s, first came into wide use in the 1980s, and is currently the dominant neuroimaging technology for producing detailed images of the brain’s structure and for measuring aspects of brain function. MRI operates on completely different principles than either CAT scans or PET or SPECT scans; it does not rely on X rays passing through the brain or on the decay of radioactive tracer molecules inside the brain. Rather, MRI’s workings involve more complicated physics. This section discusses the general characteristics of MRI and then focuses on structural MRI, diffusion tensor imaging, and finally, functional MRI.

The power of an MRI scanner is measured by the strength of its magnetic field, measured in units called tesla (T). The magnetic field of a small bar magnet is about 0.01 T. The strength of the Earth’s magnetic field is about 0.00005 T. The MRI machines used for clinical purposes use magnetic fields of between 0.2 T and 3.0 T, with 1.5 T or 3.0 T being the systems most commonly used today. MRI machines for human research purposes have reached 9.4 T. In general, the stronger the magnetic field, the better the image, although higher fields also can create their own measurement difficulties, especially when imaging brain function. MRI machines achieve these high magnetic fields through using superconducting magnets, made by cooling the electromagnet with liquid helium at a temperature 4° (Celsius) above absolute zero. For this and other reasons, MRI systems are complicated, with higher initial and continuing maintenance costs compared with some other methods for functional imaging (e.g., electroencephalography; see infra Section III.B).
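To give a sense of scale, the field strengths quoted above can simply be divided by one another. The dictionary labels and the function name below are illustrative; the values are those given in the text.

```python
# Field strengths in tesla, as quoted in the text.
FIELD_TESLA = {
    "Earth": 0.00005,
    "small bar magnet": 0.01,
    "clinical MRI": 3.0,
    "research MRI": 9.4,
}


def times_stronger(field_tesla, reference_tesla):
    """How many times stronger one magnetic field is than another."""
    return field_tesla / reference_tesla


ratio_vs_earth = times_stronger(FIELD_TESLA["clinical MRI"], FIELD_TESLA["Earth"])
ratio_vs_bar_magnet = times_stronger(
    FIELD_TESLA["clinical MRI"], FIELD_TESLA["small bar magnet"]
)
print(round(ratio_vs_earth), round(ratio_vs_bar_magnet))
```

On these figures a 3.0 T clinical scanner is roughly 60,000 times stronger than the Earth’s magnetic field and about 300 times stronger than a small bar magnet.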

In most MRI systems (Figure 8), the subject, on an examination table, slides into a cylindrical opening in the machine so that the part of the body to be imaged is in the middle of the magnet. Depending on the kind of imaging performed, the examination or experiment can take from about 30 minutes to more than 2 hours; throughout the scanning process the subject needs to stay as motionless as possible to avoid corrupting the images. The main sensations for the subject are the loud thumping and buzzing noises made by the machine, as well as the machine’s vibration.

Figure 8. MRI machine. Magnetic resonance imaging systems are used to acquire both structural and functional images of the brain.

Source: Courtesy of Anthony Wagner.

MRI examinations appear to involve minimal risk. Unlike the other neuroimaging technologies discussed above, MRI does not involve any high-energy radiation. The magnetic field seems to be harmless, at least as long as magnetizable objects are kept away from it. MRI subjects need to remove most metal objects; people with some kinds of implanted metallic devices, with tattoos with metal in their ink, or with fragments of ferrous metal anywhere in their bodies cannot be scanned because of the dangerous effects of the field on those bits of metal.

When the subject is positioned in the MRI scanner, the powerful field of the magnet causes the nuclei of atoms (usually the hydrogen nuclei of the body’s water molecules) to align with the direction of the main magnetic field of the magnet. Using a brief electromagnetic pulse, these aligned atoms are then “flipped” out of alignment from the main magnetic field, and, after the pulse stops, the nuclei then rapidly realign with the strong main magnetic field. Because the nuclei spin (like a top), they create an oscillating magnetic field that is measured by a receiver coil. During structural imaging, the strength of the signal generated partially depends on the relative density of hydrogen nuclei, which varies from point to point in the body according to the density of water. In this manner, MRI scanners can generate images of the body’s anatomy or of other scanned objects. Because an MRI scan can effectively distinguish between similar soft tissues, MRI can provide very-high-resolution images of the brain’s anatomy, which is, after all, made up of soft tissue.

Structural MRI scans produce very detailed images of the brain (Figure 9). They can be used to spot abnormalities, large and small, as well as to see normal variation in the size and shape of brain features. Structural MRI can be used, for example, to see how brain features change as a person ages. Previously, getting that kind of detailed information about a brain required an autopsy or, at a minimum, extensive neurosurgery. This ability makes structural MRI both an important clinical tool and a very useful technique for research that tries to correlate human differences, normal and abnormal, with differences in brain structure, as well as for research that seeks to understand brain development.

Another structural imaging application of brain MRI has become increasingly prevalent over the past decade: diffusion tensor imaging (DTI). As noted above, neuronal tissue in the brain can be divided roughly into gray matter (the bodies of neurons) and white matter (neuronal axons that transmit signals over distance). DTI uses MRI to see what direction water diffuses through brain tissue. Tracts of white matter are made up of bundles of axons coated with fatty myelin. Water will diffuse through that white matter along the direction of the axons and not, generally, across them. This method can be used, therefore, to trace the location of these bundles of white matter and hence the long-distance connections between different parts of the brain. Abnormal patterns of these connections may be associated with various conditions, from Alzheimer’s disease to dyslexia, some of which may have legal implications.
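One way to make the idea of directional diffusion concrete is a summary number commonly used in DTI work, fractional anisotropy (FA). FA is not discussed in this chapter; it is introduced here only as an illustration. It compresses the diffusion rates along three perpendicular directions into a value between 0 (water diffuses equally in all directions) and 1 (water diffuses along one direction only). The diffusion rates in the example are hypothetical.

```python
import math


def fractional_anisotropy(d1, d2, d3):
    """Fractional anisotropy from diffusion rates along three perpendicular axes."""
    mean = (d1 + d2 + d3) / 3.0
    spread = (d1 - mean) ** 2 + (d2 - mean) ** 2 + (d3 - mean) ** 2
    total = d1 ** 2 + d2 ** 2 + d3 ** 2
    return math.sqrt(1.5 * spread / total)


# Hypothetical rates: equal in every direction, as in fluid-filled space.
fa_isotropic = fractional_anisotropy(1.0, 1.0, 1.0)

# Hypothetical rates: much faster along one axis, as along a bundle of axons.
fa_tract_like = fractional_anisotropy(1.7, 0.3, 0.2)

print(fa_isotropic, round(fa_tract_like, 2))
```

A location with a high value is more likely to lie within a coherent white matter tract, and the direction of fastest diffusion at that location suggests the direction in which the tract runs.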

Functional MRI (fMRI) is perhaps the most exciting use of MRI in neuroscience for understanding brain function. This technique shows what regions of the brain are more or less active in response to the performance of particular tasks or the presentation of particular stimuli. It does not measure brain activity (the firing of neurons) directly but, instead, looks at how blood flow changes in response to brain activity and uses those changes, through the so-called BOLD response (the blood-oxygen-level dependent response), to allow the researcher to infer patterns of brain activity.

Structural MRI generally creates its images through detecting the density of hydrogen atoms in the subject and flipping them with radio pulses. For fMRI, the scanner detects changes in the ratio of oxygenated hemoglobin (oxyhemoglobin) and deoxygenated hemoglobin (deoxyhemoglobin) in particular locations in the brain. Hemoglobin is the protein in red blood cells that carries oxygen from the lungs to the body. On the basis of metabolic demands, hemoglobin molecules supply oxygen for the body’s needs. Accordingly, “fresher” blood will have a higher ratio of oxyhemoglobin to deoxyhemoglobin than more “used” blood. Importantly, because deoxyhemoglobin (which is found at a higher level in “used” blood) causes the fMRI signal to decay, a higher ratio of oxyhemoglobin to deoxyhemoglobin will produce a stronger fMRI signal.

Neural activity is energy intensive for neurons, and neurons do not contain any significant reserves of oxygen or glucose. Therefore, the brain’s blood vessels respond quickly to increases in activity in any one region of the brain by sending more fresh blood to that area. This is the basis of the BOLD response, which measures changes in the ratio of oxyhemoglobin to deoxyhemoglobin in a brain region several seconds after activity in that region. In particular, when a brain region becomes more active, there is first, perhaps more intuitively, a decline in the ratio of oxyhemoglobin to deoxyhemoglobin immediately after activity in the region, apparently corresponding to the depletion of oxygen in the blood at the site of the activity. This decline, however, is very small and very hard to detect with fMRI. Immediately after this decrease, there is an infusion of fresh (oxyhemoglobin-rich) blood, which can take several seconds to reach maximum; it is this infusion that results in the increase in the oxy/deoxyhemoglobin ratio that is measured in BOLD fMRI studies. Because even this subsequent increase is relatively small and variable, fMRI experiments typically involve many trials of the same task or class of stimuli in order to be able to see the signal amidst the noise.
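The reason for repeating trials can be shown with a small simulation. It assumes, purely for illustration, a true signal of 1 unit buried in random noise five times larger, and compares how much single-trial measurements scatter with how much averages of 100 trials scatter. The numbers and function names are hypothetical and do not model any real scanner.

```python
import random
import statistics


def simulate_trial(rng, signal=1.0, noise_sd=5.0):
    """One noisy measurement: a small true signal plus much larger random noise."""
    return signal + rng.gauss(0.0, noise_sd)


def average_of_trials(rng, n_trials):
    """Average the measurements from n_trials repetitions of the same task."""
    return statistics.fmean(simulate_trial(rng) for _ in range(n_trials))


rng = random.Random(0)  # fixed seed so the sketch is repeatable

single_trial_results = [average_of_trials(rng, 1) for _ in range(200)]
averaged_results = [average_of_trials(rng, 100) for _ in range(200)]

spread_single = statistics.stdev(single_trial_results)
spread_averaged = statistics.stdev(averaged_results)
print(f"single trials: {spread_single:.2f}, 100-trial averages: {spread_averaged:.2f}")
```

Averaging 100 trials shrinks the scatter by roughly a factor of 10 (the square root of the number of trials), which is what allows a small but consistent response to emerge from the noise.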

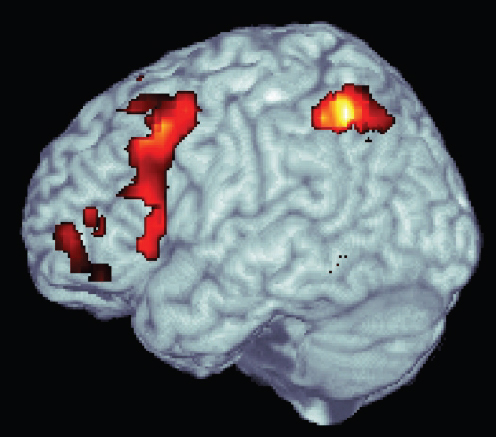

Thus, in a typical fMRI experiment the subject will be placed in the scanner and the researchers will measure differences in the BOLD response throughout his or her brain between different conditions. A subject might, for example, be told to look at a video screen on which images of places alternate with images of faces. For purposes of the experiment, the computer will impose a spatial map on the subject’s brain, dividing it into thousands of little cubes, each a few cubic millimeters in size, referred to as “voxels.” Either while the data are being collected (so-called “real-time fMRI”5) or after an entire dataset has been gathered, a computerized program will compare the BOLD signal for each voxel when the screen was showing places to that when the screen contained faces. Regions that showed a statistically significant increase in the BOLD response several seconds after the face was on the video screen compared with the effects several seconds after a screen showing a place appeared will be said to have been “activated” by seeing the face. The researchers will infer that those regions were, in some way, involved in how the brain processes images of faces. The results typically will be shown as a structural brain image on which areas of more or less activation, as shown by a statistical test, will be shown by different colors (Figure 10).6
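The voxel-by-voxel comparison described above might be sketched as follows. The example assumes two hypothetical voxels, five measurements per condition, a simple two-sample (Welch) t statistic, and an arbitrary threshold of 3.0; actual fMRI analyses use far more data, more elaborate statistical models, and corrections for the very large number of voxels tested.

```python
import math
import statistics


def welch_t(sample_a, sample_b):
    """Welch's t statistic for the difference between two sample means."""
    var_a = statistics.variance(sample_a)
    var_b = statistics.variance(sample_b)
    standard_error = math.sqrt(var_a / len(sample_a) + var_b / len(sample_b))
    return (statistics.fmean(sample_a) - statistics.fmean(sample_b)) / standard_error


# Hypothetical BOLD measurements for two voxels under the two conditions.
bold = {
    "voxel_A": {"faces": [1.2, 1.5, 1.1, 1.4, 1.3], "places": [0.2, 0.1, 0.3, 0.0, 0.2]},
    "voxel_B": {"faces": [0.3, 0.1, 0.2, 0.4, 0.2], "places": [0.2, 0.3, 0.1, 0.3, 0.2]},
}

T_THRESHOLD = 3.0  # assumed cutoff, chosen only for illustration

activated = [
    name
    for name, data in bold.items()
    if welch_t(data["faces"], data["places"]) > T_THRESHOLD
]
print(activated)
```

Only the voxel whose response to faces reliably exceeds its response to places passes the threshold. The choice of statistical test and threshold therefore directly shapes which regions are reported as “activated.”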

5. Use of this “real-time” fMRI has been increasing, but it is not yet clear whether the claims for it will stand up.

6. This example is actually a simplified version of experiments performed by Professor Nancy Kanwisher at MIT in the early 2000s that explored a region of the brain called the fusiform face area which is particularly involved in processing visions of faces. See Kathleen M. O’Craven & Nancy Kanwisher, Mental Imagery of Faces and Places Activates Corresponding Stimulus-Specific Brain Regions, 12 J. Cog. Neurosci. 1013 (2000).

Figure 10. fMRI image. Functional MRI data reveal regions associated with cognition and behavior. Here, regions of the frontal and parietal lobes that are more active when remembering past events relative to detecting novel stimuli are depicted.

Source: Courtesy of Anthony Wagner.

Functional MRI was first proposed in 1990, and the first research results using BOLD-contrast fMRI in humans were published in 1992. The past decade has seen an explosive increase in the number of research articles based on fMRI, with nearly 2500 articles published in 2008, compared with about 450 in 1998.7

7. See the census of fMRI articles from 1993 to 2008 in Carole A. Federico et al., Intersecting Complexities in Neuroimaging and Neuroethics, in Oxford Handbook of Neuroethics (J. Illes & B.J. Sahakian eds., 2011). This continued an earlier census from 1993 to 2001. Judy Illes et al., From Neuroimaging to Neuroethics, 5 Nature Neurosci. 205 (2003).

MRI (functional and structural) is quite safe, and MRI machines are widespread in developed countries, largely for clinical use but increasingly for research use as well. Although fMRI research is subject to many questions and controversies (discussed infra Section IV), this technique has been responsible for most of the recent interest in applying neuroscience to law, from criminal responsibility to lie detection.

EEG is the measurement of the brain’s electrical activity as exhibited on the scalp; MEG is the measurement of the small magnetic fields generated by the brain’s electrical activity. The roots of EEG go back into the nineteenth century, but its use increased dramatically in the 1930s and 1940s.

The process uses electrodes attached to the subject’s head with an electrically conductive substance (a paste or a gel) to record electrical currents on the surface of the scalp. Multiple electrodes are used; for clinical purposes, 20 to 25 electrodes are commonly used, although arrays of more than 200 electrodes can be used. (In MEG, superconducting “squids”8 are positioned over the scalp to detect the brain’s tiny magnetic signals.) The electrical currents are generated by the neurons throughout the brain, although EEG is more sensitive to currents emerging from neurons closer to the skull. It is therefore more challenging to use EEG to reveal the functioning of structures deep in the brain.

Because EEG and MEG directly measure neural activity, in contrast to the measures of blood flow in fMRI, the timing of the neural activity can be measured with great precision (the temporal resolution), down to milliseconds. On the other hand, in comparison to fMRI, EEG and MEG are poor at determining the location of the sources of the currents (the spatial resolution). The EEG/MEG signal is a summation of the activity of thousands to millions of neurons at any one time. Any one pattern of EEG or MEG signal at the scalp has an infinite number of possible source patterns, making the problem of determining the brain source of measured EEG/MEG signal particularly challenging and the results less precise.
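The source-localization problem can be illustrated with a toy example. It assumes three hypothetical sources inside the head, two scalp sensors, and made-up weights describing how strongly each source contributes to each sensor. Because each sensor records only a weighted sum, different patterns of source activity can produce identical readings.

```python
def scalp_signal(weights, sources):
    """Each sensor reading is a weighted sum of the activity of every source."""
    return [
        sum(weight * activity for weight, activity in zip(sensor_weights, sources))
        for sensor_weights in weights
    ]


# Hypothetical weights: rows are sensors, columns are sources.
weights = [
    [1.0, 0.5, 0.0],
    [0.0, 0.5, 1.0],
]

# Two different patterns of source activity.
sources_a = [2.0, 0.0, 2.0]  # two outer sources active, middle source silent
sources_b = [1.0, 2.0, 1.0]  # middle source most active

print(scalp_signal(weights, sources_a), scalp_signal(weights, sources_b))
```

Both patterns give the same readings at both sensors, so the sensor data alone cannot tell them apart. Researchers must add assumptions about the likely sources in order to choose among the possibilities.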

8. SQUID stands for superconducting quantum interference device (and has nothing to do with the marine animal). This device can measure extremely small magnetic fields, including those generated by various processes in living organisms, and so is useful in biological studies.

The results of clinical EEG and MEG tests can be very useful for detecting some kinds of brain conditions, notably epilepsy, and are also part of the process of diagnosing brain death. EEG and MEG are also used for research, particularly in the form of event-related potentials, which correlate the size or pattern of the EEG or MEG signal with the performance of particular tasks or the presentation of particular stimuli. Thus, as with the hypothetical fMRI experiment described above, one could look for any consistent changes in the EEG or MEG signal when a subject sees faces rather than a blank screen. Apart from the determination of brain death, where EEG is already used, the most discussed possible legally relevant uses of EEG have been lie detection and memory detection.
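An event-related potential is, at bottom, an average of short stretches of the recording, each lined up with the moment a stimulus appeared. The sketch below assumes a hypothetical recording from a single electrode stored as a list of samples, with stimulus onsets given as positions in that list; real recordings contain many channels and require filtering and artifact removal that are not shown.

```python
def event_related_average(signal, onsets, window):
    """Average the stretches of signal that begin at each stimulus onset."""
    epochs = [
        signal[start:start + window]
        for start in onsets
        if start + window <= len(signal)
    ]
    # Average across epochs separately at each time point after the stimulus.
    return [sum(samples) / len(epochs) for samples in zip(*epochs)]


# Hypothetical recording: a stimulus is shown at sample 2 and again at sample 8.
recording = [0, 0, 1, 3, 1, 0, 0, 0, 2, 4, 2, 0]
erp = event_related_average(recording, onsets=[2, 8], window=3)
print(erp)
```

Because each stretch is aligned to the stimulus, activity that consistently follows the stimulus is preserved in the average, while activity unrelated to it tends to cancel out over many repetitions.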

EEG is safe, cheap, quiet, and portable. MEG is safe and quiet, but the technology is considerably more expensive than EEG and is not easily portable. EEG methods can tolerate much more head movement by the subject than PET or MRI techniques, although movement is often a challenge for MEG. EEG and MEG have good temporal resolution, distinguishing between milliseconds, which makes them very attractive for research, but their spatial resolution is inadequate for many research questions. As a result, some researchers use a combination of methods, integrating MRI and EEG or MEG data (acquired simultaneously or at different times) using sophisticated data analysis techniques.

Functional neuroimaging (especially fMRI) and EEG seem to be the techniques that are most likely to lead to efforts to introduce neuroscience-based evidence in court, but several other neuroscience techniques also might have legal applications. This section briefly describes four other methods that may be discussed in court: lesion studies, transcranial magnetic stimulation, deep brain stimulation, and implanted microelectrode arrays.

One powerful way to test whether particular brain regions are associated with particular mental processes is to study mental processes after those brain regions have been destroyed or damaged. Observations of the consequences of such lesions, created by accidents or disease, were, in fact, the main way in which localization of brain function was originally understood.

For ethical reasons, the experimental destruction of brain tissue is limited to nonhuman animals. Nonetheless, in addition to accidental damage, on occasion human brains will need to be intentionally damaged for clinical purposes. Tumors may have to be removed or, in some cases, epilepsy may have to be treated by removing the region of the brain that is the focus for the seizures. Valuable knowledge may be gained from following these subjects.

Our understanding of the role of the hippocampus in creating memories, as one example, was greatly aided by study of a patient known as H.M.9 When he was 27 years old, H.M. was treated for intractable epilepsy, undergoing an experimental procedure that surgically removed his left and right medial temporal lobes, including most of his two hippocampi. The surgery was successful, but from that time until his death in 2008, H.M. could not form new long-term memories, either of events or of facts. His short-term memory, also known as working memory, was intact, and he could learn new motor, perceptual, and (some) cognitive skills (his “procedural memory” still functioned). He also could remember his life’s events from before his surgery, although his memories were weaker the closer the events were to the surgery. Those brain regions were clearly involved in making new long-term memories for facts or events, but not in storing old ones.

9. H.M.’s name, not publicly released until his death, was Henry Gustav Molaison. Details of his life can be found in several obituaries, including Benedict Carey, H.M., An Unforgettable Amnesiac, Dies at 82, N.Y. Times, Dec. 4, 2008, at A1, and H.M., A Man Without Memories, The Economist, Dec. 20, 2008. The first scientific report of his case was W.B. Scoville & Brenda Milner, Loss of Recent Memory After Bilateral Hippocampal Lesions, 20 J. Neurol., Neurosurg. Psychiatry 11 (1957).

2. Transcranial magnetic stimulation (TMS)

TMS is a noninvasive method of creating a temporary, reversible functional brain “lesion.” Using this technique, researchers disrupt the organized activity of the brain’s neurons by applying an electrical current. The current is formed by a rapidly changing magnetic field that is generated by a coil held next to the subject’s skull. The field penetrates the scalp and skull easily and causes a small current in a roughly conical portion of the brain below the coil. This current induces a change in the typical responses of the neurons, which can block the normal functioning of that part of the brain.

TMS can be done in a number of ways. In some approaches, TMS happens at the same time as the subject performs the task to be studied. These concurrent approaches include single pulses or paired pulses as well as rapid (more than once per second) repetitive TMS that is delivered during task performance. Another method uses TMS for an extended period, often several minutes, before the task is performed. This sequential TMS uses slow (less than once per second) repetitive TMS.

The effects of single-pulse/paired-pulse and concurrent repetitive TMS are present while the coil is generating the magnetic field, and can extend for a few tens of milliseconds after the stimulation is turned off. By contrast, the effects of pretask repetitive TMS are thought to last for a few minutes (about half as long as the actual stimulation). When TMS is repeated regularly in nonhumans, long-term effects have been observed. Therefore, guidelines regarding how much stimulation can be applied in humans have been established.

The Food and Drug Administration (FDA) has approved TMS as a treatment for otherwise untreatable depression. The neuroscience research value of TMS stems from its ability to alter brain function in a relatively small area (about 2 cm) in an otherwise healthy brain, thus allowing for targeted testing of the role of a particular brain region for a particular class of cognitive abilities. By blocking normal functioning of the affected neurons, this can be equivalent, in effect, to a temporary lesion of that area of the brain. TMS appears to have minimal risks, but its long-term effects are not known.

3. Deep brain stimulation (DBS)

DBS is an FDA-approved treatment for several neurological conditions affecting movement, notably Parkinson’s disease, essential tremor, and dystonia. The device used in DBS includes a lead that is implanted into a specific brain region, a pulse generator (generally implanted under the shoulder or in the abdomen), and a wire connecting the two. The pulse generator sends an electric current to the electrodes in the lead, which in turn affect the functioning of neurons in an area around the electrodes.

The precise manner by which DBS affects brain function remains unclear. Even for Parkinson’s disease, for which it is widely used, individual patients sometimes benefit in unpredictable ways from placement of the lead in different locations and from different frequency or power of the stimulation.

Researchers are continuing to experiment with DBS for other conditions, such as depression, minimally conscious state, chronic pain, and overeating that leads to morbid obesity. The results are sometimes surprising. In a Canadian trial of DBS for appetite control, the obese patient did not ultimately lose weight but did suddenly develop a remarkable memory. That research group is now starting a trial of DBS for dementia.10 Other surprises have included some negative side effects from DBS, such as compulsive gambling, hypersexuality, and hallucinations. These kinds of unexpected consequences from DBS make it of continuing broader research interest.

4. Implanted microelectrode arrays

Ultimately, to understand the brain fully one would like to know what each of its 100 billion neurons is doing at any given time, analyzed in terms of their collective patterns of activity.11 No current technology comes close to that kind of resolution. For example, although fMRI has a voxel size of a few cubic millimeters, it is looking at the blood flow responding to thousands or millions of neurons at each point in the brain. Conversely, while direct electrical recordings allow individual neurons to be examined and manipulated, it is not easy to record from many neurons at once. While still on a relatively small scale, recent developments now offer one method for recording from multiple neurons simultaneously by using an implanted microelectrode array.

A chip containing many tiny electrodes can be implanted directly into brain tissue. Some of those electrodes will make useable connections with neurons and can then be used either to record the activity of that neuron (when it is firing or not) or to stimulate the neuron to fire. These kinds of implants have been used in research on motor function, both in monkeys and in occasional human patients. The research has aimed at understanding better what neuronal activity leads to motion and hence, in the long run, perhaps to a method of treating quadriplegia or other motion disorders.

10. See Clement Hamani et al., Memory Enhancement Induced by Hypothalamic/Fornix Deep Brain Stimulation, 63 Annals Neurol. 119 (2008).

11. See the discussion in Emily R. Murphy & Henry T. Greely, What Will Be the Limits of Neuroscience-Based Mindreading in the Law? in The Oxford Handbook of Neuroethics (J. Illes & B.J. Sahakian eds., 2011).

These arrays have several disadvantages as research tools. Arrays require neurosurgery for their implantation, with all of its consequent risks of infection or damage. They also have a limited lifespan, because the brain’s defenses eventually prevent the electrical connection between the electrode and the neuron, usually over the span of a few months. Finally, the arrays can only reach a tiny number of the billions of neurons in the brain; current arrays have about 100 microelectrodes.

IV. Issues in Interpreting Study Results

Lawyers trying to introduce neuroscience evidence will almost always be arguing that, when interpreted in the light of some preexisting research study, some kind of neuroscience-based test of the brain of a person in the case—usually a party, though sometimes a witness—is relevant to the case. It might be a claim that a PET scan shows that a criminal defendant was likely to have been legally insane at the time of the crime; it could be a claim that an fMRI of a witness demonstrates that she is lying. The judge will have to determine whether the scientific evidence is admissible at all under the Federal Rules of Evidence, and particularly under Rule 702. If the evidence is admissible, the finder of fact will need to consider the validity and strength of the underlying scientific finding, the accuracy of the particular test performed on the party or witness, and the application of the former to the latter.

Neuroscience-based evidence will commonly raise several scientific issues relevant to both the initial admissibility decision and the eventual determination of the weight to be given the evidence. This section of the reference guide examines seven of these issues: replication, experimental design, group averages, subject selection and number, technical accuracy, statistical issues, and countermeasures. The discussion focuses on fMRI-based evidence, because that seems likely to be the method that will be used most frequently in the coming years, but most of the seven issues apply more broadly.

One general point is absolutely crucial. The various techniques discussed in Section III, supra, are generally accepted scientific procedures, both for use in research and, in most cases, in clinical care. Each one is a good scientific tool in general. The crucial issue is not likely to be whether the techniques meet the requirements for admissibility when used for some purposes, but whether the techniques, when used for the purpose for which they are offered, meet those requirements. Sometimes proponents of fMRI-based lie detection, for example, have argued that the technique should be accepted because fMRI is the subject of more than 12,000 peer-reviewed publications. That is true but irrelevant: the question is the application of fMRI to lie detection, which is the subject of far fewer, and much less definitive, publications.

A. Replication

A good general rule of thumb in science is never to rely on any experimental finding until it has been independently replicated. This may be particularly true with fMRI experiments, not because of fraud or negligence on the part of the experimenters, but because, for reasons discussed below, these experiments are very complicated. Replication builds confidence that those complications have not led to false results.

In many scientific fields, including much of fMRI research, replication is sometimes not as common as it should be. A scientist often is not rewarded for replicating (or failing to replicate) another’s work. Grants, tenure, and awards tend to go to people doing original research. The rise of fMRI has meant that such original experiments are easy to conceive and to attempt—anyone with experimental expertise, access to research subjects (often undergraduates), and access to an MRI scanner (found at any major medical facility) can try his or her own experiments and, if the study design and logic are sound and the results are statistically significant, may well end up with published results. Experiments replicating, or failing to replicate, another’s work are neither as exciting nor as publishable.

For example, as discussed in more detail below, more than 15 different laboratories have collectively published 20 to 30 peer-reviewed articles finding some statistically significant relationship between fMRI-measured brain activity and deception. None of the studies is an independent replication of another laboratory’s work. Each laboratory used its own experimental design, its own scanner, and its own method of analysis. Interestingly, the published results implicate many different areas of the brain as being activated when a subject lies. A few of the brain regions are found to be important in most of the studies, but many of the other brain regions showing a correlation with deception differ from publication to publication. Only a few of the laboratories have published replications of their own work; some of those laboratories have actually published findings with different results from those in their earlier publications.

That a finding has been replicated does not mean it is correct; different laboratories can make the same mistakes. Neither does failure of replication mean that a result is wrong. Nonetheless, the existence of independent replication is important support for a finding.

B. Problems in Experimental Design

The most important part of an fMRI experiment is not the MRI scanner, but the design of the underlying experiment being examined in the scanner. A poorly designed experiment may yield no useful information, and even a well-designed experiment may lead to information of uncertain relevance.

A well-designed experiment must focus on the particular mental state or brain process of interest while minimizing any systematic biases. This can be especially difficult with fMRI studies. After all, these studies are measuring blood flow in the brain associated with neuronal responses in particular regions. If, for example, in an experiment trying to assess how the brain reacts to pain, the experimental subjects are consistently distracted at one point in the experiment by thinking about something else, the areas of brain activation will include the areas activated by the distraction. One of the earliest published lie detection experiments was designed so that the experimental subjects pushed a button for “yes” only when saying (honestly) that they held the card displayed; they pushed the “no” button both when they did not hold the card displayed and when they did hold it but were following instructions to lie. They were to say “yes” only 24 times out of 432 trials.12 The resulting differences might have come from the differences in thinking about telling the truth or telling a lie—but they also may have come from the differences in thinking about pressing the “no” button (the most common action) and pressing the “yes” button (the less frequent response). The results themselves cannot distinguish between the two explanations.
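
The confound in that design can be made concrete with a short tally. The sketch below is illustrative only. The two totals (24 "yes" responses out of 432 trials) come from the text; the number of instructed-lie trials is a hypothetical assumption chosen for illustration, and the condition labels are invented here rather than taken from the study.

```python
from collections import Counter

TOTAL_TRIALS = 432   # from the text
YES_TRIALS = 24      # from the text: truthful "yes" responses
# ASSUMPTION (hypothetical, not from the source): number of instructed-lie
# trials; the remaining "no" trials are treated as truthful denials.
LIE_TRIALS = 24
DENIAL_TRIALS = TOTAL_TRIALS - YES_TRIALS - LIE_TRIALS

# Each trial is (condition, button pressed).
trials = (
    [("truthful yes", "yes")] * YES_TRIALS
    + [("instructed lie", "no")] * LIE_TRIALS
    + [("truthful denial", "no")] * DENIAL_TRIALS
)

def button_rates(trials):
    """Share of all trials on which each button was pressed."""
    counts = Counter(button for _, button in trials)
    return {button: n / len(trials) for button, n in counts.items()}

def buttons_for(trials, condition):
    """Set of buttons ever pressed in the given condition."""
    return {button for cond, button in trials if cond == condition}

rates = button_rates(trials)
print(f"yes button: {rates['yes']:.1%} of trials")
print(f"no button:  {rates['no']:.1%} of trials")
print("lie trials use:", buttons_for(trials, "instructed lie"))
print("truthful-yes trials use:", buttons_for(trials, "truthful yes"))
```

Whatever split is assumed for the "no" trials, every lie is a press of the common button and every truthful "yes" is a press of the rare one. In a comparison of those two conditions, the honesty of the answer and the button pressed vary together, so the data alone cannot say which one drove the difference in brain activity.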

Designing good experiments is difficult, but in some respects the better the experiment, the less relevant it may prove to a real situation. A laboratory experiment attempts to minimize distractions and differences among subjects, but such factors will be common in real-world settings. Perhaps more important, for some kinds of experiments it will be difficult, if not impossible, to reproduce in the laboratory the conditions of interest in the real world. As an extreme example, if one is interested in how a murderer’s brain functions during a murder, one cannot conduct an experiment that involves having the subject commit a murder in the scanner. For ethical reasons, that condition of interest cannot be tested in the experiment.

The problem of trying to detect deception provides a different example. All published laboratory-based experiments involve people who know that they are taking part in a research project. Most of them are students and are being paid to participate in the project. They have received detailed information about the experiment and have signed a consent form. Typically, they are instructed to “lie” about a particular matter. Sometimes they are told what the lie should be (to deny that they see a particular playing card, such as the seven of clubs, on a screen in the scanner); sometimes they are told to make up a lie (about their most recent vacation, for example). In either case, they are following instructions, doing what they should be doing, when they tell the “lie.”

12. Daniel D. Langleben et al., Telling Truth from Lie in Individual Subjects with Fast Event-Related fMRI, 26 Human Brain Mapping 262 (2005). See discussion in Nancy Kanwisher, The Use of fMRI in Lie Detection: What Has Been Shown and What Has Not, in Emilio Bizzi et al., Using Imaging to Identify Deceit: Scientific and Ethical Questions (2009), at 10, and in Anthony Wagner, Can Neuroscience Identify Lies? in A Judge’s Guide to Neuroscience, supra note 1, at 30.

This situation is different from the realistic use of lie detection, when a guilty person needs to tell a convincing story to avoid a high-stakes outcome such as arrest or conviction—and even an innocent person will be genuinely nervous about the possibility of an incorrect finding of deception. In an attempt to parallel these real-world characteristics, some laboratory-based studies have tried to give subjects some incentive to lie successfully; for example, the subjects may be told (falsely) that they will be paid more if they “fool” the experimenters. Although this may increase the perceived stakes, it seems unlikely that it creates a realistic level of stress. These differences between the laboratory and the real world do not mean that the experimental results of laboratory studies are unquestionably different from the results that would exist in a real-world situation, but they do raise serious questions about the extent to which the experimental data bear on detecting lies in the real world.

Few judges will be expert in the difficult task of designing valid experiments. Although judges may be able themselves to identify weaknesses in experimental design, more often they will need experts to address these questions. Judges will need to pay close attention to that expert testimony and the related argument, as “details” of experimental design may turn out to be absolutely crucial to the value of the experimental results.

C. The Number and Diversity of Subjects

Doing fMRI scans is expensive. The total cost of performing an hour-long research scan of a subject ranges from about $300 to $1000. Much fMRI research, particularly work without substantial medical implications, is not richly funded. As a result, studies tend to use only a small number of subjects—many fMRI studies use 10 to 20 subjects, and some use even fewer. In the lie detection literature, for example, the number of subjects used ranges from 4 to about 30.
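
The pressure toward small samples follows from simple arithmetic. The sketch below multiplies the per-scan cost range given above by the number of subjects. It assumes one hour-long scan per subject, and the 200-subject row is a hypothetical comparison point, not a figure from any study.

```python
COST_PER_SCAN_USD = (300, 1000)  # from the text: hour-long research scan

def scanning_budget(n_subjects, scans_per_subject=1):
    """Return the (low, high) total scanning cost in dollars.

    ASSUMPTION: one scan per subject unless stated otherwise.
    """
    low, high = COST_PER_SCAN_USD
    sessions = n_subjects * scans_per_subject
    return low * sessions, high * sessions

# 10 and 20 are the typical study sizes named in the text;
# 200 is a hypothetical larger study added for contrast.
for n in (10, 20, 200):
    low, high = scanning_budget(n)
    print(f"{n:>3} subjects: ${low:,} to ${high:,}")
```

On these assumptions a 20-subject study costs between $6,000 and $20,000 in scanner time, while a 200-subject study would cost between $60,000 and $200,000.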

It is unclear how representative such a small group would be of the general population. This is particularly true of the many studies that use university students as research subjects. Students typically are from a restricted age range, are likely to be of above-average intelligence and socioeconomic background, may not accurately reflect the country’s ethnic diversity, and typically will underrepresent people with serious mental conditions. To limit possible confounding variables, it can make sense for a study design to select, for example, only healthy, right-handed, native-English-speaking male undergraduates who are not using drugs. But the very process of selecting such a restricted group raises questions about whether the findings will be relevant to other groups of people. They may be directly relevant, or they may not be. At the early stages of any fMRI research, it may not be clear what kinds of differences among subjects will or will not be important.

D. Applying Group Averages to Individuals

Most fMRI-based research looks for statistically significant associations between particular patterns of brain activation across a number of subjects. It is highly unlikely that any fMRI pattern will be found always to occur under certain circumstances in every person tested, or even that it will always occur under those circumstances in any one person. Human brains and their responses are too complicated for that. Research is highly unlikely to show that brain pattern “A” follows stimulus “B” each and every time and in every single person, although it may show that A follows B most of the time.
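
A small simulation can illustrate the gap between a group-level finding and a conclusion about one person. Everything in the sketch below is hypothetical: the activation measure, the size of the shift between conditions, and the number of simulated readings are assumptions chosen for illustration, not values drawn from any fMRI study.

```python
import random
import statistics

random.seed(0)  # fixed seed so the sketch is repeatable

N = 10_000     # hypothetical number of simulated readings per condition
EFFECT = 0.5   # hypothetical shift in the group mean, in standard deviations

# Simulated activation readings without and with the stimulus.
baseline = [random.gauss(0.0, 1.0) for _ in range(N)]
stimulus = [random.gauss(EFFECT, 1.0) for _ in range(N)]

mean_diff = statistics.mean(stimulus) - statistics.mean(baseline)

# Classify each single reading using a cutoff halfway between the group means.
cutoff = (statistics.mean(stimulus) + statistics.mean(baseline)) / 2
correct = sum(x > cutoff for x in stimulus) + sum(x <= cutoff for x in baseline)
accuracy = correct / (2 * N)

print(f"group mean difference: {mean_diff:.2f}")
print(f"single readings classified correctly: {accuracy:.1%}")
```

Under these assumed numbers the difference between the group averages is clear, yet a single reading is sorted into the right condition only about 60 percent of the time in expectation, because the two distributions overlap heavily. A real effect at the group level can therefore still be a weak basis for a statement about one individual.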