Scientific Basis and Engineering Approaches for Improving Small Unit Decision Making

Decision making has been studied in a number of fields, and each offers possibilities for improving the decision making abilities of small unit leaders, given the operational and technical challenges they face in today’s operational environment, the existing abilities of the Marine Corps, and the findings presented in Chapter 2. The breadth of material related to decision making is substantial, and a full survey is beyond the scope of this report. What follows is a selective review based on the knowledge and experience of the committee members, who were particularly interested in theories and perspectives that could address the operational gaps identified in the previous chapter and lead to actionable recommendations. Committee members are not in unanimous agreement in this area: the material in this chapter represents the majority opinion, and a dissent can be found in Appendix G.

No single theory of decision making or human performance can account for the complex and diverse decisions that small unit leaders must make. Instead, the nature of the decision and the context in which it occurs influence which theories (and associated interventions) best support improved decision making performance. The committee focused on two areas: the scientific basis for decision making (cognitive psychology and cognitive neuroscience) and engineering support for decision making (engineering approaches to support decision making, physiological monitoring, and augmented cognition).

Philosophers and historians of science, most notably Thomas Kuhn, describe the evolution of scientific inquiry in any field of knowledge according to phases. Fields that have developed a scientific approach are said to have “paradigms,”1 which are shared commitments to a certain understanding of the real world among members of a scientific group. Paradigms are used to guide the collection of data through normal science.2 Those efforts produce results that are often consistent with (and at times anomalous with respect to) the group’s accepted rules. Those in the group who are bothered by anomalies eventually instigate research efforts to challenge, not to reinforce, the paradigm.3 This perceived failure of the existing rules creates a prelude to the search for new rules. The revolutionary science that ensues strives to develop a new set of rules that will better fit both the accepted and the anomalous data. This effort often involves the pursuit of a new language to represent the new model.4 After the acceptance and adoption of a new paradigm, normal science resumes.

1 Thomas S. Kuhn. 1977. The Essential Tension: Selected Studies in Scientific Tradition and Change, University of Chicago Press, Chicago, Ill., p. 294.

Regarding the stages of evolution among the fields of knowledge that this report considers, naturalistic decision making (NDM) has evolved into normal science since its inception in the late 1980s. However, other fields that the chapter refers to are in earlier stages of evolution and have potential to change accepted models of thought. Cognitive neuroscience is an example of a field that may provide a deeper understanding of decision making over the longer term.

This chapter is organized in three major sections and closes with a brief summary and the committee’s seventh and final finding. The first major section, “3.1 Cognitive Psychology,” summarizes one aspect of the scientific basis for understanding decision making: the broad field of cognitive psychology, including prescriptive and descriptive approaches, and the emerging field of resilience theory. The next major section, “3.2 Cognitive Neuroscience,” summarizes a second aspect: the emerging field of cognitive neuroscience and its potential for understanding the fundamental neurophysiological mechanisms underlying human decision making. The last major section, “3.3 Engineering Approaches to Support Decision Making,” provides a broad overview of existing and potential engineering approaches to aiding the decision maker, including approaches to information integration, tactical decision aiding, human-computer interface (HCI) design, and physiological monitoring and augmented cognition. Also included in that section is a brief discussion of human-centered design methods that can help develop promising concepts related to decision aiding into useful and usable decision aids for the small unit leader.


2 Thomas S. Kuhn. 1970. The Structure of Scientific Revolutions, 2nd ed., University of Chicago Press, Chicago, Ill., pp. 25, 68.

3 Gary A. Klein. 1997. “An Overview of Naturalistic Decision Making Applications,” p. 141 in C.E. Zsambok and Gary A. Klein (eds.), Naturalistic Decision Making, Lawrence Erlbaum, Mahwah, N.J.

4 Thomas S. Kuhn. 2000. The Road Since Structure: Philosophical Essays, 1970-1993, University of Chicago Press, Chicago, Ill., p. 30.

3.1 Cognitive Psychology

Approaches to modeling human decision making behavior have evolved through various phases, as Figure 3.1 shows. According to prescriptive theories, such as economic theory5 and expected utility theory,6 humans consider available options in a formal and systematic way and then “choose the one with the highest expected return.”7 “Specifying principles and constraints derived from formal or mathematical systems such as deductive logic, Bayesian probability theory, and decision theory,” normative research explores “how people ought to make decisions”; in this vein, “the need to improve decision making arises because human decision makers systematically violate normative constraints.”8 That is, people often do not behave in a manner that is consistent with what is prescribed by rational, optimized models. These normative approaches are described below.

In contrast, descriptive models were built to capture specific decision making processes based on the actual behavior of individuals and teams, typically within natural settings. Six cognitive approaches to descriptive modeling of decision making are reviewed below.

Finally, this section on cognitive psychology concludes with a discussion of resilience, what it means for decision makers operating in uncertain environments, and how resilience engineering can help improve decision outcomes in uncertain and rapidly changing situations.

3.1.1 Prescriptive Theories

3.1.1.1 Subjective Expected Utility9

Subjective expected utility (SEU) is a mathematical model regarding choice that is at the foundation of most contemporary economics, theoretical statistics, and operations research (OR). Blume and Easley consider SEU as one class of decision models for choice under uncertainty, and its dominance was understandable at a time when few alternatives were available.10

5 John von Neumann and Oskar Morgenstern. 1947. (2007, 60th Anniversary Edition). Theory of Games and Economic Behavior, Princeton University Press, Princeton, N.J.

6 Ralph L. Keeney and Howard Raiffa. 1993. Decisions with Multiple Objectives: Preferences and Value Tradeoffs, Cambridge University Press, New York.

7 Christopher Nemeth and Gary A. Klein. 2011. “The Naturalistic Decision Making Perspective,” in James J. Cochran (ed.), Wiley Encyclopedia of Operations Research and Management Science, Wiley, New York.

8 Raanan Lipshitz and Marvin S. Cohen. 2005. “Warrants for Prescription: Analytically and Empirically Based Approaches to Improving Decision Making,” Human Factors 47(1):102-120. See also Jonathan Baron, 2007, Thinking and Deciding, Cambridge University Press, New York.

9 This section is taken in large part from: Herbert A. Simon, George B. Dantzig, Robin Hogarth, Charles R. Plott, Howard Raiffa, Thomas C. Schelling, Kenneth A. Shepsle, Richard Thaler, Amos Tversky, and Sidney Winter, 1986, Research Briefings 1986: Report of the Research Briefing Panel on Decision Making and Problem Solving, National Academy of Sciences, National Academy of Engineering, Institute of Medicine, National Academy Press, Washington, D.C.

FIGURE 3.1 Behavioral modeling methods. SOURCE: © Ashgate Publishing. Reprinted with permission from: Jens Rasmussen. 1997. Figure 2 of Chapter 5, “Merging Paradigms: Decision Making, Management, and Cognitive Control,” in Rhona Flin, Eduardo Salas, Michael Strub, and Lynne Martin (eds.), Decision Making Under Stress: Emerging Themes and Applications, Ashgate Publishing Company, Brookfield, Vt., p. 75.

SEU assumes that a decision maker has what is termed a “utility function”—an ordering, by subjective preference, among all of the possible outcomes of a choice. In SEU, all of the alternatives are known among which a choice can be made, and the consequences of choosing each alternative can be determined.
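As a minimal sketch of the choice rule that SEU describes, the following Python fragment scores each alternative by its probability-weighted utility and selects the highest-scoring one. The function names, alternatives, states, probabilities, and utility values are all hypothetical illustrations and are not taken from the sources cited in this chapter.

```python
def expected_utility(outcomes, probabilities, utility):
    """Probability-weighted utility of one alternative.

    outcomes: maps each state of the world to the outcome this alternative yields
    probabilities: maps each state to its (subjective) probability
    utility: maps each outcome to a number expressing the decision maker's preference
    """
    return sum(probabilities[state] * utility[outcome]
               for state, outcome in outcomes.items())


def seu_choice(alternatives, probabilities, utility):
    """Return the name of the alternative with the highest expected utility."""
    return max(
        alternatives,
        key=lambda name: expected_utility(alternatives[name], probabilities, utility),
    )


# Hypothetical example: two routes and two possible states of the world.
probabilities = {"road_clear": 0.7, "road_blocked": 0.3}
utility = {"on_time": 1.0, "delayed": 0.4, "late": 0.0}
alternatives = {
    "main_road": {"road_clear": "on_time", "road_blocked": "late"},
    "side_road": {"road_clear": "delayed", "road_blocked": "delayed"},
}

best = seu_choice(alternatives, probabilities, utility)
```

The sketch makes the theory's demands visible: every alternative, every state, every probability, and every utility must be written down before `seu_choice` can be called.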

10 Lawrence E. Blume and David Easley. 2007. “Rationality,” in Lawrence E. Blume and Steven N. Durlauf (eds.), The New Palgrave Dictionary of Economics, June. Available at http://www.dictionaryofeconomics.com/dictionary. Accessed September 9, 2011.

SEU theory makes it possible to assign probabilities subjectively, which opens the way to combining subjective opinions with objective data. SEU can also be used in systems that aid human decision making. In the probabilistic version of SEU, Bayes’s rule prescribes how people should take account of new information and respond to incomplete information. Many of the modern approaches to optimizing operations research use assumptions of SEU theory, the major ones being that (1) maximizing the achievement of some goal is desired, (2) this can be done under specified constraints, and (3) all alternatives and consequences (or their probability distributions) are known. Satisfying these assumptions is often difficult or impossible in real-world situations.
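The Bayesian updating that the probabilistic version of SEU prescribes can be illustrated with a short Python sketch. It revises a subjective prior over hypotheses after one new observation. The hypotheses and numbers below are hypothetical and serve only to show the mechanics of Bayes’s rule.

```python
def bayes_update(prior, likelihood):
    """Return posterior probabilities over hypotheses after one observation.

    prior: maps each hypothesis to its probability before the observation
    likelihood: maps each hypothesis to P(observation | hypothesis)
    """
    unnormalized = {h: prior[h] * likelihood[h] for h in prior}
    total = sum(unnormalized.values())
    if total == 0:
        raise ValueError("observation has zero probability under every hypothesis")
    return {h: value / total for h, value in unnormalized.items()}


# Hypothetical example: a subjective prior revised after a new report arrives.
prior = {"road_clear": 0.7, "road_blocked": 0.3}
likelihood_of_report = {"road_clear": 0.2, "road_blocked": 0.9}
posterior = bayes_update(prior, likelihood_of_report)
```

In this example the report is more likely under the "road_blocked" hypothesis, so the posterior shifts toward it even though the prior favored "road_clear".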

3.1.1.2 Economic Model

Becker contends that “all human behavior can be viewed as involving participants who maximize their utility from a stable set of preferences and accumulate an optimal amount of information and other inputs in a variety of markets.”11 The economic, or rational-choice, approach equates human rational behavior with instrumentalist (especially economic) rationality. The rational-choice approach applies this concept to all rational activity and explains human behavior as economic rationality.12

3.1.1.3 Rational Actor

The rational-actor (also rational-choice) theory is used to understand economic and social behavior. In this instance, “rational” signals the desire for more of a good rather than less of it, under the presumption of some cost for obtaining it. Models used in rational-choice theory assume that “individuals choose the best action according to unchanging and stable preference functions and constraints.”13 These assumptions, however, are often violated under real-world conditions in which models are not rich enough to capture all of the behaviors that one might want to examine,14 and actual behavior is not available for observation in the model.

According to Hedström and Stern, rational-choice sociologists typically “use explanatory models in which [individuals] … are assumed to act rationally … as conscious decision makers whose actions are significantly influenced by the costs and benefits of different action alternatives.”15 Rather than focusing on the actions of single individuals, most rational-choice sociologists seek to explain “macro-level or aggregate outcomes such as the emergence of norms, segregation patterns, or various forms of collective action … [by studying] the actions and interactions that brought them about.”16

11 Gary S. Becker. 1976. The Economic Approach to Human Behavior, University of Chicago Press, Chicago, Ill., p. 14.

12 Milan Zafirovski. 2003. “Human Rational Behavior and Economic Rationality,” Electronic Journal of Sociology. Available at http://www.sociology.org/content/vol7.2/02_zafirovski.html. Accessed September 2, 2011.

13 See the entry titled “Rational Choice Theory” at http://en.wikipedia.org/wiki/Rational_choice_theory.

14 Lawrence E. Blume and David Easley. 2007. “Rationality,” in Lawrence E. Blume and Steven N. Durlauf (eds.), The New Palgrave Dictionary of Economics, June. Available at http://www.dictionaryofeconomics.com/dictionary. Accessed September 9, 2011.

15 Peter Hedström and Charlotte Stern. 2007. “Rational Choice and Sociology,” in Lawrence E. Blume and Steven N. Durlauf (eds.), The New Palgrave Dictionary of Economics, June. Available at http://www.dictionaryofeconomics.com/dictionary. Accessed September 9, 2011.

3.1.1.4 Behavioral Decision Theory

In decision theory, making effective decisions relies on “understanding the facts of a choice and the implications of [making that choice] … well enough to identify [and carry through with] the option in one’s own best interests” from among the available options.17 As Fischhoff explains, choices are described in terms of the following:

• Options: “actions that an individual might [or might not] take”;

• Outcomes: “valued consequences that might follow from those actions”;

• Values: “the relative importance of those outcomes”; and

• Uncertainties: “regarding which outcomes will be experienced.”18

These four elements are synthesized in decision rules that enable a choice among options. As a normative analysis, decision theory “can [help to] clarify the structure of complex choices” by identifying the best courses of action in light of the values that a decision maker holds.19 Fischhoff also suggests that descriptive studies and prescriptive research complement normative analysis and should be used iteratively, because (1) “descriptive research [such as approaches described in the next subsection] is needed to reveal the facts and values that normative analysis must consider,” and (2) “prescriptive interventions are needed to assess whether descriptive accounts provide the insight that is needed in order to improve decision making.”20
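One way to picture how the four elements combine is the Python sketch below. It assumes a weighted-additive decision rule, which is only one of many possible rules and is not attributed to Fischhoff. The class, function names, options, and numbers are hypothetical.

```python
from dataclasses import dataclass


@dataclass
class Option:
    """An action that the individual might take."""
    name: str
    # Uncertainties: probability that each valued outcome follows from this option.
    outcome_probabilities: dict


def score(option, values):
    """Weighted-additive rule: sum of P(outcome) times the outcome's relative importance."""
    return sum(p * values[outcome]
               for outcome, p in option.outcome_probabilities.items())


def choose(options, values):
    """Return the option with the highest score under the decision maker's values."""
    return max(options, key=lambda option: score(option, values))


# Values: relative importance of each outcome (negative means undesirable).
values = {"symptom_relief": 1.0, "side_effect": -0.5}

# Options, each with the uncertainties attached to its outcomes.
options = [
    Option("treat", {"symptom_relief": 0.8, "side_effect": 0.3}),
    Option("wait", {"symptom_relief": 0.3, "side_effect": 0.0}),
]

chosen = choose(options, values)
```

Changing the entries in `values` while holding the uncertainties fixed shows how the same facts can lead different decision makers to different choices.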

3.1.2 Descriptive Models of Human Behavior

A significant limitation of much of the early work in decision making theory is that training methods and decision aiding systems that were developed from formal, prescriptive systems (including SEU, economic model, rational-actor, and behavioral decision approaches) neither improved decision quality nor were adopted in field settings.21 Researchers in human behavior and performance found the tools and prescribed methods difficult to use in their own work.22 This is because field settings are typically complex, emergent, poorly defined, and strongly influenced by context. While they were academically appealing, prescriptive theories of decision making were rarely the basis for practical changes that improved decision making.23 As a result, newer approaches began to be developed in the 1980s that have been found to be better suited to understanding and improving decision making behavior in the real world, such as that carried out by Marine Corps small unit leaders. A discussion of these newer approaches is presented in the following sections.

16 Peter Hedström and Charlotte Stern. 2007. “Rational Choice and Sociology,” in Lawrence E. Blume and Steven N. Durlauf (eds.), The New Palgrave Dictionary of Economics, June. Available at http://www.dictionaryofeconomics.com/dictionary. Accessed September 9, 2011.

17 Baruch Fischhoff. 2005. “Decision Research Strategies,” Health Psychology 24(4):S9-S16.

18 Baruch Fischhoff. 2005. “Decision Research Strategies,” Health Psychology 24(4):S9-S16.

19 Baruch Fischhoff. 2005. “Decision Research Strategies,” Health Psychology 24(4):S9-S16.

20 Baruch Fischhoff. 2010. “Judgment and Decision Making,” WIREs Cognitive Science 1:724-735.

3.1.2.1 Heuristics and Biases

The heuristics and biases (HB) approach contends that people do not use strategies in the form of algorithms in order to follow principles of optimal performance. Instead, individuals rely on rules of thumb to make decisions under conditions of uncertainty and employ them even when expected utility theory, probability laws, and statistics indicate that a different course of behavior would be optimal. These heuristics include representativeness (“in which probabilities are evaluated by the degree to which A is representative of B”), availability of instances or scenarios (“in which people assess the frequency of a class or the probability of an event by the ease with which instances of occurrences can be brought to mind”), and adjustment from an anchor (in which “people make estimates by starting from an initial value that is adjusted to yield the final answer”).24 Although heuristics can be “highly economical and usually effective,” their use can also lead to biases resulting in “systematic and predictable errors.”25 The HB approach can benefit activities such as training by enabling decision makers to anticipate and avoid such errors.

21 One potential explanation is simply that the prescriptive “models” of human behavior do not, in fact, model human behavior and thus are incompatible with how a human accomplishes the unaided task. A more complete discussion of how decision theory models and game theory models in particular fail in representing human behavior in the “real world” can be found in a recent study: National Research Council, 2008, Greg L. Zacharias, Jean Macmillan, and Susan B. Van Hemel (eds.), Behavioral Modeling and Simulation: From Individuals to Societies, The National Academies Press, Washington, D.C., pp. 195-206.

22 J. Frank Yates, Elizabeth S. Veinott, and Andrea L. Patalano. 2003. “Hard Decisions, Bad Decisions: On Decision Quality and Decision Aiding,” pp. 13-63 in Sandra L. Schneider and James Shanteau (eds.), Emerging Perspectives on Judgment and Decision Research, Cambridge University Press, New York.

23 Although the constrained optimization methods that underlie many of the prescriptive theories have found significant application in the development of tactical decision aids, as described below in the section titled “3.3.3 Tactical Decision Aids for Course of Action Development and Planning.”

24 Amos Tversky and Daniel Kahneman. 1974. “Judgment Under Uncertainty: Heuristics and Biases,” Science 185(4157):1124-1131.

3.1.2.2 Naturalistic Decision Making26

The naturalistic decision making approach seeks to understand human cognitive performance by studying how individuals and teams actually make decisions in real-world settings rather than in a laboratory. NDM researchers typically focus on mental activities such as decision making and sensemaking strategies, while also trying to be sensitive to the context of a situation. Three major criteria have appeared in the literature to describe research that counts as NDM study: such research (1) focuses on expertise, (2) takes place in field (not laboratory) settings, and (3) reflects the conditions such as complexity and uncertainty that complicate our lives. Marine Corps small unit leaders operate in the kind of complex, uncertain environment for which the NDM approach is a good fit.

NDM has focused on the importance of intuition, as well as on two key models: recognition-primed decision making (RPD) and the data-frame theory (DFT) of sensemaking.

3.1.2.3 Intuition

The lay person routinely thinks of intuition as knowledge or belief that is obtained by some means other than reason or perception. In fact, intuition is tacit knowledge, or expertise, that comes from experience. Intuition relies on experience to recognize key patterns that indicate the dynamics of a situation.27 NDM research has helped to “demystify intuition by identifying the cues that experts use to make their judgments, even if those cues involve tacit knowledge and are difficult for the expert to articulate.”28 Intuition-based models account for how people use their experience to rapidly categorize situations, relying on “some kind of synthesis of their experience to make … judgments.”29 These situation categories, implicitly or explicitly, then suggest appropriate courses of action.30


25 Amos Tversky and Daniel Kahneman. 1974. “Judgment Under Uncertainty: Heuristics and Biases,” Science 185(4157):1124-1131.

26 This section is taken nearly verbatim from: Christopher Nemeth and Gary A. Klein, 2011, “The Naturalistic Decision Making Perspective,” in James J. Cochran (ed.), Wiley Encyclopedia of Operations Research and Management Science, Wiley, New York.

27 Gary A. Klein. 1999. Sources of Power, MIT Press, Cambridge, Mass.

28 Daniel Kahneman and Gary A. Klein. 2009. “Conditions for Intuitive Expertise: A Failure to Disagree,” American Psychologist 64(6):515-526.

29 Christopher Nemeth and Gary A. Klein. 2011. “The Naturalistic Decision Making Perspective,” in James J. Cochran (ed.), Wiley Encyclopedia of Operations Research and Management Science, Wiley, New York.

30 Raanan Lipshitz and Marvin S. Cohen. 2005. “Warrants for Prescription: Analytically and Empirically Based Approaches to Improving Decision Making,” Human Factors 47(1):102-120.

3.1.2.4 Recognition-Primed Decision Making

For the past two decades, the Marine Corps has subscribed in varying degrees to the recognition-primed decision making model of human decision making. Given the changing nature of small unit operations as described in Chapters 1 and 2, the use of this model is worth reconsidering.

Developed from NDM research, the RPD model (Figure 3.2) “describes how people use their experience in the form of a repertoire of patterns. The patterns highlight the most relevant cues [in a situation], provide expectancies, identify plausible goals, and suggest typical types of reactions.”31 The decision maker relies on specific content expertise and experience.32 The RPD model blends pattern matching (intuition as described above) and analysis (specifically, by means of mental simulation).33

In the RPD model, people who “need to make a decision … can quickly match the situation [that they confront] to the patterns they have learned. If they find a clear match [between the situation and a learned pattern], they can carry out the most typical course of action. They do not evaluate an option by comparing it to others, but instead imagine—mentally simulate—how [the action] might be carried out, … [making it possible to] successfully make very rapid decisions … [I]n-depth interviews with fire ground commanders about recent and challenging incidents … [have shown] that the percentage of [times that] RPD strategies [were used] generally ranged from 80% to 90%.”34, 35
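The flow described above (match the situation to a learned pattern, take the most typical action, and mentally simulate it instead of comparing options side by side) can be sketched in Python. This is an illustrative simplification, not the published model. The cue-overlap matching, the `match_threshold` parameter, the `simulate` callback, and the fallback to the next most typical action when a simulation fails are all assumptions of this sketch, as are the example patterns.

```python
def recognition_primed_decision(cues, repertoire, simulate, match_threshold=0.6):
    """Return the first workable course of action, or None if nothing is recognized.

    cues: set of cues observed in the current situation
    repertoire: list of learned patterns, each a dict with
        "cues" (non-empty set of cues typical of the pattern) and
        "actions" (courses of action, most typical first)
    simulate: callable(action, cues) -> True if mentally simulating the action
        suggests that it would work in this situation
    """
    def match(pattern):
        # Fraction of the pattern's typical cues present in the situation.
        return len(cues & pattern["cues"]) / len(pattern["cues"])

    # Recognize the situation as the best-matching learned pattern.
    best = max(repertoire, key=match, default=None)
    if best is None or match(best) < match_threshold:
        return None  # No clear match; the situation is not recognized.

    # Evaluate actions one at a time, most typical first, with no comparison.
    for action in best["actions"]:
        if simulate(action, cues):
            return action
    return None


# Hypothetical repertoire for a fire ground commander.
repertoire = [
    {"cues": {"smoke_from_eaves", "hot_door"},
     "actions": ["ventilate_roof", "interior_attack"]},
    {"cues": {"spongy_floor", "muffled_sound"},
     "actions": ["withdraw_crew"]},
]


def simulate(action, cues):
    # Stand-in for mental simulation: roof work is rejected if the roof is weak.
    return not (action == "ventilate_roof" and "weak_roof" in cues)


decision = recognition_primed_decision(
    {"smoke_from_eaves", "hot_door", "weak_roof"}, repertoire, simulate
)
```

The quality of the result depends entirely on the contents of `repertoire`, which stands in for the experience that the RPD model treats as the source of expertise.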

3.1.2.5 Data-Frame Theory of Sensemaking

Sensemaking is the exploitation of information under conditions of uncertainty, complexity, and time pressure in order to support awareness, understanding, planning, and decision making. Individuals and teams with superior sensemaking abilities can be expected to handle situations better in spite of uncertainty and information overload, to make faster and better decisions with regard to an adversary, and to prevent fundamental surprise.36 Success in seeking and using information is essential to sensemaking because this behavior responds to, and is mandated by, changing situational conditions.37

31 Christopher Nemeth and Gary A. Klein. 2011. “The Naturalistic Decision Making Perspective,” in James J. Cochran (ed.), Wiley Encyclopedia of Operations Research and Management Science, Wiley, New York; Gary A. Klein. 2008. “Naturalistic Decision Making,” Human Factors 50(3):456-460.

32 Terry Connolly and Ken Koput. 1997. “Naturalistic Decision Making and the New Organizational Context,” pp. 285-303 in Zur Shapira (ed.), Organizational Decision Making, Cambridge University Press, Cambridge, U.K.

33 Gary A. Klein. 1993. “Recognition-Primed Decision (RPD) Model of Rapid Decision Making,” pp. 138-147 in Gary A. Klein, Judith Orasanu, Roberta Calderwood, and Caroline E. Zsambok (eds.), Decision Making in Action, Wiley, Norwood, N.J.

34 Christopher Nemeth and Gary A. Klein. 2011. “The Naturalistic Decision Making Perspective,” in James J. Cochran (ed.), Wiley Encyclopedia of Operations Research and Management Science, Wiley, New York; Gary A. Klein. 2008. “Naturalistic Decision Making,” Human Factors 50(3):456-460.

35 Gary A. Klein. 1998. Sources of Power: How People Make Decisions, MIT Press, Cambridge, Mass.

FIGURE 3.2 Recognition-primed decision making model. SOURCE: Gary A. Klein. 1989. “Recognition-Primed Decisions,” pp. 47-92 in Advances in Man-Machine Systems Research, W.B. Rouse (ed.), Vol. 5, JAI Press, Greenwich, Conn.

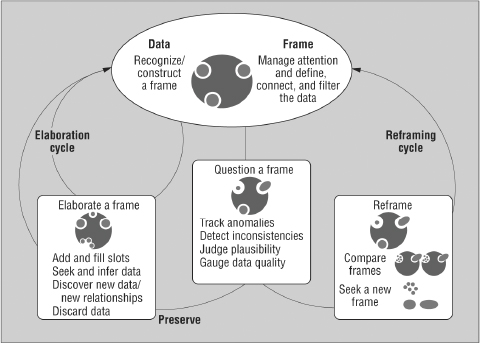

The data-frame theory of sensemaking (Figure 3.3) describes the process of fitting data into a frame (a story, script, map, or plan) and fitting a frame around the data.38 Context informs how an individual views and handles new information. A frame provides cues, goals, and expectancies and guides attention toward data that are of interest to the frame. Experience-based knowledge helps to create an emergent frame, which in turn informs the significance of new information. The frame becomes a sort of dynamic filter that can be questioned, compared to other frames, or elaborated and enriched, as an individual continuously seeks to assess the situation.

36 Gary A. Klein, David Snowden, Chew Lock Pin, and Cheryl A. Teh. 2007. “A Sense Making Experiment—Enhanced Reasoning Techniques to Achieve Cognitive Precision,” paper presented at 12th International Command and Control Research and Technology Symposium, Singapore.

37 Brenda Dervin. 1983. “An Overview of Sense-Making Research: Concepts, Methods, and Results to Date,” paper presented at International Communication Association Annual Meeting, Dallas, Tex., May.

38 Gary A. Klein, Jennifer K. Phillips, Erica L. Rall, and Deborah A. Battaglia. 2003. “A Summary of the Data/Frame Model of Sensemaking,” Proceedings of Human Factors of Decision Making in Complex Systems, University of Abertay, Dundee, Scotland.

FIGURE 3.3 The data-frame theory of sensemaking. SOURCE: © IEEE. Reprinted with permission from: Gary A. Klein, Brian Moon, and Robert R. Hoffman. 2006. “Making Sense of Sensemaking, 2: A Macrocognitive Model,” IEEE Intelligent Systems 21(5):89.

The deliberate construction and use of information in sensemaking find parallels in Revans’s action learning, in which individuals learn with and from others by studying their own actions and experience in order to improve performance. The action learning approach includes four activities: (1) encountering changes in perceptions of the world (hearing); (2) the exchange of information, advice, criticisms, and other forms of influence (counseling); (3) taking action in the world with deliberately designed plans (managing); and (4) following the five stages of the scientific method (authentication).39

3.1.2.6 Team Cognition

The notion that team members must share knowledge about their task and each other has been studied for more than 20 years. “Team cognition,” or shared mental models as some have labeled it, refers to the knowledge that must be shared among team members so that sophisticated, coordinated performance can occur even under extreme conditions such as time pressure or danger.40 Taking a sports example, the “no-look” or “blind pass” in basketball aptly illustrates how team members can perform a fairly complex sequence of behaviors with little or no overt communication. In such cases, the team members are relying on shared knowledge about the task (e.g., how much time is left in the game, how good the opponent is, etc.) and each other (e.g., the skill level of their teammates, how likely it is that a teammate will anticipate the pass, etc.) in order to coordinate effectively. This degree of shared knowledge must be developed over time as the team members perform together. In addition, research has shown that shared knowledge can be increased with targeted team-level training interventions.41

39 David Botham. 1998. “The Context of Action Learning,” in Wojciech Gasparski and David Botham (eds.), Action Learning. Praxiology, The International Annual of Practical Philosophy and Methodology 6:33-61, Transaction Publishers, New Brunswick, N.J.

Team researchers have studied a number of other team-level competencies in addition to shared knowledge that are important for team functioning; these include communication, coordination, compensatory behavior, mutual performance monitoring, intrateam feedback, and collective orientation.42 Over the past 20 years, investigations into how to improve team decision making have revealed several useful strategies.43 In most cases, successful team training involves exposing the team to realistic scenarios that represent the types of problems that it will encounter in the operational environment. Such scenarios, when appropriately designed and paired with effective feedback and debriefing mechanisms, help teams to develop the repertoire of instances necessary to support adaptive team performance.44


40 Janis A. Cannon-Bowers, Eduardo Salas, and Sharolyn Converse. 1993. “Shared Mental Models in Expert Team Decision-Making,” pp. 221-246 in N. John Castellan, Jr. (ed.), Individual and Group Decision Making: Current Issues, Lawrence Erlbaum Associates, Hillsdale, N.J.

41 Kimberly A. Smith-Jentsch, Janis A. Cannon-Bowers, Scott I. Tannenbaum, and Eduardo Salas. 2008. “Guided Team Self-Correction: Impacts on Team Mental Models, Processes, and Effectiveness,” Small Group Research 39(3):303-327.

42 Janis A. Cannon-Bowers, Scott I. Tannenbaum, Eduardo Salas, and Catherine E. Volpe. 1997. “Defining Competencies and Establishing Team Training Requirements,” pp. 333-380 in Richard A. Guzzo and Eduardo Salas (eds.), Team Effectiveness and Decision-Making in Organizations, Jossey-Bass, San Francisco, Calif.

43 Eduardo Salas, Diana R. Nichols, and James E. Driskell, 2007, “Testing Three Team Training Strategies in Intact Teams: A Meta-Analysis,” Small Group Research 38(4):471-488; Eduardo Salas and Janis A. Cannon-Bowers, 2000, “The Anatomy of Team Training,” pp. 312-335 in Sigmund Tobias and J.D. Fletcher (eds.), Training and Retraining: A Handbook for Businesses, Industry, Government and Military, Macmillan, Farmington Hills, Mich. Also see Janis A. Cannon-Bowers and Clint A. Bowers, 2010, “Team Development and Functioning,” in Sheldon Zedeck (ed.), Handbook of Industrial and Organizational Psychology, American Psychological Association, Washington, D.C., for a summary.

44 Steve W.J. Kozlowski, Rebecca J. Toney, Morell E. Mullins, Daniel A. Weissbein, Kenneth G. Brown, and Bradford S. Bell, 2001, “Developing Adaptability: A Theory for the Design of Integrated-Embedded Training Systems,” pp. 59-123 in Advances in Human Performance and Cognitive Engineering Research, US: Elsevier Science/JAI Press; Janis A. Cannon-Bowers and Clint A. Bowers, 2009, “Synthetic Learning Environments: On Developing a Science of Simulation, Games and Virtual Worlds for Training,” pp. 229-261 in Steve W.J. Kozlowski and Eduardo Salas (eds.), Learning, Training and Development in Organizations, Routledge, New York.

3.1.3 Resilience

Resilience is the ability either “to mount a robust response to unforeseen, unpredicted and unexpected demands and to resume normal operations, or to develop new ways to achieve operational objectives.”45 The ability to realize that potential, however, relies on understanding change and having the ability to adapt. Any number of factors can lead to systems that are brittle,46 or unable to change in response to circumstances. A system that is resilient “can maintain the ability to adapt when demands go beyond an organization’s customary operating boundary.”47

Individuals, groups, and systems all have the potential to be resilient, and “human operators can be a source of resilience as a result of initiatives to create safety under resource and performance pressure.”48 However, operators alone cannot be expected to ensure resilience. The systems of which they are a part also need the ability to adapt. Resilience engineering (RE) can make it possible to cope with and recover from unexpected developments. RE provides the tools to develop and manage systems that can anticipate the need for change, because no organization or system can be designed to anticipate all the variability in the real world. Research approaches such as cognitive systems engineering (CSE)49 make it possible to learn how to avoid brittleness in the face of uncertainty and unanticipated variability.

3.1.4 Implications from Cognitive Psychology

Cognitive psychology brings to bear time-tested knowledge based on scientific inquiry that can address each of the findings in Chapter 2 and support the committee’s recommendations in Chapter 4.


45 Christopher Nemeth. 2011. “Adapting to Change and Uncertainty,” Cognition, Technology & Work December 28; Erik Hollnagel, David D. Woods, and Nancy Leveson (eds.). 2006. Resilience Engineering: Concepts and Precepts, Ashgate Publishing, Aldershot, U.K.

46 Nadine B. Sarter, David D. Woods, and Charles E. Billings. 1997. “Automation Surprises,” pp. 1926-1943 in Gavriel Salvendy (ed.), Handbook of Human Factors and Ergonomics, 2d Ed., Wiley, Hoboken, N.J.

47 Christopher Nemeth. 2011. “Adapting to Change and Uncertainty,” Cognition, Technology & Work December 28; Christopher Nemeth, Robert Wears, David D. Woods, Erik Hollnagel, and Richard I. Cook. 2008. “Minding the Gaps: Creating Resilience in Healthcare,” in Kerm Henricksen, James B. Battles, Margaret A. Keyes, and Mary L. Grady (eds.), Advances in Patient Safety: New Directions and Alternative Approaches, Vol. 3, Performance and Tools, AHRQ Publication No. 08-0034-3, Agency for Healthcare Research and Quality, Rockville, Md.

48 Christopher Nemeth. 2011. “Adapting to Change and Uncertainty,” Cognition, Technology & Work December 28; Richard I. Cook and David D. Woods. 1994. “Operating at the Sharp End: The Complexity of Human Error,” pp. 255-310 in Marilyn Sue Bogner (ed.), Human Error in Medicine. Lawrence Erlbaum, Hillsdale, N.J.

49 David D. Woods and Emilie M. Roth. 1988. “Cognitive Systems Engineering,” pp. 3-43 in M. Helander (ed.), Handbook of Human-Computer Interaction, North-Holland, Amsterdam.

Methods that are inherent in cognitive psychology research approaches such as cognitive task analysis (CTA) and cognitive systems engineering—both described in more detail below in the section titled “3.3.1 Decision Aid Design Methodologies”—reveal the key characteristics of uncertain, risky, hazardous work settings. They also reveal actual behaviors of small unit leaders as they overcome obstacles in the pursuit of the goals that their missions dictate. Data from studies of individual and team performance enable the Marine Corps to understand how Marines actually perform cognitive and metacognitive work, from problem detection, naturalistic decision making, sensemaking, planning, and replanning, to adaptation, coordination, attention management, the maintaining of common ground, management of uncertainty and risk, and more. Findings can be used to create new processes, facilities, and information systems and aids to support cognitive work.

The use of cognitive psychology methods ensures that models and solutions based on those data are valid, accurate reflections of the true nature of that world. For example, the close match of the recognition-primed decision making model to actual decision making behavior observed in the field led to its adoption by the Marine Corps. This scientific rigor makes it possible for cognitive psychology to develop solutions that gain traction in actual applications. Cognitive psychology can be used to derive requirements for tasks that form the basis for job design and selection criteria. It can be used to create training content, scenarios, and means to evaluate performance before and after training. It can provide approaches to elicit expertise and transfer it to others efficiently, or use it to develop cognitive aids to assist decision making in any number of applications.

Descriptive approaches to decision making will continue as the foundation of contributions to small unit leader decision making behavior that includes sensemaking, situational assessment, problem detection, planning, and coordination and collaboration. Cognitive psychology methods used in field research can reveal individual and group initiatives that Marines develop to perform new missions in new settings. These methods can then be used to provide the basis for the design and development of useful and usable information systems, the next generation of lessons learned, and tactical decision aids (TDAs). Shedding light on team behaviors can support new approaches to team training, rapid-response training capabilities that allow faster reaction to the evolution of enemy tactics and techniques, courses of instruction that are scaled to the company level, and, finally, training systems that respond to field experience, to incorporate and convey lessons learned more quickly. Cognitive psychology can continue to be used to discover and to learn about new challenges that small unit leaders face as the missions and role of the Marine Corps evolve.

Following is a summary of recent work in cognitive neuroscience, which seeks to use insight from functional neuroanatomy to extend theoretical models of decision making, as well as to explain individual differences in decision making performance.

3.2.1 Overview of Cognitive Neuroscience

Cognitive neuroscience is an emerging academic area that uses measures of brain function to examine decision making in a way that complements cognitive psychology, with its primary focus on overt behaviors and associated internal mental processes. Using functional magnetic resonance imaging (fMRI) and electroencephalographic measures of brain activity, the science aims to uncover specific brain-behavior relationships that could explain behavior and performance in decision making. The approach is only beginning to examine what cognitive factors might lead to successful decision making, with recent experiments drawing mainly on tasks involving economic exchange. Clearly, these are relatively constrained compared to the complex decision making environment of the small unit leader (e.g., adaptive decision making in the face of an adversary). Initial results demonstrate that it is possible to separate decision making into distinct cognitive components that are supported by separable brain systems.50 A consistent observation across studies is that a particular decision depends on (1) the way that a person integrates available evidence and (2) the way that he or she estimates the expected value placed on the decision.51

Much of the research in cognitive neuroscience related to decision making examines the influences that determine the estimated value of one choice over another. In this framework, valuation is an individual’s determination of the possible risks, rewards, and costs, and also the way in which the person’s value is shaped by societal influences. Functional magnetic resonance imaging studies demonstrate that different sources of reward, costs, and societal influences are processed in distinct networks of the brain.52 Furthermore, reward networks can be distinguished from networks that track the relative risk of a decision.53 This dissociation of brain networks for reward and risk raises a fundamental question about how the brain reconciles competing influences. There is growing consensus that the brain uses another network to perform a mental calculus that combines different rewards and risks into a common valuation.54 It is this calculation of value across many inputs rather than a single reward or risk that ultimately directly shapes a decision. These distinctions can be used to explain why decisions may often appear to be “irrational” if all factors influencing an internalized valuation (and subsequent externalized and observed decision) are not considered.

50 Vinod Venkatraman, John W. Payne, James R. Bettman, Mary Francis Luce, and Scott A. Huettel, 2009, “Separate Neural Mechanisms Underlie Choices and Strategic Preferences in Risky Decision Making,” Neuron 62:593-602; Joseph W. Kable and Paul W. Glimcher, 2009, “The Neurobiology of Decision: Consensus and Controversy,” Neuron 63(6):733-745.

51 Jan Peters and Christian Büchel, 2009, “Overlapping and Distinct Neural Systems Code for Subjective Value During Intertemporal and Risky Decision Making,” Journal of Neuroscience 29(50):15727-15734; David V. Smith, Benjamin Y. Hayden, Trong-Kha Truong, Allen W. Song, Michael L. Platt, and Scott A. Huettel, 2010, “Distinct Value Signals in Anterior and Posterior Ventromedial Prefrontal Cortex,” Journal of Neuroscience 30(7):2490-2495.

52 Jamil Zaki and Jason P. Mitchell. 2011. “Equitable Decision Making Is Associated with Neural Markers of Intrinsic Value,” Proceedings of the National Academy of Sciences of the United States of America 108(49):19761-19766.

53 Peter N.C. Mohr, Guido Biele, and Hauke R. Heekeren, 2010, “Neural Processing of Risk,” Journal of Neuroscience 30(19):6613-6619; Gui Xue, Zhong-Lin Lu, Irwin P. Levin, and Antoine Bechara, 2010, “The Impact of Prior Risk Experiences on Subsequent Risky Decision-Making: The Role of the Insula,” Neuroimage 50:709-716.

3.2.2 Implications from Cognitive Neuroscience

Cognitive processes used for estimating value and evaluating evidence are potential sources of individual variation that could explain differences in how people make decisions. This possibility has been supported by neuroscience research using fMRI experiments to study tasks that are specifically associated with risk taking,55 avoiding uncertainty,56 and expending effort.57 These studies are particularly important because they provide objective metrics of decision making that could—the word could is emphasized here—be applied in the assessment of small unit leader decision making “style” or “biases” (e.g., toward or away from risk). One potential application could be in the selection process: if cognitive neuroscience techniques eventually prove useful in the identification of objective metrics of decision making performance by “good” small unit leaders, for example, then such techniques might be applied to the screening of potential small unit leader candidates. Recent research also suggests that some of the processes influencing decision making performance can be modified through training to account for individual differences seen across populations. For example, reward-sensitive individuals can be trained to increase working memory and increase performance on tasks that do not include a reward.58 Thus, another potential application is to assess the progress of an individual’s decision making effectiveness during training.

54 Joseph W. Kable and Paul W. Glimcher, 2010, “An ‘As Soon As Possible’ Effect in Human Intertemporal Decision Making: Behavioral Evidence and Neural Mechanisms,” Journal of Neurophysiology 103(5):2513-2531; Guillaume Sescousse, Jérôme Redouté, and Jean-Claude Dreher, 2010, “The Architecture of Reward Value Coding in the Human Orbitofrontal Cortex,” Journal of Neuroscience 30:13095-13104.

55 Jan B. Engelmann and Diana Tamir. 2009. “Individual Differences in Risk Preference Predict Neural Responses During Financial Decision-Making,” Brain Research 1290:28-51.

56 Koji Jimura, Hannah S. Locke, and Todd S. Braver. 2010. “Prefrontal Cortex Mediation of Cognitive Enhancement in Rewarding Motivational Contexts,” Proceedings of the National Academy of Sciences of the United States of America 107:8871-8876.

57 Joseph T. McGuire and Matthew M. Botvinick. 2010. “Prefrontal Cortex, Cognitive Control, and the Registration of Decision Costs,” Proceedings of the National Academy of Sciences of the United States of America 107:7922-7926.

58 Koji Jimura, Hannah S. Locke, and Todd S. Braver. 2010. “Prefrontal Cortex Mediation of Cognitive Enhancement in Rewarding Motivational Contexts,” Proceedings of the National Academy of Sciences of the United States of America 107:8871-8876.

Cognitive neuroscience is still in its infancy and is far from being a validated and proven practical means for selection and/or training assessment. However, it should be recognized that the methodology is evolving rapidly, with new techniques emerging to map the strength of connections between brain regions based on either structural information (diffusion tensor and diffusion spectrum imaging)59 or functional information (resting-state fMRI).60 It remains to be seen if these methods could be used to select for or assess the decision making performance of small unit leaders.

3.3 ENGINEERING APPROACHES TO SUPPORT DECISION MAKING

Engineering methods have long been leveraged to enhance decision making through the development of what are called decision aids; a complete review of that history is beyond the scope of this report.61 Here, the focus is on five areas of opportunity that the committee considered to be the most relevant to the development of decision aids for small unit leaders: (1) design methodology, (2) information integration, (3) algorithmic decision aids, (4) human-computer interaction, and (5) physiological monitoring and augmented cognition.

3.3.1 Decision Aid Design Methodologies

Engineering “solutions” oftentimes start with what the engineer thinks should be the solution, rather than with an assessment of what the end user is trying to accomplish. As a result, fielded decision aids may not only fail to satisfy the fundamental goal of aiding the user—in this case the small unit leader—but may actually hinder the user in any number of ways (e.g., by being so cumbersome to use that the user’s workload ends up being increased rather than reduced by the aid; by being used outside the bounds for which they were designed; by being sufficiently complex so as to obfuscate their inner workings, thereby reducing trustworthiness; and so on).62,63 In response, designers in the systems engineering and human factors community have come together and identified general approaches to the design and development of systems that account for user needs while recognizing the capabilities and limitations of both the user and the decision aid being developed. Rather than a summary of the extensive literature in this area, what follows is a very brief overview of two methodologies introduced in the discussion above: cognitive task analysis and cognitive systems engineering.

59 Danielle S. Bassett, Jesse A. Brown, Vibhas Deshpande, Jean M. Carlson, and Scott T. Grafton. 2011. “Conserved and Variable Architecture of Human White Matter Connectivity,” Neuroimage 54(2):1262-1279.

60 Gagan S. Wig, Bradley L. Schlaggar, and Steven E. Petersen. 2011. “Concepts and Principles in the Analysis of Brain Networks,” Annals of the New York Academy of Sciences 1224(1):126-146.

61 An early example is the more than 2,000-year-old astrolabe, an aid for the analog calculation of the locations of celestial bodies, and later, an aid for navigation.

62 See, for example, Steven Casey, 1998, Set Phasers on Stun: And Other True Tales of Design, Technology, and Human Error, Aegean Publishing Company, Santa Barbara, Calif.

63 Thomas B. Sheridan. 2002. Humans and Automation: System Design and Research Issues, Wiley, New York.

3.3.1.1 Cognitive Task Analysis

Researchers cannot expect decision makers to explain accurately why they have made decisions,64 and so researchers have developed methods to learn from experts. Cognitive task analysis65 is a set of methods, such as semi-structured interviews and observations, that are used to discover the cues and context that influence how people make decisions. These methods reveal the actual demands and obstacles that practitioners confront in their work and serve as a basis to make inferences about the judgment and decision process. Results of CTA research are used to develop representations that include descriptions, diagrams, and models. Although the committee did not perform a complete CTA, the interviews that the committee conducted at Quantico, Virginia, among combat-experienced Marines relied on a CTA approach to elicit their expert knowledge (see Appendix E for the interview protocol).

3.3.1.2 Cognitive Systems Engineering

The science base described in this report is critical to the improvement of small unit leader decision making. However, as noted in a 2007 study from the National Research Council (NRC),66 it is not sufficient in itself to ensure the successful development, acquisition, deployment, operation, and maintenance of effective human-centered systems to support that decision making. Cognitive systems engineering67,68 extends CTA methods to the development of tools, processes, and facilities to support cognitive work. Although there are many different approaches to the analysis components of CSE (e.g., cognitive task analysis,69 cognitive work analysis,70 work-centered support systems,71 applied cognitive task analysis72), they share a common view of system development as being human-centered. The incorporation of CSE into the Department of Defense (DOD) systems acquisition process will account for the complex individual and team cognitive activity that Marines perform. It will also ensure that systems involving decision support incorporate human-centered design from initial requirements specification through development, evaluation, and eventual fielding.

64 Richard E. Nisbett and Timothy D. Wilson. 1977. “Telling More Than We Can Know: Verbal Reports on Mental Processes,” Psychological Review 84(3):231-259.

65 Beth Crandall, Gary A. Klein, and Robert R. Hoffman. 2006. Working Minds: A Practitioner’s Guide to Cognitive Task Analysis, MIT Press, Cambridge, Mass.

66 National Research Council. 2007. Richard W. Pew and Anne S. Mavor (eds.), Human-System Integration in the System Development Process, The National Academies Press, Washington, D.C.

67 David D. Woods and Emilie M. Roth. 1988. “Cognitive Systems Engineering,” pp. 3-43 in M. Helander (ed.), Handbook of Human-Computer Interaction, North-Holland (Elsevier Science Publishers), New York.

68 Erik Hollnagel and David D. Woods. 2005. Joint Cognitive Systems: Foundations of Cognitive Systems Engineering, Taylor and Francis, Boca Raton, Fla.

3.3.2 Information Integration: Collection, Fusion, and Assessment

Good decision making requires an accurate assessment of the current and evolving situation, as well as a clear understanding of the options available for dealing with a given situation. Here the opportunities for improving the assessment half of the problem are discussed, and the next section addresses opportunities for improving associated option-generation and option-selection activities.

As discussed in an extensive literature reaching back to the late 1980s73 and recently documented in a collection of [critical] essays,74 situational awareness (SA) entails “the perception of the critical elements in the environment within a volume of time and space, the comprehension of their meaning, and the projection of their status in the near future.”75

SA enables decision makers to assess the state of the world, important features in a scene, estimates for progress against plan, and adversaries’ intentions. Given the explosion of air- and ground-based sensors, providing adequate SA to the Marine may seem a solved problem. However, four significant challenges exist:


69 Jan Maarten Schraagen, Susan F. Chipman, and Valerie L. Shalin (eds.). 2000. Cognitive Task Analysis (Expertise: Research and Application Series), Lawrence Erlbaum Associates, Mahwah, N.J.

70 Kim J. Vicente. 1999. Cognitive Work Analysis: Toward Safe, Productive, and Healthy Computer-Based Work, CRC Press, Boca Raton, Fla.

71 Robert G. Eggleston, Emilie M. Roth, and Ronald Scott. 2003. “A Framework for Work-Centered Product Evaluation,” pp. 503-507 in Proceedings of the Human Factors and Ergonomics Society 47th Annual Meeting, Human Factors and Ergonomics Society, Santa Monica, Calif.

72 Laura G. Militello and Robert J.B. Hutton. 1998. “Applied Cognitive Task Analysis (ACTA): A Practitioner’s Toolkit for Understanding Cognitive Task Demands,” Ergonomics 41(11):1618-1641.

73 Martin L. Fracker. 1988. “A Theory of Situational Assessment: Implications for Measuring Situation Assessment,” pp. 102-106 in Proceedings of the Human Factors Society 32nd Annual Meeting, Santa Monica, Calif.

74 Eduardo Salas and Aaron S. Dietz. 2011. Situational Awareness, Ashgate Publishing Co, Burlington, Vt.

75 Mica Endsley. 1995. “Toward a Theory of Situation Awareness in Dynamic Systems,” Human Factors 37(1):32-64.

1. Perceiving key elements76 requires properly emplaced sensors, be they human eyes on a target, or unmanned aerial vehicles (UAVs) with electro-optical/forward-looking infrared sensors onboard.

2. The collected sensor data must be processed to provide a perception of the elements. Humans can do this with relative ease, but machine sensors must be augmented with sophisticated processing systems.

3. Perceived elements must be aggregated to define a situation that is operationally relevant to the Marine charged with making decisions, at whatever echelon is being considered. Humans do this quite well, particularly with training and practice, but reliable machine-based “situation assessors” have yet to be developed.

4. The assessment of dynamic situations requires some form of extrapolation in order to anticipate how events might unfold. Although computational analysis can support human judgment, reliable predictive “situational forecasters” simply do not exist.

Shortcomings at all levels of the SA-chain have been exacerbated by the rapid proliferation of sensor feeds that are generating petabytes of data.77 However, considerable effort is being put into new sensor-management, data-processing, and information-integration capabilities, all of which may significantly benefit small unit decision making in the following areas:

• Collection management (CM) capabilities for sensor selection, planning, and placement are more common in upper echelons of the military than among small units. However, the provision of appropriate sensor systems and CM capabilities could enhance the small unit’s ability to develop SA. For example, simple “apps” (applications) that employ novel optimization algorithms can help small unit leaders deploy static and dynamic sensor systems so as to maximize information gains.

• Sensor signal processing to convert collected data into higher-order elements (objects, events, relationships) will be critical in addressing the data deluge. For example, the explosive growth of UAV-supplied full-motion video (FMV) will overwhelm exploitation methodologies that rely on human processing.78 Data fusion, feature detection and identification, pattern recognition, and anomaly detection can support human analysts by aggregating large amounts of raw data into elements of interest—not just for FMV streams but for other sensor systems as well. The fusion of different classes of data (e.g., imagery and acoustic streams) is quite difficult, requiring not only the georegistration of separately emplaced sensors and their fields of sensitivity, but also an understanding of how each “registers” different elements (e.g., a truck). Although both single-sensor and multisensor data fusion technologies are still under development, emerging methods and technologies are likely to play an important role in augmenting the efficiency and accuracy of human analysts.

76 Critical elements vary from situation to situation: in a “kinetic” situation this could be a small adversary group waiting in ambush; in a “nonkinetic” situation, the banker funding a bomb maker, and so forth. Elements can be objects (e.g., individual adversaries, weapons systems, etc.), relationships (parts of a formation, members of a terrorist cell, etc.), or events (explosions, IED emplacements, food riots, etc.).

77 Comment by Lt Gen David A. Deptula, USAF, in Stew Magnuson, 2010, “Military ‘Swimming in Sensors and Drowning in Data,’” National Defense Magazine, January.

78 That is, detecting the elements of interest to the decision maker.

• Estimation of the current situation is primarily a manual process.79 Little support exists for small units, especially in hybrid engagements calling for “nonkinetic” situational assessment (e.g., “What is the sentiment of the village toward our presence here?”). However, two related NRC reports80,81 have recently suggested that methodologies such as expert systems82 and case-based reasoning83 might be used to integrate disparate elements into situational assessments to support small unit decision making in complex hybrid engagements. More sophisticated probabilistic methodologies, including Bayesian Belief Networks,84,85 Dynamic Bayesian Belief Networks, and Probabilistic Relational Models,86 bear investigation, as do network models that support the visualization of social relationships, communication pathways, and information dissemination. Moreover, machine learning techniques87,88 may enable knowledge capture and reuse: for example, encoding information about recent events to support Marines in assessing adversarial intentions and objectives. Finally, the DOD is investing in new methods and techniques to support nonkinetic SA; for example, in the Human Socio-Cultural Behavior (HSCB) modeling program managed by the Office of Naval Research.89 It must be recognized, however, that weakly constrained “nonkinetic” situations present immense methodological and technical challenges for modeling and simulation that must be addressed if programs such as HSCB are to be successful.

79 Typically accomplished with simple “laydown” maps showing blue and red forces. More advanced displays could be envisioned that used sensor data on enemy movements and fires, fused with terrain features and blue force information, to serve as the basis for a probabilistic threat assessment, visually displayed by “heat maps” or threat-density maps, showing relative concentration densities.

80 National Research Council. 1998. “Situation Awareness,” pp. 172-202 in Richard Pew and Anne Mavor (eds.), Modeling Human and Organizational Behavior, National Academy Press, Washington, D.C.

81 National Research Council. 2008. Greg L. Zacharias, Jean MacMillan, and Susan B. Van Hemel (eds.), Behavioral Modeling and Simulation: From Individuals to Societies, The National Academies Press, Washington, D.C.

82 Peter Jackson. 1998. Introduction to Expert Systems, Addison Wesley, Boston, Mass.

83 Agnar Aamodt and Enric Plaza. 1994. “Case-Based Reasoning: Foundational Issues, Methodological Variations, and System Approaches,” AI Communications 7(1):39-59.

84 Gregory F. Cooper. 1990. “The Computational Complexity of Probabilistic Inference Using Bayesian Belief Networks,” in Artificial Intelligence 42(2-3).

85 Ann E. Nicholson and J. Michael Brady. 1994. “Dynamic Belief Networks for Discrete Monitoring,” in IEEE Systems, Man, and Cybernetics Society 24(11).

86 Lise Getoor, Nir Friedman, Daphne Koller, and Avi Pfeffer. 2001. “Learning Probabilistic Relational Models,” pp. 307-338 in Sašo Džeroski and Nada Lavrač (eds.), Relational Data Mining, Springer, New York.

87 Dimitri P. Bertsekas and John Tsitsiklis. 1996. Neuro-Dynamic Programming, Athena Scientific, Nashua, N.H.

88 Pieter Abbeel and Andrew Y. Ng. 2004. “Apprenticeship Learning Via Inverse Reinforcement Learning,” in Proceedings of the Twenty-first International Conference on Machine Learning, ACM, New York.

• Forecasting may benefit from the development of computational models and simulations that have a sound theoretical basis and have undergone rigorous verification and validation in the intended operational scenario. Forecasting also requires that systems exploit up-to-date information in order to ensure operational relevance and accuracy. Many “kinetic” red force (adversary) tracking and projection models have been designed with these capabilities, but providing the same level of reliability in less constrained, “nonkinetic” situations is an immensely more difficult problem.
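The collection-management item in the list above mentions optimization algorithms that help place sensors so as to maximize information gain. The following Python sketch illustrates one simple possibility, a greedy maximum-coverage heuristic. It is an illustration under stated assumptions, not a method described in this report: the site names, the map cells each site can observe, and the function name `greedy_sensor_placement` are all hypothetical, and the count of newly observed cells stands in for information gain.

```python
from typing import Dict, List, Set


def greedy_sensor_placement(coverage: Dict[str, Set[str]], budget: int) -> List[str]:
    """Choose up to `budget` sites, each time adding the site that observes
    the most not-yet-covered cells (greedy maximum-coverage heuristic)."""
    chosen: List[str] = []
    covered: Set[str] = set()
    for _ in range(budget):
        # Marginal gain of a site = number of new cells it would add.
        # Iterating in sorted order makes tie-breaking deterministic.
        best_site = max(
            (site for site in sorted(coverage) if site not in chosen),
            key=lambda site: len(coverage[site] - covered),
            default=None,
        )
        if best_site is None or not (coverage[best_site] - covered):
            break  # no remaining site adds anything new
        chosen.append(best_site)
        covered |= coverage[best_site]
    return chosen


# Hypothetical candidate sites and the map cells each one can observe.
SITES = {
    "ridge": {"a", "b", "c"},
    "bridge": {"c", "d"},
    "tower": {"a", "b"},
    "well": {"e"},
}

two_sensors = greedy_sensor_placement(SITES, budget=2)
```

A greedy heuristic of this kind is fast enough for a handheld device, but it does not guarantee the best possible placement; a fielded aid would also need a sensing model that reflects terrain, sensor range, and the elements of interest.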

In summary, accurate SA enables the decision maker to monitor events, to determine if the objectives and constraints of a current operational plan or solution are being followed, and perhaps to detect unforeseen opportunities that support additional goals or objectives. The decision maker may choose to pursue the current plan, or create a new plan to accommodate emerging problems, or capitalize on new opportunities (regarding replanning, see below). Given today’s high-resolution sensors, low-cost flight control and guidance systems, and computing power, such aids are not unrealistic, although they require significant development, testing, and verification and validation.
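The probabilistic estimation methods mentioned in this section can be illustrated with the simplest possible case: a single application of Bayes' rule, repeated as each new cue arrives. The Python sketch below is a minimal illustration, not a Bayesian belief network of the kind cited above. The hypotheses, cues, and probabilities are invented for the example, and the cues are assumed to be conditionally independent given the hypothesis, which is a strong simplification.

```python
from typing import Dict


def bayes_update(prior: Dict[str, float], likelihood: Dict[str, float]) -> Dict[str, float]:
    """Return the posterior P(h | cue) given the prior P(h) and P(cue | h)."""
    unnormalized = {h: prior[h] * likelihood[h] for h in prior}
    total = sum(unnormalized.values())
    if total == 0:
        raise ValueError("cue has zero probability under every hypothesis")
    return {h: p / total for h, p in unnormalized.items()}


# Hypothetical numbers for illustration only.
belief = {"threat": 0.1, "no_threat": 0.9}
cue_likelihoods = [
    {"threat": 0.7, "no_threat": 0.2},  # e.g., an unusually empty street
    {"threat": 0.6, "no_threat": 0.3},  # e.g., an unfamiliar parked vehicle
]
for likelihood in cue_likelihoods:
    # Each cue is folded in sequentially; yesterday's posterior is today's prior.
    belief = bayes_update(belief, likelihood)
```

Computed per map cell, a posterior of this kind could feed a threat-density display, although eliciting credible likelihoods for “nonkinetic” situations remains the hard part.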

3.3.3 Tactical Decision Aids for Course-of-Action Development and Planning

Marine Corps Doctrinal Publication One (MCDP1) states:

Decision making may be an intuitive process based on experience. This will likely be the case at lower levels and in fluid, uncertain situations. Alternatively, decision making may be a more analytical process based on comparing several options. This will more likely be the case at higher levels or in deliberate planning situations.90

89 See http://www.onr.navy.mil/Science-Technology/Departments/Code-30/All-Programs/Human-Behavioral-Sciences.aspx. Accessed December 3, 2011. The HSCB program seeks to understand the human, social, cultural, and behavioral factors that influence human behavior; improve the ability to model these influences and understand their impact on human behavior at the individual, group, and society level of analysis; improve computational modeling and simulation capabilities, visualization software tool sets, and training and mission rehearsal systems that provide forecasting capabilities for sociocultural responses; and develop and demonstrate an integrated set of model description data (metadata), information systems, and procedures that will facilitate assessment of the software engineering quality of sociocultural behavior models, their theoretical foundation, and the translation of theory into model constructs.

Tactical decision aids (TDAs) have been used to support military decision making for many years. For example, some TDAs have employed case-based reasoning to generate potential courses of action (COAs); examples are BattlePlanner,91 JADE (Joint Assistant for Deployment and Execution),92 and HICAP (Hierarchical Interactive Case-based Architecture for Planning).93 Others use high-level modeling and simulation, including qualitative reasoning, to help decision makers evaluate COAs.94,95 Such technologies, however, are geared for situations that afford deliberative information processing and assessment—for example, to support decision makers at higher command echelons in assessing order of battle. In contrast, small units engage in both deliberative planning and rapid, high-consequence decision making in real time. The former affords time and resources for deliberate information collection and processing, but the latter does not. Small units may benefit from TDAs that support both modes and which provide small unit leaders with a “playbook” of cues and frameworks to support the accurate and efficient assessment of incoming information, as discussed above in the description of RPD models of decision making.

Inexpensive and powerful computers, coupled with the development of efficient algorithms,96 mean that portable TDAs may provide small unit leaders with access to efficient and useful optimization techniques. Methods from operations research,97 including optimization formulations (e.g., mathematical programming, dynamic programming) and associated algorithms (e.g., the many variants of the simplex method, branch-and-bound, interior point methods, approximate dynamic programming) have been incorporated into TDAs to identify and evaluate near-best solutions, given constraints such as task scheduling, resource availability, and

![]()

90 Gen Charles C. Krulak, USMC, Commandant of the Marine Corps. 1997. Warfighting, Marine Corps Doctrinal Publication One, Washington, D.C., June 20, pp. 85-86.

91 Marc Goodman. 1989. “CBR in Battle Planning,” in Proceedings of the Second Workshop on Case-Based Reasoning, Pensacola Beach, Fla.

92 Alice M. Mulvehill and Joseph A. Caroli. 1999. “JADE: A Tool for Rapid Crisis Action Planning,” in Proceedings of the 4th International Command and Control Research and Technology Symposium, Providence, R.I.

93 Hector Muñoz-Avila, David W. Aha, Leonard A. Breslow, and Dana S. Nau. 1999. “HICAP: An Interactive Case-Based Planning Architecture and Its Application to Noncombatant Evacuation Operations,” in Proceedings of the Ninth Conference on Innovative Applications of Artificial Intelligence, AAAI Press, Orlando, Fla.

94 Johan de Kleer and Brian C. Williams (eds.). 1991. Artificial Intelligence Journal 51 (Special Issue on Qualitative Reasoning About Physical Systems II).

95 Benjamin J. Kuipers. 1994. Qualitative Reasoning: Modeling and Simulation with Incomplete Knowledge. MIT Press, Cambridge, Mass.

96 Jorge Nocedal and Stephen J. Wright. 2006. Numerical Optimization, Springer, New York.

97 Wayne Winston. 2004. Operations Research: Applications and Algorithms, Duxbury Press, Belmont, Calif.

risk. More recently, genetic and evolutionary algorithms,98,99,100 as well as distributed agent-based approaches such as market-based optimization,101 have provided new techniques to support tactical decision making. Aids that incorporate these techniques can generate “satisficing” solutions relatively quickly, but they also allow better solutions to emerge over time. In addition, such methods can be relatively robust to uncertainty, data staleness, and brittleness of the optimum, all of which are problematic for traditional approaches based on operations research (OR). Small units may benefit from technologies that incorporate such methods.
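
The "satisfice quickly, improve over time" behavior described above can be illustrated with a minimal sketch. It is not drawn from any fielded TDA: the toy task-scheduling problem, the function names, and the single-gene mutation scheme are all assumptions made for illustration, and a (1+1) evolutionary search (the simplest member of the evolutionary family) stands in for a full genetic algorithm.

```python
import random

def makespan(assignment, durations, n_units):
    """Time at which the busiest unit finishes (lower is better)."""
    loads = [0.0] * n_units
    for task, unit in enumerate(assignment):
        loads[unit] += durations[task]
    return max(loads)

def anytime_evolve(durations, n_units, generations, seed=0):
    """Yield (best_cost, best_assignment) after every generation.

    A usable ("satisficing") plan exists from the first yield, and the
    best-so-far cost never gets worse, so the caller may stop at any time.
    """
    rng = random.Random(seed)
    # Hypothetical starting plan: assign each task to a random unit.
    best = [rng.randrange(n_units) for _ in durations]
    best_cost = makespan(best, durations, n_units)
    yield best_cost, list(best)
    for _ in range(generations):
        # Mutate one task's assignment and keep the child if it is no worse.
        child = list(best)
        child[rng.randrange(len(child))] = rng.randrange(n_units)
        cost = makespan(child, durations, n_units)
        if cost <= best_cost:
            best, best_cost = child, cost
        yield best_cost, list(best)
```

Because the generator yields after every generation, a leader with little time could take the first few results, while one with more time could let the search continue.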

Tactical decision aids that incorporate novel optimization algorithms might also be very useful for deliberative planning at the small unit level. For example, the resupply of dispersed units can present significant logistical challenges, but TDAs could be developed to help company commanders ensure that their units have the required materiel. A route-planning TDA could search among possible convoy routes to satisfy traversability constraints, minimize travel time, and maximize protection from possible threats. When the decision maker receives new threat information, the TDA would support modification of the route, just as a vehicle driver might modify a route proposed by Google Maps when learning of a road closure due to, say, flooding.
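
A route-planning aid of this kind can be sketched as a shortest-path search over a road network. The sketch below is an assumption-laden illustration, not a description of any existing TDA: the road network, the `threat_weight` parameter, and the choice to fold travel time and threat exposure into a single weighted cost are all hypothetical, and Dijkstra's algorithm is used simply because it is a standard method for this kind of search.

```python
import heapq

def plan_route(edges, start, goal, threat_weight=1.0, blocked=frozenset()):
    """Return (cost, path) for the cheapest route, or None if no route exists.

    edges maps (node_a, node_b) -> (travel_minutes, threat_score); roads are
    treated as two-way. Road segments listed in `blocked` are not traversable.
    """
    graph = {}
    for (a, b), (minutes, threat) in edges.items():
        if (a, b) in blocked or (b, a) in blocked:
            continue  # traversability constraint
        cost = minutes + threat_weight * threat  # assumed trade-off between time and threat
        graph.setdefault(a, []).append((b, cost))
        graph.setdefault(b, []).append((a, cost))
    frontier = [(0.0, start, [start])]
    visited = set()
    while frontier:
        cost, node, path = heapq.heappop(frontier)
        if node == goal:
            return cost, path
        if node in visited:
            continue
        visited.add(node)
        for nxt, step in graph.get(node, []):
            if nxt not in visited:
                heapq.heappush(frontier, (cost + step, nxt, path + [nxt]))
    return None

# Hypothetical network: the northern road is faster but more exposed.
roads = {
    ("base", "north"): (10, 0), ("north", "outpost"): (10, 5),
    ("base", "south"): (15, 0), ("south", "outpost"): (15, 0),
}
```

Replanning after new information amounts to calling `plan_route` again with an updated `blocked` set or updated threat scores, much as a driver would reroute around a closed road.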

Similarly, a TDA might help small unit leaders manage sensor arrays and collection assets in order to maximize the probability of interdicting insurgents, or help them decide how to distribute improvised explosive device (IED) clearance assets over a road network.102 In such scenarios, optimization techniques may be useful in helping small unit leaders generate sets of possible actions with estimates of relative “goodness” with respect to the mission objectives that have been made explicit to the TDA.

TDAs might also have a role in rapidly unfolding situations, such as those encountered by small units when hybrid engagements shift from nonkinetic to kinetic states. As discussed in Chapter 2, many of the difficult decisions faced by Marines are associated with the question of whether to employ fires, given the risk of collateral damage. A very simple TDA could help the small unit leader assess the probability of overall physical damage in a target zone, while a more informative aid could estimate the probability of damage to specific intended targets


98 David E. Goldberg. 1989. Genetic Algorithms in Search, Optimization and Machine Learning, Kluwer Academic Publishers, Boston, Mass.

99 David E. Goldberg. 2002. The Design of Innovation: Lessons from and for Competent Genetic Algorithms, Addison-Wesley, Reading, Mass.

100 David B. Fogel. 2006. Evolutionary Computation: Toward a New Philosophy of Machine Intelligence, 3d ed., IEEE Press, Piscataway, N.J.

101 Dan Schrage, Christopher Farnham, and Paul G. Gonsalves. 2006. “A Market-Based Optimization Approach to Sensor and Resource Management,” in Proceedings of SPIE Defense and Security, Vol. 6229, Orlando, Fla., April.

102 Alan R. Washburn and P. Lee Ewing. 2011. “Allocation of Clearance Assets in IED Warfare,” Naval Research Logistics 58(3):180-187.

and associated collateral features. An enhanced map showing relative locations of true and collateral targets, together with damage probability contours, might allow small unit leaders to make more efficient and reliable risk assessments than they could by recalling and mentally processing the relevant factors while under stress. In either case, the applicable mathematics are well understood,103 and the required computations could easily be performed on a handheld or laptop device.
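
To give a sense of how light the computation behind such contours can be, the sketch below uses one common textbook simplification; it is an assumption for illustration, not a model taken from this report. It assumes a circular normal aiming error with per-axis standard deviation `aim_sigma_m` and a Gaussian damage function exp(-r^2 / (2 b^2)) with scale b = `effect_scale_m`. Under those two assumptions the damage probability at a point offset d from the aim point has the closed form (b^2 / (b^2 + sigma^2)) * exp(-d^2 / (2 (b^2 + sigma^2))). All parameter names and values are hypothetical.

```python
import math

def damage_probability(offset_m, aim_sigma_m, effect_scale_m):
    """Damage probability at a point offset_m from the aim point.

    Assumes circular normal aiming error and a Gaussian damage function;
    both are modeling assumptions, not measured characteristics.
    """
    total_var = aim_sigma_m ** 2 + effect_scale_m ** 2
    peak = effect_scale_m ** 2 / total_var
    return peak * math.exp(-offset_m ** 2 / (2.0 * total_var))

def contour_radius(prob, aim_sigma_m, effect_scale_m):
    """Radius of the circular contour on which damage probability equals prob.

    Returns None when prob is not positive or exceeds the probability at the
    aim point, in which case no such contour exists.
    """
    total_var = aim_sigma_m ** 2 + effect_scale_m ** 2
    peak = effect_scale_m ** 2 / total_var
    if not 0.0 < prob <= peak:
        return None
    return math.sqrt(2.0 * total_var * math.log(peak / prob))
```

Each contour on the enhanced map would then be a circle of radius `contour_radius(p, ...)` around the aim point, which is a handful of arithmetic operations per contour.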

It is not difficult to provide additional examples of TDAs that could be used effectively by the small unit leader. However, there are important caveats:

• As noted earlier, the “front-end” analysis (e.g., CTA, CSE) is required in order to clearly identify the problem being addressed. This must be done before any technical formulation or algorithm development. Doing it the other way round, and attempting to make the TDA “user-friendly” after the fact, is a sure route to another discarded tactical tool.

• A TDA designed for COA development or mission planning will only be as good as the assessed-situation data feeding it. If the TDA is “optimizing” for the wrong situation, the aiding that it offers may be worse than none at all.

• Critical attention needs to be paid to what is being “optimized” and what assumptions are being made by the optimization algorithms. If the optimization metric is not the same as that being implicitly held by the user operating the TDA, and/or if the TDA design assumptions are being violated by the actual scenario of use, then the TDA advice is unlikely to be optimal in any sense of the word.

• Consideration should be given to other factors in the design of the TDA besides optimality in some predefined solution space. For example, robustness of the proposed solution104 may be much more important than optimality if the operating context is fraught with uncertainty. Likewise, if the user cannot understand the solution logic (“Why did it suggest that?”), a simpler technique that yields less optimal solutions may be more appropriate. Some decisions may be better supported by explanatory capabilities that enable the user to trace the TDA’s “reasoning.” In addition, effective visualization modes must be developed. Map-based aids are the most popular, but some situations call for totally novel representations (e.g., influence analysis may call for social network visualization and logistics planning for Gantt charts). Finally, ease of training on how to use the new TDA105 and its ease of integration into the existing operations will both be strong determinants of technology adoption.
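
The tension between optimality and robustness raised in the caveats above can be made concrete with a small sketch. The payoff table, scenario probabilities, and function names are hypothetical, and the worst-case (maximin) rule is only one of several possible robustness criteria.

```python
def best_by_expected(payoffs, probs):
    """Pick the option with the highest probability-weighted payoff."""
    return max(payoffs, key=lambda name: sum(p * v for p, v in zip(probs, payoffs[name])))

def best_by_worst_case(payoffs):
    """Pick the option whose worst scenario is least bad (maximin)."""
    return max(payoffs, key=lambda name: min(payoffs[name]))

def payoff_spread(payoffs):
    """Sensitivity of each option's payoff to which scenario occurs."""
    return {name: max(values) - min(values) for name, values in payoffs.items()}

# Hypothetical payoffs for two options under three possible scenarios.
payoffs = {"fast_route": [10, 9, 1], "steady_route": [7, 7, 6]}
probs = [0.5, 0.4, 0.1]  # assumed scenario likelihoods
```

With these numbers the expected-value rule selects `fast_route` while the worst-case rule selects `steady_route`. If the assumed probabilities are themselves uncertain, the option with the smaller payoff spread may be the more defensible recommendation.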


103 Alan R. Washburn and Moshe Kress. 2009. Combat Modeling in the International Series in Operations Research and Management Science, Springer, New York.

104 That is, the sensitivity of the solution payoff to unpredictable or uncontrollable variations in the solution space.

105 With today’s “20-something” users expecting to need no training at all in view of their consumer-electronic experiences, significant “usability” issues need to be addressed by future TDA developers.

3.3.4 Human-Computer Interaction: Displays and Controls

Although the above considerations for successful TDA design and deployment are broad, general, and certainly not exhaustive, an extensive, prescriptive, and empirically validated body of specific knowledge exists under the rubric of human-computer interaction, or HCI. The Association for Computing Machinery defines human-computer interaction as “a discipline concerned with the design, evaluation and implementation of interactive computing systems for human use and with the study of major phenomena surrounding them.”106

Many of the science and technology HCI “products” come in the form of best practices by HCI designers and evaluators (e.g., the guidelines noted above). Many more, however, are summarized in formalized guidelines, textbooks, and handbooks,107,108,109 which cover topics that range from the “shallow,” interface-focused topics of how to deal with, in the present case, the TDA interface between human and computer (regarding displays and controls; see below), to the “deep,” under-the-hood topics dealing, on the computer side, with issues like opacity of operation, trustworthiness of the computations, and so on, and on the human side with issues like the operator’s skill level, that person’s mental model of the TDA, and so on.

Interface displays have primarily focused on the visual modality, and display guidance has ranged from very early work in the 1940s on the design of good displays for the aircraft cockpit,110,111 to work in the 1990s focusing on the development of a consistent design framework for visualizing different classes of information,112,113 to current efforts for displaying high-dimensional data sets with complex relationships between data entities. In this last category, a relevant


106 See http://old.sigchi.org/cdg/cdg2.html#2_11. Accessed December 3, 2011.

107 Andrew Sears and Julie A. Jacko (eds.). 2008. The Human-Computer Interaction Handbook: Fundamentals, Evolving Technologies and Emerging Applications, 2d Ed., CRC Press, New York.

108 Christopher D. Wickens, John D. Lee, Yili Liu, and Sallie E. Gordon Becker. 2004. An Introduction to Human Factors Engineering, 2d ed., Pearson Prentice Hall, Upper Saddle River, N.J.

109 See also the Association for Computing Machinery Special Interest Group on Human-Computer Interaction Bibliography: Human-Computer Interaction Resources for links to more than 65,000 related publications. Available at http://hcibib.org. Accessed December 3, 2011.

110 L.F.E. Coombs, 1990, The Aircraft Cockpit: From Stick-and-String to Fly-by-Wire, Patrick Stephens Limited, Wellingborough; see also L.F.E. Coombs, 2005, Control in the Sky: The Evolution and History of The Aircraft Cockpit, Pen and Sword Books Limited, Barnsley, U.K.

111 Mary L. Cummings and Greg L. Zacharias. 2010. “Aircraft Pilot and Operator Interfaces,” in Richard Blockley and Wei Shyy (eds.), Encyclopedia of Aerospace Engineering, Vol. 8, Wiley, Hoboken, N.J.

112 Sig Mejdal, Michael E. McCauley, and Dennis B. Beringer. 2001. Human Factors Design Guidelines for Multifunction Displays, DOT/FAA/AM-01/17, Office of Aerospace Medicine, Washington, D.C.

113 Ben Shneiderman. 1996. “The Eyes Have It: A Task by Data Type Taxonomy for Information Visualizations,” Proceedings of IEEE Symposium on Visual Languages, Boulder, Colo.

concern in today’s hybrid environment is the presentation of complex information associated with a large social network, consisting of multiple categories of entities (nodes) connected by multiple types of relationships (links). Algorithm-centric approaches often take the tack of reducing node and link complexity, computing simple social network analysis measures114 such as node centrality, and displaying abstracted two-dimensional representations of the networks with their associated measures. In contrast, visualization-centric approaches attempt to maintain the full network complexity, and present it in its full richness by means of innovative information-coding schemes (color, luminosity, size, animation, etc.). Examples of this “algorithmic-averse” approach, in which the human does the network parameter extraction, can be found at many web sites.115 Finally, it is important to note that, in the right operational context, the visual modality may not be the best way to display information (hence, auditory alarms), and other modalities should be considered. Indeed, there is a push toward multimodal displays (combined visual, auditory, haptic, etc.) in certain cases, and there are emerging guidelines for their use and design.116
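
The complexity-reducing approach described above can be sketched in a few lines. The example collapses multiple link types between the same pair of nodes into a single tie and then computes degree centrality, one of the simplest centrality measures. The link data and function name are hypothetical, and an operational tool would more likely rely on an established network analysis library than on hand-written code.

```python
def degree_centrality(links):
    """Degree centrality after collapsing link types and parallel links.

    links is an iterable of (node_a, node_b, relation_type). The relation
    type is deliberately discarded, which is the complexity reduction step.
    Each score is the fraction of the other nodes a node is tied to.
    """
    neighbors = {}
    for a, b, _relation in links:
        if a == b:
            continue  # ignore self-links
        neighbors.setdefault(a, set()).add(b)
        neighbors.setdefault(b, set()).add(a)
    n = len(neighbors)
    if n < 2:
        return {node: 0.0 for node in neighbors}
    return {node: len(adjacent) / (n - 1) for node, adjacent in neighbors.items()}

# Hypothetical network with several relationship types.
links = [
    ("a", "b", "kinship"), ("a", "b", "financial"),
    ("a", "c", "kinship"), ("a", "d", "communication"),
]
```

Here node `a` scores 1.0 because it is tied to every other node, while the two distinct relationships between `a` and `b` count only once. That loss of detail is exactly what visualization-centric approaches try to avoid.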

The development of interface controls does not have as rich a history as that of the display side, except perhaps in highly constrained environments like the aircraft cockpit. In the aircraft cockpit, manual controls have evolved from crude direct linkages from hands and feet to the control surfaces, to exquisitely complex fly-by-wire hand controllers augmented by dozens of on-stick switches and buttons, some dedicated to controlling the functionality of others.117 Transition of interface controls to the ground-based warfighter has happened at a considerably slower pace, but it is happening. As discussed just a few years ago:

These technologies include spatial auditory displays, skin-based haptic and tactile displays, and automatic speech recognition (ASR) voice input controls. When used by themselves or collectively, displays involving more than one sensory modality (also known as multimodal displays) can enhance soldier safety [and effectiveness] in a wide variety of applications.118

Potential clearly exists for improving the controls side of the interface, especially in the demanding environments faced by today’s Marines. Right now,


114 David Knoke and Song Yang. 2008. Social Network Analysis, 2d ed., Sage Publications, Thousand Oaks, Calif.

115 For example, http://socialmediatrader.com/10-amazing-visualizations-of-social-networks. Accessed December 3, 2011.

116 Leah M. Reeves, Jennifer Lai, James A. Larson, Sharon Oviatt, T.S. Balaji, Stéphanie Buisine, Penny Collings, Phil Cohen, Ben Kraal, Jean-Claude Martin, Michael McTear, T.V. Raman, Kay M. Stanney, Hui Su, and QianYing Wang. 2004. “Guidelines for Multimodal User Interface Design,” Communications of the Association for Computing Machinery 47(1):57-59.

117 See, for example, the F-22 controls description, at http://www.f22fighter.com/cockpit.htm#Hands-On%20Throttle%20and%20Stick%20%28HOTAS%29. Accessed December 3, 2011.