3

Evidence and Decision-Making

In Chapter 2, the committee recommends a framework for the US Food and Drug Administration (FDA) regulatory decision-making process in which scientific evidence plays a critical role, together with other factors including ethical considerations and the perspectives of patients and other stakeholders. This chapter focuses on the evaluation of the scientific evidence and on how FDA should use evidence in its decisions. Just as courts determine when evidence is admissible and which standard of proof to apply in a given case, scientific evidence must be evaluated for its quality and applicability to the public health question that is the focus of regulatory decision-making. FDA needs to base its decisions on the best available scientific evidence related to that question. Different people, however, can interpret and judge scientific evidence in various ways. Decisions in which there is disagreement among experts about what decisions are best supported by a given body of evidence are among the most difficult that FDA must make. For these decisions to properly incorporate all the relevant uncertainties and values, the regulators need to understand the bases of the various judgments that the experts are making. As has been shown in many difficult cases that FDA has had to decide, evidence does not speak for itself.

This chapter will categorize and discuss the sources of technical disagreements between experts about the kinds of data that FDA typically deals with. It will start with a short primer on approaches to statistical inference, with an introduction to Bayesian methods, followed by a discussion of the distinctions between scientific data and evidence. It then discusses why scientists sometimes disagree about the evidence of a drug’s benefits and risks and how their disagreements may affect regulatory decision-making.

STATISTICAL INFERENCE AND DECISION-MAKING

Evidence

Although the terms data and evidence are often used interchangeably, data is not a synonym for evidence. The Compact Oxford English Dictionary defines data as “facts and statistics collected together for reference or analysis” and evidence as “the available body of facts or information indicating whether a belief or proposition is true” (Oxford Dictionaries, 2011). The difference is whether or not the information is being used to draw scientific conclusions about a specific proposition. In the context of a drug study, the “proposition” is a hypothesis about a drug effect, often stated in the form of a scientific question, such as “Do broad-spectrum antibiotics increase the risk of colitis”? In the broader context of FDA’s regulatory decisions, the proposition may be implicit in the public health question that prompts the need for a regulatory decision, such as, “Does the risk of colitis caused by broad-spectrum antibiotics outweigh their benefits to the public’s health”? In this way, evidence is defined with respect to the questions developed in the first step of the decision-making framework described in Chapter 2.

Statistical methods help to ascertain the “strength of the evidence” supporting a given hypothesis by measuring the degree to which the data support one hypothesis rather than the other. The evidence in turn affects the likelihood that either hypothesis is true. The most common scientific hypothesis in the realm of drug evaluation is the “null hypothesis”—that in a given treated population, the drug has no effect relative to a comparator treatment. For the concept of evidence to have meaning, however, there must be at least one other hypothesis under consideration, such as that the drug has some effect.

A small change in the scientific hypotheses being compared can change the strength of the evidence provided by a given set of data. For example, if the question above changed from whether broad-spectrum antibiotics produce any increase in the risk of colitis to whether broad-spectrum antibiotics produce a clinically important increase in the risk of colitis—say, an increase of more than 10 percent—the strength of the evidence provided by the same data could change. Where one observer might see a four percent increase in risk as strong evidence of some excess risk, another could regard it as strong evidence against a 10 percent increase in risk.1 Agreement on the strength of the evidence therefore requires agreement on the hypotheses being contrasted and on the public health questions that gives rise to them.

![]()

1Confusion can result from use of the word significant to describe an effect that is both statistically significant and clinically relevant; the latter is often termed clinically significant. The two uses should remain separate.

Inference

Good science, together with proper statistics, has a dual role. The first role is to decrease uncertainty about which hypotheses are true; the second is to properly measure the remaining uncertainty. These are carried out in part through a process called statistical inference. Statistical inference involves the process of summarizing data, estimating the uncertainty around the summary, and using the summary to reach conclusions about the underlying truth that gave rise to the data.

The two main approaches to statistical inference are the standard “frequentist” approach and the Bayesian approach. Each has distinctive strengths and weaknesses when used as bases for decision-making; including both approaches in the technical and conceptual toolbox can be extraordinarily important in making proper decisions in the face of complex evidence and substantial uncertainty. The frequentist approach to statistical inference is familiar to medical researchers and is the basis for most FDA rules and guidance. The Bayesian approach is less widely used and understood, however, it has many attractive properties that can both elucidate the reasons for disagreements, and provide an analytic model for decision-making. This model allows decision-makers to combine the chance of being wrong about risks and benefits, together with the seriousness of those errors, to support optimal decisions.

The frequentist approach employs such measures as P values, confidence intervals, and type I and II errors, as well as practices such as hypothesis-testing. Evidence against a specified hypothesis is measured with a P value. P values are typically used within a hypothesis-testing paradigm that declares results “statistically significant” or “not significant”, with the threshold for significance usually being a P value less than 0.05. By convention, type I (false-positive) error rates in individual studies are set in the design stage at 5 percent or lower, and type II (false-negative) rates at 20 percent or below (Gordis, 2004).

In the colitis example, if the null hypothesis posits that broad-spectrum antibiotics do not increase the risk of colitis, a P value less than 0.05 would lead one to reject that null hypothesis and conclude that broad-spectrum antibiotics do increase the risk of colitis. The range of that elevation statistically consistent with the evidence would be captured by the confidence interval. If the P value exceeded 0.05, several conclusions could be supported, depending on the location and width of the confidence interval; either that a clinically negligible effect is likely, or that the study cannot rule out either a null or clinically important effect and thus is inconclusive. In the drug-approval setting, the FDA regulatory threshold of “substantial evidence”2 for effectiveness is generally defined as two well controlled trials that have achieved statistical significance on an agreed upon endpoint, although there can be exceptions (Carpenter, 2010; Garrison et al., 2010).

![]()

221 USC § 355(d) (2010).

Hypothesis-testing provides a yes-or-no verdict that is useful for regulatory purposes, and its value has been demonstrated over time, both procedurally and inferentially. Its emphasis on pre-specification of endpoints, study procedures and analytic plans has regulatory and often inferential benefits. But hypothesis tests, P values, and confidence intervals do not provide decision-makers with an important measure—the probability that a hypothesis is right or wrong. In settings where a difficult balancing of various decisional consequences must be made in the face of uncertainty about both the presence and magnitude of benefits and risks, the probability that a given hypothesis is true plays a central role. The failure to assign a degree of certainty to a conclusion is a weakness of the frequentist approach when it is used for regulatory decisions (Berry et al., 1992; Etzioni and Kadane, 1995; IOM, 2008; Parmigiani, 2002).

In contrast, the Bayesian approach to inference allows a calculation on the basis of results from an experiment of how likely a hypothesis is to be true or false. However, this calculation is premised on an estimated probability that a hypothesis is true prior to the conduct of the experiment, a probability that is not uniquely scientifically defined and about which scientists can differ. Both in spite of this and because of this, Bayesian approaches can be very useful complements to traditional frequentist analyses, and can yield insights into the reasons why scientists disagree, a topic that will be discussed in more depth later in this chapter.

The use of Bayesian approaches is not new to FDA. FDA’s Center for Devices and Radiological Health (CDRH) has published guidance for the use of Bayesian statistics in medical device clinical trials (FDA, 2010a) and FDA has used Bayesian approaches in regulatory decisions. A 2004 FDA workshop on the use of Bayesian methods for regulatory decision-making included extensive discussion by FDA scientists, as well as Center for Drug Evaluation and Research (CDER) and CDRH leadership, of ways in which Bayesian approaches could enhance the science of premarketing approval.3 Campbell (2011), director of the CDRH Biostatistics division, discussed the uses of Bayesian methods for FDA decision-making, and presented 17 requests for premarketing approval submitted to and approved by the CDRH for medical devices that used Bayesian methods. Although Bayesian methods have been little used by CDER, Berry (2006) discusses how a Bayesian meta-analysis served as the basis for a CDER approval of Pravigard™ Pac (co-packaged pravastin and buffered aspirin) to lower the risk of cardiovascular events. Bayesian sensitivity analyses were used to help evaluate the literature investigating the possible association between antidepressants and suicidal outcomes (Laughren, 2006; Levenson and Holland, 2006), elaborated later in Kaizar (2006). Finally, FDA staff has recently proposed Bayesian methodology for analysis of safety endpoints in clinical trials (McEvoy et al., 2012).

![]()

3Published papers from the workshop are available in the August 2005 issue of Clinical Trials (2:271-378).

The Bayesian approach does not use a P value to measure evidence; rather, it uses an index called the Bayes factor (Goodman, 1999; Kass and Raftery, 1995). The Bayes factor encodes mathematically the principle presented earlier—that the role of evidence is to help adjudicate between two or more competing hypotheses. The Bayes factor modifies the probability of whether a hypothesis is true. Decision-makers can then use that probability to characterize the likelihood that their decisions will be wrong. In its simplest form, Bayes theorem can be defined in the following equation (Goodman, 1999; Kass and Raftery, 1995):

| The odds that a hypothesis is true after new evidence | = | The odds that a hypothesis is true before new evidence | × | The strength of new evidence (the Bayes factor) |

The Bayes factor is sometimes regarded as the “weight of the evidence” comparing how strongly the data support one hypothesis (or combination of hypotheses) to another (Good, 1950; Kass and Raftery, 1995). Most important is the role that the Bayes factor plays in Bayes theorem; it modifies the probability that a given hypothesis is true. This concept that a hypothesis has a certain “truth probability” has no counterpart in standard frequentist approaches.

There is not a one-to-one relationship between P values and Bayes factors, because the magnitude of an observed effect and the prior probabilities of hypotheses also can affect the Bayes factor calculation itself. But in most common statistical situations, there exists a strongest possible Bayes factor, and that can be defined as a function of the observed P value. That relationship can be used to calculate the maximum chance that the non-null hypothesis is true as a function of the P value and a prior probability (Goodman, 2001; Royall, 1997).

Assume that the null hypothesis is that a given drug does not cause a given harm, and that the alternative hypothesis is that it does elevate the risk of that harm. Table 3-1 shows how a given P value (translated into the strongest Bayes factor) alters the probability of the hypothesis of harm, defining the null hypothesis as stating that a given drug does not harm, and the alternative hypothesis is that it does elevate the risk of that harm. For example, if a new randomized controlled trial (RCT) yields a P value of 0.03 for a newly reported adverse effect of a drug and there was deemed to be only a 1 percent chance before the RCT of that unsuspected adverse effect being caused by the drug, the new evidence increases the chance of the causal relationship to at most 10 percent (see Table 3-1). A regulatory decision predicated on the harm being real would therefore be wrong more than 90 percent of the time.

Without a formal Bayesian interpretation, that high probability of error would not be apparent from any standard analysis. Using conventional measures, such a study might report that “a previously unreported association of tinnitus was observed with the drug, OR [odds ratio] = 3.5, 95% CI [confidence interval] 1.1 to 11.1. P = 0.03”. This statement does not actually indicate how likely it is

TABLE 3-1 Maximum Change in the Probability of a Drug Effect as a Function of P Value and Bayes Factor, Calculated by Using Bayes’ Theorem

| P Value in New Study |

Strongest Bayes Factor |

Strength of Evidencea |

Prior Probability of an Effect, %b |

Maximum Probability After the New Study, % |

| 0.10 | 0.26 | Weak | 1 | 2.5 |

| 25 | 46 | |||

| 50 | 79 | |||

| 83 | 95 | |||

| 0.05 | 0.15 | Moderate | 1 | 6 |

| 25 | 69 | |||

| 50 | 87 | |||

| 76 | 95 | |||

| 0.03 | 0.10 | Moderately | 1 | 10 |

| Strong | 25 | 78 | ||

| 50 | 81 | |||

| 67 | 95 | |||

| 0.01 | 0.04 | Strong | 1 | 21 |

| 25 | 90 | |||

| 40 | 95 | |||

| 50 | 96.5 | |||

| 0.001 | 0.005 | Very Strong | 1 | 75 |

| 8 | 95 | |||

| 25 | 99 | |||

| 50 | 99.5 | |||

aThe qualitative descriptor of the strength of the evidence is made on the basis of the quantitative change in the probability of truth of a null-null drug effect.

bThe prior truth probabilities of 1%, 25%, or 50% are arbitrarily chosen to span a wide range of strength of prior evidence. The shaded prior probability illustrates the minimum prior probability required to provide a 95% probability of a drug effect after observing a result with the reported P value.

SOURCE: Modified from Goodman (1999).

that the drug actually raises the risk of tinnitus. For that, a prior probability is needed, and the Bayes factor. If the mechanism or some preliminary observations justified a 25 percent prior chance of a harmful effect, the same evidence would raise that to at most a 78 percent chance of harm—that is, at least a 22 percent chance that the drug does not cause that harm. Table 3-1 shows that after observing P = 0.03 for an elevated risk of harm, in order to be 95 percent certain that this elevation was true, the prior probability of a risk elevation would have to have been at least 67 percent before the study. That might be the case if there was an established mechanism for the adverse effect, if other drugs in the same class were known to produce this effect, or if a prior study showed the same effect.

In practice, however, there exist no conventions or empirical data to determine exactly how to assign such prior probabilities, although the elicitation of prior probabilities from experts has been much studied (Chaloner, 1996; Kadane

and Wolfson, 1998). FDA incorporated the notion of a prior informally in its incorporation of “biologic plausibility” into decision-making of how to respond to drug safety signals that arise in the course of pharmacovigilance, in March 2012 draft guidance (FDA, 2012):

CDER will consider whether there is a biologically plausible explanation for the association of the drug and the safety signal, based on what is known from systems biology and the drug’s pharmacology. The more biologically plausible a risk is, the greater consideration will be made to classifying a safety issue as a priority.

As demonstrated in the above paragraph, biologic plausibility and other forms of external evidence are currently accommodated qualitatively; Bayesian approaches allows that to be done quantitatively, providing a formal structure by which both prior evidence and other sources of information (for example, on common mechanisms underlying different harms, or their relationship to disease processes) should affect decisions.

This discussion illustrates a number of important issues

• Given new evidence, the probability that a drug will be harmful can vary widely depending on the strength of the prior or external information, represented as a prior probability distribution.

• The chance that a drug will be harmful, based on P values for a harmful effect in the borderline significant range (0.01–0.05), is often far lower than is suspected, unless there are fairly strong reasons to believe in the harm before the study.

• The Bayesian approach allows the calculation of intermediate levels of certainty (for example, less than 95 percent) that might be sufficient for regulatory action, particularly for drug harms.

• Without agreed-upon conventions or empirical bases for assigning prior probabilities, the prior probabilities derived from a given body of evidence will differ among scientists, resulting in different conclusions from the same data.

The probability that a given harm will be caused by a drug is a key attribute in regulatory decision-making. How sure regulators must be to take a given action varies according to the consequences of decisions. In some cases, 95 percent certainty might be needed, in others 75 percent, and in still others less than 50 percent. The Bayesian approach provides numbers that feed into that judgment (Kadane, 2005).

Despite these advantages, one of the weaknesses of Bayesian calculations is that there is no unique way to assign a prior probability to the strength of external evidence, particularly if that evidence is difficult to quantify, such as biologic

plausibility. Although it may be impossible to assess subtle differences in prior probability, even crude distinctions can be helpful, such as whether the prior evidence justifies probability ranges of 1–5 percent, 15–50 percent, 60–80 percent, or 90+ percent. Such categorizations often provide fine enough discrimination to be useful for decision-making. In the absence of agreement on prior probabilities, “non-informative” prior distributions can be used that rely almost exclusively on the observed data, and sensitivity analyses with different kinds of prior probabilities from different decision-makers can be conducted (Emerson et al., 2007; Greenhouse and Waserman, 1995). At a minimum, these prior probabilities should be elicited and their evidential bases made explicit so that this potential source of disagreement can be better understood, and perhaps diminished.

The difference between Bayesian and frequentist approaches can go well beyond the incorporation of prior evidence, extending to more complex aspects of how the analytic problem is structured and analyzed. Madigan et al. (2010) provide a comprehensive suite of Bayesian methods to analyze safety signals arising from a broad range of study designs likely to be employed in the postmarketing setting.

When new information arises that puts into question a drug’s benefits and risks, FDA’s decision-makers often face sharp disagreements among scientists over how to interpret that information in the context of pre-existing information and over what regulatory action, if any, should be taken in response to the new information. Such disagreements are often unavoidable, and moving forward with appropriate decision-making is difficult if the underlying reasons for them are unknown or misunderstood. The committee identified a number of reasons for the disagreements about scientific evidence that occur among scientists. Those reasons, which are listed in Box 3-1, are discussed below.

Different Prior Beliefs About the Existence of an Effect

People’s beliefs about the plausibility of an effect of a drug are determined, in part, by their knowledge and interpretation of prior evidence about the drug’s benefits and risks (Eraker et al., 1984). That knowledge shapes their responses to new evidence. Prior evidence can come directly from earlier clinical studies of the drug’s effects, from studies of drugs in the same class that demonstrate the effect, and from information about the drug’s mechanism of action. Newly observed evidence might be interpreted as resulting in a higher chance that a drug is harmful if earlier studies have also demonstrated the harm. If other drugs in the same class have been associated with a particular adverse effect, the drug has a higher prior probability of causing that effect than a drug in a class whose mem-

BOX 3-1

Why Scientists Disagree About the Strength

of Evidence Supporting Drug Safety

Prior Evidence

1. Different weights given to pre-existing mechanistic or empirical evidence supporting a given benefit or risk.

Quality of the New Study

2. Different views about the reliability of the data sources.

3. Different confidence in the design’s ability to eliminate the effect of factors unrelated to drug exposure.

4. Different views on the appropriateness of statistical models.

Relevance of the New Evidence to the Public Health Question

5. Different views of the hypotheses needing evaluation.

6. Different assessments of the transportability of results.

Synthesizing the Evidence

7. Different ideas about how to weigh and combine all the available evidence from disparate sources relevant to the public health question.

Appropriate Regulatory Response to the Body of Evidence

8. Different opinions among scientists regarding the thresholds of certainty to justify concern or regulatory action, which can affect how they view the evidence

bers have not produced such an effect. If a drug has a mechanism of action that has been implicated in a particular adverse effect, it has a higher prior probability of causing that effect than a drug for which such a mechanism is implausible. For example, the prior probability that a topical steroid would produce significant internal injury would be very low because what is known about the absorption, metabolism, and physiologic actions of topical steroids makes it difficult to imagine how such an injury could occur, but the prior probability of an adverse dermatologic effect would be much higher.

Evidential bases of prior probability can take two forms: an assessment of the evidence supporting the mechanistic explanation of a proposed effect and the cumulative weight of previous empirical studies. Marciniak, in the FDA Office of New Drugs (OND) Division of Cardiovascular and Renal Products discussed mechanism directly in a letter that was provided for a July 2010 FDA Advisory Committee meeting related to Avandia (Marciniak, 2010):

Others have speculated that rosiglitazone could increase MI [myocardial infarction] rates through its effects upon lipids or by the same mechanism whereby it increases HF [heart failure] rates. There are no clinical studies establishing these mechanisms. We propose that there is a third mechanism for which there is some evidence from clinical studies. The third possible mechanism is the following: The Avandia label states that “In vitro data demonstrate that rosiglitazone is predominantly metabolized by Cytochrome® P450 (CYP) isoenzyme 2C8, with CYP2C9 contributing as a minor pathway.” The published literature suggests that rosiglitazone may also function as an inhibitor of CYP2C8. … Allelic variants of the CYP2C9 gene have been associated in epidemiological studies with increased risk of myocardial infarction and atherosclerosis. … Recently, CYP2C8 variants has also been associated with increased risk of MI. … CYP2C9 and 2C8 catalyze the metabolism of arachidonic acid to vasoactive substances, providing one potential mechanism for affecting cardiac disease. Interference with cigarette toxin metabolism is another. … Rosiglitazone effects upon CYP2C8 and CYP2C9 could be the mechanism for its CV adverse effects. Regardless, there are several possible mechanisms for CV toxicity of rosiglitazone.

The above paragraph describes a mechanism that is fairly speculative, as labeled. There is no suggestion or claim that such a mechanism would definitely or even probably produce adverse cardiovascular effects. Rather, this particular exposition is exploratory and aimed at establishing that such an effect is possible rather than probable. Those who have a good understanding of this particular set of pathways might interpret the explanation differently and establish a different starting point for the probability of such an effect. It is unlikely, though, that on the basis of such evidence general consensus could be garnered for a high prior probability of effect.

Mechanistic explanations generally provide weak evidence when they are offered post hoc to support an observed result. They carry more weight when they are proposed before such an effect is observed. Misbin (2007) raised questions about the safety of rosiglitazone on the basis of its effects on body weight and lipids—both well-established risk factors for cardiovascular disease—long before any risk of myocardial infarction (MI) was seen in any studies.

Another, more subtle way in which mechanistic considerations can affect inferences is in the choice of endpoints, as illustrated in discussions by Marciniak, from the FDA Office of New Drugs (OND) Division of Cardiovascular and Renal Products, of the wisdom of combining silent and clinical MIs into a single endpoint (Marciniak, 2010):

There is additional evidence from RECORD [the Rosiglitazone Evaluated for Cardiac Outcomes and Regulation of Glycemia in Diabetes trial] that the MI risk for rosiglitazone is real rather than a random variation:

We prospectively excluded silent MIs from our primary analysis because we had concerns that silent MIs might represent a different disease mechanism than symptomatic MIs, e.g., could they represent

gradual necrosis from diabetic microvascular disease rather than an acute event with coronary thrombosis in an epicardial coronary artery?

Whether or not silent and clinical MIs should be combined—a critical decision in assessing the evidence—is framed here as contingent on whether or not they represent different manifestations of the same pathophysiologic process. What is important to recognize is that the numbers arising from an analysis that excludes silent MIs are only as credible as the underlying mechanistic explanation. This example shows how a mechanistic explanation can affect the analyses, especially exploratory analysis, even if it is not explicitly invoked as an evidential basis of a claim.

Even if two scientists agree about what evidence new data provides, if they have different assessments of the strength of prior evidence they might disagree about the probability of a higher drug risk. Such a disagreement might appear outwardly to be about the new evidence when in fact the disagreement is about the prior probability. That phenomenon is captured quantitatively by Bayes theorem, as previously noted (Fisher, 1999), which can use sensitivity analyses with different priors to illustrate the plausible range of chances that the drug induces unacceptable safety risks.

Quality of the New Study

Standard approaches to evaluating evidence rely on the use of evidence hierarchies, which traditionally emphasize the type of study design as the main determinant of evidential quality; an example is the US Preventive Services Task Force guidance (AHRQ, 2008). Many scientists judge a study on the basis of its type of design above all other considerations. The type of study design, however, is only one of the factors that should be taken into account in assessing the quality of a study and thereby the quality of the evidence from the study. In addition to the type of study, such other aspects as the source and reliability of the data, study conduct, whether there are missing or misclassified data, and data analyses influence the quality of the evidence generated by a study. Some of these reflected in the Grading of Recommendations Assessment, Development and Evaluation (GRADE) approach to evidence assessment (Guyatt et al., 2008). Those factors and their role in disagreements among scientists are discussed below.

Different Views about the Reliability of the Data Source

Most evidence hierarchies assume that data in a study are generated for research purposes and that outcome measures are specified in advance. Much postmarketing research about a drug’s benefits and risks, however, whether an RCT or an observational study, depends at least in part on data gathered with systems developed for other purposes. For example, billing data that happen to

include diagnoses or RCTs that were designed to assess outcomes other than safety-related outcomes could be used in the postmarketing setting. One source of disagreement among scientists is the reliability of the data sources that are used for a study.

Data are gathered and captured electronically in many settings and provide important evidence about exposures, covariates, and outcomes. A number of health-monitoring systems or (linked) databases are or could be used for drug-or vaccine-safety investigation, including the Adverse Event Reporting System (AERS), Sentinel, Vaccine Safety Datalink, Post-licensure Rapid Immunization Safety Monitoring, the Health Maintenance Organization (HMO) Research Network, health plan records, data from the Centers for Medicare and Medicaid Services (CMS) and the Department of Veterans Affairs (VA), disease registries, pharmacy records and prescriber databases, hospital administrative databases, and cohort studies.

Concerns related to reliability include concerns about the measurement quality, completeness, and accuracy of the data. The conduct of high-quality studies using electronic data requires local knowledge about how care is delivered, how the computerized systems operate, and how they change. Problems with data quality affect the quality of evidence, decreasing precision and increasing bias in a study. (Formal definitions of bias and precision are presented later in this chapter.) Some of the issues are discussed below.

The quality of databases is variable. In the case of the AERS database, for instance, reporting of adverse events is incomplete, and the quality of the information about the adverse events that are reported may be poor. There is no information about the denominators, such as the number of people taking a drug, which is necessary for estimating event rates. Despite their limitations, however, a database of adverse-event reports can provide sufficient evidence of a drug’s harm, especially when the reported harm is rare, unrelated to the indication for using the drug, and distinctive enough for most of or all the reports to be attributed to the drug. More than half the 36 drugs withdrawn from the US market since 1956 were withdrawn on the basis of safety evidence from case reports like those included in AERS (Saunders et al., 2010). For example, after a request by FDA, the manufacturer of the statin cerivastatin (Baycol®) withdrew it from the market because of the number of reports of rhabdomyolysis (a breakdown of muscle fibers that can result in kidney failure) (Furberg and Pitt, 2001; Lanctot and Naranjo, 1995; Staffa et al., 2002). The number of reports of that adverse event occurred at more than 50 times the frequency associated with other drugs in the same class and was unrelated to the indication for cerivastatin therapy (Staffa et al., 2002).

When databases are used for dual purposes, changes for one purpose may affect the quality of data used for the other. Hospitals, health plans, and other sources of care often change computerized systems, typically to optimize them for administrative purposes. With each of those changes, the quality of the data

and their ability to capture events, exposures, or covariates for investigations of drug safety can change as well. Estimates of the reliability and validity of various methods and approaches may not stay accurate when the underlying systems change. Therefore, if data are to be used for drug safety research, continuing quality-control analyses are essential.

Considerations Regarding Data on Drug Exposures

Closed systems of care, such as health plans, tend to provide the most complete information on medical care. The denominators of membership are known, and entry into and exit from the cohort of patients can be reasonably well defined, allowing calculation of the risk of adverse events. Health insurance databases are likely to capture most drug exposures and serious adverse events requiring medical care, although the complete ascertainment of outcomes may require the use of multiple administrative files.

Computerized pharmacy files are likely to provide more complete and accurate information about drug use than medical records or patient surveys. Information about the date of a prescription, the number of days of supply, and the refill date for a chronic-disease medication often permit an assessment of drug exposure during a specific time window, assuming that the patient is taking the medication.4 Computerized drug data will provide less reliable and valid estimates of exposure to medications that are used as needed and medications that are available over the counter. Drug-use information might be missing for inpatient medications, medications received from family members or friends, and medications purchased outside the system of care.

Considerations for Data on Outcomes

Problems arise in efforts to capture information about events of interest. The more disparate the sources of care, the more dangerous it is to rely on a single administrative data source for the conduct of a study. In the setting of health plans that own hospitals, inpatient diagnostic codes are generally available in administrative records, but codes for out-of-plan hospitalizations (such as a hospitalization that occurs when a patient is away from home) might not be available unless billing records include sufficient diagnostic information. Similarly, medical records of veterans might be complete in the VA’s data systems for hospitalizations in the VA system of hospitals but might lack information on hospitalizations in non-VA hospitals or on drugs prescribed by non-VA providers.

Whether the data come from a single source or multiple sources, the diagnostic codes used in the administrative files are subject to error. For instance, a hospital discharge diagnosis of hypertension has been associated with a decreased risk of in-hospital death even though hypertension is a risk factor for adverse

![]()

4Except for drugs that may have resale value on the street, patients typically do not refill prescriptions for drugs that they are not taking (Lau et al., 1997).

cardiovascular outcomes, including death (Jencks et al., 1988). That paradoxical finding arises from the fact that there are fewer discharge diagnoses on fatal hospitalizations and such diagnoses as hypertension tend to be omitted; as a result, patients discharged alive will probably have more discharge diagnoses than those who died during their hospitalization. In one study, a comparison between hospital discharge diagnoses and six major cardiovascular events adjudicated according to accepted diagnostic criteria revealed levels of agreement between 44 percent and 86 percent (Ives et al., 1995). Diagnostic coding matters for reimbursement, so some diagnoses, such as heart failure, appear with surprising frequency in the absence of evidence (Psaty et al., 1999). In a recent study of the association between opioid use and fracture risk, only 67 percent of fractures identified with administrative diagnostic or X-ray data were actually incident fractures (Saunders et al., 2010). Agreements between death-certificate causes of death and adjudicated deaths based on medical records, interviews with witnesses, questionnaires to physicians, and autopsies are only modest—coronary heart disease: kappa statistic, 0.61, 95% CI, 0.58–0.64; death from stroke: kappa statistic, 0.59, 95% CI, 0.54–0.64 (Ives et al., 2009).

Diagnostic codes can also change. The International Classification of Diseases codes are used worldwide and provide consistency in information on effects, but the codes are periodically updated, and the updates can affect health data both within a study over time and in comparisons among different studies. In addition, nonstandard definitions of endpoints, economic incentives for listing particular diagnoses, and insufficient detail about key variables of interest can affect data quality.

Data Quality in Primary Research

Issues about data quality can arise in research even when data-gathering and quality control are parts of the design. The problem occurs particularly in the classification of cause-specific events. Questions and disputes over the extent and possible effect of data-quality issues arose in the discussion of the RECORD trial with respect to rosiglitazone-related risks. The questions included whether events were properly adjudicated and recorded and whether events were missed, whether followup was sufficient, whether handling of withdrawals was appropriate, how disagreements were settled, whether unclear or incomplete case-report forms were handled properly, and whether cotreatments were recorded. Below are comments bearing on some of those issues and noting the role of judgment in assessing the likely effects of the problems (Marciniak, 2010):

Our assignments regarding bias involve varying levels of subjectivity. While we believe we have strong, documented justifications for some assignments, such as our unacceptable handling [of] cases, for other assignments our judgment calls are not unquestionable. For this reason we have provide[d] copies of the relevant case report forms (CRFs—redacted for personal and institutional identifiers) for a selection of problem cases in Appendix 1. We have also provided short sum-

maries of many of the other problem cases in Appendix 3 and short summaries of all cases for which we made a different CV death, MI, or stroke assignment than GSK in Appendices 5-7. …

Our review of the trial conduct appears to confirm that, as the protocol issues suggest, biases did arise in RECORD. The trial conduct issues reinforce our belief that RECORD can not provide any reassurances regarding rosiglitazone CV safety.

In contrast, Ellis Unger, deputy director of the Office of New Drug-I in OND, disagreed with the judgments made by Marciniak (in the OND Division of Cardiovascular and Renal Products) (Unger, 2010):

For the upper bound of the 95% CI for the relative risk of death to exceed 1.2, there would need to have been a differential of approximately 16 deaths between subjects lost to follow-up in the rosiglitazone and control groups. …

Such striking imbalances may be plausible, but they seem highly unlikely. I disagree, therefore, with Dr. Marciniak’s interpretation of all-cause mortality. I deem the results of RECORD to be reassuring with respect to all-cause mortality, an endpoint essentially unaffected by ascertainment bias in an open-label study.

There may be some merit in re-adjudicating MIs in RECORD; however, there are reasons why diagnostic criteria are strictly defined and enshrined in the protocol, reasons why adjudication committees are actually committees (i.e., more than a single individual), and reasons why scrupulous blinding is essential for these committees to perform their duties correctly.

My view of MIs in RECORD is that the findings are neither reassuring nor concerning. I am not surprised that, using modified criteria, Dr. Marciniak was able to increase the number of MIs by 18%; I am somewhat concerned that nearly all of them were in the rosiglitazone group.

What is particularly important to note about these disputes is that they have a direct connection with the estimated quantitative risk and its attendant uncertainty. However, such disputes cannot always be settled by reviewing records or by repeating procedures. They typically involve some degree of missing information whose potential impact can be assessed only with sensitivity analyses. How much the various assumptions should be allowed to vary in those analyses is a matter of judgment. So data-quality issues can have a central, sometimes irresolvable role in creating disagreement among scientists about numerical results; at best, the plausible range of estimates that would be consistent with their qualitative disagreement can be calculated with sensitivity analyses.

Confidence in a Design’s Ability to Eliminate Bias

The science of drug safety concerns questions of causal, not just statistical,

relationships. That is, the important drug safety question is whether drug exposure actually causes an adverse outcome, not simply whether such an outcome occurs more frequently in people who choose to take the drug. Whether or not an observed increase in risk is likely to be causally related to drug exposure depends on a variety of non-statistical judgments about the design of the study, the analytic methods, and the underlying biologic mechanisms. Those judgments focus on whether something other than the drug itself could be causing the increase in risks—or in benefits. If the evidence pointing to such a relationship has been generated by a well-designed, well-conducted clinical trial in which drug treatment has been randomly assigned and there is adequate size and time for adverse effects to appear, confidence is typically fairly high that the difference in drug exposure is the cause of any differences in benefits or risks. However, if deviations from initial randomization occur (such as that caused by dropouts, missing data, or poor adherence), the conclusion of causality will rest heavily on judgments about the appropriateness of analytic procedures, the plausibility of alternative causes given the study designs, and knowledge of drug action and the natural history of disease. These issues assume even greater prominence in the analysis of observational studies. Those considerations are not always objectively quantifiable and can be the subject of disagreement and debate among scientists.

Two main determinants of the inherent quality of a study are precision and bias (Figure 3-1). Precision is the magnitude of variability in an estimated benefit or risk that can be ascribed to the play of chance. It is the only determinant that has a clearly quantifiable effect on the strength of evidence. The confidence limits or intervals around an estimate of benefits or risks are a quantitative indication of the precision of a study. The more precise a result, the stronger the evidence it will provide for one hypothesis versus another. In practice, study sample size is the prime determinant of precision—a large study produces an estimate of benefits or risks that has a small confidence interval, indicating high precision.

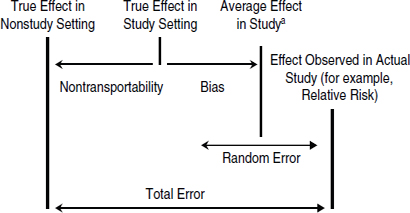

FIGURE 3-1 Illustration of the contributions of different types of errors to the average effect of a drug in a study, the true effect of a drug in a study setting, and the true effect of a drug in nonstudy settings. The total error is the difference between the effect of a drug observed in the study and the true effect of the drug in nonstudy settings. If the bias is large, the confidence interval around the average effect in a study (represented as the random error) will not include the true effect in the study setting.

aThe average effect in the study is a hypothetical value that would be seen if the study were conducted multiple times.

Bias is the difference between the average effect of many hypothetical repetitions of a given study and the true effect in the population being studied. If the study draws research participants randomly from a target population (that is, the population likely to be prescribed the drug), the quality of evidence is determined, in part, by the degree of bias in the results. Unlike precision, bias cannot be eliminated by increasing sample size; only proper design or analysis can control or eliminate it. The presence of bias is not apparent in the numerical results of a study; it can only be discerned from close examination of the design and conduct of the study, and even then it may not be evident. A study without bias is said to have high internal validity. The three main types of bias that affect the internal validity of a study are confounding, selection bias, and information bias, which are described in Box 3-2.

Confidence in the Transportability of Results

The Concept of Transportability

A study estimate of the benefit or risk associated with a drug can deviate from the results that patients would actually experience in wider clinical practice if the study participants were not representative of the wider target population. That disparity can occur when a study is conducted in hospitalized patients but the results are used to estimate the risks in outpatients or when a study is conducted in patients who do not have comorbidities or cotreatments but the drug will be used in patients who have both. The transportability5 of study results, also known as external validity or generalizability of a study, is determined by the difference between the effect seen in the people studied and those in the wider target population.

The concept of transportability captures what is at stake in the traditional efficacy-versus-effectiveness distinction (Gordis, 2004). Traditionally, efficacy is a measurement of the beneficial effect of the drug with respect to a specific endpoint of interest under conditions that are optimized to favor an accurate assessment of the drug’s benefits and risks. Effectiveness is a measurement of the beneficial effects of a drug as it is used in the less controlled conditions of

![]()

5The term transportability is used in this report, rather than external validity or generalizability, because the committee thinks that it better reflects a nonbinary characteristic. Different effects can occur in a variety of settings, and study results may be transportable to some populations or settings but not others, so transportability may not be a simple binary property.

BOX 3-2

Explanations of the Three Main Types of Bias

Confounding

Confounding occurs when the populations compared in a study differ in important predictors of the outcome being studied other than an exposure of interest (such as exposure to a drug), that is, when another risk factor is associated with both the exposure and the outcome of interest and is a cause of the outcome. For instance, a disease state may affect both the use of a drug and the clinical outcome of interest.

Selection Bias

Selection bias results when the exposure affects participation (“selection”) in the study or analysis and selection is associated with the outcome of interest. For example, if the use of a drug increases both the risk of harm and the probability that people using the drug will drop out of the study and be lost to follow-up, the risk of harm is likely to be underestimated because the people whose data are used in estimating the incidence of the adverse event (that is, those not lost to follow-up) are less likely to have experienced the harm simply because they remained in the study.

Other types of selection bias that affect estimates in randomized controlled trials and observational studies include missing data and nonresponse bias, healthy-worker bias, and self-selection bias (Hernán and Robins, 2012). Although the terms selection bias and confounding are sometimes used interchangeably outside epidemiology, it is valuable to use the terms to refer to the two different types of bias (see Figure 3-1).

Information Bias

Information bias, caused by certain patterns of measurement error, occurs when “the association between treatment and outcome is weakened or strengthened as a result of the process by which the study data are measured” (Hernán and Robins, 2012). Errors in measuring and classifying exposures, outcomes, and confounders can influence the strength and direction of effect estimates.

clinical practice. However, neither efficacy nor effectiveness is an absolute concept, and their distinction is less clear than commonly supposed. For example, a double-blind RCT conducted in one country might produce an estimate of a drug’s efficacy under conditions that might be optimal for that country but not relevant or applicable to the United States. There is no one unique set of “real-world conditions”—estimates of a drug’s effectiveness may vary among many

populations and settings. The appropriate and informative question about a study is not whether it is “generalizable” in the binary sense, but to which populations and settings its results are transportable, to what degree, and what the determinants of that transportability are.

Nontransportability is caused by different distributions of “effect-modifiers” in the study and target settings (Hernán and Robins, 2012). For example, if women are more likely than men to experience an adverse effect as a result of taking a drug, sex would be an effect-modifier. Effect-modifiers may include characteristics of the patients (such as severity of disease or comorbidities), nonadherence, cotreatments, and cointerventions, such as the monitoring that typically takes place in clinical trials. For example, the risk of an adverse effect may be lower in a study in which patients are closely monitored than in a setting in which such monitoring is not part of clinical care. Variations in dosage and administration of the treatment may also present different or additional risks relative to those identified in the trial (Weiss et al., 2008).

It is important to note that the scale upon which the effect-modification is measured is important. The public health question typically depends on the degree of additional absolute risk incurred by drug exposure. If two populations are at different baseline risk for an adverse effect, a relative risk of 2 will be more dangerous for the high-risk group than those at low initial risk. This will not show up as effect-modification on the multiplicative scale, which is most often used in epidemiology, but it will be effect modification on the additive scale, the scale relevant to public health decisions. So if multiplicative models are being used for analysis, close attention must be paid to the variation in baseline risks from one population to another when transportability is assessed.

The assessed risks in a given population can differ according to how an adverse effect is elicited from the patient. Studies that depend on passive reporting of adverse events versus those that ask patients about specific adverse events can affect the reported frequency several-fold (Bent et al., 2006; Ioannidis et al., 2006).

Assessing the transportability of the results of any study requires clinical, pathophysiologic, and epidemiologic knowledge of the factors that can change a drug’s benefits or harms and of how the factors are distributed in the study and in community settings. RCTs often do not have adequate power to detect such effect-modifiers statistically, and relevant effect-modifiers (such as co-treatments) may be absent or unmeasured. In the absence of such information, conducting a study in the community setting is the best way to obtain direct knowledge about a drug’s effect in routine clinical practice. In the absence of high-quality information from a community-based setting, disputes about transportability—the relevance of study information to the public health context—can be among the most difficult to resolve because the empirical evidence base may be thin and claims based on clinical experience or claimed knowledge of biologic mechanisms hard to adjudicate. The experience with the RECORD trial shows the complexity of

this issue; some criticisms of the RECORD trial arose because of weaknesses that could be partly attributed to the attempt to conduct it in pragmatic fashion in a community setting.

Transportability is not typically treated as a formal source of error and is not taken into account in traditional evidence hierarchies, which focus almost exclusively on precision and bias. But using a public health perspective requires that the focus be on the effects of a drug as it is used in the general population. For that, transportability is a key consideration. From the perspective of FDA’s decisions, therefore, transportability should be treated as a potential source of error, with bias and imprecision, as displayed graphically in Figure 3-1. That approach leads to treating the transportability of study results as a formal contributor to the relevance of evidence for a given decision, rather than as a minor qualifier.

Issues related to transportability were raised repeatedly (using the more familiar term generalizability) in the FDA briefing document for the rosiglitazone hearings. One of FDA’s statistical reviewers questioned the relevance of research done with the UK General Practice Research Database (GPRD) (Yap, 2010):

While the GPRD database captures information for a large number of subjects, the generalizability of these data to the U.S. population might be difficult given varying prescribing practices, risk factors, and medical practices.

Critiquing a study that used a multistate Medicaid database, the FDA statistical reviewer stated (Yap, 2010):

Cohort eligibility required that a patient have at least one inpatient claim. This led to a huge reduction in cohort size from approximately 307,000 individuals to approximately 95,000. In addition, the cohort was restricted to patients receiving Medicaid services. Findings from this restrictive cohort might not be generalizable to the intended population. … The diabetes population studied comprises mostly older and generally sicker patients thus raising concerns of generalizability of results to healthier and younger diabetic populations.

Finally, the transportability of another study was criticized for having been done in Canada (Yap, 2010):

The population studied comprises patients aged 65 or older residing in Quebec, Canada. Therefore, the results reported in the publication cannot be generalized to a population of patients below 65 years of age. In addition, results might not be fully generalizable to non-Canadian population given varying baseline characteristics and differences in access to care.

In the text above, the evidential basis for claiming nontransportability is unclear at best, and therefore difficult to adjudicate. Such assertions may be

reasonable, but whether they should be accepted and how much they should affect the assessment of a study require more detailed explanation of why the differences noted would be expected to modify the drug effect and by how much. For example, is it literally true that evidence derived from people over 65 years old cannot be applied to those who are younger? What is the evidence for that claim, and how big is that effect? What if the target population had an age range of 60–64 years? Those are the kinds of questions that must be asked because differences between the study and target populations will always exist, even if the differences are only between past and future members of the same community.

In summary, if the “true effect of a drug” is defined in public health terms as its benefit–risk profile when it is used in medical practice in the general population of patients for whom the drug is used, or on a subset of the population, all three sources of error—bias, imprecision, and nontransportability—must be considered as contributing equally to the relevance of evidence generated by studies. Claims of nontransportability should be supported with evidence that the differences between settings or populations would be expected to introduce different effects that are clinically meaningful.

Randomized Controlled Trials Versus Observational Studies

Observational studies are a major source of evidence related to drug safety and are playing an increasing role in FDA’s oversight of drug safety (Hamburg, 2011). Such designs play a relatively minor role in establishing drug efficacy in the preapproval stage in FDA, where RCTs are regarded as fulfilling the statutory requirement of “adequate and well-controlled” studies to support a marketing claim. However, as discussed in this section, the relative value and quality of evidence from the two classes of designs can be quite different in connection with efficacy vs safety endpoints.

The different quality attributed to evidence from observational and randomized designs was a central aspect of the rosiglitazone debate. It is often stated that ORs less than 2, and certainly less than 1.5, cannot be reliably regarded as different from unity if they are generated by an observational study. That claim is based on a sense that there are unknowable and uncontrollable biases in all observational studies that are not discernable or controllable even with close examination of study details. The issue is described in the following passage from the Avandia memorandum from Dal Pan, director of the FDA Office of Surveillance and Epidemiology (Dal Pan, 2010), in which this viewpoint is contested:

The results of the observational studies strengthen the concern over the risks of rosiglitazone, especially when compared to pioglitazone. Observational drug safety studies are often criticized because they lack the experimental design rigor of a controlled clinical trial. Specifically, there is often concern that patients who are prescribed a particular medicine are different from those who are prescribed an alternative treatment, in ways that may be correlated with the outcome of

interest. This phenomenon is known as channeling bias, and is often a concern when measures of relative risk are below 2.0, when the effect of unmeasured confounders could account for the observed findings. While this concern is generally valid, it should not be automatically invoked to dismiss the results of observational studies in which the measure of relative risk is below 2.0. Data from the CMS observational study, for example, indicate that rosiglitazone and pioglitazone recipients were similar with regard to multiple cardiac and non-cardiac factors, a finding that suggests minimal channeling bias. Furthermore, the risk estimates from the observational studies are generally similar to those from the meta-analyses of clinical trials. Thus, dismissing the results of the observational studies simply because the observed measures of risk may be due to channeling bias may not be appropriate.

Traditional evidence hierarchies that rely on the type of study design to classify evidence generally focus on the strengths and weaknesses of those designs with respect to the evaluation of therapeutic efficacy (Barton et al., 2007; Owens et al., 2010). Study designs are ranked according to their capacity to generate unbiased evidence about efficacy endpoints, and considerations of transportability are given no weight.

The RCT design, in theory, produces the highest confidence that observed differences are caused by drug exposure, not by ancillary characteristics that might be associated with drug exposure. Such ancillary characteristics are known as confounders. In the context of a perfectly designed and conducted RCT—one without patient dropout, missing data, or nonadherence—causal inference effectively becomes statistical inference. That is, there is confidence that any quantitative differences between groups in the endpoints evaluated were due to the randomized intervention. The likelihood that a statistical hypothesis about association is true becomes equivalent to the likelihood that a hypothesis about causality is true.

As conduct deviates from ideal design, however, the certainty about a causal hypothesis decreases with the decreasing certainty that the design or analysis has adequately “controlled” for other causal factors. Such deviations include patient dropout, crossover between treatment arms, loss to followup, missing data, nonadherence to treatment, differential cotreatment, and differential measurement (Dal Pan, 2010). Those aspects of study design and conduct must be assessed to determine the evidential value of an RCT, especially if oversight of the study might have been complicated by its being conducted at multiple, overseas sites (Frank et al., 2008; GAO, 2010a, 2010b; Greene et al., 2006; Manion et al., 2009). Observational studies are affected by similar issues, with biases induced by treatment selection in place of those caused by deviation from treatment assignment.

Various characteristics of safety endpoints, with other constraints, may favor the strength of observational evidence over evidence from RCTs in generating valid and reliable evidence needed for benefit–risk assessments in the postmar-

keting setting. In a standard RCT, participants are randomly assigned to different treatment arms. The groups are then compared for observed risks of developing a particular health outcome, such as myocardial infarction. The most important property of an RCT is that—in large samples—the baseline distribution of risk factors, both known and unknown, is expected to be equal among groups. Under a number of assumptions—including complete event ascertainment, no differential loss to followup, and perfect treatment compliance—the estimate of the drug’s efficacy is unbiased and provides high confidence that any observed association between the drug and the efficacy endpoint is due to the drug.

Observational studies of efficacy, in contrast, are often subject to a variety of biases, the most common of which is known as confounding by indication (Vandenbroucke and Psaty, 2008). Confounding by indication, also known as “channeling bias”, occurs when treatment assignment is based on a risk factor for an outcome. In clinical medicine, patients are typically treated to improve their chances of a beneficial outcome. That makes it difficult to separate the effect of the drug itself from that of the patient’s condition that led to the drug’s use, that is, the drug’s indication. When physicians treat some patients differently for reasons related to their risk for various outcomes, confounding by indication is likely. For example, if sicker patients choose medical care for a given condition more often than surgery because they are afraid that they will not survive the operation and if sicker patients are more likely to die whether or not they receive surgery, observational studies of surgical versus medical care will show that surgery is safer than medical care even if they are equally efficacious.

Confounding by contraindication is the corresponding concern in studies that evaluate safety endpoints, although it is not as common as confounding by indication. If an adverse effect of a drug is known, physicians might avoid prescribing it for patients who are at higher risk for that effect (for example, the use of nonsteroidal anti-inflammatory drugs and GI bleeding, or the use of aspirin and anticoagulants together). If the use of the drug increases the risk of a particular adverse effect and patients who were at higher risk for that effect avoid taking the drug, the results will be biased toward the hypothesis of no effect. The findings of such a study may mistakenly indicate that the use of the drug does not increase the risk of the adverse event or, worse, that it prevents it. Such a treatment approach is in the patient’s best interest, but it makes observational studies of the harms associated with drugs difficult to conduct well. Confounding by contraindication, however, is not a major concern in studies of unexpected adverse events. If the risk itself or the factors that affect it are unknown, treatment cannot be based on avoidance of the risks (Golder et al., 2011); although it could be based on correlates of those unknown risks (for example, age, disease severity).

Empirical evidence suggests that the findings of observational studies of harms can be similar to the findings of RCTs for the same drugs and harms (Vandenbroucke, 2006). In a recent study of safety outcomes, Golder et al. (2011) compared the results of meta-analyses of RCTs with the results of meta-analyses

of observational studies. Their meta-analysis of the meta-analyses included 58 drug–adverse event comparisons. The ratio of ORs was used as the method of comparison, and the RCTs were associated with only a slightly higher estimate of risk (ratio of odds ratio, 1.03; 95% CI, 0.93–1.15). Of the 58 comparisons, 64 percent agreed completely (same direction and same level of significance), although some of the studies had low statistical power. This large meta-analysis provides empirical support for the claim that in large samples, observational studies can yield findings on adverse events that are similar to those of RCTs. However, more empirical research is needed into the factors that determine concordance between observational and randomized studies of harms.

A number of features of safety endpoints and the postmarketing context strengthen the role of observational studies in generating valid and reliable evidence needed to answer public health questions of interest. Differences in the frequency of efficacy and safety endpoints and the timescale on which they occur affect comparative judgments about the quality of evidence generated by RCTs and observational studies. Adverse effects resulting from the use of a drug may be severe but rare, and the sample size of preapproval RCTs, or achievable postmarketing RCTs, may be insufficient to detect rare or delayed outcomes. Preapproval RCTs are also likely to miss adverse effects resulting from chronic use or those arising after a long latent period, whereas observational studies, particularly those based on existing data, can typically provide longer followup. Observational studies based on data sources collected from large populations with long follow up can often report a greater number of adverse events than typical RCTs. However, any design with long followup, whether it is concurrent or non-concurrent, needs to be scrutinized very carefully for the extent and pattern of missing data; over time, the problems with selective retention or reporting can be substantial in all designs.

A second way in which the strength of evidence from an RCT for safety can be weakened is when the adverse effects are unknown or unforeseeable. Such endpoints by definition cannot be pre-specified (Claxton et al., 2005). It has been shown that quality and consistency of the reports and measurements of non-specified endpoints is often poor (Lilford et al., 2003; Thomas and Petersen, 2003). This problem will affect prospective observational studies as well, but it nevertheless can narrow the internal validity gap between randomized and non-randomized designs.

Potential confounders of efficacy endpoints may differ from confounders of safety endpoints; if only the former are measured, effect estimates of safety endpoints may not be appropriately adjusted for confounders (see for example Camm et al., 2011). This is likely to affect observational studies more than RCTs, whose results will typically require less adjustment, if any, depending on the extent and patterns of deviations from randomization and degree and determinants of missing information.

Finally, as noted previously, the transportability of evidence from obser-

vational studies to populations of interest may be superior to that of evidence produced by an RCT. Because RCTs often restrict eligibility to patients for whom the anticipated benefit is thought to outweigh (known) drug risks, RCTs are incapable of detecting adverse events that may arise only in the populations excluded from the trial, which are often characterized by a wider array of comorbidities, different disease severity, concomitant treatments, or other risk factors (such as age, sex, low socioeconomic status, poor monitoring of dose, adherence, or outcomes) that may modify the effects of treatment. Observational studies can include people who are more representative of those who receive the treatment of interest in the general population and in diverse care settings. Thus, less restrictive eligibility criteria typically used in observational studies can increase the transportability of the resulting effect estimates.

The eligibility criteria for observational studies, however, are sometimes restricted in an attempt to limit the magnitude of confounding (Psaty and Siscovick, 2010). For example, consider an observational study to compare cardiovascular risk in initiators vs noninitiators of statin therapy in a particular population. Suppose that all patients in that population who have LDL cholesterol greater than 4.9 mmol/L already receive statin therapy. The observational study should exclude current users and thus restrict participation to patients who have LDL cholesterol less than 4.9 mmol/L; otherwise, it would be difficult to adjust the effect estimate for confounding by concentration of LDL cholesterol. The desire for increased transportability in observational studies should be tempered by the need to ensure internal validity.

There is no study in which all measurements are perfectly reliable or in which many judgments have not already been made before study data are analyzed. In studies of drug safety, there is a long documented history of underreporting, selective reporting, or misclassification of harms (Ioannidis and Lau, 2001; Lilford et al., 2003; Talbot and Walker, 2004). Missing data are common in such studies, and the validity of statistical methods to account for missing data rests on assumptions that often cannot be confirmed by using the data. Data quality affects not only estimates of harms themselves but also the measurement of other risk factors for those harms, such as cotreatments, comorbidities, and patient-specific characteristics. It is often critical to understand the exact operational procedures by which harms were identified and reported and other key data recorded if one is to judge properly whether data on harms reported in a study are reliable. That degree of detailed operational knowledge is often not available to those outside the study, or, if the extent of that knowledge differs among scientists, their assessments of the reliability of any ensuing inferences may differ as well.

Disagreements About the Choice of Statistical Analysis

Judgments about the most appropriate statistical model for a given study

depend on many implicit and often unverifiable assumptions, both statistical and biologic, and scientists often disagree on the most appropriate statistical methods. Different ways of coding the same data can change their probability. For example, dichotomizing a continuous variable or combining harms of different severities can lead to vastly different estimates of effect size. The probability can depend heavily on how issues of multiplicity (that is, testing statistically for multiple endpoints) are treated. If researchers evaluate many adverse events statistically and only a few are observed to have increased risks, the strength of evidence of those adverse events depends on whether the “data” are treated as all the comparisons taken together or as each taken separately for the specific adverse events whose risks seem to be increased. Sometimes this problem is handled through multiplicity adjustments, but there is no ideal or universally agreed-on solution for knowing how much the analytic strategy should depend on patterns seen after the data have been observed.

Decision-makers cannot be expected to be expert in the many technical issues involved in statistical modeling (see Chapter 2 for more discussion of needed expertise for decision-making). The intricacies and nuances of statistical modeling highlight the importance of having inputs from several statisticians or others with deep technical understanding, just as the input from multiple scientists familiar with the content is routine. Data do not always speak for themselves—they speak through the filter of statistical models—and getting input from multiple experts in statistical analysis and modeling can be critical in understanding the extent to which the models being used are introducing clarity or distortion.

A further source of disagreements about statistical analyses is whether to analyze the data from a study according to the intention to treat (ITT) perspective or “as treated”. Assuming that all confounders are identified and well measured, the simplest approach to compare two treatments is an analysis that follows the ITT principle. In RCTs, an ITT analysis measures the effect of being assigned to a treatment; when all research participants initiate the treatment, an ITT analysis measures the effect of treatment assignment. For ITT analyses of large RCTs, only data on each individual’s treatment assignment and outcome are needed (Hernán and Hernandez-Diaz, 2012).

The observational analogue to ITT analysis needs to adjust for potential confounders. An observational ITT estimate will have only a causal interpretation as the effect of treatment initiation if all confounders have been appropriately identified, measured, and included in the analysis. Adjustment methods include stratification, outcome regression, standardization, matching, restriction, inverse-probability (IP) weighting, and g-estimation (Hernán and Robins, 2006).

Instrumental-variable approaches can also be used to estimate the effect of treatment initiation in observational studies (Hernán and Robins, 2006). An “instrumental-variable” is a variable on which exposure, but not the outcome,

depend. In an RCT, the “instrument” is the randomization itself, which determines drug treatment but by itself has no relationship with the outcome. “Natural experiments” often have an embedded instrument that causes groups to be treated or exposed in different ways unrelated to the group characteristics. The most common instrument is geography, that is, different regions of the country (or care settings) that use different treatment regimens for essentially equivalent patients. The instrumental variable method, however, relies on strong assumptions, the primary one being the validity of the instrument itself, and these always have to be examined closely.

When people drop out of a study or are otherwise lost to followup, their outcomes cannot be ascertained. As a result, regardless of whether the study is an observational study or an RCT, the ITT effect cannot be calculated directly. Loss to followup forces investigators to make untestable assumptions about why people were lost to followup. If one assumes that the people lost and not lost to followup are perfectly comparable, one would restrict the ITT analysis to participants on whom there was complete followup. A safer approach is to adjust for measured predictors of loss to followup that also predict the outcome (NRC, 2010). Such adjustments can be appropriately achieved with longitudinal outcome models by regression if the factors are non–time-varying or by inverse probability weighting otherwise.

The magnitude of the ITT effect depends on the type and patterns of nonadherence, which may vary among studies, whether they are observational studies or RCTs. Dependence of the ITT effect on nonadherence makes the effect particularly unfit for safety and noninferiority studies. One alternative to estimating the ITT effect is estimating the effect of treatment if all participants had adhered to the intended treatment regimen. In RCTs, that approach would estimate the effect of treatment if no one had deviated from the protocol. Such an effect is sometimes referred to as the effect of continuous treatment. To estimate the effect of continuous treatment, whether in observational studies or in RCTs, one needs to compare groups of people according to the treatment they actually received rather than the treatment to which they were assigned (an as-treated analysis) and make untestable assumptions about the time-varying reasons why people adhere or do not adhere to treatment. Specifically, valid estimation of the effect of continuous treatment requires that all time-varying factors that predict both adherence to treatment and the outcome of interest be measured reasonably well.

In RCTs, as-treated comparisons ignore the randomization assignment and therefore involve comparisons of groups that are not necessarily balanced with respect to prognostic factors. As-treated estimates can be confounded in RCTs. The problem with using ITT in safety analyses was noted in the FDA Avandia briefing documents (Graham and Gelperin, 2010a):

The primary analysis for RECORD was intention-to-treat (ITT), which is generally accepted as the preferred analytic method for trials conducted to show