11. The Web-Enabled Research Commons: Applications, Goals, and Trends

– Thinh Nguyen35

Creative Commons and Science Commons

Today we have heard about some of the problems that material transfer agreements (MTAs) have posed for the sharing of materials for research. Almost 10 years after the NIH, under Harold Varmus, issued a challenge to universities funded by the NIH to simplify the way that we share materials, this problem has not gone away, and many would argue that it has become worse.

Norms that led to these problems took a long time to develop. Once entrenched, they are difficult to reverse. Because these are legal rather than professional rules, they are not informed by the needs of bench science, as they must be.

There is a risk that we will replicate that problem for how scientists share data. To avoid doing so, we need to create a robust, scientific consensus that serves the needs of science and of the public. The legal modes of sharing that we choose should be driven by that consensus.

In the first part of my talk, I will take a specific example—a bioinformatics project undertaken by Science Commons—to provide some motivation and context for a set of goals that I want to propose for data sharing. Second, I will map those goals against three, broad categories of legal regimes that typically we see in data sharing. In the final part, I will discuss some possible convergences: a possible emerging consensus among scientists about how to share data, particularly in the genomics area, and how to build a system for data sharing that promotes, rather than inhibits, scientific discovery.

The example that I will use is the Neurocommons Project from Science Commons. We heard this morning about using ontologies and the semantic Web to link different kinds of data and to use computational bioinformatics to make systematic discoveries based on the corpus of knowledge we already have. Consider just a few genes that are involved in Huntington’s disease, or that are thought to be involved in the development of the disease. For each of these genes or proteins, there are in some cases up to 40,000 or even 100,000 papers attached to it.

If you are a scientist trying to understand how these interactions work and you are faced with hundreds of thousands of papers, you must narrow your search, for you cannot possibly read all those papers. The process by which you narrow your search is driven by heuristics that have worked well for you, but that involve discarding the vast field of data that is otherwise available. So, the bioinformatics challenge is how to use computational approaches to tackle an information-processing problem that would overwhelm human beings trying to make sense of a sea of data. Fortunately, computers are very good at this kind of task. All they need is access to content. But that is where social and legal constraints, rather than technical limitations, come into play.

In the Neurocommons Project, we draw out all these different types of sources. These are different databases or sources for information about genes that might affect neurological diseases, and the challenge is that all of them are in different formats, they use different terminology, and they are not really built to be compatible. So, how do you put them all together and start to do federated searches and queries?

_____________

35 Presentation slides available at: http://sites.nationalacademies.org/xpedio/idcplg?IdcService=GET_FILE&dDocName=PGA_053707&RevisionSelectionMethod=Latest.

The approach that we have chosen is to use available ontologies and, specifically, a technology called RDF (Resource Description Framework) to traverse these data sources on the Web. To provide an analogy, on the Web a URL is basically used just to link one Web page to another. However, you can also conceive of URLs as definitions of things, and then the links could have meaning. Rather than just saying this page is linked to that page, we can say this receptor is located in that cell membrane and use these terms to connect different data sources. That is to say, the Web can be used to link not only pages but also concepts and, by doing so, to merge definition and knowledge.

So, for example, each of these concepts could exist as a separate link or a separate resource on the Internet. Then, when you want to make that connection, you just link other things to these networks of definitions. When you study the genes related to Alzheimer’s, the networks of biological pathways are extremely complicated and the only way that you can begin to elucidate them using computational approaches is to build the skeleton of meaning on which the flesh of knowledge can be attached.
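The idea of links that carry meaning can be illustrated with a minimal sketch. It is not the Neurocommons implementation: it represents RDF-style statements as plain (subject, predicate, object) tuples in Python, and every identifier under http://example.org/ (the gene, protein, and relation names) is a hypothetical placeholder. The point is only that two sources that reuse the same identifiers can be merged by simple union and then queried together.

```python
from typing import Set, Tuple

Triple = Tuple[str, str, str]  # (subject, predicate, object), all given as URLs

EX = "http://example.org/"  # hypothetical namespace, for illustration only


def uri(name: str) -> str:
    """Build an identifier in the hypothetical example namespace."""
    return EX + name


# Two independent sources that happen to use the same identifier for protein P1.
source_a: Set[Triple] = {
    (uri("gene/G1"), uri("rel/encodes"), uri("protein/P1")),
}
source_b: Set[Triple] = {
    (uri("protein/P1"), uri("rel/located_in"), uri("cell/membrane")),
}


def objects(graph: Set[Triple], subject: str, predicate: str) -> Set[str]:
    """Return every object linked to `subject` by `predicate`."""
    return {o for s, p, o in graph if s == subject and p == predicate}


def locations_of_gene_products(graph: Set[Triple], gene: str) -> Set[str]:
    """Follow gene -> encodes -> protein -> located_in -> location."""
    found: Set[str] = set()
    for protein in objects(graph, gene, uri("rel/encodes")):
        found |= objects(graph, protein, uri("rel/located_in"))
    return found


# Merging is a set union because both sources share identifiers.
merged = source_a | source_b
print(locations_of_gene_products(merged, uri("gene/G1")))
```

Neither source alone can answer the question; the answer appears only after the two sets of statements are combined, which is why access to the underlying content matters so much for this kind of work.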

The challenge is that, right now, we are limited to using sources that are open access or in the public domain, but there are a lot of journals with primary sources that are not available for text mining. There are consequently lots of holes in our ability to do this kind of research because of the closed status of some journals. Many databases, including government databases, are built upon restrictive licensing models that make data integration impractical or impossible. Thus, the challenge is twofold. How do we reformat what we already have stored in databases, journals, and other sources of knowledge into a digitally networked commons that we can connect together? And how do we also get the materials that are related to these digital objects into the emerging research Web so that they are accessible to those who need them most?

To explore the question of what kind of data-sharing protocol enables the kind of research we have been discussing here, I want to first describe three “licensing” regimes. The first broad category is data in the public domain. Such data carry no restrictions on use or distribution, and if there is any copyright, it is waived. The case in which copyright exists but has been waived is sometimes called the “functional public domain,” because the data can be treated as public domain even if their legal status is different. The good news is that there are fields of research where the functional public domain is the norm. The human genome research community is one example, which evolved from the very deliberate consensus formed by the Bermuda Principles.

The second kind of regime is of community licenses, such as open source licenses, like GPL, and the Creative Commons licenses. What they have in common is that they are standard licenses that everyone within the relevant community uses. They sometimes offer a range of different rights, with some rights reserved. So, this is not the public domain because there are some restrictions, but, generally, the information or the resources are available to everybody under the same terms.

The third regime is private licenses, and, by that, I mean custom agreements that are specific to particular institutions or providers. These of course are the norm for commercially available sources of data, and they range widely in terms of the rights provided to the user. However, in general, they are fairly restrictive in terms of redistribution or sharing of data, because of the need to protect a revenue stream.

Based on the models that I have been talking about and the need for bioinformatics, I want to propose a set of goals against which we measure these legal regimes.

Goals

Interoperable: data from many sources can be combined without restriction

Reusable: data can be repurposed into new and interesting contexts

Administrative Burden: low transaction costs and administrative costs over time

Legal Certainty: users can rely on legal usability of the data

Community Norms: consistent with community expectations and usages

The first goal is interoperability. The question is: Can data from different sources be combined? We have seen that the ability to combine the data is really very important for bioinformatics. You cannot link together knowledge that leads to new discoveries if you are not aware that such knowledge exists. While the growing costs of scientific periodicals have been widely discussed, the most important issue to scientists is not only cost, but accessibility and searching. In other words, the problem is interoperability of knowledge.

Interoperability

| Regime | Rating | Notes |
| --- | --- | --- |
| Public Domain | **** | Can be combined with other data sources with ease |
| Community Licenses | *** / ** | Depends on type of license: share-alike or copyleft are unsuitable, but attribution-only licenses are less problematic |
| Private Licenses | * / ** | Depends on restrictions, but not scalable; permutations too large |
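One way to read "permutations too large" is as a counting argument. The sketch below assumes, purely for illustration, that every pair of sources under different custom licenses needs its own compatibility review before the data can be combined. The function name and the source counts are hypothetical.

```python
from math import comb


def pairwise_reviews(n_custom_licenses: int) -> int:
    """Number of distinct license pairs among n custom licenses."""
    return comb(n_custom_licenses, 2)


# How the (assumed) review load grows with the number of sources.
for n in (10, 100, 1000):
    print(n, pairwise_reviews(n))
```

Under that assumption the review load grows roughly with the square of the number of sources, whereas public domain sources need no pairwise review at all.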

Transaction costs and the administrative burden are significant barriers to data integration. What are the costs not only for any specific transaction, but over time? Even something as simple as an attribution requirement, when you are required to give citation, can become a huge burden if you are looking at thousands of different data sources or millions of data elements.

Administrative Burden

| Regime | Rating | Notes |
| --- | --- | --- |
| Public Domain | **** | No paperwork or legal review needed |
| Community Licenses | *** | Little paperwork, but some legal review needed (attribution stacking issues) |
| Private Licenses | * | Large amounts of paperwork, frequent legal review needed |

We saw that in particular with a recent edition of Wikipedia from Germany that was accompanied by 20 pages of attribution in print so tiny that nobody could read it, but which was legally required to be there. These were just useless additional pages that served no purpose other than to comply with a legal requirement. That is not a problem that we want to saddle scientific projects with over time.
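The attribution stacking problem can be made concrete with a small sketch. It assumes a simplified rule in which every source that requires attribution must be credited in anything derived from it. The `Source` class, the `credits_for` function, and the source counts are hypothetical and are not taken from any real license text.

```python
from dataclasses import dataclass
from typing import Iterable, List


@dataclass(frozen=True)
class Source:
    name: str
    requires_attribution: bool  # False stands in for public domain data


def credits_for(sources: Iterable[Source]) -> List[str]:
    """List every source that must be credited in the derived data set."""
    return sorted({s.name for s in sources if s.requires_attribution})


# A hypothetical integration project drawing on 1,500 sources.
licensed = [Source(f"licensed-db-{i}", True) for i in range(1000)]
public = [Source(f"public-db-{i}", False) for i in range(500)]

merged_credits = credits_for(licensed + public)
print(len(merged_credits))  # one credit line per attribution-requiring source
```

Each further project that builds on the merged data set inherits the whole list and adds its own entries, so the credit list only grows, while the public domain sources contribute nothing to it.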

The final goal is: How does the legal status map against community norms? What are scientists actually doing in the research lab? Is the activity consistent with what scientists are doing or does it require them to change their behavior in some way? This is critical because we want scientific norms to drive legal rules, not the other way around. In addition, there has to be legal certainty. If you are going to put the materials or the data into the public domain, they have to stay there. If people cannot rely on a stable set of rights over time, then projects which build upon other projects become untenable.

Legal Certainty

| Regime | Rating | Notes |
| --- | --- | --- |
| Public Domain | **** / *** | Clear rights; generally irrevocable (copyright should be addressed) |
| Community Licenses | *** | Generally credible, good track record with open access and open source licenses |
| Private Licenses | ** | Must be considered individually; few private licenses tested by time |

How do the different licensing regimes compare in terms of these goals? I would argue that public domain, at least for scientific work, is clearly the best fit. That should not be surprising, because that has been the prevailing norm among scientists since the first scientific journals were published. That is not to say that scientists are not competitive or that they do not hoard pre-publication data. That goes with the territory, which involves intense pressure to publish and fierce competition for tenure. But at the end of the day, when results have been published and discoveries have been claimed, that information should be freely available to all, not least because of the need to verify the validity of claims made. That need for proof in science is a crucial scientific norm, wonderfully summarized by W. Edwards Deming in the phrase, “In God we trust, all others must bring data.” The availability of data in the public domain is crucial for the operation of this norm.

Public licenses, like open source licenses and Creative Commons licenses, come in a distant second. While these licenses have served many useful purposes in the field of computer programming and the sharing of artistic content or Web content, they are relatively new legal inventions that are foreign to many scientists. The lack of understanding of how these licenses work, and their legal jargon, may deter widespread adoption. In addition, even those of us who design these licenses do not yet understand how to adapt these types of licenses to the scientific enterprise, and so they can present hidden dangers. For example, the embedded attribution requirements discussed above, which are a feature of all open source licenses and Creative Commons licenses, may seem perfectly reasonable to a computer programmer or artist who only cares about a single work, but for a scientist who must integrate data across many sources, such legal rules quickly become burdensome, if not impossible to follow.

In addition, almost all of these licenses change over time. Common open source licenses like GPL, BSD, and Mozilla have gone through multiple revisions, as have the Creative Commons licenses. Such revisions incorporate best practices and changing community norms, and for cultural sharing, they are perfectly workable. But to build a scientific infrastructure that changes, if at all, in time spans measured by decades and not years, on such licenses would be like playing a ball game whose rules are revised every inning.

Finally, commercial or proprietary licenses—whether expressed in click-through agreements or Web site terms and conditions of use—are proliferating widely throughout the Web. Even some government Web sites share data using customized data licenses that are restrictive and burdensome. Of course we cannot avoid such licenses entirely, particularly for commercial sources of data, where they are a necessity. But there should be no reason why universities, government agencies, or other public institutions that are charged with the dissemination of data for the public good should embrace such onerous mechanisms. At least the argument needs to be made that in these contexts, “over-lawyering” is unnecessary and harmful.

Because of all these reasons, we have started to see some convergence recently in the data community. One that I have been involved with is CC0. It is a result of a three-year policy discussion within Creative Commons and with our community. Technically, CC0 is not a license, but a waiver of copyright and certain related rights, including database rights that exist in Europe and other jurisdictions. In essence, CC0 allows a data owner to guarantee to the public forever the right to use the data in the functional public domain. But if science has been operating for centuries in the functional public domain, why is such a tool even needed? The reasons have to do with the recent (by historical standards) expansion—by courts and legislatures—of the boundaries of copyright to encompass more and more of what has been traditionally considered unprotected by copyright. This puts many categories of data in a minimal state of “borderline copyright.” That is, you may have a collection of data that is mostly factual data, so it is questionable whether or not there is enough creativity to qualify for copyright protection, but nevertheless there is sufficient residual doubt that you cannot entirely rely on such a conclusion. This is where CC0 is useful to remove that last residuum of doubt.

Another reason is that in Europe, there is a sui generis database directive that gives database owners rights beyond traditional copyright in databases. CC0 also can be used in Europe to waive those database protection rights.

Even within the copyright system, different countries have different standards for what qualifies for copyright protection. Australia has a different standard from Canada, which has a different standard from the United States. It is very hard, consequently, for international collaborations to figure out who has what rights and when. That is why CC0 is another very useful tool to use in that context to restore data to the functional public domain.

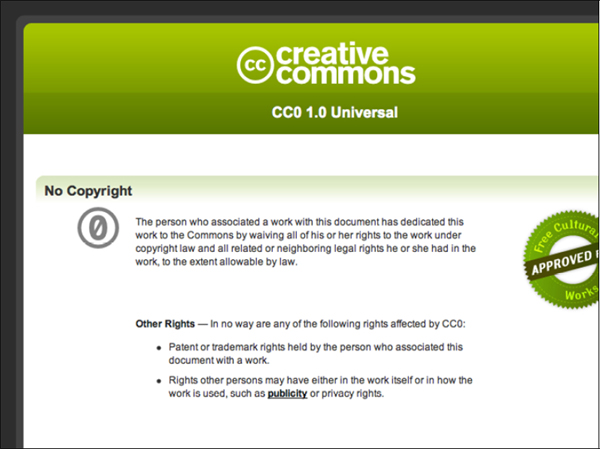

Figure 11-1 shows the deed for CC0. This is what the summary of it looks like.

FIGURE 11-1 Summary of deed for CC0.

SOURCE: Creative Commons, http://creativecommons.org/publicdomain/zero/1.0/

Beneath it is actually a legal document that spells out the legal effects in more detail. As you see, the goal is that whoever associates this with their work is releasing it into the public domain. They retain patent and trademark rights and publicity rights, which cannot be waived, but they waive their copyright and related rights.

The goals of scientific research, now more crucially than ever before, demand a degree of transparency and openness that is undermined by restrictive legal rules. We need a consensus among the stakeholders of public science regarding common goals and infrastructure, so that the right rules may be chosen. Without such a consensus, a fragmented landscape of norms and legal rules will make data integration and sharing difficult or impossible. Of all the possible rules, the public domain remains the best choice for most public sources of data.

12. Comments on Designing the Microbial Research Commons: Digital Knowledge Resources

–Katherine Strandburg36

New York University Law School

In this presentation I will offer a few comments on some of the proposals in the monograph. At the outset, though, I will say a little about my background.

My interest in this topic stems from two things. First, I have done a fair amount of work on patents and research tools and on how the social norms of scientists might promote the sharing of research tools, how that is affected by patenting, and so on. The second thing is that before I went to law school and became a lawyer, I was a physicist at Argonne National Lab. So I used to be a scientist.

My comments today are not so much from the legal perspective. Instead, I want to emphasize the importance of social norms and of what in economics would be called “preferences,” or what scientists really want to do. In other words, I will be looking at the proposals less from the institutional perspective and more from the scientist’s point of view. In doing that, I use a concept that I refer to as Homo scientificus, playing off the economics concept of Homo economicus, or the rational, self-interested actor.

One can develop a typical preference profile for scientists based on various empirical studies, which in essence attempt to answer the question, “What do scientists care about?” The contours of such a profile would not be surprising to anyone in this room.

Scientists really care about doing science. They care about performing their research. They care about being able to do the research they want to do, and they want to know what other scientists are doing. In order to be able to do all this, to satisfy those preferences, they need access to various scarce resources. The scarce resources that they need most are, first, funding and, second, some sort of attention from other scientists so that they have the ability to go to conferences, talk to people, find out what is going on, and be in on the action. And although it is something that everybody knows, it is still important to emphasize that access to these resources is very strongly mediated by publication. If open access is to succeed, it will have to align with these preferences and, in particular, with the importance of publication in science.

In discussing open access, I am going to talk first about the issue as it relates to journals and then about it as it relates to data, because I think that these two issues are somewhat different when looked at from the researcher’s perspective.

For journals there are at least three different ways that one could achieve the goal of complete open access. One way would be to try to create new open-access journals, perhaps having them based at universities, as Paul Uhlir discussed. A second path would be to prevail somehow upon existing journals to adopt open access. A third path that might lead toward open access—and which is the one that I want to emphasize—is a parallel path in which these proprietary journals continue to exist but, at the same time, one promotes open-access manuscript repositories.

_____________

36 Presentation slides available at: http://sites.nationalacademies.org/xpedio/idcplg?IdcService=GET_FILE&dDocName=PGA_053669&RevisionSelectionMethod=Latest.

Let me explain why I think we ought to focus on that approach. My argument relies on the importance of something called “impact factor.” Impact factor is a measure of the impact or importance of a particular journal, and the existence of such a measure exacerbates the emphasis on high-status publications. This emphasis has always been around, of course, but it has been magnified by the recent trend to try to quantify publication records using impact factor. The importance of a journal has become something we can measure objectively, and the things we can measure matter.

This emphasis on measuring journals’ impact points to what may be a major problem for open access. The impact factor for current open-access journals is not bad, on average. The average impact factor is 4, and open-access journal impact factors range up to 9. If you compare this with the restricted journals purely on the basis of the average, it does not seem like much of a difference, as their average is 5.77. But what is more important is that the impact factors of the restricted journals range up to 50. In essence, all of the journals with the largest impact factor are restricted. I would submit that an impact factor of 50 trumps almost every other consideration for almost any scientist who is deciding what to do with a paper. A long-term altruistic belief in open access is just not going to win.

Thus efforts to encourage open-access models cannot depend on somehow getting scientists to forgo publication in high-impact journals. We need a long-term strategy for either establishing open-access journals while researchers can still publish in the existing high-impact journals or else somehow increasing the impact of open-access journals. But this second approach in particular will not be easy. You can explain it in various ways (path dependence, network effects, or preferential attachment), but once certain journals have been established as having high impact factors, it is very hard to dislodge them from that position or, conversely, to build up the impact factor of a new journal. Scientists are unlikely to vote with their feet for the open-access model as long as we have this issue with impact factors.

So, let us consider the first option for achieving open access: starting up new journals, perhaps based at universities. I have to be a bit of a wet blanket on the idea of using law reviews as a model. From personal experience of having published in both law reviews and scientific journals, I do not think that law reviews offer a good model, for a simple reason. With law reviews, there was a tremendous proliferation of journals. Because every journal was associated with a university, it came to be that every university had to have a journal. After all, Harvard has one, and Yale has one, so we ought to have one, too. The result was an overly fine-tuned ranking of the journals, so that it became very important for an author to figure out exactly in which journal his or her article would be placed. The result is an overemphasis on placement.

Furthermore, graduate students are not law students. Law students are not doing research, and they need a publication venue. Graduate students, on the other hand, do not need a publication venue—they publish in the regular journals—and they generally do not have time for the journal editing functions which are provided by law students for law reviews.

I also doubt whether law review publication is really faster. I know from my former experience and also anecdotally from people I have talked to that in physics you can get something published in about six months. I do not think law reviews do much better than that. Finally, I am also not convinced that there will be synergies between the university’s educational mission and the publishing of journals in science the way there may be in law. While universities might have a big role to play in open-access publishing, I do not think that law reviews offer the right analogy.

The second option would be to get existing journals to adopt open-access policies. I believe we can go some way down that route, and I think we have seen that already with Springer Open Choice and other programs. But overall I think this option is unlikely to succeed because the journals, particularly the high-impact-factor journals, have tremendous bargaining power. Intellectual property laws protect these journals’ proprietary approaches, and it will be difficult to put direct pressure on them. Even the open-access tiers, such as the Springer Open Choice method, are problematic because of the issue of where the money is coming from. Suppose I want to publish my article in an open-access journal. It will cost me, and the money will have to come from somewhere. If universities were to get directed funds to do that, initially that sounds like a great idea, but where is that money coming from? Is it going to come out of people’s research budgets? Few scientists are likely to make that choice, given how tight research budgets are today.

The third option—and what I think is the more promising possibility—is author self-archiving in digital repositories. To establish manuscript repositories, you need only get the journals to acquiesce, so they will not sue you if you put your manuscript in the repository. They do not have to do anything different or change their mode of operation, which is a big advantage to this approach. The repository approach also lets the universities do those things that they can do easily and well, such as putting out manuscripts and getting the computer scientists and other researchers together to figure out how to mine the data in the manuscripts, while not asking the universities to take on those things that are either more difficult for universities to do, such as hard copy printing, or that are hard to dislodge from existing journals, such as the credentialing function. Finally, it would be possible to get funding agencies to mandate that their grant recipients deposit their manuscripts in these repositories, which would solve the collective action problem and align the incentives. Experience with the National Institutes of Health indicates that journals do not prohibit depositing in these repositories.

Last year a bill was introduced in the Senate, the Federal Research Public Access Act, which would mandate that all agencies ensure open-access deposit for most federally funded research. It did not go anywhere the last time it was introduced, but I think there is some hope for something like this now because the Obama administration is making a big open government push, and this is totally consistent with that. The manuscript repository concept also tends to mitigate concerns with database protection statutes in Europe because even if the journal maintains its own database, that database is no longer the sole source.

Of course, one would hope that this could be integrated with material in data repositories. You could even require, for example, that any researcher who used data from the repository would have to deposit any papers that resulted from that data. You could even go further and require researchers who used data from the repository not only to deposit any papers that came out of use of the data, but also to deposit the data and the materials associated with the papers. This is somewhat analogous to an open source software General Public License (GPL).

If such open-access manuscript repositories were successfully established, there would be at least two possible fates for the proprietary journals. One possibility is that the journals would adapt and move into a service provider role. They might have to finance this with page charges, but plenty of journals are financed with page charges now anyway. They might make hard copies or archival versions of the journals, or perhaps they might create better or “premium” database services that competed with the open-access repository.

Or perhaps in the long term it might turn out that the proprietary journals are not commercially viable. If so, then the scientific societies or the universities or the knowledge hubs could essentially replace them or take them over, inheriting their impact factors. Or perhaps these institutions might simply partner with the proprietary journals. There are various possibilities for the future of these journals. Of the three possibilities, I think that manuscript repositories are probably the most practical path to an open-access world.

Switching gears, let us consider the data depositories. In many ways the issues regarding these data depositories are similar to the issues relating to material and research tool sharing. In particular, the major potential problem with data depositories is the collective action problem. This refers to the temptation to withdraw data or materials from the pool without contributing to the common pool.

The situation is similar to the classic prisoner’s dilemma. Suppose there is a group of scientists each deciding independently whether to share or not share their data. Let us focus on one particular scientist, Scientist A, who is trying to decide whether to share or not share. If everyone shares, then everyone gets whatever the value of the database is once all the data is in it, which, of course, depends on how many scientists contribute. Scientist A will get a bit of first-mover advantage regarding his or her own data, perhaps from knowing the data better than others or perhaps because the repository gives Scientist A six months to use it exclusively. Scientist A also gets some reputational value from contributing, which might come in the form of attribution when someone reuses the data. Finally, Scientist A must take into account the fact that there is a certain cost to contributing—not just actual costs, but also opportunity costs.

On the other hand, if Scientist A does not share but everybody else does share, Scientist A still gets essentially the same benefits—access to everyone else’s data plus exclusive use of his or her data. There might also be some cost to not sharing—a penalty imposed by the granting agency, for example, or reputational cost.

The bottom line in the economics approach to understanding the situation, which assumes Scientist A is a rational actor, is that Scientist A will do whatever offers the greatest return—share or not share, depending on a rational calculation of the benefits and the costs of each alternative.

In this overly simplistic, rational choice model the value of the database does not play a role, because Scientist A does not think that his or her choice is going to affect what everybody else is going to do. Either everybody else will not share, in which case there is no database, or else everybody else will share, and Scientist A can have a free ride—get the benefits of the database without the costs of sharing.

This is the typical free rider problem, but modeling it explicitly with an equation involving the benefits and costs to Scientist A emphasizes that the success of a depository depends on increasing the benefits and reducing the costs of sharing. Even if we believe that people will naturally want to do the right thing, that they are going to feel guilty if they take data and do not share their own, it is still important to make the economic case as attractive as possible. One of the best ways to do this is to reduce the cost of contributing.
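The rational choice model described above can be written down as a short sketch. The talk does not give the equation itself, so the form used here is an assumption: Scientist A's payoff from sharing is the database value plus first-mover advantage plus reputation minus the cost of contributing, and the payoff from withholding is the database value plus exclusive use minus any penalty. All field names and numbers are hypothetical.

```python
from dataclasses import dataclass, replace


@dataclass(frozen=True)
class Payoffs:
    database_value: float   # value of the pooled database to A if the others share
    first_mover: float      # head start A keeps on his or her own data after depositing
    reputation: float       # credit (for example, attribution) earned by depositing
    contribute_cost: float  # actual plus opportunity cost of depositing
    exclusive_use: float    # value to A of keeping his or her own data private
    penalty: float          # funder sanction or reputational cost of not sharing


def payoff_share(p: Payoffs, others_share: bool) -> float:
    pool = p.database_value if others_share else 0.0
    return pool + p.first_mover + p.reputation - p.contribute_cost


def payoff_withhold(p: Payoffs, others_share: bool) -> float:
    pool = p.database_value if others_share else 0.0
    return pool + p.exclusive_use - p.penalty


def best_choice(p: Payoffs, others_share: bool) -> str:
    """A's choice under the (assumed) payoff equations above."""
    if payoff_share(p, others_share) > payoff_withhold(p, others_share):
        return "share"
    return "withhold"


# Hypothetical numbers: contributing is costly and withholding is unpunished.
base = Payoffs(database_value=100, first_mover=5, reputation=2,
               contribute_cost=4, exclusive_use=6, penalty=0)

# Same scientist after the depository cuts the cost and the funder adds a penalty.
improved = replace(base, contribute_cost=0.5, penalty=1)

for label, p in (("base", base), ("improved", improved)):
    print(label, best_choice(p, others_share=True), best_choice(p, others_share=False))
```

Because the database value appears on both sides of the comparison, it cancels out: in this sketch Scientist A shares only when first-mover advantage plus reputation minus cost exceeds exclusive use minus penalty, whatever the others do. That is why the levers available to a depository are the cost of contributing, the rewards for contributing, and the penalty for withholding.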

An interesting article in Nature from a couple of weeks ago called "Empty Archives" described exactly this phenomenon. Everybody said it would be great to have this archive, but when it was set up at a university, at a cost of perhaps $200,000, nobody contributed. Why did they not contribute? Probably they just did not have time. They were too busy. It was too costly to them to contribute.

It is also important to provide rewards for contributing. This is why I believe the attribution aspect is important. We ought to think carefully about how to structure this incentive, because the best approach might not be the same mechanism as the usual citation mechanism or the usual collaboration mechanism. Furthermore, when we are considering what the best reward mechanism might be, we should keep in mind that rewards for contributing to the database are competing with the rewards for just sharing informally with collaborators. On the one hand, if I put my data in the repository, I may get rewarded by getting citations from everybody who uses it. On the other hand, if I keep my data for myself, it might help me get collaborations with other people. That could be quite valuable. So it is important to think carefully about how to structure the rewards.

One other factor that must be taken into consideration is the value a researcher gets from depositing data versus not depositing them. If the data are very interdependent—that is, if a scientist’s set of data is not worth very much by itself—then the scientist is much more likely to contribute the data than if it is possible, for example, to write 10 papers based on those data alone. Clearly, depositories are likely to work better for interdependent data, so it would be a good idea to look for opportunities to use them for interdependent data.

Finally, what about people who are not academics, such as scientists who are in industry? We should think carefully about what to do about industry scientists because of the free rider problem of people withdrawing data without contributing. Within the academic community, that may be difficult to do. Once you publish, people know that you have accessed the data, so it is hard to hide what you have done. If industry scientists have open access to the data, however, they are probably much more likely to be free riders because they may not be publishing everything. They also may not care as much if people are talking about them behind their back, and they may not rely on funding from the same funders. Furthermore, it is just much easier for them to keep what they are doing secret.

This is a problem. Should we put some fences around data repositories to keep industry scientists out? I do not know. Maybe, maybe not. Perhaps we will decide that because public money has gone into making these data, we should encourage private actors to do whatever they want with them. If you put a fence around a resource, however, you have it available to trade with people on the outside. You can get them to pay for the data or perhaps trade their own data for them. It is something we should think about.