This chapter addresses the following key study question:

Key Question #4. To what extent are the final outputs from NIDRR grants of high quality?

The chapter answers this central question and also provides an assessment of the methods used by the committee to conduct the summative evaluation. The scope and methods used to conduct the External Evaluation of the National Institute on Disability and Rehabilitation Research (NIDRR) and its Grantees were described earlier in Chapter 2. The first section of this chapter elaborates on the methods to evaluate the quality of outputs. The second section describes the results of the assessment of grant outputs and provides recommendations for improving the quality of outputs. The final section presents the committee’s self-assessment of the methods and recommendations for future evaluations.

SUMMARY OF METHODS DEVELOPED FOR ASSESSING THE QUALITY OF OUTPUTS

The methods and procedures developed by the committee for assessing the quality of outputs involved first determining the criteria and dimensions to be used for the assessment. Second, a questionnaire was developed to assist grantees in nominating outputs for review and to elicit supplemental descriptive information about those outputs. Third, a sampling plan was developed for selecting grantees who would be invited to participate in the evaluation. Fourth, the committee and staff worked with grantees who agreed to participate in the evaluation, and gathered and cataloged the outputs and supplemental information submitted for the committee’s review. Finally, the committee assessed the outputs through an expert review process.

Development of Quality Criteria

A key element of the summative evaluation was the response to NIDRR’s request to develop criteria for assessing the quality of its grantees’ outputs. In developing these criteria, the committee drew on its own research expertise, recommendations of the external advisory group convened by NIDRR in the course of planning this evaluation (National Institute on Disability and Rehabilitation Research, 2008), and methods used in other National Research Council (NRC) and international studies that have evaluated federal research programs (Bernstein et al., 2007; Chien et al., 2009; Ismail et al., 2010; National Research Council and Institute of Medicine, 2007; Panel on Return on Investment in Health Research, 2009; Wooding and Starkey, 2010; Wooding et al., 2009).

Quality Criteria

Four criteria were developed for the evaluation of grantee outputs: (1) technical quality, (2) advancement of knowledge or the field, (3) likely or demonstrated impact, and (4) dissemination.

Technical quality The technical quality of outputs was assessed using dimensions that included the application of standards of science and technology, appropriate methodology (quantitative or qualitative design and analysis), and the degree of accessibility and usability.

Advancement of knowledge or the field (e.g., research, practice, or policy as relevant) The dimensions used to assess this criterion included scientific advancement of methods, tools, and theory; the development of new information or technologies; closing of an identified gap; and use of methods and approaches that were innovative or novel.

Likely or demonstrated impact This criterion was used to assess the likely or demonstrated impact of outputs on science (impact, citations), consumers (health, quality of life, and participation for people with disabilities), provider practice, health and social systems, social and health policy, or the private sector/commercialization.

Dissemination Dimensions of dissemination assessed included the identification and tailoring of materials for reaching different audience/user types; collaboration with audience/users in identifying content and medium needs/preferences; delivery of information through multiple media types and sources for optimal reach and accessibility; evaluation of dissemination efforts and impacts; and commercialization/patenting of devices, if applicable.

Scale Developed for Rating the Criteria

For the output ratings, the quality scale used by the committee was substantively different from the opinion scale used in the evaluation reported in earlier chapters for surveys of stakeholder organizations and peer reviewers. A 7-point scale was used to rate the criteria at varying levels of quality, where 1 indicated poor quality, 4 indicated good quality, and 7 indicated excellent quality. The committee deliberated at length in determining what the midpoint score (4) would represent on the quality scale and decided that the midpoint should be “meeting expectations for good quality.” This midpoint anchor description was operationalized for assessing the technical quality of publications, which made up 70 percent of the outputs reviewed. For publications, a rating of 4 was generally assigned to articles published in peer-reviewed journals, on the grounds that they had already been peer reviewed and met the scientific standards of their respective fields of research or development. However, articles could be rated higher or lower than 4 if, after review, their quality was determined to be higher or lower than “good.” For other output categories (tools, devices, or informational products), there was no such common way to operationalize the midpoint anchor, but the committee applied its expert judgment in determining ratings for these other outputs relative to the standard applied to the publications category.

Box 6-1 provides examples of quality indicators considered by committee members in determining scores for each criterion. These examples are not intended to be exhaustive but to illustrate the attributes of outputs that were considered in their review. In rating the outputs, committee members drew on their scientific expertise to consider the outputs’ quality with respect to the dimensions within each criterion (see the above discussion). (More information on the review procedures is presented later in this section; the review procedures guide and output rating sheet used by committee members are included in Appendix B.)

Grantee Questionnaire

BOX 6-1

Examples of Quality Indicators Considered in Determining Output Scores

Technical Quality

• Strength of literature review and framing of issues

• Competence of design, considering the research question and other parameters of the study

• Quality of measurement planning and description

• Analytic methods and interpretation; degree to which recommendations for change are drawn clearly from the analysis

• Description of feasibility, usability, accessibility, and consumer satisfaction testing

Advancement of Knowledge or the Field

• Degree to which a groundbreaking and innovative approach is presented

• Application of a formal test of a hypothesis regarding a technique used widely in the field to improve practice

• Level of advancement and improvement of current classification systems

• Usefulness of descriptive base of information about factors associated with a condition

• Novelty of ways of studying a condition that can be applied to the development of new models, training, or research

Likely or Demonstrated Impact

• Degree to which the output is well cited or has promise to be (for newer articles)

• Potential to improve the lives of persons with disabilities by increasing accessibility

• Possibly transformative clinical and policy implications

• Potential for building capacity, lowering costs, commercialization, etc.

• Influence on the direction of research, use in the field, or capacity of the field

Dissemination

• Method and scope of dissemination

• Description of the evidence of dissemination (e.g., numbers distributed to different audiences)

• Level of strategic dissemination to target audiences when needed

• Evidence of reaching the target audience

• Degree to which dissemination used appropriate multiple media outlets, such as webinars, television coverage, Senate testimony, websites, DVDs, and/or social network sites

SOURCE: Generated by the committee.

NIDRR supplied the committee with information gathered from grantees in their Annual Performance Reports (APRs) (Research Triangle International, 2009). Grantees are required to complete an APR annually to report on their progress. At the end of a grant, they must complete a final report. To supplement the APR information provided by NIDRR, the committee developed a grantee questionnaire (see Appendix B). The first part of the questionnaire asked grantees to list all projects under the grant and nominate the top two outputs from each project that reflected their grant’s best achievements. The questionnaire specified that outputs were to be drawn from the four categories defined in NIDRR’s APR (Research Triangle International, 2009):

• publications (e.g., research reports and other publications in peer-reviewed and non-peer-reviewed publications);

• tools, measures, and intervention protocols (e.g., instruments or processes created to acquire quantitative or qualitative information, knowledge, or data on a specific disability or rehabilitation issue, or to provide a rehabilitative intervention);

• technology products and devices (e.g., industry standards/guidelines, software/netware, inventions, patents/licenses/patent disclosures, working prototypes, product(s) evaluated or field tested, product(s) transferred to industry for potential commercialization, product(s) in the marketplace); and

• informational products (e.g., training manuals or curricula, fact sheets, newsletters, audiovisual materials, marketing tools, educational aids, websites or other Internet sites produced in conjunction with research and development, training, dissemination, knowledge translation, and consumer involvement activities).

The instructions for the questionnaire indicated that the committee would prefer to review one publication and one other type of output for each project within their grants, but that grantees could select two publications if that was the only type of output for a project. The questionnaire asked the grantees to submit the actual outputs for the committee’s review. If the output was a website, a tool, or a technology device that had to be demonstrated, grantees were asked to provide descriptive information, pictures, or links to websites for the committee’s direct review.

The second part of the questionnaire included a series of questions designed to elicit more in-depth description of an output when needed and to provide supplemental information on the output’s technical quality, how it advanced knowledge or practice, its likely or demonstrated impact, and how it was disseminated. This type of information, needed for a comprehensive assessment of the output, would not always be apparent in reviewing the output in isolation. For technical quality, grantees were asked to describe examples, such as the approach or method used in an output’s development; relevant peer recognition; receipt of a patent, approval by the Food and Drug Administration, or use of the output in standards development; and evidence of the output’s usability and accessibility. For advancement of knowledge or the field, grantees were asked to discuss the importance of the original question or issue and describe how the output advanced knowledge in such arenas as making discoveries; providing new information; establishing theories, measures, and methods; closing gaps in the knowledge base; and developing new interventions, products, technology, and environmental adaptations. For likely or demonstrated impact, grantees were instructed to describe the output’s potential or actual impact on science, people with disabilities, provider practice, health and social systems, social and health policy, the private sector/commercialization, capacity building, and any other relevant arenas. Under dissemination, grantees were asked to describe the stage and scope (e.g., local, regional, national) of dissemination efforts, specific dissemination activities, any identification and tailoring of materials for particular audiences, efforts to collaborate with particular audiences or user communities to identify content and medium needs and preferences, and the delivery of information through multiple media types. Grantees were also asked to provide information from evaluations of their dissemination efforts and impacts that they may have conducted (e.g., results of audience feedback or satisfaction surveys).

The committee piloted the questionnaire on one NIDRR grant that had ended in 2008 and was outside the sampling pool (described below). Subgroups of the committee assessed five outputs of this grant, which consisted of two publications, an assessment package, a working prototype, and a fact sheet; discussed results; and adapted the questionnaire by collapsing some of the dimensions from an original set of six criteria into the four final criteria.1

To supplement the grantee questionnaire in assessing the likely impact of published articles, the committee used such sources as Scopus and the Web of Science to determine the journal impact factor and the number of citations of a particular article.

Sampling

NIDRR provided the committee with a data set of grantee information that consisted of all grants ending in years 2006 to 2010 (N = 248). Included in that data set was extensive information on all of the outputs produced by all NIDRR grantees, which NIDRR routinely collects. The committee sampled from that larger data set with no involvement of NIDRR staff in which grants were selected. The committee was directed by its charge to draw a sample of 30 grants ending in 2009 that was representative of NIDRR’s 14 program mechanisms. As shown in Table 6-1, there were 107 grants that ended in 2009. As displayed in the table, however, a number of program mechanisms did not have at least 2 grants ending in 2009: Burn Model System (BMS), Spinal Cord Injury Model System (SCIMS), Traumatic Brain Injury Model System (TBIMS), Disability and Business Technical Assistance Center (DBTAC), Knowledge Translation (KT), Advanced Rehabilitation Research Training (ARRT), and Section 21.

_______________

1 An original criterion on output usability was collapsed into the final technical quality criterion. Another original criterion on consumer and audience involvement was restructured as dimensions of the other criteria. For example, the technical quality criterion now includes a dimension on “evidence of usability and accessibility”; the impact criterion includes a dimension on “impact on people with disabilities”; and the dissemination criterion includes a dimension on “tailoring materials to audiences” and “collaboration with users.”

TABLE 6-1 Number of NIDRR Grants Ending in 2007 to 2009, with Grants Included in Sampling Pool Highlighted

| Program Mechanism | 2007 | 2008 | 2009 |
| --- | --- | --- | --- |
| Burn Model System (BMS) | 0 | 5 | 0 |
| Traumatic Brain Injury Model System (TBIMS) | 7 | 8 | 1 |
| Spinal Cord Injury Model System (SCIMS) | 9 | 0 | 0 |
| Rehabilitation Engineering Research Center (RERC) | 0 | 0 | 8 |
| Rehabilitation Research and Training Center (RRTC) | 0 | 0 | 10 |
| Disability and Rehabilitation Research Project-General (DRRP) | 0 | 0 | 14 |
| Field Initiated Project (FIP) | 0 | 0 | 36 |
| Small Business Innovation Research I (SBIR-I) | 0 | 0 | 16 |
| Small Business Innovation Research II (SBIR-II) | 0 | 0 | 8 |
| Disability and Business Technical Assistance Center (DBTAC) | 0 | 1 | 0 |
| Knowledge Translation (KT) | 0 | 0 | 0 |
| Advanced Rehabilitation Research Training (ARRT) | 0 | 0 | 1 |
| Switzer Fellowship | 0 | 0 | 12 |
| Section 21 | 0 | 1 | 1 |
| Total Grants in Years Ending in 2007, 2008, 2009 | 16 | 15 | 107 |
| Total Grants Included in Sample (N = 111) | 9 | 13 | 89 |

SOURCE: Generated by the committee based on data from the NIDRR grantee database.

Because the BMS, SCIMS, and TBIMS program mechanisms support some of NIDRR’s flagship programs, the committee adjusted the sampling pool to ensure that these grants would be included in the sample. The committee went back to the most recent year in which at least two grants under these program mechanisms ended, which was 2008 for BMS (N = 5) and TBIMS (N = 9, with 1 in 2009 and 8 in 2008) and 2007 for SCIMS (N = 9), and included these grants in the pool. The DBTAC, KT, ARRT, and Section 21 program mechanisms were excluded from the pool for this first evaluation cycle. Small Business Innovation Research I (SBIR-I) grants also were excluded from the sampling pool because they do not produce “outputs” and therefore did not align with the evaluation parameter of reviewing two outputs for each project within a grant. After these adjustments, the total pool consisted of 111 grants across nine NIDRR program mechanisms, shown in the highlighted cells of Table 6-1. The older grants included in the evaluation may have had an advantage over the grants ending in 2009 because of the additional time for their outputs to have had an impact.

From this pool of 111 grants, 30 grants (27 percent) were randomly selected for review in the following way. To ensure that the sample represented the nine program mechanisms included in the pool, the committee stratified the sampling by program mechanism, in proportion to each mechanism’s share of all grants in the sampling pool. For example, there were 36 Field Initiated Project (FIP) grants in the sampling pool, as shown in Table 6-1, representing 32 percent of all of the grants in the sampling pool (N = 111); therefore, 32 percent of the 30 grants in the sample (N = 10) should be FIP. The 36 FIP grants in the sampling pool were numbered 1 through 36, and 10 FIP grants were randomly selected using a website that generated random numbers. A table in the next section shows the number of grants included in the sample by program mechanism.
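The proportional allocation and random draw described above can be sketched as follows. This is a minimal illustration, not the committee's actual procedure: the pool counts come from Table 6-1, but the function names, the use of simple rounding, and the seeded pseudorandom generator (standing in for the random-number website) are assumptions. Simple rounding need not sum to exactly 30, and the text does not state how the committee resolved such cases.

```python
import random

# Sampling-pool counts by program mechanism, taken from Table 6-1 (N = 111).
POOL = {
    "BMS": 5, "TBIMS": 9, "SCIMS": 9, "RERC": 8, "RRTC": 10,
    "DRRP": 14, "FIP": 36, "SBIR-II": 8, "Switzer": 12,
}


def proportional_allocation(pool, sample_size):
    """Allocate sample slots to each stratum in proportion to its pool share.

    Assumption: each share is rounded to the nearest whole grant.
    """
    total = sum(pool.values())
    return {name: round(sample_size * count / total)
            for name, count in pool.items()}


def draw_grants(n_in_stratum, n_to_draw, seed=None):
    """Number the grants 1..n_in_stratum and draw n_to_draw without replacement."""
    rng = random.Random(seed)  # hypothetical stand-in for the website
    return sorted(rng.sample(range(1, n_in_stratum + 1), n_to_draw))


allocation = proportional_allocation(POOL, 30)
fip_draw = draw_grants(POOL["FIP"], allocation["FIP"], seed=2009)
print(allocation["FIP"], fip_draw)
```

For the FIP stratum this reproduces the worked example in the text: 36 of 111 grants is about 32 percent, which rounds to 10 of the 30 sample slots.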

Once the proposed evaluation methods had been approved by the Institutional Review Board of the National Academies, the sample of 30 grants was drawn, and invitations to participate were sent to the principal investigators of those grants. The principal investigators were fully informed about the methods to be used in the evaluation and what would be required of them. Of the original 30 grantees invited, 2 declined because they did not have time to fulfill the evaluation requirements and 1 because of a change in institutions. Three additional grants were then randomly selected from the pool to bring the final sample to 30. The committee acknowledges that bias from self-selection could have caused the final sample of 30 grantees that participated in the evaluation to be less representative of the larger population of grants.

Compiling Outputs to Be Reviewed and Number of Outputs Reviewed

The questionnaire described above was sent to the 30 grantees who agreed to participate in the study. As noted, the principal investigators of the grants included in the sample were given written instructions for submitting their outputs for the evaluation and providing supplemental information about the outputs. Committee staff worked with the grantees to clarify the instructions and to encourage them to submit their output packages. Because some grants had ended several years before the evaluation (2007 and 2008 for the Model System grants), some grantees had difficulty submitting materials because the principal investigators had changed institutions or departments within the same university or had other competing priorities during the time period of the committee’s review. Staff accommodated these principal investigators by providing additional time to submit their materials and in five cases by assisting them in completing the questionnaires through telephone interviews. Two grantees did not respond to the supplemental questionnaire.

As described above, grantees received questionnaires on which they were asked to list each project under their grant and identify two outputs per project to be reviewed by the committee. They were asked to identify the “top” two outputs per project that reflected their grant’s best achievements. As noted, to permit assessment of outputs beyond journal publications, grantees were asked to offer at least one non-journal publication output per project if such outputs were available. The number of projects for each grant varied by size, from 1 for small FIP grants to 10 for larger center grants.

A total of 156 outputs were submitted for review across the 30 grants in the sample. Eight outputs were considered highly related to other outputs, and they were reviewed together with those other outputs. This occurred when one output was a derivative or different expression of another and when the principal investigator responses to criteria questions were basically the same. Therefore, the total number of outputs for analysis was 148. Table 6-2 presents the number of grants included in the sample by program mechanism and the types of outputs that were reviewed.

To place the outputs reviewed into the larger context of the outputs produced by grantees in the sampling pool of 111 grants, Table 6-2 also shows that the proportions of publications and other outputs (tools, technology, and informational products) reviewed by the committee were relatively close to the proportions of the various output types produced by grantees in the larger sampling pool. The proportion of publications reviewed was somewhat lower at 70 percent (versus 76 percent in the sampling pool), and the proportion of informational products reviewed was somewhat higher at 18 percent (versus 11 percent in the sampling pool).
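The comparison between the reviewed sample and the sampling pool can be reproduced with a few lines of arithmetic. The sketch below uses the output counts reported in Table 6-2; the variable and function names are illustrative assumptions, and percentages are rounded to whole numbers as in the table.

```python
# Output counts by type, taken from the last two rows of Table 6-2.
SAMPLE = {"publications": 103, "tools": 9, "technology": 9, "informational": 27}
POOL = {"publications": 1060, "tools": 101, "technology": 84, "informational": 148}


def shares(counts):
    """Return each output type's share of the total, as a whole-number percent."""
    total = sum(counts.values())
    return {kind: round(100 * n / total) for kind, n in counts.items()}


sample_pct = shares(SAMPLE)
pool_pct = shares(POOL)

# Positive values mean the type is over-represented in the reviewed sample.
difference = {kind: sample_pct[kind] - pool_pct[kind] for kind in SAMPLE}
print(sample_pct, pool_pct, difference)
```

The largest gaps are the ones the text singles out: publications are about 6 percentage points lower in the sample, and informational products about 7 points higher.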

Review Process

TABLE 6-2 Number of Grants and Distribution of Outputs Reviewed by Program Mechanism

| NIDRR Grant Category and Program Mechanism | Grants | Publications | Tools | Technology | Informational Products | Total |
| --- | --- | --- | --- | --- | --- | --- |
| Model System Grants | | | | | | |
| Burn Model System (BMS) | 2 | 12 | 2 | 0 | 4 | 18 (12%) |
| Traumatic Brain Injury Model System (TBIMS) | 2 | 12 | 0 | 0 | 2 | 14 (10%) |
| Spinal Cord Injury Model System (SCIMS) | 2 | 11 | 0 | 0 | 0 | 11 (7%) |
| Center Grants | | | | | | |
| Rehabilitation Research and Training Center (RRTC) | 3 | 16 | 0 | 0 | 12 | 28 (19%) |
| Rehabilitation Engineering Research Center (RERC) | 2 | 16 | 2 | 5 | 3 | 26 (18%) |
| Research and Development Grants | | | | | | |
| Disability and Rehabilitation Research Project-General (DRRP) | 4 | 13 | 4 | 0 | 5 | 22 (15%) |
| Field Initiated Project (FIP) | 10 | 17 | 1 | 3 | 1 | 22 (15%) |
| Small Business Innovation Research II (SBIR-II) | 2 | 1 | 0 | 1 | 0 | 2 (1%) |
| Training Grants | | | | | | |
| Switzer Fellowship | 3 | 5 | 0 | 0 | 0 | 5 (3%) |
| Total and Proportion of Output Types in Sample | 30 | 103 (70%) | 9 (6%) | 9 (6%) | 27 (18%) | 148 |
| Total and Proportion of Output Types in Sampling Pool | 111 | 1,060 (76%) | 101 (7%) | 84 (6%) | 148 (11%) | 1,393 |

SOURCE: Generated by the committee based on data from the grantee questionnaire and NIDRR grantee database.

The committee members, whose expertise encompasses social sciences, rehabilitation medicine, engineering, evaluation, and knowledge translation, were divided into three groups of five members each. The subgroups were organized to ensure that outputs would be reviewed by a group of individuals with the collective expertise necessary to judge their quality. The subgroups were convened on three occasions: in October 2010, December 2010, and February 2011. Because of the relatively short time period available to conduct the reviews, grants were scheduled for review according to size, with the smaller grants being invited first (e.g., FIP, Switzer, SBIR-II) and the larger grants (DRRP, Model System grants, center grants) being invited to participate in the later rounds. The rationale for this approach was that the smaller grants had fewer outputs and would require less preparation time for the review than the larger grants, which had many projects and more outputs so that more preparation time was required. Therefore, the content of the grants tended to be mixed during each round of reviews, necessitating a corresponding mix of expertise in each subgroup. As noted, however, efforts were made to match the expertise of the reviewers in each subgroup with the outputs they would be reviewing (e.g., technology outputs were assigned to a subgroup with engineering expertise). The review procedures are described in detail in Box 6-2.

The committee’s expert review involved consideration and assessment of the multiple quality dimensions of the outputs—a process that has been recommended as a valid method for evaluating the relevance and quality of federal research programs (National Academy of Sciences, National Academy of Engineering, and Institute of Medicine, 1999). The seven-point rating scale was used to describe the results of the output assessment more precisely in terms of the varying levels of quality. During the reviews, the committee members frequently discussed how they were applying the criteria and interpreting the anchors of the rating scale so they could calibrate their ratings. In addition, brief narrative statements were written summarizing the rationale for the subgroups’ ratings of each output. These statements were reviewed after the ratings had been completed to identify attributes that particularly characterized the varying levels of quality and were helpful in further exemplifying the dimensions of the criteria.

Although the final scores used to report results of the output assessment were based on subgroups’ consensus scores, the committee conducted an interrater reliability analysis of their initial independent ratings (i.e., raw scores before the subgroup discussions) to determine the extent to which the individual committee members were using and interpreting the scale in the same way. The analysis used methods suggested by MacLennan (1993) for more than two raters with ordinal data. This method calculates an intraclass correlation coefficient (ICC) that represents an average correlation among raters.
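For readers unfamiliar with the statistic, the sketch below computes one common ANOVA-based form of the ICC: the consistency coefficient for the average of k raters, which is numerically equal to Cronbach's alpha. The text does not specify which ICC form the committee computed, so the choice of form, the function name, and the example ratings are all assumptions made for illustration. Note that this form can take negative values when the error variance exceeds the variance between outputs.

```python
def icc_average_consistency(ratings):
    """Consistency ICC for the mean of k raters (two-way ANOVA form).

    ratings: list of rows, one row per output, one score per rater.
    Assumption: no missing scores; every row has the same length.
    """
    n = len(ratings)          # number of outputs rated
    k = len(ratings[0])       # number of raters
    if n < 2 or k < 2 or any(len(row) != k for row in ratings):
        raise ValueError("need a complete n x k table with n, k >= 2")

    grand = sum(sum(row) for row in ratings) / (n * k)
    row_means = [sum(row) / k for row in ratings]
    col_means = [sum(row[j] for row in ratings) / n for j in range(k)]

    ss_total = sum((x - grand) ** 2 for row in ratings for x in row)
    ss_rows = k * sum((m - grand) ** 2 for m in row_means)   # between outputs
    ss_cols = n * sum((m - grand) ** 2 for m in col_means)   # between raters
    ss_error = ss_total - ss_rows - ss_cols                  # residual

    ms_rows = ss_rows / (n - 1)
    ms_error = ss_error / ((n - 1) * (k - 1))
    if ms_rows == 0:
        raise ValueError("outputs do not differ; ICC is undefined")
    return (ms_rows - ms_error) / ms_rows


# Hypothetical scores on the 7-point scale: 4 outputs rated by 3 reviewers.
example = [[4, 5, 4], [5, 4, 6], [3, 4, 3], [6, 6, 5]]
print(round(icc_average_consistency(example), 2))
```

Because this form removes rater main effects, reviewers who differ only by a constant offset (one consistently scoring a point higher, say) still yield a coefficient of 1.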

BOX 6-2

Committee Member Review Procedures

Each of the 30 grants was assigned to one of the three subgroups so that all outputs from a grant were reviewed by the same subgroup. To ensure consistency in approach across subgroups, the committee chair attended each subgroup meeting.

Based on direct review of the output itself and descriptive information about the output in the Annual Performance Report (APR) and grantees’ questionnaire responses, each subgroup member independently rated every output assigned to that subgroup. The subgroup member assigned a score for each of the four quality criteria (technical quality, advancement of knowledge or the field, likely or demonstrated impact, and dissemination), as well as an overall score for the output, and provided a rationale for the scores. Scores were assigned using a 7-point scale, ranging from 1 to 7 and anchored at 3 points: 1 = poor quality, 4 = good quality, and 7 = excellent quality.

For each output, one subgroup member was assigned as the primary reviewer. The remaining four subgroup members were secondary reviewers. The process for arriving at consensus scores was as follows:

• The primary reviewer opened discussion of each output by presenting a brief summary of the output and his or her rationale for the rating of each criterion plus the overall score.

• The secondary reviewers then presented their ratings for each output, along with a brief rationale.

• The subgroup then developed consensus ratings for each output through discussion facilitated by the subgroup chair.

Following the discussion of all outputs from an individual grant, the subgroup considered the full spectrum of the reviewed material, along with the grant’s overall purpose and objectives (using the grant’s APR). The subgroup then assigned an overall performance rating for the grant using the same seven-point scale.

The interrater reliability analyses were run on 15 grants for which at least 3 outputs were reviewed by the subgroups. The analyses could not be run with fewer than 3 outputs, and only 15 grants had 3 or more outputs reviewed. The ratings compared were the individual committee members’ raw scores (before discussion) on each of the criteria. According to Yaffee (1998), the minimum acceptable ICC is .75 to .80. The ICCs resulting from the analyses are shown for each of the 4 criteria in Table 6-3.

TABLE 6-3 Results of Interrater Reliability Analysis

| Grant | Number of Outputs Reviewed | Technical Quality ICC | Impact ICC | Advancement of Knowledge or the Field ICC | Dissemination ICC |
| --- | --- | --- | --- | --- | --- |
| 1 | 8 | .64c | .81c | .91b | .80b |
| 2 | 3 | .96c | -5.6 | .65 | -.21 |
| 3 | 4 | .95b | .33 | .95b | .92b |
| 4 | 8 | .87a | .88a | .81a | .83a |
| 5 | 10 | .76b | .79b | .85a | .74b |
| 6 | 10 | .81a | .83a | .67b | .85a |
| 7 | 9 | .81b | .57 | .93 | .67c |
| 8 | 6 | .97a | .97a | .93a | .96a |
| 9 | 7 | .83b | .31 | .77c | -1.65 |
| 10 | 7 | .79b | .78b | .64 | .88a |
| 11 | 8 | .94a | .92a | .88a | .72b |
| 12 | 9 | .83b | .81b | .78b | .86a |
| 13 | 10 | .73b | .76a | .72b | .45 |
| 14 | 9 | .93a | .94a | .80b | .87a |
| 15 | 16 | .84b | .92a | .48 | .86a |

a: p ≤ .001; b: p ≤ .01; c: p ≤ .05.

NOTES: For individual grants, not all outputs reported in column 2 could be analyzed for each criterion because of missing data, which occurred when committee members did not rate all four of the criteria for all of the outputs examined during their independent reviews prior to the subgroup discussions. ICC = intraclass correlation coefficient.

SOURCE: Generated by the committee based on data from the committee’s interrater reliability analysis.

On the technical quality criterion, 13 of the 15 grants had statistically significant ICCs greater than .75; on the impact criterion, 11 grants had ICCs in this acceptable range; on the advancement of knowledge or the field criterion, 10 grants had ICCs in this range; and on the dissemination criterion, 9 grants had ICCs in this range. Although the ICC results show greater challenges in achieving interrater reliability on the criteria other than technical quality, the results suggest that individual members were using and interpreting the seven-point scale in a similar manner prior to the full subgroups’ discussions of the output ratings and their subsequent determination of consensus scores.
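The per-criterion counts reported above can be checked directly against Table 6-3. The sketch below transcribes the ICC values by grant (in order, grants 1 through 15) and counts how many exceed .75; the significance markers are ignored, and the variable and function names are illustrative assumptions.

```python
# ICC values for grants 1-15, transcribed from Table 6-3.
ICC = {
    "technical quality": [.64, .96, .95, .87, .76, .81, .81, .97,
                          .83, .79, .94, .83, .73, .93, .84],
    "impact": [.81, -5.6, .33, .88, .79, .83, .57, .97,
               .31, .78, .92, .81, .76, .94, .92],
    "advancement": [.91, .65, .95, .81, .85, .67, .93, .93,
                    .77, .64, .88, .78, .72, .80, .48],
    "dissemination": [.80, -.21, .92, .83, .74, .85, .67, .96,
                      -1.65, .88, .72, .86, .45, .87, .86],
}

THRESHOLD = 0.75  # lower bound of the acceptable range cited from Yaffee (1998)


def count_acceptable(values, threshold=THRESHOLD):
    """Count grants whose ICC exceeds the threshold."""
    return sum(v > threshold for v in values)


counts = {criterion: count_acceptable(vals) for criterion, vals in ICC.items()}
print(counts)
```

The tallies match the text: 13, 11, 10, and 9 of the 15 grants for technical quality, impact, advancement, and dissemination, respectively.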

It would have been advantageous to conduct the interrater reliability analysis during the course of the evaluation and make adjustments to improve interrater reliability. However, there was insufficient time for this approach because of the short span of time in which the reviews were performed. The results of the interrater reliability analysis should be considered in designing future output evaluations.

RESULTS OF THE EVALUATION OF GRANTEE OUTPUTS

This section presents the results of the committee’s quality ratings of the four types of grant outputs according to the four criteria described above. In reviewing publications, the committee also referred to well-known sources that rate journal impact factors and count the number of times published articles have been cited; these results are summarized for the publications reviewed.

Quality Assessment of Outputs Reviewed

Figures 6-1 through 6-4 illustrate the distribution of ratings for all outputs2 on each of the four quality criteria. Percentages show the proportion of outputs that received the various ratings along the 7-point scale. Figures 6-5 and 6-6 show distributions in the aggregate for the overall ratings that were determined for each output (i.e., considering all four criteria), and for grant performance (i.e., considering all outputs submitted by a grantee). Results for each criterion are discussed below the figures. For consistency in reporting these results, the discussion refers to ratings falling into the “higher quality” range (i.e., ratings of 4 to 7 with anchors of “good” to “excellent” on the quality scale) or into the “lower quality” range (i.e., ratings of 1 to 3 with anchors of “poor” to “below good” on the quality scale).
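The reporting convention just described, which groups ratings of 4 to 7 as "higher quality" and 1 to 3 as "lower quality," can be expressed as a short function. The ratings in the example are hypothetical and are not drawn from the committee's data; the function names are likewise assumptions.

```python
from collections import Counter


def quality_range(rating):
    """Map a rating on the 7-point scale to the reporting range used in the text."""
    if not 1 <= rating <= 7:
        raise ValueError("rating must be between 1 and 7")
    return "higher" if rating >= 4 else "lower"


def range_shares(ratings):
    """Return the whole-number percentage of ratings in each range."""
    counts = Counter(quality_range(r) for r in ratings)
    n = len(ratings)
    return {label: round(100 * counts[label] / n) for label in ("higher", "lower")}


# Hypothetical consensus ratings for eight outputs on one criterion.
example_ratings = [2, 3, 4, 4, 5, 5, 6, 7]
example_shares = range_shares(example_ratings)
print(example_shares)
```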

In addition, the committee wrote brief statements summarizing the rationale for its ratings of each output. The committee reviewed these statements after completing the ratings to identify attributes that particularly characterized the ratings for the four quality criteria, thereby clarifying the dimensions of each criterion. The attributes that characterized the lower-rated and higher-rated outputs are summarized for each criterion below each respective figure.
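The grouping of ratings into the "higher quality" (4 to 7) and "lower quality" (1 to 3) ranges described above can be illustrated with a minimal sketch. The function name `summarize_ratings` and the sample ratings are hypothetical and are not the committee's data or tooling; outputs that could not be rated are represented here as `None` and excluded from N, which is an assumption about how missing ratings might be handled.

```python
def summarize_ratings(ratings):
    """Summarize ratings on the 7-point quality scale.

    Ratings of 1-3 count as "lower quality" and 4-7 as "higher quality".
    Entries of None (output could not be rated) are excluded from N.
    """
    rated = [r for r in ratings if r is not None]
    if not rated:
        raise ValueError("no rated outputs")
    if any(r not in range(1, 8) for r in rated):
        raise ValueError("ratings must be integers from 1 to 7")
    higher = sum(1 for r in rated if r >= 4)
    n = len(rated)
    return {
        "n": n,
        "higher_pct": round(100 * higher / n),
        "lower_pct": round(100 * (n - higher) / n),
    }


# Hypothetical ratings for illustration only (not the committee's data).
example = [1, 2, 3, 4, 4, 4, 5, 5, 6, 7, None]
summary = summarize_ratings(example)
print(summary)
```

Because the unrated output is dropped before the percentages are computed, N can differ from one criterion to the next, as it does in the figures.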

_______________

2 Although a total of 148 outputs were reviewed, the numbers vary slightly across the criteria in the figures (N = 138 to 142). The committee was not able to rate 6 outputs because the information available was not sufficient. These included a technical workshop, a national conference, a clinic, an intervention program, a list of publications, and a training curriculum. A few other outputs could be rated on some criteria, but not all 4, for the same reason.

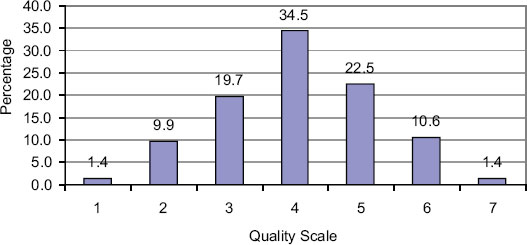

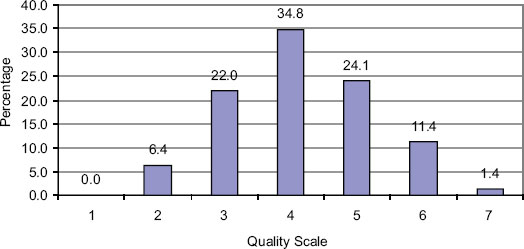

Technical Quality

With regard to technical quality (see Figure 6-1), the majority of outputs (69 percent) were rated in the higher quality range (4-7). However, more than a quarter of the outputs (31 percent) were rated in the lower quality range (1-3).

Several characteristics distinguished the lower ratings on technical quality (1-3) from the higher ratings (4-7). An example is lack of information presented in the output itself or in the accompanying descriptive questionnaire. Reviewers commented in these cases that there was an insufficient description of the output itself, that a publication was written poorly, that methods or protocols for tools were not described thoroughly, that results were not clearly presented to be able to understand how the research question was answered, that documentation was lacking on how a measure was developed, that testing results for a technology product were not presented, or that not enough substance was presented in an informational product to assess it. Another example was lack of clarity in information that was presented. Reviewers commented that questions on a survey did not appear to be well thought out, that weak findings were not fully explained in conclusions, that it was unclear whether adequate validation had actually been performed on a measure or technology product, or that it was unclear whether a new technology was based on scientific evidence. Outputs that were rated lower in the area of design and methods included reports of studies with small sample sizes that were not representative or that used single-group design with self-reported measures, no controls, and a pretest only. These study designs and methods could still be strong if a project were treated as a pilot or feasibility study, but were seen as weak if claims were made regarding their potential for changing policy or practice. Failing to describe or address limitations in some way also was described as a weakness, particularly in cases in which there were high attrition rates or low response rates. Weaknesses in analyses were identified as well. These included use of obsolete data sets, failure to use standard methods of analysis, failure to fully use all of the variables available, or description of statistical analyses that appeared to be ad hoc. Informational products that were rated lower included newsletters that presented data but failed to synthesize the data for users or presented tips for practice without providing supporting evidence. They also included websites that were difficult to navigate, had missing links, or lacked interactive elements necessary to fully access or utilize the information.

FIGURE 6-1 Distribution of quality ratings for technical quality (N = 142).

SOURCE: Generated by the committee based on data from the committee’s output review.

In contrast, comments on outputs that were rated on the higher end of the quality scale praised attributes of presentation and clarity, such as careful and scholarly approach, excellent literature review and framing of issues, very systematic approach that was described well, recommendations for change drawn clearly from the analysis, and narrative descriptions of high quality that accurately represent key issues in technical and conceptual terms. Examples of positive comments on measurement qualities included good measurement planned and described; good description of feasibility, usability, accessibility, and consumer satisfaction testing; and use of simulator plus neurological tests. Outputs that were rated higher on methodology and analytic techniques were noted as being competent through use of a convenience sample with a longitudinal design, having a good sample size, using a prospective sample that was monitored across a 2-year period and used several predictors, and using analytic methods and interpretation that appeared to be sound. Attributes of tools and technology products that were rated highly were strong design and an article on a technological innovation that won a prize for technical quality from the journal in which it was published. One grantee stood out as presenting three outputs in a cohesive manner that illustrated the technical quality of the research and development: the first output described the research base, the second described the software application, and the third assessed use of the protocol. Some highly rated informational products (websites) were described as being easy to navigate, presenting evidence on consulting with and tailoring the website to users, and including data that were highly accessible and usable.

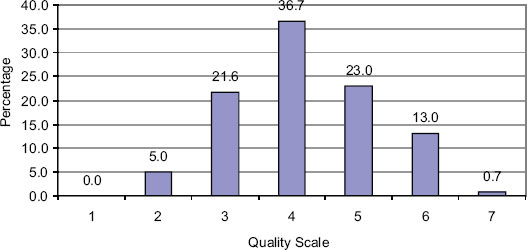

Advancement of Knowledge or the Field

The output ratings on advancement of knowledge or the field (see Figure 6-2) show that almost three-quarters of the outputs (73 percent) were deemed to be in the higher quality range. But again slightly more than a quarter fell into the lower range.

FIGURE 6-2 Distribution of quality ratings for advancement of knowledge or the field (N = 139).

SOURCE: Generated by the committee based on data from the committee’s output review.

Outputs rated lower on this criterion received comments such as nothing new or novel presented, unclear how this work is an advancement over current knowledge, no new theory work and unclear how the output will break new ground, dated concepts in the field, unclear what the advancement is in some knowledge transfer outputs, and evolution of technology not driven by this product development.

In contrast, comments on outputs scoring at the higher end of the quality rating scale included novel and interesting topic; unique work that is a good contribution to the state of knowledge; groundbreaking and innovative; added knowledge about a practice to the field; formal test of a hypothesis regarding a clinical technique used widely in the field; randomizing to conditions was new for this technology; new indicators used to set new prevalence rates; moves the knowledge base forward by linking concepts and measures to change how measurement is done; significant advancement and clear improvement over current classification systems; provides a useful descriptive base of information about risk factors; could lead to new ways of studying a condition and developing new models that can be used for training and designing interventions or developing new study approaches; and experimental design is well conceived and well designed, with potential for moving the field forward.

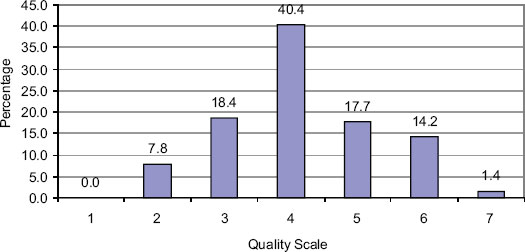

Likely or Demonstrated Impact

The ratings on likely or demonstrated impact (see Figure 6-3) show that 74 percent of the outputs were determined to be in the higher quality range. But again slightly more than a quarter fell into the lower range.

Outputs rated lower on this criterion generally did not present evidence of likely or demonstrated impact, and the impact was not apparent from examining the output itself. In one case, it appeared that a paper might have had more potential for impact if it had been published in a journal more suited to the information presented, and in another case if more planning had been done to increase the likelihood of adoption of a tool.

When outputs received higher ratings, their likely or demonstrated impact was readily apparent. The outputs were published in journals that had appropriate impact factors; they were relatively well cited or had promise to be (for newer articles), and may have been cited in national newspapers. These were outputs that had clear potential to improve the lives of people with disabilities by increasing accessibility; that had clear and possibly transformative clinical and policy implications; and whose results may hold promise for supporting new financial coverage, providing an intervention at lower cost, or being commercialized. Some of the outputs that received high ratings had demonstrated their impact by already influencing the direction of research, being widely used in the field, helping to inform and advance health care legislation, shedding light on institutional bias, or building capacity for the use of statistical products.

FIGURE 6-3 Distribution of quality ratings for likely or demonstrated impact (N = 141).

SOURCE: Generated by the committee based on data from the committee’s output review.

FIGURE 6-4 Distribution of quality ratings for dissemination (N = 138).

SOURCE: Generated by the committee based on data from the committee’s output review.

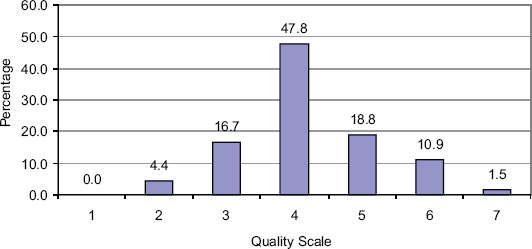

Dissemination

Output ratings on the dissemination criterion (see Figure 6-4) are somewhat different from those on the other criteria. A higher proportion (79 percent) of the outputs were rated in the higher quality range, and less than a quarter (21 percent) fell in the lower range.

Outputs were rated low on the dissemination criterion for a variety of reasons that pertained to failure to conform to some of the basic principles of knowledge translation. For example, the target audience was unclear; the information was not targeted to the audience identified; it was unclear how the audience would use the output; or the voice, content, or format was not consumer-friendly or not of high quality. Sometimes it was unclear whether or how outputs had been disseminated and what the volume or scope of the dissemination was.

On the other hand, outputs that were rated high on this quality criterion were characterized as having extensive dissemination and a good description of the evidence for this (e.g., numbers distributed to different audiences); having an appropriate method of dissemination and format; providing evidence of reaching the target audience; being disseminated through a patent and commercialization; being widely disseminated by a federal agency; being disseminated strategically through targeting of states or associations; and using multiple media outlets for dissemination, such as webinars, television coverage, Senate testimony, websites, DVDs, and/or social network sites. One grantee was planning to disseminate a technology product through support by the grantee’s university for licensing the technology for possible commercialization.

Ratings of Overall Quality of Outputs

The committee determined overall quality ratings by considering the ratings on the four criteria. Thus Figure 6-5 reflects the same overall pattern of scores skewed slightly toward the higher quality range, with 72 percent of the outputs being rated in this range and 28 percent in the lower quality range.

All outputs were noted as having strengths and weaknesses. However, those that received lower overall scores of 1 to 3 had a preponderance of lower ratings across the individual criteria, whereas the opposite was true for the outputs that received the higher overall scores. Those outputs that received ratings of 4 had a greater mix of both positive and negative critiques, which made the products good, but not exceptional.

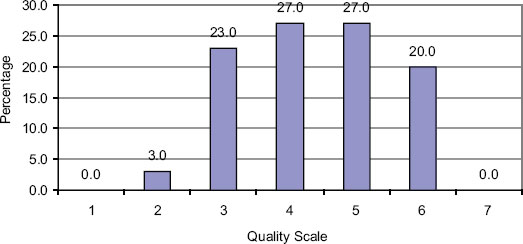

Ratings of Grant Performance on Outputs

After reviewing all of the outputs of an individual grant, the committee considered and rated the grant’s overall performance with regard to all of the outputs reviewed for that grant. In rating grant performance, the committee also considered the fact that the outputs reviewed had been identified by the grant’s principal investigators as the “top” two outputs per project, best reflecting the grant’s achievements. In addition, the committee considered the grant’s overall purpose and objectives (using its APR). With these considerations in mind, the committee assigned a grant performance rating using the same seven-point scale.

FIGURE 6-5 Distribution of overall quality ratings for each output (N = 141).

SOURCE: Generated by the committee based on data from the committee’s output review.

FIGURE 6-6 Distribution of quality ratings for grant performance score (N = 30).

SOURCE: Generated by the committee based on data from the committee’s output review.

Figure 6-6 shows that the distribution of scores on the performance of grants is quite different from the ratings for the individual outputs. While three-quarters of the grants’ overall performance was rated in the higher quality range and one-quarter as lower quality, a smaller proportion of grants were rated at the midpoint of 4 (27 percent), and larger proportions were rated on the higher end of the scale, with 27 percent receiving a rating of 5 and 20 percent scoring a 6.

This result may reflect that a grant’s performance on the particular outputs reviewed was determined to be more than the sum of its outputs as considered within the context of the grant’s overall purpose and objectives. However, there was great concern that the committee did not have enough information to rate grant-level performance by focusing so exclusively on outputs and not in more depth on how the outputs fit within the context of the grants’ specific aims and developmental trajectories. This was particularly the case for larger, more complex projects such as those under the Rehabilitation Research and Training Center (RRTC), Rehabilitation Engineering Research Center (RERC), and Model System grants. The NIDRR APRs, which were taken into consideration, in some cases did a good job of providing this larger context, but in many cases reviewing the APR alone was not sufficient. (Issues related to rating outputs in the context of grants and program mechanisms are discussed further in the section on self-assessment of the committee’s review methods later in the chapter.)

Journal Impact and Citation Analysis of Publication Outputs

Assessing the quality criterion of likely or demonstrated impact included the additional step of considering the impact factors of the journals in which articles were published, as well as the number of times specific publications reviewed by the committee had been cited in other published manuscripts. While this type of analysis has the benefit of providing quantifiable metrics, it is also fraught with many limitations that are particularly relevant to NIDRR grantees. Many NIDRR-funded researchers publish in specialty journals that may have lower impact factors because the work is in narrow fields that are not well populated with researchers. In addition, the citation half-life (the time it takes for an article to receive half of its total citations by others) can be quite lengthy. The citation half-life for the Archives of Physical Medicine and Rehabilitation (one of the leading journals in this field), for example, is about 8 years. Thus, it may take many years before an article’s true value is revealed through this metric. Because most of the grants reviewed ended in 2009, the citation data for the publications reviewed were collected within a short time window (0-24 months).

Acknowledging these limitations, the committee used Scopus and the Web of Science to access information on the journal impact factors and number of citations for each article included in the review. Table 6-4 shows all the journals in which articles reviewed by the committee (N = 80) were published, the Scopus (SJR) and Web of Science (ISI) impact factors, and the number of articles reviewed that were published in each of these journals.

The wide variety of journals corresponds to NIDRR’s broad portfolio. As can be seen in the table, the journal with the largest number of articles reviewed by the committee was the Archives of Physical Medicine and Rehabilitation (N = 18), followed by the Journal of Spinal Cord Medicine (N = 12). The Web of Science (ISI) classifies 43 journals in the subject area of rehabilitation, and the impact factors of these journals range from a low of 0.08 (Athletic Therapy Today) to a high of 3.77 (Neurorehabilitation and Neural Repair). The most widely cited journal in the category of rehabilitation is the Archives of Physical Medicine and Rehabilitation, which has an impact factor of 2.25.

The table shows that 35 percent of the 80 publications reviewed by the committee were published in journals with ISIs greater than 2.0. Only 6 of the journals in which grantees published were not listed in either Scopus (SJR) or Web of Science (ISI) databases. This could be because these journals have not applied or because they have applied and have not yet been accepted. The committee did not pursue the question beyond searching the two websites. However, the committee learned how changeable the database is. In the 2009 Web of Science journal impact factor database, there were 33 journals listed in the rehabilitation category; in 2010 there were 43.

TABLE 6-4 Journal Impact Factors of Published Articles Reviewed (N = 30 grants, 80 articles)

| Journal Name | Scopus Journal Impact Factors (SJR)a | Web of Science Journal Impact Factors (ISI)b | Number of Articles Reviewed That Were Published in the Journal |
|---|---|---|---|
| Archives of Physical Medicine and Rehabilitation | 0.16 | 2.25 | 18 |
| Journal of Spinal Cord Medicine | 0.12 | 1.44 | 12 |
| Journal of Burn Care and Research | 0.11 | 1.56 | 5 |
| Disability and Rehabilitation | 0.10 | 1.49 | 4 |
| Health Affairs | 0.42 | 3.79 | 3 |
| International Journal of Telerehabilitation | NA | NA | 2 |
| Journal of Burn Care and Rehabilitation | NA | NA | 2 |
| Journal of Head Trauma Rehabilitation | 0.17 | 2.78 | 2 |
| Journal of Neurotrauma | 0.36 | 3.43 | 2 |
| Journal of Rehabilitation Research and Development | 0.13 | 1.71 | 2 |
| Rehabilitation Psychology | 0.08 | 1.68 | 2 |
| Topics in Stroke and Rehabilitation | 0.10 | 1.22 | 2 |
| AER-Journal of Research and Practice in Visual Impairment and Blindness | NA | NA | 1 |
| Assistive Technology | 0.06 | NA | 1 |
| Brain Injury | 0.12 | 1.75 | 1 |
| Burns | 0.12 | 1.72 | 1 |
| Developmental Neurorehabilitation | 0.07 | 1.38 | 1 |
| Disability and Health Journal | 0.04 | NA | 1 |
| Generations | 0.03 | NA | 1 |
| Hearing Journal | 0.03 | NA | 1 |
| IEEE Transactions on Biomedical Engineering | 0.14 | 1.78 | 1 |
| Interacting with Computers | 0.04 | 1.19 | 1 |
| International Journal of Geriatric Psychiatry | 0.16 | 2.03 | 1 |
| Journal of Aging and Social Policy | 0.05 | NA | 1 |
| Journal of Applied Rehabilitation Counseling | NA | NA | 1 |
| Journal of Behavioral Nutrition and Physical Activity | 0.23 | 3.17 | 1 |
| Journal of Cardiopulmonary Rehabilitation and Prevention | 0.14 | 1.42 | 1 |
| Journal of Occupational Rehabilitation | 0.11 | 1.81 | 1 |
| Journal of Vocational Rehabilitation | 0.03 | NA | 1 |
| Lecture Notes in Computer Science | 0.03 | NA | 1 |
| NeuroRehabilitation | 0.10 | 1.59 | 1 |
| Neurorehabilitation and Neural Repair | 0.31 | 3.77 | 1 |
| Physical Medicine Clinics of North America | 0.10 | 1.36 | 1 |
| Rehabilitation Education | NA | NA | 1 |
| Spinal Cord | 0.16 | 1.83 | 1 |
| Telemedicine and E-Health | 0.08 | 1.30 | 1 |
| Total Published Articles Reviewed | | | 80 |

NOTE: NA = journal not tracked by Scopus or Web of Science in the 2010 databases.

aSJR (Scopus): The SJR database for 2010 was used. Available: http://www.scopus.com/source/eval.url [August 29, 2011].

bISI (Web of Science): These data were obtained by searching two Web of Knowledge JCR databases (editions): (1) JCR Science Edition 2010 and (2) JCR Social Sciences Edition 2010. Available: http://admin-apps.webofknowledge.com/JCR/JCR?RQ=HOME [August 29, 2011].

SOURCE: Generated by the committee based on data from Scopus (http://www.scopus.com) and Web of Science (http://apps.webofknowledge.com) (membership required for both).
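As an illustrative check of the 35 percent figure cited in the text, the share of reviewed articles appearing in journals with an ISI impact factor above 2.0 can be recomputed from the rows of Table 6-4. The sketch below is not the committee's procedure; the variable names are hypothetical, and only the journals whose ISI value exceeds 2.0 are listed, with article counts taken from the table.

```python
# Journals from Table 6-4 with an ISI impact factor above 2.0:
# (journal name, ISI impact factor, number of articles reviewed).
HIGH_ISI_JOURNALS = [
    ("Archives of Physical Medicine and Rehabilitation", 2.25, 18),
    ("Health Affairs", 3.79, 3),
    ("Journal of Head Trauma Rehabilitation", 2.78, 2),
    ("Journal of Neurotrauma", 3.43, 2),
    ("International Journal of Geriatric Psychiatry", 2.03, 1),
    ("Journal of Behavioral Nutrition and Physical Activity", 3.17, 1),
    ("Neurorehabilitation and Neural Repair", 3.77, 1),
]
TOTAL_ARTICLES = 80  # total published articles reviewed (Table 6-4)
ISI_THRESHOLD = 2.0

# Count articles in journals above the threshold and express as a percentage.
articles_above = sum(
    count for _, isi, count in HIGH_ISI_JOURNALS if isi > ISI_THRESHOLD
)
share_pct = round(100 * articles_above / TOTAL_ARTICLES)

print(f"{articles_above} of {TOTAL_ARTICLES} articles ({share_pct} percent)")
```

Under these inputs, 28 of the 80 articles fall in journals above the threshold, which agrees with the 35 percent reported.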

As context for the results of Table 6-4, Annex 6-1 at the end of this chapter includes another table that lists the journals in which the larger pool of 111 NIDRR grantees published (the original pool of NIDRR grants from which the 30 grants in the sample for this evaluation were drawn). Thirty-six percent of these papers were published in prestigious journals (ISI >2).

Using a different metric for citation analysis, the committee was able to identify citations in Scopus for 52 of the 80 journal articles reviewed. The number of citations for these articles ranged from 1 (10 articles) to 74 (one article). The median number of citations was 7.

Limitations and Possible Biases in Rating the Quality of Outputs

As stated in Chapter 2, which describes methods used in the evaluation, several potential limitations could affect the committee’s ability to draw unequivocal conclusions. First, results are not generalizable because of the small number of grants reviewed (N = 30) and the small number of outputs reviewed from each grantee’s portfolio of work. Second, although the resulting distributions of ratings on all four criteria were quite similar when presented in the aggregate as in Figures 6-1 to 6-4, variation was found in post hoc comparisons of ratings by program mechanism and output type. However, the small number of grants reviewed within each program mechanism, as well as the relatively small number of outputs reviewed other than publications, made these comparisons tentative. Therefore, these comparisons are not reported here. More testing is required to assess the construct validity of the individual criteria. Third, several factors in the methods used could have biased the results in positive or negative directions and posed threats to the validity of the conclusions. These factors are discussed below with regard to the reasons for using the methods, efforts to reduce the threats when possible, and an indication of the direction and magnitude of the possible biases.

• Reviewing only outputs that grantees nominated as their best— The committee’s decision to have grantees nominate outputs that best represented their portfolio of products was made after much debate. Alternatives such as randomly sampling outputs could have presented other challenges in determining how to accomplish this type of complex sampling across various types of grants and outputs that were produced at different times. Allowing grantees to nominate their best outputs could have biased results in a positive direction and could have had a potentially strong effect. The results were slightly skewed in a positive direction toward the “excellent” range of the scale.

• Relying to a degree on grantees’ self-reports of what was important and/or of high quality about their outputs—As discussed later in this chapter in the committee’s self-assessment of the methods used, the outputs themselves were the primary focus of the evaluation, but the supplemental information provided by grantees’ self-reports could have biased the results in a strong positive direction.

• Assessing grants potentially too soon after they ended to gain a full measure of the impact of their outputs—This factor could have biased results in a negative direction. To address this threat to some degree, the instructions for submitting outputs allowed grantees to submit recent outputs that may have been produced following the end of their NIDRR grants. Results did not show any notable differences in ratings for grants that had ended in 2007 and 2008 versus 2009.

• Excluding grantees in the original sample who declined to participate—Self-selection could have biased results when three grantees declined to participate in the evaluation, and three more were randomly redrawn from the pool. The possible impact of this factor is judged to be fairly small, but could have made the final sample of 30 grants that participated in the evaluation less representative of the larger population of grants.

• Excluding the Section 21, DBTAC, KT, and AART grants from the sample—Because NIDRR grant competitions for different program mechanisms are on different funding cycles, there were not enough grants (at least two) in these particular program mechanisms to include in the pool. This factor would not have biased results, however, because output ratings were not compared by program mechanism, and no conclusions were drawn on the entire NIDRR portfolio.

Conclusions and Recommendations Related to Output Quality

The study question addressed in this chapter was: To what extent are the final outputs from NIDRR grants of high quality? The committee found that the ratings on all of the criteria were symmetrically distributed along the quality scale with the largest proportions of scores falling at the midpoint of 4 (good quality) and most being slightly skewed toward the higher end of the scale. Although close to 75 percent of the outputs rated were found to be in the higher range of the quality scale (i.e., being rated as 4 or “good” to 7 or “excellent”), across all of the criteria a quarter of the outputs reviewed were found to be in the lower quality range (1 or “poor” to 3 or “below good”).

While expert review is a widely accepted method for assessing the quality of research grant proposals, it must be emphasized that the ratings of outputs here were based on expert opinion rather than quantifiable effects. Other limitations and possible biases were discussed above. Having extensively acknowledged the limitations in conducting the summative evaluation, the committee asserts that the system developed for assessing the quality of outputs worked reasonably well, especially for publications, which made up 70 percent of the outputs reviewed. This assertion is made because the criteria used in the evaluation were based on criteria widely used in federal research programs in the United States and other countries, because expert review methods have validity (National Academy of Sciences, National Academy of Engineering, and Institute of Medicine, 1999), and because the committee did in fact find variation in ratings that was supported by descriptive rationales. Based on the evidence, a set of recommendations is offered to assist NIDRR in striving toward continuously improving the quality of its grants’ outputs.

Improving Quality of Outputs

As stated earlier in this report, the quality of outputs is the product of multiple complex factors that involve the priority-setting process, funding levels, the peer review process, the grant management process, and the quality of the science/research and of the grantees. Findings presented in earlier chapters show that for grantees that are not performing optimally, NIDRR has the option of conducting ongoing formative reviews with experts to identify strategies for improvement. The committee also learned that NIDRR has begun routinely adding to its staff meetings an agenda item for project officers to consult about problems with grants, and that program officers have the flexibility to require additional reporting from grantees as needed. Chapter 5 reveals that grantees believe NIDRR’s oversight and reporting functions foster successful grants and high-quality outputs by assisting them in adhering to their budget and timeline, providing an external quality assurance mechanism for their project management, and prompting them to maintain their focus on project goals for high-quality products.

Recommendation 6-1: Although close to 75 percent of outputs were rated as “good to excellent” (i.e., 4 or higher on the seven-point quality scale), NIDRR should make it clear that it expects all grantees to produce the highest-quality outputs.

The intent of this recommendation is for NIDRR to encourage all of its grantees to publish in peer-reviewed journals, present at national meetings, publish/disseminate materials, and bring technology solutions to market while producing these outputs at the highest levels of quality. To this end, NIDRR should push forward by establishing clear and consistent expectations for grantees to publish in higher impact journals, which would be one indicator of higher quality. For outputs that are not publications, NIDRR should establish clear and consistent standards for quality to be achieved and adopt appropriate metrics for assessing whether grantees are meeting those standards. One way of setting the quality bar higher would be to begin to encourage grantees to use standardized reporting forms and checklists for reviewing the technical quality of their own work before subjecting it to external review. Many resources exist for reviewing different types of research manuscripts and various nonpublication outputs to ensure that the necessary technical elements have been covered. (See http://www.equatornetwork.org/ [November 22, 2011] for examples of standards for outputs such as publications, health information products, and clinical guidelines.) For various technologies and devices, resources such as the Principles of Universal Design (Story and Mueller, 2002) provide a good starting point for assessing quality in terms of access and usability. Although the evidence base for these types of checklists and standards may vary (testing is described in the protocols), they do provide indicators of quality for ongoing self-assessment. When grantees provided evidence of self-assessment, along with external review by consumers, academics, and other stakeholders, the committee rated their outputs higher on the quality scale. Finally, a frequent weakness noted among the tools and technology outputs in particular was that there was insufficient evidence of the science underlying their development. Relying on checklists and standards should assist in reminding developers of the need to document their evidence base.

Additionally, despite limitations of using bibliometrics as described earlier in this chapter, they are a valuable and objective set of metrics that can be used in combination with other assessment strategies. NIDRR has conducted bibliometric analyses in the past, but has not routinely incorporated use of these metrics into its performance measurement.

Recommendation 6-2: NIDRR should consider undertaking bibliometric analyses of its grantees’ publications as a routine component of performance measurement.

Bibliometric analyses would take advantage of an existing data source for periodic measurement of the scientific impact of NIDRR grantees’ publications, as well as the extent to which these outputs are being disseminated and used. This type of metric is being recommended for use in combination with other measures, just as it was used in this evaluation along with expert review and supplemental evidence of the impact an article may have had on consumers, practice, health and social systems, social and health policy, or the private sector and commercialization. Alternative journal quality metrics are being developed (Brown, 2011), but SJR and ISI are widely used and accepted in the field, which facilitates comparison of journal outputs of NIDRR grants and those of other federal agencies and across the diverse fields of research and development funded by NIDRR. Several technology journals in the area of rehabilitation do have journal impact factors as presented in Table 6-4 and Annex 6-1 (e.g., Assistive Technology, Transactions on Biomedical Engineering, Telemedicine and E-health). However, technology grantees may publish in trade journals and magazines that are not tracked by Scopus and Web of Science. Alternative metrics could be used for development projects, such as the extent of adoption and utilization of devices.

SELF-ASSESSMENT OF THE COMMITTEE’S REVIEW METHODS

As part of its charge, the committee engaged in a subjective, self-reflective appraisal of its process in developing a system for assessing the quality of grantee outputs. The committee endeavored to assess its evaluation methods informally throughout the study process. Members engaged in continuous reflection and recording of strengths and weaknesses during the rating process conducted in subgroup meetings. To facilitate this effort, the committee chair participated in all subgroup meetings to ensure that members understood how each subgroup was applying the rating methods. In addition, conference calls with the committee were held after each set of subgroup meetings to discuss the evaluation process and refine the methods. Lastly, during its final meeting, the committee devoted a half-day session to discussion of the strengths and weaknesses of the process and the development of conclusions and recommendations for future evaluations. This discussion was based on the committee’s continuous reflection on the process, along with findings from an informal assessment of committee members’ individual views about the review process.

Each committee member was asked about his or her level of confidence in multiple aspects of the review process and its replication. Their responses were intended to provide an indicator of each committee member’s impressions of the output rating process. Individual members were generally confident in the review process and its potential replication. Aspects of the process in which the committee had the greatest confidence were the technical quality scores, the face validity of the consensus scores that were produced for outputs, and the appropriateness of a seven-point quality rating scale.

These individual impressions were consistent with those developed by the committee as a whole in reflecting on the strengths and weaknesses of the evaluation process over the course of its work. They also confirmed the committee’s impressions regarding the challenge of rating outputs other than articles in peer-reviewed journals. The committee members indicated their lowest confidence in that aspect of the review process.

The committee’s views regarding replication of the review process largely mirrored those regarding the process itself. Committee members expressed the greatest confidence in the potential ability to match appropriate reviewer expertise with outputs for review and to secure suitably knowledgeable reviewers. They expressed less confidence in the potential ability to assess the overall quality of grants by reviewing only selected outputs.

Overall, members’ reflections on the summative evaluation process suggest that, based on their experience, it worked well and achieved what it was designed to do. However, the committee encountered several challenges and limitations during the course of its work that limit the generalizability of the findings from this evaluation and restrict what can be said about the totality of outputs generated by all NIDRR grantees.

CONCLUSIONS AND RECOMMENDATIONS FOR FUTURE EVALUATIONS

In this section, the committee presents its conclusions and recommendations on defining future evaluation objectives, strengthening the output assessment, and improving use of the APR to capture data for future evaluations. The goal is to address aspects of the process that might be reconsidered to improve future evaluations and to ensure that evaluation results optimally inform NIDRR’s efforts to maximize the impact of its research grants.

Defining Future Evaluation Objectives

The primary focus of the summative evaluation was on assessing the quality of research and development outputs produced by grantees. The evaluation did not include in-depth examination or comparison of the larger context of the funding programs, grants, or projects within which the outputs were produced. Although capacity building is a major thrust of NIDRR’s center and training grants, assessment of training outputs, such as the number of trainees moving into research positions, was also beyond the scope of the committee’s charge.

NIDRR’s program mechanisms vary substantially in both size and duration, with grant amounts varying from under $100,000 (fellowship grants) to more than $4 million (center grants) and their duration varying from 1 to more than 5 years. Programs also differ in their objectives, so the expectations of the grantees under different programs vary widely. For example, a Switzer training grant is designed to increase the number of qualified researchers active in the field of disability and rehabilitation research. In contrast, center grants and Model System grants have multiple objectives that include research, technical assistance, training, and dissemination. Model System grants (BMS, TBIMS, SCIMS) have the added expectation of contributing patient-level data to a pooled set of data on the targeted condition.

The number of grants to be reviewed was predetermined by the committee’s charge as 30, which represented about one-quarter of the pool of 111 grants from which the sample was drawn. The committee’s task included drawing a sample of grants that reflected NIDRR’s program mechanisms.

The number of grants reviewed for any of the nine program mechanisms included in the sample was small—the largest number for any single program was 10 (FIP). Therefore, the committee made no attempt to compare the quality of outputs by program mechanism.

NIDRR directed the committee to review two outputs for each of the grantee’s projects. A grantee with a single project had two outputs reviewed, a grantee with three projects had six outputs reviewed, and so on. Although larger grants with more projects also had more outputs reviewed, the evaluation design did not consider grant size, duration, or the relative importance of a given project within a grant.

The committee was asked to produce an overall grant rating based on the outputs reviewed. Results at the grant level are subject to more limitations than those at the output level because of the general lack of information about how the outputs did or did not interrelate; whether, and if so how, grant objectives were accomplished; and the relative priority placed on the various outputs. In addition, for larger, more complex grants, such as center grants, a number of expectations for the grants, such as capacity building, dissemination, outreach, technical assistance, and training, are unlikely to be adequately reflected in the committee’s approach, which focused exclusively on specific outputs. The relationship of outputs to grants is more complex than this approach could address.

Recommendation 6-3: NIDRR should determine whether assessment of the quality of outputs should be the sole evaluation objective.

Considering other evaluation objectives might offer NIDRR further opportunities to continuously assess and improve its performance and achieve its mission. Alternative designs would be needed to evaluate the quality of grants or to allow comparison across program mechanisms. For example, if one goal of an evaluation were to assess the larger outcomes of grants (i.e., the overall impact of their full set of activities), in addition to the methods used in the current output assessment, the evaluation would need to include interviewing grantees about their original objectives to learn about how the grant was implemented and any changes that may have occurred in the projected pathway, how various projects were tied into the overall grant objectives, and how the outputs demonstrated the achievement of the grant and project objectives. The evaluation would also involve conducting bibliometric or other analyses of all publications and examining documentation of the grant’s activities and self-assessments, including cumulative APRs over time. Focusing at the grant level would provide evidence of movement along the research and development pathway (e.g., from theory to measures, from prototype testing to market), as well as allow for assessment of other aspects of the grant, such as training and technical assistance and the possible synergies of multiple projects within one grant.

If the goal of an evaluation were to assess and compare the impact of program mechanisms, the methods might vary across different program mechanisms depending on the expectations for each, but would include those mentioned above and also stakeholder surveys to learn about the specific ways in which individual grants have affected their intended audiences. With regard to sampling methods, larger grant sample sizes that allowed for generalization and comparison across program mechanisms would be needed. An alternative would be to increase the grant sample size in a narrower area by focusing on grants working in specific research areas across different program mechanisms or on grants with shared objectives (e.g., product development, knowledge translation, capacity building).

NIDRR’s own pressing questions would of course drive future evaluations, but other levels of analysis on which NIDRR might focus include the portfolio level (e.g., Model System grants, research and development, training grants), which NIDRR has addressed in the past; the program priority level (i.e., grants funded under certain NIDRR funding priorities) to answer questions regarding the quality and impact of NIDRR’s priority setting; and institute-level questions aimed at evaluating the net impact of NIDRR grants to test assumptions embedded in NIDRR’s logic model. For example, NIDRR’s logic model targets adoption and use of new knowledge leading to changes/improvements in policy, practice, behavior, and system capacity for the ultimate benefit of persons with disabilities (National Institute on Disability and Rehabilitation Research, 2006). The impact of NIDRR grants might also be evaluated by comparing grant proposals that were and were not funded. Did applicants that were not funded by NIDRR go on to receive funding from other agencies for projects similar to those for which they did not receive NIDRR funding? Were they successful in achieving their objectives with that funding? What outputs were produced?

The number of outputs reviewed should depend on the unit of analysis. At the grant level, it might be advisable to assess all outputs to examine their development, their interrelationships, and their impacts. A case study methodology could be used for related subsets of outputs. If NIDRR aimed its evaluation at the program mechanism or portfolio level, sampling grants and assessing all outputs would be the preferred method. For output-level evaluation, having grantees self-nominate their best outputs, as was done for the present evaluation, is a good approach.

Although assessing grantee outputs is valuable, the committee believes that the most meaningful results would come from assessing outputs in the context of a more comprehensive grant-level and program mechanism-level evaluation. More time and resources would be required to trace a grant’s progress over time toward accomplishing its objectives; to understand its evolution, which may have altered the original objectives; and to examine the specific projects that produced the various outputs. However, examining more closely the inputs and grant implementation processes that produced the outputs would yield broader implications for the value of grants, their impact, and future directions for NIDRR.

Strengthening the Output Assessment

The committee was able to develop and implement a quantifiable expert review process for evaluating the outputs of NIDRR grantees, which was based on criteria used in assessing federal research programs in both the United States and other countries. With refinements, this method could be applied to the evaluation of future outputs even more effectively. Nonetheless, in implementing this method, the committee encountered challenges and issues related to the diversity of outputs, the timing of evaluations, sources of information, and reviewer expertise.

Diversity of Outputs

The quality rating system used for the summative evaluation worked well for publications in particular, which made up 70 percent of the outputs reviewed. Using the four criteria outlined earlier in this chapter, the reviewers were able to identify varying levels of quality and the characteristics associated with each. However, the quality criteria were not as easily applied to such outputs as websites, conferences, and interventions; these outputs require more individualized criteria for assessing specialized technical elements, and sometimes more in-depth evaluation methods. Applying one set of criteria, even though broad and flexible, could not guarantee sufficient and appropriate applicability to every type of output.

Timing of Evaluations

The question arises of when best to perform an assessment of outputs. Technical quality can be assessed immediately, but assessment of the impact of outputs requires the passage of time between the release of the outputs and their eventual impact. Evaluation of outputs during the final year of an award may not allow sufficient time for the outputs to have full impact. For example, some publications will be forthcoming at this point, and others will not have had sufficient time to have an impact. The trade-off of waiting a year or more after the end of a grant before performing an evaluation is the likelihood that staff involved with the original grant may not be available, recollection of grant activities may be compromised, and engagement or interest in demonstrating results may be reduced. However, publications