This chapter begins by explaining the scope of the committee’s evaluation. It then describes the methods used for the evaluation. Both the scope of the evaluation and potential limitations of its findings are discussed to clarify the extent to which the findings can be generalized and used by the National Institute on Disability and Rehabilitation Research (NIDRR) to enhance its priority-setting, peer review, and grant management processes.

SCOPE OF THE EVALUATION

This section explains the parameters of the committee’s evaluation through a conceptual framework that guided the evaluation. It also defines “quality” as operationalized for this study.

Conceptual Framework

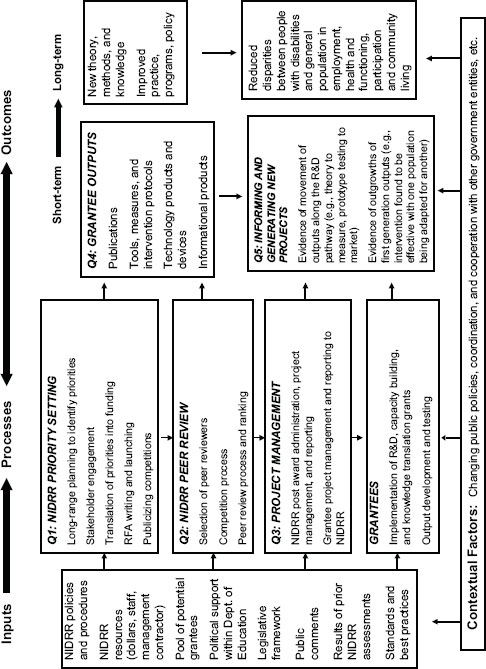

In designing the evaluation, the committee used NIDRR’s published logic model (National Institute on Disability and Rehabilitation Research, 2006) as a starting point. In this model, NIDRR’s investments in grants aimed at capacity building, research and development, and knowledge translation are intended to produce discoveries; theories, measures, and methods; and interventions, products, devices, and environmental adaptations (i.e., short-term outcomes). These outputs should promote the adoption and use of knowledge leading to changes in policy, practice, behavior, and system capacity (i.e., intermediate outcomes) for the ultimate benefit of persons with disabilities in the domains of employment, participation and community living, and health and function (i.e., long-term outcome arenas). NIDRR holds itself accountable primarily for the generation of knowledge in the short-term outcome arena, and it is this arena that was the focus of the committee’s external evaluation.

The committee examined how NIDRR’s grant funding is prioritized for these investment areas, the processes used for reviewing and selecting grants, and the quality of the research and development outputs, as depicted in the conceptual framework in Figure 2-1. The committee developed this framework to guide the evaluation effort. The boxes labeled Q1 to Q5 (i.e., NIDRR’s process and summative evaluation questions 1 to 5; see Chapter 1) were the direct foci of the evaluation. The figure also includes other inputs, contextual factors, and implementation considerations because they are likely to influence the processes and short-term outcomes. The figure shows that the measurable elements of the short-term outcomes are what NIDRR considers to be the array of grant outputs (Q4) generated by grantees, which are expected to inform and generate new projects (Q5). Also shown are the expected long-term outcomes, which include an expanded knowledge base; improved programs and policy; and reduced disparities for people with disabilities in employment, participation and community living, and health and function. However, these long-term outcomes were beyond the scope of the committee’s evaluation.

In summary, the scope of the evaluation encompassed key NIDRR processes of priority setting, peer review, and grant management (process evaluation) and the quality of grantee outputs (summative evaluation). It is important to point out that the scope of the summative evaluation did not include a larger explicit focus on assessing the overall performance of individual grants or NIDRR portfolios (e.g., Did grants achieve their proposed objectives? Did the various research and development portfolios operate as intended to produce the expected results?). Although capacity building is a major thrust of NIDRR’s center and training grants, the present evaluation did not include assessment of outputs related to capacity building (e.g., number of trainees moving into research positions), which would have required methods different from those used for this study.

Definition of “Quality”

The evaluation focused on the quality of NIDRR’s priority-setting, peer review, and grant management processes and on the quality of the outputs generated by grants. A review of the literature on evaluation of federal research programs reveals that the term “quality” is operationalized in a variety of ways. For example, the National Research Council (NRC) and Institute of Medicine (IOM) (2007) developed a framework and suggested measures for assessing multiple research programs across the National Institute for Occupational Safety and Health. That approach refers to various quality-related criteria for assessing “outputs” and “intermediate outcomes.” In another example, within a return-on-investment framework (Panel on Return on Investment in Health Research, 2009), the Canadian Academy of Health Sciences developed a menu of indicators that can be used for evaluating research programs. “Quality criteria” were included as an indicator of “advancing knowledge,” along with “activity” (also called “outputs”) and “outreach” (also called “transfer”). A third example is a framework and web-based survey approach developed by RAND (Ismail et al., 2010; Wooding and Starkey, 2010; Wooding et al., 2009) for measuring outputs and impacts of research programs for the Arthritis Research Campaign (UK), which was also applied to the Medical Research Council (UK) and the Canadian Institutes of Health Research-Institute of Musculoskeletal Health and Arthritis. The survey contains a series of questions for grantees that are focused on stages of research and development and possible impacts. A final example is an approach, developed in Taiwan for assessing a wide range of the country’s federal research programs, that refers to quality-related criteria as “performance attributes” in the areas of academic achievement, technology output, knowledge services, and technology diffusion (Chien et al., 2009).

Annex 2-1 at the end of this chapter shows the various quality criteria and dimensions used across these studies, as well as those compiled by an external advisory group convened by NIDRR in August 2008 to assist the agency in laying the groundwork for the current External Evaluation of NIDRR and its Grantees. Referring specifically to the four output categories used by NIDRR (i.e., publications, tools, technology products, and information products), the advisory group provided responses to the following questions: What criteria could be used by an external peer review panel to rate the quality of NIDRR grantee research outputs? What are some of the dimensions of quality? The first column in the table in Annex 2-1 summarizes the advisory group’s suggested criteria and dimensions for assessing the quality of NIDRR outputs and relates these to the criteria used in the other studies referred to above.

The list of criteria is intended to be exhaustive to illustrate the types of criteria and dimensions that have been used in U.S. and international studies of federal research programs and that the committee drew on in developing the criteria used in this evaluation. Most of these criteria and dimensions were incorporated into the summative evaluation, as described in Chapter 6 of the report and as can be seen in the output quality rating form used for the evaluation (see Appendix B).

In keeping with the literature and other approaches to evaluating federal research programs, the committee used a broad concept of “quality” encompassing attributes that lead to the selection of grants and eventual grant results that (1) meet technical and professional standards of science and technology; (2) will advance the knowledge base or the field of research, policy, or practice; (3) are likely to have or have had demonstrated impacts on science, consumers, provider practice, health and social systems, social and health policy, and the private sector/commercialization; and (4) are disseminated according to principles of appropriate knowledge translation.

METHODS

The committee used a cross-sectional design that incorporated both quantitative and qualitative methods to address the two sets of questions in the process and summative evaluation phases of the study. The process evaluation phase involved reviewing existing documentation and collecting testimonial data to examine how NIDRR, through its policies and procedures and in practice, develops its research and funding priorities, reviews and evaluates submitted proposals, makes decisions and awards grants based on these reviews, and manages grant-supported activities. The summative evaluation phase involved the use of expert panels to assess the quality of grant outputs. The following sections present the study methods that were used to address the two sets of questions for these two phases. Data collection took place between July 2010 and February 2011.

Sources of Information, Data Collection, and Analysis

Process Evaluation

To address questions related to NIDRR’s priority-setting, peer review, and grant management processes, the committee reviewed existing documentation (e.g., legislation, Federal Register notices, NIDRR and U.S. Department of Education [ED] policies and procedures) and interviewed NIDRR and ED management to obtain a more thorough and cohesive understanding of these processes.1 The committee gained additional insight into NIDRR’s peer review process by listening to teleconferences held by three panels as they conducted their reviews of different competitions. The committee also collected original data from the following key informant groups.

NIDRR staff and contractors. NIDRR management provided the committee with a list of the agency’s administrative and program management staff who had sufficient knowledge, experience, and responsibilities in the priority-setting, peer review, and grant management processes to respond to the committee’s interview questions. The committee interviewed 16 NIDRR staff from this list in person to gather information on their roles in and perspectives on these processes. Two-thirds of the interviewees were project officers or direct supervisors of project officers; the remaining held administrative positions. The interview questions were open-ended, and the interviews were recorded and transcribed. The committee also interviewed by telephone a manager from a NIDRR contractor that assists the agency with the logistics of convening peer review panels to obtain the contractor’s perspective on the peer review process.

_______________

1 The committee conducted interviews with NIDRR and ED management in four sessions during summer 2010 and one session in spring 2011.

NIDRR stakeholder organizations. The committee obtained a list of 130 organizations that operate in NIDRR’s arena from (1) NIDRR, which provided the names of professional and advocacy organizations the agency considers to be stakeholders, and (2) the Interagency Committee on Disability Research (ICDR), which provided the names of federal agencies that are statutory members of the ICDR or nonstatutory members that have participated in ICDR special committees. The committee sent all 130 organizations invitations to participate in an online survey; the invitations were addressed specifically to the executive directors of the professional and advocacy organizations and to the named representatives of the federal agencies. Invited respondents were asked to either complete the survey themselves or forward it to a member of their organization who would be knowledgeable about the organization’s relationships with NIDRR. The invitations were sent in an e-mail letter that provided a link to the online survey and a password for logging on to the secure website. If respondents were unable to access the survey online or preferred another method, the committee offered to send them the survey in hard-copy form or to conduct it by telephone. The survey contained a series of closed- and open-ended questions inquiring about the organizations’ role in the NIDRR planning and priority-setting process, respondents’ perspectives on the process, benefits their agencies derived from NIDRR grants or outputs, and suggestions for enhancements to the priority-setting process. Of the 130 organizations, 72 responded to the survey (a response rate of 55 percent). According to Baruch and Holtom (2008), who examined 175 organizational studies, 55 percent is an acceptable response rate for a survey targeting executive directors.

NIDRR peer reviewers. The committee sent invitations to complete a survey to all individuals (a total of 156) who served on NIDRR peer review panels during fiscal year 2008-2009. The invitations were sent in an e-mail letter that provided a link to the online survey and a password for logging on to the secure website. If respondents were unable to access the survey online or preferred another method, the committee offered to send it to them in hard-copy form or to conduct the survey by telephone. The survey contained a series of closed- and open-ended questions inquiring about their experiences with and perspectives on the NIDRR peer review process, how it compares with the peer review processes of other federal research agencies, and suggestions for enhancements to the process. Four potential respondents were removed from the list because their e-mail addresses were invalid even after a concentrated search. Of the 152 individuals successfully invited, 121 responded to the questionnaire (a response rate of 80 percent).
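As a check on the arithmetic, the two survey response rates reported above can be reproduced with a short Python sketch. The function name `response_rate` is illustrative only and is not part of the committee’s methods; the counts are those stated in the text, and the denominator for the peer reviewer survey is assumed to be the 152 individuals successfully invited.

```python
def response_rate(responded: int, invited: int) -> float:
    """Return the response rate as a percentage of those invited."""
    if invited <= 0:
        raise ValueError("invited must be a positive count")
    return 100.0 * responded / invited


# Stakeholder survey: 72 of 130 invited organizations responded.
stakeholder_rate = response_rate(72, 130)

# Peer reviewer survey: 156 individuals were identified, 4 were removed
# because of invalid e-mail addresses, and 121 of the rest responded.
peer_reviewer_rate = response_rate(121, 156 - 4)

print(f"Stakeholder survey: {stakeholder_rate:.1f} percent")
print(f"Peer reviewer survey: {peer_reviewer_rate:.1f} percent")
```

Rounded to whole numbers, these values agree with the 55 percent and 80 percent reported for the two surveys.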

Principal investigators (PIs) of NIDRR grants. The committee invited 30 PIs whose grants were randomly selected for review in the summative phase of the evaluation (see the section below on methods for the summative evaluation) to respond in writing to a special set of questions focused on NIDRR’s priority-setting, peer review, and grant management processes. One set of questions focused specifically on NIDRR’s third major study question, which related to planning and budgetary processes used by grantees to promote high-quality outputs. Twenty-eight of the 30 grantees opted to respond to this set of process questions.

Analysis of Process Data

The committee analyzed quantitative data from the online surveys of stakeholders and peer reviewers descriptively to examine frequencies and measures of central tendency. For process data gathered from NIDRR staff, grantees, stakeholder organizations, and peer reviewers (i.e., responses to open-ended questions that were based on individuals’ opinions or perspectives), standard qualitative analysis techniques (Miles and Huberman, 1994; Patton, 2002) were used. These techniques involved a three-phase process of coding the data to identify common topics, categories, and themes. The first phase in the iterative process of coding each data source involved an initial examination or “read-through” of the complete data set. The initial examination resulted in a preliminary list of topic codes, which were then used to code the data. The second phase of the process involved reviewing the coded data in order to refine and finalize the list of codes. During this effort, analysts generally combined two or more codes into one of the existing codes or into a new overarching code. Multiple variations were attempted before a final list of codes was determined and a final coding of the data was completed. The final phase of the process involved reviewing the finalized coded data and drawing out categories and themes.

While researchers sought to perform each analytic phase similarly for each source of data, differences in the nature and volume of data from the different sources necessitated some variation:

• Grantees, peer reviewers, and stakeholders—Open-ended data were collected from these sources in the form of their written answers to specific questions. The coding of these data was done initially by question, and codes were then compared and combined across the questions.

• NIDRR staff—Qualitative data were collected from NIDRR staff through personal interviews that were audio recorded and transcribed. The questions concerning NIDRR processes of priority setting, peer review, and grant management were phrased in general terms, and respondents were then prompted to provide additional details or clarification. Preliminary coding was done on the first five transcribed interviews, then refined as additional interviews were analyzed. Code lists were significantly refined through the iterative process described above.

It is important to recognize the limitations of qualitative analyses of responses elicited to open-ended survey questions. First, the data set does not generally represent the viewpoints of all respondents because it is common for 15 to 35 percent not to respond to the open-ended questions (Ulin et al., 2005). Second, among those who do respond, a varying number of the responses either are not written in a coherent manner; do not represent complete thoughts; or are vague generalities, lacking detail or specificity. Third, it is common for respondents with critical comments or suggestions to respond more often than those with neutral or positive comments (Gendall et al., 1996).

Finally, Miles and Huberman (1994) encourage using counts of the number of times that certain codes or topics are observed in the data because these counts come into play when describing results, such as the frequency or consistency of observations. In the NIDRR staff (N = 16 respondents) and grantee (N = 28 respondents) data sets collected for this evaluation, counts were used in a highly limited manner because the frequencies were very low in these small data sets. Where data sets were larger (stakeholders = 72 respondents and peer reviewers = 121), counts were used in reporting results of the qualitative analyses for greater transparency, but the committee acknowledges that in most cases, the number of specific observations for certain topics also is quite low. Despite these limitations, the committee believes that the collected data and qualitative analyses add background, context, and insight to many issues raised by respondents. In addition, they can lend support to this report’s conclusions and recommendations when similar issues emerge across respondent groups or across both qualitative and quantitative data sources.
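The use of counts in qualitative analysis can be illustrated with a minimal Python sketch. The code labels and coded responses below are invented for illustration and are not drawn from the committee’s data; the helper `code_frequencies` is likewise hypothetical. Each code is counted at most once per respondent, and respondents who skipped the open-ended question are excluded from the denominator.

```python
from collections import Counter

# Hypothetical data: each inner list holds the topic codes assigned to one
# respondent's open-ended answer. An empty list means no answer was given.
coded_responses = [
    ["timeliness", "communication"],
    ["communication"],
    [],
    ["reviewer_training", "timeliness"],
    ["communication"],
]


def code_frequencies(responses):
    """Count how many respondents mentioned each code (once per respondent)."""
    counts = Counter()
    for codes in responses:
        counts.update(set(codes))
    return counts


frequencies = code_frequencies(coded_responses)
answered = sum(1 for codes in coded_responses if codes)

for code, n in frequencies.most_common():
    print(f"{code}: {n} of {answered} respondents who answered")
```

With data sets as small as those described above, such counts are best read as indications of how consistently a topic arose rather than as estimates of prevalence.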

Summative Evaluation

The summative study questions focused on the quality of outputs generated by NIDRR grantees and the potential for the outputs to lead to further research and development. Chapter 6, which summarizes the results of the summative evaluation, also describes in detail the sampling, measurement, and data collection methods used to conduct the assessment of outputs. Therefore, these methods are described only briefly here.

The committee and NRC staff sampled 30 grants from NIDRR’s portfolio, and the committee as a panel of experts reviewed outputs of these 30 grants. These grants were drawn from nine of NIDRR’s program mechanisms: Burn Model System, Traumatic Brain Injury Model System, Spinal Cord Injury Model System, Rehabilitation Research and Training Center, Rehabilitation Engineering Research Center, Disability and Rehabilitation Research Project, Field Initiated Project, Small Business Innovation Research II, and Switzer Fellowship. The primary focus of the committee’s summative evaluation was on assessing the quality of research and development outputs produced by grantees. The review focused on four different types of outputs, as defined in NIDRR’s Annual Performance Report: (1) publications (e.g., research reports and other publications in peer-reviewed and nonpeer-reviewed journals); (2) tools, measures, and intervention protocols (e.g., instruments or processes created to acquire quantitative or qualitative information, knowledge, or data on a specific disability or rehabilitation issue or to provide a rehabilitative intervention); (3) technology products and devices (e.g., industry standards/guidelines, software/netware, inventions, patents/licenses/patent disclosures, working prototypes, product(s) evaluated or field tested, product(s) transferred to industry for potential commercialization, product(s) in the marketplace); and (4) informational products (e.g., training manuals or curricula; fact sheets; newsletters; audiovisual materials; marketing tools; educational aids; websites or other Internet sites produced in conjunction with research and development, training, dissemination, knowledge translation, and/or consumer involvement activities).

In assessing the quality of outputs, the committee used the following four criteria, stemming from its definition of quality (as discussed earlier): (1) technical quality, (2) advancement of knowledge or the field, (3) likely or demonstrated impact (on science, consumers, provider practice, health and social systems, social and health policy, and the private sector/commercialization), and (4) dissemination according to principles of appropriate knowledge translation. The committee analyzed data from the summative evaluation using frequency distributions and reported ratings of the quality of outputs in the aggregate by quality criteria assessed.
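A frequency distribution of ratings by criterion, of the kind referred to above, can be sketched in Python as follows. The four criterion names come from this chapter; the ratings themselves, the integer score values, and the function name `frequency_distributions` are assumptions made for illustration, since the rating scale is described in Chapter 6 rather than here.

```python
from collections import Counter, defaultdict

# The four quality criteria named in this chapter.
CRITERIA = (
    "technical quality",
    "advancement of knowledge or the field",
    "likely or demonstrated impact",
    "dissemination",
)

# Hypothetical (criterion, score) pairs; the scores are invented.
sample_ratings = [
    ("technical quality", 5),
    ("technical quality", 6),
    ("technical quality", 5),
    ("dissemination", 3),
    ("dissemination", 4),
    ("likely or demonstrated impact", 4),
]


def frequency_distributions(ratings):
    """Tally, for each criterion, how many outputs received each score."""
    by_criterion = defaultdict(Counter)
    for criterion, score in ratings:
        if criterion not in CRITERIA:
            raise ValueError(f"unknown criterion: {criterion}")
        by_criterion[criterion][score] += 1
    # Report every criterion, including those with no ratings yet.
    return {c: dict(sorted(by_criterion[c].items())) for c in CRITERIA}


distributions = frequency_distributions(sample_ratings)
for criterion, distribution in distributions.items():
    print(f"{criterion}: {distribution}")
```

Reporting the tallies in the aggregate by criterion, as here, keeps individual grants and outputs from being singled out in the results.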

Table 2-1 summarizes the data collection and measurement methods described above.

TABLE 2-1 Summary of Data Collection and Measurement Methods

| Study Question | Measures/Instruments | Data Sources | Data Collection Methods |
| --- | --- | --- | --- |
| Process Evaluation (July to September 2010) | | | |
| 1. To what extent is NIDRR’s priority-writing process conducted in such a way as to enhance the quality of the final results? | • Existing data: Rehabilitation Act of 1973, as amended, and other related regulations (Education Department General Administrative Regulations, Department of Education Handbook for the Discretionary Grant Process); NIDRR briefing documents (descriptive and procedural); current and proposed NIDRR Long-Range Plans; notices inviting applications (NIAs) and application kits by program mechanism (2009 and 2010) (relevant to funded priorities); NIDRR assessments: U.S. Office of Management and Budget Performance Assessment Rating Tool (2005), Annual Performance Assessment Expert Panel Reviews (pilot reports for 2006, 2007); NIDRR External Advisory Group report | NIDRR and Federal Register | Extraction of relevant detail from documentation |
| | • Questions for process evaluation interviews tailored for NIDRR management and staff | NIDRR personnel | Semistructured face-to-face interviews |
| | • Web-based survey (form tailored for NIDRR stakeholders) | Stakeholder organizations | Web-based survey (self-administered) |
| 2. To what extent are peer reviews of grant applications done in such a way as to enhance the quality of the final results? | • Existing data: As above for question #1, plus: NIDRR descriptive and procedural documents on peer review (Application Technical Review Plan; peer reviewer instructions); NIAs and application kits by program mechanism (2009 and 2010) (relevant to criteria for rating grants); analysis of peer review tracking database (Synergy, 2008) | NIDRR and Federal Register | Extraction of relevant detail from documentation |
| | • Questions for process evaluation interviews tailored for NIDRR management and staff | NIDRR personnel | Semistructured face-to-face interviews |
| | • Web-based survey (form tailored for peer reviewers) | Peer reviewers | Web-based survey (self-administered) |
| 3. What planning and budgetary processes does the grantee use to promote high-quality outputs? | • Questions for process evaluation interviews tailored for NIDRR management and staff | NIDRR personnel | Semistructured face-to-face interviews |
| | • Grantee questionnaire | Grantees (principal investigators [PIs]) | Self-administered questionnaire |
| Summative Evaluation (October 2010 to January 2011) | | | |
| 4. To what extent are the final outputs from NIDRR grants of high quality? | • Existing data: NIDRR Annual Performance Report (APR) database to describe and summarize the outputs | NIDRR | Extraction of data from APR |
| | • Grantee outputs and supplemental questionnaire with items classifying and describing the types of outputs, features of the outputs that reflect the quality criteria, and future research and development as an outgrowth | Grantees (PIs) | Self-administered questionnaire |
| | • Quality of outputs: committee member rating sheet, based on criteria such as technical quality; advancement of knowledge or the field; likely or demonstrated impact; dissemination | Committee’s peer review scores | Peer review of outputs and other materials (APR, grantee questionnaire) |
| 5. To what extent are the results of the reviewed research and development outputs used to inform new projects by both the grantee and NIDRR? | • Questions for process evaluation interviews | NIDRR personnel | Semistructured face-to-face interviews |
| | • Grantee questionnaire: same questionnaire as in #4 above for items related to the generation of new research and outputs | Grantees (PIs) | Self-administered questionnaire |

Potential Limitations

Although the committee used the most rigorous methods possible to conduct its process and summative evaluations, the evaluation results may have been affected by a number of potential limitations. First, based on the study scope as described above, the committee was limited to directly evaluating only grant outputs. Evaluation of grants was performed only as a second step through synthesis of the results of the output evaluation. Additionally, several grant program mechanisms were not evaluated because the timing of their funding cycles did not accord with the timing of this study.

Second, measurement validity is concerned with the degree to which the study indicators accurately portray the concept of interest (Newcomer, 2011). For the process evaluation, the committee gathered information from different sources (existing documentation, interviews, observation, surveys), but the interviews relied on the accuracy of the memories and perceptions of individuals, which could be susceptible to recall or social desirability biases. For example, the NIDRR staff who were interviewed may have felt pressed to provide positive input on the processes being reviewed. However, NRC staff who conducted the interviews believe all NIDRR staff members were candid in their comments.

To assess the quality of outputs for the summative evaluation, the committee used sound criteria that were based on the cumulative literature reviewed and its members’ own research expertise in diverse areas of disability and rehabilitation research, medicine, engineering, and the social sciences, as well as their expertise in evaluation, economics, knowledge translation, and policy. However, the accuracy of the committee’s assessment of the quality of outputs could have been affected by a number of factors. For example, the committee’s combined expertise did not include every possible content area in such a broad field as disability and rehabilitation research. Because of the diversity of the field, the grants and outputs were extremely varied, so applying one set of criteria, even though broad and flexible, could not guarantee accurate applicability to every output. For example, websites, conferences, training curricula, therapeutic interventions, and educational outreach services ideally would require additional evaluation methods tailored to those types of outputs. The limitations and challenges encountered in conducting the output assessment are discussed in more detail in Chapter 6.

Third, measurement reliability is concerned with the extent to which a measurement can be expected to produce similar results on repeated observations of the same condition or event (Newcomer, 2011). The expert review methods used to assess the quality of grantee outputs could pose a threat to measurement reliability in that they relied on subjective assessments of different expert reviewers. To address this limitation, the committee members frequently discussed how they were applying the criteria and interpreting the anchors of the rating scale so they could calibrate their ratings. They rated the outputs independently and then discussed their results and determined overall ratings that reflected consensus scores. Results of an interrater reliability analysis are presented in Chapter 6.

Fourth, with regard to the process evaluation, it is possible that the respondents choosing to respond to the online surveys of stakeholders and peer reviewers may have differed from those who declined to participate. However, the response rates were respectable on both surveys (80 percent on the peer reviewer survey and 55 percent on the stakeholder survey), and the results of those two surveys also appeared to be balanced and not biased.

Finally, results of the summative evaluation cannot be generalized because of the small sample size and the small number of outputs reviewed from each grant. A total of 30 grants were reviewed across nine program mechanisms from a pool of 111 grants. Another threat to the generalizability of the findings stems from the fact that most of the grants reviewed ended in 2009. Because of the length of time it takes to publish research articles, grantees may have been unable to share their most important work with the committee. Other potential biases in the summative evaluation methods are described in Chapter 6.

Review of the Evaluation Plan

Before the committee implemented its evaluation plan, the plan was reviewed by leading experts in the field who provided suggestions for strengthening the methods to be used. In addition, the evaluation plan was reviewed and approved by the Institutional Review Board of the National Academies, under the category of Expedited Review, as meeting all criteria related to data confidentiality, security, and final disposition; informed consent; and potential risks and benefits to human subjects.

CONCLUSION

In conclusion, the committee used a conceptual framework (see Figure 2-1) developed around NIDRR’s study questions and a definition of quality drawn from the literature as the foundation for its evaluation. The study was conducted with a cross-sectional design using quantitative and qualitative methods. The process evaluation included a review of existing documentation, interviews, online surveys, and written questionnaires. The summative evaluation included an expert panel review of outputs from randomly selected grantees. While the nature of the evaluation itself and the methods used suggest several potential limitations to the study findings, the committee strove to address these limitations where possible and acknowledge cases in which doing so was not possible.

REFERENCES

Baruch, Y., and Holtom, B.C. (2008). Survey levels and trends in organizational research. Human Relations, 61(8), 1139-1160.

Chien, C.F., Chen, C.P., and Chen, C.H. (2009). Designing performance indices and a novel mechanism for evaluating government R&D projects. Journal of Quality, 19(2), 119-135.

Gendall, P., Menelaou, H., and Brennan, M. (1996). Open-ended questions: Some implications for mail survey research. Marketing Bulletin, 7, 1-8.

Ismail, S., Tiessen, J., and Wooding, S. (2010). Strengthening research portfolio evaluation at the Medical Research Council: Developing a survey for the collection of information about research outputs. Santa Monica, CA: RAND Corporation.

Miles, M.B., and Huberman, A.M. (1994). Qualitative data analysis (2nd ed.). Thousand Oaks, CA: Sage.

National Institute on Disability and Rehabilitation Research. (2006). Department of Education: National Institute on Disability and Rehabilitation Research—Notice of Final Long-Range Plan for Fiscal Years 2005–2009. Federal Register, 71(31), 8,166-8,200.

National Institute on Disability and Rehabilitation Research. (2008, August). Meeting report of the NIDRR External Evaluation Advisory Group. Washington, DC: National Institute on Disability and Rehabilitation Research.

National Research Council and Institute of Medicine. (2007). Mining safety and health research at NIOSH: Reviews of research programs of the National Institute for Occupational Safety and Health. Committee to Review the NIOSH Mining Safety and Research Program. Committee on Earth Resources and Board on Earth Sciences and Resources. Washington, DC: The National Academies Press.

Newcomer, K.E. (2011). Strategies to help strengthen validity and reliability of data. Unpublished presentation. George Washington University, Washington, DC.

Panel on Return on Investment in Health Research. (2009). Appendices—Making an impact: A preferred framework and indicators to measure returns on investment in health research. Ottawa, Canada: Canadian Academy of Health Sciences.

Patton, M.Q. (2002). Qualitative research & evaluation methods. Thousand Oaks, CA: Sage.

Synergy Enterprises, Inc. (2008). Draft task 4 analysis. Washington, DC: National Institute on Disability and Rehabilitation.

Ulin, P., Robinson, E., and Tolley, E. (2005). Qualitative methods in public health: A field guide for applied research. San Francisco: Jossey-Bass.

Wooding, S., and Starkey, T. (2010). Piloting the RAND/ARC Impact Scoring System (RAISS) tool in the Canadian context. Cambridge, England: RAND Europe.

Wooding, S., Nason, E., Starkey, T., Haney, S., and Grant, J. (2009). Mapping the impact: Exploring the payback of arthritis research. Santa Monica, CA: RAND Corporation.

TABLE A2-1 Summary of Quality Criteria and Dimensions

| Criteria/Dimensions | NIDRR External Advisory Group (U.S.) | National Institute for Occupational Safety and Health (NIOSH) (U.S.) | Canadian Academy of Health Sciences (CAHS) Indicators (Canada) | RAND (UK, Canada) | Chien et al. (Taiwan) |
| --- | --- | --- | --- | --- | --- |
| Stage of Development of the Research and of the Output (depending on type of research) | x | | | | |
| • Research (e.g., basic, clinical services, health services delivery, output development) | | | | | |
| • Output: What stage of output development was carried out during the grant? (e.g., prototype development, initial testing, regulatory approval, on the market, trialing in a new context) | | | | | |
| • Movement along research and/or development pathway | | | | | |
| Peer Recognition of Output | | | | | |
| • Number of peer-reviewed articles (e.g., using such measures as “journal impact factor” for assessing relative quality, or counting publications in high-quality journals/publishers) | x | x | x | x | x |
| -Number of original research articles | x | | | | |
| -Number of review articles | x | | | | |
| • Number of research reports | x | x | x | x | |
| • Number of conference papers published | x | x | x | | |
| • Number of citations in peer-reviewed articles (e.g., using such measures as “crown indicator,” “highly cited publication counts,” “H-index”) | x | x | x | x | |
| • Citations assimilated into clinical guidelines | x | | | | |
| • Quantity of citations indicates “breakthrough” results | x | x | | | |
| • Measures of esteem (number of awards and honors received) | x | x | x | | |
| • Number of patents awarded | x | x | x | x | |
| • Number of Web downloads or hits | x | x | x | | |
| • Number of reprints or books sold | x | | | | |
| Multiple and Interdisciplinary Audience Involvement in Output Development | | | | | |
| • Multiple consumer input/collaboration in all stages (other researchers, clinicians, policy makers, manufacturers, intellectual property brokers) and evidence of their involvement | x | x | | | |
| • Appropriate targeting of audience | x | | | | |
| Output Meets Acceptable Standards of Science and Technology | | | | | |
| • Appropriate methodology for answering research questions | x | | | | |
| • Output is well defined and implemented with integrity | x | | | | |
| • Well-documented conclusions, supported by the literature | x | x | | | |
| • Ability for the document to stand alone | x | | | | |
| • Meets human subjects protection requirements | x | | | | |
| • Meets ethical standards | x | | | | |
| • Applies concepts of universal design and accessibility | x | | | | |
| • Research done on the need for and development of the instrument or device | x | | | | |
| • Measurement instruments tested and found to have acceptable type and level of validity | x | | | | |
| • Measurement instruments tested and found to have acceptable level of reliability | x | | | | |
| • Pilot testing conducted with consumers and adaptations made as indicated | x | | | | |
| • Theoretical underpinnings and evidence base (e.g., level of evidence per American Psychological Association, American Medical Association) used and well documented | x | | | | |
| Output Has Potential to Improve Lives of People with Disabilities | | | | | |
| • Valued by consumers | x | | | | |
| • Evidence of beneficial outcomes (i.e., How does the output improve abilities of people with disabilities to perform activities of their choice in the community and also expand society’s capacity to provide full opportunities and accommodations?) | x | | | | |
| Output Usability | | | | | |
| • Builds upon person-environment interaction and human-system integration paradigm | x | | | | |
| • Ability to reach target population | x | | | | |
| • Adheres to principles of knowledge translation | x | | | | |
| • Readability; information translated into consumer language that is easy to understand and implement | x | x | | | |
| • Can be used effectively, repeatedly as intended by design | x | | | | |
| • Feasibility | x | | | | |
| • Accessibility | x | | | | |
| • Acceptability | x | | | | |
| • Generalizability | x | | | | |
| • User-friendly | x | x | | | |
| • Ease of use (for measures: ease of scoring and administration) | x | | | | |
| • Flexibility | x | | | | |
| • Scalability (for measures) | | | | | |
| • Adoptability | x | | | | |
| • Affordability and repair cost (for devices) | x | | | | |
| • Cost/benefit | x | | | | |
| • Financing available | x | | | | |
| • Compatibility and durability | x | | | | |
| • Sustainability | x | | | | |
| • Safety issues considered | x | | | | |
| Output Utility and Relevance | | | | | |
| • Addresses high-priority area | x | x | | | |
| • Addresses NIDRR mission, International Classification of Function, Disability and Health (ICF) model, and Rehabilitation Act | x | | | | |
| • Addresses a practical problem | x | | | | |
| • Importance of contribution to the field (i.e., results expand the knowledge base, advance understanding, define issues, fill a gap) | x | x | | | |
| • Significance and magnitude of impact within and outside of field (i.e., Does output help build internal or extramural institutional knowledge? Does output transcend boundaries? Does output produce effective cross-agency, cross-institute, internal/external collaboration?) | x | x | x | x | x |
| • Transferability (number of technology output transfers, number of companies transferred to, technology transfer sustained, source of citations in other fields) | x | x | x | x | x |
| • Focuses on innovation or emerging technologies | x | | | | |
| • Ability to stimulate new research, new knowledge, or new products | x | x | | | |
| • Perceived as useful to practitioners; has changed practice | x | x | | | |
| • Perceived as useful to consumers | x | | | | |
| • Outputs are actually used in the field (e.g., adoption of guidelines/protocols/interventions; acceptance of outputs via publications/conference proceedings; manufacture license; product available in marketplace; percentage of target audience that has adopted; example of implementation in the field) | x | x | x | x | |
| • Outputs provide evidence to support state-level changes to policies and/or practices | x | | | | |
| • Output recommendations have been implemented by policy makers | x | x | x | | |
| • Requests for expert testimony, participation in national advisory roles | x | x | | | |
| Dissemination of Outputs | | | | | |
| • Evidence of efforts to disseminate (e.g., dissemination plan and activities) | x | | | | |
| • Timely dissemination | x | | | | |
| • Depth and breadth (e.g., percentage of target group that has used/adopted and duration of use) | x | x | | | |
| • Dissemination of research in practice literature and conferences for transfer potential | x | x | | | |
| • Use of multimedia and emerging technology (RAND survey asks about specific sources for different audiences) | x | x | | | |
| • Mode of dissemination matches target audience | x | | | | |
| • Counts of products distributed (e.g., manuals, newsletters, curricula) | x | x | | | |
| • Evaluation plan and consumer reviews | x | | | | |
| • Scope of training: topics covered, number of training events, hours of training, participant hours, participant characteristics | x | | | | |
| • Technical assistance/consultation provided | x | x | | | |
| • Number of sponsored conferences and workshops, with documented sponsorship, number and composition of attendees, products of the event, assessment of the event | x | x | x | | |
| • Dissemination of research through databases | x | x | x | x | |

SOURCE: Generated by the committee based on cited sources.