TA11 Modeling, Simulation, and Information Technology and Processing

INTRODUCTION

The draft roadmap for technology area (TA) 11, Modeling, Simulation, and Information Technology and Processing, consists of four technology subareas:1

• 11.1 Computing

• 11.2 Modeling

• 11.3 Simulation

• 11.4 Information Processing

NASA’s ability to make engineering breakthroughs and scientific discoveries is limited not only by human, robotic, and remotely sensed observation, but also by the ability to transport data and transform the data into scientific and engineering knowledge through sophisticated models. But those data management and utilization steps can tax the information technology and processing capacity of the institution. With data volumes exponentially increasing into the petabyte and exabyte ranges, modeling, simulation, and information technology and processing requirements demand advanced supercomputing capabilities.

Handling and archiving rapidly growing data sets, including analyzing and parsing the data using appropriate metadata, pose significant new demands on information systems technology. The amount of data from observations and simulations is growing much more rapidly than the speed of networks, thus requiring new paradigms: rather than bringing massive data to scientists’ workstations for analysis, analysis algorithms will increasingly have to be run on remote databases.
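The shift from moving data to moving analysis can be sketched with Python’s built-in `sqlite3` module. In this minimal illustration, an in-memory database stands in for a remote archive (an assumption; a real archive would sit across a network). The `observations` table and `flux` column are hypothetical. Both functions compute the same mean, but the client-side version transfers every row and the server-side version transfers only one.

```python
import sqlite3


def build_archive(n_rows: int) -> sqlite3.Connection:
    """Create a stand-in 'remote' archive (hypothetical schema) in memory."""
    conn = sqlite3.connect(":memory:")
    conn.execute("CREATE TABLE observations (id INTEGER PRIMARY KEY, flux REAL)")
    conn.executemany(
        "INSERT INTO observations (flux) VALUES (?)",
        ((float(i % 100),) for i in range(n_rows)),
    )
    conn.commit()
    return conn


def mean_flux_client_side(conn):
    """Bring the data to the analyst: ship every row, then reduce locally."""
    rows = conn.execute("SELECT flux FROM observations").fetchall()
    return sum(r[0] for r in rows) / len(rows), len(rows)


def mean_flux_server_side(conn):
    """Bring the analysis to the data: the archive reduces, one row comes back."""
    rows = conn.execute("SELECT AVG(flux) FROM observations").fetchall()
    return rows[0][0], len(rows)


if __name__ == "__main__":
    archive = build_archive(100_000)
    local_mean, local_rows = mean_flux_client_side(archive)
    remote_mean, remote_rows = mean_flux_server_side(archive)
    print(f"client-side: mean={local_mean:.2f}, rows transferred={local_rows}")
    print(f"server-side: mean={remote_mean:.2f}, rows transferred={remote_rows}")
```

Over a wide-area network, the cost of the client-side version grows with the size of the archive. The server-side version returns a fixed-size result. That asymmetry is what makes running the analysis next to the data attractive.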

There are also important spacecraft computer technology requirements, including intelligent data understanding, development of radiation-hard multicore chips and GPUs, fault-tolerant codes and hardware,2 and software that runs efficiently on such systems. Another important challenge is developing improved software for reliably simulating and testing complete NASA missions, including their human components.


1 The draft space technology roadmaps are available at http://www.nasa.gov/offices/oct/strategic_integration/technology_roadmap.html

2 Intelligent adaptive systems technologies for autonomous spacecraft operations are discussed under TA 4.5.1 (vehicle systems management and fault detection, isolation, and recovery).

TABLE N.1 Technology Area Breakdown Structure for TA11, Modeling, Simulation, and Information Technology and Processing

| NASA Draft Roadmap (Revision 10) | Steering Committee-Recommended Changes |
|---|---|
| TA11 Modeling, Simulation, Information Technology, and Processing | One technology has been split into two parts. |
| 11.1. Computing | |
| 11.1.1. Flight Computing | |
| 11.1.2. Ground Computing | |
| 11.2. Modeling | |
| 11.2.1. Software Modeling and Model-Checking | |
| 11.2.2. Integrated Hardware and Software Modeling | |
| 11.2.3. Human-System Performance Modeling | |
| 11.2.4. Science and Engineering Modeling | Split 11.2.4 to create two separate technologies: |
| | 11.2.4a Science Modeling and Simulation |
| | 11.2.4b Aerospace Engineering Modeling and Simulation |
| 11.2.5. Frameworks, Languages, Tools, and Standards | |
| 11.3. Simulation | |
| 11.3.1. Distributed Simulation | |
| 11.3.2. Integrated System Life Cycle Simulation | |
| 11.3.3. Simulation-Based Systems Engineering | |
| 11.3.4. Simulation-Based Training and Decision Support Systems | |
| 11.4. Information Processing | |
| 11.4.1. Science, Engineering, and Mission Data Life Cycle | |
| 11.4.2. Intelligent Data Understanding | |
| 11.4.3. Semantic Technologies | |
| 11.4.4. Collaborative Science and Engineering | |
| 11.4.5. Advanced Mission Systems | |

Before prioritizing the level 3 technologies included in TA11, one technology was split into two parts. The change is explained below and illustrated in Table N.1. The complete, revised technology area breakdown structure (TABS) for all 14 TAs is shown in Appendix B.

Technology 11.2.4, Science and Engineering Modeling (which is titled Science and Aerospace Engineering Modeling in the text of the TA11 roadmap), was considered too broad. It has been split in two:

• 11.2.4a, Science Modeling and Simulation, and

• 11.2.4b, Aerospace Engineering Modeling and Simulation.

The content of these two technologies is as described in the TA11 roadmap under Section 11.2.4 Science and Aerospace Engineering Modeling, in the subsections titled Science Modeling and Aerospace Engineering, respectively.

TOP TECHNICAL CHALLENGES

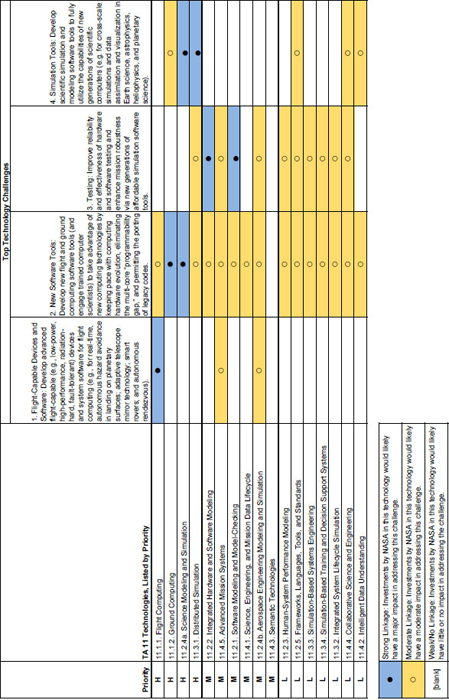

The panel identified four top technical challenges for TA11, listed below in priority order.

1. Flight-capable devices and software. Develop advanced flight-capable (e.g., low-power, high-performance, radiation-hard, fault-tolerant) devices and system software for flight computing (e.g., for real-time, autonomous hazard avoidance in landing on planetary surfaces; adaptive telescope mirror technology; smart rovers; and autonomous rendezvous).

The application of increasingly powerful computational capabilities will support more ambitious undertakings, many of which rely on autonomous smart systems. However, many of the advanced devices developed for commercial terrestrial applications are not suited to the space environment. Space applications require devices that are immune to, or at least tolerant of, radiation-induced effects, within tightly constrained mass and power resources. Software that runs on these advanced devices, whose architectures differ from those of current space-qualified devices, also requires new design approaches. The criticality and complexity of the software needed for these demanding applications call for further development of methods to manage that complexity at low risk.

2. New Software Tools. Develop new flight and ground computing software tools (and engage trained computer scientists) to take advantage of new computing technologies by keeping pace with computing hardware evolution, eliminating the multi-core “programmability gap,” and permitting the porting of legacy codes.

Since about 2004, increases in computer power have come from increases in the number of cores per chip (“multi-core”) and from the use of very fast vector graphics processing units (GPUs) rather than from increases in processor speed. NASA has a large budget for new computer hardware, but the challenge of developing efficient new codes for these new computer architectures has not yet been addressed. Major codes are developed over decades, but computers change every few years, so NASA’s vast inventory of legacy engineering and scientific codes will need to be re-engineered to make effective use of the rapidly changing advanced computational systems. This re-engineering needs to anticipate future architectures now being developed, such as Many Integrated Core (MIC) and other advanced processors, with the goals of portability, reliability, scalability, and simplicity. The effort will require both the engagement of a large number of computer scientists and professional programmers and the development of new software tools to facilitate the porting of legacy codes and the creation of new, more efficient codes for these new systems. Additional issues that arise as computer systems evolve to millions of cores include the need for redundancy or other defenses against hardware failures, the need for software and operating systems that prevent load imbalance from slowing the performance of codes, and the need to make large computers more energy efficient as they consume a growing fraction of available electricity.

3. Testing. Improve reliability and effectiveness of hardware and software testing and enhance mission robustness via new generations of affordable simulation software tools.

The complexity of systems composed of advanced hardware and software must be managed to ensure their reliability and robustness. New software tools that provide insight into the design of complex systems will support the development of systems with well-understood, predictable behavior while minimizing or eliminating undesirable responses.

4. Simulation Tools. Develop scientific simulation and modeling software tools to fully utilize the capabilities of new generations of scientific computers (e.g., for cross-scale simulations and data assimilation and visualization in Earth science, astrophysics, heliophysics, and planetary science).

Supercomputers have become increasingly powerful, often enabling realistic multi-resolution simulations of complex astrophysical, geophysical, and aerodynamic phenomena. The phenomena now being simulated include the evolution of circumstellar disks into planetary systems, the formation of stars in giant molecular clouds in galaxies, and the evolution of entire galaxies, including feedback from supernovae and supermassive black holes. These are also multi-resolution problems, since (for example) galaxy evolution cannot really be understood without understanding smaller-scale phenomena such as star formation. Other multi-scale phenomena being simulated on NASA’s supercomputers include the entry of spacecraft into planetary atmospheres and the ocean-atmosphere interactions that affect the evolution of Earth’s climate. However, efficient new codes that use the full capabilities of these new computer architectures are still under development.
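The testing challenge (item 3) is one area where tools such as explicit-state model checking (technology 11.2.1 in Table N.1) can help. The toy checker below is a hypothetical illustration written for this discussion, not a NASA tool. It exhaustively explores every interleaving of two processes that use a flawed “check, then set” lock and returns the shortest trace that violates mutual exclusion. Conventional testing can easily miss this kind of rare undesirable behavior.

```python
from collections import deque


def successors(state):
    """Yield (action, next_state) for every enabled step of either process.

    A state is (pc of process 0, pc of process 1, lock held?).
    """
    pcs, lock = list(state[:2]), state[2]
    for i in (0, 1):
        nxt = pcs.copy()
        if pcs[i] == "idle" and not lock:
            nxt[i] = "checked"  # observed the lock as free...
            yield f"P{i} sees lock free", (nxt[0], nxt[1], lock)
        elif pcs[i] == "checked":
            nxt[i] = "critical"  # ...but takes it without re-checking (the bug)
            yield f"P{i} enters critical section", (nxt[0], nxt[1], True)
        elif pcs[i] == "critical":
            nxt[i] = "idle"
            yield f"P{i} releases lock", (nxt[0], nxt[1], False)


def mutual_exclusion(state):
    """Safety invariant: both processes are never in the critical section at once."""
    return not (state[0] == "critical" and state[1] == "critical")


def check(initial, invariant):
    """Breadth-first search of all reachable states.

    Returns the shortest action trace that violates the invariant, or None.
    """
    parent = {initial: None}
    queue = deque([initial])
    while queue:
        state = queue.popleft()
        if not invariant(state):
            trace = []
            while parent[state] is not None:
                prev, action = parent[state]
                trace.append(action)
                state = prev
            return list(reversed(trace))
        for action, nxt in successors(state):
            if nxt not in parent:
                parent[nxt] = (state, action)
                queue.append(nxt)
    return None


if __name__ == "__main__":
    counterexample = check(("idle", "idle", False), mutual_exclusion)
    for step in counterexample or ["invariant holds in every reachable state"]:
        print(step)
```

Breadth-first search explores states in order of their distance from the start. The first violation it finds is therefore also a shortest counterexample, which keeps traces short enough for engineers to read.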

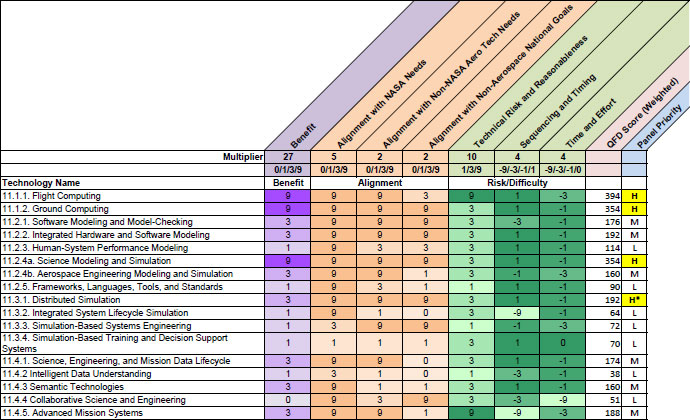

FIGURE N.1 Quality function deployment (QFD) summary matrix for TA11 Modeling, Simulation, and Information Technology and Processing. The justification for the high-priority designation of all high-priority technologies appears in the section “High-Priority Level 3 Technologies.” H = High Priority; H* = High Priority, QFD score override; M = Medium Priority; L = Low Priority.

QFD MATRIX AND NUMERICAL RESULTS FOR TA11

Assessment of computing-related technologies is difficult because many developments will be motivated primarily by, and used primarily in, applications outside NASA. With the exception of spacecraft on-board processing, NASA is primarily a consumer, adopter, and/or adapter of advanced information technology facilities. As a result, only four technologies were ranked as high priority. This does not mean that the other technologies are unimportant to NASA, only that NASA is not viewed as the primary resource for developing these technologies.

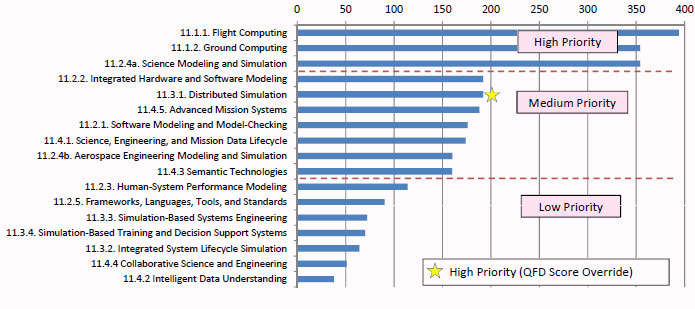

Figures N.1 and N.2 show the relative ranking of each technology. The panel assessed four of the technologies as high priority. Three of these were selected based on their QFD scores, which significantly exceeded the scores of lower-ranked technologies. After careful consideration, the panel also designated one additional technology as a high-priority technology.3

Figure N.2 displays the TA11 technologies in order of priority.

The panel’s assessment of linkages between the level 3 technologies and top technical challenges is summarized in Figure N.3.


3 In recognition that the QFD process could not accurately quantify all of the attributes of a given technology, after the QFD scores were compiled, the panels in some cases designated some technologies as high priority even if their scores were not comparable to the scores of other high-priority technologies. The justification for the high-priority designation of all the high-priority technologies for TA11 appears below in the section “High-Priority Level 3 Technologies.”

FIGURE N.2 Quality function deployment rankings for TA11 Modeling, Simulation, and Information Technology and Processing.

HIGH-PRIORITY LEVEL 3 TECHNOLOGIES

Panel 3 identified four high-priority technologies in TA11. The justification for ranking each of these technologies as high priority is discussed below.

Technology 11.1.1, Flight Computing

Flight computing technology encompasses low-power, radiation-hardened, high-performance processors. These will continue to be in demand for general application in the space community. Special operations, such as autonomous landing and hazard avoidance, are made practical by these high-performance processors. Processors with the desired performance (e.g., multi-core processors) are readily available for terrestrial applications; however, radiation-hardened versions of these are not.

A major concern is ensuring the continued availability of radiation-hardened integrated circuits for space. As the feature size of commercial integrated circuits decreases, radiation susceptibility increases. Maintaining production lines for radiation-hardened devices is not profitable. Action may be required if NASA and other government organizations wish to maintain a domestic source for these devices, or a technology development effort may be required to determine how to apply commercial devices in the space environment. For example, using multi-core processors with the ability to isolate cores that have experienced an upset may be one approach. It is not unreasonable to assume such a course may be the only option to continue to fly high-performance processors in the future.
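The core-isolation idea can be sketched as triple modular redundancy in software. The same computation runs on three cores, a majority vote selects the output, and any core that disagrees is flagged for isolation. This is a generic illustration under stated assumptions. The `Core` class, the `VotingExecutive` voter, and the simulated single-bit upset are hypothetical stand-ins and do not describe any flight processor.

```python
from collections import Counter
from dataclasses import dataclass, field


@dataclass
class Core:
    """Hypothetical model of one processor core; `upset` simulates a radiation-induced bit flip."""
    core_id: int
    upset: bool = False
    isolated: bool = False

    def run(self, x: int) -> int:
        result = x * x + 1  # the replicated computation
        if self.upset:
            result ^= 1 << 3  # flip one bit to mimic a single-event upset
        return result


@dataclass
class VotingExecutive:
    """Runs each job on three healthy cores and isolates any core that dissents."""
    cores: list
    log: list = field(default_factory=list)

    def execute(self, x: int) -> int:
        active = [c for c in self.cores if not c.isolated]
        if len(active) < 3:
            raise RuntimeError("fewer than three healthy cores; cannot vote")
        voters = active[:3]
        outputs = {c.core_id: c.run(x) for c in voters}
        value, votes = Counter(outputs.values()).most_common(1)[0]
        if votes < 2:
            raise RuntimeError("no majority; all three outputs disagree")
        for c in voters:
            if outputs[c.core_id] != value:
                c.isolated = True  # take the dissenting core out of service
                self.log.append(f"core {c.core_id} isolated")
        return value


if __name__ == "__main__":
    cores = [Core(0), Core(1, upset=True), Core(2), Core(3)]  # core 3 is a spare
    executive = VotingExecutive(cores)
    print(executive.execute(5), executive.log)
    print(executive.execute(7), executive.log)
```

Keeping a spare core in the pool lets the voter continue after one isolation. A real design would also have to address common-mode faults, voter reliability, and timing, none of which this sketch models.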

The associated risk ranges from moderate to high, depending on the approach taken to ensure continued access to these devices. Maintaining existing production lines may be prohibitively expensive and may result in performance-constrained devices. However, such action may be necessary to maintain device availability until safe, reliable application of commercial multi-core products is achieved.

This technology is well aligned with NASA’s expertise and capabilities, as evidenced by NASA’s long collaboration with industry partners to develop such devices. This technology has applications throughout all aspects of the space community: civil government, national security, and commercial space. Access to the space station would not benefit this technology development.

This technology will have significant impact because advanced computer architectures, when eventually incorporated into radiation-hardened flight processors, can be expected to yield major performance improvements in on-board computing throughput, fault management, intelligent decision making, and science data acquisition, and will enable autonomous landing and hazard avoidance. Its use is anticipated across all classes of NASA missions.

FIGURE N.3 Level of support that the technologies provide to the top technical challenges for TA11 Modeling, Simulation, and Information Technology and Processing.

Technology 11.1.2, Ground Computing

Ground computing technology consists of programmability for multi-core/hybrid/accelerated computer architectures, including developing tools to help port existing codes to these new architectures.

After about 2004, major improvements in computation have come from increasing numbers of compute cores per chip and from improvements in accelerated processors (vector graphics processing units, or GPUs); before 2004, improvements came mainly from steadily increasing clock speeds of individual compute cores. However, the vast library of legacy engineering and scientific codes does not run efficiently on the new computer architectures. Technology development is needed to create software tools that help programmers convert legacy codes and new algorithms so that they run efficiently on these new computer systems. Related challenges are developing improved compilers and run-time algorithms that improve load balancing in these new computer architectures, and developing methods to prevent hardware failures in systems with hundreds of thousands to millions of cores from impacting computational reliability. All users of high-performance computers face these challenges, and NASA can work on these issues with other agencies and industrial partners. Continuous technology improvements will be required as computer system architectures steadily change. Improved technology is likely to be widely applicable across NASA, the aerospace community, and beyond. Access to the space station is not needed.
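Load imbalance can be illustrated with a small scheduling example. Static round-robin assignment of tasks with uneven costs leaves some cores idle while one core finishes the expensive work. A simple load-aware heuristic, greedy longest-processing-time-first list scheduling, assigns each task to the currently least-loaded core. That heuristic is used here only as an illustrative stand-in for the run-time algorithms mentioned above. The task costs are made-up numbers.

```python
import heapq


def makespan_round_robin(costs, n_cores):
    """Static assignment: task i goes to core i % n_cores regardless of its cost."""
    loads = [0.0] * n_cores
    for i, cost in enumerate(costs):
        loads[i % n_cores] += cost
    return max(loads)


def makespan_greedy_lpt(costs, n_cores):
    """Load-aware heuristic: longest task first, always onto the least-loaded core."""
    heap = [(0.0, core) for core in range(n_cores)]  # (current load, core id)
    heapq.heapify(heap)
    for cost in sorted(costs, reverse=True):
        load, core = heapq.heappop(heap)
        heapq.heappush(heap, (load + cost, core))
    return max(load for load, _ in heap)


if __name__ == "__main__":
    # Hypothetical per-task costs (e.g., seconds), deliberately uneven.
    costs = [8, 1, 1, 1, 7, 1, 1, 1, 6, 1, 1, 1]
    total = sum(costs)
    for name, schedule in (("round-robin", makespan_round_robin),
                           ("greedy LPT", makespan_greedy_lpt)):
        span = schedule(costs, 4)
        print(f"{name}: makespan={span}, parallel efficiency={total / (4 * span):.0%}")
```

With these costs on four cores, the round-robin makespan is 21 units. The greedy heuristic finishes in 8, close to the ideal of 7.5 (total work divided by core count). At millions of cores, the same effect determines whether hardware sits idle.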

This technology is game-changing because computer hardware capability has been increasing exponentially with new multi-core and accelerator hardware, but software has not been keeping pace with hardware. Closing the programmability gap could yield an improvement of two to three orders of magnitude in computing capability, with a wide range of impact.

Technology 11.2.4a, Science Modeling and Simulation

Panel 3 split the original 11.2.4 Science and Engineering Modeling technology into two technologies, which were rated separately:

• 11.2.4a Science Modeling and Simulation: high priority.

• 11.2.4b Aerospace Engineering Modeling and Simulation: medium priority.

The 11.2.4a technology consists of multi-scale modeling, which is required to deal with complex astrophysical and geophysical systems with a wide range of length scales or other physical variables. Better methods also need to be developed to compare simulations with observations to improve physical understanding of the implications of rapidly growing NASA data sets.

Developing multi-scale models and simulations is an ongoing challenge that impacts many areas of science. Progress in this technology will require steady improvement in codes and methodology. As scientists attempt to understand increasingly complex astrophysical and geophysical systems using constantly improving data, the challenge grows to develop better methods to integrate data from diverse sources and compare these data with simulations in order to improve scientific understanding. Alignment with NASA is very good. Access to the space station is not needed.

This technology will have significant impact because it optimizes the value of observations by elucidating the physical principles involved. This capability could impact many NASA missions, and improved modeling and simulation technology is likely to have wide impacts in diverse mission areas.

Technology 11.3.1, Distributed Simulation

Distributed simulation technologies create the ability to share simulations among software developers, scientists, and data analysts, and thus greatly enhance the value of the large investments in simulations, which currently can require tens of millions of CPU hours. There is a need for large-scale, shared, secure, distributed environments with sufficient interconnect bandwidth and display capabilities to enable distributed simulation (processing) as well as distributed analysis and visualization of data produced by simulations. (Large simulations can typically generate terabytes of data, resulting in the need for advanced data management and data mining technologies.)

The panel overrode the QFD score for this technology to designate it as a high-priority technology because the QFD scores did not capture the value this technology could provide in terms of major efficiency improvement supporting collaborations, particularly interdisciplinary studies that would benefit numerous NASA missions in multiple areas. In addition, it would have a broad impact within non-NASA aerospace as well as a broad impact on many non-aerospace communities.

MEDIUM- AND LOW-PRIORITY TECHNOLOGIES

One group of medium-priority technologies includes 11.2.2 Integrated Hardware and Software Modeling; 11.4.5 Advanced Mission Systems; and 11.2.1 Software Modeling and Model-Checking. These could each provide a major (but not game-changing) benefit, did not have the lowest possible score in any category, and share the common theme that they can lower development cost.

A second group of medium-priority technologies includes 11.4.1 Science, Engineering, and Mission Data Life Cycle; 11.2.4b Aerospace Engineering Modeling and Simulation; and 11.4.3 Semantic Technologies. As system modeling and simulation capabilities advance, the modeling of system safety performance (including complex interactions within the system and with the external environment, as well as anomalous behaviors) is also expected to improve. The technologies in this group could each provide a major (but not game-changing) benefit, did not have the lowest possible score in any category, and share the common theme that no significant new technology is required.

One group of low-priority technologies includes 11.2.3 Human-System Performance Modeling (again including system safety performance, as noted above); 11.2.5 Frameworks, Languages, Tools, and Standards; and 11.3.3 Simulation-Based Systems Engineering. These could each provide a minor benefit, had no low scores in any category, and share the common theme that NASA OCT does not need to invest to further their development, which is already underway.

A second group of low-priority technologies includes 11.3.4 Simulation-Based Training and Decision Support Systems; 11.3.2 Integrated System Life Cycle Simulation; and 11.4.2 Intelligent Data Understanding. The latter two each had a low score in one category. These could each provide a minor benefit and share the common theme that part or all of the technology already exists.

The remaining low-priority technology was 11.4.4 Collaborative Science and Engineering. It received a score of zero for benefit; in the view of Panel 3, it would provide no significant benefit, and much of this work is already being done today.

WORKSHOP SUMMARY

The Instruments and Computing Panel (Panel 3) for the NASA Technology Roadmaps study held a workshop on May 10, 2011, at the National Academies Keck Center in Washington, D.C. It focused on Modeling, Simulation, Information Technology, and Processing (NASA Technology Roadmap TA11). The workshop was attended by members of Panel 3, one or more members of the Steering Committee for the NASA Technology Roadmaps study, invited workshop participants, study staff, and members of the public who attended the open sessions. The workshop began with a short introduction by the Panel 3 chair, followed by a series of four panel discussions, then a session for public comment and general discussion, and finally a short summary and wrap-up by the Panel 3 chair. Each panel discussion was moderated by a Panel 3 member. Experts from industry, academia, and/or government were invited to present.

Panel Discussion 1: Simulation of Engineering Systems

The first session of the day focused on simulation of engineering systems and was moderated by Alan Title.

Greg Zacharias (Charles River Analytics) gave a presentation on simulation-based systems engineering. He noted that systems engineering is a very human-intensive process, often with very informal and implied specifications and requirements. He believes the technical challenge of automating these processes is getting computers to think more like humans or getting human concepts into machine-readable forms, and he thinks that this capability would be game-changing. He also noted another potentially game-changing gap in the roadmap: improved modeling of human operators in simulations. Full end-to-end systems modeling, or a “digital twin,” requires human operator modeling at the right level of perceptual/cognitive/motor fidelity. This also ties in with another point he raised, the need to improve multi-resolution modeling and simulation of systems (including human operators).

Amy Pritchett (Georgia Institute of Technology) gave the second presentation, which focused on aviation but also discussed how it relates to space-based missions. Safety drivers include coupled, interdependent behaviors (hardware, software, and human dynamics). She noted that the NRC decadal survey for aeronautics rated developing complex interactive adaptive systems as the most significant challenge in developing flight-critical systems. She believes that the goal should be to simulate overall systems and processes (including all the people in the loop, all the vehicles, components, etc.). Interdependencies need to be modeled not just among hardware and software systems but also among human operators and organizational aspects. Modeling and simulation consist not only of physics-based modeling but also of modeling computational systems, communication behavior, and the cognitive behaviors of the humans in the system. She sees components of such an integrated model individually in the roadmap, but nothing appears to address bringing them together. She also agrees that properly scaling individual models when they are linked together to model complete architectures is a significant challenge.

Panel Discussion 2: Re-Engineering Simulation, Analysis, and Processing Codes

The next session focused on the new classes of programming languages and how to adapt to new multi-core computers and was moderated by Joel Primack.

Bill Matthaeus (Bartol Research Institute and University of Delaware) gave the first presentation, in which he related his views as both a theoretical and a computational physicist. He described the challenges of dividing responsibilities between computational scientists and end users. He noted the evolving computational paradigm shifts, the latest being the move to multicore processors working in clusters. He discussed the degree to which end users need to retool for each paradigm shift and how software compilers can ease the transitions. He noted the trend of end users becoming more detached from the details of codes and software packages and perhaps trusting them too much without verification. He described his concern that modern compilers have become less robust and have produced unstable code, code that is not portable, or code that produces different results on different computers. As an example of the complexity of such simulations, he referred to his work on developing three-dimensional magnetohydrodynamic models of the heliosphere (e.g., for space weather) and compared it to terrestrial global circulation models for climate or to calculating the flow around a 747 from first principles.

Bronson Messer (Oak Ridge National Laboratory) gave the second presentation and started with a review of ORNL’s current capabilities. The lab has three petascale platforms in a single room and the world’s second most powerful computer, with over 2 petaflops (2 quadrillion floating point operations per second) of computing power. ORNL is in the midst of building a 10- to 20-petaflops computer and, by the end of the decade, is looking toward an exaflop computer. He reported that currently the most difficult physical challenge in large supercomputers is the power needed to move data between memory and processors and from node to node; he expects this to change with some upcoming advances. He sees the proposed exascale systems (beyond existing petascale systems) as significantly different, becoming more hierarchical and heterogeneous, with increasing on-chip parallelism used to improve performance. He sees a significant challenge in dealing with the complexity of these systems and in developing new programming models. He said the solution lies in a programming model that abstracts some of the architectural details away from software developers. In a recent survey of potential users, there was a strong preference for evolutionary developments using current languages and tools such as MPI (Message Passing Interface).

After Messer’s presentation, there was a lengthy discussion period in which Messer answered questions from the audience and the panel. He said that NASA does not currently invest a significant amount in advancing the state of the art of supercomputing. He discussed the challenges of dealing with the massive amount of data generated and the best way to transfer the results back to users. Finally, there was some discussion of the need for NASA to address the new computing paradigms of multi-core systems in its flight computers.

Panel Discussion 3: Intelligent Data Understanding, Autonomous S/C Operations, and On-Board Computing

The next session focused on on-board spacecraft processing and improving autonomy of operations and data processing. The session was moderated by David Kusnierkiewicz.

George Cancro (JHU/APL) gave the first presentation, in which he offered several criticisms of the draft roadmap. His main suggestion was that the roadmap should be more holistic, showing the linkages among modeling, simulation, autonomy, and operational software advancements, since these are all interrelated. He also saw a lack of specific benefits and purposes for technology concepts across the entire roadmap, and he believes that the fundamental question “Why is this needed for NASA?” is not adequately addressed throughout. As an example, he said the entire section on on-board computing focuses on multi-core processors, but there does not appear to be anything identifying which missions require multi-core processors. He identified the following gaps in the roadmap: no discussion of on-board computing for large data flows; no discussion of virtual observatories, clearinghouses, search engines, and other tools for NASA science data that are necessary to perform multi-mission data analysis or to anchor models; and no discussion of frameworks or processes to enable modeling and simulation. He noted the significant potential of autonomous and adaptive systems and said their single biggest challenge is testing. He referenced the new Air Force roadmap “Technology Horizons,” which identified “trusted autonomy” as a top issue; he believes this issue can only be solved through advances in the testing of autonomous systems.

Noah Clemons (Intel) gave the second presentation. He felt that the roadmap is too general and instead needs to address four domains: efficient performance; essential performance (application coding with today’s multi-core processors); advanced performance (more advanced, cross-platform); and distributed performance (high-performance MPI clusters). He noted that many existing applications were never intended to run on parallel processors and that converting them, either by changing serial codes or by writing new codes, is a significant challenge. He emphasized the need to target four features: portability, reliability, scalability, and simplicity. He warned against focusing too heavily on any one computational architecture. He expects to have to recode for a new architecture or paradigm every few years. He sees things currently heading toward a single heterogeneous processor (CPU and GPU embedded together), but in the future that whole model will likely disappear. He believes that one game-changing parallel programming technology is programming in tasks rather than managing individual threads. With this approach, programs are structured to exploit highly parallel hardware by focusing on scaling (with cores) and vectorization while coding at a high level. Several parallel programming solutions that embrace “tasks rather than threads” are built into the compiler; others are built into libraries. He thinks that structured parallel patterns, which can be used as building blocks at little or no cost (i.e., you don’t have to write everything from scratch), are near a tipping point. Many of these tools already have some portability built in. He also feels that a small investment should be made in adapting analysis tools, some of which are already available to help parallelize, optimize, and tune code.
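The “tasks rather than threads” idea can be illustrated with Python’s standard `concurrent.futures` module. The programmer decomposes the work into independent tasks and hands them to an executor, and the executor maps them onto worker threads. This sketch only illustrates the programming model. It is not one of the compiler- or library-based solutions referred to above. For CPU-bound pure-Python code, a `ProcessPoolExecutor` would be needed for real parallel speedup because of the global interpreter lock. The `simulate_cell` workload is a hypothetical placeholder.

```python
import math
from concurrent.futures import ThreadPoolExecutor


def simulate_cell(cell_id: int, steps: int = 1_000) -> float:
    """Hypothetical independent unit of work, e.g., one grid cell of a larger simulation."""
    value = float(cell_id)
    for _ in range(steps):
        value = math.sin(value) + 1.0
    return value


def run_serial(n_cells: int) -> list:
    """Baseline: one loop, one thread."""
    return [simulate_cell(c) for c in range(n_cells)]


def run_as_tasks(n_cells: int, max_workers: int = 4) -> list:
    """Express the work as tasks; the executor, not the programmer, manages the threads."""
    with ThreadPoolExecutor(max_workers=max_workers) as pool:
        # map() returns results in input order, so they line up with cell ids
        return list(pool.map(simulate_cell, range(n_cells)))


if __name__ == "__main__":
    serial = run_serial(64)
    tasked = run_as_tasks(64)
    print("identical results:", serial == tasked)
```

The task version contains no thread creation, joins, or locks. The programmer chooses only the task granularity and leaves scheduling to the runtime, which is the property that makes the approach attractive as core counts change.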

Panel Discussion 4: Data Mining, Data Management, Distributed Processing

The final session of the day focused on using and managing data and was moderated by Robert Hanisch.

Peter Fox (Rensselaer Polytechnic Institute) gave the first presentation, in which he expressed his views on how the roadmap covers data management and processing. He believes there are gaps in the roadmap, including data integration (or “integrate-ability”) and the handling of data fitness (quality, uncertainty, and bias). He suggests a modest up-front investment in how to integrate many data sets from different spacecraft, as opposed to a much more difficult integration process after the fact. He expressed a need for collaborative development among data specialists (who are rarely involved up front), algorithm developers, instrument designers, and others. An audience member asked whether this is a technology issue or a management issue. Fox responded that it is both and gave the example of a system called Giovanni, which greatly improves the productivity of scientists. Regarding data fitness, drawing on his experience with Earth science, he seemed most concerned with bias. He noted that bias can be systematic error resulting from distortion of measurement data, or it can be bias on the part of the people using, processing, or understanding the data.

Finally, he felt that the roadmap does not correctly capture the status and future of semantic technologies, which are already in widespread production use at NASA and whose further advancement may revolutionize how science is done.

Arcot Rajasekar (University of North Carolina at Chapel Hill) gave the second presentation, which focused on integrating data life cycles with mission life cycles. He discussed the challenges of providing end-to-end capability for exascale data orchestration. He noted that NASA has massive amounts of mission data and needs to share all of it over long timeframes without loss. He suggested an integrated data and metadata system so that the data remain useful to future users, but noted that the roadmap currently contains no coherent technology to meet these needs. He believes that the current roadmap showcases the need for data-intensive capability at various levels but provides limited guidance on how to pull and push this technology. He said the information processing roadmap is very impressive but needs a corresponding “evolutionary” data orchestration roadmap. In his view, game-changing challenges for NASA include policy-oriented data life-cycle management (manage the policies, and let the policy engine manage the bytes and files); agnostic data visualization technologies; service-oriented data operations; and distributed cloud storage and computing. However, he thinks the greatest challenge for NASA is a comprehensive data management system (as opposed to a stove-piped approach for each mission), and noted that the technology already exists; it simply needs to be implemented. Moving toward an exascale data system that is data-oriented, policy-oriented, and outcome-oriented (i.e., a system that captures behavior in terms of data outcomes) would be a paradigm shift.
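The phrase “manage the policies, and let the policy engine manage the bytes and files” can be made concrete with a small sketch. The following is a hypothetical illustration, not a description of any system discussed at the workshop: policies are declared as named rules (a condition plus an action), and a generic engine applies them to every data object. The class names, the replication and archiving rules, the 30-day threshold, and the file names are all assumptions chosen for illustration.

```python
from dataclasses import dataclass, field
from typing import Callable, List


@dataclass
class DataObject:
    # Hypothetical record describing one stored file and its state.
    name: str
    age_days: int
    tier: str = "online"
    replicas: int = 1
    log: List[str] = field(default_factory=list)


@dataclass
class Policy:
    # A named rule: when `condition` holds for an object, apply `action`.
    name: str
    condition: Callable[[DataObject], bool]
    action: Callable[[DataObject], None]


def apply_policies(objects, policies):
    """Generic policy engine: operators edit only the policy list;
    the engine decides what happens to each file."""
    for obj in objects:
        for policy in policies:
            if policy.condition(obj):
                policy.action(obj)
                obj.log.append(policy.name)  # audit trail of applied policies
    return objects


def ensure_two_replicas(obj):
    obj.replicas = max(obj.replicas, 2)


def move_to_archive(obj):
    obj.tier = "archive"


# Hypothetical policies; thresholds are illustrative only.
POLICIES = [
    Policy("replicate", lambda o: o.replicas < 2, ensure_two_replicas),
    Policy("archive-after-30-days",
           lambda o: o.age_days > 30 and o.tier == "online", move_to_archive),
]

objs = apply_policies(
    [DataObject("img_001.fits", age_days=45),
     DataObject("img_002.fits", age_days=3, replicas=3)],
    POLICIES,
)
for o in objs:
    print(o.name, o.tier, o.replicas, o.log)
```

The design choice illustrated here is the separation of concerns: changing how data are retained or replicated means editing the policy list, not the code that touches individual files, which is what makes such an approach attractive across many missions rather than one stove-piped system per mission.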

Neal Hurlburt (Lockheed Martin) gave the third and final presentation, on data systems. He started with a summary of a recent study that found a need for community oversight of emerging, integrated data systems. He believes that the top challenges for NASA include the insufficient interoperability of current data services; the fact that the cost of future data systems will be dominated by software development rather than by computing and storage; uncoordinated development and an unpredictable support life cycle for infrastructure and data analysis tools; and the need for a more coordinated approach to data systems software. However, he thinks that NASA can exploit emerging technologies for most of its needs in this area without investing in development. He believes that NASA’s role should be to develop infrastructure for virtual observatories, establish reference architectures and standards, encourage the use of semantic technologies to integrate with the astronomy and geophysics communities, and provide support for integrated data analysis tools. He sees the widespread use of consistent metadata and semantic annotation as near a tipping point.

Public Comment Session and General Discussion

At the end of the workshop, time was set aside for general discussion and for comments from the audience. This session was moderated by Carl Wunsch (MIT).

Discussion began with NASA’s role in developing information technology and processing technology. It was noted that many of these topics are not unique to NASA and that significant efforts are under way elsewhere, for instance in industry. Some participants expressed the view that NASA is more of a beneficiary than a key player in this technology development.

It was noted that a key difference between NASA and commercial endeavors is NASA’s focus on minimizing risk, particularly with regard to flight systems. There was some agreement that much of what is commercially available is not compact enough, reliable enough, or low-power enough to fly in space. Radiation-hardened computing and CCDs were given as examples: industry is far ahead technologically, but its products cannot be used in space. It was noted that space technology lags so far behind in these areas that the older technology it depends on can no longer be purchased in the marketplace. It was suggested that NASA team with DOD, which has deeper pockets and similar objectives. Another participant warned that if NASA declines to develop a technology on the assumption that commercial interests will do so, when industry will develop it only if there is an economic payoff, NASA and the science community risk being at the mercy of the market.

The example of radiation-hardened electronics led to further detailed discussion. It was noted that much computing is now being done with radiation-hardened FPGAs and ASICs, which are readily available. It was mentioned that FPGAs are harder to validate and that every ASIC manufacturer has its own set of simulators, compilers, and other tools. It was suggested that fault tolerance could be approached in a different way. One proposed technology challenge was to develop radiation-hardened designs, using current integrated-circuit technology, that do not require specialized facilities to produce.

Part of the workshop discussion focused on data systems (especially science data). The presenters treated engineering data and science data interchangeably, but NASA handles these two domains very differently. Every mission has its own Context-Driven Content Management system for configuration management, which is part of product data life-cycle management. It was suggested that there should be an effort to advance the state of the art and share that technology across missions for systems engineering and intelligent data understanding. It was said that the solution does not have to be homogeneous, as missions really are different. Finally, it was noted that technology for smaller missions (e.g., common buses) has not been addressed. There was agreement that there will be no large missions in the coming years, and that smaller missions are becoming more and more expensive.