In the last two decades, two separate revolutions have brought digital media out of the pre-Internet age. Both revolutions were deeply grounded in the mathematical sciences. One of them is now mature, and you benefit whenever you go to a movie with computer-generated animation. The other revolution has just begun but is already redefining the limits of feasibility in some areas of biological imaging, communication, remote sensing, and other fields of science.

The first could be called the “wavelet revolution.” Wavelets are a mathematical method for isolating the most relevant pieces of information in an image or in a signal of any kind (acoustic, seismic, infrared, etc.). There are coarse wavelets for identifying general features and fine wavelets for identifying particular details. Prior to wavelets, information was represented in long, cumbersome strings of bits that did not distinguish importance.

The central idea of wavelets is that for most real-world images, we don’t need all the details (bytes) in order to learn something useful. In a 10-megapixel image of a face, for instance, the vast majority of the pixels do not give us any useful information. The human eye sees the general features that connote a face—a nose, two eyes, a mouth—and then focuses on the places that convey the most information, which tend to be edges of features. We don’t look at every hair in the eyebrow, but we do look at its overall shape. We don’t look at every pixel in the skin, because most of the pixels will be very much like their neighbors. We do focus on a patch of pixels that contrast with their neighbors—which might be a freckle or a birthmark or an edge.

Now much of this information can be represented much more compactly as the overlapping of a set of wavelets, each with a different coefficient to capture its weight or importance. In any typical picture, the weighting amplitude of most of the wavelets

will be near zero, reflecting the absence of features at that particular scale. If the model in the photograph doesn’t have a blemish on a particular part of her skin, you won’t need the wavelet that would capture such a blemish. Thus you can compress the image by ignoring all of the wavelets with small weighting coefficients and keeping only the others. Instead of storing 10 million pixels, you may only need to store 100,000 or a million coefficients. The picture reconstructed from those coefficients will be indistinguishable from the original to the human eye.

After all, how can we know which 1 percent of information is the most relevant until we have acquired it all?

Curiously, wavelets were discovered and rediscovered more than a dozen times in the 20th century—for example, by physicists trying to localize waves in time and frequency and by geologists trying to interpret Earth movements from seismograms. In 1984, it was discovered that all of these disparate, ad hoc techniques for decomposing a signal into its most informative pieces were really the same. This is typical of the role of the mathematical sciences in science and engineering: Because they are independent of a particular scientific context, the mathematical sciences can bridge disciplines.

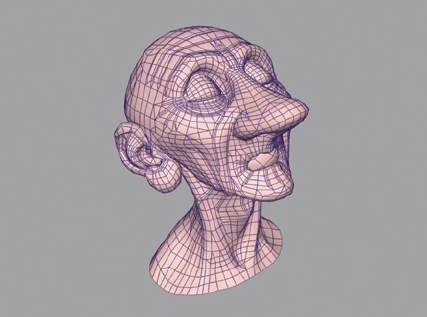

Once the mathematical foundation was laid, stronger versions of wavelets were developed and an explosion of applications occurred. Some computer images could be compressed more effectively. Fingerprints could be digitized. The process could also be reversed: Animated movie characters could be built up out of wavelets. A company called Pixar turned wavelets (plus some pretty good story ideas) into a whole series of blockbuster movies (see Figure 1).

In 2004, the central premise of the wavelet revolution was turned on its head with some simple questions: Why do we even bother acquiring 10 million pixels of information if, as is commonly the case, we are going to discard 90 percent or 99 percent of it with a compression algorithm? Why don’t we acquire only the most relevant 1 percent of the information to start with? This realization helped to start a second revolution, called compressed sensing.

Answering these questions might appear almost impossible. After all, how can we know which 1 percent of information is the most relevant until we have acquired it all? A key insight came from the interesting application of how to reconstruct a magnetic resonance image (MRI) from insufficient data. MRI scanners are too slow to allow them to capture dynamic images (videos) at a decent resolution, and they are not ideal for imaging patients such as children, who are unable to hold still and might not be good candidates for sedation. These challenges led to the discovery that MRI test images could, under certain conditions, be reconstructed perfectly—not approximately, but perfectly—from a too-short scan by a mathematical method called L1 (read as “ellone”) minimization. Essentially, random measurements of the image are taken, with each measurement being a randomly weighted average of many randomly selected pixels. Imagine replacing your camera lens with a kaleidoscope. If you do this again and again, a million times, you can get a better image than you can from a camera that takes a 10-megapixel photo through a perfect lens.

1 / Stills from an animated short film called “Geri’s Game,” released by Pixar Animation Studios in 1997, which received an Academy Award in 1998. It was the first animated film to use subdivision surfaces, a mathematical technique based on wavelet compression. Wavelets allow computers to compress an image into a smaller data file. Subdivision surfaces do the reverse: They allow the computer to create a small data file that can be manipulated and then uncompressed to create lifelike images of something that never existed—in this case, an old man playing chess in the park. The top image shows the subdivision surface. The image below shows an actual frame from the movie. © 1997 Pixar. /

The magic lies, of course, in the mathematical sciences.

The magic lies, of course, in the mathematical sciences. Even though there may be millions of scenes that would reproduce the million pictures you took with your kaleidoscopic camera, it is highly likely that there will be only one sparse scene that does. Therefore, if you know the scene you photographed is information-sparse (e.g., it contains a heart and a kidney and nothing else) and measurement noise is controlled, you can reconstruct it perfectly. L1 minimization happens to be a good technique for zeroing in on that one sparse solution. Compressed sensing actually built on, and helped make coherent, ideas that had been applied or developed in particular scientific contexts, such as geophysical imaging and theoretical computer science, and even in mathematics itself (e.g., geometric functional analysis). Lots of other reconstruction algorithms are possible, and a hot area for current research is to find the ones that work best when the scene is not quite so sparse.

As with wavelets, seeing is believing. Compressed sensing has the potential to cut down imaging time with an MRI from 2 minutes to 40 seconds. Other researchers have used compressed sensing in wireless sensor networks that monitor a patient’s heartbeat without tethering him or her to an electrocardiograph. The sensors strap to the patient’s limbs and transmit their measurements to a remote receiver. Because a heartbeat is information-sparse (it’s flat most of the time, with a few spikes whose size and timing are the most important information), it can be reconstructed perfectly from the sensors’ sporadic measurements.

Compressed sensing is already changing the way that scientists and engineers think about signal acquisition in areas ranging from analog-to-digital conversion to digital optics and seismology. For instance, the country’s intelligence services have struggled with the problem of eavesdropping on enemy transmissions that hop from one frequency to another. When the frequency range is large, no analog-to-digital converter is fast enough to scan the full range in a reasonable time. However, compressed sensing ideas demonstrate that such signals can be acquired quickly enough to allow such scanning, and this has led to new analog-to-digital converter architectures.

Ironically, the one place where you aren’t likely to find compressed sensing used, now or ever, is digital photography. The reason is that optical sensors are so cheap; they can be packed by the millions onto a computer chip. Even though this may be a waste of sensors, it costs essentially nothing. However, as soon as you start acquiring data at other wavelengths (such as radio or infrared) or in other forms (as in MRI scans), the savings in cost and time offered by compressed sensing take on much greater importance. Thus compressed sensing is likely to continue to be fertile ground for dialogue between mathematicians and all kinds of scientists and engineers.