This chapter addresses part 2 of the terms of reference for this study: “Review various analytical methods, processes and models for large scale, complex domains like ISR [intelligence, surveillance, and reconnaissance] and identify best practices.” The chapter also discusses government and industry capability planning and analysis (CP&A)-like processes and associated tools, with the aim of identifying attributes and best practices that might be applied to the Air Force ISR CP&A process. The following sections provide brief descriptions of the processes and tools used by several organizations to showcase salient attributes and illustrate best practices of each. Appendix C contains descriptions of additional organizational processes and tools that do not appear in this chapter.1 At the end of the chapter, Table 3-4 correlates the findings in this chapter with best practices.

EXAMPLES OF GOVERNMENT PROCESSES FOR PROVIDING CAPABILITY PLANNING AND ANALYSIS

The scope of Air Force responsibilities to provide global, integrated ISR capabilities across strategic, operational, and tactical missions is extraordinarily broad

_______

1 The descriptions of the individual organizations’ CP&A-like processes and tools vary considerably and are the result of the intent to provide an unclassified report. Much of the information provided to the committee during its data gathering was classified or otherwise not releasable to the public.

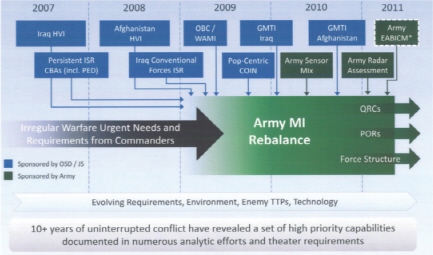

FIGURE 3-1 Analytic underpinnings of intelligence, surveillance, and reconnaissance (ISR) force sizing for the Army. NOTE: Acronyms are defined in the list in the front matter. SOURCE: LTG Richard Zahner, Deputy Chief of Staff, G-2, Headquarters, U.S. Army. “Military Intelligence Rebalance.” Presentation to the committee, November 9, 2011.

and complex. Although the organizational processes described below apply, for the most part, to arenas with smaller scope and less complexity, each process was reviewed with the goal of identifying best practices and tools that the Air Force might consider incorporating into its own CP&A process.

The U.S. Army developed a strategy to rebalance the Army Military Intelligence (MI) Force after a decade of intense ISR system development and deployment in support of operations in Iraq and Afghanistan.2 This protracted period at war resulted in many system deployments accomplished with great urgency as Quick Reaction Capabilities (QRC), depicted in Figure 3-1. The overarching strategy for Army Intelligence is to optimize core intelligence capabilities in support of Brigade Combat Teams (BCTs) and division and corps full-spectrum operations on a sustained Army Force Generation (ARFORGEN) cycle.3 Thus, the Army’s approach relies principally on its own organic ISR capability rather than on Air Force or na-

_______

2 U.S. Army. A Strategy to Rebalance the Army MI Force—Major Themes and Concepts. Available at http://www.dami.army.pentagon.mil/site/G-2%20Vision/nDocs.aspx. Accessed February 29, 2012.

3 LTG Richard Zahner, Deputy Chief of Staff, G-2, Headquarters, U.S. Army. “Military Intelligence Rebalance.” Presentation to the committee, November 9, 2011.

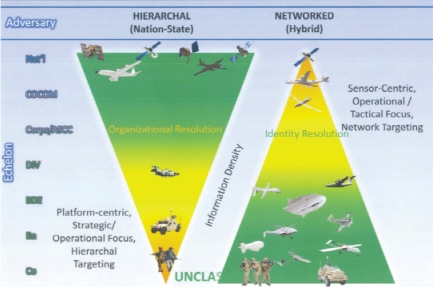

FIGURE 3-2 The Army’s intelligence, surveillance, and reconnaissance (ISR) requirements and information density generation in past and in present and future threat environments. SOURCE: LTG Richard Zahner, Deputy Chief of Staff, G-2, Headquarters, U.S. Army. “Military Intelligence Rebalance.” Presentation to the committee, November 9, 2011.

tional capabilities. Looking to the future, the MI rebalance is intended to determine which capabilities are enduring, using a Doctrine, Organization, Training, Materiel, Leadership and Education, Personnel, and Facilities (DOTMLPF) assessment.4

Figure 3-2 identifies the Army’s ISR requirements and information density generation for past threat environments compared with those for present and future threat environments. Cold War requirements were hierarchical and focused on the operational level, whereas contemporary requirements are networked, with a tactical focus. Additionally, for the most part, the Army has recently faced a benign air threat, as coalition forces enjoyed air superiority in Iraq and Afghanistan. This led the Army to focus ISR support more toward tactical units, which currently prosecute, and can be expected to continue to prosecute, much of the fight.

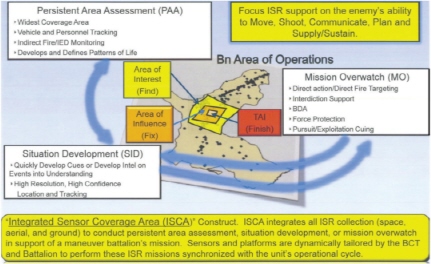

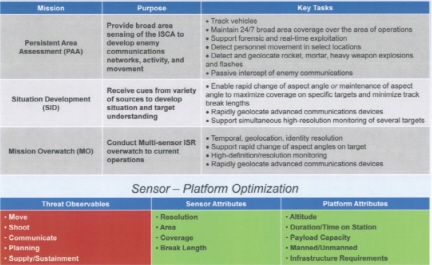

In support of these decentralized and networked operations, Army Intelligence devised the Integrated Sensor Coverage Area (ISCA) construct, featuring three distinct ISR mission sets, shown in Figure 3-3: (1) Persistent Area Assessment (PAA), (2) Mission Overwatch (MO), and (3) Situation Development (SID).

_______

4 Ibid.

FIGURE 3-3 The Army’s Integrated Sensor Coverage Area (ISCA) construct that defines three intelligence, surveillance, and reconnaissance (ISR) mission sets. SOURCE: LTG Richard Zahner, Deputy Chief of Staff, G-2, Headquarters, U.S. Army. “Military Intelligence Rebalance.” Presentation to the committee, November 9, 2011.

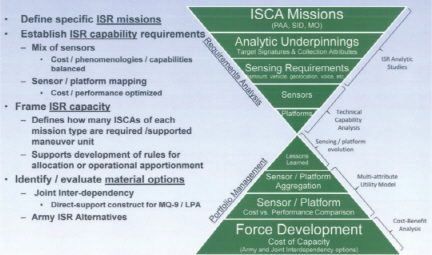

The ISCA construct integrates all ISR collection in support of a maneuver battalion’s mission, with sensors and platforms dynamically tailored by the BCT and battalion, synchronized by the unit’s operational cycle. The “hourglass chart” (Figure 3-4) depicts how the ISCA construct is incorporated into the ISR capability development process, which translates the strategy into requirements and the requirements into sensors and platforms, with sensor and platform attributes that are summarized in Figure 3-5.

What is distinctive about this process is that the Army investment strategy to deliver ISR capabilities begins with a threat-and-environment-based definition of ISR requirements. These are then deconstructed into mission requirements and subsequently into three ISR mission sets, with variable sensing requirements. These sensing requirements are then translated into sensors and platforms that allow force-development options to be evaluated in a holistic, needs-based manner that is quantifiable, repeatable, transparent, and easy to explain. The selection of appropriate sensor and platform attributes provides a set of relevant and consistent criteria for defining and assessing both sensor and system performance, which, in turn, informs acquisition decisions. Characteristics applicable to airborne collection assets are summarized in Box 3-1.
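Three features make the evaluation traceable and repeatable:

- threat-based requirements are decomposed into mission sets;
- mission sets are decomposed into sensing requirements;
- sensing requirements are screened against sensor-platform options.

The following minimal sketch illustrates the screening step. It assumes that each mission set can be reduced to two hypothetical attributes: a coarsest acceptable ground resolution and a required dwell time. The class names, attributes, and values are illustrative assumptions, not the Army's actual ISCA criteria.

```python
from dataclasses import dataclass

@dataclass(frozen=True)
class SensingRequirement:
    """Hypothetical sensing requirement derived from one ISCA mission set."""
    mission_set: str          # e.g., "PAA", "MO", or "SID"
    max_resolution_m: float   # coarsest acceptable ground resolution, metres
    min_dwell_h: float        # continuous coverage the mission set needs, hours

@dataclass(frozen=True)
class SensorPlatform:
    """Hypothetical sensor-platform pairing summarized by two attributes."""
    name: str
    resolution_m: float       # achievable ground resolution, metres (smaller is finer)
    endurance_h: float        # time the platform can remain on station, hours

def satisfies(req: SensingRequirement, option: SensorPlatform) -> bool:
    """An option qualifies only if it is fine enough AND stays long enough."""
    return (option.resolution_m <= req.max_resolution_m
            and option.endurance_h >= req.min_dwell_h)

def trace(requirements, options):
    """Map each mission set to the options that meet its sensing requirement.

    An empty list marks a capability gap, so the link from requirement
    to candidate solution stays explicit."""
    return {req.mission_set: [o.name for o in options if satisfies(req, o)]
            for req in requirements}

requirements = [
    SensingRequirement("PAA", max_resolution_m=1.0, min_dwell_h=20.0),
    SensingRequirement("MO", max_resolution_m=0.5, min_dwell_h=8.0),
    SensingRequirement("SID", max_resolution_m=0.3, min_dwell_h=2.0),
]
options = [
    SensorPlatform("UAS-A", resolution_m=0.8, endurance_h=24.0),
    SensorPlatform("UAS-B", resolution_m=0.4, endurance_h=10.0),
    SensorPlatform("UAS-C", resolution_m=0.2, endurance_h=3.0),
]
print(trace(requirements, options))
```

Every option is judged against the same attributes. Rerunning the screen after a requirement or an option changes therefore produces a directly comparable result. This is the repeatability property described above.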

FIGURE 3-4 The Army’s intelligence, surveillance, and reconnaissance (ISR) capability development process. SOURCE: LTG Richard Zahner, Deputy Chief of Staff, G-2, Headquarters, U.S. Army. “Military Intelligence Rebalance.” Presentation to the committee, November 9, 2011.

FIGURE 3-5 The Army’s Integrated Sensor Coverage Area (ISCA) functions and intelligence, surveillance, and reconnaissance (ISR) mission requirements. SOURCE: LTG Richard Zahner, Deputy Chief of Staff, G-2, Headquarters, U.S. Army. “Military Intelligence Rebalance.” Presentation to the committee, November 9, 2011.

BOX 3-1

Characteristics of the Aerial Layer Construct

1. Sensors must be optimized to support Integrated Sensor Coverage Area (ISCA)-related information collection (Intelligence, Surveillance, and Reconnaissance [ISR]) operations of Persistent Area Assessment (PAA), Situation Development (SID), and Mission Overwatch (MO).

2. The appropriate multiple-intelligence sensor array must be resident on dedicated ISR platforms to meet intelligence requirements associated with unified land operations (formerly, full-spectrum operations).

3. Intelligence sensors must meet the resolution requirements and must be assigned to platforms that possess the endurance to support the specific requirements of the ISCA concept: PAA, SID, and MO.

4. Intelligence captured by these sensors must be accessible by forward-deployed processing, exploitation, and dissemination (PED) (in the case of Intelligence 2020 concepts, in the PED Company of the Military Intelligence [MI] Pursuit and Exploitation Battalion, the PED detachment of the MI Brigade, and the proposed PED element located in the Aerial Exploitation Battalion co-located at the Corps Headquarters). In turn, these PED “platforms” are linked in the Intelligence Readiness Operations Capability (IROC) network, which will provide intelligence overwatch for deployed units as well as expand analytical and intelligence exploitation opportunities.
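Item 3 ties sensor assignment to platform endurance. A common back-of-the-envelope way to see why endurance matters is to count the aircraft needed to keep one orbit continuously manned. Time on station is the endurance minus the round-trip transit, and that time must add up to the full coverage period.

The sketch below assumes this simplified model. It ignores maintenance, weather, crew limits, and spares, and the function name and numbers are hypothetical.

```python
import math

def aircraft_per_orbit(endurance_h: float, transit_h: float,
                       coverage_h: float = 24.0) -> int:
    """Aircraft needed to sustain one continuous orbit (simplified model).

    endurance_h: total sortie length; transit_h: one-way transit time;
    coverage_h: hours per day the orbit must be manned.
    """
    on_station_h = endurance_h - 2 * transit_h   # subtract outbound and return legs
    if on_station_h <= 0:
        raise ValueError("platform cannot reach the orbit and return")
    return math.ceil(coverage_h / on_station_h)

print(aircraft_per_orbit(endurance_h=20, transit_h=2))   # 2  (16 h on station)
print(aircraft_per_orbit(endurance_h=12, transit_h=3))   # 4  (6 h on station)
```

The longer-endurance platform needs half as many airframes for the same orbit. This illustrates why endurance appears as an explicit assignment criterion.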

Finding 3-1. The U.S. Army’s ISCA construct uses a process that links requirements analysis with force development and portfolio management in a way that helps synchronize planning and execution. Keys to this linkage are the ISCA analytical underpinnings and the methodology that enables sensor-platform aggregations. Additionally, the ISCA construct uses measured performance to inform acquisition decisions in a manner that lends transparency, responsiveness, and repeatability.

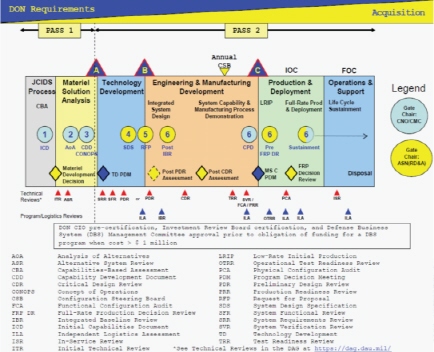

The overall U.S. Navy (USN) requirement-generation process is governed by Secretary of the Navy Instruction 5000.2E, which defines a capabilities-based approach to developing and delivering technically sound, sustainable, and affordable military capabilities.5 The process is implemented by means of the Naval Capabilities Development Process (NCDP), the Expeditionary Force Development System (EFDS), and the Joint Capabilities Integration and Development System (JCIDS) to identify and prioritize capability gaps and integrated DOTMLPF solutions. The Chief of Naval Operations (CNO) is the user representative for executing actions to identify, define, validate, assess the affordability of, and prioritize required mission capabilities through JCIDS, allocating resources to meet requirements through the Planning, Programming, Budgeting, and Execution System (PPBES). For ISR capabilities and requirements, Navy N2/6 coordinates with the Office of the CNO (N81) throughout the process.

_______

5 USN. 2011. Department of the Navy Implementation and Operation of the Defense Acquisition System and the Joint Capabilities Integration and Development System. September 1. Available at http://nawctsd.navair.navy.mil/Resources/Library/Acqguide/SNI5000.2E.pdf. Accessed April 13, 2012.

FIGURE 3-6 Department of the Navy requirements and acquisition, two-pass, six-gate process. SOURCE: Paul Siegrist, N2N6F2 ISR Capabilities Division. Personal communication to the committee, March 6, 2012.

The NCDP creates the Integrated Capabilities Plan, which translates strategic guidance and operational concepts to specific warfighting capabilities. The Navy uses two flag-level forums—the Naval Capabilities Board and the Resources and Requirements Review Board—to review and endorse all JCIDS proposals and documents. In translating requirements to operational capability, the Navy employs the two-pass, six-gate process depicted in Figure 3-6. This process ensures alignment between service-generated capability requirements and systems acquisition.

It supports Program Objective Memorandum development as well as urgent need and rapid development in streamlined, tailored implementations. A brief description of this process follows:

• Gate 1 reviews and grants authority for the Initial Capabilities Document submission to joint review, validates the proposed analysis of alternatives (AOA) study guidance, endorses the AOA study plan, and authorizes continuation to the Materiel Development Decision.

• Gate 2 reviews AOA assumptions and the total ownership cost estimate, approves the AOA preferred alternative, approves the creation of the Capabilities Development Document (CDD) and Concept of Operations (CONOPS), approves the initial Key Performance Parameters and Key System Attributes, reviews program health, and authorizes the program to proceed to Gate 3 prior to Milestone A.

• Gate 3 approves initial CDD and CONOPS; supports the development of the service cost position; reviews technology development and system engineering plans; provides full funding certification; validates requirements traceability; considers the use of new or modified command, control, communications, computers, and intelligence (C4I) systems; reviews program health; and grants approval to continue to Milestone A.

• Gate 4 approves a formal system development strategy and authorizes programs to proceed to Gate 5 or Milestone B.

• Gate 5 ensures readiness for Milestone Decision Authority (MDA) approval and release of the formal Engineering and Manufacturing Development (EMD) Request for Proposal to industry, provides full funding certification, and reviews program health and risk.

• Gate 6 follows the award of the EMD contract and satisfactory completion of the initial baseline review, assessing the overall health of the program. Reviews at Gate 6 also endorse or approve the Capabilities Production Document, assess program sufficiency and health prior to full-rate production, and evaluate sustainment throughout the program life cycle.

In summary, when the Navy applies this process to urgent needs, it streamlines and tailors requirements, assesses options more rapidly than in the normal process, and expedites technical, programmatic, and financial decisions as well as procurement and contracting.
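The gated structure lends itself to a simple status check. A program advances through the gates in order, and the first gate whose criteria are not all satisfied identifies what is still missing.

The sketch below encodes abbreviated, paraphrased criteria taken from the gate descriptions above. Note the following assumptions:

- The criterion strings and the `review` function are hypothetical. Actual gate reviews are deliberative flag-level boards, not checklists.
- A streamlined, tailored review for an urgent need is modeled here, purely by assumption, as a reduced gate list.

```python
# Abbreviated, paraphrased criteria for the Navy's six gates (illustrative only).
GATES = [
    ("Gate 1", {"ICD authorized for joint review", "AOA study plan endorsed"}),
    ("Gate 2", {"AOA preferred alternative approved", "initial KPPs and KSAs approved"}),
    ("Gate 3", {"initial CDD and CONOPS approved", "full funding certified"}),
    ("Gate 4", {"system development strategy approved"}),
    ("Gate 5", {"EMD RFP ready for MDA approval", "full funding certified"}),
    ("Gate 6", {"EMD contract awarded", "initial baseline review complete"}),
]

def review(completed: set, gates=GATES):
    """Walk the gates in order and stop at the first gate with unmet criteria.

    completed: set of criteria already satisfied.
    Returns (gates passed, blocking gate or None, sorted missing criteria)."""
    passed = []
    for name, criteria in gates:
        missing = criteria - completed   # set difference: what is still outstanding
        if missing:
            return passed, name, sorted(missing)
        passed.append(name)
    return passed, None, []

status = {
    "ICD authorized for joint review", "AOA study plan endorsed",
    "AOA preferred alternative approved", "initial KPPs and KSAs approved",
    "initial CDD and CONOPS approved",
}
print(review(status))   # (['Gate 1', 'Gate 2'], 'Gate 3', ['full funding certified'])
```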

Finding 3-2. The U.S. Navy’s capability-based process is collaborative across the Department of the Navy and is synchronized with the PPBES and system acquisition life cycles. The process can be streamlined to address urgent needs. The process deals largely with naval requirements; utilizes existing PCPAD (planning and direction, collection, processing and exploitation, analysis and production, and dissemination)/TCPED (tasking, collecting, processing, exploitation, and dissemination) architectures; and connects with other ISR enterprise providers through the Office of the Under Secretary of Defense for Intelligence (OUSD[I]).

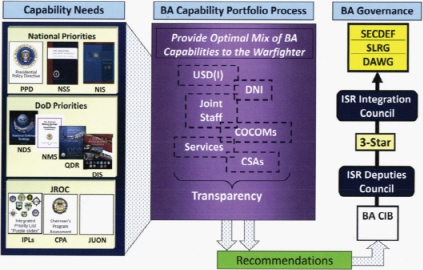

Office of the Under Secretary of Defense for Intelligence

The mission and vision of the OUSD(I) require an integrated approach to ISR across the Department of Defense (DoD): a global and horizontally integrated DoD intelligence capability consisting of highly qualified professionals and skilled leaders employing advanced technologies dedicated to supporting the needs of the warfighter and the Director of National Intelligence (DNI).6 The OUSD(I) for Portfolios, Programs and Resources (PP&R) oversees the development and execution of a balanced portfolio of military and national intelligence capabilities.7 Toward this end, the Battlespace Awareness (BA) portfolio builds the ISR investment strategy by balancing capabilities across TCPED, as shown in Figure 3-7.8

As shown, the OUSD(I) process leading from national-level strategy to budget decisions involves numerous organizations and staffs. Capability needs are derived from national-level defense and intelligence guidance and strategy. These needs are translated into an ISR investment strategy, with a portfolio of programs constructed and shaped to provide an optimal mix of capabilities for TCPED and analysis, given political, budgetary, and national security realities. Success depends on an understanding of top-level priorities, knowledge of ISR requirements and system capabilities, open communication (transparency), and effective collaboration among the participants.
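A simple bottleneck model shows why the portfolio must be balanced across TCPED rather than optimized stage by stage. If each stage can handle only so many items per day, end-to-end output is limited by the slowest stage. Added collection therefore yields nothing while exploitation is saturated.

The sketch below assumes a serial, minimum-capacity pipeline and uses hypothetical capacities. It illustrates the balancing idea and is not OUSD(I)'s analytic method.

```python
def end_to_end(capacities):
    """Items per day that make it through every TCPED stage (serial pipeline)."""
    return min(capacities.values())

def bottleneck(capacities):
    """Stage whose capacity limits end-to-end output."""
    return min(capacities, key=capacities.get)

def gain_from(capacities, stage, added):
    """Change in end-to-end output if one stage's capacity is increased."""
    upgraded = dict(capacities)
    upgraded[stage] += added
    return end_to_end(upgraded) - end_to_end(capacities)

# Hypothetical daily capacities (items per day) for each TCPED stage.
tcped = {"tasking": 500, "collection": 400, "processing": 250,
         "exploitation": 150, "dissemination": 300}
print(bottleneck(tcped), end_to_end(tcped))      # exploitation 150
print(gain_from(tcped, "collection", 100))       # 0
print(gain_from(tcped, "exploitation", 100))     # 100
```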

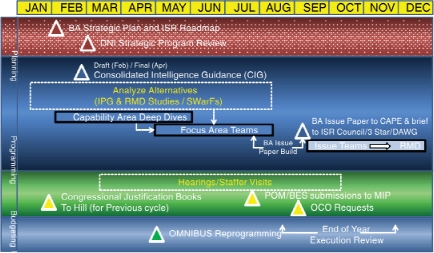

Within the OUSD(I), the Director, Battlespace Awareness and Portfolio Assessment (BAPA) has responsibility for assessing and recommending the optimal mix of BA capabilities to the warfighter. Figure 3-8 shows a number of key activities conducted by the BAPA staff to support portfolio development and their relationship to the PPBES process.

_______

6 DoD. 2005. “Under Secretary of Defense for Intelligence (USD(I)).” Directive 5143.01. Available at http://www.fas.org/irp/DoDdir/DoD/d5143_01.pdf. Accessed February 28, 2012.

7 DoD. 2010. “Fiscal Year 2011 Budget Estimates. Office of the Secretary of Defense (OSD).” Washington, D.C.: Department of Defense. Available at http://comptroller.defense.gov/defbudget/fy2011/budget_justification/pdfs/01_Operation_and_Maintenance/O_M_VOL_1_PARTS/OSD_FY11.pdf. Accessed February 28, 2012.

8 Col Anthony Lombardo, Deputy Director, ISR Programs, Agency Acquisition Oversight, Office of the Under Secretary of Defense (Intelligence). “OUSD(I) Overview.” Presentation to the committee, October 7, 2011.

FIGURE 3-7 Office of the Under Secretary of Defense for Intelligence (OUSD[I]) strategy to budget involves numerous organizations and staffs. NOTE: Acronyms are defined in the list in the front matter. SOURCE: Col Anthony Lombardo, Deputy Director, ISR Programs, Agency Acquisition Oversight, Office of the Under Secretary of Defense (Intelligence). “OUSD(I) Overview.” Presentation to the committee, October 7, 2011.

BAPA develops the ISR roadmap every 2 years, as directed by Congress. The roadmap provides information on the current ISR portfolio, its ability to meet national and defense intelligence strategies, and how the portfolio will change to remain relevant and maximize capability. Individual systems are addressed, but the overarching goal is to evaluate the portfolio and an integrated architecture. The Consolidated Intelligence Guidance gives both the DNI and OUSD(I) programmatic and budgetary guidance for programs and budgets that fall under the National Intelligence Program (NIP) and Military Intelligence Program (MIP), and it provides strategic priorities, program guidance, and areas in which to assume risk. It also directs studies when necessary to help resolve programmatic questions and uncertainties. A significant amount of analysis underpins the portfolio assessment process. The analysis comes in various forms, from major studies with cross-community participation, to Capability Area Deep Dives (CADDs), which are relatively short, intense assessments of specific issues led by the BAPA staff. Assessment efforts feed focus area teams, which are organized by domain (i.e., sea, air, and space) and help frame BA portfolio issues that need resolution.

FIGURE 3-8 Office of the Under Secretary of Defense for Intelligence (OUSD[I]) and the Planning, Programming, Budgeting, and Execution System (PPBES) process. NOTE: Acronyms are defined in the list in the front matter. SOURCE: Col Anthony Lombardo, Deputy Director, ISR Programs, Agency Acquisition Oversight, Office of the Under Secretary of Defense (Intelligence). “OUSD(I) Overview.” Presentation to the committee, October 7, 2011.

The OUSD(I) for PP&R recognizes that current processes for prioritizing needs and analyzing risk should be improved in order to address acknowledged shortfalls, which include the following: (1) little consideration of trade-offs among cost, schedule, and performance, (2) no prioritization across portfolios and little to no risk analysis, (3) the overly bureaucratic and time-consuming nature of the processes, and (4) the impact on shaping the force. The OUSD(I) for PP&R also seeks a more dynamic and iterative process throughout a program’s life cycle, one that will revisit validated requirements when necessary and adjust to strategy shifts and changes in the threat, considerations that are very timely.9 Additionally, the OUSD(I) for PP&R has recommended changes to the Joint Requirements Oversight Council (JROC) and Functional Capabilities Boards (FCBs).10 Although OUSD(I) does not have a “standard” modeling and simulation (M&S) tool kit per se, it leverages tools and Systems Engineering and Technical Assistance (SETA) developed by Federally Funded Research and Development Centers, contractors, the services, and the Office of the Secretary of Defense (OSD) on a case-by-case basis to address specific questions (see Box 3-2). For example, the Satellite Tool Kit (STK)® and the Satellite Orbit Analysis Program (SOAP) have been used primarily to help leadership and decision makers visualize overhead ISR systems and evaluate persistence and coverage issues. The remaining tools have been used most often to assess communications sufficiency under different scenarios and in the face of expanding collection platforms, especially airborne ISR.

_______

9 Ibid.

10 Ibid.

BOX 3-2

Intelligence, Surveillance, and Reconnaissance (ISR) Tools Used by the Office of the Under Secretary of Defense (Intelligence) (OUSD[I])

Satellite Tool Kit (STK)®. A three-dimensional visualization tool that can display orbit geometries of space systems and realistic views of airborne and terrestrial assets as well. Used primarily to facilitate the understanding of ISR satellite capabilities and limitations, notably persistence and area coverage.

Satellite Orbit Analysis Program (SOAP). An interactive, three-dimensional orbit visualization and analysis program that can generate an unlimited number of world, XY plot, and textual views. Used primarily to show persistence and coverage of overhead systems and to assist with specific engineering assessments of overhead systems. (Note: Used by the National Reconnaissance Office [NRO] on its NRO Management Information System [NMIS] terminals.)

DyCAST (Aerospace). A relay satellite communications scheduling and analysis tool that helps resolve contention and perform optimal communications resource allocation. It was used to support the Airborne Intelligence, Surveillance, and Reconnaissance (AISR) Analysis of Alternatives and several communications studies sponsored by the Department of Defense and the intelligence community.

Communications Architecture Systems Assessor (CASA). Developed by Aerospace Corporation to compare the performance of alternative communications architectures under different operational (dynamic) scenarios. Has become the “tool of choice” of OUSD(I) for evaluating communications sufficiency and has also been used by NASA and other intelligence community entities to investigate communications issues.

Joint Force Operational Readiness Combat Effectiveness Simulator (JFORCES). A government-owned simulation tool capable of producing operationally credible data on the interactive behavior of sensors, command and control, weapons and communications systems for both friendly and opposing forces. JFORCES has supported numerous engineering studies as well as command, control, communications, computers, intelligence, surveillance and reconnaissance (C4ISR) architecture assessments.

Boeing Engineering Analysis Support Tool (BEAST). A high-fidelity aerospace system simulator for subsystem, system, and system-of-systems analysis.

Architecture Evaluation Tool (AET). Developed by the National Security Space Office (NSSO) (now Executive Agent for Space [EA4S] staff); displays aggregated capabilities and metrics, such as measures of effectiveness, for various architectures as a function of cost. It allows the decision maker to interactively adjust the weighting of various metrics and gauge the sensitivity of selected architecture capabilities to cost.
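STK and SOAP are used to visualize persistence and coverage. Once a tool has produced the time intervals during which some asset can observe a target, both reduce to simple measures.

The sketch below assumes such access intervals are already available. The interval values are hypothetical, and nothing here uses an STK or SOAP interface. The code merges overlapping intervals from multiple assets and reports two results: the fraction of the window covered (persistence) and the longest uncovered gap.

```python
def merge(intervals):
    """Merge overlapping (start, end) access intervals from any number of assets."""
    merged = []
    for start, end in sorted(intervals):
        if merged and start <= merged[-1][1]:
            merged[-1][1] = max(merged[-1][1], end)   # extend the current run
        else:
            merged.append([start, end])               # start a new run
    return merged

def coverage_stats(intervals, window_h):
    """Return (persistence fraction, longest gap in hours) over [0, window_h]."""
    clipped = [(max(s, 0.0), min(e, window_h)) for s, e in intervals]
    runs = merge([(s, e) for s, e in clipped if e > s])
    covered = sum(e - s for s, e in runs)
    gaps, cursor = [], 0.0
    for s, e in runs:
        gaps.append(s - cursor)        # uncovered time before this run
        cursor = e
    gaps.append(window_h - cursor)     # uncovered time after the last run
    return covered / window_h, max(gaps)

access = [(0, 4), (3, 8), (12, 14), (20, 26)]   # hours; passes from two assets
print(coverage_stats(access, window_h=24))
```

Here the target is covered for 14 of 24 hours, and the longest gap is 6 hours. Two architectures with the same total coverage can differ sharply in their worst-case gap.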

In many respects, the analysis approach most commonly used by OUSD(I), and the one most often observed in CADDs, is a straightforward empirical analysis of operational data. This approach has dominated in recent years because leadership attention has focused mostly on ISR performance in Iraq and Afghanistan, for which empirical data can be obtained. As the fight draws down and the focus shifts to longer-range architectural issues, more M&S tools will likely be used.

Finding 3-3. The CP&A-like process employed by OUSD(I) addresses ISR enterprise concerns across the DoD and the IC and includes consideration of the capabilities of enterprise networks and PCPAD and TCPED. The OUSD(I) recognizes the need to improve the capability development process in the following ways: (1) by attaining better up-front fidelity on trade-offs involving cost, schedule, and performance, (2) by providing more analytic rigor and risk/portfolio analysis, (3) by placing stronger emphasis on prioritizing requirements and capabilities, and (4) by strengthening alignment of the acquisition process.
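Better up-front fidelity on cost, schedule, and performance trade-offs often starts by setting aside dominated alternatives. An alternative is dominated when some other alternative is at least as good on every criterion and strictly better on at least one.

The sketch below shows this Pareto screen under stated assumptions: lower cost, shorter schedule, and higher performance are better, and the alternatives are hypothetical. It is one standard trade-off technique, not a method attributed to OUSD(I).

```python
from typing import NamedTuple

class Alternative(NamedTuple):
    name: str
    cost: float          # e.g., $M; lower is better
    schedule: float      # months to capability; lower is better
    performance: float   # mission-utility score; higher is better

def dominates(a: Alternative, b: Alternative) -> bool:
    """True if a is at least as good as b on every criterion and better on one."""
    no_worse = (a.cost <= b.cost and a.schedule <= b.schedule
                and a.performance >= b.performance)
    better = (a.cost < b.cost or a.schedule < b.schedule
              or a.performance > b.performance)
    return no_worse and better

def pareto_front(alternatives):
    """Keep only alternatives that no other alternative dominates."""
    return [a for a in alternatives
            if not any(dominates(b, a) for b in alternatives)]

candidates = [
    Alternative("A", cost=100, schedule=24, performance=0.70),
    Alternative("B", cost=120, schedule=18, performance=0.70),
    Alternative("C", cost=130, schedule=30, performance=0.60),
    Alternative("D", cost=150, schedule=36, performance=0.90),
]
print([a.name for a in pareto_front(candidates)])   # ['A', 'B', 'D']
```

Alternative C drops out because A is cheaper, faster, and better performing. Choosing among A, B, and D remains a judgment about how much schedule or performance is worth in cost, which is where prioritization and risk analysis come in.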

EXAMPLES OF INDUSTRY PROCESSES FOR PROVIDING CAPABILITY PLANNING AND ANALYSIS

In addition to pertinent government approaches to CP&A, select industry CP&A-like processes and their associated tools were reviewed for their potential applicability to the Air Force CP&A process. Although many of the processes presented use a similar high-level approach that involves a requirements and needs analysis, a capabilities gap analysis, and a solutions analysis, the levels of detail, complexity, and development and employment of tools vary considerably among industry processes. Ultimately, the output of the efforts is generally a report and/or a brief that presents alternatives in terms of priorities, cost, mission utility, and risk.

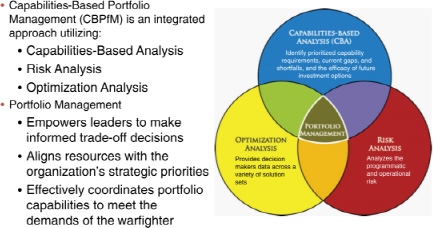

Booz Allen Hamilton (BAH) provides SETA services to the U.S. government, as well as consulting services to the ISR community.11 BAH advocates Capabilities-

_______

11 Information on BAH’s systems engineering and integration efforts is available at http://www.boozallen.com/consulting/engineer-operations/systems-engineering-integration. Accessed February 28, 2012.

FIGURE 3-9 Booz Allen Hamilton’s Capabilities-Based Portfolio Management (CBPfM) process. SOURCE: Scott Gooch, Principal, and Christopher Anderson, Lead Associate, Booz Allen Hamilton. “Capabilities-Based Portfolio Management: Methods, Processes, and Tools.” Presentation to the committee, January 5, 2012.

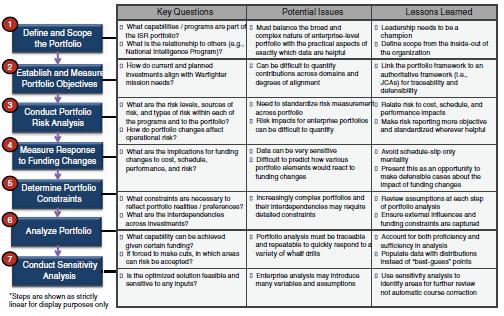

Based Portfolio Management (CBPfM) that combines capabilities-based analysis, risk analysis, and optimization analysis to inform decision makers conducting portfolio management.12 BAH capabilities-based analysis tools can be applied across different parts of the trade-off study process, depending on how both the process and the tool are customized for a particular portfolio or government customer. The benefits ascribed to this approach, as shown in Figure 3-9, are as follows: CBPfM “empowers leaders to make informed trade-off decisions, aligns resources with the organization’s strategic priorities, and effectively coordinates portfolio capabilities to meet the demands of the warfighter.”13 The CBPfM process, presented in Figure 3-10, follows the basic industry flow of needs analysis, gap analysis, and solution analysis, and includes cost, schedule, performance, and risk analyses.

_______

12 Scott Gooch, Principal, and Christopher Anderson, Lead Associate, Booz Allen Hamilton. “Capabilities-Based Portfolio Management: Methods, Processes, and Tools.” Presentation to the committee, January 5, 2012.

13 Ibid.

FIGURE 3-10 The Capabilities-Based Portfolio Management (CBPfM) process: steps and initial discussion points. SOURCE: Scott Gooch, Principal, and Christopher Anderson, Lead Associate, Booz Allen Hamilton. “Capabilities-Based Portfolio Management: Methods, Processes, and Tools.” Presentation to the committee, January 5, 2012.

Within the CBPfM process, BAH utilizes a number of commercial off-the-shelf (COTS) software systems that ingest different types of data (budget, capability, risk) and optimize and prioritize alternatives across user-defined objectives and constraints. In addition, a number of the tools employed by BAH facilitate the real-time visualization of portfolio performance over time and support “what-if” drills with schedule slips (see Table 3-1).14
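Optimizing alternatives under user-defined constraints can take many forms. A minimal instance is choosing which programs to fund so as to maximize a capability score within a budget, which is the classic 0/1 knapsack problem.

The sketch below solves it by dynamic programming. It assumes integer costs, additive capability scores, and hypothetical program data. It does not describe how AIMMS, Decision Lens, or any BAH tool formulates the problem.

```python
def select_portfolio(programs, budget):
    """Choose programs maximizing total capability score within a budget.

    programs: list of (name, cost, score) with non-negative integer costs.
    Solves the 0/1 knapsack problem by dynamic programming over budget levels."""
    # best[b] = (highest score, chosen names) with total cost <= b
    best = [(0.0, ())] * (budget + 1)
    for name, cost, score in programs:
        # Iterate budgets downward so each program is funded at most once.
        for b in range(budget, cost - 1, -1):
            candidate = best[b - cost][0] + score
            if candidate > best[b][0]:
                best[b] = (candidate, best[b - cost][1] + (name,))
    return best[budget]

programs = [  # hypothetical (name, cost in $M, capability score)
    ("Sensor upgrade", 40, 30.0),
    ("New platform", 70, 50.0),
    ("PED expansion", 30, 35.0),
    ("Comms relay", 50, 25.0),
]
print(select_portfolio(programs, budget=100))
# (85.0, ('New platform', 'PED expansion'))
```

Additive scores ignore interactions between programs. For example, a sensor's output may not be exploitable without added PED capacity. Capturing such dependencies would require additional constraints beyond this simple formulation.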

Finding 3-4. Booz Allen Hamilton’s Capabilities-Based Portfolio Management process requires leadership engagement, diverse skill sets to analyze a portfolio, and stakeholder participation and transparency. The resultant assessments are repeatable and rigorous enough to enable long-term planning, yet agile enough to incorporate new scenarios, priorities, and missions. The process includes modeling of extant TCPED and communications architectures, which yields more realistic estimates of cost, performance, and risk. Although many results are scalable, any consideration of broader, more complex enterprises requires good analytical judgment to develop the right approach.
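BAH's tools support "what-if" drills with schedule slips. Such a drill can be illustrated with a capability profile over time. Each program contributes its capability from its initial operational capability (IOC) year onward, and a slip shifts that year.

The program names, years, and additive scores below are hypothetical, and the function is an illustrative assumption rather than any BAH tool's logic.

```python
def capability_by_year(programs, years, slips=None):
    """Total fielded capability in each year, with optional schedule slips.

    programs: list of (name, ioc_year, capability_score); scores assumed additive.
    slips: optional {name: years_of_delay} for a what-if drill."""
    slips = slips or {}
    return {year: sum(score for name, ioc, score in programs
                      if ioc + slips.get(name, 0) <= year)
            for year in years}

programs = [  # hypothetical programs
    ("Platform A", 2014, 10.0),
    ("Sensor B", 2015, 5.0),
    ("PED C", 2016, 8.0),
]
years = range(2014, 2019)
baseline = capability_by_year(programs, years)
slipped = capability_by_year(programs, years, slips={"Sensor B": 2})
shortfall = {y: baseline[y] - slipped[y] for y in years if baseline[y] > slipped[y]}
print(shortfall)   # {2015: 5.0, 2016: 5.0}
```

The drill shows that a 2-year slip in one program opens a temporary capability gap and indicates exactly which years are affected.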

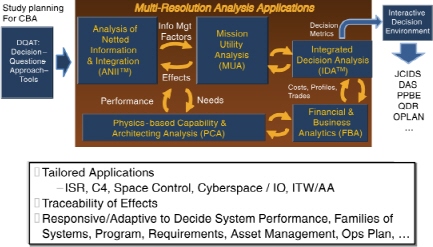

TASC provides systems engineering and integration solutions to the DoD, the intelligence community, and civil agencies. TASC illustrated its Capability-Based Assessment (CBA) process through Multi-Resolution Analysis (MRA) applying

_______

14 Ibid.

TABLE 3-1 Sample of Tools Employed by Booz Allen Hamilton for Facilitating Real-Time Visualization of Portfolio Performance Over Time and Supporting “What-If” Drills with Schedule Slips

| Tool Name | Category | Tool Product | Benefits |

| Simio® Simio Discrete-Event Simulation Software (Commercial off-the-shelf) | Mission Utility | Simulation | Higher-fidelity performance analysis. |

| Satellite Tool Kit (STK)® (Commercial off-the-shelf) | Physics-Based Capability | Modeling and Analysis Software | Higher-fidelity performance analysis. |

| EADSIM Extended Air Defense Simulation (Government owned) | Simulation | Higher-fidelity performance analysis. | |

| ISR FOCUS (Booz Allen Hamilton) | Integrated Decision Aides | Simulation: Tool product uses commercially available simulation such as Satellite Tool Kit® and Simio®. The product itself is currently an Excel-based dashboard built without Visual Basic for Applications (VBA). It can be deployed rapidly to any network. | The repeatable FOCUS assessment process enables managers and leaders to “see.” |

| Advanced Interactive Multidimensional Modeling Simulation (AIMMS)®(Commercial off-the-shelf) | Optimization | Repeatable, traceable optimization and intuitive dashboards. | |

| Expert Choice® Decision Support Software(Commercial off-the-shelf) | Decision Aides | Clearly solicits priorities. | |

| Resource Allocation Model (RAM)(Booz Allen Hamilton) | Portfolio Management | Aligns programs and capabilities to strategy and guidance. Establishes standardized metrics to compare programs. Streamlines inputs, processes, and outputs. Tailorable to one’s organization. | |

| Dynamic Capability Assessment Model (DCAM)(Booz Allen Hamilton) | Portfolio Management | Provides a unique dashboard visualization of cost, schedule, and performance in an interactive and dynamic environment. | |

| Decision Lens®(Commercial off-the-shelf) | Financial and Business Analysis | Financial and Business Analysis | Embedded prioritization and solver tools. |

NOTE: For a more complete list of tools used by both government and industry, see Appendix C in this report.

SOURCE: Booz Allen Hamilton. 2012. Written communication. Response to inquiry from the committee.

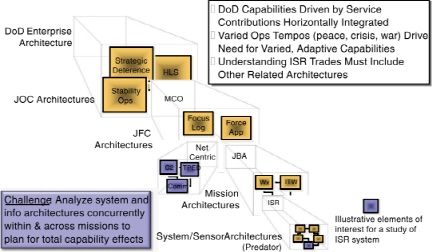

FIGURE 3-11 TASC’s solution to analyzing complex domains begins with a layered, iterative approach, segregating and describing architectures within and across missions. NOTE: Acronyms are defined in the list in the front matter. SOURCE: Doug Owens, Manager, Enterprise Analysis, Defense Business Unit, TASC. “An Enterprise Approach to Capability-Based Analysis: Best Practices, Tools, and Results.” Presentation to the committee, January 5, 2012.

various COTS/government off-the-shelf (GOTS) and TASC tools to the analysis of large-scale, complex domains.15 The TASC solution to analyzing complex domains begins with a layered, iterative approach, segregating and describing architectures within and across missions, as shown in Figure 3-11. In this way, TASC described a quantifiable analysis across complex domains, informed by affordability, and with traceability from requirements to decision outcomes.

The basic premise of the TASC approach is that complex domains of capability can be analyzed from different perspectives with tailored models and tools appropriate for each perspective, and the various segments of the analysis are integrated to provide traceability of cause and effect for the combined total impact, shown in Figure 3-12.16 For the ISR mission area, those perspectives include the following: sensor and collection platform performance; the network topology connectivity that enables the overall ISR mission; the command and control of the various assets; the communications capabilities and allocations; the vulnerabilities of the information architecture for the command, control, communications, and computers (C4) capabilities that enable ISR; the processes for TCPED information in support of operations; and the manpower and infrastructure through which the ISR missions are accomplished.17 MRA enables the examination of each of these elements within an integrated capability context using an interactive, iterative flow through the analysis. Multi-criteria methods are then correlated to cost estimating and program risk analysis, cost profiling, organization assessments, and six-sigma process improvement. The use of full-spectrum analytics within an integrated, interactive process combines the science of systems engineering and systems integration with decision making.

_______

15 Doug Owens, Manager, Enterprise Analysis, Defense Business Unit, TASC. “An Enterprise Approach to Capability-Based Analysis: Best Practices, Tools, and Results.” Presentation to the committee, January 5, 2012.

16 Ibid.

FIGURE 3-12 TASC’s approach to Multi-Resolution Analysis (MRA) integrates various perspectives. SOURCE: Doug Owens, Manager, Enterprise Analysis, Defense Business Unit, TASC. “An Enterprise Approach to Capability-Based Analysis: Best Practices, Tools, and Results.” Presentation to the committee, January 5, 2012.

TASC executes full-spectrum, cross-domain, multi-resolution analyses using a variety of GOTS, COTS, and custom tools to address the command, control, communications, computers, intelligence, surveillance, and reconnaissance (C4ISR) enterprise. For example, GOTS and COTS tools such as ADIT (Advanced Data Integration Toolkit) for multi-intelligence data fusion analysis, GeoViz for geolocation performance analysis, STK for track and orbital coverage analysis, SEAS (System Effectiveness Analysis Simulation) for ISR mission effects and CONOPS development, and JIMM (Joint Integrated Mission Model) for integrated operations analysis and detailed constructive analysis, among others, provide the physics-based analysis used to quantify capability impacts.

A sample of TASC custom tools and processes for ISR capability analysis is shown in Table 3-2. Among them are ANII™ and a-MIND™, which allow the

_______

17 Ibid.

TABLE 3-2 Sample of Tools, with Products and Benefits of Each, Employed by TASC

| Tool Name | Category | Tool Product | Benefits |
|---|---|---|---|
| JFORCES: Joint Force Operational Readiness Combat Effectiveness Simulator (TASC) | Mission Utility | Stochastic and deterministic simulation | Provides robust analysis of total capability. Archives every element of a simulation to allow analysts to create new metrics to explore issues without re-executing the simulation. |
| a-MIND™ with ANII™ Process: automated Mission Impact of Network Design (TASC) | Processing, Exploitation, Dissemination (PED): Analysis, Communications, Data Integration, Decryption, Language Translation, Data Reduction | Statistical relational models; mission impact of network design; cyber impacts on mission effectiveness; cyber mitigation options | Reduces analysis cycle time through rapid diagnostic evaluation of a network or architecture for mission impacts, identifying alternative structures from potentially millions of options. Quantifies the correlation of networks to missions. |
| TPAT: TCPED Process Assessment Tool (built in ExtendSim) (TASC) | | General simulation | Visualization of the process provides a quick reference point for identifying bottlenecks and inefficiencies. Enables rapid exploration of process options. |
| CACI: Collection Architecture Capability Influence (TASC) | | Inference modeling (infers potential changes in outcomes or effects from possible variations in metric results) | Reduces architecture costs by identifying key elements and indifferent elements, allowing capability development and selection to focus on critical pieces. |
| Tasking to Value (T2V): Geospatial Modeling Environment (TASC) | | Simulation | Increased operational realism and ability to assess ISR operations effectiveness. |
| SMART: Strategic Multi-Attribute Resource Tool (TASC) | Integrated Decision Aids | Multi-attribute utility analysis | Reduces decision complexity and provides real-time decision-maker interaction with metric data to explore the trade space of options. |
| QATO: Quick Automated Tool for Optimization (TASC) | | Excel-based statistical model | Rapid correlation of metrics and cost profiles, reducing programming trades and impact analysis of program decisions. |
| MIATI: Multi-theater Integrated Allocation Tool for ISR (TASC) | | Asset allocation | Reduces time and cost of analysis by quickly narrowing the trade space of asset management. |
| H-BEAM with MESA Process: Horse Blanket Enterprise Architecture Methodology (TASC) | Architecture Analysis | Technical performance metrics of system and family-of-systems effectiveness. Assessment of system impacts on mission effects as scoping analysis for subsequent detailed Mission Utility Analysis (MUA). | Consolidated display of architecture effects and contributing elements. |
| CERA: Cost Estimating and Risk Analysis Process (TASC) | Financial and Business Analytics | System and family-of-systems cost estimates and profiles; correlation of costs to metric performance from PCA, ANII™, or MUA | Develops credible estimates and profiles for systems and families of capability. Assesses risks of programmatic changes on capability effects. |

NOTE: For a more complete list of tools used by both government and industry, see Appendix C in this report.

SOURCE: TASC. 2012. Written communication. Industry and Government ISR Tools and Processes. Response to inquiry from the committee.

analysis of interconnected capabilities and associated cyberspace vulnerabilities in a C4ISR information architecture; Strategic Multi-Attribute Research Tool (SMART), which supports metric-driven decision analysis, including uncertainty and cost-benefit trade-offs; Collection Architecture Capability Influence (CACI), which supports risk analysis in collection architectures; and the Mission Engineering and Systems Analysis (MESA) process paired with the Horse Blanket Enterprise Architecture Methodology and visualization tool (H-BEAM), which graphically traces capability across an enterprise architecture, from strategic guidance and requirements, to systems, to architecture options, to capability impacts.
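SMART is described only as supporting multi-attribute utility analysis with cost-benefit trade-offs, so the following is a minimal sketch of that general technique, not TASC's implementation. It assumes a weighted-additive utility model with min-max normalization, omits the uncertainty handling that SMART reportedly supports, and uses hypothetical options, metrics, weights, and costs.

```python
from dataclasses import dataclass


@dataclass
class Option:
    """One alternative in the trade space (all values are hypothetical)."""
    name: str
    scores: dict  # metric name -> raw value; higher is better for every metric
    cost: float  # notional cost, e.g., in $M


def normalize(options, metric):
    """Min-max rescale one metric to [0, 1] across the options being compared."""
    values = [o.scores[metric] for o in options]
    lo, hi = min(values), max(values)
    if hi == lo:  # the metric does not discriminate between options
        return {o.name: 1.0 for o in options}
    return {o.name: (o.scores[metric] - lo) / (hi - lo) for o in options}


def utilities(options, weights):
    """Weighted-additive multi-attribute utility; weights are rescaled to sum to 1."""
    total = sum(weights.values())
    norm = {m: normalize(options, m) for m in weights}
    return {
        o.name: sum((w / total) * norm[m][o.name] for m, w in weights.items())
        for o in options
    }


def rank(options, weights, per_cost=False):
    """Order options by utility, or by utility per unit cost for a cost-benefit view."""
    u = utilities(options, weights)

    def score(o):
        return u[o.name] / o.cost if per_cost else u[o.name]

    return [o.name for o in sorted(options, key=score, reverse=True)]


# Hypothetical example: three notional architectures scored on two metrics.
OPTIONS = [
    Option("A", {"coverage": 120, "timeliness": 0.6}, cost=300),
    Option("B", {"coverage": 150, "timeliness": 0.4}, cost=350),
    Option("C", {"coverage": 110, "timeliness": 0.9}, cost=200),
]
WEIGHTS = {"coverage": 0.6, "timeliness": 0.4}

if __name__ == "__main__":
    print(rank(OPTIONS, WEIGHTS))                 # highest utility first
    print(rank(OPTIONS, WEIGHTS, per_cost=True))  # best utility per unit cost first
```

With these made-up numbers, ranking by utility alone favors option B, while ranking by utility per unit cost favors option C. Exposing that kind of shift is the point of pairing multi-criteria scores with cost data.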

In summary, TASC described to the committee the inherent challenge in trying to analyze system and information architectures concurrently within and across missions to plan for total capability effects. Specifically, the networked architectures are extremely complex, and the TASC solution is a layered analytic discipline to provide quantifiable analysis informed by affordability. TASC maintains that MRA manages this complexity while maintaining traceability of effects through engineering analysis, family of systems and architecture trade-offs, networked information and integrated C4 for ISR, mission utility effects, and decision and costing analysis. Further, MRA provides multiple views for decisions on system technical performance parameters, network connectivity and information vulnerabilities, family of capabilities, concepts of operations, policy, total capability versus cost trade-offs, operations planning, and asset allocation.18

Finding 3-5. TASC’s capability-based assessment process employs MRA, which in turn allows the complexity of ISR to be handled in a straightforward, transparent, tailorable, scalable, repeatable manner, incorporating a suite of tools, each optimized for a specific purpose. Such an approach can support a wide range of decisions and decision timelines.

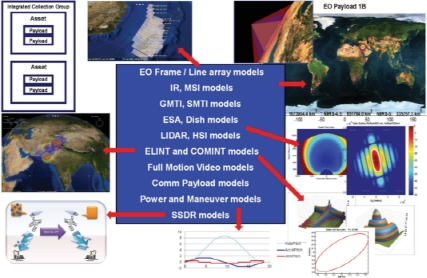

RadiantBlue, Inc., is a specialized provider of information technology development, consulting, and program support services for the DoD and the intelligence community.19 As with TASC, RadiantBlue implements the mission utility analysis and physics-based capability and architecture assessment phases of an MRA process, using its BlueSim tool, a high-fidelity ISR simulator built on agile software that readily accommodates new assets, payloads, and requirements scenarios. Figure 3-13 illustrates a typical BlueSim model with various payload types and relevant vehicle subsystems for the ISR trade space. RadiantBlue has used BlueSim to perform detailed analysis in areas that include space and air, sensor performance, flight profiling, attitude and orbitology, communications, TPED (tasking, processing, exploitation, and dissemination), collection satisfaction, force sizing, architecture, and visualization. The BlueSim simulator allows integrated analysis of space, air, and ground systems across integrated IMINT and SIGINT payloads, including cyberspace effects, against classic portfolios of ISR targets (target decks) and vetted DoD scenarios.

_______

18 Ibid.

19 More information on RadiantBlue’s mission is available at http://www.radiantblue.com/about/. Accessed February 28, 2012.

FIGURE 3-13 RadiantBlue’s BlueSim accurately models the full depth of key subsystem and payload aspects of intelligence, surveillance, and reconnaissance (ISR) collection. NOTE: Acronyms are defined in the list in the front matter. SOURCE: Larry Shand, President, RadiantBlue, Inc. “RadiantBlue Modeling and Simulation Capabilities.” Presentation to the committee, January 5, 2012.

Government users have employed BlueSim across the full range of analysis, from large architecture studies to detailed collection planning studies. Likewise, industry users have employed BlueSim for diverse applications, including ISR architectures, system performance, predictive simulations, and detailed system and payload studies. Table 3-3 describes the tools in the RadiantBlue tool set, along with their products and benefits.

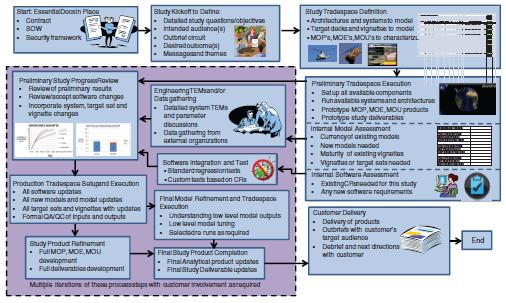

The following sections describe RadiantBlue’s study process (shown in Figure 3-14).20

Essential Study Documents in Place, Study Kickoff, Study Trade-Space Definition

The first three steps illustrated in the RadiantBlue process description define the details of the requested study, including study tasks, the study trade space, and desired outcomes, as well as a set of study messages and themes to guide the development of study output products. The trade space details the systems, payload variations, architectures, CONOPS, target decks, and analysis vignettes simulated

_______

20 RadiantBlue. February 7, 2012. Written communication to the committee.

TABLE 3-3 Description of Tools Employed by RadiantBlue Tool Set, with Products and Benefits

| Tool Name | Category | Tool Product | Benefits |
|---|---|---|---|
| BlueSim | Mission Utility | Simulation | Complete ISR mission effectiveness and utility, measured in terms of classic ISR mission utility parameters (points and areas per day) or lower-level military/tactical utility parameters such as the number or percentage of key enemy behaviors detected. |
| BlueSim and supporting payload phenomenology tools | Physics-Based Capability | Simulation | Highly detailed physics-based model that simulates key ISR system and architecture features. These supporting models provide detailed inputs to BlueSim to enable mission- and architecture-level analysis with rigorous physics-based underpinnings. |
| BlueSim | Architecture Analysis | Simulation | Combines large architecture analysis with accurate physics-based modeling. Unique features: integrated cross-system tipping and cueing, the ability to model gradations between unified and stovepiped tasking systems, integrated dynamic tasking, and the ability to model intelligence gained through collection (information model). |
| BlueSim Ground Model | Processing, Exploitation, Dissemination (PED): Analysis, Communications, Data Integration, Decryption, Language Translation, Data Reduction | Simulation | Enables PED analysis either combined with or separate from the ISR collection modeling. Includes features to model cyber and input/output (I/O) effects such as intermittent outages, random packet loss, link degradation, node failure, and automatic communications. Includes all of the key ISR wideband network node types, such as routing, processing, storage area network (SAN) storage, and exploitation. |

NOTE: For a more complete list of tools used by both government and industry, see Appendix C in this report.

SOURCE: RadiantBlue. 2012. Written communication. Response to inquiry from the committee.

FIGURE 3-14 RadiantBlue process description. SOURCE: RadiantBlue. 2012. Written communication to the committee.

in various combinations to create the raw quantitative data that will serve as the basis of the technical analysis to be conducted in the study. Also part of the detailed study definition are the measures of performance (MOPs), measures of effectiveness (MOEs), and measures of utility (MOUs) that are used to quantify trade-space performance and allow analysis thread comparisons as the study matures.
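In its simplest form, simulating the trade space "in various combinations" amounts to a full-factorial design over the trade-space dimensions. The sketch below enumerates such a design with Python's `itertools.product`. The dimension values are hypothetical placeholders, and in practice each `Run` would be handed to the simulator (BlueSim, in RadiantBlue's case) to produce MOPs, MOEs, and MOUs; that step is not modeled here. A study may also prune the full factorial rather than run every combination, which is likewise not shown.

```python
from itertools import product
from typing import NamedTuple


class Run(NamedTuple):
    """One simulation case: a single point in the study trade space."""
    system: str
    payload: str
    architecture: str
    conops: str
    target_deck: str
    vignette: str


# Hypothetical trade-space dimensions; in a real study these are fixed at kickoff.
TRADE_SPACE = {
    "system": ["HALE-UAV", "LEO-sat"],
    "payload": ["EO/IR", "SAR", "SIGINT"],
    "architecture": ["stovepiped", "unified-tasking"],
    "conops": ["CONOPS-1"],
    "target_deck": ["deck-1"],
    "vignette": ["vignette-1", "vignette-2"],
}


def enumerate_runs(space):
    """Full-factorial enumeration: one Run for every combination of dimension values."""
    return [Run(*combo) for combo in product(*(space[f] for f in Run._fields))]


def analysis_thread(runs, **fixed):
    """Select the runs that share the given dimension values, e.g., payload='SAR'."""
    return [r for r in runs if all(getattr(r, k) == v for k, v in fixed.items())]


RUNS = enumerate_runs(TRADE_SPACE)

if __name__ == "__main__":
    print(len(RUNS))                                  # 2*3*2*1*1*2 = 24 cases
    print(len(analysis_thread(RUNS, payload="SAR")))  # 8 SAR cases
```

Filtering on fixed dimension values, as `analysis_thread` does, is one simple way to line up cases for the analysis-thread comparisons described in the text.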

Preliminary Trade-Space Execution, Internal Model Assessment, Internal Software Assessment

With the study trade space fully defined, RadiantBlue then uses an extensive pre-existing library of ISR system models, target decks, and vignettes to allow a study team to take existing, simulator-ready data and build significant portions of a trade space to begin runs immediately. These three parallel activities (trade space, model, and software assessments) provide analytical products that drive the next several process steps in an iterative and collaborative manner with the larger study team.

Engineering Technical Exchange Meetings, Software Integration and Test, and Progress Review

Each of the analytical products is subjected to internal model assessments and internal software reviews in which the preliminary trade-space run data results are reviewed and a new version of the simulator is provided. Then a progress review is conducted with the prime contractor or government point of contact (POC), during which the preliminary trade-space results are discussed, sample products are reviewed, and the status of model additions and changes is reviewed. This is also an opportunity for the customer POC to refine or alter the direction of the study on the basis of these preliminary results and/or programmatic, financial, or political developments outside the study team. At the end of this process step, the study team is put on a refined vector for where to take the study in terms of priorities and trade-space definition.

Production Trade Space, Study Products, Final Study Products

With the refined study direction vector, updated executable(s), and updated models, the study team then sets up the full production trade space that includes all of the key models, target decks, vignettes, CONOPS, and software features. As the simulation data emerge from the trade-space runs, the MOP, MOE, and MOU products can be developed to address the messages and themes that were defined in the study kickoff phase. Technical measures can be refined or replaced as needed, and these then feed modifications to the messages and themes as required. In conjunction with refining technical measures, detailed low-level analysis of the simulator output data is conducted to make sure that the macro-level trends that are emerging are supported by coherent physics- and technology-based micro-level system behaviors. The final study products are then formulated to meet the desired study outbriefing plan and the internal needs of the study team. These final study products are typically developed collaboratively and iteratively with the prime contractor or government POC and other key members of the larger study team as required.

Summary

RadiantBlue works collaboratively and iteratively with the customer to refine the details of the desired study.21 Continued collaboration with customer subject-matter experts serves to detail and enhance the understanding of the trade space, including systems, payload variations, architectures, CONOPS, target decks, analysis vignettes, MOPs, MOEs, and MOUs. With the trade space clearly defined, RadiantBlue conducts analysis of the trade space through multiple simulation runs of scenarios and vignettes. Iterative sessions between the customer and RadiantBlue serve to refine tools and scenarios, ultimately leading to study results and completion.22 Finally, RadiantBlue’s process (using the BlueSim simulator) requires iterative customer engagement and collaboration between operators and analysts and is supported by a large, pre-existing model library of air and space systems.

_______

21 RadiantBlue. February 7, 2012. Written communication to the committee.

RadiantBlue provided the committee with a second industry example of how a very complex set of assets and vignettes can be evaluated iteratively through an MRA process that is thoroughly documented for transparency, accuracy, and repeatability and can be tailored and scaled to customer desires.23

Both TASC and RadiantBlue identified analysis approaches that are responsive to their customers’ needs, taking full account of ISR assets and trade-offs across the enterprise, spanning air, space, and, to a lesser extent, cyber effects. Most helpful is the approach of pairing physics-based, layered analysis tools with cost estimating and risk-analysis trade-offs, along with cost projections over various planning horizons (e.g., Analyses of Alternatives and Program Objective Memorandums), when implementing full-spectrum MRA.

Finding 3-6. RadiantBlue’s modeling, simulation, and analysis capability focuses on the physics-based capability and architecture analysis and mission utility analysis found in MRA. The BlueSim tool, combined with RadiantBlue’s methodology, has been used to successfully support trade-space studies of various ISR and processing, exploitation, and dissemination (PED) architectures.

In reviewing the government and industry CP&A-like processes described in this chapter, the committee made several observations. First, multiple tools, including both commercial off-the-shelf and proprietary tools, are used effectively across government and industry for modeling, simulation, and analysis, and “one size does not fit all.” Second, none of the non-Air Force CP&A-like processes reviewed adequately addresses the emergent challenges posed by the cyberspace domain. Third, most of the non-Air Force CP&A-like processes reviewed do not adequately deal with the complexity of PCPAD, which, in turn, can affect cost, performance, and schedule. This latter issue can also result in capabilities that are not end to end and can contribute to information and data that cannot be shared, correlated, or fused by users or customers. Finally, because the objective of considering a wide range of government and industry CP&A-like processes was to gain insight into potential best practices to incorporate into this study’s overall recommendations, Table 3-4 maps the findings to these best practices.

_______________

22 More information on RadiantBlue’s methodology is available at http://www.radiantblue.com/solutions/software-development/. Accessed February 28, 2012.

23 Congressional professional staff members who spoke with the committee identified RadiantBlue as the best modeling organization at the architecture level.

TABLE 3-4 Best Practices and Corresponding Findings

| Best Practice | Finding |
|---|---|
| Process includes consideration of “enterprise” ISR systems and/or capability. | Findings 3-3, 3-4, 3-5, and 3-6 |
| Process is transparent, responsive, scalable, and repeatable. | Findings 3-1, 3-4, 3-5, and 3-6 |
| Process is underpinned by multi-resolution-like analysis, modeling and simulation. | Findings 3-4, 3-5, and 3-6 |
| Process is collaborative and links planning, acquisition, and operations. | Findings 3-1 and 3-2 |
| Process is informed by operational metrics. | Finding 3-1 |
| Process incorporates network/PCPAD/TCPED architectures and cyberspace considerations. | Findings 3-2, 3-3, 3-4, 3-5, and 3-6 |

NOTE: Acronyms are defined in the list in the front matter.