Board on Health Sciences Policy

July 23, 2012

John Charles, Ph.D.

Chief Scientist, Human Research Program

National Aeronautics and Space Administration

Johnson Space Center

Houston, TX 77058

Dear Dr. Charles:

At the request of the National Aeronautics and Space Administration (NASA), the Institute of Medicine (IOM) convened the Committee on the Review of NASA Human Research Program’s (HRP’s) Scientific Merit Assessment Processes1 in December 2011. The committee was asked to evaluate the scientific merit assessment processes that are applied to directed research tasks2 funded through the HRP and to determine best practices from similar assessment processes that are used in other federal agencies; the detailed statement of task is provided in Box 1.

This letter report and its recommendations are the product of a 10-member ad hoc committee, which included individuals who had previously conducted research under the HRP, were familiar with the HRP’s research portfolio and operations, had specific knowledge of peer review processes, or were familiar with scientific merit assessment processes used in other organizations and federal agencies, such as the Canadian Institutes of Health Research (CIHR); National Institutes of Health (NIH); National Science Foundation (NSF); and U.S. Departments of Agriculture (USDA), Defense (DOD), and Transportation (see Appendix B for committee biosketches). The committee appreciates this opportunity to advise the HRP’s efforts to improve the current scientific merit assessment processes for directed research and appreciates the background information provided by HRP staff throughout the study process.

1This study and its statement of task were derived from ongoing conversations between NASA and the IOM’s Standing Committee on Aerospace Medicine and the Medicine of Extreme Environments.

2For the purposes of the committee’s workshop and to provide clarity for other stakeholders, the committee used the following definition to describe the current HRP approach to directed research: directed research is commissioned or noncompetitively awarded research that is not competitively solicited because of specific reasons, such as time limitations or highly focused or constrained research topics. The research topic may be identified by the sponsor or by submission of an unsolicited proposal from external researchers. The language used throughout this letter report may be germane to NASA; the footnotes and listed references in the report provide further information about specific terms.

BOX 1

Statement of Task

The Institute of Medicine will conduct a review of the scientific merit assessment processes used to evaluate NASA Human Research Program’s directed research tasks. The study will include a public workshop focused on identifying and exploring best practices in similar peer-reviewed applied research programs in other federal government agencies. The study will also evaluate the scientific rigor of the NASA processes and the effectiveness of those processes in producing protocols that address programmatic research gaps.

The committee will produce a report that provides an evaluation of the review processes and decision-making criteria. The report will also recommend the metrics that are needed to assess the effectiveness of the scientific merit assessment process in approving directed research projects that meet the operational needs of NASA.

Questions to be addressed include

• What are the strengths and weaknesses of the current decision criteria and scientific merit assessment review process regarding directed research?

• Is this an adequate suite of options for review of directed research and technology tasks?

• What best practices can be identified in other federal or state agencies or other organizations that can inform the NASA processes and program?

• What metrics should the HRP use to assess the quality of the directed task merit review process?

In conducting its review, the committee held four meetings to gather and review available information, plan and conduct a public workshop, and draft and fine-tune its report and recommendations. In January 2012, the committee held its first meeting via conference call. During this meeting, HRP staff briefed the committee on the mission and organization of the HRP and the scientific merit assessment processes3 that are currently used for its directed research tasks. At its second meeting, which was held in March 2012, the committee conducted a public workshop in Washington, DC, that included participants from a range of federal agencies and organizations, including the CIHR, Defense Advanced Research Projects Agency (DARPA), Departments of Energy (DOE) and Veterans Affairs (VA), Federal Aviation Administration (FAA), NASA, National Oceanic and Atmospheric Administration (NOAA), NSF, and the U.S. Army and Navy, as well as researchers who had submitted research proposals that had gone through the HRP merit assessment processes for directed research (see Appendix A for the meeting agenda and complete participant list). The workshop was organized into four roundtable discussions that allowed the committee to explore the practices and processes of federal agencies and other organizations in identifying directed research, assessing its scientific merit, monitoring and evaluating the progress of directed tasks, and evaluating the overall directed research processes to ensure high-quality outcomes.4 The workshop also provided the committee with an additional opportunity for an open dialogue with NASA staff to further discuss the HRP merit assessment processes for directed research. The committee’s third and fourth meetings were conducted via conference call in April and May 2012 to finalize the recommendations and report.

To augment the information-gathering sessions and background information provided by NASA, and to better inform the committee’s deliberations, a search was conducted to identify available literature, including previous studies conducted by the National Academies that were relevant to the statement of task. A 2007 Cochrane review noted that “no studies assessing the impact of peer review on the quality of funded research are presently available” (Demicheli and Di Pietrantonj, 2007, p. 2), and Wood and Wessely indicated that “little research has addressed the relative merits of different peer review procedures” (2003, p. 31).

3The scientific merit assessment processes for directed research are detailed in the HRP’s Unique Processes, Criteria, and Guidelines document (NASA, 2011d) and are also described in the PowerPoint slides presented at the IOM committee’s March 2012 workshop (Charles, 2012). The committee does not provide an in-depth description of the current merit assessment processes in this letter report.

4Prior to the workshop the committee asked the participants to provide background information and respond to questions about the merit assessment processes used for directed research in their agency or organization. This information is available by request through the National Academies’ Public Access Records Office.

DIRECTED RESEARCH AT THE NASA HUMAN RESEARCH PROGRAM

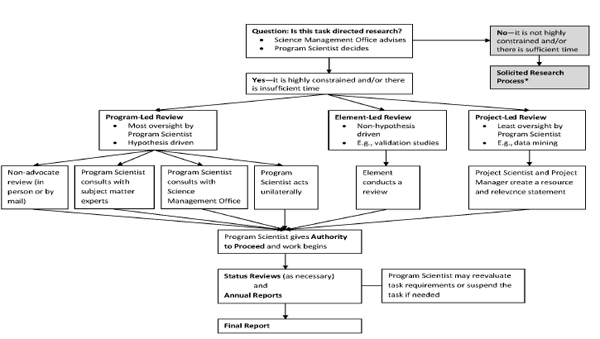

The HRP funds both solicited and directed research to contribute to its work in “discovering the best methods and technologies to support safe, productive human space travel” (NASA, 2012b). Directed research is carried out by NASA employees as well as external researchers and is funded through both contracts and grants (Personal communication, M. Covington, Wyle, April 11, 2012). Currently, directed research tasks are reviewed using scientific merit assessment processes that are described in detail in the HRP Unique Processes, Criteria, and Guidelines (UPCG) document (NASA, 2011d). Figure 1 provides a high-level overview of the existing merit assessment processes.

From 2009 to 2011, 28 percent of the research funded by the HRP represented directed research tasks (Charles, 2012). Table 1 includes the funding authorized for directed research and the number of tasks that were funded during that time. Given the amount of funding and the number of tasks funded, HRP’s directed research program is not a large component of its research portfolio. While some of the funded tasks are routine and provide support for future or ongoing research projects, others are high-profile due to their scope and end users. The number of tasks and the funding allocated to directed research vary from year to year and depend on the number of tasks that are identified to meet operational needs. These tasks must fulfill one of the two criteria for directed research: “highly constrained” research and “insufficient time” for solicitation, as defined in the HRP UPCG document (NASA, 2011d; Personal communication, M. Covington, Wyle, April 11, 2012).

FIGURE 1 Overview of current Human Research Program (HRP) scientific merit assessment processes for directed research.

NOTE: The processes for the program-, element-, and project-led reviews are described in the HRP’s Unique Processes, Criteria, and Guidelines document.

*Not reviewed by the Institute of Medicine committee.

TABLE 1 Directed Research Funding and Tasks

| Year | Funding Authorized (in Millions of Dollars) | Number of Directed Tasks Funded |
|---|---|---|
| 2009 | $6.0 | 23 |
| 2010 | $2.5 | 25 |
| 2011 | $3.9 | 16 |

SOURCES: Charles, 2012; Personal communication, M. Covington, Wyle, April 11, 2012.

Although the directed research program constitutes a relatively small proportion of the HRP’s research budget, the committee was impressed with the scientifically rigorous and thorough processes that have been developed to conduct merit assessments of this research. The committee’s criteria for rigorous scientific merit assessment processes concur with those put forth by Wood and Wessely—the review process is “expected to be: effective … efficient … accountable … responsive … rational … fair … valid” (2003, pp. 15-16). The findings and recommendations offered in this report provide ways to streamline and bolster the accountability and transparency of the current processes.

In addition to the expectations defined by Wood and Wessely, the committee identified the following characteristics in completing its evaluation that it deemed to be essential to a valid and operationally relevant scientific merit assessment process:

• Scientifically rigorous: The integrity of the process used to review the proposed research relies on its ability to be independent and conflict-free, unbiased, and based on a thorough scientific and/or engineering assessment of merit and its potential for achieving the stated goals.

• Strategically focused and flexible: The process needs to meet the strategic goals of the HRP while also being nimble enough to respond to urgent operational needs that emerge.

• Transparent: Ensuring that the best ideas are brought to bear on the research question involves efforts to communicate broadly with the research community to inform them about the directed research program and clearly outline the process for selecting and funding research tasks and their expected outcomes.

• Time-sensitive and outcomes-oriented: Given the applied nature of NASA’s research and the sequential impacts that the outcomes of the HRP research may have on engineering and operational requirements for space flight, a focus on directed research outcomes and ensuring that the merit assessment processes help achieve those outcomes in a timely manner is crucial.

The committee’s overall assessment is that the NASA scientific merit assessment processes for directed research fulfill these characteristics for the most part and are well suited for the operational requirements that they were designed to address. Where opportunities exist for improvement, suggestions are made by the committee throughout the remainder of the report, which covers the processes used to make the initial decisions on whether or not a proposal meets the definition of directed research, the scientific merit assessment processes, and quality improvement (QI) metrics for evaluating the overall assessment process.5

To determine whether a task is directed research, the HRP Science Management Office Working Group reviews a two-page task synopsis and advises the Program Scientist,6 who makes the initial decision about whether the task should be either (1) solicited and competed, which is the default, or (2) not competed, not solicited, and designated as directed research. Directed research can be conducted by internal or external investigators. As noted above, currently two criteria are used to make this decision: whether there is “insufficient time” and whether the research is “highly constrained.” The criterion “insufficient time” is fulfilled if there is urgency to complete the task that does not allow for the full solicitation and review process, which at present can take up to 15 months (Charles, 2012). Solicitation cycles of this duration can pose challenges for conducting the research task within the schedule of space operations; for example, the anticipated remaining operational period of the International Space Station is approximately 8 years, with research efforts already tightly scheduled. The criterion “highly constrained” is fulfilled if the task “requires focused and constrained data gathering and analysis that is more appropriately obtained through a noncompetitive proposal. For example, the research activity involves operational practices and the associated flight personnel or research very specific to NASA” (NASA, 2011d, p. 18).

5Examples from different federal agencies and organizations are provided throughout this letter report to highlight relevant practices that may inform NASA’s processes. These examples are based on discussions at the IOM committee’s March 2012 workshop and the information provided to the committee as noted in footnote 4. This report does not aim to give a comprehensive summary of each federal department’s policies. Specific examples may represent the practice of an agency or office within the department. The general acronyms for the departments are used throughout the report for brevity. Specific affiliations are listed for each workshop participant in Appendix A.

6The HRP Program Scientist is the “senior science management official within the HRP and is the person delegated the responsibility for internal science management and coordination” (NASA, 2011c, p. 8).

A number of variations were noted during the March workshop in the ways in which directed research is conducted at other federal agencies and organizations. First, the language and nomenclature used to describe this type of research vary, with agencies using different terms to designate their categories of research. In common with the HRP, this type of research is a small portion of many agencies’ overall research portfolio, if they do this type of research at all. Under each of the HRP’s defining characteristics for directed research—noncompetitive, internal or external research proposals, and “insufficient time” and “highly constrained”—varying approaches and practices were described. Despite the differences, there was consensus that all of the agencies and organizations have some research that needs to be done in a time-sensitive manner, that needs to be focused on specific research questions, or that requires specialized resources. Using directed research to focus on urgent operational needs was a common theme discussed at the workshop. The HRP aligns its research portfolio, including its directed research program, with its operational needs by identifying risks and risk factors in its Program Requirements Document, outlining knowledge gaps about the risks, and defining tasks to fill the gaps in its Integrated Research Plan (NASA, 2011b). This attention to identifying and then devoting resources to some of its immediate and specific programmatic research gaps through directed research is a strength of the HRP program and provides NASA with a well-organized and responsive research mechanism.

Different interpretations were noted regarding the scope of directed research, specifically what should constitute a directed research task as opposed to an activity that supports research efforts.7 For example, in order to conduct a larger research task a cold mitt was needed to test pain receptors. The development of the cold mitt’s design would currently be designated by the HRP as directed research; however, participants noted that in other agencies this type of development work might be considered a supporting activity that is done by internal or contract staff and does not undergo peer review. DOD and VA representatives agreed that this is the type of work that should be done as part of the agency’s usual operations, if there is internal capability, without going through a solicitation process; however, the VA representative noted that in this specific example some form of engineering review may be warranted. Workshop participants emphasized using internal capacity whenever possible for supporting activities. One way to make the distinction between research tasks and supporting activities is to differentiate between those that are hypothesis-driven and those that are not. Although every task or activity has specific goals, some efforts, particularly those associated with design, development, testing, and evaluation, are not hypothesis-driven; they do not predict the answer to specific, original research questions but rather are supporting activities (e.g., pilot tests, data mining, literature searches) that collect the information needed to develop a hypothesis or are used to create relevant models or technologies.

Federal agencies use a competitive solicitation process for the majority of their research or justify any sole source work in order to be in compliance with the Competition in Contracting Act of 1984 (41 U.S.C. 253). To meet operational research priorities, some agencies authorize directed research through the use of their national labs (e.g., DOE) or through agreements that are established with universities and other partners, which were previously awarded through competitive mechanisms (e.g., NOAA’s Scientific Services Contracts). Another option is to use a sole source agreement; FAA’s Acquisition Management System allows the FAA to award research noncompetitively through this mechanism. The FAA bases this decision on a market analysis, the cost of running a competitive solicitation, and the risks that could result if the work is not done.

The NIH uses the term “targeted research” for research that is derived from its priorities and mission, rather than being unsolicited suggestions from external investigators. The NIH completes this work through requests for applications (RFAs) and requests for proposals (RFPs). Targeted research represents less than a third of the NIH budget. The CIHR also uses the term “targeted” to describe its directed research, which is about 2 percent of its budget. These grants are awarded directly to external investigators who have been identified as being the only research teams eligible to do the work. The proposals undergo external peer review. Criteria for the CIHR’s research are similar to those of the HRP: time-sensitive, strategically important, and feasible.

7Throughout the rest of the report, the committee differentiates between these two types of work by using the terms “directed research task” and “supporting activity.”

The NSF, which funds external research only, takes a unique approach to its two directed research programs, Grants for Rapid Response Research (RAPID) and EArly-concept Grants for Exploratory Research (EAGER), in comparison with the rest of its portfolio. RAPID tasks are defined as urgent due to the availability of resources at a particular time or due to unanticipated events (e.g., a natural disaster). EAGER tasks are exploratory in nature and described as “high-risk, high-payoff.” In both of these programs, the tasks have limited budgets (RAPID: ≤$200,000; EAGER: ≤$300,000) and are of limited duration (RAPID: ≤1 year; EAGER: ≤2 years). The VA participant noted that within the VA, which conducts its research intramurally, directed research can be studies that are either large, through the Cooperative Studies Program, or relatively small in budget and short in length.

DOD representatives described the DOD’s use of standing broad agency announcements (BAAs) as an alternative method to accomplish some of the goals of directed research through a competitive mechanism for external investigators. A standing BAA is continuously open to all researchers and can be as general or specific in its requirements as the funding agency would like. One advantage of using a BAA, as noted by the DARPA participant, is that the agency has the choice to fund all or part of a proposal, or to combine it with another. Proposals that are received through a standing BAA can be reviewed and awarded as frequently as is necessary. Currently, the HRP uses a range of formats for procurement, including RFPs, Requests for Information, and BAAs, which may be issued in several different formats (e.g., annual Research Announcements). However, the HRP does not consider BAAs a feasible mechanism for directed research, in part because of the length of the solicitation cycle (NASA, 2011c). Nevertheless, the DOD’s use of standing BAAs and other agencies’ use of alternative mechanisms, such as contracts with university and research center partners, for solicited research may present other options for the HRP to consider in facilitating the rapid approval of highly constrained directed research, while also receiving broader input on research questions.

Workshop participants highlighted the importance of communicating with the general research community; for example, the NIH participant noted that if the NIH decides to do a short solicitation period due to time sensitivity, then the RFA or RFP is publicized broadly and not solely through the NIH Guide for Grants and Contracts.8 Similarly, a key attribute of the CIHR directed research program is its emphasis on transparency. The CIHR posts all directed research tasks, including the rationale for their designation as directed research, in the funding opportunity database of its public website so that the research community and public are informed about the research taking place. However, only a previously designated investigator is eligible to apply to do the research. In contrast, the HRP includes information about currently funded directed research (in addition to its solicited research) in its Human Research Roadmap, but it does not describe why the directed research tasks were designated as such (NASA, 2012a).

• The HRP has a structured process for identifying risks and gaps, which is outlined in its Integrated Research Plan. These risks and gaps inform the objectives of the HRP research portfolio, including directed research, and allow the HRP to align a specific task’s aims with the strategic goals of its program. NASA should continue to use this process.

• Nomenclature for, definitions of, and mechanisms for directed research vary among federal agencies and other organizations. During the March workshop, many agencies reported that all or nearly all of their research is competed, but a range of mechanisms were described that could be used to target research tasks to specific research areas.

• The HRP includes tasks in its directed research portfolio that are supporting activities. Supporting activities (e.g., pilot testing, data mining) can be directly approved to proceed without a formal scientific merit assessment process. Other agencies do not consider these types of tasks to be directed research that warrants peer review.


• The HRP’s current decision criteria for directed research—i.e., the research need is time-sensitive or highly constrained—are appropriate and similar to the decision criteria used in other agencies and organizations. Agencies and organizations differ in the mechanisms that are used to fill these research needs; some agencies use solicited and competed research mechanisms, while others use directed research processes similar to those used by the HRP. The length of time required for the merit assessment process does not need to be a consideration in these decisions, as agencies agreed that external peer review or other merit assessment processes can be accomplished quickly when needed without compromising the quality of the review (see below).

• Currently, the HRP website does not communicate the rationale for designating specific tasks as directed research, including the extent to which these tasks are internally defined by NASA or could be suggested by the external research community. One of the areas for improvement in the HRP process would be wider dissemination and clearer communication to researchers and other relevant stakeholders regarding (1) the process for identifying a specific task as directed research, (2) the process for accepting unsolicited research proposals for consideration as directed research, and (3) the rationale and justification for decisions to fund directed research.

RECOMMENDATION 1 Narrow the Scope of Directed Research

NASA should narrow the scope of directed research and clearly define the distinction between directed research tasks and supporting activities based on whether they are

• hypothesis-driven or

• associated with design, development, testing, and evaluation or with collecting data or information needed to develop a hypothesis.

RECOMMENDATION 2 Expand Communications About Directed Research Opportunities and Awards

NASA should improve communication about the directed research processes to clearly disseminate its decision-making process and ensure that the research community is informed about funding opportunities and decisions. The HRP should consider using a standing BAA as an ongoing mechanism to widely disseminate research opportunities and receive unsolicited proposals for directed research, which could be funded through contract mechanisms as deemed appropriate for meeting operational needs. Information about awarded directed research tasks should be disseminated more widely, including through the HRP website. Publicized information should include justification for why specific tasks met the criteria for directed research.

SCIENTIFIC MERIT ASSESSMENT PROCESS

The committee was asked to examine the strengths and weaknesses of the HRP’s scientific merit assessment process for directed research and to provide input on the current suite of options for that process. To address these topics the committee looked at the criteria used to assess scientific rigor and then at the processes used for the merit assessment of directed research. As discussed below, during the workshop the committee heard that other agencies and organizations provide scientific assessment criteria to their peer reviewers that are similar to the HRP’s. Also similar to the HRP, a number of the organizations have scoring systems and ask reviewers to provide input on the strengths and weaknesses of the proposal as well as recommendations to improve the proposed work.

The assessment criteria used by the HRP for directed research (as outlined by the HRP in its Mail Review Evaluation Form) ask the reviewers to examine the specific aims of the research, assess whether the research design is adequate to meet the objectives, evaluate the feasibility of the proposed schedule and deliverables, examine whether the investigators have the requisite knowledge and experience, and consider the potential for the research to have significant impact. These criteria are similar to those used by the NIH, NSF, USDA, and other organizations and were deemed by the committee as scientifically rigorous for assessing merit. For example, the NIH organizes its assessment criteria for external peer review in five areas: significance, investigators, innovation, approach, and environment. The NSF asks reviewers to consider two broad questions that encompass the criteria described above: “(1) What is the intellectual merit of the proposed activity? (2) What are the broader impacts of the proposed activity?” (NSF, 2004). The USDA’s National Institute of Food and Agriculture has similar criteria: “overall scientific and technical quality of the proposal … scientific and technical quality of the approach … relevance and importance of proposed research to solution of specific areas of inquiry … feasibility of attaining objectives; adequacy of professional training and experience, facilities and equipment … [and] the appropriateness of the level of funding requested” (USDA, 2001). In addition to the criteria mentioned above, several agencies, including the DOE’s Office of Biological and Environmental Research and NSF’s EAGER program, also consider whether the proposal encourages transformative research that may be high-risk/high-return as part of the review.

Processes Used to Conduct Scientific Merit Assessment

Although the criteria used to assess scientific merit are similar among the participating agencies and organizations, as noted above, the committee found more variation in the specific processes that are used. As discussed earlier, not all organizations conduct directed research and the scope of what is considered directed research varies. Additionally, the use of internal and external peer review differs as do the mechanisms used to complete the review process in a timely manner.

In looking at the HRP processes, the committee determined that one of the areas that could be improved is to streamline the processes used to make decisions on the supporting activities that make up much of the current project-led process. As noted above, activities such as data mining and pilot tests are not considered directed research by most agencies and organizations and could be taken out of the directed research pipeline. Decision making regarding the authority to proceed for supporting activities would be made by the HRP Program Scientist, and these activities would not go through peer review. The current HRP project-led process is already quite succinct (the project scientist and project manager create a resource and relevance statement and then the Program Scientist makes the decision about the authority to proceed), but taking these types of supporting activities out of the directed research program may allow for more expedient decisions about them.

Many representatives from the agencies and organizations who participated in the workshop reported that they have a single process for their scientific merit assessment for directed research. These processes vary among organizations although the points of decision making for giving the authority to proceed and funding the research are similar. The CIHR uses external peer review exclusively and, because the proposal is not being compared with others, the peer review panel’s choices are to (1) recommend that the task be funded, (2) recommend that the task be funded contingent on an adequate response to the panel’s concerns, or (3) recommend that the task not be funded. Final decisions are made by the CIHR’s Science Council. The RFAs and RFPs issued by the NIH for targeted research start with a concept approved by the institute director and then, after external peer review is conducted with scoring, the final funding decisions are made by the institute or center director, with input from staff and the advisory board. Depending on the scope and nature of the research, the VA typically uses a mix of internal and external reviewers. At the NSF, directed research proposals through the RAPID and EAGER programs are typically reviewed and recommended for award or decline by internal program officers, about half of whom are rotators (scientists or engineers from universities or research institutes who work at the NSF for a short period of time). The decision on authority to proceed is made primarily by the division director. Rotators can bring fresh perspective and scientific dialogue to their agency; this is a mechanism that the HRP could explore.

Some federal agencies do much of their directed, time-sensitive work through contracts that have been established with university or research center partners (e.g., the DOD uses university-affiliated research centers). NOAA uses this model, and the NOAA offices that request the directed work have input into the approval and funding decisions. Generally, the decision to give authority to proceed is made by the senior agency staff member, similar to decisions authorized by the HRP Program Scientist.

The committee considered the various scientific assessment review mechanisms that are currently used by the HRP (see Figure 1 on p. 5) and also discussed the benefits of using external or internal peer reviewers or a combination. External scientists can provide independent insights, and the committee believes that all HRP directed research should have the independent perspective of external reviewers but that there are times when a mixed panel of internal and external reviewers may be needed. Internal scientists can bring input on the operational needs as well as their knowledge on how this research fits into NASA’s plans for a specific mission or objective. This fits within the HRP’s policy that “all investigations sponsored by the program will undergo independent scientific merit review. This includes proposals submitted in response to NASA Research Announcements, all directed study proposals, and all unsolicited proposals” (NASA, 2011b, p. 11).

The committee noted the importance of ensuring that there is a firewall between those formulating initial proposals to the directed research program and the person (e.g., for the HRP, the Program Scientist) who makes the decision (after peer review) about authority to proceed. This helps ensure that there are no actual or perceived conflicts of interest between the proposers and decisions about funding the research.

An additional topic discussed by workshop participants was the amount of time needed for peer review, and questions were asked about whether this was a rate-limiting step in the directed research process, which often deals with time-sensitive tasks. Although most of the agencies and organizations that use peer review are looking into ways to expedite the peer review process, the participants agreed that time need not be a major obstacle. Participants cited examples where research solicitation and peer review were accomplished in a short time frame (<60 days), when needed, without sacrificing quality. Innovative approaches in use include various online and video conferencing capabilities.

• The HRP assessment processes for directed research are scientifically thorough and use standards and criteria similar to those of programs within other agencies and organizations that fund scientific research. The processes are scientifically rigorous, as they involve independent assessment by reviewers with scientific and other relevant expertise and also take into consideration factors related to conflict of interest and bias.

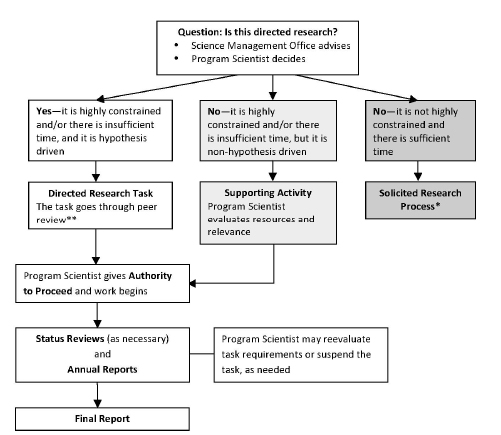

• The breadth of NASA’s current definition of directed research (as noted above) has led to a complex merit assessment process in comparison to streamlined definitions and processes at other agencies and organizations. Most agencies have narrowed the scope of what is considered directed research to focus on hypothesis-driven research and use peer review processes (external and internal) to assess the scientific rigor of the proposed research. NASA’s current three-level review processes (program, element, and project-led) could be simplified. This would include designating a portion of the current directed research portfolio as supporting activities that would not go through peer review, with the Program Scientist deciding who would do the work and how it would proceed (see Figure 2). Efforts to implement a more nimble peer review process for directed research—involving a panel of external reviewers or a combined panel of internal and external reviewers—would require discussions with NASA’s Research and Education Support Services, which supports the peer review process.

• Other agencies and organizations provide their high-level scientific staff with authority similar to that provided to the HRP Program Scientist to make decisions about supporting activities and authority to proceed for peer-reviewed research. The decisions made by the HRP Program Scientist regarding directed research tasks and supporting activities are commensurate with decisions made by DARPA program managers, DOE program managers, NSF scientific division directors, NIH institute or center directors, and USDA scientific quality review officers.

• If needed, many federal agencies and other organizations can complete the scientific merit assessment process, including external peer review, in a timely manner without jeopardizing the quality of the assessment. Many agencies and organizations continue to work to expedite the peer review process by exploring and implementing a variety of online and collaborative approaches.

FIGURE 2 Proposed HRP merit assessment process.

*Not reviewed by the Institute of Medicine committee.

**Peer review panels could be made up of external reviewers or a mix of internal and external reviewers.

RECOMMENDATION 3 Streamline the Merit Assessment Process for Directed Research

NASA should streamline the merit assessment process for directed research consistent with a narrower definition of directed research. Decisions regarding supporting activities should be made by the Program Scientist. All directed research should go through a peer review process with the Program Scientist deciding if it will be done by an external or mixed (internal and external) panel of peer reviewers, depending on the scope and nature of the task. Implementation efforts should

- ensure that the Program Scientist has the authority to make decisions regarding supporting activities;

- continue to give the Program Scientist the authority to make the final decision to proceed on directed research tasks, taking into consideration peer review findings and his or her assessment of NASA’s priorities; and

- expedite the merit assessment process for directed research while also ensuring the high quality of the review process.

EVALUATION AND QUALITY IMPROVEMENT

At any point during the life of an individual directed research task, the Program Scientist may request a status review. On a yearly basis, directed research investigators are required to complete an annual report, which covers items such as background information, preliminary results, and budget or personnel changes. As a result of the information presented in a status review or annual report, the Program Scientist may decide to alter the task or stop it altogether. A final report is prepared at the completion of the task, covering items such as final results, planned future work, publications, and new technology developed. This report is due within 90 days of the task’s end date. An additional point for documenting the successful completion of the directed research task may be a review of the customer supplier agreement (developed before the work begins) to see if the expected deliverables have been met. The NASA program that will receive the deliverables (i.e., the customer) may complete a customer acceptance review at the end of the task to document its level of satisfaction.

The monitoring and reporting requirements during and immediately after completion of a specific task for the HRP directed research program are similar to those in other federal agencies and organizations. The CIHR, DOD, DOE, NIH, NOAA, NSF, and the USDA’s National Institute of Food and Agriculture all require at least annual reporting on progress, which is typically measured against the objectives and deliverables outlined at the task’s initiation. A number of participants in the workshop highlighted the importance of having clear objectives and deliverables outlined from the beginning. Some agencies, such as the FAA and VA, require more frequent reporting, especially for larger projects. Workshop participants described the need to appropriately track and evaluate progress on the research while also avoiding undue interruptions. Additionally, representatives from agencies such as the NIH noted that the funding mechanism (e.g., contract vs. grant) played a major role in establishing the level of their involvement and supervision, with contracts providing the opportunity for more involvement by agency staff. The U.S. Navy uses a research project manager dashboard and the CIHR has an electronic information system database to assist in the collection and monitoring of data as the task progresses. The representative from NOAA noted that feedback from annual reports is typically incorporated into the research plan moving forward. In order to have data and information to evaluate a task and its progress, completion and submission of the required reports are necessary. Directed research tasks at the HRP have report compliance rates of approximately 75 percent for annual and final reports, which is below the reporting rates for HRP’s other types of research (84-93 percent in 2009-2011). NSF and NIH participants described their agencies’ policies for suspending funding if an investigator does not file an annual report.

Once a task is complete, an agency or organization evaluates its success. Ultimately, the question is whether the end result answers the research question and meets the needs of the customer. Metrics can be used to help answer this question, and generally these may include whether a useable product was delivered, whether the task was completed on time and on budget, whether a publication resulted, and whether the product is being used in operations over time. The specific metrics used for a task tend to vary according to the type of research and the objectives established at its outset, and some metrics cannot be assessed until sufficient time has passed to allow for implementation of the task’s deliverables.

A report of the National Research Council recommended the use of metrics for QI efforts that look at the steps and actions involved in the peer review process (i.e., activity metrics) as well as its effectiveness (i.e., performance metrics). For example, an activity metric could be “the degree of follow-up to recommendations of peer review panels,” and a performance metric could be “project impact” (NRC, 1998, p. 90). In judging the success of a particular task, many workshop participants were skeptical that citation counts are a sufficient metric, since applied science may or may not be published. Even if the research is published, it may not be widely cited, and this may be particularly true for research that is space-related. Some agencies have adopted “customer satisfaction” evaluations more analogous to the private sector. For example, the FAA polls sponsors of the research task to determine whether the final results meet their needs; similarly, the Navy requests feedback from sponsors as well as investigators and end users. Some agencies, such as the DOD and the FAA, are focusing on the extent to which the end result or product from a research task has been implemented as a measure of program success.

Within the HRP, the deliverables are used to inform whether knowledge gaps, as outlined in the Program Requirements Document, have been sufficiently closed or whether further research is needed to provide additional knowledge or “mitigation capability” (NASA, 2011b, p. 3). To communicate with stakeholders, the results of directed research tasks may be documented in the HRP’s annual reports, but in recent reports, this has rarely been done (in 2009, no deliverables from directed research tasks were explicitly included; in 2010, the results of two directed research tasks were included; and in 2011, one was described) (NASA, 2009, 2010, 2011a).

In addition to evaluating individual research tasks, several agencies and organizations conduct QI activities that assess the policies and processes involved in identifying and reviewing research tasks. Some of these QI activities are aimed broadly and look at the entire scope of business processes, as in the DOE and NSF, which each have a review by an external Committee of Visitors every 3 years. Similarly, the USDA’s Agricultural Research Service operates on a 5-year cycle, which concludes with an assessment by external reviewers. Others are narrower in focus, as in the NIH, which completed a specific evaluation of its peer review process in 2008 (NIH, 2008). The NIH participant also noted that the concept of “continuous review of peer review” is realized in part through surveys of research applicants and awardees as well as of reviewers and NIH staff. Sometimes the results of an assessment are published; for example, Friedl (2005) published a 10-year retrospective of a research program within the DOD, which looked at how the peer review process worked for military women’s health research funded during that time period. The study found value in choosing to fund a task based on the reviewers’ comments and not just their scores. The importance of performing QI activities that are both specific to peer review and also that encompass the “efficacy of the system in fostering excellence in research” has been highlighted previously (NRC, 1995, p. 5). At this time, the HRP does not have a formal ongoing QI mechanism for its directed research merit assessment process.

• Similar to other agencies and organizations, NASA asks for regular reporting during and after a directed research task (e.g., annual and final reports) to monitor its progress. Other agencies have means of enforcing compliance with reporting requirements (e.g., suspension of funding for investigators who have not completed their reports), which could inform the HRP approach to reporting for directed research tasks, where compliance rates currently lag those for other research types.

• As described in the Integrated Research Plan, the HRP documents and evaluates the results and impacts of its directed research and revises its risks and gaps accordingly. Consistent with Recommendation 2, the HRP may want to consider expanding its documentation of the deliverables and long-term impacts of its directed research tasks, including providing follow-up on completed tasks in its annual reports and on its website, as a part of increasing the transparency of the directed research program.

• Continuous QI efforts focused on the HRP merit assessment process are needed to ensure that the HRP has effective processes in place to identify directed research tasks that are feasible and valuable and that have a high probability of success. In addition, the HRP needs an improved understanding of the quality of the overall directed research process in terms of whether the HRP receives timely and useful results that fill programmatic research gaps. The committee believes that the metrics used to assess the process should ensure sufficient and thorough evaluation, but be kept in balance with the size and scope of the directed research program so as to not overburden it. Implementing continuous QI mechanisms could improve processes and inform decision making about which proposed directed research tasks will best contribute to the HRP’s mission.

RECOMMENDATION 4 Conduct Continuous QI Efforts

NASA should consider conducting continuous QI efforts to evaluate and improve the merit assessment process for the HRP directed research program by using a set of quantitative and qualitative metrics. These metrics could include

• activity metrics (e.g., length of time to completion of the merit assessment process; degree of concordance among peer reviewers; degree of concordance between the Program Scientist’s decisions regarding authority to proceed and the results of the peer review; feedback from relevant stakeholders, including researchers and reviewers); and

• performance metrics (e.g., whether the task resulted in a usable deliverable; whether it met the operational or strategic need; whether the result was implemented or informed future work; whether the peer reviewers’ evaluation predicted the success of the task; the proportion of HRP directed research funding given to successful tasks).

Additionally, QI efforts could consider whether the task was appropriately designated as directed research, the appropriateness of the funding mechanism (e.g., grant versus contract), and whether it could have been successful through another mechanism (e.g., solicited research). Evaluation of the merit assessment process for directed research should be conducted to ensure the process and outcomes continue to meet the needs of the HRP and are aligned with its mission and strategic goals.

The committee finds that the scientific merit assessment process used by NASA’s HRP for directed research is scientifically rigorous and is similar to the processes and merit criteria used by many other federal agencies and organizations for comparable types of research, including the DOD, NIH, NSF, and USDA. The committee notes the complexity of the various merit assessment pathways in the current HRP directed research program and recommends that these be streamlined into one common pathway requiring all directed research proposals to undergo independent peer review by a panel consisting of all external reviewers or a mix of internal and external reviewers. Some of the proposals that are currently considered directed research could be redirected as supporting activities and decided on more expeditiously by the Program Scientist without undergoing peer review. Moreover, broad and ongoing input on research opportunities may be possible through the use of standing BAAs. In exploring the processes used by other agencies and organizations, the committee notes best practices in ensuring the transparency of the directed research process and also recommends that the HRP increase its communications about directed research. Additionally, continuous QI efforts to evaluate and improve the HRP merit assessment process are needed to enable NASA to actively monitor the effectiveness of merit assessment and fund directed research that will be of the highest possible value to its mission in a timely manner.

The members of the IOM Committee on the Review of NASA Human Research Program’s Scientific Merit Assessment Processes appreciate the opportunity to provide input to the HRP. We would be pleased to brief you and your staff regarding the findings and recommendations provided in this letter.

Sincerely,

James A. Pawelczyk, Chair

Committee on the Review of NASA Human Research Program’s Scientific Merit Assessment Processes