The workshop participants began the second day’s discussions with four panels that sought to plunge deeper into some of the issues that arose during the five scenario presentations. The topics varied from rethinking the user-vendor relationship in robotics procurement, to enhancing the planning capabilities of agents and robots, to the deep-level meaning of communication, to the potential for real collaboration between humans and robots. The panels addressed research challenges and, in some cases, suggested possible approaches.

Panel One: Design, Evaluation, and Training

Moderator: Robert Hoffman

Group Members: Michael Freed, Robert Hoffman, Don Mottaz, Mark Neerincx, Jean Scholtz

The panel moderator, Robert Hoffman, presented the panel’s view of the design-build-test-deployment process for human-machine systems. The panel found problems at every stage of the process. Users cannot describe what they want because they don’t know what is possible. They also don’t speak the same substantive language as the engineers who will build the systems, which all but guarantees a mismatch between what the user wants and what the engineer builds. The human models used to construct human-machine systems are usually too crude; at the opposite end of the spectrum, cognitive modeling architectures such as Soar and ACT-R, while useful for many purposes, may be too complex. Hoffman spoke of the paradox underlying the construction of human-machine systems: although these systems would be better overall if they were based on more complex cognitive models, as the models become more complex they also become more “brittle,” thus changing the original requirements of the system. Next, system components are often built in isolation from one another, with the result that they don’t fit the overall workflow. Finally, user training on the system is too little and too late, and it tends to focus more on the designer’s theory than on the user’s needs.

To fix these problems, the panel offered a wish list of changes to current practice. The process should (1) base models on knowledge and meaning, not just on data; (2) include hypotheses in cognitive models to make them less rigid and more adaptive; (3) create the role of a “modeler” who can bridge the worlds of the user and the engineer-builder; and (4) colocate testbeds with deployed systems so that user involvement is rich from the start. Moreover, (5) engineers should not only train but also mentor the users of the system so that users get the most out of it. In addition, product deployment should not be the end of the relationship between the user and the vendor but rather the beginning of a second stage of empirical study by the vendor to deal with the system’s unintended consequences (both positive and negative) once it is in place. This second stage will improve the usability of the system at that particular site while offering lessons to the vendor for the next generation of the system.

During the Q&A portion of this panel discussion, Lin Padgham suggested that a looser funding model that focuses on the end product as opposed to item-by-item accounting could result in a cocreative process that more accurately reflects the vendor’s capabilities and the user’s needs.

Panel Two: Intent Recognition, Execution Monitoring, and Planning

Moderator: Andreas Hofmann

Group Members: Michael Beetz, Tal Oron-Gilad, Andreas Hofmann, Paul Maglio, Dirk Shulz, Lakmal Seneviratne, Liz Sonenberg, Satoshi Tadokoro

The moderator, Andreas Hofmann, spoke on behalf of the panel. As he explained, the panel focused on the challenges of intent recognition, execution monitoring, and planning that are associated with the sense-deliberate-act loop (also known as the robotics paradigm). He explained that most sensor-based data is “noisy” and requires filtering for quick and correct evaluation. The panel suggested that more sophisticated algorithms based on plan context might be able to filter out the “noise” related to visual and tactile sensors. This notion led the panel to consider the planning phase of the robotics paradigm: How would the agent(s), robot(s), or mixed teams assess the success of the plan itself?

Hofmann noted that it is unrealistic to define a plan’s successful outcome in terms of the specific assumptions made going in. A more realistic strategy would be to evaluate the plan’s success continually. Here the panel suggested that execution should include an evaluation capability that can give a probabilistic estimate of the plan’s success. If the estimate drops below a certain threshold, a human operator would be called in to re-plan or otherwise alter the original plan. The challenge, according to Hofmann, is to detect this sooner rather than later in the course of the plan’s execution. Another challenge is that it may be difficult to design appropriate predictors for estimating a plan’s success, because humans use a variety of methods to perform tasks. Thus it may be difficult to assess when execution has dipped below the expected threshold, because there are, indeed, many potentially acceptable thresholds.
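The continuous-evaluation idea can be sketched in a few lines of Python. This is a minimal illustration, not the panel’s method: it assumes that each remaining plan step has a success probability re-estimated at every monitoring cycle, that steps fail independently (so the plan-level estimate is their product), and that a fixed escalation threshold is given. The function names and the monitoring trace are hypothetical.

```python
from math import prod
from typing import List, Optional, Sequence


def plan_success_estimate(remaining_step_probs: Sequence[float]) -> float:
    """Probability that every remaining step succeeds, assuming steps fail independently."""
    return prod(remaining_step_probs)


def first_escalation(snapshots: List[Sequence[float]], threshold: float) -> Optional[int]:
    """Return the first monitoring cycle at which the estimate drops below threshold
    (the point where a human operator would be called in), or None if it never does.

    Each snapshot holds the current per-step success probabilities for the steps
    still to be executed, as re-estimated (hypothetically) from sensor data.
    """
    for cycle, remaining in enumerate(snapshots):
        if plan_success_estimate(remaining) < threshold:
            return cycle
    return None


# Hypothetical monitoring trace for a three-step plan.
trace = [
    [0.95, 0.90, 0.90],  # before step 1: estimate is about 0.77
    [0.90, 0.90],        # after step 1: estimate is about 0.81
    [0.60, 0.90],        # new sensing lowers step 2's odds: estimate is about 0.54
]
print(first_escalation(trace, threshold=0.6))  # prints 2
```

Checking at every cycle is what allows escalation to happen as early as possible. The difficulty the panel raised, choosing good predictors and a meaningful threshold, is exactly what this sketch takes as given.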

Next the panel turned to a basic problem of the planning phase of the robotics paradigm: in the real world, environments are uncertain and dynamic; moreover, sometimes, plans are simply infeasible. Because data keep changing, planning is computationally intensive. The challenge is for the planning phase to happen quickly enough to keep the loop robust. The panel speculated that incremental planning algorithms could address the changing data challenges. Hofmann also suggested collaborative plan diagnosis as a promising area of research. This method views plan failure as a diagnostic problem—algorithms look for conflicts that need to be resolved or constraints that need to be removed to make the plan feasible. Some members of the panel also suggested that planning domains could be made more realistic if they were defined by the robot’s action capabilities.
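Viewing plan failure as a diagnostic problem can be illustrated with a deliberately naive relaxation search. The sketch below is an assumption-laden stand-in for the diagnosis algorithms Hofmann referred to, which the text does not specify: it brute-forces the smallest sets of constraints whose removal makes some candidate plan feasible and returns all of them, so that a human teammate can choose which relaxation to accept. The route data and constraint names are hypothetical.

```python
from itertools import combinations
from typing import Callable, Dict, List, Set

Plan = Dict[str, float]
Constraint = Callable[[Plan], bool]


def minimal_relaxations(candidates: List[Plan], constraints: Dict[str, Constraint]) -> List[Set[str]]:
    """Return every smallest set of constraints whose removal makes some candidate feasible.

    Brute force over subsets of constraints, smallest first (exponential in their number).
    An empty list means no relaxation helps, e.g. when there are no candidate plans.
    """
    names = list(constraints)
    for k in range(len(names) + 1):
        found = []
        for dropped in combinations(names, k):
            kept = [constraints[n] for n in names if n not in dropped]
            if any(all(check(plan) for check in kept) for plan in candidates):
                found.append(set(dropped))
        if found:
            return found
    return []


# Hypothetical example: two routes, neither meets both the deadline and the battery limit.
routes = [
    {"minutes": 25, "battery_used": 0.6},  # fast route
    {"minutes": 40, "battery_used": 0.3},  # slow route
]
limits = {
    "deadline": lambda p: p["minutes"] <= 30,
    "battery_reserve": lambda p: p["battery_used"] <= 0.5,
}
print(minimal_relaxations(routes, limits))  # prints [{'deadline'}, {'battery_reserve'}]
```

Practical diagnosis algorithms typically use the conflicts they discover to prune this search rather than enumerating every subset; the exhaustive loop here only makes the idea concrete.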

Hofmann concluded his discussion with a set of questions about the mental modeling that constitutes the foundation of intent recognition, execution monitoring, and planning. What is the right level of abstraction: quantitative, qualitative, or hybrid models? How should shared plans be represented? How should agent resource capabilities be represented? How should human resource capabilities be modeled? How should the human psychological or operational safety model be represented? Which algorithms are best for learning the models that support estimation and control?

Panel Three: Communication

Moderator: GJ Kruijff

Group Members: Frank Dignum, GJ Kruijff, Yukie Nagai, Daniele Nardi, Lin Padgham, Matthias Scheutz, Candy Sidner

GJ Kruijff, the moderator, provided a summary of the panel’s discussions. Kruijff indicated that the panel addressed fundamental problems associated with communication—not simply the sharing of words and gestures but the depth of meaning that words and gestures represent. The panel’s goal was not to solve these problems as much as to describe them. Every dimension of communication, Kruijff noted, is composed of multiple sub-dimensions that affect the communication process. For example, what are the tasks in which communication occurs: single events or repeated ones? Structured or unstructured? Well or poorly understood? How many actors are communicating? What kind of knowledge is necessary for communication: Domain specific? Common sense? What kind of communication is going to take place: Face-to-face or side by side?

In the realm of human-robot interaction, Kruijff remarked, there are two functions that describe collaboration: teamwork and taskwork. Teamwork in this context refers to humans and robots coordinating their behavior to accomplish a task. Taskwork refers to the “doing” of the task itself.

Even before the task itself is undertaken, the team must communicate how to coordinate its behavior: negotiating who does what, who is responsible for what, who is expected to succeed at a particular task, and so on. Plans may need to be adjusted because things in the environment have changed. A robot needs to understand all these different aspects of the team’s coordination, as well as what progress means, for itself and for others, in carrying out these activities. The robot also needs to be able to identify when it or others need help.

Within the context of carrying out a task, communication is used to build up shared beliefs, or “common ground,” among the actors so that everyone on the team is at the same level of understanding. Traditional approaches to modeling, and to various other AI problems, assume an objective model that everyone can map into. But from the perspective of communication, this is not the case. All the members of the team perceive and act subjectively. They have their own experiences and their own understanding of the world. This subjectivity is especially pronounced between the robots and the humans on a team, Kruijff remarked.

How, then, is it possible to align all team members, given that people and robots perceive quite differently? Kruijff speculated on behalf of the panel that the problem of communication is how to fit everything together: simple communication, the social dimensions, collaboration in terms of planning and execution, and motivations and expectations. Scheutz ended the panel’s presentation by observing that sharing the deep representation of meaning is difficult enough between one human and one robot; it will take considerable research to be able to achieve this at the level of multi-member teams.

Panel Four: Collaboration

Group Members: Terry Fong, Mike Goodrich, Alex Morison, Gopal Ramchurn, Manuela Veloso, Tom Wagner, Rong Xiong

In contrast to the other panels, which chose a moderator to speak for the entire group, each member of this group discussed the aspects of collaboration of interest to him or her. Alex Morison discussed how collaboration involves reciprocity; team members cannot achieve their own goals without helping others. This means that each team member gives up some of his or her own goals in order to help others and to accomplish the overall mission. Yet in the world of human-robot interaction, Morison noted, reciprocity is not necessarily standard operating procedure. If a pilot sees a UAV, for example, he has orders to get out of the way because he cannot be sure what the UAV is going to do. Thus collaboration is still a work in progress for human-robot teams.

For Terry Fong, collaboration should be seen as a spectrum ranging from loosely coupled, even independent, coactivity to tightly coupled interaction. He suggested that a team can be productive as long as it coordinates what it does. Organization, which he defines here as the allocation of tasks, is central to successful coordination.

According to Rong Xiong, evaluation is an integral component of collaboration. Robots need a basis for self-evaluation that is derived from shared information. Similarly, when a human needs help, he needs to know what the robot can do and how to ask for it.

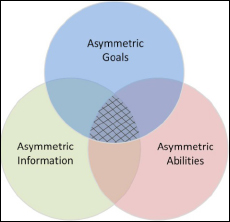

Mike Goodrich would like to see collaboration research over the next ten years take place in the central, hatched area where the circles of the Venn diagram overlap (shown above). Members of human-robot teams have disparate or asymmetric goals, information, and abilities, and understanding collaboration will involve accounting for and aligning these asymmetries. Goodrich’s understanding of collaboration is relevant to comments made by Jeff Bradshaw during the previous breakout session, in which he described seven myths related to autonomous systems. Taken together, these myths suggest that autonomy is more multidimensional, complex, and collaborative than it is often portrayed in the literature.

Manuela Veloso suggested that a robot’s planning algorithms (for example, Partially Observable Markov Decision Processes, or POMDPs, which enable robots to plan paths under partially observable conditions) would benefit from including models of the humans the robot may encounter in its environment. Such models would help the robot infer human intentions. In contrast to Goodrich’s approach to collaboration, Veloso questioned whether complexity is really necessary for collaboration. Does the robot need to know why the human needs it to do a particular task, such as “go to the door”? In her view, it would be a great contribution just to be able to coordinate on minimal knowledge of intentions or needs.
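A human model of the kind Veloso described would enter a POMDP through the robot’s belief update. The sketch below shows only that piece, under stated assumptions: a discrete set of hypothetical human goals, a hand-written observation model P(observation | goal), and goals that do not change between observations. It does not solve a POMDP (there are no actions, rewards, or policy); it simply shows how observed behavior shifts the robot’s belief about what a person intends.

```python
from typing import Dict

Belief = Dict[str, float]               # probability of each possible human goal
ObsModel = Dict[str, Dict[str, float]]  # P(observation | goal)


def update_belief(belief: Belief, observation: str, obs_model: ObsModel) -> Belief:
    """Bayes rule: the new belief in goal g is proportional to P(observation | g) * b(g).

    Assumes the human's goal stays fixed between observations, so there is no
    transition step, only the observation update used inside a POMDP belief filter.
    """
    weighted = {g: obs_model[g].get(observation, 0.0) * p for g, p in belief.items()}
    total = sum(weighted.values())
    if total == 0.0:
        raise ValueError(f"observation {observation!r} is impossible under every goal")
    return {g: w / total for g, w in weighted.items()}


# Hypothetical human model: which way a person turns, given what they intend to do.
model = {
    "go_to_door": {"turns_left": 0.8, "turns_right": 0.2},
    "go_to_elevator": {"turns_left": 0.3, "turns_right": 0.7},
}
belief = {"go_to_door": 0.5, "go_to_elevator": 0.5}
belief = update_belief(belief, "turns_left", model)
print(max(belief, key=belief.get))  # prints go_to_door
```

The robot ends up acting on the most likely goal without representing why the person has it, which is close in spirit to Veloso’s suggestion that coordination might rest on minimal knowledge of intentions.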

Tom Wagner defined coordination as the process of managing interdependencies between tasks or plans and suggested taking Veloso’s POMDP approach a step further: making interdependence explicitly represented so that a robot could draw a human’s attention and enlist his or her help. For example, a robot waiting at an elevator would, instead of waiting opportunistically for the elevator door to open, ask a person walking by to press the button for it.
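An explicit representation of interdependence, in the sense Wagner described, can be as simple as a table of which actions must happen before which. In the minimal sketch below, the action names, the dependency table, and the robot’s capability set are all hypothetical: the robot orders the actions its goal depends on and flags any prerequisite it cannot perform itself as one to request from a passerby, as in the elevator example.

```python
from typing import Dict, List, Set, Tuple


def linearize(goal: str, prerequisites: Dict[str, List[str]]) -> List[str]:
    """Order actions so every prerequisite precedes the action it enables.

    Depth-first post-order traversal; assumes the dependency graph has no cycles.
    """
    order: List[str] = []
    visited: Set[str] = set()

    def visit(action: str) -> None:
        if action in visited:
            return
        visited.add(action)
        for pre in prerequisites.get(action, []):
            visit(pre)
        order.append(action)

    visit(goal)
    return order


def assign(goal: str, prerequisites: Dict[str, List[str]], robot_can: Set[str]) -> List[Tuple[str, str]]:
    """Pair each action with its performer: the robot if capable, otherwise ask a human."""
    return [(a, "robot" if a in robot_can else "ask human") for a in linearize(goal, prerequisites)]


# Hypothetical interdependencies for the elevator example.
needs = {
    "deliver_to_floor_3": ["ride_elevator"],
    "ride_elevator": ["press_call_button", "enter_elevator"],
}
capabilities = {"enter_elevator", "ride_elevator", "deliver_to_floor_3"}
for action, who in assign("deliver_to_floor_3", needs, capabilities):
    print(action, "->", who)
```

Because the dependency on the call button is explicit, the robot knows which step is blocking it and that it must ask for help, rather than waiting for the door to open on its own.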

Gopal Ramchurn suggested that research remains to be done on finding the balance between interaction design and mechanism design, so that the rules of engagement between and among humans and robots take team members’ incentives into account.