Human Cognitive Modification as a Computational Problem

COMPUTATION FOR HUMAN PERFORMANCE MODIFICATION

Human performance modification (HPM) can be considered as an application of cognitive technologies in which different components have to work as an integrated system. The components and the system architecture and design are determined by the specifics of the HPM application. At a high level, all functional cognitive systems must have technologies to sense and measure, to process and analyze, and to control or achieve a desired outcome (Norman, 1980). For example, a system that assists a battle-vehicle operator in maneuvering through rough terrain might record images with a camera, process the information (for example, categorizing and locating objects in scenes), and alert the operator.

Many of today’s simpler information-processing requirements for HPM are met by conventional computing technologies, which are not discussed here. In discussing more advanced computing concepts, the committee does not distinguish between different forms of computing implementation (such as wearable computing versus stationary systems) but rather focuses on important trends in information technology (IT) development and research for both military and commercial computing applications. The trends include the challenge of managing what is referred to as big data, the need for alternative computational architectures, and an increasingly close coupling of humans and machines (as in augmented reality).

One of the greatest challenges for realizing more complex and advanced HPM applications in the cognitive realm is posed by the need for processing large amounts of often unstructured data, such as speech or video. In recent years, the notion of big data has emerged to describe this trend. More and more datasets are collected, and their size and complexity are not only well beyond human cognitive capabilities, but also often beyond today’s computer information-processing capabilities. The current projection of data growth is 40 percent per year, meaning that data volume would double every 2 years (Manyika et al., 2011; Miller, 1956; Bohn and Short, 2010; Simon, 1971; Maguire et al., 2007).
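The link between a 40 percent annual growth rate and a 2-year doubling time can be checked with a short calculation. The sketch below assumes constant compound growth; the function names are illustrative and do not come from the cited sources.

```python
import math


def doubling_time_years(annual_growth_rate: float) -> float:
    """Years needed for a quantity to double under constant compound growth."""
    if annual_growth_rate <= 0:
        raise ValueError("annual_growth_rate must be positive")
    return math.log(2) / math.log(1 + annual_growth_rate)


def growth_factor(annual_growth_rate: float, years: float) -> float:
    """Multiplicative growth after the given number of years."""
    return (1 + annual_growth_rate) ** years


if __name__ == "__main__":
    # 40 percent per year is the projection quoted in the text.
    print(f"Doubling time: {doubling_time_years(0.40):.2f} years")
    print(f"Growth over 2 years: x{growth_factor(0.40, 2):.2f}")
```

Under this assumption the doubling time comes out slightly above 2 years, so "double every 2 years" is a reasonable rounding of the projection.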

The projection on data growth is informed by the trend toward collecting data on everything, with numerous commercial ventures attempting to contain all the data in the world,1 along with intelligence agencies and other government agencies. Data mining, or machine learning, is now a big business. Data fusion, which originally meant the combining of data gathered with different kinds of sensors, now means the combining of data of various sorts from different databases and repositories. The data are often in incompatible formats with different categorization rules and standards and different semantics, but these limitations are being overcome. The current approach of using structured databases to provide reasonable answers to queries is being supplanted by emerging technologies that are able to interpret, address, and integrate unstructured, natural data by using unstructured, natural-language queries that more closely reflect human language processing and analytic approaches.

1See, for example, http://www.factual.com.

Big data as a concept has implications for the demands placed on humans to understand rapidly growing bodies of information and for the possibility of augmenting human cognition through breakthroughs in computer-based processing. Sensor suites used in science, military applications, and complex system operations can flood a human operator with data and result in a decrease in system performance due to cognitive overload. Computational systems to augment human cognition are being developed to address this problem. Research is addressing the best ways for people to interact with and control systems that are increasingly complex, but the research seems to be limited in its generalizability. An example is the “optimal” cockpit in military aviation. Despite nearly 100 years of experience, the optimal design of cockpit displays, controls, and automation tradeoffs is still controversial. The question of how much a human should be involved in the operation of a system will be critical as HPM technologies associated with big data continue to evolve.

Big data has been made possible by a series of IT advancements, including the reduction in the cost of data storage and computing, the emergence of the Internet, widespread deployment of video and other sensor technologies, advanced computer networks, cloud-computing technologies, social networks, and smart phones. Although the notion of big data is discussed primarily in the context of commercial and business applications, where it promises great advances in productivity, it is clear that big data technologies will be critical for—and beneficial to—military-oriented HPM applications.

Managing big data poses substantial challenges, and the following discussion assumes that organizations, countries, or other entities that make the most progress toward solving the fundamental scalability challenges associated with big data and cognitive information processing will have the best basis for developing game-changing HPM applications.

Scientists and engineers working in the IT sector are making, and will continue to make, substantial improvements in many areas, including leveraging high-speed networking, parallel and cluster computer programming, cloud computing, machine learning, advanced data-storage technologies, continued scaling of Moore’s law (which states that the number of transistors on an integrated circuit will double about every 2 years), and security technologies. However, as discussed in more detail below, the current trajectory does not support future computing requirements, especially as it applies to big data and cognitive information processing. Even today, serious challenges are evident in relation to scalability and sustainability of the current trends. Three examples illustrate the problems: unsustainable energy needs, the explosion of data produced, and the current inefficiency of computing solutions that mimic human intelligence.

Energy needs are a recognized challenge. Although IT continues to provide better performance with less power consumption every year, it is now well established that past advances have not been able to keep pace with information-processing demands (Izydorczyk, 2010). Specifically, a study estimated total U.S. data-center energy consumption in 2005 to be about 1.2 percent of total U.S. consumption, reflecting growth of about 15 percent per year since 2000 (Koomey, 2007). Total power consumption for IT is still low compared with other sectors, but the annual growth rate of 15 percent (that is, doubling every 5 years) is alarming and suggests that the continued expansion of IT is not sustainable. Clearly, improved IT energy efficiency will be critical to addressing the sustainability of growth. Major innovations will be required to scale big data technologies to levels where they will provide the anticipated benefits (U.S. Code, 2008).

The explosion of data is also a recognized challenge. The problem is illustrated by the new radio telescope developed by the Square Kilometer Array (SKA) Consortium (Crosby, 2012). The telescope will produce 1 exabyte (1 billion gigabytes) of data every day. To put that into context, the whole Internet today handles about 0.5 exabyte per day. It would be naïve to assume that a viable exabyte computer can be built without major technology innovations. The innovations will need to include new architectures, new approaches to software, and new hardware technologies, including advanced accelerators, 3-D stacked chips for more energy-efficient computing, novel optical interconnect technologies to optimize data transfer and communication, and new high-performance storage systems based on next-generation tape systems and novel phase-change memory (IBM, 2012).

Finally, mimicking of human intelligence in today’s computing environment is recognized as inefficient. The IBM Watson computer is arguably one of the most advanced computing systems that specializes in natural human language and provides specific answers to complex questions at high speeds (Ferrucci, 2012). Specifically, the system “understands” questions in natural language, finds information in relevant sources, and determines the confidence of different options and responses with factual answers. Watson applies technologies from different fields, such as machine learning, natural-language processing, information retrieval, knowledge representation, and hypothesis generation. In February 2011, the Watson computer won at Jeopardy! against two all-time champions. But the cost of such achievement is high: the system consists of 90 Linux servers, 2,880 Power7 processor cores (3.55 GHz), and almost 16 terabytes (16,000 gigabytes) of random-access memory. The computer comes in four racks and weighs more than 10,000 pounds, needs 25 tons of cooling equipment, and consumes around 100 kW of electricity (the consumption of 40–50 households).

Although the Watson computer is one of the most impressive instances of artificial intelligence and cognitive information processing, it is far from the capabilities of the human brain, which by comparison uses only a few watts of electricity, weighs around 3 pounds, and fits into the palms of two hands. Specifically, given today’s technology trajectory, which improves power performance of computing systems by 25-30 percent per year, the Watson computer would still consume about 1,000 W in 15 years—still far from the power consumption of a human brain (apart from the fact that the human brain can do much more than the Watson computer).
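The 15-year projection can be reproduced with a short calculation. The sketch below assumes that a 25-30 percent annual improvement in power performance means the power needed for a fixed workload falls by that fraction each year; that reading is an assumption, and the function name is illustrative.

```python
def projected_power_watts(initial_watts: float,
                          annual_improvement: float,
                          years: int) -> float:
    """Power for a fixed workload after compounding yearly efficiency gains.

    Assumes power demand shrinks by `annual_improvement` every year.
    """
    if not 0 <= annual_improvement < 1:
        raise ValueError("annual_improvement must be in [0, 1)")
    return initial_watts * (1 - annual_improvement) ** years


if __name__ == "__main__":
    watson_watts = 100_000  # about 100 kW, as quoted in the text
    for rate in (0.25, 0.30):
        watts = projected_power_watts(watson_watts, rate, 15)
        print(f"{rate:.0%} per year for 15 years -> {watts:,.0f} W")
```

Under this reading the two rates bracket the figure of about 1,000 W given above, which remains far above the few watts attributed to the brain.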

It is clear from the three challenges discussed above that innovations in computing infrastructure are required if important advances are to be made. To provide context, the committee describes the current computational structure briefly below and then discusses needed changes.

Conventional Computers and Current Trends

The fundamental reason that the human brain is far superior to any of today’s computers is that the brain operates in a completely different manner from a conventional computer.

Conventional computers are commonly referred to as von Neumann machines; they were developed over 40 years ago for the applications and programming problems that were relevant then. A distinct feature of von Neumann machines is that they share a bus between a central processing unit (CPU) and memory. Information cannot be exchanged back and forth between the CPU and the memory at the same time, and this makes the bus a bottleneck, commonly referred to as the von Neumann bottleneck (Backus, 1978). Before an instruction or word can be sent through the bus, the CPU must know its address, which must have been sent beforehand. The von Neumann architecture results in an instruction-at-a-time processing approach, substantially unlike that of the human brain, which uses a massively parallel system of slower processing units (neurons) that are connected by weights.2 The weights are called synapses; each neuron has about 10^3 synapses. The strengths of the synapses are constantly modified by the learning experience and memory. A human brain has about 10^10 neurons (processing units) working in the kilohertz regime, whereas a CPU might have only four cores working in the gigahertz regime.

2It should be noted that the comparison between the human brain and the von Neumann architecture is recognized as limited in applicability; other characteristics of the human brain slow responses in some circumstances, as in socially constrained environments.

Although deficiencies of the von Neumann architecture have been known for a long time, the limitations have become evident only recently because the continued increase in clock frequency of microprocessors effectively hid the impact of the von Neumann bottleneck. In the past, faster transistor performance, achieved by shrinking the transistor device, offset the effects of the von Neumann bottleneck. With transistor sizes in the 20-nm regime, fundamental limitations have emerged whereby device performance is governed by finite size (or quantum) effects. As a consequence, whereas in the past transistors and microprocessors could be made faster without increasing power consumption (a fundamental feature of Dennard’s scaling laws), this is no longer possible (Dennard et al., 1974). The phenomenon is generally referred to as the power wall (Meenderinck and Juurlink, 2009). To continue to provide computational performance improvements at constant power, industry has moved quickly to multicore processors and accelerators (Iancu et al., 2010; Clark et al., 2005). That approach has delayed the impact of the power wall, but the multicore approach is not a “silver bullet.”

As a consequence of the trend toward multicore processing, another challenge has emerged: how to support each core with enough memory. Most of today’s multicore microprocessor chip space is memory or cache (memory wall) (Zia et al., 2009). In addition, the use of—and reliance on—specific accelerators to provide more performance has made the CPU less general. The variety of circuits and boards is exploding, and with them the size of the teams required for research and development; all this adds cost to the design and building of computing systems.

The current trends in the industry toward multicore processors, cell processors, general-purpose graphics processing units (GPUs), accelerators, and so on, can be viewed as “incremental” stages toward a non–von Neumann computing paradigm (Gschwind, 2007; Nickolls and Dally, 2010). These trends are expected to continue over the next 10-15 years. Nevertheless, major technology innovations would be required in the next 10-15 years to produce a Watson-like supercomputer that would be hand-held and have a footprint and power consumption comparable with those of a human brain. Such a device would have a game-changing impact, not only in the commercial arena for big data processing, but also for military and HPM applications.

Thus, the IT industry, driven by big data requirements and power efficiency needs, is at a critical juncture. Three scenarios can be envisioned for the next 15 years: business as usual, computing with low-voltage devices, and neuromorphic computing (see Table 2-1). These scenarios will most likely coexist in parallel, but also compete against one another. Each succeeding scenario is more disruptive to the status quo but promises greater improvements in IT energy efficiency and thus has more potential for cognitive computing applications.

It is expected that the “business as usual” scenario will continue to play an important role using (basically) today’s von Neumann architectures in which computations are carried out sequentially under program control by repeatedly fetching an instruction, decoding it, and executing it. Expected improvements include technologies for improving system integration (such as 3-D silicon, silicon photonics, storage-class memory, and so on) and new nanoscale devices (such as nanowires and carbon electronics).
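The fetch, decode, and execute cycle described above can be illustrated with a toy machine. The sketch below is a deliberately minimal accumulator machine; the four opcodes and the memory layout are hypothetical and do not correspond to any real instruction set. Program and data share one memory, so every step goes through the same access path, which is the source of the von Neumann bottleneck.

```python
def run(memory, max_steps=1000):
    """Run a toy accumulator machine whose program and data share one memory."""
    acc = 0  # accumulator register
    pc = 0   # program counter
    for _ in range(max_steps):
        opcode, operand = memory[pc]  # fetch the next instruction
        pc += 1
        if opcode == "LOAD":          # decode, then execute
            acc = memory[operand]
        elif opcode == "ADD":
            acc += memory[operand]
        elif opcode == "STORE":
            memory[operand] = acc
        elif opcode == "HALT":
            return memory
        else:
            raise ValueError(f"unknown opcode: {opcode}")
    raise RuntimeError("step limit reached without HALT")


# Addresses 0-3 hold instructions; addresses 4-6 hold data.
PROGRAM = [
    ("LOAD", 4),
    ("ADD", 5),
    ("STORE", 6),
    ("HALT", 0),
    2,  # address 4: first operand
    3,  # address 5: second operand
    0,  # address 6: result
]

if __name__ == "__main__":
    final_memory = run(list(PROGRAM))
    print(final_memory[6])
```

Each instruction is processed strictly one at a time, in contrast to the massively parallel operation attributed to the brain.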

As an alternative to the first scenario, new architectures (von Neumann and non–von Neumann) could emerge that could leverage very low-voltage (but also lower-performing) device technologies. This scenario would allow many more devices on a computer chip than today (hundreds of billions) while improving the energy efficiency of computation. It could include such technologies as spintronics.

The third scenario involves reconfigurable computing to realize non–von Neumann machines. It might lead to much more energy-efficient, brain-like computers without programming. These computers would feature self-learning, and would adjust to tasks and requirements with enhanced cognitive capabilities. As will become clear, this direction will require research and development on new neuromorphic devices and circuits in which computing elements and memory are “fused” together or finely interleaved (Indiveri et al., 2011). Because this scenario would fundamentally change the nature of computing, it might be more than 15 years before such technologies are ready for real-world applications.

TABLE 2-1 Computing Technology Scenarios

| Technology Scenario | Key Technologies | Programming | Efficiency Gains and Cognitive Capabilities | Architecture |
| --- | --- | --- | --- | --- |
| 1. Business as usual | 3-D packaging, storage-class memory, silicon photonics, nanowires, carbon electronics | Computing with programming | Moderate | Von Neumann |
| 2. Computing with low-voltage devices | Low-voltage devices, spintronics | Some programming | Medium | Von Neumann and non–von Neumann |
| 3. Neuromorphic computing | New neuromorphic circuits, phase-change memory, memristors | Computing with no programming | High | Non–von Neumann |

Innovations in Computers for Cognitive Information Processing

Some types of computer hardware (optical, analog, and quantum computers) are better suited than others to realizing a non–von Neumann computer architecture. Such architectures may use quantum and nonquantum information-processing methods. Research on alternative non–von Neumann computers is active around the world. The challenges facing the development of brain-like computers are enormous: very dense interconnect requirements between the neuron-like computing units, space constraints for the logical units, the analog nature of the neurosynaptic functions of the brain, and complex configuration requirements.

There have been important advances in reconfigurable computing in recent years, particularly in embedded systems (Hartenstein, 1997). Unlike hard-wired technology in the form of application-specific integrated circuits (ASICs) or CPUs, reconfigurable computing uses software-programmed logic. A circuit is flexible and is mapped to the application. Even more important, the circuit can be changed over the life of the system or application. In essence, reconfigurable computing allows the building of intelligent circuits that can be adjusted on the basis of experience and learning. The most common form of reconfigurable computing uses field-programmable gate arrays (FPGAs), which have an array of logic units. The functionality of each of these units can be set by programmable configurations. The connections between the elements or logical units are also programmable, and this makes an FPGA as flexible as software. FPGAs are well suited for artificial neural networks (NNs) or spiking neural networks (SNNs) because of their parallel and distributed network of relatively simple processing units, which can be dynamically adapted so as to exploit concurrency and rapidly reconfigured to adapt to the weights and topologies of an NN (Ghani et al., 2006; Glackin et al., 2005; Upegui et al., 2005; Pearson et al., 2007; Ros et al., 2006).
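The idea that the function of a logic unit is set by configuration rather than by wiring can be sketched in a few lines. The example below models a logic unit as a look-up table, which is a common way such units are realized; the text does not specify this, so it is an assumption, and the class and method names are hypothetical.

```python
class LookupTable:
    """A k-input logic unit whose function is defined by configuration bits."""

    def __init__(self, num_inputs, config_bits):
        self.num_inputs = num_inputs
        self.config_bits = []
        self.reconfigure(config_bits)

    def reconfigure(self, config_bits):
        """Load a new truth table, changing the function without new hardware."""
        if len(config_bits) != 2 ** self.num_inputs:
            raise ValueError("need one configuration bit per input combination")
        self.config_bits = [1 if bit else 0 for bit in config_bits]

    def evaluate(self, *inputs):
        """Use the input bits as an address into the truth table."""
        if len(inputs) != self.num_inputs:
            raise ValueError("wrong number of inputs")
        index = 0
        for bit in inputs:
            index = (index << 1) | (1 if bit else 0)
        return self.config_bits[index]


if __name__ == "__main__":
    # Truth-table order for inputs (a, b): 00, 01, 10, 11.
    unit = LookupTable(2, [0, 0, 0, 1])  # configured as AND
    print(unit.evaluate(1, 1))
    unit.reconfigure([0, 1, 1, 0])       # same unit, now configured as XOR
    print(unit.evaluate(1, 1))
```

The same unit computes a different function after reconfiguration, which is the property that lets a circuit be changed over the life of a system.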

Substantial performance improvements in selected cognitive computing tasks have been demonstrated with FPGA technology, although FPGA technology specifications are poor by comparison to ASICs (Hartenstein, 1997). The reason for the good performance with “bad” technology specifications is that effective integration density of FPGAs can be much higher by including a domain-specific mix of hardware and configured logical units within the FPGA fabrics. For example, the dataflow computer (a hardware architecture that uses FPGAs) is designed to utilize convolutional neural networks for general recognition and classification tasks, and can be applied to object recognition, face detection, or robot navigation (Farabet et al., 2009). The researchers demonstrated a system for providing “real-time detection, categorization and localization of objects in complex scenes, while consuming only 10 W,” which was “about ten times less than a laptop computer, and producing speedups of up to 100 times in real-world applications” (Farabet et al., 2011).

Although the dataflow-computer example shows potential for useful HPM applications, real game-changers for scalable NN hardware implementations face fundamental challenges. Much more efficient and smaller implementations of synaptic junctions and neuron interconnects have to be developed. For example, existing FPGA implementation limits the density of synapses as they are mapped onto logic elements of the FPGA. These logic elements are still much too big and too power-hungry to be able to scale the technology (Maguire et al., 2007). Another challenge is presented by the Manhattan-style routing structures, which cannot accommodate the very high levels of neuron interconnectivity as the numbers of neurons grow. To be scalable, the numbers of interconnections and the associated power consumption have to be addressed as more “neurons” are realized on a chip. Similar issues exist in other implementations that use system-on-chip technologies. Alternative approaches using network-on-chip concepts are being researched and promise improvements because the higher levels of connectivity can be provided without incurring a large interconnect-to-device area ratio (Harkin et al., 2009; Maguire et al., 2007).

Other exploratory approaches intended to deal with the current challenges use silicon-based neuromorphic chips (Indiveri et al., 2011). Some implementations exploit the biophysical equivalence between the transport of ions in biological channels and charge transfer in transistor channels. It has been shown that it is possible to build biophysically faithful silicon implementations of different spiking neurons (Morgan et al., 2009; Hynna and Boahen, 2009). However, the circuits are very large and complex even if advanced silicon technology is exploited. More compact circuits have been demonstrated with the goal of connecting large numbers of them on a single chip (Yu and Cauwenberghs, 2010). A common application of the very compact, basic spiking circuits is their use in neuromorphic vision sensors (Schemmel et al., 2008). Research and development aimed at optimally combining analog and digital circuits is ongoing.

In the most prominent implementation of neuromorphic circuits, the SyNAPSE (System of Neuromorphic Adaptive Plastic Scalable Electronics) project uses digital circuits that exploit the scalability, noise characteristics, and robustness of silicon technology (Olsson and Häfliger, 2008). Digital neuron circuits are supported by transposable static random-access memory arrays that share learning circuits to enable efficient on-chip learning of cognitive tasks. In this architecture, the number of learning circuits grows only with the number of neurons, and this is a clear advantage (Seo et al., 2011). Neurons are connected with a crossbar fanout. An initial configurable chip was demonstrated with 256 neurons and 64 K binary synapses; it was built in 45-nm silicon-on-insulator complementary metal oxide–semiconductor technology. The power efficiency of the chip was optimized by using a near-threshold event-driven operation.
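The quoted figures are consistent with a square crossbar: 256 input lines crossing 256 neurons gives 65,536 (64 K) binary synapses. The sketch below assumes that layout and uses a simple threshold-and-reset rule purely for illustration; it is not the neuron model or learning circuit of the actual chip, and all names are hypothetical.

```python
NUM_NEURONS = 256  # from the text
NUM_AXONS = 256    # assumption: 256 input lines, so 256 * 256 = 65,536 = 64 K


def empty_crossbar():
    """Return a NUM_AXONS x NUM_NEURONS grid of binary synapses, all off."""
    return [[0] * NUM_NEURONS for _ in range(NUM_AXONS)]


def synapse_count(crossbar):
    """Total number of synapse sites in the crossbar."""
    return sum(len(row) for row in crossbar)


def step(crossbar, active_axons, potentials, threshold):
    """Event-driven update: only axons that spiked contribute any work."""
    for axon in active_axons:
        for neuron, synapse in enumerate(crossbar[axon]):
            potentials[neuron] += synapse
    fired = [n for n, value in enumerate(potentials) if value >= threshold]
    for n in fired:
        potentials[n] = 0  # reset neurons that fired
    return fired


if __name__ == "__main__":
    crossbar = empty_crossbar()
    crossbar[0][5] = 1  # axon 0 connects to neuron 5
    crossbar[1][5] = 1  # axon 1 connects to neuron 5
    crossbar[1][7] = 1  # axon 1 connects to neuron 7
    potentials = [0] * NUM_NEURONS
    print(synapse_count(crossbar))
    print(step(crossbar, [0, 1], potentials, threshold=2))
```

Because work is done only for axons that spike, idle inputs cost nothing in this model, which is the intuition behind event-driven operation.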

Interesting device options have been proposed to mimic plasticity, which is the neurons’ ability, through their synapses, to have memory, learn, adapt, and evolve in response to their environment. For example, phase-change memory materials have been proposed to perform neurosynaptic functions (Modha and Singh, 2010). Phase-change materials are considered to be a memory technology that replaces charge-based storage in the semiconductor industry. In contrast with charge-based storage, they allow nonbinary and nonvolatile information processing. Another approach is based on a memristive device, which was initially proposed in 1976 (Rusu, 2007). Like a phase-change device, the memristor can remember its stimulation history without power (Chua and Kang, 1976).

Worldwide Research in Cognitive Computing

Research in cognitive computing is a very active and emerging field around the world; many groups and institutions are involved. It can be assumed that most innovations in this field will become pervasive and generally available, especially in light of the commoditization and globalization trends of the IT industry. A number of foreign groups are using FPGAs and reconfigurable computing for neural networks:

• Advanced Computing Research Centre (University of South Australia, Adelaide, Australia),

• Bio-Inspired Electronics and Reconfigurable Computing Research Group (National University of Ireland, Galway, Ireland),

• Department of Computer Engineering (Kocaeli University, Izmit, Turkey),

• Intelligent Systems Research Centre (University of Ulster, Magee Campus, Derry, Northern Ireland),

• Institute of Neuroinformatics (University of Zurich and ETH Zurich, Switzerland), and

• School of Information Technology and Electrical Engineering (University of Queensland, Brisbane, Queensland, Australia).

Other countries also have large programs for the development of new forms of computing. For example, the European FACETS (Fast Analog Computing with Emergent Transient States) consortium was created to drive research and development for novel neuromorphic computing architectures. The consortium includes participants in seven countries (Austria, France, Germany, Hungary, Sweden, Switzerland, and the United Kingdom). Leading research groups in this field around the world include:

• Institute of Neuroinformatics (University of Zurich and ETH Zurich, Switzerland),

• Integrated Systems Group (University of Edinburgh, UK),

• Institute of Microelectronics of Seville (National Microelectronics Center, Seville, Spain),

• Department of Electronic and Computer Engineering (Hong Kong University of Science and Technology, Hong Kong, China),

• School of Electrical Engineering and Telecommunications (University of New South Wales, Sydney, Australia),

• School of Electrical and Electronic Engineering (University of Manchester, Manchester, UK),

• Department of Informatics (University of Oslo, Oslo, Norway),

• Laboratoire de l’Intégration du Matériau au Système (Bordeaux University and IMS-CNRS Laboratory, Bordeaux, France),

• Kirchhoff Institute for Physics (University of Heidelberg, Heidelberg, Germany),

• Department of Computer Science and Engineering (Shanghai Jiao Tong University, Shanghai, China),

• Department of Computer Science and Technology (Tsinghua University, Beijing, China),

• School of Electronics and Information Engineering (Tongji University, Shanghai, China),

• Department of Electrical Engineering and Information Systems (University of Tokyo, Tokyo, Japan), and

• Chinese Academy of Sciences, China.

Today’s computers are limited by “an-instruction-at-a-time” architecture (the von Neumann paradigm) and so are not designed to support complex cognitive processing tasks. The emergence of big data will drive innovation to non–von Neumann computers, which will have enhanced cognitive computing capabilities. The IT industry has taken first steps toward non–von Neumann computers by using GPUs, accelerators, multiprocessors, and so on.

Even today’s most powerful computers are many orders of magnitude away from the cognitive processing capabilities of a human brain. Fundamental innovations in hardware, software, and programming languages are required to make more substantial progress toward a computer that has brain-like efficiency and cognitive capabilities.

Reconfigurable computing (realizable with FPGAs) has made progress toward more advanced cognitive processing capabilities and will continue to evolve. However, there are substantial challenges (related to power, size, and interconnectivity) that must be overcome before reconfigurable computing can mimic more advanced SNNs. New forms of neuromorphic chips and devices are being explored to mimic brain circuits. Most near-term HPM applications will leverage off-the-shelf, commodity computers.

COMPUTATION FOR COGNITIVE ENHANCEMENT

It is important to distinguish research from practice. Research takes place in scientific laboratories and, in general, is done by academic, industrial, or government laboratories. Research helps to develop understanding of technologies and procedures, but laboratory research is often ill suited to commercial applications, being too specialized, insufficiently robust, and often prohibitively expensive.

Practical applications are usually developed by technology incubators, startups, or companies, although applications with military implications are often developed by military laboratories. Technology development is often held as a closely guarded secret. In the commercial realm, that is necessary to maintain a competitive advantage. In military domains, it is held as a secret for similar reasons: to maintain an advantage and to keep adversaries from developing powerful new techniques.

There are several potential technologies and methods for cognitive enhancement, including the use of cognitive artifacts and the integration of many technologies to create augmented reality (AR) capabilities.

Cognitive artifacts are things or technological systems that complement and enhance human cognitive abilities. Just as a car makes a human faster and a drill or crane enhances a human’s physical capabilities, cognitive artifacts enhance human mental processing capabilities. Writing is an example of a cognitive artifact that enhances memory, communication, education, and thinking. More advanced cognitive artifacts, such as smart phones and cochlear implants, are portable. A cognitive artifact does not make a person smarter: it is the combination of the human and the artifact that is smarter or more capable than the human alone (Norman, 1993). The effects of cognitive artifacts can be observed in enhanced methods of training and in enhanced communication and visualization methods, which make it possible to enhance the performance of distributed work teams dramatically. Distributed work teams may include nonhuman components: sensors, teleoperated systems, computational devices, and autonomous robots (“automatons”). The largest benefits of cognitive enhancement will probably emerge in distributed work team concepts.

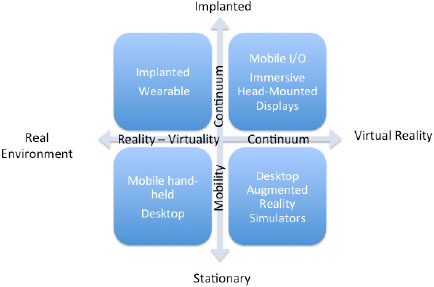

Cognitive artifacts can take on numerous forms, but it is convenient to categorize them along a mobility continuum from stationary external devices to mobile external devices to devices implanted in the body. It is also useful to understand the degree of virtuality of a device, from complete virtual reality to various degrees of augmented reality (see Figure 2-1).

FIGURE 2-1 Range of options for cognitive artifacts.

One special category is augmented reality (AR), in which, for example, a head-mounted display (HMD), eyeglass display, or embedded display identifies the objects around a person and supplies valuable information. AR has become common: smart phones have this capability, and it is being built into automobiles, sometimes within a head-up display (HUD) for the driver. Military uses dominate in this arena; HUDs are common in aircraft or mounted on the visors of combat helmets.

There are two forms of AR: strong and weak. In strong AR, information is superimposed on the perceived world. In weak AR, information is available on auxiliary displays. In the commercial world, the distinction is best illustrated by the difference between wearing goggles, which superimpose the names of perceived buildings and objects over the objects themselves (strong AR), and using a smart phone, whereby a photograph of an object is interpreted and overlaid with the name of the object (weak AR). Although the information conveyed by strong and weak AR systems is often identical, the format of strong AR is faster and easier to perceive and use than that of weak AR.

In either strong or weak AR, perceptual information is augmented by computer-generated displays that annotate the visual information and amplify or otherwise enhance auditory or tactile information. In some cases, the sensory modality may become transformed so that information in one domain is perceivable in others. Light waves outside the visible spectrum might get false coloring to make them perceivable. Acoustic signals outside the range of hearing might be modified to lie within the normal frequency range. Sensory signals from the rear or side might be transformed into something within the sphere of attention (so that a vehicle outside the sphere might be represented by vibration on the back or relevant side), and electromagnetic spectra might get transformed into vibration, acoustic signals, or visible light.
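The kind of sensory remapping described above can be illustrated with a minimal sketch. It is not drawn from any system cited in this report: the function names, the frequency bands, and the eight-actuator vibration belt are all hypothetical assumptions chosen for illustration. The first function linearly shifts an out-of-range acoustic frequency into an audible band; the second picks which vibration actuator should signal an object at a given bearing.

```python
def remap_frequency(f_hz, src_band=(20_000.0, 100_000.0), dst_band=(200.0, 8_000.0)):
    """Linearly map a frequency from src_band into dst_band.

    Both bands are illustrative assumptions (ultrasound into an audible range).
    Inputs outside src_band are clamped to its edges.
    """
    lo, hi = src_band
    f = min(max(f_hz, lo), hi)
    t = (f - lo) / (hi - lo)  # position within the source band, 0..1
    return dst_band[0] + t * (dst_band[1] - dst_band[0])


def bearing_to_actuator(bearing_deg, n_actuators=8):
    """Choose the actuator nearest a bearing on a hypothetical vibration belt.

    Bearing 0 is straight ahead and increases clockwise; actuator 0 sits at
    the front and the indices also increase clockwise.
    """
    sector = 360.0 / n_actuators
    return int(((bearing_deg % 360.0) + sector / 2) // sector) % n_actuators


if __name__ == "__main__":
    print(remap_frequency(60_000.0))   # an ultrasonic tone moved into the audible band
    print(bearing_to_actuator(180.0))  # a vehicle directly behind the wearer
```

A linear map is only one possible choice; a logarithmic map would preserve musical intervals better, and the report does not say which approach fielded systems use.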

There is a class of computational support that reduces human error, increases efficiency and throughput, and simultaneously avoids interference with command decisions or regulated (validated) software, such as some software found in the medical-device industry. In his text with Ted Selker on agents for the user interface, Henry Lieberman describes a concept that he had introduced earlier: the advisor agent (Lieberman and Selker, 2003; Lieberman, 1998). Conceptually, the advisor agent provides digital support to human cognition: real-time management of complex, multidimensional, or dynamic issue tracking (for example, what not to forget in the process of going from state A to state B, or alerts to what is changing in the background, how fast, and with what impact). Medtronic, Inc. described an advisor agent that has reduced error rate, increased throughput, and reduced costs by more than $300,000 per year in issue tracking and resolution for operating software in implantable medical devices (Drew and Gini, 2012; Drew and Goetz, 2008). The net effect has been to augment the cognitive capability of the software engineer by increasing precision and fidelity while reducing tedium.

Many of the ideas in this field are used in commercial applications, especially games and automobile safety systems. Military applications are obvious and are already being deployed.

Socially Distributed Augmented Reality

One important subject of research is socially distributed augmented cognition, whereby multiple people experience the same events and each is capable of contributing expertise. One of the presenters to this committee, Thad Starner, wore a head-mounted camera and display, a chord keyboard in his pocket, and a computer and communication system in his backpack. While in the committee meeting in Irvine, California, he was in continuous contact with his research group at Georgia Tech in Atlanta, Georgia. They were able to see what he was seeing, to hear the committee discussion, and to provide him with relevant information and literature sources in real time on his HMD. (Because of continual difficulties with the communication link, the demonstration did not always work—an illustration of both the strengths and the shortcomings of the current technology.)

This form of AR has great potential in command and decision modes, in which different external experts can provide relevant information and interpretation even while they are in remote and geographically dispersed locations.

Mobile augmented reality (MAR) extends the AR paradigm, which is to display digital information in the user’s view via HMD or other display so that objects in the physical world and digital world appear to coexist spatially (Milgram and Kishino, 1994). Through the use of location-aware mobile devices, MAR can broaden the interaction space of traditional AR, in which the augmented interaction space is limited to a predefined area, and contribute to mobile learning that takes advantage of the context and manner in which people receive information with respect to their environment (Liu and Milrad, 2010).

An example of a MAR learning tool is ARCHEOGUIDE, which provides virtual reconstructions of artifacts and buildings and site-specific information about historic places in the real environment (Gleue and Dähne, 2001). In this early MAR system, users wore an HMD and a backpack full of sensors and mobile equipment. The concept was extended to games and tourist applications, including TimeWarp (Herbst et al., 2008) and Science in the City AR (Roschelle, 2003). It has also been extended to create new forms of artistic expression: artworks that are not visible outside the MAR environment (http://www.unseensculptures.com).

In MAR systems, augmentable targets can be streets, buildings, natural areas and objects, and even people and other moving targets. MAR enables the physical environment to be directly annotated and described in situ, guiding users to pay attention to particular parts of objects in their environment. Virtually any object in the user’s environment could become a point of entry to new information; that is, “the world becomes the user interface” and material for learning (Höllerer and Feiner, 2004; Welch and Bishop, 1997). This is a great opportunity to design new educational technologies that aim to connect learning materials to students’ personal lives and experience beyond the classroom. In contrast with traditional educational materials, MAR systems can provide students with ways to connect classroom concepts more directly and interactively with examples found in the real environment.
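How a location-aware device might decide which in situ annotations to show can be sketched as follows. This is an illustrative assumption, not the method of any MAR system cited here: the function names, the dictionary layout of a target, and the 100-meter radius are hypothetical. The sketch computes great-circle distance with the standard haversine formula and returns the labels of targets within range, nearest first.

```python
import math

EARTH_RADIUS_M = 6_371_000.0  # mean Earth radius, a common approximation


def haversine_m(lat1, lon1, lat2, lon2):
    """Great-circle distance in meters between two latitude/longitude points."""
    p1, p2 = math.radians(lat1), math.radians(lat2)
    dphi = p2 - p1
    dlmb = math.radians(lon2 - lon1)
    a = math.sin(dphi / 2) ** 2 + math.cos(p1) * math.cos(p2) * math.sin(dlmb / 2) ** 2
    return 2 * EARTH_RADIUS_M * math.asin(math.sqrt(a))


def nearby_annotations(user, targets, radius_m=100.0):
    """Return labels of targets within radius_m of the user, nearest first.

    `user` is a (lat, lon) tuple; each target is a dict with hypothetical
    keys "lat", "lon", and "label".
    """
    hits = [
        (haversine_m(user[0], user[1], t["lat"], t["lon"]), t["label"])
        for t in targets
    ]
    return [label for dist, label in sorted(hits) if dist <= radius_m]


if __name__ == "__main__":
    targets = [
        {"lat": 0.0, "lon": 0.0005, "label": "fountain"},
        {"lat": 0.0, "lon": 0.01, "label": "museum"},
        {"lat": 0.0002, "lon": 0.0, "label": "statue"},
    ]
    print(nearby_annotations((0.0, 0.0), targets))
```

A deployed system would also need device orientation and some form of visual tracking to register annotations against the scene; distance filtering covers only the step of selecting candidate targets.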

In addition to its mobility, MAR is a unique enabler for mobile learning because of its multimodality and its ability to simulate new perspectives directly in the user’s environment. Through visually rich simulations and location-sensitive media, MAR can provide people with new or alternative ways of looking at the world. For example, the see-through vision system of Avery et al. (2008) allowed visualization of occluded objects in an outdoor setting, textured with real-time video information. Their MAR system gave people access to a kind of “x-ray vision”, revealing the activities that were taking place inside a building from outside. Similarly, an underground visualization system gave users 3-D models of the underground infrastructure superimposed on a construction site. The MARCH (Mobile Augmented Reality for Cultural Heritage) system was designed to help users to identify cave drawings by overlaying experts’ drawings on top of the surface of the cave (Choudary et al., 2009). The Earthquake AR explored how MAR could be used to visualize reconstructed buildings and to show other earthquake-related information on site after a disaster (Billinghurst and Grasset, 2010). After the earthquake in Christchurch, New Zealand, people walked around the city with Earthquake AR and saw life-sized virtual models of buildings where the buildings themselves had been.

In summary, instead of immersing people in a virtual world, MAR enables people to remain connected with their physical environment and invites them to look at the world from new, alternative perspectives (Ryokai and Agogino, in press). By presenting alternative perspectives in their everyday view, MAR could facilitate a richer perception of the everyday environment.

Enhanced Cognitive Performance Through Better Human-Centered Design

One important form of enhancement should not be overlooked: the design of human-centered systems. Today, many systems are not designed to enhance human performance. On the contrary, they are designed with the technical components of the system in mind and with an assumption that the person will handle any difficulties that arise. One result is that opportunities for enhancement are missed. A worse result is a high percentage of system failures, often blamed on “human error.”

A rich body of literature demonstrates that “human error” is often the result of poor design. Machines can execute precise, repetitive operations; people are poorly suited to these tasks. On the other hand, people can perform well where flexibility and creativity are involved, and can react to novel, unexpected situations, whereas machines do poorly in this regard. But instead of taking advantage of the human virtues in designing systems, we often force people to operate by machine rules and logic. The result is that most accidents (the percentage varies from 75 percent to 90 percent) are blamed on humans.

With better attention to the well-established principles of human-centered design and human-systems integration, including modeling and simulation of human cognitive performance, we could get huge enhancement in human cognitive capability with no need for new research or new applications. The principles are well known; they can be found in many books, courses, conferences, and tutorials. For example, the Army MANPRINT program tries to ensure that they are used. In the National Research Council, the Board on Human-Systems Integration has issued report after report on this problem (e.g., NRC, 1997a, 1997b, 2005, 2007, 2011). Donald A. Norman’s comments to the committee show how the past reports have been operationalized (Norman, 2011).

The United States has a competitive advantage in the development of human-centered software. However, Siemens AG, a German company, and Delmia, a French company, are the two major suppliers of advanced human simulation software in this arena. Other countries either do not pay as much attention to these issues or are still dominated by an engineering mentality that puts the needs of the machines first and the capabilities of people second. Moreover, Eastern cultures, which emphasize the needs of society over those of the individual, are at a disadvantage in this particular domain in that the individual must often learn to comply with societal demands. This philosophy seldom translates into human-centered software design.

Thoughts on the Concept of Radical Innovation in Cognitive Enhancement

A major concern for this committee is how radical innovation might suddenly appear and redefine cognitive enhancement. Although it is often believed that this is a result of detailed research and the invention of new technologies, that is not necessarily the case.

Two recent examples of off-the-shelf technologies that were given striking new uses are pertinent:

• Siri, a spinoff venture of SRI International, launched its product, was bought by Apple one month later, and is now a major part of the iPhone.

• Nintendo Wii revolutionized the game industry by using existing technologies that had been ignored by competitors.

Siri integrates Nuance Corporation's speech-recognition system (and text-to-speech) with standard simple artificial intelligence and database techniques, thus leveraging many services that were already on the Web (such as Wolfram|Alpha and Wikipedia) in a software-as-a-service architecture. Wii introduced the use of infrared imaging chips and inexpensive sensors (spurned by other game-makers because they were thinking only of bigger and faster graphics and processing) and redefined the game industry. Microsoft Kinect can be considered a descendant of the Wii concept in that once Microsoft engineers understood the Wii architecture, they were able to put together elements that were already in their laboratories with a commercially available 3-D ranging camera. Both Wii and Kinect have been shown to have HPM applications: Wii has been used for integrated cognitive and physical therapy in assisted-living environments (Rolland et al., 2007), and Kinect has been used to integrate cognitive tasks with physical movement for improvements in learning (Lo, 2012).

History repeatedly illustrates that a lot of so-called revolutionary technology comes about simply through the reframing and redefining of an existing product. New technologies and scientific breakthroughs are not always required. That presents a challenge in identifying and tracking “radical” innovation: when it is simply the result of reimagining what can be done with existing components, little warning is available.

Every technology that is meant to enhance human performance can, under the wrong circumstances, reduce human performance. This degradation of performance may be inadvertent (e.g., cell phone texting while driving) or deliberate. In an example of the latter, two Japanese researchers developed a delayed-speech feedback system (Kurihara and Tsukada, 2012). It uses the well-known phenomenon in speech whereby playing a speaker’s voice back to the speaker with a delay of 0.2 to 0.3 seconds interferes with speech production; it can produce intense stuttering. The Japanese system uses commercial components: a directional microphone coupled with a highly directional loudspeaker to record and delay a voice and then beam it back to the speaking human. It is conceivable that this technique would work over considerable distances.
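The signal-processing core of delayed feedback is a fixed delay line, which can be sketched in a few lines. The sketch below is a generic illustration and not the implementation of Kurihara and Tsukada: the class name, the sample rate, and the per-sample interface are hypothetical, and a real device would read from a microphone and write to a loudspeaker through an audio interface rather than process Python floats.

```python
from collections import deque


class DelayLine:
    """Fixed-length delay: each output sample is the input from delay_s earlier."""

    def __init__(self, delay_s, sample_rate_hz):
        # Number of samples that corresponds to the requested delay.
        self.n_samples = int(round(delay_s * sample_rate_hz))
        # Pre-fill with silence so the first outputs are zeros.
        self.buffer = deque([0.0] * self.n_samples)

    def process(self, sample):
        """Push one input sample and return the correspondingly delayed one."""
        if self.n_samples == 0:
            return sample
        self.buffer.append(sample)
        return self.buffer.popleft()


if __name__ == "__main__":
    # 0.25 s at an assumed 16 kHz sample rate gives a 4,000-sample delay,
    # which lies inside the 0.2 to 0.3 second range quoted in the text.
    line = DelayLine(delay_s=0.25, sample_rate_hz=16_000)
    print(line.n_samples)
```

The delay value is the only parameter taken from the text; everything else, including the choice of a deque as the buffer, is a design convenience for the sketch.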

The committee did not find other examples of degradation, but it appears to be a potentially valuable subject that is so far largely unexplored. Obvious forms of degradation, including the distribution of false information (such as spoofing, counterfeiting, false trails, and false leaks), are well known and are standard in novels and movies. Other disruptions interfere with the communication channel, as in the example above; it is a form of “denial of service.” This kind of attack is also well known. Nonetheless, the committee found little evidence of the use of technologies of cognitive enhancement for performance degradation.

Foreign Research in Cognitive Modification

The committee was unable to discover topics related to cognitive enhancement that were being pursued exclusively outside the United States. As in the United States, most such work is either being done in research laboratories and published openly or is being introduced into commercial products. Unpublished or classified work that cannot be discovered through normal channels might be occurring within the United States, and discovering such work in other countries is even more difficult.

One of the committee’s advisors on technologies for augmented cognition reported that funding for applications was difficult to get in the United States but that other countries had increased their support:

…2005 …was the year that Europe and Asia really started betting on ubicomp/wearables (ubiquitous computing and wearables). We are seeing the results now—Europe has been pulling ahead of U.S. steadily in wearables/ubicomp research, and South Korea is starting to surpass U.S. in electronic textiles research, in my opinion. Much of my wearables research and funding is with the EU and South Korea. My colleagues in China seem to be suddenly in demand for projects they can't talk about with me or their European friends (military?). Though China is behind U.S. in research, Foxconn has an internal startup on Head Up Displays (HUDs)—they bought out much of the Myvu/MicroOptical management and patents after they closed. (Thad Starner, personal communication, March 2012)