5

A Roadmap for Spatial Data Infrastructure Implementation

A well-designed spatial data infrastructure (SDI) for the U.S. Geological Survey (USGS) that is based on best practices and focused on the agency’s mission will have a high probability of success provided there is adequate planning and execution. Planning and execution will need to entail both a broad vision and steps that address specific needs, resources, and organizational structure in the USGS. On the basis of the experience of other agencies that have implemented SDI programs, the Survey may encounter challenges as it prepares to implement an SDI, ranging from general organizational concerns to technical considerations. A roadmap tailored to issues specific to the USGS can help to ensure that implementation is successful. This chapter provides a roadmap of both general guidance and specific actions needed for SDI implementation that is based on the committee’s findings and its vision for the USGS SDI.

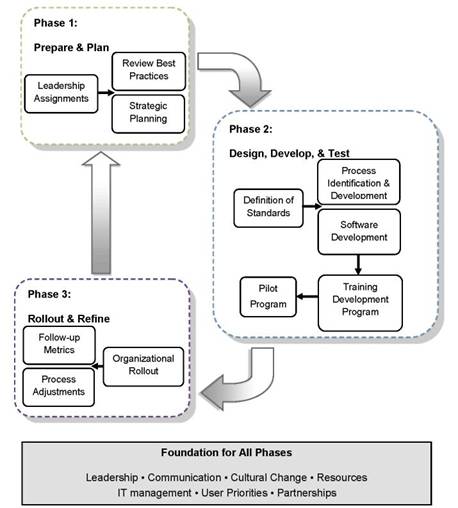

A roadmap can serve as a starting point for planning, developing, and implementing a comprehensive SDI program at the USGS. The committee proposes a roadmap for SDI implementation in Figure 5.1 and divides SDI implementation into three cyclical phases: (1) preparation and planning; (2) design, development, and testing; and (3) rollout and refinement. It proposes some general steps in each phase to assist the USGS in implementing an effective SDI. The general steps are described below.

Although the committee proposes a roadmap with phases and steps for the Survey to consider, it was beyond its task and expertise to provide details of specific tasks for carrying out its vision for the SDI. Such a detailed roadmap will need to be developed within the agency and tailored to the specific needs and resource limitations of the USGS, and decisions will have to be made by the organization’s leaders who understand its strengths and limitations.

Figure 5.1 Roadmap for implementing the USGS spatial data infrastructure.

Phase 1: Preparation and Planning

Programmatic preparations and plans are critical in the first phase of SDI implementation. A first step is the appointment of key leaders for envisioning, establishing, and carrying out the vision for an effective SDI. One notable position is that of the SDI program director, who will need to report to the USGS Directorate level for such an agency-wide effort. The core team taking on the challenge of initially scoping and envisioning an SDI will need to consist of talented and skilled professionals who understand the value and purpose of an SDI and are capable of carrying out the vision through effective project planning and management. It may be necessary to attract outside professionals through financial incentives to complement the SDI talent already at the USGS. The work of the core team will include reviewing similar SDIs—such as those of the National Aeronautics and Space Administration (NASA), the National Geospatial-Intelligence Agency (NGA), and the British Geological Survey (BGS)—for best practices and lessons learned at a higher level of detail than those already outlined in this report; determining and defining SDI system requirements on the basis of the six science directions of the USGS Science Strategy and the needs of users in other agencies, local governments, academe, and the public; determining the organizational structure for the SDI; and identifying goals, establishing timeframes and milestones, and developing performance metrics. Once the initial planning is complete, it will be important to announce a general outline for implementing the SDI program because communication and outreach will play a decisive role in the success of the program.

Phase 2: Design, Development, and Testing

The second phase would entail designing, developing, and testing the SDI program. One of the first steps to undertake in designing the SDI is to identify and define standards that are specific to the USGS mission. Once the standards are determined, the next steps to consider are process identification and development and software development. The former identifies common and documented processes that can enable the SDI to function smoothly across the USGS, and the latter establishes tools for the acquisition, discovery, management, recording, archiving, and sharing of data. With standards, processes, and software in place for an SDI prototype, a training development program would be needed to allow staff to become acquainted with the SDI prototype. The training program will be crucial for providing technical training and support and for building organizational support and buy-in at all levels. After the prototype has been introduced, it will be beneficial to deploy a pilot program on a small scale in the USGS to test how well the pilot program works and to identify and rectify glitches and incorporate these improvements into the prototype and education efforts.

Phase 3: Rollout and Refinement

The third phase in implementing an SDI will need to include a process for rolling out the SDI throughout the USGS and a process for fine-tuning the program. To ensure that people are properly informed and trained, an institution-wide training program will need to be in place before the SDI is unveiled, and retraining will need to be offered periodically for users to understand the system better. The SDI program will need to implement follow-up metrics that feed back into review and planning (Phase 1) to determine how well it is being executed to meet its strategic goals. On the basis of findings gathered with those metrics, there will need to be a process whereby adjustments can be made to serve users and fulfill USGS priorities.

ORGANIZATIONAL CONSIDERATIONS FOR SUCCESSFUL SDI IMPLEMENTATION

Leadership

A designated project official would need to be identified early in the process to oversee SDI implementation. The SDI implementation program will require the efforts of a full-time staff officer, and the USGS could explore whether such an official should be placed at the executive level so that information would be clearly articulated both in and outside the Survey.

An oversight body would also be essential in advising the SDI staff official on strategic goals for SDI development and implementation. Oversight could take the form of a board of experts and could include key external stakeholders inasmuch as it would be important to determine how an SDI would best serve the needs of its users and to solicit input from external stakeholders.

SDI implementation would need to have high priority with USGS leadership. The SDI staff official would need support from all levels of leadership—from senior managers to the USGS director—and would need to be given commensurate authority to develop and deploy standards. Doing that will require a sense of ownership on the part of all levels of staff and a fundamental shift in corporate culture that will be partly driven by bringing employee rewards and incentives in line with SDI goals.

The relationship between the considerations above and ongoing efforts at data integration and SDI development at the USGS will need to be determined early in the process. The National Map currently forms a central part of the SDI at the USGS, but the USGS also has many other data infrastructures, such as the National Biological Information Infrastructure, and these multiple SDIs would need to be incorporated into the coherent SDI envisioned in this report. The newly created USGS Directorate for Core System Science appears to be a positive step toward this goal, and the organizational considerations above may be best carried out under that new structure.

Management

Implementing a complex, organization-wide system will require effective methods in program management. Communicating the goals and progress of SDI development is a critical component of leading and implementing an SDI that allows for transparency and accountability by internal and external parties. It is also a useful way to leverage favorable publicity—publicly committing to developing an improved and comprehensive SDI and publicizing progress during implementation.

It will be important to manage expectations at all levels because implementing an SDI will be disruptive and the process will take time to complete. Thus, it would be helpful to set priorities among deliverables to identify low-hanging fruit in order to build on early successes. To militate against back-sliding, it would be useful to consolidate steps that help with incremental progress.

A corporate information policy will need to be developed to address all aspects in and feeding into the SDI. If there is already an existing policy, it will need to be reviewed comprehensively and revised accordingly to accommodate SDI priorities. Incentives and protocols could be developed and deployed to encourage adherence to policy.

SDI implementation is a multi-tiered, multi-year process; therefore, the USGS will need to consider a project-management structure that is flexible in allowing for personnel changes and reassignments. An adaptive-management approach would allow the USGS to respond to an evolving technological, data, and application environment. Furthermore, managers involved in implementing the SDI will need to clearly define current and future job responsibilities for their staff so that written job responsibilities reflect and comply with the corporate information policy. A paradigm shift in management practices will need to occur that embraces integrated team achievement and includes incentives and resources to encourage USGS scientists to share data. As mentioned in Chapter 2, an extra layer of accountability would be to include SDI implementation as a component of annual staff-performance evaluations.

In measuring the progress of SDI development, it would be helpful to develop realistic benchmarks based on the performance of analogous national and international organizations. The USGS could consider an international peer-review process to gauge the progress of SDI implementation.

Cultural Change

Success will take clear vision, clear direction, and, most important, buy-in from all USGS staff ranging from the responsible officer to the individual contributing scientist. Implementation of an SDI is a major program for establishing a geospatial base for USGS professional staff and outside users, and it would need to be viewed as such by the Survey. Individual scientists are typically committed to their research; thus in implementing a new SDI, a similar level of commitment and shared responsibility is needed from researchers to format and share data, adhere to standards, and generate metadata. An example of cultural change that resulted in effective institutional leadership and staff buy-in is the Six Sigma quality program developed by Motorola (see Box 5.1). Such staff participation, adherence to new established processes, and instilling commitment constitute a necessary paradigm shift in the USGS.

Resources

Adequate resources are needed to design, develop, establish, operate, maintain, manage, and lead an SDI. These resources include personnel who have the proper training, knowledge, skills, and motivation including sufficient understanding of hardware, software, data, and support infrastructure (such as space and electric power) to keep up with expectations and technological changes. Financial resources will also need to be sufficient for the SDI program, but it should not be overfunded; overfunding can lead to waste, whereas underfunding can lead to frustration and the inability to reach goals in a reasonable time. For example, in implementing its SDI, BGS budgeted one-third of its expenses to the SDI program (Ian Jackson, personal communication, 2012). A similar percentage of the USGS budget may not be practical or required, but the BGS experience indicates that commitment and a substantial budget are necessary. Finally, SDI implementation will require substantial time and effort.

Partnerships

Partnerships are vital to a successful SDI and can be used to support the USGS Science Strategy. Partnering can help the USGS to align itself with federal and international priorities and with mandates to share scientific data. Benefits of partnerships include resource-sharing, access to high-value information resources that are not available otherwise, and the ability to network shared assets and address problems with information that a single agency usually cannot address (for example, in the case of climate change). In addition to leveraging expertise and experiences in different fields, such collaborations can also enable partners to repurpose each other’s existing data and knowledge assets, creating greater efficiency through sharing of data resources. There is also the ability to mobilize quickly in response to critical studies and the ability to connect to domain users via application programming interfaces. Furthermore, partnering and making data publicly available to partners present an opportunity to garner goodwill and political support.

Box 5.1

Driving Cultural Change: Implementation of Six Sigma Quality at Motorola

In the 1970s, Motorola embarked on a process to improve product quality by implementing a statistical process control called Six Sigma; it represented the goal of minimizing defects in all aspects of a product’s development (Montgomery and Woodall, 2008). Achieving a level of quality of about three to four defects per million opportunities required a substantial culture change in the corporation.

To invoke culture change, Motorola mounted a campaign with the goal of training and garnering buy-in from every employee (Luther Siebert, personal communication, 2011). An essential tenet of institutional culture change is commitment by top management, and the CEO of Motorola embraced the Six Sigma program. The support of senior managers enabled company officers to deploy the initiative, and its implementation was reinforced by tying the success of the initiative to individual performance, ensuring that each employee had a personal stake in quality. For example, scorecards weighed quality-performance achievement, and dashboards were developed for reporting up the chain of command. In addition, a bonus program, in which a high percentage of employees participated, was partially tied to quality.

The Six Sigma process was a multi-tiered campaign. The first step was training the trainer. Departments designated candidates to become experts in statistical process control and designated them as the Six Sigma “black belts” who would also be “change agents”. Staffing was determined as a percentage of organization population to ensure consistent saturation of the organization with program experts. Certification of black belts and entry-level green belts was determined by length of training, examination completion, and practical project completion. It was conducted through Motorola University, the organization’s employee-training program that established a thorough curriculum in quality and quality management.

Senior management was then trained in the Six Sigma process so that they would understand the basic concepts and the benefits of such a change to the corporation. In addition to accountability for company oversight, the role of senior management included removing barriers and making sure that success was recognized. Lower-level executives and managers were also trained in courses that included reference textbooks and other materials. Simultaneously, training teams traveled to all corporate offices and provided extensive staff training courses with emphasis on engineering and manufacturing. Six Sigma training was required for employees, and each department had metrics and goals for Six Sigma performance. For example, managers were periodically evaluated on their performance in achieving Six Sigma goals, and training resources remained available in case a manager required assistance.

As a result of the Six Sigma process at Motorola, there was a gradual but dramatic elimination of defects and an increase in product quality, and Motorola eventually became known for its product quality. In 1988, Motorola was the recipient of the Malcolm Baldrige National Quality Award (given by the president of the United States) for its processes and documentation to determine a high-threshold measure of organizational quality.

Partnerships require time and effort to develop and maintain, and each partner will need to reassess full control and ownership; this may cause participants to shift from their agencies’ missions or visions. It will also be difficult initially to establish and agree on shared domains and standards.

There are five categories of partnerships to consider: (1) strategic partnerships (with agencies such as NASA, the National Oceanic and Atmospheric Administration (NOAA), and the National Science Foundation (NSF)); (2) data partnerships (with agencies such as the Census Bureau); (3) standards partnerships; (4) academic partnerships; and (5) technology partnerships (with the commercial sector). The USGS will need to develop and expand strategic partnerships on science data with key federal players—NASA, NOAA, NSF, NGA, the U.S. Department of Agriculture (USDA), the Department of Energy (DOE), and the Department of Health and Human Services (HHS)—to address common SDI issues; these are strategic in that they provide authority, expertise, and critical information resources for addressing the USGS Science Strategy. The Survey will also need to establish strategic partnerships with selected state, tribal, and local agencies to implement SDI functions that support effective exchange of spatial data among the local, state, and federal levels, perhaps starting with hazards or water data. It will also be important to engage key domestic and international scientific organizations in domain standards development (such as the Consortium of Universities for the Advancement of Hydrologic Science, Inc.) and interoperability standards (for example, OGC and ISO). Many of these partnerships will also increasingly include volunteered geographic information that the public provides to the USGS through partners and through the USGS’s own volunteered science data programs. The USGS will need to develop partnerships with the science community, the private sector, and other federal agencies to address technical issues of SDI data quality, value-added data, and online services, perhaps through centers of excellence and working groups.

Federal

Once SDI implementation has begun in the USGS, the SDI will need to be accessible to external agencies and expand to encompass data contributed by external partners. USGS partnerships will be important for creating or augmenting USGS data assets through a collaborative process. Conversely, USGS scientists provide research support for an extensive array of external agencies while maintaining interfaces with state agencies that create statewide data content.

Ongoing USGS research support to external agencies is an example of successful partnerships already under way. The unique combination of biological, geologic, hydrologic, and mapping programs of the Survey provides independent high-quality data, research support, and assessments needed by federal, state, local, and tribal policy-makers, resource and emergency managers, engineers and planners, researchers and educators, and the public. The USGS provides applied research and data to agencies such as the Federal Emergency Management Agency, the Army Corps of Engineers, NASA, NOAA, the U.S. Forest Service, the National Park Service (NPS), and the Bureau of Reclamation. The USGS Contaminant Biology Program collaborates with other agencies and organizations—including the Fish and Wildlife Service, the Bureau of Land Management, NPS, the U.S. Environmental Protection Agency (EPA), the Bureau of Reclamation, the Office of Surface Mining, and other federal, state, and local agencies—in the conduct of its research to improve understanding of the environmental effects of current and emerging contaminants.

Given the multidisciplinary activities of the USGS in support of research and of external agencies, digital data will need to be shared within the USGS and with other agencies. Digital data on various scales will need to be archived so that they are discoverable by researchers and so that they exist in standard formats to keep reformatting to a minimum. Both the USGS and states provide data, and the USGS fills the roles of coordination and dissemination.

States

Cooperation with states will be essential for ensuring that information is available and usable. States are users and providers of USGS data, so it is necessary for data to flow in both directions. Small, densely populated states, such as Delaware, seldom use USGS datasets because most of their data needs are on a fine spatial scale. In those small states, local and state governments often create their own datasets, such as high-resolution elevation databases and air photographs, whereas large states with low population densities, such as Montana, might use USGS datasets. However, state cooperation will be essential for updating the national-level USGS datasets whether a state is small or large.

Other Researcher Communities

The USGS is in the unique position of being a national provider of spatial data and the home for a large cadre of scientists and therefore has the potential to be a more effective national asset of the larger research community. Many research collaborations already exist with USGS scientists; these should continue, and new collaborations should be encouraged. It is important that all USGS data be discoverable, so at a minimum there should be data catalogs that describe the details of the data that currently exist. The government-wide effort to catalog spatial data online through Data.gov includes a large number of USGS spatial datasets, and this effort provides a basis for a future catalog that is fully integrated into the USGS SDI. Online catalogs of USGS data can foster research collaborations with other research communities and ensure that data are used by as many researchers as possible to answer research questions that are important to the nation. Therefore, datasets need to be discoverable, and resources will need to be allotted specifically for data-sharing.

Public

The general public is a user of USGS products, and public interest in USGS products has increased as new ways of producing, displaying, and distributing the results of USGS data have evolved. The USGS will need to consider the public’s needs as it develops methods to ensure that the SDI provides public access to its vast array of high-quality products. Access can be provided through portals or by making USGS data accessible to others for distribution through other popular access capabilities. The ability to ingest volunteered geographic information from the public will become increasingly important.

Implementation Partnering

To ensure the broadest utility of the SDI, the USGS will need to consider implementing it by using open standards and making use of existing infrastructure. Previous SDIs typically made use of Geographic Information System (GIS) tools to manage and display data, and users of GIS tools typically need training and need to license or buy GIS software. Open standards for description and display of geologic and other Earth science data exist. If open standards are embraced, USGS data can be used and fused with other data for both traditional and new applications; this would result in a much wider use of the data than if they were represented in proprietary or internally developed formats and systems.

Adopting open standards for implementing its SDI will enable the USGS to leverage and partner with other agencies and organizations effectively. For example, NASA, NSF, EPA, and USDA collect or disseminate geospatial data. In addition, adoption of open standards will allow informal or implicit partnerships to be established without the need for formal and often expensive partnerships. For instance, ground-based field data that are collected by the USGS, USDA, or NSF can easily be used to validate or calibrate data collected from the air or from space, such as data on forest height or speciation observed with radar, light detection and ranging, or spectral imagers. In another example, crustal deformation data collected by NSF’s Plate Boundary Observatory can be fused with data collected by NASA through uninhabited aerial vehicle synthetic aperture radar and paleoseismic and seismic data collected by the USGS to improve earthquake understanding and forecasting.

Information Technology Management

Information technology (IT) is a fundamental aspect of the research environment, and the concept of an SDI is merging with similar concepts, such as “eScience” and “eResearch”, used in the scientific community. Those all point to the important role of defining a “cyberinfrastructure” for undertaking research, collaborating, sharing data and documents, collecting sensor data, and archiving for provenance and long-term preservation. For a science organization like the USGS to support its diverse science workflows would require an evaluation of current IT infrastructure to ensure that it is aligned with the USGS Science Strategy.

The USGS will need to implement a comprehensive, long-term knowledge-management infrastructure that supports end-to-end spatial data management, including the collection, integration, maintenance, and delivery of multidisciplinary scientific data. To carry that out, the USGS would need to

• Identify data assets most critical for supporting the Science Strategy and give high priority to making them discoverable and interoperable.

• Implement structural and syntactic interoperability of USGS knowledge resources, starting with the highest-priority data assets, followed by semantic interoperability.

• Develop an effective data-archiving strategy to ensure a persistent and cumulative knowledge resource.

• Initiate a corporate process to create a comprehensive and consistent inventory and ensure the quality of the data and create standard metadata for it.

• Make “discovered” data structurally (and eventually semantically) interoperable so that they may be shared and integrated with other datasets in the USGS, elsewhere in the United States, and internationally.

• Implement internationally agreed-on data standards.
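As a rough illustration of what making data assets "discoverable" through standard metadata could look like, the sketch below builds a tiny in-memory catalog and searches it by keyword and geographic extent. The record fields, dataset identifiers, and the `search` function are hypothetical and chosen only for illustration; they do not represent an actual USGS schema, metadata standard, or catalog API.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class BBox:
    """Geographic extent in decimal degrees (hypothetical, simplified)."""
    west: float
    south: float
    east: float
    north: float

    def intersects(self, other: "BBox") -> bool:
        # Two boxes overlap unless one lies entirely to one side of the other.
        return not (self.east < other.west or other.east < self.west
                    or self.north < other.south or other.north < self.south)


@dataclass
class MetadataRecord:
    """Minimal, made-up metadata record for one dataset."""
    identifier: str
    title: str
    keywords: frozenset
    extent: BBox
    data_format: str


def search(catalog, keyword=None, area=None):
    """Return records matching an optional keyword and an optional area."""
    hits = []
    for record in catalog:
        if keyword is not None and keyword.lower() not in record.keywords:
            continue
        if area is not None and not record.extent.intersects(area):
            continue
        hits.append(record)
    return hits


# Invented example records; names and extents are placeholders.
CATALOG = [
    MetadataRecord("demo-001", "Example streamflow gauges",
                   frozenset({"water", "hydrology"}),
                   BBox(-114.0, 44.0, -104.0, 49.0), "CSV"),
    MetadataRecord("demo-002", "Example elevation grid",
                   frozenset({"elevation"}),
                   BBox(-76.0, 38.4, -75.0, 39.9), "GeoTIFF"),
    MetadataRecord("demo-003", "Example fault traces",
                   frozenset({"geology", "hazards"}),
                   BBox(-124.0, 32.0, -114.0, 42.0), "GeoJSON"),
]

if __name__ == "__main__":
    for record in search(CATALOG, keyword="hazards"):
        print(record.identifier, record.title)
```

A consistent record structure of this kind is what allows a single query to span datasets produced by different programs; a production catalog would more likely rely on an established metadata standard and a spatial index than on a linear scan.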

The Web as a Computing Platform

The USGS lacks a robust data-management structure that can manage and process knowledge on a continental or global scale. Such a system will need to serve as a foundation for the next generation of knowledge-driven services and applications. As mentioned in Chapter 3, several other governments and scientific organizations have recognized the necessity of layering infrastructure and have begun to develop systematic and general approaches to their SDIs with architecture that can scale into the future. The USGS will need to be supported by an adequate cyberinfrastructure that comprises both hardware (computing resources, data centers, and high-speed networks) and software tools and middleware. It will also require an SDI that can help to align the germane scientific research literature with research data. Additional IT developments in cloud-based computing infrastructures, semantic technologies, database-centric computing, and data integration on the Web are platform components that warrant further evaluation as the USGS embarks on its SDI development (see Box 5.2).

It is beyond the scope of this committee to draft a system design for the USGS SDI, but the agency can embark on the process by learning from others. It could establish an IT management team that solicits requirements from the new generation of researchers and from external partners and research organizations. The IT management team could also solicit expertise and best practices from existing commercial software, hardware, and service vendors.

Enterprise Data Management

The USGS will need to implement enterprise data-management techniques in order to

• Create a robust data-management structure that enables individual scientists to make their data interoperable.

• Generate linkages between spatial and nonspatial data collections (such as spreadsheets, published reports, documents, photographs, and engineering drawings).

• Link spatial and nonspatial data to a unified model of a given environmental system (such as watershed, ecosystem, and regional climate).

• Direct substantial attention to maintaining appropriate privacy, security, and provenance.

Box 5.2

Information Technology Platforms to Consider for the Spatial Data Infrastructure

Cloud-Based Computing Infrastructures — Cloud-based computing infrastructures enable groups to host, process, and analyze large volumes of multidisciplinary data. Such consolidation and hosting help to minimize many organizational and technical barriers that impede multidisciplinary data-sharing and coordination. Cloud hosting can also facilitate long-term data preservation, a task that is challenging for universities and government agencies and is critical for conducting longitudinal experiments. Provisioning key core geospatial datasets on the cloud would increase the value of the data and facilitate exploitation by a large scientific community. Scientific organizations that currently manage their own scientific cyberinfrastructure will probably turn to cloud-based hosting as a more efficient alternative. Cloud-based computing infrastructures (such as that of Amazon.com) are being complemented by a new generation of data-intensive computing services, such as Google’s MapReduce, Apache Hadoop, and Microsoft’s Dryad. However, a “smart” SDI still does not exist for automatic data discovery, acquisition, organization, analysis, and interpretation for information that is available on the Internet or on individual researchers’ hard drives.

Semantic Technologies — Semantic technologies will need to be considered as an SDI creates necessary links between various disciplines that use different jargon if information is to be interlinked as part of a global network of facts and processes. The use of semantic technologies is gaining traction in scientific and engineering communities, including the life sciences, health care, ecology, marine science, and computer science. With increasing volume, complexity, and heterogeneity of data resources, scientists are turning to semantic approaches (in the form of ontologies—machine encoding of terms, concepts, and relations among them) to interconnect the different sources of data. The interoperable exchange of scientific data requires scientific communities to explore common vocabularies for capturing facts and information specific to their domains of expertise. In that way, the world’s data become joined together on the Web as a common interconnected database.

Database-Centric Computing — In database-centric computing, computations are brought to the data rather than the data being brought to the computations. This will become increasingly important as datasets increase in size beyond terabytes. The volume of data that Earth scientists will work with can be daunting, and new approaches to scientific discovery, collection, and analysis will increase the volume of data. A new generation of parallel database systems from Oracle, Teradata, and others leverages “MapReduce” as an effective data-analysis and computing paradigm that performs computations as close to the data as possible (Dean and Ghemawat, 2004).

Data Integration on the Web — SDI architects will need to determine the best way to aggregate huge amounts of semantically rich information and consider how the resulting information is generated and analyzed. It will be important to consider such requirements now to support higher-level knowledge-generation processes of the future. Cloud computing services currently focus on offering a scalable platform for computing, and future services will need to be built around the management of knowledge and reasoning over it. Services such as OpenCyc (www.opencyc.org), Freebase (www.freebase.com), and Wolfram|Alpha (www.wolframalpha.com) demonstrate how facts can be recorded in such a way that they can be combined and made available as answers to users’ questions.
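The idea of bringing computations to the data, described under Database-Centric Computing in Box 5.2, can be illustrated with a toy map/reduce aggregation in plain Python. In this sketch each partition stands in for a data node that summarizes its own rows locally, and only the small partial summaries are merged. The partition layout, gauge names, and values are invented for illustration, and the code does not show how Hadoop or any parallel database system is actually invoked.

```python
from collections import defaultdict
from functools import reduce

# Hypothetical data: each inner list is the set of rows held by one data node.
PARTITIONS = [
    [("gauge_a", 2.0), ("gauge_b", 5.0)],
    [("gauge_a", 4.0), ("gauge_c", 1.0)],
    [("gauge_b", 7.0), ("gauge_a", 6.0)],
]


def map_partition(rows):
    """Map step: summarize one partition locally as (sum, count) per station."""
    partial = defaultdict(lambda: [0.0, 0])
    for station, value in rows:
        partial[station][0] += value
        partial[station][1] += 1
    return {station: tuple(acc) for station, acc in partial.items()}


def merge(left, right):
    """Reduce step: combine two partial summaries without touching raw rows."""
    merged = dict(left)
    for station, (total, count) in right.items():
        prev_total, prev_count = merged.get(station, (0.0, 0))
        merged[station] = (prev_total + total, prev_count + count)
    return merged


def mean_by_station(partitions):
    """Compute per-station means from partial summaries only."""
    combined = reduce(merge, map(map_partition, partitions), {})
    return {station: total / count
            for station, (total, count) in combined.items()}


if __name__ == "__main__":
    print(mean_by_station(PARTITIONS))
```

Carrying (sum, count) pairs rather than per-partition means keeps the merge step exact, because a mean of means would be wrong whenever partitions hold different numbers of rows.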

Priorities for Users

Internal Use and Needs

Internal use–only databases that can be discovered and shared effectively within the USGS will continue to be needed. Because the information is proprietary, such databases will not be discoverable by external parties until there is official agreement about making the data accessible to external agencies and the public. Given the multidisciplinary research activities of the USGS, digital data will need to be made available on various scales, archived so that they are discoverable by USGS researchers, and stored in standard formats so that reformatting is kept to a minimum when they are made accessible to outside users.

Application Services to Engage and Support Scientific Efforts

An SDI will need to create a foundation for a more open approach to application services that would promote greater transparency, easier integration, and greater reuse and repurposing of distributed data and services. Current approaches to SDI development, such as the Open Geospatial Consortium (OGC) Web Services Architecture (Whiteside, 2005), support an array of interoperable services focused on data transformation, integration, analysis and statistics, modeling, and visualization (see Box 5.3).

The SDI will need to adopt and implement an open Web services architecture and take a leadership role in establishing a framework for collaborative applications development and operations. For that to become a reality, the USGS will need to play a leadership role in establishing key elements of a community applications platform, including

• Specific open standards needed for data and service interoperability.

• Key reference datasets and parameters that would facilitate the interoperability and integration of data and services.

• Coordination mechanisms for ensuring development and implementation of new standards and reference datasets when and where needed.

• Mechanisms for quality control, measurement, and improvement.

Box 5.3

Key Application Services

Data Transformation — Data transformation services convert data between different formats and coding systems, between different spatial coordinate systems and projections, or between different levels of aggregation or disaggregation. Such services include certain types of image processing services that manipulate images from remote sensing instruments or aerial photography, geoparsing and geocoding services that convert location-based information (such as place names, addresses, and administrative and postal codes) to and from spatial coordinates, gridding algorithms that convert vector-based to raster-based data formats, and semantic translation services that facilitate interpretation of data between languages, disciplines, and applications.

Data Integration — Data integration services typically build on data transformations to support the assembly of data from different sources (for example, instruments, models, and disciplines) into a combined dataset or database or other derived product, such as a map. They can include linking services to identify data that have overlapping geographic coordinates or similar geographic features or characteristics, alignment services that adjust geometric models to improve spatial matching between different spatial datasets or images, and filtering services that select data for inclusion or exclusion on the basis of specified constraints and data characteristics.

Spatial and Statistical Analysis — Spatial and statistical analysis services facilitate assessment of possible relationships within and between geographic distributions, often on the basis of integrated data. A major development over the last several decades has been that of image classification methods that focus on characterizing and interpreting imagery data, for example, from satellites and aerial platforms. More recently, a new generation of statistical analysis methods tailored to spatial data has emerged that can provide new insights about spatio-temporal phenomena and processes and new methods for transformation, visualization, and prediction.

Modeling Services — Modeling services usually apply algorithms based on a combination of theory and statistics to generate estimates of past or current environmental conditions and changes and to project future events, trends, or risks. Models that have significant spatial dimensions or elements are increasingly prevalent in virtually all environmental and social science disciplines and many engineering and public health fields. The USGS has pioneered the development of diverse spatially enabled models, such as those related to earthquake prediction, hydrological resource management, and land-cover change.

Visualization — Visualization services not only support hypothesis generation, analysis, and modeling but provide a mechanism for scientists to use in communicating their findings to other scientists, applied users, and the public. An example of an online visualization service that is available at the USGS is the Disease Maps Web site (http://diseasemaps.usgs.gov). It is a simple tool that allows users to see the spatial distribution of wildlife and zoonotic diseases (such as West Nile virus) in different years at national or state levels. Another USGS Web site, WaterWatch (http://waterwatch.usgs.gov/), facilitates spatial data visualization and has daily local-level stream flow data, which can also be accessed through Google Maps. It displays real-time stream flow and can compare it with historical stream flow by station.
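Two of the services described in Box 5.3 lend themselves to a brief illustration: a filtering service that selects data within a spatial constraint (data integration) and a gridding algorithm that aggregates point observations into raster-like cells (data transformation). The sketch below is a simplified example under stated assumptions: the observations, bounding box, and cell size are hypothetical, and coordinates are treated as planar degrees with no projection handling.

```python
import math
from collections import defaultdict

# Hypothetical point observations: (longitude, latitude, measured value).
OBSERVATIONS = [
    (-105.2, 39.7, 4.0),
    (-105.4, 39.9, 6.0),
    (-104.6, 39.2, 10.0),
    (-98.0, 30.0, 99.0),  # lies outside the bounding box used below
]

# Hypothetical area of interest: (min_lon, min_lat, max_lon, max_lat).
BBOX = (-106.0, 39.0, -104.0, 40.0)


def within_bbox(lon, lat, bbox):
    """Filtering: keep a point only if it falls inside the bounding box."""
    min_lon, min_lat, max_lon, max_lat = bbox
    return min_lon <= lon <= max_lon and min_lat <= lat <= max_lat


def grid_mean(points, cell_size):
    """Gridding: average point values within square cells of the given size.

    Each cell is identified by the integer (column, row) index obtained by
    flooring the coordinate divided by the cell size.
    """
    sums = defaultdict(lambda: [0.0, 0])
    for lon, lat, value in points:
        cell = (math.floor(lon / cell_size), math.floor(lat / cell_size))
        sums[cell][0] += value
        sums[cell][1] += 1
    return {cell: total / count for cell, (total, count) in sums.items()}


selected = [p for p in OBSERVATIONS if within_bbox(p[0], p[1], BBOX)]
grid = grid_mean(selected, cell_size=1.0)
print(grid)
```

Chaining the two functions mirrors the way integration services build on transformation services: the filter decides what is included, and the gridding step converts the result to a common spatial structure that other datasets can share.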

The Spatial Data Infrastructure as a Workflow Platform

Over the last decade, various scientific programs have begun to incorporate workflow methods into their best practices. Most of the programs highlighted in Chapter 3 use workflows to collect raw observation data and make them available to thousands of researchers worldwide through specialized analytical and visualization tools (such as the National Center for Atmospheric Research and National Science Foundation collaboratories). Workflow approaches are being developed by the OGC through its Open Web Services testbeds and through the Architecture Implementation Pilot of the Global Earth Observation System of Systems.

The USGS will need to consider implementing high-throughput workflow processes as a means of advancing the overall scalability of the SDI in support of the USGS Science Strategy and the SDI’s long-term role in geospatial knowledge capture, preservation, and reuse. Workflow techniques will need to become an essential technology layer in an SDI—one that enables research on a large scale by automating complex data preparation and analysis pipelines and by facilitating cross-disciplinary analysis, visualization, and predictive modeling. Providing computing, analytics, visualization, and other application processes as an SDI layer moves them closer to the data and makes it possible to leverage unprecedented power and bandwidth to understand and predict the interaction of natural and human processes better.
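The idea of automating a data preparation and analysis pipeline can be sketched as an ordered list of named steps, each of which records what it did so that the run can be inspected and repeated later. The example below is a minimal illustration only; the `Workflow` class, the step functions, and the sample values are hypothetical and do not describe an existing USGS or OGC workflow system.

```python
from dataclasses import dataclass, field
from typing import Callable, List, Tuple


@dataclass
class Workflow:
    """An ordered chain of named processing steps with a simple run log."""
    steps: List[Tuple[str, Callable[[list], list]]] = field(default_factory=list)

    def step(self, name: str, func: Callable[[list], list]) -> "Workflow":
        """Append a step and return self so that calls can be chained."""
        self.steps.append((name, func))
        return self

    def run(self, data: list) -> Tuple[list, List[str]]:
        """Apply each step in order and log the record count after each one."""
        log = []
        for name, func in self.steps:
            data = func(data)
            log.append(f"{name}: {len(data)} records")
        return data, log


def drop_missing(records: list) -> list:
    """Data preparation: discard missing observations."""
    return [r for r in records if r is not None]


def to_cubic_meters(records: list) -> list:
    """Unit conversion: cubic feet per second to cubic meters per second."""
    return [round(r * 0.0283168, 3) for r in records]


# Hypothetical raw stream flow readings in cubic feet per second.
raw = [100.0, None, 50.0]

pipeline = Workflow().step("drop_missing", drop_missing).step("to_cubic_meters", to_cubic_meters)
result, log = pipeline.run(raw)
print(result)
print(log)
```

Keeping the log alongside the result is one simple way to retain provenance: the same pipeline definition can be rerun on new data, and the record of each step supports later preservation and reuse.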

The intent of this roadmap is not to be prescriptive regarding SDI development and implementation but to identify keys to success on the basis of lessons learned from other SDI implementation efforts. The committee recognizes that there are many feasible ways of implementing an SDI program successfully, but all will require that an SDI program be properly defined, led, and supported. The committee believes that such an effort can be best framed by the phrase “discover and share for the long term”, and it hopes that this phrase can become the mantra for spatial data handling throughout the USGS.

REFERENCES

Dean, J. and S. Ghemawat, 2004. MapReduce: Simplified Data Processing on Large Clusters, OSDI, doi: 10.1145/1327452.1327492.

Montgomery, D.C. and W.H. Woodall, 2008. An Overview of Six Sigma, International Statistical Review, 76:329-346.

Whiteside, 2005. OpenGIS Web Services Architecture Description, OGC 05-042r2, 22 pp.