Credibility, Authenticity, and Reputation

The use of social media to disseminate information, both official and unofficial, during disasters raises questions about how to assess the information’s credibility and authenticity. For example, although the reach of an official message may be widened greatly if it is redistributed (e.g., retweeted), the message might have been modified in ways not anticipated or desired by its originators. Paul Resnick, University of Michigan; Dan Roth, University of Illinois, Urbana-Champaign; and David Stephenson, Stephenson Strategies, examined credibility, authenticity, and reputation in the context of social media and disaster response.

Paul Resnick observed that credibility problems have arisen in many online systems and that a variety of approaches have been explored to address them. One such approach is a reputation system, which formalizes the process of gathering, aggregating, and distributing information about individuals’ past behavior. The electronic commerce firm eBay operates one of the largest and best-known online reputation systems, which provides buyers with a history of a seller’s past transactions along with feedback from individuals who purchased items from the seller. Reputation systems have three principal functions:

• Inform participants about other participants, to help them determine if a particular participant is trustworthy.

• Create an incentive for good behavior. If participants know that they will be rated and that the rating is publicly available, they are more likely to provide accurate information (e.g., product listings), good service, and so on.

• Provide a selection effect. If participants know that good behavior will be noticed and rewarded, they are more likely to join the system. Similarly, would-be malicious participants will know that any incompetence or deliberate disruption will be made public—a deterrent to misbehavior.
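The gather, aggregate, and distribute cycle described above can be illustrated with a minimal Python sketch. The class and method names are hypothetical, and the sketch stores nothing beyond a positive/negative flag per interaction. It is not how eBay or any other real site implements its system.

```python
from collections import defaultdict


class ReputationLedger:
    """Toy reputation system that gathers, aggregates, and distributes feedback.

    All names here are hypothetical; this is not how any particular site works.
    """

    def __init__(self):
        # ratee -> list of (rater, positive) pairs, one per completed interaction
        self._feedback = defaultdict(list)

    def record(self, rater, ratee, positive):
        """Gather: store one rating of a past interaction."""
        if rater == ratee:
            raise ValueError("participants cannot rate themselves")
        self._feedback[ratee].append((rater, bool(positive)))

    def summary(self, ratee):
        """Aggregate and distribute: public counts other participants can consult."""
        items = self._feedback.get(ratee, [])
        positive = sum(1 for _, ok in items if ok)
        return {"positive": positive, "negative": len(items) - positive, "total": len(items)}


ledger = ReputationLedger()
ledger.record("buyer1", "seller1", True)
ledger.record("buyer2", "seller1", False)
print(ledger.summary("seller1"))  # {'positive': 1, 'negative': 1, 'total': 2}
```

Publishing the summary is what supports all three functions: it informs other participants, it gives the rated participant an incentive to behave well, and it makes past behavior visible to would-be newcomers.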

Resnick cited two main challenges to reputation systems: the ease of creating new pseudonyms and the high cost or other barriers to entry for newcomers. Most online sites use pseudonyms, and there are several valid reasons for not requiring “real” names.1 A user of a reputation system who develops a bad reputation can often easily create a new pseudonym. However, reputation systems can still succeed even when users can easily create pseudonyms, because those who establish positive reputations will continue to use their accounts, ensuring that positive information remains available in the system. Similarly, a user who has established a positive reputation has a disincentive to suddenly change behavior, because damaging that reputation, or abandoning it for a fresh pseudonym, would leave the user with little usable reputation. A lack of reputation limits a user’s ability to participate in transactions, since a high approval rating based on one transaction is much less valuable than a high approval rating based on 200 transactions.
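The point about one transaction versus 200 can be made concrete. One common technique, assumed here for illustration and not attributed to eBay or to Resnick, is to score participants by the lower bound of the Wilson score confidence interval for their share of positive ratings. A thin history yields a low bound even when every rating is positive, so a fresh pseudonym starts near zero.

```python
import math


def reputation_score(positive: int, total: int, z: float = 1.96) -> float:
    """Lower bound of the Wilson score interval for the share of positive ratings.

    With little history the bound is low, so a newcomer (or a fresh pseudonym)
    starts near zero and only a long, mostly positive record earns a high score.
    z = 1.96 corresponds to a roughly 95% confidence interval.
    """
    if total < 0 or not 0 <= positive <= total:
        raise ValueError("need 0 <= positive <= total")
    if total == 0:
        return 0.0  # no history: nothing vouches for the participant
    p = positive / total
    z2 = z * z
    centre = p + z2 / (2 * total)
    margin = z * math.sqrt(p * (1 - p) / total + z2 / (4 * total * total))
    return (centre - margin) / (1 + z2 / total)


print(round(reputation_score(1, 1), 2))      # 0.21: one positive rating
print(round(reputation_score(200, 200), 2))  # 0.98: two hundred positive ratings
```

Both participants have a 100 percent approval rate, but the score reflects how much evidence stands behind it.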

Resnick also noted that because having little or no reputation information is of low value, newcomers face barriers to entry. Research shows that it is generally not feasible to treat each newcomer as having a positive reputation until they misbehave: when newcomers are treated this way, system managers become overwhelmed by the number of new, poorly behaved users who must be removed from the system. As a result, there seems to be no alternative to having newcomers pay their dues by developing a positive reputation over time. This tradeoff, between the utility of a well-managed reputation system and the high cost to newcomers, is a challenge to the growth of a reputation system, said Resnick.

Turning to the usefulness of reputation systems in disaster response, Resnick commented that some participants may be able to develop a positive reputation through interactions before a disaster occurs, whereas other participants who have very useful information may not have established a prior reputation and may not be able to establish one quickly during an event. In such cases, additional measures are needed.

__________

1 For example, see National Research Council, The Internet’s Coming of Age, National Academy Press, Washington, D.C., 2001.

Another approach to enhancing credibility is to encourage Web users to spread correct information. When rumors about an event or disaster first appear, authorities and trusted information brokers often take on the task of broadcasting corrected information. However, rumors typically continue to spread even after they have been found to be false, a problem that is not unique to the Internet or social media. Resnick mentioned that he has begun to examine the use of social processes in the context of political campaigns and elections. Traditional news media outlets have established online sites that examine the truthfulness of statements made by candidates. Although such sites are helpful to those who are already knowledgeable about and involved in politics, few others may visit them to verify information. One possibility is to use social media to mobilize individuals to create pointers to such correct information. A person motivated to correct false information could, for instance, search for tweets containing misinformation and then invite other users to respond to these incorrect tweets, supplying links to where the information is corrected.
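The search-and-respond workflow just described might look roughly like the Python sketch below. Everything in it is hypothetical: the `Message` type, the regular-expression catalogue of known false claims, and the placeholder correction URL. It makes no calls to any real Twitter API and only drafts replies for a volunteer to review and post.

```python
import re
from dataclasses import dataclass


@dataclass
class Message:
    message_id: str
    author: str
    text: str


# Hypothetical catalogue: a pattern identifying a known false claim,
# mapped to a placeholder URL where the correction is published.
KNOWN_FALSE_CLAIMS = {
    r"\bbridge\b.*\bcollapsed\b": "https://example.org/fact-check/bridge",
}


def draft_corrections(messages, claims=KNOWN_FALSE_CLAIMS):
    """Return (message_id, reply_text) pairs for messages repeating a false claim.

    Replies are only drafted; a volunteer would review and post them.
    """
    compiled = [(re.compile(p, re.IGNORECASE), url) for p, url in claims.items()]
    drafts = []
    for msg in messages:
        for pattern, url in compiled:
            if pattern.search(msg.text):
                reply = f"@{msg.author} This has been checked and appears to be false: {url}"
                drafts.append((msg.message_id, reply))
                break  # one correction per message is enough
    return drafts


msgs = [
    Message("1", "resident42", "Heard the Main St bridge collapsed!"),
    Message("2", "neighbor7", "Shelter open at the high school"),
]
for message_id, reply in draft_corrections(msgs):
    print(message_id, reply)
```

Keeping a human in the loop matters here, since keyword matching will also catch messages that are already debunking the rumor.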

COMPUTATIONAL CLAIM VERIFICATION

Evaluating the trustworthiness of a particular piece of information can require connecting it to many other pieces of information, either reinforcing or contradictory, from a wide variety of sources (e.g., news reports, official statements, blogs, wikis, and social media messages). Metadata, such as embedded geographical coordinates or the network activity associated with a piece of information, can also be valuable. Dan Roth observed that manually inspecting these potentially large amounts of data is difficult, a situation that prompted his research group to develop a tool that integrates relevant information to score the trustworthiness of claims and sources.2

Roth’s research aims to create a tool that judges trustworthiness in a manner similar to how a person might. Accuracy is not the only important factor, because information can be technically accurate yet misleading. Nor is simply counting the number of times a piece of information is repeated sufficient. Rather, according to Roth, a decision on the trustworthiness of claims and sources should be based on several characteristics: support for a given piece of information across multiple trusted sources, source characteristics (such as reputation), the organizational type of the source (e.g., public interest, government, or commercial entity), the verifiability of the provided information, and the prior beliefs and background knowledge of the source.

__________

2 V.G. Vinod Vydiswaran, ChengXiang Zhai, and Dan Roth. Content-driven trust propagation framework. Proceedings of the 17th ACM SIGKDD International Conference on Knowledge Discovery and Data Mining (KDD’11), 2011, pp. 974-982.

Recognizing that a single metric based on accuracy is inadequate, Roth’s research group proposed three measures of trustworthiness: truthfulness (focusing on importance-weighted accuracy); completeness (thoroughness of a collection of claims); and bias (which results from supporting a favored position with untruthful statements or targeted incompleteness/lies of omission).3
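The first two measures can be illustrated with a short Python sketch. The formulas used here, a weighted mean of per-claim truth estimates and the weighted share of relevant true claims that a source covers, are simplifying assumptions made for illustration and are not the definitions in the cited paper. The importance and truth values are assumed to be given, and bias is not modelled.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Claim:
    text: str
    importance: float  # assumed weight of the claim, > 0
    truth: float       # assumed probability the claim is true, 0..1


def truthfulness(asserted):
    """Importance-weighted accuracy of the claims one source asserts."""
    total = sum(c.importance for c in asserted)
    if total == 0:
        return 0.0
    return sum(c.importance * c.truth for c in asserted) / total


def completeness(asserted, relevant_true):
    """Importance-weighted share of the relevant true claims that the source covers."""
    total = sum(c.importance for c in relevant_true)
    if total == 0:
        return 1.0  # nothing relevant to report, so nothing is missing
    said = set(asserted)
    return sum(c.importance for c in relevant_true if c in said) / total


shelter = Claim("Shelter A is open", importance=3.0, truth=1.0)
flood = Claim("Road B is flooded", importance=1.0, truth=0.0)
water = Claim("Tap water is unsafe", importance=2.0, truth=1.0)

print(truthfulness([shelter, flood]))                    # 0.75
print(completeness([shelter, flood], [shelter, water]))  # 0.6
```

A source can score well on one measure and poorly on the other: a source that reports only the shelter claim is fully truthful but omits the important water warning.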

The group’s initial design computed trustworthiness from sources and claims alone. However, this approach proved too simplistic, and other factors had to be added. These included the certainty (or uncertainty) of the system’s ability to extract information correctly, similarity across claims, group-membership attributes of sources, independence of sources, and so on. The system also had to incorporate prior knowledge, including some commonsense understanding and an understanding of how claims interact with one another, in order to reconcile competing truth claims, for instance.
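A sources-and-claims-only model of the kind described above can be sketched as a Hubs-and-Authorities-style iteration, similar to the simple fact-finding algorithms in the trust literature. This is an illustrative stand-in, not Roth’s system. A source’s trust is the sum of the belief in the claims it makes, a claim’s belief is the sum of the trust in the sources making it, and both are rescaled each round. The source and claim names are hypothetical.

```python
def sums_fact_finder(assertions, iterations=20):
    """Iteratively score sources and claims.

    assertions: dict mapping each source to the set of claims it asserts.
    Returns (trust, belief), each rescaled so its largest value is 1.0.
    """
    claims = {c for cs in assertions.values() for c in cs}
    belief = {c: 1.0 for c in claims}  # start by believing every claim equally
    trust = {s: 0.0 for s in assertions}
    for _ in range(iterations):
        # A source is trusted in proportion to the belief in what it asserts.
        trust = {s: sum(belief[c] for c in cs) for s, cs in assertions.items()}
        top = max(trust.values(), default=0.0) or 1.0
        trust = {s: t / top for s, t in trust.items()}
        # A claim is believed in proportion to the trust in the sources asserting it.
        belief = {c: 0.0 for c in claims}
        for s, cs in assertions.items():
            for c in cs:
                belief[c] += trust[s]
        top = max(belief.values(), default=0.0) or 1.0
        belief = {c: b / top for c, b in belief.items()}
    return trust, belief


assertions = {
    "county_agency": {"shelter_open", "road_closed"},
    "local_news": {"shelter_open", "road_closed"},
    "anonymous": {"shelter_closed"},
}
trust, belief = sums_fact_finder(assertions)
print(sorted(trust, key=trust.get, reverse=True))
```

The sketch also shows why such a model is too simplistic on its own: it cannot tell that "shelter_open" and "shelter_closed" contradict each other, it treats extracted claims as certain, and two sources that merely copy each other count as independent support.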

Another aspect of checking trustworthiness is incorporating evidence. Adding a system layer for evidence requires natural-language-processing techniques to determine what a message is actually saying: does it support or counter a specific claim?
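The question of whether a message supports or counters a claim is a stance-detection problem. The Python sketch below is a deliberately crude lexical stand-in, assumed here only to make the task concrete. It checks whether the message mentions the claim’s key terms and whether it contains a negation or debunking cue. Practical systems rely on trained language models, and the cue list is a hypothetical choice.

```python
import re

# Hypothetical cue words suggesting that a message disputes a claim.
NEGATION_CUES = {"not", "no", "isn't", "wasn't", "false", "fake", "untrue", "rumor", "rumour", "debunked"}


def stance(message, claim_terms):
    """Classify a message as 'supports', 'counters', or 'unrelated' to a claim.

    claim_terms: lowercase key words that must all appear for the message
    to count as discussing the claim at all.
    """
    tokens = set(re.findall(r"[a-z']+", message.lower()))
    if not all(term in tokens for term in claim_terms):
        return "unrelated"
    if tokens & NEGATION_CUES:
        return "counters"
    return "supports"


print(stance("The bridge has collapsed", ["bridge", "collapsed"]))
print(stance("The bridge has NOT collapsed, that's a rumor", ["bridge", "collapsed"]))
```

A rule this simple fails on sarcasm, paraphrase, and double negatives, which is exactly why the evidence layer needs real natural-language processing.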

APPLYING THE “CITIZEN SCIENCE” MODEL TO DISASTER MANAGEMENT

Past research at the University of Colorado, Boulder, and at the University of Delaware has found that during disasters individuals act largely in a self-directed, collaborative way to create emergent behavior. Their decentralized, pluralistic decision making, David Stephenson reported, finds imaginative and innovative ways to cope with the contingencies that typically appear in major disasters. Combining these emergent behaviors with social media tools could provide a significant opportunity to incorporate the public into disaster response, suggested Stephenson.

The success of citizen science initiatives, a form of crowdsourcing that harnesses individual observations to assist in scholarly research, suggests that similar techniques could be very useful in enlisting the public to help cope with disasters. The concept is not new (the 112th Audubon Christmas Bird Count4 was held in December 2011), but smart phones and similar technology have made it much easier to collect information.

__________

3 J. Pasternack and D. Roth. Comprehensive Trust Metrics for Information Networks. 27th Army Science Conference, November 29-December 2, 2010, Orlando, Florida.

Although the primary goal in citizen science projects is to produce research and knowledge, these projects also serve as effective outreach mechanisms, Stephenson observed. Citizen science is a powerful educational tool because it involves volunteers directly in the research process and requires the creation of simple, easy-to-follow educational programs that enable nonexpert volunteers to participate effectively.

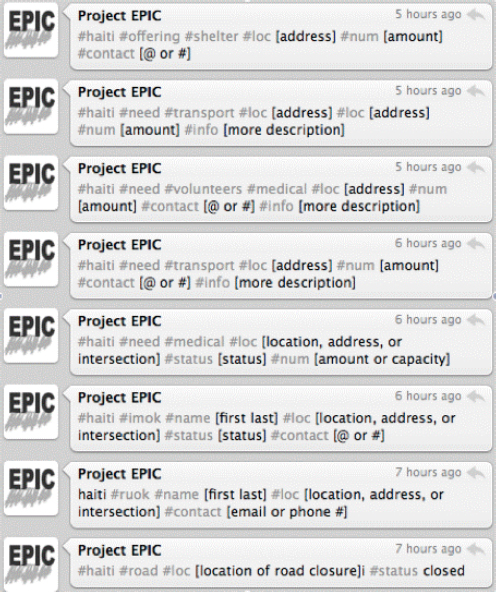

Today, new technologies make the reporting of information much easier. For example, in a National Weather Service (NWS) program,5 Twitter users are asked to report on weather conditions using the hashtag #WX. Through it, users can contribute information that may help the NWS better understand very localized conditions such as microbursts that may be missed by conventional instrumentation and sources. In another initiative, Tweak the Tweet,6 a special syntax using simple, short, and easy-to-remember hashtags was developed to make Twitter messages more focused and machine readable during disasters (Figure 4.1), thus making it easier for people to contribute information in a form useful in a disaster response. The syntax was rushed into service during the 2010 Haiti earthquake recovery and helped provide needed structure to the information that residents and aid workers were reporting from the scene.
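The benefit of a prescriptive hashtag syntax is that a short parser can turn a message into structured fields. The Python sketch below assumes an illustrative set of field tags (#need, #loc, #contact, #info, #name, #status) and is not the published Tweak the Tweet grammar. A field tag captures the text up to the next hashtag, and any other hashtag, such as an event tag or the NWS #WX tag, is collected as a plain tag. Text outside field tags is ignored.

```python
import re

# Illustrative subset of field tags; the real Tweak the Tweet grammar differs in detail.
FIELD_TAGS = {"need", "loc", "contact", "info", "name", "status"}
TAG_RE = re.compile(r"#(\w+)")


def parse_tweak_the_tweet(text):
    """Split a hashtag-structured message into a dict of fields.

    A field tag captures the free text up to the next hashtag; other
    hashtags (event tags, #WX, ...) are collected under "tags".
    """
    record = {"tags": []}
    matches = list(TAG_RE.finditer(text))
    for i, m in enumerate(matches):
        tag = m.group(1).lower()
        end = matches[i + 1].start() if i + 1 < len(matches) else len(text)
        value = text[m.end():end].strip()
        if tag in FIELD_TAGS:
            record[tag] = value
        else:
            record["tags"].append(tag)
    return record


def is_weather_report(text):
    """True if the message carries the #WX hashtag (case-insensitive)."""
    return "wx" in parse_tweak_the_tweet(text)["tags"]


# Hypothetical messages, for illustration only.
print(parse_tweak_the_tweet("#haiti #need water and tents #loc Main Street clinic #contact @helpdesk"))
print(is_weather_report("Hail the size of golf balls #WX"))
```

Because each field is delimited by a fixed tag, responders can sort, filter, and map incoming messages without reading every one.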

Recent technologies open many other possibilities for disaster reporting. Smart phones can generally determine their location precisely, and this information can be attached to a person’s messages (if the user activates this feature), making it possible for emergency managers to map the sources of reports. Another useful capability is sending still images and video. Multiple pictures or video clips shot from different perspectives could give authorities a more comprehensive view of an event.
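Mapping the sources of geotagged reports can start with something as simple as counting reports per grid cell. The Python sketch below assumes each report is a dict with hypothetical "lat" and "lon" keys that are absent or None when the user has not shared a location. A cell size of 0.01 degree is about 1.1 km north-south and narrower east-west away from the equator.

```python
import math
from collections import Counter


def grid_cell(lat, lon, cell_deg=0.01):
    """Snap a coordinate to the index of a square grid cell cell_deg degrees wide."""
    return (math.floor(lat / cell_deg), math.floor(lon / cell_deg))


def reports_per_cell(reports, cell_deg=0.01):
    """Count geotagged reports in each grid cell, skipping reports without a location."""
    counts = Counter()
    for r in reports:
        if r.get("lat") is None or r.get("lon") is None:
            continue  # the user did not share a location
        counts[grid_cell(r["lat"], r["lon"], cell_deg)] += 1
    return counts


# Hypothetical reports near Port-au-Prince, for illustration only.
reports = [
    {"lat": 18.5412, "lon": -72.3388, "text": "building down"},
    {"lat": 18.5419, "lon": -72.3381, "text": "people trapped"},
    {"lat": 18.6051, "lon": -72.3052, "text": "road blocked"},
    {"text": "need water"},
]
print(reports_per_cell(reports).most_common(1))
```

Cells with many reports are natural places to look first, although a dense cluster can also reflect where phones and connectivity are, not only where need is greatest.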

__________

4 The Audubon Christmas Bird Count is a census of birds performed annually by volunteer birdwatchers.

5 See http://www.nws.noaa.gov/stormreports/ and http://www.nws.noaa.gov/stormreports/twitterStormReports_SDD.pdf.

6 Kate Starbird, Leysia Palen, Sophia B. Liu, Sarah Vieweg, Amanda Hughes, Aaron Schram, Kenneth Mark Anderson, Mossaab Bagdouri, Joanne White, Casey McTaggart, and Chris Schenk, Promoting structured data in citizen communications during disaster response: An account of strategies for diffusion of the “Tweak the Tweet” syntax, Christine Hagar (Ed.), Crisis Information Management: Communication and Technologies, pp. 43-63, Chandos Publishing, 2012; K. Starbird and J. Stamberger, 2010, Tweak the tweet: Leveraging microblogging proliferation with a prescriptive grammar to support citizen reporting, short paper presented at the 7th International Information Systems for Crisis Response and Management Conference, Seattle, Wash., May 2010. Also see http://epic.cs.colorado.edu/?page_id=11.

OBSERVATIONS OF WORKSHOP PARTICIPANTS

Observations on credibility, authenticity, and reliability offered by workshop panelists and participants in the discussion that followed the panel session included the following:

• There are many information brokers, including many not traditionally viewed as official sources of news, who can serve as trusted sources in social media, such as individuals active in a particular geographical area or in organizations such as the Standby Task Force. Many of these brokers are taking steps to verify information. Those following these trusted brokers can in turn share this information with others who may not know who is a trusted broker.

• Technology allows us to use distributed approaches to establishing trust. For example, although a single individual’s report may not be trustworthy on its own, greater trust can be established if multiple reports containing similar information can be found.

• The ways in which people seek information during a disaster can be different from the ways they seek information normally. For example, research has shown that during a disaster or mass emergency, people are more willing than under normal circumstances to follow individuals who are different from themselves. They also tend to seek firsthand, “on the ground” information. Locality and hyperlocality matter. On Twitter, messages from formal emergency response agencies and local media are retweeted more often than messages from other sources.7

• What can be learned from past research on emergent behavior (where groups of individuals collectively complete complex tasks they could not do independently)? What prior results apply to emergent behavior with social media, and what aspects might be different?

• At the same time that they are learning how to evaluate information provided by the public, officials must also find ways to build their credibility with the public. An effort to build credibility can be as simple as an acknowledgment that an organization is listening to the public. This kind of direct communication between officials and volunteers builds a network of trust.
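The distributed approach to trust noted in the observations, looking for multiple reports with similar information, can be sketched as counting distinct reporters per claim. In the Python sketch below, the report fields ("reporter", "claim", "place") and the three-reporter threshold are hypothetical. "Similar information" is approximated by an exact match after lowercasing, whereas real systems need fuzzy matching and a check that the reports are independent (a retweet is not an independent report).

```python
from collections import defaultdict


def corroborated(reports, min_sources=3):
    """Flag each (claim, place) group backed by at least min_sources distinct reporters."""
    reporters = defaultdict(set)
    for r in reports:
        key = (r["claim"].strip().lower(), r["place"].strip().lower())
        reporters[key].add(r["reporter"])  # a set, so repeat posts by one person count once
    return {key: len(who) >= min_sources for key, who in reporters.items()}


# Hypothetical reports, for illustration only.
reports = [
    {"reporter": "a", "claim": "Flooding", "place": "Elm St"},
    {"reporter": "b", "claim": "flooding", "place": "elm st"},
    {"reporter": "c", "claim": "Flooding ", "place": "Elm St"},
    {"reporter": "d", "claim": "Fire", "place": "Oak Ave"},
    {"reporter": "d", "claim": "Fire", "place": "Oak Ave"},
]
print(corroborated(reports))
```

Counting distinct reporters, not raw messages, is the key design choice: one persistent account posting the same rumor repeatedly should not look like independent confirmation.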

__________

7 Kate Starbird and Leysia Palen. Pass it on?: Retweeting in a mass emergency. Proceedings of the Conference on Information Systems for Crisis Response and Management (ISCRAM 2010). Seattle, Wash., May 2010.