4

Performance Appraisal: Definition, Measurement, and Application

INTRODUCTION

The science of performance appraisal is directed toward two fundamental goals: to create a measure that accurately assesses the level of an individual's job performance and to create an evaluation system that will advance one or more operational functions in an organization. Although all performance appraisal systems encompass both goals, they are reflected differently in two major research orientations, one that grows out of the measurement tradition, the other from human resources management and other fields that focus on the organizational purposes of performance appraisal.

Within the measurement tradition, emanating from psychometrics and testing, researchers have worked and continue to work on the premise that accurate measurement is a precondition for understanding and accurate evaluation. Psychologists have striven to develop definitive measures of job performance, on the theory that accurate job analysis and measurement instruments would provide both employer and employee with a better understanding of what is expected and a knowledge of whether the employee's performance has been effective. By and large, researchers in measurement have made the assumption that if the tools and procedures are accurate (e.g., valid and reliable), then the functional goals of organizations using tests or performance appraisals will be met. Much has been learned, but as this summary of the field makes explicit, there is still a long way to go.

In a somewhat different vein, scholars in the more applied fields (human resources management, organizational sociology, and more recently applied psychology) have focused their efforts on the usability and acceptability of performance appraisal tools and procedures. They have concerned themselves less with questions of validity and reliability than with the workability of the performance appraisal system within the organization, its ability to communicate organizational standards to employees, to reward good performers, and to identify employees who require training and other development activities. For example, the scholarship in the management literature looks at the use of performance appraisal systems to reinforce organizational and employee belief systems. The implicit assumption of many applied researchers is that if the tools and procedures are acceptable and useful, they are also likely to be sufficiently accurate from a measurement standpoint.

From a historical perspective, until the last decade research on performance appraisal was largely dominated by the measurement tradition. Performance appraisals were viewed in much the same way as tests; that is to say, they were evaluated against criteria of validity, reliability, and freedom from bias. The emphasis throughout was on reducing rating errors, which was assumed to improve the accuracy of measurement. The research addressed two issues almost exclusively—the nature and quality of the scales to be used to assess performance and rater training. The question of which performance dimensions to evaluate tended to be taken as a given.

Although, strictly speaking, we do not disagree with the test analogy for performance appraisals, it can be misleading. Performance appraisals are different from the typical standardized test in that the "test" in this case is a combination of the scale and the person who completes the rating. And, contrary to standardized test administration, the context in which the appraisal process takes place is difficult if not impossible to standardize. These complexities were often overlooked in the performance appraisal literature in the psychometric tradition. The research on scales has tended to treat all variation attributable to raters as error variance. The classic training research can be seen as attempting to develop and evaluate ways of standardizing the person component of the appraisal process.

In the late 1970s there was a shift in emphasis away from the psychometric properties of scales. The shift was initially articulated by Landy and Farr (1980) and was extended by Ilgen and Feldman (1983) and DeNisi et al. (1984). They expounded the thesis that the search for rating error had reached the point of diminishing returns for improving the quality of performance appraisals, and that it was time for the field to concentrate more on what the rater brings to performance appraisal—more specifically, how the rater processes information about the employee and how this mental processing influences the accuracy of the appraisal. The thrust of the research was still on accuracy, but now the focus was on the accuracy of judgment rather than rating errors and the classical psychometric indices of quality.

Just as there was dissatisfaction with progress in performance appraisal research at the end of the 1970s, recent literature suggests dissatisfaction with the approaches of the 1980s. But this time the shift promises to be more fundamental. The most recent research (Ilgen et al., 1989; Murphy and Cleveland, 1991) appears to reject the goal of precision measurement as impractical. From this point of view, prior research has either ignored or underestimated the powerful impact of organizational context and people's perceptions of it. The context position is that, although rating scale formats, training, and other technical qualities of performance appraisals do influence the qualities of ratings, the quality of performance appraisals is also strongly affected by the context in which they are used. It is argued that research on performance appraisals now needs to turn to learning more about the conditions that encourage raters to use the performance appraisal systems in the way that they were intended to be used. At this juncture, therefore, it appears that the measurement and management traditions in performance appraisal have reached a rapprochement.

How do these varied bodies of research contribute to an understanding of performance appraisal technology and application? Can jobs be accurately described? Can valid and reliable measures of performance be developed? Does the research offer evidence that performance appraisal instruments and procedures have a positive effect on individual and organizational effectiveness? Is there evidence that performance appraisal systems contribute to communication of organizational goals and performance expectations as management theory would lead us to believe? What does the recent focus on the interactions between appraisal systems and organizational context suggest about the probable accuracy of appraisals when actually used to make decisions about individual employees? These questions and their treatment in the psychological research and human resources management literature form the major themes of this chapter.

In the following pages we present the results of research in the areas of psychometrics, applied psychology, and human resources management on performance description, performance measurement, and performance assessment for purposes of enhancing individual employee performance. The first section deals with measurement issues. The discussion proceeds from a general description of the research on job performance and its measurement to a description of the factors that can influence the quality of the performance assessment. Research relating to managerial-level jobs is presented as available, but most of the work in job performance description and measurement has involved nonmanagerial jobs.1 The second section deals with research on the more applied issues, such as the effects of rater training and the contextual sources of rating distortion.

PERFORMANCE APPRAISAL AND THE MEASUREMENT TRADITION

The Domain of Job Performance

The definition and measurement of job performance has been a central theme in psychological and organizational research. Definitions have ranged from general to specific and from quantitative to qualitative. Some researchers have concentrated their efforts on defining job performance in terms of outcomes; others have examined job behaviors; still others have studied personal traits such as conscientiousness or leadership orientation as correlates of successful performance. The more general, qualitative descriptions tend to be used for jobs that are complex and multifaceted like those at managerial levels, while quantitative descriptions are used frequently to describe highly proceduralized jobs for which employee actions can be measured and the resulting outcomes often quantified. The principal purpose of this research has been to enhance employee performance (via better selection, placement, and retention decisions), under the assumption that cumulative individual performance will influence organizational performance.

When considering measures of individual job performance, there is a tendency in the literature to characterize some measures as objective and others as subjective. We believe this to be a false distinction that may create too much confidence in the former and an unjustified suspicion about the latter. Measurement of performance in all jobs, no matter how structured and routinized they are, depends on external judgment about what the important dimensions of the job are and where the individual's performance falls on each dimension. Our discussion in this chapter avoids the artificial distinctions of objective and subjective and instead focuses on the role of human judgment in the performance appraisal process.

Initially, applied psychologists were optimistic about their ability to identify and measure job performance. Job analyses were used as the basis for constructing selection tests, for developing training programs, and for determining the strengths and weaknesses of employees. However, many of the results were disappointing and, as experience was gained, researchers began to realize that describing the constituent dimensions of a job and understanding its performance requirements was not a straightforward task. Today it is recognized that job performance is made up of complex sets of interacting factors, some of them attributable to the job, some to the worker, and some to the environment. Thus, in even the simplest of jobs many elements of "job performance" are not easily isolated or directly observable. It is also clear to social scientists that the definition of what constitutes skill or successful work behavior is contingent and subject to frequent redefinition. In any appraisal system, the performance factors rated depend on the approach taken to job analysis, i.e., worker attributes or job tasks. There is evidence that different expert analysts and different analytic methods will result in different judgments about job skills (England and Dunn, 1988).

Furthermore, the evaluation of job performance is subject to social and organizational influences. In elucidation of this point, Spenner (1990) has identified several theoretical propositions concerning the social definition of skill or of what is considered effective job behavior. For example, scholars in the constructionist school argue that what is defined as skilled behavior is influenced by interested parties, such as managers, unions, and professions. Ultimately, what constitutes good and poor performance depends on organizational context. The armed forces, for example, place a great deal of importance on performance factors like "military bearing." Identical task performance by an auto mechanic would be valued differently and therefore evaluated differently by the military than by a typical car dealership. In order to capture some of this complexity, Landy and Farr (1983) propose that descriptions of the performance construct for purposes of appraisal should include job behavior, situational factors that influence or interact with behavior, and job outcomes.

Dimensions of Job Performance

Applied psychologists have used job analysis as a primary means for understanding the dimensions of job performance (McCormick, 1976, 1979). There have been a number of approaches to job analysis over the years, including the job element method (Clark and Primoff, 1979), the critical incident method (Flanagan, 1954; Latham et al., 1979), the U.S. Air Force task inventory approach (Christal, 1974), and those methods that rely on structured questionnaires such as the Position Analysis Questionnaire (McCormick et al., 1972; Cornelius et al., 1979) and the Executive Position Description Questionnaire developed by Hemphill (1959) to describe managerial-level jobs in large organizations. All of these methods share certain assumptions about good job analysis practices and all are based on a variety of empirical sources of information, including surveys of task performance, systematic observations, interviews with incumbents and their supervisors, review of job-related documentation, and self-report diaries. The results are usually detailed descriptions of job tasks, personal attributes and behaviors, or both.

One of the more traditional methods used to describe job performance is the critical incident technique (Flanagan, 1954). This method involves obtaining reports from qualified observers of exceptionally good and poor behavior used to accomplish critical parts of a job. The resulting examples of effective and ineffective behavior are used as the basis for developing behaviorally based scales for performance appraisal purposes. Throughout the 1950s and 1960s, Flanagan and his colleagues applied the critical incident technique to the description of several managerial and professional jobs (e.g., military officers, air traffic controllers, foremen, and research scientists). The procedure for developing critical incident measures is systematic and extremely time-consuming. In the case of the military officers, over 3,000 incident descriptions were collected and analyzed. Descriptions usually include the context, the behaviors judged as effective or ineffective, and possibly some description of the favorable or unfavorable outcomes.

There is general agreement in the literature that the critical incident technique has proven useful in identifying a large range of critical job behaviors. The major reservations of measurement experts concern the omission of important behaviors and a lack of precision in the wording of incidents, which interferes with their usefulness as guides for interpreting the degree of effectiveness in job performance.

Moreover, there is some research evidence, pertinent to our study of performance appraisal, suggesting that descriptions of task behavior resulting from task or critical incident analyses do not match the way supervisors organize information about the performance of their subordinates (Lay and Jackson, 1969; Sticker et al., 1974; Borman, 1983, 1987). In one of a few studies of supervisors' "folk theories" of job performance, Borman (1987) found that the dimensions that defined supervisors' conceptions of performance included: (1) initiative and hard work, (2) maturity and responsibility, (3) organization, (4) technical proficiency, (5) assertive leadership, and (6) supportive leadership. These dimensions are based more on global traits and broadly defined task areas than they are on tightly defined task behaviors. Borman's findings are supported by several recent cognitive models of the performance appraiser (Feldman, 1981; Ilgen and Feldman, 1983; Nathan and Lord, 1983; DeNisi et al., 1984).

If, as these researchers suggest, supervisors use trait-based cognitive models to form impressions of their employees, the contribution of job analysis to the accuracy of appraisal systems is in some sense called into question. The suggestion is that supervisors translate observed behaviors into judgments about general traits or characteristics, and it is these judgments that are stored in memory. Asking them via an appraisal form to rate job behaviors does not mean that they are reporting what they saw. Rather, they may be reconstructing a behavioral portrait of the employee's performance based on their judgment of the employee's perseverance, maturity, or competence. At the very least, this research makes clearer the complexity of the connections between job requirements, employee job behaviors, and supervisor evaluations of job performance.

The Joint-Service Job Performance Measurement (JPM) Project undertaken by the Department of Defense is among the most ambitious efforts at systematic job analysis to date (Green et al., 1991). This is a large-scale, decade-long research effort to develop measures of job proficiency for purposes of validating the entrance test used by all four services to screen recruits into the enlisted ranks. By the time the project is completed in 1992, over $30 million will have been expended to develop an array of job performance measures (including hands-on job-sample tests, written job knowledge tests, simulations, and, of particular interest here, performance appraisals) and to administer the measures to some 9,000 troops in 27 enlisted occupations.

Each of the services already had an ongoing occupational task inventory system that reported the percentage of job incumbents who perform each task, the average time spent on the task, and incumbents' perceptions of task importance and task difficulty. The services also had in hand soldier's manuals for each occupation that specify the content of the job. From this foundation of what might be called archival data, the services proceeded to a more comprehensive job analysis, calling on both scientists and subject matter experts (typically master sergeants who supervise or train others to do the job) to refine and narrow down the task domain according to such considerations as frequency of performance, difficulty, and importance to the job. Subject matter experts were used for such things as ranking the core tasks in terms of their criticality in a specific combat scenario, clustering tasks based on similarity of principles or procedures, or assigning difficulty ratings to each task based on estimates of how typical soldiers might perform the task. Project scientists used all of this information to construct a purposive sample of 30 tasks to represent the job. From this sample the various performance measures were developed.

The JPM project is particularly interesting for the variety of performance measures that were developed. In addition to hands-on performance tests (by far the most technically difficult and expensive sort of measure to develop and administer) and written job-knowledge tests, the services developed a wide array of performance appraisal instruments. These included supervisor, peer, and self ratings, ratings of very global performance factors as well as job-specific ratings, behaviorally anchored rating scales, ratings with numerical tags, and ratings with qualitative tags. Although the data analysis is still under way, the JPM project can be expected to contribute significantly to our understanding of job performance measurement and of the relationships among the various measures of that performance.

For our purposes, it is instructive to note how the particular conception of job performance adopted by the project influenced everything else, from job analysis to instrument development, to interpretation of the data. First, it was decided to focus on proficiency (can do) and not on the personal attributes that determine whether a person will do the job. Second, tasks were chosen as the central unit of analysis, rather than worker attributes or skill requirements. It follows logically that the performance measures were job-specific and that the measurement focus was on concrete, observable behaviors. All of these decisions made sense. The jobs studied are entry-level jobs assigned to enlisted personnel (jet engine mechanic, infantryman, administrative clerk, radio operator); they are relatively simple and amenable to measurement at the task level. Moreover, the enviable trove of task information virtually dictated the economic wisdom of that approach. And finally, the objectives of the research were well satisfied by the design decisions. During the 1980s the military was faced each year with the task of trying to choose from close to a million 18- to 24-year-olds, most with relatively little training or job experience, in order to fill perhaps 300,000 openings spread across hundreds of military occupations. It was important to be able to demonstrate that the enlistment test is a reasonably accurate predictor of which applicants are likely to be successful in a broad sample of military jobs (earlier research focused on success in training, not job performance). For classification purposes, it was important to understand the relationship between the aptitude subtests and performance in various categories of jobs.

In other words, the picture of job performance that emerged from the JPM research was suited to the organizational objectives and to the nature of the jobs studied. The same job analysis design would not necessarily work in another context, as the following discussion of managerial performance demonstrates.

Descriptions of Managerial Performance

Most of the research describing managerial behavior was conducted between the early 1950s and the mid-1970s. The principal job analysis methods used (in addition to critical incident techniques) were interviews, task analyses, review of written job descriptions, observations, self-report diaries, activity sampling, and questionnaires. Hemphill's (1959) Executive Position Description Questionnaire was one of the earliest uses of an extensive questionnaire to define managerial performance. The results, based on responses from managers, led to the identification of the following ten job factors.

FACTOR A: Providing a Staff Service in Nonoperational Areas. Renders various staff services to supervisors: gathering information, interviewing, selecting employees, briefing superiors, checking statements, verifying facts, and making recommendations.

FACTOR B: Supervision of Work. Plans, organizes, and controls the work of others; concerned with the efficient use of equipment, the motivation of subordinates, efficiency of operation, and maintenance of the work force.

FACTOR C: Business Control. Concerned with cost reduction, maintenance of proper inventories, preparation of budgets, justification of capital expenditures, determination of goals, definition of supervisor responsibilities, payment of salaries, enforcement of regulations.

FACTOR D: Technical Concerns With Products and Markets. Concerned with development of new business, activities of competitors, contacts with customers, assisting sales personnel.

FACTOR E: Human, Community, and Social Affairs. Concerned with company goodwill in the community, participation in community affairs, speaking before the public.

FACTOR F: Long-range Planning. Broad concerns oriented toward the future; does not get involved in routine and tends to be free of direct supervision.

FACTOR G: Exercise of Broad Power and Authority. Makes recommendations on very important matters; keeps informed about the company's performances; interprets policy; has a high status.

FACTOR H: Business Reputation. Concerned with product quality and/or public relations.

FACTOR I: Personal Demands. Senses obligation to conduct oneself according to the stereotype of the conservative business manager.

FACTOR J: Preservation of Assets. Concerned about capital expenditures, taxes, preservation of assets, loss of company money.

An analysis of these factors suggests relatively little focus on product quality. Rather, most factors dealt with creating internal services and controls for efficiency and developing external images to promote acceptability of the company in the community.

More recently, Flanders and Utterback (1985) reported on the development and use of the Management Excellence Inventory (MEI) by the Office of Personnel Management (OPM). The MEI is based on a model describing management functions and the skills needed to perform each function. Analyses conducted at three levels of management suggested that different skills and knowledge are needed to be successful at different levels. Lower-level managers needed technical competence and interpersonal communication skills; middle-level managers needed less technical competence but substantial skill in areas such as communication, leadership, flexibility, concern with goal achievement, and risk-taking; and top-level managers needed all the skills of a middle-level manager plus sensitivity to the environment, a long-term view, and a strategic view. A review of these skill areas indicates that all are general, some are task-oriented, and some, such as flexibility and leadership, are personal traits.

The finding that managers at different levels have different skill requirements is also reflected in the research of Katz (1974), Mintzberg (1975), and Kraut et al. (1989). In essence, the work describing managerial jobs has concentrated on behaviors, skills, or traits in general terms. These researchers suggest that assessment of effective managerial performance in terms of specific behaviors is particularly difficult because many of the behaviors related to successful job performance are not directly observable and represent an interaction of skills and traits. Traits are widely used across organizations and are easily accepted by managers because they have face validity. However, they are relatively unattractive to measurement experts because they are not particularly sensitive to the characteristics of specific jobs and they are difficult to observe, measure, and verify. In many settings, outcomes have been accepted as legitimate measures. However, as measures of individual performance they are problematical because they are the measures most likely to be affected by conditions not under the control of the manager.

Implications

In sum, virtually all of the analysis of managerial performance has been at a global level; little attention has been given to the sort of detailed, task-centered definition that characterized the military JPM research. (One exception is the work of Gomez-Mejia et al. [1982], which involved the use of several job analysis methods to develop detailed descriptions of managerial tasks.) This focus on global dimensions conveys a message from the research community about the nature of managerial performance and the infeasibility of capturing its essence through easily quantified lists of tasks, duties, and standards. Reliance on global measures means that evaluation of a manager's performance is, of necessity, based on a substantial degree of judgment. Attempts to remove subjectivity from the appraisal process by developing comprehensive lists of tasks or job elements or behavioral standards are unlikely to produce a valid representation of the manager's job performance and may focus raters' attention on trivial criteria.

In a private-sector organization with a measurable bottom line, it is frequently easier to develop individual, quantitative work goals (such as sales volume or the number of units processed) than it is in a large bureaucracy like the federal government, where a bottom line tends to be difficult to define. However, the easy availability of quantitative goals in some private-sector jobs may actually hinder the valid measurement of the manager's effectiveness, especially when those goals focus on short-term results or solutions to immediate problems. There is evidence that the incorporation of objective, countable measures of performance into an overall performance appraisal can lead to an overemphasis on very concrete aspects of performance and an underemphasis on those less easily quantified or that yield concrete outcomes only in the long term (e.g., development of one's subordinates) (Landy and Farr, 1983).

It appears that managerial jobs fit less easily within the measurement tradition than simpler, more concrete jobs, if one interprets valid performance measurement to require job-related measures and a preference for "objective" measures (as the Civil Service Reform Act appears to do). It remains to be seen whether any approaches to performance appraisal can be demonstrated to be reliable and valid in the psychometric sense and, if so, how global ratings compare with job-specific ratings.

Psychometric Properties of Appraisal Tools and Procedures

Approaches to Appraisal

As is true of standardized tests, performance evaluations can be either norm-referenced or criterion-referenced. In norm-referenced appraisals, employees are ranked relative to one another based on some trait, behavior, or output measure—this procedure does not necessarily involve the use of a performance appraisal scale. Typically, ranking is used when several employees are working on the same job. In criterion-referenced performance evaluations, the performance of each individual is judged against a standard defined by a rating scale. Our discussion in this section focuses on criterion-referenced appraisal because it is relevant to more jobs, particularly at the managerial level, and because it is the focus of the majority of the research.
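The contrast between the two approaches can be illustrated with a minimal sketch. The employee names, scores, and cut points below are hypothetical and are not drawn from the research reviewed here; the sketch only shows that a norm-referenced result depends on who else is being rated, whereas a criterion-referenced result depends on a fixed standard.

```python
from typing import Dict, List, Tuple

# Hypothetical output scores for four employees working on the same job.
scores: Dict[str, float] = {"Avery": 72.0, "Blake": 88.0, "Casey": 65.0, "Devon": 88.0}

# Hypothetical standards, listed from the highest minimum score to the lowest.
CUT_POINTS: List[Tuple[float, str]] = [
    (85.0, "exceeds standard"),
    (70.0, "meets standard"),
    (0.0, "below standard"),
]


def norm_referenced(scores: Dict[str, float]) -> Dict[str, int]:
    """Rank employees relative to one another (1 = best); ties share a rank."""
    distinct = sorted(set(scores.values()), reverse=True)
    return {name: distinct.index(value) + 1 for name, value in scores.items()}


def criterion_referenced(
    scores: Dict[str, float], cut_points: List[Tuple[float, str]]
) -> Dict[str, str]:
    """Judge each employee against fixed standards, independent of peers."""
    levels: Dict[str, str] = {}
    for name, value in scores.items():
        for minimum, label in cut_points:
            if value >= minimum:
                levels[name] = label
                break
    return levels


ranks = norm_referenced(scores)
levels = criterion_referenced(scores, CUT_POINTS)
print(ranks)
print(levels)
```

Adding or removing an employee can change every rank in the first result, but it leaves each level in the second result untouched.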

In criterion-referenced performance appraisal the "measurement system" is a person-instrument couplet that cannot be separated. Unlike counters on machines, the scale does not measure performance; people measure performance using scales. Performance appraisal is a process in which humans judge other humans; the role of the rating scale is to make human judgment less susceptible to bias and error.

Can raters make accurate assessments using the appraisal instruments? In addressing this question, researchers have studied several types of rating error, each of which was believed to influence the accuracy of the resulting rating. Among the most commonly found types of errors and problems are (1) halo: raters giving similar ratings to an employee on several purportedly independent rating dimensions (e.g., quality of work, leadership ability, and planning); (2) leniency: raters giving higher ratings than are warranted by the employee's performance; (3) restriction in range: raters giving similar ratings to all employees; and (4) unreliability: different raters rating the same employee differently or the same rater giving different ratings from one time to the next.
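The four problems listed above can each be given a simple numerical index. The sketch below is one possible operationalization, not a procedure taken from the studies cited: the data are invented, a 1-to-7 scale is assumed, and the function names are hypothetical. Such indices flag possible error only; a high mean rating, for example, may reflect a genuinely strong work group rather than a lenient rater.

```python
from math import sqrt
from statistics import mean, pstdev
from typing import Dict, List

Ratings = Dict[str, List[int]]  # employee -> ratings on each dimension

SCALE_MIDPOINT = 4.0  # assumption: ratings are made on a 1-to-7 scale

# Invented ratings of three employees on three dimensions by two raters.
rater_a: Ratings = {"emp1": [6, 6, 6], "emp2": [7, 7, 7], "emp3": [6, 6, 6]}
rater_b: Ratings = {"emp1": [3, 5, 4], "emp2": [6, 7, 5], "emp3": [2, 4, 3]}


def overall(ratings: Ratings) -> Dict[str, float]:
    """Average each employee's ratings across dimensions."""
    return {emp: mean(dims) for emp, dims in ratings.items()}


def leniency_index(ratings: Ratings) -> float:
    """Mean rating minus the scale midpoint; large positive values suggest leniency."""
    every_rating = [r for dims in ratings.values() for r in dims]
    return mean(every_rating) - SCALE_MIDPOINT


def range_index(ratings: Ratings) -> float:
    """Spread of overall ratings across employees; small values suggest range restriction."""
    return pstdev(list(overall(ratings).values()))


def halo_index(ratings: Ratings) -> float:
    """Average within-employee spread across dimensions; small values suggest halo."""
    return mean([pstdev(dims) for dims in ratings.values()])


def interrater_r(first: Ratings, second: Ratings) -> float:
    """Pearson correlation between two raters' overall ratings of the same employees."""
    employees = sorted(set(first) & set(second))
    x = [overall(first)[e] for e in employees]
    y = [overall(second)[e] for e in employees]
    mx, my = mean(x), mean(y)
    cov = sum((a - mx) * (b - my) for a, b in zip(x, y))
    denom = sqrt(sum((a - mx) ** 2 for a in x) * sum((b - my) ** 2 for b in y))
    return cov / denom if denom else float("nan")


for label, data in (("rater_a", rater_a), ("rater_b", rater_b)):
    print(label, round(leniency_index(data), 2), round(range_index(data), 2),
          round(halo_index(data), 2))
print("inter-rater r:", round(interrater_r(rater_a, rater_b), 2))
```

In these invented data, the first rater shows the pattern the literature associates with halo, leniency, and range restriction: identical ratings across dimensions, a high mean, and little spread across employees.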

Over the years, a variety of innovations in scale format have been introduced with the intention of reducing rater bias and error. Descriptions of various formats are presented below prefatory to the committee's review of research on the psychometric properties of performance appraisal systems.

Scale Formats

The earliest performance appraisal rating scales were graphic scales—they generally provided the rater with a continuum on which to rate a particular trait or behavior of the employee. Although these scales vary in the degree of explicitness, most provide only general guidance on the nature of the underlying

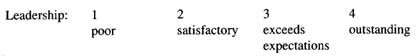

dimension or on the definition of scale points along the continuum. Some scales present mere numerical anchors:

Others present adjectival descriptions at each anchor point:

Raters are given the freedom to mark anywhere on the continuum—either at a defined scale point or somewhere between the points. Trait scales, which are constructed from employees' personal characteristics (such as integrity, intellectual ability, leadership orientation) are generally graphic scales. Many decades of research on ratings made with graphic scales found them fraught with measurement errors of unreliability, leniency, and range restriction, which many scholars attributed to the limited amount of definition and guidance they provided the rater.

In reaction to these perceived limitations of graphic scales, a second type of scale, the behaviorally anchored rating scale (BARS), was developed. The seminal work on BARS was done by Smith and Kendall (1963). Although BARS still present performance on a continuum, they provide specific behavioral anchors to help clarify the meaning of the performance dimensions and help calibrate the raters' definitions of what constitutes good and poor performance. Some proponents of behaviorally focused scales also claimed that they would eliminate unnecessary subjectivity (Latham and Wexley, 1977). The methodology used in BARS was designed by researchers to form a strong link between the critical behaviors in accomplishing a specific job and the instrument created to measure those behaviors. Scale development follows a series of detailed steps requiring careful job analysis and the identification of effective and ineffective examples of critical job behavior. The design process is iterative and there are often two or three groups of employees involved in review and evaluation. The final scales usually range from five to nine points and include behavioral examples around each point to assist raters in observing and evaluating employees' performance.

A third type of scale, the Mixed Standard Scale proposed by Blanz and Ghiselli (1972), was designed to be proactive in preventing rater biases. For each performance dimension of interest, three behavioral examples are developed that describe above-average, average, and below-average performance. However, raters are presented with a randomly ordered list of behavioral examples without reference to performance dimensions and are asked to indicate whether the ratee's performance is equal to, worse than, or better than the performance presented in the example. In this method, the graphic continuum and the definitions of the performance dimensions are eliminated from the rating form. The actual performance score is computed by someone other than the rater.
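The scoring step for a Mixed Standard Scale can be sketched in code. The chapter does not give the scoring rules, so the seven-point key below is a hypothetical one, modeled on keys commonly described for this format; the item identifiers, dimension names, and function names are likewise illustrative assumptions.

```python
# Hypothetical scoring key for one dimension of a Mixed Standard Scale.
# Each response compares the ratee with one behavioral example:
#   "+" = better than the example, "0" = equal to it, "-" = worse than it.
# Tuples are ordered (above-average item, average item, below-average item).
SCORING_KEY = {
    ("+", "+", "+"): 7,
    ("0", "+", "+"): 6,
    ("-", "+", "+"): 5,
    ("-", "0", "+"): 4,
    ("-", "-", "+"): 3,
    ("-", "-", "0"): 2,
    ("-", "-", "-"): 1,
}


def score_dimension(responses):
    """Return the 1-7 score for a consistent response pattern, else None."""
    return SCORING_KEY.get(tuple(responses))


def score_form(shuffled_responses, item_map):
    """Regroup randomly ordered item responses by dimension and score them.

    item_map maps an item id to (dimension, level), where level is
    0 for the above-average example, 1 for average, 2 for below-average.
    """
    grouped = {}
    for item_id, response in shuffled_responses.items():
        dimension, level = item_map[item_id]
        grouped.setdefault(dimension, [None, None, None])[level] = response
    return {dim: score_dimension(resp) for dim, resp in grouped.items()}


# Illustrative form: six items presented in random order, two dimensions.
item_map = {
    "q1": ("planning", 1), "q2": ("teamwork", 0), "q3": ("planning", 2),
    "q4": ("teamwork", 2), "q5": ("planning", 0), "q6": ("teamwork", 1),
}
responses = {"q1": "+", "q2": "-", "q3": "+", "q4": "+", "q5": "0", "q6": "0"}
scores = score_form(responses, item_map)
print(scores)
```

Because the rater sees only the shuffled items, the mapping from items to dimensions and the key itself stay with whoever computes the score. Patterns missing from the key (logically inconsistent responses) return `None` in this sketch.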

Forced-choice scales represent an even more extreme attempt to disguise the rating continuum from the rater. This method is based on the careful development of behavioral examples of the job that are assigned a preference value based on social desirability estimates made by job experts. Raters are presented with three or four equally desirable behaviors and asked to select the one that best describes the employee. The employee's final rating is calculated by someone other than the rater.

We turn now to a discussion of the validity, reliability, and other psychometric properties of performance appraisals, pointing out (as the literature allows) any evidence as to the relative merits of particular scale formats.

Validity

Validity is a technical term that has to do with the accuracy and relevance of measurements. Since the validity of performance appraisals is a critical issue to measurement specialists and a basic concern to practitioners who must withstand legal challenges to their performance appraisal tools and procedures, we are presenting the following discussion of validation strategies and how they apply to the examination of performance appraisal.

Cronbach (1990:150-151) describes validation as an "inquiry into the soundness of an interpretation." He sees the validation process as one of posing hypotheses, testing them, and supporting or revising the interpretation based on the findings. He makes the point that challenge to a proposition or hypothesis is as important as the collection of evidence supporting the interpretation. Within this framework, the researcher is continually recognizing rival hypotheses and testing them—the result is a greater understanding of the inferences that can be made about the characteristics of the individuals who take a test or who are measured on a performance appraisal scale.

If the discussion seems rarified thus far, a practical example drawn from one of the biggest success stories of the measurement tradition—testing to select aircraft crew members during World War II—may be of interest. In an article with the pithy title "Validity for What," Jenkins (1946) describes the development and use of a test to select pilots, navigators, and bombardiers. For each position, military psychologists found that those who scored well on the test were also the most successful in technical training, so the test was put into use to select aircrews. Several years into the war, uneasiness with the hit ratios on bombing runs led to Jenkins's follow-up study, which revealed that scores on the selection test, though they predicted success in bombardier training, were not correlated with success in hitting the target—and this, ultimately, was the performance of greatest interest.

At least three major validation strategies have been proposed in the area of testing—criterion-related, content, and construct validation. Although these strategies often have been treated as separate in the past, current thinking emphasizes that validation should integrate information from all approaches (Landy, 1986; Wainer and Braun, 1988; Cronbach, 1990). Content validation gives confidence in a test or measure by exploring the match between the content of the measure and the content of the job (e.g., a test of typing speed and accuracy for a clerk/typist job). Criterion-related validation demonstrates statistically the relationships between people's scores on a measurement instrument and their scores on the performance of interest (e.g., scores on an employment test and supervisor ratings of on-the-job performance; Scholastic Aptitude Test [SAT] scores and college grade-point average). This is an important way of providing scores with meaning. For example, if a company finds that job applicants who score 8 on an entry test usually get positive supervisor ratings or are likely to be the ones chosen for promotion at the end of a probationary period, whereas those who score 4 are far less likely to, the scores of 4 and 8 begin to take on some meaning. The search for construct validity is an attempt to get at the attribute that makes some individuals score 4 and others 8.

Cronbach (1990:179) views construct validation as a continuous process. He states: "An interpretation is to be supported by putting many pieces of evidence together. Positive results validate the measure and the construct simultaneously. Failure to confirm the claim leads to a search for a new measuring procedure or for a concept that fits the data better." In traditional analysis, two forms of evidence have been used to demonstrate construct validity. The first is convergent evidence, which shows that the measure in question is related to the other measures of the same construct. In psychological testing there are many tests or parts of tests that purport to measure the same construct. The second form of evidence is discriminant validity, which shows that a given measure of a construct has a weak relationship with measures of other constructs. Discriminant validity, according to Angoff (1988), is a stronger test of construct validity than is convergent validity because discriminant validity implies a challenge from rival hypotheses. Recently, psychometricians have expanded the view of construct validity to include evidence of content and criterion validity as well as other sources of evidence that serve to test hypotheses about the underlying nature of a construct. This expanded definition provides the opportunity for introducing a variety of forms of evidence to test validity.

Content Evidence In performance appraisal, a determination of the content validity of the appraisal has been based on the type of analysis used in developing the appraisal instrument. If detailed job analyses or critical incident techniques were used and behaviorally based scales were developed, it has been generally assumed that the appraisal instruments have content validity. That is, the behaviors placed on the performance dimension scales look like they are representative of the behaviors involved in performing the job and they have been judged by the subject matter experts to be so. Several researchers have used this approach (e.g., Campbell et al., 1970; DeCotiis, 1977; Borman, 1978).

However, any simple reliance on content validity to justify a measurement system has long since been dismissed by measurement specialists. Even if the accomplishment of particular tasks is linked to effective job performance, a comprehensive enumeration of all job tasks and rating on each of them does not give any guidance on what is important to effective job performance and what is not. For example, at a nonmanagerial level, Bialek et al. (1977) reported that enlisted infantrymen spent less than half of their work time performing the technical tasks for which they had been trained; in many cases, only a small proportion of a soldier's time was devoted to accomplishing the tasks contained in the specific job description. These results are reinforced by the work of Campbell et al. (1970) and Christal (1974). What is needed is to go beyond the list of behaviors to a testable hypothesis about the behaviors that constitute effective task performance for a specific job construct.

Moreover, for some jobs, such as those involving managerial performance, the content validity approach is not particularly useful because a large portion of the employee's time is spent in behaviors that are either not observable or are not related to the accomplishment of a specific task. This is particularly true for managers who do many things that cannot be linked unambiguously to the accomplishment of specific tasks (Mintzberg, 1973, 1975). Thus it appears that a content approach is not likely to be sufficient for establishing measurement validity for any job, and for some jobs it will be of little value in making the link between job behaviors and effective performance.

Criterion Evidence The criterion-related approach to validation is not as useful for evaluating performance appraisals as it is with selection tests used to predict later performance. The strength of the approach derives largely from showing a relationship (often expressed as a correlation coefficient) between the measure being validated and some independent, operational performance measure. The fact that course grades are moderately correlated with the SAT or American College Testing (ACT) examinations lends credibility to the claim that the tests measure verbal and quantitative abilities that are important to success in college. The crucial factor is the independence of the operational measure, and that is where difficulty arises. When the measure being studied is a behavioral one, it is difficult to find operational measures for comparison that have the essential independence.

So-called objective behavioral measures—attendance, tardiness, accidents, measures of output, or other indices that do not involve human judgment—appear to provide the best approximation of criteria for performance measures, but studies using such indices are rare. Heneman (1984) was able to locate only 23 studies with a total sample size of 3,178 workers, despite a literature search covering more than 50 years of published research. His meta-analysis assesses the relationship between supervisory ratings and a variety of unspecified "operational indicators" of job performance that do not derive from the rating process. Overall, the magnitude of the relationship between supervisor ratings and the results measures was, in the author's words, relatively weak (a mean correlation of .27, corrected for sampling error and attenuation, with a 90-percent confidence interval of -.07 to .61). The author concludes from this that supervisor ratings and results measures are clearly not interchangeable performance measures. Likewise, the overall results are not a terribly convincing demonstration of the criterion-related validity of performance appraisal, although that finding is hard to interpret since we know virtually nothing about the operational indicators used or, as the author points out, the many possible moderators of the relationship between the ratings and results measures. Furthermore, it is not clear from the article whether the objective measures and the performance ratings were used to evaluate the same performance dimension. Comparing ratings on one dimension with objective measures of another performance dimension tells us little about the relationship between the two measures.

John Hunter's (1983) meta-analysis takes a slightly different approach, looking at the relationships between tests of cognitive ability, tests of job knowledge, and two types of performance measures—job samples and supervisor ratings. He located 14 studies that included at least 3 of the 4 variables, 4 of them on military enlisted jobs (armor crewman, armor repairman, cook, and supply specialist), and 10 on civilian jobs such as cartographer, customs inspector, medical laboratory worker, and firefighter. The question that interested the author was whether supervisor ratings are determined entirely by job performance (the job sample measure) or whether the ratings are influenced by the employee's job knowledge. Hunter reaches the conclusion that job knowledge is twice as important as job performance in the determination of supervisor ratings. Thus his finding of a "moderately high" correlation between supervisor ratings and job performance (.35, corrected for unreliability) is "in large part due to the extent to which supervisors are sensitive to differences in job knowledge" (Hunter, 1983:265).

At least one old hand in the field interpreted Hunter's analysis as good news about performance appraisal. Guion (1983) commented that he had all but concluded that performance appraisal had only public relations value, but that the Hunter data showed to his satisfaction that ratings of performance are "valid, at least to a degree," because they are based to some degree on demonstrated ability to do the job (job sample measures) and on job knowledge. Guion offers a number of explanations for Hunter's finding that job knowledge is more highly correlated with supervisory ratings than are the performance measures. The nature of the performance measures may be part of the answer. Work samples are measures of maximum performance—what a person can do when being observed—rather than typical performance—what a person will do, day in and day out. It may well be that most supervisory ratings are more influenced by typical performance than the occasional best efforts. Or it may simply be that supervisors are more influenced by job knowledge because the direct contact of the supervisor with the employee to be rated is usually some sort of discussion, and discussion is likely to be more informative about job knowledge than actual performance. Whatever the exact cause, Guion suggests an important implication of Hunter's analysis that has special salience for this study: supervisor ratings, if they are more influenced by what employees have learned about their jobs than what they actually do on a day-to-day basis, may be more accurately viewed as trainability ratings than performance ratings.

The Army Selection and Classification Project (Project A) offers another study of the relationship between performance ratings and other measures of job proficiency, including hands-on performance, job knowledge tests, and training knowledge tests (Personnel Psychology, 1990). One of the purposes of this large-scale project was to develop a set of criteria for evaluating job performance in 19 entry-level army jobs. There were five performance factors identified for the criterion model: (1) core technical proficiency (tasks central to a particular job); (2) general soldiering proficiency (general military tasks); (3) effort and leadership; (4) personal discipline; and (5) physical fitness and military bearing. All types of proficiency measures, including performance ratings, were provided for each factor. The results, as reported by Campbell et al. (1990), show that overall performance ratings correlated .20 with a total hands-on score; when corrected for attenuation, the correlation increases to .36. This finding is consistent with the results presented in Hunter's meta-analysis (1983).
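The step from .20 to .36 can be illustrated with the classical correction for attenuation, which divides an observed correlation by the square root of the product of the two measures' reliabilities. The chapter does not report the reliabilities or say which measures were corrected, so the sketch below only works backward to the reliability product the two figures would imply. The reliabilities in the second call are hypothetical values chosen purely for illustration.

```python
import math


def correct_for_attenuation(r_observed, rel_x, rel_y):
    """Classical disattenuation: r_observed / sqrt(rel_x * rel_y)."""
    return r_observed / math.sqrt(rel_x * rel_y)


def implied_reliability_product(r_observed, r_corrected):
    """Product of the two reliabilities implied by an observed/corrected pair."""
    return (r_observed / r_corrected) ** 2


# Reliability product implied by the reported .20 -> .36 correction.
print(round(implied_reliability_product(0.20, 0.36), 3))

# Hypothetical reliabilities (NOT from the source) that give a similar result.
print(round(correct_for_attenuation(0.20, rel_x=0.60, rel_y=0.51), 2))
```

Under this formula, a correction of that size implies a reliability product of roughly .31. This is a reminder that the corrected figure estimates the relationship between error-free measures, not the relationship an organization would observe in practice.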

Convergent and Discriminant Evidence Since other measures of the job performance construct have not been readily available in most settings, it has been necessary for researchers in performance appraisal to rely on agreement among raters or to develop special study designs that produce more than one measure of performance. Campbell and Fiske (1959) proposed the multimethod-multirater method for the purpose of determining the construct validity of trait ratings. Using this approach, two or more groups of raters are asked to rate the performance of the same employees using two rating methods. Examples of methods include BARS, graphic scales, trait scales, and global evaluation. Convergent validity is demonstrated by the agreement among raters across rating methods; discriminant validity is demonstrated by the degree to which the raters are able to distinguish among the performance dimensions.
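The logic of this design can be sketched with a small worked example. The ratings below are invented for six hypothetical ratees, and the rater groups, methods, and dimension names are assumptions made only for illustration. Convergent evidence appears as high correlations between ratings of the same dimension from different rater-method combinations; discriminant evidence appears as low correlations between ratings of different dimensions.

```python
from itertools import combinations
from math import sqrt


def pearson(x, y):
    """Pearson product-moment correlation of two equal-length lists."""
    n = len(x)
    mx, my = sum(x) / n, sum(y) / n
    sxy = sum((a - mx) * (b - my) for a, b in zip(x, y))
    sxx = sum((a - mx) ** 2 for a in x)
    syy = sum((b - my) ** 2 for b in y)
    return sxy / sqrt(sxx * syy)


# Hypothetical ratings of six ratees; keys are (rater group + method, dimension).
ratings = {
    ("supervisor_BARS", "planning"):   [1, 2, 3, 4, 5, 6],
    ("supervisor_BARS", "leadership"): [4, 1, 6, 2, 5, 3],
    ("peer_graphic", "planning"):      [2, 1, 3, 4, 6, 5],
    ("peer_graphic", "leadership"):    [4, 2, 6, 1, 5, 3],
}


def summarize(ratings):
    """Return (mean same-dimension r, mean different-dimension r)."""
    convergent, different_dimension = [], []
    for (key1, v1), (key2, v2) in combinations(ratings.items(), 2):
        (source1, dim1), (source2, dim2) = key1, key2
        r = pearson(v1, v2)
        if dim1 == dim2 and source1 != source2:
            convergent.append(r)           # same dimension, different source
        elif dim1 != dim2:
            different_dimension.append(r)  # different dimensions
    mean = lambda values: sum(values) / len(values)
    return mean(convergent), mean(different_dimension)


convergent_r, different_dimension_r = summarize(ratings)
print(round(convergent_r, 2), round(different_dimension_r, 2))
```

In these invented data the same-dimension correlations are much higher than the cross-dimension ones, which is the pattern the method treats as support for both convergent and discriminant validity. Strong halo would show up as cross-dimension correlations approaching the same-dimension ones.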

Campbell et al. (1973) used the multimethod-multirater technique to compare the construct validity of behaviorally based rating scales with a rating of each behavioral example separated from its dimension (like a Mixed Standard Scale approach). In the summated rating method, raters provided one of four descriptors about the behavior ranging from "exhibiting almost never" to "almost always." The dimension score was the average of the item responses for that dimension. Both rating procedures were used for 537 managers of department stores within the same company. Ratings were provided by store managers and assistant store managers using each method. The behaviorally anchored scales were based on critical incidents collected and analyzed by study participants and researchers.

The results indicated significant convergent validity between rating methods and high discriminant validity between dimensions. That is, the raters agreed about ratees and about their perceptions of the dimensions as they were defined on the instruments. This suggests that the scales provided clear definitions of behaviors, which allowed the raters to discriminate among the behaviors with some degree of consistency. The behavioral rating scales were superior to the summated ratings in terms of halo (similarity of ratings across performance dimensions), leniency (inflated ratings), and discriminant validity. It is not surprising to find agreement between the rating methods, as they are based on the same dimension definitions and the raters were participants in the development of the rating instruments. It is worth noting that developing the behavioral scales was extremely time-consuming, but that the managers felt they gained a better understanding of critical job behaviors—those that could contribute to effective performance. This could be useful if the results were integrated into the management development process.

The weakness of this study is that it does not really compare substantially different methodologies. As Landy and Farr (1983) remarked with reference to a different set of studies, when a common procedure is used to develop the dimensions and/or examples, then the study is really only about different presentation modes—that is, the type of anchor.

Kavanagh et al. (1971) and Borman (1978) also used the multimethod-multirater method to examine convergent and discriminant validity. Kavanagh et al. (1971) compared ratings of managerial traits and job functions made by the superior and two subordinates of middle managers. The traits rated included intellectual capacities, concern for quality, and leadership, while job functions included factors like planning, investigating, coordinating, supervising, etc. The results showed agreement among raters about ratees (.44) but did not demonstrate the ability of the raters to discriminate among the rating dimensions. Raters were more consistent when evaluating personal traits than job functions and, according to Kavanagh et al. (1971), that finding suggests that ratings based on personality traits are more reliable than performance traits. However, they also show an increased level of halo over ratings based on job functions.

Borman (1978) examined the construct validity of BARS under highly controlled laboratory conditions for assessing the performance of managers and recruiting interviewers. Different groups of raters provided ratings for videotaped vignettes representing different levels of performance effectiveness on selected rating dimensions. Performance effectiveness on each dimension was established by a panel of experts. Convergent validity was determined by comparing the performance effectiveness ratings of the experts with those of the raters viewing the taped performances—the resulting correlations were .69 for managers and .64 for recruiters. The discriminant validity, as measured by differences in raters' ratings of ratees across performance dimensions, was .58 for managers and .57 for recruiters. According to Borman, these correlations are higher than those generally found in applied settings. However, the results show that, if rating scales are carefully designed to match the characteristics of the job and if environmental conditions are controlled, highly reliable performance ratings can be provided.

Other Evidence of Construct Validity Under the expanded definition of validation strategies, there is an opportunity to incorporate information from all sources that might enhance our understanding of a construct. Three useful sources of research evidence that can contribute to knowledge about the validity of performance appraisal measures are (1) research studies reporting positive correlations of performance appraisal ratings with predictors of performance, (2) research studies suggesting that, for the most part, performance ratings do not correlate significantly with systematic sources of bias such as gender and age of either the rater or the ratee, and (3) research studies showing a positive relationship between performance appraisal feedback and worker productivity.

Validity studies that employ supervisory ratings as criteria for measuring the strength of predictors, such as cognitive or psychomotor ability tests, provide indirect evidence for the construct validity of performance ratings. There are literally thousands of validation studies in which supervisors provided performance ratings for use as criteria in measuring the predictive power of ability tests such as the General Aptitude Test Battery (GATB). The Standard Descriptive Rating Scale was specifically developed and used for most of the GATB criterion-related validity studies—raters participating in these studies were told that their ratings were for research purposes only. The results, based on 755 studies, showed that the average observed correlation between supervisor ratings and GATB test score was .26 (Hartigan and Wigdor, 1989).

Many of the advances in meta-analysis suggested by Schmidt and Hunter (1977) and Hunter and Hunter (1984) were developed to provide integrations of the vast literature on job performance prediction. Hunter (1983) in a detailed meta-analysis showed a corrected correlation of .27 between cognitive ability tests and supervisor ratings of employee job performance. Two additional meta-analyses compared supervisor ratings with other criteria used for test validation (Nathan and Alexander, 1988; and Schmitt et al., 1984). Nathan and Alexander (1988) found that for clerical jobs, ability test scores correlated with supervisor ratings .34, with rankings .51, and with work samples .54 (correlations were corrected for test unreliability, sample size, and range restriction). The results obtained by Schmitt et al. (1984) were similar: the average correlation between ability tests and supervisor ratings was .26 and between ability tests and work samples it was .40 (corrected for sampling error only). All of these studies demonstrate the existence of moderate correlations between employment test scores and supervisor ratings of employee job performance.

There are several studies that have examined the effects on performance appraisal ratings of the demographic characteristics of the ratee and the rater (e.g., race, gender, age). The hypothesis to be tested here is that these demographic characteristics do not influence performance appraisal ratings. On one hand, rejection of the hypothesis would mean that the validity of the performance ratings was weakened by the existence of these systematic sources of bias. On the other hand, if the hypothesis is not rejected, it can be assumed that the validity of the performance ratings is not being compromised by these sources of rating error.

There are meta-analyses of the research dealing with both race and gender effects. Kraiger and Ford's (1985) survey of 74 studies reported that the race of both the rater and the ratee had an influence on performance ratings; in 14 of the studies, both black and white raters were present. Across all studies, supervisors gave higher ratings to same-race subordinates than to subordinates of a different race. The results showed that white raters rated the average white ratee higher than 64 percent of black ratees and black raters rated the average black ratee higher than 67 percent of white ratees. (The expected value, if there were no race effects, would be 50 percent in both cases.) In this analysis, ratee race accounted for 3.3 percent and 4.8 percent of the variance in ratings given by white and black raters, respectively. In a later study, the authors (Ford et al., 1986) attempted to assess the degree to which black-white differences on performance appraisal scores could be attributed to real performance differences or to rater bias. They looked at 53 studies that had at least one judgment-based and one independent measure (units produced, customer complaints) of performance. Among other things, they found that the sizes of the effects attributable to race were virtually identical for ratings and independent measures, which led the authors to conclude that the race effects found in judgment-based ratings cannot be attributed solely to rater bias—i.e., there were also real performance differences.
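The two ways of expressing these effects, percent of variance and percent of ratees exceeded, can be linked by a short calculation. The sketch below assumes equal-sized groups and normally distributed ratings with equal variances. Whether the original authors converted their results this way is not stated in the chapter, and the function name is illustrative.

```python
from math import sqrt
from statistics import NormalDist


def percent_exceeded(variance_explained):
    """Percent of the lower-rated group scoring below the other group's mean.

    Assumes two equal-sized, normally distributed groups with equal
    variances (a simplifying assumption, not taken from the source).
    """
    r = sqrt(variance_explained)      # correlation between group and rating
    d = 2 * r / sqrt(1 - r ** 2)      # standardized mean difference
    return 100 * NormalDist().cdf(d)  # area below the higher group's mean


print(round(percent_exceeded(0.033)))  # variance figure for white raters
print(round(percent_exceeded(0.048)))  # variance figure for black raters
```

Under these assumptions the variance figures of 3.3 and 4.8 percent correspond to about 64 and 67 percent, matching the percentages quoted in the text. The calculation also shows how an effect that explains a small share of variance can still look sizable when stated as a percentile comparison.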

Carson et al. (1990) conducted a meta-analysis on 24 studies of gender effects in performance appraisal. In this review, gender effects were extremely small—the gender of both the ratee and the rater accounted for less than 1 percent of the variance in ratings. Although there was some evidence of a ratee-gender by rater-gender interaction (higher ratings for same gender versus mixed gender pairs), the interaction was not statistically significant. Murphy et al. (1986) reached similar conclusions in their review.

Age has also been shown to have a minimal effect on performance ratings. McEvoy and Cascio (1988) reported a meta-analysis of 96 studies relating ratee age to performance ratings. On average, the age of the ratee accounted for less than 1 percent of the variance in performance ratings. In addition, Landy and Farr (1983) suggest that if age effects exist at all, they are likely to be small.

Another source of indirect evidence for suggesting that under some conditions supervisors can make accurate performance ratings is the strength of the relationship between performance appraisal feedback and worker productivity—by inference, if feedback results in increased productivity, then the performance appraisal must be accurate. There are several studies that have shown that performance feedback does have a positive impact on worker productivity as measured in terms of production rates, error rates, and backlogs (Guzzo and Bondy, 1983; Guzzo et al., 1985; Kopelman, 1986). Landy et al. (1982) have shown that performance feedback has utility that far exceeds its cost, and that a valid feedback system can lead to substantial performance gains. They reviewed several studies showing that individual productivity increased as much as 30 percent as a function of feedback. In one of the studies (Hundal, 1969), a correlation of .52 (p < .01) was found between the level of feedback specificity and productivity. The subjects of Hundal's research were 18 industrial workers whose task was to grind metallic objects. This evidence is particularly interesting because it gets to the relevance of the appraisal, whereas much of the evidence of interrater reliability does not.

Interrater Reliability

There have been several studies suggesting that two or more supervisors in a similar situation evaluating the same subordinate are likely to give similar performance ratings (Bernardin, 1977; see Bernardin and Beatty, 1984 for a review of research on interrater reliability). For example, Bernardin et al. (1980) reported an interrater reliability coefficient of .73 among raters at the same level in the organization.

Other studies have examined the agreement among raters who occupy different positions in the organization. Although there is evidence that ratings obtained from different sources often differ in level—for example, self-ratings are usually higher than supervisory ratings (Meyer, 1980; Thornton, 1980)—there is substantial agreement among ratings from different sources with regard to the relative effectiveness of the performance of different ratees. Harris and Schaubroeck (1988), in a meta-analysis of research on rating sources, found an average correlation of .62 between peer and supervisory ratings (correlations between self-supervisor and self-peer ratings were .35 and .36, respectively).
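The distinction between agreement in level and agreement in relative standing can be shown with a few invented numbers. In the sketch below, the self-ratings and supervisor ratings are hypothetical: the self-ratings run about a point higher, yet the two sources order the ratees almost identically, so the correlation remains high.

```python
from math import sqrt


def pearson(x, y):
    """Pearson product-moment correlation of two equal-length lists."""
    n = len(x)
    mx, my = sum(x) / n, sum(y) / n
    sxy = sum((a - mx) * (b - my) for a, b in zip(x, y))
    sxx = sum((a - mx) ** 2 for a in x)
    syy = sum((b - my) ** 2 for b in y)
    return sxy / sqrt(sxx * syy)


# Hypothetical ratings of the same six employees from two sources.
supervisor_ratings = [3, 4, 2, 5, 3, 4]
self_ratings = [4, 5, 4, 6, 4, 5]

# Difference in level: self-ratings are higher on average.
level_gap = sum(self_ratings) / 6 - sum(supervisor_ratings) / 6

# Agreement on relative standing: the correlation ignores the level gap.
agreement = pearson(supervisor_ratings, self_ratings)

print(round(level_gap, 2), round(agreement, 2))
```

A correlation coefficient is unaffected by adding a constant to one set of ratings. This is why studies can report both systematic leniency in one source and substantial agreement between sources.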

One question of scale format that has received a good deal of attention in the reliability research concerns the number of scale points or anchors. According to Landy and Farr (1980), there is no gain in either scale or rater reliability when more than five rating categories are used. However, the reliability drops with the use of fewer than 3 or more than 9 rating categories. Recent work indicates that there is little to be gained from having more than 5 response categories.

Implications

There are substantial limitations in the kinds of evidence that can be brought to bear on the question of the validity of performance appraisal. The largest constraint is the lack of independent criteria for job performance that can be used to test the validity of various performance appraisal schemes. Given this constraint, most of the work has focused on (1) establishing content evidence through applying job analysis and critical incident techniques to the development of behaviorally based performance appraisal tools, (2) demonstrating interrater reliability, (3) examining the relationship between performance appraisal ratings, estimates of job knowledge, work samples, and performance predictors such as cognitive ability as a basis for establishing the construct validity of performance ratings, and (4) eliminating race, age, and gender as significant sources of rating bias. The results show that supervisors can give reliable ratings of employee performance under controlled conditions and with carefully developed rating scales. In addition, there is indirect evidence that supervisors can make moderately accurate performance ratings; this evidence comes from the studies in which supervisor ratings of job performance have been developed as criteria for testing the predictive power of ability tests and from a limited number of studies showing that age, race, and gender do not appear to have a significant influence on the performance rating process.

It should be noted that the distinction between validity and reliability tends to become hazy in the research on the construct validity of performance appraisals. Much of the evidence documents interrater reliabilities. While consistency of measurement is important, it does not establish the relevance of the measurement; after all, several raters may merely display the same kinds of bias. Nevertheless, the accretion of many types of evidence suggests that performance appraisals based on well-chosen and clearly defined performance dimensions can provide modestly valid ratings within the terms of psychometric analysis. Most of the research, however, has involved nonmanagerial jobs; the evidence for managerial jobs is sparse.

The consensus of several reviews is that variations in scale type and rating format have very little effect on the measurement properties of performance ratings as long as the dimensions to be rated and the scale anchors are clearly defined (Jacobs et al., 1980; Landy and Farr, 1983; Murphy and Constans, 1988).2 In addition, there is evidence from research on the cognitive processes of raters suggesting that the distinction between behaviors and traits as bases for rating is less critical than once thought. Whether rating traits or behaviors, raters appear to draw on trait-based cognitive models of each employee's performance. The result is that these general evaluations substantially affect raters' memory for and evaluation of actual work behaviors (Murphy et al., 1982; Ilgen and Feldman, 1983; Murphy and Jako, 1989; Murphy and Cleveland, 1991).

In litigation dealing with performance appraisal, the courts have shown a clear preference for job-specific dimensions. However, there is little research that directly addresses the comparative validity of ratings obtained on job-specific, general, or global dimensions. There is, however, a substantial body of research on halo error in ratings (see Cooper, 1981, for a review) that suggests that the generality or specificity of rating dimensions has little effect. This research shows that raters do not, for the most part, distinguish between conceptually distinct aspects of performance in rating their subordinates. That is, ratings tend to be organized around a global evaluative dimension (i.e., an overall evaluation of the individual's performance—see Murphy, 1982), and ratings of more specific aspects of performance provide relatively little information beyond the overall evaluation. This suggests that similar outcomes can be expected from rating scales that employ highly general or highly job-specific dimensions.

RESEARCH ON PERFORMANCE APPRAISAL APPLICATION

Chapter 6 provides a summary of private-sector practices in performance appraisal. Our purpose here is to present a general review of the research in industrial and organizational psychology and in management sciences that contributes to an understanding of how appraisal systems function in organizations. The principal issues include (1) the role of performance appraisal in motivating individual performance, (2) approaches to improving the quality of performance appraisal ratings, and (3) the types and sources of rating distortions (such as rating inflation) that can be anticipated in an organizational context. The discussion also includes the implications of links between performance appraisal and feedback and between performance appraisal and pay.

Performance Appraisal and Motivation

Information about one's performance is believed to influence work motivation in one of three ways. The first of these, formally expressed in contingency theory, is that it provides the basis for individuals to form beliefs about the causal connection between their performance and pay. Two contingency beliefs are important. The first of these is a belief about the degree of association between the person's own behavior and his or her performance. In Vroom's (1964) Expectancy X Valence model, these beliefs are labeled expectancies and described as subjective probabilities regarding the extent to which the person's actions relate to his or her performance. The second contingency is the belief about the degree of association between performance and pay. This belief is less about the person than it is about the extent to which the situation rewards or does not reward performance with pay, where performance is measured by whatever means is used in that setting. When these two contingencies are considered together, so goes the theory, it is possible for the person to establish beliefs about the degree of association between his or her actions and pay, with performance as the mediating link between the two.
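One simple way to picture how the two contingency beliefs combine is to treat each as a subjective probability and multiply them. This is a minimal sketch under that assumption: the text above does not specify a combination rule, and the multiplicative form is borrowed loosely from the spirit of expectancy-type models rather than taken from Vroom's formulation. The function name and the example values are hypothetical.

```python
def action_to_pay_belief(expectancy, performance_pay_belief):
    """Combine two contingency beliefs into one action-to-pay belief.

    expectancy: subjective probability (0 to 1) that the person's actions
        lead to good performance.
    performance_pay_belief: subjective probability (0 to 1) that good
        performance is rewarded with pay in this setting.

    Assumption (not from the source): the beliefs combine multiplicatively,
    with performance as the mediating link.
    """
    for value in (expectancy, performance_pay_belief):
        if not 0.0 <= value <= 1.0:
            raise ValueError("beliefs must be probabilities between 0 and 1")
    return expectancy * performance_pay_belief


# Hypothetical employees: same confidence that effort produces performance,
# different beliefs about whether the organization rewards performance.
strong_link = action_to_pay_belief(0.8, 0.9)
weak_link = action_to_pay_belief(0.8, 0.2)

print(f"strong performance-pay link: {strong_link:.2f}")
print(f"weak performance-pay link:   {weak_link:.2f}")
```

Under this assumed rule, a weak belief at either step caps the overall belief: an employee who is confident that effort produces performance will still see little connection between effort and pay if the setting is not believed to reward performance.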

The second mechanism through which performance information is believed to affect motivation at work is that of intrinsic motivation. All theories of intrinsic motivation related to task performance (e.g., Deci, 1975; Hackman and Oldham, 1976, 1980) argue that tasks, to be intrinsically motivating, must provide the necessary conditions for the person performing the task to feel a sense of accomplishment. To gain a sense of accomplishment, the person needs to have some basis for judging his or her own performance. Performance evaluations provide one source for knowing how well the job was done and for subsequently experiencing a sense of accomplishment. This sense of accomplishment may be a sufficient incentive for maintaining high performance during the time period following the receipt of the evaluation.

The third mechanism served by the performance evaluation is that of cueing the individual into the specific behaviors that are necessary to perform well. The receipt of a positive performance evaluation provides the person with information that suggests that whatever he or she did in the past on the job was the type of behavior that is valued and is likely to be valued in the future. As a result, the evaluation increases the probability that what was done in the past will be repeated in the future. Likewise, a negative evaluation suggests that the past actions were not appropriate. Thus, from a motivational standpoint, the performance evaluation provides cues about the direction in which future efforts should or should not be directed.

The motivational possibilities of performance appraisal are qualified by several factors. Although the performance rating/evaluation is treated as the performance of the employee, it remains a judgment of one or more people about the performance of another with all the potential limitations of any judgment. The employee is clearly aware of its character, and furthermore, it is only one source of evaluation of his or her performance. Greller and Herold (1975) asked employees from a number of organizations to rate five kinds of information about their own performance as sources of information about how well they were doing their job: performance appraisals, informal interactions with their supervisors, talking with coworkers, specific indicators provided by the job itself, and their own personal feelings. Of the five, performance appraisals were seen as the least likely to be useful for learning about performance. To the extent that many other sources are available for judging performance and the appraisal information is not seen as a very accurate source of information, appraisals are unlikely to play much of a role in encouraging desired employee behavior (Ilgen and Knowlton, 1981).

If employees are to be influenced by performance appraisals (i.e., to attempt to modify their behavior in response to their performance appraisal), they must believe that the performance reported in the appraisal is a reasonable estimate of how they have performed during the time period covered by the appraisal. One key feature of accepting the appraisal is their belief in the credibility of the person or persons who completed the review with regard to their ability to accurately appraise the employee's performance. Ilgen et al. (1979), in a review of the performance feedback literature, concluded that two primary factors influencing beliefs about the credibility of the supervisor's judgments were expertise and trust. Perceived expertise was a function of the amount of knowledge that the appraisee believed the appraiser had about the appraisee's job and the extent to which the appraisee felt the appraiser was aware of the appraisee's work during the time period covered by the evaluation. Trust was a function of a number of conditions, most of which were related to the appraiser's freedom to be honest in the appraisal (Padgett, 1988) and the quality of the interpersonal relationship between the two parties.

A difficult motivational element related to acceptance of the performance appraisal message is the fact that the nature of the message itself affects its acceptance. There is clear evidence that individuals are very likely to accept positive information about themselves and to reject negative information. This effect is often credited for the frequent finding that subordinates rate their own performance higher than do their supervisors (e.g., see Holzbach, 1978; Zammuto et al., 1981; and Shore and Thornton, 1986). This condition is not a surprising one. If the focus is on the response that employees will make to performance appraisal information, however, the discrepancy leaves the employee with two primary ways of resolving it: acting in line with the supervisor's rating or denying the validity of that rating. The fact that the latter alternative is very frequently chosen, especially when the criteria for good performance are not very concrete (as is often the case for managerial jobs), is one of the reasons that performance appraisals often fail to achieve their desired motivational effect.

Approaches to Increasing the Quality of Rating Data

Applied psychologists have identified a variety of factors that can influence how a supervisor rates a subordinate. Some of these factors are associated with the philosophy and climate of the organization and may influence the rater's willingness to provide an accurate rating. Other factors are related to the technical aspects of conducting a performance appraisal, such as the ability of the rater (1) to select and observe the critical job behaviors of subordinates, (2) to recall and record the observed behaviors, and (3) to interpret adequately the contribution of the behaviors to effective job performance. This section will discuss the research designed to reduce errors associated with the technical aspects of conducting a performance appraisal. Specific areas include rater training programs, behaviorally based rating scales, and variations in rating procedures.

Rater Training

The evidence on the effects of training on rating quality is mixed. A recent review by Feldman (1986) concluded that rater training has not been shown to be highly effective in increasing the validity and accuracy of ratings. Murphy et al. (1986) reviewed 15 studies (primarily laboratory studies) dealing with the effects of training on leniency and halo and found that average effects were small to moderate. In a more recent study, Murphy and Cleveland (1991) suggest that training is most appropriate when the underlying problem is a lack of knowledge or understanding. For example, training is more necessary if the performance appraisal system requires complicated procedures, calculations, or rating methods. However, these authors also suggested that the accuracy of overall or global ratings will not be influenced by training.

Taking the other position, Fay and Latham (1982) proposed that rater training is more important in reducing rating errors than is the type of rating scale used. They compared the rating responses of trained and untrained raters on three rating scales (one trait and two behaviorally based scales). The results showed significantly fewer rating errors for the trained raters and for the behaviorally based scales compared with the trait scales. The rating errors were one and one half to three times as large for the untrained group.

The training was a four-hour workshop consisting of (1) having trainees rate behaviors presented on videotape and then identify similar behaviors in the workplace, (2) a discussion of the types of rating errors made by trainees, and (3) a group brainstorming session on how to avoid errors. The workshop contained no examples of appropriate rating distributions or scale intercorrelations; the focus was on accurate observation and recording. Researchers have found that instructing raters to avoid giving similar ratings across rating dimensions or giving high ratings to several individuals may not be appropriate; some individuals do well in more than one area of performance and many individuals may perform a selected task effectively (Bernardin and Buckley, 1981; Latham, 1988). Thus, these instructions could result in inaccurate ratings.

Other researchers have shown that training in observation skills is beneficial (Thornton and Zorich, 1980) and that training can help raters develop a common frame of reference for evaluating ratee performance (Bernardin and Buckley, 1981; McIntyre et al., 1984). However, the training effects documented in these laboratory studies are typically not large, and it is not clear whether they persist over time.

Behaviorally Based Rating Scale Design

Another approach used by researchers to reduce rating errors has involved the use of rating scales that present the rater with a more accurate or complete representation of the behaviors to be observed and evaluated. Behaviorally based scales may serve as a memory or observation aid; if developed accurately, they can provide raters with a standard frame of reference. The strategy of using behaviorally based scales to improve observation might be especially helpful if combined with observation skill training. However, there is some evidence that these scales can unduly bias the observations and the recall processes of raters. That is, raters may attend only to the behaviors depicted on the scales to the exclusion of other, potentially important behaviors. Moreover, there is no compelling evidence that behaviorally based scales facilitate the performance appraisal process in a meaningful way, when these scales are compared with others developed with the same care and attention.

Rating Sequence

Supervisors rating many individuals on several performance dimensions could complete ratings either in a person-by-person sequence or in a dimension-by-dimension sequence (rate all employees on dimension I and then go on to dimension II, etc.). Presumably, a person-by-person procedure focuses the rater's attention on the strengths and weaknesses of the individual, while the dimension-by-dimension procedure focuses attention on the differences among individuals on each performance dimension. A review of this research by Landy and Farr (1983) indicates that identical ratings are obtained with either strategy.

Implications