This page intentionally left blank.

Interoperability, Standards, and Linked Data

James Hendler

-Rensselaer Polytechnic Institute-

I will focus on the technological challenge of online knowledge discovery. I will first address interoperability issues and then talk about some promising developments.

Scientists can learn a lot about data sharing just from observing what is happening in the outside world. There are many data initiatives and developments outside academia to which we should be paying more attention.

Expressive ontologies are not a scalable solution. They are a necessary solution in certain domains, but they do not solve the interoperability problem widely. They allow a scientist to build his or her silo better, and sometimes they even let the silo get a little wider, but they are not good at breaking down the silos unless the scientist can start linking ontologies, in which case he or she has to deal with the ontology interoperability issue, as well as the costs and difficulty of building them and their brittleness.

I am known for the slogan “A little semantics goes a long way.” I have said this so often that about 10 years ago people started calling it the Hendler hypothesis at the Defense Advanced Research Projects Agency (DARPA).

We are used to thinking that a major science problem is searching for information in papers, and we have forgotten that we also have to find and use the data underlying the science. A traditional scientific view of the world might be, “I get my data, I do my work, and then I want to share it.” But the sharing should be part of how we do science, and other issues such as visualization, archiving, and integration should be in that life cycle too. I will talk about these issues, and then I will discuss the kinds of technologies that are starting to mature in this area. These technologies have not yet solved the problems of science and, in fact, largely have not been applied to the problems of science.

Scientists do use extremely large databases, and many of these data are crucial to society. On the other hand, we scientists tend to be fairly pejorative about something like Facebook, because Facebook is not being used to solve the problems of the world. I wish science could get the number of minutes per day that Facebook gets, which is roughly equivalent to the entire workforce of a very large company for a year. We are also not used to thinking about Facebook as confronting a data challenge per se, but it collects, according to the published figures, 25 terabytes of logged data per day. That sounds like the kind of numbers for large science data collections. Facebook’s valuation is estimated to be well over $33 billion, which is the size of the entire National Institutes of Health budget. Not surprisingly, they are able to deal with some of these data issues that are discussed here. We need to look at what they are doing and determine if we could take advantage of some of their approaches.

I do not have similar numbers for Google, because they have not been published. The last good estimate I could find was in 2008, which was 20 petabytes per day, but that was 3 years ago. That also does not include the exchange of or the storage of YouTube data, which I cannot find good numbers about either. Google’s valuation in 2011 is about $190 billion,

which is roughly a third of the Department of Defense budget. Not the research budget—the entire budget.

Therefore, if we think that this kind of work is expensive, yes, it is, but there are other people doing it, and they are investing large amounts of money. It is not surprising that they have been focusing on big data problems in a way different from scientists, and they have been able to explore some areas that are very difficult for us to explore. If a researcher wanted to buy 10,000 computers for data mining purposes, it would difficult to do because of the lack of resources.

Several speakers talked about semantics in the context of annotation and finding related work in the research literature. It is an important problem, but I do not think it is the key unaddressed problem in science. In fact, I would contend that we have spent a huge amount of money on that problem, much of it on trying to reinvent things that already exist in a better form from open sources in the real world.

Most companies today that want to work with natural language processing start with open-source software. For example, there is a company that is taking everything from the Twitter stream, running it through a number of natural language tools, and doing some categorization, visualization, and other similar work. They did not build any of their language processing software. I am also told that Watson, the IBM Jeopardy computer, had a large team of programmers, but, in fact, that the basic language technology used was mostly off the shelf, and that it was mostly statistical.

Semantic MedLine is a project that the Department of Health and Human Services has invested in. It does a fairly good job but does not understand the literature sufficiently to find exactly what we want. But we are not able to do that in any kind of literature, and Google is working on that problem as well. Hence, I do not see the point of yet another program to do that for yet another subfield or against yet another domain. Instead, we need to start thinking about how to put these kinds of technologies together.

The Web is a hard technology to monitor and track because it moves very fast. It has been moving very fast for a long time, however, and, as scientists, we need to start taking advantage of it much more than reinventing it.

There are a few different tools and models on the Web that are worth thinking about. One is to move away from purely relational models; from assuming that the only way to share data is to have a single common model of the data. In other words, to put data in a database or to create a data warehouse, we need to have a model, and that is done for a particular kind of query efficiency.

Sometimes, however, that efficiency is not the most important factor. Google had to move away from traditional databases to deal with the 20 petabytes of data generated every day. Nobody has a data warehouse that does that, so Google has been inventing many useful techniques. “Big Data” was one of their names for their file system. We cannot easily get the details of how Google does it, but we can get published papers from Google people that will be useful in learning how to do similar work. The NoSQL community is a fairly large and growing movement of people who are saying that when you are dealing with large volumes of data there is a need for something different from the traditional data warehouse.

I had a discussion with some server experts who said, “Just give us the schemas, and we can do this work.” I pointed out that we cannot always get the schemas, that some of these datasets were not even built using database technologies with schemas, and in some cases someone else owns the schema and will not share it. We can still obtain the data, however, either through an Application Programming Interface (API) or through a data-sharing Web site.

Although Facebook’s and Google’s big data solutions are proprietary, the economics of applications, cell phones, and other new technologies are pushing toward much more interoperability and exchangeability for the data, which is mandating some sort of a semantics approach. Consider, for example, the “like” buttons on Facebook. Facebook basically wants their tools and content to be everywhere, not just in Facebook, which means it has to start thinking more about how to get those data, who will get that data, whether it wants data to be shared or not, and what formats and technologies to use. As a result, there are many technologies behind that “like” button. Similarly, there are a number of approaches that Google employs to find better meanings for their advertising technologies. What happens is that Google recognizes that at one point it will not be able do the work by itself, because there is a long-tailed distribution on the Web. This means that it has to move the work to where Webmasters and other people will be able to develop the semantic representations for their domains.

That is happening with all the search engines, and the big issue now in that area is simple metadata and lightweight semantics. The notion of the complex ontology is getting replaced by the notion of fairly simple descriptive terms—that is, I can probably describe the data in my dataset with 10 or 12 different terms that will be enough for me to put in a federated catalog for people to decide whether they want to read my data dictionary.

The idea here is that if the data are going to be in a 200-page, carefully constructed metadata format with the required field-specific types, and they have to be compliant with the standards of the Internet Engineering Task Force and ISO (International Standards Organization), the vocabularies get harder to work with. It is an engineering problem that is very similar to the integration problem, and what many people are realizing is that you can arrange the data hierarchically and get good results for small investments.

Here is an example. Many governments are putting raw data on the Web now. They are not just putting visualizations of the data online; they are putting the datasets themselves. From a political point of view, the two biggest motivations are enhanced transparency and the chance to inspire innovation. Promoting innovation can proceed in two different ways. First, the governments are hoping that some people will figure out how to make useful and innovative tools using the data. More important, especially for local governments, is that they pay many people to build Web sites and interactive applications that they cannot afford to build anymore.

For instance, a government agency may be able to pay one contractor to build a big system for a problem that is of high priority, but it may have 57 more priorities that it cannot afford to fund. The governments need someone to develop those applications, so if it makes the data available, at least some of those applications are done by other people. The development of such applications is out of the agency’s control, but if the work is getting done at no cost or for some small amount of money, it can start planning strategically for other priorities.

The United States and the United Kingdom are the two leading providers in terms of organizing their data. The United States has about 300,000 datasets, most of them geodata. If

we take out the geodata, there are probably 20,000 to 30,000 datasets that have meaningful raw data. The United Kingdom has less, but it probably has the best in the sense that, for example, you can get the street crime data for the entire country for a number of years. They are releasing very high-quality data down to a local level.

Many countries, including ours, are releasing scientifically interesting data, but you would have to work to find them. To use these data, combining them with other data, can be more important than just looking at them. Those entities releasing data include countries, nongovernmental organizations, cities, and other groups. For example, one of the Indian tribes in New York State has released much of its casino data.

There are groups all over the world working with these data. My group is one, and the Massachusetts Institute of Technology has a group working jointly with Southampton University, mostly on U.K. data. Many of these groups are in academia, but there are many nonacademic groups doing this kind of work. One of my suggestions is to think about data applications. You may have a large database, and if parts of it can be made available through an application, an interface, or through an API, people would be using the data in a sharable—and often an attributable—way.

Getting back to science issues such as attribution and credit, we have one of our government applications in the iTunes store. I know exactly how many people downloaded it yesterday, and how many people are still using an old version.

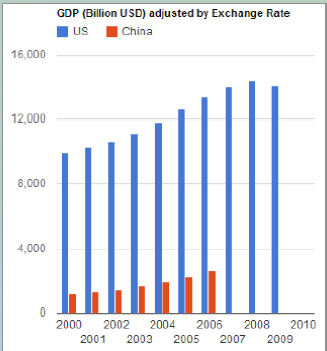

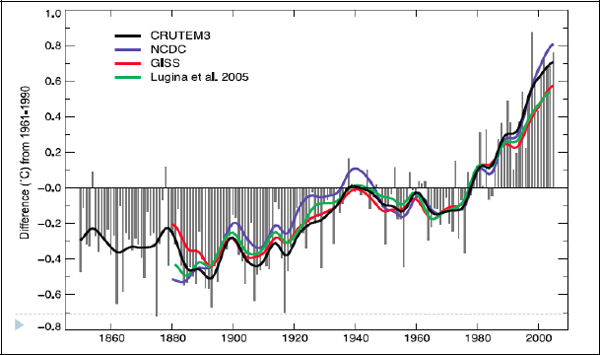

When some students and I were in China, we discovered that China was releasing much of its economic data, so we took China’s gross domestic product (GDP) data and the U.S. GDP data to do a comparison. To do that comparison, we needed to find the exchange rate. Luckily, there is a dataset from the Federal Reserve that has all of the exchange rates for the U.S. dollar weekly for the past 50 years or so. We got those data, we mashed them together, and we got a chart that looks like Figure 3-1:

FIGURE 3-1 GDP Chart.

SOURCE: James Hendler

We also divided the GDP by the population (data we found in Wikipedia), so that we could click a button to go between total GDP and per capita GDP. The model was built in less than 8 hours, including the conversion of the data, the Web interface, and the visualization. That is a game-changer. It would completely change the way we work if we could get this down to a few minutes. When my group started working with this kind of technology several years ago, it would take us weeks to do this kind of work. Part of the improvement is because some of the technology was immature, part of it is because the tools are now available commercially, and another part is because there is now visualization technology freely available on the Web. Building visualizations is labor intensive, so by moving to simple visualizations, we can use visualizations much earlier. We are also able to link government data to social media.

One interesting question that has not really been part of the discussion that we have had in the scientific community is how to find data. For example, we noticed that most of the U.S. government data were about the states, and the metadata would represent the data as being about the 50 states, but very few of the sets actually covered all the states. Some states were missing. Some databases included American Samoa, Guam, Puerto Rico, et cetera, and the District of Columbia (which is not officially a state). In this case, there is a very loose definition of a state as opposed to a territory, and no one has much problem with that. But if a researcher wants to find datasets about Alaska, it can be erroneous to just assume that all of the datasets that say they cover the states will actually have Alaska data.

The other problem is that we cannot search for the keyword “Alaska” within the datasets. If there is a column that represents the states, it may be called Alaska, it may be called AK, it may be called state two, or it may be called S17:14B/X. The government has terabytes of data, so how do we find the data that are for Alaska, for example? We cannot just call for building a data warehouse and rationalizing the process, because these datasets are being released by different people in different agencies in different ways.

Thus, metadata becomes very important. Simple and easy-to-collect metadata can allow building faceted browsers and similar tools. It is a real research challenge, however, to determine the kind of metadata for real scientific data that are powerful enough to allow useful searches. With the government data, one problem is that all of the foreign databases are in their own languages, and it is hard for those of us who are English language speakers to figure out what is in the Chinese dataset, unless you hire a Chinese student. You cannot just use Google Translate and expect the result to be academically useful.

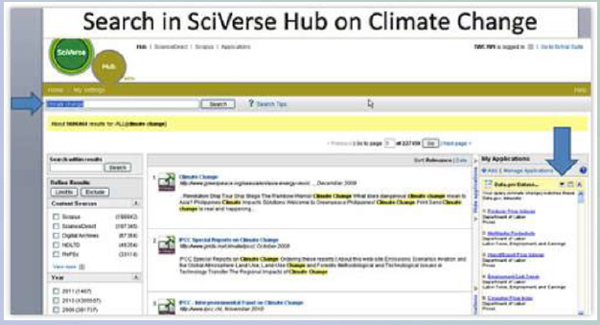

People are starting to consider integrating text search and data search. This is an application that one of my staff did, joined with Elsevier’s new SciVerse, which was featured on the U.S. Figure 3-2).

What this application does is that when we are doing a keyword search for scientific data, it is also looking for datasets that might be related to that same term. We are using very lightweight ontologies that mostly just use the keyword. We are working on making it better. We think it would be good when someone is looking for papers, the data in or about that paper were available, but also finding what is in the world’s data repositories that might be useful.

FIGURE 3-2 Search in SciVerse Hub on Climate Change.

Source: James Hendler

When we start thinking about data integration, using data, or searching for data, one thing that happens in the discovery space is that the data become part of the hypothesis formation. That means that looking at, visualizing, and exchanging the data cannot be an expensive add-on to a project. It has got to become a very key part of the standard scientific workflow, with appropriate tools to reduce the costs.

What is promising in this area? We have been looking at linked open data issues outside of science, and some of its promise has been explored (mostly within the ontology area). Genomics and astronomy are two fields where we have actually seen semantic Web technologies deployed, but many other science communities are still mostly thinking about their own data holdings, not about being part of a much larger data universe. It is interesting that when we talk to the Environmental Protection Agency, or to the National Oceanic and Atmospheric Administration, or similar agencies, they say that they are providing data to many communities, so they cannot easily use the ontologies of a particular one. Hence, we need to learn how to map between these approaches.

How do I know what is in a large data store? How do I know what is in a virtual observatory? I need services, metadata, and APIs. I also need to know the rules for using the data.

Other issues we need to think about are related to policies. If I take someone’s data from a paper, mix them with someone else’s data, and republish them, those people would probably like to get credit for the data being reused. Or if I do some work on your data that you do not like, you might want to rectify it. How do we do that?

National Technological Needs and Issues

Deborah Crawford

-Drexel University-

I am currently the vice provost for research at Drexel University, but I used to work at the National Science Foundation (NSF). I spent almost 20 years at NSF and was fairly significantly involved in the agency’s cyberinfrastructure activities. My experience within NSF and now at Drexel has provided me with an interesting perspective on what data-intensive science means in a research university that aspires to be more research intensive. The main message of my talk is that there have been many advances in data-intensive science in some fields, but we have massive amounts of work still to do if data-intensive science is to realize its full potential across all of the disciplines.

There have been many reports issued over the past decade that address the importance of data-intensive science and the role of information technology in advancing science. An important one is the Atkins report that Dr. Hey referred to earlier. In that report, Dan Atkins of the University of Michigan and his committee speak of revolutionizing the conduct of science and engineering research and education through cyberinfrastructure, and they examine democratizing science and engineering. Those were tremendously powerful statements in 2003, and they stimulated a great deal of excitement within the scientific community.

Since then, there have been a number of reports that have specifically addressed data-intensive science, several of which were released in the past couple of years. This is a quote from a joint workshop between the NSF and the U.K. Joint Information Systems Committee that was held in 2007: “The widespread availability of digital content creates opportunities for new kinds of research and scholarship that are qualitatively different from traditional ways of using academic publications and research data.”

What we have heard so far in this workshop was from those who I would describe as the visionaries and the trailblazers in science and engineering, representing those communities that were very motivated to take advantage of information technology in their work in order to advance their field of science.

I want to focus now on the long tail of science—the researchers in those fields where the immediate advantages of information technology and collaboration are not so readily apparent. There is tremendous opportunity in those communities, but we do not quite know how to realize those opportunities.

I would like to provide a snapshot of some surveys of researchers working in different communities. The main message is that computer-mediated knowledge discovery practices vary widely among scientific communities and among colleges and universities. In the United States and in the United Kingdom, for example, this is certainly true. There are some colleges and universities that know how to take advantage of their digital technologies and their digital capabilities, while there are others that simply are way behind the curve.

There are three fundamental issues that communities or universities must address: (1) What kinds of data and information are made open, at what stage in the research process, and

how? (2) To which groups of people are the data and information made available and on what terms or conditions? (3) Who develops and who has access to the tools and training to leverage the power of this discovery modality? These are fundamental questions and are key to the realization of data-intensive science.

I am going to talk about two case studies, one conducted in the United Kingdom by the Digital Curation Center, and one conducted within Yale University. Both point to some key features we need to address.

The study done by the Digital Curation Center in the United Kingdom was called “Open to All.” Its purpose was to understand how the principles of digitally enabled openness are translated into practice in a range of disciplines. These are the kinds of questions we need to be asking ourselves to determine the actions that we need to take to make sure that this modality of science is accessible and advantageous to everyone.

In this study, the authors characterized a research life-cycle model and asked different communities how they were using digital technologies within the context of that life cycle to further their science. They surveyed groups among six communities: chemistry, astronomy, image bioinformatics, clinical neuroimaging, language technology, and epidemiology. The range of responses within those different communities was fascinating to see. Surprisingly chemistry was the trailblazing community, at least among the individuals surveyed for this study. The chemists were using social networking tools, Open Notebook Science, wikis, and all the modalities of digital technologies to collaborate, and to collect, analyze, and publish their data. Everything was quite seamless from a community that I had not anticipated to be one of the trailblazing communities.

It was interesting to hear from the clinical neuroimaging group. They were so skeptical of the value of data-intensive science and open data sharing that in this study they refused to even disclose their identities as individuals. We therefore went from one extreme to the other, and we saw the range of practices and values within different scientific communities.

From their conversations with these communities, the group that conducted these case studies in the United Kingdom came up with a list of the perceived benefits of open data-intensive science. It included improving the efficiency of research and not duplicating previous efforts, sharing findings, sharing best practices, and increasing the quality of research and scholarly rigor. This last one was especially true for the members of the chemistry community who were surveyed. They saw a great opportunity in making available in blogs the day-to-day data that they collected—not just raw data, but derived data. They found tremendous benefits in making that open to the community to provide more scholarly rigor.

Among the other perceived benefits were enhancing the visibility of access to science, enabling researchers to ask new questions, and enhancing collaboration in community-building, which all of the groups surveyed agreed was a benefit. There was also a perceived benefit of increasing the economic and social impact of research about which the report was ambiguous. Although economic and social impacts each are treated separately as a perceived benefit, the sense was that the real value could not actually be measured. So, there was a question: Can we create economic value by making our data—essentially our intellectual property—much more openly accessible?

One of the perceived impediments was the lack of evidence of benefits. Researchers who felt this way were not motivated to make their data openly available. Other impediments were the lack of clear incentives to change and the values in academe not being consistent with open data sharing. For tenure and promotion and the drive to publish, the perceptions were that the only way to publish is in the open literature and that it is not in a researcher’s interest to share data, because someone else may use the data to advance farther than the one who shared the data. Competitiveness is a big issue.

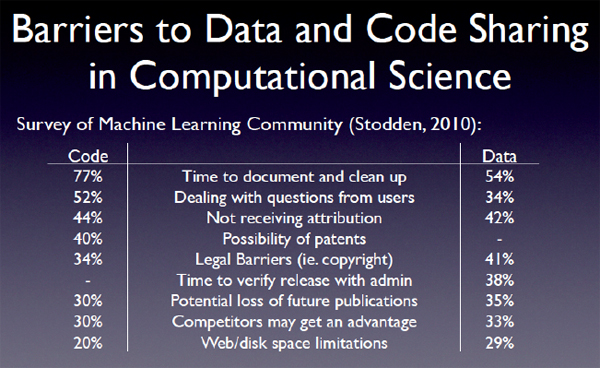

The conflict with the culture of independence and competition is absolutely related to the lack of clear incentives to change. Other impediments were inadequate skills, a lack of time, and insufficient access to resources. Another big concern was how to train both the scientists who are practicing today and the scientists and engineers of the future. Researchers were also worried about how long it took them to prepare their data for open access, about quality, and about ethical, legal, and other restrictions to access. Those were big issues, especially in the life sciences community.

The report’s recommendations called for policies and practices for data managing and sharing. Communities are desperate for guidance on these issues. What have the trailblazers learned that can be shared and applied more broadly? Contributions to the research infrastructure should be valued more—that is something we have heard often. Training and professional development should be provided, and there should be an increased awareness of the potential of open business models. That is related to the attitude among researchers that their data are their intellectual property and they want to derive some value from that; thus, they feel that if they make their data openly available, they are giving that value away. Assessment and quality assurance mechanisms should be developed.

The study done by the Yale University Research Data Taskforce was conducted by an organization within Yale called the Office of Digital Assets and Infrastructure, which is an organization established to accrue the value over time of the digital assets that result from research and scholarly activity within the institution. The office conducted this study to determine the requirements and components of a coherent technical infrastructure, to provide service definitions, and to recommend comprehensive policies to support the life-cycle management of research data at Yale.

Given the discussion earlier about research libraries, this is interesting. Here is Yale University doing a survey that includes both the librarians and the information technology enterprise staff at the university to determine what their faculty base most needs for managing digital data. Very much like the other survey, this one found that data-sharing practices vary widely among the disciplines they surveyed. The researcher has the most at stake when determining what the data-sharing practices are. Yale is going to create an institutional repository to secure, store, and share large volumes of data.

There are certainly some institutional pioneers in this area, such as the Massachusetts Institute of Technology and Indiana University, and much of what I characterize as “slow followers.” A major concern is how an institution can afford to create and maintain infrastructure like this.

Yale University understands that it needs to develop and deliver research data curation services and tools to all of its interested parties, not only in science and engineering, but also in the humanities and in the arts. Recognizing the importance of persistent access and

preservation, Yale is trying to establish a digital-preservation program to determine what they need to preserve data in the long term and what value will be accrued from it.

Data ownership was a big issue in the report. Who actually owns data? Most faculty believed they owned their data. It will be important to develop clear policies regarding data ownership, especially in those communities where data sharing is not a standard practice or is not valued.

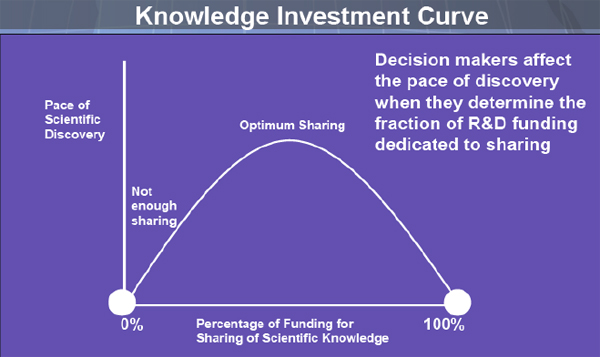

My main message, then, is that changes are in play at multiple scales: international, national, institutional, individual, and community. Most of the effort at this time has been focused on the community level, because communities have, for the most part, driven the transition to open data sharing in communities where clear value accrues from that sharing. Culture does not change because we desire it to change; it changes when our practices change. The bottom line is that all of this costs money. We will need to determine the appropriate balance between the cost of improved access to scientific opportunity and the benefits that accrue from it, and those are not easy questions to answer.

DISCUSSANT: Is it going to get to the point where IBM’s Watson knows the scientific literature and we do not ever need to do any work ourselves on the literature databases?

DR. HENDLER: No. For Jeopardy, Watson took many fairly high-end processors to beat Ken Jennings. For the medical version, they are trying to get that down to a more manageable number—maybe 20–50. They have already announced that the first issue they are pursuing with Watson is differential diagnosis, helping doctors access the medical literature. They designed the computer to be repurposable. This was the plan until 2009, but once they had the agreement that they were going to play Ken Jennings; they stopped doing anything except Jeopardy-related work. Now that they have won the game, they are back to the earlier focus. My guess is it will be 5 or 10 years until that becomes widely deployable.

DR. HEY: I am interested in the financial implications of sharing literature and data. Just as the music industry has to deal with what happens when someone makes a digital copy and distributes it for free, openly available data changes the business model. Similarly, with publications, we do not need printing presses anymore; we have the Web, and we can make digital copies and disseminate them. The big budgets that go to the publishers with their present business models perpetuate the system, but the system is being broken and it is up to universities and university libraries to respond. They should not be doing such deals with the publishers. They should be thinking about their role in the future. They should be thinking about how libraries can assist the research process of the institutions. I think the answer can be found in places like the iSchools. It is up to places like Drexel University and Rensselaer Polytechnic Institute to move forward, but how do we get that to take place? How do we catalyze the major research libraries and major research institutions to act together?

DR. CRAWFORD: There is nothing like a crisis to stimulate action. I know that at Drexel we are having conversations about the future of our libraries. We have an iSchool as well, so there is an opportunity there, because having shared interests in reinventing the model is essentially what it will take. There are trailblazer institutions, and it is a question of whether we can unite and define what the library of the future is in this digital world. But I absolutely agree that it is necessary. It is the future of the knowledge enterprise. I had a meeting with Drexel’s president last week in which we talked about the iSchool being the centerpiece of the twenty-first-century university and what that means in the context of research and scholarly activity.

DR. HENDLER: I would also mention projects like VIVO12, which is funded by the National Science Foundation. It has gotten a few schools involved, and it is growing. They have established the sharing of data and integrating that for the purpose of tracking researchers who agree to become a part of that system. Each library can maintain its own holdings. If I have a list of all my papers that I can get to my university in a way that they can curate, share, and archive, then it does not necessarily mean anymore that it must be in a particular book, in a particular building. We are seeing a lot of pressure in that direction, and it is promising that several of these big libraries are helping to lead that area.

__________________________

12 See: http://vivo.ufl.edu

I think the idea of a “catalog of catalogs,” the federated catalogs for not just the library holdings but also the people resources at a university—the individual papers and publications of the people, including who, what, when, where aspects—is a very powerful and interesting idea. Exactly how it will be funded remains to be seen, but once we get 100,000 or a million people accessing a page, the money can be found to maintain it. The problem is that until we reach that number, we cannot figure out how to monetize it. This is a situation in which scalable solutions that are experimented with and evolved may be better than trying to design a solution all in one step.

DR. ABELSON: When scientists say, “My data is my intellectual property,” I am not sure under what legal regime someone’s data is their intellectual property. Perhaps it is their trade secret or something similar. But there is tremendous confusion, and I think it is good to have some healthy criticism when people use that language. I would like to resist having people saying their data are their intellectual property.

DR. CRAWFORD: It is a misguided perception, but there is a lot of that.

DR. HENDLER: Dr. Abelson has been working on how we can make these policies more explicit through common-use licenses and the data more sharable, to actually tie the policies in a computable way onto the data and assets.

Sociocultural, Institutional, and Organizational Factors

Session Chair: Michael Lesk

-Rutgers University-

This page intentionally left blank.

Clifford Lynch

-Coalition for Networked Information-

Sociocultural factors in this area cover a very wide range of topics. In preparing these remarks, I deliberately wanted to avoid some of the very common themes that I expected to hear in this workshop and to focus on a few additional important, but less widely discussed, issues.

I will not thoroughly discuss, for example, the issue of incentives, which is obviously a major problem. The one point I will make in this regard, however, is that we have not talked much about the incentive to receive research funding in the first place, which is a fairly compelling one. If certain data-sharing behaviors are a condition of receiving research funds, and adherence to these behaviors is enforced as a condition of continuing eligibility for funding, this would be a real incentive.

It is interesting to see what is happening in some of the communities with foundations dedicated to the cure of specific, often rare, diseases. These foundations tend to be very focused on what they are doing, which is searching for treatments and cures. It is now common for them to insist that the scientists they fund should make their data openly available at least to other researchers within that community, on an immediate basis. This is the phenomenon of a funder who is focused on solving a problem and is not very interested in accommodating much long-term career-building by the participants. We will see more of this focus.

Let me move to the main issues I want to address. First, I want to talk about the scholarly literature and the realities of mining that literature, both as a research project in and of itself (i.e., to study what is going on in science) and as a pragmatic means of, for example, finding relevant earlier work. There is a longstanding history of researchers trying to extract hypotheses, insights, or facts by computational analysis of large bodies of scholarly literature, sometimes in conjunction with ancillary datasets of various kinds and sometimes not. What has happened with the way people compute on the Web is that we are coming to think about the scholarly record as an object of computation and to see that this computation can facilitate the discovery of new knowledge. It is important to understand that while the potential of this approach is great, the pragmatics of realizing it are phenomenally challenging.

It is clear that very large amounts of the scholarly literature are in one or another electronic format, some formats being far more hospitable to computation than others. They are often available in multiple formats, with the most computationally friendly ones only available internally on the publishers’ sites, since they are not designed to be directly read by human readers. But the licenses that universities typically enter into with publishers to use collections of online journals specifically forbid mass copying of the material from those journal collections, as someone would need to do to build up a corpus to compute upon. In fact, if a researcher tries to download a large number of journal articles from many of these databases, the librarian at that university will get a call from one of the publishers, because they have got mass-download detectors that notice a big spike of downloads. The publisher will demand that

the university librarian do something about this or it will shut off the university until it fixes this issue, at which point the researcher will get a phone call acquainting him or her with the terms and conditions under which the university has access.

Fixing this issue is not simply a matter of renegotiating one contract. A large university will have hundreds of such contracts, and thus far, publishers have not been very accommodating of this, with a few exceptions, mostly open-access publishers that do not require license agreements anyway. Of course, there are legitimate publisher concerns that need to be recognized in the renegotiations, but they are not simple to address. In short, there is a substantial pragmatic barrier to getting literature to mine. Note also that any comprehensive subject corpus, most of the time, needs to be drawn from a range of publishers, not just one.

Even if someone can get past the license issues, a second very real challenge to literature mining is the need to normalize data from a wide range of sources into a consistent format. One of the previous presenters talked about what it took to make sense out of one gene array dataset and the cleanup that was needed for that process. Imagine trying to deal with 50 or 60 different data sources, some of which vary depending on whether the journal issue is from 2003 or 1992, or is something from the 1960s that had to be converted into digital form. This is a very significant amount of work, particularly if it has to be done over and over again by different literature-mining groups.

A final subissue on barriers to mining the current scholarly literature base is related to copyright issues. Consider, for example, what happens if someone is performing a computational process on tens of thousands of copyrighted objects. Is the output a derivative work? If so, producing a derivative work from thousands of copyrights raises all sorts of questions. For example, if we did the same computation, but omitted 10 articles, does the fact that we included the 10 articles in our original computation make the computation a derivative of those 10 as well as of the other 9,990, even though they made no difference in the final product? There is not much legal certainty about anything in this area, as far as I know.

Let me address some practical barriers to mining the existing literature base. Today there is little consensus about the role of publishers in facilitating literature mining. We could imagine scenarios in which publishers, or some third party working with publishers, offer literature mining as a service for a database created just for that purpose. This might reduce some barriers, but it also raises concerns about the economics of text mining and the flexibility of the mining technology that it would be possible to apply to the literature base.

Focusing on the idea of computing on a corpus of scholarly literature and a body of experimental results, we also need to think about how to change the character and composition of this corpus to make it as valuable as possible for this kind of computational analysis. For example, it has often been observed that the published record, especially when it was read solely by human beings, has a strong bias toward positive results. People rarely get articles published in high-visibility venues saying, “I tried these six approaches, and none of them worked,” or “This compound seems to be good for nothing, it does not react with anything.” These are valuable contributions in a computational environment, and we need to think carefully, especially in a world full of robotic experimental equipment and large-scale screening systems, about how we get more of this negative information out there so that we can compute on it.

A closely related issue is the tremendous amount of data that industry produces and represents negative results or at least “prepositive results.” We should be able to disseminate and share these results. We need to sort through the notion of value in data, particularly (but far from exclusively) as it affects industry.

There is a school of thought that a database has value even if we invested in it and it proved to be a worthless investment, because if we keep it secret, we can force your competitors to waste time and money recreating that database themselves. Thus, it will consume resources that they could have spent elsewhere, and that will help us in competition. We need to reject that thinking, especially in environments such as the pharmaceutical arena, where we are struggling to contain costs and improve productive research. There is an enormous social cost to secrecy for negative advantage rather than for positive advantage. I do not know how we fix it other than by talking openly about it, but it does seem that we should look for ways to make as much negative data available as possible, in addition to positive data, to facilitate computation on the scholarly record.

One of the other challenges we face is how to make it easy for scientists and scholars to share data. We heard in some of the earlier presentations how difficult that can be. One key is simply giving scientists places to put materials that can be openly available to the world or that can be shared on a rather controlled basis. Anyone who has tried to do inter-institutional data sharing without making them public to the world—to share data just with groups of collaborators—knows how horrible that can be, because you do not have a common federated identity-management system across those institutions, so you have to resort to all kinds of clumsy ad hoc solutions.

One of the contributions that supporting organizations, such as information technology and libraries, can make is to help supply infrastructure that supports simple sharing. I see institutional repositories as key—but far from exclusive—players in that role. We see this from the discipline-related repositories that exist for specific genres of data. Those make sharing very easy, but we need to look for more mechanisms to facilitate that.

The last area I want to talk about is privacy. We face a big burden dealing with personally identifiable data of various kinds, the need in university settings to get involved with institutional review boards (IRBs), and the specific challenges of sharing and reusing data, which seems philosophically opposed to what most IRBs want to achieve. For personally identifiable data, they want to be as constraining and specific as possible. We need to recognize that we are getting into situations where we need to be able to reuse data more fluidly, and we are going to need some serious conversations about how this interacts with policies about privacy and informed consent.

There are several possible approaches in this regard. In many cases we want to anonymize the information, and we need to learn how to do a better job of that technically and to look for easy ways to share anonymized data with an agreement that the recipient will not attempt to de-anonymize it as a second line of defense. In other cases, personally identifiable information may be needed, particularly as we look at the interfaces between personal histories of various kinds and genomics or other kinds of population studies.

I wonder whether there are not some new ways to think about this issue. There is a tremendous interest in citizen science, as was mentioned earlier. There are many people who are interested in documenting their own behaviors, their own physical health. I was at a

meeting recently looking at developments in personal archiving, and it is clear there is a growing interest in collecting and sharing this kind of information among a sector of the population. We also have some very interesting developments in personal genotyping, for example, where communities of people are sharing the results that they get back from companies like 23andMe that do this sort of work.

I wonder if there is some way to connect these kinds of social changes into the process, or at least to think about whether we can do something better than the very constrained kinds of informed consent approaches that are in use today. We are significantly limited right now in doing the kinds of knowledge discovery that cross different kinds of databases, ranging from biological databases to databases about people and about behaviors and populations. I would suggest that is a major cultural and social issue that we need to be exploring in detail.

Paul Edwards

-University of Michigan-

The focus of my talk is on institutions and open data. I want to start with a success story. The meteorological data infrastructure is one of the oldest global infrastructures. It goes back to the 1850s. In the interwar period, a rudimentary, global data-sharing system emerged that used shortwave radio, telegraph, and many other technologies. At that time, meteorologists threw away almost all the data they received, because they had no way to use it. Once they got computers, they became able to process that data. Since the 1960s, the combination of a global observing system with many kinds of instruments linked to a global telecommunications system has been used. The system pours data from all over the world into computers that make forecasts and data products and then delivers those to national meteorological services, and it works well.

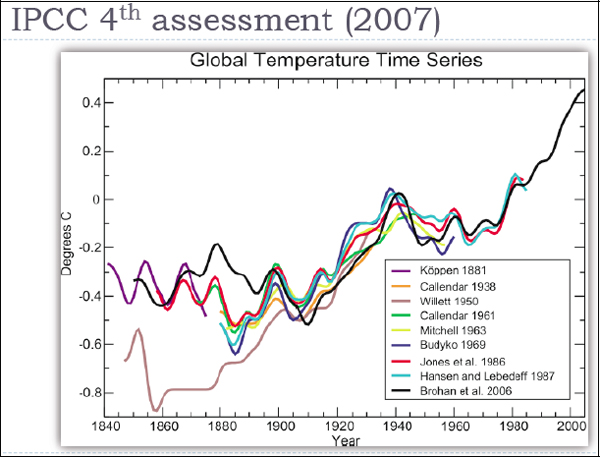

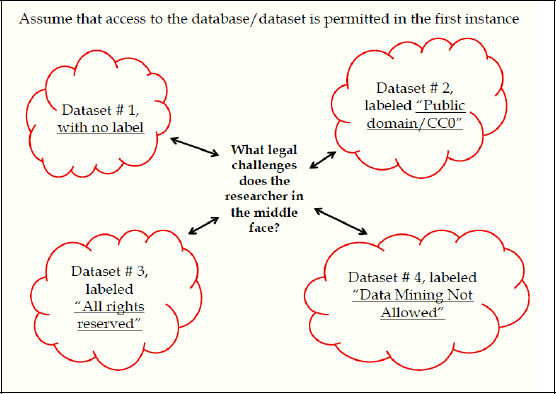

However, we do have a problem. Figure 3-3 is a graph of the famous global temperature curve as collected by different investigators since the nineteenth century.

FIGURE 3-3 Global temperature time series.

SOURCE: Intergovernmental Panel on Climate Change

There are enormous differences in the temperatures recorded by the datasets from the nineteenth century and the early part of the twentieth century, especially before 1940. This is not much data by today’s standards, but the ability to crunch these numbers was not there before computers. Before 1963, almost all of these datasets included fewer than 450 stations. They did this because these were the stations known to be highly reliable. They were the stations that had been there over the entire period, and which had not made many changes to their locations, instrumentation, and so on. So their data were considered reliable.

The later temperature curves, put together in 1986, 2006, and more recently, use computer models to add in data from stations that were not trustworthy enough earlier—either because their records were incomplete or because they had gone through some changes, such as having been moved or engulfed by an urban environment, which made their record suspect. A recent project at the University of California, Berkeley is trawling the Web to find station records and add them into a global dataset containing more than 30,000 stations. The quality of that dataset is open to question, but it is one of these Web-sourcing ideas that we have heard a lot about today in other presentations.

This story offers an example of an extremely open data system that has existed for more than 150 years. One of the reasons the weather data system works so well is that in the early days of weather data reporting, data were freely exchanged, because they had no economic value. We could not do anything with them, because it was difficult to make much use of them in many ways, so the international telegraph system began to carry weather data at no cost. Since sharing these data was advantageous to everyone, they did it freely.

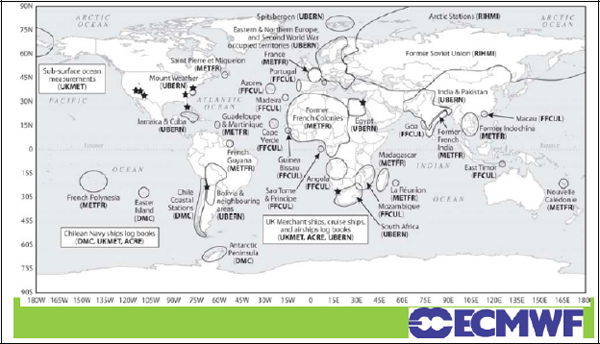

In the 1990s, that started to change. Some countries are charging for weather data products in an effort to recoup the costs of their observing systems. Figure 3-4 shows datasets that the European Center for Medium Range Weather Forecasts is trying to recuperate now, in order to add them into the global climate record and improve the quality of that record. There are many of these datasets.

FIGURE 3-4 Focus on pre-1957 meteorological data in sensitive regions.

SOURCE: European Centre for Medium-Range Weather Forecasts

The point of this story is partly that the effort to build this digital climate data record has already been going on for almost 30 years, and it is still under way. This is difficult work, even though the relative size of these datasets is small compared to the size of some of the databases we have now. The results of the global collection effort are good, however. Figure 3-5 presents four independent analyses of the global temperature record. They converge much better—even in the nineteenth century—than the versions created earlier.

FIGURE 3-5 IPCC AR4 (2007).

SOURCE: Intergovernmental Panel on Climate Change

I want to focus now on data problems. Some of these are institutional and some of them are not. Most of us still work at a local workplace, and almost all of us work for some kind of hierarchical organization that pays our salary. This means that the issues that concern us locally about the institution that we work for will almost always trump concerns about things that are remote. This is true for various psychological and institutional reasons. Studies of face-to-face interaction show, for instance, that it generally works better and faster than interaction at a distance. There is also a trust aspect. The people you know are the people you are more likely to share with, and whose data you are more likely to trust.

The story about meteorology and climate science has some temporal issues that have not come up much in this discussion, but are nonetheless very important. It is easy to think that the data we are trying to federate and collect will be there forever, but of course they will not. The audience may be familiar with the story when Mars data were lost, because the software with which data tapes were made had been lost. Digital information is quite fragile, because the strings of bits cannot be interpreted without the code that created them—and that code is dependent on other code, such as operating systems. Losing data by losing the software and hardware context is the kind of problem that could happen again and that already does happen quite often.

Metadata is another issue that appears in the climate and weather data story. It is not obvious to us what people in the future will need to know about the data we collect now—and

we cannot know that with any certainty. We may not collect what they will need at all, or what we collect may not be sufficient.

Finally, there is a figurative dimension to distance, which is the distance between disciplines. The great promise of open data is that we can work on problems that have never been studied before, because they required collaborations among different fields. That is also a potential failing, however, because if an ecologist wants to work with data from, for example, an atmospheric physicist, the ecologist might be willing to accept that person’s data at face value. After all, what does the ecologist know about atmospheric physics? Yet those data may be defective in various ways. We may need to make an extra effort to know more about those data and the problems they may have.

This raises the problem of trust. The further away that some user is from the source of the data, the more possible it is for the members of that user community to find it completely unpersuasive. Hence, sharing data will not automatically facilitate communication and understanding.

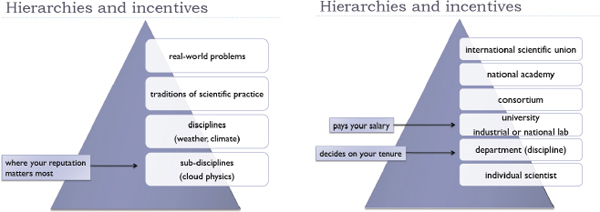

The world we think we are in, and want to be in, is now thoroughly networked, with easy sharing of data and information among all scientists and disciplines. But the world that we are actually in is often much more hierarchical and stove-piped. In science, the hierarchy begins with the subdisciplines, rises to the disciplines, and rises again to larger traditions of scientific practice (Figures 3-6 and 3-7). Studying real-world problems, such as climate change, involves many disciplines, but the place where your scientific reputation is made is at the much lower level of your subdiscipline.

FIGURES 3-6 and 3-7 Hierarchies and incentives.

SOURCE: Paul Edwards

The same observation is true for our institutions. Academic scientists are individual, they work in a department, and the department is part of a university. There might be levels above that all the way up to international professional societies—but the place that pays the salary is the university, and the place where the scientists’ reputation is evaluated is their department. Therefore, these relatively local entities can often trump larger collaborations, networks, virtual organizations, and so on.

One of the phenomena we see in the formation of many infrastructures is a phase in which we build gateways—systems that allow us to move information, for example, from one place to another without having to worry about the particular features of every infrastructure involved in the transfer. The meteorological information infrastructure, the World Weather Watch of the U.N. World Meteorological Organization, is a great example. Its bottom level consists of more than 150 national meteorological services. Each one contributes its observational data to the global observing system. The global communication system sends them to central processing centers, where data from the entire planet are processed and then returned to the national weather services.

This is great for understanding and forecasting the weather, but once we start confronting larger problems, such as climate (which involves many Earth systems besides the atmosphere), the World Weather Watch is no longer enough. Therefore, we get another, higher level of institution. For example, the Global Earth Observing System is a collection of systems, and each of those systems is, in fact, a collection of other systems. What I see when I look at the world of scientific institutions is a constant need to climb up a level to try to get an understanding of the general area that is being studied.

I have done some work with people in the Global Organization of Earth System Science Portals (GO-ESSP). These are people who build tools like the Earth System Grid. They are concerned about adequate support and coherence. The problem they face is that their activities for GO-ESSP are, in general, unfunded. They feel strongly that it is necessary, because they want to do things that link the various portals and make them into a more coherent, interoperating system—but nobody is paying them to do that. Thus, again, the institutional level of concern and the one at which they actually want to work are different and conflicting.

My late colleague, Susan Leigh Star, coined this phrase: “Metadata are nobody’s job.” Everybody wants good metadata, but nobody knows who is supposed to create them. We would like to push it off onto the scientists, but they do not want to do it. They are moving on to the next project after they have finished their datasets, and they do not want to go back and document what they have done. Perhaps there is a class of data managers who can handle it, or perhaps it should be the “crowd.” Maybe somehow younger scientists will do it. What we often see in labs is that this work is pushed down onto the graduate students. Maybe social scientists have the magic answer. I am a social scientist, and, as far as I know, we do not.

My research group has been doing some ethnographic work with environmental scientists, and we have observed that there is an obsession with metadata as a product. In other words, metadata are conceived as a kind of library catalog that will describe all the data that someone has. The reality of data sharing, however, is quite often that metadata exist mainly as an ephemeral process. Many people say that the most important issue when dealing with data collected by someone else is to talk to that person. “I need to know more about what you did, how you collected this data, what it means, what the formats are”—there is an endless list of questions, and it is impossible that all of those will ever be answered by a catalog-style metadata product. One lesson, therefore, is that when someone is conceiving metadata, creating channels for communication among researchers is at least as important as creating the ultimate catalog that we would all like to have.

Finally, I am going to talk about data and software. Data cannot be read without software. An even more important point is that many things that we call data now are actually the product of software. Climate model output is an example, as are instrument data after they have been collected and processed through some sort of data model to be put into the final dataset that is used in a general publication. Which of those things are we talking about? If it is the latter, we need to know exactly how it was processed.

For the last 15 years or so, the journal Money, Credit, and Banking has required authors to submit both their code and their data so that their results, in principle, could be replicated. In 2006, researchers found that of 150 articles only 58 actually had some data or a code, and of those, for only 15 could the results be replicated with the code and data the authors provided. The journal editors concluded that there needs to be a stricter system for people who are submitting their code and data. But my question is, how much is it worth? What they did not do was attempt to communicate with the authors to find the missing information.

My last points are related to the problem of the academic reputation system. This is the way the system works now: Scientists have some data sources, they use them to do research, they publish the results, get citations, and that builds their reputations. Somewhere in there are services, and that is where data sharing and software, as well as the writing of scientific software, both sit.

My colleague, James Howison at Carnegie Mellon University, has been thinking about how we might design a reputation system that would work for scientific software writing. Many scientists write little bits of code, or sometimes much more elaborate code. That is part of their job, but it is not their main job. Yet once they have written the software, they get involved in the problem that if their code is going to stay active, they have to maintain it. There is an ecology of software, and as the operating systems and associated software libraries change, this piece of scientific software will eventually stop working. Therefore, the scientist has to keep updating it, to keep modernizing it, if people are going to keep using it. Sometimes this turns into a sideline, and the scientist actually becomes a software developer. More often, however, what happens is that the code eventually just dies, and then there is the problem of replication. How can the research that used it be replicated?

I collect a lot of data, and then what happens to it? Sharing the data requires work from me. Writing out all the metadata is work. One of the best examples we have of a well-functioning collection of large datasets is the collection for CMIP-5, the Coupled Model Intercomparison Project, that my colleague from Unidata spoke about earlier. It is working well because there is a major incentive, which is that if someone wants to have data in the Intergovernmental Panel on Climate Change (IPCC) report, that is the only way to do it. The scientist must fill out the metadata questionnaire. How long does filling out the metadata questionnaire take? Up to 30 days for each model, because there are many runs and the questionnaire has to be filled out separately for each run.

The point is that data sharing is work, and it always will be work. Thus, one of our big issues is to find ways to pay people for that and then also to include it in reputation systems so that they can get credit for it.

There is a conflict between the mandates of institutions like the IPCC, the National Science Foundation, and the National Institutes of Health that want us to publish and share data, but there are few career incentives for sharing data. The career incentives are for the

results that come from the data. That is true of building software. It is also true of sharing data. Moreover, the problems that software developers face are also there for people who want to share data, though perhaps to a lesser degree. Data have to be maintained, which is why the issue of curation is a critical one. Who will maintain the data?

There are many possible solutions, however, and some of them will work for some areas. It is important to realize that there is no one thing called data, and that the differences among scientific fields are enormous. What works in one area may not work in another. Maybe we are talking about a virtual organization, or maybe it is a crowd-sourcing project. Among the people I have been working with, we have often heard Wikipedia, Linux, Mozilla, and Apache held up as models for the future: “This is what we will do. Open-source software works. Open-access models work.” I do not think so. For many fields, there is going to be a problem, because there are not enough people with the right skills.

Metadata standards may help, but someone needs carrots or sticks to get that process to happen. I have heard many people say that the young people will do it. They assume the young people will know how to do it, but if we do not know how to do it, why is it that they will know? They need to be trained. Studies of young people using even simple things like Google Search show that most of them do not know how to use Google Search in an effective and efficient way, and that is equally true of young scientists. Some of them will be very good, but most of them will not, and it might be that we actually need new paradigms of education.

I love the idea of science informatics. Perhaps it will come from an iSchool, but the iSchools we have are not set up for this yet. Perhaps it will come from science departments, but most of the science departments I know are not set up to do that either. Science informatics is not computer science, and most computer science departments are not interested in this problem at all except for their own kinds of models and data. Therefore, it will probably be some combination of computer science, iSchools, and domain science. And the combination may need to be different for different domains and problems.

DISCUSSANT: There was a discussion about how difficult it is to get large amounts of text for literature analysis, but people have been trying to do that since the 1970s, long before copyrights became a big issue, and there are large bodies of text that are not available. Do you think the technology works even if someone could get the text, because I do not see that?

DR. LYNCH: Parts of the technology work, and in fact there are some successes to which we can point. The notion that someone has a computer that reads the literature on a certain subject and understands it in a deep way is fairly farfetched. One of the things that Google has suggested, however, is that when someone gets enough data, he or she can do some interesting work through statistical correlations.

DISCUSSANT: There is a general problem about where the credit lies, where the value lies, and who is responsible. But the main question is: What is the business model? Who is responsible among universities for ensuring that there is a cyberinfrastructure and that appropriate credit is given?

DR. LYNCH: I do not think there are simple answers. I do suspect that even in a period where resources are very tight, if we look carefully at most of the cyberinfrastructure investment, it is largely cost effective. This investment should be making the overall research enterprise more effective and providing some real leverage. I think those are the parts that will probably move ahead.

One of the issues in the area of data that we need to be very mindful of is that data preservation per se is almost a pure overhead activity, especially when we get past the first couple of years when people might want to reproduce new results. The activity with the payoff is reuse. That, not preservation, leads to new discovery, so it is important to be focused on facilitating reuse and not just looking for the longest possible list of data that we need to preserve. There are clearly materials we should preserve even if there are not obvious reuses for them right now, but we need to be judicious in that category and compete for the resources.

DISCUSSANT: It used to be that the reason an institution would have a repository was because there were physical objects that made sense to keep locally at an institution. Individuals did not have a chance to use an institutional repository unless they were at an institution.

Consider, for example, a person’s personal photography collection. Perhaps this person is in a bizarre hybrid situation, where he or she tries different services and ends up with a few hard drives, where many copies of this material are saved. There should be a different solution, however, where that person could take advantage of a big institution. Long-term trust is the main problem. He or she does not want those photographs to be lost. What if those companies go out of business? That person would still want everything on a personal hard drive.

If we move from digital photographs to scholarly output, the issues are the same. Why is Harvard University, for example, going to have a repository for everybody’s information, when it could just have some enterprise solution that gave everything to Microsoft? What is the balance between an institution and the individual?

DR. LYNCH: I am not sure that I parse the landscape the same way you do. I would say that the very critical issue about an institutional repository is that an institution is taking

responsibility for long-term preservation. Institutions and corporations, unlike people, may live forever, or at least indefinitely, and have processes that allow them to operate on much longer timescales than individuals.

DISCUSSANT: If a university is paying money to another institution, is that still an institutional repository?

DR. LYNCH: Yes. The way I view this kind of arrangement is that if the institution identifies the data and takes responsibility for their preservation, then it is making a much longer commitment than an individual could do, perhaps setting up a trust fund or some other mechanism that outlives the individual. Operationally, we may see many universities over time contracting the preservation and curation of specific kinds of data to other universities, or to other for-profit or not-for-profit archives. We may also see the emergence of more consortia, as well as straight-out contracting. The locus of ultimate responsibility is the key thing here.

DISCUSSANT: Does that mean that the universities can act like the individual researcher?

DR. LYNCH: Except that presumably the universities have thought through issues like multisourcing and what happens when a supplier goes broke, how to back up the data, how to audit contractors and move data from one contractor to the next, and other similar issues. The other point I want to make is that we often confuse distribution systems with preservations systems. Flickr is a great example. People put their pictures up on Flickr, and I think of that as a means of distributing them, making them available, and building some social interaction around them. However, there are people who believe this is a preservation venue, and I would urge them not to think that. If you really want your pictures, by all means put them up on Flickr or anywhere else, but keep a set for yourself offsite and make lots of copies.

DR. LESK: In terms of how we get somebody to do the preservation and the data curation, assuming that scientific data is indeed worth having—I wish we had more data on that point—the data are either a public good or a private good. If they are a private good, it means that the owner gets credit for having them, presumably by getting tenure. However, that means waiting for universities to give tenure for collecting data instead of for research results or for the papers interpreting the data. Coming from a university where departments complain that the administration is too slow, I am not optimistic about that.

The other option is that the data are a public good. Dr. Berman made a comment earlier about unfunded mandates. I have the feeling that if the National Science Foundation (NSF) imposed a data management mandate—we are not going to get any more pages in the proposal, but we are going to get 5 percent more money as the upper limit of what we can ask for—I think the world would be completely different. Obviously there would have to be a requirement that the 5 percent be spent on the data curation, but it would completely change the attitude about how the research community does this.

DR. SPENGLER: It is important to point out that the NSF asks for a data management plan. It does not ask a scientist to preserve the data. It does not ask that there always be copies.

Even more important, if we read all the details, we do say that we are willing to have the scientist put requests in the proposed budget for curation purposes. We say that we are willing to support it.

DISCUSSANT: If scientists decide that their data are worth preserving, is there some sort of mechanism for them to appeal that?

DR. SPENGLER: Some of my colleagues and some of the directorates had planned to include in the NSF data management policy that a grantee has to keep the data for 5 years beyond the award period. That got taken out.

DISCUSSANT: Is there a current plan for that?

DR. SPENGLER: No. Some disciplines have mechanisms set up for that, however. For example, if we look at some of the programs within the NSF, we will see that they do not normally say that the NSF wants data management plans. However, researchers will get in trouble if they do not have the data deposited in the designated database when it is time for their annual report or project renewal, because it will not be approved. Hence, some places have thought long term about it, but it requires a very large commitment by a given domain. The social sciences have stepped up in their resources, as has astronomy. Many disciplines and organizations have made more of a commitment, but questions about the long-term cost are still open.

DR. LYNCH: There are some interesting developments at the institutional level as well. For example, earlier this year Princeton University offered a storage service for their researchers that allows them to pay in advance for storage at some rate per terabyte, allegedly for eternity, or as long as Princeton will exist. The university made some projections about the cost of technology, the refresh rate, and the return on capital for the prefunding. We can argue about whether the numbers are right, but it is interesting to see someone step forward with a model like that, and I think we will probably see some more of it.

Policy and Legal Factors

Session Chair: Michael Carroll

-Washington College of Law, American University-

There was a comment made earlier about how researchers think of themselves as hunter-gatherers. This goes to the philosopher John Locke’s labor theory: I did it, and therefore I own it, and I have a property right in it.

Lawyers think of property in different ways, but I want to highlight the fact that there is a “there” there, even though the words “intellectual property” are being imprecisely used in this context. The way that an intellectual property lawyer might think about ownership and rights over the data is in terms of the rights over the first copy of the zeroes and ones. In a way, this is almost like tangible property in the sense that when there is only one copy, these zeroes and ones might be owned insofar as I have the medium on which these zeroes and ones sit, and you cannot have it unless I say so. This is a claim of ownership over a form of property.

Intellectual property rights are a layer above the zeroes and ones. They are entirely intangible, and these are rights that tell us what uses are acceptable. May we access the zeroes and ones? May we copy them? May we change the order of the zeroes and ones? These are use regulations.

Think about intellectual property law as access and use regulations that apply to the zeroes and ones rather than being embedded directly in the zeroes and ones. Once we make this conceptual separation, when we think about the policy issues, we are thinking about the regulations over data acquisition, data reuse, and the like.

This page intentionally left blank.

Michael Madison

-University of Pittsburgh School of Law-

I want to highlight some central legal questions in this area. In particular, I am going to focus on intellectual property (IP) law questions. There are some interesting and important privacy law issues as well, but I am not going to discuss those.

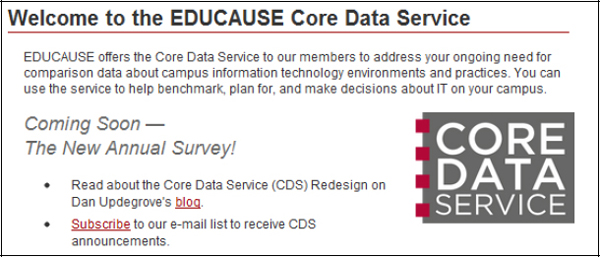

Figure 3-8 highlights in a very simplified, stylized way how I organize my thoughts on this topic.

FIGURE 3-8 Intellectual property challenges to data access.

SOURCE: Michael Madison

There are four clouds of different datasets, and a researcher in the middle who is extracting—or recombining or using—data from each of these sources. There is a different kind of a label—or, in one case at least, no label whatsoever—attached to each of those datasets, specifying terms and conditions under which data from that source might be used, recombined, shared, and so forth. The question is, What does the person in the middle do, and how does the legal system frame that set of questions?

In the upper left corner, there is no label whatsoever, just a pot of data. The lower left is “all rights reserved,” or the standard copyright-style notice that would be attached to any kind of copyrighted work and that is often attached to materials that are not actually copyrighted, often out of ignorance or sometimes in an attempt to deliberately claim rights in things that cannot be claimed. It is a default copyright label.

On the lower right side is a context-specific restriction that is phrased “data mining not allowed.” This is to illustrate the idea that people who collect data or create copyrighted works increasingly have the power to custom-design labels or notices that they attach to their works before they send their works out into the world, and they expect both the world to respect that label and the legal system to enforce it somehow.

In the upper right corner is a label of a type that is increasingly common, used to specify or declare that the material inside the pot is in the public domain or, if it was not in the public domain in the first place but in fact was copyrighted to begin with, has now been dedicated to the public domain by some kind of affirmative notice or label. It is the CC0 label, which is a form of Creative Commons license that basically declares that the material covered by the license was copyrighted, but the author of the material, or the owner of the copyrighted material, is affirmatively dedicating that content to the public domain. One of the questions I will address is the extent to which that kind of labeling or notice device is actually effective.

There are three general categories I want to cover, with questions in each. One is the default legal status of data in these environments, both with respect to the underlying data and also with respect to the adjacent information, formats, coding, metadata, and literature that arise from these things. The second one pertains to contracts, licenses, notices, restrictive notices, and enabling notices. The third category is what the legal system has to say about the problem of managing a commons or managing a community of shared resources.

I will begin with some default copyright rules within the intellectual property system—just what the rules are. First, data are treated in copyright law as facts and therefore cannot be copyrighted. They are by design in the public domain. This is one of the few black-letter legal rules that is easy to state. This would include experimental results, observations, sensor readings, and the like. So for people who assert that they own their data, if we were to apply a formal legal model to this, they cannot own the individual items in the dataset. They might, however, be able to claim a copyright in the compilation of the data or the database as a whole.