Core Areas of Geospatial Intelligence

Over the past several decades, the missions of agencies now represented in the National Geospatial-Intelligence Agency (NGA) have intersected with several academic fields, including geodesy, geophysics, cartographic science, geographic information science and spatial analysis, photogrammetry, and remote sensing. Advanced work in these fields depends on university research and curricula, the supply of graduate students, and technological advances. Agencies frequently sent employees to universities to gain specific expertise, for example to Ohio State University for geodesy (Cloud, 2000).

In recent years, many of these academic fields have become increasingly interdisciplinary and interrelated. For example, digital photogrammetry has changed the field so much that its methods are barely distinguishable from those of remote sensing. Similarly, new labels such as geomatics have emerged, reflecting the overlap among surveying, photogrammetry, and geodesy. Few academic programs treat geographic information science, spatial analysis, and cartography as separate fields of study; most regard them as tracks or emphases within geography or another discipline. Professional organizations and academic journals reflect these interdisciplinary changes. For example, mergers, name changes, and increasing overlap have characterized the professional organizations over recent decades (e.g., Ondrejka, 1997). This chapter examines how each of the core areas has evolved over time, the key concepts and methods currently taught, and the scope of existing education and professional preparation programs.

Geodesy and Geophysics

Geodesy is the science of mathematically determining the size, shape, and orientation of the Earth and the nature of its gravity field in space and over time. It includes the study of the Earth’s motions in space, the establishment of spatial reference frames, the science and engineering of high-accuracy, high-precision positioning, and the monitoring of dynamic Earth phenomena, such as ground movements, sea-level rise, and changes in ice sheets. Because much of contemporary geodesy makes use of satellite technology, such as the Global Positioning System (GPS), topics such as orbital mechanics and the propagation of radio waves and light through the atmosphere also fall within its purview. Geophysics comprises a broad range of subdisciplines, including geodesy, geomagnetism and paleomagnetism, atmospheric science, hydrology, seismology, space physics and aeronomy, tectonophysics, and some ocean science. Given NGA’s historical focus on geodesy, the following discussion concentrates on geodesy, touching on other subdisciplines of geophysics where appropriate.

Evolution

Geodesy is one of the oldest sciences, with roots that go back to the ancient Greeks (e.g., Vaníček and Krakiwsky, 1986; Torge and Müller, 2012). The first attempt to accurately measure the Earth’s size was made in the third century B.C. By measuring the lengths of shadows, Eratosthenes of Cyrene determined the Earth’s circumference with an accuracy that would not be improved until the 17th century. The assumption that the Earth was a sphere was dispelled by Sir Isaac Newton. In the first edition of Principia, published in 1687, Newton postulated that the Earth was slightly ellipsoidal in shape, with the polar radius about 27 kilometers shorter than the equatorial radius. Refinements in field geodesy techniques slowly increased the accuracy of these estimates, but it was not until the dawn of the space age that knowledge of the Earth’s size and shape improved significantly. Through the analysis of perturbations of satellite orbits, scientists first refined the ellipsoidal dimensions of the Earth and then discovered that the shape of the Earth, as represented by its gravity field, was much more complicated.

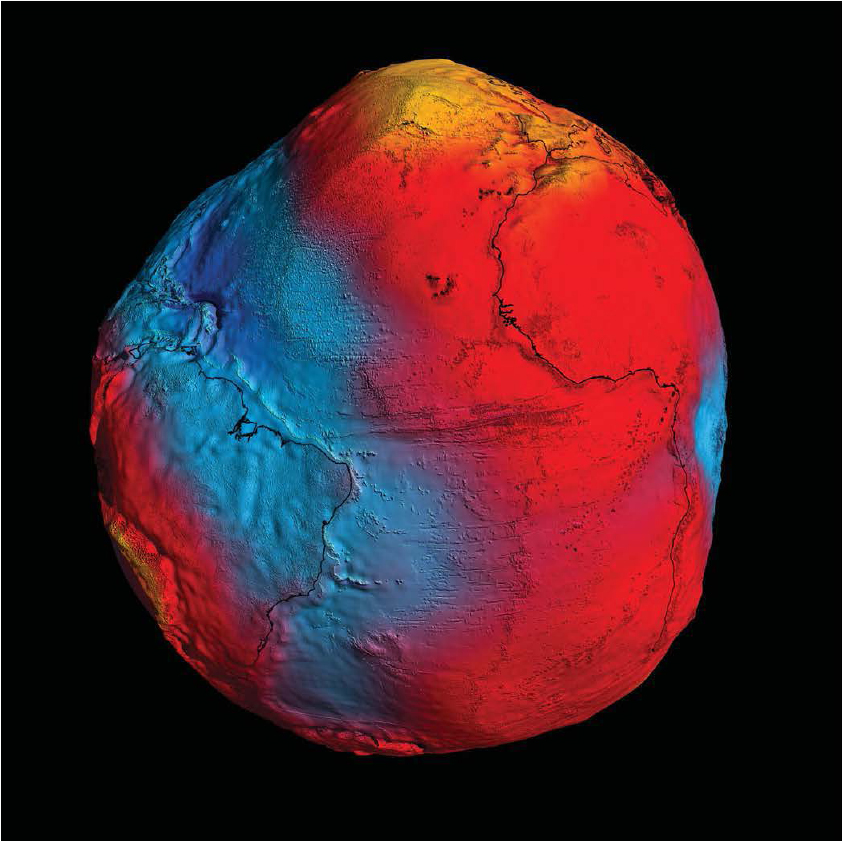

When geodesists talk about the shape of the Earth, what they actually mean is the shape of the equipotential surfaces of its gravity field. The equipotential surface that most closely approximates mean sea level is called the geoid. One of the major tasks of geodesy is to map the geoid as accurately as possible. An example of a highly accurate and precise geoid constructed using data from the Gravity field and steady-state Ocean Circulation Explorer (GOCE) satellite is shown in Figure 2.1 (Schiermeier, 2010; Floberghagen et al., 2011). Maps of the geoid provide information about the structure of the Earth’s crust and upper mantle, plate tectonics, and sea-level change. The geoid is needed to accurately determine satellite orbits and the trajectories of ballistic missiles. It also finds everyday use as the surface from which orthometric heights, the heights usually found on topographic maps, are measured. Improved knowledge of the gravity field can also be combined with GPS and/or inertial navigation sensors to produce a more accurate navigation system than can be provided by GPS alone.
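Because GPS yields heights above the ellipsoid while topographic maps show orthometric heights above the geoid, the two are linked by the geoid undulation N (the height of the geoid above the ellipsoid): to a close approximation, H = h − N. The minimal sketch below applies that relation; the function name and example values are hypothetical, and the approximation ignores the small effect of the deflection of the vertical.

```python
def orthometric_height(ellipsoidal_height_m: float, geoid_undulation_m: float) -> float:
    """Approximate orthometric height H = h - N.

    ellipsoidal_height_m: h, height above the reference ellipsoid (e.g., from GPS)
    geoid_undulation_m:   N, height of the geoid above the ellipsoid (from a geoid model)
    """
    return ellipsoidal_height_m - geoid_undulation_m


# Hypothetical values: GPS gives h = 100.0 m where a geoid model gives N = -30.0 m,
# i.e., the geoid lies 30 m below the ellipsoid, so the point is 130 m above the geoid.
print(orthometric_height(100.0, -30.0))  # prints 130.0
```

This is why an accurate geoid model matters in practice: any error in N passes directly into the orthometric height derived from a GPS observation.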

NGA’s ongoing needs for geodesy stem primarily from work carried out by the former Defense Mapping Agency and include accurately and precisely determining the geoid, establishing accurate and precise coordinate systems (datums) and positions within them (e.g., World Geodetic System 1984; Merrigan et al., 2002), and relating different internationally used datums. In particular, NGA is responsible for supporting Department of Defense navigation systems, maintaining GPS fixed-site operations, and generating and distributing GPS precise ephemerides (Wiley et al., 2006).
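A basic operation in working with a datum such as WGS 84 is converting geodetic latitude, longitude, and ellipsoidal height into Earth-centered, Earth-fixed (ECEF) Cartesian coordinates. The sketch below uses the WGS 84 defining constants (semi-major axis a = 6,378,137 m, flattening f = 1/298.257223563) and the standard closed-form conversion; it is an illustration only and ignores reference-frame realizations and epochs. It also shows that the WGS 84 polar radius b = a(1 − f) is about 21.4 km shorter than the equatorial radius, somewhat less than Newton’s estimate of about 27 km.

```python
import math

# WGS 84 defining constants
WGS84_A = 6378137.0                  # semi-major (equatorial) axis, meters
WGS84_F = 1 / 298.257223563          # flattening
WGS84_E2 = WGS84_F * (2 - WGS84_F)   # first eccentricity squared


def geodetic_to_ecef(lat_deg, lon_deg, h_m):
    """Convert geodetic coordinates on the WGS 84 ellipsoid to ECEF X, Y, Z (meters)."""
    lat = math.radians(lat_deg)
    lon = math.radians(lon_deg)
    # Radius of curvature in the prime vertical at this latitude
    n = WGS84_A / math.sqrt(1 - WGS84_E2 * math.sin(lat) ** 2)
    x = (n + h_m) * math.cos(lat) * math.cos(lon)
    y = (n + h_m) * math.cos(lat) * math.sin(lon)
    z = (n * (1 - WGS84_E2) + h_m) * math.sin(lat)
    return x, y, z


polar_radius = WGS84_A * (1 - WGS84_F)
print(geodetic_to_ecef(0.0, 0.0, 0.0))   # (6378137.0, 0.0, 0.0)
print(WGS84_A - polar_radius)            # a - b, about 21.4 km
```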

Advances in geodesy are driven largely by continuing improvements to and expansion of space geodetic systems. New generations of GPS satellites are being deployed by the United States, and several other countries are developing global navigation satellite systems (GNSS), including the European Galileo, Chinese Compass, and Russian GLONASS systems. The use of GPS has become ubiquitous, with myriad civil and military applications. Improvements on the horizon include the development of less expensive and more accurate gravity gradiometry for determining the fine structure of the local gravity field and more accurate atomic clocks for measuring gravity and determining heights in the field.¹
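Using atomic clocks to determine heights rests on gravitational time dilation: in the weak-field approximation, a clock higher in the gravity field runs faster, with a fractional frequency difference of roughly Δf/f ≈ gΔh/c². The sketch below assumes a constant standard gravity of 9.80665 m/s², a simplification (practical clock-based leveling works with geopotential differences); the function names are illustrative. It shows why resolving height differences of about a meter requires clock comparisons at the 1e-16 level.

```python
G_STANDARD = 9.80665     # standard gravity, m/s^2 (assumed constant over the height span)
C = 299_792_458.0        # speed of light, m/s


def fractional_frequency_shift(delta_h_m, g=G_STANDARD):
    """Weak-field approximation: df/f ~= g * dh / c^2 (positive: upper clock runs faster)."""
    return g * delta_h_m / C**2


def height_difference(frac_shift, g=G_STANDARD):
    """Invert the approximation: height difference implied by a measured df/f."""
    return frac_shift * C**2 / g


print(f"{fractional_frequency_shift(1.0):.3e}")  # 1.091e-16 for a 1 m height difference
```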

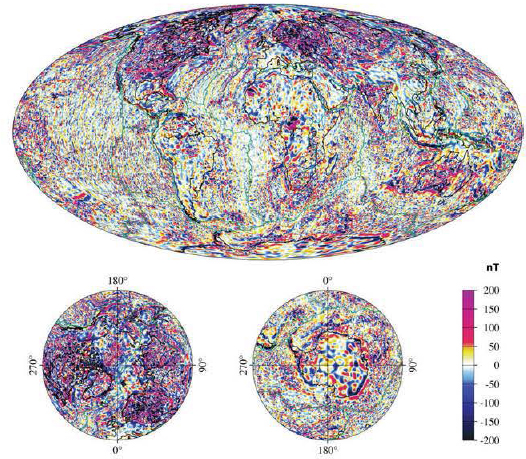

An important advance in geophysics that is relevant to NGA is the improvement in describing the Earth’s ever-changing magnetic field. The National Geophysical Data Center’s NGDC-720 model, compiled from satellite, ocean, aerial, and ground magnetic surveys, provides information on the field generated by magnetized rocks in the crust and upper mantle (Figure 2.2; Maus, 2010). This model is the first step toward producing a geomagnetic field model that would be useful for navigation.
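The waveband quoted in the Figure 2.2 caption follows from a common rule of thumb: a spherical harmonic of degree n has a full wavelength of roughly 2πR/n along a great circle. The sketch below assumes a mean Earth radius of 6371 km and reproduces the caption’s figures of about 2500 km for degree 16 and about 56 km for degree 720; the function name is illustrative.

```python
import math

EARTH_MEAN_RADIUS_KM = 6371.0  # assumed mean radius


def degree_to_wavelength_km(n, radius_km=EARTH_MEAN_RADIUS_KM):
    """Approximate full wavelength of spherical harmonic degree n: lambda ~= 2*pi*R / n."""
    return 2 * math.pi * radius_km / n


for degree in (16, 720):
    print(degree, round(degree_to_wavelength_km(degree)))  # 16 2502, then 720 56
```

Higher degrees therefore resolve finer crustal features, which is why extending a model to degree 720 matters for mapping local magnetic anomalies.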

Knowledge and Skills

__________________

1 Presentation by D. Smith, NOAA, to the NRC Workshop on New Research Directions for NGA, Washington, D.C., May 17-19, 2010.

FIGURE 2.1 The Gravity field and steady-state Ocean Circulation Explorer mission has produced one of the most accurate geoid models to date. The deviations in height (–100 m to +100 m) from an ellipsoid are exaggerated 10,000 times in the image. The blue colors represent low values and the reds/yellows represent high values. SOURCE: ESA/HPF/DLR.

Graduate study in geodesy encompasses the theory and modern practice of geodesy. Topics include mathematical tools such as least-squares adjustment, Kalman filtering, and spectral analysis; the principles of gravity field theory and orbital mechanics; the propagation of electromagnetic waves; and the theory and operation of observing instruments such as GNSS receivers and inertial navigation systems. A key technique students learn is modeling observations to extract quantities of interest. While course-only master’s degrees are available at some universities, most graduate degrees in geodesy require completion of a research project, some of which involve substantial amounts of computer programming. Geodesists who carry out or manage research traditionally hold a master’s or doctoral degree from a university specializing in geodesy and an undergraduate degree in a related field such as survey science, civil engineering, surveying engineering, physics, astronomy, mathematics, or computer science.
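Kalman filtering, one of the graduate topics listed above, recursively combines a prediction with each new observation, weighting the two by their variances. The scalar sketch below is a textbook random-walk filter, not the method of any particular GNSS processing package; the function name and the example variances and observations are hypothetical. It could represent, for instance, one coordinate of a slowly moving station observed day after day.

```python
def kalman_random_walk(measurements, meas_var, process_var, x0, p0):
    """Scalar Kalman filter for a state modeled as a random walk.

    measurements: sequence of observations z_k of the state
    meas_var:     variance of each observation (R)
    process_var:  variance of the state change per step (Q); 0 means a fixed state
    x0, p0:       initial estimate and its variance
    Returns a list of (estimate, variance) pairs, one per measurement.
    """
    x, p = x0, p0
    history = []
    for z in measurements:
        # Predict: the state is expected to stay put, but its uncertainty grows by Q.
        p = p + process_var
        # Update: the gain weights the new observation against the prediction.
        k = p / (p + meas_var)
        x = x + k * (z - x)
        # Posterior variance; algebraically (1 - k) * p, written to avoid cancellation.
        p = p * meas_var / (p + meas_var)
        history.append((x, p))
    return history


# Hypothetical daily height solutions (m) for a station, each with 1 cm standard deviation.
obs = [12.034, 12.021, 12.029, 12.040, 12.025]
for est, var in kalman_random_walk(obs, meas_var=0.01**2, process_var=0.001**2, x0=obs[0], p0=1.0):
    print(f"{est:.4f} +/- {var ** 0.5:.4f}")
```

With process_var set to zero and a very uncertain starting value, the filter reduces to a running mean of the observations, which is a convenient sanity check.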

FIGURE 2.2 The downward-direction component of the crustal magnetic field, in nanoteslas, from the NGDC-720 model. The figure shows the magnetic potential, represented by spherical harmonic degree 16 to 720, which corresponds to the waveband of 2500 km to 56 km. SOURCE: National Geophysical Data Center.

The knowledge taught at the undergraduate level is similar in breadth to, but less deep than, that taught at the graduate level. Courses include specialized mathematics such as adjustment calculus (least-squares analysis), geodetic coordinate systems and datums, the elements of the Earth’s gravity field, and the basics of geodetic positioning techniques such as high-precision GPS surveying. Students should be well versed in the mathematical and physical principles underlying geodesy so that during their careers they can readily adapt to advances in the field. Graduates with an undergraduate degree with geodesy as a major component commonly work as geodetic or surveying engineers, who design and supervise data collection activities, carry out routine analyses, and solve small problems of a theoretical nature.
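Least-squares adjustment is easiest to see on a small leveling network with redundant observations. In the hypothetical example below, benchmark A has a fixed height of 100.000 m, and three height differences (A to B, B to C, A to C) are observed for the two unknown heights of B and C, giving one redundant observation. The sketch forms the normal equations (AᵀA)x = Aᵀl and solves them by Gaussian elimination, assuming equal weights; the 6 mm misclosure ends up spread evenly across the three observations.

```python
def least_squares(a, l):
    """Solve min ||A x - l||^2 through the normal equations (A^T A) x = A^T l."""
    n = len(a[0])
    # Normal matrix N = A^T A and right-hand side u = A^T l
    nmat = [[sum(row[i] * row[j] for row in a) for j in range(n)] for i in range(n)]
    u = [sum(row[i] * li for row, li in zip(a, l)) for i in range(n)]
    # Forward elimination with partial pivoting
    for col in range(n):
        piv = max(range(col, n), key=lambda r: abs(nmat[r][col]))
        nmat[col], nmat[piv] = nmat[piv], nmat[col]
        u[col], u[piv] = u[piv], u[col]
        for r in range(col + 1, n):
            factor = nmat[r][col] / nmat[col][col]
            for c in range(col, n):
                nmat[r][c] -= factor * nmat[col][c]
            u[r] -= factor * u[col]
    # Back substitution
    x = [0.0] * n
    for i in reversed(range(n)):
        x[i] = (u[i] - sum(nmat[i][j] * x[j] for j in range(i + 1, n))) / nmat[i][i]
    return x


# Hypothetical leveling network; unknowns are [H_B, H_C].
H_A = 100.000
A = [[1, 0],    # H_B - H_A = 1.000
     [-1, 1],   # H_C - H_B = 2.000
     [0, 1]]    # H_C - H_A = 3.006
l = [H_A + 1.000, 2.000, H_A + 3.006]
hb, hc = least_squares(A, l)
print(f"{hb:.3f} {hc:.3f}")  # 101.002 103.004
```

For real networks, observations would carry weights from their variances, and the cofactor matrix (inverse of the normal matrix) would also be reported to give the precision of the adjusted heights.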

A bachelor’s degree in geophysics combines studies in geology and physics with mathematical training. Graduates commonly work as exploration geophysicists who prospect for oil, gas, or minerals, or as environmental geophysicists who assess soil and rock properties for various applications. A graduate degree in geophysics, preferably a doctorate, is required for research. The graduate-level knowledge and skills acquired in geophysics programs parallel those in geodesy programs, with some overlap in subject areas. Additional topics of study include seismology; the structure and evolution of the Earth, including plate tectonics; the theory and measurement of the Earth’s magnetic field; and space physics, including the nature of the ionosphere and magnetosphere and the phenomena of space weather and their impact on modern technological systems.

Education and Professional Preparation Programs

At the undergraduate level, geodesy is primarily taught in geomatics programs (Box 2.1), typically in a geomatics or surveying engineering department or as an option in a civil engineering department, and sometimes in other departments (e.g., earth science, aerospace engineering, forestry). A few geography programs teach geomatics, but there is typically little geodesy content.

Only a handful of undergraduate geomatics programs (e.g., University of Florida, Texas A&M University-Corpus Christi) currently exist in the United States. More existed in the past² but were terminated because of reduced demand or a change in institutional priorities. In some cases, the associated graduate program survived. At the graduate level, geodesy is taught in geomatics, geophysics, earth science, planetary science, or engineering (primarily instrumentation-related) departments. Again, only a few such degree programs (e.g., Massachusetts Institute of Technology, Ohio State University) currently exist in the United States. Notable examples of U.S. universities currently offering an undergraduate degree in geomatics or a graduate degree in geodesy are listed in Table A.1 in Appendix A.

__________________

2 In the late 1970s, 13 schools in the United States offered 4-year bachelor’s programs in surveying or geodetic science, 8 offered a master’s degree in surveying, and 6 offered a Ph.D. in surveying and/or geodesy (NRC, 1978).

BOX 2.1

Geodesy provides the scientific underpinning for geomatics, a relatively new term used to describe the science, engineering, and art involved in collecting and managing geographically referenced information. A number of government agencies, private companies, and academic institutions have embraced this term as a replacement for “surveying and mapping,” which no longer adequately describes the full spectrum of position-related tasks carried out by professionals in the field. Geomatics covers activities ranging from the acquisition and analysis of site-specific spatial data in engineering and development surveys to cadastral and hydrographic surveying to the application of GIS and remote sensing technologies in environmental and land use management.

Some 2-year colleges and university associate degree programs offer surveying or geomatics technology programs that provide basic instruction in the principles of geodesy, including coordinate systems and the use of GPS. There are many such colleges across the United States, whose primary purpose is to produce surveying and mapping technicians. Examples include the Geomatics Technology Program at Greenville Technical College (South Carolina) and the Engineering Technology Program at Alfred State College (New York).

Course-only master’s degrees offered by some of the institutions mentioned in Appendix A allow entry into some geodesy-related jobs. Some professional-level education in geodesy is also available through continuing education programs and short courses offered by diverse organizations, such as the National Geodetic Survey, NavtechGPS, the Institute of Navigation, Pennsylvania State University, and Michigan Technological University.

Undergraduate degrees or specialization in geophysics are available at a number of universities in departments of physics, earth and planetary sciences, and geology and geophysics (e.g., Stanford University, Harvard University; see Table A.1 in Appendix A). Many universities also offer master’s and doctorate degree programs in geophysics, including the California Institute of Technology and the Massachusetts Institute of Technology.

Photogrammetry

The term photogrammetry is derived from three Greek words: photos, meaning light; gramma, meaning something drawn or written; and metron, meaning to measure. Together the words mean to measure graphically by means of light. Photogrammetry is concerned with observing and measuring physical objects and phenomena from a medium such as film (Mikhail et al., 2001). Whereas photographs were the primary medium used in the early decades of the discipline, many more sensing systems are now available, including radar, lidar, and sonar; radar and many lidar systems operate outside the visible part of the electromagnetic spectrum, and sonar uses acoustic rather than electromagnetic energy (Kraus, 2004). Moreover, while most early activities involved photography from manned aircraft, platforms have since expanded to unmanned vehicles, satellites, and handheld and industrial sensors. Construction of a mathematical model describing the relationship between the image and the object or environment sensed, called the sensor model, is fundamental to all activities of photogrammetry (McGlone et al., 2004). Given these changes in the field, photogrammetry is now defined as the art, science, and technology of extracting reliable and accurate information about objects, phenomena, and environments from acquired imagery and other data, sensed both passively and actively, within a wide range of the electromagnetic energy spectrum. Although photogrammetry emphasizes metric rather than thematic content, imagery interpretation, identification of targets, and image manipulation and analysis are required to support most photogrammetric operations.
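For a conventional frame camera, the sensor model is commonly expressed through the collinearity condition: the exposure station, the image point, and the ground point lie on one straight line. The sketch below projects a ground point into image coordinates using the omega-phi-kappa rotation convention found in many photogrammetry textbooks. It assumes the principal point is at the image origin and ignores lens distortion and atmospheric refraction; the function names are illustrative, not taken from any particular software.

```python
import math


def rotation_matrix(omega, phi, kappa):
    """Omega-phi-kappa rotation matrix (angles in radians), sequential textbook form."""
    so, co = math.sin(omega), math.cos(omega)
    sp, cp = math.sin(phi), math.cos(phi)
    sk, ck = math.sin(kappa), math.cos(kappa)
    return [
        [cp * ck, so * sp * ck + co * sk, -co * sp * ck + so * sk],
        [-cp * sk, -so * sp * sk + co * ck, co * sp * sk + so * ck],
        [sp, -so * cp, co * cp],
    ]


def project(ground, station, angles, focal):
    """Collinearity equations: ground point (X, Y, Z) -> image point (x, y)."""
    m = rotation_matrix(*angles)
    d = [g - s for g, s in zip(ground, station)]       # vector from camera to point
    u, v, w = (sum(m[i][j] * d[j] for j in range(3)) for i in range(3))
    return -focal * u / w, -focal * v / w


# Hypothetical vertical photo: camera 1000 m above the ground, 150 mm focal length.
xy = project((100.0, 50.0, 0.0), (0.0, 0.0, 1000.0), (0.0, 0.0, 0.0), 0.15)
print(tuple(round(c, 6) for c in xy))  # (0.015, 0.0075)
```

For a truly vertical photo over flat terrain, the result reduces to the familiar photo scale f/H (here 0.15/1000); the rotation terms matter once the camera is tilted.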

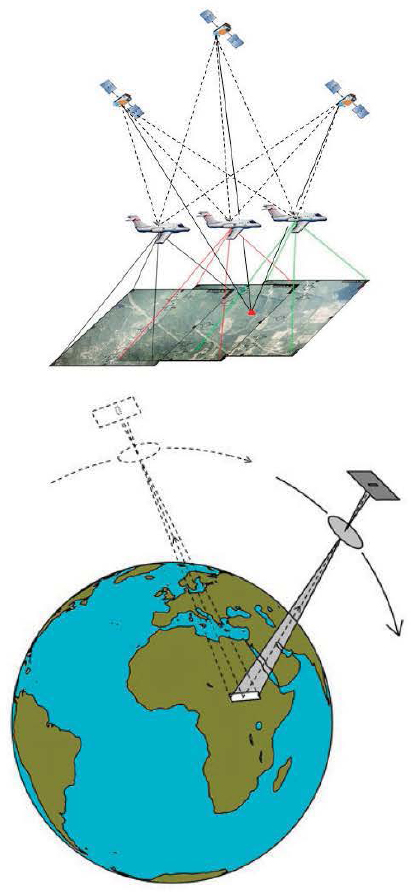

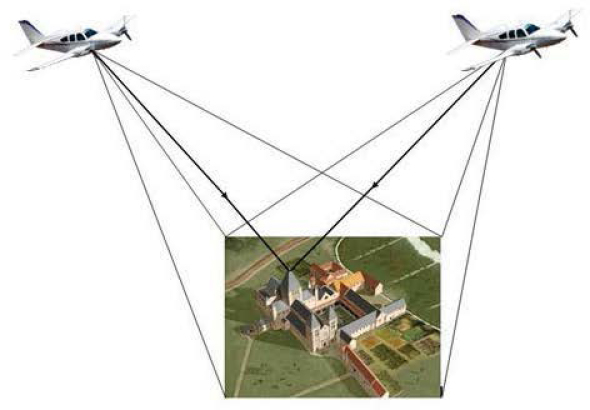

FIGURE 2.3 Accurate photogrammetric reconstruction of the imaged terrain requires overlapping images and metadata. (Top) Overlapping frame images produced by an aircraft; metadata include aircraft location determined from a constellation of GPS satellites, orientation determined from an inertial navigation system, and/or GPS-determined ground control (red triangle). (Bottom) Overlapping images produced by linear array scans from a satellite.

In photogrammetry, the Earth’s terrain is imaged using overlapping images (photographs) taken from aircraft or hand-held cameras, linear scans of an area from a satellite (Figure 2.3), or data from active sensors, such as radar, sonar, and laser scanners. A single image, which is a two-dimensional recording of the three-dimensional (3D) world, is not sufficient to determine all three ground coordinates of any target point. Unless one of the three coordinates is known, such as the elevation from a digital elevation model, two or more images are required to accurately recover all three dimensions (Figure 2.4). Imagery, sensor and platform parameters, and metadata such as those from GPS and INS (inertial navigation system) are used in photogrammetric exploitation.
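The need for a second image can be seen in the simplest stereo configuration, often called the normal case: two vertical photographs taken from the same flying height with parallel camera axes. There, the depth of a point below the exposure stations follows from its x-parallax p as B·f/p, where B is the air base and f the focal length; a single image provides no parallax, so depth cannot be recovered. The sketch below is a simplified illustration under those assumptions, not a general space intersection, and the function name and numbers are hypothetical.

```python
def stereo_point(x_left, y_left, x_right, base_m, focal_m, flying_height_m):
    """Normal-case stereo: ground X, Y (relative to the left station) and elevation Z.

    Image coordinates are in meters on the focal plane, with x parallel to the air base.
    """
    parallax = x_left - x_right
    if parallax <= 0:
        raise ValueError("a positive x-parallax from two overlapping images is required")
    depth = base_m * focal_m / parallax      # distance below the exposure stations
    X = x_left * depth / focal_m
    Y = y_left * depth / focal_m
    Z = flying_height_m - depth              # elevation above the datum
    return X, Y, Z


# Hypothetical pair: 600 m air base, 152 mm focal length, flying height 1500 m.
X, Y, Z = stereo_point(0.01, 0.005, -0.0812, 600.0, 0.152, 1500.0)
print((round(X, 3), round(Y, 3), round(Z, 3)))  # (65.789, 32.895, 500.0)
```

Production systems instead intersect rays from the full collinearity model of each image, which handles tilted photos and differing flying heights.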

Most photogrammetric activities deal with cameras and sensors that are carefully built and calibrated to allow direct micrometer-level measurements. However, an important branch of photogrammetry deals with less sophisticated instruments, such as those found on mobile phones, which require careful modeling and often self-calibration. This branch is gaining importance as the availability of imagery from nonmetric cameras grows.
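For nonmetric cameras, self-calibration typically estimates lens distortion parameters along with the other unknowns. One widely used form is the radial part of the Brown-Conrady model, in which a point at radial distance r from the principal point is displaced by the factor 1 + k1·r² + k2·r⁴. The sketch below applies that model and inverts it by fixed-point iteration; the coefficients in the example are hypothetical rather than values for any real camera, and tangential (decentering) terms are omitted.

```python
def distort(x, y, k1, k2):
    """Apply two-term radial distortion to coordinates measured from the principal point."""
    r2 = x * x + y * y
    s = 1.0 + k1 * r2 + k2 * r2 * r2
    return x * s, y * s


def undistort(xd, yd, k1, k2, iterations=20):
    """Invert radial distortion by fixed-point iteration (adequate for modest distortion)."""
    x, y = xd, yd
    for _ in range(iterations):
        r2 = x * x + y * y
        s = 1.0 + k1 * r2 + k2 * r2 * r2
        x, y = xd / s, yd / s
    return x, y


# Hypothetical coefficients for normalized image coordinates (barrel distortion, k1 < 0).
xd, yd = distort(0.3, -0.2, k1=-0.1, k2=0.01)
print(undistort(xd, yd, k1=-0.1, k2=0.01))
```

In a self-calibrating bundle adjustment, k1 and k2 would be unknowns solved for together with camera orientations and ground coordinates rather than fixed in advance.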

Many digital photogrammetric workstations enable the overlap area of two images to be viewed stereoscopically. Automated algorithms are commonly used to extract 3D features with high accuracy. Frequently, however, human judgment is required to edit, or sometimes to override, the results from such algorithms.

Evolution

Photogrammetry began as a branch of surveying and was used for constructing topographic maps and for military mapping. It is still sometimes taught in surveying departments. Technological advances in surveying, the growth of photogrammetry, and the inclusion of related fields, such as geodesy, remote sensing (Box 2.2), cartography, and GIS, made the title “surveying” or “surveying engineering” inadequate for a department. The name geomatics or geomatics engineering was introduced to better capture this range of activities (see Box 2.1). At present, photogrammetry is taught in geomatics departments, as well as in other departments, such as geography and forestry.

FIGURE 2.4 Recovery of three-dimensional target points requires at least two overlapping images, which is the basis for accurate stereo photogrammetry.

BOX 2.2

Photogrammetry and Remote Sensing

Both photogrammetry and remote sensing originated in aerial photography. Before it was called remote sensing, this field focused on identifying what is recorded in a photograph. By contrast, photogrammetry was concerned with where the recorded objects are in geographic space. Therefore, photogrammetry required more information about the photography, such as the camera characteristics (e.g., focal length, lens distortion) and aircraft trajectory (e.g., altitude, camera attitude). Airphoto interpretation requires less precise knowledge of the geometry of the photographs; it may suffice to know the approximate scale.

The term remote sensing was introduced with the advent of systems that sense in several regions of the electromagnetic spectrum. For decades, remote sensing involved many of the same activities as photogrammetry at a coarser resolution, but contemporary remote sensing can image at resolutions equivalent to those used in photogrammetry. What used to be done almost entirely by a human, the interpretation of photographs, is now done by sophisticated algorithms based on mathematical pattern recognition and machine learning. Nevertheless, the fundamental tasks of the disciplines remain essentially the same. In photogrammetry, one deals with the rigorous mathematical modeling of the relationship between the sensed object and its representation by the sensor. Through such models, various types of information can be extracted from the imagery, such as precise positions, relative locations, dimensions, sizes, shapes, and all types of features. High accuracy is critical. For example, accurate modeling is used in multiband registration of multispectral imagery. In remote sensing, the goal is usually to transform an image so that it is suitable for mapping some property of the Earth’s surface synoptically, such as soil moisture or land cover.

Photogrammetry has gone through three stages of development: analog, analytical, and digital (Blachut and Burkhardt, 1989). Analog instruments were built to optomechanically simulate the geometry of passive imaging and to allow the extraction, mostly graphically, of information in the form of maps and other media. As computers became available, mathematical models of sensing were developed and algorithms were implemented, primarily in batch mode. The transition from analog to analytical was epitomized by the introduction in 1961 of the analytical plotter, which incorporated a dedicated computer. The development of the digital photogrammetric workstation ushered in the stage of digital photogrammetry.

Advances in optics, electronics, imaging, video, and computers during the past three decades have led to significant changes in photogrammetry. Film is being replaced by digital imagery, including imagery from active sensors, such as radar and, more recently, lidar.³

The operational environment and the variety of activities and products have also changed dramatically. The range of products has broadened from image products (e.g., single, rectified, and orthorectified images; mosaics; radar products) to include point and line products (e.g., targets, digital surface models, digital elevation models, point clouds, vectors), relative information products (e.g., lengths, differences, areas, surfaces, volumes), and textured 3D models. Photogrammetric products now provide the base information for many geographic information systems (GIS). In addition, many processes are being automated, allowing near-real-time applications. The next phase may well be called on-demand photogrammetry, with many activities carried out online. Processing will likely be pushed upstream toward the acquisition platform, making it possible to obtain information products, rather than raw data, from an airborne or satellite sensor. Direct 3D imaging may be imminent. Photogrammetry will likely continue to play a significant role in assessing the precision and accuracy of geospatial information and in addressing the complex problem of fusing imagery with other data.

Knowledge and Skills

Photogrammetry classes are taught in undergraduate programs in surveying, surveying engineering, geomatics, or geomatics engineering, but none of these programs in the United States offers a bachelor’s degree in photogrammetry. Graduates of such programs may be employed in mapping firms, particularly if they took an extra elective course in photogrammetry. They would know how aerial photography and other imagery are acquired and how to use them in stereoscopic processing systems to extract various types of mapping information. They would likely receive significant on-the-job training from senior staff in their firm.

Individuals who obtain a master’s degree in photogrammetry gain much deeper knowledge built on a strong mathematical foundation. Such photogrammetrists or photogrammetric engineers design algorithms to exploit various types of imagery. They understand the different platforms and have a command of the techniques of least-squares adjustment and estimation from redundant measurements. Photogrammetric scientists usually have a doctorate and are capable of supervising or carrying out research and modeling the various complex imaging systems. They conceive of novel approaches and ways to deal with technological advances, whether in new sensors, new modes of image acquisition from orbital platforms or aircraft, or the integration and fusion of information from multiple sources.

Education and Professional Preparation Programs

Education programs in photogrammetry (e.g., Ohio State University, Cornell University, Purdue University) flourished in the early and mid-1960s. At the time, photogrammetry was being used extensively by the Defense Mapping Agency, the U.S. Geological Survey, the U.S. Coast and Geodetic Survey, the military services, and the intelligence community. Demand for training was high, and these organizations sent significant numbers of employees to universities under programs such as the Long Term Full Time Training (LTFTT) program. By the late 1980s and early 1990s, more than 25 photogrammetry programs were offering both master’s and doctorate degrees in the field. At the undergraduate level, photogrammetry was introduced as a small part of undergraduate courses in surveying and mapping. In the 1980s and 1990s, several institutions (e.g., Ferris State University and California State University, Fresno) offered lower-level photogrammetry courses as part of their bachelor’s programs in forestry, geography, civil engineering, construction engineering, surveying engineering, and, most recently, geomatics. About that time, the Defense Mapping Agency embarked on a modernization program (MARK 85 and MARK 90) to convert to digital imagery and move toward automation. The agency’s focus on professional development shifted from learning fundamental principles to mastering the skills needed to run photogrammetry software. By the mid-1990s, the number of students taking classes through the LTFTT program and its successor, the Vector Study Program, began to decrease significantly, and the decline in enrollment reduced support for educational programs offering a substantial emphasis in photogrammetry.

__________________

3 Although terms such as radargrammetry and lidargrammetry are sometimes used to emphasize the type of sensor data being analyzed, the fundamentals of photogrammetry apply to all types of sensor data.

At present, only a handful of programs in photogrammetry exist in the United States (see Table A.2 in Appendix A). A few, such as those at Ohio State University and Purdue University, are top tier, yet are struggling to survive. Retiring faculty are not being replaced, and the number of faculty will soon decline below the critical mass needed to sustain these programs. Some 2-year technology programs, such as in surveying or construction technology, offer hands-on training using photogrammetric instruments to compile data. Most of these provide some photogrammetric skills but lack the rigorous mathematical basis of photogrammetry programs in 4-year colleges.

Outside of formal academic education, employers often provide in-house training, and some educational institutions and professional societies offer short courses ranging from a half day to a full week. The American Society for Photogrammetry and Remote Sensing regularly devotes a day or more to concurrent half-day or full-day short courses on specific topics in conjunction with its annual and semiannual meetings. Most of those who take these courses are employees seeking professional development.

Remote Sensing

Remote sensing is the science of measuring some property of an object or phenomenon by a sensor that is not in physical contact with the object or phenomenon under study (Colwell, 1983). Remote sensing requires a platform (e.g., aircraft, satellite), a sensor system (e.g., digital camera, multispectral scanner, radar), and the ability to interpret the data using analog and/or digital image processing.

Evolution

Remote sensing originated in aerial photography. The first aerial photograph was taken from a tethered balloon in 1858. The use of aerial photography during World War I and World War II helped drive the development of improved cameras, films, filtration, and visual image interpretation techniques. During the late 1940s, 1950s, and early 1960s, new active sensor systems (e.g., radar) and passive sensor systems (e.g., thermal infrared) were developed that recorded electromagnetic energy beyond the visible and near-infrared part of the spectrum. Scientists at the Office of Naval Research coined the term remote sensing to more accurately encompass the nature of the sensors that recorded energy beyond the optical region (Jensen, 2007).

Digital image processing originated in early spy satellite programs, such as Corona and the Satellite and Missile Observation System, and was further developed after the National Aeronautics and Space Administration’s (NASA’s) 1972 launch of the Earth Resources Technology Satellite (later renamed Landsat) with its Multispectral Scanner System (Estes and Jensen, 1998). The first commercial satellite with pointable multispectral linear array sensor technology was launched by SPOT Image, Inc., in 1986. Subsequent satellites launched by NASA and the private sector have placed several sensor systems with high spatial resolution in orbit, including IKONOS-2 (1 × 1 m panchromatic and 4 × 4 m multispectral) in 1999, and satellites launched by GeoEye, Inc. and DigitalGlobe, Inc. (e.g., 51 × 51 cm panchromatic) from 2000 to 2010. Much of the imagery collected by these companies is used for national intelligence purposes in NGA programs such as ClearView and ExtendedView.

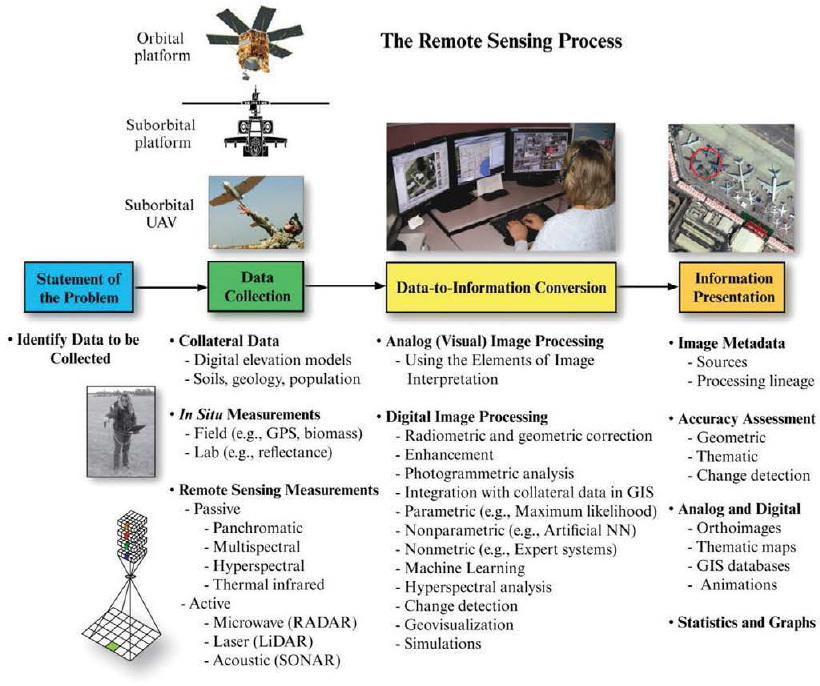

Modern remote sensing science focuses on the extraction of accurate information from remote sensor data. The remote sensing process used to extract information (Figure 2.5) generally involves (1) a clear statement of the problem and the information required, (2) collection of the in situ and remote sensing data to address the problem, (3) transformation of the remote sensing data into information using analog and digital image processing techniques, and (4) accuracy assessment and presentation of the remote sensing-derived information to make informed decisions (Jensen, 2005; Lillesand et al., 2008; Jensen and Jensen, 2012).
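Step (4), accuracy assessment, is often summarized with an error (confusion) matrix that cross-tabulates mapped classes against reference data collected in situ. The sketch below computes overall accuracy, producer’s and user’s accuracy, and Cohen’s kappa, a set of measures commonly reported for thematic maps; the function name and the counts for the two-class example are hypothetical.

```python
def accuracy_report(matrix):
    """Summary statistics for a square error matrix.

    matrix[i][j] = number of reference samples of class j that the map labels as class i.
    """
    k = len(matrix)
    total = sum(sum(row) for row in matrix)
    correct = sum(matrix[i][i] for i in range(k))
    overall = correct / total
    row_tot = [sum(row) for row in matrix]                              # mapped totals
    col_tot = [sum(matrix[i][j] for i in range(k)) for j in range(k)]   # reference totals
    # Agreement expected by chance, from the marginal totals
    expected = sum(r * c for r, c in zip(row_tot, col_tot)) / total**2
    kappa = (overall - expected) / (1 - expected)
    producers = [matrix[j][j] / col_tot[j] for j in range(k)]  # omission-error view
    users = [matrix[i][i] / row_tot[i] for i in range(k)]      # commission-error view
    return overall, kappa, producers, users


# Hypothetical validation of a forest / non-forest map with 100 reference points.
m = [[50, 5],
     [10, 35]]
overall, kappa, producers, users = accuracy_report(m)
print(f"{overall:.2f} {kappa:.3f}")  # 0.85 0.694
```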

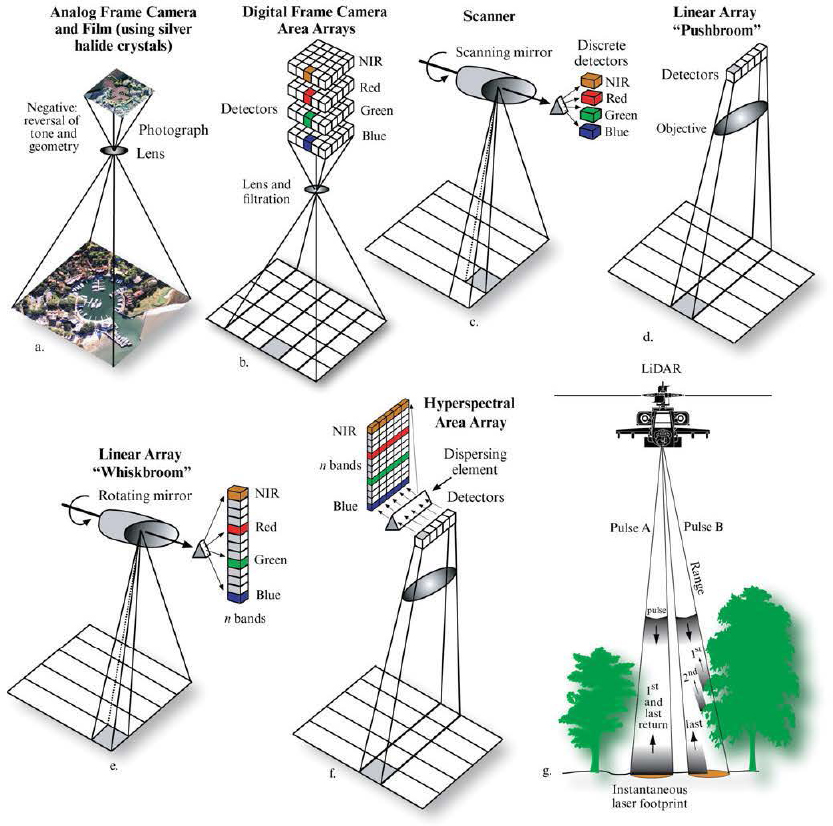

FIGURE 2.5 Illustration of the process used to extract useful information from remotely sensed data. SOURCE: Jensen, J.R. and R.R. Jensen, Introductory Geographic Information Systems, ©2013. Printed and electronically reproduced by permission of Pearson Education, Inc., Upper Saddle River, New Jersey.

State-of-the-art remote sensing instruments include analog and digital frame cameras, multispectral and hyperspectral sensors based on scanning or linear/area arrays, thermal infrared detectors, active microwave radar (single frequency-single polarization, polarimetric, interferometric, and ground penetrating radar), passive microwave detectors, lidar, and sonar. Selected methods for collecting optical analog and digital aerial photography, multispectral imagery, hyperspectral imagery, and lidar data are shown in Figure 2.6. Lidar data are increasingly being used to produce digital surface models, which include vegetation structure and building information, and bare-earth digital terrain models (NRC, 2007; Renslow, 2012).
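Because a lidar digital surface model includes the tops of buildings and vegetation while a digital terrain model represents the bare earth, subtracting one from the other gives a normalized surface model (often called an nDSM) of feature heights above ground. The sketch below assumes both models are already on the same grid and vertical datum; the tiny grids are hypothetical, and clamping small negative differences to zero is just one simple way to suppress noise.

```python
def normalized_dsm(dsm, dtm, floor=0.0):
    """Cell-by-cell DSM minus DTM (heights above ground), clamped below at `floor`."""
    if len(dsm) != len(dtm) or any(len(rs) != len(rt) for rs, rt in zip(dsm, dtm)):
        raise ValueError("DSM and DTM grids must have the same shape")
    return [[max(s - t, floor) for s, t in zip(rs, rt)] for rs, rt in zip(dsm, dtm)]


# Hypothetical 2 x 2 grids (meters): a shrub, a building, bare ground, and a noisy cell.
dsm = [[102.0, 115.5],
       [101.0, 100.8]]
dtm = [[101.0, 100.5],
       [101.0, 101.0]]
print(normalized_dsm(dsm, dtm))  # [[1.0, 15.0], [0.0, 0.0]]
```

Thresholding such a height grid is a common first step toward separating buildings and trees from ground before further classification.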

Airborne and satellite remote sensing systems can now function as part of a sensor web to monitor and explore environments (Delin and Jackson, 2001). Unlike sensor networks, which merely collect data, each sensor in a sensor web has its own microprocessor and can react and modify its behavior based on data collected by other sensors in the web (Delin, 2005). The individual sensors can be fixed or mobile and can be deployed in the air, in space, and/or on the ground. A few of the sensors can be configured to transmit information beyond the local sensor web, which is useful for obtaining situational awareness (Delin and Small, 2009). Remote sensing systems are likely to find even greater application in the future when used in conjunction with other sensors in a sensor web environment.

Knowledge and Skills

Although curricula for educating remote sensing scientists and professionals have been developed,4 they have not been widely adopted. Ideally, undergraduate and graduate students specializing in remote sensing at universities are well versed in a discipline (e.g., forestry, civil engineering, geography, geology); understand how electromagnetic energy interacts with the atmosphere and various kinds of targets; are trained in statistics, mathematics, and programming; and know how to use a GIS (Foresman et al., 1997). Remote sensing scientists and professionals must be able to analyze digital remote sensor data using a diverse array of digital image processing techniques, such as radiometric and geometric preprocessing, enhancement (e.g., image fusion, filtering), classification (e.g., machine learning, object-oriented image segmentation, support vector machines), change detection and animation, and the integration of digital remote sensor data with other geospatial data (e.g., soil, elevation, slope) using a GIS (Jensen et al., 2009). Skills are also needed to interpret real-time video imagery collected from satellites, suborbital platforms, and unmanned aerial vehicles.

__________________

4 See <http://rscc.umn.edu/>.

FIGURE 2.6 Selected methods of collecting optical analog and digital aerial photography, multispectral imagery, hyperspectral imagery, and lidar data. SOURCE: Jensen, J.R., Remote Sensing of the Environment: An Earth Resource Perspective, 2nd ed., © 2007. Printed and electronically reproduced by permission of Pearson Education, Inc., Upper Saddle River, New Jersey.
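Of the techniques listed above, change detection is the easiest to show in a few lines. One of the simplest methods is image differencing: subtract two co-registered, radiometrically corrected images of the same area and flag pixels whose change exceeds a threshold. The sketch below assumes that preprocessing has already been done; the reflectance values and the 0.15 threshold are hypothetical, and in practice the threshold is often derived from the statistics of the difference image.

```python
from typing import List

Grid = List[List[float]]


def difference_change_mask(before: Grid, after: Grid, threshold: float) -> List[List[bool]]:
    """Flag pixels whose absolute change between two dates exceeds threshold.

    Assumes the two images are co-registered and radiometrically comparable,
    which is what geometric and radiometric preprocessing is meant to ensure.
    """
    return [
        [abs(a - b) > threshold for b, a in zip(brow, arow)]
        for brow, arow in zip(before, after)
    ]


# Hypothetical single-band reflectance for the same 3x3 area on two dates.
before = [[0.20, 0.22, 0.21],
          [0.19, 0.20, 0.60],
          [0.18, 0.21, 0.62]]
after = [[0.21, 0.23, 0.20],
         [0.45, 0.20, 0.61],
         [0.50, 0.22, 0.20]]

mask = difference_change_mask(before, after, threshold=0.15)
changed_pixels = sum(cell for row in mask for cell in row)  # 3 pixels flagged
```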

Education and Professional Preparation Programs

There are no departments of remote sensing in U.S. universities (Mondello et al., 2006, 2008). Instead, a variety of departments offer degree tracks in remote sensing as part of a degree in other fields, including

• geography (all types of remote sensing),

• natural resources/environmental science (all types of remote sensing),

• engineering (sensor system design and all types of remote sensing),

• geomatics (all types of remote sensing),

• geology/geoscience (all types of remote sensing and ground penetrating radar),

• forestry (all types of remote sensing, but especially lidar),

• anthropology (especially the use of aerial photography and ground penetrating radar), and

• marine science (especially the use of aerial photography and sonar).

Few of these programs offer lidar courses; most lidar instruction takes place within other remote sensing courses.

Dozens of departments at 4-year universities offer degree tracks in remote sensing. A selected list of departments with a remote sensing-related concentration, track, or degree appears in Table A.3 in Appendix A. Geography programs offer more remote sensing courses and grant more degrees specializing in all types of remote sensing than any other discipline.

As far as can be determined, few remote sensing courses are offered at 2-year colleges, and no degrees are granted with a specialization in remote sensing. Remote sensing education is also available through workshops and webinars organized by professional societies and online instruction and degrees offered by universities.

Cartography focuses on the application of mathematical, statistical, and graphical techniques to the science of mapping. The discipline deals with theory and techniques for understanding the creation of maps and their use for positioning, navigation, and spatial reasoning. Components of the discipline include the principles of information design for spatial data, the impact of scale and resolution, and map projections (Slocum et al., 2009). Themes often analyzed include evaluation of design parameters—especially those involved with symbol appearance, hierarchy, and placement—and assessment of visual effectiveness. Other topics emphasized include transformations and algorithms, data precision, and data quality and uncertainty. Cartography also focuses on automation in the production, interpretation, and analysis of map displays in paper, digital, mobile device, and online form.
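Map projections and the impact of scale are closely linked: most projections enlarge or shrink distances by different amounts in different places. As an illustration only, the sketch below uses the spherical Mercator projection (an assumption; it is one of many projections) with a sphere radius of 6,378,137 m, the value commonly used for web maps. It computes projected coordinates and the point scale factor, which for Mercator equals the secant of latitude.

```python
import math
from typing import Tuple

# Sphere radius in meters (the WGS 84 semi-major axis), as commonly used for
# web maps. Treat it as an illustrative assumption.
EARTH_RADIUS_M = 6_378_137.0


def mercator_forward(lon_deg: float, lat_deg: float,
                     radius: float = EARTH_RADIUS_M) -> Tuple[float, float]:
    """Project longitude/latitude in degrees to spherical Mercator x/y in meters."""
    if abs(lat_deg) >= 90.0:
        raise ValueError("Mercator y is unbounded at the poles")
    lam = math.radians(lon_deg)
    phi = math.radians(lat_deg)
    x = radius * lam
    y = radius * math.log(math.tan(math.pi / 4.0 + phi / 2.0))
    return x, y


def mercator_scale_factor(lat_deg: float) -> float:
    """Point scale factor k = sec(latitude): local enlargement of distances."""
    return 1.0 / math.cos(math.radians(lat_deg))


x_edge, _ = mercator_forward(180.0, 0.0)  # half the equator's length in meters
k60 = mercator_scale_factor(60.0)          # about 2: distances at 60 degrees drawn twice as long
```

The scale factor is why a fixed map scale bar is only correct along the latitude for which it was computed on a Mercator map.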

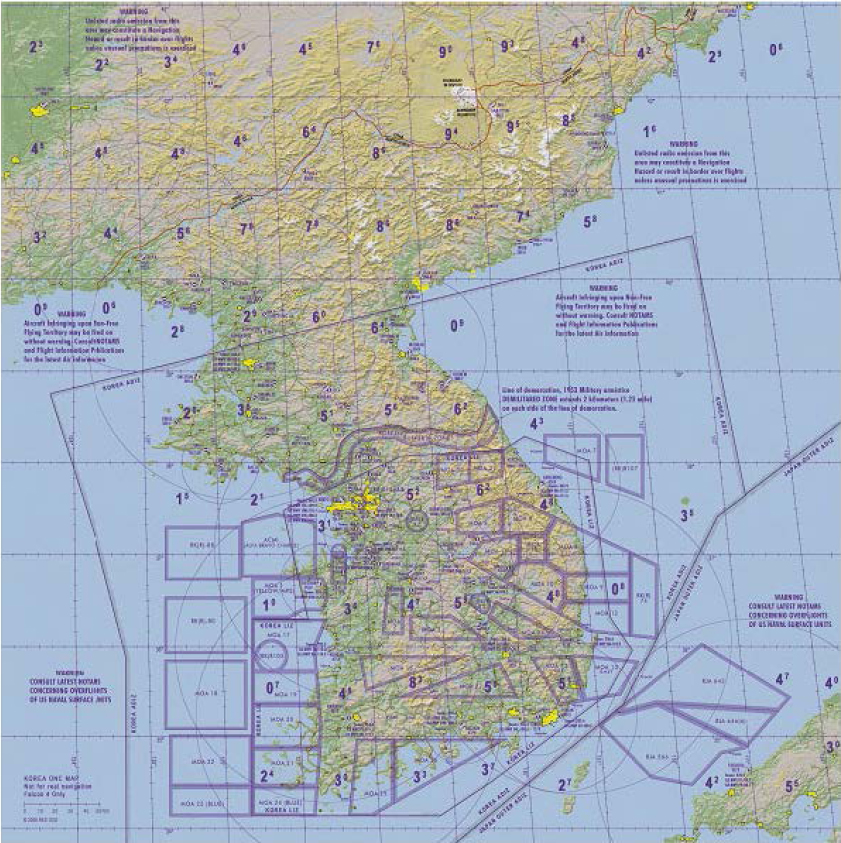

Among the key tasks that fall within cartography at NGA are maintaining geographic names data, producing standard map coverage for areas outside the United States, and nautical and aeronautical charting (e.g., Figure 2.7). The operational demands of the armed services for digital versions of standard maps and charts have expanded with the increased availability of automated navigation systems.

Evolution

The roots of cartography lie in geodesy and surveying, in exploration for minerals and natural resources, in maritime trade, and in sketching and lithographic renderings of landscapes by geologists and geographers. The formal discipline of cartography dates back to the late 1700s, when William Playfair began mapping thematic information on demographic, health, and socioeconomic characteristics. Military and strategic applications, particularly navigation and ballistics, have driven many of the major advances in cartography. Improvements in printing, flight, plastics, and electronics supported cartographic production, distribution, preservation, spatial registration, and automation.

The end of World War II created a surplus of trained geographers who moved from military intelligence to academic positions. During the 1970s and 1980s, graduate programs specializing in cartography began to emerge at about a dozen universities. Beginning in the early 1980s, GIS began to flourish, largely due to the decision to automate the U.S. Decennial Census and map production at the U.S. Geological Survey (McMaster and McMaster, 2002). Demand for personnel trained in processing spatial information grew. In response, the emphasis of university curricula shifted from cartography to geographic information science (Box 2.3).

FIGURE 2.7 NGA digital operational navigational chart covering the Korean peninsula at 1:1M scale, displayed in the FalconView software. SOURCE: Clarke (2013b).

BOX 2.3

Geographic Information Science

Geographic information science is a term coined in a seminal article by Michael F. Goodchild (1992) to encompass the scientific questions that arise from geographic information, including both research about GIS that would lead eventually to improvements in the technology and research with GIS that would exploit the technology in the advancement of science (Goodchild, 2006). As such, geographic information science includes aspects of cartography, computer science, spatial statistics, cognitive science, and other fields that pertain to the analysis of spatial information, as well as societal and ethical questions raised by the use of GIS (e.g., issues of privacy).

Cartographic skills in information design, data modeling, map projections, coordinate systems, and statistical analysis for mapping remain an important foundation for many tasks in geospatial intelligence. For example, an ability to create and interpret interactive and real-time graphical displays of geographic spaces (e.g., streaming video footage of enemy terrain) or of statistical information spaces (e.g., statistical clusters of demographic, economic, political, and religious characteristics) could help identify latent or developing terrorist cells. Skills required for nautical charting include a working knowledge of calculus, solid programming skills, and expertise in converting among international geodetic datums and spheroids. A nautical charting specialist must also be able to compile information from various sources, establish a statistical confidence interval for each information source, and quantify data reliability.
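Converting among datums starts from the fact that a latitude and longitude only identify a point once a reference spheroid is specified. The sketch below converts geodetic coordinates to Earth-centered Cartesian (X, Y, Z) coordinates on two ellipsoids, WGS 84 and Clarke 1866, to show that the same numbers describe points tens of meters apart. It assumes, unrealistically, that both ellipsoids share the same center and orientation; a full datum transformation adds shift, rotation, and scale parameters (e.g., a seven-parameter Helmert transformation), which are omitted here.

```python
import math
from typing import Tuple

Ellipsoid = Tuple[float, float]  # (semi-major axis a in meters, flattening f)

WGS84: Ellipsoid = (6_378_137.0, 1.0 / 298.257223563)
# Clarke 1866 is usually specified by its semi-axes a and b; derive f = (a - b) / a.
_CLARKE_A, _CLARKE_B = 6_378_206.4, 6_356_583.8
CLARKE_1866: Ellipsoid = (_CLARKE_A, (_CLARKE_A - _CLARKE_B) / _CLARKE_A)


def geodetic_to_ecef(lat_deg: float, lon_deg: float, h_m: float,
                     ellipsoid: Ellipsoid) -> Tuple[float, float, float]:
    """Convert latitude, longitude, ellipsoidal height to Earth-centered X, Y, Z (m)."""
    a, f = ellipsoid
    e2 = f * (2.0 - f)  # first eccentricity squared
    phi = math.radians(lat_deg)
    lam = math.radians(lon_deg)
    n = a / math.sqrt(1.0 - e2 * math.sin(phi) ** 2)  # prime-vertical radius of curvature
    x = (n + h_m) * math.cos(phi) * math.cos(lam)
    y = (n + h_m) * math.cos(phi) * math.sin(lam)
    z = (n * (1.0 - e2) + h_m) * math.sin(phi)
    return x, y, z


p_wgs84 = geodetic_to_ecef(0.0, 0.0, 0.0, WGS84)
p_clarke = geodetic_to_ecef(0.0, 0.0, 0.0, CLARKE_1866)
offset_m = math.dist(p_wgs84, p_clarke)  # about 69.4 m, the difference in semi-major axes
```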

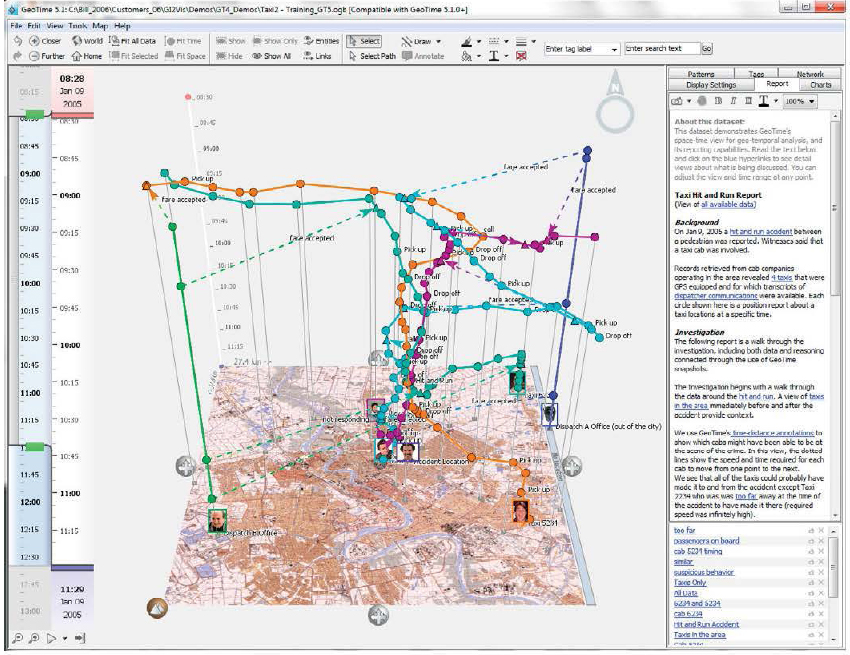

An emerging area of cartography, which addresses the design and analysis of statistical information displays, has been called geovisualization (Dykes et al., 2005) or geographic statistical visualization (Wang et al., 2002). Whereas scientific visualization is focused on realistic renderings of surfaces, solids, and landscapes using computer graphics (McCormick et al., 1987; Card et al., 1999), geovisualization emphasizes information design that links geographic and statistical patterns (e.g., Figure 2.8). The primary purpose of geovisualization is to illustrate spatial information in ways that enable understanding for decision making and knowledge construction (MacEachren et al., 2004). Its practical applications include urban and strategic planning, resource exploration in hostile or inaccessible environments, modeling complex environmental scenarios, and tracking the spread of disease. A superset of this area, called visual analytics, is described in Chapter 3.
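One common way geovisualization software links geographic and statistical patterns is dynamic linking ("brushing"): selecting observations in one view highlights the same observations in every other view. The sketch below models this with a shared selection object and the observer pattern. It is a hypothetical, in-memory illustration, not the implementation of any particular package; the class names, region names, and values are assumptions.

```python
from typing import Callable, Dict, Iterable, List, Set


class SelectionModel:
    """One selection shared by all linked views (observer pattern)."""

    def __init__(self) -> None:
        self._listeners: List[Callable[[Set[int]], None]] = []
        self.selected: Set[int] = set()

    def subscribe(self, listener: Callable[[Set[int]], None]) -> None:
        self._listeners.append(listener)

    def select(self, ids: Iterable[int]) -> None:
        # Brushing in any view calls this; every subscribed view then redraws.
        self.selected = set(ids)
        for listener in self._listeners:
            listener(self.selected)


class MapView:
    """Highlights the named regions whose ids are selected."""

    def __init__(self, names: Dict[int, str]) -> None:
        self.names = names
        self.highlighted: List[str] = []

    def redraw(self, selected: Set[int]) -> None:
        self.highlighted = sorted(self.names[i] for i in selected)


class HistogramView:
    """Highlights the histogram bins that contain selected observations."""

    def __init__(self, values: Dict[int, float], bin_width: float) -> None:
        self.values = values
        self.bin_width = bin_width
        self.highlighted_bins: List[int] = []

    def redraw(self, selected: Set[int]) -> None:
        self.highlighted_bins = sorted({int(self.values[i] // self.bin_width) for i in selected})


selection = SelectionModel()
map_view = MapView({1: "North", 2: "Central", 3: "South"})
hist_view = HistogramView({1: 120.0, 2: 340.0, 3: 95.0}, bin_width=100.0)
selection.subscribe(map_view.redraw)
selection.subscribe(hist_view.redraw)
selection.select([2, 3])  # e.g., the user brushes two bars in the histogram
```

Keeping the selection in one shared object, rather than having views notify each other directly, means a new view can be linked by a single `subscribe` call.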

The transition from traditional cartography to geographic information science in universities has changed the mix of knowledge and skills being taught. Basic cartographic skills remain a prerequisite to geographic information science training, which requires understanding of projections, scale, and resolution. Virtually all GIS textbooks include basic information on cartographic scale, map projections, coordinate systems, and the size and shape of the Earth. Knowledge about the principles of graphic display has been deemphasized in most curricula, even though map displays in GIS environments are often created by analysts and are subject to misinterpretation. The traditional cartographic training in map production has been replaced by training in cartography, in detection and identification of spatial relationships, in spatial data modeling, and in the application of mapping to spatial pattern analysis. Many curricula have also incorporated coursework to train students in the use of GIS. In the past decade, most curricula have introduced coursework in software programming, database management, and web-based mapping and data delivery.

The minimum cartographic skills needed for professional cartographers include a demonstrated ability to work with basic descriptive and inferential statistics; an ability to program in C++, Java, or a scripting language such as Python; understanding of the principles of information design (Bertin, 1967); and a working knowledge of current online and archived data sources and software for their display. Professional cartographers are capable of handling large data sets, of undertaking basic and advanced statistical analysis (difference of means, correlation, regression, interpolation) in a commercial software environment, of interpreting spatial patterns in data, and of representing these patterns effectively on charts and map displays.
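Interpolation, one of the analyses listed above, estimates values at unsampled locations from nearby observations. A minimal sketch of inverse distance weighting (IDW), one common method among several (others include kriging and splines), is shown below; the power parameter of 2 and the sample points are assumptions chosen for illustration.

```python
import math
from typing import List, Tuple

Sample = Tuple[float, float, float]  # (x, y, observed value)


def idw(x: float, y: float, samples: List[Sample], power: float = 2.0) -> float:
    """Estimate the value at (x, y) as a distance-weighted mean of the samples.

    Each sample gets weight 1 / d**power, so closer observations count more.
    """
    if not samples:
        raise ValueError("at least one sample is required")
    num = 0.0
    den = 0.0
    for sx, sy, value in samples:
        d = math.hypot(x - sx, y - sy)
        if d == 0.0:
            return value  # the query point coincides with an observation
        w = 1.0 / d ** power
        num += w * value
        den += w
    return num / den


samples = [(0.0, 0.0, 10.0), (2.0, 0.0, 20.0)]
midpoint = idw(1.0, 0.0, samples)   # equidistant from both samples: 15.0
near_first = idw(0.5, 0.0, samples)  # closer to the first sample: about 11.0
```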

Cartographic skills used in the subdiscipline of geovisualization include map animation, geographic data exploration, interactive mapping, uncertainty visualization, mapping virtual environments, and collaborative geovisualization (Slocum et al., 2009).

FIGURE 2.8 Example of a geovisualization technique that allows the display of events unfolding over time (vertical axis) and space (map). SOURCE: GeoTime is a registered trademark of Oculus Info Inc. Image used by permission of Oculus Info Inc.

Education and Professional Preparation Programs

A few dozen academic geography departments in the United States offer a degree track or concentration or a certificate with cartography or mapping in the title of the degree or certificate (see examples in Table A.4 in Appendix A). Students enrolled in these degree tracks or certificate programs are commonly required to take two or more courses related to cartography and mapping, as well as a course in basic statistics. At present, the most diverse undergraduate curriculum in cartography is offered by the University of Wisconsin. Strong graduate programs in cartography are harder to identify since so many graduate curricula have been folded into geographic information science work.

There are four major career paths in cartography: (1) information design, which focuses on design and graphic representation for topographic, reference (atlas), or thematic mapping; (2) GIS analysis (see below); (3) visual analytics (see Chapter 3); and (4) production cartography, which focuses on printing and reproduction. As the demand for production cartographers declines, fewer programs offer a primary or even a secondary focus on printing and reproduction. The demand for web, mobile, and online map production continues to grow, however. It is possible to take web or mobile coursework at some U.S. colleges and universities, but presently there are no certificate or degree programs in these topics. There is a demand for professionally trained cartographic designers to produce atlas and topographic map designs, and undergraduate training in this area can be found at several universities, such as Oregon State University, Pennsylvania State University, and Salem State University.

A number of 2-year colleges offer coursework in cartography and geographic information science. The shorter time required to complete a degree coupled with smaller class sizes (relative to larger universities) provides an environment conducive to hands-on training, which is essential preparation for good cartographic practice. Laboratory assignments, courses including practical work, and semester projects that are offered in 2-year colleges may not be offered until the junior or senior year at universities, simply due to the size of the student population. The disadvantage of the 2-year college curricula, however, is that less attention is paid to computational and statistical skills, mostly due to the shortened time span.

Many universities offer professional preparation in geographic information science, and, in the best programs, cartography courses are a prerequisite to GIS courses. Most professional preparation in cartography that is relevant to geospatial intelligence focuses on GIS analysis or visual analytics. GIS analysts with cartographic training have a better understanding of projections and scale dependence. Important spatial patterns may be evident only in data within specific scale ranges, and cartographers are trained to be sensitive to relationships between spatial process and spatial or temporal resolution. Visual analytics experts with cartographic training bring an understanding of spatial relationships (also known as spatial thinking or reasoning; see NRC, 2006), which is central to geographic training. Career preparation in cartography also includes training in basic statistics, which is necessary for exploring and interpreting spatial patterns. Geovisualization shows great promise for integrating geographic, cognitive, and statistical skill sets for the creation, analysis, and interpretation of geographical and statistical information displays, all of which are valuable for military intelligence.
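The point that a spatial pattern may be visible only within a certain range of resolutions can be demonstrated with a deliberately simple case. In the hypothetical sketch below, a fine grid with a strict alternating pattern is coarsened by averaging 2 x 2 blocks; at the coarser resolution the pattern disappears entirely. The grid values and block size are assumptions chosen to make the effect obvious.

```python
from typing import List

Grid = List[List[float]]


def aggregate(grid: Grid, block: int) -> Grid:
    """Coarsen a square grid by averaging non-overlapping block x block cells."""
    n = len(grid)
    if n % block != 0 or any(len(row) != n for row in grid):
        raise ValueError("expected a square grid whose side is a multiple of block")
    coarse = []
    for r in range(0, n, block):
        row = []
        for c in range(0, n, block):
            cells = [grid[i][j] for i in range(r, r + block) for j in range(c, c + block)]
            row.append(sum(cells) / len(cells))
        coarse.append(row)
    return coarse


def value_range(grid: Grid) -> float:
    """Difference between the largest and smallest cell values."""
    values = [v for row in grid for v in row]
    return max(values) - min(values)


# Hypothetical fine-resolution surface with an alternating (checkerboard) pattern.
fine = [[float((i + j) % 2) for j in range(4)] for i in range(4)]
coarse = aggregate(fine, 2)  # every coarse cell averages to 0.5: the pattern vanishes
```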

GEOGRAPHIC INFORMATION SYSTEMS AND GEOSPATIAL ANALYSIS

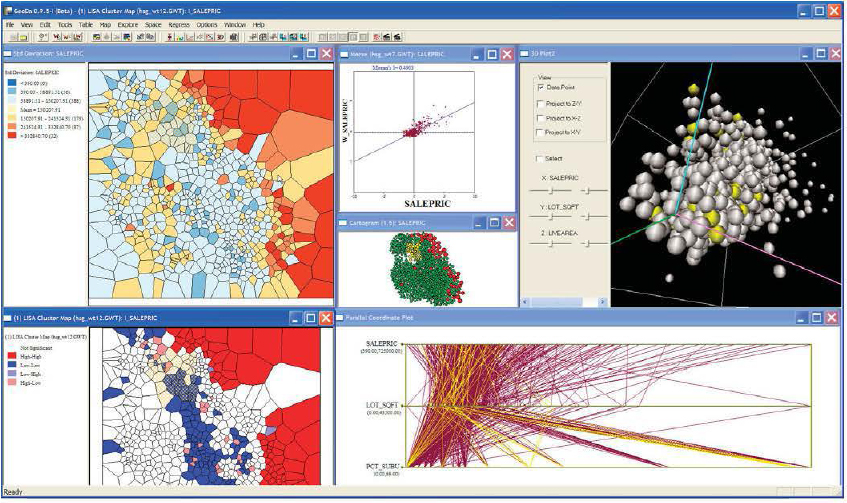

Geographic information systems are computer-based systems that deal with the capture, storage, representation, visualization, and analysis of information that pertains to a particular location on the Earth’s surface. Geospatial analysis emphasizes the extraction of information, insight, and knowledge from the GIS through the application of a wide range of analytical techniques, including visualization, data exploration, statistical and econometric modeling, process modeling, and optimization (e.g., Figure 2.9).
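A standard statistic in exploratory spatial data analysis is global Moran's I, which measures whether similar values tend to occur in neighboring areas (positive values) or dissimilar values do (negative values). The sketch below is a minimal version assuming binary contiguity weights (1 for neighbors, 0 otherwise, no row standardization) supplied as a neighbor list; it omits the permutation test that tools typically use to judge significance. The four-area example is hypothetical.

```python
from typing import Dict, List, Sequence


def morans_i(values: Sequence[float], neighbors: Dict[int, List[int]]) -> float:
    """Global Moran's I with binary contiguity weights.

    neighbors[i] lists the indices of areas adjacent to area i; w_ij = 1 for
    listed neighbors and 0 otherwise.
    I = (n / W) * sum_ij w_ij z_i z_j / sum_i z_i**2, with z_i = x_i - mean.
    """
    n = len(values)
    mean = sum(values) / n
    z = [v - mean for v in values]
    denom = sum(d * d for d in z)
    if denom == 0.0:
        raise ValueError("Moran's I is undefined for a constant variable")
    w_total = 0.0
    cross = 0.0
    for i, adjacent in neighbors.items():
        for j in adjacent:
            w_total += 1.0
            cross += z[i] * z[j]
    if w_total == 0.0:
        raise ValueError("no neighbor relationships supplied")
    return (n / w_total) * (cross / denom)


# Four areas in a row; each touches the area on either side.
row_of_areas = {0: [1], 1: [0, 2], 2: [1, 3], 3: [2]}
i_trend = morans_i([1.0, 2.0, 3.0, 4.0], row_of_areas)        # about 0.333: similar values cluster
i_alternating = morans_i([1.0, 0.0, 1.0, 0.0], row_of_areas)  # about -1.0: neighbors differ
```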

Evolution

GIS evolved into a reasonably well-defined discipline from a variety of origins, including cartography, land management, computer science, urban planning, and landscape architecture. Geospatial analysis has its roots in analytical cartography, the quantitative approach to geography pioneered at the University of Washington, and the development of quantitative spatial methods in regional science and operations research dating back to the early 1960s. Its early scope is represented by the classic book Spatial Analysis: A Reader in Statistical Geography (Berry and Marble, 1968). While often identified with spatial statistics, geospatial analysis encompasses a range of techniques from visualization to optimization. The need to develop analytical techniques to accompany the technology of GIS was raised by a number of scholars in the late 1980s and early 1990s (e.g., Goodchild, 1987; Anselin and Getis, 1992; Goodchild et al., 1992). Compilations of early progress in GIS and geospatial analysis appear in Fotheringham and Rogerson (1994) and Fischer and Getis (1997), and comprehensive overviews of the state of the art are provided in Fotheringham and Rogerson (2009), Fischer and Getis (2010), and de Smith et al. (2010).

Both GIS and geospatial analysis are changing rapidly as a result of the creation of Google Earth and similar services, the ready availability of technology to support location-based services and analysis, and the use of the Internet as cyberinfrastructure. These technological changes challenge the traditional model of an industry dominated by the products of a small number of vendors. Increasingly, GIS is offered as a web service, and credible open-source competitors to the commercial platforms are appearing, supported by open standards developed by organizations such as the Open Geospatial Consortium. This development has significantly democratized access to geographic information, which increasingly takes place through a web browser used to query, analyze, and visualize spatial data. Crowdsourcing is becoming more important, changing the role of traditional data providers, and the notion of cyberGIS (extensions of cyberinfrastructure frameworks that account for the special characteristics of geospatial data and geospatial analytical operations, e.g., Wang, 2010) is emerging. Research in geospatial analysis is embracing the study of space-time dynamics associated with both human and physical phenomena, increasingly supported by massive quantities of data. This new direction requires new conceptual frameworks, methods, and computational techniques and is driving a rapidly evolving state of the art.

FIGURE 2.9 Screen shot of an application of the GeoDa software for spatial data analysis (Anselin et al., 2006) illustrating an exploration of spatial patterns of house prices in Seattle, Washington. The different graphs and maps are dynamically linked in the sense that selected observations (highlighted in yellow) are simultaneously selected in all windows. SOURCE: Anselin (2005).

Knowledge and Skills

GIS and geospatial analysis are taught in undergraduate and graduate curricula in a wide range of university programs, such as geography, urban planning, landscape architecture, ecology, anthropology, and civil engineering. The core curriculum for GIS education is laid out in the “Body of Knowledge” (DiBiase et al., 2006), which is used by many higher education institutions to help structure GIS offerings.5 The core curriculum outlines a range of necessary knowledge and skills, including a solid foundation in cartography, information systems, computer science, geocomputation, statistics, and operations research. Most university programs include coursework in a subset of these skills, but few deliver the full range of skills.

__________________

5 Community input is currently being gathered for the second edition of the Body of Knowledge.

Education and Professional Preparation Programs

GIS educational programs and their degree of technical sophistication vary widely and range from community college training to undergraduate and graduate certificates to master’s and professional master’s programs. There are some 189 GIS degree programs in the United States, and more than 400 community colleges and technical schools offer some form of training in geospatial technologies (e.g., see Table A.5 in Appendix A). In contrast, only a handful of U.S. degree or certificate programs have an explicit focus on geospatial analysis. For example, the University of Pennsylvania offers a master’s in urban spatial analytics and Duke University offers a geospatial analysis certificate. Various aspects of geospatial analysis are also covered in graduate degree programs in statistics, public health, criminology, archeology, urban planning, ecology, industrial engineering, and other areas. For example, statistics programs with a heavy emphasis on spatial statistics include the University of Minnesota (biostatistics), the University of Washington (environmental and biostatistics), and Duke University (environmental and biostatistics). Advanced courses in spatial optimization are offered in the geography program at the University of California, Santa Barbara, in geography and industrial engineering programs at Arizona State University, and in various programs at Johns Hopkins University and the University of Connecticut.
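Spatial optimization, mentioned above, covers problems such as choosing where to site facilities. A classic example is the p-median problem: pick p sites from a set of candidates so that the total demand-weighted distance from each demand point to its nearest chosen site is as small as possible. The sketch below solves a tiny hypothetical instance by brute-force enumeration, which is only practical for very small inputs; realistic problems are usually solved with integer programming or heuristics. Straight-line distance is an assumption here; network distance is common in practice.

```python
import math
from itertools import combinations
from typing import Sequence, Tuple

Point = Tuple[float, float]
Demand = Tuple[float, float, float]  # (x, y, weight)


def p_median_brute_force(demand: Sequence[Demand], candidates: Sequence[Point],
                         p: int) -> Tuple[Tuple[int, ...], float]:
    """Return (indices of the chosen sites, total weighted distance)."""
    if not 1 <= p <= len(candidates):
        raise ValueError("need 1 <= p <= number of candidate sites")
    best_sites: Tuple[int, ...] = ()
    best_cost = math.inf
    for sites in combinations(range(len(candidates)), p):
        cost = 0.0
        for dx, dy, weight in demand:
            # Each demand point is served by its nearest open facility.
            nearest = min(math.hypot(dx - candidates[s][0], dy - candidates[s][1])
                          for s in sites)
            cost += weight * nearest
        if cost < best_cost:  # keep the first optimum found
            best_sites, best_cost = sites, cost
    return best_sites, best_cost


# Two hypothetical clusters of demand; candidate sites at the demand points.
demand = [(0.0, 0.0, 1.0), (1.0, 0.0, 1.0), (10.0, 0.0, 1.0), (11.0, 0.0, 1.0)]
candidates = [(0.0, 0.0), (1.0, 0.0), (10.0, 0.0), (11.0, 0.0)]
best = p_median_brute_force(demand, candidates, p=2)  # one site per cluster: ((0, 2), 2.0)
```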

Training in GIS and geospatial analysis is also delivered through other channels. Professional certificates or degrees are available from traditional or online university programs, both nonprofit and for-profit. Commercial vendors offer professional training or education, typically in the form of online training modules and in-person training sessions. Perhaps the largest and best-known industry training is provided by Environmental Systems Research Institute (ESRI), which offers formal technical certification programs that deal with various aspects of GIS and spatial analysis (e.g., desktop, developer, enterprise). Coursework is offered online and in 1- to 4-day instructor-led workshops. After participants pass a test, they are provided with a certificate.

Professional societies (e.g., Association of American Geographers, American Planning Association) sponsor ad hoc training sessions in basic to advanced techniques. These sessions are commonly funded by federal agencies, for example through the National Science Foundation’s Center for Spatially Integrated Social Science, or carried out as part of advanced professional training programs. A number of scholarly conferences include 1- or 2-day short courses or workshops focusing on particular software programs or advanced methods. For example, the GeoStat 2011 conference had a 1-week course on spatial statistics with open-source software.