Panel IV

Measuring Success: Assessment and the Demands of the New Strategy

Moderator:

Deborah Nightingale

Massachusetts Institute of Technology

Dr. Nightingale said that a critical aspect of the MEP was how it measures its success. We need to know more, she said, both about how this has been measured in the past, and how it could better be measured in the future “to understand where we are and how to move forward.”

Relevant and accurate measurement is critical during a time of transition, she said, “to make sure that we are measuring the right kinds of things.” There are many different kinds of measurements, including outcome metrics and process metrics, which are quantitative, as well as qualitative metrics. “I think it’s going to be important as we move forward to really understand how and what we should measure so that assessments are aligned with the new strategy MEP is laying out.” She said that the speakers would discuss the current state of assessment, proposals for some future measures, and some of the challenges of measurement, “which are true for any organization I’ve ever worked with.”

Gary Yakimov

Manufacturing Extension Partnership

National Institute of Standards and Technology

Mr. Yakimov opened his talk by saying he was not an economist by training, but a policy person whose focus is on helping develop a system of measures “that can quickly and easily inform policy decisions.” When reviewing performance and evaluation, he said, the focus is on three distinct levels of data and measurement. The first is the level of the client: what impacts and performance improvements do clients experience? The second is the level of the MEP center: viewed from the standpoint of the MEP as an investor, is the individual center doing the kinds of things MEP wants it to do, and does it have positive impacts on its clients? The third is the system level: is the MEP making people aware of its value, and does discussion about its activities inform policy?

This is not the first time the MEP program has been reviewed, Mr. Yakimov noted. The evaluation system and performance measures had been reviewed positively several years earlier by the Office of Management and Budget and by the National Academy of Public Administration (in 2003). OMB indicated that the program was well-managed, with regular reviews to assess performance, while NAPA found that the metrics used to evaluate programmatic performance and outcomes are “extensive,” and highlighted an SRI report which noted that “the significance of these efforts is not in the methods used or the results generated, but in the integration of evaluation into a longer-term, strategic framework.” In addition, he said, “we are constantly invited to talk to other federal agencies about our approach to data, which I think is a good signal.”

A Culture of Accountability

He said that one of the reasons why MEP is praised for its evaluations is that it “has a culture of measurement and accountability.” At the beginning of each year, each center files an operating plan, and the MEP uses many tools to “hold the center accountable to us and to our investment.” Centers report quarterly on their clients, and the MEP then surveys those clients. It also uses peer reviews: every other year a center is reviewed by a panel of peers, which analyzes its strategy and makes recommendations. In parallel, the MEP conducts an annual review, including a caucus of all staff that reviews best practices; this helps provide early warning signals about the centers. It also conducts a longitudinal evaluation every few years, as well as policy research and analysis.

In managing the reporting and survey process, Mr. Yakimov said, the MEP receives detailed reports from the centers on their work with the clients, including the industry, hours worked, kinds of intervention, and contact information. Six months later a third-party survey firm asks a series of follow-up questions to determine how the MEP assistance affected sales, jobs, and other outcomes.

An ‘Incredible’ Survey Response Rate

The quarterly survey in 2010 had a response rate of about 85 percent, he said, “which for a survey I think is incredible.” One reason, Mr. Yakimov said, was that the sales staff is encouraged to tell clients at the outset that they will be surveyed at the end about how much each firm is investing in itself, as well as about jobs, cost savings, and other results. “We find the centers that do that more proactively and throughout the process have a higher response rate to their surveys.”

The MEP also asks centers to report each quarter on any successes. These success stories can be entered in a template on the MEP website, where they are grouped with others by geography or industry. Guided by the template’s questions, each story has the same three narrative elements: what the problem was, what the intervention was, and what the impact was. “We’ve had a lot of good feedback from our stakeholders around those success stories,” he said.
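The three-part template described above amounts to a simple record type that can be grouped by geography or industry. The Python sketch below is purely illustrative: the `SuccessStory` fields, the `group_by` helper, and the placeholder entries are hypothetical and are not the MEP website’s actual schema.

```python
from collections import defaultdict
from dataclasses import dataclass


@dataclass
class SuccessStory:
    """Hypothetical record for one success story (not the actual MEP schema)."""
    center: str
    industry: str
    state: str
    problem: str       # narrative: what was the problem?
    intervention: str  # narrative: what was the intervention?
    impact: str        # narrative: what was the impact?


def group_by(stories, attribute):
    """Group stories by an attribute such as 'industry' or 'state'."""
    groups = defaultdict(list)
    for story in stories:
        groups[getattr(story, attribute)].append(story)
    return dict(groups)


# Placeholder entries for illustration only.
stories = [
    SuccessStory("Center A", "plastics", "PA", "problem text", "intervention text", "impact text"),
    SuccessStory("Center B", "plastics", "MI", "problem text", "intervention text", "impact text"),
    SuccessStory("Center C", "machining", "PA", "problem text", "intervention text", "impact text"),
]

by_industry = group_by(stories, "industry")
by_state = group_by(stories, "state")
```

Fixing the same three narrative fields for every story is what makes stories from different centers comparable once they are grouped.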

The survey will soon change, Mr. Yakimov said, as the MEP moves its system toward new metrics. In January 2010, the survey was scheduled to begin to ask two kinds of questions. One kind would ask whether the work of the MEP center helped the firm enter a new market, find a new customer, or develop a new product or service. A second kind would ask whether the firm actually invested in a new product or process. A larger upcoming survey would align with MEP’s next-generation strategy, which includes E3 (the government’s Economy, Energy, and Environment Initiative), as well as export plans.

A Snapshot of MEP Performance

Mr. Yakimov presented a snapshot of MEP’s performance, including a series of client impacts resulting from MEP services for FY 2009: new sales ($3.9 billion), retained sales ($4.9 billion), capital investment ($1.9 billion), cost savings ($1.3 billion), and jobs created/retained (72,075). The survey asked clients for their three biggest challenges. The top responses were (1) ongoing continuous improvement / cost reduction strategies, (2) identifying growth opportunities, and (3) product innovation and development. He said that this information could be interpreted in various ways. After all, every business wants to reduce costs—and yet this objective is not sufficient to fuel long-term growth or global leadership. Nor can number 2 be taken at face value. He noted the famous remark by Henry Ford, who said that if he had relied on his customers for advice on the most promising growth opportunities, they would have asked him to build a faster horse. “I think one of the challenges we have across our system is to create a sense of urgency in small/mid-size manufacturers about the need to grow, innovate, export and become more sustainable. What’s really important about this survey is whether we have products and services to meet this list of needs, and the fact is that we do, and we continue to develop them.”

Transition to a New Reporting and Evaluation System

Mr. Yakimov said that in the transition to a new reporting and evaluation system, the MEP would continue to hold centers accountable for three things: financial stability, market penetration, and client/economic impact. “That model will never change, whether it’s the current system of evaluation or the new system.” The current system measures client impacts in terms of new sales, retained sales, investment, cost savings, and jobs created and retained. The MEP holds the centers accountable for these results, and evaluates them on the basis of minimally acceptable impact measures (MAIM), annual and panel reviews, the operating plan, and quarterly data reporting.

The reason for the imminent change, he said, was that the MEP needs a “more balanced scorecard.” At the beginning of the MEP program, he said, the evaluation focused too much on activities and what the centers were doing to work with manufacturers. About a decade ago, the MEP moved to the client impact survey as the sole mechanism to hold centers accountable. “I think what we want to do now is reach a balance between those two things. We want to look at the activities in addition to the outcomes and impacts.”

Mr. Yakimov noted that because it is inherently difficult to quantify the impacts of social programs, this shift in balance called for an emphasis on the “preponderance of evidence” in describing impacts with clients or individuals. “We want to be able to say, ‘There is a preponderance of evidence that the centers are doing the kind of good work that we want to see.’ We do focus on the client impact survey because it is entirely quantitative. But we want to build on that with qualitative analysis—to not only look at the centers’ performance, but to look at it through the eyes of an investor. Are they doing the kinds of things we want them to do?”

This shift in balance is scheduled to feature the following:

• Increased focus on growth through innovation.

• Increased focus on market penetration.

• Minimal performance is not sufficient for understanding and informing performance and investment; there should be threshold levels to distinguish levels of performance and investment.

• Maintain the historical focus on market penetration, client impacts, and financial viability.

• Invest intelligently in centers that are strategic and high-performing.

The first two bullets were new and critical, he said, urging centers to focus on growth and innovation, and on serving more clients. He said that the current system was a “very binary evaluation system. You either meet our minimal standards or you don’t. We need a way to think about what is a high-performing center. We need to move beyond minimally acceptable impact metrics to measures that allow us to distinguish our really strong performers.” He added that there would always be centers that struggle to meet minimal performance, and the MEP would work with them.
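To make the contrast between a binary pass/fail system and graded thresholds concrete, here is a minimal Python sketch. The 0 to 100 score scale and the cutoffs of 70, 80, and 90 are hypothetical assumptions chosen for illustration; the talk did not specify the MEP’s actual thresholds.

```python
# Hypothetical tier cutoffs on a 0-100 score, checked from highest to lowest.
TIERS = [(90, "high performing"), (80, "strong"), (70, "meets minimum")]
MINIMUM = 70  # hypothetical minimally acceptable score


def binary_rating(score):
    """Binary evaluation: a center either meets the minimum or does not."""
    return "meets minimum" if score >= MINIMUM else "below minimum"


def tiered_rating(score):
    """Threshold levels that also distinguish strong performers."""
    for cutoff, label in TIERS:
        if score >= cutoff:
            return label
    return "below minimum"


comparison = [(s, binary_rating(s), tiered_rating(s)) for s in (72, 85, 95)]
```

Under the binary rule, scores of 72 and 95 receive the same rating; the tiered rule separates them while still identifying centers below the minimum.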

A New Scorecard

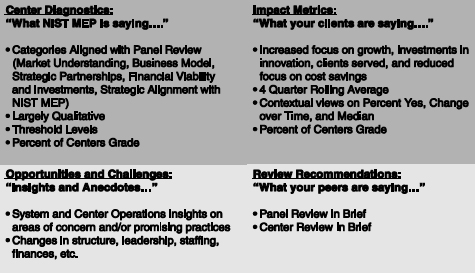

Mr. Yakimov showed an illustration of the “current scorecard without the detail,” a “center on a page” with four quadrants. The upper right quadrant contained “the main indicators,” that is, what clients think about the center. It reflected an increased focus on growth and investments in innovation, and added a new measure of the number of clients served. Cost savings remained a focus, though with less weight than in the previous system. The quadrant also introduced a four-quarter rolling average of metrics and a new entry giving context on the percentage of positive responses over time. “Right now a center can score 100 on the scorecard every quarter, but actually be declining in the number of clients they serve, the number of hours they’re working, or the impacts they’re having. And we don’t really focus on the historical performance of the centers, which we’re going to increase. This quadrant will be 80 percent of a center’s grade in the future.”
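The point about a center scoring 100 every quarter while declining is easy to illustrate. The Python sketch below computes a trailing four-quarter average of one metric and combines quadrant scores with an 80 percent weight on the main indicators. The client counts are invented, and treating the other three quadrants as a single 20 percent component is an assumption; the talk did not say how the remaining weight is divided.

```python
def rolling_average(values, window=4):
    """Trailing moving average: one value per quarter once `window` quarters exist."""
    return [sum(values[i - window + 1:i + 1]) / window
            for i in range(window - 1, len(values))]


def is_declining(series):
    """True if every value is lower than the one before it."""
    return all(later < earlier for earlier, later in zip(series, series[1:]))


def center_grade(main_indicators, other_quadrants, main_weight_pct=80):
    """Weighted grade on a 0-100 scale.

    The 80 percent weight on the main indicators follows the talk; lumping the
    other quadrants into one score is an assumption made for this sketch.
    """
    return (main_weight_pct * main_indicators
            + (100 - main_weight_pct) * other_quadrants) / 100


# Hypothetical clients served per quarter by one center.
clients_served = [120, 118, 110, 104, 97, 90]
trend = rolling_average(clients_served)  # [113.0, 107.25, 100.25]
```

Each quarter viewed alone might still clear a minimum, but the rolling average makes the steady decline visible.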

In the upper left quadrant were what he called the “preponderance of evidence indicators,” which are mostly qualitative. To avoid multiple naming conventions, he said, the qualitative metrics would be organized in the same categories as the panel reviews: Does a center understand and help lead its market? Does it have a sustainable business model? What are its strategic partnerships? Is it financially sound? Is it aligned with MEP strategies?

Mr. Yakimov praised the Manufacturers Resource Center of Pennsylvania, directed by Jack Pfunder, for its work in a Technology Scouting project with MEP. This is an effort to help firms find technologies that can “move them to the next level.” The work was highly effective, he said, in building a business model, but it required patience, because the impact on the client is not as quick as it can be in other projects. “Something we may not see in the upper right quadrant,” he said, “will be rewarded in the upper left,” where the “preponderance of evidence” measures appear. These measures indicate whether a center is doing the kinds of work and using the new tools and products suggested by the MEP.

FIGURE 10 MEP SCORECARD: “Center on a page”

SOURCE: Gary Yakimov, Presentation at November 14, 2011, National Academies Symposium on “Strengthening American Manufacturing: The Role of the Manufacturing Extension Partnership.”

The bottom right quadrant, he said, reported “what your peers are saying about you.” This included the panel reviews and the center reviews. Finally, the bottom left quadrant showed “insights and anecdotes” that inform the scorecard as a whole. This quadrant might report a change in the center’s director or key staff, the loss or gain of a funding source, and other areas of concern or promise.

He showed the timeline for the transition to the new evaluation system, which was to be released to the centers with detailed metrics in late January 2012. The two systems would run in parallel for about a year until the new one became official.

In conclusion, Mr. Yakimov summarized the MEP’s work in research and analysis, including the use of MEP data, policy papers, case studies, and data tools. He highlighted two points. “We want to do a much better job of telling our own story, using our own data. I actually think that we could be informing manufacturing strategy better than anyone else if we can figure out a way to better mine our own data and produce custom reports on a regular basis.”

Daniel Luria

Michigan Manufacturing Technology Center

Dr. Luria, vice president for research at the Michigan Manufacturing Technology Center, an MEP center, opened with the thought that “if we keep doing what we’ve always been doing, we’re probably going to get the same kinds of results.” He said that he was an economist whose major field of graduate study, done at the University of Michigan’s Institute for Social Research, was economic surveys. He had been working for an MEP center since it became one in 1991, and professed a long-standing interest in how to make the MEP more effective. This question, he said, had been asked regularly since 1993, when members of the first five or six centers began gathering every quarter to discuss it.

A Need for Improved Measurements

He reiterated that clients are surveyed for MEP about six to 12 months after the end of delivery of MEP services and asked to report impacts. These surveys, he said, generally reported very large numbers for new and retained sales, new and retained jobs, and cost savings/cost avoidance; for example, the totals have been about $1.9 billion in cost savings and $50+ billion a year in additional/retained sales. But, he said, “it’s still the case that the medians on nearly every metric are at or near zero.” He said he would explain why that is a sign of an evaluation that isn’t making its measurements very well, but also why it is “not as bad as you might think.”
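Why large totals can coexist with medians near zero is simple arithmetic: a handful of big reported impacts dominates the sum, while the many clients who report nothing determine the median. The Python sketch below uses ten invented survey responses, not MEP data.

```python
from statistics import median

# Hypothetical reported new-sales impacts ($ thousands) from ten surveyed clients.
# Most report nothing; two report very large figures.
reported = [0, 0, 0, 0, 0, 0, 0, 50, 2_000, 15_000]

total = sum(reported)        # 17050: the "sum of impacts" looks large
typical = median(reported)   # 0.0: the typical client reports nothing
top_two_share = sum(sorted(reported)[-2:]) / total  # share of the total from two clients
```

Here two clients account for more than 99 percent of the total, so the aggregate says little about what a typical client experienced.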

Dr. Luria said that three rigorous studies comparing MEP clients with control groups had been done: one for the 1987-1992 period, by Jarmin & Jensen, which found no sales effect and a 5 percent gain in productivity (value added per employee); another, for the 1992-1997 period, focusing on the seven MEP centers in Pennsylvania, by Eric Oldsman of Nexus Associates, which found the same results; and a third, for 1997-2002, by an SRI/Georgia Tech team, which found neither a sales nor a productivity effect. “I think that’s another way of saying that the medians are zero or close to zero,” he said.

He said that while he very much approved of the additions being made to the survey, an important feature of the ongoing survey was the stable, consistent instrumentation and questions it had used for more than 10 years. “The centers are used to it,” he said, “and it clearly influences their behavior. For example, part of the pay for our delivery staff at the Michigan center depends on the response rates of clients, whether those clients are able to quantify results, and other familiar behaviors.”

‘A Very Difficult Question to Answer’

However, Dr. Luria said, an inevitable problem with this kind of survey is that it expects clients to compare their current situation as an MEP client with an imagined situation without MEP services. This difficulty is compounded by queries that require dozens of calculations to answer meaningfully. “For example, one of the cost reduction questions is: ‘Compared to had you not worked with a Center, how much lower are your labor, material, overhead, and inventory costs?’ Leaving aside the lack of agreement on a definition of overhead, and the problem that inventory costs are a one-time savings on the balance sheet, it is a very difficult question to answer.” Finally, he said, client control studies can be done only every five years, because they depend on data from the Census of Manufactures. This does not give MEP feedback rapidly enough to evaluate the functioning of centers.

Another complexity is the large “sum of impact” results. “We know those are driven by outliers,” he said, “because again, the medians are so close to zero.” However, he emphasized that a zero median finding does not prove that MEP “doesn’t work.” For example, in clinical trials, a medicine that is effective for 40 percent of patients, versus 15 percent for a placebo, is deemed to have clinical value, even though most patients who take it do not improve.
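The clinical-trial analogy can be checked numerically. Assuming “effectiveness” means the share of patients who improve, and using invented trial arms of 100 patients each, the Python sketch below shows that both arms have a median outcome of zero even though the medicine helps 25 more patients in every 100.

```python
from statistics import median

# Hypothetical trial arms of 100 patients each: 1 = improved, 0 = did not improve.
treated = [1] * 40 + [0] * 60   # 40 percent improve on the medicine
placebo = [1] * 15 + [0] * 85   # 15 percent improve on the placebo


def improvement_rate_pct(outcomes):
    """Percentage of patients who improved, using integer floor division."""
    return 100 * sum(outcomes) // len(outcomes)


median_treated = median(treated)   # 0.0: the typical treated patient does not improve
median_placebo = median(placebo)   # 0.0
effect_pct_points = improvement_rate_pct(treated) - improvement_rate_pct(placebo)  # 25
```

The same logic applies to MEP clients: if a minority of clients gain substantially and the rest report nothing, the median is zero even though the program has an effect.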

‘Complex, Counter-factual Questions’

The zero median findings, Dr. Luria said, were not “damning.” Instead, he said, they were “part of why I believe that the medians are not zero, but that measurement problems are making them seem like they’re zero.” For example, he said, one question asks, “Would you recommend your MEP center to provide services to other companies?” Companies that report no impact themselves are just as likely to answer yes, they would recommend it. “So that tells me that the more honest you’re being as a company, the harder it is to answer these complex, counter-factual questions.”27

The true role of outliers is hard to assess, he continued, because the survey looks only at changes, with no reference to base levels. Thus, a $1 million client reporting a $2 million impact is accepted, while a $100 million client reporting a $25 million impact has to be investigated, even though the first claimed impact is twice the client’s size and the second is only a quarter of it. What is missing, he said, is a systematic guide for companies about how to think about the impact; for example, embedding instructions and worksheets in the survey. This lack opens the door to centers coaching clients on how to respond, he said, and almost certainly leads many clients not to quantify impacts. As an experiment, Dr. Luria built a simple spreadsheet for clients. It suggested that in regard to the change in sales, for example, “there are four or five questions you can ask yourself. We found that this gave companies a way to think about the question and quantify it. If we can do that, it’s going to tend to get the medians up.”
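Dr. Luria’s two examples show how a screen based only on the size of a reported change differs from one that scales the change by the client’s base level. The Python sketch below compares two such screens. The $10 million absolute cutoff and the 50-percent-of-sales relative cutoff are hypothetical values chosen for illustration, and reading “$1 million client” as a client with $1 million in annual sales is also an assumption.

```python
def flag_absolute(impact, cutoff=10_000_000):
    """Flag a claim for review when the reported dollar impact is large."""
    return impact > cutoff


def flag_relative(impact, baseline_sales, max_share=0.5):
    """Flag a claim when the impact is large relative to the client's own sales."""
    return impact > max_share * baseline_sales


# (reported impact, baseline annual sales) for the two examples in the text.
claims = {
    "$1 million client": (2_000_000, 1_000_000),
    "$100 million client": (25_000_000, 100_000_000),
}

results = {
    name: (flag_absolute(impact), flag_relative(impact, sales), impact / sales)
    for name, (impact, sales) in claims.items()
}
```

The absolute screen flags only the larger firm’s claim, although the smaller firm’s claim is the implausible one relative to its size; the relative screen reverses that, which is why base levels matter.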

Dr. Luria noted, however, that ingrained habits would make it difficult to change techniques. There have been attempts to change the survey “in directions that I like,” but those attempts have been resisted by center directors “because they’re used to what they’ve got, and they know that if they serve enough companies, they’ll get enough outliers to make them look good. So I think that’s a cultural problem.” He agreed with Mr. Yakimov that MEP needs a culture of innovation, but for this to occur, he said, “how we measure ourselves maybe has to change as well.”

He returned to the planned survey changes, praising the emphasis on evaluating growth and innovation projects. From an evaluation standpoint, he saw little new about such projects, and approved of the changes being proposed for the survey. He agreed that the new sales that are credited to MEP activities are a valid metric.

The Two Elephants in the Room

“But there are two elephants in the room,” Dr. Luria cautioned. The first is that “it is highly unlikely that MEP or the centers can get most clients to ascribe new sales to MEP services.” It is easy to see the source of a change that eliminates bottlenecks in the production system if the MEP brings in industrial engineers who correct the system and calculate the times. But it is more difficult for econometricians to explain variances in sales. “I think what it’s really about is that when companies grow, their managers feel like they’re geniuses, and when companies don’t grow, the managers feel that their customers are bad people. There’s not a lot of room for them to say, I am growing somewhat, and a significant part of that is because I learned something from my interaction with MEP.”

_______________

27He offered the following example of this survey problem: Suppose an MEP center helps two clients, A and B, achieve compliance with the ISO 9000 standard, which is required by customers accounting for 80 percent of each client’s sales. Client A credits the services with retaining 80 percent of its sales. Client B reasons that it would have achieved compliance somehow without MEP, and reports no impact. Client A generates an outlier; client B depresses the median.

More serious, he said, is that all sales impacts must be presumed to be zero-sum, or very nearly so, for U.S. manufacturing. Centers often conclude that they had a large impact on sales, cost savings, or exports, he said, but there is really no way of knowing whether that work produces genuinely new sales or sales won from other companies, even within the same state. “So the displacement effect has to be measured if we’re going to do the evaluation right.” He added that this presumption does not apply to productivity growth, where one firm’s increase does not imply other firms’ decrease.

The key to evaluating the MEP, Dr. Luria said, is to agree on what we want to know. One business model problem is that the stakeholders who are investing in the program are defining it as an economic development or jobs program. This is not the case. “So helping companies become more efficient, and then thinking they ought to be adding employment strikes me as somewhat bizarre. It’s a problem with having a major stakeholder that wants to measure the wrong thing.”

A Better Metric: Change in Net Value Added

A better metric, Dr. Luria said, one already mentioned by Dr. Tassey, is the change in net value added. “The first question is, do MEP services make U.S. manufacturing larger than it otherwise would be? We don’t want to measure that just as employment, because that would be measuring inefficiency. What we’re really interested in is the change in value added per FTE.” To measure this, he said, the survey needs data from both before and after MEP services: sales before and after, and purchased inputs before and after. To address the objection that new sales come at someone else’s expense, the result can be adjusted by the import-to-domestic-production ratio for the client’s six-digit NAICS code.28
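The before-and-after calculation can be sketched in Python as follows. Value added is taken as sales minus purchased inputs, as described. How exactly the import-to-domestic-production ratio should enter was not spelled out, so the adjustment here is an assumption: it credits as a net gain to U.S. manufacturing only the share of the change that plausibly displaces imports, r / (1 + r) for a ratio r. The dictionary keys and all figures are hypothetical.

```python
def value_added(sales, purchased_inputs):
    """Value added = sales minus purchased inputs."""
    return sales - purchased_inputs


def value_added_change(before, after, import_ratio):
    """Compare a client's value added before and after MEP services.

    `before` and `after` are dicts with hypothetical keys 'sales', 'inputs', 'fte'.
    `import_ratio` is imports / domestic production for the client's industry.
    ASSUMPTION: the net national gain is the gross change times the import share
    r / (1 + r); the remainder is treated as taken from domestic competitors.
    """
    va_before = value_added(before["sales"], before["inputs"])
    va_after = value_added(after["sales"], after["inputs"])
    gross_change = va_after - va_before
    net_change = gross_change * import_ratio / (1 + import_ratio)
    per_fte_change = va_after / after["fte"] - va_before / before["fte"]
    return gross_change, net_change, per_fte_change


before = {"sales": 10_000_000, "inputs": 6_000_000, "fte": 50}
after = {"sales": 11_000_000, "inputs": 6_400_000, "fte": 50}
gross, net, per_fte = value_added_change(before, after, import_ratio=0.25)
# gross = 600000, net = 120000.0, per_fte = 12000.0
```

Reporting value added per FTE, rather than jobs, follows Dr. Luria’s point that counting employment alone would reward inefficiency.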

New Questions about Productivity

A good evaluation also wants to know about productivity, he said, and the survey needs to be improved in at least two ways for this purpose. He offered his first suggestion in the form of a question: “I’m an SME, and my productivity increased 10 percent last year with the same labor input, but my sales did not go up. What am I missing?” The problem, he said, is that the survey does not ask companies whether they reduced their prices as they became more productive. “So there is a huge unmeasured customer surplus impact,” he said. “There needs to be a question like the following: ‘Thinking about products you made two years ago that you still make now, how much more expensive or cheaper are those products?’”

_______________

28The North American Industry Classification System, developed under the Office of Management and Budget in 1997, is used to classify U.S. businesses for various purposes.
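Dr. Luria’s productivity puzzle can be worked through arithmetically. If productivity rises 10 percent with the same labor input, output volume rises 10 percent; if revenue stays flat, average prices must have fallen. The Python sketch below computes the implied price change and a simple proxy for the benefit to customers: what they would have paid for the new volume at the old prices, minus what they actually paid. The $5 million sales figure is hypothetical, and the proxy ignores how demand responds to price, so it is not a full consumer-surplus calculation.

```python
def implied_price_change(volume_growth, revenue_growth):
    """Fractional change in average price implied by volume and revenue growth."""
    return (1 + revenue_growth) / (1 + volume_growth) - 1


def customer_savings(prior_revenue, volume_growth, revenue_growth):
    """Cost of this year's volume at last year's prices, minus what customers paid.

    A rough proxy only: it does not model how demand responds to lower prices.
    """
    cost_at_old_prices = prior_revenue * (1 + volume_growth)
    actual_cost = prior_revenue * (1 + revenue_growth)
    return cost_at_old_prices - actual_cost


# 10 percent more output from the same labor, with flat sales of a hypothetical $5 million.
price_change = implied_price_change(0.10, 0.0)    # about -0.091: prices fell roughly 9 percent
savings = customer_savings(5_000_000, 0.10, 0.0)  # about 500,000 passed on to customers
```

In this reading, the productivity gain has not vanished; it has shown up in customers’ prices rather than in the firm’s sales, which the current survey does not capture.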

A second question MEP needs to ask, he said, is whether a client’s productivity is rising faster than non-client productivity, other things being equal. The most recent survey does not answer that question in the affirmative, he said, but certain changes could make clearer what is happening. “But until we understand whether or not the reports of no productivity growth and no cost savings by clients are meaningful or are problems of measurement, we’re not going to know the answer to that second question. And we’re not even asking the first question. We want to know whether the MEP is helping make manufacturing bigger than it otherwise would be, and whether their clients are advancing compared to non-clients.”
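The second question, whether clients’ productivity rises faster than non-clients’, is at its simplest a comparison of average growth rates. The Python sketch below uses invented before-and-after values of value added per FTE. It deliberately omits the “other things being equal” part: a real evaluation would need to control for industry, size, and other differences, for example through matching or regression, which this sketch does not attempt.

```python
from statistics import mean


def average_growth(pairs):
    """Mean growth rate across firms, given (before, after) productivity pairs."""
    return mean(after / before - 1 for before, after in pairs)


# Hypothetical value added per FTE ($ thousands), before and after.
clients = [(80, 88), (100, 104), (60, 63)]
non_clients = [(90, 93), (70, 72), (110, 112)]

client_growth = average_growth(clients)          # about 0.063
non_client_growth = average_growth(non_clients)  # about 0.027
growth_gap = client_growth - non_client_growth   # about 0.037
```

A positive gap is only suggestive; without controls, it could reflect which firms choose to become clients rather than what MEP services do for them.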

In summary, Dr. Luria offered the following conclusions:

• The current evaluation system has been logical and consistent. It works “passably well” in generating “large-seeming sum-of-impacts” that generally help the program and motivate centers.

• However, claims of MEP impact need to be based on changes in value added and productivity. Failure to do so “invites a reasonable presumption of near-zero net impact.” The current evaluation does not address either question very well, he said, and does not tell centers what they should be doing to increase these outcomes. For example, he said, his center had reviewed which kinds of MEP interventions produced the largest reported increases in new sales. The results were that quality-based projects produced the largest increases, “lean” projects the next largest, and growth projects the least. “Now I don’t believe those results at some level,” he said. “But the problem is that our data don’t tell us what to do if we are striving for a certain impact.” He said that his center had designed a survey no longer than the current survey that would answer these two questions.

Robin Gaster

The National Academies

Dr. Gaster said that “what MEP has done with data is very impressive.” He said that in his experience with SBIR and other agency programs, none of the agencies collected data with “anything like the amount or the detail that MEP is using, so MEP deserves a lot of credit for that.” He also said the program deserved credit for its willingness to re-examine older data to see what needs to change. “That also is not something that’s characteristic of all federal agencies,” he said.

He said that a lot of what the MEP evaluation does is important, and that its three-tiered approach captured much of what is needed. He commended the new concept of the “balanced scorecard” as “clearly correct.”

Dr. Gaster also said that the discussion about the centers pointed to several key variables: inputs, outputs, capacity building, and process, “which is difficult to capture but very important.” Also desirable, he said, would be a metric on how stable the centers are. He agreed with Dr. Luria that the zero-sum problem of domestic and foreign sales was “really a deep problem, because you are in a state economy to start with and then in the U.S. economy, and you do have to find the value added, not just the extent to which you manage to cannibalize” from other firms.

A Need for Consistency in How Questions are Answered

Dr. Gaster said that Dr. Luria’s comment about the need for consistency in how questions are answered is important, as his own experience in designing a large questionnaire for SBIR companies had shown. “When you look at these things under a microscope,” he said, “they dissolve. You look more and more closely at exactly how companies answer questions, and consistency melts away. It’s very important to give them clear guidance.”

On the issue of moving toward more emphasis on innovation, he said he had some reservations. First, innovation can happen either quickly or slowly, and “you have to be prepared to capture both if that’s your target. I think the survey has to be able to capture outcomes that don’t happen in six months.” Second, he encouraged more investigation of the use of data. He mentioned in particular Dr. Luria’s comment about how data for different kinds of interventions were being matched with different outcomes.

How to Reach Companies Ready to Advance

In guidance for the centers, Dr. Gaster said that detailed differentiation of the interventions is important, as is differentiation of populations. For example, a company may want to adopt a green manufacturing strategy, but no one at the meeting had talked about what percentage of companies could realistically be expected to adopt a green strategy. Similarly, while there are data on the number of SMEs now exporting, the MEP would benefit from knowing how many more are interested and capable, even with help; this would be a much smaller number. A strategy designed to have every SME exporting in the near future is not realistic. “It would be a tremendous success to reach a significant portion of the companies that were ready,” he said. “But you need to know who they are, and for this we need to capture or develop some metrics for reaching them.”

Better metrics are also needed to bring the most appropriate strategy to each firm, he said. “Just cutting costs is great, and productivity is good. But are we taking a low road or a high road for this firm? I think it’s powerful to consider how to develop metrics that differentiate.”

Dr. Gaster concluded with a suggestion about the low barrier to entry into the program. For the SBIR program, he said, the success rate for the first round of funding is about 15 percent, and the success rate for the second round is 45 percent of that. So only about 7 percent of the applicants, not counting those who didn’t know about the opportunity, are deemed worthy of support. “It’s interesting to examine this program where basically you say, come on in, we’ll work with you ready or not. It may be worth developing a way to gauge the readiness of a company that comes in the door for help. This would also give you some kind of baseline for what they were like when they went out the door.”
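The SBIR figures compound multiplicatively: about 15 percent of applicants succeed in the first round, and 45 percent of those succeed in the second. The short Python check below simply makes that arithmetic explicit.

```python
first_round_rate = 0.15    # share of applicants funded in the first round (from the text)
second_round_rate = 0.45   # share of first-round winners funded in the second round

overall_rate = first_round_rate * second_round_rate  # about 0.0675
overall_pct = round(overall_rate * 100)              # 7 (percent of applicants)
```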

DISCUSSION

Dr. Shapira asked Mr. Yakimov whether the MEP should do evaluations that are “more encompassing but less frequent,” and why the centers went to the firms each quarter to pose the same questions as in the previous quarter. He also prompted Dr. Luria to comment on his experiments with redesigning the survey at the Michigan center.

Testing a New Questionnaire

Dr. Luria said he had created a spreadsheet in which the current questions and the recommended questions are embedded. It had been tested with four or five companies, all of which were able to answer every question. With only five tests to date, he could not yet comment on the frequency of outliers, but the medians were all strongly positive, unlike those of the current survey, which were typically zero.

Mr. Yakimov said that the centers currently report clients to the MEP at the end of the client interaction, and the client is surveyed six months after that. A survey after a year can be requested instead, and clients can be surveyed up to three times. “But we don’t consistently go back to the centers to ask the same clients the same questions.” The MEP is now embarking on a longitudinal evaluation and designing ways to incorporate such repeat questioning into it or into a future longitudinal evaluation.

He addressed Dr. Gaster’s suggestion that the MEP do a “pre-assessment” to determine whether a firm that is cutting costs is on the low or high road. He said that most of the centers have assessment tools they use when they first interact with a client that let them understand what the client’s challenges are. This information is not ordinarily used as a baseline, “because centers typically hold that information pretty close,” but “it’s something to think about in the long term.” Also, some of the new questions are meant to determine whether the firms have an innovation and growth strategy. “We want to concentrate much more on those that are willing to invest in themselves and grow. Also, we want to know if they just use the good ideas that are already on the market, or are they creating those new ideas.” Finally, another item is the quality of the CEO, “which is another strong indicator.”

The Long-term Value of MEP Services

Diane Palmintera said she was concerned about capturing the long-term value of MEP services. She mentioned the example of Georgia Tech, where the MEP program gives a small amount of money to the entrepreneurial venture lab to help with business development. “The impacts on startups you’re not going to see for many years, and it’s quite diluted. How can you capture the value of that?” Mr. Yakimov said the issue of mature vs. startup firms is something the MEP struggles with, as is the possibility that the MEP survey sometimes drives behavior. “I would say it’s definitely a place where we would love to hear the Academy’s input on how we can do it better.”