As long as there have been armies, there has been a need to assess soldiers’ capabilities and predict their likely performance. Speaking of the Army of Ephesus 2,500 years ago, Heraclitus observed:

Out of every 100 men, 10 shouldn’t even be there, 80 are just targets. Nine are the real fighters, and we are lucky to have them, for they make the battle. Ah, but the one. One is a warrior and he will bring the others back.

Jack Stuster, chair of the Committee on Measuring Human Capabilities, noted in his introductory comments to the workshop that, for thousands of years, one of the keys to successfully assembling an army has been selecting the right soldiers, that is, accurately predicting which individuals are likely to succeed and which are likely to fail. “In the modern era,” Stuster also said, “you have probably heard the adage that amateurs talk about tactics, while professionals talk about logistics. Well, [the military writer] Tom Ricks wrote recently that real insiders talk about personnel policy, because they know it provides the foundation for everything in the military.”

To set the stage for the workshop, the initial presentations provided invited participants and audience members with the past, present, and future context of military assessment and assessment science. Following Stuster’s opening remarks, Gerald Goodwin, representing the U.S. Army Research Institute for the Behavioral and Social Sciences (ARI), the study sponsor, provided his perspective on the purpose and need for this workshop as well as the larger study, to include the committee’s final report.

To provide an overview of the variety of research that the military may be able to use in improving its assessments, Fred Oswald spoke on the present and future of assessment science.

THE CONTEXT OF MILITARY ASSESSMENT

In providing the study sponsor’s perspective, Gerald Goodwin, chief of foundational science at ARI, offered context for the discussions to come with a brief description of the U.S. Army’s current assessments and anticipated directions for the future. The current assessments used by the Army, he noted, have their roots in classical test theory, which was developed in the early 1900s (although its axioms were not formally stated until much later; see Novick, 1966). From there, measurement theory slowly evolved until the current standard, item response theory (IRT), was developed in the 1950s and 1960s. Minor variants and additions have emerged along the way, such as generalizability theory, which partitions measurement variance into sources beyond “true” score and a single error term. But on the whole, Goodwin noted, IRT is today’s dominant theory for cutting-edge psychological assessments.
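To make the contrast between the two frameworks concrete, the following Python sketch (not drawn from the workshop; all item parameters and variance components are hypothetical) first computes a classical test theory reliability, which is a single number for the whole population. It then computes, under the two-parameter logistic (2PL) IRT model, a standard error of measurement that changes with the examinee's trait level θ. The 2PL is only one of several IRT models and is used here purely for illustration.

```python
import math


def ctt_reliability(var_true: float, var_error: float) -> float:
    """CTT: X = T + E, so reliability = var(T) / (var(T) + var(E))."""
    return var_true / (var_true + var_error)


def p_2pl(theta: float, a: float, b: float) -> float:
    """2PL IRT: probability of a correct response at trait level theta.

    a = item discrimination, b = item difficulty.
    """
    return 1.0 / (1.0 + math.exp(-a * (theta - b)))


def item_information(theta: float, a: float, b: float) -> float:
    """Fisher information of one 2PL item: a^2 * P * (1 - P)."""
    p = p_2pl(theta, a, b)
    return a * a * p * (1.0 - p)


def standard_error(theta: float, items: list[tuple[float, float]]) -> float:
    """Conditional standard error: 1 / sqrt(total test information at theta)."""
    total_info = sum(item_information(theta, a, b) for a, b in items)
    return 1.0 / math.sqrt(total_info)


# Hypothetical (discrimination, difficulty) pairs for a five-item test.
ITEMS = [(1.2, -0.5), (1.0, 0.0), (1.5, 0.3), (0.8, 1.0), (1.1, -1.0)]

# CTT gives one reliability for everyone (hypothetical variance components).
print(f"CTT reliability: {ctt_reliability(80.0, 20.0):.2f}")

# IRT gives a standard error that depends on where the examinee is on theta.
for theta in (-2.0, 0.0, 2.0):
    print(f"theta = {theta:+.1f}  SE = {standard_error(theta, ITEMS):.2f}")
```

With these hypothetical items, the standard error is smallest near θ = 0, where most item difficulties sit, and it grows toward both extremes. CTT summarizes the same test with a single reliability coefficient.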

“Given that item response theory first came onto the scene in the late 1950s, which is 50-plus years ago,” Goodwin observed, “What I am interested in is, what is next? What is the next wave that is going to drive psychological assessment for the next 50 years? Where is that coming from, and how do we start to codify that, so that we are moving in the direction of developing that theory and getting it to a robust state, so that we can all be using it for psychological assessments over the next 50 years?”

Today, the Army’s base assessment tool for cognitive assessment and vocational skills is the Armed Services Vocational Aptitude Battery, or ASVAB. It was originally developed in the 1970s, and in the 1990s it was updated for computerized adaptive testing as CAT-ASVAB (see Sands et al., 1999). Currently, the test includes nine sections of multiple-choice questions covering general science, arithmetic reasoning, mathematics knowledge, word knowledge, paragraph comprehension, electronics information, automotive and shop information, mechanical comprehension, and assembling objects.1 The pool of questions from which the test draws is renewed every couple of years.

In addition, Goodwin said, the military services recently began supplementing the ASVAB with personality assessments. The Army developed, in collaboration with Drasgow Consulting Group, the Tailored Adaptive Personality Assessment System (TAPAS), which assesses many of the facets of the Big Five personality traits (openness, conscientiousness, extraversion, agreeableness, and emotional stability; see Digman, 1990; Tupes, 1957; Tupes and Christal, 1961) and several of the subfacets, as well as a few personality measures that are specific to the Army. “That is really at a cutting-edge stage in terms of personnel assessment right now,” he said.

__________________

1See http://www.official-asvab.com/whattoexpect_rec.htm [July 2013] for the most up-to-date information on the content of the ASVAB.

In explaining the rationale for requesting this study, Goodwin noted that one of ARI’s missions is to be “the developer of the science underlying the personnel assessment and testing program for the Army.” Goodwin explained that he reviewed ARI’s basic research program in personnel assessment (for which he is responsible), as well as its applied program, and considered the dominant theories and measurements in use over the past 50 years or so. He then asked, “Given where we are, and the state of personnel testing in the military, can the committee provide recommendations for basic research in terms of scientific investment [to address the questions described in the following section]?” The workshop was thus intended and planned as the primary data-gathering opportunity on potential advances and areas of research the committee may subsequently consider in recommending future investments by ARI’s basic research program.

Potential Future Directions

Having provided an overview of the past and present context of military assessments, Goodwin then described how he imagines assessments of the future. He outlined his vision through several questions concerning basic scientific research, which he identified for discussion during the workshop and which the committee has been asked to address in its final report (at the completion of the study’s second phase).

New Constructs

First, because new constructs and concepts may allow the Army to develop better measures of performance, Goodwin asked, “What are new constructs that we should be measuring, and how do we think about constructs in potentially a different way?” From Goodwin’s perspective, it is important not to limit the search to scanning the psychological literature broadly for additional or different constructs. Instead, he is interested in stepping back and asking whether it would be valuable to think about individual differences in a different format, in order to identify new constructs not previously considered.

The reason for the Army’s interest, he said, is that there is a lot of work going on now in the areas of psychophysiology and neuroscience that “speak to individual differences in a different format and in a different language than how we talk about them in psychological science.” Can that work be used, he asked, to improve the current understanding of individual differences and to provide new avenues for improving the prediction of performance?

New Measurement Methods and Theory

Goodwin also explained that, simultaneously with the exploration of new constructs, the Army is looking for ways to improve its measurement of various factors.2 To consider the theories necessary as foundations for potential improvements, Goodwin asked, “What are the advances in psychometric theory that can help us get past some of the hurdles and the boundary conditions that exist in psychometric theory now?” As an example, he noted that both classical test theory and item response theory work best when each item measures only one construct. But what happens, he asked, when a single item measures more than one construct? “How do we … assign scores for the individual constructs when we have one item that is measuring multiple things? How do we create a test that would work with that?”
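Multidimensional IRT is one existing line of work on Goodwin's question. The Python sketch below assumes a compensatory multidimensional two-parameter logistic (M2PL) model, which is one of several multidimensional models, and uses hypothetical item parameters. It shows why a single item that measures two constructs cannot, by itself, yield separate scores. First, different combinations of the two traits produce identical response probabilities. Second, the item's information matrix is singular. Adding an item with a different loading pattern makes the two traits separately estimable.

```python
import math


def p_m2pl(theta: list[float], a: list[float], d: float) -> float:
    """Compensatory multidimensional 2PL: P = 1 / (1 + exp(-(a . theta + d)))."""
    z = sum(ai * ti for ai, ti in zip(a, theta)) + d
    return 1.0 / (1.0 + math.exp(-z))


def info_matrix(theta: list[float], a: list[float], d: float) -> list[list[float]]:
    """Fisher information matrix of one M2PL item: P(1 - P) * a a^T."""
    p = p_m2pl(theta, a, d)
    w = p * (1.0 - p)
    return [[w * ai * aj for aj in a] for ai in a]


def add(m1: list[list[float]], m2: list[list[float]]) -> list[list[float]]:
    """Element-wise sum of two matrices (item informations add up)."""
    return [[x + y for x, y in zip(r1, r2)] for r1, r2 in zip(m1, m2)]


def det2(m: list[list[float]]) -> float:
    """Determinant of a 2 x 2 matrix."""
    return m[0][0] * m[1][1] - m[0][1] * m[1][0]


# Hypothetical item that loads equally on two constructs (say, verbal and spatial).
MIXED = ([1.0, 1.0], 0.0)
# Hypothetical second item that loads mostly on the first construct.
MOSTLY_FIRST = ([1.5, 0.2], 0.0)

# Trading one construct off against the other leaves P unchanged, so the
# mixed item alone cannot say which construct produced a response.
for theta in ([0.0, 0.0], [1.0, -1.0], [-1.0, 1.0]):
    print(theta, round(p_m2pl(theta, *MIXED), 3))

origin = [0.0, 0.0]
single = info_matrix(origin, *MIXED)
combined = add(single, info_matrix(origin, *MOSTLY_FIRST))
# A zero determinant means the two trait scores are not separately estimable.
print("det, mixed item only:", det2(single))
print("det, with second item:", round(det2(combined), 4))
```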

A second way to improve measurement, according to Goodwin, is to develop new measurement methods. There are already a large number of different types of measurement methods, with new methods in development all the time. What else is out there that could help the Army develop better assessments? In particular, he asked, “What are the cutting-edge ideas that exist in the small pockets of science out there that haven’t hit the mainstream yet? We are looking for the good ideas, as places that we might be able to take personnel assessment in the future.” Of particular interest are “unobtrusive” methods of measurement—methods that measure behaviors or constructs while the individual is engaged in a complex task, without interfering in that task.

Future Applications

Finally, Goodwin explained that the Army is interested in moving beyond assessment for selection into the military and toward assessment for assignment to a particular team or unit. “Right now,” Goodwin said, “we predict individual performance and individual potential fairly well” with respect to selecting recruits into the military and to assigning occupational job categories. But the next step—assignment into a particular unit—will require additional thought on exactly what needs to be predicted.

__________________

2Various presenters and participants at the workshop used the terms “construct,” “factor,” and “trait” in ways that appear to make them near-synonyms. This summary has kept the terminology used by a particular speaker, but readers should bear in mind that these terms may be used interchangeably.

Is the goal to predict individual performance in a unit? It is not that simple, Goodwin observed, because individual performance in a unit depends on a number of other factors, including characteristics of other team members. Thus it is possible that the goal may be to predict group performance or unit performance. If so, he said, “How do we start from individual level scores and individual constructs to get to unit performance, and how do we better understand that process?” There is some research being done in the area now, but it is not yet mainstream. Thus, he asked, “What are the good ideas in this area that we [ARI] might be able to invest in, that might turn into a reality 15 to 20 years down the line?”
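Goodwin's question of how to move from individual scores to unit performance has no settled answer. One classic framing from small-group research (Steiner's task typology) distinguishes additive, conjunctive, and disjunctive tasks. The Python sketch below is a hypothetical illustration of that framing only, not an ARI method. It shows that two four-person units with the same average score can look very different depending on which composition rule the unit's task follows. The unit rosters are invented.

```python
from statistics import mean


def team_score(scores: list[float], task_type: str) -> float:
    """Compose individual scores into one unit-level score."""
    if task_type == "additive":      # contributions pool: the average matters
        return mean(scores)
    if task_type == "conjunctive":   # the unit is only as strong as its weakest member
        return min(scores)
    if task_type == "disjunctive":   # one strong member can carry the unit
        return max(scores)
    raise ValueError(f"unknown task type: {task_type!r}")


# Two hypothetical four-person units with the same mean standardized score (0.5).
UNIT_A = [0.5, 0.5, 0.5, 0.5]        # homogeneous
UNIT_B = [1.75, 0.75, -0.25, -0.25]  # one standout, two weaker members

for task in ("additive", "conjunctive", "disjunctive"):
    print(f"{task:12s} A = {team_score(UNIT_A, task):+.2f}  B = {team_score(UNIT_B, task):+.2f}")
```

Under the additive rule the two units tie. Under the conjunctive rule unit A is stronger, and under the disjunctive rule unit B is. Which rule, if any, fits a given unit's mission is the kind of empirical question Goodwin raised.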

Boundary Conditions

Ultimately, Goodwin cautioned, the adoption of any new assessment methods by the Army will require satisfaction of certain boundary conditions. First, testing methods must be mass administrable. The Army tests huge numbers of people—up to 400,000 each year—to bring about 200,000 into active service. “This is not something that we can do in a cost-inefficient way,” he said. “The Department of Defense … simply can’t afford it.”

Additionally, the tests must be administrable in an unproctored format or by personnel not skilled in highly technical test administration, such as military recruiters. “We can’t have tests that must be administered by folks who have advanced degrees in psychometrics or advanced degrees in neuroscience.” That leaves out such highly technical possibilities as using magnetic resonance imaging (MRI) exams as part of the assessment, he noted.

Furthermore, most if not all of the tests must be done in “pre-accessioning,” which is the period before a recruit joins the Army. “Again, this is a cost investment piece,” he explained. The cost to replace an individual who drops out of basic training is about $73,000 per person. “Using basic training as a selection venue isn’t workable because that is a huge cost investment.”

ARI, as a part of the scientific community, is tackling the challenge of assessment from a scientific perspective. Constructs considered for research and ultimately for application must be based upon fundamentally good scientific theory. “Understanding how the theory works—and how it works in concert with all of the other psychological theory out there—is critical to us,” Goodwin emphasized.

The final boundary condition Goodwin discussed is that any changes to the current assessment program should have the promise of maintaining, if not increasing, testing efficiency—that is, providing as much or more measurement in the same or less amount of time and with the same or greater predictive value. New methods should maintain or improve the cost-benefit balance. If the tests are more expensive, they will need to provide extra benefits that justify the added cost. Goodwin underscored the point that the Army will not, and cannot, pay more for the same or less value.
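Standard selection utility analysis offers one way to frame this cost-benefit requirement. The Python sketch below uses the Brogden-Cronbach-Gleser utility model, assuming top-down selection on a normally distributed predictor with a linear relationship to performance. Only the applicant and accession counts (about 400,000 tested and about 200,000 brought into service) come from Goodwin's remarks. The validity coefficients, the dollar value of one standard deviation of performance (SD_y), and the per-applicant testing costs are hypothetical placeholders, not Army figures.

```python
from statistics import NormalDist


def bcg_utility(n_applicants: int, n_selected: int, validity: float,
                sd_y: float, cost_per_applicant: float) -> float:
    """Brogden-Cronbach-Gleser utility of top-down selection for one cohort.

    Assumes a normally distributed predictor, a linear predictor-criterion
    relationship, and that every applicant is tested.
    """
    sr = n_selected / n_applicants                     # selection ratio
    z_cut = NormalDist().inv_cdf(1.0 - sr)             # predictor cutoff in z units
    mean_z_selected = NormalDist().pdf(z_cut) / sr     # average predictor z of selectees
    gain = n_selected * validity * sd_y * mean_z_selected
    return gain - n_applicants * cost_per_applicant


# Applicant and accession counts from Goodwin's remarks.
N_TESTED, N_ACCESSED = 400_000, 200_000
# Everything below is a hypothetical placeholder, not an Army figure.
SD_Y = 10_000.0                       # dollar value of 1 SD of job performance
current = bcg_utility(N_TESTED, N_ACCESSED, 0.40, SD_Y, 20.0)
costlier = bcg_utility(N_TESTED, N_ACCESSED, 0.45, SD_Y, 40.0)
same_validity = bcg_utility(N_TESTED, N_ACCESSED, 0.40, SD_Y, 40.0)

print(f"current test:              ${current:,.0f}")
print(f"costlier, more valid test: ${costlier:,.0f}")
print(f"costlier, same validity:   ${same_validity:,.0f}")
```

Under these placeholder values, the costlier test pays for itself because its validity gain outweighs the doubled testing cost. A costlier test with no validity gain simply loses the extra $8 million in testing costs. Real applications would need defensible estimates of validity and SD_y.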

THE PRESENT AND FUTURE OF ASSESSMENT SCIENCE

After Goodwin provided the past, present, and future context of military assessment, Fred Oswald, a professor of industrial and organizational psychology at Rice University in Houston, Texas, provided an overview of the current state and likely future directions of the science of assessment, with an eye toward areas that might be of particular interest to the military.

Domains of Performance

There are countless determinants of performance that are relevant to success in one’s job, Oswald noted. Key among them are malleable and nonmalleable skills; declarative and procedural knowledge; technical, social, physical, and psychomotor skills; and the motivation that guides the direction, intensity, and frequency of performance. To provide a general picture of the many different aspects of performance, Oswald offered a list of broad domains of performance with a few examples of criteria for each nonphysical domain:

- Foundational behaviors
  - Performs routine duties
  - Communicates effectively
- Technical/intellectual behaviors
  - Produces training or work products
  - Solves individual and team problems
- Leadership behaviors
  - Seeks internal and/or external strategic information
  - Delegates, sets goals, and motivates others
  - Develops work climate
- Intrapersonal behaviors
  - Demonstrates initiative
  - Perseveres under pressure
  - Shows commitment (versus counterproductive work behavior)
  - Handles work stress (versus withdrawal/attrition)
  - Follows rules/procedures
- Interpersonal behaviors
  - Helps others voluntarily
  - Provides emotional support
  - Offers technical support
  - Acts flexibly with teammates
- Physical behaviors

“You will see that the span of criteria and complexity is vast,” he said. “It spans technical and nontechnical domains. It spans across individuals and groups, … and there is a time dimension that goes through all of this, to make things even more complex.”

Given this vast range, Oswald observed, it is a difficult and complex problem to determine which types of performance are important in any given setting. “Not all of these criteria are important for any particular setting,” Oswald explained, “but I suspect that more than one criterion is important for [a] particular situation, and therefore [one is] faced with a multivariate case, and complexity begins as soon as you start talking about that.” Oswald believes the development of new criteria will only make this problem more challenging.

Determinants of Performance

To predict the likely performance of an individual or a group, Oswald noted, it is necessary to measure performance or various determinants of performance, such as knowledge, skills, or motivation. Some types of performance are malleable and may improve or decline over time, he observed, while others remain constant; the same is true for determinants of performance. Which types are malleable and which are not, according to Oswald, is something that generally should be tested empirically.

Any reliable performance measure of interest to the Army, according to Oswald, should be a function of declarative knowledge, procedural knowledge, motivation, or a combination of these three determinants. Declarative knowledge includes knowing facts, rules, and strategies—all things that can generally be tested with direct questioning. Procedural knowledge can be demonstrated in one way or another, though not necessarily questioned directly. The knowledge does not have to be explicit, Oswald noted. There are various sorts of implicit procedural knowledge, such as skill in driving a car with a stick shift, which has some psychomotor aspects to it.

Motivation, the third performance determinant, is the willingness and ability to apply the knowledge possessed. “Whether one is willing to perform,” Oswald explained, “depends on a number of different intrinsic and extrinsic factors that guide direction and choice, how intensely you perform, and how often you perform.”

Traditional Measurement

Next, Oswald turned toward the question of how to carry out complex measurements “where we are trying to pack many constructs in, in a short amount of time.” He discussed two approaches that are typically applied, one more traditional and one more modern. In discussing how these practical approaches can be integrated, he briefly considered the factor structure of each construct to be measured.

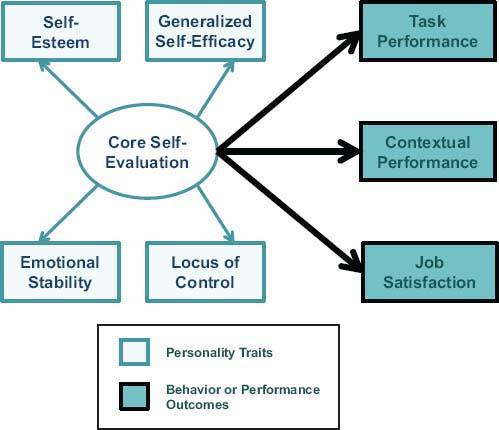

One way to carry out measurements on a number of constructs simultaneously, he said, is to examine a construct that is hierarchical in nature. One example of such a construct is core self-evaluation (CSE; defined as an individual’s appraisal of their self-worth and capabilities; see Chang et al., 2012, for a review), which is composed of four different personality traits: (1) self-esteem, (2) generalized self-efficacy, (3) emotional stability, and (4) locus of control (see Figure 2-1).

There are various approaches to measuring CSE. One could, for example, conduct a factor analysis on all the items used to measure the four individual constructs and extract the general factor; this general factor approach would result in a collection of items that are related to one another yet are sampled from across the four traits that contribute to the composite construct of CSE. However, with this approach, there is no guarantee that the items selected will represent each of the constituent traits evenly.

A second approach, a variant of the general factor approach that Oswald described as controlled heterogeneity, would sample deliberately from each of the four traits by conducting a factor analysis within each trait, identifying the items with the highest loadings within each of them, and then combining those items into a single CSE scale. Oswald explained that CSE provides a general factor that predicts a wide variety of behavior or performance outcomes, such as task performance, contextual performance, and job satisfaction. This is a very practical strategy that can serve a wide array of purposes.
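A toy example shows the difference between the first two approaches. The following Python sketch assumes that the factor analyses have already been run and simply takes hypothetical loadings as given; no real CSE item data are used. The general factor selection keeps the items with the highest general-factor loadings across the whole pool. The controlled heterogeneity selection keeps the highest-loading items within each trait.

```python
from collections import Counter
from typing import NamedTuple


class Item(NamedTuple):
    name: str
    trait: str
    general_loading: float  # loading on the general CSE factor (all items analyzed together)
    trait_loading: float    # loading from a factor analysis within the item's own trait


# Hypothetical loadings for a 12-item pool, three items per CSE trait.
ITEMS = [
    Item("SE1", "self-esteem", 0.72, 0.65),
    Item("SE2", "self-esteem", 0.70, 0.60),
    Item("SE3", "self-esteem", 0.68, 0.58),
    Item("GSE1", "generalized self-efficacy", 0.66, 0.70),
    Item("GSE2", "generalized self-efficacy", 0.52, 0.62),
    Item("GSE3", "generalized self-efficacy", 0.49, 0.55),
    Item("ES1", "emotional stability", 0.50, 0.75),
    Item("ES2", "emotional stability", 0.47, 0.71),
    Item("ES3", "emotional stability", 0.45, 0.60),
    Item("LOC1", "locus of control", 0.44, 0.68),
    Item("LOC2", "locus of control", 0.40, 0.66),
    Item("LOC3", "locus of control", 0.38, 0.50),
]


def general_factor_selection(items: list[Item], k: int) -> list[Item]:
    """Keep the k items with the highest general-factor loadings, regardless of trait."""
    return sorted(items, key=lambda it: it.general_loading, reverse=True)[:k]


def controlled_heterogeneity_selection(items: list[Item], per_trait: int) -> list[Item]:
    """Keep the top items *within* each trait, so every trait is represented."""
    chosen: list[Item] = []
    for trait in dict.fromkeys(it.trait for it in items):  # unique traits, in order
        pool = sorted((it for it in items if it.trait == trait),
                      key=lambda it: it.trait_loading, reverse=True)
        chosen.extend(pool[:per_trait])
    return chosen


general = general_factor_selection(ITEMS, 8)
balanced = controlled_heterogeneity_selection(ITEMS, 2)
print("general factor:          ", dict(Counter(it.trait for it in general)))
print("controlled heterogeneity:", dict(Counter(it.trait for it in balanced)))
```

With these invented loadings, the general factor selection draws eight items from only three of the four traits and omits locus of control entirely. That is the uneven representation the text warns about. The controlled heterogeneity selection takes two items from each trait by construction.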

An alternative to a general factor approach, Oswald noted, could be used when one particular outcome—job satisfaction, for example—is of primary interest. Then one could focus solely on the particular trait or traits underlying CSE that are theoretically and empirically predictive of that outcome—emotional stability, for example. This specific predictor approach makes it possible to look at a single relationship between predictor and criterion in a very refined way and perhaps then to build up to a broader look at the network of important relationships that emerge from this bottom-up approach.

FIGURE 2-1 Measurement approach for a traditional construct: core self-evaluation.

SOURCE: Oswald presentation.

A fourth approach begins with a top-down exploratory analysis, in which one seeks to determine which relationships are important before deciding whether to use a general factor or a specific predictor approach. To explore the possibilities, one would look for relationships between each of the four traits (self-esteem, generalized self-efficacy, emotional stability, and locus of control) and each of the three criteria (task performance, contextual performance, and job satisfaction). Then, based on the pattern of predictions, one could decide which approach, general factor or specific predictor, might be most useful. The decision will also depend on various practical constraints, such as the amount of time available to develop or administer the measures, Oswald noted.
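A small correlation table can illustrate the exploratory step. In the Python sketch below, the trait-criterion correlations and the common trait intercorrelation are hypothetical values chosen for illustration. The decision rule is to prefer a single trait only when it correlates more strongly with the criterion than the unit-weighted composite does. This rule is a deliberately simple stand-in for the judgment Oswald described, not a rule he proposed.

```python
import math

TRAITS = ["self-esteem", "generalized self-efficacy", "emotional stability", "locus of control"]
TRAIT_INTERCORRELATION = 0.5  # hypothetical; assumed equal for every pair of traits

# Hypothetical trait-criterion correlations (values follow the order of TRAITS).
VALIDITIES = {
    "task performance": [0.25, 0.25, 0.22, 0.20],
    "contextual performance": [0.20, 0.18, 0.22, 0.15],
    "job satisfaction": [0.10, 0.08, 0.35, 0.06],
}


def composite_validity(r_xy: list[float], r_xx: float) -> float:
    """Correlation of a unit-weighted sum of k standardized traits with a criterion.

    cov(sum, y) = sum of r_xy; var(sum) = k + k(k - 1) * r_xx when all
    intercorrelations equal r_xx.
    """
    k = len(r_xy)
    return sum(r_xy) / math.sqrt(k + k * (k - 1) * r_xx)


def recommend(criterion: str) -> str:
    """Toy rule: use the best single trait only if it beats the composite."""
    r_xy = VALIDITIES[criterion]
    r_composite = composite_validity(r_xy, TRAIT_INTERCORRELATION)
    best = max(range(len(r_xy)), key=r_xy.__getitem__)
    if r_xy[best] > r_composite:
        return f"specific predictor ({TRAITS[best]})"
    return "general factor (CSE composite)"


for criterion, r_xy in VALIDITIES.items():
    r_c = composite_validity(r_xy, TRAIT_INTERCORRELATION)
    print(f"{criterion:24s} composite r = {r_c:.3f}  best single r = {max(r_xy):.2f}"
          f"  -> {recommend(criterion)}")
```

In this invented pattern, the composite wins for task and contextual performance. For job satisfaction, emotional stability alone outpredicts the composite. In practice, the practical constraints mentioned in the text would also enter the decision.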

Moving beyond this specific example, Oswald pointed out that similar measurement approaches are applicable to any domain represented by a hierarchical structure. For example, when considering general cognitive ability (g), one deals with such contributing traits as math, spatial, and verbal abilities and may want to predict such criteria as overall job performance or effective communication skills. One is again confronted with the question of whether to use a general factor or specific predictor approach. If the objective is to predict an individual’s ability to create statistical models, for instance, should one use math ability as a specific predictor, or should one use an overall composite of the three traits because verbal expression may be essential to communicating the results from the models?

A similar situation arises in the personality domain, he noted, with respect to facets of personality as the factors for analysis. As an example, Oswald discussed conscientiousness as the broad general construct, with achievement striving, cautiousness, and orderliness as the facets contributing to that construct. Team-motivating behaviors, safety behaviors, and overall job performance are performance criteria that might be predicted by conscientiousness and these three personality facets.

Referring to the assessment of reliability for hierarchical traits, Oswald explained, “I call this ‘traditional measurement’ in quotes because we already have psychometric strategies to look at composite reliability. Classical test theory can apply here fairly readily, and this [factor analysis for hierarchical structures] has been addressed in the literature for decades.”
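The classical machinery referred to here includes a well-known formula (often attributed to Mosier) for the reliability of a weighted composite. It computes that reliability from the component variances, covariances, and reliabilities, assuming that measurement errors are uncorrelated across components. The Python sketch below applies the formula to four hypothetical, standardized CSE trait scales. The reliabilities and intercorrelations are invented for illustration.

```python
def composite_reliability(weights: list[float], cov: list[list[float]],
                          reliabilities: list[float]) -> float:
    """Reliability of a weighted composite under classical test theory.

    rel = 1 - sum_i w_i^2 * var_i * (1 - rel_i) / var(composite),
    assuming measurement errors are uncorrelated across components.
    """
    k = len(weights)
    var_composite = sum(weights[i] * weights[j] * cov[i][j]
                        for i in range(k) for j in range(k))
    error_var = sum(weights[i] ** 2 * cov[i][i] * (1.0 - reliabilities[i])
                    for i in range(k))
    return 1.0 - error_var / var_composite


# Hypothetical example: four standardized CSE trait scales, each pair correlated .50.
R = [[1.0 if i == j else 0.5 for j in range(4)] for i in range(4)]
scale_reliabilities = [0.80, 0.85, 0.78, 0.75]
unit_weights = [1.0, 1.0, 1.0, 1.0]

rel = composite_reliability(unit_weights, R, scale_reliabilities)
print(f"composite reliability: {rel:.3f}")
```

Because the four scales share true-score variance, the composite's reliability (about .92 here) exceeds that of any single scale.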

Future Measurement

Moving on, Oswald contrasted the traditional measurement approaches with future measurement approaches that might be required to reliably measure and study complex constructs like multitasking. Oswald noted that there are many definitions of multitasking, but they all involve the ability to shift attention effectively. Regardless of its definition, he said, the construct of multitasking is clearly complex. He asked, “How might we go about predicting it? It might require measures and technologies that go beyond classical test theory types of psychometric approaches and traditional approaches to measure development itself.”

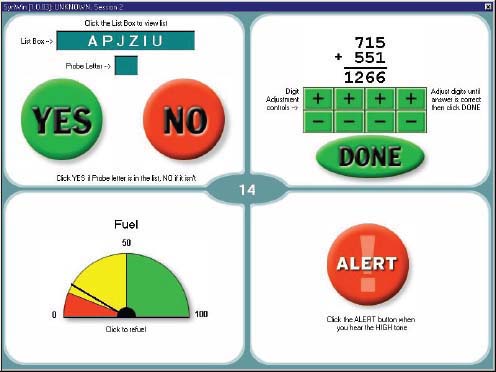

As a partial answer to that question, Oswald described some of his own multitasking research. The criterion was a computer monitoring task in which the subject had to monitor four quadrants of the computer screen simultaneously (as illustrated in Figure 2-2): a memory search task (upper left quadrant), an arithmetic task (upper right quadrant), a visual monitoring task (lower left quadrant), and an auditory monitoring task (lower right quadrant). Oswald’s goal was to understand what individual differences might underlie differences in performance on this task.

FIGURE 2-2 Example of an approach for measurement of multitasking.

SOURCE: Oswald et al. (2007, p. 7).

In Oswald’s research results (see Table 2-1), general cognitive ability was the strongest predictor of multitasking performance, he noted, but it was not the only important predictor. Several personality traits also predicted performance, but the strength of the correlation—and sometimes the direction—varied depending on the pace of the multitasking task. At a normal pace (see the row labeled Baseline in Table 2-1), it was possible to accomplish all the tasks presented. At an extremely fast pace (see the row labeled Emergency in Table 2-1), it was virtually impossible to complete all of the tasks.

Oswald added that at a normal pace, emotional stability was a strong predictor, such that “being calm and figuring out what was going on added incremental validity above ability.” In the fast-paced condition, “everybody was a little bit anxious, and therefore emotional stability was less of a predictor as a trait.” By contrast, conscientiousness had no correlation with performance in the normal-pace condition and had a negative relationship with task accomplishment in the fast-paced condition, presumably because greater attention to detail would make it harder to speed up performance to keep pace with the task.

TABLE 2-1 Correlations of Predictor Variables with Baseline Performance Versus Emergency Performance

| Performance Block | g | ES | E | O | A | C |
|---|---|---|---|---|---|---|
| Baseline | .25 | .21 | .01 | .13 | .01 | .00 |
| Emergency | .36 | .03 | .02 | .17 | –.08 | –.24 |

NOTES: A = agreeableness; C = conscientiousness; E = extraversion; ES = emotional stability; g = general cognitive ability; O = openness. Table values in boldface indicate statistical significance at p < 0.05.

SOURCE: Adapted from Oswald et al. (2007, p. 15).
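The standard formula for the squared multiple correlation of a criterion on two predictors makes the idea of incremental validity above ability concrete. The Python sketch below plugs in the g and emotional stability (ES) correlations from Table 2-1. The table does not report the correlation between g and ES, so the value used (.10) is a hypothetical placeholder. No significance test is attempted because the sample size is not given here.

```python
def r_squared_two_predictors(r_y1: float, r_y2: float, r_12: float) -> float:
    """Squared multiple correlation of y on two standardized predictors."""
    return (r_y1 ** 2 + r_y2 ** 2 - 2 * r_y1 * r_y2 * r_12) / (1 - r_12 ** 2)


def incremental_validity(r_y_ability: float, r_y_trait: float, r_12: float) -> float:
    """Delta R^2 from adding a personality trait to general cognitive ability."""
    return r_squared_two_predictors(r_y_ability, r_y_trait, r_12) - r_y_ability ** 2


R_G_ES = 0.10  # hypothetical g-ES correlation; not reported in Table 2-1

baseline = incremental_validity(0.25, 0.21, R_G_ES)   # g and ES, Baseline row
emergency = incremental_validity(0.36, 0.03, R_G_ES)  # g and ES, Emergency row
print(f"Delta R^2 for ES over g, baseline:  {baseline:.4f}")
print(f"Delta R^2 for ES over g, emergency: {emergency:.4f}")
```

Under that assumption, ES adds roughly 3.5 percentage points of explained variance over g at baseline and essentially none in the emergency condition. This result is consistent with the pattern Oswald described.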

The point of the example, Oswald said, was to show how these patterns of differential prediction can serve as ways of understanding the nature of a complex construct. “You might do this sort of pilot work before venturing further into a complex domain,” Oswald suggested.

Classical Versus Modern Psychometrics

Generally speaking, Oswald said, classical psychometrics tends to focus on “purer” constructs, unconditional reliability, and full-scale tests. By contrast, modern psychometrics tends to focus on complex constructs and conditional reliability, in which the reliability may depend on a person’s trait level or on where a subject is in the course of taking a test. “Conditional reliability gets modified over time,” he noted. In addition, instead of full-scale tests, part of the practice of modern psychometrics focuses on ipsative or partially ipsative scales. On these scales, a subject is given a forced choice (Do you prefer X or Y?) rather than rating both X and Y on a scale. Oswald believes this opens up new methods that could potentially produce better estimates. Modern psychometrics also makes use of adaptive tests, in which the test questions change in response to previous answers, often in order to tailor the test to the subject’s ability level.
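The adaptive testing idea, and the conditional reliability that comes with it, can be sketched in a few lines. The following Python example is a simplified illustration under stated assumptions, not a description of how CAT-ASVAB or TAPAS actually works. It assumes a hypothetical 21-item 2PL bank and selects each next item by maximum Fisher information at the current estimate. After each response, it updates an expected a posteriori (EAP) estimate of θ on a grid with a standard normal prior. The posterior SD it reports is the examinee-specific (conditional) standard error.

```python
import math
import random

# Quadrature grid for theta and an (unnormalized) standard normal prior.
GRID = [i / 10 for i in range(-40, 41)]
PRIOR = [math.exp(-t * t / 2) for t in GRID]

# Hypothetical 21-item 2PL bank: (discrimination a, difficulty b).
BANK = [(1.0 + 0.1 * (i % 5), -2.0 + 0.2 * i) for i in range(21)]


def p_2pl(theta: float, a: float, b: float) -> float:
    return 1.0 / (1.0 + math.exp(-a * (theta - b)))


def info(theta: float, a: float, b: float) -> float:
    p = p_2pl(theta, a, b)
    return a * a * p * (1.0 - p)


def eap(responses: list[tuple[float, float, bool]]) -> tuple[float, float]:
    """Expected a posteriori theta estimate and posterior SD on the grid."""
    posterior = []
    for t, weight in zip(GRID, PRIOR):
        for a, b, correct in responses:
            p = p_2pl(t, a, b)
            weight *= p if correct else 1.0 - p
        posterior.append(weight)
    total = sum(posterior)
    mean = sum(t * w for t, w in zip(GRID, posterior)) / total
    var = sum((t - mean) ** 2 * w for t, w in zip(GRID, posterior)) / total
    return mean, math.sqrt(var)


def run_cat(true_theta: float, n_items: int, rng: random.Random):
    """Administer n_items adaptively to a simulated examinee."""
    used: list[int] = []
    responses: list[tuple[float, float, bool]] = []
    theta_hat, se = 0.0, 1.0  # start at the prior mean and SD
    for _ in range(n_items):
        # Maximum-information rule: most informative unused item at theta_hat.
        idx = max((i for i in range(len(BANK)) if i not in used),
                  key=lambda i: info(theta_hat, *BANK[i]))
        used.append(idx)
        a, b = BANK[idx]
        correct = rng.random() < p_2pl(true_theta, a, b)  # simulated response
        responses.append((a, b, correct))
        theta_hat, se = eap(responses)
    return theta_hat, se, used


theta_hat, se, order = run_cat(true_theta=1.0, n_items=10, rng=random.Random(7))
print(f"estimate = {theta_hat:.2f}, posterior SD = {se:.2f}, items = {order}")
```

Operational programs add features this sketch leaves out, such as item exposure control and content balancing.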

In considering modern psychometric tests, Oswald said, it is important to keep in mind the principle of parsimony. “We can be lured by complex models, but from a scientific point of view, … complex models have to justify their departure from simpler models,” he warned. Echoing Gerald Goodwin’s earlier observations, Oswald emphasized that, if it is more expensive to use the more complex tests and technologies of modern psychometrics, then one should expect there to be a valuable payoff. This payoff could take the form of increased validity, but it could also come in the form of improved reliability, tests that are easier to adapt, better item security, improved ability to analyze subgroup differences, and so on.

Predictor Complexity

For Oswald, one of the more important differences between classical and modern psychometrics is in the level of predictor complexity. In classical psychometrics, the constructs—things like working memory, self-efficacy, interests, and personality factors—are purer and more determinant-like. That is, they correlate closely with one or more of the determinants of performance: declarative knowledge, procedural knowledge, or motivation.

By contrast, the complex measures of modern psychometrics involve amalgams of constructs. They are intended for such tasks as assessment of performance during multitasking activities and complex simulations, evaluation of work samples, and analysis of biodata. According to Oswald, it can be much harder to align them with the determinants of performance. Instead, they are more criterion-like, which makes it easier to apply them as measures relevant to various criteria. That can be a benefit in terms of providing greater validity, Oswald said.

The Big Picture

Continuing on, Oswald cautioned workshop participants that it is important to keep in mind that, whether the psychometrics being used are traditional or modern, the statistical concepts and practices underlying test development focus on just a small part of the broader context that is involved in understanding and predicting performance. To point out some of the things that are often missed, Oswald spoke briefly about what he called “the big picture.”

For example, validity studies are generally static: “You develop a new measure, you see whether it predicts a criterion down the road,” but often the data are all from one point in time. “I understand the constraints on a validity study firsthand,” Oswald said. “That may be all the data you have. But there is a bigger picture, whether you have the data to research it or not, and that is that dynamics are at play. Performance does change over time.” To the extent that it is possible to get data over time, such data can be quite valuable—not just of scientific value but also of practical value, as they allow an examination of changes in performance, commitment, attrition, and other criteria over time.

In addition, selection research typically reports validity coefficients that reflect direct effects between predictors and criteria—between working memory and job performance, for instance, or between interests and attrition. But there are a variety of mediators and moderators that lie between the predictors and the criteria and that play a role in explaining their relationships. Key explanatory variables include such things as organizational culture, management and leadership, team dynamics, situational stability, intrinsic and extrinsic rewards, and training knowledge. “It is not a black box,” Oswald said. “It might be a black box in the sense that you might not [in a single study] be able to have all of these things [variables] for everybody at all points in time, but you might have enough people, at some points in time, to examine a mediating effect and get at this critical question [for selection] of whether the direct effect is really where all the prediction lies, or is the validity there because of the explanatory mediating effect?”
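Mediation analysis is the usual way to examine the question of whether validity reflects a direct effect or a mediated one. The Python sketch below estimates a single-mediator path model by ordinary least squares on simulated data. The variable roles and the generating coefficients are hypothetical: a predictor score, training knowledge as the mediator, and job performance as the criterion. They are chosen only to illustrate how a total predictor-criterion relationship splits into direct and indirect parts.

```python
import random


def cov(u: list[float], v: list[float]) -> float:
    """Sample covariance."""
    mu, mv = sum(u) / len(u), sum(v) / len(v)
    return sum((x - mu) * (y - mv) for x, y in zip(u, v)) / (len(u) - 1)


def mediation(x: list[float], m: list[float], y: list[float]) -> dict[str, float]:
    """Single-mediator path analysis via OLS: X -> M -> Y plus direct X -> Y."""
    sxx, smm, sxm = cov(x, x), cov(m, m), cov(x, m)
    sxy, smy = cov(x, y), cov(m, y)
    a = sxm / sxx                             # X -> M
    det = smm * sxx - sxm ** 2
    b = (smy * sxx - sxy * sxm) / det         # M -> Y, controlling for X
    c_prime = (sxy * smm - smy * sxm) / det   # X -> Y, controlling for M (direct effect)
    c = sxy / sxx                             # X -> Y ignoring M (total effect)
    return {"a": a, "b": b, "indirect": a * b, "direct": c_prime, "total": c}


# Hypothetical simulated data: X = predictor score, M = mediator
# (e.g., training knowledge), Y = criterion (e.g., job performance).
rng = random.Random(42)
N = 5_000
x = [rng.gauss(0.0, 1.0) for _ in range(N)]
m = [0.5 * xi + rng.gauss(0.0, 1.0) for xi in x]
y = [0.4 * mi + 0.1 * xi + rng.gauss(0.0, 1.0) for xi, mi in zip(x, m)]

for name, value in mediation(x, m, y).items():
    print(f"{name:9s} {value:+.3f}")
```

By construction, about two-thirds of the total effect in this simulation runs through the mediator. In OLS, the total effect equals the direct effect plus the indirect effect exactly. Applied work usually also reports a bootstrap confidence interval for the indirect effect, which this sketch omits.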

Finally, selection and classification—the focus of psychometric efforts—are part of a much larger organizational and even societal picture. Formal education and recruitment are part of a funnel that brings individuals into the Army, where they are subject to selection and classification and then to training and mentoring, which ultimately leads to individual and group performance. Then, based on performance, there is promotion, transfer, and transition. “Because selection is a critical part of this chain, selection is affected by this broader picture, and it affects the broader picture as well,” Oswald said. Therefore, he concluded, investment in an expanded and integrated systems approach to personnel selection and classification could provide great value.

In the discussion that followed, committee member Randall Engle expressed the opinion that essentially all of the constructs that Oswald spoke about—self-efficacy, working memory, and so on—are more or less poorly understood in some ways. He asked Oswald whether some subconstructs might be more important than others. Are there constructs that might either supplement or supplant the current ones in terms of their importance? It would seem that these sorts of things are important to understand, he said, and that it would be useful to have some sort of process for developing new and better understanding of the constructs.

Oswald agreed. “One danger of complex measurement is that you potentially obtain validity without that understanding,” he said. “By having a high validity, you can get stuck at the level of technology [the finding] and not the theory that is driving it.” He added that one benefit of understanding constructs better is that it helps one develop complex measures better.

Patrick Kyllonen, also a member of the committee, commented that Oswald had spoken a great deal about the relationships between constructs. He asked whether there might be cases where the measurement itself is as important as, if not more important than, the construct it is measuring. “For example,” he said, “we all might agree that conscientiousness as a construct is extremely important for success everywhere—in education, the workforce. Yet, the way we measure conscientiousness primarily is with these rating scales….We are putting a lot of weight for a very important construct on this very simplistic measurement technique.” He asked what might be the expected “bang for the buck” in going after measurement methods, as opposed to figuring out such things as the relative weight of conscientiousness versus CSE.

Both avenues are important, Oswald replied, and he agreed with Kyllonen that it is important to pay attention to new and better measures.

Committee member Leaetta Hough added that while she agreed that it is important to address the measurement issue, she thought it would be premature to ignore constructs. “It wasn’t so long ago that we did not have conscientiousness or hard work as part of our performance models,” she said. “There may very well be other constructs out there that are important, too.”

Oswald responded that the two issues, measurement and constructs, are complementary. “The more we think about measurement, the more we have fidelity to the construct of interest. We need to have a deep conceptual understanding of our construct before we can go out substantiating it in one way or another.”

REFERENCES

Chang, C., D.L. Ferris, R.E. Johnson, C.C. Rosen, and J.A. Tan. (2012). Core self-evaluations: A review and evaluation of the literature. Journal of Management, 38(1):81-128.

Digman, J.M. (1990). Personality structure: Emergence of the five-factor model. Annual Review of Psychology, 41(1):417-440.

Novick, M.R. (1966). The axioms and principal results of classical test theory. Journal of Mathematical Psychology, 3(1):1-18.

Oswald, F.L., D.Z. Hambrick, L.A. Jones, and S.S. Ghumman. (2007). SYRUS: Understanding and Predicting Multitasking Performance (NPRST-TN-07-5). Millington, TN: Navy Personnel Research, Studies, and Technology.

Sands, W.A., B.K. Waters, and J.R. McBride. (1999). CATBOOK Computerized Adaptive Testing: From Inquiry to Operation (Study Note 99-02). Alexandria, VA: U.S. Army Research Institute for the Behavioral and Social Sciences.

Tupes, E.C. (1957). Personality Traits Related to Effectiveness of Junior and Senior Air Force Officers (USAF Personnel Training Research, No. 57-125). Lackland Air Force Base, TX: Aeronautical Systems Division, Personnel Laboratory.

Tupes, E.C., and R.E. Christal. (1961). Recurrent Personality Factors Based on Trait Ratings (ASD-TR-61-97). Lackland Air Force Base, TX: Aeronautical Systems Division, Personnel Laboratory.