Getting from Regulatory Compliance to Genuine Integrity: Have We Looked Upstream?

BRIAN C. MARTINSON

HealthPartners Institute for Education and Research

Introduction

My goals for this paper are (1) to discuss organizational climates as an important backdrop for the success of science and engineering ethics practice and education, and (2) to describe a recently validated climate assessment tool, the Survey of Organizational Research Climate (SORC), that organizational leaders can use to assess their own local climates and to motivate, target, and inform efforts to cultivate integrity in their institutions.

Organizational self-assessment is one form of the “looking upstream” mentioned in the title of this paper. What does it mean to “look upstream” and why might this be helpful for institutional leaders wishing to foster and maintain the integrity of research in their institutions? Among other things, it means looking beyond the individual researcher or scientist to understand the contexts in which they operate. It also implies that efforts to educate individuals in the responsible practice of research, by themselves, represent an incomplete and inadequate response. Some context may help to illustrate the idea.

Let’s consider the following propositions from the 2010 report of the Council of Canadian Academies (Council of Canadian Academies and the Expert Panel on Research Integrity 2010, p. 48, Box 3.2):

• Fabrication or falsification of data and plagiarism (FF&P) are rare but serious threats to the integrity of research and represent scientific misconduct as defined by the US government (OSTP 2000);

• Eradicating FF&P is primarily a matter of quickly catching and sanctioning individual “bad apple” researchers who engage in such behavior; and

• This is the primary means of ensuring integrity in research.

Just 25 years ago these statements would have been largely uncontroversial among most leaders of the US science community. That FF&P represent federally defined research misconduct is merely a statement of fact. However, can the assertion of the rarity of such behavior or the subsequent propositions be taken at face value? While some in the science community today would still readily endorse each of these propositions as factual, the accumulation of experience and evidence over the past 25 years has raised questions about their veracity.

Now let’s consider a completely unrelated but parallel set of propositions, again from the Council of Canadian Academies report:

• Mesothelioma is a rare but serious type of cancer that often affects the lung;

• Eradicating this type of cancer is primarily a matter of quickly identifying and treating (through surgery, radiation, and chemotherapy) “bad” or damaged mesothelial cells; and

• This is the primary means of ensuring lung health.

While the first proposition here seems incontrovertible, cancer researchers, those familiar with epidemiology, and others will quickly recognize that the second and third statements miss some important factors and should not be taken at face value. These propositions assert a tertiary prevention approach to dealing with mesothelioma that completely overlooks primary and secondary prevention efforts. One can choose to see mesothelioma as a problem of damaged cells in isolation from the systems of which they are a part, but such a limited perspective does not help at all in terms of understanding the etiology, causes, or prevention of such cell damage. One specific problem with this approach is that it misses the fact that environmental exposure to asbestos is a well-established risk factor for mesothelioma. It also misses threats to lung health other than mesothelioma.

A more comprehensive approach to ensuring lung health would include primary prevention efforts, such as reduced exposure to asbestos, tobacco smoke, and other airborne particulates, together with secondary prevention efforts, such as population surveillance and screening, and would address threats to lung health other than mesothelioma. In other words, an appropriate understanding and response to ensuring lung health requires that one “look upstream” to understand the systemic aspects of the problem, attending to causally implicated environmental exposures and how they might be avoided or mitigated. Once diagnosed, the proper treatment of mesothelioma remains important, but in itself, without the application of other appropriate preventive measures, is an entirely insufficient approach to ensuring lung health more broadly.

In very much the same way, an appropriate understanding of integrity in research and actions to ensure it require that we look upstream. Guarding vigilantly against malfeasant individuals involved in the research process and properly handling cases of FF&P when they are discovered are clearly worthy endeavors, but in themselves they are insufficient to ensure integrity in research. In this context, looking upstream means attending to relevant environmental exposures, including the social, psychological, and economic conditions in and around the organizations in which researchers work.

One can consider the character of an organization in terms of its ethical culture or climate. In common usage when discussing organizations, the terms culture and climate are often used haphazardly and without clear definition. Yet the nature and meaning of these overlapping but distinct concepts have been studied and hotly debated for more than 50 years among students of industrial/organizational psychology and organizational behavior (Landy and Conte 2010). This is not the place to plumb the depths of this conversation, but briefly, in my use of the term organizational climate, I primarily have in mind what Schein would refer to as the first level of organizational culture: the visible organizational structure and processes that represent “artifacts” of the organizational culture (Schein 1991), which itself represents shared but underlying and often unspoken patterns of values and beliefs held by organizational members.

Having started by identifying US government–defined scientific misconduct, it is also useful to briefly consider what we mean by the term “research integrity.” I posit that, just as lung health is about more than the absence of mesothelioma, integrity in research must encompass more than just the absence of a small but pernicious set of behaviors (FF&P). One useful definition comes from a 2010 report of the Irish Council for Bioethics (p. 6):

upholding standards in research refers to the application of particular ethical (and personal) values. Values that cannot, and should not, be separated from the research enterprise. Taken collectively, these core values encompass the concept of research integrity.…

Until fairly recently, the concept of “research integrity” had been largely defined in terms of its absence, with no real consensus statements about what the core values are that encompass the concept. That changed in October 2010 when no fewer than three largely independent but overlapping definitions appeared nearly simultaneously on the world stage. One comes from the report of the Irish Council for Bioethics cited above, Recommendations for Promoting Research Integrity (Irish Council for Bioethics, Rapporteur Group 2010). The second comes from a report, also referenced above, of the Council of Canadian Academies, Honesty, Accountability and Trust: Fostering Research Integrity in Canada (Council of Canadian Academies and the Expert Panel on Research Integrity 2010). The third definition, from the Second World Conference on Research Integrity, held in Singapore in July 2010, is called The Singapore Statement on Research Integrity (Steneck and Mayer 2010). More recently, a fourth definition was put forth by the InterAcademy Council of the InterAcademy Panel, in their November 2012 report, Responsible Conduct in the Global Research Enterprise: A Policy Report (InterAcademy Council and IAP 2012). All four definitions are grounded in a statement of core values or principles that most reasonable people would endorse as fundamental.

• From the Irish Council for Bioethics: Honesty, Reliability and accuracy, Objectivity, Impartiality and independence, Open communication, Duty of care, Fairness, and Responsibility for future science generations

• From the Council of Canadian Academies: Honesty, Fairness, Trust, Accountability, and Openness

• From the Singapore Statement on Research Integrity: Honesty, Accountability, Professional courtesy and fairness, Good stewardship

• From the InterAcademy Council report: Honesty, Fairness, Objectivity, Reliability, Skepticism, Accountability, Openness

Beyond brief definitions of the listed values, each source identifies best practices that, if observed diligently, represent its specific vision of science conducted with integrity. In each case, these practices are directed largely toward individual scientists and researchers. Importantly, however, each source, to a greater or lesser extent, also identifies institutional responsibilities for ensuring the integrity of research. The 2012 report of the InterAcademy Council provides the most fully articulated list, with specific responsibilities identified for multiple institutional entities, including universities and other research institutions; public and private sponsors of research; and professional societies, journals, national academies of science, and interagency entities (InterAcademy Council and IAP 2012).

With these values in mind, as we define the state of ideal “health” for the science enterprise, we must look upstream to consider the systemic and local institutional factors that influence the extent to which the behavior of scientists evinces such health.

Looking Upstream

So, what can leaders of universities and other research institutions do to focus locally on factors that influence the integrity of science? For this we need to consider the local institutional climates within which scientists work. A useful resource for guidance in such an effort is the 2002 US Institute of Medicine (IOM) report, Integrity in Scientific Research: Creating an Environment That Promotes Responsible Conduct, to which I will refer as the 2002 IOM report.

The 2002 IOM report explicitly recognized the role of the local environment—the lab, the department, the university—in shaping the behavior of scientists. This is important because, unlike structural issues, the local organizational environment is something over which institutional leaders do have some authority and influence. Moreover, as I stated at the outset of this paper, I believe that the organizational settings and climates within which science and engineering ethics education takes place are key to the success of such an endeavor.

The 2002 IOM report promoted a performance-based, self-regulatory approach to fostering research integrity and made several specific recommendations to institutions seeking to create environments that promote responsible research conduct and foster integrity:

(1) establish and continuously measure their organizational structures, processes, policies, and procedures;

(2) evaluate the institutional environment supporting integrity in the conduct of research; and

(3) use the knowledge gained for ongoing improvement efforts.

These recommendations, along with the conceptual model at the heart of the 2002 IOM report, provide the primary basis and rationale behind the climate assessment tool I describe below, the Survey of Organizational Research Climate, and recommendations my collaborators and I have made for its use in a reporting and feedback system to organizational leaders.

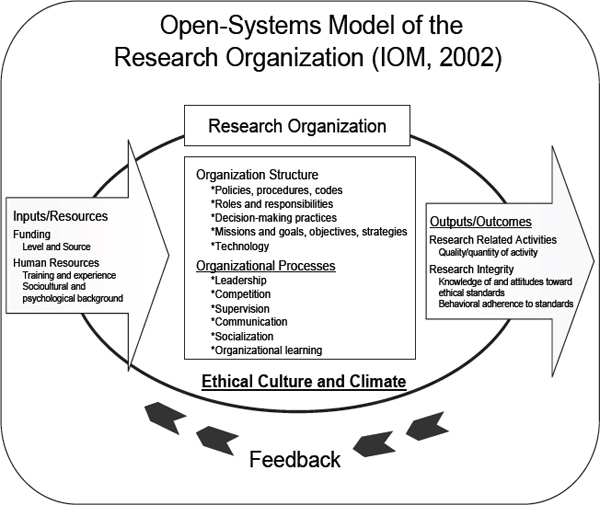

Chapter 3 of the 2002 IOM report includes a conceptual diagram (p. 51) that describes the “open systems model” of the research organization. This model explicitly acknowledges that what happens in organizations is in part a function of the inputs and resources available to them but that an organization’s outcomes and outputs—including the quality and integrity of the researchers and their research products—are also a function of the character of the organization itself. The IOM diagram (Figure 1) illustrates a dynamic system that has at its center the climate and culture of the research organization itself. This system takes inputs from the outside world (in the form of human resources, funding stream sources and levels, etc.) and its outputs take the form of both researchers and research-related activities, including the quality of both. I find this framing particularly helpful because it signals to organizational leaders that research integrity, and efforts to ensure it, are key indicators of the quality of their products, not merely boxes to check off to satisfy regulatory compliance requirements. The IOM conceptual framework thus draws attention to the central importance of organizational climates, in terms of structures, processes, policies, and practices.

FIGURE 1 IOM Conceptual Model

Assessment and Feedback Are Effective Mechanisms for Promoting Organizational Change

Since the publication of the IOM report on patient safety in health care, To Err Is Human (Kohn et al. 2000), extensive discussions and ongoing initiatives have aimed to shift the practice of medicine away from a culture of “shame and blame” and to create a culture of patient safety in medicine, particularly in hospital settings. Clear parallels exist between such patient safety initiatives and efforts to promote ethical climates in organizations (Fryer-Edwards et al. 2007; Sexton et al. 2006; Dyrbye et al. 2010; Wasserstein et al. 2007).

In a 2010 commentary, Dr. Lucian Leape makes the case for and against three methods for encouraging hospital engagement in safer practices to avoid infections (Leape 2010): regulation and accreditation, financial incentives, and reporting and feedback. He identifies reporting and feedback as the most potent of these, with more equivocal benefits for the other methods. Leape presents the National Surgical Quality Improvement Program (NSQIP), pioneered by the Department of Veterans Affairs in the 1990s, as an excellent example of how such a reporting and feedback system improved clinical care in the VA. In this system, the VA gathered information on the performance of surgical services that was then summarized into self- and comparative performance reports and fed back to the VA surgical services to inform surgeons about their own absolute performance as well as their performance relative to other surgeons. Changes resulting from this process included large improvements in below-average units and systemwide declines in complication and mortality rates (Leape 2010).

I believe that applying this kind of reporting and feedback process can be similarly effective for promoting improvements in research integrity climates at universities and other research institutions. A key distinction is that, unlike the methods focused on compliance with external regulatory forces, such as regulation, accreditation, or the use of financial incentives, the type of reporting and feedback system I propose is grounded in generating the knowledge and motivation that will support genuine self-regulation in organizations.

A Tool for Organizational Self-Assessment: The Survey of Organizational Research Climate (SORC)

At the time of the 2002 IOM report, no gold-standard measures of institutional environments existed to facilitate a proactive, self-regulatory approach to research integrity. Beginning in 2006, partly in response to the IOM report, Carol Thrush and colleagues began developing a survey-based instrument based largely on the IOM’s conceptual framework (Vander Putten and Thrush 2006; Thrush et al. 2007). My own collaboration with Dr. Thrush began shortly thereafter, and our subsequent work has led most recently to the development and validation (content, criterion, and predictive validity) of the Survey of Organizational Research Climate, which measures key institution-level factors to deal with threats to research integrity (Martinson et al. 2012; Crain et al. 2012).

The SORC is a self-assessment tool for organizations to gauge employee perceptions of responsible research practices and the state of an organization’s research climate. It is appropriate for use in a broad range of fields/disciplines and across multiple positions (e.g., graduate students, postdoctoral fellows, faculty, research scientists). The SORC provides institutional leaders with both baseline assessments of the research integrity climate and metrics to assess aspects of climate that are mutable and/or subject to change in response to organizational change initiatives aimed at promoting research integrity. By focusing on measurement of organizational structures and processes, the SORC is distinct from other recent efforts to measure “ethical work climates,” which have focused primarily on tapping the moral sensitivity and motivations of organizational members (Arnaud 2010).

Our primary validation of the instrument was conducted with a sample of faculty and postdocs from academic medical centers at 40 top-tier research universities in the United States. Secondary, external validation data are from the Council of Graduate Schools’ Project on Scholarly Integrity, for which a preliminary, prevalidated version of the instrument was used to assess the research integrity climates of seven participating universities (see paper by Julia Kent in this publication).

The SORC consists of seven subscales to assess mutable aspects of universitywide and department-specific climates that might be targeted for change:

• quality of regulatory oversight activities by institutional review boards (IRBs) and institutional animal care and use committees (IACUCs) (3 question items)

• quality and availability of resources pertaining to the responsible conduct of research (RCR) (6 items)

• extent to which research integrity norms exist in departments/centers (4 items)

• extent to which activities take place to socialize researchers into these norms (4 items)

• quality of advisor-advisee relations (3 items)

• reasonableness of research productivity expectations in departments/centers (2 items)

• extent to which factors in the local environment may inhibit research integrity (6 items)

The instrument is sufficiently short (32 question items total) that it can be used in its entirety or, if there are particular areas of interest, specific subscales can be selected for use. The survey measures individual perceptions of responsible research practices and conditions in local environments, as illustrated in the following sample questions:

• How committed are the senior administrators at your university (e.g., deans, chancellors, vice presidents) to supporting responsible research?

• How consistently do administrators in your department (e.g., chairs, program heads) communicate high expectations for research integrity?

• How effectively are junior researchers socialized about responsible research practices?

• How effectively do the available educational opportunities at your university teach about responsible research practices (e.g., lectures, seminars, web-based courses)?

• How respectful to researchers are the regulatory committees or boards that review the type of research you do (e.g., IRB, IACUC)?

• How true is it that people in your department are more competitive with one another than they are cooperative?

The measures themselves do not inform us about individuals’ behavior or performance, but from our validation study we know that the measures of climate correlate as expected with self-reported measures of research-related behavior ranging from the ideal to the undesirable (Crain et al. 2012). Moreover, by aggregating individual responses in organizational subunits (e.g., departments), the SORC enables meaningful assessments of group-level perceptions of an organization’s environmental conditions.

Feedback of SORC Data to Organizational Leaders

In keeping with the notion that this reporting and feedback system is designed for “internal consumption” of the information, once responses to the SORC instrument have been collected from the appropriate segments of an organization’s membership, the data must be summarized and prepared for feedback to department chairs, deans, program leaders, and others in organizational leadership positions. Given the competing demands on these leaders’ time and attention, it is important to summarize the data in readily digestible formats. Appropriate aggregation of data by organization and organizational subunit is also necessary to protect the identity of individual respondents.
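The aggregation step described above can be sketched roughly as follows. This is a minimal illustration, not the procedure used for the SORC reports: the function name `department_scale_means`, the input layout, and the minimum group size are all hypothetical, and the paper does not state what reporting threshold, if any, was applied.

```python
from collections import defaultdict
from statistics import mean

# Hypothetical reporting threshold; the paper does not specify one.
MIN_GROUP_SIZE = 5


def department_scale_means(responses, min_group_size=MIN_GROUP_SIZE):
    """Average one climate-scale score per department.

    responses: iterable of (department, scale_score) pairs, one per respondent.
    Returns {department: (n, mean_score)}. The mean is replaced by None when
    fewer than `min_group_size` people responded, so that small groups are
    not reported in a way that could identify individuals.
    """
    by_dept = defaultdict(list)
    for dept, score in responses:
        by_dept[dept].append(score)

    summary = {}
    for dept, scores in by_dept.items():
        n = len(scores)
        avg = round(mean(scores), 2) if n >= min_group_size else None
        summary[dept] = (n, avg)
    return summary


# Made-up responses for two departments, with a low threshold for the demo.
example = [("A", 4.0), ("A", 4.5), ("A", 3.5), ("B", 5.0)]
result = department_scale_means(example, min_group_size=3)
```

In this made-up example Department B has a single respondent, so its mean is suppressed while its respondent count is still reported.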

Table 1 provides an example of a “dashboard” report that might be used to convey the survey results. In this example, we obtained responses from 16 members of department “A,” which has been anonymized with respect to both institution and field of study. The rows display the seven climate scales and a score for global climate of integrity. The columns show the department’s average scores on the SORC scales and its percentile ranking, providing comparative data for this department relative to others in this institution. The four columns in the right-hand panel of the table present further comparative data, with the relative climate scores for this department compared to the scores of others aggregated across multiple universities.

TABLE 1 Example summary report for anonymous “Department A”

Graduate Program: Department A. N of Cases: 16. Field of Study and Broad Field of Study: (anonymized).

Columns 2 to 4 report Your Department’s Results; columns 5 to 8 report Comparative Results (Relative to Avg).

| Integrity Climate Scales | Average Score | Percent ≥ 4.5 (Scale of 1-5) | Program’s Percentile Rank | All Depts 75th Percentile | Field of Study Average | Broad Field of Study Average | University Average |
| --- | --- | --- | --- | --- | --- | --- | --- |
| Integrity Norms | 4.15 | 16.7% | 43 | 4.28 | 4.15 | 4.17 | 4.17 |
| Integrity Socialization | 3.63 | 15.4% | 64 | 3.73 | 3.63 | 3.50 | 3.52 |
| Integrity Inhibitors | 4.42 | 54.5% | 93 | 4.16 | 4.42 | 4.03 | 3.94 |
| Advisor-Advisee Relations | 4.04 | 21.4% | 71 | 4.06 | 4.04 | 3.89 | 3.90 |
| Departmental Expectations | 4.00 | 35.7% | 64 | 4.04 | 4.00 | 3.70 | 3.83 |
| Regulatory Quality | 3.90 | 28.6% | 73 | 3.91 | 3.90 | 3.72 | 3.72 |
| Integrity Resources | 3.43 | 33.3% | 52 | 3.63 | 3.43 | 3.43 | 3.42 |
| Global Climate of Integrity | 4.47 | 60.0% | 75 | 4.47 | 4.47 | 4.26 | 4.34 |
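As a rough illustration of how the three department-level columns of such a dashboard might be derived, the sketch below computes an average score, the percent of respondents scoring at or above 4.5, and a percentile rank against other departments. The helper names and all input numbers are hypothetical, and the percentile definition (share of peer departments scoring strictly lower) is an assumption; the paper does not say how the ranks in Table 1 were calculated.

```python
from statistics import mean


def percent_at_or_above(scores, cutoff=4.5):
    """Percent of respondents whose scale score is at or above the cutoff."""
    return 100.0 * sum(s >= cutoff for s in scores) / len(scores)


def percentile_rank(value, peer_values):
    """Percent of peer values strictly below `value` (assumed definition)."""
    return 100.0 * sum(v < value for v in peer_values) / len(peer_values)


# Hypothetical inputs: one department's respondent scores on a single scale,
# and the mean scores of other departments on the same scale.
dept_scores = [4.0, 4.5, 5.0, 3.5]
peer_means = [3.8, 4.0, 4.1, 4.3, 4.4]

row = {
    "average": round(mean(dept_scores), 2),
    "pct_high": percent_at_or_above(dept_scores),
    "percentile": percentile_rank(mean(dept_scores), peer_means),
}
```

Other percentile conventions (for example, counting ties as half) would give slightly different ranks, so any real report should state which one it uses.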

Ultimately, we envision a national data repository that would accumulate anonymized data from a large number of institutions, thereby allowing more tailoring of data such that a university or department could receive comparative data specific to similar organizations; for example, a Tier 1 research university might best be compared to other Tier 1 research universities, and a department of chemistry is most appropriately compared to the aggregation of other departments of chemistry.

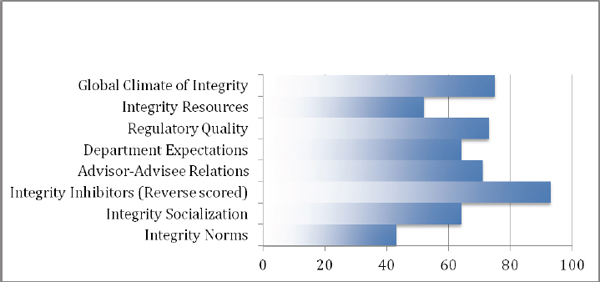

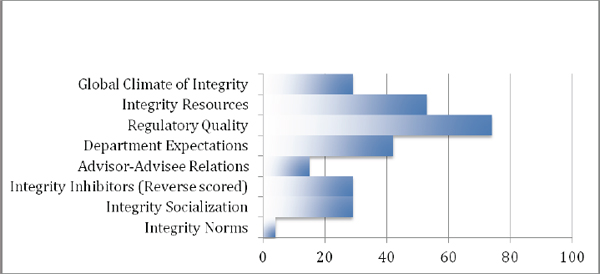

In addition to the numeric presentation of these data, some leaders may prefer a more graphical/visual presentation of the information. Figure 2 gives such a visually oriented presentation of the percentile-based comparative data for Department A (Figure 2A), as presented in Table 1, and for another anonymized Department B (Figure 2B), whose percentile rankings differ substantially from those of Department A.

Among the valuable aspects of this kind of feedback is the fact that a given organization receives tailored information not only about which aspects of climate are particularly strong or weak for their institution overall but also about which organizational subunits are particularly strong or weak. Such information can help organizational leaders expend their efforts to support research integrity with some specificity and intentional targeting—as opposed to implementing blanket policies or practices that may be both more expensive and less effective. Moreover, to further facilitate such targeting, if an organizational unit scores very low or very high on a particular scale, the leader of that unit can obtain the summary data, appropriately aggregated to protect individual anonymity, for the individual question items comprising that scale.

FIGURE 2A Example graphical display of percentile rank data: Department A

FIGURE 2B Example graphical display of percentile rank data: Department B

Conclusion

Misbehavior in science has typically been interpreted as a failing of the individual. But scientists don’t behave in a void and are not immune to the influence of the situational imperatives of their positions in the structures of the science enterprise. And while structural and cultural reforms may be needed to address the root causes of a variety of undesirable behaviors, it seems clear that the local organizational climate is an important influence on researchers, and one that is subject to influence by local organizational leaders. Tools are increasingly available to assist organizational leaders in creating and sustaining local research environments that evince genuine research integrity, not just regulatory compliance. Have you looked upstream?

Acknowledgements

I want to acknowledge the colleagues who have contributed to this work: Carol R. Thrush, University of Arkansas for Medical Sciences (Co-PI), and A. Lauren Crain, HealthPartners Institute for Education and Research (Co-PI). The work was supported by R21-RR025279 from the NIH National Center for Research Resources and the DHHS Office of Research Integrity through the collaborative Research on Research Integrity Program. I would also like to acknowledge the collaborators with whom we had the pleasure of consulting (with the financial support of Michigan State University) on the Council of Graduate Schools’ Project on Scholarly Integrity: James A. Wells, Eileen C. Callahan, Karen L. Klomparens, Terry A. May, Michelle Stickler, Suzanne C. Adair, and Hank Foley.

Disclaimers: The content is solely the responsibility of the author and does not necessarily represent the official views of the National Center for Research Resources, the National Institutes of Health, or the Office of Research Integrity. There are no conflicts of interest for the author of this manuscript or any of his collaborators.

References

Arnaud A. 2010. Conceptualizing and measuring ethical work climate: Development and validation of the Ethical Climate Index. Business & Society 49(2):345–358. doi:10.1177/0007650310362865.

Council of Canadian Academies and Expert Panel on Research Integrity. 2010. Honesty, Accountability and Trust: Fostering Research Integrity in Canada. Ottawa, ON. Available online at www.scienceadvice.ca/uploads/eng/assessments%20and%20publications%20and%20news%20releases/research%20integrity/ri_report.pdf.

Crain AL, Martinson BC, Thrush CR. 2012. Relationships between the Survey of Organizational Research Climate (SORC) and self-reported research practices. Science and Engineering Ethics. Published online (October 25): 1–16. doi:10.1007/s11948-012-9409-0.

Dyrbye LN, Massie FS, Eacker A, Harper W, Power D, Durning SJ, Thomas MR, Moutier C, Satele D, Sloan J, Shanafelt TD. 2010. Relationship between burnout and professional conduct and attitudes among US medical students. JAMA 304:1173–1180. doi:10.1001/jama.2010.1318.

Fryer-Edwards K, Van Eaton E, Goldstein EA, Kimball HR, Veith RC, Pellegrini CA, Ramsey PG. 2007. Overcoming institutional challenges through continuous professionalism improvement: The University of Washington experience. Acad Med 82:1073–1078.

InterAcademy Council and IAP. 2012. Responsible Conduct in the Global Research Enterprise: A Policy Report. Amsterdam.

IOM [Institute of Medicine]. 2002. Integrity in Scientific Research: Creating an Environment that Promotes Responsible Conduct. Washington: National Academies Press. Available online at www.nap.edu/openbook.php?isbn=0309084792.

Irish Council for Bioethics, Rapporteur Group. 2010. Recommendations for Promoting Research Integrity. Dublin: Irish Council for Bioethics. Available online at http://irishpatients.ie/news/wpcontent/uploads/2012/04/Irish-Council-of-Bioethics-Research_Integrity_Document.pdf.

Kohn LT, Corrigan JM, Donaldson MS, eds. 2000. To Err Is Human: Building a Safer Health System. Washington: National Academies Press.

Landy FJ, Conte JM. 2010. Work in the 21st Century: An Introduction to Industrial and Organizational Psychology. New York: Wiley.

Leape LL. 2010. Transparency and Public Reporting Are Essential for a Safe Health Care System. The Commonwealth Fund. Available online at www.commonwealthfund.org/Publications/Perspectives-on-Health-Reform-Briefs/2010/Mar/Transparency-and-Public-Reporting-Are-Essential-for-a-Safe-Health-Care-System.aspx#citation.

Martinson BC, Thrush CR, Crain AL. 2012. Development and validation of the Survey of Organizational Research Climate (SORC). Science and Engineering Ethics. Published online (October 25): 1–22. doi:10.1007/s11948-012-9410-7.

OSTP [Office of Science and Technology Policy]. 2000. Federal Policy on Research Misconduct. Available online at www.ostp.gov/cs/federal_policy_on_research_misconduct.

Schein EH. 1991. What Is Culture? In Reframing Organizational Culture, ed. Frost PJ, Moore LF, Louis MR, Lundberg CC, Martin J. pp. 243–253. Newbury Park, CA: Sage Publications.

Sexton JB, Helmreich RL, Neilands TB, Rowan J, Vella K, Boyden J, Roberts PR, Thomas EJ. 2006. The safety attitudes questionnaire: Psychometric properties, benchmarking data, and emerging research. BMC Health Serv Res 6:44.

Steneck N, Mayer T. 2010. Singapore Statement on Research Integrity. Drafted at the Second World Conference on Research Integrity, July 21–24, Singapore. Available online at www.singaporestatement.org/.

Thrush CR, Vander Putten J, Rapp CG, Pearson LC, Berry KS, O’Sullivan PS. 2007. Content validation of the Organizational Climate for Research Integrity (OCRI) Survey. Journal of Empirical Research on Human Research Ethics 2:35–52.

Vander Putten J, Thrush CR. 2006. Organizational culture for research integrity in academic health centers. In Office of Research Integrity 2006 Conference on Research Integrity. Tampa, FL.

Wasserstein AG, Brennan PJ, Rubenstein AH. 2007. Institutional leadership and faculty response: Fostering professionalism at the University of Pennsylvania School of Medicine. Acad Med 82:1049–1056.