3

Improving Job Performance Criteria for Selection Tests

Every employer is faced with the need to balance the costs of selecting high-ability personnel against the anticipated performance gains. Yet all too often, selection policy is made in the absence of reliable information about the performance gains that can reasonably be expected when people are selected on the basis of test scores (or other indicators of ability), not to mention the paucity of knowledge about whether such performance gains have a payoff commensurate with the costs. The Job Performance Measurement/Enlistment Standards (JPM) Project was prompted by the need of the military policy community for a better grasp of the complicated linkages between entry characteristics, job performance, and costs.

The design of the JPM Project is best appreciated against the historical backdrop of public debate about the future prospects of the all-volunteer force. As we recounted in the Overview, the misnorming of the Armed Services Vocational Aptitude Battery (ASVAB) introduced serious uncertainties. When it became clear that the resulting inflation of scores meant that close to 50 percent, not 20 percent, of those enlisted between 1976 and 1980 were in Category IV (percentile score range 10 to 30), the general dismay in Congress and the Department of Defense created a good deal of doubt about the ASVAB, not to say skepticism about voluntary military service.

Combating this doubt about the DoD-wide selection test and its value in building a competent military force would require a concrete demonstration of that value. From its inception, the central premise of the JPM Project was that a good behavioral criterion measure was the necessary condition for demonstrating the predictive value of the ASVAB.

THE CONCEPTUAL FRAMEWORK

Phase I: Developing Performance Measures

The first—and in the early years, the most pressing—goal of the JPM Project was a retrospective examination of the ASVAB to determine if its value for predicting job performance was sufficient to support its continued use.

Given that the purpose of employment testing is to identify those who will be capable of successfully meeting the demands of the job or jobs in question, the testing enterprise should begin with a conceptual and operational definition of success. Grade-point average is an unusually pertinent measure of success for validating tests used to screen applicants for admission to colleges, universities, and professional schools. No equally satisfying measure is readily available in most employment situations, however.

Traditionally, the use of military entrance tests to determine enlistment eligibility has been justified with the criterion of success in technical training school. Training school outcomes are recorded in each enlistee's administrative file and, at least until the widespread introduction of pass/fail grading and self-paced instructional systems, they provided a reasonable measure of the relative success of trainees that could be related to scores on selection tests. Minimum entrance standards were typically set so that at least 90 percent of recruits could pass the regular training course (Eitelberg, 1988).

But, as Jenkins (1946, 1950) taught an earlier generation, performance in technical training is not the same as performance on the job. Although technical training is organized fairly narrowly by job specialty (e.g., jet engine mechanic, avionics specialist, machinist's mate), it provides only a partial introduction to a job—witness the amount of on-the-job training required for many military occupations. Moreover, the instructional emphasis in technical training is largely job knowledge, which does not translate directly into actual performance. And finally, success in technical training requires academic skills that may not be of great importance in the workplace. To the extent that training departs from actual job requirements, either in what it omits or in the additional skills or characteristics it demands, its value for validating selection and classification procedures is lessened.

In the context of the debate about voluntary military service and congressional reactions to the misnorming episode, the training criterion no longer offered a credible defense of the ASVAB as an effective instrument for selecting a competent military force. Hence, DoD's overriding concern became the development of criterion measures that were as faithful as possible to actual job performance.

The JPM Project is highly unusual in having applied classical test construction methods to the development of criterion measures. It is perhaps unique in having chosen the hands-on, job-sample test as the premier, or benchmark, measure. That decision could bring about a substantial change in the way empirical validation studies are conducted in the future.

Jobs Viewed as a Collection of Tasks

The project's commitment to building a measurement system that closely replicates what people do on the job ordained a preference in the research design for the concrete and observable over the abstract. This orientation influenced both the definition of what constitutes a job and what aspects of performance would be assessed.

The JPM Project defines a job as “a formally specified set of interrelated tasks performed by individuals in carrying out duty assignments” (internal memorandum, October 10, 1983). Each task in turn can be broken down into a series of steps to be executed, so that job performance consists of a prescribed set of observable acts or behaviors. This definition of a job corresponds to what the Army and Marine Corps call a military occupational specialty (MOS), what the Air Force calls an Air Force specialty (AFS), and what the Navy calls a rating. It is important to note the words “formally specified” and “prescribed” in the definition above: a job in the military is what policy makers say it is. In the 1970s, the Air Force developed occupational surveys that have become the model for all the Services. Each Service now conducts periodic surveys of job incumbents, using a task-inventory approach, to define the content of each occupational specialty in terms of job tasks and to determine specific job requirements. Detailed specifications of job requirements are vetted by officials in each Service and then become a matter of doctrine.

In adopting the existing and highly articulated system of defining jobs in terms of their constituent tasks, the JPM Project availed itself of a rich body of data on the task content of particular jobs. These data are contained in the Soldier's Manuals (Army), the Individual Training Standards (Marine Corps), and the computerized task inventories (based on the Air Force Comprehensive Occupational Data Analysis Program, or CODAP, system), from which they are drawn. At the same time, conceptualizing a job as a set of tasks is a highly particularistic approach because the exact content of a task or the sequence of its component steps might vary, say, from one type of jet engine to another. The project designers recognized from the beginning that the very concreteness of tasks and steps raised the possibility that performance measurements might not be comparable across apparently similar jobs or even across subspecialties within a job.

Assessment of Job Proficiency

The JPM Project defines job performance as “those behaviors manifested while carrying out job tasks.” Since the primary aim of the project is to provide performance-based empirical support for enlistment and classification decisions, individual (as opposed to group or unit) performance was made the object of measurement. To delimit further the universe of interest, a distinction was drawn between the can-do and will-do aspects of job performance, which translate roughly into proficiency and motivation. Job proficiency was chosen as the central criterion because the AFQT tests are measures of cognitive skills and have little or no relation to motivational factors.

In line with these decisions, the project has concentrated on the evaluation of the individual performance of enlisted personnel who are in their first tour of duty and who have had at least six months' experience on the job. The primary indicator of job performance is individual proficiency on a set of tasks specific to a job—tasks that elicit “manifest, observable job behaviors” that can be scored dichotomously (go/no go, pass/fail) as a prescribed series of steps (internal memorandum, October 10, 1983).

The JPM Criterion Construct

In the field of personnel research, the use of work samples to predict job success is becoming increasingly popular. A work sample is an actual part of a job, chosen for its representativeness and importance to success on that job, that is transformed into a standardized test element of some sort so that the performance of all examinees can be scored on a common metric. Thus, for the job of secretary, a work-sample test might include a word processing task, a filing task, and a form completion task. For the job of police sergeant, it might involve detailing officers to particular sectors, checking completed police reports for accuracy, and resolving a dispute between two officers about the use of a two-way radio. The notion is that if one can identify an aspect of job performance that is crucial to success in that job, one cannot help but have a valid predictor. The validity is built into the predictor. Historically, the use of work samples has been assumed to satisfy the demands of the content-oriented validation model or design (Campbell et al., 1987; Landy, 1989). The strength of this approach is that it ensures that the construct of job performance is fairly represented in the predictor. If the work-sample units are chosen with care to represent the important aspects of the job, the validity should be axiomatic.

The JPM Project took this work-sample logic as a point of departure and then added a dramatic twist. Instead of developing work-sample measures for the predictor side of the equation, the project embarked on an ambitious attempt to develop work samples for the criterion side of the equation. The heterogeneity of jobs and work environments in the Services militates against the widespread use of work-sample tests for selection and classification, as does the fact that most recruits are without prior training or job experience. The Services, for example, do not enlist electricians or mechanics, by and large; they train people to become such. With over 600 jobs across the four Services and the need to screen approximately 1 million young people annually during the 1980s to select some 300,000 recruits to fill those jobs, there was substantial interest in the continued use of the ASVAB, perhaps revised or expanded, for selection and classification.

Given the desire to strengthen the credibility of the ASVAB, it followed that the best possible criterion should be chosen for inclusion in a validation study in order to give the ASVAB a reasonable opportunity to reveal its predictive value. For the same reasons that work samples are chosen as predictors, they are prime candidates for the criterion side of the equation. They bring with them an aura of self-evident validity because they consist of hands-on performance of actual job tasks or portions of tasks (change a tire, dismantle a claymore mine). They are as faithful to actual job performance as criterion measures can be, short of observing people in their daily work, and they also allow for standardization (albeit with difficulty, as Chapter 5 illustrates).

As the most direct indicator of the underlying construct of job performance, the hands-on performance test was given primacy in the project in terms of resource expenditures. It was also accorded scientific primacy. Project designers agreed that the hands-on test would be the benchmark measure, the standard against which other, less faithful representations of job performance would be judged.

Benchmarks and Surrogates

The hands-on test is an assessment procedure honored more in the breach than in the observance. Despite the inherent attractiveness of assessing actual performance in a controlled setting, the enormous developmental expense and the logistical difficulties in administering hands-on tests have meant that the methodology has been largely unrealized until now. The performance rating, despite its many and well-documented frailties, continues to be, by far, the most common criterion measure used to validate employment tests (Landy and Farr, 1983).

The expense and sheer difficulty of collecting hands-on data convinced the project designers of the need to develop a range of performance measures, one or a combination of which might turn out in the long run to be a viable substitute for the hands-on measures. All four Services were to develop hands-on measures for several jobs, and each Service agreed to take the lead in developing a particular type of surrogate measure for its jobs as well. For example, the Army developed paper-and-pencil job knowledge tests covering both the tasks to be tested hands on and additional tasks; the Air Force developed interview procedures; and the Navy developed simulations of its hands-on tasks. Most of the surrogate measures also reflect a task-based definition of jobs as the point of departure, but they differ from the hands-on tests in the fidelity with which they represent job performance.

Phase II: Linking Enlistment Standards to Job Performance

Assuming success with Phase I (the development of accurate, valid, and reliable measures of job performance), the second objective of the project was to link job performance to enlistment standards. The meaning of that linkage has evolved during the life of the project, however. Initially, to allay concerns about the quality of military recruits, the project designers focused on using the performance data in validation studies of the ASVAB. The validation effort would determine whether individual differences in ASVAB scores were correlated with individual differences in performance scores. The index of validity would indicate how closely the ranking of a group of people on job performance replicated their ranking on the predictor.
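To make the idea of a validity index concrete, the Python sketch below computes a rank correlation between predictor scores and performance scores, which matches the description of validity as agreement between two rankings. It is an illustration under stated assumptions, not the project's own computation: this chapter does not say which statistic was used (validity coefficients are conventionally Pearson correlations of the raw scores), and the function names and the five examinees' scores are hypothetical.

```python
from statistics import mean


def average_ranks(values):
    """Rank values from 1 (lowest) upward; tied values share their mean rank."""
    order = sorted(range(len(values)), key=lambda i: values[i])
    ranks = [0.0] * len(values)
    i = 0
    while i < len(order):
        j = i
        # Extend j across a run of tied values.
        while j + 1 < len(order) and values[order[j + 1]] == values[order[i]]:
            j += 1
        tied_rank = (i + j) / 2 + 1  # mean of the 1-based positions i..j
        for k in range(i, j + 1):
            ranks[order[k]] = tied_rank
        i = j + 1
    return ranks


def pearson(x, y):
    """Pearson product-moment correlation of two equal-length sequences."""
    mx, my = mean(x), mean(y)
    sxy = sum((a - mx) * (b - my) for a, b in zip(x, y))
    sxx = sum((a - mx) ** 2 for a in x)
    syy = sum((b - my) ** 2 for b in y)
    if sxx == 0 or syy == 0:
        raise ValueError("correlation is undefined when one variable is constant")
    return sxy / (sxx * syy) ** 0.5


def rank_validity(test_scores, performance_scores):
    """Spearman rank correlation: the Pearson correlation of the two rankings."""
    return pearson(average_ranks(test_scores), average_ranks(performance_scores))


if __name__ == "__main__":
    afqt = [35, 52, 61, 78, 90]               # hypothetical percentile scores
    hands_on = [0.55, 0.60, 0.72, 0.70, 0.88]  # hypothetical proportion of steps passed
    print(rank_validity(afqt, hands_on))  # → 0.9
```

With these invented scores the two rankings differ by a single adjacent swap, so the index is 0.9; identical rankings would give 1.0.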

Strengthening the empirical basis of military selection by itself, however, would not resolve the policy concerns that prompted the project. As the current economic climate makes obvious, the quality of the enlisted force becomes at some point a question of resources, of weighing performance against costs. To contribute to this decision, the project would have to provide information that enables policy officials to make rational inferences from test scores to overall job competence. From this vantage point, the crucial question with regard to enlistment standards is not so much whether the ASVAB is a valid selection instrument, but how high the standards need to be to ensure that service personnel can do their assigned jobs competently. The hope was that the job performance data gathered in Phase I of the project would permit development in Phase II of models for setting quality standards that would enable DoD to estimate the minimum cost required to achieve alternative levels of performance and to evaluate the policy trade-offs.

The critical concept here is the relation of the mental enlistment standard (a combination of minimum test score and high school graduation status) to the distribution of expected job performance. If the Services were in a position to select only the very high scorers on the AFQT (say, Categories I and II), and if the correlation between AFQT scores and job performance was reasonably strong, then one could expect that many, if not most, of those selected would turn out to be highly competent performers. But this would be neither socially acceptable nor economically feasible. In reality, a far wider range of scores must be included in the selection pool, and military planners cannot expect a preponderance of outstanding performers. They must anticipate a mix of performance based on selection from several predictor categories (I through IV). Each aptitude category adds an additional layer to the mix. Policy makers need to know what the effect will be on the accomplishment of each Service's mission if changes are made in enlistment standards, that is, if the mix of recruits from each predictor category (I through IV) is altered in some particular manner. The extent to which such sophisticated projections can be made depends largely on how closely enlistment standards (e.g., ASVAB scores) can be tied to on-the-job performance. The effort to develop the linkage between enlistment standards, performance across military jobs, and a variety of recruiting, training, and other costs got under way in 1990. The dimensions of the problem are discussed in Chapter 9.
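How a change in the category mix might shift expected performance can be sketched numerically. The Python example below rests on a textbook assumption that the chapter does not make: test scores and job performance are taken to be bivariate normal in the applicant population with correlation `validity`, so the expected standardized performance of recruits drawn from a score band equals `validity` times the mean standardized test score in that band, that is, validity × (φ(z_lo) − φ(z_hi)) / (p_hi − p_lo), where φ is the standard normal density and z_lo, z_hi are the band's boundary quantiles. Only the Category IV range (percentiles 10 to 30) comes from the text; the other band boundaries, the validity value, and the two recruit mixes are assumptions chosen for illustration.

```python
from statistics import NormalDist

STD_NORMAL = NormalDist()  # standard normal: mean 0, standard deviation 1

# Percentile bands as (lower, upper) proportions of the applicant population.
# Category IV (10th to 30th percentile) is from the text; the other
# boundaries are illustrative assumptions.
BANDS = {
    "I": (0.93, 1.00),
    "II": (0.65, 0.93),
    "III": (0.30, 0.65),
    "IV": (0.10, 0.30),
}


def density_at_quantile(p):
    """Standard normal density at the p-th quantile; 0 at the tails p = 0 or 1."""
    if p <= 0.0 or p >= 1.0:
        return 0.0
    return STD_NORMAL.pdf(STD_NORMAL.inv_cdf(p))


def band_mean_z(lower, upper):
    """Mean standardized test score of applicants between two percentile bounds."""
    return (density_at_quantile(lower) - density_at_quantile(upper)) / (upper - lower)


def expected_performance(mix, bands, validity):
    """Expected standardized performance of a cohort drawn from bands in the
    proportions given by `mix` (shares are normalized to sum to 1)."""
    total = sum(mix.values())
    return sum(
        (share / total) * validity * band_mean_z(*bands[category])
        for category, share in mix.items()
    )


if __name__ == "__main__":
    r = 0.5  # assumed validity coefficient
    current = {"I": 0.05, "II": 0.35, "III": 0.40, "IV": 0.20}
    tighter = {"I": 0.05, "II": 0.40, "III": 0.45, "IV": 0.10}
    print(f"current mix: {expected_performance(current, BANDS, r):+.3f} SD")
    print(f"tighter mix: {expected_performance(tighter, BANDS, r):+.3f} SD")
```

Because the model is linear in the mix proportions, the effect of moving recruits from one category to another can be read off band by band. The cost side of the trade-off, which the standard-setting models were meant to supply, is deliberately left out of this sketch.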

THE RESEARCH PLAN

The long-term goal of military planners is to have a DoD-wide program for collecting job performance data that can be related to recruit capabilities. With the hands-on test as its anchor in reality, the JPM Project sought to develop a comprehensive set of performance measures for a sample of military jobs so that it would be possible to evaluate the relative merits of the various types of measures. It was also hoped that the project would be able to identify one or a combination of measures that would be less expensive and easier to administer for an ongoing program of performance measurement than the hands-on test. The initial hands-on test data were also intended to be robust enough psychometrically to provide the data pool for testing the Phase II standard-setting models.

Selection of Occupations for Study

The project's emphasis on concrete, observable job behavior necessarily implied a focus on specific jobs and the development of proficiency measures that would reflect the performance requirements of a particular job. The Services used the following seven criteria, enumerated in the Second Annual Report to Congress on Joint-Service Efforts to Link Standards for Enlistment to On-the-Job Performance (Office of the Assistant Secretary of Defense—Manpower, Reserve Affairs, and Logistics, 1983), to select the occupational specialties for study:

- The military specialties selected should be of critical importance.
- There should be enough people assigned to the job to ensure adequate sample size.
- The population of job incumbents should be sufficiently concentrated to allow data collection at a small number of military bases.
- Important tasks of the job should be measurable.
- Problems in the specialty (e.g., attrition) should be known and well documented.
- The job should include enough minorities and (where applicable) women to permit evaluation of the impact the measurement procedures would have on these groups.
- The set of jobs selected should be a reasonable cross-section of the major aptitude areas measured by the ASVAB (electronic, mechanical, administrative, and general aptitudes).

There was some overlap in the jobs selected for the project by the four Services, but each Service also selected a number of occupational specialties that best represented its special mission—for example, infantryman for the Marine Corps and air traffic controller for the Air Force. The jobs for which each Service agreed to develop performance measures are identified below.

Army

The Army selected the following nine military occupational specialties for study:

Infantryman

Cannon crewman

Tank crewman

Radio teletype operator

Medical specialist

Light wheel vehicle/power generation mechanic

Motor transport operator

Administrative specialist

Military police

As part of a larger study of job performance, called Project A, the Army developed criterion measures for an additional 10 occupations, but the critical hands-on measures were developed only for the 9 jobs listed above.

Navy

The Navy is developing hands-on performance measures for six ratings (jobs):

Machinist's mate

Radioman

Electronics technician

Electrician's mate

Fire control technician

Gas turbine technician, mechanical

In addition, for a special Joint-Service technology transfer demonstration, the Navy is adapting a package of performance measures developed by the Air Force to the corresponding Navy and Marine Corps job of aviation machinist's mate (jet engine). And in another demonstration of technology transfer, the Navy is recasting the test items developed for the machinist's mate and aviation machinist's mate ratings for a related Navy rating, gas turbine technician (mechanical).

Air Force

The following eight specialties were selected for the Air Force component of the project:

Jet engine mechanic (M)

Aerospace ground equipment mechanic (M)

Personnel specialist (A)

Information systems radio operator (A)

Air traffic control operator (G)

Aircrew life support specialist (G)

Precision measurement laboratory equipment specialist (E)

Avionic communications specialist (E)

The specialties are evenly divided among the four ASVAB aptitude area composites. In the order listed, the first two specialties are predicted by the mechanical (M) composite; the third and fourth, by the administrative (A) composite; the fifth and sixth, by the general (G) composite; and the final two, by the electronic (E) composite.

Marine Corps

The Marine Corps focused on testing multiple specialties within occupational fields (functionally similar collections of specialties). Occupational fields were selected to be representative of the four Marine Corps aptitude composites. The infantry occupational field was tested to represent the general technical aptitude composite. Performance measures were developed for five infantry specialties:

Rifleman

Machinegunner

Mortarman

Assaultman

Infantry unit leader (a second-tour position)

In the spring of 1989, the Marines began the study of eight specialties in the motor transport and aircraft maintenance occupational fields (representing the mechanical maintenance aptitude composite). Data collection for the following specialties was completed in 1990:

Motor transport occupational field:

Organizational automotive mechanic

Intermediate automotive mechanic

Vehicle recovery mechanic

Motor transport maintenance chief (a second-tour position)

Aircraft maintenance occupational field:

Helicopter mechanic, CH-46

Helicopter mechanic, CH-53A/D

Helicopter mechanic, U/AH-1

Helicopter mechanic, CH-53E

Future plans call for similar data collection efforts of multiple specialties for the data communications maintenance and avionics occupational fields (representing the electronics repair aptitude composite) and the personnel administration and supply administration occupational fields (representing the clerical/administrative aptitude composite).

Types of Criterion Measures Developed

Hands-On Tests

Hands-on tests were developed for 28 occupational specialties. Each hands-on test consisted of a sample of about 15 tasks and took from 4 to 8 hours to administer. Task performance was evaluated by having the examinee actually do a particular instance of a real task or a very close replica of it. Each behavioral step in the performance of the task was scored either pass or fail by a trained observer. The number of steps in each task ranged from about 10 to 150.
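The step-level scoring described above can be rolled up into a test score in several ways, and this section does not say which rule each Service used. The Python sketch below assumes one simple rule: each task is scored as the percentage of its steps marked GO, and the hands-on score is the unweighted mean of those task percentages, so that a 150-step task does not swamp a 10-step one. The class and function names and the example tasks are hypothetical.

```python
from dataclasses import dataclass


@dataclass
class TaskResult:
    task: str
    steps: list  # one bool per scored step: True = GO (pass), False = NO GO (fail)

    def percent_go(self):
        """Percentage of this task's steps the examinee performed correctly."""
        if not self.steps:
            raise ValueError(f"task {self.task!r} has no scored steps")
        return 100.0 * sum(self.steps) / len(self.steps)


def hands_on_score(results):
    """Unweighted mean of task-level percent-GO scores (an assumed aggregation rule)."""
    if not results:
        raise ValueError("no tasks scored")
    return sum(r.percent_go() for r in results) / len(results)


if __name__ == "__main__":
    results = [
        TaskResult("respond to lube oil pressure casualty", [True] * 9 + [False]),
        TaskResult("select technical manual", [True, False]),
    ]
    print(hands_on_score(results))  # → 70.0
```

Weighting every step equally instead would give 10 of 12 steps, or about 83 percent, for the same examinee; the choice of rule matters when tasks differ greatly in length.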

The hands-on work-sample test involved observation of a job incumbent performing a sample of important, difficult, and/or frequently performed tasks in a controlled setting and with the appropriate equipment or tools.

For example, the testing of naval machinist's mates took place aboard ship in the engine room. One task required the sailor to perform casualty control procedures that would be put into action if an alarm signaled loss of pressure in the main engine lube oil pump. Examinees were graded as they went through the prescribed responses to the emergency—checking for proper operation of stand-by pumps, checking the appropriate gauges, meters, and valves to locate the source of the problem, taking corrective action if appropriate, and reporting the casualty to the appropriate supervisor. For any steps that were not observable, such as visual checks of a gauge or valve, examinees were instructed to touch the relevant piece of equipment and tell the test administrator what action was taken and what the examinee had determined (Chapter 5 includes additional details on how testing was conducted).

In many complicated jobs involving machinery, technical manuals specifying repair or maintenance procedures are used in day-to-day operations. Jet engine mechanics, for example, are required to work according to the manual. The potential for damage to expensive equipment or loss of life is simply too great to rely on memory. Part of the hands-on test in such jobs was the selection of the appropriate technical manual and identification of the relevant procedures for accomplishing the task at hand.

Certain kinds of tasks, particularly tasks that are primarily intellectual in character, do not lend themselves easily to hands-on tests. Ways were nevertheless found, albeit with some loss of fidelity, to test them hands on. One of the tasks for Marine Corps infantrymen, for example, assessed the subject's ability to move a platoon through enemy territory and respond to various impediments, such as artillery emplacements or passing convoys. To elicit the first-termer's understanding of small-unit tactical maneuvers, the Marine Corps used a TACWAR board, a large, three-dimensional model of a stretch of terrain. Such models are typically used in training or in preparation for military action. In this instance, the test administrator presented a scenario and the subject responded by moving chips representing an infantry platoon.

Walk-Through Performance Tests

The Air Force developed walk-through performance tests, a novel type of test that combines hands-on measures with interview procedures. Examinees actually perform some tasks; for others, they describe how they would do the task. The interview component was developed as a means of assessing those tasks for which hands-on tests would be too time-consuming or expensive or would entail too great a risk of injury or damage to equipment.

Like the straight hands-on procedure described above, the walk-through performance test is administered in a worklike setting, with the relevant equipment at hand. The interview tasks are administered as a structured questionnaire, and the examinee demonstrates proficiency on the task by way of oral responses and gestural demonstrations of how the steps in the task would be carried out. This is a more interactive procedure than the hands-on test, and the behaviors assessed are both less concrete and less observable.

Simulations

The Navy took the lead in developing performance tests that are detailed simulations of a real task. Two levels of fidelity to actual performance were attempted. The first, and more faithful, measure was administered through computer-based interactive video disks; for example, dials representing pressure gauges in a ship's engine room would respond with higher or lower pressure readings according to the actions of the examinee. The second simulation was a paper-and-pencil analogue that made liberal use of illustrations and pictures of actual equipment.

Job Knowledge Tests

The project also made use of the traditional multiple-choice test instrument to measure task proficiency in specific military jobs. The Army and the Marine Corps developed job knowledge tests for each job they studied, and the Air Force for half of the specialties. Some individual test items corresponded to a certain task or to a specific step within a task, and others related to more global aspects of the job that could not be assessed in the hands-on mode. Whatever the level of specificity, the items tended to ask about or portray perceptions, decisions, and actions that corresponded closely to behavioral operations. All of the tasks the two Services assessed in the hands-on mode were also covered in this format to permit comparisons of the two assessment procedures. For the sake of more extensive coverage of the job domain, additional tasks were included as well.

Ratings

Performance evaluations or ratings are the most common technique for assessing job performance, and as such, they were included among the surrogate performance measures studied in the project. The Army, the Navy, and the Air Force developed rating forms of one type or another to elicit the judgments of supervisors, peers (those who worked side-by-side with the subject), and the examinee. These included ratings of task-level and more global performance related to a specific job, task-level performance common to many jobs (in the military, even a cook or a musician must know how to use a weapon or protect against biological or chemical agents), dimensional ratings, and ratings of general performance and effectiveness (e.g., military bearing and leadership).

Two types of rating scales were experimented with, one calling for an absolute rating and the other for an indication of the standing of the subject relative to others. To make the rating process more concrete, the absolute rating scales were accompanied by behavioral illustrations of each anchor point.

Types of Predictors Assessed

In addition to studying the relation between various criterion measures and the ASVAB, the Services looked at a variety of other predictors as possible supplements to the ASVAB. These tests were intended to assess cognitive processing abilities not well addressed by the ASVAB, such as perceptual speed and spatial skills. The Army and the Navy developed computerized batteries of cognitive, perceptual, and psychomotor skills. The Army also developed predictors of will-do performance, based on biographical and interest inventories.

Development of Performance Measures

The specific procedures used to develop hands-on and other performance measures differed from Service to Service, but the project's emphasis on fidelity to actual job performance is evident in the common approach to defining the criterion domain. All the Services began the process of specifying the performance domain for a job by consulting task-inventory data (e.g., the Air Force CODAP system) and manuals (e.g., the Marine Corps Individual Training Standards) that provide detailed information about the tasks within an occupational specialty. These sources provided the substance for job analyses and, in some cases, the percentage of incumbents performing each task, the frequency of task performance, and the relative amount of time spent on each task. In addition to detailed information on the tasks or job responsibilities for each job, the Marine Corps had lists of the specific skills or knowledge required by the tasks, so that its table of specifications for each job was composed of both task and behavioral requirements. This table then became the blueprint for constructing the performance tests.

All the Services used subject matter experts to help identify and select tasks and to review test content. The experts were usually noncommissioned officers who knew the job at firsthand and had supervised the work of entry-level job incumbents. All the Services also used purposive or judgment-based methods of sampling from the universe of possible tasks to select those to be turned into hands-on, interview, and written test items. The Navy agreed to conduct a side experiment to study the feasibility of selecting tasks using stratified random sampling techniques, but the Services generally (and from the point of view of advancing the field, unfortunately) shied away from random sampling techniques for fear the resulting performance tests would seem arbitrary to the military community (sampling issues are discussed in Chapters 4 and 7).
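For contrast with judgment-based selection, the Python sketch below shows one common form of stratified random sampling: the task domain is divided into strata (here, hypothetical clusters of similar tasks), each stratum receives a share of the draws proportional to its size, and tasks are drawn at random within each stratum. The chapter does not describe the Navy's actual strata or allocation rule, so proportional allocation, the `seed` parameter, and all names below are assumptions. A fixed seed makes the draw reproducible.

```python
import random


def stratified_task_sample(strata, n_total, seed=0):
    """Draw n_total tasks, allocating draws to strata in proportion to their size.

    strata: dict mapping a stratum name to its list of candidate tasks.
    Rounding uses the largest-remainder method so allocations sum to n_total.
    """
    rng = random.Random(seed)
    pool = sum(len(tasks) for tasks in strata.values())
    if not 0 < n_total <= pool:
        raise ValueError("n_total must be between 1 and the number of tasks")
    # Exact integer arithmetic: floor of each proportional quota, plus remainder.
    alloc = {name: n_total * len(tasks) // pool for name, tasks in strata.items()}
    remainder = {name: n_total * len(tasks) % pool for name, tasks in strata.items()}
    shortfall = n_total - sum(alloc.values())
    # Give the leftover draws to the strata with the largest remainders.
    for name in sorted(strata, key=lambda s: remainder[s], reverse=True)[:shortfall]:
        alloc[name] += 1
    # Simple random sampling without replacement inside each stratum.
    return {name: rng.sample(tasks, alloc[name]) for name, tasks in strata.items()}


if __name__ == "__main__":
    clusters = {
        "engine casualty control": [f"casualty task {i}" for i in range(10)],
        "pump maintenance": [f"pump task {i}" for i in range(20)],
        "valve and piping repair": [f"valve task {i}" for i in range(30)],
    }
    for cluster, tasks in stratified_task_sample(clusters, n_total=6, seed=1).items():
        print(cluster, tasks)
```

Proportional allocation keeps each cluster's share of the test equal to its share of the task domain; other allocation rules, such as weighting strata by rated importance, are equally possible.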

The process of turning tasks into hands-on test items involved breaking down each task into its subcomponents, or steps, and identifying the associated equipment, manuals, and procedures required to perform the steps. The steps were then translated into scorable units the test administrator could check off as either go or no go as the examinee performed the task. Not infrequently, tasks were too long to be included in full and the test development staff had to select a coherent segment for the test. Chapter 4 provides more detail on how the hands-on tests were developed.
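The go/no-go checklist lends itself to a simple scoring rule. The sketch below is an illustration only. It assumes that a task score is the percentage of steps scored "go" and that an examinee's overall hands-on score is the unweighted mean of the task percentages. Both rules, and the names `StepResult`, `task_score`, and `hands_on_score`, are hypothetical. The Services may have weighted steps or tasks differently.

```python
from dataclasses import dataclass


@dataclass
class StepResult:
    step: str
    go: bool  # True = "go", False = "no go", as checked off by the administrator


def task_score(results):
    """Percentage of a task's scorable steps marked 'go' (unweighted)."""
    if not results:
        raise ValueError("a task must have at least one scored step")
    return 100.0 * sum(r.go for r in results) / len(results)


def hands_on_score(tasks):
    """Hypothetical overall score: unweighted mean of task percentages.

    tasks: dict mapping task name -> list of StepResult.
    """
    if not tasks:
        raise ValueError("no tasks were scored")
    return sum(task_score(steps) for steps in tasks.values()) / len(tasks)
```

An examinee who passes 3 of 4 steps on one task and both steps on another would score 75.0 and 100.0 on the two tasks, for an overall score of 87.5.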

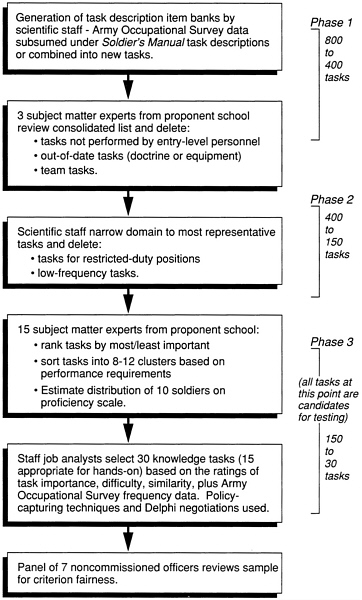

A closer look at how one Service developed its hands-on tests might be useful. The Army team designed particularly elaborate task-selection procedures (see Figure 3-1). The process began with an examination of the Soldier's Manual for each occupational specialty. These manuals, which contain task lists and task descriptions that represent Army doctrine on the content of the job, determine the content of technical training. Supplementary task descriptions were drawn from the Army Occupational Survey Program (AOSP), a job-task inventory system based on questionnaires circulated to job incumbents. About 800 tasks were designated for each job under study.

The central development activity consisted of refining and narrowing the task domain until only a sample of tasks remained to represent the job. A panel of three subject matter experts reviewed the initial list of tasks put together by the scientific staff and deleted any tasks not usually performed by first-term personnel or no longer current because of changes in doctrine or equipment. This culling reduced the number of tasks by about half. The scientific staff further reduced the number of tasks under consideration by removing tasks that were performed infrequently or in restricted-duty positions. The remaining 150 tasks per job were considered candidates for selection and were subject to further analysis. A total of 15 subject matter experts from the appropriate proponent school¹ were asked to rank the 150 tasks for a particular job on the basis of their importance in a European theater combat situation; they were also asked to group the tasks into 8 to 12 clusters based on similarity of procedures or principles. As a basis for ascribing a level of difficulty to each task, they were also asked to estimate how many in a typical group of 10 soldiers could do the task and with what frequency (the five possible answers ranged from “all of the time” to “never”).

¹ Proponent schools provide technical training for each military occupational specialty. They are called proponent because they determine the doctrine for the specialty, i.e., the content of the job and how the job is to be used in carrying out the overall mission of the Army.
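The source does not say how the 15 experts' judgments were combined. The following is a rough sketch of one plausible summary. It takes the mean importance rank across experts and defines a difficulty index as the mean estimated proportion of 10 soldiers who could *not* do the task. Both of these summary rules are assumptions, and the sketch ignores the five-point frequency judgment. The function names `summarize_task` and `rank_candidates` are hypothetical.

```python
from statistics import mean


def summarize_task(ranks, can_do_counts):
    """Summarize expert judgments for one task (hypothetical rule).

    ranks: importance ranks from each expert (1 = most important).
    can_do_counts: each expert's estimate of how many of 10 typical soldiers
        could perform the task (0-10).
    Returns (mean_rank, difficulty), where difficulty is the mean estimated
    proportion of soldiers who could NOT do the task.
    """
    mean_rank = mean(ranks)
    difficulty = 1 - mean(c / 10 for c in can_do_counts)
    return mean_rank, difficulty


def rank_candidates(judgments):
    """Order tasks from most to least important by mean expert rank.

    judgments: dict mapping task name -> (ranks, can_do_counts).
    """
    return sorted(judgments, key=lambda task: mean(judgments[task][0]))
```

Summaries like these would only inform the scientists' choices. As the following paragraph notes, the final selection rested on judgment and consensus rather than a fixed formula.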

Informed by these expert judgments as to the importance, similarity, and difficulty of the tasks, project scientists selected 30 tasks to represent each job, 15 of which were to be suitable for hands-on testing. All 30 tasks were to be incorporated into the written job knowledge tests. The group was not given strict decision rules to follow. Instead, policy-capturing techniques were used to introduce consistency into each scientist's selections, and the scientists reached consensus on the final list of 30 tasks through a process of Delphi negotiations. A review panel of seven noncommissioned officers, including minorities and women, reviewed this set of tasks for fairness. (In the second wave of test development, project scientists worked with military experts to make the final task selection, and the fairness review became part of the overall task selection process.) The prototype performance measures were then field tested, after which they were sent to the commander of the appropriate proponent school for approval.

SIZE OF THE RESEARCH EFFORT

This overview of the JPM Project concludes with a brief discussion of the human and monetary resources that were expended on developing the criterion measures and collecting data. Listed below are the dollar amounts expended for the project between fiscal 1983 and 1989, together with budget projections (shown in parentheses) for fiscal 1990 and 1991:

| Fiscal year | Amount |
|---|---|
| 1983 | $3.2 million |
| 1984 | 3.8 million |
| 1985 | 4.8 million |
| 1986 | 4.2 million |
| 1987 | 3.7 million |
| 1988 | 4.4 million |
| 1989 | 3.8 million |
| 1990 | (4.1) million |
| 1991 | (4.3) million |

The four Services and the Office of the Secretary of Defense (OSD) reported expenditures through September 1989 totaling almost $28 million, about half of which was devoted to the Army's research. By the time the Marine Corps and Navy finish their data collection in 1992, it is anticipated that an additional $8 million will have been spent by all Services and OSD.
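As a quick arithmetic check, the annual figures can be compared with the totals stated in the text. The sketch treats the fiscal 1983-1989 amounts as actual spending and the parenthesized fiscal 1990-1991 amounts as projections. The variable names are illustrative only.

```python
# JPM Project expenditures by fiscal year, in millions of dollars.
actual = {1983: 3.2, 1984: 3.8, 1985: 4.8, 1986: 4.2,
          1987: 3.7, 1988: 4.4, 1989: 3.8}
projected = {1990: 4.1, 1991: 4.3}  # parenthesized budget projections

# Fiscal 1989 ended in September 1989, matching "through September 1989".
spent_through_fy1989 = round(sum(actual.values()), 1)
projected_additional = round(sum(projected.values()), 1)

print(f"Spent FY1983-1989: ${spent_through_fy1989} million")
print(f"Projected FY1990-1991: ${projected_additional} million")
```

The sums come to $27.9 million and $8.4 million. These figures agree with "almost $28 million" and "an additional $8 million" in the text.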

It is not possible to estimate the human resources devoted to the JPM Project with any precision. The largest class of participants, of course, was the examinees. In addition to 1,369 soldiers who participated in field tests of the criterion measures, the Army gathered the full complement of criterion data from 5,200 soldiers in 9 occupational specialties at 14 sites in the United States and Europe. Another 4,000 subjects provided data on a more limited set of measures (excluding the hands-on tests).²

In its initial data collection, the Marine Corps tested over 2,500 subjects in 5 specialties in the infantry occupational field at Camps Lejeune and Pendleton. Of the 1,200 riflemen tested, 200 were retested with alternate forms of the performance tests to determine the reliability of the measures. A second set of performance measures was administered to approximately 1,800 helicopter and ground automotive mechanics in 1990. Test development is in progress for several electronics specialties (e.g., ground radio repairman and avionics technician), with data collection scheduled for 1992. Plans are also under way to develop measures for administrative jobs (e.g., personnel clerk and supply clerk). The third and fourth rounds of testing are expected to add 1,600 and 2,000 subjects, respectively, to the Marine Corps data base.

For the eight Air Force specialties investigated, 1,493 airmen took the hands-on and interview procedures at 70 bases. Performance rating forms were also completed by each examinee and by his or her supervisor, and over 3,400 additional rating forms were completed by peers of the examinees.

Faced with even more complicated logistics, the Navy tested 184 first-term machinist's mates assigned to either the engine room or the generator room aboard 25 frigates of the 1052 class. The radioman sample consisted of 257 first-term personnel, 185 of them male and 75 female. Of these, 131 were ship based and 126 (including most of the women) were land based.

These are but partial numbers, since the Navy and Marine Corps are still involved in data collection. In addition to the service personnel who were test subjects, many hundreds of research scientists, test administrators, and base personnel who provided logistical support played a part in the JPM Project. The level of effort is not likely to be duplicated by any other institution, or indeed by the military in the foreseeable future.
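The Marine Corps retested 200 riflemen with alternate forms of the performance tests. Alternate-forms reliability is commonly summarized as the Pearson correlation between examinees' scores on the two forms. The source does not say which statistic the Marine Corps reported, so the function below (`alternate_forms_reliability`, a hypothetical name) is only a sketch of that standard index:

```python
from math import sqrt


def alternate_forms_reliability(form_a, form_b):
    """Pearson correlation between scores on two forms taken by the same examinees.

    form_a[i] and form_b[i] must belong to the same examinee.
    """
    if len(form_a) != len(form_b) or len(form_a) < 2:
        raise ValueError("need paired scores for at least two examinees")
    n = len(form_a)
    mean_a = sum(form_a) / n
    mean_b = sum(form_b) / n
    # Sums of cross-products and squared deviations from each form's mean.
    cov = sum((a - mean_a) * (b - mean_b) for a, b in zip(form_a, form_b))
    var_a = sum((a - mean_a) ** 2 for a in form_a)
    var_b = sum((b - mean_b) ** 2 for b in form_b)
    if var_a == 0 or var_b == 0:
        raise ValueError("scores on each form must vary across examinees")
    return cov / sqrt(var_a * var_b)
```

A value near 1 indicates that examinees keep roughly the same standing regardless of which form they take. That consistency is what the retest was designed to establish.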

² In addition, the Army conducted a longitudinal validation during which 49,397 soldiers were tested on predictor measures (1986-1987), 34,305 on end-of-training tests (1986-1987), and 11,268 on first-tour performance measures (1988-1989).