Active Electro-Optical Component Technologies

As has been described in Chapters 2 and 3, current and emerging active electro-optical (EO) sensing systems are implemented in many different modalities. All require components such as lasers, detectors, optics, and processing techniques to generate photons, bounce them off targets, and transform detected photons into usable information. Other components may be required as well, depending on the implementation. The specific requirements, complexity and sophistication of the components vary with the manner of implementation of the active EO sensing system and the usable information it is trying to extract. For example, no particular laser or detector technology meets all the requirements of the various active EO sensing approaches. An important factor in the last ten years’ progress has been technological advances in each of the components of the active EO system: improved lasers, detectors, software, advances in robotics, and improved manufacturing technologies. This chapter discusses the variety of components currently used and some of the key technologies being developed for future systems.

Active EO sensors employ coherent sources in the wavelength region from the long-wavelength infrared (around 10 µm) to the atmospheric transmission limit for UV light, around 200 nm. The sources can be based either on lasers or on nonlinear optical systems driven by lasers. Lasers are typically categorized by the type and format of the medium used to generate their output, which at the highest level are gases, liquids, and solids.

Solid materials are further categorized by their electrical characteristics. Solid-state lasers employ insulating solids (crystals, ceramics, or glasses) with added elements (dopants) that provide the energy levels needed for laser action. The energy to excite these levels is supplied by another light source, either a conventional source such as an arc lamp or another laser, a process called optical pumping.

Solid-state lasers in turn are divided into two broad categories, bulk or fiber, with the latter having recently emerged as an important technology for generation of high average powers with high beam quality, as discussed below.

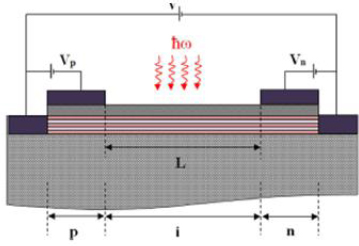

Even though they are also made from solid materials, semiconductor lasers are considered a separate laser category. While semiconductor lasers can be operated by optical pumping, if the semiconductor material can be fabricated in the form of an appropriate p-doped, n-doped (PN) junction, it is possible to pass electrical current through the junction and generate laser output directly. These “diode lasers” are by far the most widely used form of semiconductor lasers and have led to major advances in source technology for active EO sensors.

The use of lasers for active EO sensors started with the use of the first laser to be operated (1960), the solid-state ruby laser.1 With the development of techniques to generate nanosecond-duration pulses, ruby lasers provided the first example of laser rangefinders. Since 1960, almost every type of laser has been employed in demonstrations of active EO sensors. At this point, with a few exceptions, sensors now employ either diode or solid-state lasers, with the latter often combined with nonlinear optics. This looks to be the case for the foreseeable future, owing to a favorable combination of output format, operating wavelength, relatively high efficiency, ruggedness, compact size, and reliability.

____________________

1 T.H. Maiman, 1960, “Stimulated optical radiation in ruby,” Nature 187(4736): 493.

With regard to nonlinear optics, most systems employ crystals with special properties that can convert a laser output to shorter wavelengths by harmonic conversion or to longer wavelengths by parametric processes. The latter have the added advantage that the generated wavelengths can be tuned by a variety of techniques, which benefits sensors that require specific wavelengths, for example, in the detection of specific gases. In some cases parametric and harmonic processes can be combined for added wavelength tuning.
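The wavelength arithmetic behind harmonic conversion and parametric generation follows from photon-energy conservation. The sketch below is a minimal illustration that assumes ideal energy conservation and ignores phase matching (which in practice selects the signal wavelength); the 1,064-nm pump, the signal values, and the function names are illustrative choices, not taken from the text.

```python
def harmonic_wavelength_nm(fundamental_nm: float, order: int) -> float:
    """Wavelength of the n-th harmonic: n photons combine into one of n times the energy."""
    if order < 1:
        raise ValueError("harmonic order must be >= 1")
    return fundamental_nm / order


def idler_wavelength_nm(pump_nm: float, signal_nm: float) -> float:
    """Idler wavelength from energy conservation, 1/lambda_p = 1/lambda_s + 1/lambda_i.

    Phase matching, which determines which signal is actually generated, is ignored.
    """
    if signal_nm <= pump_nm:
        raise ValueError("signal must be longer than the pump wavelength")
    return 1.0 / (1.0 / pump_nm - 1.0 / signal_nm)


if __name__ == "__main__":
    pump = 1064.0  # illustrative pump wavelength, nm
    print(f"2nd harmonic: {harmonic_wavelength_nm(pump, 2):.1f} nm")
    print(f"3rd harmonic: {harmonic_wavelength_nm(pump, 3):.1f} nm")
    # Tuning the signal (e.g., via the phase-matching condition) moves the idler with it.
    for signal in (1400.0, 1500.0, 1600.0, 2000.0):
        print(f"signal {signal:.0f} nm -> idler {idler_wavelength_nm(pump, signal):.0f} nm")
```

Because the idler is tied to the signal by energy conservation, any mechanism that shifts the signal also tunes the idler, which is the basis of the wavelength tunability mentioned above.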

The next sections go into more detail regarding the dominant active EO sensor source technologies. A reasonable amount of space is devoted to diode lasers, because they now feature prominently both as stand-alone devices for low-cost, short-range sensors and as optical pumps for solid-state lasers used for long-range sensors.

Diode Lasers

There are two major types of diode lasers, interband and cascade. The former is by far the most widespread in use, but the recently developed cascade lasers have emerged as an important source of mid- and long-wave infrared emission.

Diode Lasers: Interband, Edge-Emitting

Interband diode lasers employ electronic transitions between energy states in the conduction band and the valence band of the semiconductor crystal. First and foremost, the semiconductor material used for the diode laser must have a direct optical bandgap—that is, the electronic transitions giving rise to laser operation must occur without the assistance of mechanical vibrations (phonons) in the semiconductor. This eliminates the most common semiconductor material, silicon, as well as the related material germanium.

Laser operation in direct-bandgap semiconductors occurs by optical transitions from the lowest-lying electronic states in the conduction band to the highest-lying states in the valence band, with the requirement that there be a higher density of states occupied in the conduction band than in the valence band. This nonequilibrium condition, or “population inversion,” occurs in diode lasers through the injection of sufficient electrical current into the lasing region.

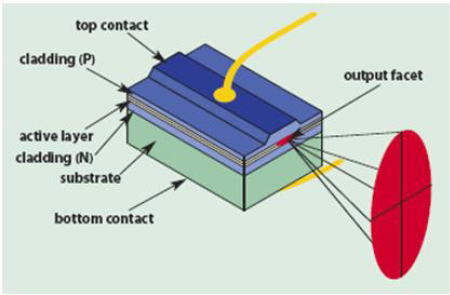

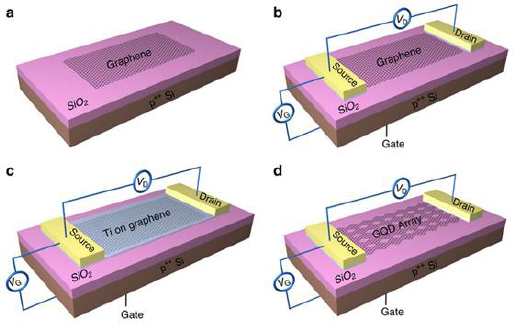

Fabrication of a diode laser (Figure 4-1 shows an edge-emitting device) requires that the semiconductor material be available in both n-type (electrons are the majority current carriers) and p-type (holes are the majority current carriers) form, which is achieved by adding certain impurities to the material. While there are many semiconductors with direct bandgaps, many of these, for a variety of reasons, are difficult to dope both n- and p-type to form diodes, notably the so-called II-VI binary semiconductors such as CdS, CdTe, ZnS, ZnO, and ZnSe. (The Roman numerals refer to the relative positions of the elements in the periodic table.) Infrared lasers have been operated based on IV-VI materials such as PbS and PbSe but require cryogenic cooling for efficient operation. To date, III-V binary, direct-bandgap semiconductors such as GaAs, GaSb, InAs, InSb and, most recently, GaN, as well as alloys of these crystals with other III-V elements, are by far the most widely used materials for diode lasers. Noncryogenic operation in the 350-2,000-nm region is possible with devices based on III-V materials.
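An interband diode laser emits photons with energy close to the bandgap, so the wavelength of a given material follows roughly from λ = hc/E_g. The sketch below applies this to the binary III-V compounds named above. The bandgap values are approximate room-temperature textbook figures supplied here as assumptions; real devices use alloys and quantum wells that shift the emission.

```python
HC_EV_NM = 1239.84  # Planck constant times speed of light, in eV*nm

# Approximate room-temperature bandgaps (eV) of the binary III-V compounds named above.
# Illustrative values only; alloys and quantum-well structures shift the actual emission.
BANDGAPS_EV = {
    "GaN": 3.4,
    "GaAs": 1.42,
    "GaSb": 0.73,
    "InAs": 0.35,
    "InSb": 0.17,
}


def bandgap_to_wavelength_nm(bandgap_ev: float) -> float:
    """Wavelength of a photon whose energy equals the bandgap (lambda = hc / Eg)."""
    return HC_EV_NM / bandgap_ev


if __name__ == "__main__":
    for material, eg in BANDGAPS_EV.items():
        print(f"{material:5s} Eg = {eg:4.2f} eV -> ~{bandgap_to_wavelength_nm(eg):6.0f} nm")
```

The InAs and InSb entries fall well beyond 2,000 nm, in the narrow-gap region where, as noted later for interband devices, thermal population of the conduction band makes room-temperature operation difficult.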

FIGURE 4-1 Reprinted with permission from The Photonics Handbook, online at http://www.PhotonicsHandbook.com. Copyright 2013. Laurin Publishing, Pittsfield, Mass.

Figure 4-1, though a very simplified diagram, shows the key features of an edge-emitting interband diode laser. Electrical current passes through a wire bond to a stripe top contact, which confines the current in two dimensions. In the device shown, the current passes (is “injected”) through p-type material into the “active layer,” fundamentally a junction between p- and n-type material, then through n-type material to an electrical contact to complete the circuit. Laser gain occurs in a thin, 3-D region comprising the active layer, with the third dimension set primarily by the width of the stripe contact. The injected current and the structure of the device act to form an optical waveguide that confines the laser light to the same region as the gain, both vertically (perpendicular to the plane of the junction) and horizontally (parallel to the junction plane). Most edge-emitting diode lasers operate with the laser cavity formed by the cleaved ends of the waveguide region. One end is coated with a deposited stack of dielectrics to form a highly reflecting mirror, while the other end is coated to provide only a small amount of reflectivity. Typical lengths between the two faces range from less than 1 mm to several millimeters. In some cases one diode end has an antireflection coating to enable use of an external mirror and/or tuning element to obtain more control of the diode wavelength.

In reality, the details of the junction are much more complex than the simple PN junction implied by Figure 4-1. Major developments in diode-laser performance, from the first demonstrations of operation in the early 1960s, have been the result of developing the fabrication technology to make multilayer semiconductor structures (heterostructures) that better confine the lasing region and reduce the current needed to get laser operation, as well as increase the efficiency in converting electrical power to laser power. As the thickness of the layers has been reduced with improved processes to tens of nanometers, quantum effects that fundamentally change the nature of the semiconductor-material energy levels have been utilized to further improve performance. In addition to structuring in the plane of the junction, additional material structuring in the horizontal direction to confine the current and laser power has led to better laser properties.

A critical and fundamental characteristic of a diode is the nature of the region where laser action occurs. Referring to Figure 4-1, in the vertical direction the region of laser emission is limited to a dimension of about 0.5 µm, set by the fundamental nature of the junction. This is comparable to or smaller than the wavelength of the emitted light, which ensures that the light emitted in the vertical direction (the “fast axis”) is diffraction limited, consisting of only a single transverse mode. In Figure 4-1 the beam dimension is larger in the vertical direction because the fast-axis light rapidly diverges from the small emitting region. Capturing all of the power from the diode laser requires fast optics, at least for the fast-axis light, and this is accomplished with specialized aspheric optics, often fastened directly to the diode-laser package.

The limiting feature of the small dimension is that even relatively low absolute power levels lead to very high power densities at the surface of the edge-emitting diode laser, on the order of 10 MW/cm², and can lead to catastrophic optical destruction (COD) of the diode laser beyond certain power levels. COD results from the inevitable defects at the surfaces of semiconductors, which absorb the laser light, heat up, and, with enough power, melt the material at the surface. Means to improve the COD level are often considered proprietary or, at a minimum, patentable, by the diode manufacturers. For some semiconductor materials, particularly compounds designed to operate at wavelengths of 1,500 nm and longer, the diode power levels are limited by simple heating effects, well below the levels set by COD.

No matter the cause of the limited power, one can increase the power output of an edge-emitting diode laser by increasing the width of the lasing region along the horizontal direction, which reduces the intensity at the surface for a given absolute power level and also the generated heat density. Unfortunately, beyond a dimension of several microns, the light emitted in the direction along the plane of the junction becomes multimode. State-of-the-art diode lasers are able to generate diffraction-limited continuous-wave (CW) powers on the order of 0.1 W/µm of horizontal width, so that diode lasers with strictly single-mode outputs are capable of only 0.1-0.2 W of output power. The low power is adequate for many applications, and for optical data storage devices (CDs, DVDs, and Blu-ray discs) the yearly volume of low-power diode lasers approaches 1 billion.

An upper limit to the power from a single emitting region is reached when laser action, rather than occurring in the cavity formed by the cleaved ends, starts in the perpendicular direction, along the width of the stripe. Typical junction widths for so-called broad-stripe lasers are in the 100-400 µm range. State-of-the-art diode lasers operating in the 900-nm wavelength region can generate 10-15 W of CW power with a 100-µm stripe width.
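A quick estimate shows how facet power densities reach the ~10 MW/cm² range cited above, and why widening the stripe helps. This sketch assumes uniform intensity over a rectangular emitting area 0.5 µm high; the 4-µm single-mode width is a hypothetical value within the “several microns” quoted, while the 10-W, 100-µm broad-stripe case uses the figures just given.

```python
def facet_intensity_mw_per_cm2(power_w: float, height_um: float, width_um: float) -> float:
    """Average optical intensity at the output facet, in MW/cm^2.

    Treats the emitting area as a height x width rectangle with uniform intensity.
    """
    um_to_cm = 1e-4
    area_cm2 = (height_um * um_to_cm) * (width_um * um_to_cm)
    return power_w / area_cm2 / 1e6


if __name__ == "__main__":
    # Hypothetical single-mode emitter: 0.2 W from a 0.5 um x 4 um aperture.
    print(f"single-mode:  {facet_intensity_mw_per_cm2(0.2, 0.5, 4.0):.0f} MW/cm^2")
    # Broad-stripe emitter: 10 W from a 0.5 um x 100 um aperture.
    print(f"broad-stripe: {facet_intensity_mw_per_cm2(10.0, 0.5, 100.0):.0f} MW/cm^2")
```

In this simple picture, widening the stripe 25-fold while raising the power 50-fold only doubles the facet intensity (from about 10 to about 20 MW/cm²), which is why broad stripes can reach watt-level powers before hitting the COD limit.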

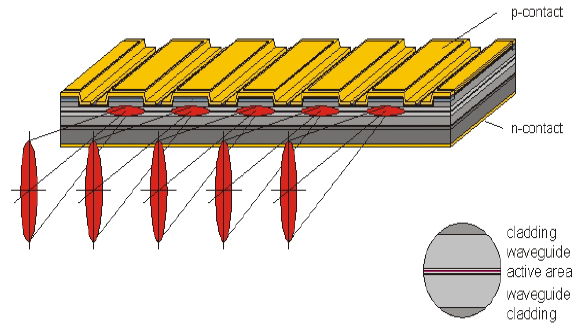

In order to produce diode lasers with higher average power, the semiconductor laser industry, starting in the 1980s, took advantage of improvements in material quality and lithographic techniques to manufacture multiple diode lasers on one piece (“chip”) of semiconductor material.

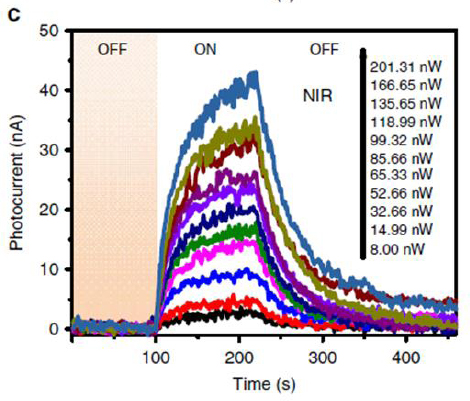

Figure 4-2 is a schematic of a multi-emitter diode “bar”; the most common overall horizontal dimension is 1 cm. Bar fabrication requires a means to suppress laser action along the length of the bar, and an additional manufacturing challenge is mounting the bar on a heat sink to remove the heat efficiently while also keeping the entire bar in one plane, so all of the emitters line up exactly. If they do not, the net divergence of all of the beams after collection by external optics increases. Key bar parameters are the number of emitters per bar and the stripe width of each emitter, which together set the “fill factor” for the bar (the fraction of the bar width that emits light), with typical stripe widths in the 100-200 µm range. Unlike single emitters, the power output of bars running in the CW mode is thermally limited, although recent improvements in the thermal engineering of bar mounts and cooling may lead to COD becoming a limit as well. The thermal limits to power can be overcome if the diodes are run in pulsed mode, sometimes referred to as quasi-CW (QCW). Typically, this mode is used for pumping solid-state lasers, with pulsewidths in the 0.1-1-ms range and on-time (duty cycle) percentages in the 1-10 percent range.

Present state-of-the-art bars, designed for CW operation, have fill factors of 30-50 percent, and for the most efficient semiconductor materials, operating in the 900-nm region (“9xx devices”), commercially available power levels are as high as 160 W. Higher powers are possible with reduced device lifetimes, which drop rapidly as the temperature of the semiconductor junction increases. QCW bars run with fill factors of 75-90 percent and peak power levels of 250-300 W for 9xx devices.
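The bar parameters above combine through simple arithmetic: fill factor is total stripe width over bar width, and a QCW bar's average power and pulse energy follow from its peak power, duty cycle, and pulsewidth. The sketch below assumes a 1-cm bar; the emitter counts are hypothetical, and the 300-W, 5-percent, 0.5-ms operating point is chosen from within the ranges quoted in the text.

```python
BAR_WIDTH_UM = 10_000.0  # 1-cm bar, the most common overall width


def fill_factor(n_emitters: int, stripe_um: float, bar_width_um: float = BAR_WIDTH_UM) -> float:
    """Fraction of the bar width occupied by emitting stripes."""
    return n_emitters * stripe_um / bar_width_um


def qcw_average_power_w(peak_w: float, duty_cycle: float) -> float:
    """Average power of a pulsed (QCW) bar: peak power times the on-time fraction."""
    return peak_w * duty_cycle


def qcw_pulse_energy_j(peak_w: float, pulse_s: float) -> float:
    """Energy in one QCW pulse: peak power times pulse duration."""
    return peak_w * pulse_s


if __name__ == "__main__":
    # Hypothetical emitter layouts on a 1-cm bar.
    print(f"CW bar, 40 x 100-um stripes:  fill factor {fill_factor(40, 100.0):.0%}")
    print(f"QCW bar, 80 x 100-um stripes: fill factor {fill_factor(80, 100.0):.0%}")
    # QCW operating point picked from the quoted ranges.
    peak, duty, pulse = 300.0, 0.05, 0.5e-3
    print(f"average power: {qcw_average_power_w(peak, duty):.0f} W")
    print(f"pulse energy:  {qcw_pulse_energy_j(peak, pulse) * 1e3:.0f} mJ")
```

The low duty cycle is what lets a QCW bar run at roughly twice the peak power of a CW bar: in this example the average power, and hence the heat load, is only about 15 W.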

Appendix C provides a number of tables that review the state of the art today in lasers. Table C-1 summarizes the key features of edge-emitting diode lasers, in both single-emitter and bar format. Given the significance of such devices for a number of commercial and military applications, there is continuing development of devices over the entire spectral range, and the performance (power, efficiency) and, to a lesser extent, the wavelength coverage can be expected to improve in the next 10-15 years.

FIGURE 4-2 Diagram of “bar” lasers. SOURCE: © Jenoptik Laser GmbH.

The specific configurations of edge-emitting diode lasers as optical pumps for solid-state lasers are discussed in a later section.

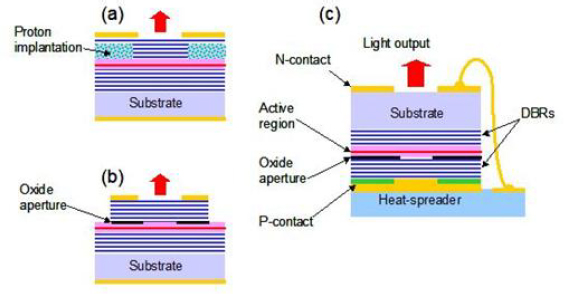

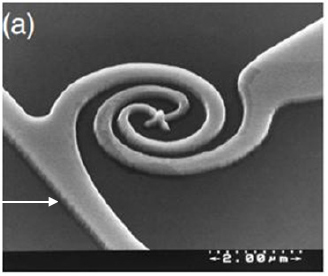

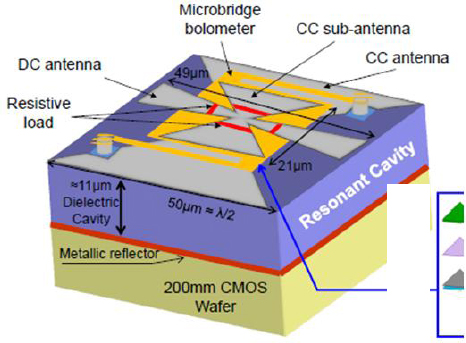

Diode Lasers: Interband, Vertical Cavity

Another class of interband diode lasers, developed more recently than the edge-emitting devices just discussed, is based on improved semiconductor-processing technology. Several designs of these devices, vertical-cavity surface-emitting diode lasers (VCSELs), appear in Figure 4-3. The key features are that the device is constructed by building up a series of semiconductor layers and that the direction of optical gain is perpendicular to the plane of the junction. Because the gain path through the junction is therefore very short, the laser cavity requires much higher-reflectivity mirrors to permit oscillation, with the output-coupling mirror having less than 1 percent transmission. The bottom and top mirrors (distributed Bragg reflectors, DBRs in Figure 4-3) consist of alternating layers of high- and low-index semiconductor materials, and the active region, employing quantum-well-structure semiconductor heterojunctions, is sandwiched between the DBRs. Electrical contacts on the top and bottom allow electrical pumping with current passing through the structure, and a circular hole is etched into one of the contacts to allow the output beam to emerge. The entire length of the VCSEL is on the order of 10 µm for devices operating in the 900-nm region. To generate a diffraction-limited output in that region, the emitting-region diameter has to be kept below 4 µm, with power levels in the 5-mW range, but higher multimode powers are possible as a result of recent work, with Princeton Optronics claiming operation of a 5-W, 976-nm, CW laser with a 300-µm aperture.2
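Why a VCSEL needs many DBR layer pairs can be seen from a standard lossless thin-film result for the peak reflectivity of a quarter-wave stack. This is a minimal sketch assuming normal incidence, no absorption, the same refractive index on both sides of the stack, and approximate indices of 3.5 (GaAs) and 3.0 (AlAs) near 900 nm; these values are illustrative assumptions, not figures from the text.

```python
def dbr_peak_reflectivity(n_pairs: int, n_high: float, n_low: float) -> float:
    """Peak normal-incidence reflectivity of a lossless quarter-wave Bragg stack.

    Assumes the same refractive index on both sides of the stack, in which case
    R = [(1 - x) / (1 + x)]**2 with x = (n_low / n_high)**(2 * n_pairs).
    """
    x = (n_low / n_high) ** (2 * n_pairs)
    return ((1.0 - x) / (1.0 + x)) ** 2


if __name__ == "__main__":
    # Approximate indices near 900 nm for GaAs (high) and AlAs (low); illustrative values.
    n_gaas, n_alas = 3.5, 3.0
    for pairs in (10, 20, 30):
        r = dbr_peak_reflectivity(pairs, n_gaas, n_alas)
        print(f"{pairs:2d} pairs: R = {r:.4f}, transmission = {100 * (1 - r):.2f} %")
```

Under these assumptions, about 20 or more layer pairs are needed to bring the mirror transmission below the 1 percent level quoted above, which is one reason the VCSEL structure is dominated by its mirrors.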

____________________

2 See http://www.princetonoptronics.com/technology/technology.php#VCSEL.

FIGURE 4-3 Several different VCSEL structures. SOURCE: Courtesy of Princeton Optronics, Inc.

Once VCSELs became commercially available, they found widespread application as 850-nm-region transmitters for short-haul, high-bandwidth fiberoptic data links. The circular output beam of VCSELs and its comparatively small natural divergence make coupling to fibers an easy task. Because of the extremely short optical cavity, the devices operate on a single longitudinal mode (a single frequency) and can be turned on and off in the 10-100 ps range, allowing direct current modulation at data rates of tens of gigabits per second. Since the fabrication process is similar to that of semiconductor integrated circuits (ICs), it is possible to construct many devices at one time, providing a major cost advantage over edge-emitting diode lasers. Finisar3 claims to have shipped over 150 million devices, which also find application as the source in an optical mouse. Future large-scale applications are likely in optical interconnects for high-speed electronics systems and in fiber-to-the-home.
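The link between a short cavity and single-frequency operation follows from the longitudinal-mode spacing of a Fabry-Perot cavity, Δλ = λ²/(2nL). The sketch compares a 1-mm edge emitter with a VCSEL at 850 nm; the group index of 3.5 and the 1-µm effective VCSEL cavity length are hypothetical round numbers chosen for illustration.

```python
def mode_spacing_nm(wavelength_nm: float, cavity_length_um: float, group_index: float) -> float:
    """Longitudinal-mode spacing of a Fabry-Perot cavity, d_lambda = lambda**2 / (2 n L)."""
    wavelength_um = wavelength_nm * 1e-3
    spacing_um = wavelength_um ** 2 / (2.0 * group_index * cavity_length_um)
    return spacing_um * 1e3


if __name__ == "__main__":
    lam, n_g = 850.0, 3.5  # wavelength (nm) and an assumed group index
    edge = mode_spacing_nm(lam, 1000.0, n_g)  # 1-mm edge-emitter cavity
    vcsel = mode_spacing_nm(lam, 1.0, n_g)    # hypothetical ~1-um effective VCSEL cavity
    print(f"edge emitter: {edge:.2f} nm between modes")
    print(f"VCSEL:        {vcsel:.0f} nm between modes")
```

Since the semiconductor gain spectrum spans only tens of nanometers, a mode spacing of roughly 100 nm leaves room for a single cavity resonance, whereas a millimeter-long edge emitter has hundreds of candidate modes within the same gain band.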

More recently several groups have developed arrays of VCSEL devices to produce much higher total powers, although the beam quality is not high since the devices are incoherently combined. The main challenge for high-power arrays is heat removal, where the desire to have a high areal density of devices to improve brightness conflicts with the need to remove heat. Princeton Optronics has demonstrated 230 W of 976-nm CW power from a 5 × 5-mm array and 925 W of QCW power from the same area.4

VCSELs had been limited in wavelength coverage to 650-1,300 nm primarily because the GaAs-based technology used to manufacture the electrically pumped devices cannot extend beyond that region. Recently, however, devices operating in the 1,500-nm region have been reported, based on the InP semiconductor, and are expected to find wide use in higher-speed fiber applications, where the low loss of silica fibers near 1,550 nm allows long-distance links at 10 Gb/s and higher. Development of the longer-wavelength VCSELs is intense at this writing, given their large potential in the telecom industry.

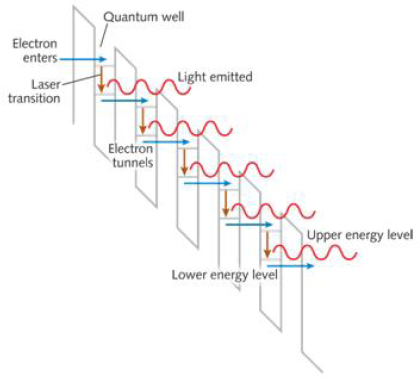

Quantum Cascade Lasers

Unlike the interband diode lasers just discussed, quantum cascade lasers (QCLs) rely on a totally artificial gain medium made possible by quantum-well structures, a process sometimes referred to as band-structure engineering. The gain from a QCL relies on transitions entirely from electronic states within the conduction band of the material, as modified by the quantum well structure. Typical devices are fabricated on InP and employ GaInAs/AlInAs quantum wells, which are formed from nanometer-thick

____________________

3 See http://www.finisar.com/sites/default/files/pdf/Finisar_infographic_timeline_web.pdf.

4 J.-F. Seurin, C.L. Ghosh, V. Khalfin, A. Miglo, G. Xu, J.D. Wynn, P. Pradhan, and L.A. D’Asaro, 2008, “High-power vertical-cavity surface-emitting arrays,” in High-Power Diode Laser Technology and Applications VI, M.S. Zediker, ed., Proc. SPIE 6876: 68760D.

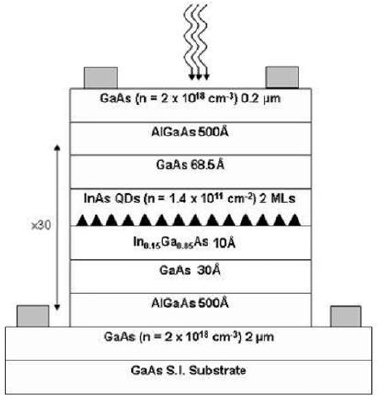

layers, typically 10-15 in number. Devices based on other material combinations have been demonstrated but show inferior performance. Since the energy separation of the states is a function of the structure, it can be adjusted to generate a wide range of wavelengths, covering approximately 3.5-30 and 60-200 µm, with the latter falling in the terahertz region. The gap in long-wavelength coverage is due to the strong phonon absorption region of the semiconductor, which overcomes the gain in the quantum wells, while the short-wavelength limit is set by several factors, including fabrication difficulties with the required quantum-well alloys and losses due to scattering among different energy states in the conduction band. Different materials systems, now under development, allow operation at shorter wavelengths.
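To connect the wavelength ranges above with the intersubband energy separations and with the “terahertz” label, the sketch below converts the quoted band edges to photon energy and frequency. It uses only the standard relations E = hc/λ and ν = c/λ; the function names are illustrative.

```python
HC_MEV_UM = 1239.84    # Planck constant times speed of light, in meV*um
C_THZ_UM = 299.792458  # speed of light, in THz*um


def photon_energy_mev(wavelength_um: float) -> float:
    """Photon energy (meV), i.e., the intersubband separation needed for this wavelength."""
    return HC_MEV_UM / wavelength_um


def frequency_thz(wavelength_um: float) -> float:
    """Optical frequency (THz) for a given wavelength."""
    return C_THZ_UM / wavelength_um


if __name__ == "__main__":
    # Band edges of the QCL wavelength coverage quoted in the text.
    for lam in (3.5, 30.0, 60.0, 200.0):
        print(f"{lam:6.1f} um -> {photon_energy_mev(lam):6.1f} meV, {frequency_thz(lam):6.2f} THz")
```

The 60-200 µm band corresponds to roughly 1.5-5 THz, and the 30-60 µm gap corresponds to photon energies of roughly 21-41 meV, the range the text attributes to strong phonon absorption in the semiconductor.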

Laser operation comes through injection of a current of electrons through the quantum-well structure, driven by a voltage applied across the structure. Both the efficiency and gain would be low if the device employed just one quantum well, since the energy of the emitted photon is small compared to the energy required to put an electron in the conduction band. This drawback is overcome by fabricating a structure consisting of multiple (typically 25-75) quantum wells in series, separated by another heterojunction structure, the “injector,” through which the electron tunnels from one quantum well to the next. Figure 4-4 is a simplified, partial schematic of a QCL structure with distance as the horizontal axis and energy as the vertical, showing the energy levels for the electron with a voltage applied across the structure length, which creates the gradient in energy. The QCL name comes from the “cascade” process of one electron interacting with multiple quantum wells as it crosses the structure. A single electron can thus create multiple photons, up to the number of quantum wells in the device, greatly improving the efficiency. As with edge-emitting interband lasers, the laser power is emitted perpendicular to the current flow through the layers, so that a given quantum well interacts with only a thin slice of the laser mode.
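The cascade bookkeeping can be made concrete with an idealized estimate: each electron can emit at most one photon per stage, and each stage must drop at least one photon energy of voltage. This minimal sketch rests on exactly those two assumptions; the 4.5-µm design wavelength is hypothetical, and the stage counts span the 25-75 range quoted above.

```python
HC_EV_UM = 1.23984  # Planck constant times speed of light, in eV*um


def min_bias_voltage(wavelength_um: float, n_stages: int) -> float:
    """Idealized lower bound on QCL bias: each stage must drop at least one photon energy.

    Real devices need extra voltage per stage for the injector and relaxation steps,
    so this is a floor, not an operating point.
    """
    photon_ev = HC_EV_UM / wavelength_um
    return n_stages * photon_ev  # volts, since energy per electron in eV equals volts


if __name__ == "__main__":
    lam = 4.5  # hypothetical mid-IR design wavelength, um
    for stages in (1, 25, 50, 75):
        # Ideal cascade: at most one photon per stage for each electron that crosses.
        print(f"{stages:2d} stages: <= {stages} photons/electron, "
              f"bias >= {min_bias_voltage(lam, stages):.1f} V")
```

Both the ideal photon yield per electron and the minimum bias scale with the number of stages, so stacking stages lets the same drive current produce proportionally more light.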

One of the remarkable features of QCLs is that they are able to operate in the mid- and long-wave IR regions without the need for cryogenic cooling, even in the CW mode. This is not possible with interband diode lasers at the same wavelengths, since the high thermal population of the conduction band turns such narrow-bandgap diodes into, essentially, short circuits at ambient temperatures.

FIGURE 4-4 Simplified diagram of a quantum cascade laser. SOURCE: Courtesy of Laser Focus World.

Interband Cascade Lasers

A related semiconductor laser that has features of both interband and cascade diode lasers is the aptly named interband cascade laser (ICL). The operation of the device relies on multiple quantum-well structures, but now both electrons and holes are involved, and the quantum-well transitions are between an upper state in the conduction band and a lower state in the valence band. The material system commonly used involves either GaSb or InAs substrates, with alloys of InAs, GaSb, and AlSb employed for the quantum-well and injector structures. There are two advantages of the ICL over the QCL: (1) shorter-wavelength operation (at room temperature from 2.9 to 5.7 µm, with cryogenic operation from 2.7 to 10.4 µm), and (2) the ability to lase with much lower electrical powers (by a factor of 30 or so) than QCLs, since the transition lifetimes are much longer. As with conventional interband edge-emitting diode lasers, one would expect difficulty with long-wavelength operation at room temperature, and QCLs have an advantage there.

The current state of the art is summarized in Appendix C, Table C-2, for both QCLs and ICLs, but, as with conventional interband lasers, these numbers will change in the next 10-15 years.

Solid-State Lasers

The laser transitions for solid-state lasers occur (with a few exceptions) between energy levels of ionized atoms from the 3d transition-metal group (Sc to Zn) or from the rare earth (lanthanide) series of elements (La to Yb). The atoms are “hosted” in solids (crystals, glasses, or ceramics) and are added as dopants to the mix of elements used to make the solids. The ions giving rise to laser operation are referred to as the active ions.

The first laser to operate was based on an artificial ruby crystal, which, in fact, consists of 3d transition-metal Cr3+ ions hosted in Al2O3, a material known as corundum or sapphire. (“Sapphire” to the technical community is a transparent crystal, not to be confused with the naturally occurring, colored gemstone of the same name.) For transition metals, laser operation can be found from doubly to quadruply ionized atoms. The 3d electronic states of transition metals have a reasonably large spatial extent and thus interact strongly with the host crystal. Accordingly, the laser properties of transition-metal-doped solid-state lasers depend strongly on the host crystal.

In contrast, for the rare earths the laser transitions are primarily among different energy levels of 4f electronic states, and these, because they have a much smaller spatial extent than the 3d states, are much less sensitive to the host crystal. With a few exceptions, a given rare earth in the same ionization state (almost exclusively triply ionized) will provide laser operation at a wavelength that is nearly independent of the host crystal.

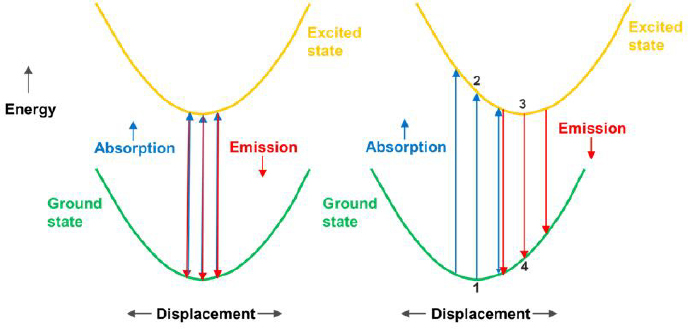

The interaction between the host crystal and the active ion also has a profound effect on the span of frequencies/wavelengths where there is optical gain for the laser, referred to as the “linewidth.” In the case of the rare earths, and for some levels of the transition metals, when the ion changes electronic state, the surrounding atoms (the lattice) stay in the same position. Figure 4-5, left, illustrates the case when there is no lattice change, with a drawing referred to as a configuration-coordinate diagram. The horizontal axis is some measure of the lattice position (say, the separation of all the surrounding atoms from the active ion), while the vertical axis represents the combined energy of the lattice and the active ion. In the so-called harmonic approximation, the system energy increases quadratically as the coordinate moves to either side of the lowest-energy, or equilibrium, position. In the classical physics view, this movement occurs as the lattice vibrates at some finite temperature. In a more quantum-mechanical treatment, even at absolute zero, the uncertainty principle means that there is a distribution of displacements about equilibrium. The quantum-mechanical treatment also shows that the vibrational energy is quantized, with the vibrational quanta called “phonons.” From Figure 4-5, left, assuming that there is no displacement in the equilibrium position between the ground state and the excited state, and that the quadratic change in energy with displacement is the same for both, the energy difference for light being absorbed (upward transition) and that being emitted (downward transition) is the same and independent of the displacement. One key and generally valid assumption is that the electronic transition occurs much faster than the lattice vibrates (Franck-Condon approximation), so the lattice remains “frozen” during the transition. In the diagram, this is why upward and downward transitions are shown as vertical arrows.

In this simple picture, the spectral width of both absorption and emission would be zero, and both would occur at exactly the same energy. In reality, other effects, especially that of phonons in disrupting the phase of the light making the transitions, lead to a finite linewidth that is a tiny fraction of the transition energy, on the order of 10⁻⁴ to 10⁻⁵ of the transition frequency/wavelength. This is several orders of magnitude smaller than for semiconductor diode lasers.
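To put these fractional linewidths in more familiar units, the sketch converts a fractional width into an absolute width in nanometers and gigahertz. The 1,064-nm center (within the Nd3+ band discussed later in this section) and the 800-nm vibronic comparison case are illustrative assumptions; the conversion uses Δλ/λ ≈ Δν/ν, which is exact only for narrow lines.

```python
from typing import Tuple

C_NM_THZ = 299_792.458  # speed of light, in nm*THz


def linewidth_from_fraction(center_nm: float, fraction: float) -> Tuple[float, float]:
    """Convert a fractional linewidth into (width in nm, width in GHz).

    Uses d_lambda / lambda = d_nu / nu, a good approximation for narrow lines
    and only a rough one for very broad bands.
    """
    width_nm = center_nm * fraction
    center_thz = C_NM_THZ / center_nm
    width_ghz = center_thz * fraction * 1e3
    return width_nm, width_ghz


if __name__ == "__main__":
    # Hypothetical narrow rare-earth line near 1,064 nm at the two fractions quoted above.
    for frac in (1e-4, 1e-5):
        w_nm, w_ghz = linewidth_from_fraction(1064.0, frac)
        print(f"fraction {frac:.0e}: {w_nm:.4f} nm, {w_ghz:.1f} GHz")
    # For contrast, a hypothetical 10-percent vibronic band centered at 800 nm.
    w_nm, w_ghz = linewidth_from_fraction(800.0, 0.10)
    print(f"vibronic 10%: ~{w_nm:.0f} nm, ~{w_ghz / 1e3:.0f} THz")
```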

Figure 4-5, right, shows a configuration-coordinate diagram for the case when the electronic transition causes the lattice to shift its equilibrium position. One important dynamic is that when the active ion is put into the excited state, the lattice reaches its new equilibrium position on a timescale of picoseconds. As noted, the electronic transition itself is much faster than this, but for the ions of interest, the probability of this transition actually occurring is very low on a picosecond timescale, so the lattice gets to its new position (“relaxes”) before any light is emitted by a transition back to the ground state.

Two important things result from this. First, the energies, and hence the frequencies, of light absorbed in electronic transitions from the ground state to the excited state are generally higher than for light emitted by transitions from the excited to the ground state. Second, it is evident from Figure 4-5 and the lengths of the arrows that there can be a range of energies for either absorption or emission, due to the displacement of the two parabolas. The linewidths for absorption and emission can be very broad, on the order of 10-20 percent of the central energy.
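Both consequences follow directly from the displaced-parabola picture of Figure 4-5. The sketch below works in dimensionless units and assumes, as in the figure, equal harmonic curvatures for the ground and excited states; the curvature, electronic energy, displacement, and ±0.2 coordinate spread are hypothetical values chosen for illustration.

```python
from typing import Tuple


def transition_energy(q: float, k: float, e0: float, dq: float) -> float:
    """Vertical (Franck-Condon) transition energy at lattice coordinate q.

    Ground-state parabola:  E_g(q) = k q^2 / 2
    Excited-state parabola: E_e(q) = e0 + k (q - dq)^2 / 2
    The lattice is frozen during the transition, so the photon energy is E_e(q) - E_g(q).
    """
    return (e0 + 0.5 * k * (q - dq) ** 2) - 0.5 * k * q ** 2


def absorption_and_emission(k: float, e0: float, dq: float) -> Tuple[float, float]:
    """Absorption starts at the ground-state minimum (q = 0); emission at the excited minimum (q = dq)."""
    return transition_energy(0.0, k, e0, dq), transition_energy(dq, k, e0, dq)


if __name__ == "__main__":
    k, e0 = 1.0, 1.8  # hypothetical curvature (eV per unit q^2) and electronic energy (eV)
    for dq in (0.0, 0.5):
        e_abs, e_em = absorption_and_emission(k, e0, dq)
        # The transition energy is linear in q, so a spread of +/-0.2 in q around
        # either minimum broadens the line by the same amount.
        spread = abs(transition_energy(0.2, k, e0, dq) - transition_energy(-0.2, k, e0, dq))
        print(f"dq={dq}: absorb {e_abs:.3f} eV, emit {e_em:.3f} eV, "
              f"Stokes shift {e_abs - e_em:.3f} eV, spread over q=+/-0.2: {spread:.3f} eV")
```

With no displacement, absorption and emission coincide and do not depend on q; with a displacement, absorption moves up and emission moves down by k·dq²/2 each, and any spread in lattice coordinate turns into a spread of photon energies.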

As noted in the introductory portion of this section, unlike semiconductor diode lasers, which convert electrical power directly to laser power, solid-state lasers need a source of light to operate, which typically is driven by electrical power. The optical pumping process requires that the solid medium absorb light, which then must result in a population inversion between the upper and lower laser levels.

FIGURE 4-5 Left: Configuration coordinate diagram, no change in crystal. Right: Same but with a shift in crystal atomic positions.

A simple electronic system with just two energy levels and no lattice displacement (Figure 4-5 left) cannot be used to make a laser, since the material becomes transparent once the populations of the two levels equalize, before a population inversion can be reached. At least one higher level is needed to absorb the light, and that level must be able to transfer its excitation to the upper laser level. If the laser transition then occurs to the lowest energy level of the active ion, laser action is possible only when a substantial fraction of the ions are pumped into the upper laser level. This type of laser operation is referred to as “3-level.” If the laser transition is not to the lowest energy level but to a higher level, and that level is high enough in energy that in thermal equilibrium its population is small enough not to affect laser operation, the laser operation is “4-level.” For systems where the lower level is above the ground state but has a non-negligible population, the operation is sometimes designated “3½-level.”
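The practical difference between these schemes shows up in the net inversion achieved for a given fraction of ions in the upper laser level. This sketch is a deliberately simplified model: it ignores level degeneracies, treats the unexcited ions as a two-level Boltzmann split between the ground state and the lower laser level, and uses hypothetical lower-level energies of 600 and 2,000 cm⁻¹ at 300 K.

```python
import math
from typing import Optional

KB_CM_PER_K = 0.695035  # Boltzmann constant expressed in cm^-1 per kelvin


def lower_level_share(gap_cm: float, temp_k: float) -> float:
    """Thermal share of unexcited ions in a lower laser level gap_cm above the ground state.

    Simplified two-level Boltzmann split (ground + lower level), ignoring degeneracies.
    """
    ratio = math.exp(-gap_cm / (KB_CM_PER_K * temp_k))
    return ratio / (1.0 + ratio)


def net_inversion(upper_fraction: float, lower_gap_cm: Optional[float] = None,
                  temp_k: float = 300.0) -> float:
    """(N_upper - N_lower) / N_total for a given fraction of ions in the upper laser level.

    lower_gap_cm=None models a 3-level scheme, in which the lower laser level is the ground state.
    """
    unexcited = 1.0 - upper_fraction
    if lower_gap_cm is None:
        lower = unexcited
    else:
        lower = unexcited * lower_level_share(lower_gap_cm, temp_k)
    return upper_fraction - lower


if __name__ == "__main__":
    # Hypothetical lower-level energies: close to kT (~208 cm^-1 at 300 K) vs well above it.
    schemes = {"3-level": None, "3.5-level (600 cm^-1)": 600.0, "4-level (2,000 cm^-1)": 2000.0}
    for f in (0.01, 0.1, 0.6):
        row = ", ".join(f"{name}: {net_inversion(f, gap):+.3f}" for name, gap in schemes.items())
        print(f"upper fraction {f:.2f} -> {row}")
```

Under these simplifications the 3-level case shows no net inversion until more than half the ions are excited, the hypothetical 600-cm⁻¹ case needs a few percent excitation to overcome its thermal lower-level population, and the 2,000-cm⁻¹ case is inverted almost as soon as any ions are excited.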

In Figure 4-5 right, it is evident that, even with only two electronic levels, it is possible to have 4-level operation. As marked in the diagram, pump light drives a transition from the ground state at its equilibrium position (1) to the first excited state at a nonequilibrium position (2), which then relaxes to its equilibrium position (3) and makes a laser transition to the ground state at a nonequilibrium position (4). This type of laser operation is often called phonon-assisted or vibronic.

The ruby laser is an example of a 3-level laser, with a narrow-line (nonvibronic) laser transition (the so-called “R-line”) terminating on the ground state but with the pumping absorption lines caused by broadband vibronic transitions. These, with very high probability, deliver their excitation to the upper laser level by a so-called nonradiative process that makes up the difference in energy between the pump levels and the upper laser level by the generation of multiple phonons, often called “multiphonon relaxation.” The measured absorption coefficient for ruby shows three broad peaks at 250, 400, and 550 nm due to vibronic transitions, all of which are effective in exciting the upper level of the R-line transition at 694.3 nm.

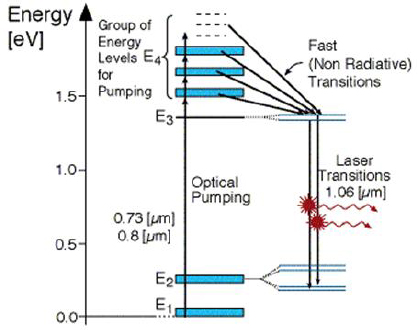

Shortly after the ruby laser was demonstrated, laser researchers were able to operate solid-state lasers based on rare earth elements. The challenge for rare earths was in finding the right combination of energy levels among all the possible ions from that series. The most effective dopant was quickly established as neodymium (Nd3+); a partial, simplified energy-level diagram for the ion appears in Figure 4-6. The most common laser transitions for the ion occur in the 1,050-1,080-nm region, from level E3 to level E2. Optical pumping is possible from the ground state (E1) to a multiplicity of levels (E4), all of which efficiently excite level E3 by multiphonon relaxation, with energy cascading from one level to the next and finally ending up in the upper laser level. A key reason that the Nd3+ ion works well as a laser is that the energy gap from E3 to the next-lower level is so large that multiphonon relaxation across it is very unlikely, and energy that reaches E3 can be extracted effectively by laser action. Another key to the success of Nd3+ lasers is that level E2 lies sufficiently far above the lowest energy level that it has only a negligible population at normal temperatures. At the same time, ions in that level rapidly relax to the ground state, so the lower-level population does not build up to the point that laser action stops. Thus, the Nd3+ ion provides true 4-level operation.

The right-hand side of Figure 4-6 indicates that the simplified energy levels shown as thick bars are in fact composed of multiple levels, a result of the splitting of the energy states of the rare earth ions by interactions with the surrounding atoms of the host crystal. Each such cluster of levels, indicated by a thick bar, is often referred to as a "manifold."

Given all these conditions for effective laser operation, it may not be surprising that, among the 13 triply ionized rare earth ions that could support laser operation, only Nd3+ has all of the characteristics just described. The other common rare earth laser ions, which include Er3+, Tm3+, Ho3+, and Yb3+, all have one or two unfavorable characteristics that must be overcome for effective laser operation. As discussed below, a combination of laser-based optical pumping and the use of fiber formats has opened up a wide variety of uses for these ions.

FIGURE 4-6 Simplified energy-level diagram for an Nd3+ ion.

SOURCE: Courtesy of Rani Arieli, Weizmann Institute of Science.

An alternative Cr3+-doped crystal, BeAl2O4, known as alexandrite after the gem of the same name, emerged from work in the late 1970s. It presented a case where the level giving rise to the vibronic transition was, as in ruby, higher in energy than the R-line level, but close enough to be partially populated. Vibronic laser action is possible at room temperature around 760 nm, but the optical gain is low and the tuning range is reduced by excited-state absorption (ESA). Running the crystal well above room temperature improves the laser characteristics and provides tuning from about 720 to 860 nm. The low gain has limited applications in areas requiring generation of high-energy pulses.

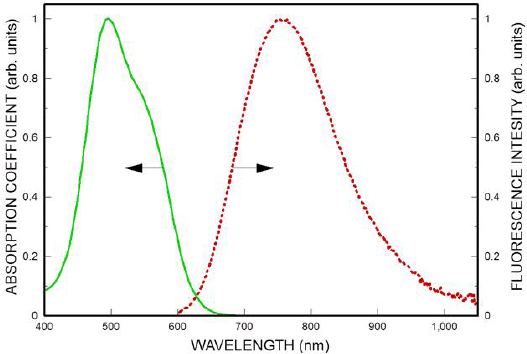

The demonstration in 1982 of the Ti3+-doped sapphire (Ti:sapphire) laser provided a system with a well-known host crystal and a new laser ion. The single d electron of the Ti3+ ion provides a system similar to that diagrammed in Figure 4-5 right, with somewhat more complexity due to the details of the vibronic levels. ESA is not a factor, since there are no higher-lying 3d electronic levels and, in sapphire, other possible transitions are too high in energy. Absorption and emission intensities as a function of wavelength appear in Figure 4-7, along with the spectral distribution of the optical gain, which is red-shifted relative to the emission spectrum because of the wavelength dependence of the Einstein relation that connects the measured emission spectrum to the gain spectrum. The half-width of the gain spectrum is about 100 THz in frequency space, the widest of any known laser medium, and the system has been tuned from 660 to 1,100 nm.
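One common form of that relation, the Füchtbauer-Ladenburg equation, makes the emission cross section proportional to λ⁵ I(λ), where I(λ) is the measured emission power per unit wavelength. The sketch below applies that weighting to a hypothetical Gaussian emission band (center 780 nm, width 80 nm, chosen only for illustration and not the measured Ti:sapphire spectrum) to show why the gain peak moves to longer wavelengths.

```python
import math


def emission_spectrum(wavelength_nm: float, center_nm: float = 780.0,
                      width_nm: float = 80.0) -> float:
    """Hypothetical Gaussian emission band, in power per unit wavelength."""
    return math.exp(-0.5 * ((wavelength_nm - center_nm) / width_nm) ** 2)


def gain_shape(wavelength_nm: float) -> float:
    """Relative emission cross section from the Fuchtbauer-Ladenburg relation,
    sigma_e(lambda) proportional to lambda**5 * I(lambda); constants omitted."""
    return wavelength_nm ** 5 * emission_spectrum(wavelength_nm)


wavelengths = [600.0 + 0.5 * i for i in range(1001)]  # 600-1,100 nm in 0.5-nm steps
emission_peak = max(wavelengths, key=emission_spectrum)
gain_peak = max(wavelengths, key=gain_shape)
print(f"emission peak {emission_peak:.1f} nm, gain peak {gain_peak:.1f} nm")
```

For these assumed parameters the gain peak falls near 819 nm, roughly 40 nm to the red of the emission peak; the actual shift for Ti:sapphire depends on its real spectrum.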

A unique class of solid-state media has recently emerged, based on divalent transition metals doped in semiconductors, the most highly developed of which is Cr2+-doped ZnSe. As with Ti:sapphire, the vibronic transitions lead to very broad linewidths, but the transitions are centered in the mid-IR region, around 2,500 nm for Cr:ZnSe, which has been tuned from about 2,000 to 3,500 nm.
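Tuning ranges centered at very different wavelengths are easier to compare in frequency units. The short sketch below converts the Ti:sapphire and Cr:ZnSe ranges quoted above using ν = c/λ and reports both the absolute span and the span relative to the midpoint frequency.

```python
C_M_PER_S = 299_792_458.0  # speed of light in vacuum


def frequency_thz(wavelength_nm: float) -> float:
    """Optical frequency (THz) of a vacuum wavelength given in nm."""
    return C_M_PER_S / (wavelength_nm * 1e-9) / 1e12


def tuning_span(short_nm: float, long_nm: float) -> tuple[float, float]:
    """Return (span in THz, span divided by the midpoint frequency)."""
    high, low = frequency_thz(short_nm), frequency_thz(long_nm)
    span = high - low
    return span, span / (0.5 * (high + low))


for name, (short_nm, long_nm) in {"Ti:sapphire": (660.0, 1100.0),
                                  "Cr:ZnSe": (2000.0, 3500.0)}.items():
    span, fractional = tuning_span(short_nm, long_nm)
    print(f"{name:12s} {span:6.1f} THz  ({fractional:.2f} of center frequency)")
```

Ti:sapphire covers about 182 THz and Cr:ZnSe about 64 THz, but relative to their center frequencies both spans are roughly one-half.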

Further discussion of solid-state lasers below considers the two main formats for these systems, bulk and fiber.

FIGURE 4-7 Absorption and emission spectra for Ti:sapphire. SOURCE: Courtesy of Peter Moulton, Q-Peak, Inc., http://www.qpeak.com/.

Bulk Format

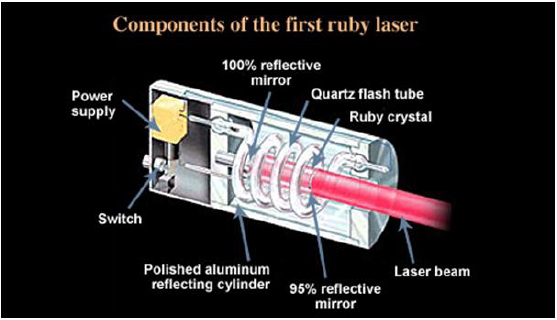

Solid-state lasers in bulk format are based on active ions doped in crystals, glasses, or ceramics. The material can be fabricated in a variety of shapes and must allow optical pump power to be transmitted into the material, as well as allow the generated laser power to spatially overlap with the region of material that is pumped. Figure 4-8 shows a schematic of the first ruby laser, in which the ruby crystal was fabricated in the form of a rod, 1 cm in diameter by 1.5 cm long, and the laser cavity employed mirrors deposited directly on the polished ends of the rod. A helical xenon-gas-discharge, quartz-envelope lamp, originally developed for flash photography, surrounded the rod and was in turn surrounded by a reflecting cylinder ("pump cavity") to help concentrate the light from the lamp onto the barrel surface of the rod. Ruby has strong absorption bands in the visible and UV regions, which overlap well with the emission spectrum of the lamp.

The basic arrangement in Figure 4-8 is still employed today for many lamp-pumped solid-state laser systems. The helical lamp is now usually replaced with a linear-discharge lamp, and the pump cavity may be formed as an elliptical cylinder whose inside is coated with a highly reflecting metal such as silver, with the rod and lamp each placed along one of the focal lines. Alternative cavities may employ a diffuse reflector to help even out the distribution of pump power in the rod. Typical rod diameters are 3-12 mm and lengths are 50-150 mm, though high-energy systems may use much larger sizes.

FIGURE 4-8 Schematic diagram of first ruby laser. SOURCE: Lawrence Livermore National Security, LLC and the Department of Energy.

The simple example of the lamp-pumped laser of Figure 4-8 is used to make three key points about bulk-format solid-state lasers:

• Compared to semiconductor lasers, the spatial region over which laser action occurs is generally many orders of magnitude larger, in both the laser beam area and the volume involved in lasing. Because of these larger areas and volumes, a single bulk solid-state laser can generate much higher CW power than a semiconductor laser, and even more peak power. For efficient laser operation, it is important that (1) a large fraction of the incident pump light is absorbed in the material and (2) the spatial overlap is high between the large pumped volume and the laser beam either generated or amplified in the material. For the simple design in Figure 4-8, the latter is accomplished by making an optical cavity out of the rod itself.

• For optical pumping, at least some fraction of the absorbed pump power must be at a shorter wavelength than that of the laser, in order to create the inverted energy-level population in the material. Generally, all of the energy difference between pump and laser photons is taken up via nonradiative processes that generate phonons, and the measurable effect is a rise in the temperature of the laser material. Unless the heat is removed, laser action will eventually stop because of a variety of high-temperature effects, the most extreme being melting of the material. For the laser in Figure 4-8, heat could be removed from the rod by flowing air around it or, more commonly, by placing the rod inside a transparent cylinder and flowing a transparent liquid, usually water, over the outer rod surface.

• Once the laser material is actively cooled, its finite thermal conductivity means that the flow of heat produces a variation in temperature within the material. For the rod in Figure 4-8, the center of the rod will be hotter than the cooled outside surface. All materials expand (or in a few cases contract) with increased temperature, and the temperature difference inside the rod leads to different levels of expansion, which in turn create stress in the material. Ultimately, at sufficiently high power levels the material may fracture from this stress. Below the fracture limit, the optical properties of the material are changed. The stress changes the refractive index and polarization properties of the material through the stress-optic effect. More important, since the refractive index of materials changes with temperature, the temperature gradient leads to a nonuniform refractive index. The net effect of these changes is to alter the properties of the laser beam passing through the material, and the changes depend on pump power.
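The heat load described in the second point has a simple lower bound, the quantum defect: even if every absorbed pump photon produces one laser photon, the fraction 1 - λ_pump/λ_laser of the absorbed energy still ends up as heat. The sketch below evaluates it for the ruby and Nd:YAG wavelengths quoted in this chapter; the 885-nm Nd:YAG pump wavelength, which excites the upper laser level directly, is an added assumption not given in the text. Real heat loads are larger because of other nonradiative losses.

```python
def quantum_defect(pump_nm: float, laser_nm: float) -> float:
    """Minimum fraction of absorbed pump energy converted to heat, assuming each
    absorbed pump photon yields exactly one laser photon."""
    if pump_nm >= laser_nm:
        raise ValueError("optical pumping requires a pump shorter than the laser wavelength")
    return 1.0 - pump_nm / laser_nm


cases = {
    "ruby, 550-nm band -> 694.3 nm": (550.0, 694.3),
    "Nd:YAG, 808-nm pump -> 1,064 nm": (808.0, 1064.0),
    "Nd:YAG, 885-nm pump (assumed) -> 1,064 nm": (885.0, 1064.0),
}
for name, (pump, laser) in cases.items():
    print(f"{name:42s} heat fraction >= {quantum_defect(pump, laser):.3f}")
```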

Many of the engineering challenges and performance limitations of bulk solid-state lasers center on the issues described above.

Host Materials

The effect that the choice of host material has on these issues is considered first. The desirable host material properties are these:

1. Availability in a size suited to the pumping and lasing configuration; low optical loss at the pump and laser wavelengths; and the ability to support active-ion doping levels high enough to absorb the pump light in the desired volume.

2. High mechanical strength to avoid fracture at the desired power level, high thermal conductivity, and low thermal expansion coefficient.

3. High thermal conductivity, small change in refractive index with temperature, and small change in refractive index and polarization properties with stress.
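The mechanical and thermal properties in item 2 are often folded into a single thermal-shock figure of merit, R_T = σ_f K (1 - ν)/(α E), where σ_f is the fracture strength, K the thermal conductivity, ν Poisson's ratio, α the thermal expansion coefficient, and E Young's modulus. This is a standard engineering metric added here, not one used in the text. For a uniformly heated rod cooled at its surface, the maximum surface stress is α E Q r0²/[8 K (1 - ν)] for heat density Q and rod radius r0, so the heat load per unit length at fracture is 8π R_T, independent of rod radius. The property values below are made up, roughly oxide-like, and serve only to show the arithmetic.

```python
import math


def thermal_shock_parameter(fracture_strength_pa: float, conductivity_w_per_m_k: float,
                            poisson_ratio: float, expansion_per_k: float,
                            youngs_modulus_pa: float) -> float:
    """R_T = sigma_f * K * (1 - nu) / (alpha * E), in W/m."""
    return (fracture_strength_pa * conductivity_w_per_m_k * (1.0 - poisson_ratio)
            / (expansion_per_k * youngs_modulus_pa))


def max_heat_per_length_w_per_m(r_t: float) -> float:
    """Heat load per unit rod length at which the surface stress reaches the
    fracture strength, for uniform heating and surface cooling: 8 * pi * R_T."""
    return 8.0 * math.pi * r_t


# Hypothetical property values, chosen only to show the arithmetic.
r_t = thermal_shock_parameter(fracture_strength_pa=200e6, conductivity_w_per_m_k=10.0,
                              poisson_ratio=0.25, expansion_per_k=7e-6,
                              youngs_modulus_pa=300e9)
print(f"R_T = {r_t:.0f} W/m, fracture limit ~ {max_heat_per_length_w_per_m(r_t) / 100:.0f} W/cm")
```

Because K appears in the numerator and α E in the denominator, the parameter captures why item 2 asks for high conductivity together with low expansion.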

Particularly for the rare earth dopants, several hundred different host crystals have demonstrated laser operation, but only a handful have found widespread use because they best satisfy all of the requirements listed above:

• Y3Al5O12 (YAG). A member of the very large family of garnet-structured crystals, YAG emerged from research in the 1960s as having the best combination of properties for hosting rare earth ions, including high thermal conductivity and good mechanical strength. It remains in wide use at present for a variety of industrial and medical applications and is also the basis for all laser-designator systems, as its primary operational wavelength when doped with Nd3+ ions, 1,064 nm, matches the current inventory of laser-guided munitions. A serious drawback is the difficulty of growing single crystals, which requires furnaces operating above 1,950°C to produce the melt from which crystals are pulled. (Reportedly, at one point the production of YAG in New Jersey represented the largest single use of electrical power in that state.) Recent advances in ceramic YAG, which is made up of randomly oriented, micrometer-sized single crystals, have allowed fabrication of much larger pieces of material. The successful use of ceramic materials for lasers is a recent development that required significant advances in technology to essentially eliminate the scattering of light associated with the boundaries between the microcrystals making up the ceramic. The most powerful CW solid-state laser demonstrated to date, a 100-kW Nd:YAG system developed under the Joint High Power Solid State Laser (JHPSSL) program of the U.S. High Energy Laser Joint Technology Office, used ceramic laser material.5

• YVO4 (vanadate). One important parameter of the electronic transitions involved in pumping and laser action is the "cross section," given in units of area. Multiplied by the volume concentration of laser ions, it gives the pump absorption coefficient or laser gain coefficient per unit length. Electronic transitions in solid-state laser ions generally take place within a single electronic shell (3d or 4f) and are thus "parity forbidden," which in practical terms means they would have very small, or in some cases zero, cross sections if the ions were isolated in free space. Once the ion is placed in a crystal, the surrounding ions create a lower symmetry than free space, mix other electronic states into the ion's electronic wave functions, and greatly enhance the cross section. This process is sometimes called activation of the transitions. For most of the host crystals for rare earths, the laser cross sections fall in a fairly limited range of values. The crystal YVO4 and some related compounds provide unusually low-symmetry surroundings for rare earths and induce cross sections about an order of magnitude higher than other hosts. This leads to efficient absorption of pump light in short lengths of material and very high optical gains, allowing construction of diode-laser-pumped miniaturized devices, such as the common "green" laser pointer, as well as moderate-power, efficient, high-pulse-rate lasers for laser materials processing and high-pulse-rate laser imaging systems. By a fortunate coincidence, the prime Nd:YVO4 wavelength of 1,064 nm overlaps that of Nd:YAG, making it possible to build specialized hybrid lasers for some applications.

• LiYF4 (YLF). The fluoride-based material YLF has several unique properties, including much weaker thermal distortion effects than oxide materials and natural birefringence (see below), along with favorable laser properties for some rare earth dopants. The main drawback is a reduced mechanical strength compared to most oxides.


• Al2O3 (sapphire). Sapphire has thermal and mechanical properties superior to those of all other host crystals, and when undoped it finds applications ranging from high-end watch crystals to tank windows. These properties were key to operation of the ruby laser, as many other host crystals would have fractured when pumped to the levels (several hundred joules into the flashlamp) required by the 3-level Cr3+ transition. Unfortunately, sapphire does not accommodate rare earths at any reasonable doping level, and so after ruby it found little use in solid-state lasers until the demonstration of the broadly tunable Ti:sapphire laser. Besides having excellent thermomechanical properties, the sapphire host also places the Ti3+ ion in a low-symmetry crystal environment, thereby inducing large absorption and emission cross sections. Because of this unique combination of properties, sapphire remains the only effective host for Ti3+-based, broadly tunable solid-state lasers.

• ZnSe. The material ZnSe and similar II-VI compounds have in the past found widespread use for IR-transmitting windows, with acceptable levels of mechanical strength and hardness. Chemical vapor deposition (CVD) techniques have been developed to grow large slabs of polycrystalline (i.e., ceramic) material with very low optical losses. As a host material for Cr2+ and other divalent transition metals, ZnSe provides a low-symmetry (tetrahedral) environment, which leads to lower-energy transitions with large cross sections. The low phonon energy of the material minimizes nonradiative decay of the longer-wavelength transitions characteristic of divalent ions in the tetrahedral environment.

• Glasses. Glass materials represent a disordered, or amorphous, arrangement of atoms. For lasers, glasses are generally based on silicate or phosphate structures, or "formers." On a microscopic scale, each active ion in a glass has slightly different surroundings. The fine structure in the manifold levels of rare earth ions in glasses is thus smeared out (inhomogeneously broadened) when one measures their absorption and emission properties. This gives glass-based materials much broader absorption bands for pump light and broader continuous tuning ranges than crystal and ceramic hosts. On the other hand, the effective cross sections are lowered as well, reducing the gain per unit length for laser action. A big advantage of glass hosts is that they can be cast from liquid form into arbitrary and very large shapes, allowing generation of very high energy pulses. The world's most energetic laser, the Nd:glass-based, 4-megajoule-level National Ignition Facility (NIF) in Livermore, California, occupies a space equivalent to three football fields and uses about 3,000 large plates of laser glass, each 0.46 × 0.81 m in area.6 The significant disadvantage of glasses for bulk lasers is their poor thermal conductivity, a direct result of their amorphous nature. They are not capable of high-average-power operation and find use primarily in single-shot or low-pulse-rate applications ranging from handheld rangefinders (1 pulse/s) to the NIF (1 pulse/day). (A 4-MJ pulse every 24 hours corresponds to an average power of around 50 W, less than that of a 1-cm-long diode laser.)
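Two quantitative statements in this list can be checked with short arithmetic: the absorption coefficient as the product of cross section and ion concentration (vanadate), and the average power of a low-repetition-rate glass laser. The sketch below assumes simple, unsaturated Beer-Lambert absorption; the cross sections and ion density are hypothetical round numbers chosen only to show an order-of-magnitude difference, not measured values for any crystal.

```python
import math

SECONDS_PER_DAY = 24 * 3600


def absorption_coefficient_per_cm(cross_section_cm2: float, ions_per_cm3: float) -> float:
    """Absorption (or small-signal gain) coefficient: cross section x ion density."""
    return cross_section_cm2 * ions_per_cm3


def length_for_absorption_cm(cross_section_cm2: float, ions_per_cm3: float,
                             fraction: float) -> float:
    """Single-pass length that absorbs the given fraction of the pump,
    from 1 - exp(-alpha * L) = fraction (Beer-Lambert, unsaturated)."""
    alpha = absorption_coefficient_per_cm(cross_section_cm2, ions_per_cm3)
    return -math.log(1.0 - fraction) / alpha


def average_power_w(pulse_energy_j: float, pulses_per_second: float) -> float:
    return pulse_energy_j * pulses_per_second


ions = 1e20  # hypothetical dopant density, ions/cm^3
for label, sigma in [("typical host (assumed)", 5e-20), ("10x cross section", 5e-19)]:
    print(f"{label:24s} 90% absorbed in {length_for_absorption_cm(sigma, ions, 0.9):.3f} cm")

print(f"4 MJ once per day -> {average_power_w(4e6, 1 / SECONDS_PER_DAY):.1f} W average")
```

With these assumptions, a tenfold larger cross section shortens the length needed for 90 percent pump absorption from about 4.6 mm to about 0.46 mm, and the once-per-day 4-MJ pulse averages to about 46 W, consistent with the estimate of around 50 W above.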

Beam Quality and Thermal Effects

One of the significant challenges in bulk solid-state laser design and engineering centers on the effects of thermal distortion of the laser material’s optical properties (“thermo-optic distortion”) on the beam quality of the laser output generated by the material. The choice of pumping devices (lamps or diode lasers) plays an important role and is discussed in detail in the next section.

For bulk solid-state lasers, modern system designs for oscillators almost always employ “external” resonators formed by dielectric-coated mirrors, sometimes including internal lenses and sometimes using a coated end of the laser material as one of the resonator mirrors. The properties of the laser output beam are set by the resonator configuration, as modified by any thermo-optic effects in the laser material.

____________________

6 See https://lasers.llnl.gov/about/nif/how_nif_works/amplifiers.php.

For materials shaped like simple cylindrical rods and cooled through the cylindrical surface, the temperature distribution transverse to the beam can be approximated by a parabolic profile peaking at the center of the rod. The corresponding optical effect is to make a rod behave, to a first approximation, as a lens. The lens is positive for the majority of materials, which exhibit a positive change in refractive index with temperature. Additional optical distortions come about from the stress-optic effect, which converts the stress in the material from the thermal gradient into a change in refractive index. In rod configurations the stress-optic effect can also be approximated as creating a simple lens. For many materials the two effects are additive, but in a few, particularly fluoride-based materials, the change of refractive index with temperature is negative while the stress term is positive, leading to a reduced level of "lensing." Smaller optical distortions result from changes in the shape of the laser material caused by nonuniform thermal expansion in the temperature gradient.
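The rod-lens picture can be quantified with the standard result for a uniformly heated rod cooled at its surface: with heat P_h deposited over length L, the axis runs hotter than the surface by P_h/(4πKL), and the dn/dT term alone produces a lens of focal length f = 2KA/(P_h dn/dT), where K is the thermal conductivity and A the rod cross-sectional area. The sketch below ignores the stress-optic and shape-change contributions described above, and the material values (K ≈ 13 W/(m·K), dn/dT ≈ 7.3 × 10⁻⁶ K⁻¹, roughly YAG-like) and rod size are assumptions for illustration.

```python
import math


def axis_to_surface_delta_t_k(heat_w: float, conductivity_w_per_m_k: float,
                              length_m: float) -> float:
    """Center-to-surface temperature rise for uniform heating: P_h / (4 pi K L).
    The rod radius cancels out."""
    return heat_w / (4.0 * math.pi * conductivity_w_per_m_k * length_m)


def thermal_lens_focal_length_m(heat_w: float, conductivity_w_per_m_k: float,
                                radius_m: float, dn_dt_per_k: float) -> float:
    """Thermal-lens focal length from the dn/dT term only: f = 2 K A / (P_h dn/dT).

    Derivation sketch: the parabolic profile T(0) - T(r) = Q r^2 / (4K), with
    Q = P_h / (A L), gives an axis-to-radius optical path difference of
    L * dn/dT * Q r^2 / (4K); matching it to a thin lens's r^2 / (2f) gives f.
    """
    area_m2 = math.pi * radius_m ** 2
    return 2.0 * conductivity_w_per_m_k * area_m2 / (heat_w * dn_dt_per_k)


K, DN_DT = 13.0, 7.3e-6          # assumed, roughly YAG-like values
RADIUS, LENGTH = 3e-3, 0.1       # hypothetical 6-mm-diameter, 10-cm-long rod
for heat in (25.0, 50.0, 100.0):
    f = thermal_lens_focal_length_m(heat, K, RADIUS, DN_DT)
    dt = axis_to_surface_delta_t_k(heat, K, LENGTH)
    print(f"P_h = {heat:5.1f} W: dT = {dt:4.1f} K, f = {f:5.2f} m")
```

The focal length scales as 1/P_h, which is why the beam properties of a rod laser depend on the pump power.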

Another stress-optic effect is a distortion of the polarization of light passing through the material due to "stress-induced birefringence," which in rods leads to a polarization state that varies over the cross section of the beam. For crystals that are naturally birefringent, such as vanadate and sapphire, the natural birefringence typically dominates the stress birefringence, and one can avoid any change in, say, a linearly polarized beam by aligning the polarization with one of the crystal axes. Isotropic materials such as YAG and all glasses are, however, susceptible to stress-induced birefringence, and this is an issue because linearly polarized light is required to drive many of the nonlinear processes discussed below.
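Why aligning the polarization with a crystal axis works follows from Jones calculus: a linearly polarized beam passing through a birefringent element with retardance δ, whose principal axes are rotated by θ from the polarization, leaves a fraction sin²(2θ) sin²(δ/2) of its power in the orthogonal polarization. The sketch below computes this; the rod example assumes, as a simplification, a single retardance with axes oriented radially and tangentially, so that θ equals the azimuthal angle around the rod.

```python
import cmath
import math


def depolarized_fraction(axis_angle_rad: float, retardance_rad: float) -> float:
    """Power fraction of an x-polarized input that exits y-polarized after a linear
    retarder whose principal axes are at axis_angle_rad from x (Jones calculus)."""
    c, s = math.cos(axis_angle_rad), math.sin(axis_angle_rad)
    # y-component of R(-theta) @ diag(1, exp(i delta)) @ R(theta) @ [1, 0]
    e_y = s * c * (1.0 - cmath.exp(1j * retardance_rad))
    return abs(e_y) ** 2


def ring_average_depolarization(retardance_rad: float, samples: int = 360) -> float:
    """Average depolarization around a ring of a rod with radial/tangential stress
    axes, where the local axis angle equals the azimuth (simplified model)."""
    angles = (2.0 * math.pi * k / samples for k in range(samples))
    return sum(depolarized_fraction(a, retardance_rad) for a in angles) / samples


print(depolarized_fraction(0.0, 1.0))              # polarization aligned with an axis
print(depolarized_fraction(math.pi / 4, math.pi))  # half-wave retarder at 45 degrees
print(ring_average_depolarization(math.pi / 2))
```

Aligned polarization (θ = 0) is unaffected for any retardance, which is the natural-birefringence case; in an isotropic rod the axes rotate with azimuth, so some part of the beam is always depolarized.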

In theory, it should be possible with any bulk solid-state laser to generate a large fraction of the power in a single, diffraction-limited transverse mode of the resonator, since the diameter of the single mode can be made arbitrarily large by the choice of cavity design. In practice, for the laser wavelengths and resonator lengths used for bulk solid-state lasers, operating "stable" cavities with beam diameters of more than several millimeters is impractical, since either the required angular alignment of the mirrors becomes unreasonably exacting or the thermo-optic effects in the laser material make the beam properties highly dependent on the exact pump power of the system. For high-gain lasers, such as Nd:YAG-based devices running in a pulse-pumped mode, it is possible to employ so-called "unstable" resonators that can provide single-mode beams 5-10 mm in diameter, but these have to date been employed only in laboratory-based lasers.
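The difficulty with large modes in stable cavities can be illustrated with the simplest stable design, a plano-concave (half-symmetric) resonator of length L and mirror radius R, whose Gaussian-mode waist at the flat mirror satisfies w₀² = (λ/π)√(L(R - L)). The 0.5-m cavity length and 1,064-nm wavelength below are illustrative assumptions; the point is how nearly flat the curved mirror must become to reach a millimeter-scale waist.

```python
import math


def half_symmetric_waist_m(wavelength_m: float, length_m: float, radius_m: float) -> float:
    """Waist radius at the flat mirror of a plano-concave resonator.

    The Rayleigh range is z_R = sqrt(L (R - L)) and w0 = sqrt(lambda z_R / pi);
    the cavity is stable only for 0 < L < R.
    """
    if not 0.0 < length_m < radius_m:
        raise ValueError("plano-concave cavity requires 0 < L < R for stability")
    rayleigh_m = math.sqrt(length_m * (radius_m - length_m))
    return math.sqrt(wavelength_m * rayleigh_m / math.pi)


def radius_for_waist_m(wavelength_m: float, length_m: float, waist_m: float) -> float:
    """Mirror radius giving a target waist: invert z_R^2 = L (R - L)."""
    rayleigh_m = math.pi * waist_m ** 2 / wavelength_m
    return length_m + rayleigh_m ** 2 / length_m


WAVELENGTH, LENGTH = 1.064e-6, 0.5  # assumed 1,064-nm laser in a 0.5-m cavity
print(f"R = 1 m gives w0 = {1e3 * half_symmetric_waist_m(WAVELENGTH, LENGTH, 1.0):.2f} mm")
print(f"w0 = 3 mm needs R = {radius_for_waist_m(WAVELENGTH, LENGTH, 3e-3):.0f} m")
```

Under these assumptions, a 1-m mirror gives a waist of about 0.4 mm, while a 3-mm waist requires a mirror radius of more than a kilometer; the cavity then sits near the flat-flat stability edge and is correspondingly sensitive to mirror tilt and to any thermal lens in the rod.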

To get around the limits of cavities, one can design a relatively low-power laser oscillator optimized for single-transverse-mode operation and then amplify its output in one or several amplifier stages, where the laser beam is expanded by telescopes to fill the pumped region of each amplifier. This is the standard approach for most high-power systems and is termed a master oscillator power amplifier (MOPA) system.
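For pulsed MOPA chains, a standard way to estimate the energy gain of each stage is the Frantz-Nodvik relation, J_out = J_sat ln[1 + G₀(exp(J_in/J_sat) - 1)], where J is fluence, J_sat the saturation fluence, and G₀ the small-signal gain. This model is brought in here as an illustration and is not described in the text; the sketch ignores losses, and the stage areas, saturation fluence, and gains are hypothetical. The telescope between stages enters only through the beam area: expanding the beam lowers the input fluence while conserving the pulse energy.

```python
import math


def frantz_nodvik_fluence(fluence_in: float, sat_fluence: float, small_signal_gain: float) -> float:
    """Output fluence of a lossless saturable amplifier (Frantz-Nodvik)."""
    return sat_fluence * math.log1p(small_signal_gain * math.expm1(fluence_in / sat_fluence))


def mopa_chain_energy_j(oscillator_energy_j: float,
                        stages: list[tuple[float, float, float]]) -> float:
    """Propagate a pulse through amplifier stages given as
    (beam area in cm^2, saturation fluence in J/cm^2, small-signal gain)."""
    energy = oscillator_energy_j
    for area_cm2, sat_fluence, gain in stages:
        fluence_in = energy / area_cm2  # beam expanded to fill this stage's aperture
        energy = area_cm2 * frantz_nodvik_fluence(fluence_in, sat_fluence, gain)
    return energy


# Hypothetical chain: 10-mJ oscillator, two stages with growing apertures.
stages = [(0.5, 0.7, 10.0), (2.0, 0.7, 5.0)]
print(f"output energy ~ {mopa_chain_energy_j(0.01, stages):.3f} J")
```

At low input fluence each stage multiplies the fluence by G₀; deep in saturation it adds roughly J_sat ln G₀, which is why the beam is expanded to keep each stage operating efficiently without exceeding its damage limits.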

Ultimately, thermo-optic effects in the laser material will limit the beam quality of the system by adding phase distortions across the area of the laser beam. While simple external lenses can correct the first-order effects in rod-geometry designs, higher-order distortions become significant as the thermal gradients increase with pump power. In recent high-power laser system designs, so-called adaptive optics, first developed to correct for the linear phase aberrations that atmospheric turbulence imposes on laser beams, can be applied to correct the more complex distortions in materials running at high powers, typically through the use of feedback loops that seek to maximize beam quality.
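One widely used family of such feedback loops is model-free metric optimization, for example stochastic parallel gradient descent (SPGD): all actuators are dithered by small random amounts of ±δ, the beam-quality metric is measured for both signs, and each actuator moves in proportion to the metric difference times its own dither. The text does not specify an algorithm, so SPGD is a swapped-in example; the sketch below uses a hypothetical four-actuator aberration and a toy Strehl-like metric, exp(-Σ residual²), in place of a real beam measurement.

```python
import math
import random


def spgd(metric, n_actuators: int, iterations: int = 2000, dither: float = 0.05,
         gain: float = 2.0, seed: int = 0) -> list[float]:
    """Stochastic parallel gradient descent that maximizes metric(commands)."""
    rng = random.Random(seed)
    commands = [0.0] * n_actuators
    for _ in range(iterations):
        # Random +/- dither applied to every actuator simultaneously.
        delta = [dither if rng.random() < 0.5 else -dither for _ in range(n_actuators)]
        j_plus = metric([c + d for c, d in zip(commands, delta)])
        j_minus = metric([c - d for c, d in zip(commands, delta)])
        # The two-sided metric difference estimates the gradient projected on delta.
        commands = [c + gain * (j_plus - j_minus) * d for c, d in zip(commands, delta)]
    return commands


ABERRATION = [0.3, -0.2, 0.5, -0.4]  # hypothetical phase errors, in radians


def toy_beam_quality(commands: list[float]) -> float:
    """Strehl-like proxy: 1.0 when the commands cancel the aberration exactly."""
    return math.exp(-sum((c + a) ** 2 for c, a in zip(commands, ABERRATION)))


final = spgd(toy_beam_quality, len(ABERRATION))
print(f"metric before {toy_beam_quality([0.0] * 4):.3f}, after {toy_beam_quality(final):.4f}")
```

The loop never needs a model of the distortion, only repeated measurements of the metric, which is what makes this style of correction attractive for aberrations that change with pump power.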

There are several material geometries beyond simple rods that attempt to reduce thermo-optic effects. Notably, if the heat flow is along the direction of the optical beam, the thermal gradient is as well. When the material is uniformly pumped, all parts of the beam cross section then experience the same refractive-index change, and the beam phase front is undistorted. Typical designs employ thin plates, often in the shape of a disk, where the large surface can be cooled by high-velocity liquids or by contact with a transparent, high-thermal-conductivity crystal. Power is still limited by stress, as always, and stress is minimized by the use of thin crystals. However, if the crystal becomes too thin, it will not absorb a sufficient fraction of the incident pump power in a single pass of light through the material.

A recent design, the so-called thin-disk geometry, has been made possible through the development of diode pump lasers (discussed below). Here a thin disk of material (on the order of 0.1 mm thick) is cooled by contact of one of its large faces to a metal heat sink, with the contacted face carrying a dielectric coating designed to be highly reflective at both the pump and laser wavelengths. The design employs optics that make the pump light pass many times back and forth through the laser material, effectively increasing the optical path of the pump light and facilitating efficient absorption. (This arrangement would not be possible with lamp pumping.) Ultimate limits to beam quality are set by the inevitable deviation of heat flow from the desired direction along the thin axis of the material and by the resulting stresses. For thin-disk lasers, mechanical distortion of the material cannot be ignored as a thermo-optic effect, and there is a limit to how large an area of the crystal can be used while still maintaining high beam quality. One disadvantage of the thin-disk design is the relatively low gain of the system because of the short length of active material, and typical designs use oscillators only, with low-loss laser cavities.
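The benefit of multiple pump passes follows from Beer-Lambert absorption: after N traversals of a disk of thickness d with absorption coefficient α, the absorbed fraction is 1 - exp(-Nαd). The sketch below counts the traversals needed for a target absorption; the 10-cm⁻¹ absorption coefficient is a hypothetical value, the 0.1-mm thickness is taken from the text, and saturation and optical losses are ignored. Because the back face is reflective, each pass of the pump optics corresponds to two traversals here.

```python
import math


def absorbed_fraction(alpha_per_cm: float, thickness_cm: float, traversals: int) -> float:
    """Pump fraction absorbed after the given number of traversals (unsaturated)."""
    return 1.0 - math.exp(-traversals * alpha_per_cm * thickness_cm)


def traversals_needed(alpha_per_cm: float, thickness_cm: float, target: float) -> int:
    """Smallest number of traversals for which the absorbed fraction reaches target."""
    return math.ceil(math.log(1.0 / (1.0 - target)) / (alpha_per_cm * thickness_cm))


ALPHA, THICKNESS = 10.0, 0.01  # hypothetical 10 /cm in a 0.1-mm disk
print(f"single traversal absorbs {absorbed_fraction(ALPHA, THICKNESS, 1):.1%}")
n = traversals_needed(ALPHA, THICKNESS, 0.9)
print(f"{n} traversals absorb {absorbed_fraction(ALPHA, THICKNESS, n):.1%}")
```

With these assumed values a single traversal absorbs under 10 percent of the pump, and about two dozen traversals are needed to reach 90 percent.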

Another geometry used for high-power, high-beam quality systems is the zig-zag slab design. In this case the material is fabricated as a thin slab, but with a rectangular geometry. The laser beam is arranged to reflect back and forth in the thin direction (hence the descriptor zig-zag), off polished faces of the slab. This has the advantage that all parts of the optical beam experience the same thermo-optic distortion in the zig-zag direction. Distortion in the other direction is minimized by designing the pump beam to be as uniform as possible. Lamp pumping is typically done through both large faces of the slab, with cooling of the same faces by a flowing liquid. Diode pumping can be done through one face, with the other face cooled by contact with a metal heat sink. Another configuration, with the diode pump light directed along the long length of the slab, is discussed in the next section. This is the configuration used in the most powerful bulk solid-state laser (as of this writing).

Many common solid-state lasers, such as those used for materials processing, laser target designation, range-finding, and imaging at relatively short distances, do not require diffraction-limited output, and resonator designs for such systems emphasize some combination of efficiency and high stability against vibration or changes in system temperature.

The actual relation between power output and beam quality is set not only by the laser material and resonator design but also by the pumping source.

Pumping Systems

Incoherent, lamp-based pump sources were the standard for bulk solid-state lasers until the 1980s, when development of high-power diode lasers brought about a major revolution in high-performance systems.

Lamps are still widely used for systems where cost or simplicity is important. For lamps operating in the pulsed mode ("flashlamps"), the typical design uses xenon gas at approximately atmospheric pressure, contained in a fused silica tube with tungsten-based electrodes on both ends and driven by a high-current electrical discharge. Lamp diameters are from 3 to 19 mm, and lengths can range from 2 cm to 1 m, depending on the system and energy required. Well-designed xenon lamps are 50-60 percent efficient in converting electricity to light, which they produce in a spectrum that resembles a high-temperature (6,000-10,000 K) blackbody with discrete emission lines in the 820-1,000-nm region. As the lamp discharge current increases, the line spectrum becomes a smaller fraction of the total spectrum. The energy limit for a flashlamp is set by its "explosion energy," which is proportional to the inner area of the containing tube; if the lamps are run at less than 30 percent of their explosion energy, typical lifetimes before lamp failure are 10⁷ to 10⁸ shots, and NASA researchers have reported a 10⁹-shot lifetime in one system.7

For CW lasers, one employs arc lamps, which differ from flashlamps in some details of the electrode and tube designs, as well as in their use of a multi-atmosphere fill pressure. For the most widely used application, pumping Nd:YAG lasers, arc lamps employ krypton rather than xenon gas, since the narrow-line krypton spectrum is a better match to the absorption spectrum of that material. Arc lamp lifetimes range from 200 to 2,000 h, depending on the operating cycle of the laser, with the longest lifetimes seen for lamps running 24/7.

____________________

7 L.J. Richter, F. Schuda, and J.J. Degnan, 1990, “Billion-shot flash lamp for spaceborne lasers,” Proc. SPIE 1223: 142, Solid State Lasers, doi:10.1117/12.18406.

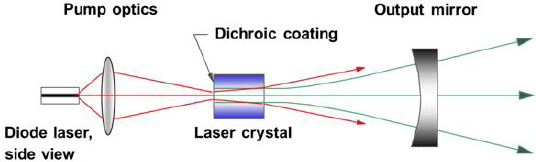

FIGURE 4-9 Schematic of diode end-pumped solid-state laser.

Lamp-pumped Nd:YAG lasers convert 2-3 percent of the lamp energy into laser output, with much of the lamp output not absorbed by the laser material. For flashlamps, as the pulse energy increases, more of the pump power is absorbed into higher-lying levels of the laser ion, increasing the heat dissipated in the crystal. Despite these difficulties, lamps remain in widespread use, especially in pulsed lasers, for reasons discussed below.

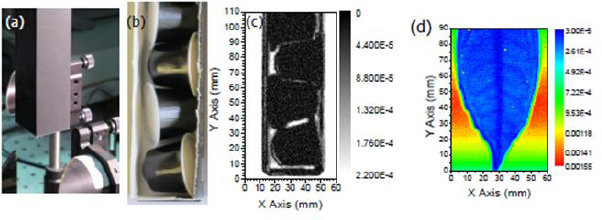

The use of diode lasers as optical pumps for solid-state lasers was demonstrated in the 1960s, but the limited power and performance of early diode lasers kept them from widespread adoption. With improvements in semiconductor materials and processing technology in the 1980s, commercial high-power single-emitter diodes with outputs of 0.2 W became available, with operating wavelengths around 808 nm, suited for pumping Nd:YAG lasers. The first important design scheme for diodes was the so-called longitudinal (or end-) pumping configuration, shown schematically in Figure 4-9. The single-emitter diode laser output is collected and, through appropriate optical design, focused into an approximately round beam at the surface of the laser crystal. The pump beam diameter and the diameter of the fundamental laser-cavity mode, formed by one coated mirror on the crystal and one curved external mirror, are designed to be about the same, which results in single-transverse-mode output from the solid-state laser. Typical laser crystal lengths are from 1 to 10 mm.

Several major advantages of diode over lamp pumping are evident with the end-pumping design of Figure 4-9:

• Essentially all of the diode output, rather than the small fraction typical with lamps, can be absorbed by the laser material. Thus, even though lamps and diode lasers are roughly comparable in overall conversion of electricity to light, the optical-to-optical efficiency (pump light to laser output) is more than ten times greater with diode pumping than with lamps.

• With the proper choice of wavelength, the amount of heating in the material for a given laser output power is smaller with diode pumping, and it reaches a minimum when the pump wavelength directly excites the upper laser level in a normally 4-level laser. This leads, in turn, to less thermo-optic distortion of the laser beam by the laser material.

• As noted above, the correct choice of pump-beam and laser-cavity beam areas produces a diffraction-limited output, an important characteristic. Diode lasers are limited in their ability to produce high diffraction-limited powers, but they can produce high multimode powers. The diode-pumped solid-state laser thus acts as a "brightness converter" for high-power diode lasers: even with the inevitable loss in absolute power relative to the diode pumps, the solid-state laser output is one to two orders of magnitude brighter.

• Heat generated in the laser material can be extracted by bringing the outer surface of the laser material into contact with a high-thermal conductivity material, eliminating the need for flowing a coolant over the material. Combined with the generally more stable pump power from a diode laser, this can lead to very stable operation of the solid-state laser, important for, say, generation of a stable laser wavelength/frequency.

• Diodes can be powered by low-voltage power supplies or batteries, in contrast to the high voltages needed to drive lamps.

• With improvements in diode fabrication, laser operating times before pump replacement are typically three orders of magnitude longer than with lamps.

Disadvantages of the diode pumps compared to lamps include the following:

• The much higher cost of diodes.

• The need to maintain the diode operating temperature within a fairly narrow range, since the diode wavelength shifts with temperature. For typical diode lasers in the 800-1,000-nm range, the tuning rate is about 0.3 nm/°C. For Nd:YAG pumping at 808 nm, where the absorption linewidth is about 2 nm, one must hold the diode temperature well within a 10°C range for a simple end-pumped design. More complex designs can work over much wider ranges by using several diodes, each of which works for a certain temperature range, optical designs that provide multiple passes of the pump light, and/or diodes with built-in wavelength-stabilization optics.
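The temperature tolerance in the last point is simple arithmetic: the allowed temperature excursion is roughly the absorption linewidth divided by the diode tuning rate. The sketch below uses the 2-nm and 0.3-nm/°C figures from the text and treats the full linewidth as the usable window, which is a simplifying assumption, since absorption falls off well before the edges of the line.

```python
def temperature_window_c(absorption_linewidth_nm: float, tuning_rate_nm_per_c: float) -> float:
    """Temperature range over which the diode wavelength stays within the line."""
    return absorption_linewidth_nm / tuning_rate_nm_per_c


def wavelength_shift_nm(delta_t_c: float, tuning_rate_nm_per_c: float = 0.3) -> float:
    """Diode wavelength drift for a temperature change (linear tuning assumed)."""
    return delta_t_c * tuning_rate_nm_per_c


print(f"window ~ {temperature_window_c(2.0, 0.3):.1f} degC")
print(f"a 10 degC excursion shifts the diode by {wavelength_shift_nm(10.0):.1f} nm")
```

The window of about 6.7°C is why the temperature must be held well within a 10°C range: a 10°C excursion moves the diode about 3 nm, more than the 2-nm absorption linewidth.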

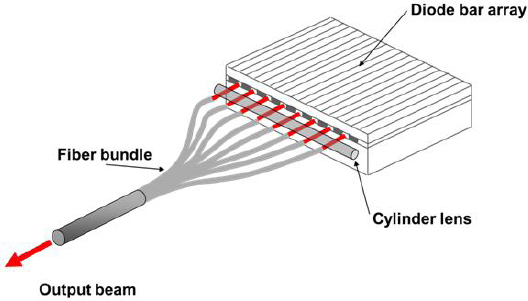

Initially, with the first single-emitter diodes, the power available for pumping was well below 1 W. Today, the available power from a single device with a 100-µm-wide stripe is on the order of 10 W. For higher powers, a number of techniques make it possible to combine the outputs of multiple single emitters into one pump beam. Figure 4-10 shows a simple approach that couples the multiple outputs of a diode laser bar into a single fiber, whose output can then be used for end-pumping. Because this simple design does not take advantage of the fact that diode outputs are single-mode in one transverse direction, it produces a low-brightness pump beam. More sophisticated bar-based pump sources use complex imaging systems to “stack” the diffraction-limited beam components of multiple emitter outputs in a way that greatly improves brightness, leading to fiber-coupled devices that can provide CW power levels of 200-300 W from a fiber with a 200-µm diameter and a numerical aperture of 0.22.
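The text does not define brightness quantitatively. A common choice is radiance, power divided by étendue, and for a fiber source a related figure of merit is the beam parameter product (BPP, core radius times far-field half-angle). The sketch below evaluates both for the 200-µm, 0.22-NA fiber, assuming the 200 µm refers to the core diameter and using the small-angle approximation (half-angle ≈ NA, solid angle ≈ πNA²); the function names are illustrative.

```python
import math

def fiber_bpp_mm_mrad(core_diameter_um: float, na: float) -> float:
    """Beam parameter product = core radius x far-field half-angle.
    Small-angle approximation: half-angle (rad) ~ NA."""
    radius_mm = core_diameter_um / 2.0 / 1000.0
    return radius_mm * na * 1000.0  # mm x rad -> mm·mrad

def fiber_radiance_w_cm2_sr(power_w: float, core_diameter_um: float,
                            na: float) -> float:
    """Power divided by etendue (core area x emission solid angle ~ pi*NA^2)."""
    radius_cm = core_diameter_um / 2.0 * 1e-4
    area_cm2 = math.pi * radius_cm ** 2
    solid_angle_sr = math.pi * na ** 2
    return power_w / (area_cm2 * solid_angle_sr)

print(f"BPP: {fiber_bpp_mm_mrad(200, 0.22):.0f} mm·mrad")
for p_w in (200, 300):
    print(f"{p_w} W -> {fiber_radiance_w_cm2_sr(p_w, 200, 0.22):.2e} W/(cm²·sr)")
```

Because étendue is (ideally) conserved by passive optics, stacking beams to raise power without enlarging the fiber core or NA is what increases radiance; simply adding emitters into a larger fiber does not.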

At some power level, the simple end-pumped solid-state laser design of Figure 4-9 becomes impractical. Even with improvements in diode brightness, the pump beam in the crystal diverges rapidly enough that, for good overlap of pump and laser beams, the length over which pump power is absorbed must be kept to 1 cm or less. Power is then limited either by crystal fracture from the large thermal load in a small volume or by excessive thermal distortion.
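To see why the absorption length is capped near a centimeter, the sketch below estimates how far a focused pump beam stays close to its waist. It assumes the 200-µm, 0.22-NA fiber output described above, whose beam parameter product (core radius times NA) is about 22 mm·mrad, an approximate YAG refractive index of 1.82, and a few hypothetical pump-spot radii; the function names are illustrative. For spot radii of 0.15 to 0.25 mm, the region within √2 of the waist is only about 4 to 10 mm long inside the crystal.

```python
import math

def depth_of_focus_mm(waist_radius_mm: float, bpp_mm_mrad: float,
                      refractive_index: float = 1.0) -> float:
    """Rayleigh-range analog for a multimode beam: z_R = n * w0**2 / BPP.

    Inside a medium of index n the divergence half-angle shrinks by n while
    the waist is unchanged, so z_R grows by a factor of n.
    """
    bpp_mm_rad = bpp_mm_mrad / 1000.0
    return refractive_index * waist_radius_mm ** 2 / bpp_mm_rad

def beam_radius_mm(z_mm: float, waist_radius_mm: float, z_r_mm: float) -> float:
    """Beam radius a distance z from the waist (hyperbolic envelope)."""
    return waist_radius_mm * math.sqrt(1.0 + (z_mm / z_r_mm) ** 2)

BPP_MM_MRAD = 22.0  # 100-um core radius (200-um fiber) x 0.22 NA
N_YAG = 1.82        # assumed refractive index of YAG at the pump wavelength

for w0 in (0.15, 0.20, 0.25):  # hypothetical pump-spot radii in mm
    z_r = depth_of_focus_mm(w0, BPP_MM_MRAD, N_YAG)
    print(f"w0 = {w0:.2f} mm: z_R = {z_r:.1f} mm, "
          f"length within sqrt(2) of waist = {2 * z_r:.1f} mm")
```

Tighter focusing raises the pump intensity but shortens the well-overlapped length quadratically, which is the trade-off that limits simple end-pumped designs.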

FIGURE 4-10 Optical schematic showing coupling of bar output to a single fiber.

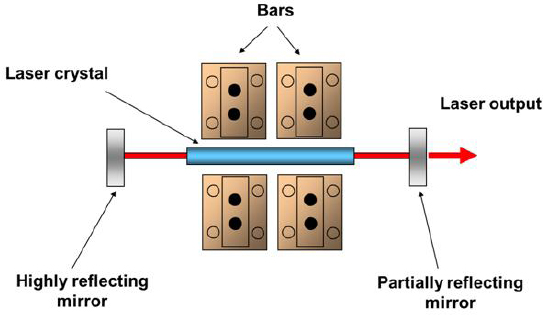

FIGURE 4-11 Schematic of diode side-pumped laser.

So-called side- or transverse-pumped designs with diodes can be employed to generate higher powers (and, in pulsed mode, higher energies; see below), as shown schematically in Figure 4-11. As in lamp-pumped lasers, the pump light travels roughly perpendicular to the laser beam, so the laser material must be shaped accordingly. Cylindrical rods, thin zig-zag slabs, and other geometries can be employed, with cooling either by flowing liquid or by bringing a portion of the material into contact with a metal heat sink. Obtaining good overlap with the lowest-order mode of the laser cavity is much more challenging than with end-pumped designs, and optical-to-optical efficiencies are generally lower. On the other hand, much higher pump powers can be coupled in, since many diode pumps can be used with one laser medium.

For side-pumping designs, diode bars transport light to the laser material more effectively when they are closely stacked, which makes heat extraction a thermal engineering challenge. For pulsed systems with low average powers but high peak powers, many more bars per unit area can be packed together than for CW systems. Designs exist both for low-average-power systems and for CW lasers, such as the water-cooled bars shown in Figure 4-12.

An example of the current state of the art of diode-pumped bulk lasers is the “gain module” design employed by Northrop Grumman in the 100-kW CW laser built for the Joint High Power Solid-State Laser (JHPSSL) program of the U.S. High Energy Laser Joint Technology Office. Northrop Grumman has commercialized the module design as part of its Vesta series of lasers.8

A Northrop Grumman pump array employs two 36-bar vertical stacks with a spacing of 1.8 mm between bars. Each bar has an attached cylinder lens to collimate its fast axis, followed by a narrow-band reflecting element (a volume Bragg grating) that forms an external resonator and controls the diode wavelength. For the device used in the JHPSSL, the operating wavelength is centered at 885 nm to pump the upper laser level of Nd:YAG efficiently, minimizing the heat generated in the solid-state laser material. The outputs of the two stacks are combined with an interleaving mirror to increase the intensity of the pump beam. Micro-channel water coolers, which provide high heat extraction per unit area, remove heat from the arrays and allow each 36-bar stack to generate a total CW power of 2.5 kW.9

____________________

8 See http://www.irconnect.com/noc/press/pages/news_releases.html?d=107455.

FIGURE 4-12 High-brightness, high-power stacked bars. SOURCE: Courtesy of DILAS.

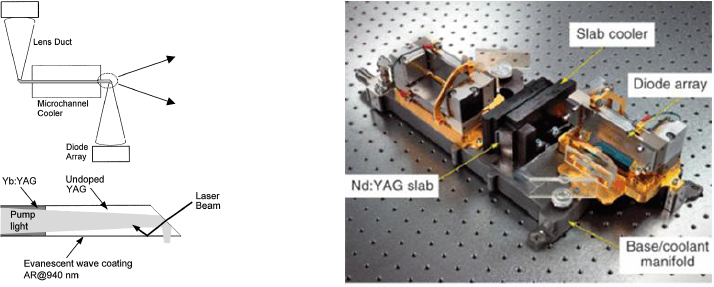

Figure 4-13 (left panel) shows a schematic of the pumping and laser-material configuration for an earlier version of the gain module, based on Yb:YAG.10 The solid-state laser material is configured as a thin zig-zag slab, with a pump source at each end of the slab and pump light entering through one of the slab faces, coated to act as a reflector for the pump power. As with longitudinal pumping, the pump and laser light propagate in the same general direction, both reflecting off the large slab faces. Most of the slab surface area is cooled by conduction to water-cooled metal heat sinks. The figure shows lens ducts used to collect the light from the pump arrays, whereas the newer, Nd:YAG-based JHPSSL uses simple lenses to format the pump light, as is evident in Figure 4-13 (right panel), a photograph of the Vesta gain module. Four gain modules form the basis for the lowest-power Vesta laser system and generate 15 kW of CW power in a near-diffraction-limited beam. The JHPSSL system coherently combined the outputs of seven such 15-kW units to generate the record 100 kW of power.

Alternative Rare Earths

Diode pumping has also brought back into common use several rare-earth dopants, all of which had been demonstrated in the early years of solid-state lasers but were subsequently abandoned in favor of Nd3+ for lamp-pumped lasers. These include:

____________________

9 See http://www.northropgrumman.com/BusinessVentures/CEO/Documents/TechNote17_wavelength_stabiliz.pdf.

10 G.D. Goodno, S. Palese, J. Harkenrider, and H. Injeyan, 2001, “Yb:YAG power oscillator with high brightness and linear polarization,” Opt. Lett. 26: 1672.

FIGURE 4-13 Left: Diagram of the end-pumped slab design used for the Vesta gain module. Right: Photograph of the gain module. SOURCE: G.D. Goodno, S. Palese, J. Harkenrider, and H. Injeyan, 2001, “Yb:YAG power oscillator with high brightness and linear polarization,” Opt. Lett. 26: 1672 (left) and Courtesy of Northrop Grumman Corporation (right).

• Yb3+. The Yb3+ ion lacks only a single electron from a filled 4f shell and as a result has a very simple 4f energy-level structure: a ground manifold and one excited-state manifold. The energy separation among the levels within the ground manifold is large enough to allow 3½-level laser operation. For lamp pumping, the absence of higher excited levels leads to low efficiency in absorbing broadband pump power, but with diodes tuned to the ground-state-to-excited-state absorption band, efficient laser operation is possible. In Yb:YAG, the typical laser wavelength is 1,030 nm, while a common pump band is centered at 941 nm. The difference between pump and laser wavelengths is much smaller than for the typical Nd:YAG pump/laser wavelengths of 808/1,064 nm, leading to a thermal load in Yb:YAG that is about 38 percent that of Nd:YAG pumped at 808 nm and about 53 percent that of Nd:YAG pumped at the less common 885 nm. Yb:YAG does have some drawbacks compared to Nd:YAG, including a much higher threshold for operation at room temperature, due to partial thermal occupation of the lower laser level, and a smaller gain cross section. If Yb:YAG crystals are cooled to cryogenic temperatures, laser operation becomes truly 4-level, the gain becomes comparable to that of Nd:YAG because the spectral linewidth decreases, and the thermal conductivity rises by an order of magnitude. Moreover, recent demonstrations of cryogenically cooled systems suggest they could find use as very high power, pulsed sources for a variety of applications. Other Yb host crystals exhibit large gain linewidths, which have been exploited to build diode-pumped, high-energy sources of 100-fs pulses. The Yb ion is very close in size to the Y ion in YAG and other Y-based materials, so Yb can be doped to levels as high as 100 percent replacement of the Y ions. This is used to advantage in Yb:YAG thin-disk lasers, where doping levels on the order of 10 percent are typical, to increase single-pass pump absorption.

• Er3+. The ground-to-first-excited-state transition of the Er3+ ion provides laser operation in the 1,530- to 1,650-nm wavelength region, desirable for active sensors because it falls in the eye-safe wavelength range. These laser transitions are 3½-level, so high upper-level populations are needed to reach threshold. In addition, the energy-level structure of the ion promotes upconversion, in which two nearby ions in the upper laser level interact: one ion drops to the ground state and the other moves to a higher energy state. This process competes with optical pumping and makes efficient operation difficult to obtain. The best way to minimize it is to reduce the Er doping level, but that also reduces the absorption strength and makes pumping less efficient. This problem is worsened by the relatively small Er cross sections for both absorption and emission. One solution is to add Yb3+ ions to the host material. Energy put into the Yb energy levels can transfer to Er ions, since the two rare earths have excited states that are coincident in energy, a process called sensitization. Materials can be doped with high levels of Yb to provide good pump absorption and with low-enough levels of Er to minimize upconversion. (For lamp pumping, Cr3+ ions can also be added, and they in turn transfer energy to Yb ions.) Unfortunately, to date, the only host materials in which sensitization is effective are phosphate-based glasses. With diode pumping, the average-power capabilities and efficiencies of glass-based Er lasers have increased but are still limited.11 Er,Yb-codoped glasses have found use in low-pulse-rate range finders, particularly handheld, short-range devices. In the fiber-laser section, hybrid systems that use fiber lasers to pump bulk lasers are discussed; Er:YAG is one bulk material that has been employed in hybrid designs.

• Tm3+ and Ho3+. As with Er-doped lasers in the 1,550-nm region, the common laser transitions of Tm- and Ho-doped materials in the 1,900- to 2,100-nm wavelength region, also eye-safe, terminate on the ground manifold and are thus 3½-level systems. Of the two ions, Ho generally has higher laser-transition cross sections (by a factor of 5 in YAG, for example), but it has limited and weak absorption transitions for either lamp pumping or diode pumping. A common pumping scheme employs Tm sensitization of the Ho laser, with Cr3+ sensitization added for lamp pumping. Pumping of Tm in the region around 795 nm has the special property that, with a high enough Tm doping level, cross relaxation between pairs of nearby ions generates two ions in the upper laser level per absorbed pump photon. Recent developments in diode-laser materials show promise for efficient, high-power pump lasers that can drive the upper laser levels of Tm or Ho. These ions are of interest for a variety of eye-safe active sensors, including coherent-detection wind-sensing systems. Ho-doped bulk materials also feature in hybrid bulk-fiber solid-state lasers, discussed below.

• Pr3+. All of the ions discussed so far generate wavelengths in the infrared. Pr-doped materials have laser transitions at green and red wavelengths, as well as some near-IR transitions. The ion has narrow, weak pump transitions at blue and shorter wavelengths and suffers from cross-relaxation processes that reduce laser efficiency at high doping levels; as a result, Pr-doped lasers can barely operate with lamp pumping. The emergence of GaN-based blue diode lasers has made it possible to construct diode-pumped systems, and as GaN technology improves, Pr-doped lasers may emerge as a new source of direct laser operation in the visible wavelength range, with possible applications in underwater sensing.
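Two of the wavelength arguments in the list above, the reduced thermal load of Yb:YAG and the two-for-one pumping of Tm, can be checked with photon-energy ratios. The sketch below assumes the heat deposited per absorbed pump photon is set by the quantum defect alone and that, in the Tm case, ideal cross relaxation yields exactly two upper-level ions per pump photon; 2,000 nm is taken as a representative Tm wavelength within the 1,900- to 2,100-nm range, and the function names are illustrative. With the quantum defect as the only heat source, the Yb:YAG ratios come out near 0.36 and 0.51, close to but slightly below the 38 and 53 percent quoted above, which may account for heating mechanisms this sketch ignores.

```python
def quantum_defect(pump_nm: float, laser_nm: float) -> float:
    """Fraction of each absorbed pump photon's energy left as heat,
    assuming one laser photon per absorbed pump photon."""
    return 1.0 - pump_nm / laser_nm

def stokes_limited_efficiency(pump_nm: float, laser_nm: float,
                              ions_per_pump_photon: float = 1.0) -> float:
    """Upper bound on optical-to-optical efficiency: photon-energy ratio
    times the number of upper-level ions created per absorbed pump photon."""
    return ions_per_pump_photon * pump_nm / laser_nm

# Thermal load of Yb:YAG relative to Nd:YAG (quantum defect only)
yb = quantum_defect(941, 1030)
ratio_808 = yb / quantum_defect(808, 1064)
ratio_885 = yb / quantum_defect(885, 1064)
print(f"Yb:YAG vs Nd:YAG (808-nm pump): {ratio_808:.2f}")
print(f"Yb:YAG vs Nd:YAG (885-nm pump): {ratio_885:.2f}")

# Tm pumped near 795 nm, lasing at a representative 2,000 nm
tm_one = stokes_limited_efficiency(795, 2000)
tm_two = stokes_limited_efficiency(795, 2000, ions_per_pump_photon=2.0)
print(f"Tm efficiency limit: {tm_one:.3f} (1 ion/photon), {tm_two:.3f} (2 ions/photon)")
```

Without cross relaxation, a 795-nm pump could convert at most about 40 percent of its power to 2-µm light; the two-for-one process doubles that ceiling, which is why high Tm doping levels are attractive.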

Energy Storage and Q-Switching

Pumping power, either electrical for diodes or optical for solid-state lasers, leads to excitation of the upper laser level. If there is no laser operation and the pump power is turned off, the energy stored in the laser material decays away by spontaneous emission of photons and, in some materials, also by generation of phonons through nonradiative relaxation. In most cases, the decay is exponential and is characterized by a single number, the upper-state lifetime or “storage” time. For a given pump power, the stored energy is directly proportional to the decay time, provided that only a small fraction of the ions in the material have been excited. With laser action, the stimulated emission process that gives rise to optical gain extracts the stored energy. The rate of extraction (or, inversely, the stimulated lifetime) depends on the power in the laser beam but is of the same order as that of spontaneous emission.

For semiconductor lasers, storage times are in the nanosecond range at best and shorter still in quantum-cascade devices. Transitions in solid-state lasers have storage times between 0.1 and 15 ms, with the notable exception of the 3.2-µs lifetime of Ti:sapphire. Thus, per watt of pump power, stored energies are in the nanojoule range for diode lasers and the millijoule range for solid-state lasers.
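The proportionality between stored energy and storage time follows from a one-line rate equation, dE/dt = P − E/τ, whose steady state is E = Pτ. A minimal sketch, assuming every watt of pump power feeds the upper level (quantum defect and absorption losses ignored) and that only a small fraction of ions is excited, as the text stipulates; the lifetimes are the values quoted above and the function names are illustrative:

```python
import math

def steady_state_stored_energy_j(pump_power_w: float, lifetime_s: float) -> float:
    """Steady state of dE/dt = P - E/tau, i.e. E = P * tau (small-excitation limit)."""
    return pump_power_w * lifetime_s

def stored_energy_after_j(initial_energy_j: float, lifetime_s: float,
                          t_s: float) -> float:
    """Energy remaining t_s seconds after the pump is switched off
    (single-exponential decay with the upper-state lifetime)."""
    return initial_energy_j * math.exp(-t_s / lifetime_s)

lifetimes_s = {
    "diode laser (~1 ns)": 1e-9,
    "Ti:sapphire (3.2 us)": 3.2e-6,
    "solid-state, short (0.1 ms)": 0.1e-3,
    "solid-state, long (15 ms)": 15e-3,
}
for name, tau in lifetimes_s.items():
    energy_j = steady_state_stored_energy_j(1.0, tau)
    print(f"{name:30s} {energy_j:.1e} J per watt of pump")
```

The six-orders-of-magnitude gap between nanojoules and millijoules per watt comes entirely from the lifetime ratio, which is why storage time is the key parameter for pulsed operation.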

____________________

11 T. Yanagisawa, K. Asaka, K. Hamazu, and Y. Hirano, 2001, “11-mJ, 15-Hz single-frequency diode-pumped Q switched Er, Yb:phosphate glass laser,” Opt. Lett. 26: 1262.

The ability to store large amounts of energy in the laser medium is one of the key advantages of bulk solid-state lasers and is unique to them. (As noted below, fiber-format lasers, although capable of high CW power levels, have major challenges in storing energy.) The process of Q-switching allows bulk materials used as laser oscillators to deliver the stored energy in very short pulses, thereby generating high peak powers useful for many applications, including, notably, active sensing.
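The peak-power advantage of Q-switching can be illustrated with energy divided by pulse duration. The numbers below are hypothetical: 10 W of pump into a medium with a 1-ms storage time, pumped for several storage times, stores at most about 10 mJ (E = Pτ, ignoring all losses), which is then assumed to be released in a 10-ns rectangular pulse. Real extraction efficiencies are lower and real pulses are not rectangular, so this is an upper-bound sketch; the function name is illustrative.

```python
def peak_power_w(pulse_energy_j: float, pulse_duration_s: float) -> float:
    """Rectangular-pulse approximation: peak power = energy / duration."""
    return pulse_energy_j / pulse_duration_s

# Hypothetical inputs (not from the text): 10 W pump, 1-ms storage time
pump_w = 10.0
storage_time_s = 1e-3
stored_j = pump_w * storage_time_s      # ~10 mJ upper bound, E = P * tau

pulse_s = 10e-9                         # assumed Q-switched pulse duration
peak_w = peak_power_w(stored_j, pulse_s)
print(f"Stored energy ~ {stored_j * 1e3:.0f} mJ; peak power ~ {peak_w / 1e6:.1f} MW "
      f"from {pump_w:.0f} W of average pump power")
```

A five-orders-of-magnitude gain in peak power over the average pump power is what makes Q-switched bulk lasers attractive for ranging and other active-sensing tasks.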