Analysis Through Triangulation and Synthesis to Interpret Data in a Mixed Methods Evaluation

Important Points Made by the Speakers

- Synthesis and triangulation among multiple sources of information and multiple types of methods can strengthen the quality and credibility of the evidentiary support for findings and recommendations, especially in complex interventions where any single data source will have inherent limitations.

- A theory of change provides an analytical framework for triangulation, but the theory may need to change as data are analyzed.

- Triangulation has benefited from the development of protocols, procedures, and methodologies.

- Triangulation benefits from multidisciplinary teams of investigators.

In this session, the workshop explored some of the key considerations of data analysis and interpretation for a complex, mixed methods evaluation, and particularly the use of triangulation and synthesis among multiple complementary sources of information and multiple evaluators to enhance the robustness, quality, and credibility of the evidence for evaluation conclusions and recommendations.

Triangulation, explained session moderator Carlo Carugi, who is senior evaluation officer and team leader at the Global Environmental Facility (GEF), refers to the use of multiple sources of qualitative information, quantitative information, and data collection and analysis methods to arrive at evaluation findings or conclusions. “In research, [triangulation] is usually done either to validate the results in a study or to deepen and widen one’s understanding and insights into study results.” He noted that the literature contains several articles describing how data, theories, and methods are triangulated in a range of fields, but there are also articles critical of triangulation because of its lack of theoretical or empirical justification and its ad hoc nature. The development of standardized protocols, procedures, and methodologies for triangulation has helped address this criticism.

TRIANGULATION IN THE GLOBAL ENVIRONMENTAL FACILITY

Carugi described the use of triangulation in the GEF, which is a partnership among 183 countries with international institutions, civil society organizations, and the private sector to address global environmental issues while supporting national sustainable development initiatives. In the evaluation process, methodological triangulation is most commonly used in situations where data are unreliable or scarce. For example, the GEF uses triangulation among methods and observers in its country portfolio evaluations. Triangulation reduces the risk of giving excessive importance to the results of one method over those of the other methods used to analyze the collected data.

Common challenges the GEF faces when evaluating country portfolios, Carugi said, include the absence of country program objectives and indicators over the 20 or so years of a typical evaluation and the scarcity or unreliability of national statistics on environmental indicators and data series over that time frame, particularly in the least developed countries. He noted, too, that because the GEF is a partnership institution, it has no control authority over the national monitoring and evaluation systems that feed data into the GEF’s central monitoring information system hub. There are also challenges in evaluating the effects of GEF projects and establishing attribution, as well as the intrinsic difficulties in defining the GEF portfolio of projects prior to undertaking an evaluation.

To address these challenges, the GEF has adopted an iterative and inclusive approach that engages stakeholders during the evaluation process to help identify and address information and data gaps. This step is essential at the country level, said Carugi. The GEF conducts original evaluative research, including theory-based approaches, during the evaluation to assess progress toward desired impacts in the face of sparse data. It also uses qualitative methods and mixes emerging evidence with available quantitative data through systematic triangulation with the ultimate goal of identifying evaluation findings.

Carugi said the GEF’s country portfolio evaluations are all conducted in a standardized way using standard terms of reference and questions for comparability purposes. The initial terms of reference are made country specific through stakeholder consultation during a scoping mission to the country. The GEF then uses a standard set of data gathering methods and tools that include methods such as desk and literature reviews, portfolio analyses, and interviews, in addition to GEF-specific methods, such as analyses of a country’s environmental legal framework and reviews of outcomes to impact, which is a theory-based approach to examine the progress from outcomes to impact. All of these methods are deployed within the context of an evaluation matrix that the GEF develops for each evaluation.

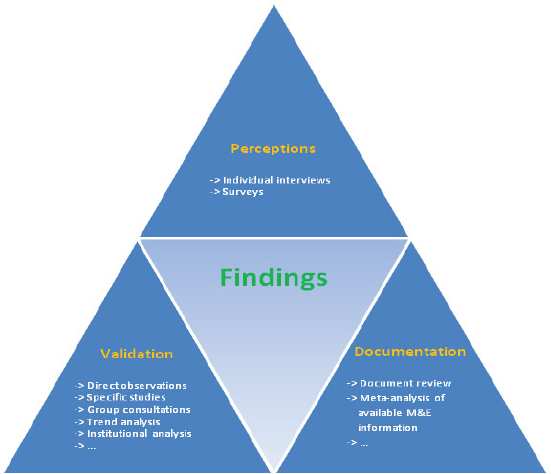

The evaluation matrix then feeds into a triangulation matrix. “We categorize the evaluative evidence in three major research areas of perceptions, validation, and documentation,” said Carugi, explaining that perceptions, while not always reflecting reality, need to be accounted for in these evaluations (see Figure 8-1). In the triangulation process, the evaluation team

FIGURE 8-1 Three major areas of evaluative evidence in the Global Environmental

Facility country program evaluations, as presented by Carugi.

NOTE: M&E = monitoring and evaluation.

SOURCE: Carugi, 2014.

brainstorms question by question to populate the matrix and discuss which findings are real and which need further analysis. “After the triangulation brainstorming meeting, we go back to the drawing board and try to confirm or challenge the key preliminary evaluation findings and try to identify what we can do to fill in the missing key preliminary evaluation findings,” said Carugi.
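As a rough illustration of how such a triangulation matrix might be organized, the Python sketch below groups pieces of evidence by evaluation question and by the three evidence areas Carugi named. The `Evidence` fields, the source labels, and the classification rule in `classify` are assumptions made for illustration; they are not the GEF's actual tools or decision criteria.

```python
from collections import defaultdict
from dataclasses import dataclass

# The three evidence areas named by Carugi.
AREAS = ("perceptions", "validation", "documentation")


@dataclass(frozen=True)
class Evidence:
    question: str   # evaluation question taken from the evaluation matrix
    area: str       # one of AREAS
    source: str     # hypothetical label, e.g. "interviews"
    supports: bool  # does this evidence support the preliminary finding?


def build_matrix(items):
    """Group evidence into {question: {area: [Evidence, ...]}}."""
    matrix = defaultdict(lambda: {area: [] for area in AREAS})
    for item in items:
        if item.area not in AREAS:
            raise ValueError(f"unknown evidence area: {item.area}")
        matrix[item.question][item.area].append(item)
    return dict(matrix)


def classify(row):
    """Label one question's row. The rule is an illustrative assumption."""
    covered = [area for area in AREAS if row[area]]
    if len(covered) < 2:
        return "gap"  # too few evidence areas to triangulate
    verdicts = {e.supports for area in covered for e in row[area]}
    if verdicts == {True}:
        return "confirmed"
    if verdicts == {False}:
        return "not supported"
    return "needs further analysis"  # evidence areas disagree
```

In this sketch, a question labeled "gap" or "needs further analysis" corresponds to the items the team would take back to the drawing board after the brainstorming meeting.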

DEVELOPING A DEEPER AND WIDER UNDERSTANDING OF RESULTS

As described previously, the AMFm evaluation, said Catherine Goodman, senior lecturer in health economics and policy in the Department of Global Health and Development at the London School of Hygiene and Tropical Medicine, was a before-and-after study that did not have control areas. The study used outlet surveys at baseline and endline, household survey data for some of the countries being evaluated, and documentation of key contextual factors. The focus of Goodman’s talk was on using triangulation of the data gathered through different sources to deepen and widen the AMFm evaluation team’s understanding of the evaluation results.

The AMFm evaluation team developed a theory of change to depict the causal pathways through which AMFm interventions were intended to work. This theory of change was used to target the collection of quantitative and qualitative data that would be used to prepare case studies for each country. To understand the AMFm implementation processes and contextual factors within each country, the evaluation team collected qualitative data through key stakeholder interviews with public- and private-sector actors and reviewed key documents. The evaluation team also used some quantitative data from the outlet surveys on process-related outcomes such as coverage of training and exposure to communications messages. The evaluators analyzed these data separately for each country case study and then synthesized findings across countries.

The country case studies allowed the evaluation team to understand the level of AMFm implementation within each country and to compare implementation strength across countries. These data were helpful for interpreting the survey results related to outcomes and key success metrics. For example, Madagascar only met one success metric out of six, but the data from the case study revealed some process and contextual reasons for the country’s relatively poor performance. Orders for medication were low to begin with because of low confidence among importers, and the information and communications campaign was limited because in-country regulators decided that direct-to-consumer advertising was inappropriate and banned the practice 1 month into the program. In addition, several activities in the country muddled the baseline measures, and a coup d’état and deterioration of the country’s economy during the pilot study further complicated matters. “I am not sure if I could say which one of these is the most important or if you were to remove one of those problems things would have been okay. It is not possible to really go that far, but it does help us identify some of the factors contributing to the poor performance.”

While triangulating data from multiple sources deepened the evaluators’ understanding of evaluation results within countries, synthesizing the findings across countries contributed to an understanding of how an AMFm intervention could work in other countries in the future by identifying the key factors that contributed to strong performance as well as those associated with weaker performance. She noted that one of the lessons learned from this type of evaluation is how important it is to document the process of implementation through a theory of change model when such a large-scale, complex intervention is being implemented in a messy, real-world setting. “Context was incredibly important,” she said, and “context probably made the most crucial difference between countries” in terms of performance.

One of the major challenges inherent in multidisciplinary science, said Jonathan Simon, Robert A. Knox Professor and director of the Center for Global Health and Development at Boston University, is working with the multidisciplinary team of investigators needed to do good multidisciplinary science. One ingredient of success, he continued, is respectful skepticism. The President’s Malaria Initiative evaluation team took an approach in which for every key issue it matched the team member who had the dominant relevant expertise with somebody who had completely different expertise, or as Simon put it, “somebody who would just take a different perspective such that when our team got together there were two presentations of their interpretation.” This led to a richer understanding of the analysis, which Simon described as “a healthy approach.”

Simon briefly discussed why discordant results do not worry him. Timing, for example, is one factor that can produce discordance if events such as management changes occur during the course of an evaluation. “On all of these evaluations, we are looking at 3-, 5-, and 8-year periods, and we should be very careful about the timing of the slice of information from the informant or from the survey, given that these are retrospective looks.” He suggested performing an analysis of discordance that looks at the different sources of information, the different methods being used, and the different levels of analysis to try to make sure that findings are as rich as possible.
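One way to picture the analysis of discordance Simon suggested is the minimal Python sketch below. For each issue where observations point in different directions, it lists the dimensions (source, method, level of analysis, and time period) on which those observations differ. The record layout and field names are hypothetical and chosen only for illustration.

```python
from collections import defaultdict

# Dimensions Simon mentioned, plus timing; the key names are assumptions.
DIMENSIONS = ("source", "method", "level", "period")


def discordance_report(observations):
    """Return {issue: {dimension: sorted distinct values}} for discordant issues.

    Each observation is a dict with the keys "issue", "direction", and every
    name in DIMENSIONS. An issue is discordant when its observations do not
    all share the same "direction".
    """
    by_issue = defaultdict(list)
    for obs in observations:
        by_issue[obs["issue"]].append(obs)

    report = {}
    for issue, group in by_issue.items():
        if len({o["direction"] for o in group}) < 2:
            continue  # all observations agree, nothing to explain
        report[issue] = {
            dim: sorted({o[dim] for o in group})
            for dim in DIMENSIONS
            if len({o[dim] for o in group}) > 1
        }
    return report
```

The output does not resolve a disagreement. It only shows where the disagreeing observations differ, for example in the time period they cover, which is the kind of timing effect Simon cautioned about.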

Sangeeta Mookherji, assistant professor in the Department of Global Health at George Washington University, spoke about the lessons she has learned conducting large-scale mixed methods evaluations. She began by saying one of the biggest concerns with this type of evaluation is ensuring that data quality is as good as it can be in order to have the highest confidence possible in the results of the evaluation. “We need to think about validity and reliability regardless of what method we are using, but triangulation is one method to help with these things,” said Mookherji. She added that there are different ways of doing triangulation and that “you need to be mindful of choosing an appropriate way depending on what your objective is.” The theory of change is the analytical framework for triangulation or any other analytical method used, said Mookherji. However, she noted, “You have to be willing to also approach your theory with healthy skepticism and make the modifications and changes as you learn from your data and learn through your analysis and validation.”

Drawing from her experiences conducting multiple mixed methods evaluations, she noted that triangulation approaches, or processes for integrating or combining qualitative and quantitative data, can vary depending on the questions being addressed by the evaluation. It can also depend on the data sources and the formats of the data, including the available software and other tools for working with the data.

For a multicountry case study of routine immunization in sub-Saharan Africa funded by the Bill & Melinda Gates Foundation, which was asking what has driven improvements in immunization performance, Mookherji described how the evaluation team created systematic formats that every analyst used to extract information from the raw data and put it into categories that they then grouped by theme. “That is how we started to [ask] are we actually reaching consensus on an understanding of a key preliminary finding? [The findings then] went back to the external evaluation advisory group, as well as the larger team and the country teams, for validation,” Mookherji explained. This integration process, she said, enabled the team to understand what it was validating and how it was using triangulation.
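The consensus check Mookherji described could be approximated along the following lines. This Python sketch assumes each analyst's extraction has already been reduced to (analyst, theme) pairs for one preliminary finding. The 0.75 threshold and the function name are illustrative assumptions, not part of the evaluation team's actual format.

```python
from collections import defaultdict


def theme_agreement(extractions, min_share=0.75):
    """Summarize how many analysts coded each theme.

    extractions: list of (analyst, theme) pairs for one preliminary finding.
    Returns (shares, consensus), where shares maps each theme to the fraction
    of analysts who coded it and consensus lists themes at or above min_share.
    """
    analysts = {analyst for analyst, _ in extractions}
    if not analysts:
        return {}, []

    coded_by = defaultdict(set)
    for analyst, theme in extractions:
        coded_by[theme].add(analyst)  # a set ignores duplicate codings

    shares = {theme: len(who) / len(analysts) for theme, who in coded_by.items()}
    consensus = sorted(theme for theme, share in shares.items() if share >= min_share)
    return shares, consensus
```

Themes that fall below the threshold would not be discarded in practice. They are candidates for the validation step with the advisory group and country teams that Mookherji described.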

Mookherji then spoke about synthesizing knowledge across countries. “Are there generalizable and relevant findings that you can draw out of the context and use in a wider perspective? That is really where I find that we need to do better with mixed methodologies,” she said. “We need to articulate what we need to do to ensure legitimate synthesis and legitimate drawing of more generalizable findings.” Validating preliminary findings within the evaluation team is an important first step, she said, and for that it is important to have divergent perspectives within the team to continually challenge any conclusions being made. Mookherji added that she has teams doing qualitative interviews so that two people are listening to every response and can make sure they both heard the same thing. She also includes an articulated process for alternative theory testing to ensure her team challenges its assumptions about the pathways by which an outcome or impact was achieved or not achieved. Finally, looking at the findings in terms of context is important when synthesizing findings.

LESSONS FROM THE IOM’S PEPFAR EVALUATION

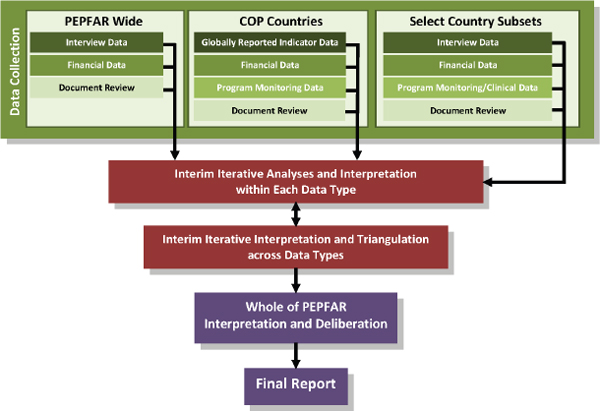

In this session’s final presentation, Bridget Kelly, senior program officer with the IOM Board on Global Health and the IOM/NRC Board on Children, Youth, and Families, spoke about the many layers of embedded and integrated triangulation in the IOM’s evaluation of PEPFAR, for which she was the study co-director. The many layers were needed to deal with different data sources, different investigators, and different subsets of the initiative. She noted that this analysis sometimes came up with discordant results that were not discarded but instead reported along with any evidentiary support for such findings. However, the overall process sought to find resonances among data sources to enhance trust in the reliability, credibility, and applicability of conclusions (see Figure 8-2).

One issue with the analysis, she noted, was that few data sources were available consistently across the entire PEPFAR program. The evaluation team did not try to integrate data. Instead, said Kelly, “We thought about integration happening at the level of analyses and interpretation,” acknowledging that “there is sometimes a gray area between what is data and what is analysis because you are thinking about what the data mean even as you are looking at the data.”

Instead of integrating data, the evaluation team focused on using rigorous methods for data collection and analysis within each type of data and matching the appropriate analytical methods to the different types of data. It was important, she explained, to document clearly what analytical methods were used for each type of data to ensure that the analysis was as purposeful and rigorous as possible. You want to have “mixed methods, and not just mixed up methods,” said Kelly, borrowing a phrase used previously by Larry Aber of New York University.

One strength of the evaluation process was that with a single institution managing the entire evaluation, the staff were working closely together at all times, which enabled the team to engage in frequent group discussions. The team also engaged in an iterative process of reading and responding to questions from committee members and each other that enabled the development of narratives that were consistent within countries and from which broader findings could be drawn. Kelly noted that this type of triangulation across evaluators within the entire team of committee members, consultants, and staff continued throughout the entire analysis and deliberation phase of the evaluation.

FIGURE 8-2 The PEPFAR evaluation used multiple types and sources of data in an iterative triangulation process for integrated analysis and interpretation.

NOTE: COP = Country Operational Plan.

SOURCE: IOM, 2013.

Because this process was highly iterative, in some cases it resulted in having to go back to the original data or asking for more data. For example, once the team examined all of the program monitoring data that were available across the countries that were within the scope of the evaluation, it was able to get a sense of how limited the findings would be from that data source alone. As a result, the team requested data from other sources, such as CDC. From the team’s perspective, this triangulation process strengthened the quality and credibility of the evidentiary support for its findings and recommendations.

One drawback to this approach is that it is time consuming, said Kelly. At any one time, the evaluation team had 8 staff, 3 consultants, and 20 committee members. “That is a lot of people thinking, and ultimately all 20 of our committee members had to reach consensus on our conclusions and recommendations,” she noted. Not only was this process time consuming, but it was also operationally challenging because it forced the IOM staff to plan for draft data presentations that the committee could examine and respond to in a way that was both iterative and efficient.

OTHER TOPICS RAISED IN DISCUSSION

During the discussion session, several workshop participants raised the question of whether triangulation is possible between just two sources of data, such as one source of quantitative and one source of qualitative data. Several panelists agreed that two sources are often not enough to have confidence in results. “That is why I think multiple members of our panel said it is multiple methods, not always mixed methods,” said Simon. Kelly noted, however, that it depends on the data available and the evaluation question. Sometimes it might take eight data sources to trust a conclusion, while on narrow issues one reliable data source may be sufficient.

Mookherji emphasized the importance of context, which sometimes gets removed in the course of analysis. At that point it becomes necessary to look at theory again and understand the effects of context. Common elements across different contexts can serve as a form of triangulation, she said, even though each setting also can have discordant elements.

In response to a question, Kelly described the process in general of arriving at consensus on the IOM committees. Recommendations are often the areas where the strongest resonance emerges among committee members, as well as areas that are most important for the study. Reports also contain information that is not necessarily at this level, though all committee members have to agree to author the final report. In some areas, it may be possible only to state findings and not progress to conclusions or recommendations.

Panelists also discussed the need for rigor when using mixed methods. Data gathering and analyses need to be as transparent as possible, said Mookherji, even if they are never completely reproducible. Publishing a protocol in advance and sticking to it is one way to achieve rigor, Goodman noted, but in an evaluation the protocol may need to change if the pathway to impacts is unclear. Data gathering and analysis may need to be iterative, though this process also can be documented. Simon observed that the level of rigor required for decision making and program improvement is not a fixed entity. Evaluators do the most rigorous analysis necessary to meet the needs of a program, and that level is highly variable. “Often we allow rigor to get in the way of utility and usefulness,” he said.