7

Derivation of Toxicity Values

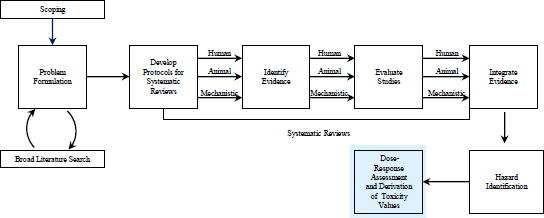

This chapter addresses the derivation of quantitative indicators of toxicity in the Integrated Risk Information System (IRIS) process (see Figure 7-1). By this stage in the IRIS process, evidence has been collected and evaluated, clearly defined adverse outcomes have been identified, and different streams of evidence have been integrated for hazard identification. The next phase in an IRIS assessment is to quantify the hazards through the computation of toxicity values—reference doses (RfDs), reference concentrations (RfCs), or unit risks—when the dose-response data support such computation. To clarify discussions in this chapter, the committee provides definitions of some terms and concepts used in this phase in Table 7-1.

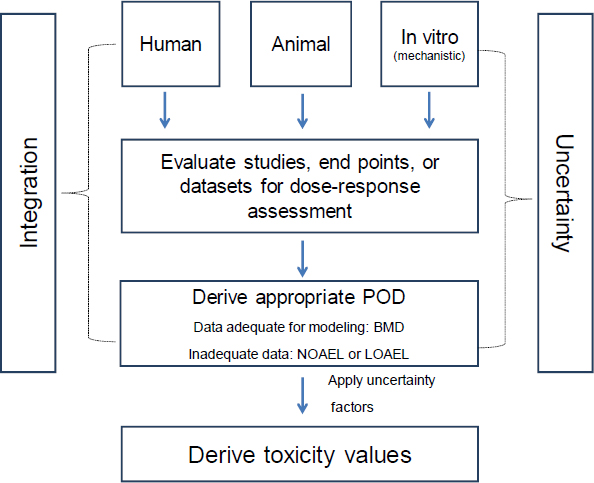

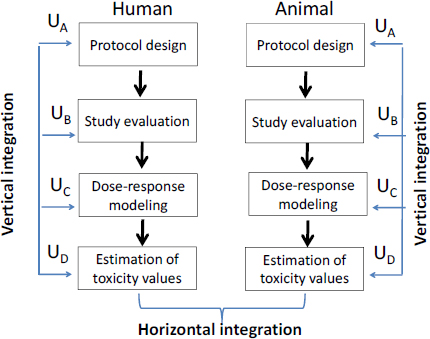

The derivation of a toxicity value consists of several steps as depicted in Figure 7-2, which is an expansion of the “Dose-Response Assessment and Derivation of Toxicity Values” box in Figure 7-1. The first step involves the evaluation of the human, animal, and in vitro (mechanistic) studies to determine whether the reported dose-response data are sufficient for dose-response modeling and assessment. Multiple studies with several end points are likely to be considered for dose-response assessment. The next step involves conducting a dose-response assessment and determining a point of departure (POD), which is used as the starting point for later extrapolations and analyses. Dose-response modeling is conducted when the data are adequate. In those cases, either an effective dose (ED) for cancer effects or a benchmark dose (BMD) for noncancer effects is estimated, together with its lower confidence limit. If the data are inadequate for modeling, as sometimes occurs for noncancer effects, the dose-response assessment determines a no-observed-adverse-effect level (NOAEL) or, when a NOAEL cannot be determined, a lowest observed-adverse-effect level (LOAEL). The next step involves calculation of a unit risk for cancer effects or of an RfD or RfC for noncancer effects (EPA 2002). Reference values are often calculated by using a BMD (or its lower confidence limit) or a NOAEL (or a LOAEL when a NOAEL is unavailable) and then applying one or more uncertainty factors. Although the NOAEL-LOAEL approach remains in practice, the BMD approach is preferred because it makes fuller use of the dose-response data and reduces uncertainty (EPA 2012).
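As a minimal sketch of the final step in Figure 7-2, a reference value can be computed by dividing a POD by the product of the applied uncertainty factors. The POD and the factor values below are hypothetical, chosen only for illustration; the factor labels follow common EPA usage (interspecies, intraspecies, subchronic-to-chronic), but which factors apply and their sizes are chemical-specific judgments.

```python
from math import prod


def reference_value(pod, uncertainty_factors):
    """Divide a point of departure by the product of the uncertainty factors.

    pod: POD in the units of the reference value (e.g., a BMDL in mg/kg-day).
    uncertainty_factors: mapping of factor name -> numeric factor.
    """
    composite_uf = prod(uncertainty_factors.values())
    return pod / composite_uf


# Hypothetical inputs for illustration only.
bmdl = 5.0  # mg/kg-day
ufs = {
    "interspecies (UF_A)": 10,
    "intraspecies (UF_H)": 10,
    "subchronic-to-chronic (UF_S)": 3,
}

rfd = reference_value(bmdl, ufs)
print(f"composite UF = {prod(ufs.values())}, RfD = {rfd:.3g} mg/kg-day")
```

Because the factors multiply, the composite factor (here 300) can dominate the result, which is one reason the committee asks for displays of how uncertainty factors affect the estimates.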

FIGURE 7-1 The IRIS process; the step for dose-response assessment and derivation of toxicity values is highlighted. The committee views public input and peer review as integral parts of the IRIS process, although they are not specifically noted in the figure.

TABLE 7-1 Definitions of Terms Related to Derivation of Toxicity Valuesa

| Term | Definition |
| --- | --- |
| Dose-response relationship | The relationship between the level of exposure to a chemical (dose) and the probability or magnitude of a biologic response. |
| Benchmark dose (BMD) or effective dose (ED) | An exposure level determined from a dose-response model that corresponds to a specified level of response, often 1-10% in excess of the control response. The response level is indicated by a subscript; for example, ED10 is the effective dose corresponding to a 10% response. |
| Lower bound benchmark dose (BMDL) or lower bound effective dose (LED) | The lower bound of a confidence interval for the BMD or ED. The response level is indicated by a subscript; for example, LED10 is the lower bound on the dose corresponding to a 10% response. |
| No-observed-adverse-effect level (NOAEL) | “The highest exposure level at which there are no biologically significant increases in the frequency or severity of adverse effect between the exposed population and its appropriate control; some effects may be produced at this level, but they are not considered adverse or precursors of adverse effects” (EPA 2013a). In practice, however, the NOAEL is usually identified as the highest exposure level at which the response does not differ statistically significantly from the control. |
| Lowest observed-adverse-effect level (LOAEL) | “The lowest exposure level at which there are biologically significant increases in frequency or severity of adverse effects between the exposed population and its appropriate control group” (EPA 2013a). In practice, however, the LOAEL is usually identified as the lowest exposure level at which the response differs statistically significantly from the control. |
| Point of departure (POD) | A BMD or ED or its lower confidence limit; a NOAEL when a BMD is unavailable; or a LOAEL when a NOAEL is unavailable. A POD is used as the starting point for later extrapolations and analyses. |
| Unit risk or slope factor | The increase in the probability of cancer incidence or related risk per unit of dose or exposure, as determined from a POD (an effective dose or its lower confidence limit). It is also the slope of an implied linear dose-response relationship below the POD. |
| Reference dose (RfD) | “An estimate (with uncertainty spanning perhaps an order of magnitude) of a daily oral exposure to the human population (including sensitive subgroups) that is likely to be without an appreciable risk of deleterious effects during a lifetime” (EPA 2013a). |
| Reference concentration (RfC) | “An estimate (with uncertainty spanning perhaps an order of magnitude) of a continuous inhalation exposure to the human population (including sensitive subgroups) that is likely to be without an appreciable risk of deleterious effects during a lifetime” (EPA 2013a). |
| Reference value | A reference dose or reference concentration. |
| Central estimate | The “best” estimate of an unknown parameter, such as a BMD, often determined by maximum likelihood estimation or, in a Bayesian analysis, as the posterior mean. |
| 95% confidence interval or bounds | A range of plausible values for an unknown parameter, constructed so that, if the study were replicated many times, 95% of the intervals so constructed would contain the true value of the parameter. |
| Lower and upper bound | The two points that define a confidence interval. |

aReaders might also wish to consult the glossary at http://www.epa.gov/risk_assessment/glossary.htm.
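To make the BMD, LED, and slope-factor definitions concrete, the sketch below assumes a quantal-linear model, P(d) = g + (1 - g)(1 - exp(-b d)), for which the extra risk is 1 - exp(-b d) and the ED at a given benchmark response has a closed form. It then draws the straight line from the POD (LED10, 10%) to the origin, as in the unit-risk definition. The fitted slope parameter and the LED10 are hypothetical; in practice the lower bound comes from the modeling software's confidence-limit calculation, which is not reproduced here.

```python
import math


def ed_quantal_linear(b, bmr=0.10):
    """Dose giving extra risk `bmr` under the quantal-linear model
    P(d) = g + (1 - g) * (1 - exp(-b * d)), whose extra risk is 1 - exp(-b * d)."""
    return -math.log(1.0 - bmr) / b


def linear_slope(bmr, lower_bound_dose):
    """Slope of the straight line drawn from the POD (lower_bound_dose, bmr) to the origin."""
    return bmr / lower_bound_dose


b_hat = 0.02  # hypothetical fitted slope parameter, per mg/kg-day
led10 = 4.0   # hypothetical LED10 (mg/kg-day), assumed to come from modeling software

ed10 = ed_quantal_linear(b_hat)    # central estimate of the ED10
slope = linear_slope(0.10, led10)  # extra risk per mg/kg-day below the POD
low_dose = 0.001                   # mg/kg-day, well below the POD

print(f"ED10 = {ed10:.2f} mg/kg-day")
print(f"slope factor = {slope:.3g} per mg/kg-day")
print(f"extra risk at {low_dose} mg/kg-day = {slope * low_dose:.1e}")
```

Using the LED10 rather than the ED10 as the anchor makes the slope steeper, which is why the choice between a central estimate and a lower bound should be stated explicitly.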

FIGURE 7-2 Derivation of toxicity values. Data integration and uncertainty analysis must be considered in the process.

Toxicity values depend on several factors, including the choice of studies (study design), the health outcomes, the application of dose-response models, the selection of one or more PODs, and the choice of uncertainty factors. Differences among toxicity values are often attributable to variability (for example, differences due to species, sex, and age) and uncertainty (for example, unknown mechanism of action and choice of dose-response model or POD). Therefore, it is critical to consider systematic approaches to synthesizing and integrating the multitude of toxicity values in light of variability and uncertainty.

Derivation of toxicity values is governed by several Environmental Protection Agency (EPA) guidance documents, including A Review of the Reference Dose and Reference Concentration Processes (EPA 2002), Methods for Derivation of Inhalation Reference Concentrations and Application of Inhalation Dosimetry (EPA 1994), Guidelines for Carcinogen Risk Assessment (EPA 2005a), Supplemental Guidance for Assessing Susceptibility from Early-Life Exposure to Carcinogens (EPA 2005b), and Benchmark Dose Technical Guidance (EPA 2012). The topic of dose-response assessment was also discussed in the National Research Council (NRC) report Science and Decisions: Advancing Risk Assessment (NRC 2009), which identified the need to develop guidance related to the handling of uncertainty and variability and urged development of a unified dose-response assessment framework for chemicals that links the understanding of disease processes, mechanisms, and human heterogeneity in cancer and noncancer outcomes.

This chapter discusses the status of the EPA response to the recommendations in the NRC report Review of the Environmental Protection Agency’s Draft IRIS Assessment of Formaldehyde (NRC 2011) that concern the calculation of toxicity values. In addition, the committee describes current practice in deriving toxicity values, suggests some approaches that can help EPA to implement the formaldehyde report recommendations fully, and provides its findings and recommendations.

The 2011 NRC formaldehyde report recommended that EPA evaluate its methods used in the IRIS process to select studies for the derivation of reference values and unit risks. In particular, the report advocated that EPA “establish clear guidelines for study selection, balance study strengths and weaknesses, weigh human vs experimental evidence, and determine whether combining estimates across studies is warranted” (EPA 2013b, p. 15). The report did not specify the methods that EPA should use to develop guidelines and update its approaches. It also made a number of recommendations related to the calculation of reference values and unit risks (see Box 7-1).

As described in Chapter 1, the committee reviewed the EPA reports Status of Implementation of Recommendations (EPA 2013b) and Chemical-Specific Examples (EPA 2013c) and recent draft IRIS assessments for methanol (EPA 2013d) and benzo[a]pyrene (EPA 2013e) to compare progress made against the NRC formaldehyde report recommendations (NRC 2011) regarding calculation of toxicity values. In particular, the committee focused on EPA’s response to the major issues noted in the formaldehyde report, which included the need to establish clear guidelines for study selection and to describe, justify, and assess the assumptions and models used in deriving toxicity values.

EPA has made a number of responsive changes in the IRIS program since the publication of the NRC formaldehyde report, including (a) development of a process for study selection that requires transparent documentation of study quality, credibility of the evidence of hazard, and adequacy of quantitative dose-response data for determining a POD; (b) the derivation and graphical presentation of multiple toxicity values; and (c) documentation of the approach for conducting dose-response modeling. The new assessment template (EPA 2013b) includes a streamlined dose-response modeling output and consideration of organ-specific or system-specific and overall toxicity values. EPA has also developed tools and methods for managing data and ensuring quality in dose-response analyses. It stated that its objectives are “to minimize errors, maintain a transparent system for data management, automate tasks where possible, and maintain an archive of data and calculations used to develop assessments” (EPA 2013b, p. 17). The committee encourages EPA to meet the stated goal of having IRIS documents discuss model processes and derivation of toxicity values and associated uncertainties more completely.

Establish Clear Guidelines for Study Selection

The NRC formaldehyde report identified a need for clearly stated criteria for the selection of studies used to derive toxicity values. EPA acknowledges the need for selection criteria by stating that “once these studies have been identified, the basic criterion for selecting a subset for the derivation of toxicity values is whether the quantitative exposure and response data are available to compute a NOAEL, LOAEL or benchmark dose/concentration. When there are many studies, the assessment may focus on those that are more pertinent or of higher quality” (EPA 2013b, p. F-53). EPA provides additional guidance regarding the attributes used to evaluate studies for derivation of toxicity values (see Box 7-2), including balancing strengths and weaknesses and weighing human vs experimental evidence (EPA 2013b, Appendix B, Sections 3-6, and Appendix F, pp. F-53 to F-55). The committee encourages EPA to develop detailed criteria that take into consideration common technical issues. For example, group-average exposures, as represented by the median or mean exposure of each exposure group, are not as reliable as individual exposure measurements, and a dose-response model fitted to group-average exposures can distort the true underlying dose-response relationship. The committee also encourages EPA to summarize study information in a table that includes all end points, datasets for dose-response assessment, and toxicity-value derivations. Careful attention should be paid to study design, including the doses, dose spacing, and number of subjects.

• Describe and justify assumptions and models used. This step includes review of dosimetry models and the implications of the models for uncertainty factors; determination of appropriate points of departure (such as benchmark dose, no-observed-adverse-effect level, and lowest observed-adverse-effect level), and assessment of the analyses that underlie the points of departure.

• Provide explanation of the risk-estimation modeling processes (for example, a statistical or biologic model fit to the data) that are used to develop a unit risk estimate.

• Assess the sensitivity of derived estimates to model assumptions and end points selected. This step should include appropriate tabular and graphic displays to illustrate the range of the estimates and the effect of uncertainty factors on the estimates.

• Provide adequate documentation for conclusions and estimation of reference values and unit risks.

Source: NRC 2011, pp. 165-166.
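The committee's caution about group-average exposures can be illustrated numerically. When the individual dose-response curve is nonlinear, the average response of a group is generally not the response at the group's average exposure, so a model that assigns every member the group mean misplaces the curve. The logistic curve, its parameters, and the exposures below are all hypothetical.

```python
import math


def individual_risk(exposure, e50=5.0, steepness=1.0):
    """Hypothetical logistic probability of response for one individual."""
    return 1.0 / (1.0 + math.exp(-steepness * (exposure - e50)))


# Hypothetical, right-skewed individual exposures within one exposure group.
exposures = [1.0, 2.0, 4.0, 8.0, 25.0]

group_mean_exposure = sum(exposures) / len(exposures)
risk_at_mean_exposure = individual_risk(group_mean_exposure)
mean_individual_risk = sum(individual_risk(x) for x in exposures) / len(exposures)

print(f"mean exposure: {group_mean_exposure:.1f}")
print(f"risk evaluated at the group mean exposure: {risk_at_mean_exposure:.3f}")
print(f"average of individual risks:               {mean_individual_risk:.3f}")
```

Here the observed group response (the average of individual risks) is roughly half the value implied by plugging the mean exposure into the individual curve, so a curve fitted to group means would be shifted accordingly.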

Describe and Justify Assumptions and Models Used to Calculate Toxicity Values

EPA has developed guidance on modeling dose-response data, assessing model fit, selecting suitable models, and reporting POD modeling results (EPA 2005a,b; EPA 2012; EPA 2013b). The draft handbook (EPA 2013b, Appendix F) currently has a placeholder for detailed technical guidance on dose-response models and assumptions. The EPA Guidelines for Carcinogen Risk Assessment (Section 3.2, EPA 2005a) provides guidance on many issues raised by the NRC formaldehyde report, including choice of dosimetry, toxicodynamic models vs empirical curve-fitting, and choice of and narrative concerning POD. The present committee encourages EPA to complete the IRIS assessment preamble in accordance with EPA’s existing guidelines. It also encourages EPA to include more detailed technical guidance on model assumptions, model selection, and the modeling process in the draft handbook.

Provide Explanation of the Risk-Estimation Modeling Process

Because the draft handbook (EPA 2013b, Appendix F) and the draft preamble (EPA 2013b, Appendix B) are yet to be completed for the risk-estimation modeling process, the committee reviewed chemical-specific examples in EPA (2013c), specifically example 6 (“Dose-Response Modeling Output”) and example 7 (“Considerations for Selecting Organ/System-Specific or Overall Toxicity Values”), to assess the changes that EPA has made regarding this and related recommendations in the NRC formaldehyde report. The detailed presentation of dose-response modeling output and derivation of toxicity values is helpful. In example 6, EPA shows how multiple dose-response models (particularly empirical curves) can be fitted to a given dataset and how statistical criteria can be used to select a best model and then a toxicity value. Although that approach might remain acceptable under some circumstances, the NRC formaldehyde committee encouraged EPA to move away from that old paradigm and to develop approaches for integrating multiple toxicity values rather than selecting the one value or study that appears to be the “best.”

BOX 7-2 Considerations in Deriving Toxicity Values

• Species: a preference for the use of human data or mammalian data when human data are unavailable.

• Relevance of exposure paradigm: a preference for studies that use an environmentally relevant exposure route, sufficient exposure duration (chronic or subchronic studies when chronic toxicity values are developed), and multiple exposure levels.

• Potential selection bias: a preference, as appropriate, for studies with low risk of selection bias and higher participation and follow-up rates.

• Potential confounding: a preference for studies with a design (such as matching procedures) or analysis (such as procedures for statistical adjustment) that adequately address the relevant sources of potential confounding for a given outcome.

• Exposure measurements: a preference for studies that evaluate exposure during a biologically relevant time window for the outcome of interest, that use high-quality exposure assessment methods that reduce measurement error, that are not influenced by knowledge of health outcome status, and that include individual exposure measurements.

• Measurement of health outcome: a preference for studies using widely accepted, valid, and reliable outcome assessment methods. Measurement or assignment of the outcome should not be influenced by knowledge of exposure status.

• Power and precision: EPA evaluates the following factors when choosing studies: numbers of test subjects and doses and appropriate experimental design.

Source: EPA 2013b, p. F-54.

Example 6 also shows how EPA uses goodness-of-fit or information criteria in conjunction with the spread of the lower confidence limits on the BMD (BMDLs) to select a preferred model. Specifically, among all models that fit the data reasonably well (p > 0.1), the one with the lowest Akaike information criterion1 is chosen if the corresponding BMDLs are all within a factor of 3 of one another; otherwise, the model with the lowest BMDL is selected. Several implications of EPA’s criteria should be noted. First, although the criteria are easy to implement, EPA should articulate the pros and cons of adopting them. In particular, if the BMDLs derived from different models fitted to the same dataset differ by more than 3-fold, questions arise as to the consistency of the models below the benchmark-response level. In that case, the model that yields the lowest BMDL can be an outlier even though its selection might appear more protective. Choosing the smallest BMDL could also result in selection of the study of lowest quality (for example, the one with the smallest sample), because less precise estimates have wider confidence intervals and therefore lower BMDLs. Second, a goodness-of-fit test might fail to detect lack of fit in a small sample yet indicate poor fit for the same model when the sample is larger. Third, caution must be exercised if those criteria are used to compare models fitted to different datasets. Information criteria are designed to compare models with different numbers of parameters fitted to the same data under the same distributional assumption; their values are not comparable across datasets. Information criteria might also prefer a simpler model with fewer parameters because the underlying data are insufficient to show that a model with more parameters is statistically better, even if the more complex model is closer to the true dose-response relationship. Fourth, if only one toxicity value is selected, an opportunity to quantify uncertainty associated with the model, the model parameters, and the POD could be lost.

_____________________________

1The Akaike information criterion “is an estimate of a measure of fit of the model” (Akaike 1974, p. 716).
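The selection rule described for example 6 can be written out in a few lines, which makes its behavior easy to inspect. This is a sketch of the rule as stated in the text (p > 0.1 for adequate fit, a factor-of-3 spread in BMDLs), not EPA's BMD software; the model names, log-likelihoods, parameter counts, and BMDLs are hypothetical.

```python
from dataclasses import dataclass
from typing import List, Optional


@dataclass
class CandidateFit:
    name: str
    gof_p: float  # goodness-of-fit p-value
    aic: float    # Akaike information criterion
    bmdl: float   # lower confidence limit on the BMD


def aic(log_likelihood: float, n_params: int) -> float:
    """AIC = 2k - 2 ln L (Akaike 1974)."""
    return 2 * n_params - 2 * log_likelihood


def select_model(fits: List[CandidateFit], p_min: float = 0.1,
                 max_ratio: float = 3.0) -> Optional[CandidateFit]:
    """Sketch of the rule described for example 6."""
    adequate = [f for f in fits if f.gof_p > p_min]
    if not adequate:
        return None  # no model fits adequately; the rule gives no answer
    bmdls = [f.bmdl for f in adequate]
    if max(bmdls) / min(bmdls) <= max_ratio:
        return min(adequate, key=lambda f: f.aic)   # BMDLs agree: lowest AIC
    return min(adequate, key=lambda f: f.bmdl)      # BMDLs disagree: lowest BMDL


# Hypothetical fits to a single dataset.
fits = [
    CandidateFit("logistic", gof_p=0.45, aic=aic(-57.15, 2), bmdl=6.1),
    CandidateFit("Weibull", gof_p=0.62, aic=aic(-56.40, 3), bmdl=4.9),
    CandidateFit("multistage", gof_p=0.05, aic=aic(-60.00, 2), bmdl=2.0),
    CandidateFit("log-probit", gof_p=0.30, aic=aic(-57.50, 2), bmdl=1.8),
]

chosen = select_model(fits)
print(chosen.name if chosen else "no adequate model")
```

With these numbers the adequate models' BMDLs span more than a factor of 3 (6.1 vs 1.8), so the rule returns the log-probit fit for its low BMDL even though the logistic fit has the lowest AIC; removing the log-probit candidate flips the choice to the logistic model. A single candidate can thus drive the result, which is the kind of behavior the committee asks EPA to discuss.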

Example 6 illustrates dose-response modeling using EPA’s BMD software. That example demonstrates that particular model parameters are sometimes set to a default (or boundary) value with no explanation or justification. Example 6 also contains cases in which a saturated model, in which the number of parameters equals the number of dose groups, is fitted. A saturated model reproduces the observed group responses exactly and leaves no degrees of freedom for assessing goodness of fit, and it is well established that statistical estimation cannot accommodate a model that has more parameters than distinct data points (dose groups, in this case). Any single statistical criterion for model selection has its limitations and pitfalls. Thus, multiple criteria should be used simultaneously, and all details regarding assumptions and justifications of dose-response modeling should be included in the IRIS assessments.

It should be noted that most of the dose-response models implemented in EPA’s BMD software do not accommodate adjustment for covariates that are independent risk factors or confounders, whereas most epidemiologic studies adjust for multiple covariates. If smoking modifies the effect of an exposure, EPA’s BMD software currently requires separate dose-response models to be fitted for smokers and nonsmokers; this is feasible only if the study is large enough to provide adequate power within each group. However, if there are multiple covariates, including continuous ones, even a stratified dose-response assessment or group-specific toxicity-value estimation becomes much less feasible. There is a clear need for EPA to facilitate model and software development for dose-response modeling of studies that have more complex designs than single-generation, single-time-point settings. Examples include studies in which the exposure is time-dependent or the end point is measured over time (repeated-measurement experiments). EPA’s BMD software has implemented a beta version of dose-time-response models for neurobehavioral-toxicity end points. With those models, an RfD can vary greatly depending on when a neurobehavioral end point is observed (Zhu 2005; Zhu et al. 2005a,b). Those models allow the use of random effects to account for both between-subject and within-subject variability.
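As a sketch of covariate adjustment within one model, rather than separate stratified fits, the code below fits a binomial logistic model, logit P = b0 + b1*dose + b2*smoker, by iteratively reweighted least squares with NumPy, then solves for the dose that gives 10% extra risk over each stratum's background. The grouped data, the logistic form, and the helper names are hypothetical; this is not how EPA's BMD software works, and representing effect modification (as in the smoking example above) would require adding a dose-by-smoker interaction term.

```python
import numpy as np


def fit_logistic(X, y, n, iters=50, tol=1e-10):
    """Binomial logistic regression by iteratively reweighted least squares.
    X: design matrix (one row per group), y: responders, n: group sizes."""
    beta = np.zeros(X.shape[1])
    for _ in range(iters):
        p = 1.0 / (1.0 + np.exp(-X @ beta))
        w = n * p * (1.0 - p)               # binomial variance weights
        score = X.T @ (y - n * p)           # gradient of the log-likelihood
        info = X.T @ (X * w[:, None])       # Fisher information
        step = np.linalg.solve(info, score)
        beta = beta + step
        if np.max(np.abs(step)) < tol:
            break
    return beta


def bmd_extra_risk(beta, z, bmr=0.10):
    """Dose giving `bmr` extra risk over background for covariate value z,
    under logit P = b0 + b1*dose + b2*z (assumes b1 > 0)."""
    b0, b1, b2 = beta
    p0 = 1.0 / (1.0 + np.exp(-(b0 + b2 * z)))
    target = p0 + bmr * (1.0 - p0)
    return (np.log(target / (1.0 - target)) - b0 - b2 * z) / b1


# Hypothetical grouped data: dose, smoker indicator, responders, group size.
dose = np.array([0, 1, 2, 4, 0, 1, 2, 4], dtype=float)
smoker = np.array([0, 0, 0, 0, 1, 1, 1, 1], dtype=float)
cases = np.array([2, 4, 8, 17, 5, 9, 14, 26], dtype=float)
size = np.full(8, 50.0)

X = np.column_stack([np.ones_like(dose), dose, smoker])
beta = fit_logistic(X, cases, size)
for z in (0, 1):
    print(f"smoker={z}: BMD10 = {bmd_extra_risk(beta, z):.2f}")
```

Because extra risk is measured relative to each stratum's background, the BMD10 differs between smokers and nonsmokers even though the model has a single dose coefficient.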

Although the present committee commends EPA’s initiative in deriving an organ-specific, system-specific, or overall toxicity value among multiple candidates for various end points or from various studies, it has some concerns about EPA’s approach. The draft handbook (EPA 2013b, Appendix F) lists the following criteria for evaluating each candidate toxicity value: (a) strength of evidence of hazard for the health outcome or end point, (b) attributes previously evaluated in selecting studies for deriving candidate toxicity values, (c) the basis of the POD, (d) other uncertainties in dose-response modeling, and (e) uncertainties due to other extrapolations. On the basis of the criteria, the organ-specific or system-specific toxicity value might be based on a single candidate value that is considered to be the most appropriate for protecting against toxicity to the given organ or system. Alternatively, the value might be based on a derived composite value that is supported by multiple candidate toxicity values that protect against toxicity to the given organ or system. The present committee recommends that the result of the evaluation of individual toxicity values be presented in a tabular form to show which ones meet or fail to meet particular criteria. In example 7, EPA selects a particular RfD “because it is associated with the application of the smaller composite UF [uncertainty factor] and because similar effects were replicated across other studies” (EPA 2013c, p. 45). EPA should also make clear whether and how the criteria are weighed in determining the selected toxicity value to ensure that the process is transparent and consistent. The committee strongly suggests that EPA consider approaches to integration of as much of the evidence as possible rather than selecting a limited segment of the evidence in deriving an organ-specific, system-specific, or an overall toxicity value. The committee discusses the latter point further below.

Dose-response modeling and estimation of toxicity values can be improved when mechanistic data are available. Although incorporating mechanistic evidence into dose-response modeling remains challenging, potential benefits include natural integration of evidence among various end points with the same or similar mechanisms and facilitation of extrapolation among species and from higher to lower doses. It has also been suggested that mechanistic information can reveal the functional form of the dose-response relationship, but the correct functional form of a population dose-response curve cannot be determined solely from mechanistic information derived from animal studies and in vitro systems. Consider the threshold model, for example. As described in the NRC report Assessing Human Health Risks of Trichloroethylene: Key Scientific Issues (NRC 2006, pp. 318-323), the dose threshold separating the low-dose mechanism from the high-dose mechanism is likely to differ among individuals because of widely varied human environments and genetic susceptibilities; this often creates a sigmoidal population dose-response curve even if the dose-response relationship has a clear threshold in a single rodent species or cell line. The committee encourages EPA to develop and apply physiologically based models that incorporate both mechanism and human-population variability into dose-response modeling when feasible.
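The threshold argument can be sketched directly. Each individual is assumed to have a sharp threshold, but thresholds vary across the population; if they are assumed, for illustration only, to follow a lognormal distribution, the fraction responding at dose d is the lognormal cumulative distribution function, a smooth sigmoid (a probit in log dose) with no population-level threshold. The median threshold and geometric standard deviation below are hypothetical.

```python
import math


def individual_responds(dose, threshold):
    """An individual with a sharp threshold responds only above that threshold."""
    return dose > threshold


def population_fraction_responding(dose, median_threshold, gsd):
    """Fraction responding when individual thresholds are lognormally distributed
    with the given median and geometric standard deviation (gsd > 1)."""
    if dose <= 0:
        return 0.0
    z = math.log(dose / median_threshold) / math.log(gsd)
    return 0.5 * (1.0 + math.erf(z / math.sqrt(2.0)))


# Hypothetical values: median threshold 1.0 (arbitrary dose units), GSD 3.
for dose in [0.03, 0.1, 0.3, 1.0, 3.0, 10.0]:
    frac = population_fraction_responding(dose, 1.0, 3.0)
    print(f"dose {dose:>5}: typical individual responds = "
          f"{individual_responds(dose, 1.0)!s:5}, population fraction = {frac:.3f}")
```

The individual response jumps from "no" to "yes" at one dose, whereas the population fraction rises gradually and remains above zero at doses well below the median threshold.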

Assess the Sensitivity of Derived Estimates to Model Assumptions and End Points Selected

As advised in the NRC formaldehyde report (NRC 2011), EPA is adopting the principles of systematic review to select multiple studies, multiple end points, and multiple datasets for dose-response assessment and toxicity-value estimation. The availability of multiple datasets makes it possible to analyze the sensitivity and variability of the toxicity-value estimates to demonstrate uncertainties inherent in study design, population exposed, exposure estimate, mechanism of action, and model choice. The draft IRIS assessments for methanol (EPA 2013d) and benzo[a]pyrene (EPA 2013e) include easy-to-understand tabular and graphic displays that clearly show the PODs for selected end points with corresponding applied uncertainty factors to illustrate the range of the estimates and the effect of uncertainty factors on the estimates.

Provide Adequate Documentation for Conclusions and Estimation of Toxicity Values

Recent EPA IRIS assessments have included extensive detail on published studies, evaluation of the evidence base regarding toxicity, and pharmacokinetic and dose-response modeling. Elements in dose-response analysis for which additional documentation is needed include the decision processes used by EPA to select studies for derivation of an RfC, RfD, or unit risk; the process used to select a particular value for the RfC, RfD, or unit risk from a range of values determined by using separate studies; and the process used to select a response level (typically 1, 5, or 10%) for the POD. Although EPA provides some general guidance regarding those decisions, it is not always clear how it applied the guidance in the selection of final values for any particular chemical assessment. One way to enhance the documentation of the estimates is to use established systematic algorithms, such as meta-analysis, when more than one relevant study is available. Recognizing that subjective judgments are a feature of all systems for combining and evaluating evidence, the committee encourages EPA to be explicit and detailed regarding such judgments.

Additional Progress in Calculating Toxicity Values

EPA has developed standard descriptors to characterize the level of confidence in each reference value on the basis of the likelihood that the value would change with further testing (see Box 7-3). Development of the descriptors is consistent with guidelines for deriving recommendations from systematic reviews that evaluate the quality of evidence.

BOX 7-3 Standard Descriptors to Characterize Level of Confidence

• High confidence: The reference value is not likely to change with further testing, except for mechanistic studies that might affect the interpretation of prior test results.

• Medium confidence: This is a matter of judgment, between high and low confidence.

• Low confidence: The reference value is especially vulnerable to change with further testing.

Source: EPA 2013b, Appendix B, p. B-14.

Overall, the committee considers that EPA has made good progress in implementing the recommendations of the NRC formaldehyde report. Because implementation is a continuing process, the committee provides a brief review of several additional approaches relevant to the development of toxicity values that could be considered as the IRIS program continues to evolve. The committee has focused its attention largely on two subjects: (1) meta-analytic and Bayesian approaches and (2) the analysis and communication of uncertainty.

Combining Data for Dose-Response Modeling: Meta-Analytic and Bayesian Approaches

Historically, EPA has often selected a single “best” study to derive RfCs, RfDs, and unit risks. That approach might be preferable when one study is clearly more reliable and valid than all other studies for estimating human dose-response relationships. However, varying study strengths and weaknesses often preclude the identification of any one study as preferred for estimating such relationships. In those cases, it is preferable to use multiple studies to derive the RfCs, RfDs, and unit risks because that approach should provide more reliable estimates of human dose-response relationships than the use of a single study. Moreover, the use of multiple studies takes full advantage of the systematic-review process that led to evidence integration and reduces the potential for bias from selecting the most extreme study.

In recent assessments, EPA has embraced the use of multiple studies, primarily by estimating PODs, RfCs, RfDs, or unit risks separately for all relevant studies and then choosing final toxicity values from within the observed ranges. However, the process that EPA uses to select the final values is still not sufficiently transparent and appears somewhat subjective, and documentation varies among draft IRIS assessments. Formal statistical methods are widely available for combining estimates from multiple studies and might be useful for this step in the IRIS process. For example, meta-analysis (Stroup et al. 2000) and Bayesian hierarchical models (Sutton and Abrams 2001) have been used to combine information from various studies in many kinds of application.2 Those statistical approaches also can be used to combine toxicity estimates from dose-response studies related to multiple species given exchangeability assumptions (for example, rat and mouse studies are equally relevant surrogates for human dose-response relationships) or with modifications that give more weight to human studies.

_____________________________

2A Bayesian hierarchical model can also be used for meta-analysis.

Meta-Analytic Approaches

Information from multiple studies can be combined either at the individual level (pooling all observations from each study into one large data set that is used to fit a dose-response model) or at the aggregate level (collecting only the reported dose-response estimate from each study as in typical meta-analyses). The draft handbook (EPA 2013b, Appendix F) addresses that issue in two sections: “Considerations for Combining Data for Dose-Response Modeling” and “Considerations for Selecting Organ/System-Specific or Overall Toxicity Values.” The first section describes criteria for pooling data at the individual level, and the second section indicates that either using a single study or combining aggregate estimates from different studies to produce a composite toxicity value might be acceptable if the methods are documented.

In the first section, on pooled data analysis, EPA includes the following reasons for not combining data sets: heterogeneity in datasets because of differences in laboratory procedures, subject demographics, or route of exposure; and biologic or study-design limitations. Criteria that EPA uses to determine whether data should be combined include whether multiple studies have sufficient quality for deriving PODs, whether common specific outcome measurements are reported, whether common measures of dose or validated physiologically based pharmacokinetic models are available, whether exposure duration and observation duration are comparable, whether there is evidence of homogeneous responses to dose, and whether there is no clear preference for any single study. The criteria used by EPA are relevant, cautionary, and more restrictive than widely accepted approaches for analysis of pooled epidemiologic data (also called individual-level meta-analysis). For example, epidemiologic data from multiple studies that had disparate procedures, exposure duration, and participant demographics can often be combined before dose-response modeling by using appropriate methods and heterogeneity statistics or other empirical evaluations to judge the similarity of dose-response relationships among studies (Steenland et al. 2001; Stroup et al. 2000; Lyman and Kuderer 2005). Indeed, when heterogeneity in dose-response estimates is modest, pooling individual-level data before dose-response modeling has several advantages over meta-analysis and other aggregate methods. The advantages include the ability to use dose-response models other than those used in the original publications, to adjust for a common set of confounding variables, and to evaluate risks in susceptible groups that were not evaluated in the original publications (Blettner et al. 1999). The committee agrees with EPA that pooling data requires careful consideration. 
Modeling of pooled data often requires specification of all study covariates and the use of random effects to capture remaining study differences. Additional obstacles include reluctance or inability to share data; heterogeneity of study organization, protocols, or data formats; language barriers; missing data; and the need to harmonize information among studies (Schmid et al. 2003).

When individual-level data are unavailable, are not readily obtained and evaluated, or do not meet the criteria for being combined, meta-analysis of aggregate dose-response estimates is a reasonable alternative. Under some conditions, the estimates from aggregate meta-analysis are equivalent to those from pooled individual-level data analysis, and similar evaluation methods are used in both types of analyses (Lyman and Kuderer 2005). As discussed above, using multiple studies to derive dose-response estimates is generally preferable to relying on single studies. Guidelines for these methods are readily available (Blettner et al. 1999; Stroup et al. 2000; Orsini et al. 2012) and could be adapted for EPA’s purposes.
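Meta-analysis of aggregate estimates can be sketched as standard inverse-variance pooling on the log dose scale. This is a minimal sketch, assuming each study reports a log-scale estimate (for example, ln(ED10)) and its standard error. Cochran's Q, I², and the DerSimonian-Laird random-effects estimator are common choices in the meta-analysis literature; they are not methods prescribed by EPA's draft handbook, and the study inputs below are hypothetical.

```python
import math

def meta_analysis(estimates, std_errors):
    """Inverse-variance meta-analysis of log-scale dose-response estimates.

    Returns the fixed-effect mean, Cochran's Q, I^2 (percent), the
    DerSimonian-Laird between-study variance tau^2, and the random-effects
    mean with its standard error.
    """
    w = [1.0 / se**2 for se in std_errors]  # inverse-variance weights
    fixed = sum(wi * yi for wi, yi in zip(w, estimates)) / sum(w)
    # Cochran's Q: weighted squared deviations from the fixed-effect mean
    q = sum(wi * (yi - fixed) ** 2 for wi, yi in zip(w, estimates))
    df = len(estimates) - 1
    c = sum(w) - sum(wi**2 for wi in w) / sum(w)
    tau2 = max(0.0, (q - df) / c) if c > 0 else 0.0  # truncated at zero
    i2 = max(0.0, (q - df) / q) * 100 if q > 0 else 0.0
    w_re = [1.0 / (se**2 + tau2) for se in std_errors]  # random-effects weights
    random_mean = sum(wi * yi for wi, yi in zip(w_re, estimates)) / sum(w_re)
    return {"fixed": fixed, "Q": q, "I2": i2, "tau2": tau2,
            "random": random_mean, "se_random": math.sqrt(1.0 / sum(w_re))}

# Hypothetical ln(ED10) estimates and standard errors from three studies
print(meta_analysis([-0.5, -0.2, 0.1], [0.3, 0.4, 0.5]))
```

When Q does not exceed its degrees of freedom, tau² is truncated to zero and the random-effects mean equals the fixed-effect mean; larger I² values flag the kind of heterogeneity that would argue against combining studies.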

Bayesian Hierarchical Models

Bayesian methods are based on the premise that information regarding unknown parameters (in this case, dose-response parameters) can often be obtained apart from the results of any one study (see Appendix C). In the context of dose-response evaluation, the outside information

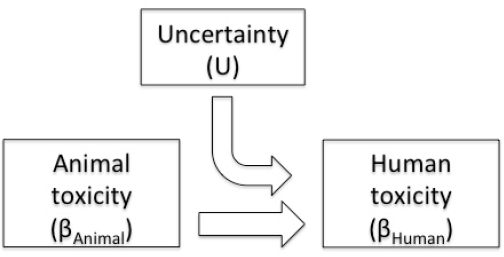

could include results of mechanistic studies, maximum plausible slope factors based on US cancer prevalence, dose-response relationships for similar or related chemicals, or any other relevant information. In the Bayesian system, the outside information is represented quantitatively in the form of a “prior” probability distribution, which is then formally combined with dose-response data to generate a “posterior” probability distribution that attempts to summarize quantitatively all relevant information regarding the dose-response relationship. In fact, current EPA methods for dose-response characterization fit within a simple Bayesian framework as shown in Figure 7-3. The figure corresponds to a simple model of the dose-response relationship between humans and animals for any particular chemical:

$$\beta_{\text{human}} = U \times \beta_{\text{animal}}$$

where $\beta_{\text{human}}$ is the dose-response parameter (such as a BMD) in humans, $\beta_{\text{animal}}$ is the same dose-response parameter in animals, and $U$ is the uncertainty ratio of the two parameters. If no human dose-response data are available for a chemical, EPA’s calculation (RfD = BMDL/UF) can be viewed as the computation of a lower credible limit for $\beta_{\text{human}}$ by using this Bayesian formulation and the assumption that $\ln(\beta_{\text{animal}})$ and $\ln(U)$ are normally distributed. Because traditional uncertainty factors reflect multiplicative uncertainty, they can be represented by lognormal distributions (that is, normal distributions on the log scale). Table 7-2 shows the conversion of common uncertainty factors to log standard deviations.

FIGURE 7-3 Simple Bayesian framework for estimating human toxicity from results of an animal study.

TABLE 7-2 Conversion of Common Uncertainty Factors to Log Standard Deviations

| Uncertainty Factor | Log SD: 95% One-Sided (90% Two-Sided) | Log SD: 97.5% One-Sided (95% Two-Sided) | Log SD: 99.5% One-Sided (99% Two-Sided) |
|---|---|---|---|
| 3 | 0.668 | 0.561 | 0.427 |
| 5 | 0.978 | 0.821 | 0.625 |
| 10 | 1.400 | 1.175 | 0.894 |
| 100 | 2.800 | 2.350 | 1.788 |
| 300 | 3.468 | 2.910 | 2.214 |
| 1,000 | 4.200 | 3.524 | 2.682 |
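The entries in Table 7-2 appear to follow from treating each uncertainty factor as the one-sided upper quantile of a lognormal ratio with median 1, so that the log standard deviation is ln(UF)/z for the matching standard normal quantile z. A minimal sketch under that reading, using Python's standard-library `statistics.NormalDist`, reproduces the tabulated values to three decimals:

```python
import math
from statistics import NormalDist

def uf_to_log_sd(uf, one_sided_level):
    """Log-scale SD for which ln(uf) is the given one-sided normal quantile."""
    z = NormalDist().inv_cdf(one_sided_level)  # about 1.645, 1.960, 2.576
    return math.log(uf) / z

for uf in (3, 5, 10, 100, 300, 1000):
    sds = [uf_to_log_sd(uf, p) for p in (0.95, 0.975, 0.995)]
    print(f"{uf:>5,}", "  ".join(f"{sd:.3f}" for sd in sds))
```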

For example, consider an RfD calculation that is based on a single animal study, such as the oral RfD of 0.3 mg/kg-day for phenol, which is derived from a 1-standard-deviation decrease in maternal weight gain in rats (EPA 2002). The RfD was calculated on the basis of a BMD of 157 mg/kg-day and a 95% lower confidence limit (BMDL) of 93 mg/kg-day. Dividing the BMDL by an uncertainty factor of 300 gives 0.31 mg/kg-day, rounded to 0.3 mg/kg-day when reported as the RfD. To apply the Bayesian framework shown in Figure 7-3, one first computes ln(BMD) and ln(BMDL), which are 5.06 and 4.53, respectively. If one assumes that the phenol assessment used a two-sided 95% confidence interval to obtain the BMDL, the standard deviation for the confidence interval around ln(BMD) is $(5.06 - 4.53)/1.96 = 0.27$. Thus, the prior distribution for $\ln(\beta_{\text{animal}})$ has a mean of 5.06 and a standard deviation of 0.27. A prior distribution for $\ln(U)$ with a mean of 0 and a standard deviation of 2.91 corresponds to a best estimate of equivalent animal and human toxicity (U = 1) with an uncertainty factor of 300 (see Table 7-2 for conversion between traditional uncertainty factors and Bayesian prior standard deviations). Assuming normal distributions of $\ln(\beta_{\text{animal}})$ and $\ln(U)$, one can show that $\ln(\beta_{\text{human}})$ is normally distributed with a mean of 5.06 and a standard deviation of $\sqrt{0.27^2 + 2.91^2} = 2.92$. Without any human data to inform the dose-response relationship, that distribution for $\ln(\beta_{\text{human}})$ would usually be referred to as an induced prior rather than a posterior. The lower bound of the two-sided 95% credible interval for $\beta_{\text{human}}$ is thus $\exp(5.06 - 1.96 \times 2.92) = 0.51$ mg/kg-day. This example illustrates the ease with which the uncertainty-factor approach could be modernized by using formal Bayesian methods and the Bayesian lower bound of $\beta_{\text{human}}$ in place of the traditional RfD.
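The phenol calculation can be written as a short function. This is a minimal sketch that assumes, as the example does, that the BMDL is the lower end of a two-sided 95% interval on ln(BMD) and that ln(U) is normal with mean 0; the function name is hypothetical. It carries unrounded intermediates, so it agrees with the hand calculation to two decimals.

```python
import math

def bayesian_lower_bound(bmd, bmdl, log_sd_u, z=1.96):
    """Lower credible limit for beta_human = U * beta_animal (lognormal priors).

    Assumes the BMDL is the lower end of a two-sided 95% interval on ln(BMD)
    and that ln(U) has mean 0 (U = 1 as the best estimate).
    """
    mean = math.log(bmd)                               # mean of ln(beta_animal)
    sd_animal = (math.log(bmd) - math.log(bmdl)) / z   # SD implied by the BMDL
    sd_human = math.sqrt(sd_animal**2 + log_sd_u**2)   # ln(U) adds variance
    return math.exp(mean - z * sd_human)

# Phenol example: BMD 157, BMDL 93 mg/kg-day; UF 300 -> log SD 2.91 (Table 7-2)
print(round(bayesian_lower_bound(157, 93, 2.91), 2))  # Bayesian lower bound: 0.51
print(round(93 / 300, 2))                             # traditional BMDL/UF: 0.31
```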

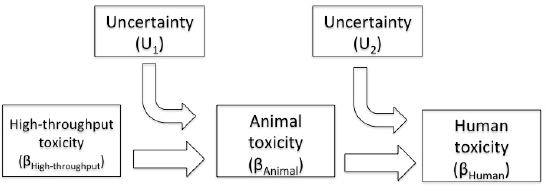

If EPA adopted Bayesian methods for dose-response assessment, it would be helpful for it to focus on considerations of relevance and exchangeability of each study for human toxicity assessment. The two concepts are easily incorporated into Bayesian modeling by grouping exchangeable information in the same stage and sequentially updating each stage in order from least relevant to most relevant. For example, a Bayesian framework for combining data from multiple studies of cancer for a particular chemical could start with an assumption that available high-throughput tests (including structure-activity relationship models, in vitro mutagenicity tests, DNA methylation tests, and nonmammalian studies) are equally likely to reflect human cancer risk (that is, they are “exchangeable”) but might be less relevant than data from other available studies, such as mammalian studies or epidemiologic studies. After pharmacokinetic, mechanistic, or default assumptions are used to model the dose-response relationship for each study on a common human-equivalent dose-response scale, estimates from exchangeable studies can be averaged by using familiar meta-analytic methods (frequentist or Bayesian, as in Sutton and Abrams 2001). A similar meta-analysis could be conducted within each class of exchangeable studies. For example, rat and mouse studies that used different strains might be considered exchangeable with each other for the purpose of estimating human-toxicity values but deemed more relevant than the in vitro and nonmammalian studies; a separate meta-analysis of human-equivalent dose-response estimates would then be conducted for the rodent studies. Finally, epidemiologic studies with sufficient exposure characterization and adequate confounding control (if any such studies are available) could be grouped as yet another set of exchangeable studies.

As discussed in Chapter 6 of the present report, Bayesian methods also offer a natural framework for combining the three meta-analysis estimates in order of increasing relevance: high-throughput studies, mammalian studies, and human studies. The committee notes that human cell lines are increasingly used for high-throughput studies. If they become more relevant for estimating human risks than animal studies, the order of relevance should be modified accordingly (that is, mammalian studies, high-throughput studies, and human studies).

In the first stage, the high-throughput meta-analysis estimate can be used as a prior mean that can be updated with data from the more relevant mammalian-study meta-analysis estimate. The first-stage posterior mean can then be used as a prior mean that can be updated in the second stage with data from the more relevant human-studies meta-analysis estimate (see Figure 7-4).

FIGURE 7-4 Bayesian framework for combining studies of different types. High-throughput studies can switch positions in the model with animal studies if they are more relevant to human toxicity.

The model can be written as follows:

$$\beta_{\text{animal}} = U_1 \times \beta_{\text{high-throughput}}$$

$$\beta_{\text{human}} = U_2 \times \beta_{\text{animal}}$$

where $\beta_{\text{human}}$ is the dose-response parameter (such as a BMD) in humans, $\beta_{\text{animal}}$ is the same dose-response parameter in animals, $\beta_{\text{high-throughput}}$ is the same dose-response parameter in high-throughput studies, and $U_1$ and $U_2$ are the uncertainty ratios that relate high-throughput studies to animals and humans to animals, respectively. Prior variances of $\ln(U_1)$ and $\ln(U_2)$ for each of the two stages of updating would be selected to reflect the general reliability of each type of data source for estimating toxicity in accordance with EPA’s guidance on uncertainty-factor selection (Stedeford et al. 2007).

This framework ensures that precise information at each higher stage of relevance will quickly overcome prior information from previous stages. For example, high-quality epidemiologic studies that used large samples should dominate the posterior dose-response estimates when available, and mammalian studies that used samples of sufficient size should dominate the posterior dose-response estimates when epidemiologic studies are unavailable. More important, however, the framework offers a coherent system for combining dose-response information from disparate studies when no one study group is clearly dominant. In other words, updating a Bayesian estimate at each stage can be seen as providing a weighted average of the prior mean and the data that are entered in the current stage. The weight given to the new data equals the ratio of the prior variance to the total variance (prior variance plus data variance), so less weight is given to whichever component has the higher variance. For example, application of the Bayesian framework to a situation with extensive high-throughput data but only one small rodent study would result in a posterior dose-response estimate somewhere between that for the rodent study and that suggested by the high-throughput data. A larger sample size in the single small rodent study or the addition of a second rodent study with a similar dose-response estimate would decrease the variance for the meta-analysis estimate and therefore increase the weight given to the rodent studies in computing the posterior dose-response estimate.
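The weighted-average update described above is the standard conjugate normal-normal update on the log scale. A minimal sketch, with a hypothetical function name and hypothetical inputs (a diffuse prior with mean 0 and SD 2, then rodent evidence with mean 1.0), shows how tightening the data's standard error shifts weight toward the data and shrinks the posterior SD:

```python
import math

def normal_update(prior_mean, prior_sd, data_mean, data_se):
    """Conjugate normal update on the log scale.

    The data receive weight prior_var / (prior_var + data_var); the posterior
    variance is the reciprocal of the summed precisions (inverse variances).
    """
    prior_var, data_var = prior_sd**2, data_se**2
    w_data = prior_var / (prior_var + data_var)
    post_mean = w_data * data_mean + (1 - w_data) * prior_mean
    post_sd = math.sqrt(1.0 / (1.0 / prior_var + 1.0 / data_var))
    return post_mean, post_sd, w_data

# Hypothetical prior from high-throughput data, then rodent evidence
prior = (0.0, 2.0)
small_study = normal_update(*prior, data_mean=1.0, data_se=2.0)      # weight 0.5
larger_evidence = normal_update(*prior, data_mean=1.0, data_se=1.0)  # weight 0.8
print(small_study)
print(larger_evidence)
```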

As another example, consider a hypothetical scenario in which the ED10 for a new chemical is estimated to be 0.1 mg/kg-day and the LED10 (two-sided 95% confidence) is 0.07 mg/kg-day on the basis of in vitro tests of inflammatory response (such as ELISA), with an uncertainty factor of 1,000 for predicting the ED10 for animal studies. On a natural log scale, ln(ED10) is -2.30, ln(LED10) is -2.66, and the standard deviation of $\ln(U_1)$ is 3.52 (corresponding to UF = 1,000 in Table 7-2 for two-sided 95% confidence). Assuming that the LED10 is the lower bound of the two-sided 95% confidence interval, the standard deviation of ln(ED10) from the high-throughput data is $(-2.30 - [-2.66])/1.96 = 0.184$, and the induced prior standard deviation for ln(ED10) in animals is $\sqrt{0.184^2 + 3.52^2} = 3.52$. If no animal or human data are available and an uncertainty factor of 10 is thought to be appropriate for extrapolation from animals to humans, $\ln(U_2)$ has a prior standard deviation of 1.17 (see Table 7-2), and the induced prior standard deviation of ln(ED10) in humans is $\sqrt{3.52^2 + 1.17^2} = 3.71$. Therefore, EPA might report a central estimate for the ED10 of $\exp(-2.30) = 0.1$ mg/kg-day (the prior median) and a lower-bound ED10 of $\exp(-2.30 - 1.96 \times 3.71) = 0.00007$ mg/kg-day (the lower bound of the 95% two-sided credible interval) for humans.
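The chain of induced priors can be checked numerically. This sketch uses the rounded uncertainty-factor SDs from the text (3.52 and 1.17) and computes the log values without rounding, so the high-throughput SD comes out near 0.182 rather than the hand-rounded 0.184; the downstream values are unchanged at the precision reported.

```python
import math

Z = 1.96  # two-sided 95% interval

ln_ed10 = math.log(0.1)                       # high-throughput central estimate
sd_ht = (math.log(0.1) - math.log(0.07)) / Z  # SD implied by LED10 = 0.07
SD_LN_U1 = 3.52  # UF 1,000, as rounded in the text (Table 7-2 lists 3.524)
SD_LN_U2 = 1.17  # UF 10, as rounded in the text (Table 7-2 lists 1.175)

sd_animal = math.sqrt(sd_ht**2 + SD_LN_U1**2)     # induced prior SD, animals
sd_human = math.sqrt(sd_animal**2 + SD_LN_U2**2)  # induced prior SD, humans
lower = math.exp(ln_ed10 - Z * sd_human)          # two-sided 95% lower bound

print(f"sd_ht={sd_ht:.3f} sd_animal={sd_animal:.2f} "
      f"sd_human={sd_human:.2f} lower={lower:.5f}")
```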

Suppose that two small rat studies become available, and a meta-analysis is conducted that results in a ln(ED10) of -0.161 with a standard error of 0.9. Using a normally distributed prior, the Bayesian analysis at stage 1 (see Figure 7-4) results in a posterior mean that is a simple weighted average of the prior mean and the meta-analysis mean for the animal studies. In that weighted average, the weight given to the animal meta-analysis is the ratio of the prior variance to the total variance, $3.52^2/(3.52^2 + 0.9^2) = 0.94$. Thus, in this example, the Bayesian posterior mean animal ln(ED10) that combines the high-throughput data and results of mammalian studies places 94% weight on the animal studies and 6% weight on the high-throughput prior mean of -2.30; this results in a posterior mean animal ln(ED10) of $(0.94)(-0.161) + (0.06)(-2.30) = -0.29$ and a posterior standard deviation of $\sqrt{(3.52^{-2} + 0.9^{-2})^{-1}} = 0.872$. If EPA chooses an uncertainty factor of 10 (which corresponds to a prior standard deviation of 1.17 for $\ln(U_2)$, according to Table 7-2) for using this posterior animal estimate as a surrogate for human data, the induced prior standard deviation of ln(ED10) in humans is $\sqrt{0.872^2 + 1.17^2} = 1.46$, and the agency might report a central estimate ED10 of $\exp(-0.29) = 0.75$ mg/kg-day and a lower-bound ED10 of $\exp(-0.29 - 1.96 \times 1.46) = 0.043$ mg/kg-day.

Finally, suppose that a single human study becomes available and reports a ln(ED10) of -3.0 with a standard error of 0.5. Under the normality assumption, the weight of the human study estimate is $1.46^2/(1.46^2 + 0.5^2) = 0.895$ (about 90%), and this results in a posterior mean ln(ED10) of $(0.895)(-3.0) + (0.105)(-0.29) = -2.72$. The posterior variance is the reciprocal of the sum of the inverse variances of the prior and the human estimates, $(1.46^{-2} + 0.5^{-2})^{-1} = 0.224$. Thus, EPA might report a central estimate ED10 of $\exp(-2.72) = 0.066$ mg/kg-day and a lower-bound ED10 of $\exp(-2.72 - 1.96 \times \sqrt{0.224}) = 0.026$ mg/kg-day.

The results of each stage of updating for this hypothetical example are shown in Table 7-3, together with a traditional RfD calculated by using only one data stream (high-throughput, animal, or human) at a time with no intraspecies uncertainty factor. After the two-stage Bayesian updating that combines all three evidence streams, the posterior lower bound for the ED10 is 0.026 mg/kg-day, compared with traditional RfDs of 0.000007, 0.015, and 0.019 mg/kg-day for the high-throughput, animal, and human studies, respectively. In this example, the Bayesian lower-bound ED10 is slightly higher than the traditional RfD based on the human study alone because the animal and high-throughput studies suggest that the chemical is less toxic than the human study suggests and because the standard error is smaller in the posterior distribution. In situations in which the central estimate of the ED10 is lower for the animal studies than for the human studies, the Bayesian lower bound could be less than the traditional RfD that is based on the human studies alone.

TABLE 7-3 Summary of Results of the Two-Stage Bayesian Example

| Data Stream | ED10: Central Estimate (mg/kg-day) | ED10: Lower Bound (mg/kg-day) | Traditional RfD (mg/kg-day) |
|---|---|---|---|
| High-throughput studies | 0.1 | 0.07 | 0.000007 |
| Animal studies | 0.85 | 0.15 | 0.015 |
| Bayesian posterior, first stage | 0.75 | 0.043 | n/a |
| Human study | 0.05 | 0.019 | 0.019 |
| Bayesian posterior, second stage | 0.066 | 0.026 | n/a |

Adding a second human study that reports the same ln(ED10) of -3.0 would produce a meta-analysis with the same ln(ED10) but a smaller standard error than that reported for the first study alone; this reflects increased precision of the dose-response estimate. The smaller standard error would result in more than 90% weight for the human data and thus a central estimate ln(ED10) closer to -3.0. It would also result in a smaller posterior variance that would be closer to the human meta-analysis variance. Thus, with more consistent high-quality epidemiologic data, the in vitro data and animal data would have less and less effect on the dose-response estimates.

Although the Bayesian framework described here is relatively simple, it can be expanded to allow additional stages (new categories of exchangeable studies), assumptions other than normality, different dose-response parameters (such as the cancer slope factor), and assessment of the potential effects of bias and other sources of uncertainty on the animal-to-human dose extrapolation (DuMouchel and Harris 1983; Peters et al. 2005). For example, EPA might wish to group epidemiologic studies separately according to study design, such as grouping cross-sectional studies as one category of exchangeable studies and grouping cohort studies and nested case-control studies as a different set of more relevant exchangeable studies. That approach might be particularly useful when cross-sectional designs are deemed to have a higher risk of bias, such as the greater potential for reverse causation noted for some biomarker-based epidemiologic studies (Loccisano et al. 2012). Incorporation of specific mechanisms of action could be handled by updating multiple dose-response parameters at each stage, with each parameter reflecting a particular biologic step, such as the amount of aryl hydrocarbon receptor binding or DNA methylation. Bayesian methods are also compatible with quantitative uncertainty analysis because uncertainty distributions can be incorporated as additional prior components, and alternative dose-response model specifications can be incorporated via Bayesian model averaging (Hoeting et al. 1999; Gustafson 2004). Indeed, a Bayesian formulation for computing RfDs and unit risks might help EPA to move forward with quantitative uncertainty analysis in a manner that does not depart radically from existing methods, such as those used in the above examples. As toxicity databases expand, EPA could establish Bayesian priors on the basis of empirical evaluation of the distribution of the human-to-animal dose-response parameter ratios for chemicals in the same class or for all available chemicals, in place of default lognormal distributions.
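The two-stage hypothetical example can be reproduced end to end. This is a sketch, not EPA code: it assumes a high-throughput prior mean of ln(0.1) ≈ -2.30, uses the rounded log SDs for the uncertainty factors given in the text (3.52 and 1.17), and carries every intermediate value at full precision, so stage-wise results can differ from hand-rounded figures in the last digit. The function names are hypothetical.

```python
import math

Z = 1.96  # two-sided 95% interval, as in the text

def update(prior_mean, prior_sd, data_mean, data_se):
    """Normal-normal update on the log scale; returns (posterior mean, posterior SD)."""
    pv, dv = prior_sd**2, data_se**2
    w = pv / (pv + dv)  # weight on the new data = prior variance / total variance
    post_mean = w * data_mean + (1 - w) * prior_mean
    post_sd = math.sqrt(pv * dv / (pv + dv))  # equals (1/pv + 1/dv) ** -0.5
    return post_mean, post_sd

def report(mean, sd):
    """Central estimate and two-sided 95% lower bound, back on the mg/kg-day scale."""
    return math.exp(mean), math.exp(mean - Z * sd)

SD_LN_U1, SD_LN_U2 = 3.52, 1.17  # UF 1,000 and UF 10, as rounded in the text

# Stage 0: high-throughput ED10 = 0.1, LED10 = 0.07 -> induced prior for animals
ht_mean = math.log(0.1)
ht_sd = (math.log(0.1) - math.log(0.07)) / Z
animal_prior_sd = math.hypot(ht_sd, SD_LN_U1)

# Stage 1: rat meta-analysis, ln(ED10) = -0.161 with SE 0.9
m1, s1 = update(ht_mean, animal_prior_sd, -0.161, 0.9)
human_prior_sd = math.hypot(s1, SD_LN_U2)  # induced prior for humans
stage1 = report(m1, human_prior_sd)

# Stage 2: single human study, ln(ED10) = -3.0 with SE 0.5
m2, s2 = update(m1, human_prior_sd, -3.0, 0.5)
stage2 = report(m2, s2)

print("first stage: ", [round(v, 3) for v in stage1])
print("second stage:", [round(v, 3) for v in stage2])
```

Run as written, it prints about [0.747, 0.043] for the first stage and [0.066, 0.026] for the second; note that the first-stage posterior mean is pulled noticeably toward the high-throughput prior mean of -2.30 even though the animal data receive about 94% of the weight.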

Other, more sophisticated Bayesian approaches have been proposed for combining dose-response estimates for multiple species and multiple chemicals (DuMouchel and Harris 1983; Jones et al. 2009). Those approaches might also be useful to EPA if guidance for selection of appropriate models and priors is developed.

Analysis and Communication of Uncertainty

As discussed earlier, estimation of toxicity values is the culminating step of the IRIS process. The reference values and unit risks draw on data from heterogeneous and dynamic systems that underpin hazard identification, exposure assessment (for epidemiologic studies), and dose-response assessment. Regardless of the studies included, the analytic tools used, and the underlying models assumed, there will always be uncertainties surrounding the final estimates because of incomplete knowledge about the systems involved. Uncertainty can be characterized and reduced by the use of more or better data and should be managed. It should be distinguished from variability, which is the result of inherent differences in susceptibility among humans regarding exposures and related health effects. Variability can be better characterized with more data but cannot be reduced.

How to address uncertainty has been a recurring issue in IRIS assessments and other agency risk-related activities (NRC 2009; 2010; 2012; IOM 2013). The NRC report Science and Decisions states (NRC 2009, p. 107) that

There are different strategies (or levels of sophistication) for addressing uncertainty. Regardless of which level is selected, it is important to provide the decision-maker with information to distinguish reducible from irreducible uncertainty, to separate individual variability from true scientific uncertainty, to address margins of safety, and to consider benefits, costs, and comparable risks when identifying and evaluating options.

It further recommends (NRC 2009, p. 107) that “to make risk assessment consistent with such an approach, EPA should incorporate formal and transparent treatment of uncertainties in each component of the risk-characterization process and develop guidelines to advise assessors on how to proceed.” The present committee agrees with the previous NRC committee and recommends that analysis and communication of uncertainty be an integrated component of IRIS assessments even when a default used in the assessment is consistent with EPA’s own guidelines. At a minimum, that approach would include a demonstration of how the final toxicity-value estimates vary under different assumptions, options, models, and methods.

Communication of uncertainty can be mistakenly interpreted by some as indicating poor-quality or insufficient science (Johnson and Slovic 1995; Freudenburg et al. 2008); in some cases, explicit acknowledgments of uncertainty have been misinterpreted as acceptance of lower scientific standards that might weaken a scientific body’s authority (Funtowicz and Ravetz 1992). However, experimental research also demonstrates that conveying appropriate information about uncertainty—and in particular balanced characterization of the range rather than one-sided bounds of variation—can be seen as improving transparency (Johnson and Slovic 1995) and can improve risk-management decisions (Joslyn and LeClerc 2012; Joslyn et al. 2013). Furthermore, failure to acknowledge uncertainties leaves EPA vulnerable to attacks on management decisions or policies that are based on the best available science (Brickman et al. 1985) and might be considered unethical by some stakeholders (Smithson 2008).

As EPA revises the IRIS process to make it more efficient, flexible, and transparent, there is an opportunity for the agency to develop a framework for uncertainty analysis and communication and to make uncertainty analysis and communication integral to the IRIS process. In the discussion below, the committee offers suggestions on how the IRIS program can improve uncertainty analysis and communication.

Uncertainties arise in all stages and components of the IRIS process. Those in an earlier stage cascade and propagate to later stages and eventually aggregate to form the overarching uncertainty surrounding a final toxicity estimate. Characterizing this overarching uncertainty fully requires a vertical integration of uncertainties over every stage of the assessment process, including the initial protocol design, study identification and evaluation, dose-response modeling, low-dose extrapolation, cross-species extrapolation, and any other extrapolations that are needed to yield the final toxicity estimate. Omission of one source of uncertainty in a given stage can result in an inaccurate or even distorted characterization of the overall uncertainty. Although it is critical for understanding the uncertainties and their overall effect on a final toxicity estimate, such a vertical integration of uncertainties is rarely done in IRIS assessments (NRC 2009, pp. 100-101), partly because of the lack of data, especially for some intermediate steps, and because such resources as in-house expertise and readily applicable tools are insufficient (EPA 2004, p. 34). Uncertainties arising from particular sources or in particular stages might be especially relevant to a specific risk-management decision. Thus, identifying and focusing on uncertainties that contribute the most to the overall uncertainty (that is, have the largest effect on the final toxicity value) is a more practical treatment of uncertainties (NRC 2009; IOM 2013), although it is not always clear which sources contribute the most to the overall uncertainty unless a comprehensive analysis is performed.
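Vertical integration and the search for the largest contributor can be illustrated with a deliberately simple model. This minimal sketch assumes, hypothetically, that each stage contributes an independent, multiplicative, lognormal uncertainty factor; the stage names and log-SD values are illustrative placeholders, not values from any IRIS assessment. Under those assumptions the log-scale variances add, each stage's share of the total variance identifies the dominant source, and Monte Carlo draws give the implied distribution around a hypothetical POD of 10. Real stage uncertainties are often correlated or non-lognormal, which this sketch ignores.

```python
import math
import random

# Hypothetical log-scale SDs for multiplicative uncertainty at each stage
STAGE_LOG_SD = {
    "study selection": 0.3,
    "dose-response model": 0.5,
    "low-dose extrapolation": 1.0,
    "animal-to-human": 1.17,
}

def variance_shares(stage_sd):
    """Share of total log-scale variance from each independent stage, plus total SD."""
    total = sum(sd**2 for sd in stage_sd.values())
    return {k: sd**2 / total for k, sd in stage_sd.items()}, math.sqrt(total)

def simulate(pod, stage_sd, n=100_000, seed=1):
    """Monte Carlo draws of pod divided by the product of lognormal stage factors."""
    rng = random.Random(seed)
    draws = []
    for _ in range(n):
        log_factor = sum(rng.gauss(0.0, sd) for sd in stage_sd.values())
        draws.append(pod * math.exp(-log_factor))
    return sorted(draws)

shares, total_sd = variance_shares(STAGE_LOG_SD)
draws = simulate(10.0, STAGE_LOG_SD)
print("total log SD:", round(total_sd, 3))
print("largest contributor:", max(shares, key=shares.get))
print("2.5th percentile:", round(draws[int(0.025 * len(draws))], 3))
```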

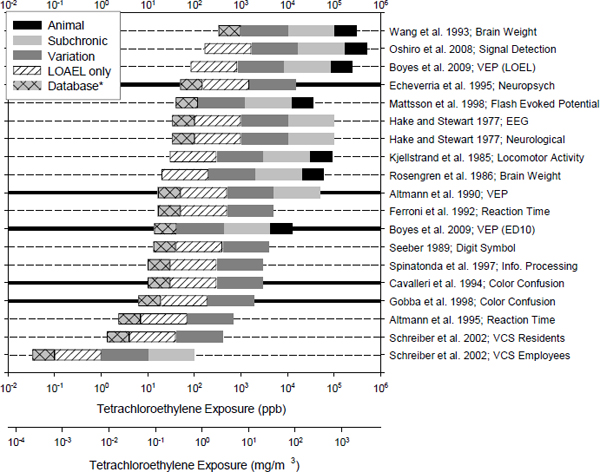

Uncertainty analyses have become more common in IRIS assessments, but they are conducted for select individual intermediate stages in the process (such as dose-response modeling and low-dose extrapolation), often in isolation from one another. In the IRIS assessment of dioxin and dioxin-like compounds, for example, EPA examined uncertainty in unit risk estimates for cancer that was due to the assumption of different forms of the dose-response model; EPA also examined uncertainty independently for different cancers (NRC 2006). In the IRIS assessment of tetrachloroethylene, EPA examined uncertainty (and variability) in RfC estimates and used multiple noncancer end points observed in multiple studies (NRC 2009): the uncertainty and variability could be attributed to differences in study design, species, end point, and dose-response form, among others. Those examples demonstrate a horizontal integration of variation associated with one or more factors, sources, or stages (such as model form and dose metric) for several options or combinations of assumptions; uncertainties from other sources or stages were not considered. Horizontal integration can characterize the effect of uncertainty from selected sources and is a part of the overall vertical integration of uncertainties (see Figure 7-5).

When it is feasible, overall uncertainty can be characterized through a probabilistic distribution of the toxicity values that corresponds to all possible option combinations or, to a lesser extent, to the range of variation under feasible option combinations that are actually considered. Such a distribution would be ideal, but a range of variation is more commonly estimated in practice. Still, that range of variation simply reflects the observed part of the distribution of the overarching uncertainty (NRC 2010). Within the observed range, one or more toxicity values might be selected for a risk-management decision, and this selection is supported by and can be communicated with the uncertainty analysis. Using a distribution or range-finding approach to assess overall uncertainty offers a systematic approach to combining observed toxicity estimates into one or several groups according to homogeneity criteria with respect to, for example, a common mechanism or similar end points. To that end, the meta-analysis and Bayesian multilevel models discussed earlier are useful tools. Empirical tools, such as graphical displays, are also useful (NRC 2011). For example, Figure 7-6 is a cumulative distribution of 18 RfCs derived from multiple neurotoxicity end points from a collection of epidemiologic studies and laboratory animal experiments (NRC 2009). The smallest RfC and the two largest RfCs stand out, and the rest cluster between 0 and 100. Grouping the toxicity estimates appropriately requires that the systems underpinning the individual toxicity values be comparable or homogeneous regarding such elements as study design, exposure regimen, and health effects. For example, when health end points are plausible for a common mechanism, there is good support for using the variation range of the corresponding toxicity values as the horizontal integration of the overarching uncertainty (NRC 2011). 
Conversely, caution should be exercised in attempting to combine multiple studies to conduct dose-response modeling of combined data or to group toxicity estimates when the studies are different in design, exposed species, exposure regimen, and generalizability to human populations. If some study designs, species, or exposure regimens are more relevant to human toxicity, it might be better to group the most relevant studies than to group all available studies in the characterization of uncertainty.
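A display such as Figure 7-6 rests on a few simple summaries of the candidate toxicity values. This minimal sketch computes empirical cumulative distribution points, a median, and the max/min fold range; the input values are hypothetical (arbitrary units), not the 18 RfCs in Figure 7-6, and the choice of a geometric-mean median for an even count is an assumption suited to log-scale data.

```python
import math

def empirical_summary(values):
    """ECDF points, median, and max/min fold range of candidate toxicity values."""
    xs = sorted(values)
    n = len(xs)
    ecdf = [(x, (i + 1) / n) for i, x in enumerate(xs)]  # (value, cumulative share)
    mid = n // 2
    # median; for an even count, the geometric mean of the two middle values
    median = xs[mid] if n % 2 else math.sqrt(xs[mid - 1] * xs[mid])
    return ecdf, median, xs[-1] / xs[0]

# Hypothetical candidate RfCs (arbitrary units), not the values in Figure 7-6
ecdf, median, fold = empirical_summary([0.5, 8, 15, 20, 35, 60, 90, 400])
for value, share in ecdf:
    print(f"{value:>7} {share:.3f}")
print("median:", round(median, 2), "fold range:", fold,
      "orders of magnitude:", round(math.log10(fold), 2))
```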

FIGURE 7-5 Characterization of overarching uncertainty requires vertical and horizontal integration of uncertainties in every stage of the assessment process. Note that not all steps are shown in this illustration.

FIGURE 7-6 Cumulative distribution of reference concentrations (RfCs) derived from multiple neurotoxicity end points from a collection of epidemiologic studies and laboratory experiments on humans or animals. The length of a bar represents a 3-fold or 10-fold uncertainty factor, the shade of the bar represents the source of uncertainty as indicated in the figure legend, and the left end of a bar represents an RfC. Source: NRC 2010.

Characterizing the empirical-variation range of the overall uncertainty that is due to differences between studies or end points is useful in elucidating the totality of uncertainty (NRC 2010). The limitation of the empirical-variation approach is that it often does not differentiate the relative effects of uncertainties from different sources or stages, because the toxicity values come from different studies or use different end points, dose-response models, exposure metrics, or other factors. Hence, the range of uncertainty estimates is the result of partial horizontal and partial vertical integrations of some elements of uncertainty. That limitation highlights the fact that only a horizontal integration can reveal the size of a single source of uncertainty, and only a vertical integration can reveal the contribution of a single source of uncertainty to the overarching uncertainty relative to others. When data support a fuller assessment of total uncertainty, Bayesian hierarchical modeling (Spiegelhalter et al. 2002) and multilevel probabilistic modeling (Small 2008) are examples of approaches that support vertical integration of multistage uncertainties. An improved uncertainty analysis within an individual IRIS assessment does not necessarily require a high level of sophistication in mathematical, statistical, or computational methods. Simple analyses or qualitative elucidation of various uncertainties (for example, those due to plausible mechanisms) can be adequate, especially when few data are available or risk-management decisions are robust under competing options (NRC 2009; IOM 2013).

Another short-term strategy that EPA could adopt to improve uncertainty communication is to present clearly two dose-response values in each future toxicity assessment: a central estimate (such as a maximum likelihood estimate or a posterior mean) and a lower-bound estimate for a POD from which a final toxicity value is derived. The lower bound on the POD becomes an upper bound for a cancer slope factor but remains a lower bound for a reference value. Presenting both values might improve public risk communication for those who are not well versed in the IRIS process and support cost-benefit analysis and other policy evaluations while remaining health-protective. The recommendation is consistent with the EPA Guidelines for Carcinogen Risk Assessment (EPA 2005a), which note that central estimates might be more useful for some purposes, such as uncertainty analysis and ranking of hazardous agents.

Central estimates can be obtained from dose-response modeling software, such as EPA BMDS. For example, EPA (2013c) includes output for a multistage cancer model fitted to hepatocellular-tumor data on female mice exposed to diisononyl phthalate (Example 6). Although the full form of the probability function for this model is

$$P(\text{response}) = \text{background} + (1 - \text{background}) \times \left[1 - \exp\left(-\beta_1\,\text{dose} - \beta_2\,\text{dose}^2 - \beta_3\,\text{dose}^3 - \beta_4\,\text{dose}^4\right)\right],$$

the extra risk predicted by the model is approximately linear at low doses with a slope of $\beta_1$. For this example, the maximum likelihood estimate for $\beta_1$ is shown as about 0.001155 kg-day/mg in the BMDS output. In contrast, the upper-bound cancer slope factor based on EPA’s POD method is reported as 0.00206 kg-day/mg. The two values correspond to the low-dose slopes of the red and black curves, respectively, in Figure 6-3 of EPA (2013c). Presenting both toxicity values in IRIS summaries would improve the clarity and utility of IRIS assessments.
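The low-dose linearity of the multistage model can be checked directly. In this minimal sketch, $\beta_1$ is the maximum likelihood estimate and 0.00206 the upper-bound slope quoted above; the background rate and $\beta_2$ to $\beta_4$ are hypothetical placeholders, not the fitted BMDS values. Extra risk per unit dose approaches $\beta_1$ as dose goes to zero, and the last line compares the linear low-dose risk implied by the central and upper-bound slopes at 1 mg/kg-day.

```python
import math

def multistage_prob(dose, background, betas):
    """Multistage model: bg + (1 - bg) * (1 - exp(-(b1*d + b2*d^2 + ...)))."""
    poly = sum(b * dose ** (k + 1) for k, b in enumerate(betas))
    return background + (1 - background) * (1 - math.exp(-poly))

def extra_risk(dose, background, betas):
    """Extra risk over background, (P(d) - P(0)) / (1 - P(0))."""
    p0 = multistage_prob(0.0, background, betas)
    return (multistage_prob(dose, background, betas) - p0) / (1 - p0)

BETA1_MLE = 0.001155   # MLE of beta1 quoted from the BMDS output
SLOPE_UPPER = 0.00206  # upper-bound cancer slope factor quoted in the text
BACKGROUND = 0.05      # hypothetical placeholder
BETAS = [BETA1_MLE, 1e-6, 0.0, 0.0]  # beta2..beta4 are hypothetical placeholders

for d in (0.01, 1.0, 100.0):
    # extra risk per unit dose approaches beta1 as the dose goes to zero
    print(d, extra_risk(d, BACKGROUND, BETAS) / d)

# Linear low-dose risk at 1 mg/kg-day: central slope, upper-bound slope, ratio
print(BETA1_MLE * 1.0, SLOPE_UPPER * 1.0, SLOPE_UPPER / BETA1_MLE)
```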

Several frameworks, including EPA’s methods for considering uncertainty analysis in policy analysis, could be considered by the IRIS program. The RIVM/MNP Guidance for Uncertainty Assessment and Communication developed by the Netherlands Environmental Assessment Agency and the National Institute for Public Health and the Environment (Janssen et al. 2003; Petersen et al. 2003; van der Sluijs et al. 2003, 2004, 2008) provides one such example. Several elements of that framework could be incorporated into IRIS guidelines for uncertainty analysis and communication, including the following:

• Develop a plan early in the IRIS process (for example, in parallel with the literature-review phase) for conducting the uncertainty analysis (Janssen et al. 2003; Petersen et al. 2003; NRC 2009; IOM 2013). The goals of this planning phase include screening sources of uncertainty to identify candidate methods, setting priorities for allocating resources to analyses, and developing a strategy for communicating uncertainty (NRC 2009, pp. 120-121). Planning should be conducted with the end use in mind (NRC 2009). It can be tailored to each toxicity assessment because the need, scope, and feasibility of uncertainty analyses vary from one assessment to another; indeed, in some cases or stages, uncertainties cannot be easily quantified (IPCS 2006; NRC 2007).

• Establish a consistent framework for the application of approaches (for example, from a qualitative discussion to a full probabilistic distribution of uncertainty) and criteria for their conduct (van der Sluijs et al. 2003, 2004; NRC 2009, p. 100). The framework should recognize and permit various degrees of technical sophistication and rigor corresponding to the objectives and feasibility of a particular uncertainty analysis. The guidelines should also establish an inventory of standard methods for uncertainty analysis according to the stage of an assessment and the nature or source of the uncertainty. They should also offer insights into method choice (van der Sluijs et al. 2004). The framework would help to reveal the distinct sources and nature of uncertainties, including whether they are unquantifiable system-level uncertainties, indeterminacy, or ignorance (van der Sluijs et al. 2003). The level of uncertainty analysis might be tiered according to quantification level (van der Sluijs et al. 2003; EPA 2004; IPCS 2006). The tiers run from a single default value (no variation), to qualitative and systematic characterization, to quantitative characterization with bounds, ranges, and sensitivity analysis, and finally to a full probabilistic distribution. The tiered classification matches the sophistication of the uncertainty analysis to the level of concern about the problem and the feasibility of conducting the analysis. In general, a lower-tier analysis can serve as a first screen to determine whether it is adequate or whether concern is sufficient to warrant an in-depth uncertainty analysis. Uncertainty analyses can also be tied to the range of potential effects on a chosen management decision or policy issue. An uncertainty might be negligible if it has a small effect on a policy, but it warrants careful examination if the stakes are high. The RIVM/MNP guidance adopted an approach that ranks uncertainties according to their importance for the policy issue. It also identifies where a more elaborate uncertainty assessment is warranted and whether such an assessment is feasible (van der Sluijs et al. 2003; Walker et al. 2003).

• Develop a template to support unified documentation of uncertainty analysis by stage or source of an IRIS assessment. For example, the template should summarize the options used, the methods used, the rationale for the choice, relevant results (such as range of variation), and open issues. IRIS assessments often use EPA BMDS software for dose-response modeling. They declare that a model “fits the underlying dataset” if it meets a conventional goodness-of-fit criterion and then use the fitted model for toxicity estimation. However, an acceptable fit has sometimes been achieved by fixing some model parameters rather than estimating them or by deleting some dose groups (NRC 2010, 2011). Failing to document such technical details amounts to omitting a source of uncertainty and potential bias.

• Develop strategies for communicating uncertainties. Engaging stakeholders earlier in the planning process, as EPA is now doing, can help to formulate critical messages. For example, it helps to ask such questions as these: What are the main messages, and how will stakeholders and the general public receive them? What major assumptions underlie the main messages for the policy decision? How robust are the major conclusions in light of those assumptions? Which aspects of uncertainty require additional attention and analysis? How clear should statements about uncertainty be, and how can uncertainty be reported in a balanced and consistent fashion (van der Sluijs et al. 2004; Kloprogge et al. 2007)? IOM (2013) recommends always clearly acknowledging the existence of uncertainty and describing its source, magnitude, reducibility in the short term, and importance for the policy decision. Good planning and documentation of uncertainty analysis facilitate better communication and will probably improve stakeholders’ confidence in the IRIS process.
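As a rough illustration of how the quantitative tiers differ in practice, the Python sketch below applies three of them to a hypothetical reference-value calculation (a POD divided by uncertainty factors). The three tiers are a single default, bounds taken from a range for each factor, and a Monte Carlo distribution. The qualitative tier is a narrative exercise and is not coded. The 10 × 10 defaults are the traditional values, but the POD, the factor ranges, and the lognormal parameters are hypothetical assumptions. Treating the factors as independent lognormal variables is one modeling choice among several, not an IRIS method.

```python
import math
import random
import statistics

# Hypothetical inputs for illustration only; not taken from any IRIS assessment.
POD = 5.0  # point of departure (e.g., a BMDL), mg/kg-day
DEFAULT_UFS = {"interspecies": 10.0, "intraspecies": 10.0}
UF_BOUNDS = {"interspecies": (3.0, 10.0), "intraspecies": (3.0, 10.0)}
# (median, geometric standard deviation) for each factor; also hypothetical.
UF_LOGNORMAL = {"interspecies": (4.0, 2.0), "intraspecies": (6.0, 2.0)}


def tier1_default(pod, ufs):
    """Single default: divide the POD by the product of default factors."""
    return pod / math.prod(ufs.values())


def tier3_bounds(pod, bounds):
    """Bounds: most protective (largest UFs) and least protective (smallest UFs)."""
    low = pod / math.prod(hi for _, hi in bounds.values())
    high = pod / math.prod(lo for lo, _ in bounds.values())
    return low, high


def tier4_probabilistic(pod, lognormals, n=10_000, seed=1):
    """Monte Carlo: sample each UF from a lognormal; return 5th/50th/95th percentiles."""
    rng = random.Random(seed)
    draws = []
    for _ in range(n):
        total_uf = 1.0
        for median, gsd in lognormals.values():
            total_uf *= rng.lognormvariate(math.log(median), math.log(gsd))
        draws.append(pod / total_uf)
    cuts = statistics.quantiles(draws, n=100)  # 99 percentile cut points
    return cuts[4], cuts[49], cuts[94]


if __name__ == "__main__":
    print("tier 1 (default):", tier1_default(POD, DEFAULT_UFS))
    print("tier 3 (bounds):", tier3_bounds(POD, UF_BOUNDS))
    print("tier 4 (5th, 50th, 95th):", tier4_probabilistic(POD, UF_LOGNORMAL))
```

A lower tier can be run first. If its range already falls clearly on one side of a decision point, the Monte Carlo tier may add little, which is the screening logic described in the framework above.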

Finding: EPA develops toxicity values for health effects for which there is “credible evidence of hazard” of an adverse outcome after chemical exposure.

Recommendation: EPA should develop criteria for determining when evidence is sufficient to derive toxicity values. One approach would be to restrict formal dose-response assessments to cases in which a standard descriptor characterizes the level of confidence as medium or high (for noncancer end points). For carcinogenic compounds, the corresponding descriptors would be “carcinogenic to humans” or “likely to be carcinogenic to humans.” Another approach, if EPA adopts probabilistic hazard classification, is to conduct formal dose-response assessments only when the posterior probability that a human hazard exists exceeds a predetermined threshold. One such threshold is 50% (that is, when it is more likely than not that the hazard exists).
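The recommendation does not specify how the posterior probability would be computed. As a minimal sketch, assume the evidence can be summarized as a prior probability of hazard and a single likelihood ratio for the combined evidence; both are hypothetical inputs. Under that assumption, Bayes’ rule in odds form gives the posterior probability, and a decision rule compares it with the 50% threshold. The function names are illustrative only.

```python
def posterior_probability(prior, likelihood_ratio):
    """Bayes' rule in odds form: posterior odds = prior odds * likelihood ratio."""
    if not 0.0 < prior < 1.0:
        raise ValueError("prior must be strictly between 0 and 1")
    prior_odds = prior / (1.0 - prior)
    posterior_odds = prior_odds * likelihood_ratio
    return posterior_odds / (1.0 + posterior_odds)


def warrants_dose_response(prior, likelihood_ratio, threshold=0.5):
    """Hypothetical rule: proceed only if P(hazard | evidence) exceeds the threshold."""
    return posterior_probability(prior, likelihood_ratio) > threshold


if __name__ == "__main__":
    # Hypothetical inputs: (prior probability of hazard, likelihood ratio of evidence).
    for prior, lr in [(0.2, 6.0), (0.2, 3.0)]:
        p = posterior_probability(prior, lr)
        print(f"prior={prior}, LR={lr}: posterior={p:.3f}, "
              f"proceed={warrants_dose_response(prior, lr)}")
```

With a prior of 0.2, a likelihood ratio of 6 raises the posterior to 0.6 and clears the threshold. A likelihood ratio of 3 yields about 0.43 and does not.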

Finding: EPA has made a number of substantive changes in the IRIS program since the publication of the NRC formaldehyde report, including the derivation and graphical presentation of multiple dose-response values and a shift away from choosing a particular study as the “best” study for derivation of dose-response estimates.

Recommendation: EPA should continue its shift toward the use of multiple studies rather than single studies for dose-response assessment but with increased attention to risk of bias, study quality, and relevance in assessing human dose-response relationships. For that purpose, EPA will need to develop a clear set of criteria for judging the relative merits of individual mechanistic, animal, and epidemiologic studies for estimating human dose-response relationships.

Finding: Although subjective judgments (such as identifying which studies should be included and how they should be weighted) remain inherent in formal analyses, calculation of toxicity values needs to be prespecified, transparent, and reproducible once those judgments are made.

Recommendation: EPA should use formal methods for combining multiple studies and the derivation of IRIS toxicity values with an emphasis on a transparent and replicable process.
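Random-effects meta-analysis is one widely used formal method for combining estimates across studies. The Python sketch below implements the DerSimonian-Laird estimator for study-specific estimates on a log scale (for example, log BMDs with their sampling variances). It illustrates a prespecified, reproducible combination step and is not the method EPA uses. The input values in the usage example are hypothetical.

```python
import math


def dersimonian_laird(estimates, variances):
    """Random-effects pooled estimate, its standard error, and between-study tau^2."""
    w = [1.0 / v for v in variances]  # fixed-effect (inverse-variance) weights
    sum_w = sum(w)
    fixed = sum(wi * yi for wi, yi in zip(w, estimates)) / sum_w
    # Cochran's Q measures heterogeneity around the fixed-effect estimate.
    q = sum(wi * (yi - fixed) ** 2 for wi, yi in zip(w, estimates))
    k = len(estimates)
    c = sum_w - sum(wi ** 2 for wi in w) / sum_w
    tau2 = max(0.0, (q - (k - 1)) / c) if c > 0 else 0.0
    # Random-effects weights add the between-study variance to each study's variance.
    w_star = [1.0 / (v + tau2) for v in variances]
    pooled = sum(wi * yi for wi, yi in zip(w_star, estimates)) / sum(w_star)
    se = math.sqrt(1.0 / sum(w_star))
    return pooled, se, tau2


if __name__ == "__main__":
    # Hypothetical log BMDs (mg/kg-day) and variances from three studies.
    log_bmds = [math.log(20.0), math.log(35.0), math.log(28.0)]
    variances = [0.04, 0.09, 0.06]
    pooled, se, tau2 = dersimonian_laird(log_bmds, variances)
    print(f"pooled BMD: {math.exp(pooled):.1f} mg/kg-day, "
          f"SE(log) = {se:.3f}, tau^2 = {tau2:.3f}")
```

Once the inclusion and weighting judgments are fixed, a function like this makes the combination step fully reproducible. Rerunning it with the same inputs always gives the same pooled value.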

Finding: EPA could improve documentation and presentation of dose-response information.

Recommendation: EPA should clearly present two dose-response estimates: a central estimate (such as a maximum likelihood estimate or a posterior mean) and a lower-bound estimate for a POD from which a toxicity value is derived. The lower bound becomes an upper bound for a cancer slope factor but remains a lower bound for a reference value.
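The last sentence of this recommendation can be made concrete. For a cancer end point, linear extrapolation from a POD at a benchmark response (BMR) gives a slope of BMR divided by the POD. Dividing by the smaller, lower-bound POD therefore yields the larger, upper-bound slope. For a reference value, the POD is divided by uncertainty factors, so the lower-bound POD still yields the lower value. The Python sketch below shows both cases. The BMD, BMDL, BMR, and composite uncertainty factor are hypothetical numbers, and the class and function names are illustrative only.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class PodEstimates:
    """Central (e.g., BMD) and lower-bound (e.g., BMDL) POD estimates, mg/kg-day."""
    central: float
    lower: float
    bmr: float  # benchmark response as extra risk, e.g., 0.10


def cancer_slopes(pod):
    """Linear extrapolation from the POD: slope = BMR / POD, per mg/kg-day."""
    return {"central": pod.bmr / pod.central, "upper_bound": pod.bmr / pod.lower}


def reference_values(pod, total_uf):
    """Reference value = POD / composite uncertainty factor, in mg/kg-day."""
    return {"central": pod.central / total_uf, "lower_bound": pod.lower / total_uf}


if __name__ == "__main__":
    pod = PodEstimates(central=50.0, lower=30.0, bmr=0.10)  # hypothetical values
    print("slope factors:", cancer_slopes(pod))
    print("reference values:", reference_values(pod, total_uf=100.0))
```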

Finding: Advanced analytic methods, such as Bayesian methods, for integrating data for dose-response assessments and deriving toxicity estimates are underused by the IRIS program.

Recommendation: As the IRIS program evolves, EPA should develop and expand its use of Bayesian or other formal quantitative methods in data integration for dose-response assessment and derivation of toxicity values.
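As a small illustration of a Bayesian dose-response calculation, the Python sketch below fits a quantal-linear model by grid approximation, with P = bg + (1 − bg)[1 − exp(−b·d)], flat priors on the background rate bg and the slope b, and binomial likelihoods. The model, the priors, the grid limits, and the bioassay data are all hypothetical assumptions. This is one of many possible Bayesian approaches, not an IRIS procedure. It returns the posterior mean of b, the kind of central estimate discussed above, together with an upper 95% credible bound.

```python
import math

# Hypothetical bioassay data, for illustration only.
DOSES = [0.0, 10.0, 30.0, 100.0]  # mg/kg-day
N = [50, 50, 50, 50]
CASES = [2, 5, 11, 27]


def quantal_linear(dose, bg, b):
    """P(response) = bg + (1 - bg) * (1 - exp(-b * dose))."""
    return bg + (1.0 - bg) * (1.0 - math.exp(-b * dose))


def log_lik(bg, b):
    """Binomial log-likelihood, omitting constant combinatorial terms."""
    total = 0.0
    for d, n, k in zip(DOSES, N, CASES):
        p = quantal_linear(d, bg, b)
        total += k * math.log(p) + (n - k) * math.log(1.0 - p)
    return total


def grid_posterior_slope(n_bg=60, n_b=300, bg_max=0.3, b_max=0.05):
    """Marginal posterior of b on a grid under flat priors on (bg, b)."""
    bgs = [bg_max * (i + 0.5) / n_bg for i in range(n_bg)]
    bs = [b_max * (j + 0.5) / n_b for j in range(n_b)]
    logs = [[log_lik(bg, b) for b in bs] for bg in bgs]
    top = max(max(row) for row in logs)  # subtract max for numerical stability
    # Sum over the background grid to get the unnormalized marginal of b.
    marg = [sum(math.exp(logs[i][j] - top) for i in range(n_bg)) for j in range(n_b)]
    z = sum(marg)
    post = [m / z for m in marg]
    mean = sum(b * p for b, p in zip(bs, post))
    cum, upper95 = 0.0, bs[-1]
    for b, p in zip(bs, post):
        cum += p
        if cum >= 0.95:
            upper95 = b
            break
    return mean, upper95, post


if __name__ == "__main__":
    mean, upper95, _ = grid_posterior_slope()
    print(f"posterior mean slope: {mean:.5f}; upper 95% bound: {upper95:.5f} per mg/kg-day")
```

Grid approximation keeps the sketch dependency-free. Assessments with more parameters or several studies would more likely use Markov chain Monte Carlo.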

Finding: IRIS-specific guidelines for consistent, coherent, and transparent assessment and communication of uncertainty remain incompletely developed. The inconsistent treatment of uncertainties remains a source of confusion and causes difficulty in characterizing and communicating uncertainty.

Recommendation: Uncertainty analysis should be conducted systematically and coherently in IRIS assessments. To that end, EPA should develop IRIS-specific guidelines to frame uncertainty analysis and uncertainty communication. Moreover, uncertainty analysis should become an integral component of the IRIS process.

Akaike, H. 1974. A new look at the statistical model identification. IEEE T. Automat. Contr. 19(6):716-723.

Altmann, L., A. Bottger, and H. Wiegand. 1990. Neurophysiological and psychophysical measurements reveal effects of acute low-level organic solvent exposure in humans. Int. Arch. Occup. Environ. Health 62(7):493-499.

Altmann, L., H.F. Neuhann, U. Kramer, J. Witten, and E. Jermann. 1995. Neurobehavioral and neurophysiological outcome of chronic low-level tetrachloroethene exposure measured in neighborhoods of dry cleaning shops. Environ. Res. 69(2):83-89.

Blettner, M., W. Sauerbrei, B. Schlehofer, T. Scheuchenpflug, and C. Friedenreich. 1999. Traditional reviews, meta-analyses and pooled analyses in epidemiology. Int. J. Epidemiol. 28(1):1-9.

Boyes, W.K., M. Bercegeay, W.M. Oshiro, Q.T. Krantz, E.M. Kenyon, P.J. Bushnell, and V.A. Benignus. 2009. Acute perchloroethylene exposure alters rat visual-evoked potentials in relation to brain concentrations. Toxicol. Sci. 108(1):159-172.

Brickman, R., S. Jasanoff, and T. Ilgen. 1985. Controlling Chemicals: The Politics of Regulation in Europe and the United States. Ithaca: Cornell University Press.

Cavalleri, A., F. Gobba, M. Paltrinieri, G. Fantuzzi, E. Righi, and G. Aggazzotti. 1994. Perchloroethylene exposure can induce colour vision loss. Neurosci. Lett. 179(1-2):162-166.

DuMouchel, W.H., and J.E. Harris. 1983. Bayes methods for combining the results of cancer studies in humans and other species: Rejoinder. J. Am. Stat. Assoc. 78(382):313-315.

Echeverria, D., R.F. White, and C. Sampaio. 1995. A behavioral evaluation of PCE exposure in patients and dry cleaners: A possible relationship between clinical and preclinical effects. J. Occup. Environ. Med. 37(6):667-680.

EPA (U.S. Environmental Protection Agency). 1994. Methods for Derivation of Inhalation Reference Concentrations and Application of Inhalation Dosimetry. EPA/600/8-90/066F. Office of Research and Development, U.S. Environmental Protection Agency, Research Triangle Park, NC [online]. Available: http://www.epa.gov/raf/publications/pdfs/RFCMETHODOLOGY.PDF [accessed December 20, 2013].

EPA (U.S. Environmental Protection Agency). 2002. A Review of the Reference Dose and Reference Concentration Processes. Final report. EPA/630/P-02/002F. Risk Assessment Forum, U.S. Environmental Protection Agency, Washington, DC [online]. Available: http://www.epa.gov/raf/publications/pdfs/rfd-final.pdf [accessed December 18, 2013].

EPA (U.S. Environmental Protection Agency). 2004. An Examination of EPA Risk Assessment Principles and Practices. EPA/100/B-04/001. Risk Assessment Task Force, Office of Science Advisor, U.S. Environmental Protection Agency, Washington, DC [online]. Available: http://www.epa.gov/osa/pdfs/ratf-final.pdf [accessed December 19, 2013].

EPA (U.S. Environmental Protection Agency). 2005a. Guidelines for Carcinogen Risk Assessment. EPA/630/P-03/001F. Risk Assessment Forum, U.S. Environmental Protection Agency, Washington, DC. March 2005 [online]. Available: http://www.epa.gov/raf/publications/pdfs/CANCER_GUIDELINES_FINAL_3-25-05.PDF [accessed October 3, 2013].

EPA (U.S. Environmental Protection Agency). 2005b. Supplemental Guidance for Assessing Susceptibility from Early-Life Exposure to Carcinogens. EPA/630/R-03/003F. Risk Assessment Forum, U.S. Environmental Protection Agency, Washington, DC [online]. Available: http://www.epa.gov/ttn/atw/childrens_supplement_final.pdf [accessed December 20, 2013].

EPA (U.S. Environmental Protection Agency). 2012. Benchmark Dose Technical Guidance. EPA/100/R-12/001. Risk Assessment Forum, U.S. Environmental Protection Agency, Washington, DC [online]. Available: http://www.epa.gov/raf/publications/pdfs/benchmark_dose_guidance.pdf [accessed December 18, 2013].

EPA (U.S. Environmental Protection Agency). 2013a. EPA Risk Assessment Glossary [online]. Available: http://www.epa.gov/risk/glossary.htm [accessed December 19, 2013].

EPA (U.S. Environmental Protection Agency). 2013b. Part 1. Status of Implementation of Recommendations. Materials Submitted to the National Research Council, by Integrated Risk Information System Program, U.S. Environmental Protection Agency, January 30, 2013 [online]. Available: http://www.epa.gov/iris/pdfs/IRIS%20Program%20Materials%20to%20NRC_Part%201.pdf [accessed October 22, 2013].

EPA (U.S. Environmental Protection Agency). 2013c. Part 2. Chemical-Specific Examples. Materials Submitted to the National Research Council, by Integrated Risk Information System Program, U.S. Environmental Protection Agency, January 30, 2013 [online]. Available: http://www.epa.gov/iris/pdfs/IRIS%20Program%20Materials%20to%20NRC_Part%202.pdf [accessed December 19, 2013].

EPA (U.S. Environmental Protection Agency). 2013d. Toxicological Review of Methanol (Noncancer) (CAS No. 67-56-1) in Support of Summary Information on the Integrated Risk Information System (IRIS). EPA/635/R-11/001Fa. U.S. Environmental Protection Agency, Washington, DC. September 2013 [online]. Available: http://www.epa.gov/iris/toxreviews/0305tr.pdf [accessed October 22, 2013].