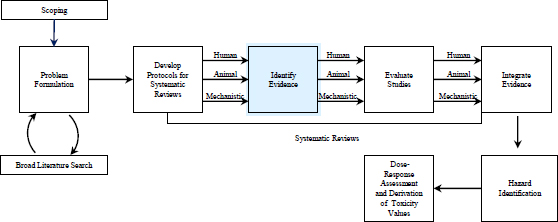

This chapter addresses the identification of evidence pertaining to questions that are candidates for systematic reviews as described in Chapter 3 (see Figure 4-1). Systematic reviews for US Environmental Protection Agency (EPA) Integrated Risk Information System (IRIS) assessments, as for any topic, should be based on comprehensive, transparent literature searches and screening to enable the formulation of reliable assessments that are based on all relevant evidence. EPA has substantially improved and documented its approach for identifying evidence in response to the report Review of the Environmental Protection Agency’s Draft IRIS Assessment of Formaldehyde (NRC 2011) and other criticisms and advice. As a way to encourage further progress, the present committee compares recent EPA materials and assessments with the guidelines developed for systematic review by the Institute of Medicine (IOM 2011) and offers specific suggestions for improving evidence identification in the IRIS process. The committee makes this comparison with the IOM guidelines because they are derived from several decades of experience, are considered a standard in the clinical domain, and are thought to be applicable to the IRIS process.

CONSIDERATION OF BIAS IN EVIDENCE IDENTIFICATION

Systematic reviews of scientific evidence are preferable to traditional literature reviews partly because of their transparency and adherence to standards. In addition, the systematic-review process gathers all the evidence without relying on the judgment of particular people to select studies. Nonetheless, systematic reviews are prone to two types of bias: bias present in the individual studies included in a review and bias resulting from how the review itself was conducted (meta-bias). Meta-bias cannot be identified by examining the methods of an individual study because it stems from how a systematic review is conducted and from factors that broadly affect a body of research.

FIGURE 4-1 The IRIS process; the evidence-identification step is highlighted. The committee views public input and peer review as integral parts of the IRIS process, although they are not specifically noted in the figure.

It might be argued that the most important form of meta-bias that threatens the validity of findings of a systematic review results from the differential reporting of study findings on the basis of their strength and direction. Since the early focus on publication bias, or the failure to publish at all because of potential implications of study findings, investigators have come to recognize that reporting biases encompass a wide array of behaviors, including selective outcome reporting. Reporting biases have been repeatedly documented in studies that show that research with statistically significant results is published more often (sometimes more often in English-language journals), more quickly, and in journals that have higher citation frequencies than research whose results are not statistically significant (Dickersin and Chalmers 2011). The reporting bias related to the publication of research with statistically significant results might also be exacerbated by increased publication pressures (Fanelli 2010). Reporting biases have been shown to be associated with all types of sponsors and investigators; for example, industry-supported studies in health sciences have been shown to be particularly vulnerable to distortion of findings or to not being reported at all (Lundh et al. 2012). Moreover, an investigator’s failure to submit (as opposed to selectivity on the part of the editorial process) appears to be the main reason for failure to publish (Chalmers and Dickersin 2013). There is evidence that reporting biases might also be a subject of concern in laboratory and animal research (Sena et al. 2010; Korevaar et al. 2011; ter Riet et al. 2012). The potential for reporting biases is one reason to search the gray (unpublished) literature. Specifically, the gray literature might be less likely to support specific hypotheses than literature sources that might be biased toward publication of “positive” results.

A systematic review does not, by itself, identify the presence of reporting biases. However, a comprehensive search will include the types of studies particularly prone to reporting biases, such as industry-supported studies in the health sciences. A failure to find studies in such categories should raise concern that reporting bias is present. In addition, a systematic review provides the opportunity to compare findings among different groups of funders and investigators and to identify any indication of meta-bias.

A second type of meta-bias is information bias, which occurs when data on the groups being compared (for example, animals exposed at different doses or control vs exposed animals) are collected differentially (nonrandom misclassification). Such bias can affect a whole body of literature. Incorrect information can also be collected in error (random misclassification) without a direction of the bias. Random misclassification is understandably undesirable in toxicity assessments.

This chapter specifically addresses two steps that are critical for minimizing meta-bias: performing a comprehensive search for all the evidence, including unpublished findings, and screening and selecting reports that address the systematic-review question and meet eligibility criteria specified in the protocol. Error can also arise when data are abstracted from studies during the review process. Systematic-review methods should be structured to maximize the accuracy of the data extracted from the identified studies in the systematic review. Therefore, the committee addresses an additional step in the systematic-review process in this chapter: extracting the data from studies included in the IRIS review (see Table 4-1, section on IOM Standard 3.5).
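One way to check whether screening decisions are consistent is to measure agreement between screeners; the committee's considerations under IOM Standard 3.3.3 in Table 4-1 call for assessing inter-rater reliability when two reviewers screen independently. The following is a minimal Python sketch that computes Cohen's kappa, one common agreement statistic, for two screeners' include/exclude decisions. It is an illustration only and is not a method described by EPA or IOM; the function name, labels, and decisions are hypothetical.

```python
from collections import Counter


def cohens_kappa(rater_a, rater_b):
    """Cohen's kappa for two raters' categorical decisions on the same items."""
    if len(rater_a) != len(rater_b) or not rater_a:
        raise ValueError("Both raters must label the same non-empty set of items.")
    n = len(rater_a)
    # Observed agreement: share of items on which the two raters agree.
    observed = sum(a == b for a, b in zip(rater_a, rater_b)) / n
    # Chance agreement: based on each rater's marginal label frequencies.
    freq_a = Counter(rater_a)
    freq_b = Counter(rater_b)
    labels = set(freq_a) | set(freq_b)
    expected = sum(freq_a[label] * freq_b[label] for label in labels) / (n * n)
    if expected == 1.0:
        # Both raters used a single identical label; agreement is trivially perfect.
        return 1.0
    return (observed - expected) / (1.0 - expected)


# Hypothetical screening decisions for ten citations (illustration only).
screener_1 = ["include", "include", "exclude", "exclude", "include",
              "exclude", "exclude", "exclude", "include", "exclude"]
screener_2 = ["include", "exclude", "exclude", "exclude", "include",
              "exclude", "include", "exclude", "include", "exclude"]

kappa = cohens_kappa(screener_1, screener_2)
print(f"kappa = {kappa:.2f}")
```

In this hypothetical example the screeners agree on 8 of 10 citations, but kappa is lower than 0.8 because part of that agreement would be expected by chance. What value counts as acceptable agreement is a judgment that a review protocol would need to specify.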

RECOMMENDATIONS ON EVIDENCE IDENTIFICATION IN THE NATIONAL RESEARCH COUNCIL FORMALDEHYDE REPORT

The National Research Council (NRC) formaldehyde report (NRC 2011) recommended that EPA adopt standardized, documented, quality-controlled processes and provided specific recommendations related to evidence identification (see Box 4-1). Implementation of the recommendations is addressed in the following section of this chapter. Detailed findings and recommendations are provided in Table 4-1, and general findings and recommendations are provided at the conclusion of the chapter.

• Establish standard protocols for evidence identification.

• Develop a template for description of the search approach.

• Use a database, such as the Health and Environmental Research Online (HERO) database, to capture study information and relevant quantitative data.

Source: NRC 2011, p. 164.

Relatively little research has been conducted specifically on the issue of evidence identification related to hazard identification and dose-response assessments for IRIS assessments. To capitalize on recent efforts in this regard, the committee used the standards established by IOM to assess the effectiveness of health interventions as the foundation of its evaluation of the IRIS process. The standards are presented in Finding What Works in Healthcare: Standards for Systematic Review (IOM 2011). The general approaches and concepts underlying systematic reviews for evidence-based medicine should be relevant to the review of animal studies and epidemiologic studies (Mignini and Khan 2006). In animal studies, the work on testing of various methods of evidence identification is in the early stages (Leenaars et al. 2012; Briel et al. 2013; Hooijmans and Ritskes-Hoitinga 2013).

The IOM standards relied on three main sources: the published methods of the Cochrane Collaboration (Higgins and Green 2008), the Centre for Reviews and Dissemination of the University of York in the United Kingdom (CRD 2009), and the Effective Health Care Program of the Agency for Healthcare Research and Quality in the United States (AHRQ 2011). Those standards reflect the input of experts who were consulted during their development. Although the IOM standards for conducting systematic reviews focus on assessing the comparative effectiveness of medical or surgical interventions and the evidence supporting the standards is based on clinical research, the committee considers the approach useful for a number of aspects of IRIS assessments because the underlying principles are inherent to the scientific process (see Hoffman and Hartung 2006; Woodruff and Sutton 2011; Silbergeld and Scherer 2013). Some analysts, however, have noted challenges associated with implementing the IOM standards (Chang et al. 2013; IOM 2013).

Table 4-1 summarizes elements of the IOM standards for identifying information in systematic reviews in evidence-based medicine, presents the rationale or principle behind each element, and indicates the status of the element as reflected in materials submitted to the committee that document changes in the IRIS program (EPA 2013a,b) as described in Chapter 1 (see Table 1-1). Two chemical-specific examples (EPA 2013c,d,e) are included in Table 4-1 to assess the intent and application of the new EPA strategies as reflected in the draft preamble (EPA 2013a, Appendix B) and the draft handbook (EPA 2013a, Appendix F). The committee interprets those portions of the draft preamble and handbook that address the literature search and screening as constituting draft standard approaches for evidence identification. It assumes that the preamble summarizes the methods used in IRIS assessments and that the handbook is a detailed record of methods that are intended to be applied in evidence assessments. In other words, the committee assumes that people who are responsible for performing systematic reviews to support upcoming IRIS assessments will rely on the handbook as it continues to evolve.
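IOM Standard 3.1.2 in Table 4-1 defines the sensitivity and precision of a search strategy. As an illustration only, the Python sketch below computes both quantities from a set of retrieved records and a reference set of eligible records. The function name and record identifiers are hypothetical. The sketch also assumes that a reference set of eligible records is available; in practice the full set of eligible articles is not known in advance, so any such set is itself an approximation.

```python
def search_performance(retrieved, eligible):
    """Return (sensitivity, precision) of a search against a reference set.

    sensitivity: proportion of all eligible records that the search retrieved
    precision:   proportion of all retrieved records that are eligible
    """
    hits = retrieved & eligible  # eligible records that the search found
    sensitivity = len(hits) / len(eligible) if eligible else float("nan")
    precision = len(hits) / len(retrieved) if retrieved else float("nan")
    return sensitivity, precision


# Hypothetical record identifiers (illustration only).
eligible = {"r1", "r2", "r3", "r4"}
retrieved = {"r1", "r2", "r3", "x1", "x2", "x3", "x4", "x5", "x6"}

sensitivity, precision = search_performance(retrieved, eligible)
print(f"sensitivity = {sensitivity:.2f}, precision = {precision:.2f}")
```

Here the hypothetical search finds three of the four eligible records (sensitivity 0.75) but returns nine records in total (precision about 0.33), which illustrates the trade-off between casting a wide net and limiting the screening burden.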

| IOM Standard (IOM 2011) and Rationale | Draft IRIS Preamble (EPA 2013a, Appendix B) | Draft IRIS Handbook (EPA 2013a, Appendix F) | Draft IRIS Benzo[a]pyrene Assessment (EPA 2013c) | Draft IRIS Ammonia Assessment (EPA 2013d,e) | Considerations for Further Development |

| 3.1 | Conduct a comprehensive systematic search for evidence | |||||

| 3.1.1 Work with a librarian or other information specialist trained in performing systematic reviews to plan the search strategy (p. 266). Rationale: As with other aspects of research, specific skills and training are required to navigate a wide range of bibliographic databases and electronic information sources. | Not mentioned. | The initial steps of the systematic review process involve formulating specific strategies to identify and select studies related to each key question (p. F-2). EPA refers to tapping HERO resources (which include librarian expertise) and advises consulting a librarian early (to develop search terms) and often. Nevertheless, the outline suggests that their search process begins with literature collection. EPA acknowledges that the process developed for evidence-based medicine is generally applied to narrower, more focused questions and nonetheless provides a strong foundation for IRIS assessments; EPA notes that IRIS addresses assessment-specific questions. However, the materials do not describe information specialists trained in systematic reviews. | Not mentioned. | Not mentioned. | Begin by referencing the key role played by information specialists who have expertise in systematic reviews in planning the search strategy and their role as members of the IRIS team throughout the evidence-identification process. |

| 3.1.2 Design the search strategy to address each key research question (p. 266). Rationale: The goal of the search strategy is to maximize both sensitivity (the proportion of all eligible articles that are correctly identified) and precision (the proportion of all articles identified by the search that are eligible). With multiple research questions, a single search strategy is unlikely to cover all questions posed with any precision. | Not mentioned. | No mention of key research questions. Step 1 of the proposed process sets the goal of identifying primary studies. Step 1b (p. F-6) on selecting search terms specifies “the appropriate forms of the chemical name, CAS number, and if relevant, major metabolite(s).” EPA also describes the possible addition of secondary search strategies that include key words for end points, the possibility of other more targeted end points, and the use of filters and analysis of small samples of review results to assess relevance (pp. F-6–F-7). | Not mentioned. The section titles imply the general search questions (for example, developmental effects, reproductive effects, immunotoxicity, other toxicity, and carcinogenicity), but they are not listed anywhere explicitly. | No mention of key research questions. | In the protocol, describe the role of key research questions and their relationship to the search strategies. Do not omit any helpful information related to this standard; rather, include this information in the same section as appropriate. |

| 3.1.3 Use an independent librarian or other information specialist to peer review the search strategy (p. 267). Rationale: This part of the evidence review requires peer review like any other part. Given the specialized skills required, a person with similar skills would be expected to serve as peer reviewer. | Not mentioned. | Not mentioned. | Not mentioned. | Not mentioned. | Add a review of the search strategy by an independent information specialist (that is, one who did not design the protocol) who is trained in evidence identification for systematic reviews to strengthen the search process. |

| 3.1.4 Search bibliographic databases (p. 267). Rationale: A single database is typically not sufficient to cover all publications (journals, books, monographs, government reports, and others) for clinical research. Databases for reports published in languages other than English and for the gray literature could also be searched. | The literature search follows standard practices and includes the PubMed and ToxNet databases of the National Library of Medicine, Web of Science, and other databases listed in EPA’s HERO system. Searches for information on mechanisms of toxicity are inherently specialized and might include studies of other agents that act through related mechanisms (p. B-2). | Step 1A describes specific databases for IRIS reviews (Table F-1), including PubMed, Web of Science, Toxline, TSCATS, PRISM, and IHAD, several of which are accessible through the EPA HERO interface. EPA identifies three approaches: using the HERO interface, searching the named databases directly, or supervising a search process conducted by contractors. | Table LS-1 and Table C-1 (Appendix C) outline the online databases searched. The two tables do not fully agree. | Appendix D in Supplement provides search strings for some but not all of the databases listed in Table LS-1 as searched. Table LS-1 provides keywords used for bibliographic databases. | Systematically and regularly assess the relevance and usefulness of the identified databases (PubMed, Web of Science, Toxline, TSCATS, PRISM, IHAD, and others) for finding primary studies. Ensure that the search process conducted by contractors follows specific (detailed) guidelines for systematic literature reviews established by EPA (considering the elements outlined here) and that the contractor searches regularly undergo peer review or outside assessment. |

| 3.1.5 Search citation indexes (p. 267). Rationale: Citation indexes are a good way to ensure that eligible reports were not missed. | See 3.1.4: the literature search includes Web of Science. | EPA mentions citation indexes, such as Web of Science, but does not specifically suggest searching them or explain how to do so (p. F-7). | The preamble mentions that Web of Science is searched; not mentioned otherwise. | The preamble mentions that searching the Web of Science is standard practice, but it is not mentioned in text otherwise. | Document specific guidance or protocols for searching citation databases (for example, to ensure that searches look for citations to the identified literature). |

| 3.1.6 Search literature cited by eligible studies (p. 268). Rationale: The literature cited by eligible studies (for example, references provided in a journal article or thesis) is a good way to ensure eligible reports were not missed. | Not mentioned. | EPA appropriately discusses this as a strategy. | References from previous EPA assessments and others were also examined. | References from other EPA assessments were also examined. | |

| 3.1.7 Update the search at intervals appropriate to the pace of generation of new information for the research question being addressed (p. 268). Rationale: Given that new articles and reports are being generated in an ongoing manner, searches would be updated regularly to reflect new information relevant to the topic. | Not mentioned. | EPA appropriately discusses this step. | A comprehensive literature search was last conducted in February 2012. Appendix C gives dates of all searches as February 14, 2012. | Search was first conducted through March 2012 and updated in March 2013. Search string in Appendix D-1 should provide exact dates included in search in addition to the date when the search was performed. | Develop standardized processes for updating the literature searches to enable efficient updates on a regular basis, for example, during key stages of development for IRIS assessments. |

| 3.1.8 Search subject-specific databases if other databases are unlikely to provide all relevant evidence (p. 268). Rationale: If other databases are unlikely to be comprehensive, search a variety of other sources to cover the missing areas. | See entry 3.1.4: the literature search includes ToxNet of the National Library of Medicine and other databases listed in EPA’s HERO. | EPA recommends searching “regulatory resources and other websites” for additional resources. | Table LS-1: PubMed, Toxline, Toxcenter, TSCATS, ChemID, Chemfinder, CCRIS, HSDB, GENETOX, and RTECS; listed as searched. Appendix Table C-1: PubMed, Toxline, Toxcenter, TSCATS, TSCATS2, and TSCA recent notices; does not mention ChemID, Chemfinder, CCRIS, HSDB, GENETOX, and RTECS. | Appendix D provides search strings for four subject-specific databases. Although Table LS-1 provides additional database names, search strings are not provided. | Consider and specify other databases beyond those listed in handbook (Appendix F, Figure F-1) and EPA (2013b, Figure 1-1). For example, consider additional resources from the set identified on the HERO website. |

| 3.1.9 Search regional bibliographic databases if other databases are unlikely to provide all relevant evidence (p. 269). Rationale: Many countries have their own databases and either because of language or other regional factors the reports are not necessarily also present in US-based databases. | Not mentioned. | Currently, non-English language is considered a criterion for excluding studies, and foreign language databases are not included in the discussion of search strategies. | Not mentioned. | Not mentioned. | Assess (conduct research to determine) whether studies in non-English-language countries are examining topics relevant to IRIS assessments. Revisit findings periodically to assess the effects of including or excluding non-English-language studies. |

| 3.2 | Take action to address potentially biased reporting of research results | |||||

| 3.2.1 Search gray literature databases, clinical trial registries, and other sources of unpublished information about studies (p. 269). Rationale: Negative or null results, or undesirable results, might be published in difficult to access sources. | Not mentioned. | EPA recommends searching “regulatory resources and other websites” for additional resources. | Not mentioned. | Not mentioned. | Consider searching other gray literature databases beyond those listed in handbook (Appendix F, Table F-1) and other sources of unpublished information about studies (also see IOM Standard 3.1.8 above). |

| 3.2.2 Invite researchers to clarify information about study eligibility, study characteristics, and risk of bias (p. 269). Rationale: Rather than classify identified studies as missing critical information, it is preferable to ask the investigators directly for the information. | Not mentioned. | Not mentioned. | Not mentioned. | Not mentioned. | As needed, request additional information from investigators to determine eligibility, study characteristics, and other information. |

| 3.2.3 Invite all study sponsors and researchers to submit unpublished data, including unreported outcomes, for possible inclusion in the systematic review (p. 270). Rationale: So as to include all relevant studies and data in the review, ask sponsors and researchers for information about unpublished studies or data. | EPA posts the results of the literature search on the IRIS Web site and requests information from the public on additional studies and current research. EPA also considers studies received through the IRIS Submission Desk and studies (typically unpublished) submitted under the Toxic Substances Control Act or the Federal Insecticide, Fungicide, and Rodenticide Act. Material submitted as Confidential Business Information is considered only if it includes health and safety data that can be publicly released. If a study that might be critical for the conclusions of the assessment has not been peer-reviewed, EPA will have it peer-reviewed. | EPA endorses requesting public scrutiny of the list of identified studies from the initial literature search and requests reviews of the list by independent scientists active in research on the topic to ensure that all relevant studies are identified (pp. F-3, F-7). It is also noteworthy that EPA duly identifies the importance of tracking why studies later identified were missed in the initial literature search. | Section 3.1 of the Preamble to the benzo[a]pyrene report states that unpublished health and safety data submitted to the EPA are also considered as long as the data can be publicly released. Per Figure LS-1, the American Petroleum Institute submitted 30 references, but it is not clear whether all study sponsors and researchers were invited to submit unpublished data. | Section 3.1 of the Preamble to the ammonia report states that EPA considers studies submitted to the IRIS Submission Desk and through other means. Many of them are unpublished. Section 3.1 of the Preamble describes inviting the public to comment on the literature search and suggest additional or current studies that might have been missed in the search. | Create a structured process for inviting study sponsors and researchers to submit unpublished data. |

| 3.2.4 Hand search selected journals and conference abstracts (p. 270). Rationale: Hand searching of sources most likely provides relevant up-to-date information and contributes to the likelihood of comprehensive identification of eligible studies. | Not mentioned. | Not mentioned. | Not mentioned. | Not mentioned. | Assess (conduct research to determine) whether the IOM standard suggesting hand-searching of journals and conference abstracts is applicable and useful to the EPA task. |

| 3.2.5 Conduct a web search (p. 271). Rationale: Web searches, even when broad and relatively untargeted, can contribute to the likelihood that all eligible studies have been identified. | Not mentioned. | As noted for IOM Standard 3.2.1, EPA recommends searching regulatory and other Web sites. | Not mentioned. | Not mentioned. | Assess (conduct research to determine) whether Web searches are likely to turn up additional useful information and, if so, determine which Web sites would be appropriate. |

| 3.2.6 Search for studies reported in languages other than English if appropriate (p. 271). Rationale: There is limited evidence that negative, null, or undesirable findings might be published in languages other than English. | Not mentioned. | As noted for IOM Standard 3.1.9, studies published in languages other than English are currently excluded from review and non-English-language databases are not included in the discussion of search strategies. | Non-English-language articles were excluded, per Figure LS-1. | Not mentioned. | Assess (conduct research to determine) whether to search for studies reported in languages other than English for IRIS assessments and revisit question periodically. |

| 3.3 | Screen and select studies | |||||

| 3.3.1 Include or exclude studies based on the protocol’s pre-specified criteria (p. 272). Rationale: On the basis of the study question, inclusion and exclusion criteria for the review would be set a priori, before reviewing the search results (see 3.3.5) so as to avoid results-based decisions. | Exposure route is a key design consideration for selecting pertinent experimental animal studies or human clinical studies. Exposure duration is also a key design consideration for selecting pertinent experimental animal studies. Short-duration studies involving animals or humans might provide toxicokinetic or mechanistic information. Specialized study designs are used for developmental and reproductive toxicity (p. B-3). | EPA specifically mentions that “casting a wide net” is a goal of the search process and that results might not address the question of interest. A two- or three-stage process is suggested (review title and abstract, then full text, or screen title and abstract in separate steps) for relevance. Table F-5 specifies excluding duplicates, studies for which only abstracts are available, and examples of criteria that might be defined for excluding studies. | Protocol not provided so unable to judge whether criteria are prespecified. Sections 3.1, 3.2, and 3.3 of the preamble to the benzo[a]pyrene report provide information on types of studies included, and Figure LS-1 provides reasons for report exclusions. | Protocol not provided so unable to judge whether criteria are prespecified. Sections 3.1, 3.2, and 3.3 of the preamble to the ammonia report provide information on types of studies included, and Figure LS-1 provides reasons for report exclusions. | Provide inclusion and exclusion criteria in IRIS assessment protocol, and use these criteria in figure describing “study selection” flow. |

| 3.3.2 Use observational studies in addition to randomized controlled trials to evaluate harms of interventions (p. 272). Rationale: Predetermine study designs that will be eligible for each study question. | Cohort studies, case-control studies, and some population-based surveys provide the strongest epidemiologic evidence; ecologic studies (geographic correlation studies) relate exposures and effects by geographic area; case reports of high or accidental exposure provide information on rare effects or relevance of results from animal testing (p. B-3). | In Step 1, literature search, it is recommended that articles be sorted into categories (for example, experimental studies of animals and observational studies of humans). Later, in 2B, the Appendix says that studies could include acute-exposure animal experiments, 2-year bioassays, experimental-chamber studies of humans, observational epidemiologic studies, in vitro studies, and many other types of designs. No restriction by study design is intended. | Described in Section 3.2 of preamble to the benzo[a]pyrene report. | Described in Section 3.2 of preamble to the ammonia report. | Not applicable. |

| 3.3.3 Use two or more members of the review team, working independently, to screen and select studies (p. 273). Rationale: Because reporting is often not clear or logically placed, having two independent reviewers is a quality-assurance approach. | Not mentioned. | It appears that the handbook does not require independence of the screeners on the basis of this statement: “Review of the title and abstract, and in some cases, the full text of the article, should be conducted by two reviewers. If a contractor is used for this step, one of the reviewers should be an EPA staff member…One strategy for accomplishing this task is to have one member do the initial screening and sorting of the database, with the second member responsible for checking the accuracy of each of the resulting group (i.e., assuring that the reason for exclusion applies to each study in this group)” (pp. F-11, F-13). | Not mentioned. | Not mentioned. | Two or more members of the team should work independently to screen and select studies, and inter-rater reliability should be assessed. |

| 3.3.4 Train screeners using written documentation; test and retest screeners to improve accuracy and consistency (p. 273). Rationale: Training and documentation are standard quality-assurance approaches. | Not mentioned. | The handbook includes minimal discussion of training: “The two reviewers need to assure they have the same interpretation of the meaning of each category. For large databases especially, this may involve working through selected batches of 50-100 citations as ‘training’ exercises” (lines 6-7, p. F-13). | Not mentioned. | Not mentioned. | Provide written documentation and formally train screeners; specify testing procedures for screeners to improve their accuracy and consistency. |

| 3.3.5 Use one of two strategies to select studies: 1) read all full-text articles identified in the search or 2) screen titles and abstracts of all articles and then read the full-text of articles identified in initial screening (p. 273). Rationale: Data are not clear, even for clinical intervention questions, regarding which method is best, although 2) appears to be more common. | Not mentioned. | Figure F-1 suggests that titles and abstracts are screened first, and then possibly full text of relevant articles is screened. The handbook also says, however, “in some situations, a three-stage process may be more efficient, with an initial screen based on title, followed by screening based on abstract, followed by full text screening. There is not a ‘right’ or ‘wrong’ choice; however, whichever you choose, be sure to document the process you use” (pp. F-10, F-11). | Not mentioned. | A preliminary manual screen of titles or abstracts was conducted by a toxicologist. A more detailed review of the reports identified was conducted by a person not described. | Clearly document screening and selection process. Until research confirms a different approach, ensure that screeners follow a process that reflects the concepts underlying the IOM standards. |

| 3.3.6 Taking account of the risk of bias, consider using observational studies to address gaps in the evidence from randomized clinical trials on the benefits of interventions (p. 274). Rationale: Rather than exclude evidence where it is sparse, it might be necessary to use data from studies using design more susceptible to bias than a preferred design. |

See entry 3.2.2. | Not applicable because all types of study designs are potentially eligible (and randomized clinical trials are not conducted for IRIS assessments). | Not applicable because all types of study designs are potentially eligible (and randomized clinical trials are not conducted for IRIS assessments). | Not applicable because all types of study designs are potentially eligible (and randomized clinical trials are not conducted for IRIS assessments). | Not applicable. | |

| 3.4 | Document the search | |||||

| 3.4.1 Provide a line-by-line description of the search strategy, including the date of search for each database, web browser, etc. (p. 274). | Each assessment specifies the search strategies, keywords, and cutoff dates of its literature searches. | The handbook supports careful documentation of the search strategy and provides Tables F-3 and F-4 as examples of the types of information that would be retained. No specific statement is | Table LS-1 provides more database names than Appendix C but does not provide search | Table LS-1 provides more database names than Appendix D but does not provide search | Document, line-byline, a description of the search strategy, including the dates included in the search of each | |

| Rationale: Appropriate documentation of the search processes ensures transparency of the methods used in the review, and appropriate peer review by information specialists. | made about documenting a line-by-line search strategy. | strings for them. Appendix C: Table C-1 provides search strategies for more than four databases searched with date of search, but exact dates for what was included in search are not provided—for example, for PubMed, “Date range 1950’s to 2/14/2012.” | strings for them. Appendix D: Table D-1 provides search strings for four subject-specific databases, but exact dates for what was included in search are not provided—for example, for PubMed, Appendix D states “Date range: 1950’s to present.” | database and the date of the search for each database and any Web searches. | ||

| 3.4.2 Document the disposition of each report identified, including reasons for their exclusion if appropriate (p. 275). Rationale: The standard supports creation of a flow chart that describes the sequence of events leading to identification of included studies, and it also supports assessment of the sensitivity and precision of the searches a posteriori. |

Not mentioned. | Some support is given to documenting the reasons for excluding each citation at the full-text review stage. “In these situations, the citation should be ‘tagged’ into the appropriate exclusion category” (p. F-16). | Summary data are provided in Figure LS-1. | The disposition of identified citations is summarized in the study-selection figure but is otherwise not mentioned. The disposition of articles identified in the search is documented in HERO. | Consider a more explicit statement in the handbook regarding documenting the disposition of each report identified by the search. Flowcharts can also be used to illustrate dispositions by category, similar to the LitFlow diagram in HERO. | |

| 3.5 | Manage data collection | |||||

| 3.5.1 At a minimum, use two or more researchers, working independently, to extract quantitative or other critical data from each | Not mentioned. | This item is not fully described in the process of data collection: “Ideally, two independent reviewers would independently identify the relevant | Not mentioned. | Not mentioned. | Ensure the quality of the data collected. For example, at a minimum, use two or more researchers | |

| IOM Standard (IOM 2011) and Rationale | Draft IRIS Preamble (EPA 2013a, Appendix B) | Draft IRIS Handbook (EPA 2013a, Appendix F) | Draft IRIS Benzo[a]pyrene Assessment (EPA 2013c) | Draft IRIS Ammonia Assessment (EPA 2013d,e) | Considerations for Further Development |

| study. For other types of data, one individual could extract the data while the second individual independently checks for accuracy and completeness. Establish a fair procedure for resolving discrepancies—do not simply give final decision-making power to the senior reviewer (p. 275). Rationale: Because reporting is often not clear or logically placed, having two independent reviewers is a quality-assurance approach. The evidence supporting two independent data extractors is limited and so some reviewers prefer that one person extracts and the other verifies, a time- saving approach. Discrepancies would be decided by discussion so that each person’s viewpoint is heard. |

methodological details, and then compare their results and interpretations and resolve any differences” (p. F-21). | working independently to extract quantitative and other critical data from each study document. For other types of data, one person could extract the data while a second independently checks for accuracy and completeness. Establish a fair procedure for resolving discrepancies—do not simply give final decision-making power to the senior reviewer (per the IOM wording, p. 275). |

| 3.5.2 Link publications from the same study to avoid including data from the same study more than once (p. 276). Rationale: There are numerous examples in the literature where two articles reporting the same study are thought to represent two separate studies. |

Not mentioned. | It is acknowledged implicitly that there can be more than one publication per study, but there are no specific instructions about linking the publications from a single study together. | Not mentioned. | Not mentioned. | Create an explicit mechanism for linking multiple publications from the same study to avoid including duplicate data. |

| 3.5.3 Use standard data extraction forms developed for the specific systematic review (p. 276). Rationale: Standardized data forms are broadly applied quality-assurance approaches. |

Not mentioned. | An example worksheet is provided for observational epidemiologic studies and items to be extracted from the articles for animal toxicologic studies. A structured form may be useful for recording the key features needed to evaluate a study. An example form is shown in Figure F-3; details of such a form will need to be modified based on the specifics of the chemical, exposure scenarios, and effect measures under study. | Data-extraction forms are not described, and it is not known whether forms were used; evidence tables and exposure-response arrays provide a structured format for data-reporting. | Data-extraction forms are not described, and it is not known whether forms were used; evidence tables and exposure-response arrays provide a structured format for data-reporting. | Create, pilot-test, and use standard data-extraction forms (see also 3.5.4 below). |

| 3.5.4 Pilot-test the data extraction forms and process (p. 276). Rationale: Pre-testing of the data collection forms and processes are broadly applied quality-assurance approaches. |

Not mentioned. | Not mentioned. | Not mentioned. | Not mentioned. | Create, pilot-test, and use standard data-extraction forms (see 3.5.3 above). |
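
The disposition record called for in entry 3.4.2 can be illustrated with a short sketch. The Python below is a hypothetical example, not a description of EPA's or HERO's implementation: the `Disposition` record, its field names, the stage labels, and the exclusion reasons are all assumptions made for illustration. It tallies citations by screening stage and outcome, which is the information a flow chart of study selection would report, and it rejects any exclusion that lacks a documented reason.

```python
from collections import Counter
from dataclasses import dataclass
from typing import Optional


@dataclass(frozen=True)
class Disposition:
    """Outcome of one screening decision (all field names are hypothetical)."""
    citation_id: str                        # illustrative identifier
    stage: str                              # e.g., "title_abstract" or "full_text"
    included: bool
    exclusion_reason: Optional[str] = None  # must be given when included is False


def flow_counts(records):
    """Tally decisions by (stage, outcome) for use in a flow chart."""
    counts = Counter()
    for r in records:
        if not r.included and not r.exclusion_reason:
            raise ValueError(f"{r.citation_id}: excluded without a documented reason")
        outcome = "included" if r.included else f"excluded: {r.exclusion_reason}"
        counts[(r.stage, outcome)] += 1
    return counts


# Made-up records for illustration only.
records = [
    Disposition("ref-001", "title_abstract", False, "not relevant to chemical"),
    Disposition("ref-002", "title_abstract", True),
    Disposition("ref-002", "full_text", False, "no original data"),
    Disposition("ref-003", "title_abstract", True),
    Disposition("ref-003", "full_text", True),
]
counts = flow_counts(records)
for (stage, outcome), n in sorted(counts.items()):
    print(f"{stage:15s} {outcome:40s} {n}")
```

Recording one decision per citation per stage, rather than only summary totals, is what would allow the sensitivity and precision of a search to be assessed after the fact.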

In general, EPA has been responsive to the recommendations from the NRC formaldehyde report. As discussed in Chapter 1, the timing of the publication of the IOM standards was such that EPA could not have been expected to have incorporated the standards into its assessments to date. Nevertheless, comparison of statements made in the draft preamble (EPA 2013a, Appendix B) and draft handbook (EPA 2013a, Appendix F) with the 2011 IOM standards demonstrates that EPA has not only responded to the recommendations made in the NRC formaldehyde report but is well on the way to meeting the general systematic-review standards for identifying and assessing evidence.

Thus, the table is useful primarily for pointing out where further standardization might be helpful, not as a test and demonstration of whether IOM standards have been met. Sometimes the information that the committee sought is not mentioned in the sources examined but is present in other sources, for example, in explanatory materials provided on the EPA IRIS Web site and in chemical-specific links on the EPA Health and Environmental Research Online (HERO) Web site. After discussion, the committee elected to retain “not mentioned” in the table because the information sought was not mentioned in the documents reviewed even though it might have been noted elsewhere. A key goal was to see whether the information appeared where the average reader might expect to find it, notably in documents describing the methods used in developing IRIS assessments. For transparency, there should be no difficulty in accessing all aspects of review methods.

In addition, the subset of documents reflected in the table does not represent all the materials available. Because EPA’s transition to a systematic process for reviewing the evidence is evolving, the committee expects that more recent documents will reflect an increasingly standardized and comprehensive response. The committee had to halt its examination of recent example documents in September 2013 so that the present report could be drafted, with the understanding that some elements that appear undeveloped in Table 4-1 have been addressed in materials released more recently.

Establish Standard Strategies for Evidence Identification

The IOM standards for finding and assessing individual studies include five main elements: searching for evidence, addressing possible reporting biases, screening and selecting studies, documenting the search, and managing data. As Table 4-1 shows, in most instances, the draft preamble (EPA 2013a, Appendix B) focuses on principles and does not address specific elements of the IOM standard. Because identifying evidence for IRIS involves all five elements reflected in the IOM standards, a concise preamble would not be expected to serve as a stand-alone roadmap for evidence-identification methods in IRIS assessments.

The draft handbook (EPA 2013a, Appendix F), however, should include that level of detail and does cover the IOM standards more completely, although some gaps exist. To address the gaps, the committee recommends expanding the handbook as itemized in Table 4-1. In general, EPA might find it helpful to include a table of standards in the handbook (perhaps repeated in the preamble) and to adopt the wording in Table 4-1 for each standard (for example, from IOM) or to modify the wording to be specific to the IRIS case, as appropriate.

As an overarching recommendation, the committee encourages EPA to include standard approaches for evidence identification in IRIS materials and to incorporate them consistently in the various materials. For components that are intentionally less detailed, such as the preamble, the committee encourages EPA to refer the reader elsewhere, notably to relevant parts of the handbook, for those interested in additional detail. The handbook serves as a valuable complement to the preamble, but without pointers to more detailed resources the average reader might not understand the relationship between the two documents or be aware that detailed strategies or standards exist.

Develop a Template for Description of the Search Strategies

EPA has provided the committee with a substantial set of tables, figures, and examples that demonstrate marked progress in implementing the recommendations from the NRC formaldehyde report. In reviewing the materials provided (EPA 2013a,b), the committee did not see evidence that a consistent search template was being used. The preamble (EPA 2013a, Appendix B) and the handbook (EPA 2013a, Appendix F) are helpful with regard to illustrating the overall structure and flow of the evidence-identification process. For example, Figures F-1 and F-2 in EPA (2013a, Appendix F) and Figure 1-1 in EPA (2013b) illustrate the literature-search documentation for ethyl tert-butyl ether. The committee recognizes that the process of developing and refining materials for the IRIS process is still going on and that representations of the search approach have probably continued to evolve. However, materials provided show that the approach is not yet specified consistently and in equivalent detail among the various documents. For example, Figure 1-1 in EPA (2013b) includes Proquest—a step that involves reviewing references cited in papers identified by the search—whereas the preamble (EPA 2013a, Appendix B) and Figure F-1 in the handbook (EPA 2013a, Appendix F) do not. It is also unclear whether inconsistencies are deliberate (and thus desirable) and related to the specific IRIS assessment being undertaken or are unintentional (and perhaps undesirable). For example, the preamble specifies searching “other databases listed in EPA’s HERO system” (p. B-2) and a number of other, mostly unpublished sources, whereas Figure F-1 specifies “OPP databases” and also refers to searching other sources.

The draft materials provided to the committee do not yet appear to include some quality-control and procedural guidelines identified in the IOM standards (see Table 4-1) that are relevant to identifying the evidence. In particular, the materials do not consider whether prespecified research questions were used to guide the evidence identification (see Chapter 3 for a discussion of the development of the research question). The committee encourages EPA to consider prespecifying research questions when establishing the standard template for evidence identification to ensure that a search reflects the research goals appropriately. The committee commends EPA’s collaboration with the National Toxicology Program of the National Institute of Environmental Health Sciences in this regard and encourages incorporation of insights gained into the IRIS process.
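
One way to picture a consistent search template is as a structured record that every assessment would fill in for every database searched. The following Python is a minimal sketch under stated assumptions: the `SearchRecord` class, its field names, the dates, and the placeholder search lines are hypothetical and are not drawn from any EPA document. It captures the elements discussed above (a prespecified research question, the date of the search, the publication dates covered, and a line-by-line strategy) and flags records in which any of them is missing.

```python
from dataclasses import dataclass, field
from datetime import date
from typing import List


@dataclass
class SearchRecord:
    """One database search, documented line by line (hypothetical template)."""
    database: str
    search_date: date        # the day the search was run
    coverage_start: date     # earliest publication date searched
    coverage_end: date       # latest publication date searched
    question: str            # prespecified research question the search serves
    lines: List[str] = field(default_factory=list)  # one entry per search line

    def problems(self) -> List[str]:
        """Return documentation gaps; an empty list means none were found."""
        found = []
        if not self.question.strip():
            found.append("no prespecified research question")
        if not self.lines:
            found.append("no line-by-line search strategy")
        if self.coverage_start > self.coverage_end:
            found.append("coverage start is after coverage end")
        if self.coverage_end > self.search_date:
            found.append("coverage extends past the search date")
        return found

    def render(self) -> str:
        """Format the record as plain text for an appendix."""
        header = (f"{self.database}: searched {self.search_date.isoformat()}, "
                  f"covering {self.coverage_start.isoformat()} "
                  f"to {self.coverage_end.isoformat()}")
        body = [f"  {i}. {line}" for i, line in enumerate(self.lines, start=1)]
        return "\n".join([header, f"  Question: {self.question}", *body])


# Placeholder values for illustration only.
record = SearchRecord(
    database="PubMed",
    search_date=date(2013, 1, 15),
    coverage_start=date(1950, 1, 1),
    coverage_end=date(2013, 1, 15),
    question="Placeholder research question",
    lines=['"placeholder chemical name"[tiab]', "toxicity[tiab]", "#1 AND #2"],
)
print(record.render())
print(record.problems())
```

Because every record has the same fields, differences among assessments would then reflect deliberate choices in the content of a search rather than differences in how it was reported.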

Use a Database to Capture Study Information and Relevant Quantitative Data

The NRC formaldehyde report recommended that EPA use a database, such as HERO, to serve as a repository for documents supporting its toxicity assessments. The HERO database was developed to support scientific assessments for the national ambient air quality standards, notably integrated science assessments for the six criteria pollutants. EPA responded to the recommendation with a substantial expansion of HERO to support IRIS (EPA 2013f,g). The effort has involved incorporating more than 160,000 references relevant to IRIS since 2011, and updating was continuing as this report was drafted. For example, from August to September 2013, nearly 2,400 references were added to the IRIS set in HERO.

The committee encourages EPA to adapt HERO or create a related database to contain data extracted from the individual documents. Although it is not yet evident in the draft preamble or handbook (EPA 2013a), the HERO Web site (EPA 2013f) suggests such an adaptation. In describing what data HERO provides, the Web site (EPA 2013g) states “for ‘key’ studies: objective, quantitative extracted study data [future enhancement].” It further states that “HERO revisions are planned to broaden both the features and scope of information included. Future directions include additional data sets, environmental models, and services that connect data and models.”

The committee recognizes that EPA has expanded the HERO database to capture information about documents relevant to IRIS assessments. Searching HERO, in addition to other databases, will be increasingly useful to identify relevant studies for IRIS assessments. As noted, the committee encourages EPA to expand HERO or build a complementary database into which data extracted from the documents in the HERO database can be entered. By creating a data repository for study information and relevant quantitative data, EPA will be able to accumulate and evaluate evidence among IRIS assessments. Such a repository of identified data (see Goldman and Silbergeld 2013) would further enhance the process and its consistent application for IRIS, as well as enhancing data-sharing (for example, see related discussion in IOM 2013).
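
The idea of a complementary database for extracted data can be sketched with a small relational schema. The example below uses Python's standard `sqlite3` module with an in-memory database; the table names, column names, and inserted values are hypothetical placeholders and do not describe HERO's actual structure. It links each extracted data point to the reference it came from and records who extracted and who verified it.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.execute("PRAGMA foreign_keys = ON")  # enforce links to the reference table
conn.executescript("""
CREATE TABLE reference (
    ref_id   INTEGER PRIMARY KEY,
    citation TEXT NOT NULL
);
CREATE TABLE extracted_datum (
    datum_id     INTEGER PRIMARY KEY,
    ref_id       INTEGER NOT NULL REFERENCES reference(ref_id),
    endpoint     TEXT NOT NULL,
    dose         REAL,
    dose_unit    TEXT,
    response     REAL,
    extracted_by TEXT NOT NULL,
    verified_by  TEXT            -- NULL until a second person has checked it
);
""")

# Placeholder rows for illustration only.
conn.execute(
    "INSERT INTO reference (ref_id, citation) VALUES (?, ?)",
    (1, "Hypothetical Study A"),
)
conn.executemany(
    "INSERT INTO extracted_datum "
    "(ref_id, endpoint, dose, dose_unit, response, extracted_by, verified_by) "
    "VALUES (?, ?, ?, ?, ?, ?, ?)",
    [
        (1, "placeholder endpoint", 0.0, "mg/kg-day", 1.0, "reviewer_1", "reviewer_2"),
        (1, "placeholder endpoint", 10.0, "mg/kg-day", 2.5, "reviewer_1", None),
    ],
)

# List data points that still await independent verification.
unverified = conn.execute(
    "SELECT r.citation, d.dose FROM extracted_datum AS d "
    "JOIN reference AS r ON r.ref_id = d.ref_id "
    "WHERE d.verified_by IS NULL"
).fetchall()
print(unverified)
```

Keeping extracted data in a table keyed to the reference record is one way the same study could be reused among assessments without re-extraction.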

COMMENTS ON BEST PRACTICES FOR EVIDENCE IDENTIFICATION

IOM (2011) standards as highlighted in Table 4-1 capture recent best practices. Searching for and identifying the evidence is arguably the most important step in a systematic review. Accordingly, a standardized search strategy and reporting format are essential for evidence identification. As discussed in Chapter 3, the protocol frames an answerable question or questions that will be addressed by the assessment, states the eligibility criteria for inclusion in the assessment, and describes in detail how the relevant evidence will be identified. Searches should always be well documented with an expected format, as described in the articles on search filters for Embase (de Vries et al. 2011) and for PubMed (Hooijmans et al. 2010). As noted above, the committee could not always find some of the critical information in the draft materials that it reviewed, although it has found that more recent IRIS assessments and preliminary materials for upcoming assessments reflect increasing standardization (for example, see EPA 2013h,i), which is commendable. Standardizing the search strategy and reporting format would aid the reader of IRIS assessments and would facilitate an evaluation of how well the standards and concepts set forth in the preamble and handbook are being applied. In addition, standardization would help to minimize unnecessary duplication, overlaps, and inconsistencies among various IRIS assessments. An example of format and documentation issues related to searching for animal studies can be found in Leenaars et al. (2012).

The IOM standards also emphasize the role of various specialists in the review process, including information specialists (also referred to as informationists) and topic-specific experts. Those screening the studies and abstracting the data also need explicit training, and typically topic-specific experts are involved at this step. The roles of all team members should be identified in the protocol.

The evidence supporting the IOM standards is likely to be useful in the IRIS domain, but it would be appropriate for EPA to perform research that examines evidence specifically applicable to epidemiology and toxicity evaluations underlying IRIS assessments. For example, a targeted research effort could address the question of whether it is useful and necessary to search the gray literature—research literature that has not been formally published in journal articles, such as conference abstracts, book chapters, and theses—and the non-English-language literature in systematic reviews for IRIS assessments. Given how quickly methods for systematic reviews are evolving, including databases and indexing terms, methodologic research related to systematic reviews for IRIS assessments should be kept current to ensure that standards are up to date and relevant.

The findings and recommendations that follow are broad recommendations on evidence identification; specific suggestions or considerations for each step in the process are provided in Table 4-1.

Finding: EPA has been responsive to recommendations in the NRC formaldehyde report regarding evidence identification and is well on the way to adopting a more rigorous approach to evidence identification that would meet standards for systematic reviews. This finding is based on a comparison of the draft EPA materials provided to the committee with IOM standards.

Recommendation: The trajectory of change needs to be maintained.

Finding: Current descriptions of search strategies appear inconsistently comprehensive, particularly regarding (a) the roles of trained information specialists; (b) the requirements for contractors; (c) the descriptions of search strategies for each database and source searched; (d) critical details concerning the search, such as the specific dates of each search and the specific publication dates included; and (e) the periodic need to consider modifying the databases and languages to be searched in updated and new reviews. The committee acknowledges that recent assessments other than the ones that it reviewed might already address some of the indicated concerns.

Recommendation: The current process can be enhanced with more explicit documentation of methods. Protocols for IRIS assessments should include a section on evidence identification that is written in collaboration with information specialists trained in systematic reviews and that includes a search strategy for each systematic-review question being addressed in the assessment. Specifically, the protocols should provide a line-by-line description of the search strategy, the date of the search, and publication dates searched and, as noted in Chapter 3, explicitly state the inclusion and exclusion criteria for studies.

Recommendation: Evidence identification should involve a predetermined search of key sources, follow a search strategy based on empirical research, and be reported in a standardized way that allows replication by others. The search strategies and sources should be modified as needed on the basis of new evidence on best practices. Contractors who perform the evidence identification for the systematic review should adhere to the same standards and provide evidence of experience and expertise in the field.

Finding: One problem for systematic reviews in toxicology is identifying and retrieving toxicologic information outside the peer-reviewed public literature.

Recommendation: EPA should consider developing specific resources, such as registries, that could be used to identify and retrieve information about toxicology studies reported outside the literature accessible by electronic searching. In the medical field, clinical-trial registries and US legislation that has required studies to register in ClinicalTrials.gov have been an important step in ensuring that the total number of studies that are undertaken is known.

Finding: Replicability and quality control are critical in scientific undertakings, including data management. Although that general principle is evident in IRIS assessments that were reviewed, tasks appear to be assigned to a single information specialist or review author. There was no evidence of the information specialist’s or reviewer’s training or of review of work by others who have similar expertise. As discussed in Chapter 2, an evaluation of validity and reliability through inter-rater comparisons is important and helps to determine whether multiple reviewers are needed. This aspect is missing from the IOM standards.

Recommendation: EPA is encouraged to use at least two reviewers who work independently to screen and select studies, pending an evaluation of validity and reliability that might indicate that multiple reviewers are not warranted. It is important that the reviewers use standardized procedures and forms.
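
An inter-rater comparison of screening decisions can be summarized with a chance-corrected agreement statistic. The sketch below computes Cohen's kappa, a widely used measure of agreement between two raters; the committee does not name a specific statistic, so the choice of kappa is an assumption made here for illustration, and the reviewer decisions are made-up data.

```python
from collections import Counter


def cohens_kappa(ratings_a, ratings_b):
    """Cohen's kappa for two raters who labeled the same items."""
    if len(ratings_a) != len(ratings_b) or not ratings_a:
        raise ValueError("ratings must be non-empty and of equal length")
    n = len(ratings_a)
    # Observed proportion of items on which the two raters agree.
    observed = sum(a == b for a, b in zip(ratings_a, ratings_b)) / n
    # Agreement expected by chance, from each rater's marginal frequencies.
    freq_a, freq_b = Counter(ratings_a), Counter(ratings_b)
    expected = sum(freq_a[k] * freq_b[k] for k in set(freq_a) | set(freq_b)) / (n * n)
    if expected == 1.0:
        return 1.0  # both raters used a single identical label throughout
    return (observed - expected) / (1.0 - expected)


# Hypothetical screening decisions on the same ten citations.
reviewer_1 = ["include", "include", "exclude", "exclude", "include",
              "exclude", "exclude", "exclude", "include", "exclude"]
reviewer_2 = ["include", "exclude", "exclude", "exclude", "include",
              "exclude", "include", "exclude", "include", "exclude"]

kappa = cohens_kappa(reviewer_1, reviewer_2)
print(f"kappa = {kappa:.2f}")  # prints "kappa = 0.58"
```

In this made-up example the reviewers agree on 8 of 10 citations, but because agreement of 0.52 would be expected by chance alone, kappa is (0.80 - 0.52) / (1 - 0.52), or about 0.58.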

Finding: Another important aspect of quality control in systematic reviews is ensuring that information is not double-counted. Explicit recognition of and mechanisms for dealing with multiple publications that include overlapping data from the same study are important components of data management that are not yet evident in the draft handbook.

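Recommendation: EPA should create an explicit mechanism for linking multiple publications from the same study to avoid including duplicate data (see entry 3.5.2 in Table 4-1).

Recommendation: EPA should engage information specialists trained in systematic reviews in the process of evidence identification, for example, by having an information specialist peer review the proposed evidence-identification strategy in the protocol for the systematic review.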
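
The double-counting concern in the finding above can be made concrete with a brief sketch. The Python below groups publications by a study key so that each study is counted once however many reports it generated. The `study_key` field is hypothetical and is assumed to be assigned by a reviewer who has judged that the publications report the same study; the citations are made-up placeholders.

```python
from collections import defaultdict


def link_publications(publications):
    """Group citations by a reviewer-assigned study key (hypothetical field)."""
    by_study = defaultdict(list)
    for pub in publications:
        by_study[pub["study_key"]].append(pub["citation"])
    return dict(by_study)


# Placeholder entries for illustration only.
publications = [
    {"citation": "Author A 2001 (journal article)", "study_key": "cohort-1"},
    {"citation": "Author A 2003 (follow-up report)", "study_key": "cohort-1"},
    {"citation": "Author B 2005 (journal article)", "study_key": "bioassay-7"},
]

linked = link_publications(publications)
print(f"{len(publications)} publications, {len(linked)} distinct studies")
for key, citations in linked.items():
    print(key, "->", citations)
```

The grouping itself is trivial; the substantive work is the reviewer's judgment about which publications share a study, which is why an explicit, documented mechanism is needed.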

Finding: The committee did not find enough empirical evidence pertaining to the systematic-review process in toxicological studies to permit it to comment specifically on reporting biases and other methodologic issues, except by analogy to other, related fields of scientific inquiry. It is not clear, for example, whether a reporting bias is associated with the language of publication for toxicological studies and the other types of research publications that support IRIS assessments or whether any such bias (if it exists) might be restricted to specific countries or periods.

Recommendation: EPA should encourage and support research on reporting biases and other methodologic topics relevant to the systematic-review process in toxicology.

Finding: The draft preamble and handbook provide a good start for developing a systematic, quality-controlled process for identifying evidence for IRIS assessments.

Recommendation: EPA should continue to document and standardize its evidence-identification process by adopting (or adapting, where appropriate) the relevant IOM standards described in Table 4-1. It is anticipated that its efforts will further strengthen the overall consistency, reliability, and transparency of the evidence-identification process.

REFERENCES

AHRQ (Agency for Healthcare Research and Quality). 2011. The Effective Health Care Program Stakeholder Guide. Publication No. 11-EHC069-EF [online]. Available: http://www.ahrq.gov/research/findings/evidence-based-reports/stakeholderguide/stakeholdr.pdf [accessed December 4, 2013].

Briel, M., K.F. Muller, J.J. Meerpohl, E. von Elm, B. Lang, E. Motshall, V. Gloy, F. Lamontagne, G. Schwarzer, and D. Bassler. 2013. Publication bias in animal research: A systematic review protocol. Syst. Rev. 2:23.

Chalmers, I., and K. Dickersin. 2013. Biased under-reporting of research reflects biased under-submission more than biased editorial rejection. F1000 Research 2(1).

Chang, S.M., E.B. Bass, N. Berkman, T.S. Carey, R.L. Kane, J. Lau, and S. Ratichek. 2013. Challenges in implementing The Institute of Medicine systematic review standards. Syst. Rev. 2(1):69.

CRD (Centre for Reviews and Dissemination). 2009. Systematic Reviews: CRD’s Guidance for Undertaking Reviews in Health Care. York, UK: York Publishing Services, Ltd [online]. Available: http://www.york.ac.uk/inst/crd/pdf/Systematic_Reviews.pdf [accessed December 4, 2013].

de Vries, R.B., C.R. Hooijmans, A. Tillema, M. Leenaars, and M. Ritskes-Hoitinga. 2011. A search filter for increasing the retrieval of animal studies in Embase. Lab Anim. 45(4):268-270.

Dickersin, K., and I. Chalmers. 2011. Recognizing, investigating and dealing with incomplete and biased reporting of clinical research: From Francis Bacon to the World Health Organisation. J. R. Soc. Med. 104(12):532-538.

EPA (U.S. Environmental Protection Agency). 2013a. Part 1. Status of Implementation of Recommendations. Materials Submitted to the National Research Council, by Integrated Risk Information System Program, U.S. Environmental Protection Agency, January 30, 2013 [online]. Available: http://www.epa.gov/IRIS/iris-nrc.htm [accessed October 22, 2013].

EPA (U.S. Environmental Protection Agency). 2013b. Part 2. Chemical-Specific Examples. Materials Submitted to the National Research Council, by Integrated Risk Information System Program, U.S. Environmental Protection Agency, January 30, 2013 [online]. Available: http://www.epa.gov/iris/pdfs/IRIS%20Program%20Materials%20to%20NRC_Part%202.pdf [accessed October 22, 2013].

EPA (U.S. Environmental Protection Agency). 2013c. Toxicological Review of Benzo[a]pyrene (CAS No. 50-32-8) in Support of Summary Information on the Integrated Risk Information System (IRIS), Public Comment Draft. EPA/635/R13/138a. National Center for Environmental Assessment, Office of Research and Development, U.S. Environmental Protection Agency, Washington, DC. August 2013 [online]. Available: http://cfpub.epa.gov/ncea/iris_drafts/recordisplay.cfm?deid=66193 [accessed November 13, 2013].

EPA (U.S. Environmental Protection Agency). 2013d. Toxicological Review of Ammonia (CAS No. 7664-41-7), In Support of Summary Information on the Integrated Risk Information System (IRIS). Revised External Review Draft. EPA/635/R-13/139a. National Center for Environmental Assessment, Office of Research and Development, U.S. Environmental Protection Agency, Washington, DC. August 2013 [online]. Available: http://cfpub.epa.gov/ncea/cfm/recordisplay.cfm?deid=254524 [accessed October 14, 2013].

EPA (U.S. Environmental Protection Agency). 2013e. Toxicological Review of Ammonia (CAS No. 7664-41-7), In Support of Summary Information on the Integrated Risk Information System (IRIS), Supplemental Information. Revised External Review Draft. EPA/635/R-13/139b. National Center for Environmental Assessment, Office of Research and Development, Washington, DC [online]. Available: http://cfpub.epa.gov/ncea/cfm/recordisplay.cfm?deid=254524 [accessed October 14, 2013].

EPA (U.S. Environmental Protection Agency). 2013f. Health and Environmental Research Online (HERO): The Assessment Process [online]. Available: http://hero.epa.gov/index.cfm?action=content.assessment [accessed October 14, 2013].

EPA (U.S. Environmental Protection Agency). 2013g. Health and Environmental Research Online (HERO): Basic Information [online]. Available: http://hero.epa.gov/index.cfm?action=content.basic [accessed October 14, 2013].

EPA (U.S. Environmental Protection Agency). 2013h. Preliminary Materials for the Integrated Risk Information System (IRIS) Toxicological Review of tert-Butyl Alcohol (tert-Butanol) [CASRN 75-65-0]. EPA/635/R-13/107. National Center for Environmental Assessment, Office of Research and Development, Washington, DC. July 2013 [online]. Available: http://www.epa.gov/iris/publicmeeting/iris_bimonthly-oct2013/t-butanol-litsearch_evidence-tables.pdf [accessed October 14, 2013].

EPA (U.S. Environmental Protection Agency). 2013i. Systematic Review of the tert-Butanol Literature (generated by HERO) [online]. Available: http://www.epa.gov/iris/publicmeeting/iris_bimonthly-oct2013/mtg_docs.htm [accessed October 14, 2013].

Fanelli, D. 2010. Do pressures to publish increase scientists’ bias? An empirical support from U.S. States data. PLoS One 5(4):e10271.

Goldman, L.R., and E.K. Silbergeld. 2013. Assuring access to data for chemical evaluations. Environ. Health Perspect. 121(2):149-152.

Higgins, J.P.T., and S. Green, eds. 2008. Cochrane Handbook for Systematic Reviews of Interventions. Chichester, UK: John Wiley & Sons.

Hoffman, S., and T. Hartung. 2006. Toward an evidence-based toxicology. Hum. Exp. Toxicol. 25(9):497-513.

Hooijmans, C.R., and M. Ritskes-Hoitinga. 2013. Progress in using systematic reviews of animal studies to improve translational research. PLOS Med. 10(7):e1001482.

Hooijmans, C.R., A. Tillema, M. Leenaars, and M. Ritskes-Hoitinga. 2010. Enhancing search efficiency by means of a search filter for finding all studies on animal experimentation in PubMed. Lab Anim. 44(3):170-175.

IOM (Institute of Medicine). 2011. Finding What Works in Health Care: Standards for Systematic Reviews. Washington, DC: National Academies Press.

IOM (Institute of Medicine). 2013. Sharing Clinical Research Data: Workshop Summary. Washington, DC: National Academies Press.

Korevaar, D.A., L. Hooft, and G. ter Riet. 2011. Systematic reviews and meta-analyses of preclinical studies: Publication bias in laboratory animal experiments. Lab Anim. 45(4):225-230.

Leenaars, M., C.R. Hooijmans, N. van Veggel, G. ter Riet, M. Leeflang, L. Hooft, G.J. van der Wilt, A. Tillema, and M. Ritskes-Hoitinga. 2012. A step-by-step guide to systematically identify all relevant animal studies. Lab Anim. 46(1):24-31.

Lundh, A., S. Sismondo, J. Lexchin, O.A. Busuioc, and L. Bero. 2012. Industry sponsorship and research outcome. Cochrane Database Syst. Rev. (12):Art. MR000033. doi: 10.1002/14651858.MR000033.pub2.

Mignini, L.E., and K.S. Khan. 2006. Methodological quality of systematic reviews of animal studies: A survey of reviews of basic research. BMC Med. Res. Methodol. 6:10. doi:10.1186/1471-2288-6-10.

NRC (National Research Council). 2011. Review of the Environmental Protection Agency’s Draft IRIS Assessment of Formaldehyde. Washington, DC: National Academies Press.

Sena, E.S., H.B. van der Worp, P.M. Bath, D.W. Howells, and M.R. Macleod. 2010. Publication bias in reports of animal stroke studies leads to major overstatement of efficacy. PLoS Biol. 8(3):e1000344.

Silbergeld, E., and R.W. Scherer. 2013. Evidence-based toxicology: Strait is the gate, but the road is worth taking. ALTEX 30(1):67-73.

ter Riet, G., D.A. Korevaar, M. Leenaars, P.J. Sterk, C.J.F. Van Noorden, L.M. Bouter, R. Lutter, R.P.O. Elferink, and L. Hooft. 2012. Publication bias in laboratory animal research: A survey on magnitude, drivers, consequences and potential solutions. PLOS ONE 7(9):e43404.

Woodruff, T.J., and P. Sutton. 2011. An evidence-based medicine methodology to bridge the gap between clinical and environmental health sciences. Health Aff. (Millwood) 30(5):931-937.