Procedures for Eliciting and Using Judgments of the Value of Observed Behaviors on Military Job Performance Tests

Richard M. Jaeger and Sallie Keller-McNulty

THE PROBLEMS ADDRESSED

As part of a Joint-Service job performance measurement project, each Service is developing a series of standardized hands-on job performance tests. These tests are intended to measure the “manifest, observable job behaviors” (Committee on the Performance of Military Personnel, 1984:5) of first-term enlistees in selected military occupational specialties. Once the tests have been constructed and refined, they will be examined for use as criteria for validating the Armed Services Vocational Aptitude Battery (ASVAB), or its successor instruments, as devices for classifying military enlistees into various service schools and military occupational specialties.

Three problems are addressed in this paper. The first concerns the development of standards of minimally acceptable performance on the newly developed criterion tests. Such standards could be used to discriminate between enlistees who would not be expected to exhibit satisfactory (or, perhaps, cost-beneficial) on-the-job performance in a military occupational specialty and those who would be expected to exhibit such performance.

The second problem concerns methods for eliciting and characterizing judgments on the relative value or worth of enlistees' test performances that are judged to be above the minima deemed necessary for admission to one or more military occupational specialties. Practical interest in this problem derives from the need to classify enlistees into military occupational specialties in a way that maximizes their value to the Service while satisfying the enlistees' own requirements and interests.

The third problem concerns the use of enlistees' behaviors on the hands-on tests, and judgments of their value, in the classification of enlistees among military occupational specialties. As was true of the second problem, interest in this problem reflects the need to assign enlistees to military occupational specialties in a way that satisfies the needs of the Services and the enlistees. In a scarce-resource environment, it is essential that the classification problem be solved in a way that maximizes the value of available personnel to the Services while maintaining the attractiveness of the Services at a level that will not diminish the pool of available enlistees.

The three problems considered in this paper are not treated at the same level of detail. Since there is an extensive methodological and empirical literature on judgmental procedures for setting standards on tests, we have addressed this topic in considerable detail. There is little research that supports methodological recommendations on assigning relative value or worth to various levels of test performance. Therefore, our treatment of this problem is comparatively brief. Finally, our discussion of the problem of assigning enlistees to the military occupational specialties should be viewed as illustrative rather than definitive. This problem is logically related to the first two, but is of such complexity that complete development is beyond the scope of this paper.

Establishing Test Standards

To fulfill the requirements of a military occupational specialty, an enlistee must be capable of performing dozens, if not hundreds, of discrete and diverse tasks. Indeed, each Service has conducted extensive analyses of the task requirements of each of its jobs (Morsch et al., 1961; Goody, 1976; Raimsey-Klee, 1981; Burtch et al., 1982; U.S. Army Research Institute for the Behavioral and Social Sciences, 1984) that have produced convincing evidence of the complexity of the various military occupational specialties and the need to describe military occupational specialties in terms of disjoint clusters of tasks. Even when attention is restricted to the job proficiencies expected of personnel at the initial level of skill defined for a military occupational specialty, the military occupational specialty might be defined by several hundred tasks that can reasonably be allocated to anywhere from 2 to 25 or more disjoint clusters (U.S. Army Research Institute for the Behavioral and Social Sciences, 1984:12-19).

In view of the complexity of military occupational specialties, it is unlikely that the performance of an enlistee on the tasks that compose a military occupational specialty could validly be characterized by a single test score. In their initial development of performance tests, the service branches have acknowledged this reality by (1) defining clusters of military occupational specialty tasks; (2) identifying samples of tasks that purportedly represent the population of tasks that compose a military occupational specialty; and (3) specifying sets of measurable behaviors that can be used to assess enlistees' proficiencies in performing the sampled tasks. The problem of defining minimally acceptable performance in a military occupational specialty must therefore be addressed by defining minimally acceptable performance on each of the clusters of tasks that compose the military occupational specialty. Methods for defining standards of performance on task clusters thus provide one major focus of this paper.

Eliciting and Combining Judgments of the Worth of Job Performance Test Behaviors

Scores on the job performance tests that are currently under development are to be used as criterion values in the development of algorithms for assigning new enlistees to various military occupational specialties. Were it possible to develop singular, equivalently scaled, equivalently valued measures that characterized the performance of an enlistee in each military occupational specialty, optimal classification of enlistees among military occupational specialties would be a theoretically simple problem. In reality, the problem is complicated by several factors. First, as discussed above, the tasks that compose a military occupational specialty are not unidimensional. Second, even tests that assessed enlistees' performances on task clusters with perfect precision and validity would not be inherently equivalent. Third, the worth or value associated with an equivalent level of performance on tests that assessed proficiency in two different task clusters would likely differ across those clusters. Fourth, the worth or value associated with a given proficiency level in a single task cluster would likely differ, depending on the military occupational specialty in which the task cluster was embedded.

To address these issues, the problem of establishing functions and eliciting judgments that assign value to levels of proficiency in various military occupational specialties (hereafter called “value functions”) must be examined at the level of the individual tasks and at the level of the task clusters. In this regard, two of the major problems considered in this paper are equivalent.

To develop value functions for military occupational specialties, several component problems must be addressed. First, the task clusters defined by job analysts for each military occupational specialty must be accepted or revised. Second, value functions associated with performances on tasks sampled from task clusters must be defined. Third, operational procedures for eliciting judgments of the values of various levels of performance on tasks sampled from task clusters must be developed. Fourth, methods for weighting and aggregating value assignments across sampled tasks, so as to determine a value assignment for a profile of performances on the tasks that are sampled from a military occupational specialty, must be developed. Related issues that must be considered include the comparability of value assignments across tasks within a military occupational specialty, as well as the scale equivalence of value assignments to levels of performance in different military occupational specialties.

Using Predicted Test Performances and Value Judgments in Personnel Classification

Assuming it is possible to predict enlistees' performances on military job performance tests from the ASVAB or other predictor batteries, and assuming that judgments of the values of these predicted performances can be elicited and combined to produce summary scores for military occupational specialties, there remains the problem of using these summaries in classifying enlistees among military occupational specialties. This problem can be addressed in several ways, depending on one's desire to consider as primary the interests of individual enlistees and/or the Services, and the types of decision scenarios envisioned.

If it were desired to satisfy the interests of individual enlistees with little regard for the needs of or costs to the military, predicted performances in various military occupational specialties would be used solely for guidance purposes. The only value functions that would be pertinent would be those of the individual enlistee. Enlistees would be assigned to the military occupational specialties they most desired, after having been informed of their likely chances of success in each.

If the interests of the military were viewed as primary, the best classification strategy would depend on the decision scenarios envisioned and the decision components to be taken into account. In a scenario in which each enlistee was to be classified individually, based on his/her predicted military occupational specialty job performances and the set of available military jobs at the time of his/her classification, the obvious classification choice would be the one that carried maximum value. In a scenario in which enlistees were to be classified as a group (e.g., the group of enlistees who completed the ASVAB during a given week), the predicted job performances of all members of the group, and the values associated with those predictions, could be taken into account, in addition to the average values associated with the performances of personnel currently assigned to military occupational specialties with jobs available at the time of classification.

These alternatives are considered in a discussion of the problem of using enlistees' predicted scores on job performance tests in classifying enlistees among military occupational specialties. A specific mathematical programming model for the third alternative is developed and illustrated.

ESTABLISHING MINIMUM STANDARDS OF PERFORMANCE

One of the two major problems considered in this paper is the establishment of standards of performance that define minimally acceptable levels of response on the new criterion tests that are under development by the Services. In addressing this problem, we first discuss the consequential issues associated with standard setting. We next describe the most widely used standard-setting methods that have been proposed for use with educational achievement tests. In the third section, we consider the prospects for applying these methods to the problem of setting standards on military job performance tests. Finally, we examine a variety of operational questions that arise in the application of any standard-setting procedure, such as the types and numbers of persons from whom judgments on appropriate standards are sought, the form in which judgments are sought, and the information provided to those from whom judgments are sought. Rather than recommending the one “best” standard-setting procedure, it is our intent to illuminate the alternatives that have been applied elsewhere, to bring forth the principal considerations that affect their applicability in the military setting, and to bring to light the major operational issues that must be addressed in using any practical standard-setting procedure.

Consequences of Setting Standards

There are no objective procedures for setting test standards. It is necessary to rely on human judgment. Since judgments are fallible, it is important to consider the consequences of setting standards that are unnecessarily high or low. If an unnecessarily high standard is established, examinees whose competence is acceptable will be failed. Errors of this kind are termed false-negative errors. If the standard established is lower than necessary, examinees whose competence is unacceptable will be passed. Errors of this kind are termed false-positive errors. Both individuals and society are placed at risk by these kinds of errors.

When tests are used for selection—that is, for determining who is admitted to an educational program or an employment situation—society or institutions bear the primary effects of false-positive errors. The effects of false-negative errors are borne primarily by individuals when applicant pools greatly exceed institutional needs. However, limitations in the pool of personnel available for military service increase the institutional consequences of making false-negative errors. Adequate military staffing depends on the availability of personnel for a variety of military occupational specialties. Since the military now relies on an all-volunteer force, it is particularly vulnerable to erroneous exclusion of qualified personnel.

When tests are used for purposes of classification—that is, for allocating examinees among alternative educational programs or jobs—the effects of false-positive and false-negative errors are shared by institutions and individuals. When false-positive errors are made, individuals are assigned to programs or jobs that are beyond their levels of competence. This results in less-than-optimal utilization of personnel and the possibility of costly damage for institutions. It also results in psychological and physical hazards for individuals. When false-negative errors are made, individuals are not assigned to programs or jobs for which they are competent. Although this is unlikely to result in physical damage to individuals or institutions, it does produce less-than-optimal use of personnel by institutions and the risk of psychological distress for individuals.

In the military context, the risk to human life and the national security associated with false-positive classification errors is particularly great. Although they might cause psychological distress, false-negative classification errors are unlikely to be life-threatening for individuals. But the Services compete with the civilian sector for qualified personnel. Therefore, the military consequences of false-negative classification errors are likely to be severe for military occupational specialties that require personnel with rare skills and abilities.

Conventional Standard-Setting Procedures

The number of procedures that have been proposed for setting standards on pencil-and-paper tests has been estimated as somewhere between 18 (Hambleton and Eignor, 1980) and 31 (Berk, 1985). The difference between these figures has more to do with the authors' criteria for identifying methods as “different” than with substantively new developments during the years 1980 to 1985. These same authors, as well as others (Meskauskas, 1976; Berk, 1980; Hambleton, 1980), have proposed a variety of schemes for classifying standard-setting procedures. Since this review of standard-setting procedures will be restricted to those that have been widely used and/or hold promise for use in establishing standards on military job performance tests, a simple, two-category classification method will be used. Procedures that require judgments about test items will be described apart from procedures that require judgments about the competence of examinees.

Procedures That Require Judgments About Test Items

Many of the procedures used for setting standards on achievement tests are based on judgments about the characteristics of dichotomously scored test items and examinees' likely performances on those items. Both the types of judgments required and the methods through which judgments are elicited differ across procedures. The most widely used procedures of this type are reviewed in this section.

The Nedelsky Procedure. This standard-setting procedure is, perhaps, of historical interest since it is the oldest procedure in the modern literature on standard setting that still enjoys widespread use. It was proposed by Nedelsky in 1954, and is only applicable to tests composed of multiple-choice items.

The first step in the procedure is to define a population of judges and to select a representative sample from that population. Judges who use the procedure must conceptualize a “minimally competent examinee” and then predict the behavior of this minimally competent examinee on each option of each multiple-choice test item. Because of the nature of the judgment task, it is essential that judges be knowledgeable about the proficiencies of the examinee population, the requirements of the job for which examinees are being selected, and the difficulties of the test items being judged.

For each item on the test, each judge is asked to predict the number of response options a minimally competent examinee could eliminate as being clearly incorrect. A statistic termed by Nedelsky the “minimum pass level” (MPL) is then computed for each item. The MPL for an item is equal to the reciprocal of the number of response options remaining, following elimination of the options that could be identified as incorrect by a minimally competent examinee. The test standard based on the predictions of a single judge is computed as the sum of the MPL values produced by that judge for all items on the test.
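
The single-judge computation described above can be sketched in a few lines of Python. This is only an illustration: the function name and the four-item data set are hypothetical and do not come from Nedelsky's paper. Each pair gives the number of options on an item and the number that the judge predicts a minimally competent examinee could eliminate.

```python
from fractions import Fraction

def nedelsky_judge_standard(items):
    """Return one judge's test standard: the sum of item MPLs.

    `items` is a list of (n_options, n_eliminated) pairs, one per item
    (a hypothetical input format chosen for this sketch).
    """
    total = Fraction(0)
    for n_options, n_eliminated in items:
        remaining = n_options - n_eliminated
        if remaining < 1:
            # The correct answer can never be eliminated.
            raise ValueError("at least one option must remain")
        total += Fraction(1, remaining)  # MPL = 1 / options remaining
    return total

# Hypothetical judgments for a four-item test with four options per item.
judgments = [(4, 2), (4, 3), (4, 0), (4, 1)]
standard = nedelsky_judge_standard(judgments)
print(float(standard))  # 1/2 + 1 + 1/4 + 1/3
```

Exact fractions are used only to keep the arithmetic transparent; ordinary floating-point division would serve equally well in practice.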

An initial test standard is computed by averaging the summed MPL values produced by the predictions of each of a sample of judges. Nedelsky (1954) recommended that this initial test standard be adjusted to control the probability that an examinee whose true performance was just equal to the initial test standard could be classified as incompetent due solely to measurement error in the testing process. The adjustment procedure recommended by Nedelsky depends on the assumption that the standard deviation of the test standards derived from the predictions of a sample of judges is equal to the standard error of measurement of the test. If the assumption were correct, and if the distribution of measurement errors on the test were normal, the probability of failing an examinee with true ability just equal to the initial recommended test standard could be reduced to any desired value. For example, reducing the initial test standard by one standard deviation of the distribution of summed MPL values would ensure that no more than 16 percent of examinees with true ability just equal to the initial recommended test standard would fail. Reducing the initial recommended test standard by two standard deviations would reduce this probability to about 2 percent.
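
The averaging and adjustment steps can be illustrated as follows. The sketch assumes, as the text does, normally distributed measurement errors with a standard deviation equal to that of the judges' summed MPL values. The function names and the five summed MPL values are hypothetical, and the use of the sample (n - 1) standard deviation is our assumption, since the procedure as described does not specify which form to use.

```python
from statistics import NormalDist, mean, stdev

def adjusted_standard(judge_standards, k):
    """Mean of the judges' summed MPLs, lowered by k standard deviations."""
    initial = mean(judge_standards)
    spread = stdev(judge_standards)  # sample SD (an assumption of this sketch)
    return initial - k * spread

def failure_probability(k):
    """Chance that an examinee whose true score equals the initial
    standard scores below the adjusted standard, under normal errors."""
    return NormalDist().cdf(-k)

# Hypothetical summed MPL values from five judges.
judge_standards = [20.5, 22.0, 19.0, 23.5, 21.0]

for k in (0, 1, 2):
    print(k, round(adjusted_standard(judge_standards, k), 2),
          round(failure_probability(k), 3))
```

With k = 1 and k = 2 the failure probabilities are roughly 0.16 and 0.02, matching the figures quoted in the text.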

The initial recommended test standard produced by Nedelsky's procedure derives from the assumption that examinees will make random choices among the item options that they cannot eliminate as being clearly incorrect. Examinees are assumed to have no partial information or to be uninfluenced by partial information when making their choices among remaining options. If these assumptions were correct, and if judges were able to correctly predict the average number of options a minimally competent examinee could eliminate as being clearly incorrect, the initial test standard resulting from the Nedelsky procedure would be an unbiased estimate of the mean test score that would be earned by minimally competent examinees. However, studies by Poggio et al. (1981) report that, when Nedelsky's procedure was applied to pencil-and-paper achievement tests in a public school setting, school personnel were unable to make consistent judgments of the type required to satisfy the assumptions of the procedure.

The Angoff Procedure. Although he attributes the procedure to Ledyard Tucker (Livingston and Zieky, 1983), William Angoff's name is associated with a standard-setting method that he described in 1971. The procedure requires that each of a sample of judges consider each item on a test and estimate (1971:515):

the probability that the “minimally acceptable” person would answer each item correctly. In effect, the judges would think of a number of minimally acceptable persons, instead of only one such person, and would estimate the proportion of minimally acceptable persons who would answer each item correctly. The sum of these probabilities, or proportions, would then represent the minimally acceptable score.

As was true of Nedelsky's procedure, the first step in using Angoff's procedure is to identify an appropriate population of judges and then to select a representative sample from this population. Judges are then asked to conceptualize a minimally competent examinee. Livingston and Zieky (1982) suggest that judges be helped to define minimal competence by having them review the domain that the test is to assess and then take part in a discussion on what constitutes “borderline knowledge and skills.” If judges can agree on a level of performance that distinguishes between examinees who are competent and those who are not, Zieky and Livingston recommend that the definition of that performance be recorded, together with examples of performance that are judged to be above, and below, the standard. Using as an example a test that was designed to assess the reading comprehension of high school students, Zieky and Livingston suggest that judges be asked to reach agreement on whether a minimally competent student must be able to “find specific information in a newspaper article, distinguish statements of fact from statements of opinion, recognize the main idea of a paragraph,” and so on. To be useful in characterizing a minimally competent examinee, the behaviors used to distinguish between those who are competent and those who are not should represent the domain of behavior assessed by the test for which a standard is desired.

The judgments required by Angoff's procedure are as follows: Each judge, working independently, considers the items on a test individually and predicts for each item the probability that a minimally competent examinee would be able to answer the test item correctly.

The sum of the probabilities predicted by a judge becomes that judge's recommended test standard and, if the predictions were correct, would equal the total score on the examination that would be earned by a minimally competent examinee. The average of the recommended test standards produced by the entire sample of judges is the test standard that results from Angoff's procedure.

If for each item on the test the average of the probabilities predicted by the sample of judges was correct, the test standard produced by Angoff's procedure would equal the mean score earned by a population of minimally competent examinees. In any case, the result of Angoff's procedure can be viewed as a subjective estimate of that mean.
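
The arithmetic of Angoff's procedure is simple enough to show in a brief sketch. The function name and the ratings below (three judges, four items) are hypothetical and serve only to illustrate the two steps: summing each judge's item probabilities, then averaging the sums across judges.

```python
from statistics import mean

def angoff_standard(ratings):
    """Return (test standard, per-judge standards).

    ratings[j][i] is judge j's estimate of the probability that a
    minimally competent examinee answers item i correctly.
    """
    for judge in ratings:
        if any(p < 0 or p > 1 for p in judge):
            raise ValueError("probabilities must lie between 0 and 1")
    judge_standards = [sum(judge) for judge in ratings]
    return mean(judge_standards), judge_standards

# Hypothetical ratings: three judges, four items.
ratings = [
    [0.9, 0.6, 0.4, 0.7],
    [0.8, 0.5, 0.5, 0.6],
    [1.0, 0.7, 0.3, 0.8],
]
standard, per_judge = angoff_standard(ratings)
print(round(standard, 2), [round(s, 2) for s in per_judge])
```

Because the mean of the judges' sums equals the sum of the item-by-item mean probabilities, the same standard results whichever way the averaging is carried out.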

Angoff's procedure has been modified in several ways, so as to make it easier to use and/or to increase the reliability of its results. One modification involves use of a fixed scale of probability values from which judges select their predictions. This technique allows judges' predictions to be processed by an optical mark-sense reader for direct entry to a computer, thus saving a coding step and reducing the possibility of clerical errors. Educational Testing Service used an asymmetric scale of probabilities when setting standards on the subtests of the National Teacher Examinations (NTE). Livingston and Zieky (1982:25) objected to the use of an asymmetric scale, since they felt it might bias judges' predictions. Cross et al. (1984) used a symmetric scale of 10 probability values that covered the full range from zero to one, thus overcoming Livingston and Zieky's objections.

Other modifications of Angoff's procedure include the use of iterative processes through which judges are given an opportunity to discuss their initial predictions and then to reconsider those predictions. Cross et al. (1984) investigated the effects of such a process coupled with the use of normative data on examinees' actual test performances. They found that judges recommended a lower test standard at the end of a second judgment session than at the end of an initial session. These results were not entirely consistent with findings of Jaeger and Busch (1984) in a study of standards set for the National Teacher Examinations. They found that mean recommended standards were lower at the end of a second judgment session than at the end of an initial session for four out of eight subtests of the NTE Core Battery; they found just the reverse for the other four subtests. However, the variability of recommended test standards was consistently reduced by using an iterative judgment process. The resulting increase in the stability of mean recommended test standards suggests that use of an iterative judgment process with Angoff's procedure is advantageous.

The Ebel Procedure. The Ebel (1972:492-494) standard-setting procedure also begins by defining a population of judges and selecting a representative sample from that population. After conceptualizing a “minimally competent” examinee, judges must complete three tasks.

First, judges must construct a two-dimensional taxonomy of the items in a test, one dimension being defined by the “difficulty” of the test items and the other being defined by the “relevance” of the items. Ebel suggested using three levels of difficulty, which he labeled “easy,” “medium,” and “hard.” He suggested that four levels of item relevance be labeled “essential,” “important,” “acceptable,” and “questionable.” However, the procedure does not depend on the use of these specific categories or labels. The numbers of dimensions and categories could be changed without altering the basic method.

The judges' second task is to allocate each of the items on the test to one of the cells created by the two-dimensional taxonomy constructed in the first step. For example, Item 1 might be judged to be of “medium difficulty” and to be “important”; Item 2 might be judged to be of “easy difficulty” and to be of “questionable” relevance, etc.

The judges' final task is to answer the following question for each category of test items (Livingston and Zieky, 1982:25):

If a borderline test-taker had to answer a large number of questions like these, what percentage would he or she answer correctly?

When a test standard is computed using Ebel's method, a judge's recommended percentage for a cell of the taxonomy is multiplied by the number of test items the judge allocated to that cell. These products are then summed across all cells of the taxonomy to produce a recommended test standard for that judge. As in the procedures described earlier, the recommendations of all sampled judges are averaged to produce a final recommended test standard.
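
A minimal sketch of this computation follows. The dictionary layout, the function name, and the two judges' allocations for a 20-item test are hypothetical. Each cell of a judge's taxonomy records the number of items the judge placed there and the percentage of such items the judge expects a borderline examinee to answer correctly.

```python
from statistics import mean

def ebel_judge_standard(cells):
    """Sum over taxonomy cells of (items in cell) x (expected proportion correct).

    `cells` maps (relevance, difficulty) -> (n_items, percent_correct).
    """
    return sum(n_items * percent / 100 for n_items, percent in cells.values())

# Hypothetical allocations of a 20-item test by two judges.
judge_a = {
    ("essential", "easy"): (10, 90),
    ("essential", "medium"): (5, 70),
    ("important", "hard"): (5, 40),
}
judge_b = {
    ("essential", "easy"): (8, 95),
    ("important", "medium"): (8, 60),
    ("acceptable", "hard"): (4, 30),
}

judge_standards = [ebel_judge_standard(j) for j in (judge_a, judge_b)]
final_standard = mean(judge_standards)
print([round(s, 2) for s in judge_standards], round(final_standard, 2))
```

Note that the judges need not use the same cells, since each judge allocates the items independently; only the total number of items is common to all judges.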

The Jaeger Procedure. This procedure was developed for use in setting a standard on a high school competency test (Jaeger, 1978, 1982), but can be adapted to any testing situation where a licensing, certification, or selection decision is based on an examinee's test performance (Cross et al., 1984).

One or more populations of judges must be specified, and representative samples must be selected from each population. As in the procedures described above, judges are asked to render judgments about test items. More specifically, judges are asked to answer the following question for each item on the test for which a standard is desired: Should every examinee in the population of those who receive favorable action on the decision that underlies use of the test (e.g., every enlistee who is admitted to the military occupational specialty) be able to answer the test item correctly? Notice that this question does not require judges to conceptualize a “minimally competent” examinee.

An initial standard for a judge is computed by counting the number of items for which that judge responded “yes” to the question stated above. An initial test standard is established by computing the median of the standards recommended by each sampled judge.

Jaeger's procedure is iterative by design. Judges are afforded several opportunities to reconsider their initial recommendations in light of data on the actual test performances of examinees and the recommendations of their fellow judges. In its original application, judges were first asked to provide “yes/no” recommendations on each test item on a 120-item reading comprehension test. The judges were then given data on the proportion of examinees who had actually answered each test item correctly in the most recent administration of the test, in addition to the distribution of test standards recommended by their fellow judges. Following a review of these data, judges were asked to reconsider their initial recommendations and once again answer, for each item, the question of whether every “successful” examinee should be able to answer the test item correctly. These answers were used to compute a new set of recommended test standards in preparation for a final judgment session. Prior to the final judgment session, judges were given data on the proportion of examinees who completed the test during the most recent administration who would have failed the test had the standard been set at each of the score values between zero and the maximum possible score. In addition, judges were shown the distribution of test standards recommended by their fellow judges during the second judgment session. A final judgment session, identical to the first two, was then conducted. The “yes” responses were tabulated for each judge, and the final recommended test standard was defined as the median of the standards computed for each judge.

Jaeger (1982) recommends that more than one population of judges be sampled, and that the final test standard be based on the lowest of the median recommended standards computed for the various samples of judges. He also suggests that prior to the initial judgment session each judge complete the test under conditions that approximate those used in an actual test administration.
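
The computations applied to the judges' final-session responses can be sketched as follows. The iterative feedback sessions are not modeled here; the sketch covers only the counting of “yes” responses, the median within each sample of judges, and the choice of the lowest median across samples. The function name, the population labels, and the response data are hypothetical.

```python
from statistics import median

def jaeger_standard(responses_by_population):
    """Return (final standard, median standard per population).

    `responses_by_population` maps a population label to a list of
    judges; each judge is a list of booleans, one per test item,
    with True meaning "yes, every successful examinee should be able
    to answer this item correctly".
    """
    medians = {
        name: median(sum(judge) for judge in judges)  # count of "yes" responses
        for name, judges in responses_by_population.items()
    }
    return min(medians.values()), medians

# Hypothetical final-session responses on a five-item test.
responses = {
    "supervisors": [
        [True, True, True, False, True],
        [True, True, False, False, True],
        [True, True, True, True, True],
    ],
    "instructors": [
        [True, False, True, False, True],
        [True, True, False, False, False],
        [True, True, True, False, True],
    ],
}
final, by_population = jaeger_standard(responses)
print(final, by_population)
```

Taking the lowest of the medians follows the recommendation in Jaeger (1982) as summarized above; with a single population of judges the function simply returns that population's median.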

Procedures That Require Judgments About Examinees

Unlike the standard-setting procedures that have been described to this point, several widely used procedures do not require judgments about the characteristics or difficulty of test items. Instead, judges are asked to make decisions regarding the competence of individual examinees on the ability measured by the test for which a standard is sought. Proponents of these procedures claim that the types of judgments required—concerning persons rather than test items—are more consistent with the experience and capabilities of educators and supervisory personnel. The resulting test standards are thus claimed to be more reasonable and realistic.

The Borderline-Group Procedure. This standard-setting procedure was proposed by Zieky and Livingston (1977). As is true of all standard-setting procedures, the first step in applying the procedure is to define an appropriate population of judges and then to select a representative sample from that population. Livingston and Zieky (1982:31) indicate the importance of sampled judges knowing, or being able to determine, the level of knowledge or skill in the domain assessed by the test of individual examinees they will be asked to judge. Careful and appropriate selection of judges is thus critical to the success of the procedure.

Judges are first asked to define three categories of competence in the domain of knowledge or skill assessed by the test: “adequate or competent,” “borderline or marginal,” and “inadequate or incompetent.” Ideally, these definitions would be operational, would be consensual, and would be reached collectively following extensive deliberation and discussion by the entire sample of judges. In reality, this ideal might not be achieved, nor might a process of face-to-face discussion among judges be feasible.

Once definitions of the three categories of competence have been formulated, the principal act of judgment in the borderline-group procedure requires judges to identify members of the examinee population whom they would classify as “borderline or marginal” in the knowledge and/or skill assessed by the test. It is essential that the judges use information other than the score on the test for which a standard is sought in reaching their classification decisions. If scores on this test were used, the standard-setting procedure would be tautological. Additionally, classification decisions based on scores on the test for which a standard is sought might well be biased. Interpretations of test performances are often normative, and individual judges are unlikely to know about the performances of a representative sample of the population of examinees.

The test for which a standard is sought is administered, under standardized conditions, after a subpopulation of examinees has been classified as “marginal or borderline.” The standard produced by the borderline-group method is defined as the median of the distribution of test scores of examinees who are classified as “marginal or borderline.”
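The computation called for by the borderline-group method is a single median. The following Python sketch illustrates it; the function name, the category labels, and the scores are hypothetical and serve only as an illustration.

```python
from statistics import median

def borderline_group_standard(scores, classifications):
    """Return the median test score of examinees judged 'borderline'.

    scores and classifications are parallel lists; the labels used
    here are hypothetical stand-ins for the judges' three categories.
    """
    borderline = [s for s, c in zip(scores, classifications) if c == "borderline"]
    if not borderline:
        raise ValueError("no examinees were classified as borderline")
    return median(borderline)

# Hypothetical test scores and judges' classifications for seven examinees
scores = [12, 15, 9, 14, 18, 11, 13]
labels = ["borderline", "competent", "incompetent", "borderline",
          "competent", "borderline", "borderline"]

standard = borderline_group_standard(scores, labels)
print(standard)
```

Only the scores of examinees placed in the middle category enter the calculation, which is why contamination of that category bears directly on the resulting standard.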

Although the borderline-group procedure has some definite advantages, it is subject to several factors that threaten the validity of the test standard it produces. First, unless the sample of examinees classified by the judges is representative, in its distribution of test scores, of the population of examinees to which the test standard is applied, a biased standard will result. Second, in making their classifications it is essential that judges restrict their attention to knowledge and/or skill that is assessed by the test for which a standard is sought. To make reasoned decisions, judges must be familiar with the performance of examinees they are to classify. However, the better they know these examinees, the more likely they are to be influenced in their judgments by factors other than the knowledge and/or skill assessed by the test; halo effect is a pervasive influence in judgments that require classification of persons. Finally, the “borderline” category is the middle position of a three-point scale that ranges from “competent” to “incompetent.” Numerous studies of judges' classification behavior have shown that the middle category of a rating scale tends to be used when judges do not have information that is sufficient to make a valid judgment. Contamination of the “borderline” category with examinees who do not belong there would bias the test standard produced by the borderline-group procedure.

The Contrasting-Groups Procedure. Proposed by Zieky and Livingston in 1977, this procedure is similar in concept to the criterion-groups procedure suggested by Berk (1976). The principal focus of judgment in the contrasting-groups procedure is on the competence of examinees rather than the characteristics of test items, just as in the borderline-group procedure.

The first two steps of the contrasting-groups procedure are identical to those of the borderline-group procedure. First, a population of judges is defined and a representative sample of judges is selected from that population. Second, the sampled judges must develop operational definitions of three categories of competence in the domain of knowledge and/or skill assessed by the test for which a standard is sought: “adequate or competent,” “borderline or marginal,” and “inadequate or incompetent.”

The principal judgmental act of the contrasting-groups procedure requires judges to assign a representative sample of the population to be examined to the three categories of competence they have just defined. That is, each member of the sample of examinees is assigned to a category labeled “adequate or competent,” “borderline or marginal,” or “inadequate or incompetent.”

Once classification of examinees has been completed, the test for which a standard is sought is administered to the examinees about whom judgments have been made. The standard that results from the contrasting-groups method is based on the test score distributions of examinees who have been assigned to the “adequate or competent” and “inadequate or incompetent” categories.

Several methods have been proposed for analyzing the test score distributions of examinees who have been assigned to the “adequate or competent” and the “inadequate or incompetent” categories. Hambleton and Eignor (1980) recommended that the two test score distributions be plotted on the same graph and that the test standard be defined as the score at which these two distributions intersect. This procedure assumes that the score distributions will not be coincident and that they will be overlapping. Under these conditions, the test standard that results from this algorithm has the advantage of minimizing the total number of examinees who were classified as “competent” and who would fail the test, plus the total number of examinees who were classified as “incompetent” and who would pass the test. If the loss attendant to passing an incompetent examinee were not equal to the loss attendant to failing a competent one, this test standard would not minimize total expected losses. However, if the loss ratio were either known or estimable, the standard could be adjusted readily so as to minimize expected losses.
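The minimization property described above, and the adjustment for unequal losses, can be illustrated with a short Python sketch. It is not the graphical intersection method itself: as a stand-in, it searches the observed score values for the cut score with the smallest weighted count of misclassified examinees. The function name, the loss parameters, and the data are hypothetical.

```python
def contrasting_groups_standard(competent, incompetent,
                                loss_false_pass=1.0, loss_false_fail=1.0):
    """Return (cut, loss) for the cut score with the smallest weighted error count.

    An examinee passes when score >= cut. A "false fail" is a competent
    examinee below the cut; a "false pass" is an incompetent examinee at
    or above it. Ties are resolved in favor of the lowest cut score.
    """
    candidates = sorted(set(competent) | set(incompetent))
    candidates.append(candidates[-1] + 1)  # allows a cut that fails everyone
    best_cut, best_loss = None, float("inf")
    for cut in candidates:
        false_fail = sum(1 for s in competent if s < cut)
        false_pass = sum(1 for s in incompetent if s >= cut)
        loss = loss_false_fail * false_fail + loss_false_pass * false_pass
        if loss < best_loss:
            best_cut, best_loss = cut, loss
    return best_cut, best_loss

# Hypothetical scores for the two judged groups
competent = [13, 14, 15, 15, 16, 17, 18]
incompetent = [8, 9, 10, 11, 12, 12, 14]

equal_loss = contrasting_groups_standard(competent, incompetent)
costly_false_pass = contrasting_groups_standard(competent, incompetent,
                                                loss_false_pass=3.0)
print(equal_loss, costly_false_pass)
```

With these illustrative data, treating a false pass as three times as costly as a false fail moves the cut score upward, which is the kind of adjustment the loss ratio permits.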

Livingston and Zieky (1982) proposed an alternative method of analyzing the test score distribution of “competent” examinees for the purpose of setting a standard. They suggested that the percentage of examinees classified as “competent” be computed for the subsample of examinees who earned each possible test score. The test standard would be defined as the test score for which 50 percent of the examinees were classified as “competent.” Since, for small samples of examinees, the distribution of test scores is likely to be irregular, Livingston and Zieky (1982) recommend the use of a smoothing procedure prior to computing the score value for which 50 percent of the examinees were classified as “competent.” They describe several alternative smoothing procedures.
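A minimal Python sketch of the 50-percent rule follows. The smoothing step uses a simple centered moving average, which is an assumption made here for illustration and is not necessarily one of the procedures Livingston and Zieky describe; all function names and data are hypothetical.

```python
from collections import defaultdict

def percent_competent_by_score(scores, is_competent):
    """Proportion of examinees judged competent at each observed score."""
    totals, competent = defaultdict(int), defaultdict(int)
    for s, c in zip(scores, is_competent):
        totals[s] += 1
        competent[s] += int(c)
    return {s: competent[s] / totals[s] for s in sorted(totals)}

def moving_average(values, window=3):
    """Centered moving average; the window is truncated at both ends."""
    half = window // 2
    smoothed = []
    for i in range(len(values)):
        lo, hi = max(0, i - half), min(len(values), i + half + 1)
        smoothed.append(sum(values[lo:hi]) / (hi - lo))
    return smoothed

def fifty_percent_standard(scores, is_competent, window=3):
    """Lowest score whose smoothed proportion competent reaches 0.5."""
    props = percent_competent_by_score(scores, is_competent)
    levels = list(props)
    smoothed = moving_average([props[s] for s in levels], window)
    for score, p in zip(levels, smoothed):
        if p >= 0.5:
            return score
    return None

# Hypothetical (score, judged competent?) pairs with an irregular pattern
pairs = [(10, False), (10, False), (11, True), (11, False),
         (12, True), (12, False), (12, False), (12, False),
         (13, True), (13, False), (14, True), (14, True),
         (15, True), (15, True)]
scores = [s for s, _ in pairs]
flags = [c for _, c in pairs]

smoothed_standard = fifty_percent_standard(scores, flags)
raw_standard = fifty_percent_standard(scores, flags, window=1)
print(smoothed_standard, raw_standard)
```

In this small example the unsmoothed proportions reach 50 percent at an isolated low score, while the smoothed proportions do not, which illustrates why smoothing is recommended for small samples.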

Most of the cautions enumerated above for the borderline-group procedure apply to the contrasting-groups procedure as well: Judges must have an adequate and appropriate basis for classifying examinees, yet avoid classification on bases outside the domain of knowledge and/or skill assessed by the test. A representative sample of examinees must be classified so as to avoid distortion of the distributions of test scores of “competent” and “incompetent” examinees. Since not only the shapes of test score distributions but the sample sizes on which they are based will affect their point of intersection, use of a representative sample of examinees is essential to the fidelity of the standard resulting from the contrasting-groups procedure.

Berk's (1976) criterion-groups procedure is operationally identical to the contrasting-groups procedure apart from the definition of groups. In Berk's method, instead of classifying examinees as “competent” or “incompetent,” “criterion” groups are formed from examinees who are “uninstructed” and “instructed” in the material assessed by the test for which a standard is sought. Of course, judgment is needed to define groups that can appropriately be termed “uninstructed” and “instructed.” A fundamental assumption of Berk's method is that the “uninstructed” group is incompetent in the knowledge and/or skill assessed by the test, and that the “instructed” group is competent in that knowledge and/or skill.

Prospects for Applying Conventional Standard-Setting Procedures

Although three Services are developing new pencil-and-paper tests as components of their job performance criterion measures (Laabs, 1984; Committee on the Performance of Military Personnel, 1984), a principal interest of the military is establishment of standards on the performance components of the new measures. Since all of the conventional standard-setting procedures reviewed above were developed for use with pencil-and-paper tests in a public education setting, they might not be applicable to hands-on and/or interview procedures used in a military setting. We will consider the applicability of the procedures in the order of their initial description.

Procedures That Require Judgments About Test Items

The Nedelsky procedure may be only partially applicable in setting standards on military job performance tests because it can be used only with multiple-choice test items, while the assessment of “manifest, observable job behaviors” is a central purpose of the military job performance tests. The performance components of these tests (in the Joint-Service lexicon, the hands-on portions) typically measure active performance of a task in accordance with the specifications of a military manual. Because the behavior to be measured is appropriate action, not discrimination among proposed actions, a multiple-choice item format would appear to be inconsistent with specified measurement objectives.

The Nedelsky procedure could be used to establish standards on the “knowledge measures” portion of the criterion measures being developed by the Army, and on similar tests developed by other Services, provided the measures consist of items in multiple-choice format. In civilian settings, the Nedelsky procedure often has provided standards that are somewhat more lenient than those provided by other procedures. In the proposed military setting, lenient standards of performance on individual tasks could still lead to stringent standards on an entire job performance test. This would be true if a separate standard had to be established for each task and satisfactory performance were required on all sampled tasks. For example, suppose that pencil-and-paper measures had been developed for 10 tasks that composed a military occupational specialty job performance test. If the standard of performance adopted for each measure resulted in just 5 percent of enlistees failing, and if examinee performances on the various measures were independent (an admittedly unlikely occurrence, used here merely to illustrate the extreme case), the percentage of examinees who would satisfy the overall military occupational specialty criterion on the pencil-and-paper portion of the job performance test would be $100 \times (1 - 0.05)^{10} = 59.9$ percent. Thus almost 40 percent of the examinees would fail the pencil-and-paper portion of the job performance test, even though only 5 percent would fail to complete any given task. An alternative standard-setting procedure that resulted in more stringent standards for each task would result in an even higher (and perhaps unacceptable) failure rate on the pencil-and-paper portion of the job performance test.
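The arithmetic of this example can be checked with a few lines of Python. The sketch assumes, as the example does, that performances on the tasks are independent and that every task has the same failure rate; the function name is hypothetical.

```python
def overall_pass_rate(task_fail_rate, n_tasks):
    """Proportion passing every task, assuming independent task outcomes."""
    return (1.0 - task_fail_rate) ** n_tasks

# The case in the text: 10 tasks, each failed by 5 percent of examinees
rate = overall_pass_rate(0.05, 10)
print(f"{100 * rate:.1f} percent pass all 10 tasks")

# A more stringent per-task standard lowers the overall pass rate further
stricter = overall_pass_rate(0.10, 10)
print(f"{100 * stricter:.1f} percent pass when each task fails 10 percent")
```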

The stimulus question that defines the fundamental judgment task of the Nedelsky procedure could be stated in any of several seemingly reasonable ways. A central issue would be the appropriate referent for a “minimally competent examinee.” An example using the Army military occupational specialty (MOS) 95B (military police) should clarify the issue. Suppose that one tested task from this military occupational specialty was “restraining a suspect.” Should the judges being asked to recommend a standard for the test of knowledge of this task be asked to:

Think about a soldier who has just been admitted to MOS 95B who is borderline in his/her knowledge of restraining a suspect. Which options of each of the following test items should this soldier be able to eliminate as obviously incorrect?

Or should the judges be asked to:

Think about a soldier who has just been admitted to MOS 95B who is just borderline in his/her knowledge needed to function satisfactorily in that MOS. Which options of each of the following test items should this soldier be able to eliminate as obviously incorrect?

The difference here is in the referent population. One is task-specific and the other refers to the entire military occupational specialty. Either choice could be supported through logical argument. Since the test is task-specific, the task-delimited population is consistent with Nedelsky's specifications. However, the tested task is one of many that could have been sampled from those that compose the military occupational specialty and the domain of generalization of fundamental interest is the military occupational specialty rather than the sampled task. Perhaps the stimulus question should be constructed on practical rather than purely logical grounds. Judges could be asked whether it is easier to conceptualize a minimally competent soldier who has just been admitted to the military occupational specialty or a soldier who was minimally competent in performing the task being tested.

Some experiments could be conducted to determine the referent population that produced the smallest variation in recommended test standards. Mean recommended standards resulting from use of the two referent populations could be compared to determine whether they differed and which appeared to be more reasonable.

The standard-setting procedures proposed by Angoff, Ebel, and Jaeger can be used with any test that is composed of items or activities that are scored dichotomously. Since all of the military job performance measures we have reviewed are of this form, any of these standard-setting procedures could be used with these tests.

Like the Nedelsky procedure, both the Angoff and Ebel procedures require that judges define a minimally competent examinee. The issue of an appropriate referent population, discussed in the context of the Nedelsky procedure, would therefore be of concern with these procedures as well. Once the question of an appropriate referent population was settled, adaptation of the Angoff procedure to military job performance tests with dichotomously scored components would appear to be straightforward. For example, when used with the performance component of a criterion test for military occupational specialty 95B, the stimulus question might be:

Think about 100 soldiers who have just been admitted to MOS 95B who are borderline in their ability to restrain a suspect. What percentage of these 100 soldiers would position the suspect correctly when applying handcuffs?

Similar questions could be formed for each tested activity in the “restraining a suspect” task.
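Responses to questions of this kind are conventionally aggregated by summing each judge's estimated proportions across activities and then averaging those sums across judges. The Python sketch below assumes that conventional Angoff aggregation; the function name and the percentages are hypothetical.

```python
from statistics import mean

def angoff_standard(judgments):
    """Aggregate Angoff-style judgments into a recommended standard.

    judgments holds one list per judge; each entry is the percentage
    (0 to 100) of borderline soldiers expected to perform an activity
    correctly. Returns (mean standard, per-judge standards), expressed
    as a number of activities performed correctly.
    """
    per_judge = [sum(p / 100.0 for p in judge) for judge in judgments]
    return mean(per_judge), per_judge

# Hypothetical percentages from three judges for four activities
judgments = [
    [60, 70, 50, 80],
    [50, 60, 40, 70],
    [70, 80, 60, 90],
]

standard, per_judge = angoff_standard(judgments)
print(round(standard, 2), [round(x, 2) for x in per_judge])
```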

Ebel's procedure might not be applicable to military job performance tests for several practical reasons. The procedure presumes that the “items” that compose a test are unidimensional but stratifiable on dimensions of difficulty and relevance. Many of the military job performance measures we have reviewed contain very few activities or items, so that stratification of items might not be possible. Asking a judge “What percentage of the items on this test should a minimally competent examinee be able to answer correctly?” is tantamount to asking “Where should the test standard be set?” Without stratification of items into relatively homogeneous clusters, Ebel's method is unlikely to yield stable results. Theoretically, Ebel's method could be applied to an overall job performance test to yield a standard for an entire military occupational specialty rather than a single task. However, several assumptions inherent in the method would then be highly questionable. The most obvious basis for stratification of items or activities on the test would be by task, but it is not likely that the activities or items used to assess an examinee's performance of a single task are homogeneous in relevance or difficulty. Also, the relative lengths of subtests that assess an examinee's performance of different tasks are probably a consequence of the level of detail contained in the military procedures manual that describes the tasks, rather than the relative importance of the tasks. Since Ebel's method weights item strata in proportion to their size, a task that contained a larger number of activities would receive more weight in determining an overall test standard than would a task that contained fewer activities, regardless of the relative importance of the two tasks. In computing an overall test standard, Ebel's procedure has no provision for weighting item strata by importance or by any other judgmental consideration.
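The effect of size-proportional weighting can be seen in a small Python sketch. It assumes that the overall standard is the item-count-weighted average of the judged proportions for each stratum, with tasks serving as strata; the function names, item counts, and proportions are hypothetical.

```python
def size_weighted_standard(strata):
    """Overall standard when each stratum is weighted by its item count.

    strata is a list of (n_items, judged_proportion_correct) pairs.
    """
    total_items = sum(n for n, _ in strata)
    return sum(n * p for n, p in strata) / total_items

def equally_weighted_standard(strata):
    """Comparison value: every stratum counts the same, whatever its length."""
    return sum(p for _, p in strata) / len(strata)

# Hypothetical test: a 20-activity task and a 5-activity task
strata = [(20, 0.90), (5, 0.50)]

by_size = size_weighted_standard(strata)
by_task = equally_weighted_standard(strata)
print(round(by_size, 2), round(by_task, 2))
```

Under these illustrative numbers, the longer task dominates the size-weighted result even if the two tasks were judged equally important.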

From a purely mechanical standpoint, Jaeger's standard-setting procedure could be used readily with either the pencil-and-paper or performance components of military job performance tests. Since it does not require judges to conceptualize a minimally competent examinee, the problem of defining an appropriate referent population, a central issue with the other item-based standard-setting procedures, would not arise with Jaeger's procedure. However, if the empirical results observed in civilian public-school settings are also found in the military context, Jaeger's procedure might yield test standards that are unacceptably high. If standards are established for tests of each sampled task, this problem is likely to be greatly exacerbated. The principal advantage of Nedelsky's procedure, illustrated above, might well be the principal disadvantage of Jaeger's procedure, since stringent test standards for each task would translate to an impossibly stringent standard for admission to a military occupational specialty.

When using Jaeger's procedure, judges might be asked the following question for each activity in a test designed to assess performance on a designated task: “Should every enlistee who is accepted for this MOS be able to perform this activity?” On the tests we have reviewed, it appears that the activities listed closely mirror descriptions of standard practice as specified in applicable military procedures manuals. If judges based their recommendations on “the book,” they would likely answer questions about most, if not all, activities affirmatively, thus resulting in impossibly high test standards.

Our expectation, then, is that Jaeger's procedure could be adapted to military job performance tests quite readily, but would likely yield test standards that were impractically high. This expectation should not preclude small-scale empirical investigations of the procedure.

Procedures That Require Judgments About Examinees

When considered for setting standards on military job performance tests, both the borderline-group procedure and the contrasting-groups procedure offer appealing characteristics and features. These procedures can be used with tests composed of any type of test item, regardless of item scoring, as long as the tests assess some unidimensional variable and yield a total score. Although the small sample of military job performance tests we have reviewed contained tasks that were made up of discrete units that could be treated as “items,” some performance tests might not be assembled in this way, thus rendering the item-based standard-setting procedures inapplicable. For example, a test concerned with operation of a simple weapon might be scored on the basis of time to effect firing or accuracy of results. If the variable representing “success” can be scored continuously, the borderline-group or contrasting-groups standard-setting procedures could be used to determine a standard of performance, even though the item-based procedures could not.

A second advantage of the standard-setting procedures that require judgments about examinees is that the types of judgments required are probably similar to those made routinely by military supervisors, both in training schools and active units. In fact, somewhat similar judgments were requested of military job experts in the study published by the U.S. Army Research Institute for the Behavioral and Social Sciences (1984). Appendix A of the Army report contains a scale for assessing the abilities of soldiers to perform various tasks associated with specified military occupational specialties.

Despite their advantages, the borderline-group and contrasting-groups standard-setting procedures present several operational problems that might be difficult to overcome. Both procedures require classification of examinees into groups labeled unacceptable, borderline, and acceptable, and subsequent testing of persons in at least one of these groups. When discussing the item-based standard-setting procedures, we suggested that the appropriate referent population of “minimally competent examinees” was not obvious. In a somewhat different form, the same problem must be dealt with for the person-based procedures: Should judges be asked to classify examinees as “unacceptable,” “borderline,” or “acceptable” in the skills defined by the task cluster represented by the test or in all skills needed to function within a military occupational specialty? Since a standard is likely to be desired for a test that is restricted to a single task cluster, one could argue that the appropriate referent population is obvious. On the other hand, eliminating personnel who cannot meet all the demands of a military occupational specialty is the ultimate goal.

A second, and perhaps more serious, problem is obtaining judgments and test data on examinees who are “unacceptable.” Under current military classification procedures, such persons would rarely be assigned to active duty in a military occupational specialty. First, potentially unacceptable enlistees are screened out on the basis of their ASVAB performances. Few such persons are assigned to service schools that provide the training necessary to enter a military occupational specialty for which they are “unacceptable.” Second, since success in an appropriate service school is prerequisite to assignment to active duty in a military occupational specialty and screening takes place prior to graduation from military service schools, the population of potentially unacceptable personnel assigned to active duty in a military occupational specialty is further reduced. The contrasting-groups procedure requires the identification of personnel who are “acceptable” and “unacceptable” in the skills assessed by the test for which a standard is sought. The number of “unacceptable” examinees must be sufficiently large to obtain a stable distribution of test scores. If very few “unacceptable” persons are admitted to a military occupational specialty, obtaining a sufficient number of nominations might not be possible. Recall that classification of examinees to the “unacceptable,” “borderline,” and “acceptable” groups must be based on information other than scores on the tests for which standards are sought. In the present context, that information would have to consist of observations of on-the-job performance of enlistees early in their initial tours of duty in a military occupational specialty. Again, to the extent that current military classification systems are effective, the number of “unacceptable” enlistees will be very small.

Operational Questions and Issues

Regardless of the procedure used to establish standards on military job performance tests, a set of common operational issues must be considered. Since judgments are required, one or more appropriate populations of judges must be identified. The numbers of judges to be sampled from each population must be specified. The stimulus materials used to elicit judgments must be developed. The substance and process of training judges must be specified and developed. The information to be provided judges, both prior to and during the judgment process, must be specified. A decision must be reached on handling measurement error within the process of computing a standard, and, if measurement error is to be considered, an algorithm for doing so must be developed. We will discuss each of these issues briefly.

Types of Judges to be Used

All of the item-based standard-setting procedures (with the possible exception of Jaeger's procedure) require judges to be knowledgeable about the distribution of ability of examinees on the skills assessed by the test and about the distributions of performance of examinees on each item contained in the test. Judges used with these procedures should therefore have experience in observing and working with the examinee population, either in a service-school setting or, preferably, in the actual working environment of the military occupational specialty for which the test is a criterion. Judges used with the person-based standard-setting procedures must meet even more stringent criteria. Since they must classify individual examinees, they must be knowledgeable about the abilities of these individuals to perform specific tasks in the actual work settings of the military occupational specialty.

These requirements suggest that either instructional personnel in appropriate military service schools or immediate unit supervisors (such as non-commissioned officers) in military occupational specialty field units could serve as judges for the item-based standard-setting procedures, but only the latter personnel would be suitable as judges for the examinee-based standard-setting procedures.

Numbers of Judges to be Used

In any standard-setting procedure, the numbers of judges to be used should be determined by considering the probable magnitude of the standard error of the recommended test standard as a function of sample size. Since in all of the standard-setting procedures described in this paper, the recommendations of individual judges are derived more or less independently and are aggregated only at the point of computing a final test standard, the standard error of that recommendation will vary inversely as the square root of the size of the sample of judges.

Ideally, the size of the sample of judges would be sufficient to reduce the standard error of the recommended test standard to less than half a raw score point on the test for which the standard was desired. In that case, assuming that the recommended test standard varied normally across samples of judges, the probability that an examinee whose test score was equal to the test standard recommended by a population of judges would pass or fail the test, just due to sampling of judges, would be no more than 0.05.
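The inverse-square-root relationship gives a direct way to estimate how many judges would be needed to reach a target standard error. The Python sketch below assumes that the standard deviation of individual judges' recommended standards is known or has been estimated from a pilot sample; the function names and the value of 2.4 raw score points are hypothetical.

```python
import math

def standard_error_of_standard(judge_sd, n_judges):
    """Standard error of the mean recommended standard for n independent judges."""
    return judge_sd / math.sqrt(n_judges)

def judges_needed(judge_sd, target_se):
    """Smallest number of judges whose standard error does not exceed target_se."""
    return math.ceil((judge_sd / target_se) ** 2)

# Hypothetical spread of 2.4 raw score points among judges' recommendations
n = judges_needed(2.4, 0.5)
se = standard_error_of_standard(2.4, n)
print(n, round(se, 3))
```

Because the required sample size grows with the square of the ratio, halving the target standard error quadruples the number of judges needed.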

In practice, the size of a sample of judges needed to reduce the standard error of the recommended test standard to the ideal point might be prohibitively expensive or otherwise infeasible to obtain. An alternative criterion for sample size might be based on the relative magnitudes of the standard error of the recommended test standard and the standard error of measurement of the test for which a standard was desired. Since these sources of error are independent, their variances are additive, and it is possible to determine the contribution of sampling error to the overall error in establishing a test standard. For example, if the standard error of the mean test standard was half the magnitude of the standard error of measurement of the test, the variance error of the mean would be only one-fourth the variance error of measurement, and the overall standard error would be increased by a factor of $(1.25)^{1/2} = 1.12$, or 12 percent. Alternatively, if the standard error of the mean test standard was one-tenth the magnitude of the standard error of measurement of the test, the variance error of the mean would be only one one-hundredth the variance error of measurement, and the overall standard error would be increased by a factor of $(1.01)^{1/2} = 1.005$, or 0.5 percent.
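Both worked figures follow from adding the two error variances. The short Python sketch below reproduces them; the function name is hypothetical, and the ratio argument is the standard error of the mean test standard divided by the standard error of measurement.

```python
import math

def error_inflation(ratio):
    """Factor by which overall standard error exceeds the standard error of measurement.

    With independent error sources the variances add, so the combined
    standard error is sem * sqrt(1 + ratio**2).
    """
    return math.sqrt(1.0 + ratio ** 2)

half = error_inflation(0.5)    # standard error of the mean is half the SEM
tenth = error_inflation(0.1)   # standard error of the mean is one-tenth the SEM
print(round(half, 2), round(tenth, 3))
```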

Empirical work by Cross et al. (1984) and Jaeger and Busch (1984) showed that, for the subtests of the National Teacher Examinations, relative magnitudes of standard errors of the mean and measurement closer to the latter example than the former were realized with samples of 20 to 30 judges. A modified, iterative form of Angoff's standard-setting procedure was used in each of these studies.

Stimulus Materials to be Used in Setting Standards

The specific stimulus materials to be used in a standard-setting procedure must, of course, be based on the steps involved in conducting that procedure. However, it is essential that the materials be explicit and standardized. All judges must engage in the same standard-setting process, must be fully informed about the types of judgments required of them, and must be privy to the same types of information given all other judges. Experience has shown that judges should be given written as well as standardized oral instructions on the purposes for which their judgments are sought, the types of judgments they are to make, and the exact procedures they are to follow.

The questions judges are asked to answer must be developed with caution and care. For example, in the Angoff standard-setting procedure, judges are asked to estimate the probability that a “minimally competent” examinee would be able to answer each test item correctly. Different responses should be expected, depending on whether judges are asked whether examinees “would be able to answer test items correctly” or “should be able to answer test items correctly.” The first question requires a prediction of actual examinee behavior, while the second one requires a statement of desired examinee behavior. Another subtle, but important, distinction in the Angoff procedure concerns the issue of guessing. Since the Angoff procedure is frequently applied to multiple-choice items, examinees might well answer a test item correctly by guessing. Judges will likely estimate different probabilities of actual examinee behavior, depending on whether they are told to consider guessing, to ignore the possibility of guessing, or are given no instructions about guessing. The latter procedure is the least desirable, since consideration of guessing on the part of examinees becomes a source of error variance in the responses of judges.

This example illustrates one of many details that must be addressed if the stimulus materials used in a standard-setting procedure are to function correctly. The goal in developing stimulus materials should be to minimize the variance of recommendations across judges due to factors other than true differences in their judgment of an appropriate test standard.

Training of Judges

Obviously, if judges are to provide considered and thoughtful recommendations on standards for military job performance tests, they must understand their tasks clearly and completely. Judges will have to be trained to do their jobs if these ends are to be achieved.

Although the specifics of a training program for judges must depend on the standard-setting procedure used, some common elements can be identified. First, judges must thoroughly understand the test for which standards are desired. One effective way to meet this need is to have judges complete the test themselves under conditions that approximate an operational test administration. This training technique has been used successfully in setting standards on high school competency tests (Jaeger, 1982) and knowledge tests for beginning teachers (Cross et al., 1984; Jaeger and Busch, 1984).

Second, judges must understand the sequence of operations they are to carry out in providing their recommendations. Since in some standard-setting procedures a single set of judgments is elicited, the procedures are likely to be straightforward and easily learned through a single instructional session followed by a period for answering questions. However, other standard-setting procedures are iterative and require judges to provide several sets of recommendations. In these cases, a simulation of the judgment process might be necessary to ensure that judges know what is expected of them. Jaeger and Busch (1984) used such a simulation, together with a small, simulated version of the test for which standards were desired, in a three-stage standard-setting procedure. Following the actual standard-setting exercise, almost all judges reported that they fully understood what they were to do.

Third, in some standard-setting procedures, judges are provided with normative data on the test performances of examinees. Typically, these data are provided in graphical or tabular form, and since the types of graphs or tables used might not be familiar to them, judges might require instruction on their interpretation. For example, in modified versions of the Angoff and Jaeger standard-setting procedures, judges are shown a “p-value” for each item on the test. It should not be assumed that judges will know that these numbers represent the proportions of examinees who answered each test item correctly when the test was last administered. Normative data on examinees' test performances have also been provided in the form of an ogive (cumulative distribution function graph). It is not reasonable to assume that all judges will know how to read and interpret such graphs without specific training.
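The two kinds of normative data mentioned above can be illustrated with a minimal sketch. The response matrix below is hypothetical, and the function names are introduced here only for illustration; the sketch simply shows what an item p-value and an ogive summarize.

```python
from typing import List, Tuple


def item_p_values(responses: List[List[int]]) -> List[float]:
    """Proportion of examinees who answered each item correctly.

    responses[i][j] is 1 if examinee i answered item j correctly, else 0.
    """
    n_examinees = len(responses)
    n_items = len(responses[0])
    return [sum(row[j] for row in responses) / n_examinees
            for j in range(n_items)]


def ogive(total_scores: List[int]) -> List[Tuple[int, float]]:
    """Cumulative proportion of examinees scoring at or below each observed score."""
    n = len(total_scores)
    points = []
    for score in sorted(set(total_scores)):
        at_or_below = sum(1 for t in total_scores if t <= score)
        points.append((score, at_or_below / n))
    return points


# Hypothetical data: 4 examinees, 3 dichotomously scored items.
responses = [
    [1, 1, 0],
    [1, 0, 0],
    [1, 1, 1],
    [0, 1, 0],
]
p_values = item_p_values(responses)
totals = [sum(row) for row in responses]
curve = ogive(totals)
```

Each pair in `curve` gives a total score and the proportion of examinees at or below it, which is the information a judge reads from the graph.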

The overall objective of training should be to ensure that all judges are responding to the same set of questions on the basis of accurate and common understanding of their judgment tasks.

Information to be Provided to Judges

Citing both logical and empirical grounds, several researchers (Glass, 1978; Poggio et al., 1981) have questioned the abilities of judges to make sensible recommendations of the sort required by most item-based standard-setting procedures. In support of their contentions, these authors cite a number of studies in which recommended test standards would have resulted in outlandish examinee failure rates. For example, Educational Testing Service's study to determine standards for the National Teacher Examinations in North Carolina produced recommendations that would have resulted in denial of teacher certification to half the graduates of the state's accredited and approved teacher education programs.

For some military and civilian occupations one could reasonably argue that examinee failure rates are irrelevant to decisions on an appropriate test standard. For example, brain surgeons, jet engine mechanics, and pilots must be able to perform well all tasks that are essential to their jobs. For these types of positions, it is clearly more damaging to employ less than fully competent persons than to have unfilled positions. But for other, less critical jobs, or where on-the-job training might reasonably be used to compensate for marginal qualification at the time an applicant is hired, one could argue that judges' recommendations for test standards should be based on a realistic assessment of the capabilities of the examinees to whom the test will be administered, as well as the requirements of the job itself.

In such situations, it has been recommended that judges be provided with normative information on examinees' test performances to enable them to evaluate the consequences of their proposed test standards. As mentioned in the preceding section, several iterative standard-setting procedures provide judges with item p-values as well as cumulative distributions of the total scores earned by a representative sample of examinees during the most recent administration of the test. Studies have shown that judges use such information when formulating their recommendations (Jaeger, 1982) and that the predominant effect of such data is to reduce the variability of judges' recommendations (Cross et al., 1984; Jaeger and Busch, 1984).
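One consequence judges might wish to examine is the failure rate implied by a proposed standard. The sketch below assumes that examinees scoring below the standard fail; the total scores are hypothetical and the function name is illustrative only.

```python
from typing import List


def failure_rate(total_scores: List[float], standard: float) -> float:
    """Proportion of examinees whose total score falls below the proposed standard."""
    return sum(1 for s in total_scores if s < standard) / len(total_scores)


# Hypothetical total scores from a recent test administration.
scores = [12, 15, 18, 20, 22, 25, 27, 30]

# Projected failure rates for three candidate standards.
projected = {standard: failure_rate(scores, standard) for standard in (16, 21, 26)}
```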

The principal logical argument in support of such use of normative test data is that judges who are well informed on the capabilities of the examinees to whom the test will be administered are likely to provide more reasoned (and therefore better) recommendations on appropriate test standards. That normative test data also appear to reduce the variability of judges' recommended test standards, thereby increasing the reliability of their recommendations, is a serendipitous finding that adds nothing to the logical argument.

Another type of information judges might be provided during an iterative standard-setting procedure is a summary of the recommendations of their fellow judges. A large body of social psychological literature, dating from the work of Sherif (1947), suggests that most persons are influenced by the judgments of their peers in decision situations. The manner in which information on the judgments of peers is provided has a crucial influence on the outcome of the judgment process. Summary data in the absence of justification might induce a shift in judgment toward the central tendency of the group, thereby reducing variability, but are unlikely to result in better informed, and hence more reasoned, judgments. A more defensible procedure would allow judges to state and justify the reasons for their recommendations. If this procedure is followed, it is essential that it be carefully controlled to avoid domination by one or a few judges, and to ensure that a full spectrum of judgments is explained.

Measurement Error

Errors of measurement on tests typically are assumed to be normally distributed (Gulliksen, 1950; Lord and Novick, 1968). Based on this assumption, a person whose true level of ability was equal to the standard established for a test would have a 50 percent chance of earning an observed score that fell below the standard, just due to errors of measurement. A person whose true level of ability was one standard error of measurement above the standard would still have a 16 percent chance of earning an observed score that fell below the standard.
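The two percentages above follow directly from the normal distribution. As a minimal sketch, assuming normally distributed errors with a standard deviation equal to the standard error of measurement (the numerical scores are hypothetical):

```python
import math


def normal_cdf(z: float) -> float:
    """Standard normal cumulative distribution function."""
    return 0.5 * (1.0 + math.erf(z / math.sqrt(2.0)))


def prob_observed_below_standard(true_score: float, standard: float, sem: float) -> float:
    """Chance that an observed score falls below the standard, given the true score."""
    return normal_cdf((standard - true_score) / sem)


# Hypothetical standard of 70 with a standard error of measurement of 5.
p_at_standard = prob_observed_below_standard(70.0, 70.0, 5.0)     # true score at the standard
p_one_sem_above = prob_observed_below_standard(75.0, 70.0, 5.0)   # one SEM above the standard
```

Here `p_at_standard` is 0.5 and `p_one_sem_above` is approximately 0.16, matching the figures in the text.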

To protect against the possibility of failing an examinee as a result of measurement error, several researchers have proposed that initial test standards be adjusted downward by some multiple of the standard error of measurement of the test for which a standard is desired. As described above, Nedelsky (1954) recommended such an adjustment as an integral part of his standard-setting procedure. Unfortunately, he falsely assumed that the standard error of measurement of a test would be well approximated by the standard deviation of judges' recommended standards and based his adjustment on the latter value.

It is unnecessary to adopt Nedelsky's assumption. In most standard-setting situations, the standard error of measurement of the test can be estimated through an internal-consistency reliability estimation procedure, if by no other means, and the recommended test standard can be adjusted accordingly.
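A minimal sketch of such an adjustment follows. It assumes coefficient alpha as the internal-consistency estimate (the text does not name a particular coefficient) and uses the usual relation in which the standard error of measurement equals the total-score standard deviation times the square root of one minus the reliability. The item scores, the initial standard, and the multiple are hypothetical.

```python
import math
from statistics import pvariance
from typing import List


def cronbach_alpha(item_scores: List[List[float]]) -> float:
    """Coefficient alpha; item_scores[i][j] is examinee i's score on item j."""
    k = len(item_scores[0])
    item_vars = [pvariance([row[j] for row in item_scores]) for j in range(k)]
    total_var = pvariance([sum(row) for row in item_scores])
    return (k / (k - 1)) * (1.0 - sum(item_vars) / total_var)


def standard_error_of_measurement(item_scores: List[List[float]]) -> float:
    """SEM = SD of total scores * sqrt(1 - reliability).

    Assumes the reliability estimate lies between 0 and 1.
    """
    total_sd = math.sqrt(pvariance([sum(row) for row in item_scores]))
    return total_sd * math.sqrt(1.0 - cronbach_alpha(item_scores))


def adjusted_standard(standard: float, sem: float, multiple: float = 1.0) -> float:
    """Lower the initial standard by a chosen multiple of the SEM."""
    return standard - multiple * sem


# Hypothetical data: 5 examinees, 4 dichotomously scored items.
item_scores = [
    [1, 1, 1, 1],
    [1, 1, 1, 0],
    [1, 1, 0, 0],
    [1, 0, 0, 0],
    [0, 0, 0, 0],
]
alpha = cronbach_alpha(item_scores)
sem = standard_error_of_measurement(item_scores)
new_standard = adjusted_standard(3.0, sem, multiple=1.0)
```

The choice of multiple, and whether the adjustment should be downward at all, remains a matter of judgment.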

Whether a test standard should be adjusted to compensate for errors of measurement is an arguable point, and some might even suggest that the proper adjustment is upward, rather than downward. At issue in the current application are the relative merits of protecting enlistees, or the military, from the consequences of failing a competent examinee or passing an incompetent one. It is likely that such consequences will vary substantially across tasks and military occupational specialties.

DEFINITION AND CONSTRUCTION OF VALUE FUNCTIONS

The problems discussed in this section concern the establishment of functions that assign value (or worth) to different levels of proficiency in completing various military occupational specialty tasks, and the use of these value functions in assessing the overall worth of an enlistee in a specific military occupational specialty. A method is proposed for defining a value function for a given task. The use of task value functions to establish an overall military occupational specialty value function is then demonstrated. The discussion focuses particularly on hands-on job sample testing.

As in all evaluation processes, judgments must be made. In this situation judgments are needed on the value or worth of task performance levels. Methods for eliciting these judgments are discussed, as are operational issues that are common to several methods.

Defining Task Value Functions

Psychological decision theory (Zedeck and Cascio, 1984) and social behavior theory (Kaplan, 1975) would appear to lend some insights into the problem of establishing task value functions. Psychological decision theory evaluates an individual's (or institution's) decision making strategy by studying the behavior of the individual (or institution). It is in this somewhat circular way that individual (or institutional) behavior is assigned a value. Social behavior theory is not unlike psychological decision theory. In social behavior theory, the value of an individual's behavior is judged on the basis of existing information about that individual. In both of these theoretical frameworks, the behavior being evaluated is the ability to discriminate among proposed actions.

In carrying out their job tasks, enlistees are to behave in accordance with the specifications of a military manual. We assume that the type of behavior (or performance) of most interest in military job performance tests is enlistees' abilities to begin and carry through specific activities. If this is true, judges have less need to judge enlistees' abilities to discriminate among proposed actions than to evaluate their ability to begin a specific task and carry it through to some level of completion. The value assigned will likely depend on an enlistee's level of completion of the task and how accurately the enlistee adhered to the specifications that define the particular task.

One way to define task value functions would be to treat the problem as a multiattribute-utility measurement problem (Gardiner and Edwards, 1975). In this setting, each “dimension” of each task performance would be evaluated separately. The dimensions of a task would be defined as the set of measurable behaviors (hereafter called a “performance set”) being used to assess enlistees' proficiencies in performing the task. Based on the job performance tests we have reviewed, task performance sets might contain such dimensions as knowledge (measured by a pencil-and-paper test), speed (measured by time taken to complete a hands-on test), and fidelity (measured by the total number of successes on sequences of dichotomously scored hands-on test activities). The same performance set would be used in determining minimally acceptable performance levels when establishing job performance test standards. A task value function would be defined as a weighted average of the values assigned to each of the dimensions in the performance set.

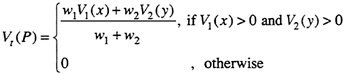

Consider an example: Suppose the task to be assessed is an enlistee's proficiency at putting on a field or pressure dressing. Dimensions of this task could be defined as “pressure administered properly” (measured by the total number of successfully completed activities in a “hands-on” job proficiency test) and “time elapsed before pressure administered” (measured by time taken to complete this portion of the job proficiency test). Judgments of the values of varying levels of performance on these two dimensions would have to be elicited. Assuming these judgments could be secured, the value functions for these dimensions might be similar to those in Figure 1. If these were the only dimensions, the value function for this task, Vt, would be a weighted average of the value functions for these dimensions. Writing V1 and V2 for the value functions of the time and pressure dimensions, respectively, this is, mathematically,

$$V_t(P) = w_1 V_1(x) + w_2 V_2(y),$$

where P is a set of performance scores on the dimensions in the performance set. In this example the set P contains the time, x, elapsed before pressure was administered and the performance level (or consistency with specified procedures), y, of administering pressure. The weights w1 and w2 would be determined by assessing the relative importance of the two dimensions.
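The weighted average for the field-dressing example can be sketched as follows, taking the task value to be w1 times the value of the elapsed time x plus w2 times the value of the pressure score y. The two dimension value functions, their cut points, and the weights are hypothetical placeholders; in practice they would come from elicited judgments, and linear segments are used only for simplicity.

```python
def value_time(x: float, best_time: float = 30.0, max_time: float = 120.0) -> float:
    """Hypothetical value of elapsed time x (seconds): shorter times are worth more."""
    if x <= best_time:
        return 1.0
    if x >= max_time:
        return 0.0
    return (max_time - x) / (max_time - best_time)


def value_pressure(y: float, min_steps: float = 4.0, max_steps: float = 8.0) -> float:
    """Hypothetical value of y, the number of correctly completed steps."""
    if y <= min_steps:
        return 0.0
    if y >= max_steps:
        return 1.0
    return (y - min_steps) / (max_steps - min_steps)


def task_value(x: float, y: float, w1: float, w2: float) -> float:
    """Weighted average of the two dimension values; weights are assumed to sum to 1."""
    if abs(w1 + w2 - 1.0) > 1e-9:
        raise ValueError("weights must sum to 1")
    return w1 * value_time(x) + w2 * value_pressure(y)


# Hypothetical enlistee: 75 seconds elapsed, 7 of 8 steps completed correctly.
v = task_value(x=75.0, y=7.0, w1=0.4, w2=0.6)
```

With these placeholder inputs the time dimension contributes 0.4 × 0.5 and the pressure dimension 0.6 × 0.75, for a task value of about 0.65.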

Value functions for the dimensions of a task could be constructed to range between 0 and 1. Performance-test score distributions could be used to determine realistic maximum performance levels, which would be assigned values equal to 1. Performance scores below minimally acceptable levels would be assigned values equal to 0. The general location and spread of the distributions of performance scores could help define the rates of increase or decrease of value functions.

FIGURE 1 Sample value functions.

There is no reason to believe that value functions would be linear. Intuitively, it would seem that small deviations from minimally acceptable performance levels would result in large changes in value, whereas at some higher levels of performance value functions would change more gradually. The actual shapes of value functions would be determined from the judgments elicited on the value (or worth) of different levels of performance (or proficiency) on the dimensions in performance sets.
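One simple way to represent a nonlinear value function is to interpolate between a small number of judged anchor points. The sketch below assumes that judges have supplied values for a few performance levels; the anchor points are hypothetical and are chosen only to show value rising steeply just above the minimum and more gradually thereafter.

```python
from typing import List, Tuple


def value_from_judgments(score: float, anchors: List[Tuple[float, float]]) -> float:
    """Piecewise-linear value function through judged (score, value) anchor points.

    anchors must be sorted by score; scores outside the anchored range take
    the value of the nearest anchor.
    """
    if score <= anchors[0][0]:
        return anchors[0][1]
    if score >= anchors[-1][0]:
        return anchors[-1][1]
    for (s0, v0), (s1, v1) in zip(anchors, anchors[1:]):
        if s0 <= score <= s1:
            return v0 + (score - s0) / (s1 - s0) * (v1 - v0)
    raise ValueError("anchors must be sorted by score")


# Hypothetical elicited judgments: minimally acceptable score 4, realistic maximum 8.
anchors = [(4.0, 0.0), (5.0, 0.5), (6.0, 0.8), (8.0, 1.0)]

near_minimum = value_from_judgments(5.5, anchors)
near_maximum = value_from_judgments(7.0, anchors)
```

Other functional forms could serve equally well; the shape should follow the elicited judgments rather than the convenience of the formula.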

Structuring Cluster and Military Occupational Specialty Value Functions

A review of the methods used by the Services in choosing the tasks to be included in performance tests indicates that they all used very similar strategies (Morsch et al., 1961; Goody, 1976; Raimsey-Klee, 1981; Burtch et al., 1982; U.S. Army Research Institute for the Behavioral and Social Sciences, 1984). First, each military occupational specialty was partitioned into nonoverlapping clusters, each of which contained similar or related tasks. Judgments were made on the frequency with which each task is performed, on the relative difficulty of each task compared to others in its cluster and the military occupational specialty, and on the relative importance of each task, compared to others in its cluster and the military occupational specialty. Some clusters of tasks appeared in several military occupational specialties and others appeared in only one military occupational specialty. A representative (judgment-based) sample of tasks from each cluster was chosen for the performance test. Based on face validity, it was determined that performance on the tasks sampled from a cluster could be considered to be generalizable to performance on all tasks in the cluster.

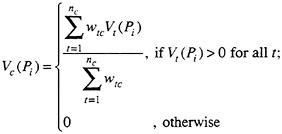

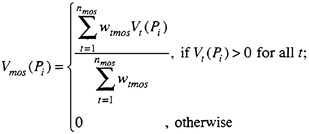

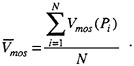

If enlistees' performances on the tasks sampled from a cluster are generalizable, the value of their performances on all tasks in that cluster can be computed from a weighted average of the value functions of the individual tasks sampled. The weights should reflect the already determined relative importance of the tasks sampled from the cluster. Note that, with the problem structured in this way, those who make task value judgments need only consider the frequency with which each task is performed and the difficulty of the task. The importance of each task will be reflected in the weights used to compute cluster value functions.
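The cluster computation described above can be sketched as an importance-weighted average of task values. The task names, task values, and importance ratings below are hypothetical, and the ratings are normalized so that the weights sum to 1.

```python
from typing import Dict


def cluster_value(task_values: Dict[str, float], importance: Dict[str, float]) -> float:
    """Weighted average of task values, weighted by relative task importance."""
    total_importance = sum(importance[task] for task in task_values)
    return sum(importance[task] * task_values[task]
               for task in task_values) / total_importance


# Hypothetical tasks sampled from one cluster, with values already computed
# from the task value functions and importance ratings from earlier judgments.
task_values = {
    "apply_field_dressing": 0.65,
    "splint_fracture": 0.80,
    "treat_for_shock": 0.50,
}
importance = {
    "apply_field_dressing": 3.0,
    "splint_fracture": 2.0,
    "treat_for_shock": 1.0,
}
v_cluster = cluster_value(task_values, importance)
```

With these placeholder inputs the cluster value is (3 × 0.65 + 2 × 0.80 + 1 × 0.50) / 6, or about 0.675.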