A1

INTRODUCTION

In March 1990, the FAA Technical Center established a task force of independent consultants1 to undertake testing and evaluation of the Thermal Neutron Analysis (TNA) technology as implemented by SAIC at Kennedy International Airport in New York (JFK). Based on the results of that work, the task force was then asked to prepare a protocol for the conduct of operational testing of explosive detection devices (EDD) or systems (EDS) for checked or carry-on airline baggage (bags, containers, etc). The FAA also intended to use the protocol in the certification of bulk explosive detection systems.

This version of the protocol has been developed in consultation with the National Research Council's Committee on Commercial Aviation Security. The committee has differentiated between those testing aspects that pertain to certification and those that pertain to verification testing; added to the discussion regarding the use and composition of a standard set of baggage for certification testing; clearly recommended an FAA-dedicated test site for certification testing; required that deviations from the protocol, and the rationale for them, be documented; provided for revisions to the protocol as additional testing experience is gained; added some discussion on testing with countermeasures; and required analysis of false alarm data for verification testing.

This protocol is specific to testing of production hardware, as opposed to developmental brass/bread board models or prototype versions of the equipment. This protocol is applicable to:

- the testing of devices or systems that are automated (i.e., no human intervention used for the detection process);
- test methods that do not change the characteristics of the item as a result of the test;
- test methods that detect explosives and explosive devices via bulk properties (e.g., vapor detection devices are excluded).

This protocol does not provide sufficiently detailed plans and procedures to allow the testing and evaluation of a specific hardware device or system. However, the protocol provides the guidelines and framework for planning more detailed test procedures.

As experience is gained in the testing of equipment, this protocol should be updated annually to incorporate additional or modified guidance.

For each application, the FAA will establish the specific threat package (including size, shape, amount and type of explosive) to be detected. Although there will only be one overall threat package, one could envision (in a long range plan) that technologies could be appropriate for, or apply to, a subset of the threat package but not the total package. The FAA will also provide a description of any likely countermeasures that the equipment should be tested against.

This protocol addresses two types of testing: Pass/Fail testing, required for certification testing; and parametric testing, used to obtain statistically-valid verification performance data. Table A1 summarizes the primary differences between these two types of tests.

TABLE A1. Certification Versus Verification Operational Testing

| | Test Outcome | Type Of Equipment | Test Location | Threat Package | Bag Population | Test Time |
|---|---|---|---|---|---|---|
| EDS Certification Testing | Pass/Fail | Low rate or full-scale production units | FAA Dedicated Site | Live Explosives, types and quantities specified in the FAA's EDS Requirements Specification | FAA Standard Set | Limited Duration |
| EDD Performance Verification Testing | Parametric Data on Functional Characteristics | Low rate or full-scale production units | FAA Dedicated Site, or Airport Environment | Live Explosives, or Simulants (at Airport Sites) | FAA Standard Set, or Actual Passenger Bags | Limited Duration, or Extended Duration (at Airport Site) |

In order for the FAA to make a decision on the operational functional characteristics of the device or system, the FAA must consider:

- the estimated probability of detecting explosives, p(d), as observed in the testing of the EDD/EDS;
- the estimated probability of false alarm, p(fa), as observed in the testing of the EDD/EDS;
- the estimated processing rate of the bags, r.

The FAA may also be interested in the trade-off between the two probabilities, p(d) and p(fa), especially for those approaches that can readily adjust detection thresholds (thus affecting these fractions).

Other factors that should be considered by the FAA, in determining the characteristics of the detection equipment, include:

- reliability/maintainability/availability,
- cost, initial and recurring; size; and weight,
- significant operational constraints (environment, manpower, etc.),
- bag processing time distribution, and
- as appropriate, false alarm types and causes.

The FAA will provide the test team, which will be responsible for generating the specific test plan, preparing and executing the test, analyzing and evaluating the test data, and preparing the report on the findings of the test. The FAA will also establish where and when the test will take place.

The test team should have a test director and be composed of experts in the technology being tested, test and evaluation planners, and analysts who can design the statistical plan and conduct the evaluation of the test results. An independent observer should also be a member of the test team. This observer should comment on all activities associated with the testing and evaluation of the EDD/EDS, including: adherence to the test plan, adequacy of the plan, perceived testing limitations, and potential sources of test bias.

All test baggage and test articles, the threat package (explosives or simulants), and personnel will be provided by the FAA.

Chapter A2 of this protocol provides some general requirements associated with the operational testing process. Chapter A3 addresses a set of issues that must be considered and specific requirements that must be fulfilled prior to the development of a detailed test and evaluation plan. In Chapter A4, specific aspects of the detailed plan are discussed. Chapter A5 deals with issues related to the conduct of the test, while Chapter A6 discusses the data analyses and evaluations of the test data. Finally, Annex I discusses how the FAA could establish a standard set of bags and explosives to conduct operational tests or certification tests at a dedicated FAA test site. Annex II contains a suggested approach for validating explosive simulants and Annex III contains a model testing scenario.

A2

GENERAL REQUIREMENTS

In order to develop a specific test plan, the test team must consider all of the factors that may: influence the conduct of the test; bias the measurements related to the detection equipment under test; and affect the results obtained from the test and/or the reliable interpretation of those results. The test team must establish the conditions under which the test is to be conducted and the characteristics (i.e., attributes or variables) of any bag as it is processed.

There are a number of steps or topics that must be considered before development of a final specific test plan for the equipment under test. These may be separated into two groups: general topics and specific topics. The general topics are discussed in this chapter, and the specific topics are covered in the next chapter. However, since this is a generic protocol for a variety of explosive detection devices or systems (using different technologies), there may be some additional factors that may need to be considered, and the discussion that follows should not preclude additional factors from being included in the final test plan if those factors are considered to be relevant by the test team.

It is assumed that prior to this point, the following activities have already occurred:

- The specific device or system presented to the FAA for testing would first have been tested by the manufacturer using, to the extent possible, the same protocols and performance criteria that the FAA will use. These procedures and results would then, in most cases, have been reviewed by the FAA for adequacy before the candidate device or system would be accepted for testing.
- The FAA would have appointed an independent test director and a test team to prepare a detailed test procedure from this general test protocol.
- The test team would be expected to conduct the test in accordance with the detailed test procedure.
- An agreement would be finalized with the equipment supplier regarding what results will be provided by the FAA after the testing is completed.

A. Explosive Characteristics to be Measured

After the specific equipment to be tested has been identified, the set of characteristics of the explosives that the equipment will measure for detection must be identified and specified. The physical principles employed by the instrument for detection will determine which physical characteristics of the explosive material are of interest. This determination is especially important when the use of explosives will be prohibited during the test of the equipment (for example, at airport facilities), so that the use of a simulant should be considered. For example, one of the primary characteristics of explosives measured by TNA devices is the nitrogen content. The test team must determine if simulants can be identified which will exactly mimic the characteristic nitrogen content for the equipment under test.

In addition, any countermeasure techniques to be included in the testing should be identified prior to the test initiation by the FAA, or by the test team in consultation with the FAA. Some technologies are relatively easy to countermeasure while others may be more difficult.

B. Identification of the Set of Threat Explosives

The set of threat explosives (type, shape, and weight) to be used in the testing must be specified by the FAA. For example, the FAA may require that testing must include x pounds of sheet explosive of RDX/PETN base (Semtex). The FAA must also specify the relative frequency of expected occurrence for each item in the set of threat explosives.

The FAA should identify the placement location for the explosives in the containers. During the course of testing, it may be observed that the instrument's response is sensitive to the placement location of the explosive in the bag. If this occurs, the FAA should consider additional testing with the threat in the most disadvantageous locations.

C. Identification of Potential Bag Populations

The test database should contain observations for the test bags of all the major characteristics that will be measured by the detection system being tested. This database will be used by the test team to select representative groups of test bags. The actual set of bags used for testing could be: (1) actual passenger bags; (2) bags fabricated by the FAA-appointed team; or (3) bags selected from the set of FAA "lost" bags. Bag selection is a crucial topic in designing a test plan that is fair and effective.

For certification testing of an EDS, the testing should be performed on a standard set of bags to provide a fair and consistent comparison against the EDS Standard. Annex I discusses how a standard set of bags could be established.

For non-certification testing, the goal will be the generation of parametric performance data. The test team must determine the characteristics of bags typical of those that will be processed at a designated airport. If the testing itself will not be conducted at the airport, data on actual passenger bags being processed at those facilities could be collected. If these data were collected over a sufficiently long period of time, the effect of seasonal and bag-destination differences on bag characteristics could be determined.

Additional information on bag selection techniques is contained in Chapter A3.

D. System Calibration and Threshold Settings

This protocol applies to devices or systems that are totally automatic in their response; i.e. operator independent. The manufacturer will not be allowed to change or modify the settings of the equipment once the test for a given bag population has been initiated. Thus prior to the start of the test, the manufacturer should be allowed to have access to the set of bag populations that will be used for the testing so that they can determine the associated response of the equipment to the characteristics, and thus calibrate and establish the threshold settings of the equipment. The manufacturer should provide the FAA with the complete calibration protocol.

The manufacturer should not be provided details regarding the relative frequency of threat occurrence and location of the threats in the bags, since the tests must be as blind as possible.

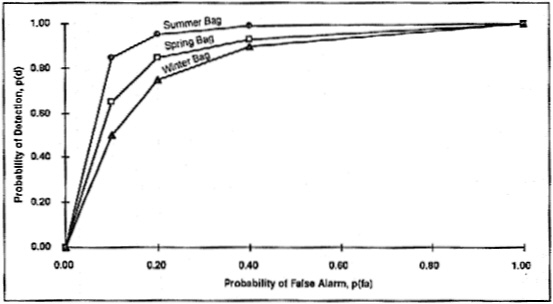

While the manufacturer is establishing the threshold settings, they should also be required to provide the FAA test team with the p(fa) versus p(d) relationship as a function of the threshold setting. This data should be made available prior to the testing unless the equipment can store the basic data so that, after the fact, the p(fa) versus p(d) curves for the different threshold settings could be reconstructed.
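Where the equipment stores its basic data, the after-the-fact reconstruction described above amounts to sweeping candidate thresholds over the stored values. The following is only a minimal sketch under stated assumptions: it assumes the stored data reduce to one scalar score per bag, that a higher score indicates a threat, and that the equipment alarms when the score meets or exceeds the threshold. The function name `pd_pfa_curve` and all numbers are hypothetical and are not taken from the protocol or from any specific device.

```python
def pd_pfa_curve(threat_scores, clean_scores, thresholds):
    """Return (threshold, p_d, p_fa) tuples, one per candidate threshold.

    Assumption (not from the protocol): each bag has one stored scalar
    score, and the equipment alarms when score >= threshold.
    """
    curve = []
    for t in thresholds:
        # Fraction of threat bags that alarm at this threshold.
        detections = sum(1 for s in threat_scores if s >= t)
        # Fraction of non-threat bags that alarm at this threshold.
        false_alarms = sum(1 for s in clean_scores if s >= t)
        curve.append((t,
                      detections / len(threat_scores),
                      false_alarms / len(clean_scores)))
    return curve


# Made-up stored scores, for illustration only.
threat_scores = [0.9, 0.8, 0.75, 0.6, 0.4]
clean_scores = [0.1, 0.2, 0.3, 0.5, 0.7, 0.2, 0.1, 0.35]

curve = pd_pfa_curve(threat_scores, clean_scores, [0.3, 0.5, 0.7])
for t, p_d, p_fa in curve:
    print(f"threshold={t:.2f}  p(d)={p_d:.2f}  p(fa)={p_fa:.3f}")
```

In this illustration, raising the threshold lowers both fractions together, which is the trade-off the test team would want tabulated for each bag population.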

E. Manufacturer/Contractor Participation

Although the test team may be required to rely heavily on manufacturer or contractor personnel for support in conducting the testing, procedures should be established to minimize the possibility that they could influence the test results. Toward this end, the manufacturer may be required to train FAA chosen personnel to operate the equipment during the test. These personnel should have the same general skill levels as those who will be expected to operate the equipment in the airport environment.

F. Test Sites

For EDS certification testing, an FAA-dedicated test site is required that can be controlled and characterized, particularly with respect to background contamination. Explosives representing the standard threat set must be used. The standard bag population also must be used. Similar considerations apply to EDD verification testing at the FAA test site although a bag population representative of a specific airport may be used.

For testing conducted at airports, available passenger bag populations could be used. If tests are conducted over an extended period of time, the effect that various seasons have on the characteristics of the bags can be determined. In most cases, the use of explosives will not be acceptable and simulants will be required. (Simulants would also be needed to monitor the performance of the devices or systems while they are operational at airports.)

A major disadvantage of airport testing is that it could interrupt operations in the airport terminal. On the other hand, if the equipment is to be used in conjunction with other equipment already in place at the airport—such as baggage handling equipment or another EDD—time and expense could be saved by not having to provide the other hardware at the off-airport site.

G. A Standard Set of Bags and Threats

At the present time, the FAA has not developed a standard set of bags, a standard suite of explosives, and a dedicated test site. Annex I suggests how the FAA can establish these three items for EDS qualification testing. This protocol also addresses other approaches so that testing can proceed prior to the establishment of any or all of these test resources.

A3

SPECIFIC REQUIREMENTS

Before a test plan can be developed, the test location and the constraints on the use of threats must be known. For certification testing, the preferred location of the tests should be the FAA dedicated site. Furthermore, it is preferred that actual explosives samples be used when testing for the detection capability of the equipment. Finally, it is preferred that standard bags be used when testing for both the detection and false alarm capability of the equipment. Unfortunately however, it may not be possible to conduct the tests in the above preferred manner. The test team must first determine what deviations will take place and use alternative methods for achieving as realistic and meaningful tests as possible, given these deviations. The test director will identify the deviations and provide supporting rationale.

If the tests are conducted at FAA test facilities, it most likely will not be possible to use passenger bags to estimate the fraction of false alarms, PFA. It may also be difficult to fully address operational processing rates of bags. If, on the other hand, the tests are conducted at airport facilities, it may not be possible to use actual explosive samples, and the use of simulants may be required. Under either situation, the test team must determine the set of bags that should be used in estimating both PD and PFA. The selection process will depend on the purpose and location of the test.

The following three sections discuss specific areas that must be addressed before the test team can develop the detailed test plan: identification of the set of distinct bag populations that must be used in the testing of the equipment; selection of the threat package to be used; and, specification of the procedures used to measure baggage processing rates. In addition, a short discussion of the issues involved with pre-testing is presented.

A. Identification of Distinct Bag Populations

One of the most important aspects of the test plan and subsequent analyses is the selection of the bags to be used for the tests. These bags must reflect the types that will be operationally encountered and processed by the equipment, over time at various locations. From experience, it is known that bags destined for one location, at a given time of year, are packed with different items than bags going to another location, or even going to the same location during a different season. Also, tourists tend to pack different items than business travelers. The contents of the processed bags, and the effect that background levels and interferents can have on the measurements taken of the characteristics of the explosive, must be considered in any test plan. Toward this end, the FAA will specify the constraints/conditions placed on measurements associated with estimating PD and PFA. For example, the size and weight of a test bag may be restricted, or its destination and/or time of year may be specified. Depending on the device, other constraints/conditions will have to be determined.

The test team must address the issue of the number of different bag populations that the detection equipment will process during the test. For testing which does not involve EDS certification, different applications or situations may be such that the performance of the equipment will change significantly from one application to another. If it is determined that these differences are important to the estimation of PD and PFA, then the different bag populations should be used for the OT&E testing.

After the test team has identified the potential set of bag populations and established the various factors that are significant in determining the detection of an explosive, the team must collect data to finalize the test bag set. In order to evaluate these bags, the data collected should include the numerical values of the measured characteristics of the bags.2 For example, the TNA device measures, among other things, the quantity and distribution of nitrogen in the passenger bag.

In order to collect parametric data, the equipment under consideration can be physically located either at an airport facility where the potential baggage populations can be observed or at the FAA dedicated site using a standard bag set. For each of these locations, the equipment manufacturer should be informed by the FAA what maximum fraction of false alarms will be acceptable, so that the equipment can be properly calibrated and the detection threshold established.

For testing at an airport, the test team will determine who will process passenger bags from these populations to provide the observed measurements of the explosive characteristics for each processed passenger bag. A sufficiently large sample of processed bags is required, so that a reasonable estimate of the multi-variate frequency distribution of the set of characteristics being measured (for each of the populations) can be obtained. At the same time, data is being collected for estimating the PFA, which could be of use to the manufacturer in establishing and/or reconfirming the calibration and threshold setting. If the distributions for one or more of the potential populations are not statistically different, then the data from those populations should be pooled to represent one population. In this manner, a new set of populations will be established that will represent the final set of populations to be tested. The pooling process cannot now be rigorously defined and will require a considerable amount of judgment.3 The rationale used for pooling should be documented by the test director so that an experience base can be developed.4

When testing at the FAA dedicated facilities, the test team must prepare a set of bags from each of the potential bag populations. This requires that the test team know the bag characteristics, as measured by the equipment, for each of the populations. To this extent, some uncertainty will be introduced into the testing process, since the true bag populations are defined by the actual passenger bag populations and the test team is generating artificial populations. In this situation, the test team must attempt to prepare these sets of bags to be as close to the populations of interest as possible. Annex I addresses the use of an FAA standard bag set for use at the FAA dedicated test site.

B. Identification and Selection of the Threats

The threat as specified by the FAA for the EDD/EDS should include:

- type of explosive (C-4, PETN, etc.),
- minimum quantity (mass),
- shape (bulk, sheet, thickness, etc.),
- relative frequency of use of each threat,
- the location of the threat in the container,
- the set of containers to be used (bags, electronic devices, etc.) and other pertinent features, and
- potential countermeasures.

As previously stated, the detection equipment must measure a set of characteristics associated with the bag as it is processed and, based only on these measurements, decide if a threat is present. In order to develop a meaningful test plan, the test planners must know the characteristics of the explosive that are being measured and how these measurements might be affected by the container in which the threat is placed. In order to select the appropriate groups of test bags (when this is required) and/or produce simulants of explosives (when this is required) the following information is necessary:

- The characteristics of the explosive being measured.
- Bag-related items that can affect measured explosive characteristics.
- The relationship between the measured values of the explosive characteristics and the shape, weight and type of explosive being considered.
- The distribution of the observed measurements of the characteristics of the explosive, and whether the different characteristics are related or independent of each other.
- The discriminant function being used to assimilate the observed values of the characteristics into a detect/no-detect decision. This information is particularly crucial if simulants will be required in conducting the OT&E.

If particular equipment measurements are affected by interferents, it is important to know whether the explosive characteristic is masked (i.e., the explosive characteristic is hidden in the bag) or whether the response is additive (i.e., the observed value of the characteristic of an explosive in a bag is statistically equal to the observed value of the bag plus the observed value of the explosive without the bag).

If possible, testing should be done using samples of the standard explosive threats defined by the FAA. All explosive samples must be verified relative to type, purity, weight, and chemical composition, if that characteristic is significant for the EDD/EDS being tested. Samples for the analyses should be taken from the explosives under the supervision of the test team. The number of samples of each threat type is determined by the test design; i.e., the number of different bags being processed and the variability of the measurements of the characteristics of different samples of each threat type.

If simulants must be used instead of explosives then it is important to ensure that the simulants faithfully represent the explosives relative to the characteristics of the explosives that are measured by the equipment and used for the detection process. In order to do this, each simulant, representing a specific mass of a particular explosive, must be compared to the actual mass of real explosive material with the equipment being tested. The simulant and explosive should both be in the same geometrical shape; i.e., sheet or block.

Once the explosives have been checked and the simulants produced (the number of each simulant type should be determined by the test team and should be adequate to ensure that a sufficient quantity will be available for the testing program), a validation test is required to verify that the simulants faithfully represent the explosives. Obviously, this validation testing cannot be done at an airport facility. Annex II outlines the recommended procedure for the validation testing of the simulants.

C. Bag Processing Rates

A very significant aspect of the operational suitability of a detection device or system is the average time required to process each bag, R. If this time is excessive, the airlines will have difficulty incorporating the device or system without affecting their schedules of activities. While conducting the various tests associated with the estimation of the detection and false alarm rates, it will be necessary to collect data on this operationally important issue.

The first opportunity to collect operational data is when the test team is obtaining data to select the final set of bag populations. During these tests, procedures should be established to ensure that meaningful temporal data is collected. Individual processing times should be collected for each bag, as opposed to the total time required to process a set of bags, so that the mean and the variance of the processing time can be determined.
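The reason for recording a time per bag rather than one total can be seen in a short calculation: the total yields only the mean, while the individual times also yield the variance. The sketch below is illustrative only; the function name `summarize_processing_times` and the five timing values are hypothetical, and times are assumed to be recorded in seconds.

```python
import statistics


def summarize_processing_times(times_s):
    """Return (mean, sample variance) of per-bag processing times in seconds."""
    return statistics.mean(times_s), statistics.variance(times_s)


# Made-up per-bag times in seconds, for illustration only.
times_s = [6.0, 7.5, 5.5, 9.0, 7.0]

mean_s, var_s = summarize_processing_times(times_s)
bags_per_hour = 3600.0 / mean_s  # implied average throughput

print(f"mean = {mean_s:.2f} s, variance = {var_s:.3f} s^2, "
      f"rate = {bags_per_hour:.0f} bags/hour")
```

A total of 35 seconds for five bags would give the same mean but no information on how widely individual bags differ.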

The second time that operational data can be collected is during the operational tests. It may be more difficult to collect data at this time since there will be on-going test activity (such as placement of explosives in bags, marking bags, etc.) which might add to the processing times. To avoid these problems, the processing rate data should be generated during the testing associated with the false alarm rate estimation, since almost all of the operational processing will take place with passenger bags (not containing explosives). Again, processing time should be associated with each bag, including the re-processing of a bag when an initial false alarm has occurred.

If the above testing is conducted at airport facilities, the processing time data should be fairly descriptive of the operational processing time of baggage. If, however, the testing does not take place at an airport facility then the test team must set up the testing facility to mimic the airport facilities of interest. Here again, some artificiality will necessarily be built into the collection of this data.

The test team should record any malfunctioning of the equipment, unusual processing activity, manual interference with the automated processing function, etc. This information should be reported in the final report on operational testing activities.

D. Pre-Testing

Prior to full scale operational testing of a device or a system, the test team should conduct a pre-test to determine if the device or system can meet the FAA requirements against a standard, but limited, number of target bags randomly intermingled with a standard, but limited number of normal bags. In this manner, the test team can pre-screen equipment without having to proceed to complex operational testing since most test results do not tend to be "near misses" and should easily be sorted out in such a pre-test. The above standards could also serve as a cross comparison set.

A4

DEVELOPMENT OF TEST AND EVALUATION PLANS

A. General Factors

The test team should be aware of all factors that may influence the conduct of the test. They must also understand how the measurements relate to the detection process as implemented by the instrumental technology. At this point, the team should be ready to complete the T&E plan.

The following items address various factors that should be considered in developing the detailed test plan.

- The test design should be robust so that studies of the key operational factors can be made. All planned pre- and post-test activities, as well as the test activities, should be explicitly identified to all concerned parties (who, when, how, where, why, etc.).
- Data collection should be automated (if possible) and also manually recorded by independent data collectors. As a minimum, the collectors should identify and record the order and bag number of each bag entering the detection equipment and record each alarm response and other appropriate data. All data runs should be numbered, and the time of each run recorded.
- The test team should consider the use of barcode labeling techniques, with all reading done by hand-held barcode readers, and the data automatically fed into a central processing unit. All bags, threats, and countermeasure items should be barcoded.
- Test conditions should be clearly understood and agreed to by the equipment operators. Specific evaluation plans, including all test conditions, should be reviewed by the equipment manufacturer and the operators. The FAA test director will determine if the plan is complete and suitable.
- As appropriate, the acceptable minimum detection probability and maximum false alarm probability required to certify an EDS should be clearly identified to the test team. When confidence statements are made, they will be made at the 95 percent level.
- For qualification testing, the types and causes (if known) of false alarms will be recorded. There are generally two types of false alarms: nuisance alarms, caused by the detection instrument being "fooled" by a substance that triggers a response in the detection system similar enough to that of an explosive material that the response cannot be adequately discriminated from that of a real explosive; and false alarms caused by low signal-to-noise ratio when the sensitivity setting is at the maximum (i.e., signal strength cannot be increased any further). Low signal can be caused by the inherent low response of the detection method for the quantity of explosive material of interest, attenuation of the signal due to a design inadequacy or component failure, etc. High noise can be caused by large background signal inherent in the detection method, by improper design, failure of the electronic system, etc.
- For certification testing, the primary response variable at a given setting is binary, i.e., detection/no detection.
- The procedure to screen bags should be described in detail, including the specific role of the operators during the conduct of the test.
- Procedures should be in place to accurately record the average time required to process a bag. Sufficient data should be collected regarding the time history (e.g., date/time) associated with each tested bag so that the test run could later be reproduced if desired.
- For parametric tests, the relationship between the probability of detection and the probability of false alarms, as a function of the detection threshold level, is an important result. Determining this relationship will require the testing of various bag populations at different detection thresholds. The means to adjust the detection threshold of the equipment must be available to the operators, and all such adjustments recorded.
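As an illustration of a confidence statement at the 95 percent level for a binary detection/no-detection response, the sketch below computes a two-sided interval for an observed fraction. The protocol does not say which interval method should be used; the Wilson score interval shown here is an assumption chosen because it needs only the standard library, and the counts (90 detections in 100 threat bags) are made up.

```python
import math


def wilson_interval(successes, trials, z=1.96):
    """Two-sided Wilson score interval for a binomial fraction.

    z=1.96 corresponds to an approximate 95 percent confidence level.
    The choice of the Wilson method is an assumption of this sketch,
    not a requirement stated in the protocol.
    """
    if trials <= 0:
        raise ValueError("trials must be positive")
    p_hat = successes / trials
    denom = 1.0 + z * z / trials
    center = (p_hat + z * z / (2.0 * trials)) / denom
    half_width = (z * math.sqrt(p_hat * (1.0 - p_hat) / trials
                                + z * z / (4.0 * trials * trials))) / denom
    return max(0.0, center - half_width), min(1.0, center + half_width)


# Made-up result: 90 detections out of 100 threat bags.
lo, hi = wilson_interval(90, 100)
print(f"observed p(d) = 0.90, 95% interval = ({lo:.3f}, {hi:.3f})")
```

The same function applies to the observed false alarm fraction by passing the number of alarms on non-threat bags and the number of such bags processed.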

B. Data Analysis Plan

The specific analysis plan should be developed prior to testing. The analysis plan must describe the data that will be required to be collected during the test, show how the data will be analyzed, and describe the statistical tests that will be used in analyzing the collected data. It is recommended that the data analysis plan be validated by generating artificial data and performing the analysis on that data.

The resulting data collection and analysis plan should be a principal part of the test plan. The test team should be allowed flexibility in case of unanticipated data outcomes, and thus should not be rigorously held to the initial plan. However, each deviation from the plan should be documented with supporting rationale. In any event, the initial hypotheses and the associated criteria must not be changed.

C. Selection of Test Bags

In order to determine the quantity and the characteristics of the specific bags to be used in the testing, a statistically valid approach must be followed. The final set of bags will be selected by the test team from a distinct population of bags (generated by the process described in Chapter A3) for which the data collected on bag characteristics will have been collated to statistically describe the multi-variate frequency distribution of the characteristics.

The set of bags to be used could be selected from FAA-held "lost" bags, fabricated by an FAA appointed team, or actual passenger bags. When non-passenger bags must be used, it would be beneficial if the test team could use several groups of bags for each given population, each group representing a typical set of bags for that population.

One of the following approaches should be used for selecting the final bag set.

- Select Representative Bags When Non-Passenger Bags Are Required. For each of the different populations designated in Chapter A3, a set of test bags must be available that reflects the multi-variate frequency distribution of the characteristic measurements of bags from that population. A stratified sample of bags from that population should be used; i.e., for each characteristic, its range is partitioned so that an equal number of observations falls in each of the partitions. This generates multi-dimensional cells such that the marginal frequencies of occurrence of each characteristic are equal. Then the multi-variate frequency in each cell is observed and a proportional number of bags is selected to represent each of the cells. For example, if 20 cells are established (each cell representing 5 percent of the population which has the multi-variate frequency of the characteristics as represented by that cell), one bag should be selected from each of the cells, each bag having the characteristics associated with that cell. This would generate a sample of 20 test bags representative of the population. If a sample size of 40 is required, then two bags should be selected from each cell, etc.

If it is not possible to do the above—for example because the number of characteristics is too large to allow for this approach—drop the least critical characteristic and continue, reducing the set of characteristics until the process can be accomplished. The unaccounted-for characteristics can then be handled by statistical techniques. Alternatively, ensure that at least one bag is selected for each of the non-zero cells, and then use the same statistical techniques to account for the non-representative sample of bags.

Another possibility is to estimate the correlation between the variables being measured and attempt to use a transformation function, which might assist in the above process.

- Select Bags At Random. If the above process is not possible because of the large number of characteristics or not having an understanding of the correlation between the characteristics, etc., statistically random samples can be taken from the population of bags.

- Use Actual Passenger Bags. If the equipment is set up at an airport terminal (handling bags from one of the chosen populations), passenger checked bags can be used to estimate the false alarm rate (as discussed in Chapter A3). To test for the detection rate, it may sometimes be possible, with the cooperation of the passengers, to use their bags for incorporating explosives (or simulants).5

Alternatively, a "Red Team" can be used to package bags with explosives or simulants that appear to be headed for the same destinations as the actual passenger bags. These bags should be clearly identifiable to the test team (but not the equipment operators) so that they can be retrieved once they have been processed. Under this approach, the "Red Team" determines what will be placed in the bag and where it will be located. This approach will provide estimates of the false alarm probability for the time period being observed, using actual passenger bags, and estimates of the detection probability either using the modified passenger bags or fabricated bags.
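The stratified selection described under "Select Representative Bags" can be sketched in Python as follows. This is a minimal illustration under stated assumptions, not the protocol's prescribed procedure: the function names, the representation of each bag as a tuple of characteristic measurements, and the largest-remainder rounding are all hypothetical choices made for the example.

```python
import random
from collections import defaultdict

def quantile_edges(values, n_bins):
    """Cut points splitting `values` into n_bins roughly equal-frequency partitions."""
    ordered = sorted(values)
    return [ordered[(len(ordered) * b) // n_bins] for b in range(1, n_bins)]

def bin_index(value, edges):
    """Index of the partition that `value` falls in."""
    return sum(value >= e for e in edges)

def select_stratified(bags, n_bins, sample_size, seed=0):
    """Pick `sample_size` bags so that each multi-dimensional cell is
    represented in proportion to its observed frequency.

    bags: list of tuples of characteristic measurements (assumed layout).
    """
    rng = random.Random(seed)
    n_chars = len(bags[0])
    edges = [quantile_edges([b[c] for b in bags], n_bins) for c in range(n_chars)]

    # Assign every bag to its multi-dimensional cell.
    cells = defaultdict(list)
    for bag in bags:
        key = tuple(bin_index(bag[c], edges[c]) for c in range(n_chars))
        cells[key].append(bag)

    # Proportional allocation, rounding by largest remainder.
    quotas = {k: sample_size * len(v) / len(bags) for k, v in cells.items()}
    counts = {k: int(q) for k, q in quotas.items()}
    shortfall = sample_size - sum(counts.values())
    by_remainder = sorted(quotas, key=lambda k: quotas[k] - counts[k], reverse=True)
    for k in by_remainder[:shortfall]:
        counts[k] += 1

    sample = []
    for k, n in counts.items():
        sample.extend(rng.sample(cells[k], n))
    return sample
```

Empty cells never appear in `cells`, so only non-zero cells receive bags. When the number of characteristics makes most cells empty or tiny, the fallbacks described in the text (dropping the least critical characteristic, or random sampling) apply instead.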

D. Number of Test Bags Required

The FAA may specify the minimum number of test bags and observations required to be processed for certification testing. In any event, the number of observations required for the test can be computed from the values of the FAA-provided minimum PD and maximum PFA, the particular statistical evaluation methodology employed, and the associated desired levels of statistical confidence.6

Confidence interval estimation is a standard method used in classical statistical inference. (In general, an interval estimate of a population parameter is a statement of two values between which there is a specified degree of confidence that the parameter lies.) It is normally desirable to have a narrow interval with a high confidence level. Using this approach to calculate the number of observations required to estimate PD, assuming a 95 percent confidence level with a confidence interval half-width of 0.03, the required sample size of bags with explosives can be approximated by the relation:7

Substituting for the constants and ignoring the higher order term, the expression reduces to: 4,300*(PD) (1-PD). For a PD of 0.90, the required sample size would be 387 bags containing explosives.
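As a rough check on this arithmetic, the sketch below assumes the relation is the usual normal-approximation formula, whose leading term is n = z² PD (1 - PD) / h², with z the standard normal quantile and h the half-width. That assumption, and the function name, are not taken from the protocol. Under it, the constant z²/h² is about 4,268, which is close to the rounded 4,300 used in the text.

```python
from statistics import NormalDist

def sample_size_for_half_width(p, half_width=0.03, confidence=0.95):
    """Leading-term normal approximation: n = z^2 * p * (1 - p) / h^2."""
    z = NormalDist().inv_cdf(1 - (1 - confidence) / 2)
    return (z / half_width) ** 2 * p * (1 - p)

# Sample size for PD = 0.90 at 95 percent confidence, half-width 0.03.
n_exact_constant = sample_size_for_half_width(0.90)

# The same calculation with the text's rounded constant of 4,300.
n_rounded_constant = 4300 * 0.90 * (1 - 0.90)
```

The two values differ by only a few bags, so the choice of constant has little practical effect on the test design.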

Another classical statistical inference method is hypothesis testing. A null hypothesis is a statement about a population parameter; the alternative hypothesis can be a statement that the null hypothesis is invalid. The hypothesis testing methodology chooses between the competing hypotheses at a specified significance level. There are two possible sources of error: rejecting the null hypothesis when it is in fact true (Type I error), and accepting the null hypothesis when it is in fact false (Type II error). While the interval estimation method only allows for the control of the Type I error (a 95 percent confidence level sets this error at 5 percent), the hypothesis testing method allows for the control of both Type I and Type II errors. For example, assume that the null hypothesis is: "the probability of detection, PD, of the tested device is equal to or greater than 90 percent." The alternative hypothesis can be formulated as: "the probability of detection, PD, of the tested device is less than 90 percent." For a PD of 0.90, with the Type I error set at 5 percent and the Type II error evaluated at a PD of 85 percent, the sample size required to discriminate between the two hypotheses is estimated to be 470 bags containing explosives.8
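The order of magnitude of this figure can be reproduced with the standard normal-approximation sample size for a one-sided test of a proportion. The sketch below is illustrative only: the Type II error value is not legible in the text, so 5 percent is used purely as an assumption, and the formula is the textbook one rather than necessarily the method of the cited reference.

```python
import math
from statistics import NormalDist

def sample_size_one_sided(p0, p1, alpha, beta):
    """Approximate n needed to test H0: p >= p0 against p = p1 < p0.

    alpha: Type I error; beta: Type II error evaluated at p1.
    """
    z_alpha = NormalDist().inv_cdf(1 - alpha)
    z_beta = NormalDist().inv_cdf(1 - beta)
    numerator = (z_alpha * math.sqrt(p0 * (1 - p0))
                 + z_beta * math.sqrt(p1 * (1 - p1)))
    return math.ceil((numerator / (p0 - p1)) ** 2)

# beta = 0.05 is an assumed value, not one stated in the protocol.
n_bags = sample_size_one_sided(p0=0.90, p1=0.85, alpha=0.05, beta=0.05)
```

With these assumed inputs the result is close to the 470 bags quoted above. A larger assumed Type II error would give a noticeably smaller sample.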

The above methods can also be used to determine the number of non-explosive containing bags required to estimate the false alarm rate. The specified maximum PFA should be substituted for PD, and the same formula applied.

When non-passenger bags are being used, it is recommended that the number of observations be obtained by sampling a given bag without the explosive and the same bag with the explosive threat a minimum of six times each. Thus approximately 1/6 of the above number of calculated bags would be required for the number of observations. It is further recommended that these bags be chosen such that there are multiple groups (i.e., 6 groups of bags) which are each representative samples of the population being considered.

If the "Red Team" approach is being used, it may not be feasible to test each bag with and without explosives.

E. Selection of the Number of Threat Articles

The actual number of threat articles (explosives or simulants) to be used for detection determination will depend, in part, on the number of bags available to conduct the testing. For each defined threat, multiple samples may be required to facilitate the testing. For example, if there are 5 distinct threats, then 4 samples of each threat would be required to provide 20 test articles.

The determination of the number of detection test articles must be made early in the planning process if simulants are required, so that the simulant validation testing can be completed prior to the testing and a suitable amount of the simulants made available.

A5

TEST EXECUTION

At this point, the test team should have accomplished the following:

-

Examined and studied the explosive detection equipment to be tested, and fully understand the relevant technical issues and characteristics of the equipment. (If the test team does not fully understand the equipment to be tested, they may not be able to assure the FAA that the equipment has been adequately tested.)

-

Examined the raw data and processed data pertaining to the critical characteristics of the explosives and the baggage that will be used in the measurements.

-

Selected the set of bags to be used in the testing and understand how these bags represent the population of bags being considered.

-

Determined the threat samples and countermeasures to be used during the testing.

The following areas should be considered by the test team in the execution of the testing:

- Independence from the Manufacturer

-

Although the test team may be required to rely heavily on factory personnel for support in conducting the tests, procedures must be established to minimize the possibility that the contractor could influence the test results. Toward this end, at least one member of the test team should participate in:

-

supervising and overseeing all operations of each test including placement of threats in bags;

-

numbering and sequencing bags through the tested equipment;

-

identifying and manually recording data on each bag as it was being processed;

-

verifying that any internal computer identification system does not affect the measurement system; and

-

disconnecting computer modems and any other outside manipulative devices to isolate the equipment from the outside world.

As required, the equipment manufacturer should train FAA selected personnel to operate the equipment during the test. The personnel chosen should be representative of types that are expected to operate it when it is in the field, in terms of education, experience, skill level, etc.

- System Reproducibility

-

During all test periods, the test team should collect data for the purpose of assessing measurement reproducibility by taking repeated measurements of a controlled set of bags. For example, during each test period a set of control bags should be repeatedly processed before, during, and at the conclusion of each day's test, in order to provide assurance and documentation that the detection response has not changed significantly during the test sequence. If the test team determines that the response has changed, the test may have to be repeated unless an acceptable explanation of why it occurred can be provided to the test team.

- Other Areas

-

-

The test team shall directly supervise the placing, moving, or removing of the threats in the bags being tested.

-

The test team should control the threats with an established chain-of-custody procedure.

-

At the end of each test day, printouts of all test data should be collected by the test team; a backup package should be available for the FAA, and this data should be retained for possible future use/confirmation.

-

All available data should be collected, even if it will not be used immediately. At a minimum, threshold values and alarm/no-alarm readings on each item tested should be recorded. All parties should sign off on the data packages each day.

-

The equipment manufacturer should be informed of all data requirements (by the test team) as early as possible.

-

Prior to any testing, the team should dry run each significant set of test conditions to ensure that the equipment is functioning properly in an operational mode.

-

If possible and appropriate, all tests and test activities should be video/audio recorded.

-

The equipment being tested will be used as set up by the manufacturer, i.e., there will be no non-routine changes or adjustments made during the evaluation testing.

Annex III contains a model test scenario that could be used to guide actual testing.

A6

ANALYSIS AND EVALUATION OF TEST DATA

Once the tests have been completed, the test team will be required to prepare a report of the test findings. It is important that the original test data for detection devices (EDD) be retained so as to allow the FAA to estimate EDS capabilities prior to certification testing. The testing program should have produced most of the following data and information:*

-

Detection characteristics measured.

-

Set of explosives/simulants used.

-

Bag populations used, including justifications.

-

System calibration results.

-

Threshold settings used.

-

Number of bags used and the selection process.

-

Simulant validation data, as appropriate.

-

Detections observed data.

-

False alarm observed data, including for verification testing, the types and causes (if known) of false alarms.

-

Bag processing rates data.

-

Observed sensitivity of instrumental response to placement location of explosives in a bag.

-

Vulnerability to countermeasures, as appropriate.

Using well-known statistical procedures,9–10 the test team should estimate:

-

The fraction of detections observed, p(d) for different bag populations and different threats.

-

The fraction of false alarms observed p(fa) for different bag populations.

-

The estimated processing rate of the baggage, r.

For illustrative purposes, if one assumes that all tested bag populations are equally likely, stratified sampling of the bag populations is used, and all threats and threat locations tested were equally likely, then unbiased estimates of PD, PFA, and r are given by:

-

p(d)=(# of detections)/(total # of possible detections),

-

p(fa)=(# of false alarms)/(total # of possible false alarms),

-

r=(time required to process all bags)/(# of bags processed).

If any one of the above assumptions is not true, then these fractions must be computed for appropriate subsets of the test data and these fractions weighted to account for any differences (for example, if the bag populations or threats are not equally likely). At some point the test team should provide a composite fraction for the test for use by the FAA.
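The simple fractions and the weighted composite described above can be sketched as follows. The function names, the per-subset weights, and the counts are hypothetical; the weights stand for the relative likelihood of each bag population or threat as determined by the test team.

```python
def observed_fraction(events, opportunities):
    """p(d) or p(fa): observed events divided by the number of opportunities."""
    if opportunities <= 0:
        raise ValueError("opportunities must be positive")
    return events / opportunities

def weighted_fraction(strata):
    """Composite fraction over subsets that are not equally likely.

    strata: list of (weight, events, opportunities); weights need not sum to 1.
    """
    total_weight = sum(w for w, _, _ in strata)
    return sum(w * observed_fraction(e, n) for w, e, n in strata) / total_weight

def time_per_bag(total_seconds, bags_processed):
    """r as defined above: processing time divided by bags processed."""
    return total_seconds / bags_processed

# Hypothetical example: two bag populations with unequal likelihoods.
p_d_simple = observed_fraction(170, 200)
p_d_composite = weighted_fraction([(0.7, 90, 100), (0.3, 80, 100)])
r = time_per_bag(3600.0, 300)
```

In this made-up example the unweighted fraction is 0.85, while weighting the first population at 70 percent raises the composite to 0.87.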

Finally, the test team report should contain all of the raw data generated during the course of the testing, as well as the reduced data, and include all of the detailed calculations showing the complete test design and how the results were obtained from the data collected. This report should be sufficiently detailed and transparent so that any competent technically trained individual could completely follow the test and results to its logical conclusions, and be able to duplicate the test program if desired.

Annex I

A STANDARD SET OF BAGS AND THREATS FOR TESTING BULK EXPLOSIVE DETECTION DEVICES OR SYSTEMS AT AN FAA DEDICATED TEST SITE

It is recommended that the FAA take the following actions:

-

A Standard Bag Set should be established for use in all EDS tests. The Set should be large, say on the order of 1,000. The bags should be selected from FAA-owned "lost" bags, altering bags as necessary to ensure that the Set contains random samples of actual passenger bags with representative contents for different times of the year (seasonal variations) to different destinations (e.g., cold or hot climates) by different types of travelers (e.g., business travelers, tourists, etc.). They should include different types of bag material, varying contents, different shapes, sizes, and weights.

-

A Standard Threat Package should be established which would include the specified threat types, at the minimum weights deemed to be important and in the shapes that are of concern. It is recommended that at least five replicates of each specific threat item be produced with the actual explosive, as well as at least five simulants of each threat type.

-

Certification testing should be conducted at an FAA facility, using the above Standard Bag Set and the Standard Threat Package. These items, or a duplicate, should be made available to the vendor prior to the actual testing so that the contractor can determine, using parametric tests, the thresholds of the system being offered for test. Since the above items must not be tampered with, they must be sealed in a manner to protect their integrity. The vendor should be encouraged to conduct their tests at airport facilities whenever possible.

Four subsets of bags within the Standard Bag Set should be established, each subset containing bags of the same material, i.e., 100 aluminum bags, 500 cloth bags, 50 leather bags and 450 plastic bags, or whatever is deemed to be operationally representative of the proportion of passenger bags. The contents of the bags in each of the subsets should reflect typical seasonal wardrobes for spring, summer and winter.11 Approximately one-third of the bags in each subset should contain contents reflecting each of the three seasons. For each subset, approximately one-third should be light in weight, one-third should be average in weight, and one-third should be heavy in weight. Table A1 is representative of the above allocation. The FAA will need to determine the proportion of bags according to bag material. Once this is done, the allocation of number of bags in each cell can be determined. This suggested break-out of bags should be updated as additional testing experience is gained.

These bags should then be barcoded and well marked so that they can be easily read by a barcode reader as well as by visual means.

TABLE A2. Possible Make Up Of 1000 Standard Bags

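| TYPE OF BAG | SEASON | LIGHT-WEIGHT | MEDIUM-WEIGHT | HEAVY-WEIGHT |
| --- | --- | --- | --- | --- |
| ALUMINUM | SPRING | 11 | 11 | 11 |
| ALUMINUM | SUMMER | 11 | 11 | 11 |
| ALUMINUM | WINTER | 11 | 11 | 11 |
| CLOTH | SPRING | 56 | 55 | 56 |
| CLOTH | SUMMER | 55 | 56 | 55 |
| CLOTH | WINTER | 56 | 55 | 56 |
| LEATHER | SPRING | 6 | 5 | 6 |
| LEATHER | SUMMER | 5 | 6 | 5 |
| LEATHER | WINTER | 6 | 5 | 6 |
| PLASTIC | SPRING | 50 | 50 | 50 |
| PLASTIC | SUMMER | 50 | 50 | 50 |
| PLASTIC | WINTER | 50 | 50 | 50 |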

With respect to the Standard Threat Package, the FAA should also determine if the threats need to be inserted into the bag for test purposes. If not, then threat packages should be developed which can be placed on the outside of the bags which are being tested for threat detection. The threat packages should also be barcoded and well marked. If the threats must be placed inside of the bags, then the threat package should be constructed so that it will not move inside of the bag.

There should be two different kinds of threats, one in which the bag is the bomb (i.e., the explosive is molded along the side(s) of the bag in sheet form), and the other in which the bomb is placed inside of the bag (i.e., it is not in sheet form).

For parametric testing, the tests should be conducted so as to allow estimation of the PD versus PFA curve for different detection levels. This will require that the threshold of the equipment be changed several times during the test to accommodate, for instance, variations in the bags. One (PD, PFA) point will be (0, 0) and another will be (1, 1). If three more points can be established, then the FAA will have a reasonable estimate of the equipment capability. Figure A2 depicts a possible outcome of the operational test of a TNA unit.
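The construction of PD versus PFA points from threshold changes can be sketched as below. This is an illustration under stated assumptions: the equipment is taken to produce a single numeric response per bag and to alarm when the response meets or exceeds the threshold, and the response values are invented for the example.

```python
import math

def pd_pfa_points(threat_responses, clean_responses, thresholds):
    """Return one (PFA, PD) point per threshold.

    An alarm is assumed to occur when response >= threshold.
    """
    points = []
    for t in thresholds:
        pd = sum(r >= t for r in threat_responses) / len(threat_responses)
        pfa = sum(r >= t for r in clean_responses) / len(clean_responses)
        points.append((pfa, pd))
    return points

# Hypothetical responses for bags with and without a threat.
with_threat = [5.0, 6.0, 7.0, 8.0]
without_threat = [1.0, 2.0, 3.0, 6.0]

curve = pd_pfa_points(with_threat, without_threat,
                      thresholds=[-math.inf, 4.0, 6.5, math.inf])
```

The extreme thresholds reproduce the two fixed end points, (1, 1) when everything alarms and (0, 0) when nothing does; the intermediate thresholds supply the additional points of the curve.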

Parametric testing should give the FAA significant insight as to whether or not the system has a reasonable possibility of passing certification testing. If at the end of the testing, the perceived likelihood of passing the certification test is low, the contractor should be told that no certification testing will be conducted until the FAA determines there is a reasonable possibility of success.

With respect to certification testing, the FAA should, at a minimum, specify the relative weights assigned to each of the threat items, as well as the minimum acceptable average PD (i.e., across all appropriate weighted threats) and maximum acceptable average PFA. The outcome of this test should be pass or fail.

Figure A1. Seasonal Variation in TNA Detectivity Versus False Alarm Rate

ANNEX II

VALIDATION OF SIMULANTS

Annex II contains a detailed statistical analysis procedure for validating simulants.

1. Test Procedures.

Explosives will be placed in bags representative of the total set of population of bags being tested. The bags are then inspected by the equipment, and the resultant values of each of the characteristics are recorded. Then, using the same set of bags but replacing the explosives in the same location with explosive simulant, repeat the test. Note: if possible, the selection of the location should be such as to provide high signal-to-noise ratio in order to facilitate the explosive-simulant comparison. All bags should be sampled at least ten times with the explosive, with the corresponding simulant and with neither. By comparing the average and the variances of the reading of the characteristics with explosives in the bag, with the average and the variances of the readings of the characteristics with the simulated explosive in the same bag, the validity of each simulant can be determined.

For example, in order to test simulants of ten explosive types identified by FAA, fifteen bags, covering the population range of the characteristics being measured, are recommended for use. Five empty bags (containing neither explosive nor simulant, but only the normal contents of the bag) would be randomly placed among the ten bags containing either explosives or simulants on every one of the major runs that are used to obtain data on the explosives and the simulants. One purpose of using the same empty bags on each run is to collect data to evaluate if the equipment has "memory," in that if higher values of characteristics are being measured on a given run (as a result of ten bags containing explosives or simulants) then the equipment might be reading higher on all bags, including the empties. A second purpose is to collect large quantities of data of a selected number of bags to estimate the variability of the measurements from the selected bag set. This should be used to reconfirm the bag selection process for validation testing and to help guide the test team in the bag selection process for testing at an airport terminal. The test team must monitor all aspects of the validation testing, record data for each bag processed, verify the sequencing of bags through the detection equipment, place all explosives and simulants in the appropriate bags, and observe the verification tests of each of the samples of explosives used for comparison against the simulants.

2. Test Results.

The detailed data collected on each bag will be maintained by the FAA. The mean value and standard deviation of the measurements of the characteristics for empty bags, the bags with explosive and the same bags with the simulated explosive should be recorded and compared. For each characteristic, its mean value for the bags with an explosive is compared to its mean value for the bags with the simulated explosive using conventional statistical analyses, such as F-tests and t-tests. A priori, the test team should select the critical region for both the F and t tests (i.e., reject the hypothesis that the simulant is representative of the explosive with respect to the characteristics being considered). This region is usually selected to be at the $\alpha = 5$ percent level.

Based on this data, simulants are accepted or rejected using the "t test." The rejected simulants may be reworked (if possible) and rerun through the equipment. This process may be continued in an attempt to validate as many simulants as possible. For overall confirmation, analysis of variance can be performed to determine if differences of the mean value of the characteristic of the bags with real explosives and the mean value of the characteristic of the bags with simulated explosives were zero (using the F-test, with the associated test of homogeneity). The detailed statistical approach that can be used is described in any statistical analysis book.

The validated simulants should be suitably marked and immediately placed in the custody of the test team who will then deliver them to the test site at the appropriate time. If the simulant characteristics can change over time, the simulants should be revalidated after appropriate time periods.

The decision to be made is whether or not a given simulant gives a similar enough response to that given by the real explosive as far as equipment operation is concerned, namely detect or no detect. The following testing is recommended for the validation process.

Start with a representative group of J+Q bags where J equals the number of different explosive types being simulated and Q represents a small number of additional bags. For the j-th explosive type, there are m(j) simulants which need to be validated. Here the m(j) depends on the test design. These J+Q bags are run through N times for each of the three conditions, (1) with the explosive, (2) with the matching simulants, and (3) with neither (to check on additivity of the measurements and to ensure that the bag characteristics have not changed over the testing period). The explosive and its matching simulant are also placed in the same bag in the same position, that position being where the measurements of the characteristics are considered to be optimal for measurement purposes.

The set of characteristic measurements of the bag are the determining operational variables used in the test. Thus the primary data can be considered as three matrices:

$$(1)\ \big[\,a\{i,k\}\,\big], \qquad (2)\ \big[\,a\{i,E(j),k\}\,\big], \qquad (3)\ \big[\,a\{i,S(j,m),k\}\,\big]$$

where (1) is the observed value of the i-th characteristic in the k-th bag, (2) is the observed value of the i-th characteristic with explosive type E(j) in the k-th bag, and (3) is the observed value of the i-th characteristic with the explosive replaced by the m-th simulant of the j-th type explosive, S(j, m), in the k-th bag. Here, i=1,...,I; j=1,...,J; and m=1,...,m(j).

The following process is recommended:

-

(i) Run K+Q bags through N=10 times without explosives or simulants. This data is needed to ensure that the bags being used are representative of the bag population they are thought to be representing and to check for additivity of the measurements;

-

(ii) Place the J explosives in the K bags, selecting the assignment at random, all in the same location, that being where the a{} readings are expected to be least variable. If appropriate, use the flip/twist move procedure, described above, to sample the a{} at different relative locations in the equipment. Run the K+Q bags through N=10 times;

-

(iii) Replace each of the J explosives with a simulant of that explosive, S(j,m), and repeat (ii), continuing this until all simulants have been tested in bags which contained the appropriate explosive;

-

(iv) At the beginning of each new day of testing and at the end of the testing, repeat (i).

3. Analyses for the Validation of Simulants

Let

$$A\{\cdot\} = \frac{1}{10}\sum a\{\cdot\}$$

be the mean value of the respective reading, taken over the 10 observations on the k-th bag, and

$$v(\cdot) = \frac{1}{9}\sum \left[a\{\cdot\} - A\{\cdot\}\right]^{2}$$

be the sample variance.

In order to validate a simulant, we are testing the hypothesis that the population mean as estimated by A{i,E(j),k} is equal to the population mean as estimated by A{i,S(j,m),k}, given the populations are normally distributed with equal variances. Three statistical tests can be used to determine if these samples came from the same distribution, so as to justify the validation of the m-th simulant of the j-th type explosive.

a. The Significance of the Difference Between Two Sample Means.

In this approach only one bag is used for the testing of the explosive and the simulant of that explosive. That bag (say the k-th bag) should be randomly assigned for a given simulant and explosive pairing.

For a simulant of the j-th type explosive, one now tests that A{i,S(j,m),k}=A{i,E(j),k}, for all m=1,...,m(j) and for all characteristics i=1,...,I. If, for each of the I characteristics, the two sample means are not significantly different at the $1-\alpha$ level of significance using the two-sided alternative, we will declare the m-th simulant to be validated, at the $1-(1-\alpha)^{I}$ level of significance.

To make such a statistical test, one must first determine if the two observable random variables, for each of the I characteristics, have equal variances or not. To test this one uses the Snedecor F statistic computing

F(9,9)=V(i,j,m)/v(i,j,m),

where by convention V() represents the larger of the two variances, and v() the smaller, and (9,9) are the respective degrees of freedom.

If the F-value proves to be not significantly different from 1, using once again the $1-\alpha$ level of significance, one can pool the two variances into a single variance

$$v(i,j,m) = \frac{9\,v(i,E(j)) + 9\,v(i,S(j,m))}{18}$$

Then the Student t-test statistic is

$$t(18) = \frac{A\{i,E(j),k\} - A\{i,S(j,m),k\}}{\left[v(i,j,m)\cdot 2/10\right]^{0.5}}$$

If $|t(18)| < t(18 \mid 1-\alpha/2)$ for each of the I characteristics, the simulant will be declared to be validated at the $1-(1-\alpha)^{I}$ level of significance.

If, however, the F test of the two variances shows them to be significantly different, then the simulant does not exhibit the same properties as the explosive and should be rejected. In this validating approach each of the m simulants are considered independently even though some of them are simulating the same type of explosive.
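The variance-ratio check followed by the pooled t-test can be sketched for a single characteristic as below. The critical values are standard table values for 10 observations per condition at a 5 percent two-sided level and are passed in as parameters; reading the F test as using the upper 2.5 percent point of F(9, 9) is an assumption, as are the function names and the sample readings.

```python
import math
from statistics import mean, variance

T_CRIT_18 = 2.101   # two-sided 5 percent point of Student's t, 18 d.f. (table value)
F_CRIT_9_9 = 4.03   # upper 2.5 percent point of F(9, 9) (table value)

def validate_characteristic(explosive, simulant,
                            t_crit=T_CRIT_18, f_crit=F_CRIT_9_9):
    """Return True if the simulant readings are consistent with the explosive
    readings for one characteristic (equal variances, equal means)."""
    n = len(explosive)
    if len(simulant) != n:
        raise ValueError("both conditions need the same number of readings")
    v_e, v_s = variance(explosive), variance(simulant)
    larger, smaller = max(v_e, v_s), min(v_e, v_s)
    if smaller == 0:
        raise ValueError("zero sample variance; F ratio undefined")
    if larger / smaller > f_crit:
        return False  # variances differ significantly: reject the simulant
    pooled = ((n - 1) * v_e + (n - 1) * v_s) / (2 * (n - 1))
    t = (mean(explosive) - mean(simulant)) / math.sqrt(pooled * 2 / n)
    return abs(t) < t_crit

def overall_level(alpha, n_characteristics):
    """Level 1 - (1 - alpha)^I when all I characteristics are tested."""
    return 1 - (1 - alpha) ** n_characteristics

# Hypothetical readings of one characteristic, 10 passes each.
explosive = [10.1, 9.8, 10.3, 10.0, 9.9, 10.2, 10.1, 9.7, 10.0, 10.4]
close_simulant = [x + 0.05 for x in explosive]
shifted_simulant = [x + 2.0 for x in explosive]
noisy_simulant = [10.05 + 3 * (x - 10.05) for x in explosive]
```

A simulant would be accepted only if `validate_characteristic` returns True for every one of the I characteristics; `overall_level` shows how quickly the combined level grows with I.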

b. The Paired Difference Approach.

Since the values of a{} come from the same bag with the explosive and its matching simulant in the same position in the bag, it is natural to consider the paired difference approach to test the correspondence between a simulant and the explosive.

In this approach, one uses the differences

d{i,j,m}=a{i,E(j),k}-a{i,S(j,m),k},

where the pairing over the 10 observations are randomly assigned. The population of such differences has an expected value, E(d{i,j,m})=0 if indeed the m-th simulant faithfully represented the j-th explosive for the i-th characteristic. An appropriate null hypothesis to test would be

$$H(0):\ E(d\{i,j,m\}) = 0,$$

against the two-sided alternative,

$$H(1):\ E(d\{i,j,m\}) \neq 0.$$

Once again the m-th simulant could be accepted as being validated if the null hypothesis is not discarded at the $\alpha$ level of significance for all of the I characteristics. The test statistic to use in making this test is:

$$t(9) = \frac{D(i,j,m)}{\left[v(d(i,j,m))/10\right]^{0.5}}$$

where $D(i,j,m) = \sum d(i,j,m)/10$, taken over the 10 samples, and

$$v(d(i,j,m)) = \frac{1}{9}\sum \left[d(i,j,m) - D(i,j,m)\right]^{2}.$$

Note that in using this test we have lost 9 degrees of freedom and as a result this test would be preferred only when there is a relatively high correlation between a{i,E(j),k} and a{i,S(j,m),k}. Here again, each simulant would be subject to its own validating decision.
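A minimal sketch of the paired difference test for one characteristic follows. It uses the standard error of the mean difference, the square root of v/10, in the denominator; the critical value is the usual table value for 9 degrees of freedom at a two-sided 5 percent level, and the function name and readings are hypothetical.

```python
import math
from statistics import mean, variance

T_CRIT_9 = 2.262  # two-sided 5 percent point of Student's t, 9 d.f. (table value)

def paired_difference_test(explosive, simulant, t_crit=T_CRIT_9):
    """Return (t, accepted) for paired explosive/simulant readings."""
    diffs = [e - s for e, s in zip(explosive, simulant)]
    n = len(diffs)
    d_bar = mean(diffs)
    v_d = variance(diffs)
    if v_d == 0:
        raise ValueError("zero variance of differences; t undefined")
    t = d_bar / math.sqrt(v_d / n)
    return t, abs(t) < t_crit

# Hypothetical paired readings from the same bag and position.
explosive = [10.1, 9.8, 10.3, 10.0, 9.9, 10.2, 10.1, 9.7, 10.0, 10.4]
offsets = [0.1, -0.1, 0.05, -0.05, 0.0, 0.1, -0.1, 0.05, -0.05, 0.0]
matching = [e - o for e, o in zip(explosive, offsets)]
biased = [e - o - 1.0 for e, o in zip(explosive, offsets)]

t_match, ok_match = paired_difference_test(explosive, matching)
t_bias, ok_bias = paired_difference_test(explosive, biased)
```

Because the pairing removes bag-to-bag variation, a constant bias of the simulant shows up as a large t even when the raw readings are noisy.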

c. The Analysis of Variance Approach.

It should be noted that d(i,j,m) as defined above, can be considered in an Analysis of Variance for each explosive type j. The Analysis of Variance approach provides a statistical test of the composite null hypothesis:

$$H(0):\ E[D(i,j,m)] = C, \text{ for all } i \text{ and } m$$

against the alternative,

$$H(1):\ E[D(i,j,m)] \neq C, \text{ for some } i \text{ or } m.$$

The Analysis of Variance table would simply be

Analysis of Variance

| Source of Variation | Sum of Squares | Degrees of Freedom | Mean Squares |
| --- | --- | --- | --- |
| Among simulants | SS (Simulants) | m(j)-1 | SM |
| Within simulant (Experimental Error) | SSE | m(j)*(I-1) | SP |
| Total | SST | I*m(j)-1 | |

Here

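$$SM = \frac{I \sum_{m} \left[D(j,m) - D(j)\right]^{2}}{m(j)-1}$$

$$SP = \frac{\sum_{m} \sum_{i} \left[D(i,j,m) - D(j,m)\right]^{2}}{m(j)\,(I-1)}$$

and

$$D(j,m) = \frac{\sum_{i} D(i,j,m)}{I}, \qquad D(j) = \frac{\sum_{m} \sum_{i} D(i,j,m)}{m(j)\,I}$$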

We can then test the above hypothesis by selecting the level of significance α and, assuming homogeneous variance, establishing the critical region (reject H(0)) as

$$F > F_{1-\alpha}\bigl(m(j)-1,\; m(j)\,(I-1)\bigr)$$

where

F=SM/SP

This test can then be applied to each of the J explosives. Tests such as this are available in most statistical software packages.

If the null hypothesis is accepted, then one needs to test the hypothesis that the constant C=0. One approach to this test is the use of the t-test.

If, in using this test, the composite null hypothesis is not discarded, all simulants of the j-th type of explosive would be declared validated. However, if the null hypothesis is discarded, none of the simulants would be validated. There is an approach that also allows one to examine a wide variety of possible differences, including those suggested by the data themselves. This test is based on the range of the m(j) observed means (that is, the difference between the smallest and the largest observed values). The reader is referred to Introduction to Statistical Analysis, Dixon & Massey, Second Edition, pages 152 to 155.
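The F test of this subsection can be sketched as follows for a single explosive type j. The function name `anova_f`, the data, and the 5% significance level are assumptions made for illustration. The critical value 5.14 is the upper 5% point of F with (2, 6) degrees of freedom, which corresponds to m(j) = 3 simulants and I = 3 characteristics.

```python
from statistics import mean

# Upper 5% point of F with (2, 6) degrees of freedom, i.e. m(j) = 3
# simulants and I = 3 characteristics. The 5% level is an assumption.
F_CRIT_2_6 = 5.14


def anova_f(D):
    """Return (SM, SP, F) where D[m][i] is the mean difference D(i,j,m)."""
    m_j = len(D)       # number of simulants for explosive j
    n_char = len(D[0])  # number of characteristics I
    sim_means = [mean(row) for row in D]  # D(j,m)
    grand = mean(sim_means)               # D(j); valid for equal group sizes
    sm = n_char * sum((x - grand) ** 2 for x in sim_means) / (m_j - 1)
    sp = sum(
        (d - mu) ** 2 for row, mu in zip(D, sim_means) for d in row
    ) / (m_j * (n_char - 1))
    return sm, sp, sm / sp


# Hypothetical mean differences: three simulants, three characteristics.
D = [
    [0.1, 0.2, 0.3],
    [0.2, 0.3, 0.4],
    [0.3, 0.4, 0.5],
]
sm, sp, f = anova_f(D)
reject = f > F_CRIT_2_6
print(f"SM = {sm:.4f}, SP = {sp:.4f}, F = {f:.2f}, reject H(0) = {reject}")
```

Statistical packages report the same F ratio together with an exact p-value, which avoids the need for a table of critical values.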

d. Measurement Additivity.

As was mentioned above, only one bag is used to validate the simulants of a given explosive. This is acceptable if the measurement system is additive, that is, if the measurement of the characteristic of the explosive plus the measurement of the characteristic of the bag statistically equals the measurement of the explosive in the bag. If this is not the case, then the validation of the simulants will require testing over a representative set of bags for each explosive threat. Hence one must first test for additivity of the measurements. This is done by taking measurements of the explosive in the absence of any background that would interfere with the measurement of the characteristics of the explosive. These measurements are repeated 10 times (as in the above). One then compares the sum of the average reading of the explosive and the average reading of the bag (the bag associated with the testing of that explosive, but not containing the explosive) with the average reading of the bag with the explosive. The above Analysis of Variance approach is suggested for testing for additivity of the measurements.

4. A Recommended Approach for Validating Simulants

Repeated use of the Analysis of Variance approach appears to be appropriate. If in its initial use, none of the simulants are validated, individual t-tests should be run and the simulant which differed most significantly from its null hypothesis would be declared to be invalidated and dropped from the group of simulants being tested. The Analysis of Variance approach would then be repeated using the reduced group. This process would be repeated until a final reduced group of simulants would be declared to be validated. This is then repeated for all of the J explosives.
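The repeated procedure can be sketched as a simple loop. Everything named here is hypothetical: the function names, the simulant labels S1 to S4, the data, and the 5% significance level. The individual t-test is taken to be a one-sample test of each simulant's mean difference against zero over the I characteristics, which is one possible reading of the text. The sketch omits the follow-up test that the constant C equals zero.

```python
import math
from statistics import mean, variance

# Upper 5% points of F for the (df1, df2) pairs this example can reach.
F_CRIT_05 = {(3, 8): 4.07, (2, 6): 5.14, (1, 4): 7.71}


def f_ratio(groups):
    """One-way ANOVA F ratio for equal-sized groups of mean differences."""
    m, n = len(groups), len(groups[0])
    means = [mean(g) for g in groups]
    grand = mean(means)
    sm = n * sum((x - grand) ** 2 for x in means) / (m - 1)
    sp = sum(
        (d - mu) ** 2 for g, mu in zip(groups, means) for d in g
    ) / (m * (n - 1))
    return sm / sp


def t_against_zero(g):
    """|t| for H0: mean difference = 0 (assumes nonzero variance)."""
    return abs(mean(g)) / math.sqrt(variance(g) / len(g))


def validate(simulants, f_crit):
    """Drop the most deviant simulant until the ANOVA stops rejecting H(0)."""
    kept = dict(simulants)
    while len(kept) > 1:
        m, n = len(kept), len(next(iter(kept.values())))
        if f_ratio(list(kept.values())) <= f_crit[(m - 1, m * (n - 1))]:
            break  # composite null hypothesis not discarded
        worst = max(kept, key=lambda name: t_against_zero(kept[name]))
        del kept[worst]
    # A single survivor has not been through an ANOVA and would need its
    # own t-test before being declared validated.
    return sorted(kept)


# Hypothetical mean differences for four simulants of one explosive,
# measured on I = 3 characteristics. S4 is deliberately off.
simulants = {
    "S1": [0.1, -0.1, 0.0],
    "S2": [0.0, 0.1, -0.1],
    "S3": [-0.1, 0.0, 0.1],
    "S4": [2.0, 2.1, 1.9],
}
validated = validate(simulants, F_CRIT_05)
print(validated)
```

The loop would be run once for each of the J explosives, with a table of critical values (or a statistical package) covering every group size that can occur.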

ANNEX III

MODEL TESTING SCENARIO

The following testing scenario is a model that could be used for the actual testing. For explanatory purposes only, it will be assumed that 20 threats are available to conduct the test. If passenger bags cannot be used, then it is suggested that for each of the bag populations, six groups of twenty bags be selected as representative of that population. If it is not possible to "reproduce" the distribution because an adequate number of bags is not available, a statistical weighting process could be used to "match" the distribution.

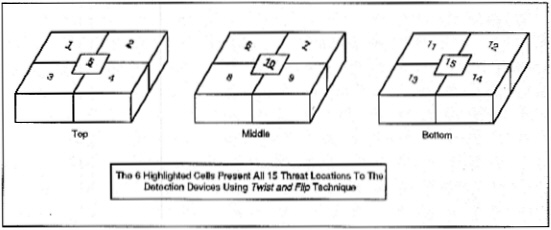

After having selected the six groups of twenty bags, randomly order the bags in each group, with the first group called A1, A2, . . ., A20, the second group called B1, B2, . . ., B20, etc. To accomplish this, one can use a random number generator or a table of random numbers. Next, the first 10 B bags are randomly intermingled with the first 10 A bags and the second 10 B bags with the second 10 A bags. Twenty threats are then randomly assigned to the 20 A bags. Each newly defined group of ten A and ten B bags is then processed through the equipment. Each time the group of twenty bags is processed, the threat location in the bag is changed by flipping and/or twisting the bag. Figure A2 shows the 15 possible locations in the bag and the 9 locations that should be used, since they represent all 15 locations.

Figure A2. Threat Placement Locations
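The randomization for one pair of bag groups can be sketched as below. The function name `plan_pair`, the threat labels T1 to T20, and the seed are hypothetical. The sketch assumes the labels A1 to A20 and B1 to B20 were assigned after the bags had already been put in random order, so the labels themselves are not reshuffled.

```python
import random


def plan_pair(prefix_a, prefix_b, threats, rng):
    """Return (two batches of 20 bags, threat assignment for the A bags)."""
    # Labels are assumed to reflect an earlier random ordering of the bags.
    a = [f"{prefix_a}{n}" for n in range(1, 21)]
    b = [f"{prefix_b}{n}" for n in range(1, 21)]
    batches = []
    for start in (0, 10):
        # Intermingle ten A bags with the corresponding ten B bags.
        batch = a[start:start + 10] + b[start:start + 10]
        rng.shuffle(batch)
        batches.append(batch)
    # Randomly assign the twenty threats to the twenty A bags.
    assigned = rng.sample(threats, k=len(threats))
    return batches, dict(zip(a, assigned))


rng = random.Random(1990)  # fixed seed so the plan can be reproduced
threats = [f"T{n}" for n in range(1, 21)]
batches, threat_for_bag = plan_pair("A", "B", threats, rng)

for number, batch in enumerate(batches, start=1):
    print(f"batch {number}: {' '.join(batch)}")
```

Recording the seed with the test plan lets the test team reproduce the same bag order and threat assignment if a run has to be repeated.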

The threats are then transferred to the B bags in the group and again processed through the equipment. In this manner, one is able to obtain measurements on A bags with threats and B bags without, and on B bags with threats and A bags without, obtaining 9 observations on each bag. Testing then proceeds with C and D bags (using the same process, replacing A with C and B with D), obtaining 9 observations on each combination. Finally, testing is done with E and F bags (using the same process, replacing C with E and D with F), obtaining 9 observations on each combination. The total number of observations on empty bags is 1080, and the number of observations on bags with threats is also 1080, with each threat being tested in 6 different bags and in 9 locations in each bag.
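The observation counts follow directly from the design of six groups of twenty bags with nine locations each:

$$6 \text{ groups} \times 20 \text{ bags} \times 9 \text{ locations} = 1080 \text{ observations on bags with threats}$$

$$6 \text{ groups} \times 20 \text{ bags} \times 9 \text{ passes without a threat} = 1080 \text{ observations on empty bags}$$

$$6 \text{ bags} \times 9 \text{ locations} = 54 \text{ observations per threat}, \qquad 20 \text{ threats} \times 54 = 1080$$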

Before running the six groups of twenty bags through the equipment, two control bags should be processed 10 times, one bag without a threat and the second bag containing one of the threats. This set of two bags should be processed in a similar manner at various times during the tests and also at the end of each day. Comparing these measurements assures the test team that the equipment readings are not varying over the duration of the tests.