5

Methods for Assessing Exposure to Lead

INTRODUCTION

The purpose of this chapter is to discuss analytic methods to assess exposure to lead in sensitive populations. The toxic effects of lead are primarily biochemical, but rapidly expanding chemical research databases indicate that lead has adverse effects on multiple organ systems, especially in infants and children. Early evidence of exposure, expressed by the age of 6–12 months, appears as prenatal or postnatal blood lead concentrations that are common in the general population and that until recently were not considered detrimental to human health (Bellinger et al., 1987, 1991a; Dietrich et al., 1987a; McMichael et al., 1988). As public-health concern about low-dose exposures grows (Bellinger et al., 1987, 1991a; Dietrich et al., 1987a; McMichael et al., 1988; Landrigan, 1989; Rosen et al., 1989; Mahaffey, 1992), the use of currently applicable methods of quantitative assessment and the development of newer methods will generate more precise dosimetric information on small exposures in sensitive populations.

Ultraclean techniques have repeatedly shown that previously reported concentrations of lead can be erroneously high by a factor of several hundred (Patterson and Settle, 1976). The flawed nature of some reported lead data was initially documented in oceanographic research: reported concentrations of lead in seawater have decreased by a factor of 1,000 because of improvements in the reduction and control of lead contamination during sampling, storage, and analysis (Bruland, 1983). Parallel decreases have recently been noted in reports on lead concentrations in fresh water (Sturgeon and Berman, 1987; Flegal and Coale, 1989).

Similar decreases in concentrations of lead in biologic materials have been reported by laboratories that have adopted trace-metal clean techniques. The decreases have been smaller, because lead concentrations in biologic matrices are substantially larger than concentrations in water, whereas the amounts of contaminant lead introduced during sampling, storage, and analysis are similar. Nevertheless, one study revealed that lead concentrations in some canned tuna were 10,000 times those in fresh fish, and that the difference had been overlooked for decades because all previous analyses of lead concentrations in fish were erroneously high (Settle and Patterson, 1980). Another study demonstrated that lead concentrations in human blood plasma were much lower than previously reported (Everson and Patterson, 1980). A third demonstrated, with trace-metal clean techniques, that natural lead concentrations in human calcareous tissues of ancient Peruvians were approximately one five-hundredth those in contemporary adults in North America (Ericson et al., 1991).

Problems of lead contamination are pronounced because of the ubiquity of lead, but they are not limited to that one element. Iyengar (1989) recently reported that it is not uncommon to come across order-of-magnitude errors in scientific data on concentrations of various elements in biologic specimens. The errors were attributed to failure to obtain valid samples for analysis and use of inappropriate analytic methods. The former includes presampling factors and contamination during sampling, sample preservation and preparation, and specimen storage. The latter includes errors in choice of methods and in the establishment of limits of detection and quantitation, calibration, and intercalibration (Taylor, 1987).

Decreases in blood lead concentrations reportedly are associated with the decrease in atmospheric emissions of gasoline lead aerosols. The correlation between the decreases in blood lead and gasoline lead emissions is consistent with other recent observations of decreases in environmental lead concentrations associated with decreases in atmospheric emissions of industrial lead (Trefry et al., 1985; Boyle et al., 1986; Shen and Boyle, 1988). However, the accuracy of the blood lead analyses has not been substantiated by rigorous, concurrent intercalibration with definitive methods that incorporate trace-metal clean techniques in ultraclean laboratories. Moreover, previous blood lead measurements cannot be corroborated now, because no aliquots of samples have been properly archived. Nonetheless, within the context of internally consistent and carefully operated chemical research laboratories, valuable blood analyses have been obtained.

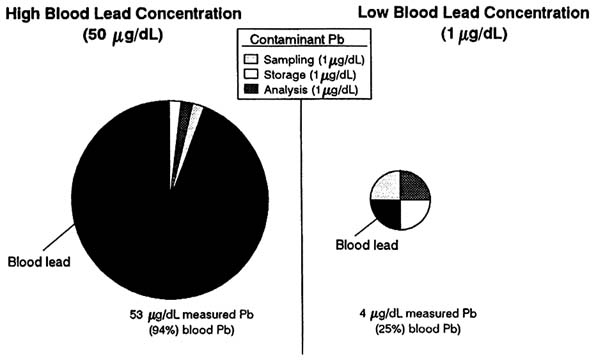

Future decreases in blood lead concentrations will be even more difficult to document, because the problems of lead contamination will be relatively greater. Figure 5-1 depicts the relative amounts of blood lead and contaminant lead measured in people with high (50 µg/dL) and low (1 µg/dL) blood lead concentrations when amounts of contaminant lead introduced during sampling, storage, and analysis were kept constant (1 µg/dL each). The blood lead concentration measured in the person with a high blood lead concentration (53 µg/dL) will be accurate to within 6%, because the total contaminant lead (3 µg/dL) is small relative to blood lead. The same amount of contaminant lead, however, will erroneously increase the measured blood lead concentration of the other person by a factor of 4 (from 1 to 4 µg/dL, i.e., to 400% of the true value). That would seriously bias studies of lead metabolism and toxicity in the latter person. It would also lead to the erroneous conclusion that there was only about a 13-fold difference (53/4), rather than a 50-fold difference, in the blood lead concentrations of the two people. Both problems will become more important as the average lead concentration in the population decreases and as more studies focus on the threshold of lead toxicity.
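The arithmetic behind Figure 5-1 can be checked with a few lines of Python. This is a minimal sketch, assuming (as in the figure) that each of the three stages adds a fixed 1 µg/dL of contaminant lead regardless of the true concentration; the function names are ours, not part of any published method.

```python
# Figure 5-1 scenario: a fixed amount of contaminant lead (µg/dL) is added at
# each of three stages (sampling, storage, analysis), independent of the true value.
STAGE_CONTAMINATION = (1.0, 1.0, 1.0)


def measured_blood_lead(true_ug_dl, contamination_ug_dl=STAGE_CONTAMINATION):
    """Measured concentration (µg/dL) = true blood lead + all contaminant additions."""
    return true_ug_dl + sum(contamination_ug_dl)


def relative_bias_percent(true_ug_dl, contamination_ug_dl=STAGE_CONTAMINATION):
    """Percentage by which the measured value exceeds the true value."""
    measured = measured_blood_lead(true_ug_dl, contamination_ug_dl)
    return 100.0 * (measured - true_ug_dl) / true_ug_dl


high, low = 50.0, 1.0
m_high = measured_blood_lead(high)
m_low = measured_blood_lead(low)
print(f"high: measured {m_high} µg/dL, bias {relative_bias_percent(high):.0f}%")
print(f"low:  measured {m_low} µg/dL, {m_low / low:.0f}x the true value")
print(f"apparent ratio {m_high / m_low:.2f} vs true ratio {high / low:.0f}")
```

Because the contaminant term is constant, the relative error scales inversely with the true concentration, which is why the problem grows as population blood lead concentrations fall.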

In general, techniques to measure internal doses of lead involve measurement of lead in biologic fluids. Tissue concentrations of lead also provide direct information on the degree of lead exposure after lead leaves the circulation by traversing the plasma compartment and gaining access to soft and hard tissues. Once lead leaves the circulation and enters critical organs, toxic biochemical effects become expressed. For the protection of public health from lead toxicity, it is of great importance to be able to discern the quantities of lead in target organs that are prerequisites for biochemically expressed toxic effects to become evident. Such determinations have been difficult, if not impossible, in humans, but measurement of lead in the skeleton and placenta might make them more approachable with respect to fetuses, infants, women of child-bearing age, and pregnant women. Furthermore, measurements of lead in the skeletons of workers in lead industries have substantial potential for revealing the body burden of lead required for evidence of biochemical toxicity to become manifest. Hence, measurements of lead in bone and placenta have the potential to couple quantitative analyses of lead at the tissue level to biochemical expressions of toxicity at the cellular level.

Noninvasive x-ray fluorescence (XRF) methods of measuring lead in bone, where most of the body burden of lead accumulates, have great promise for relating dosimetric assessments of lead to early biochemical expressions of toxicity in sensitive populations if their sensitivity can be improved by at least a factor of 10. The L-line XRF technique (LXRF) appears to be of potential value for epidemiologic and clinical research related to infants, children, and women of child-bearing age, including studies during pregnancy (Rosen et al., 1989, 1991; Wielopolski et al., 1989; Kalef-Ezra et al., 1990; Slatkin et al., 1992; Rosen and Markowitz, 1993). The K-line XRF method (KXRF) appears to be suited for studies in industrial workers and postmenopausal women, in addition to probing epidemiologic links between skeletal lead stores and both renal disease and hypertension (Somervaille et al., 1985, 1986, 1988; Armstrong et al., 1992).

Measurements of bone lead and blood lead in pregnant women throughout the course of pregnancy and assessments of amniotic-fluid lead concentrations and placental lead concentrations at term collectively hold promise for further characterizing the dynamics of maternal-fetal lead transfer.

Clinical research studies are examining epidemiologic issues related to the best measures of exposure and of the duration of exposure. Needleman et al. (1990) have reported that tooth lead concentrations constitute the best short- and long-term predictors of lead-induced perturbations in neurobehavioral outcomes. Longitudinal studies are examining whether cumulative measures (indexed by bone lead content based on LXRF), exposures during the preceding 30–45 days (indexed by blood lead concentrations), or exposures during critical periods are most important in the central nervous system (CNS) effects of lead and in the reversibility of toxic effects on CNS function in children treated promptly with a chelating agent.

Some health effects of lead most likely depend on recent exposure, but knowledge of whether exposure occurred in the preceding few days, few months, or few years is extremely relevant clinically and epidemiologically. Previous reliance on blood lead concentrations alone has limited the ability to take the timing of exposure into account in treatment and outcome protocols. The half-time of lead in blood is short, and blood lead reflects primarily recent exposure and absorption (Rabinowitz et al., 1976, 1977). Moreover, blood lead concentration does not reflect lead concentrations in target tissues that have different lead uptake and distribution, or changes in tissue lead that occur when lead exposure is modified. Even within bone, lead in trabecular bone has a shorter residence time than lead in cortical bone. The most appropriate measure will likely vary with the end point in question. It is apparent, however, that current methods can strengthen epidemiologic and treatment-efficacy studies by using multiple markers with different averaging times. The recent development of the ability to measure lead averaged over short periods (blood lead), intermediate periods (trabecular bone), and long exposure intervals (cortical bone) promises new techniques for measuring lead exposure in sensitive populations.
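The idea of markers with different averaging times can be made concrete with a simple first-order model. The sketch below is an illustration under stated assumptions, not a toxicokinetic model from this chapter: each marker is treated as an exponentially weighted average of past exposure, and the half-times are hypothetical placeholders chosen only to be short, intermediate, and long.

```python
import math

# Hypothetical half-times in days, chosen only to contrast short, intermediate,
# and long averaging times. They are illustrative assumptions, not values
# reported in this chapter.
HALF_TIMES_DAYS = {
    "blood": 35.0,
    "trabecular bone": 365.0,
    "cortical bone": 3650.0,
}


def recent_fraction(window_days, history_days, half_time_days):
    """Fraction of a marker's current value contributed by the most recent
    `window_days` of a constant exposure that began `history_days` ago.

    Assumes first-order (exponential) clearance: exposure that occurred t days
    ago carries weight exp(-k t), with k = ln 2 / half-time. Integrating that
    weight over [0, window] and over [0, history] gives this closed form.
    """
    k = math.log(2.0) / half_time_days
    recent = 1.0 - math.exp(-k * window_days)
    total = 1.0 - math.exp(-k * history_days)
    return recent / total


# Five years of constant exposure: how much of each marker reflects the last 90 days?
for marker, half_time in HALF_TIMES_DAYS.items():
    share = recent_fraction(90.0, 5 * 365.0, half_time)
    print(f"{marker:16s} {share:.0%} of signal from the last 90 days")
```

With these assumed values, most of the blood signal reflects the last 90 days, whereas the cortical-bone signal is dominated by older exposure; real half-times would have to be taken from the kinetic literature.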

SAMPLING AND SAMPLE HANDLING

It is widely accepted that the quality of sample collection and sample handling is a crucial part of monitoring lead in biologic material. Lead is pervasive and can contaminate samples randomly or systematically. In addition, the lead content of samples can be reduced by inappropriate collection, storage, or pretreatment. Protocols for acceptable sampling and sample handling vary with the material being sampled and the analytic technique being used, but most precautions apply across the board.

In all cases, sample containers, including their caps, must be either scrupulously acid-washed or certified as lead-free. That is particularly important for capillary- and venous-blood sampling as now incorporated into the guidelines of the 1991 CDC statement (CDC, 1991). For example, as little as 0.01 µg (10 ng) of contaminant lead entering 100 µL of blood in a capillary tube adds the equivalent of a concentration of 10 µg/dL, the CDC action level. Reagents added to a biologic sample before, during, or after collection must, in particular, be lead-free. Lead concentrations in plasma or serum are generally so low to begin with, and environmental lead concentrations so high by comparison, that it is extremely difficult to collect and handle such samples without contamination. Urine sampling, especially the 8-hour or 24-hour sampling associated with chelator administration, requires collection in acid-washed containers. Although the amounts of lead removed to urine with chelation are relatively high, the large sample volumes and correspondingly large surface areas of collection bottles increase the potential for contamination.
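The capillary-tube example above is a unit conversion, sketched here in Python so that other sample volumes can be tried. The function name is ours; the only inputs are the contaminant mass and the sample volume.

```python
def contamination_equivalent_ug_per_dl(contaminant_ng, sample_volume_ul):
    """Apparent concentration (µg/dL) added by `contaminant_ng` nanograms of
    lead entering a sample of `sample_volume_ul` microliters."""
    contaminant_ug = contaminant_ng / 1_000.0        # 1 µg = 1,000 ng
    sample_volume_dl = sample_volume_ul / 100_000.0  # 1 dL = 100,000 µL
    return contaminant_ug / sample_volume_dl


# 10 ng of lead in a 100-µL capillary sample, as in the text.
print(contamination_equivalent_ug_per_dl(10.0, 100.0))
# The same 10 ng spread through a 1-mL venous sample.
print(contamination_equivalent_ug_per_dl(10.0, 1_000.0))
```

Because the apparent concentration is inversely proportional to sample volume, a fixed contaminant mass matters most for the smallest samples, such as capillary blood.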

A particularly important step in sample collection is the rigorous cleaning of the puncture site for capillary- or venous-blood collection. A cleaning sequence of particular usefulness for finger puncture, the first step in blood lead screening, is that recommended by the Work Group on Laboratory Methods for Biological Samples of the Association of State and Territorial Public Health Laboratory Directors (ASTPHLD, 1991): fingers are first cleaned with an alcohol swab, then scrubbed with soap and water and swabbed with dilute nitric acid, and a silicone or similar barrier is applied.

Sample storage is also very important. Whole blood can be stored frozen for long periods: at −20°C, blood samples can be kept for up to a year and perhaps longer.

Sample handling within the laboratory entails as much risk of contamination as sample collection in the field. Laboratories should be as nearly lead-free as possible. Although it is probably impractical for most routine laboratories to meet ultraclean-facility requirements (see, e.g., Patterson and Settle, 1976; EPA, 1986a), certain minimal steps are required, including dust control, high-efficiency particulate air (HEPA) filtration of incoming air, and use of ultrapure reagents.

Collection and analysis of shed children's teeth entail unavoidable surface contamination, but this complication can be reduced by confining analysis to the interior matrix of a tooth, preferably the secondary (circumpulpal) dentin. The contaminated surface material is discarded. Isolation of the secondary dentin requires cutting tools with lead-free surfaces, lead-free work surfaces, and so forth.

MEASUREMENT OF LEAD IN SPECIFIC TISSUES

Whole Blood

The most commonly used technique to measure blood lead concentrations involves chemical degradation of venous blood (for example, wet ashing with nitric acid), electrothermal excitation (in a graphite furnace), and then measurement with atomic-absorption spectroscopy, or AAS (EPA, 1986a). In AAS, lead in the sample is first vaporized and converted to the atomic state; the free lead atoms then absorb resonance radiation emitted by a hollow-cathode lamp. After the lead line is isolated with a monochromator and the signal is amplified by a photomultiplier, lead concentration is measured electronically (Slavin, 1988). Because it is much more sensitive than flame methods, the electrothermal or graphite-furnace technique permits use of small sample volumes, 5–20 µL. Physicochemical and spectral interferences are severe with flameless methods, so careful background correction is required (Stoeppler et al., 1978). Diffusion of sample into the graphite of the furnace can be avoided by using pyrolytically coated graphite tubes and a diluted sample applied in larger volumes.
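The quantitation step can be sketched as a background-corrected linear calibration. This is a minimal illustration, not an instrument's internal algorithm: it assumes the spectrometer reports a separate background absorbance for each reading (as deuterium-arc or Zeeman background correction does), that the response is linear over the range, and that the standards, which are hypothetical here along with all absorbance values, are matrix-matched to the samples.

```python
def fit_line(x, y):
    """Ordinary least-squares slope and intercept for y = slope * x + intercept."""
    n = len(x)
    mean_x = sum(x) / n
    mean_y = sum(y) / n
    sxx = sum((xi - mean_x) ** 2 for xi in x)
    sxy = sum((xi - mean_x) * (yi - mean_y) for xi, yi in zip(x, y))
    slope = sxy / sxx
    return slope, mean_y - slope * mean_x


def net_absorbance(total, background):
    """Background-corrected (net) absorbance: total signal minus background signal."""
    return total - background


# Hypothetical calibration standards (µg/dL) with total and background absorbances.
std_conc = [0.0, 10.0, 20.0, 40.0]
std_total = [0.030, 0.071, 0.109, 0.190]
std_background = [0.020, 0.021, 0.019, 0.020]

net = [net_absorbance(t, b) for t, b in zip(std_total, std_background)]
slope, intercept = fit_line(std_conc, net)

# Hypothetical unknown: correct for background, then invert the calibration line.
unknown_net = net_absorbance(0.121, 0.021)
unknown_conc = (unknown_net - intercept) / slope
print(f"slope={slope:.4f} per µg/dL, intercept={intercept:.4f}, unknown={unknown_conc:.1f} µg/dL")
```

Without the background subtraction, the roughly constant 0.02 background would be read as lead, which is the kind of error careful background correction is meant to prevent.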

Electrochemical techniques are also widely used for measurement of lead. Differential pulse polarography (DPP) and anodic stripping voltammetry (ASV) offer measurement sensitivity sufficient for lead analyses at blood concentrations characteristic of the general population. Because the sensitivity of DPP is borderline for this application, ASV has become the method of choice. ASV involves exhaustive electrolysis (bulk consumption) of the sample and thus has excellent sensitivity, given a large sample volume (Jagner, 1982; Osteryoung, 1988). This property is, however, of little practical significance, because sample size and reagent blanks are finite.

ASV has the advantage of being a two-step process. In the first step, lead is deposited on a mercury thin-film electrode simply by holding the electrode at a potential sufficient to reduce lead. The lead is thus concentrated into the mercury film for a specified period, which can be extended when higher sensitivity is needed; few techniques offer such preconcentration as an integral part of the process. After electrodeposition, the lead is reoxidized (stripped) from the mercury film by sweeping the potential in the anodic direction. Typically, a pulsed or stepped waveform is used, so differential measurements of the peak current for lead are possible (Osteryoung, 1988; Slavin, 1988).

The detection limit for lead in blood with ASV is approximately 1 picogram (pg) and is comparable with that attainable with graphite-furnace AAS methods. The relative precision of both methods over a wide concentration range is ±5% (95% confidence limits) (Osteryoung, 1988; Slavin, 1988). As noted, AAS requires attention to spectral interferences to achieve such performance. For ASV, the use of human blood for standards, the presence of coreducible metals and their effects on the measurement, the presence of reagents that complex lead and thereby alter its reduction potential, quality control of electrodes, and reagent purity must all be considered (Roda et al., 1988). It must be noted, however, that the electrodeposition step of ASV is widely used and effective for reagent purification. The practice of adding an excess of other high-purity metals to samples, thereby displacing lead from complexing agents and ameliorating their concomitant interference effects, has demonstrated merit. Copper concentrations, which might be increased during pregnancy or in other physiologic states, and chelating agents can cause positive interferences in lead measurements (Roda et al., 1988).
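One common way to deal with the matrix effects listed above is the method of standard additions, in which known amounts of lead are added to aliquots of the sample itself so that standards and sample share the same matrix. The chapter does not prescribe this procedure; the sketch below is our illustration, assuming a linear stripping-peak response and additions small enough that dilution can be ignored. All numbers are hypothetical.

```python
def fit_line(x, y):
    """Ordinary least-squares slope and intercept for y = slope * x + intercept."""
    n = len(x)
    mean_x = sum(x) / n
    mean_y = sum(y) / n
    sxx = sum((xi - mean_x) ** 2 for xi in x)
    sxy = sum((xi - mean_x) * (yi - mean_y) for xi, yi in zip(x, y))
    slope = sxy / sxx
    return slope, mean_y - slope * mean_x


# Hypothetical ASV peak currents (nA) for one blood digest, with lead added
# at 0, 5, 10, and 15 µg/dL equivalents.
added = [0.0, 5.0, 10.0, 15.0]
peak_nA = [1.2, 1.7, 2.2, 2.7]

slope, intercept = fit_line(added, peak_nA)
# The fitted line reaches zero current at added = -C0, so the lead originally
# present in the sample is C0 = intercept / slope.
original_conc = intercept / slope
print(f"estimated lead in sample: {original_conc:.1f} µg/dL")
```

Because the slope is measured in the sample's own matrix, a complexing agent or coreducible metal that suppresses or enhances the signal affects the standards and the sample alike.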

The high sensitivity of ASV for lead has led to its use in blood lead analyses, and the relative simplicity and low cost of the equipment have made ASV one of the more effective approaches to lead analysis.

As described in Chapter 4, the measurement of erythrocyte protoporphyrin (EP) in whole blood is not a sensitive screening method for identifying lead-poisoned people at blood lead concentrations below 50 µg/dL, according to analyses of results of the NHANES II general population survey (Mahaffey and Annest, 1986). Data from Chicago's screening program for high-risk children, recently analyzed by CDC and the Chicago Department of Health, further indicate the current limitations of EP for screening. The data, presented in Table 5-1, provide specificity and sensitivity values of EP screening at different blood lead concentrations. The sensitivity of a test is defined as its ability to detect a condition when it is present. The EP test has a sensitivity of 0.351, or about 35%, in detecting blood lead concentrations of 15 µg/dL or greater. This means that on average the EP test result will be high in about 35% of children with blood lead concentrations of 15 µg/dL or greater; it will fail to detect about 65% of those children. As the blood lead concentration of concern increases, the EP test becomes more sensitive. At blood lead concentrations of 30 µg/dL or greater, the sensitivity of the EP test is approximately 0.87. Conversely, if it is used to detect blood lead concentrations of 10 µg/dL or greater, the EP test has a sensitivity of only about 0.25.

TABLE 5-1 Chicago Lead-Screening Data, 1988-1989ᵃ

| Definition of Increased Blood Lead, µg/dL | Sensitivity (Confidence Interval)ᵇ | Specificity | Predictive Value Positive | Prevalence of Increased Blood Lead as Defined at Left |
|---|---|---|---|---|
| ≥10 | 0.252 (0.211-0.294) | 0.822 | 0.734 | 0.660 |
| ≥15 | 0.351 (0.286-0.417) | 0.833 | 0.503 | 0.325 |
| ≥20 | 0.479 (0.379-0.579) | 0.818 | 0.322 | 0.152 |
| ≥25 | 0.700 (0.573-0.827) | 0.814 | 0.245 | 0.079 |
| ≥30 | 0.871 (0.753-0.989) | 0.806 | 0.189 | 0.043 |
| ≥35 | 1.00 (0.805-) | 0.794 | 0.119 | 0.030 |
| ≥40 | 1.00 (0.735-) | 0.788 | 0.084 | 0.019 |
| ≥45 | 1.00 (0.590-) | 0.782 | 0.049 | 0.011 |
| ≥50 | 1.00 (0.158-) | 0.775 | 0.014 | 0.003 |

ᵃ Data indicate sensitivity, specificity, and predictive value positive of zinc protoporphyrin (ZPP) measurement for detecting increased blood lead concentrations. Increased ZPP is defined as ≥35 µg/dL. The definition of increased blood lead concentration varies by row. Data derived from a systematic sample (2% of total) of test results for children 6 mo to 6 yr old tested in Chicago screening clinics from July 22, 1988, to September 1, 1989; these clinics routinely measure ZPP and blood lead in all children. n = 642. Data from M.D. McElvaine, Centers for Disease Control, and H.G. Orbach, City of Chicago Department of Health, unpublished; and McElvaine et al., 1991.

ᵇ Confidence intervals calculated by the normal approximation to the binomial at the 95% level (two-tailed). For estimates of sensitivity of 1.00, only the lower confidence limit is given, calculated with the exact binomial method.

The specificity of a test is defined as its ability to detect the absence of a condition when that condition is absent. As seen in Table 5-1, roughly 83% of children with blood lead below 15 µg/dL will have a low EP result, and about 17% will have a high EP result; that is, the test has a specificity of 0.83. Above that cutoff, the specificity of the test decreases as the cutoff increases. Because EP also increases in iron deficiency, a condition not uncommon among young children and occasional among pregnant women, the specificity of the EP test is reduced.
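The columns of Table 5-1 are linked by Bayes' theorem, and the confidence limits follow the methods named in footnote b of the table. The Python sketch below reproduces several tabulated values. The function names are ours, and the group sizes (n) are not given in the table: they are our back-calculation from the prevalence column and the total of 642 children, so they are approximations.

```python
import math


def predictive_value_positive(sensitivity, specificity, prevalence):
    """Probability that a child with a positive (high) EP result truly has
    increased blood lead, from Bayes' theorem."""
    true_positive = sensitivity * prevalence
    false_positive = (1.0 - specificity) * (1.0 - prevalence)
    return true_positive / (true_positive + false_positive)


def wald_interval(p_hat, n, z=1.96):
    """Two-sided normal-approximation (Wald) interval for a binomial proportion."""
    half_width = z * math.sqrt(p_hat * (1.0 - p_hat) / n)
    return max(0.0, p_hat - half_width), min(1.0, p_hat + half_width)


def exact_lower_limit_all_positive(n, alpha=0.05):
    """Clopper-Pearson lower limit when all n cases test positive.

    With x = n the limit p_L solves p_L**n = alpha / 2, hence (alpha / 2)**(1 / n).
    """
    return (alpha / 2.0) ** (1.0 / n)


N_TOTAL = 642

# Row ">=15 µg/dL": sensitivity 0.351, specificity 0.833, prevalence 0.325.
print(f"PPV: {predictive_value_positive(0.351, 0.833, 0.325):.3f}")
n_15 = round(0.325 * N_TOTAL)  # about 209 children with blood lead >= 15 µg/dL
low, high = wald_interval(0.351, n_15)
print(f"sensitivity CI: {low:.3f}-{high:.3f}")

# Rows in which every affected child had a high EP result (sensitivity 1.00).
for prevalence in (0.019, 0.011, 0.003):
    n = round(prevalence * N_TOTAL)
    print(f"prevalence {prevalence}: n={n}, lower limit {exact_lower_limit_all_positive(n):.3f}")
```

With these inputs the sketch gives a predictive value positive of about 0.503 and lower limits of 0.735, 0.590, and 0.158 for the ≥40, ≥45, and ≥50 rows, matching the table; the Wald interval (about 0.286-0.416) differs from the tabulated 0.286-0.417 only in the last digit, as expected when n is reconstructed from a rounded prevalence. The first function also shows why predictive value positive collapses as the cutoff rises: with specificity near 0.8, false positives from the large unaffected group swamp the few true positives.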

Although the sensitivity and specificity values appear higher than those obtained in the NHANES II population survey, in large part because of concurrent iron deficiency, the data confirm that unacceptably high numbers of children with increased blood lead concentrations will be missed by EP screening, particularly at blood lead concentrations below 25 µg/dL. Unfortunately, there is no feasible substitute for this heretofore convenient, practical, and effective tool as a primary screen. Measurements of alternative heme metabolites are available, but they require more extensive laboratory analyses and are largely surrogates of measurements of blood lead concentration.

Marked advances in instrumentation for blood lead analysis during the last 10 years have yielded excellent precision and accuracy. The remaining limiting factors are now technical expertise and cleanliness.

Plasma

Because of the high concentration of erythrocyte-bound lead, precautions must be taken to obtain nonhemolyzed blood when blood samples are collected for measuring the low concentrations of lead in plasma. Furthermore, an ultraclean laboratory setting and ultraclean, doubly distilled, column-prepared reagents are absolutely necessary (Patterson and Settle, 1976). Everson and Patterson (1980), using isotope-dilution mass spectrometry (IDMS) and strictly controlled collection and preparation techniques in an ultraclean laboratory, measured plasma lead concentration in a control subject and a lead-exposed worker. The control concentration of lead in plasma was 0.002 µg/dL, and that in the exposed worker was 0.20 µg/dL. Not surprisingly, these values were much lower than those obtained with graphite-furnace AAS; it can be concluded that graphite-furnace AAS methods do not yield sufficiently accurate quantitative results for these measurements. Moreover, higher plasma lead concentrations have been reported even with IDMS (Rabinowitz et al., 1976); these results can be ascribed to laboratory contamination. Collectively, therefore, it appears unlikely that measurement of lead in plasma can be applied widely to delineating lead exposure in sensitive populations.

Urine

The spontaneous excretion of lead in children and adults is not a reliable marker of lead exposure, because it is affected by kidney function, circadian variation, and high interindividual variation at low doses (Mushak, 1992). At relatively high doses, there is a curvilinear upward relationship between urinary lead and intake measures. Urinary lead is measured mainly in connection with the lead excretion that follows provocative chelation, i.e., the lead-mobilization test or chelation therapy for lead poisoning in children or workers. Such measurements are described later in this chapter.

Teeth

As noted elsewhere, shed teeth of children reflect cumulative lead exposure from around birth to the time of shedding. Various types of tooth analysis can be done, including analysis of whole teeth, crowns, and specific isolated regions.

Sampling and tooth-type selection criteria are particularly important. Teeth with substantial caries (i.e., over about 20–30% of surface area) should be discarded. Teeth of the same type should be selected, preferably from the same jaw sites of subjects in an epidemiologic study, to control for intertype variation (e.g., Needleman et al., 1979; Grandjean et al., 1984; Fergusson and Purchase, 1987; Delves et al., 1982). Replicate analyses are required, and concordance criteria are useful as a quality-assurance and quality-control measure for discarding discordant values.
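Concordance screening of replicate tooth analyses can be sketched as follows. The chapter does not state a numeric criterion, so the 10% relative-difference limit used here is a hypothetical placeholder, as are the sample identifiers and values.

```python
def concordant(rep1, rep2, max_rel_diff=0.10):
    """True if two replicate results agree within `max_rel_diff` of their mean.
    The 10% default is a hypothetical criterion, not one given in the chapter."""
    mean = (rep1 + rep2) / 2.0
    if mean == 0:
        return rep1 == rep2
    return abs(rep1 - rep2) / mean <= max_rel_diff


def screen_replicates(pairs, max_rel_diff=0.10):
    """Split {sample_id: (rep1, rep2)} into kept means and discarded sample ids."""
    kept, discarded = {}, []
    for sample_id, (a, b) in pairs.items():
        if concordant(a, b, max_rel_diff):
            kept[sample_id] = (a + b) / 2.0
        else:
            discarded.append(sample_id)
    return kept, discarded


# Hypothetical duplicate dentin lead results (µg/g).
kept, discarded = screen_replicates({"T1": (10.0, 10.5), "T2": (8.0, 12.0)})
print("kept:", kept, "discarded:", discarded)
```

Recording the discarded identifiers, rather than silently dropping them, keeps the quality-control decision auditable.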

Preference in tooth analysis appears to lie with circumpulpal dentin, where lead concentrations are high. The higher concentrations in circumpulpal dentin are of added utility where effects are subtle. The use of whole teeth, crowns, or primary dentin is discouraged on two counts: random contamination is a problem on the surface of the outer (primary) enamel, and areas other than circumpulpal dentin are much lower in lead. Both problems would tend to bias dose-effect relationships toward a null result, i.e., toward Type II errors (Mushak, 1992).

In the laboratory, special care must be taken to avoid surface contamination once sagittal sections of circumpulpal dentin have been isolated; a rinse with EDTA solution is helpful. For analysis, tooth segments are either dry-ashed or wet-ashed with nitric acid, perchloric acid, or a mixture of the two, using acids that are ultrapure with respect to lead (e.g., Needleman et al., 1979). For calibration, bone powders of certified lead content can be used (Keating et al., 1987).

Both AAS (Skerfving, 1988) and ASV (Fergusson and Purchase, 1987) methods have been used for tooth analysis. With AAS, both flame and flameless variations are often used. Lead concentrations are often high enough to permit dilution or chelation-extraction, thereby also minimizing calcium-phosphorus effects. Either method appears to be satisfactory (assuming that ASV entails complete acid dissolution of the tooth sample).

Although the use of tooth or dentin lead to assess the cumulative body burden of lead was an important discovery, it has practical limitations. It is necessary to wait for teeth to be shed and to rely on children to save them. Moreover, teeth are shed when children are 5-8 years old, so shed teeth cannot be used to estimate the body burden of lead in younger children. Furthermore, the newer capability to assess skeletal lead longitudinally with XRF improves the utility of tooth lead measurements.

Milk

To monitor daily lead intake from milk for metabolic balance studies or to estimate intake in areas where there is excessive external exposure to lead from other sources, milk samples should be collected in acid-washed polyethylene containers and frozen until analysis. After reaching room temperature, milk samples are sonicated and acid digested in a microwave oven, the residue is dissolved in perchloric acid, and the samples are subjected to AAS or ASV (Rabinowitz et al., 1985b).

Placenta

Placental lead measurements have been carried out after blotting of the tissue and digestion in acid at 110°C overnight. The dry residue is then dissolved in nitric acid, and lead is measured, preferably with graphite-furnace AAS (Korpela et al., 1986). For placental and amniotic-fluid measurements, standard reference materials are needed to ensure quality control in the laboratory. Furthermore, accurate assessment of placental lead concentrations must allow for possible region-specific differences in lead concentration within the placenta, and care must be taken to remove trapped blood from the placenta before analysis.

MASS SPECTROMETRY

The standard method by which all lead measurement techniques are evaluated is isotope-dilution thermal-ionization mass spectrometry (TIMS). This definitive method provides excellent sensitivity and low detection limits for lead. Recent analyses with this technique, when coupled with ultraclean procedures, have repeatedly demonstrated that many previously reported lead concentrations in biologic materials are erroneously high by orders of magnitude.

Mass spectrometers can also be used to measure stable lead isotopic compositions; to identify different sources of contaminant lead, from cellular to global; and to investigate lead metabolism without exposing people to radioactivity or artificially increased lead concentrations.
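Source identification with stable isotopes often reduces to a mixing calculation. The sketch below assumes just two sources and uses a ratio with a common denominator isotope (²⁰⁶Pb/²⁰⁷Pb), for which the mixture ratio is a linear blend weighted by each source's share of ²⁰⁷Pb; that share approximates the share of total lead only when the sources contain similar proportions of ²⁰⁷Pb. The function name and the ratios are hypothetical, not measured values.

```python
def source_a_fraction(r_mix, r_a, r_b):
    """Fraction of the denominator isotope (here 207Pb) contributed by source A
    in a two-source mixture, from 206Pb/207Pb ratios.

    Because both ratios share the same denominator, the mixture ratio is the
    207Pb-weighted average r_mix = f * r_a + (1 - f) * r_b, so f is linear in r_mix.
    """
    if r_a == r_b:
        raise ValueError("sources with identical ratios cannot be distinguished")
    f = (r_mix - r_b) / (r_a - r_b)
    if not 0.0 <= f <= 1.0:
        raise ValueError("mixture ratio lies outside the range spanned by the two sources")
    return f


# Hypothetical 206Pb/207Pb ratios for two candidate sources and a blood sample.
print(source_a_fraction(r_mix=1.16, r_a=1.10, r_b=1.20))
```

A mixture ratio outside the range spanned by the two candidates is reported as an error rather than clipped, since it signals that at least one other source is contributing.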

Mass spectrometers have a special niche in lead analyses, even though the measurements are relatively expensive, sophisticated, and time-consuming. Applications of mass spectrometers in analyses of lead in the biosphere are on the verge of being substantially broadened as a result of recent developments. Conventional TIMS is becoming much more sensitive and efficient. At the same time, inductively coupled plasma mass spectrometry (ICPMS) is becoming a relatively inexpensive and efficient alternative to TIMS and other established methods for elemental-lead analysis, and advances in secondary-ion mass spectrometry (SIMS), glow-discharge mass spectrometry (GDMS), and laser-microprobe mass analysis (LAMMA) have improved their capabilities for surface analysis and microanalysis of lead concentrations.

The primary differences among those techniques are the form of sample analyzed and the mechanism of introducing sample ions into a flight tube, where different isotopes are separated by magnetic and electric fields. In TIMS, a sample extract is deposited on a filament, and thermal ions are released by increasing the filament temperature in a vacuum. In ICPMS, sample solutions are ionized at atmospheric pressure in a highly ionized gas (plasma). In GDMS, atoms of a solid source in an electric field are sputtered by an ionized gas and then thermalized by atom collisions. Gas ions are also used as primary ions in SIMS, where they are focused on a solid surface to produce secondary ions by bombardment. Similarly, in LAMMA, lasers are focused on a solid surface to vaporize, excite, and ionize atoms in microscopic areas.

This section addresses both existing and projected applications of mass spectrometry in analysis of lead concentrations and isotopic compositions in biologic and environmental matrices. Definitive measurement of lead concentration with isotope-dilution TIMS is reviewed first. The use of stable lead isotopes to identify sources of contaminant lead and as metabolic tracers is then summarized. Finally, lead-related uses of four rapidly evolving types of mass spectrometry (ICPMS, SIMS, GDMS, and LAMMA) are briefly described.

Isotope-Dilution Mass Spectrometry

Lead concentrations in biologic tissues can only be approximated with analytic techniques, because no known analytic method can measure a true elemental concentration in any matrix. The definitive methods, including isotope-dilution mass spectrometry (IDMS), use TIMS. Relatively accurate measurements are derived with reference methods, which are calibrated with standard reference materials. Less-accurate measurements are obtained with routine methods, which yield assigned values. The hierarchy in accuracy of these analytic techniques is shown in Table 5-2.

TABLE 5-2 Hierarchy of Analytic Methods with Respect to Accuracy

| Analytic Data | Analytic Method |
|---|---|
| True value | No known method |
| Definitive value | Definitive method, e.g., IDMS |
| Reference-method value | Reference method |
| Assigned value | Routine method |

Source: Heumann, 1988. Reprinted with permission from Inorganic Mass Spectrometry; copyright 1988, John Wiley & Sons.

The most accurate method of analyzing lead concentrations in biologic matrices is IDMS, which is independent of chemical yield and is extremely sensitive and precise (Webster, 1960). Mass spectrometric analyses distinguish lead from false signals by measuring the relative abundances of the four stable lead isotopes (204Pb, 206Pb, 207Pb, and 208Pb); false signals in lead-isotope measurements are identified by simultaneous measurements of fragment ions at the adjacent masses (203 and 205 amu). IDMS is superior to any reference method that requires separate measurements of lead signal intensities for sample and reference materials. The advantages and primary analytic disadvantages of IDMS are summarized in Table 5-3. In IDMS, a known amount of a spike enriched in one isotope (206Pb) is added to the sample, and the 206Pb:208Pb ratio of the spiked sample is then measured in a mass spectrometer. Because the isotopic compositions of the spike and of the unspiked sample are known, that ratio determines the amount, and hence the concentration, of lead in the original sample.
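
The isotope-dilution calculation described above can be written as a short sketch. It assumes a single spike-sample mixture, a measured ratio already corrected for mass bias and blank, and known atom fractions of 206Pb and 208Pb in both spike and sample; the spike composition, spike amount, and measured ratio are hypothetical.

```python
PB_ATOMIC_WEIGHT = 207.2  # g/mol; the true value varies slightly with isotopic composition


def idms_moles(spike_moles: float, spike_206: float, spike_208: float,
               sample_206: float, sample_208: float, ratio_206_208: float) -> float:
    """Moles of lead in the sample from one isotope-dilution measurement.

    spike_* and sample_* are atom fractions of 206Pb and 208Pb in the spike
    and in the unspiked sample; ratio_206_208 is the measured 206Pb:208Pb
    ratio of the spiked sample. Solving
        R = (Nx*ax + Ns*as) / (Nx*bx + Ns*bs)
    for Nx gives Nx = Ns * (as - R*bs) / (R*bx - ax).
    """
    numerator = spike_206 - ratio_206_208 * spike_208
    denominator = ratio_206_208 * sample_208 - sample_206
    return spike_moles * numerator / denominator


# Hypothetical example: 1.00 mL of blood spiked with 2.00 nmol of a
# 206Pb-enriched spike (99.5% 206Pb, 0.2% 208Pb); the sample fractions
# are typical of common lead.
sample_moles = idms_moles(spike_moles=2.0e-9, spike_206=0.995, spike_208=0.002,
                          sample_206=0.241, sample_208=0.524, ratio_206_208=4.2254)
blood_lead_ug_per_dl = sample_moles * PB_ATOMIC_WEIGHT * 1e6 / 0.01  # 1 mL = 0.01 dL
print(f"{blood_lead_ug_per_dl:.1f} ug/dL")  # prints 20.7 ug/dL
```

The denominator R·b_x − a_x shows why the spike should shift the ratio well away from the sample's own value (about 0.46 here): as R approaches that value, the denominator approaches zero and small ratio errors are greatly magnified.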

IDMS analyses, like all other elemental analyses, require a correction for the contaminant-lead blank, which includes all contaminant lead added during sampling, storage, and analysis (Patterson and Settle, 1976). For highly accurate measurements, the contribution of each of those contaminant-lead additions to the total lead signal of each analysis must be determined separately. IDMS is especially well suited to blank measurements, because its high sensitivity and precision are needed to measure accurately the small amounts of lead in "trace-metal-clean" reagents and containers. That is illustrated in Table 5-4, a tabulation of lead blank measurements for blood lead analyses in a trace-metal-clean laboratory.
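
The separate accounting of each contamination source can be sketched as a simple blank budget. This sketch assumes that the blank components are independent, so their uncertainties combine in quadrature; the component names and all amounts are hypothetical.

```python
import math


def blank_corrected_lead(total_ng: float, total_sd_ng: float,
                         blanks_ng: dict[str, tuple[float, float]]) -> tuple[float, float]:
    """Subtract separately determined blank contributions from a lead measurement.

    blanks_ng maps each contamination source to (mean_ng, sd_ng). The
    uncertainties are treated as independent and combined in quadrature.
    Returns (corrected_ng, combined_sd_ng).
    """
    blank_total = sum(mean for mean, _ in blanks_ng.values())
    variance = total_sd_ng ** 2 + sum(sd ** 2 for _, sd in blanks_ng.values())
    return total_ng - blank_total, math.sqrt(variance)


# Hypothetical blank budget for one blood analysis (ng of lead).
blanks = {
    "sampling container": (0.02, 0.01),
    "reagents": (0.05, 0.01),
    "laboratory handling": (0.03, 0.02),
}
corrected, sd = blank_corrected_lead(20.0, 0.1, blanks)
print(f"{corrected:.2f} ng +/- {sd:.2f} ng")
```

Because the blank mean is subtracted while its uncertainty is added, a blank that is small in absolute terms can still dominate the error budget when the sample itself contains very little lead.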

TABLE 5-3 Advantages and Disadvantages of Lead Concentration Analyses with IDMS

| Advantages | Disadvantages |
|---|---|
| Offers precise and accurate analysis | Is destructive |
| Permits nonquantitative isolation of the substance to be analyzed | Requires chemical preparation of sample |
| Offers ideal internal standardization | Is time-consuming |
| Makes multielement, as well as oligoelement and monoelement, analyses possible | Is relatively expensive |
| Offers high sensitivity with low detection limits | |

Source: Heumann, 1988. Reprinted with permission from Inorganic Mass Spectrometry; copyright 1988, John Wiley & Sons.

Lead concentration measurements with IDMS must also correct for isotopic variations of lead, including natural variations among samples and isotopic fractionation during the analyses. The latter correction, common to all elemental analyses with IDMS, is addressed with standard IDMS techniques (Heumann, 1988). The former, which is relatively unusual among the heavier elements, requires separate isotopic analyses of unspiked samples. That necessitates additional analyses for lead concentration measurements, but it also makes possible the unique applications of lead isotopic composition measurements that are addressed in the following section.
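
Both corrections can be sketched briefly. The first function converts the 206Pb:204Pb, 207Pb:204Pb, and 208Pb:204Pb ratios of an unspiked sample into the atom fractions an isotope-dilution calculation needs; the second applies a single correction factor per ratio derived from a reference standard with a certified ratio. The single-factor correction is a simplifying assumption (laboratories use several fractionation laws), and all readings below are hypothetical.

```python
def atom_fractions(r206_204: float, r207_204: float, r208_204: float) -> dict[str, float]:
    """Atom fractions of the four stable lead isotopes from ratios to 204Pb."""
    total = 1.0 + r206_204 + r207_204 + r208_204
    return {
        "204Pb": 1.0 / total,
        "206Pb": r206_204 / total,
        "207Pb": r207_204 / total,
        "208Pb": r208_204 / total,
    }


def bias_corrected(measured_ratio: float, standard_measured: float,
                   standard_certified: float) -> float:
    """Scale a sample ratio by K = certified / measured, from a standard run in the same session."""
    return measured_ratio * (standard_certified / standard_measured)


# Hypothetical (measured, certified) values for a reference standard and
# hypothetical sample readings. Each ratio gets its own factor, because
# fractionation depends on the mass difference between the isotopes.
standard = {"206/204": (16.90, 16.94), "207/204": (15.44, 15.49), "208/204": (36.60, 36.72)}
sample = {"206/204": 17.95, "207/204": 15.55, "208/204": 37.88}
corrected = {key: bias_corrected(sample[key], *standard[key]) for key in sample}
fractions = atom_fractions(corrected["206/204"], corrected["207/204"], corrected["208/204"])
print({isotope: round(value, 4) for isotope, value in fractions.items()})
```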

Lead Isotopic Composition in the Identification of Lead Sources

TABLE 5-4 Quantification of Lead Contamination in Analyses of Blood Lead Concentrations with Isotope-Dilution Thermal-Ionization Mass Spectrometry and Trace-Metal-Clean Techniquesᵃ

Measurable differences in stable lead isotopic compositions throughout the environment are caused by the differential radioactive decay of 238U (t1/2 = 4.5 × 10⁹ years), 235U (t1/2 = 0.70 × 10⁹ years), and 232Th (t1/2 = 1.4 × 10¹⁰ years) to form 206Pb, 207Pb, and 208Pb, respectively (Faure, 1986). The fourth stable lead isotope, 204Pb, has no long-lived radioactive parent. Stable lead isotopic compositions therefore differ among geologic formations with different ages, parent-daughter isotope ratios, and weathering processes.
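
The link between decay rates and isotopic differences can be made concrete with the standard decay equation D* = P(e^(λt) − 1), where λ = ln 2 / t1/2 and D* is the radiogenic daughter accumulated from P surviving parent atoms. This sketch uses the half-lives quoted above; the 1-billion-year interval is a hypothetical choice.

```python
import math

# Half-lives (years) of the parent nuclides, as given in the text.
PARENT_HALF_LIVES_YR = {
    "238U -> 206Pb": 4.5e9,
    "235U -> 207Pb": 0.70e9,
    "232Th -> 208Pb": 1.4e10,
}


def decay_constant(half_life: float) -> float:
    """lambda = ln 2 / t_half (same time unit as the half-life)."""
    return math.log(2.0) / half_life


def radiogenic_per_parent(half_life: float, elapsed: float) -> float:
    """Radiogenic daughter atoms accumulated per parent atom still present: e^(lambda t) - 1."""
    return math.expm1(decay_constant(half_life) * elapsed)


if __name__ == "__main__":
    # Daughter added per surviving parent atom over a hypothetical 1-billion-year history.
    for chain, t_half in PARENT_HALF_LIVES_YR.items():
        print(f"{chain}: {radiogenic_per_parent(t_half, 1.0e9):.3f}")
```

Because 235U decays much faster than 238U, the 207Pb:206Pb ratio of the radiogenic lead added to a formation depends on its age, and the amounts added depend on its U/Pb and Th/Pb ratios; both effects contribute to the distinct signatures of different ore bodies.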

There is no measurable biologic, chemical, or physical fractionation of lead isotopes in the environment, and natural differences in lead isotopic compositions in geological formations persist after the lead has been extracted and processed (Russell and Farquhar, 1960; Barnes et al., 1978). Differences in the lead isotopic composition of urban aerosols in the United States, for example, are shown in Table 5-5. The differences reflect regional and temporal variations in isotopic compositions of ores used for lead alkyl additives in the United States, emissions of other industrial lead aerosols in the United States, and the atmospheric transport of foreign industrial lead aerosols to the United States (Patterson and Settle, 1987; Sturges and Barrie, 1987).

Because there is no measurable isotopic fractionation of lead in the biosphere, sources of industrial lead can be identified by their isotopic composition (Flegal and Stukas, 1987). For example, Yaffee et al. (1983) used stable lead isotopic compositions to identify the primary source of lead (soils contaminated with leaded paint) in a group of lead-poisoned children in Oakland, California. Other investigators have also used the technique, which was pioneered by C.C. Patterson, to identify sources of contaminant lead in U.S. populations (Table 5-6).
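
A simple two-source calculation shows how isotopic composition can apportion lead between sources. The sketch assumes exactly two end-members with known, distinct 206Pb:207Pb ratios and no other contributions; the ratios used are hypothetical, not values from the studies cited. With measurement uncertainties of about 0.005 (the confidence limit given for Table 5-5), the end-members must differ by considerably more than that for the apportionment to be meaningful.

```python
def fraction_207_from_a(r_mix: float, r_a: float, r_b: float) -> float:
    """Share of the mixture's 207Pb contributed by end-member A.

    With 206Pb:207Pb ratios, r_mix = x*r_a + (1 - x)*r_b exactly when x is the
    fraction of 207Pb (the ratio's denominator isotope) coming from A.
    """
    if r_a == r_b:
        raise ValueError("end-member ratios must differ")
    return (r_mix - r_b) / (r_a - r_b)


def fraction_lead_from_a(x_207: float, f207_a: float, f207_b: float) -> float:
    """Convert the 207Pb share into a share of total lead, given each source's 207Pb atom fraction."""
    lead_a = x_207 / f207_a
    lead_b = (1.0 - x_207) / f207_b
    return lead_a / (lead_a + lead_b)


# Hypothetical end-members (e.g., paint lead vs. aerosol lead) and a blood value.
x = fraction_207_from_a(r_mix=1.13, r_a=1.10, r_b=1.22)
print(round(x, 3), round(fraction_lead_from_a(x, 0.225, 0.215), 3))
```

Because the 207Pb abundance varies only modestly among common leads, the 207Pb share is close to the share of total lead; the second function makes that conversion explicit when the abundances are known.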

Stable Lead Isotopic Tracers in Metabolic Studies

Most tracer studies of lead metabolism have used radioisotopes of lead (203Pb, 210Pb, and 212Pb) (Table 5-7). Those studies have been few, because the half-lives of lead radioisotopes (e.g., 203Pb, 51.88 hours; 210Pb, 22.5 years; and 212Pb, 10.64 hours) are generally not conducive to this type of research. Moreover, the use of any radioisotope in metabolic studies is now severely limited: even minimal radiation exposure is avoided unless it is clinically necessary.
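
One way to see why these half-lives are inconvenient is to compute how much activity survives over typical study periods. This sketch uses the half-lives quoted above; the 1-, 7-, and 30-day intervals are illustrative choices.

```python
# Half-lives (hours) as quoted in the text; 22.5 years converted to hours.
HALF_LIVES_H = {
    "203Pb": 51.88,
    "210Pb": 22.5 * 365.25 * 24.0,
    "212Pb": 10.64,
}


def fraction_remaining(half_life_h: float, elapsed_h: float) -> float:
    """Fraction of the initial activity left after elapsed_h hours: 2^(-t / t_half)."""
    return 0.5 ** (elapsed_h / half_life_h)


if __name__ == "__main__":
    for days in (1, 7, 30):
        row = ", ".join(f"{iso} {fraction_remaining(t, days * 24.0):.2e}"
                        for iso, t in HALF_LIVES_H.items())
        print(f"after {days} d: {row}")
```

On these numbers, 203Pb and 212Pb have largely decayed within days to weeks, too quickly to follow slow processes such as long-term retention, whereas 210Pb barely decays over a study and so persists in the subject.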

TABLE 5-5 206Pb:207Pb Ratios of Aerosols in the United States

| Location | Year | 206Pb:207Pbᵃ | Reference |
|---|---|---|---|
| Houston, Tex. | 1970 | 1.220 | Chow et al., 1975 |
| St. Louis, Mo. (urban) | 1970 | 1.230 | Rabinowitz and Wetherill, 1972 |
| St. Louis, Mo. | 1970 | 1.220 | Rabinowitz and Wetherill, 1972 |
| Berkeley, Calif. | 1970 | 1.199 | Rabinowitz and Wetherill, 1972 |
| Benicia, Calif. | 1970 | 1.157 | Rabinowitz and Wetherill, 1972 |
| San Diego, Calif. | 1974 | 1.211 | Chow et al., 1975 |
| Narragansett, R.I. | 1986 | 1.196 | Sturges and Barrie, 1987 |
| Boston, Mass. | Pre-1981 | 1.191 | Rabinowitz, 1987 |
| Boston, Mass. | 1981 | 1.207 | Rabinowitz, 1987 |
| West Coast | 1963 | 1.143 | Shirahata et al., 1980 |
| West Coast | 1965 | 1.153 | Chow and Johnstone, 1965 |
| West Coast | 1974 | 1.190 | Patterson and Settle, 1987 |
| West Coast | 1978 | 1.222 | Shirahata et al., 1980 |
| Midwest | 1982–1984 | 1.213 | Sturges and Barrie, 1987 |
| Midwest | 1986 | 1.221 | Sturges and Barrie, 1987 |

ᵃ 95% confidence limit of 206Pb:207Pb measurements ≤0.005.

The analytic and clinical limitations of using lead radioisotopes as metabolic tracers, together with recent advances in the analytic capabilities of mass spectrometry, have given new impetus to the use of stable lead isotopes in this type of analysis. The primary advantage of stable isotopic tracers is that neither subjects nor researchers are exposed to radiation. Different sources of exposure and metabolic processes can be monitored simultaneously, because the four stable lead isotopes yield three independent isotope ratios. And because only very small amounts of stable isotopic tracers are required for highly precise analyses, the potential for metabolic perturbations due to large exposures to lead is minimized.
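
Because the four stable isotopes give three independent ratios, a sample's isotopic composition can be resolved into contributions from more than one source, for example natural body lead plus two tracers enriched in different isotopes. This is a minimal sketch assuming known atom fractions for each source and a measured mixture; it solves the three linear balances for 204Pb, 206Pb, and 207Pb by Cramer's rule. The tracer compositions and mixing fractions are hypothetical.

```python
Matrix3 = list[list[float]]


def det3(m: Matrix3) -> float:
    """Determinant of a 3 x 3 matrix."""
    return (m[0][0] * (m[1][1] * m[2][2] - m[1][2] * m[2][1])
            - m[0][1] * (m[1][0] * m[2][2] - m[1][2] * m[2][0])
            + m[0][2] * (m[1][0] * m[2][1] - m[1][1] * m[2][0]))


def unmix_three_sources(sources: list[list[float]], mixture: list[float]) -> list[float]:
    """Mole fractions of total lead from three sources.

    sources[j] holds the 204Pb, 206Pb, and 207Pb atom fractions of source j;
    mixture holds the same atom fractions measured in the sample. Solves
    mixture[i] = sum_j f[j] * sources[j][i] with Cramer's rule.
    """
    a = [[sources[j][i] for j in range(3)] for i in range(3)]  # rows: isotopes
    d = det3(a)
    if abs(d) < 1e-12:
        raise ValueError("source compositions are not independent")
    result = []
    for j in range(3):
        a_j = [row[:] for row in a]
        for i in range(3):
            a_j[i][j] = mixture[i]  # replace column j with the measured values
        result.append(det3(a_j) / d)
    return result


# Hypothetical compositions (204Pb, 206Pb, 207Pb atom fractions).
natural = [0.014, 0.241, 0.221]
tracer_204 = [0.700, 0.150, 0.050]
tracer_207 = [0.001, 0.020, 0.930]
true_f = [0.90, 0.06, 0.04]
blood = [sum(f * s[i] for f, s in zip(true_f, (natural, tracer_204, tracer_207)))
         for i in range(3)]
print([round(f, 3) for f in unmix_three_sources([natural, tracer_204, tracer_207], blood)])
```

In a real study, the mixture values would come from measured ratios converted to atom fractions, and measurement precision would set the smallest tracer contribution that can be resolved.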

TABLE 5-6 Studies Using Lead Isotopic Compositions as Tracers of Environmental and Biologically Accumulated Contaminant Lead in the United States

| Lead Sources and Organisms | Reference |
|---|---|
| Industrial aerosols | Chow and Johnstone, 1965; Patterson and Settle, 1987; Sturges and Barrie, 1987 |
| Surface waters | Flegal et al., 1989 |
| Coastal sediments | Ng and Patterson, 1981, 1982 |
| Terrestrial ecosystems | Shirahata et al., 1980; Elias et al., 1982 |
| Lead-smelter emissions and equines | Rabinowitz and Wetherill, 1972 |
| Seawater and marine organisms | Smith et al., 1990; Flegal et al., 1987 |
| Paint lead and children | Yaffee et al., 1983; Rabinowitz, 1987 |
| Aerosols and humans | Manton, 1977, 1985 |

The high precision and accuracy required for lead isotopic tracer studies have made TIMS the method of choice. That has been recognized in several recent reviews of the use of stable isotopes in metabolic studies, even though TIMS analyses are slower than most other methods and require rigorous pretreatment to eliminate organic contaminants and interfering elements (Janghorbani, 1984; Turnlund, 1984; Hachey et al., 1987; Janghorbani and Ting, 1989a). Newer types of mass spectrometry (e.g., ICPMS) have not yet achieved the sensitivity and precision of TIMS that are required for most analyses of lead isotopic compositions in biologic and environmental matrices. Other types of mass spectrometry still in development (e.g., resonance-ionization mass spectrometry) might become applicable to lead isotopic tracer studies. By contrast, gas-chromatography mass spectrometry (GC-MS) does not appear to be a likely option, because its maximal attainable precision is insufficient for reliable isotope-ratio determinations.

TABLE 5-7 Lead-Metabolism Studies Using Radioisotopes

| Radioisotope | Study Subject | Reference |
|---|---|---|
| 203Pb | Bone cell lead-calcium interactions | Rosen and Pounds, 1989 |
| 203Pb | Gastrointestinal lead absorption | Watson et al., 1986 |
| 203Pb | Lead retention | Campbell et al., 1984 |
| 203Pb | Gastrointestinal lead absorption | Blake and Mann, 1983 |
| 203Pb | Gastrointestinal lead absorption | Blake et al., 1983 |
| 203Pb | Lead absorption | Flanagan et al., 1982 |
| 203Pb | Gastrointestinal lead absorption | Heard and Chamberlain, 1982 |
| 203Pb | Oral lead absorption | Watson et al., 1980 |
| 203Pb | Gastrointestinal lead absorption | Blake, 1976 |
| 210Pb | Osteoblastic lead toxicity | Long et al., 1990 |
| 212Pb | Gastrointestinal lead absorption | Hursh and Suomela, 1968 |

Inductively Coupled Plasma Mass Spectrometry

One of the major advances in analyses of both lead concentrations and isotopic compositions has been the recent development of inductively coupled plasma mass spectrometry (ICPMS). It has rapidly assumed a prominent position in many research laboratories since the first commercial instrument was introduced in 1983 (Houk and Thompson, 1988). There has been a nearly exponential increase in ICPMS publications in the last two decades (Hieftje and Vickers, 1989), and it has recently been identified as one of the "hottest areas" in science (Koppenaal, 1990).

Although ICPMS is recognized as potentially the most sensitive multielement method, it is not yet established for lead analysis. It has not yet permitted widespread, inexpensive analyses of lead with routine laboratory procedures, especially in medical research, where applications of ICPMS are still in their infancy and have not gone beyond cursory, illustrative examples (Janghorbani and Ting, 1989a).

The advantages and limitations of ICPMS are discussed in several recent reviews (e.g., Houk and Thompson, 1988; Kawaguchi, 1988; Koppenaal, 1988, 1990; Marshall, 1988). A typical multielement analysis with ICPMS takes only a few minutes to conduct. In addition, the spectra are relatively simple, because there are only a few different molecular ions and doubly-charged ions produced in this technique, compared with other multielement techniques (Russ, 1989). However, the precision and sensitivity of ICPMS are still poorer than those of TIMS. ICPMS has not demonstrated the capacity to produce high-precision (±1%) measurements of lead-isotope ratios in biologic matrices (Ward et al., 1987; Russ, 1989). Other limitations include problems of internal and external calibration, interference effects, deposition of solids during sample introduction, requirements for microgram quantities of the element of interest, and loss in electron multiplier amplification.

Calibration problems—which have been detailed by Puchelt and Noeltner (1988), Vandecasteele et al. (1988), Doherty (1989), and Ketterer et al. (1989)—are being resolved on several fronts (Koppenaal, 1988, 1990). Beauchemin et al. (1988a,b,c) have demonstrated the applicability of external standards for parts-per-billion analyses of biologic materials. Additionally, numerous investigators are now addressing the need to compare ICPMS with other analytic techniques (Hieftje and Vickers, 1989), including the first intercalibrated measurements of lead isotopic compositions in blood (Delves and Campbell, 1988; Campbell and Delves, 1989).

Other investigators are studying the fundamentals of ICPMS and the applicability of different plasmas, nebulizers, and techniques to minimize problems caused by dissolved solids in samples with inherently high solid contents (Koppenaal, 1988, 1990). The latter techniques include flow-injection (Dean et al., 1988; Hutton and Eaton, 1988), complexation-preconcentration (Plantz et al., 1989), and ion-exchange (Lyons et al., 1988) techniques. They could be adapted to investigate different isotopes of lead in biologic fluids (i.e., blood and urine).

The potential of ICPMS in medical research has been recognized in recent reviews (e.g., Dalgarno et al., 1988; Delves, 1988). There have already been several reports of relatively good agreement between intercalibrated measurements of blood lead concentrations based on ICPMS and other established analytic techniques. For example, Douglas et al. (1983) and Brown and Pickford (1985) found good agreement between results of analyses with ICPMS and with graphite-furnace AAS. Diver et al. (1988) have investigated the applicability of albumin as a reference material in an intercalibration of ICPMS, AES, and AAS. There have also been numerous intercalibrations of elemental concentrations in different biologic matrices based on ICPMS and other techniques (e.g., Douglas and Houk, 1985; Munro et al., 1986; Pickford and Brown, 1986; Ward et al., 1987; Beauchemin et al., 1988a,b,c; Berman et al., 1989).

The most extensive study of stable isotopic compositions with ICPMS in medical research has investigated selenium metabolism (Janghorbani et al., 1988; Janghorbani and Ting, 1989b). Dean et al. (1987) measured lead isotopic compositions in milk and wine, and Caplun et al. (1984), Date and Cheung (1987), Longerich et al. (1987), Sturges and Barrie (1987), and Delves and Campbell (1988) measured lead isotopic compositions in other environmental matrices. However, most ICPMS measurements of lead isotopic compositions have not included ratios for the least abundant lead isotope, 204Pb (about 1.4% of total lead), which are required for definitive isotopic composition analysis. It is also generally recognized that ICPMS measurements are not precise enough to resolve significant variations in most lead isotopic compositions (Ward et al., 1987; Russ, 1989).

In spite of the limitations just described, the phenomenal advances in ICPMS instrumentation in the last 2 decades indicate that it has passed through a remarkably short adolescence and is now in a mature stage, with a prolific record of instrument acquisition and application (Koppenaal, 1990). Widespread application of ICPMS in medical research and public-health studies of lead contamination and metabolism might still require improvements, including the development of alternative sample-introduction techniques, such as vapor generation, recirculating nebulizers, ultrasonic nebulizers, and electrothermal furnaces. The sensitivity of ICPMS analyses must also be improved to the point where nanogram quantities of lead are sufficient to determine isotopic ratios to the limit of the instrument's precision (0.1%), so that counting statistics do not limit the precision of the analysis. That will require faster and more linear ion detectors, such as the Daly detector (Huang et al., 1987), and higher-resolution mass analyzers, such as more sophisticated quadrupoles or magnetic-sector instruments, to obtain the resolution needed to separate polyatomic and oxide peaks from elemental isotopes (Gray, 1989). Corresponding reductions in noise and turbulence in the plasma might also involve the use of other gases for plasma support (Montaser et al., 1987; Satzger et al., 1987). Improvements are also needed in multiplier longevity and analytic precision (Russ, 1989).
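
The requirement that counting statistics not limit precision can be made quantitative. For Poisson-distributed ion counts, the relative standard deviation is 1/√N, so a precision of 0.1% requires about 10⁶ counts of the least abundant isotope in the ratio. This sketch assumes ideal counting (no detector dead time or background), which real detectors only approximate.

```python
import math


def counts_needed(relative_sd: float) -> float:
    """Ion counts N for which the Poisson counting error 1/sqrt(N) equals relative_sd."""
    return 1.0 / relative_sd ** 2


def ratio_relative_sd(counts_a: float, counts_b: float) -> float:
    """Relative SD of an isotope ratio from counting statistics alone."""
    return math.sqrt(1.0 / counts_a + 1.0 / counts_b)


if __name__ == "__main__":
    n_204 = counts_needed(0.001)  # 0.1% target, the precision figure quoted in the text
    total_pb_ions = n_204 / 0.014  # 204Pb is about 1.4% of lead
    print(f"{n_204:.2e} 204Pb ions, about {total_pb_ions:.1e} lead ions counted in total")
```

Because 204Pb makes up only about 1.4% of lead, roughly 7 × 10⁷ lead ions must reach the detector; how much lead must be introduced to achieve that depends on the overall ion transmission of the instrument, which the text does not specify.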

Secondary-Ion Mass Spectrometry

Secondary-ion mass spectrometry (SIMS) has recently been recognized as a major technique for surface composition analyses and microstructural characterization (Lodding, 1988), because of its high sensitivity and good topographic resolution, both in depth and laterally. The applicability of SIMS for analysis of lead in environmental and biologic matrices has not been fully realized, because too few instruments are available for that type of analysis and their accuracy is too low. This was discussed in a recent review of atomic mass spectrometry by Koppenaal (1988), who noted that SIMS was popular in electronics and the materials sciences, but not in environmental and biologic fields. His comprehensive review of articles on SIMS analysis in those two fields was limited to seven references, and only one of those involved lead analysis in organisms (Chassard-Bouchaud, 1987). Koppenaal (1990) later indicated that some analyses with SIMS might be replaced with glow-discharge mass spectrometry (GDMS), because SIMS had a "notoriously dismal reputation" for accuracy. The potential for SIMS analysis of lead in biologic and environmental samples remains in question.

Glow-Discharge Mass Spectrometry

Advances in glow-discharge mass spectrometry have indicated its potential for elemental analyses in solid matrices. That potential has not been realized, because of the high cost of GDMS instrumentation, the difficulties of direct solids analysis, and the low accuracy of current GDMS measurements. However, those obstacles have recently been reduced by the introduction of relatively inexpensive quadrupole-based GDMS and a pronounced increase in the number of studies on the applicability of GDMS analyses in a variety of matrices.

The evolving applicability of GDMS analysis is summarized in recent reviews (Sanderson et al., 1987, 1988; Harrison, 1988; Harrison and Bentz, 1988; Koppenaal, 1988, 1990). GDMS might soon succeed spark-source mass spectrometry and SIMS for bulk-solids analysis. GDMS analyses are highly precise (SD ±5%) in the low parts-per-billion range. Although the accuracy of GDMS (±10–300%) is still too variable for analysis of lead in environmental samples (Huneke, 1988; Sanderson et al., 1988), quantitative results (±5%) appear possible (Harrison, 1988). Therefore, GDMS might soon become a valuable technique for monitoring elemental concentrations in geologic matrices, including lead in contaminated soils.

Laser-Microprobe Mass Spectrometry

Applications of lasers in medical research continue to expand, as reported in two reviews by Andersson-Engels et al. (1989, 1990). Others have provided complementary reviews of advances in laser-microprobe mass spectrometry and related laser-microprobe techniques (e.g., Koppenaal, 1988, 1990; Verbueken et al., 1988). Those methods use lasers as an alternative to electron and ion beams for localized chemical analysis. They have been incorporated in laser-microprobe mass analysis (LAMMA), laser-induced mass analysis (LIMA), laser-probe mass spectrography (LPMS), scanning-laser mass spectrometry (SLMS), direct-imaging-laser mass analysis (DILMA), time-resolved laser-induced breakdown spectroscopy (LIBS), laser-ablation and laser-selective excitation spectroscopy (TABLASER) and laser-ablation and resonance-ionization spectrometry (LARIS).

Laser techniques have several advantages for microprobe analyses of lead concentrations in biologic matrices, including their low absolute detection limits (about 10⁻²⁰ g), speed of operation, spatial resolution (1 µm), capabilities for inorganic and organic mass spectrography, and potential for separate analysis of the surface layer and core of particles. Disadvantages of the technique are that it is destructive, the quality of the light-microscopic observation is poor, and quantification of elemental concentrations is questionable. Some of those features are compared in Table 5-8 with those of other microanalytic techniques, including electron-probe x-ray microanalysis, secondary-ion mass spectrometry, and Raman microprobe analysis.

Linton et al. (1985) have reviewed laser- and ion-microprobe sensitivities for the detection of lead in biologic matrices. Their analyses indicate that the relative detection limit of lead in biologic material at a lateral resolution of 1 µm with LAMMA (5 µg/g) is about 100 times better than that obtained with a Cameca IMS-3F ion microscope, and that the useful yield of lead ions was about 100 times greater with LAMMA (10⁻³) than with the ion microscope (10⁻⁵). The sensitivity for lead in LAMMA, normalized to potassium, is also much better than in SIMS (Verbueken et al., 1988).
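
The useful yields quoted above can be turned into an expected ion count with a back-of-the-envelope calculation. The sketch assumes a 1-µm³ analyzed volume and a tissue density of 1 g/cm³, both hypothetical simplifications; only the 5-µg/g concentration and the two useful yields come from the text.

```python
AVOGADRO = 6.02214076e23  # atoms/mol
PB_MOLAR_MASS = 207.2  # g/mol


def lead_ions_detected(conc_ug_per_g: float, volume_um3: float,
                       density_g_per_cm3: float, useful_yield: float) -> float:
    """Expected number of detected lead ions from one analyzed microvolume."""
    tissue_g = volume_um3 * 1e-12 * density_g_per_cm3  # 1 um^3 = 1e-12 cm^3
    lead_g = tissue_g * conc_ug_per_g * 1e-6
    lead_atoms = lead_g / PB_MOLAR_MASS * AVOGADRO
    return lead_atoms * useful_yield


if __name__ == "__main__":
    # Hypothetical: 1 um^3 of tissue (density 1 g/cm^3) at the 5-ug/g LAMMA detection limit.
    for name, yield_ in (("LAMMA", 1e-3), ("ion microscope", 1e-5)):
        print(name, round(lead_ions_detected(5.0, 1.0, 1.0, yield_), 2))
```

Under these assumptions, about 14,500 lead atoms are present in the analyzed volume, giving on the order of 15 detected ions with a useful yield of 10⁻³ but fewer than one with 10⁻⁵; that contrast illustrates why the hundredfold difference in useful yield matters.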

LAMMA was developed specifically to complement other microanalytic techniques for determining intracellular distributions of physiologic cations and toxic constituents in biologic tissues. Although still in a developmental stage, it has already been used to investigate the distribution of lead in various tissues, including the localization of lead in different cell types of the bone marrow of a lead-poisoned person (Schmidt and Ilsemann, 1984), the topochemical distribution of lead across human arterial walls in normal and sclerotic aortas (Schmidt, 1984; Schmidt and Ilsemann, 1984; Linton et al., 1985), and the distribution of lead in placental tissue and fetal liver after acute maternal lead intoxication. The potential of LAMMA in medical and environmental research has been summarized by Verbueken et al. (1988), who concluded:

We have every reason to expect that routine quantitative LAMMA analysis will develop reasonably successfully within the next few years. Important in the achievement of quantitative accurate analysis will be necessary fundamental studies of the processes involved in the measurement, including laser-matter interactions, plasma chemistry, and physics.

ATOMIC-ABSORPTION SPECTROMETRY

Atomic-absorption spectrometry (AAS) has been available to laboratories for routine use for almost 30 years, and it has been established in its current instrumented forms for about 20 years (Ottaway, 1983; Van Loon, 1985; EPA, 1986a; Shuttler and Delves, 1986; Miller et al., 1987; Angerer and Schaller, 1988; Osteryoung, 1988; Slavin, 1988; Delves, 1991; Jacobson et al., 1991; Mushak, 1992). In brief, AAS involves thermal atomization of lead from a suitably prepared sample matrix, absorption by the sample's population of lead atoms of radiation at one of lead's discrete wavelengths from an element-specific source, and minimization or removal of diverse spectral interferences to provide a clean, lead-derived detection signal. The source has typically been a lead-specific hollow-cathode or electrodeless discharge lamp.
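
Quantitation in AAS rests on absorbance, A = log10(I0/I), which over a limited working range is roughly proportional to the number of lead atoms in the light path. The following is a minimal sketch of the usual calibrate-and-invert step; the linear-range assumption, the three-standard-deviation detection-limit convention, and all numbers are illustrative assumptions rather than values from the text.

```python
import math
import statistics


def absorbance(incident: float, transmitted: float) -> float:
    """A = log10(I0 / I) for the lead resonance line."""
    return math.log10(incident / transmitted)


def fit_calibration(concs: list[float], absorbances: list[float]) -> tuple[float, float]:
    """Ordinary least-squares line A = slope * c + intercept through the standards."""
    mean_c = statistics.fmean(concs)
    mean_a = statistics.fmean(absorbances)
    sxx = sum((c - mean_c) ** 2 for c in concs)
    sxy = sum((c - mean_c) * (a - mean_a) for c, a in zip(concs, absorbances))
    slope = sxy / sxx
    return slope, mean_a - slope * mean_c


def concentration(a: float, slope: float, intercept: float) -> float:
    """Invert the calibration line for an unknown sample."""
    return (a - intercept) / slope


def detection_limit(blank_absorbances: list[float], slope: float) -> float:
    """Three times the SD of replicate blanks, expressed in concentration units."""
    return 3.0 * statistics.stdev(blank_absorbances) / slope


# Hypothetical whole-blood standards (ug/dL) and their peak absorbances.
standards = [0.0, 10.0, 20.0, 40.0]
readings = [0.002, 0.052, 0.101, 0.203]
slope, intercept = fit_calibration(standards, readings)
print(round(concentration(0.080, slope, intercept), 1), "ug/dL")
print(round(detection_limit([0.0021, 0.0018, 0.0024, 0.0019, 0.0022], slope), 2), "ug/dL")
```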

AAS methods that use commercially available equipment are generally single-element methods. Simultaneous analyses of lead and other elements usually require different analytic approaches and are beyond the scope of this report. As is also the case with anodic-stripping voltammetry (described later), lead is routinely measured in biologic media as a concentration of the total element. Speciation methods have used AAS-based metal-specific detectors in which AAS units are interfaced with additional instruments, e.g., gas-liquid and liquid chromatographs (Van Loon, 1985; EPA, 1986a).

Much of the methodologic improvement in AAS over the last 30 years, as reflected in the evolution of commercially available atomic-absorption (AA) spectrometers, has centered on atomization and detection: the nature of the thermal excitation and the efficiency of controlling spectral interference. Originally, liquid samples containing the lead analyte were aspirated into the flame of an AA spectrometer. That approach was generally unsatisfactory for trace analysis, because a large sample was required and the detection limit was too high; preconcentration, for example by chelation and extraction into an organic solvent, was often required.

TABLE 5-8 Summary of Characteristics of Four Types of Microprobesᵃ

| Characteristic | X-Ray Microanalysis | Ion Microprobe (Ion Microscope)ᵇ | Laser Microprobe (Transmission Geometry) | Raman Microprobe |
|---|---|---|---|---|
| Probe | Electrons | Ions | Photons (laser) | Photons (laser) |
| Detection method | Characteristic x-rays: WDS or EDS | Ions ("+" or "−"); double-focusing MS | Ions ("+" or "−"); TOF-MS | Photons (Raman); double monochromator; PM |
| Resolution of detection | WDS, 20 eV; EDS, 150 eV | M/ΔM = 200–10,000 | M/ΔM = 800 | 0.7 cm⁻¹ (spectrum); 8 cm⁻¹ (image) |
| Lateral resolution (analyzed area) | WDS, 1 µm; EDS, 500–1,000 Åᶜ | 1–400 µm | 1 µm | 1 µm |
| Imaging (spatial resolution) | SEM, 70 Å; STEM, 15 Å | 0.5 µm (SII) | 1 µm | 1 µm |
| Information depth | ≤1 µm | Tens of Å | — | — |
| Detection limits | WDS, 100 ppm; EDS, 1,000 ppm | ppmᵈ | ppm | Major components |
| Elemental coverage | WDS, Z ≥ 4; EDS, Z ≥ 11 | H–U | H–U | — |
| Isotope detection | No | Yes | Yes | No |
| Compound information | No | Yesᵉ | Yes | Yes |
| In-depth analysis | No | Yes | Difficult | No |
| Destructive | No | Yes | Yes | No |
| Quantitative analysis | Yes | (Yes) | (Yes) | Yes |

ᵃ EDS: energy-dispersive spectrometer. PM: photomultiplier. SEM: scanning electron microscope. SII: secondary-ion image. STEM: scanning transmission electron microscope. TOF-MS: time-of-flight mass spectrometer. WDS: wavelength-dispersive spectrometer.
ᵇ Ion microscope Cameca-3f.
ᶜ High-concentration deposit.
ᵈ Depends on element of interest and on chemical environment, including nature of primary ion beam (Ar⁺, O₂⁺, O⁻, Cs⁺).
ᵉ More in "static SIMS."
Source: Verbueken et al., 1988. Reprinted with permission from Inorganic Mass Spectrometry; copyright 1988, John Wiley & Sons.

In 1970, the Delves Cup microflame technique was introduced; it was a marked improvement with respect to detection-limit and sample-size requirements (Delves, 1977, 1984, 1991). The Delves Cup approach also helps to minimize the spectral interferences that result from an organic matrix if a preignition step is used (Ediger and Coleman, 1972). As noted by Delves (1991), this approach does not lend itself to automation, but a high sample throughput is nonetheless achievable. Accuracy and precision are quite satisfactory. Although this technique is not as popular as flameless graphite-furnace AAS (GF-AAS), it performs well in competent hands.

Over the last 10–15 years, GF-AAS has become the most favored analytic variant of AAS. Liquid-sample requirements are modest (10 µL routinely), and sensitivity is at least 10 times that of the Delves Cup method and up to 1,000 times that of conventional aspiration-flame analysis. Across various sample types, detection is readily achievable below the parts-per-billion level; in simple matrices, the detection limit is around 0.05–0.5 ppb. The high sensitivity permits samples to be diluted with matrix modifiers. GF-AAS has the added advantage of adaptability to automation, and the literature contains a number of examples of such adaptation for analyzing large numbers of samples (e.g., Van Loon, 1985).

A potentially persistent problem with all micro-AAS methods is spectral interference of diverse physicochemical and matrix types, and the phenomenon might be most problematic with GF-AAS. Various means of interference control, termed background correction, have been developed. The deuterium-arc correction was first, and removal of spectrometric interference with a Zeeman-effect spectrometer was second; other approaches are being developed (Van Loon, 1985).

AAS has a number of general advantages for the clinical-chemistry and analytic-toxicology laboratory with respect to quantitative measurements of lead in biologic media. Equipment in various forms is generally available commercially at moderate cost. Methods are relatively straightforward and impose only moderate requirements for expert personnel. AAS, particularly in the form of GF-AAS but also in the Delves Cup flame AAS (micro-FAAS) variation, has the requisite analytic sensitivity and specificity for whole blood, provided that overall laboratory proficiency is appropriate. Of particular importance, AAS has a well-established track record of accuracy and precision and has been adopted for reference-laboratory use in proficiency testing and external quality-assurance programs. As a result, the problems an analyst using AAS is likely to encounter have probably already been identified and described in the literature. Results of external proficiency-testing and quality-assurance programs also show that AAS performs well in ordinary laboratories.

ANODIC-STRIPPING VOLTAMMETRY AND OTHER ELECTROCHEMICAL METHODS

The electrochemical technique of anodic-stripping voltammetry (ASV) has been available for trace analytic applications for about 20 years and is based on early studies of polarography by Heyrovsky and colleagues in the 1920s (see, e.g., Nurnberg, 1983). Its current analytic characteristics for lead in various media were developed from the studies of Matson and colleagues (Matson et al., 1971; Nurnberg, 1983; Stoeppler, 1983a; EPA, 1986a; Delves, 1991). Unlike AAS, ASV can be used as a multielement quantitation technique, provided that deposition and stripping characteristics are favorable.

ASV works on a two-step principle of lead analysis. First, lead ion liberated from some matrix is deposited by a two-electron reduction on a carbon-supported mercury film as a function of time and negative voltage. The deposited lead is then reoxidized and electronically measured via anodic sweeping. Those electrochemical processes generate current-potential curves (voltammograms) that can be related to concentration of the metal, e.g., lead ion. In common with all electrochemical techniques, ASV measures total quantity. Given infinite time and sample volume, electrochemical methods would theoretically have infinite sensitivity. In practice, both laboratory time and available sample sizes are limited, and this results in finite sensitivity. The ASV process collects all the metal of interest at the deposition step, which is the principal factor in ASV's high operational sensitivity (low detection limit).
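
The two-electron reduction described above links the stripping signal to the amount of lead through Faraday's law, m = QM/(nF), where Q is the charge passed when the deposited lead is reoxidized. This sketch assumes complete deposition and stripping and a charge already corrected for background current; routine ASV is typically calibrated against lead standards rather than relying on absolute coulometry. The charge and sample volume are hypothetical.

```python
FARADAY = 96485.33212  # C/mol
PB_MOLAR_MASS = 207.2  # g/mol


def lead_mass_from_charge(charge_c: float, electrons: int = 2) -> float:
    """Grams of lead corresponding to a stripping charge: m = Q * M / (n * F)."""
    return charge_c * PB_MOLAR_MASS / (electrons * FARADAY)


def blood_lead_ug_per_dl(charge_c: float, sample_ml: float) -> float:
    """Blood lead concentration if all lead in sample_ml was plated and stripped."""
    micrograms = lead_mass_from_charge(charge_c) * 1e6
    return micrograms / (sample_ml / 100.0)  # 1 dL = 100 mL


if __name__ == "__main__":
    # Hypothetical: 1.0 uC of net stripping charge from a 0.1-mL blood aliquot.
    q = 1.0e-6
    print(f"{lead_mass_from_charge(q) * 1e9:.2f} ng Pb, "
          f"{blood_lead_ug_per_dl(q, 0.1):.2f} ug/dL")
```

The calculation also shows why longer deposition increases sensitivity: more plated lead yields proportionally more stripping charge, whereas the background current does not grow in the same way.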

ASV, like most electrochemical techniques, is affected by the thermodynamic and oxidation-reduction characteristics of the analytic matrix. In practice, the lead ion must be liberated into a chemical matrix that permits deposition and stripping without interference. Early methods used wet chemical degradation of organic matrices, including biologic media such as whole blood (Matson et al., 1971; Nurnberg, 1983; EPA, 1986a). That approach, although it ensured mineralization, was time-consuming and introduced a high risk of lead contamination. More recently, decomplexation has involved use of a mixture of competitive ions, including chromium and mercury, which liberate lead from binding sites by competitive binding. This approach poses problems in achieving adequate liberation of lead; it gives low readings at concentrations below 40 µg/dL and high readings at high concentrations (Delves, 1991). In blood analyses, however, those problems can be effectively overcome by extending the decomplexing time and carefully resolving the lead signal from the interfering copper signal (Roda et al., 1988).

Two methodologic advantages make ASV popular in laboratories that measure lead in various media, including whole blood. These advantages parallel those of GF-AAS and explain why ASV and GF-AAS are the two most widely used techniques for measuring lead in biologic media in the United States and elsewhere. First, according to many reports and an established performance record, ASV in competent hands achieves the accuracy and precision required for trace and ultratrace analysis of heavy metals. Second, its sensitivity is appropriate for the low average lead concentrations now encountered in many media. As with AAS, detection limits are medium-specific; in simple media such as water, 10–100 pg of lead can be measured. In addition, the required analyst expertise is modest, and the equipment is commercially available at lower cost than AAS equipment.
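An absolute detection limit such as 10–100 pg corresponds to a concentration only once the analyzed volume is fixed. The sketch below makes that conversion; the function name and the example volumes are hypothetical, and the unit identities (1 pg/mL = 1 ng/L; 1 µg/dL = 10,000 pg/mL) are the only fixed facts it uses.

```python
def mass_to_concentration(mass_pg: float, volume_ml: float) -> dict[str, float]:
    """Convert an absolute amount of lead (pg) in a given analyzed volume (mL)
    into concentration units. The volume is an assumption supplied by the user."""
    if volume_ml <= 0:
        raise ValueError("volume must be positive")
    pg_per_ml = mass_pg / volume_ml            # 1 pg/mL is identical to 1 ng/L
    return {
        "ng_per_l": pg_per_ml,
        "ug_per_dl": pg_per_ml / 10_000,       # 1 ug/dL = 1e6 pg / 100 mL = 10,000 pg/mL
    }


if __name__ == "__main__":
    # Hypothetical analyzed volumes, for illustration only.
    for mass, vol in [(10, 1.0), (100, 1.0), (10, 10.0)]:
        print(mass, "pg in", vol, "mL ->", mass_to_concentration(mass, vol))
```

Larger analyzed volumes push the concentration detection limit lower for the same absolute mass, which is one reason detection limits are quoted per medium.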

ASV is not the only electrochemical method that can be used to quantify lead. Jagner et al. (1981) measured lead in blood with computerized potentiometric stripping analysis (PSA); with the plating time used, the reported detection limit was 0.5 µg/dL, and within-run precision was 5% at a mean blood lead concentration of 7 µg/dL. Another technique, differential-pulse polarography (DPP), has not been widely adopted, although its analytic characteristics and advantages approach those of ASV (Angerer and Schaller, 1988). Newer instruments use this method, but its long-term utility for routine analysis of biologic media remains to be determined.
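Within-run precision figures such as "5% at 7 µg/dL" are coefficients of variation (CV): the standard deviation of replicate measurements expressed as a percentage of their mean. A minimal sketch follows; the function names are illustrative, and the replicate values in the tests are hypothetical, not data from Jagner et al.

```python
from statistics import mean, stdev


def cv_percent(replicates: list[float]) -> float:
    """Within-run coefficient of variation: sample SD as a percentage of the mean."""
    if len(replicates) < 2:
        raise ValueError("need at least two replicate measurements")
    m = mean(replicates)
    if m <= 0:
        raise ValueError("mean must be positive")
    return 100.0 * stdev(replicates) / m


def sd_from_cv(cv_pct: float, mean_value: float) -> float:
    """Standard deviation implied by a stated CV at a given mean concentration."""
    return cv_pct / 100.0 * mean_value
```

For example, a CV of 5% at a mean of 7 µg/dL implies a within-run standard deviation of 0.05 × 7 = 0.35 µg/dL.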

NUCLEAR MAGNETIC RESONANCE SPECTROSCOPY

Nuclear magnetic resonance spectroscopy (NMR) is finding increased application in the study of lead, for three reasons. First, the development of high-field instruments has enhanced the sensitivity of NMR measurement of lead in biologic systems. Second, specialized techniques for resolving complex spectra have made it possible to obtain clear spectra from solid as well as liquid samples. Finally, the increasing availability of NMR imaging (magnetic resonance imaging) instruments has opened new possibilities for diagnostic exploration of lead deposition.

The use of NMR for lead characterization has been aptly reviewed by Wrackmeyer and Horchler (1989). The NMR properties of lead are determined by the presence of ²⁰⁷Pb (abundance, 22.6%), the only stable lead isotope with a nonzero nuclear spin. Studies have indicated that the resonance frequency of ²⁰⁷Pb is strongly influenced by the molecular environment around the nucleus, so the observed chemical shifts range over several thousand parts per million, compared with about 10–15 ppm for ¹H and about 200 ppm for ¹³C. This broad spectral range increases resolution and thus simplifies identification of specific chemical forms of lead. One can anticipate increased application of NMR in the study of lead speciation in both environmental and clinical settings. The ultimate potential of the technique in these settings is yet to be defined; it will likely be limited by the expense of the equipment and the high level of operating expertise required.
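The chemical shift is the fractional offset of a resonance from a reference frequency, scaled to parts per million; a wider ppm range therefore spreads signals over more hertz at a given observe frequency. The sketch below uses that standard definition. The 80-MHz observe frequency in the usage note is a round hypothetical number, not a property of any particular spectrometer.

```python
def chemical_shift_ppm(signal_hz: float, reference_hz: float) -> float:
    """delta (ppm) = (nu - nu_ref) / nu_ref * 1e6."""
    if reference_hz <= 0:
        raise ValueError("reference frequency must be positive")
    return (signal_hz - reference_hz) / reference_hz * 1e6


def span_in_hz(span_ppm: float, observe_freq_hz: float) -> float:
    """Width in hertz of a chemical-shift range at a given observe frequency."""
    return span_ppm * observe_freq_hz / 1e6


if __name__ == "__main__":
    observe = 80e6  # hypothetical observe frequency, Hz
    print("5000 ppm ->", span_in_hz(5000, observe), "Hz")
    print("  10 ppm ->", span_in_hz(10, observe), "Hz")
```

At the same observe frequency, a 5,000-ppm range spans 500 times as many hertz as a 10-ppm range, which is the sense in which the broad ²⁰⁷Pb shift range aids resolution of different lead species.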

Some preliminary conclusions concerning the basic mechanisms of early toxicity at the cellular level have been reached with ¹⁹F NMR. ¹⁹F NMR of biologic samples containing the reagent 1,2-bis(2-amino-5-fluorophenoxy)ethane-N,N,N′,N′-tetraacetic acid, initially described by Smith et al. (1983), has been developed to measure intracellular free calcium and free lead simultaneously in the rat osteoblastic osteosarcoma cell line ROS 17/2.8 (Schanne et al., 1989, 1990a,b). Treatment of the cells with lead produced marked increases in intracellular free calcium, and concurrent measurements of intracellular free lead yielded a concentration of about 25 pM (Schanne et al., 1989). In clinical studies, this in vitro technique could be useful for measuring intracellular free calcium and lead in erythrocytes of exposed persons. It could also help in characterizing dose-response relationships in intracellular free calcium transport.
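With fluorinated chelating indicators of this kind, each metal-bound form of the indicator gives its own ¹⁹F resonance, separate from the free-indicator resonance, and free metal concentration is commonly obtained as [M] = K_d × (bound peak area / free peak area). The sketch below applies that relation to several metals at once. It assumes peak areas are proportional to species concentrations and that the dissociation constants are known; the K_d values and areas in the tests are hypothetical placeholders, not published constants.

```python
def free_ion_concentrations(
    free_indicator_area: float,
    bound_areas: dict[str, float],
    kd: dict[str, float],
) -> dict[str, float]:
    """Free metal concentrations from 19F peak areas of a fluorinated chelator.

    For each metal M: Kd_M = [M][indicator] / [M-indicator], so
    [M] = Kd_M * area(M-indicator peak) / area(free indicator peak).
    Results are in whatever units the Kd values are given in.
    """
    if free_indicator_area <= 0:
        raise ValueError("free-indicator peak area must be positive")
    return {
        metal: kd[metal] * area / free_indicator_area
        for metal, area in bound_areas.items()
    }
```

Because every metal shares the same free-indicator peak, one spectrum yields all of the free-ion estimates together, which is what makes simultaneous measurement of calcium, lead, and other metals possible.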

In addition to its ability to characterize molecular mechanisms of lead toxicity at the cellular level in humans, ¹⁹F NMR can be used for simultaneous measurement of free cytosolic lead, calcium, zinc, iron, and other metals. Even if such a discrete and early marker of lead toxicity at the cellular level is found, the high cost of instrumentation and the technical expertise required for NMR will limit its application to selected studies of women, children, and adult workers in lead industries.

THE CALCIUM-DISODIUM EDTA PROVOCATION TEST

The calcium-disodium EDTA (CaNa2EDTA) provocation test is a diagnostic and therapeutic test to ascertain which children with blood lead concentrations between 25 and 55 µg/dL will respond to the chelating agent CaNa2EDTA with a brisk lead diuresis (Piomelli et al., 1984; CDC, 1985). Children with a brisk response qualify for a 5-day hospital course of CaNa2EDTA (Markowitz and Rosen, 1984; Piomelli et al., 1984; CDC, 1985). The test constitutes a chemical biopsy of exchangeable lead, which is considered to be the most toxic fraction of total body lead (Chisolm et al., 1975, 1976). CaNa2EDTA is confined to the extracellular fluid, and the lead it causes to be excreted originates primarily in bone and, to a lesser extent, in soft tissues (Osterloh and Becker, 1986).

An 8-hour provocation test in an outpatient department has been shown to be as reliable as a 24-hour test (Markowitz and Rosen, 1984), and urinary lead excretion can be measured conveniently and accurately with graphite-furnace AAS, for example, provided that urine is collected quantitatively in lead-free apparatus. However, the provocation test is impractical, cumbersome, and labor-intensive in young children, from whom quantitative urine collection is very difficult. EDTA chelation has potentially toxic side effects in children, and the redistribution of lead caused by chelation might actually increase lead toxicity in some target tissues, such as the brain. It is therefore unlikely that the test will ever find wide application in sensitive populations.
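The need for quantitative urine collection follows from how the test result is expressed: the outcome depends on the total lead excreted, which is urinary lead concentration multiplied by collected volume, usually normalized to the CaNa2EDTA dose (µg of lead per mg of chelator). The sketch below computes such a ratio; the function name and input values are hypothetical, and no diagnostic cut-off is encoded, since the appropriate criterion should be taken from the clinical protocol in use.

```python
def lead_excretion_ratio(
    urine_pb_ug_per_l: float,
    urine_volume_l: float,
    edta_dose_mg: float,
) -> float:
    """Micrograms of lead excreted per milligram of CaNa2EDTA administered.

    Total excreted lead = measured urinary concentration x collected volume,
    so any uncollected urine lowers the ratio proportionally.
    """
    if urine_volume_l <= 0 or edta_dose_mg <= 0:
        raise ValueError("volume and dose must be positive")
    total_pb_ug = urine_pb_ug_per_l * urine_volume_l
    return total_pb_ug / edta_dose_mg
```

If part of the urine is lost, the measured concentration in the remainder is unchanged but the computed total falls by the same fraction, biasing the ratio downward and potentially turning a true responder into an apparent nonresponder.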

In a recent study that used blood lead concentration and net corrected LXRF photon counts as predictors of CaNa2EDTA-test outcomes, 90% of lead-poisoned children were correctly classified as CaNa2EDTA-positive or -negative, with high sensitivity and specificity (Rosen et al., 1989). LXRF combined with blood lead measurement might therefore ultimately replace the CaNa2EDTA test and prove suitable for assessing large numbers of individual subjects in sensitive populations to select those at risk for toxic effects of lead. Furthermore, new effective oral chelating agents might substantially advance the treatment of childhood lead intoxication. Such agents are likely to be difficult to use for outpatient treatment, however, unless lead-poisoned children are treated in a lead-safe environment (transition housing) where they no longer ingest excessive lead, because an oral chelating agent could otherwise increase the absorption of ingested lead.
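The classification performance cited for Rosen et al. (1989) is summarized by three standard quantities: sensitivity (true positives among all truly positive), specificity (true negatives among all truly negative), and overall accuracy (fraction correctly classified). The sketch below computes them from a 2 × 2 table; the counts in the tests are hypothetical and are not the study's data.

```python
def classification_metrics(tp: int, fp: int, tn: int, fn: int) -> dict[str, float]:
    """Sensitivity, specificity, and accuracy from confusion-matrix counts.

    tp/fn: children who are truly test-positive, classified positive/negative.
    tn/fp: children who are truly test-negative, classified negative/positive.
    """
    if tp + fn == 0 or tn + fp == 0:
        raise ValueError("need at least one true positive case and one true negative case")
    return {
        "sensitivity": tp / (tp + fn),
        "specificity": tn / (tn + fp),
        "accuracy": (tp + tn) / (tp + fp + tn + fn),
    }
```

A screening method can reach high overall accuracy while still missing some positives, so sensitivity and specificity are reported alongside the percentage correctly classified.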

X-RAY FLUORESCENCE MEASUREMENT

During the last two decades, methods have been developed to measure lead in bone noninvasively. Because the residence time of lead in bone is long, these methods could extend biologic monitoring of lead exposure to long-term body stores associated with chronic exposure, thereby complementing plasma and whole-blood lead measurements, which respond principally to acute exposure.

Bone lead measurements might or might not be related directly to adverse biologic effects of lead exposure. As with any emerging technology, their utility needs to be assessed both with respect to unanswered questions about lead effects and with respect to other means of predicting those effects. The physiologic availability of lead from different bone compartments has received little investigation.

The relation between bone lead measurements and exposure or biologic dose of lead is not clear. There is some evidence that cumulative exposure can be estimated from bone lead measurements; but what this means, both for lead monitoring and for research into health effects of lead exposure, has yet to be explored in full detail.

The techniques for in vivo measurement of bone lead with XRF can be divided into two groups, with variations within each group. The major difference between the two groups is that one detects K-shell x rays and the other L-shell x rays for lead measurement. Radiation from an x-ray machine or other radiation source penetrates to the inner shells of lead atoms and ejects either a K- or an L-shell electron. When the vacancy left by the ejected electron is filled, a K or L fluorescent x ray is emitted, and the emitted energy is characteristic of the element that absorbed the original x ray, in this case lead. A detection system that collects, counts, displays, and analyzes emitted x rays according to their energy is thus able to determine how much lead is present in the sample. The characteristics of L-line and K-line XRF techniques are summarized in Table 5-9. Both techniques use low-energy γ rays or x rays, that is, below about 150 keV. In this energy range, a photon can undergo three types of interaction: photoelectric, Compton, and elastic (or coherent).

In photoelectric interaction, the incoming photon gives up all its energy to an inner-shell electron of an atom. The electron is ejected from the atom with a kinetic energy equal to the energy of the incoming photon minus the electron binding energy. The result is a vacancy in the inner electron shell of the atom. The vacancy is filled by a less tightly bound electron, and energy is released either as an x ray or by the ejection of an outer-shell (Auger) electron. Detecting the energy of the x ray is the basis for the quantitative measurement of lead in bone.
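The energy bookkeeping of the photoelectric process can be written in two lines: the photoelectron carries the photon energy minus the binding energy of the ejected shell, and the fluorescent x ray carries the difference between the binding energies of the vacancy shell and the shell that fills it. The sketch below uses rounded, standard tabulated binding energies for lead (treat them as approximate); the 100-keV and 80-keV photon energies in the tests are arbitrary illustrations.

```python
# Rounded tabulated electron binding energies for lead (keV), for illustration.
PB_BINDING_KEV = {"K": 88.004, "L2": 15.200, "L3": 13.035}


def photoelectron_energy_kev(photon_kev: float, shell: str) -> float:
    """Kinetic energy of the ejected electron: photon energy minus binding energy."""
    binding = PB_BINDING_KEV[shell]
    if photon_kev < binding:
        raise ValueError(f"a {photon_kev} keV photon cannot eject a {shell}-shell electron")
    return photon_kev - binding


def fluorescence_energy_kev(vacancy_shell: str, filling_shell: str) -> float:
    """Energy of the x ray emitted when an electron from filling_shell fills the vacancy."""
    return PB_BINDING_KEV[vacancy_shell] - PB_BINDING_KEV[filling_shell]
```

K minus L3 reproduces the lead K-alpha-1 line (about 74.97 keV), and K minus L2 the K-alpha-2 line (about 72.80 keV). The error raised for an 80-keV photon reflects why K-line XRF requires incident photons above the lead K binding energy of about 88 keV.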

In Compton scattering, the incoming photon interacts with an electron (usually a loosely bound valence electron), and the photon energy, minus a small amount of electron binding energy, is divided between the electron (as kinetic energy) and the scattered photon. The energies of the electron and the scattered photon depend on the angle of scatter, and the Compton-scattered photons form the most intense feature of XRF spectra. There are two strategies for minimizing the extent to which Compton-scattered photons limit the precision of XRF. One uses the angular dependence of Compton scattering and a choice of incoming photon energy to minimize the interference of this scattering with the measurement of lead x rays (Ahlgren et al., 1976; Somervaille et al., 1985). The other produces a polarized incoming x-ray beam (Wielopolski et al., 1989). Such x rays cannot be Compton-scattered at right angles to their plane of polarization, so their interference with the measurement of lead x rays is minimized.
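The angular dependence exploited by the first strategy is given by the Compton relation E′ = E / [1 + (E / mₑc²)(1 − cos θ)], where mₑc² ≈ 511 keV is the electron rest energy. The sketch below evaluates it; it uses the free-electron form and so ignores the small binding-energy correction mentioned above. The incident energies and angles in the tests are illustrative choices.

```python
import math

ELECTRON_REST_ENERGY_KEV = 510.99895  # m_e c^2


def compton_scattered_energy_kev(incident_kev: float, angle_deg: float) -> float:
    """Energy of a photon Compton-scattered through angle_deg (free-electron approximation)."""
    theta = math.radians(angle_deg)
    return incident_kev / (
        1.0 + (incident_kev / ELECTRON_REST_ENERGY_KEV) * (1.0 - math.cos(theta))
    )


if __name__ == "__main__":
    for angle in (0, 90, 150, 180):
        print(angle, "deg ->", round(compton_scattered_energy_kev(88.035, angle), 2), "keV")
```

For an incident photon just above the lead K binding energy (about 88 keV), backscatter near 180° shifts the Compton peak to roughly 65.5 keV, below the lead K-alpha lines at about 73–75 keV; choosing angle and incident energy this way keeps the dominant scatter feature away from the lead signal.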

In elastic scattering, the incoming photon interacts with an atom as a whole. Its direction changes, usually only slightly, and virtually no energy is absorbed, so the photon continues with, in effect, its full energy. The probability of elastic scattering depends on photon energy, angle of scatter, and atomic number of the scattering atom; it is greater for low-energy photons, for small scattering angles, and for high atomic numbers. In XRF spectra, elastic scattering produces a peak at nearly the incident photon energy; this interference is smaller than that produced by Compton scattering but larger than the lead x-ray signal from samples with lead concentrations in the range of biologic interest. The importance of elastic scattering for XRF is that, because of its strong dependence on atomic number and because lead is present only in trace amounts, in biologic tissue samples containing bone it arises primarily from calcium and phosphorus, not from lead. This feature of elastic scattering can be used to normalize the lead signal to the amount of bone mineral in practical measurements (Somervaille et al., 1985).
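Normalizing to the elastic (coherent) peak works because factors such as measurement geometry and overlying tissue affect the lead x-ray counts and the bone-mineral-dominated coherent counts in roughly the same proportion, so their ratio tracks lead per unit bone mineral. The sketch below illustrates the idea; it assumes net (background-subtracted) counts and a linear calibration, and the calibration slope is a hypothetical value that in practice would come from measurements of reference phantoms.

```python
def bone_lead_from_ratio(
    pb_net_counts: float,
    coherent_net_counts: float,
    calibration_slope: float,
) -> float:
    """Lead per unit bone mineral from the lead-to-coherent count ratio.

    calibration_slope is hypothetical: it converts the count ratio into
    concentration units (e.g., ug Pb per g bone mineral) via reference standards.
    """
    if coherent_net_counts <= 0:
        raise ValueError("coherent scatter counts must be positive")
    return calibration_slope * pb_net_counts / coherent_net_counts
```

Halving both counts, as a less favorable geometry might, leaves the estimate unchanged, which is the practical advantage of this normalization.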

Dosimetry