7 The Effects of Needle Exchange Programs

This chapter assesses the effects of needle exchange programs on HIV infections and drug use behaviors. Five major sources provide the evidentiary basis for the panel's assessment: (1) a 1993 review carried out by congressional request of the effectiveness of needle exchange programs (U.S. General Accounting Office, 1993), (2) a second comprehensive evaluation carried out by University of California researchers for the Centers for Disease Control and Prevention (Lurie et al., 1993), (3) selected studies published since the two 1993 literature reviews, (4) detailed examination of a set of recent studies in New Haven, Connecticut, and (5) detailed examination of a set of recent studies in Tacoma, Washington. The chapter closes with the panel's conclusions and recommendations, which are based on the evidence presented in the chapter and throughout the report.

Evaluations of needle exchange programs have been published by Des Jarlais et al. (1985), Stimson et al. (1988), the U.S. General Accounting Office (GAO) (1993), and the University of California (Lurie et al., 1993). We highlight the findings of the latter two reports, which were commissioned specifically by the federal government as evaluations of needle exchange programs. Both were published in 1993 and deal with needle exchange programs as they existed up to that time.

Needle exchange programs operate in a rapidly changing environment, and the panel reviews a number of studies that were published subsequent to the major reviews by GAO and the University of California.

Two strong lines of evidence emerged from the panel's examination of recent research on the effects of needle exchange programs on the spread of HIV infection: studies from New Haven and Tacoma. These two sets of studies were selected on the basis of the wealth of published information available about the programs they analyze. That is, not only do they provide a sizable amount of information on various endpoints of interest (i.e., incident infection and risk behaviors), but they also have carefully addressed potential alternative explanations for their reported findings.

POTENTIAL OUTCOMES

At the outset, it is important to recognize that the effects of needle exchange programs can be viewed from a number of different perspectives. Some of these perspectives involve outcomes relevant to improving the health status of injection drug users, and others reflect community-level concerns regarding potential negative effects that may be associated with the implementation of such programs. The following section identifies the outcome domains that are relevant to those distinct perspectives and are most germane to the panel's task of assessing the effects of needle exchange programs.

Possible Positive Outcomes

Needle exchanges are established in order to: (1) increase the availability of sterile injection equipment and (2) at the same time, remove contaminated needles from circulation among the program participants. Operation of the exchange, then, is expected to result in a supply of needles with reduced potential for infecting program participants with HIV and also to reduce sharing between individuals because of easier access to clean needles for any program participant. Typical exchanges also maintain such services as education concerning risk behaviors, referral to drug treatment programs (a step toward eliminating the route for all infection), and distribution of condoms. These measures offer independent prospects for reducing the spread of HIV. Appraisal of the success of a needle exchange program may involve measuring, for example, the numbers of needles exchanged; the cleanliness of circulating needles; the prevalence and incidence of HIV and other needle-borne diseases; referrals to drug treatment programs; enrollments in treatment programs; and changes in the risk behaviors of needle exchange participants. An observed pattern of favorable outcomes would reflect health benefits from the operation of the program.

Possible Negative Outcomes

The possibility of negative results from needle exchange program operations also demands attention. One possible negative outcome is an increase in the number of improperly discarded used needles. Another possibility is that the issuance of injection equipment, condoned by government, will "send a message" undercutting efforts at combatting illegal drug use and will promote more drug use (with more attendant HIV incidence). A third possible negative outcome is that needle exchanges will lower the perception of risk of injection drug use and thus attract more users to inject drugs and to other forms of illegal drug use.

TABLE 7.1 Possible Outcomes and Expectations of Successful Needle Exchange Programs

| Possible Positive Outcomes | Possible Negative Outcomes |
|---|---|
| Reduction in pool of infected needles | Increase in drug use among needle exchange program clients |
| Reduction in drug-related risk behavior | Increase in new initiates to injection drug use |
| Reduction in sexual risk behavior | Increase in drug use in wider community |
| Increase in referrals to drug abuse treatment | Increase in number of contaminated needles unsafely discarded (e.g., on streets) |
| Reduction in new infections among client population | |

Appraisal of the success of a needle exchange program, then, should also attend to measures of these possible negative outcomes. An observed pattern of negative outcomes would weigh against the idea that needle exchange programs are beneficial.

Assessment of the effects of needle exchanges involves the simultaneous consideration of a number of intended positive and unintended negative outcomes (Table 7.1). Ideally, a successful exchange program would reduce the risk of new infection among injection drug users without increasing drug use and health risks to the public.

THE PANEL'S APPROACH TO THE EVIDENCE

The purpose of this section is to clearly explain the panel's perspective in assessing the effectiveness of needle exchange programs. We first briefly review the basis for the traditional review procedures. We then outline the argument for a different approach—one that examines the patterns of evidence in order to assess effectiveness.

The Traditional Approach: Considering the Preponderance of the Evidence

Traditional reviews of experimental analysis search for studies with well-controlled research designs. By well-controlled designs, we mean ones that can substantially protect against the introduction of systematic influences (i.e., bias) other than the intervention condition under consideration. High ratings on credibility are usually given to comparative designs that involve: (1) random assignment of participants to conditions (i.e., needle exchange program versus "usual services"); (2) minimal attrition of participants from being measured; (3) measurement procedures that minimize the role of response biases; and (4) sufficient statistical sensitivity (i.e., statistical power).

It is unlikely that evaluations of needle exchange programs will ever be carried out with ideal controls that warrant high confidence in the conclusions that can be drawn from a single definitive study. There are at least two broad reasons for this: (1) multiple actions generally are initiated in a given community setting, making it difficult to separate the effects of a needle exchange program from those of other prevention efforts by studying time trends; and (2) the development of a comparative research design that relies on random assignment of individuals to receive needle exchange program services (or not) faces technical, ethical, and logistical difficulties. Given these limitations, it seems reasonable to explore alternative means of assessing the credibility of the evidence underlying claims about the effectiveness of needle exchange programs. Before doing so, however, it is useful to examine how previous research reviews have attempted to incorporate the traditional emphasis on design-induced control.

Two reviews were commissioned by the federal government and published in 1993: one by the U.S. General Accounting Office (1993) and one by the University of California at San Francisco (Lurie et al., 1993). Prior to 1993, a number of other studies were published (Des Jarlais et al., 1985; Stimson et al., 1988).

A close examination of the manner in which these studies were conducted strongly suggests their reliance on the quality of the evidence in individual studies, which is based on the strength of their research designs. The language of the assessments also reflects the expectation that, when they are taken as a collective across studies, even though the designs are less than ideal, the preponderance of evidence will weigh in favor of or against a definitive conclusion about needle exchange programs.

Taken together, these studies tend to suggest that needle exchange programs are either neutral or positive in terms of potential positive effects and that they do not demonstrate any potential negative effects. However, each study's conclusions are often less than firm because of its methodological limitations.

An Alternative Approach: Looking at the Patterns of Evidence

When the designs of a group of studies are limited, little inferential clarity is gained by looking at the preponderance of evidence, even if it converges across all available studies. At a minimum, there must be a sufficient number of higher-quality (i.e., high-credibility) studies within the pool of studies to assess whether the evidence from the lower-quality studies is biased in a particular fashion.

Rationale

For well-designed interventions with well-designed experimental assessment procedures, examining each outcome one at a time is obviously justifiable on statistical and logical grounds. However, because most studies that have attempted to assess the effectiveness of needle exchange programs have limited study designs, and because there are serious practical constraints associated with conducting a randomized controlled trial, some may conclude that it is impossible ever to determine whether needle exchange programs are effective. In the panel's view, however, such a conclusion is both poor scientific judgment and bad public health policy. Indeed, to adopt the position that evidence short of a randomized trial is useless amounts to denying the possibility of learning from experience, which, though often difficult, is not impossible.

In many areas of social sciences and public health research, the so-called definitive study—a randomized control experiment (that is, a randomized double-blind placebo controlled trial)—is an ideal that cannot be implemented. For example, it is unethical to consider use of a clinical trial design to show that smoking causes lung cancer (Hill, 1965). Scientific judgment develops instead through a series of studies using cross-sectional retrospective and prospective designs, in which later research avoids the flaws of earlier work but may introduce problems of its own. The improbability of being able to carry out the definitive study of the effects of certain HIV and AIDS prevention programs, including needle exchange programs, does not necessarily preclude the possibility of making confident scientific judgments about the effects of such prevention programs. As A. Bradford Hill (1965:300), one of this century's foremost biostatisticians, commented three decades ago:

All scientific work is incomplete—whether it be observational or experimental. All scientific work is liable to be upset or modified by advancing knowledge. That does not confer upon us a freedom to ignore the knowledge we already have, or to postpone the action that it appears to demand at a given time.

Sooner or later there comes a time for decision on the basis of evidence in hand. In the case of the efficacy of needle exchange programs, urgency is added because the disease in question, AIDS, is fatal, is contagious, and has been seen to spread rapidly in various settings. Previous assessments of individual studies (as well as the panel's own) did not rate them as highly conclusive, because none of them used the gold standard of randomized controlled research designs. The panel therefore elected to rely on an approach that assesses the pattern of evidence in determining the effects of these HIV and AIDS prevention programs rather than relying on a preponderance of evidence approach.

In this approach to assessing the effects of needle exchange programs and the credibility of evidence surrounding a needle exchange program, we look at the consistency of the pattern of evidence that is available from multiple data sources about the same program. Taking this approach greatly expands the depth and breadth of the evidentiary base, because we try to understand the relationships among the parts of the intervention model, the process, and their outcomes. Rather than interpreting the effects of the intervention on individual outcomes, in isolation, the pattern of evidence approach considers interrelated conditions, such as intermediate outcomes (Cordray, 1986).

For example, consider the evaluation of a needle exchange program that reveals a reduction in new HIV infections over time among injection drug users who used the program. By traditional standards, this design would be classified as relatively weak because there is no control or comparison condition. Without further information, it is not possible to confidently conclude that the introduction of the needle exchange program is responsible for the observed decline on the basis of this one piece of evidence (the observed decline) alone.

Ruling In Plausibility

By examining whether certain required conditions were present, it is possible to probe the plausibility that the needle exchange program was responsible, at least in part, for the reduction. This type of assessment requires the specification of a series of if-then propositions. That is, if there is a real connection between the introduction of the needle exchange program and the observed decline in new infections, then a series of conditions must be present in order to increase confidence in the conclusion that the program is at least partially responsible for the observed outcome. The conclusion that the needle exchange program is plausibly connected to the decrease in new HIV infections is more credible if there is evidence that, as the putative causal agent, it was actually present in the community. This means that there must be an empirical pattern of evidence that, in effect, rules in its plausibility. Programmatically, the pattern of evidence might include:

- information that the needle exchange program was established;
- data that it exchanged a sufficient number of needles;
- data that it provided needles to a substantial enough portion of the injection drug users in the community;
- data that those who used the needle exchange reduced their level of drug-use risk behaviors (e.g., sharing, use of bleach); and
- data that those who used the needle exchange most intensively show a greater level of risk reduction.

The argument that undergirds this approach is that programs have a structure and mechanisms that establish a logical pattern of expectations that can be tested empirically. To the extent that the empirical evidence supports these propositions, the plausibility that the needle exchange program was responsible for the observed change should increase. That is, the plausibility increases through repeated assessments. As a simple example, if there is a reduction in HIV incidence but the needle exchange program failed to exchange a single needle, it is not reasonable to conclude that the needle exchange program was responsible for the decline, regardless of the strength of the design underlying the HIV incidence data. However, through multiple assessments, involving a logical network of evidence, it may be possible to derive a portrait of the plausibility that the needle exchange program is implicated in the change process.
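The if-then propositions listed above can be read as a simple checklist. The following Python sketch is only an illustration of that logic, not a procedure the panel describes: the `ProgramEvidence` record, its field names, and the two thresholds (`min_needles`, `min_share`) are hypothetical, since the text speaks only of a "sufficient number" of needles and a "substantial enough portion" of users without giving figures.

```python
from dataclasses import dataclass


@dataclass
class ProgramEvidence:
    """Hypothetical summary of what is documented about one program."""
    established: bool                  # the program actually operated
    needles_exchanged: int             # total needles exchanged
    share_of_injectors_reached: float  # fraction of local injection drug users served
    users_reduced_risk: bool           # participants reduced drug-use risk behaviors
    heavier_users_reduced_more: bool   # most intensive users show greater reduction


def ruling_in_checks(ev, min_needles, min_share):
    """Evaluate each if-then condition; the thresholds are analyst-supplied assumptions."""
    return {
        "program established": ev.established,
        "sufficient needles exchanged": ev.needles_exchanged >= min_needles,
        "substantial share of users reached": ev.share_of_injectors_reached >= min_share,
        "participants reduced risk behaviors": ev.users_reduced_risk,
        "greater reduction among intensive users": ev.heavier_users_reduced_more,
    }


def unsupported_conditions(checks):
    """List the conditions that the available evidence fails to support."""
    return [name for name, ok in checks.items() if not ok]


# The example from the text: HIV incidence fell, but not a single needle was exchanged.
no_exchange = ProgramEvidence(True, 0, 0.0, False, False)
checks = ruling_in_checks(no_exchange, min_needles=1, min_share=0.01)
print(unsupported_conditions(checks))
```

Any condition that appears in the printed list weakens the claim that the program was responsible for the decline; an empty list means only that the program has been ruled in as plausible, not that rival explanations have been ruled out.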

Ruling in the plausibility that the needle exchange program is a causal agent, through empirical assessment, is only half the story. It is still possible that other features of the program or research process contain biases that affect the HIV incidence. In traditional discussions of causal analysis, the notion of excluding (or rendering implausible) rival explanations has been the hallmark of competent experimental analysis. To the extent that repeated efforts to probe the results fail to disconfirm the plausibility that the intervention was at least partially responsible, its plausibility should be enhanced. Therefore, an assessment of the pattern of evidence not only entails ruling in the plausibility that the needle exchange program is a causal agent, but also requires ruling out plausible alternative explanations.

The Panel's Synthesis

The panel analyzed the patterns of evidence from five sources: two evaluations of the research published before 1993, the findings of studies published since 1993, and two sets of studies that provide the best available detailed account of how needle exchange programs impact risk behaviors and viral infections—one on New Haven, the other on Tacoma.

The process of selecting studies for detailed examination involved a comprehensive analysis of the research findings of individual needle exchange and bleach distribution projects. The panel generated a list of published papers and presentations on needle exchange evaluation projects in the United States, Canada, and Europe. A meeting was held to judge which reports included data that might be used in a review. The projects were subsequently grouped by city and divided among panel members so that each city project had two independent reviewers. The studies from each city were reviewed, annotated on a formal evaluation form, and then discussed with the full panel at a subsequent meeting. Following this review, at a separate meeting, the panel decided to limit itself to studies conducted in the United States, because the legal and cultural environments of other countries are sufficiently different to raise questions about whether data are applicable to the United States. Two U.S. cities, New Haven and Tacoma, were found to have a sufficient amount of data published from a variety of perspectives (e.g., incident infection, behavioral risk) to warrant inclusion in this review.

Various criteria were used in deciding to pursue the New Haven and Tacoma sets of studies. Consistent with the logic of the patterns of evidence approach, the first criterion applied in selecting studies was that the site or project had to have been comprehensively studied. That is, there had to be empirical evidence establishing that the needle exchange program was operational, that the mechanisms of the exchange process had been studied, and that there was an estimate of HIV incidence or, as in the case of New Haven, a proxy measure.

The level of activity in the prevention environment can make it difficult to isolate the influence of the needle exchange program. A second criterion was that, in the sites and projects selected, the needle exchange program had to be the predominant (if not the only) intervention ongoing at the time of the assessment. This criterion implies a selection process that focuses on high-contrast sites (i.e., the needle exchange program intervention dominates prevention activities in the area), thus yielding estimated effects of needle exchange that cannot readily be attributed to other prevention activities reaching the program participants.

U.S. GENERAL ACCOUNTING OFFICE REVIEW

In late 1991, the House Select Committee on Narcotics Abuse and Control requested that the U.S. General Accounting Office carry out a review of the effectiveness of needle exchange programs.1

Procedure

GAO researchers carried out an extensive review of the literature to identify empirical evaluation studies that had appeared in refereed or peer-reviewed journals. They conducted site visits to programs located in Tacoma, Washington, and New Haven, Connecticut. Their review identified a total of 20 published studies and 21 abstracts on evaluations of needle exchange programs originating from nine distinct research projects, all but one of which (the Tacoma study) involved programs outside the United States. Among the nine research projects were one from Australia, one from Canada, two from the Netherlands, one from Sweden, and three from the United Kingdom.

The GAO team developed a list of eight relevant outcome measures: (1) rate of needle sharing; (2) prevalence of injection drug use; (3) frequency of injection; (4) rate of new HIV infections; (5) rate of new entrants to injection drug use; (6) incidence rate of other blood-borne infections; (7) rate of other HIV risk behaviors; and (8) risk to the public's health. They also identified three methodological criteria that had to be satisfied before findings could be considered: (1) the findings had to have been published in a scientific journal or government research monograph; (2) they had to have reached statistical significance; and (3) the reported effects of the needle exchange program could not have been attributed by the authors to any other source. Of the eight listed outcome measures, only three outcomes met the methodological standard of evidence set by the GAO team: (1) rate of needle sharing, (2) prevalence of injection drug use, and (3) frequency of injection. The GAO team summarized descriptive information, whenever it was available, on the ability of needle exchange programs to reach out to injection drug users and refer them to drug treatment and other health services.
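The three methodological criteria act as a conjunctive filter: a finding is considered only if it passes all of them. The sketch below illustrates that rule in Python under stated assumptions; the `Finding` record, its field names, and the four example findings are hypothetical and are not the GAO team's data.

```python
from dataclasses import dataclass


@dataclass
class Finding:
    """Hypothetical record of one reported finding."""
    label: str
    in_journal_or_monograph: bool     # criterion 1: published in a journal or monograph
    statistically_significant: bool   # criterion 2: reached statistical significance
    attributed_to_other_source: bool  # criterion 3 fails if the authors credit another cause


def meets_gao_criteria(finding):
    """A finding is considered only when all three criteria hold."""
    return (
        finding.in_journal_or_monograph
        and finding.statistically_significant
        and not finding.attributed_to_other_source
    )


# Invented examples, one per way of passing or failing the filter.
findings = [
    Finding("finding A", True, True, False),   # passes all three criteria
    Finding("finding B", True, False, False),  # null result: not significant
    Finding("finding C", False, True, False),  # e.g., a conference abstract only
    Finding("finding D", True, True, True),    # authors attribute effect elsewhere
]

considered = [f.label for f in findings if meets_gao_criteria(f)]
print(considered)
```

Note that "finding B" is dropped even though it is published and unconfounded; a significance requirement of this kind screens out well-conducted studies that found no difference.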

Results

Tables 7.2 and 7.3 summarize the GAO findings. Regarding the potential positive outcomes, of the nine research projects reviewed, two reported a reduction in needle sharing, and a third reported an increase. It should be noted that the increase in sharing by needle exchange participants resulted from their passing on more used injection equipment (Klee et al., 1991); this finding was not replicated in a follow-up report by the same investigator (Klee and Morris, 1994). The earlier finding from that study appears to have been a transient effect that occurred before the needle exchange programs in the area reached full operation; that is, needle exchange participants were being used as a source of needles among their respective networks of injection drug users (Klee and Morris, 1994). The researchers concluded, moreover, based on the data available from six of the nine projects, that the needle exchange programs were successful in reaching injection drug users and providing a link to drug treatment and other health services.

Regarding potential negative outcomes of needle exchange programs, all five projects that reported findings on injection drug use by program participants (four on frequency of injection and one on prevalence of use) found that use did not increase. (Note that three of these findings did not reach statistical significance.) This led GAO to conclude that "some research suggests programs may reduce AIDS-related risk behavior" (p. 6) and "most projects suggest that programs do not increase injection drug use" (p. 8). GAO reported that there was sufficient evidence to suggest that needle exchange programs "hold some promise as an AIDS prevention strategy" (p. 4).

TABLE 7.2 GAO Review: Results of Needle Exchange Program Study Projects

In summary, the GAO report, which was the first government report to evaluate needle exchange programs, concluded that such programs hold promise as interventions to limit HIV transmission. The criteria for assessing the validity of the study findings and for including reports in the review were quite stringent. In particular, the criterion of statistical significance means that studies that showed no difference in the frequency of injection or needle sharing were excluded. Therefore, the argument that needle exchange programs cause no harm is not fully characterized, because studies with a high level of statistical power that showed no difference were excluded.

TABLE 7.3 GAO Review: Needle Exchange Program Outcomes Measured and Reported

| Project Number by Country | Attracted Injection Drug Users Not in Treatment | Referred Injection Drug Users to Drug Treatment | Referred Injection Drug Users to Other Health Services |
|---|---|---|---|
| Australia | | | |
| 1 | | | |
| Canada | | | |
| 2 | | Yes | Yes |
| Netherlands | | | |
| 3 | | | |
| 4 | Yes | | |
| Sweden | | | |
| 5 | Yes | | Yes |
| United Kingdom | | | |
| 6 | Yes | | |
| 7 | Yes | Yes | Yes |
| 8 | | | |
| United States | | | |
| 9 | Yes | Yes | |

SOURCE: Adapted from Needle Exchange Programs: Research Suggests Promise as an AIDS Prevention Strategy (U.S. General Accounting Office, 1993:10).

UNIVERSITY OF CALIFORNIA REPORT

In September 1993, a second government report, The Public Health Impact of Needle Exchange Programs in the United States and Abroad, was published by the University of California for the Centers for Disease Control and Prevention (CDC). This report consists of a summary volume with two supporting volumes and addresses a number of the questions that this panel was asked to address.

Procedure

The University of California report was the work of a team of 12 individuals with expertise in clinical medicine, nursing, psychology, anthropology, sociology, cost-benefit modeling, and epidemiology. None of the team members was identified in published writings as either in favor of or opposed to needle exchange programs. In a process that included discussions with an advisory committee, public health officials, needle exchange program staff members, researchers, experts in drug abuse treatment and injection drug use, and community leaders, a list of 14 research questions was generated: (1) How and why did needle exchange programs develop? (2) How do needle exchange programs operate? (3) Do needle exchange programs act as bridges to public health services? (4) How much does it cost to operate needle exchange programs? (5) Who are the injection drug users who use needle exchange programs? (6) What proportion of all injecting drug users in a community uses the needle exchange program? (7) What are the community responses to needle exchange programs? (8) Do needle exchange programs result in changes in community levels of drug use? (9) Do needle exchange programs affect the number of discarded syringes? (10) Do needle exchange programs affect rates of HIV drug and/or sex risk behaviors? (11) What is the role of studies of syringes in injection drug use research? (12) Do needle exchange programs affect rates of diseases related to injection drug use other than HIV? (13) Do needle exchange programs affect HIV infection rates? and (14) Are needle exchange programs cost-effective in preventing HIV infection?

The investigators conducted a formal review of existing research; made site visits and sent mail surveys to needle exchange programs; formed focus groups with injection drug users; and applied statistical modeling techniques. Data collected from each approach were sorted under one of the 14 questions about the impact of needle exchange programs. The aim of the literature review was to identify as many written works as possible relating to the effectiveness of needle exchange programs. Computer searches of AIDSLINE and MEDLINE provided a first cut and were augmented by items from the bibliographies of articles found therein. In addition, the research team reviewed abstracts from the annual International Conference on AIDS from 1988 to 1993 and the annual meetings of the American Public Health Association from 1987 to 1992. To identify unpublished materials, needle exchange program staff were contacted about internal reports, and a search was made for newspaper and magazine clippings, government and institutional reports, and relevant book chapters.

From this effort, 1,972 data sources were identified, which included 475 journal articles, 381 conference abstracts, 236 reports, 159 unpublished materials, 499 newspaper and magazine articles, 94 books or chapters, and 128 personal communications or other sources. All materials were reviewed and coded according to which research question(s) they addressed. Project members were assigned responsibility for synthesizing information for each of the 14 research questions. Each of the studies was assessed using a standardized format and ranked on a scale from 1 to 5:

1. incomplete, inadequate, or not relevant to the research question;
2. unacceptable: contains flaws in design or reporting that make interpretation unreliable;
3. acceptable: provides credible evidence but has limited detail, precision, or generalizability;
4. well done: provides detailed, precise, and persuasive evidence; and
5. excellent: compelling and complete.

The final ranking of an article was determined by agreement of at least two project members. Only studies ranked 3 or higher were used in the synthesis.
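The screening rule and the source tally reported above can be checked with a few lines of Python. This is a minimal sketch, not the University of California team's procedure: it assumes the five descriptions are listed in ascending order of the 1-to-5 scale (so that "acceptable" corresponds to 3), and the names `RATING_LABELS`, `SOURCE_COUNTS`, and `used_in_synthesis` are introduced here for illustration only.

```python
# Assumed mapping of the 1-to-5 scale to the five descriptions, in listed order.
RATING_LABELS = {
    1: "incomplete, inadequate, or not relevant",
    2: "unacceptable",
    3: "acceptable",
    4: "well done",
    5: "excellent",
}

# Only studies ranked 3 or higher were used in the synthesis.
MIN_RATING_FOR_SYNTHESIS = 3

# Counts of data sources by type, as reported in the text.
SOURCE_COUNTS = {
    "journal articles": 475,
    "conference abstracts": 381,
    "reports": 236,
    "unpublished materials": 159,
    "newspaper and magazine articles": 499,
    "books or chapters": 94,
    "personal communications or other sources": 128,
}


def used_in_synthesis(rating):
    """Return True if a study with this final rating enters the synthesis."""
    if rating not in RATING_LABELS:
        raise ValueError(f"rating must be an integer from 1 to 5, got {rating!r}")
    return rating >= MIN_RATING_FOR_SYNTHESIS


# The category counts should add up to the reported total of 1,972 sources.
total_sources = sum(SOURCE_COUNTS.values())
print(total_sources)

for rating, label in RATING_LABELS.items():
    print(rating, label, "used" if used_in_synthesis(rating) else "excluded")
```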

In addition to the review of existing research, the University of California team conducted site visits to 15 cities, 10 of which were in the United States, 3 in Canada, and 2 in Europe.2 The sites were selected on the basis of a published list of programs and reflected the range of existing needle exchange programs with respect to size, legal status, geographical location, injection drug users' HIV seroprevalence, and extent of prior evaluation research. (CDC was consulted during the selection process.) At each site, the research team used multiple data collection methods with multiple iterations, consisting of interviews, focus groups, and observation using a formal qualitative research strategy.

The methodology was codified in a manual. Standardized training of the research staff was provided. In the 15 cities, 33 needle exchange sites were visited and a total of 239 interviews with needle exchange directors and staff, public health officials, injection drug use researchers, community leaders, program participants (11 focus groups), and injection drug users not enrolled in programs (7 focus groups) were completed. Observation guidelines were pretested at two sites and the results were compared qualitatively for interrater reliability before adopting the final guidelines.

Results

Of the nine outcomes and expectations for successful needle exchange programs listed in Table 7.1, the University of California report addressed eight. That is, research findings concerning four of the five possible positive outcome domains were reviewed: reduction in drug-related and sexual risk behaviors, increase in referrals to drug abuse treatment, and reduction in HIV and other infection rates. The report addressed all four possible negative outcomes: increases in (1) drug use by program participants; (2) new initiates to injection drug use; (3) drug use in the community in general; and (4) the number of contaminated needles discarded.

Possible Positive Outcomes

Reduction in High-Risk Behavior

The University of California report reviewed data on reported needle-sharing frequency in studies of needle exchange programs. Of the 26 evaluations addressing behavior change associated with the use of needle exchange programs that were identified, 16 were deemed of acceptable quality (rating 3 or higher). Of the 16 studies, 14 presented data on the frequency of needle sharing; 9 of these had comparison groups reported. As Table 7.4 indicates, 10 of the 14 studies showed a beneficial effect of the needle exchange programs on reported frequency of needle sharing; 4 showed a mixed or neutral effect; and none showed an increased frequency of needle sharing. Regarding sexual risk behavior change, the report concluded that the findings were neutral. That is, four studies reported beneficial effects of needle exchange programs relating to sexual risk associated with number of partners and two reported mixed or neutral effects. When reviewing studies that addressed risk associated with partner choice, three showed beneficial effects and two reported mixed or neutral effects. Finally, beneficial effects of needle exchange programs relating to condom use were observed in one study, mixed or neutral results in another, and adverse effects in three studies.

Increased Referrals to Drug Treatment

The University of California report noted that 17 of 18 U.S. and Canadian programs visited stated that they provide referrals to drug treatment. Of 33 U.S. programs surveyed, 3 reported treatment services on site. The report described, but did not study, the extent to which referred clients enter and are retained in treatment; the 6 programs that collect data on referrals reported 2,208 referrals. The report noted (Lurie et al., 1993:236) that the paucity of drug abuse treatment slots in many cities limits the usefulness of needle exchange program referrals to drug abuse treatment. This shortage affects both the likelihood that a needle exchange program will make a referral and the likelihood that a referral will link a client with treatment.

Reduction in HIV Infection Rates

The University of California report identified 21 studies that were relevant to the issue of whether needle exchange programs impact rates of HIV infection: 2 case studies, 7 serial community cross-sectional studies, 6 serial needle exchange program cross-sectional studies, 1 case-control study, and 3 prospective studies. The quality of studies was rated on a 5-point scale ranging from a low of 1 (not valid) to a high of 5 (excellent) and a mid-point of 3 (acceptable). Only two of the studies received a quality rating of 3 or higher, and two others were rated between 2 and 3. None of the studies showed increased prevalence or incidence of HIV infection among needle exchange participants.

TABLE 7.4 University of California Report: Studies of Behavior Change With Quality Rating of Three or Greater

| Outcome Measure | Beneficial Needle Exchange Program Effect | Mixed or Neutral Needle Exchange Program Effect | Adverse Needle Exchange Program Effect |
|---|---|---|---|
| Drug risk | | | |
| Sharing frequency | Amsterdam^a,b (Hartgers et al., 1989); London/SW England^a (Dolan et al., 1991) (Donoghoe et al., 1991); New South Wales (Schwartzkoff, 1989); New York City (Paone et al., 1993); Portland^a (Oliver et al., 1991) (Oliver et al., 1992) (Des Jarlais and Maynard, 1992); San Francisco^a (Watters and Cheng, 1991) (Guydish et al., 1989) (Feldman et al., 1989); Tacoma (Hagan et al., 1991b) (Hagan et al., 1992a); Tacoma (Hagan et al., 1993); Wales^a (Stimson et al., 1991) | Amsterdam^a (Hartgers et al., 1992); Amsterdam^a (van Ameijden et al., 1993); England/Scotland^a (Donoghoe et al., 1989) (Stimson et al., 1988) (Stimson et al., 1989); Manchester^a (Klee et al., 1991) | |
| Giving away used needles | London/SW England^a (Dolan et al., 1991) (Donoghoe et al., 1991); Tacoma (Hagan et al., 1991b) (Hagan et al., 1992a); Tacoma (Hagan et al., 1993) | Amsterdam (van Ameijden et al., 1993) | Manchester^a (Klee et al., 1991) |
| Needle cleaning | New South Wales (Schwartzkoff, 1989); Portland^a (Oliver et al., 1991) (Oliver et al., 1992) (Des Jarlais and Maynard, 1992); Tacoma (Hagan et al., 1993) | Amsterdam (Hartgers et al., 1992) | |
| Injection frequency | Amsterdam^a,c (Hartgers et al., 1989); Tacoma (Hagan et al., 1991b) (Hagan et al., 1992a); Wales^a (Stimson et al., 1991) | New York City (Paone et al., 1993); San Francisco (Watters and Cheng, 1991) (Guydish et al., 1989) (Feldman et al., 1989); San Francisco (Guydish et al., 1993); Tacoma (Hagan et al., 1993) | London/SW England^a (Dolan et al., 1991) (Donoghoe et al., 1991) |
| Sex risk | | | |
| Number of partners | England/Scotland^a (Donoghoe et al., 1989) (Stimson et al., 1988) (Stimson et al., 1989); New South Wales (Schwartzkoff, 1989) | London/SW England^a (Dolan et al., 1991) (Donoghoe et al., 1991) | |
| Partner choice | England/Scotland^a (Donoghoe et al., 1989) (Stimson et al., 1988) (Stimson et al., 1989) | London/SW England^a (Dolan et al., 1991) (Donoghoe et al., 1991) | |
| Condom use | New South Wales (Schwartzkoff, 1989) | Wales^a (Stimson et al., 1991) | England/Scotland^a (Donoghoe et al., 1989) (Stimson et al., 1988) (Stimson et al., 1989) |

NOTE: SW = southwest. ^a Compared with control group(s). ^b Cross-sectional component. ^c Retrospective component.

SOURCE: The Public Health Impact of Needle Exchange Programs in the United States and Abroad, Volume 1 (Lurie et al., 1993:414).

Given the quality rating of the studies, it is not surprising that the University of California report concluded that the studies available up to the time of the report (Lurie et al., 1993) do not, and for methodological reasons probably cannot, provide clear evidence that needle exchange programs decrease HIV infection rates. However, needle exchange programs do not appear to be associated with increased rates of infection.

It is intrinsically difficult to measure effects of intervention on the incidence of new infections of rare diseases, whose victims ordinarily do not show symptoms at the time of infection. Although most of the early studies used prevalent infection as the outcome measure, the more appropriate measure is incident or new infection. However, a further complication is that incidence is low in most locations, thereby requiring larger study populations to demonstrate program effects. The University of California report noted (Lurie et al., 1993:465) appropriately:

Well-conducted, sufficiently large case-control studies offer the best combination of scientific rigor and feasibility for assessing the effect of needle exchange programs on HIV rates.
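The point that low incidence requires larger study populations can be illustrated with a rough calculation. The sketch below is not drawn from the University of California report; it uses a standard normal approximation for comparing two incidence rates with equal follow-up in each group, and the rates, significance level, and power are hypothetical values chosen only for illustration.

```python
from statistics import NormalDist


def person_years_per_group(rate_a, rate_b, alpha=0.05, power=0.80):
    """Approximate person-years needed in EACH group to detect a difference
    between two incidence rates (events per person-year).

    Normal approximation for two Poisson rates with equal follow-up in both
    groups. Illustrative only; not a substitute for a formal design.
    """
    if rate_a == rate_b:
        raise ValueError("rates must differ")
    z_alpha = NormalDist().inv_cdf(1 - alpha / 2)  # two-sided test
    z_beta = NormalDist().inv_cdf(power)
    return (z_alpha + z_beta) ** 2 * (rate_a + rate_b) / (rate_a - rate_b) ** 2


# Hypothetical rates, not taken from any study in this chapter.
moderate = person_years_per_group(0.04, 0.02)   # 4 vs. 2 per 100 person-years
low = person_years_per_group(0.004, 0.002)      # 0.4 vs. 0.2 per 100 person-years
print(round(moderate), round(low))
```

Under these assumptions, a tenfold drop in both rates (with the same relative difference) raises the required follow-up tenfold, which is the difficulty the paragraph above describes.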

Possible Negative Outcomes

Increase in Program Participant Drug Use

The University of California report noted that eight "acceptable" studies were identified that presented data on the issue of reported injection frequency. As Table 7.4 shows, three studies found reductions in injection associated with needle exchange programs; four found mixed or no effects; and one found an increase in injection compared with controls. This last study also found reduced needle sharing reported among needle exchange participants. This study noted that the apparent increase in injection could be attributed to several other factors, including the differential dropout of low-level injectors. The report also reviewed the methodological limitations of the studies, including the potential for socially acceptable responses by injection drug users. On balance, because of methodological problems, the report drew no strong conclusions about levels of injection drug use.

Increase in New Initiates to Injection Drug Use

The University of California report reviewed a variety of studies and used focus groups to understand whether needle exchange programs could encourage persons to initiate injection drug use. In reviewing the demographic data from the programs, the report noted that the median age of participants across programs ranged from 33 to 41, and the median duration of injection drug use from 7 to 20 years. This suggests that most participants initiated injection drug use prior to using the needle exchange program.

A review of serial cross-sectional studies of injection drug users in San Francisco noted an increase in the mean age of the samples over time from 34 in 1986 to 40 in 1990, suggesting that there was not an increase in young new injectors over time. Researchers in Amsterdam used a capture-recapture method to estimate the number of injection drug users between 1983 and 1988. Despite initiation of a needle exchange program in 1984, no change in the number of injection drug users was reported, and the average age of drug users increased over time. Furthermore, the proportion of drug users under age 22 decreased from 14 percent in 1983 to 3 percent in 1988. The authors concluded that there was no increase in the number of new initiates into injection drug use.
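For readers unfamiliar with capture-recapture estimation, the following sketch shows the basic two-sample idea. The report does not state which estimator the Amsterdam researchers used, so the Lincoln-Petersen and Chapman formulas here are an assumption about the general approach, and all counts are hypothetical.

```python
def lincoln_petersen(first_sample, second_sample, recaptured):
    """Classic two-sample estimate of total population size."""
    if recaptured <= 0:
        raise ValueError("at least one individual must appear in both samples")
    return first_sample * second_sample / recaptured


def chapman(first_sample, second_sample, recaptured):
    """Chapman's bias-corrected variant, better behaved for small overlaps."""
    return (first_sample + 1) * (second_sample + 1) / (recaptured + 1) - 1


# Hypothetical counts: 200 users identified in one data source, 150 in a
# second source, and 30 found in both.
print(lincoln_petersen(200, 150, 30))
print(round(chapman(200, 150, 30), 1))
```

The smaller the overlap between the two sources, the larger the estimated population, so a stable estimate across years depends on both samples being drawn in comparable ways.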

The report concluded, on the basis of evidence from surveys, that (Lurie et al., 1993:357) "needle exchange programs are not associated with an increase in community levels of injecting."

Focus groups were consulted. Of 10 focus groups from needle exchange programs, comprising 65 injection drug users, 2 individuals thought needle exchange programs could encourage nonparenteral drug users to start injecting. Among seven nonprogram focus groups comprising 47 injection drug users, 2 individuals thought needle exchange programs could encourage nonparenteral drug users to start injecting. The focus group data were viewed as corroborating evidence for the data available from surveys arguing against an effect of needle exchange programs on increasing the community levels of injection drug use.

Increased Drug Use in the Community

The University of California report addressed the potential for increased drug use in the community by reviewing the studies noted in the previous section. Researchers searched for additional data by examining established data sets of drug abuse indicators and answers to additional questions asked of focus groups of injection drug users.

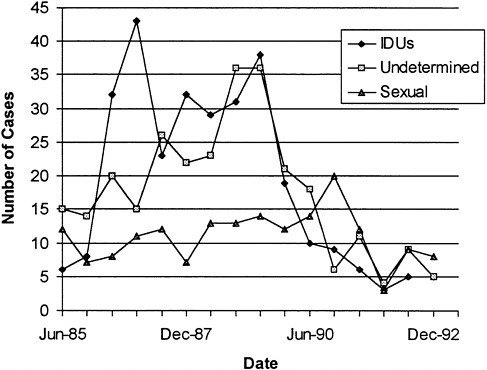

The University of California researchers attempted to relate the presence (or absence) of needle exchange programs to ongoing statistical series such as the Drug Abuse Warning Network (DAWN), Drug Use Forecasting (DUF), and Uniform Crime Reports (UCR), which might reflect altered patterns of drug-related events, such as drug cases in hospital emergency rooms, positive urine drug screens, and drug-related arrests, respectively. The report noted wide variation in these drug-use indicators over time, which suggests an inherent lack of precision and limits the ability to detect any patterns relating to needle exchange.

The University of California report also noted that, because needle exchange programs are relatively new, changes in drug use might yet appear with longer follow-up. The report concluded that (Lurie et al., 1993:357) "currently available (national indicator) data provide no evidence of change in overall community levels of drug use associated with needle exchange programs."

The report also noted that the San Francisco and Amsterdam surveys described above provide (Lurie et al., 1993:357) "some evidence that needle exchange programs are not associated with an increase in community levels of injecting or overall drug use."

Increase in Number of Contaminated Needles Unsafely Discarded

The University of California report noted that adverse community responses to needle exchange programs are likely to be centered on the issue of discarded needles and the risk to the public of accidental needlestick injury. However, the report noted that one-for-one exchange rules cannot, in theory, increase the total number of discarded needles, although programs could affect the geographic distribution of discarded syringes. Data on a surveillance project with the Portland, Oregon, needle exchange program noted a decrease in the prevalence of discarded syringes near the program (Lurie et al., 1993:386). Passive surveillance of health or police department reports over time indicated either declines or small increases in needlestick injuries, with the trends due to changes in reporting patterns. The University of California report concluded that needle exchange programs "have not increased the total number of discarded syringes" and, if structured as a one-for-one exchange with no starter needles, "they cannot increase the total number of discarded needles" (Lurie et al., 1993:395).

Summary

Using multiple data sources, the University of California report reviewed a number of questions about needle exchange programs. As far as possible positive outcomes are concerned, the report concluded that the data available at the time of the report "do not … provide clear evidence that needle exchange programs decrease HIV infection rates" (p. 20), but that "the majority of studies of [program] clients demonstrate decreased risk of HIV drug risk behavior, but not decreased rates of HIV sex risk behavior" (p. 18). In addition, "all but one of the 18 US and Canadian needle exchanges visited … stated that they provide referrals to drug abuse treatment" (p. 10). Finally, regarding possible negative outcomes, the report concluded that needle exchange programs have not increased the total number of discarded used needles and syringes (p. 16). The report goes on to state that there is no evidence that drug use among program participants increased, and there is no evidence of change in overall community levels of noninjection or injection drug use (Lurie et al., 1993:15).

EVIDENCE FROM RECENT STUDIES

This section is organized into topical areas that parallel the summaries of the GAO and University of California reports. Study findings are categorized according to the outcomes and expectations of program effects listed in Table 7.1; both possible positive and possible negative effects are reviewed. The information sources for this update comprise: (1) a review of abstracts from the 1994 International Conference on AIDS; (2) the 1994 American Public Health Association conference; (3) papers presented at the National Research Council/Institute of Medicine's Workshop on Needle Exchange and Bleach Distribution Programs (1994); and (4) articles appearing in refereed journals following the release of the University of California report. Since the University of California report was issued, a number of studies on the impact of needle exchange programs have been presented or published. These studies utilize a variety of designs, including an ecological design; a comparison of prevalence rates between injection drug users who use and those who do not use needle exchange programs; HIV incidence rates among needle exchange program attenders; and, using data collected prospectively, a comparison of HIV incidence rates between injection drug users who attend and those who do not attend a needle exchange program.

Possible Positive Outcomes

Reduction in Risk Behavior

Recent publications on needle exchange programs in San Francisco, New York City, and Portland, Oregon, have addressed the issue of the impact of the programs on HIV drug-use risk behaviors and sexual risk behaviors (Watters et al., 1994; Watters, 1994; Lewis and Watters, 1994; Des Jarlais et al., 1994a, 1994b, 1995; Paone et al., 1994a, 1994b; Oliver et al., 1994).

In an ecological study in San Francisco, Watters (1994) examined trends in risk behaviors and HIV seroprevalence among heterosexual injection drug users across 13 cross-sectional surveys conducted over a 6.5-year period between 1986 and 1992. Interviews (5,956) were conducted with injectors in street settings and drug detoxification clinics. During that time period, multiple prevention efforts targeting injection drug users had been implemented (including outreach, education, voluntary HIV testing and counseling, bleach and condom distribution, and needle exchange programs). Among injection drug users who reported sharing needles, the proportion of those who reported ever using bleach increased from 3 percent in 1986 to 89 percent by 1988 and remained relatively constant at that level through fall 1992.

Sexually active heterosexual male injectors also reported significant changes in condom use (i.e., injection drug users reported using a condom 4.5 percent of the time in 1986, compared with 31 percent of the time in late 1992). However, Lewis and Watters (1994) found that a substantial proportion of sexually active male drug injectors, including heterosexuals, bisexuals, and homosexuals, reported frequently engaging in unprotected sex (i.e., reported condom use was low in all three groups). That is, 56 percent of the heterosexuals who had vaginal sex in the prior 6 months reported no condom use; one-third of the homosexuals who engaged in anal intercourse with male partners reported no condom use; and 41 percent of the bisexual men reported no condom use while engaging in vaginal sex and approximately half (52 percent) reported no condom use while engaging in anal or oral sex with females and/or males.

In another ecological report from New York City, Des Jarlais and colleagues (Des Jarlais et al., 1994a) examined trends in reported risk behaviors and seroprevalence among injection drug users for 1984 and 1990 to 1992 by comparing results of two surveys of injection drug users entering a drug detoxification program. Several trends in drug-use risk behaviors were reported. In 1984, for example, 65 percent of injection drug users reported having used shooting galleries in the preceding 2 years; in the 1990 to 1992 survey, only 3 percent reported injecting in shooting galleries in the preceding 6 months. Substantial reductions in sharing behavior were also observed. Use of potentially contaminated needles declined from 51 to 7 percent of injections. Moreover, an increasing proportion of injection drug users entering the detoxification program reported using the needle exchange programs since they opened in 1990. For the 1990 to 1992 period, results also show that needle exchange participation was associated with a downward trend in the proportion of subjects reporting any injection with needles that had been used by someone else and a reduction in the percentage of study participants reporting having passed on used needles to others. The extent to which the reductions in risk behaviors reported in the two surveys can be attributed to the needle exchange program itself is limited by the fact that the data are ecological trends. Other prevention efforts were occurring in New York City between the two time intervals. Therefore, although the results are consistent with an inference of reduction in risk behaviors following the introduction of a needle exchange program, the study design does not exclude the possibility of contributing or alternate explanations.

In the San Francisco needle exchange program evaluation, Watters (1994) compared frequent needle exchange participants with two comparison groups—injection drug users who used the exchange less frequently and a group who did not use it at all. These researchers found a 47 percent decline (from 66 to 35 percent) in reported sharing behavior among injection drug user study participants between spring 1987 and spring 1992. More refined analyses revealed that frequent needle exchange participants (i.e., used the program more than 25 times in the past year) were less likely to report needle sharing in the past 30 days than study participants who used the needle exchange program less frequently or not at all. In contrast, over the 3-year study period, no change in reported rates of sharing behavior was observed among those not using the program.
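Because the studies in this section report both relative declines and changes in percentage points, it may help to see the two calculations side by side. The short sketch below uses the 66 and 35 percent sharing figures quoted above; the function names are illustrative and not part of any cited study.

```python
def relative_decline(before, after):
    """Decline expressed as a percentage of the starting value."""
    return (before - after) / before * 100


def point_change(before, after):
    """Change in percentage points (negative means a decline)."""
    return after - before


# Reported sharing fell from 66 percent to 35 percent of study participants.
print(round(relative_decline(66, 35)))  # relative decline, in percent
print(point_change(66, 35))             # change in percentage points
```

The same relative decline can correspond to very different absolute changes, so both figures are worth reading together when comparing studies.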

In New York City, Paone et al. (1994a) conducted a pre-post analysis that examined the drug-use risk behaviors of 1,752 needle exchange program participants 30 days prior to using the exchange and during their most recent 30 days in the program. Participants reported a two-thirds decline in the proportion of time they injected with previously used needles (12 percent before participating in the needle exchange program, compared with 4 percent in the last 30 days while participating in the program). Similar reductions in renting or buying used needles (73 percent decline) were observed, and similar reductions in the number of participants who reported borrowing used needles were found (59 percent decline). The proportion of participants who reported using alcohol pads increased from 30 percent before participating in the needle exchange program to 80 percent in the most recent 30 days in the exchange. Although the reduction in high-risk behaviors was based on self-reports of exchange users and no comparison of injection drug users not using the exchange was included in this report, this pattern of reduction in drug-use risk behaviors was found to be relatively stable in recent updates (Des Jarlais et al., 1994b; Des Jarlais et al., 1995; Paone et al., 1994b). These authors also note in their recent updates that minimal changes in sexual risk behaviors were reported. For example, always using a condom with a primary sexual partner increased from 36 percent in the 30 days prior to first using the needle exchange program to 37 percent for the last 30 days while using the program, whereas always using a condom with a casual sexual partner increased from 56 percent in the 30 days prior to first using the exchange program to 60 percent in the last 30 days while using the exchange. However, due to design constraints, it cannot be stated what portion of the reduction in risk behaviors is due to the needle exchange program.

An evaluation of the Portland needle exchange program (Oliver et al., 1994) assessed change in risk behaviors among 753 needle exchange program participants by comparing data collected on each study participant during an intake interview and a 6-month follow-up interview. Significant declines in sharing behaviors (rented needles and syringes: intake = 9 percent compared with 3 percent at follow-up; borrowed needles and syringes: intake = 20 percent compared with 7 percent at follow-up) and increased use of bleach (intake = 51 percent compared with 65 percent at follow-up) were observed. When drug-use risk behaviors of frequent attenders (attended four or more times) were compared with risk behaviors of those who attended three or fewer times, frequent attenders reported greater risk reduction on borrowing and returning used needles to the program. To supplement the pre-post analysis, needle exchange participants were also compared with another group of drug injectors, clients of Portland's National AIDS Demonstration Research (NADR) program (i.e., all received bleach and HIV education and a subset also received counseling). Frequent needle exchange participants were found to be less likely to reuse needles without cleaning or to improperly dispose of used needles than were the NADR clients. The two groups were not found to differ on other risk behaviors assessed. It is worth noting that there was little overlap between the two groups (11 percent). The two interventions apparently are recruiting different participants.

In sum, from the earliest studies of needle exchanges, there has been a dominant trend in the data showing significant and meaningful associations between participation in needle exchange programs and lower levels of drug-use risk behaviors, and small or no change in sexual risk behaviors. The most recent data continue to reflect this trend. Moreover, this pattern of findings has also been observed in foreign cities (Davoli et al., 1995; Hunter et al., 1995).

Reduction in HIV Infection Rates

Two recent ecological studies examined trends in HIV seroprevalence rates among injection drug users, one in New York City and the other in San Francisco (Des Jarlais et al., 1994a; Watters, 1994). Both reported a stabilization of HIV seroprevalence rates that coincided with reductions in high-risk behaviors and the implementation of various prevention programs (including outreach, education, testing and counseling, bleach and condom distribution, and needle exchange programs). Although these ecological studies do not provide direct causal evidence of the effect of such programs, they nonetheless document a pattern in behavioral risk reduction that corresponds with stabilization of seroprevalence rates in distinct populations of injection drug users.

Hagan and colleagues (1994b) reported seroprevalence rates of needle exchange participants and nonparticipants. The cross-sectional sample of needle exchange participants (n = 426) was found to have a 2 percent HIV prevalence rate, compared with an 8 percent rate for the cross-sectional sample of nonparticipants (n = 159). Because the outcome measured in this study was prevalent infection, temporal associations cannot be established with certainty, and the possibility that the results might reflect that the needle exchange program attracts lower-risk injection drug users cannot be dismissed out of hand. However, the results are consistent with the inference that needle exchange programs are associated with a lower risk of infection.

On the basis of recent updates from the 1994 International Conference on AIDS, Des Jarlais (1994; in press) provided descriptive information on HIV incidence among injection drug users who participate in needle exchange programs across 14 different cities (Table 7.5). Some of these incidence rates were measured directly by testing cohorts of needle exchange participants; others were based on self-reports of prior serological tests; still others were derived from statistical modeling techniques (e.g., New Haven).

An examination of the data reveals that, with the exception of data from Montréal, HIV incidence is uniformly low among needle exchange program participants; that is, regardless of whether HIV prevalence is low, moderate, high, or very high among the community of injection drug users in a city, HIV incidence among program participants is consistently low across cities. These findings are consistent with the premise that an AIDS prevention program (e.g., needle exchange program or other outreach intervention) that is able to reach and successfully modify the behavior of injection drug users who are at high risk of becoming infected or of transmitting HIV to others is capable of maintaining a low level of new infections in the population of injection drug users in a community.

The high HIV incidence among needle exchange program participants observed in Montréal is disconcerting. The reader is referred to Appendix A for a detailed discussion of the Montréal data. Although the prevalence is moderate and has remained stable, the observed incidence is high among the needle exchange cohort being studied. If the high incidence accurately reflected the rate of new infections in the population of injection drug users in Montréal, an increasing prevalence over time would be expected, because it is implausible that mortality is as high as the observed incidence. A rational explanation (again, if the prevalence and incidence estimates are unbiased population estimates) could be that Montréal's needle exchange program is attracting a high-risk group of users (selection bias).
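The reasoning about stable prevalence and high incidence can be made concrete with a simple balance calculation. The sketch below is an illustration only, not an analysis performed by the panel or the Montréal investigators. It assumes a population of constant size in which prevalence stays fixed, so that new infections must be offset by infected users leaving the population (through death or otherwise). It uses the Montréal incidence from Table 7.5 and the bounds of the table's "moderate" prevalence category.

```python
def required_exit_rate(incidence, prevalence):
    """Annual exit rate among infected users needed to hold prevalence constant.

    Balance condition for a population of constant size:
        incidence * (1 - prevalence) = exit_rate * prevalence
    """
    if not 0 < prevalence < 1:
        raise ValueError("prevalence must be strictly between 0 and 1")
    return incidence * (1 - prevalence) / prevalence


incidence = 0.13  # 13 per 100 person-years, the Montréal value in Table 7.5
for prevalence in (0.06, 0.20):  # bounds of the "moderate" category in Table 7.5
    rate = required_exit_rate(incidence, prevalence)
    print(f"prevalence {prevalence:.0%}: required exit rate {rate:.2f} per year")
```

Under these simplifying assumptions, even at the upper bound of moderate prevalence roughly half of infected users would have to leave the population each year, which is why a cohort-specific explanation such as selection bias is more plausible.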

TABLE 7.5 HIV Incidence Estimates Among Needle Exchange Participants in Select Cities

| City | HIV Prevalence^a | Measured HIV Seroconversions^b | Estimated HIV Seroconversions^c |
|---|---|---|---|
| Lund, Sweden | Low | 0 | |
| Glasgow, Scotland | Low | | 0-1 (2) |
| Sydney, Australia | Low | | 0-1 (2) |
| Toronto, Canada | Low | | 1-2 (2) |
| England and Wales (except London) | Low | | 0-1 (1) |
| Kathmandu, Nepal | Low | 0 | |
| Tacoma, WA, USA | Low | <1 | |
| Portland, OR, USA | Low | <1 | |
| Montréal, Canada | Moderate | 13 | |
| London, England | Moderate | | 1-2 (4) |
| Amsterdam, The Netherlands | High | 4 | |
| Chicago, IL, USA | High | 0 | |
| New York, NY, USA | Very high | 2 | |
| New Haven, CT, USA | Very high | | 0 (3) |

^a Low = 0 to 5 percent; moderate = 6 to 20 percent; high = 21 to 40 percent; very high = 41+ percent. ^b Cohort study and/or repeated testing of participants, per 100 person years at risk. ^c Estimated from: (1) stable, very low (<2 percent) seroprevalence in area; (2) self-reports of previous seronegative test and a current HIV blood/saliva test; (3) HIV testing of syringes collected at exchange, per 100 person years at risk; and (4) stable or declining seroprevalence.

SOURCE: Adapted from Current Findings in Syringe Exchange Research: A Report to the Task Force to Review Services for Drug Misusers (Des Jarlais, 1994).

Recent reports from Montréal (Hankins et al., 1994; Lamothe et al., 1993; Bruneau et al., 1995) suggest that needle exchange participants still frequently engage in high-risk behaviors, including unsafe cocaine injection and prostitution. The program is located in an area of the city noted for prostitution, and the program operates in the middle of the night, which makes it prone to recruiting high-risk users. Moreover, injection drug users in Montréal who elect to purchase sterile needles in pharmacies can do so without a prescription (further biasing the representation of those who elect to acquire their needles in the middle of the night at the exchange program). Recent epidemiologic data from Montréal (Bruneau et al., 1995) highlight the complex nature of the observed association between sources of sterile needles and HIV seroincidence (see Appendix A). That is, although the risk of seroconversion was found to be higher among needle exchange participants when compared to nonparticipants, injection drug users who used the needle exchange program as their exclusive source of sterile needles were found to be at substantially lower risk than those who used diverse sources of sterile needles. Furthermore, the needle exchange program limit of 15 needles per visit may not be sufficient to properly address drug-use risk behaviors of individuals who inject large amounts of cocaine. There is also a high level of male prostitution among the needle exchange participants.

If the infection rate estimates accurately reflect the population parameters, it would appear that the services offered by the Montréal needle exchange program are insufficient to control HIV transmission in that cohort. Specific ethnographic studies are needed to better understand the primary routes of transmission implicated and their dynamics (e.g., sex, specific drug-use risk behaviors). This would allow the program to better tailor its services (e.g., add new services) and/or the relative intensity of the service delivery according to needs of participants (i.e., primary risks).

The potential ability of needle exchanges to attract injection drug users that are at high risk of seroconversion was also recently reported in the United States by a San Francisco research team (Hahn et al., 1995; see Appendix A for a detailed review of the San Francisco study). Although the disparities in observed seroincidence rates between needle exchange participants and nonparticipants could not be attributed to having been exposed to the needle exchange program, the program appeared to serve a relatively high-risk subset of injection drug users. The authors concluded that the San Francisco program provides a unique setting for intervention because it provides direct access to a population that is at high risk.

Other cities with needle exchange programs that have high seroprevalence data (e.g., New Haven, Chicago, New York) report a low level of HIV seroconversion. The high seroconversion rate in Montréal therefore appears to be the exception, rather than the rule. These data are consistent with the premise that AIDS prevention programs (e.g., needle exchange) are able to stabilize HIV prevalence and maintain very low seroincidence. Obviously, these results are descriptive in nature (there are no comparison groups) and, as a consequence, cannot in themselves provide evidence of the direct causal effect of needle exchange programs on HIV incidence rates. Nonetheless, they do provide valuable insight into HIV incidence rates among needle exchange participants in cities with varying levels of HIV seroprevalence among the local populations of injection drug users.

Other recent reports from New York City (Des Jarlais et al., 1994b, 1995; Paone et al., 1994b) have compared seroconversion rates of various injection drug user groups in the city. The HIV seroconversion rate among high-frequency drug injectors not using the needle exchange programs ranged from 4 to 7 per 100 person years at risk, compared with needle exchange participant groups with seroconversion rates ranging from 1 to 2 per 100 person years at risk. These findings suggest that the use of needle exchange programs has a substantial protective effect against new HIV infections. However, the results need to be interpreted with care. That is, nonequivalence across groups being compared (needle exchange users versus nonusers) precludes making strong causal inferences about the direct effect of the needle exchange on HIV incidence rates. Nonetheless, these data do reflect a significant association between needle exchange participation and a lower rate of new HIV infection (Des Jarlais et al., 1994b, 1995).
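The size of the association in the New York City figures can be expressed as a range of rate ratios. The sketch below simply divides the reported seroconversion ranges (1 to 2 versus 4 to 7 per 100 person years at risk); it is a crude illustration that ignores sampling error and the group nonequivalence noted above, and the function name is hypothetical.

```python
def rate_ratio_range(participant_rates, nonparticipant_rates):
    """Smallest and largest rate ratios implied by two (low, high) ranges."""
    low_p, high_p = participant_rates
    low_n, high_n = nonparticipant_rates
    return low_p / high_n, high_p / low_n


# Rates per 100 person years at risk, as reported for New York City.
lowest, highest = rate_ratio_range((1, 2), (4, 7))
print(f"rate ratio between {lowest:.2f} and {highest:.2f}")
```

Even the least favorable pairing of the reported ranges gives participants half the seroconversion rate of nonparticipants, although this comparison by itself cannot establish that the program caused the difference.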

Possible Negative Outcomes

Program Participant Drug Use

The most recent studies that have examined drug-use behaviors among needle exchange participants show either stable levels of reported drug injection frequency or even slight declines over time among injection drug users who continue to participate in needle exchange programs (Watters et al., 1994; Paone et al., 1994a; Des Jarlais et al., 1994a; Oliver et al., 1994). In the recent New York City study, Paone et al. (1994a) reported a statistically significant decrease in injection frequency among needle exchange participants, from 95 times in the 30 days preceding program participation to 86 times in the 30 days prior to participants' being interviewed.

The only exception to this reported trend comes from an unpublished research manuscript from Chicago researchers (O'Brien et al., 1995a). As noted in the Preface, as the panel was concluding its deliberations, the Assistant Secretary for Health made public statements that a number of unpublished needle exchange evaluation reports had raised doubts in his mind about the effectiveness of these programs. The panel deemed these statements to be significant in the public debate, therefore necessitating appropriate consideration in order for the panel to be fully responsive to its charge. The panel therefore reviewed the unpublished studies, one of which was the aforementioned O'Brien et al. (1995a) Chicago study. As unpublished findings, this research lacks the authority provided by the peer review and publication process. For this reason, the panel gave special attention to scrutinizing and describing in detail results reported by the researchers, as well as appraising their probative value (see Appendix A).

The investigators infer from their findings that those who participate in needle exchange programs spend more money and inject more frequently than nonparticipants as a result of their participation in the program. Their assertion is based on data that, according to these authors, support the contention that program participation is economically driven (i.e., by the cost of needles). The panel's review raised serious concerns about the tenability of their inferences. For instance, the theoretical and empirical development of the underlying models is clearly insufficient. That is, there are numerous other plausible models that could explain their data. From an economic standpoint, it would seem that individual socioeconomic status may be causally related to both drug abuse and use of the needle exchange program, which offers an alternative to the explanation that needle exchange programs cause drug use. Nonetheless, the authors do not test any alternative plausible models to assess the relative fit of their models compared with other viable competing models. Moreover, the theoretical justification provided for their postulated model is weak (e.g., cost of needles is the underlying driving force for using the needle exchange, but that cost is minute compared with other expenses, such as the cost of the drugs themselves).

The empirical information provided on key variables is inadequate. Properties of the distributions of key variables are absent and aggregate summary statistics are used in various models without attention to the possible adverse effect of outliers. The presence of such outliers can severely distort the results and challenges the viability of the inferences drawn by these investigators. Substantial inconsistencies between data on key variables (self-report) presented in the manuscript and information extracted from the needle exchange program records raised serious concerns among panel members. Moreover, as discussed in some detail in Appendix A, the panel had serious reservations about the appropriateness of the modeling techniques as implemented by these researchers.

Although this particular study suffers from serious limitations, the conclusions reached by the authors raise interesting questions and hypotheses that should be subjected to sound empirical testing. These issues should be further studied with adequate designs, measures, and analytical methods. In the meantime, in the panel's opinion, these difficulties are serious enough to preclude making causal inferences about the effect of needle exchange programs.

New Initiates to Injection Drug Use

The concern that having the opportunity to use a needle exchange may lead persons who are not currently injecting to begin injecting demands attention, and some information about this is available.

If the opportunity to participate in needle exchange programs were to lead to an increase in the number of new injection drug users, one would expect to see relatively large numbers of young newer injectors at the needle exchange programs. This has not been observed in any of the earlier studies (e.g., Lurie et al., 1993), or in the most recent publications (Paone et al., 1994a, 1994b; Des Jarlais et al., 1994b; Watters et al., 1994).

Investigators in Amsterdam have recently published data that permit examination of the hypothesis that "mixing" of injecting and noninjecting drug users at needle exchanges will lead noninjectors to begin injecting behavior (van Ameijden et al., 1994). Many of the Amsterdam needle exchanges are operated out of the "low-threshold" methadone programs. These programs provide services to both heroin injectors and heroin smokers and do not require abstinence from illicit drug use as a condition for remaining in the program. Thus, these combined methadone treatment and needle exchange sites do provide frequent opportunities for social interactions between heroin injectors and heroin smokers. Despite this, the proportions of heroin users who smoke and those who inject have remained constant since the exchanges were implemented.

Recent U.S. data also support the conclusion that needle exchange programs do not lead to any detectable increase in drug injection. The recent San Francisco study (Watters, 1994) found an increase in the mean age of injection drug users in the city during the years of operation of the needle exchange programs (i.e., mean age in 1986 was 36 compared with 42 in 1992). Moreover, the author reported that during that 5.5-year period, the median reported frequency of injection declined from 1.9 to 0.7 injections per day and that the percentage of new initiates into injection drug use decreased from 3 to 1 percent. In Portland (Oliver et al., 1994), under 2 percent of program participants had histories of injecting of less than a year. The average duration of injection drug use was 14 years, and more than 75 percent had been injecting for 5 years or more. The presence of a needle exchange program does not appear to cause any increase in the number of new initiates to drug injection. Identifying what factors lead individuals to initiate injection drug use, despite knowing about AIDS, remains an important question for future research.

Discarded Needles and Syringes

Since the University of California report was issued, only one study has dealt with needle exchange programs and improperly discarded needles. Doherty et al. (1995) conducted a survey of a random sample of city blocks in areas of high drug use in Baltimore before and after implementation of the needle exchange program; their results are consistent with the University of California conclusion that needle exchange programs do not increase the total number of discarded syringes.

THE NEW HAVEN STUDIES

After extended legislative debate, Public Act 90-214 allowed the City of New Haven, Connecticut, to implement, on an experimental basis, a legal needle exchange for injection drug users. On November 13, 1990, the New Haven Needle Exchange Program began operation. This program operated from a van, typically 6 hours a day, 4 days a week, and traveled to specific sites known to involve high levels of drug activity.

The needle exchange program operated on an anonymous basis. Specifically, participants were assigned a fictitious name as a means of identification and tracking. New enrollees who did not have a needle and syringe to exchange at their first encounter with the needle exchange program were provided with a single "rig." After that, exchanges were conducted on a one-for-one basis, with a maximum of five needles and syringes issued on each occasion. Syringes that were distributed were coded to enable tracking and evaluation. The program accepted syringes that had not originated from the program. All returned equipment was placed in a metal canister and turned over to an evaluation team at Yale University for assessment. In particular, a sample of returned syringes was assessed for the prevalence of HIV.

In addition to exchanging used equipment for sterile equipment, program staff provided AIDS education and information on risk reduction. Condoms and bleach packets were provided to all participants at each encounter. All participants were also provided information on drug treatment and a broad range of other relevant services (e.g., tuberculosis and sexually transmitted disease screening through clinics, HIV testing, maternal and child health services); outreach workers also provided participants with direct assistance in accessing drug treatment and other services.

In July 1992, syringe possession without a prescription was decriminalized. This was followed by a reduction in the monthly volume of exchanges at the program (from about 4,000 to a little more than half that number).

The importance of the evidence from the New Haven studies is twofold. They provide: (1) direct evidence of lower levels of HIV infection among needles in use and (2) indirect, model-based estimates of changes in the incidence of new HIV infections among needle exchange program participants.

The direct evidence involves the impact of the needle exchange program on the critical features of program process. Specifically, the evaluation reveals significant and substantial reductions in the infectivity of the syringes exchanged through the needle exchange program. The data also reveal increases in referral to drug treatment and no change in the number of injection drug users.

Prior to the distribution of sterile injection equipment, extremely sensitive DNA analyses using the polymerase chain reaction (PCR) to detect the presence of HIV-infected peripheral blood cells in syringes showed an HIV-positive rate of 0.675 for existing "street" syringes; needles from shooting galleries revealed a rate of 0.917; and needles from an underground exchange showed a rate of 0.628. During the first month of the exchange, the HIV-positive rate for needles turned in to the exchange was 0.639; within 3 months, this rate declined to 0.406 and remained relatively constant (with an increase to 0.485 at the end of the year, November-December 1991). That is, the prevalence of HIV in needles decreased by about one-third.
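The "one-third" figure can be checked directly from the rates quoted above. The sketch below is a simple arithmetic illustration, not part of the New Haven evaluation; the variable and function names are assumptions introduced here.

```python
# HIV-positive rates among needles returned to the exchange, as quoted in the text.
first_month = 0.639     # first month of program operation
after_3_months = 0.406  # level reached within 3 months
end_of_year = 0.485     # November-December 1991


def relative_decline(before, after):
    """Proportional reduction from `before` to `after` (hypothetical helper)."""
    return (before - after) / before


decline_3m = relative_decline(first_month, after_3_months)
decline_eoy = relative_decline(first_month, end_of_year)
print(f"Relative decline at 3 months:  {decline_3m:.1%}")
print(f"Relative decline at year end:  {decline_eoy:.1%}")
```

The 3-month figure works out to a little more than one-third, while the year-end figure is closer to one-quarter, so the "one-third" summary describes the decline at its low point rather than at the end of 1991.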

Program Implementation

Measures of syringe infectivity gradually declined over time, with the sharpest decline occurring in the first 3 months after the implementation of the needle exchange program. If the reduction in the infectivity of needles is due to the activities of the program, it is reasonable to expect changes in program operations that parallel the pattern of reductions in needle infectivity. Data about program operations tend to support the plausibility that reductions in infectivity are connected to the activities of the clients of the needle exchange program and the program itself.

In particular, the number of visits increased more rapidly than the number of clients, suggesting that the same clients were exchanging needles more frequently. Specifically, in December 1990 there were about 100 clients and 150 visits. By June 1992 there were 300 clients and 900 visits.

Program data are also consistent with a fundamental concept of the circulation theory advanced by Kaplan—namely, the law of conservation of needles. Specifically, there was a close match between the number of inbound and outbound needles, and there was a substantial increase in the volume of exchanges between December 1990 and June 1992. That is, the volume of exchanges increased from less than 500 to over 4,000 in June 1992. (After decriminalization in 1992, the volume of exchanges decreased to between 2,000 and 2,500.) Over the entire study period (November 1990 through June 1993), a total of 80,292 needles were distributed; for the same period, the total number of needles that were returned was 78,067. That is, 97.2 percent of the total number of needles distributed were returned to the needle exchange program. It appears that these figures include the "return" of nonprogram needles. In other papers (e.g., Kaplan and Heimer, 1994), return probabilities of about .7 are shown.
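The aggregate return percentage follows from the two totals reported above. The short sketch below reproduces it; the names are illustrative assumptions, and the calculation counts every returned needle, including nonprogram needles, which may be why it exceeds the return probability of about .7 reported in other papers.

```python
# Totals for November 1990 through June 1993, as quoted in the text.
needles_distributed = 80_292
needles_returned = 78_067  # appears to include nonprogram needles

# Aggregate return rate: all needles returned divided by all needles distributed.
aggregate_return_rate = needles_returned / needles_distributed
print(f"Aggregate return rate: {aggregate_return_rate:.1%}")

# Needles distributed but not matched by a return over the study period.
net_outflow = needles_distributed - needles_returned
print(f"Net needles not returned: {net_outflow}")
```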

Using estimates of the size of the population of injection drug users in New Haven derived by Kaplan and Soloshatz (1993), it appears that about half of the injection drug users have had contact with the needle exchange program (Lurie et al., 1993).