Coupled Systems: An Essay

EDWARD S. SARACHIK

Coupled atmosphere-ocean-land-cryosphere models are basic tools in the study of climate and its variability. Since the atmosphere is sensitive to changes in lower-boundary conditions on long enough time scales, we must simulate the time evolution of these conditions in order to ensure the consistent simulation of the atmosphere. The time scales of atmospheric sensitivity depend on the geographic region of interest: The tropical atmosphere responds to sea surface temperature (SST) variability on monthly and longer time scales, while it has not been shown that the mid-latitude atmosphere responds significantly to SST unless the anomaly lasts for several years. Furthermore, variations in mid-latitude soil moisture seem to affect the distribution of precipitation over the continents seasonally. Variations of snow cover and sea ice have also been implicated in atmospheric variability beyond the seasonal time scale.

In turn, the evolution of the lower boundary conditions is partly determined by atmospheric processes, so coupled models become essential for simulating the mutually consistent evolution of the interacting systems. It is safe to say that if we are interested in decade-to-century-scale climate variability, the global atmosphere must be coupled to the global ocean, to the global land surface, and to global snow and ice.

While this realization has been with us since the beginning of climate modeling, progress in coupled modeling over the past decade has been fitful and hard won. The basic problem has been one of resources: A 100-year run of a coupled model consisting of a global atmosphere of modest resolution, with land processes parameterized, coupled to a global coarse-resolution ocean, with sea ice, uses a major part of a dedicated supercomputer. Increasing the resolution by just a factor of two increases the computer demands by an order of magnitude. If we are to understand and simulate climate variability on decade-to-century time scales, model runs of thousands of years are required. Up to this time, fully coupled models of satisfactory (but never sufficient) resolution have been run only at major institutions having access to large amounts of supercomputer time.
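The "order of magnitude" cost of doubling resolution follows from simple scaling. The sketch below is an illustration, not a description of any particular model. It assumes that cost is proportional to the number of grid points times the number of time steps. It also assumes an explicit time step limited by a CFL-type stability condition, so the step shrinks in proportion to the grid spacing. The function name `cost_multiplier` is hypothetical.

```python
def cost_multiplier(refinement, spatial_dims, cfl_limited=True):
    """Factor by which compute cost grows when grid spacing is divided by `refinement`.

    Assumes cost ~ (number of grid points) x (number of time steps).
    Each refined spatial dimension multiplies the grid-point count by `refinement`;
    a CFL-limited time step shrinks by the same factor, adding one more power.
    """
    exponent = spatial_dims + (1 if cfl_limited else 0)
    return refinement ** exponent


if __name__ == "__main__":
    # Doubling horizontal resolution only (two refined dimensions).
    print(cost_multiplier(2, spatial_dims=2))
    # Doubling horizontal and vertical resolution.
    print(cost_multiplier(2, spatial_dims=3))
```

Refining only the horizontal grid gives a factor of 2 × 2 × 2 = 8, and refining the vertical as well gives 16. Either way the increase is roughly tenfold. The sketch ignores model components whose cost scales differently.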

As computers become more capable, resource problems are ameliorated and the real problems of physical climate simulation come to the fore. The fundamental problem has been that coupling a reasonably well-understood atmospheric model to a reasonably well-understood oceanic model has produced a coupled model that is itself poorly understood and that exhibits unexpected and unexplained properties. The sensitivities of the two models to errors in each other, which are not apparent when each model is run in decoupled mode, seem to produce unusual sensitivity in the coupled model (Ma et al., 1994). It has become increasingly clear that a coupled model is a unique beast, with properties distinct from those of its component models. Coupled modeling therefore requires outlooks and approaches quite different from those needed for modeling the component systems.

The coupled-model papers that appear in this chapter can best be put into perspective by recounting a bit of the history of coupled climate modeling, by pointing out where we now stand with respect to coupled modeling (and the data needed to support such modeling), and by suggesting some future directions and problems likely to be addressed over the next few years.

HISTORY

Little more than a decade after the first numerical general-circulation model (GCM) of the atmosphere had been constructed (Phillips, 1956), the first attempt at a coupled general-circulation model (CGCM) was made in a remarkably prescient series of three papers (Manabe, 1969a,b; Bryan, 1969) published in a single issue of the Monthly Weather Review. The model was geographically simplified (its domain was a sector of the globe bounded by meridians and covering only a third of the globe's zonal extent, and a bit more than half of the sector was land), and the solar forcing had no annual variation, but the model contained most of the physics now recognized as important for the climate problem. Water vapor and its changes of phase were computed explicitly; a rudimentary land hydrology model (the "bucket" model) allowed for land evaporation and runoff; snow and land ice were parameterized; and radiative transfer of visible and infrared radiation was calculated explicitly using the specified clouds. The only major prescribed quantity was the cover of three types of cloud (low, middle, and high), specified as a function of latitude for use in the radiative transfer calculations. Rainfall and snowfall were calculated explicitly.

The ocean had five levels in the vertical; computed salinity explicitly; used an equation of state for density as a function of the calculated salinity, temperature, and pressure; and included a parameterization for sea ice. Coupling at the surface was accomplished by fluxes of heat and momentum through the surface into the ocean and by sensible and latent heat transfer into the atmosphere from the surface. SST was determined interactively by thermodynamic processes in both the ocean and the atmosphere.

Because of computational limitations, the coupled model could be run for only 100 years of ocean model time, but by the end of this time it had reached a quasi-equilibrium in which only the deeper parts of the ocean were still changing. The resulting distribution of surface temperature, while not directly comparable to observations, looked quite reasonable, with the ocean heat transport warming higher latitudes and cooling the tropics. The modeled atmosphere developed eddies and had a wind and thermal structure similar to that observed, while the ocean developed a thermocline and had a density and current structure similar to that observed. Systematic problems included the lack of an intertropical convergence zone over the ocean, a thermocline that was too deep and diffuse, and insufficient meridional heat transport by the ocean circulation. No significant decadal variability was seen in the coupled model.

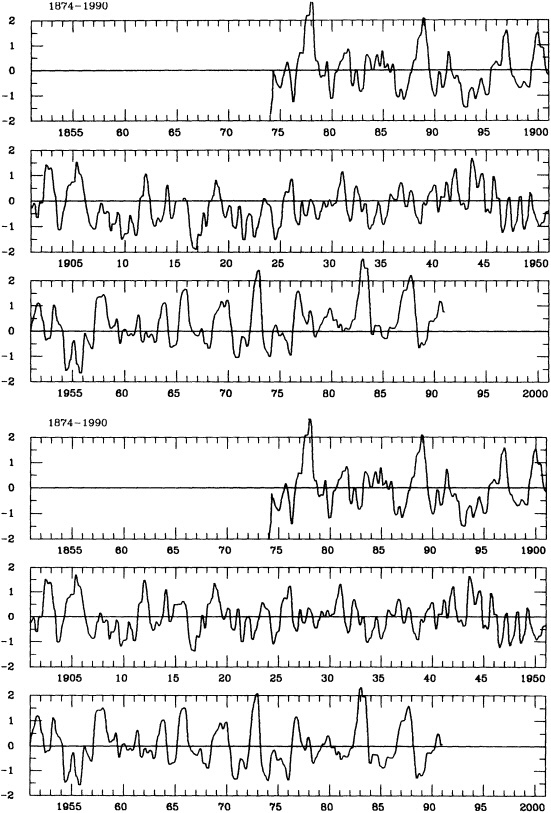

All succeeding CGCMs followed the basic themes set out in the original Manabe-Bryan papers (Figure 1, reproduced from Manabe, 1969b, is still the best summary of CGCM structure and continues to be widely used). In subsequent years, geography and topography have become more realistic, resolution has improved (but is still severely limited), clouds are now explicitly calculated instead of prescribed, radiation schemes have become more sophisticated and now include aerosols, land-surface parameterizations are more complete (they now describe vegetation types and evapotranspiration), and ocean models now include more detailed bottom topography and more sophisticated mixing parameterizations. Many organizations other than GFDL (the Geophysical Fluid Dynamics Laboratory, where the Manabe-Bryan model was developed) are now running longer-term global coupled models, including groups at NCAR, NASA, DOE, and a few universities.

A major spur to a quite different type of coupled modeling came with the investigation of the ENSO phenomenon in the equatorial Pacific. A simplified coupled model developed by Zebiak and Cane (1987) specified the annual cycle in both the atmosphere and the single-layer ocean (with embedded surface layer) and calculated the anomalies departing from this annual cycle. The model was successful not only in simulating the equatorial aspects of ENSO in and over the tropical Pacific but also in predicting aspects of ENSO a year or so in advance (see Cane, 1991). Only the upper portion of the equatorial ocean was modeled, since only the part above the thermocline is needed to simulate short-term variability (i.e., months to a year or two). Resolution near the equator was enhanced to fully resolve uniquely equatorial processes, especially equatorial waves and upwelling.
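The anomaly approach separates a prescribed mean annual cycle from the departures about it. The sketch below illustrates only that bookkeeping. It is not the Zebiak-Cane model, which integrates coupled ocean and atmosphere equations for the anomalies. It computes a monthly climatology from a series and the anomalies relative to that climatology. The function names and data values are hypothetical.

```python
import statistics


def monthly_climatology(values):
    """Mean annual cycle: the average of all Januaries, all Februaries, and so on.

    Assumes `values` is a monthly series that starts in January and covers whole years.
    """
    if len(values) == 0 or len(values) % 12 != 0:
        raise ValueError("series must contain a whole number of years of monthly data")
    return [statistics.fmean(values[month::12]) for month in range(12)]


def monthly_anomalies(values):
    """Departure of each monthly value from the mean annual cycle."""
    climatology = monthly_climatology(values)
    return [value - climatology[i % 12] for i, value in enumerate(values)]


if __name__ == "__main__":
    # Hypothetical SST-like annual cycle (deg C), repeated for three years,
    # with a warm event added in February of the second year.
    cycle = [26.0, 26.5, 27.0, 27.5, 27.0, 26.0, 25.0, 24.5, 24.5, 25.0, 25.5, 26.0]
    series = cycle * 3
    series[13] += 3.0
    print([round(a, 2) for a in monthly_anomalies(series)])
```

With only three years of data, the warm event is partly absorbed into the estimated February climatology. Its anomaly is +2 rather than +3, and the other two Februaries show -1. This is a small reminder that variability can leak into an annual cycle estimated from a short record.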

More complicated CGCMs without an annual cycle have also been successful in modeling ENSO variability (e.g., Philander et al., 1992). At this point, models with an annual cycle in solar forcing have had some success (e.g., Nagai et al., 1993; Latif et al., 1993) but still have difficulties in simulating the annual response as well as the full range and amplitude of interannual variability. (A recent review is given in Neelin et al. (1992), and a full intercomparison of the climatology of this type of coupled model in Mechoso et al. (1995).) These models tend to have the oceans active only in selected regions; elsewhere, the ocean is relaxed to climatology, while the atmosphere has global extent.

FIGURE 1

Box diagram of coupled-model structure. (From Manabe, 1969b; reprinted with permission of the American Meteorological Society.)

PROBLEMS WITH GLOBAL CGCMS

Coupled models used to simulate ENSO (such as Philander et al., 1992) generally have only the tropical ocean active and use enhanced resolution in the equatorial area. Because these models are designed for interannual studies and are not configured for longer-term variability, they generally lack the mechanisms that maintain the upper ocean's thermal structure, especially the thermohaline circulation (THC), and so gradually diffuse away their thermocline. These models are therefore limited in the length of time over which they can be usefully run.

These CGCMs succeed in reproducing some aspects of the time-averaged climate, the annual cycle, and interannual variability. Unrealistic features persist, however, especially off the western coast of South America, where simulated SSTs tend to be too high. Such problems could be remedied by adjusting the surface fluxes to bring the simulated climatology closer to observed values. However, attempts are under way to avoid such "flux corrections" by including or improving parameterizations of the relevant processes, for example, the stratus clouds off the coast of Peru that keep SSTs low.
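A toy slab ocean with a single SST shows how a flux correction removes drift. The sketch assumes a linear, deliberately biased surface heat flux. It adds a constant correction chosen so that the observed SST becomes an equilibrium of the corrected model. All numbers and names are hypothetical. Real CGCM corrections are diagnosed from the component models and typically vary with location and season.

```python
# Toy slab-ocean illustration of a constant "flux correction".
# All numerical values are hypothetical, chosen only to make the arithmetic clear.
RHO_CP = 1025.0 * 3990.0   # approx. seawater density (kg m^-3) x specific heat (J kg^-1 K^-1)
H_MIX = 50.0               # hypothetical mixed-layer depth (m)
HEAT_CAP = RHO_CP * H_MIX  # heat capacity per unit area (J m^-2 K^-1)

A = 300.0     # hypothetical flux intercept (W m^-2)
B = 10.0      # hypothetical flux sensitivity to SST (W m^-2 K^-1)
T_OBS = 24.0  # hypothetical observed SST (deg C)


def model_flux(sst):
    """Biased net heat flux into the slab; its equilibrium A/B = 30 C is too warm."""
    return A - B * sst


def flux_correction(t_obs):
    """Constant flux that makes the observed SST an equilibrium of the corrected model."""
    return -model_flux(t_obs)


def run_slab(t0, q_corr, days=3650, dt=86400.0):
    """Forward-Euler integration of HEAT_CAP * dT/dt = model_flux(T) + q_corr."""
    t = t0
    for _ in range(days):
        t += dt * (model_flux(t) + q_corr) / HEAT_CAP
    return t


if __name__ == "__main__":
    drifted = run_slab(T_OBS, 0.0)
    corrected = run_slab(T_OBS, flux_correction(T_OBS))
    print(f"uncorrected: starts at {T_OBS} C, drifts to {drifted:.2f} C")
    print(f"corrected:   stays at {corrected:.2f} C (correction {flux_correction(T_OBS)} W m^-2)")
```

In this model the corrected equilibrium equals the observed value by construction. Agreement with observations therefore says nothing independent about the model's physics.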

Global CGCMs used for longer-term studies (e.g., of the response to anthropogenic increases in greenhouse gases) must correctly simulate the basic climatology of the observed climate system; that is, they must correctly simulate both the mean state and the annual cycle. Ideally, they would do so without the need for flux corrections. A recent CGCM of Manabe and Stouffer (1988) illustrates the difficulties involved in achieving this goal. It is similar to the model used by Delworth et al. (1995) in this chapter, but with a sun lacking annual variation. The model produces a deficit of salt (more properly, an excess of fresh water) at high latitudes, which prevents the deep sinking of ocean parcels. As a result, the THC does not exist, and the heat and salt transports into high latitudes are reduced. The higher latitudes are thus too fresh and too cold, and sea ice extends too far south, conditions that guarantee that the THC cannot get started. Suppose the high-latitude ocean is artificially salted by a constantly imposed saline flux correction and the THC is already on. The circulation's own delivery of salt to high latitudes then helps keep it on. The imposed salt flux alone, however, is not adequate to start the THC: a steady state also exists with the imposed salt flux but no THC.

The lessons from the Manabe-Stouffer model are that there can be two climate states (one with and one without a THC) in the presence of identical external forcings, and that it is difficult to achieve a good simulation of the surface salinity field unless both the atmospheric and oceanic processes that control salinity are correctly modeled. Salinity at the surface of the ocean is changed by the difference between evaporation and precipitation, by runoff from land, by freezing or melting of sea ice, by advection and subsequent melting of icebergs, by advection, convergence, and divergence of salinity by ocean currents, and by mixing of salinity downward into the ocean. All of these processes, some quite poorly measured and understood, must be modeled correctly to ensure a proper high-latitude salinity budget and hence a correct THC.

The global annual cycle is relatively well documented in the instrumental record. There is no guarantee that a CGCM will respond correctly to the imposed, annually varying solar forcing, so the modeled annual cycle provides a major test of CGCMs. But a CGCM that responded only at the annual period to an annually varying sun would miss the variability on all the other time scales that make up the climatology. The observed annual cycle is a long-time average over all the variability present, and variability at periods other than annual may contribute to it. Since flux corrections are at present still needed to prevent climate drift in CGCMs, the global annual cycle in these models cannot be considered to have been independently modeled.

VARIABILITY

Since the ENSO cycle is such an important signal in the tropical atmosphere and ocean, it is not surprising that interdecadal modulations of this signal are also important. Rasmusson et al. (1995, in this chapter) present evidence that ENSO is modulated by long (century-scale) variability. They make the point, however, that the records are not nearly long enough (or good enough) to characterize this variability more precisely. Cane et al. (1995, also in this chapter) speculate that such regimes of enhanced or suppressed ENSO variability are internally generated, at least in the Zebiak-Cane model, and show that longer data records might be capable of resolving the precise mechanisms. Trenberth and Hurrell (1995, in this chapter) hypothesize that North Pacific decadal variability, which is connected with the Pacific North American pattern, can be linked to this decadal variability of the ENSO phenomenon.

At this time, no CGCM with equatorial resolution high enough to simulate the processes known to be important for ENSO has produced (or can produce) simulations long enough to tell us whether such regimes exist. To run these models for long periods, equatorial high resolution in the ocean must be combined with accurate simulation of the THC. Satisfying both these conditions in the ocean component of a CGCM is beyond the capabilities of the present generation of supercomputers.

It used to be thought that the response time of the THC was so long that decadal and longer variability would arise simply as the accumulated response to higher-frequency atmospheric forcing. Recent results (see Weaver, 1995, in this volume) indicate that there are purely oceanic mechanisms for generating interdecadal variability. Namely, the internal dynamics of the THC itself can produce such variability in response to steady fresh-water forcing from the atmosphere.

The question arises whether such variability would be present in a fully consistent CGCM, i.e., whether the atmosphere would amplify or damp the THC variability that would exist in an ocean-only model. The paper by Delworth et al. (1995) in this chapter describes a fully coupled model, driven by an annually varying sun. The model has high-latitude salt-flux corrections and heat-flux corrections, both varying with the time of year. The results indicate that interdecadal or longer variability survives the coupling. The mechanism of the SST and surface-salinity variability involves variability of the oceanic THC but is modified and complicated by the feedbacks inherent in a fully coupled model.

FUTURE PROBLEMS

Coupled models are gradually coming into their own. They have been quite successful at enabling us to understand and predict a wide range of phenomena, from the ENSO cycle to the responses to the anthropogenic increase of the radiatively active gases, especially carbon dioxide (see, e.g., Manabe et al., 1991, 1992). As computer resources become more available, coupled models are being run at more and more institutions. While it is still true that higher-resolution CGCMs can be run only on supercomputers, the advent of workstation computing has now made it possible for individual investigators to begin to run similar coarse-resolution coupled models.

The success of coupled models depends on the ability of the component models to realistically simulate key climate processes. It is therefore true, and always will be, that progress in atmospheric and oceanic modeling will prompt progress in coupled models. It is also true, however, that coupled models require special attention to processes not ordinarily emphasized in decoupled modeling. For example, atmospheric models must now consider the details of boundary-layer processes near the ocean surface in order to successfully simulate the fluxes of heat and momentum in response to a given SST. Similarly, mixed-layer processes in the ocean must be able to successfully simulate the SST for specified fluxes of heat and momentum from the atmosphere. The distribution of atmospheric rainfall, runoff from land, and sea ice growth and advection become important processes in guaranteeing the success of THC simulation. Because each of the component models is very sensitive to small errors in the other, process simulation that would be acceptable in a decoupled model can lead to unacceptable results in a coupled context. Successful simulation of one component in response to forcing in the other is a necessary, but by no means sufficient, condition for the success of the coupling.

We see that successful coupling demands improvements in the component models; indeed, the future of coupled models will depend on such improvements. Flux corrections can provide a temporary fix for model problems, but fully believable coupled models must simulate accurate climatologies without the need for flux corrections. Simulating decadal and longer variability also requires ocean models that correctly maintain the upper ocean's thermal structure; in practice this means that the THC must be correctly modeled. To confirm that natural variability has been successfully simulated, longer and more accurate data sets must be available. In this regard, paleoclimate indicators become especially valuable. Other proxy data sets (tree rings, corals, sediment cores, ice cores, etc.) become essential for mapping the domains of variability in which to test the coupled models. In this chapter, Battisti (1995) points out the unique role that proxy data play in modeling. He also points out the equally unique role that understanding and exploration through modeling play when data are so sparse and difficult to come by.

The ultimate test of understanding and simulating climate variability is the ability to predict that variability. We now know that certain aspects of seasonal-to-interannual variability, especially aspects of ENSO, are predictable, but no one knows whether decadal variability is deterministically predictable—and, if it is, which data are needed as initial conditions. Even if longer-term variability is not predictable, the ability of models to successfully simulate the spectrum of climate variability is a necessary prerequisite to our full understanding of the natural climate system.

CONCLUSION

As we have seen, the basic problem in coupled modeling is the correct simulation of the climatology, particularly the annual cycle. Since climate variability and the annual cycle contribute to each other, this goal is something of a moving target. We have to simulate the annual cycle correctly in order to simulate variability, but we have to simulate the variability correctly in order to simulate the annual cycle. We may therefore expect progress to be rather slow.

Progress in coupled modeling will be accelerated by:

- Improvements in understanding and modeling the atmosphere, ocean, land, and cryosphere separately. The primary physical processes needing better parameterization are clouds and water vapor in the atmosphere, mixing in the ocean, evapotranspiration and small-scale hydrology in the land, and sea-ice extent and growth in the cryosphere.

- Advances in understanding the nature of coupling, especially the general question of the sensitivities of each system to small errors in the other.

- General increases in available resources and computational infrastructure, allowing a wider community to gain access to coupled modeling.

- Improvement and extension of time series of physical quantities in the atmosphere, ocean, land, and cryosphere. This can be achieved by reanalysis of existing model data (e.g., daily weather analyses), data archaeology, improvements in existing observing networks and data handling, new techniques of paleoclimate analysis ... or by instituting new measuring networks and waiting till the time series is long enough.

While this last technique may seem the least efficient, future generations will appreciate our efforts as much as we would be grateful to previous generations, had they been prescient enough to do the same for us.

Decade-to-Century Time-Scale Variability in the Coupled Atmosphere-Ocean System: Modeling Issues

DAVID S. BATTISTI¹

ABSTRACT

A primary limitation in the study of the decade-to-century (hereafter termed "intermediate") time-scale variability in climate is that the instrumental records for all climate variables either do not exist or are too short to detect these phenomena with any measure of statistical confidence. Hence, the methodologies and strategies of the research activities related to the intermediate time scales of climate variability will be distinctly different from those related to interannual variability. While climate models are currently used mainly to simulate phenomena that are already well observed, the models themselves will frequently be the instruments with which scientists identify intermediate-scale climate phenomena. Ascertaining the veracity of a climate model must therefore be a primary activity in the study of intermediate-scale climate variability. Proxy data will play an important role in documenting climate variability on intermediate time scales and in evaluating the climate variability simulated by the models.

In this paper I argue that it is extremely important that the models used for intermediate-scale climate studies be validated a priori by assessing how accurately many well-documented "target" phenomena are represented in each model. Specific target phenomena are suggested, such as the seasonal and diurnal cycles of the state variables that are well-documented in nature, and the fluxes of energy and mass at the media interfaces. A quantitative comparison should be made of the processes that are responsible for these cycles in the model and those that are observed. In addition, valuable information on the veracity of the models will result from an assessment of how well the model's performance matches the well-documented interannual variability in the climate system.

INTRODUCTION

An increasing number of earth scientists have become interested in understanding natural variability in the climate system on century and especially decadal time scales. This paper pertains to climate variability within the atmosphere and ocean system on these time scales, which will be referred to as the "intermediate" time scales.² The potentially critical role of land hydrology and the cryosphere in intermediate-time-scale fluctuations in climate is discussed elsewhere (see the Atmospheric Observations section of Chapter 2). The variability in the atmosphere-ocean system on the intermediate time scales is at present poorly documented by comparison with both shorter (interannual) and longer (e.g., 10³ to 10⁶ years) time-scale oscillations. Information on the longer-scale variability is abundant. The transitions between glacial and interglacial conditions are changes in the global climate system large enough to be clearly defined in the global geological record, e.g., in the stratigraphy of sediment and ice deposits and in the radio-isotopic composition and distribution of the embedded flora and fauna. These proxy data have been instrumental in studies of long-term climate variability because an accurate measure of absolute elapsed time is available from the decay of radioactive carbon (¹⁴C), carbon being ubiquitous in these deposits.
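The dating principle referred to above is exponential decay. Given a half-life, the elapsed time follows from the fraction of the original isotope that remains. The sketch below assumes a closed sample, a known initial ¹⁴C level, and the commonly quoted 5,730-year half-life. It applies no calibration. Conventional radiocarbon ages use the Libby half-life of 5,568 years and are then calibrated. The function name is hypothetical.

```python
import math

C14_HALF_LIFE_YEARS = 5730.0  # commonly quoted 14C half-life (years)


def radiocarbon_age(fraction_remaining, half_life=C14_HALF_LIFE_YEARS):
    """Elapsed time from the fraction of the initial 14C that remains.

    Solves N = N0 * exp(-lambda * t) for t, with lambda = ln 2 / half_life.
    Assumes a closed sample and a known initial 14C level; no calibration applied.
    """
    if not 0.0 < fraction_remaining <= 1.0:
        raise ValueError("fraction_remaining must be in (0, 1]")
    decay_constant = math.log(2.0) / half_life
    return math.log(1.0 / fraction_remaining) / decay_constant


if __name__ == "__main__":
    for f in (0.5, 0.25, 0.01):
        print(f"{f:5.2f} remaining -> {radiocarbon_age(f):8.0f} years")
```

With this half-life the method is practical for ages up to a few tens of thousands of years. Beyond that, too little ¹⁴C remains to measure reliably.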

Compared with the sub-millennial vacillations in climate, the variability of the climate system on the intermediate time scales is thought to be rather small in amplitude. Until recently, there was little direct evidence for either local or global inhomogeneous climate variations on these time scales. The instrumental records of the climate variables prior to the turn of the century are, in isolation, inadequate for documenting the decade-to-century-scale climate variability; they exist for only a limited number of state variables, and the data for these variables are largely confined to very near the earth's surface (usually within 10 m) and are sparsely distributed. Historians and scientists have used phenological data to infer variability on interannual time scales. Phenological data are, by definition, available only in the regions of human habitation and are susceptible to the vagaries of human perception. In his seminal contribution to the study of European history, Braudel (1949) combined the evidence from literary references with a variety of phenological data and concluded that the entire Mediterranean area experienced abnormally cold and wet winters in the early seventeenth century—the Little Ice Age. (Braudel's data included the time of year of river and lake floods, the years of significant frost damage to olive trees, and the times and yields of various agricultural harvests.)

A much more rigorous historical portrait of the climate variability on intermediate time scales is provided by proxy data, although their usefulness is limited because their interpretation is not straightforward. Proxy data relevant to intermediate-scale climate variability include δ¹⁸O in glacial ice, lake varves, loesses, pollen data, and coral and tree-ring data (see, e.g., Bradley, 1991). The proxy and phenological data have been used together to construct a rather detailed and comprehensive assessment of the change in climate since the Little Ice Age; a brief summary is presented in Crowley and North (1991).

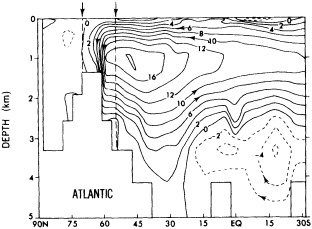

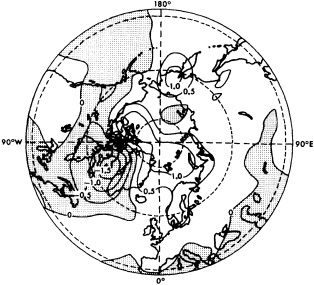

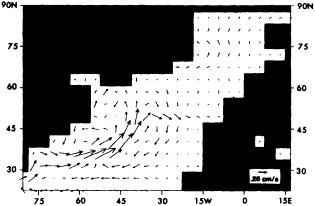

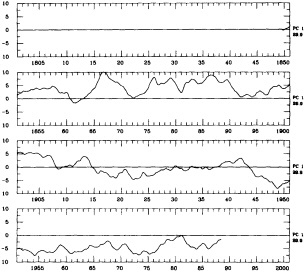

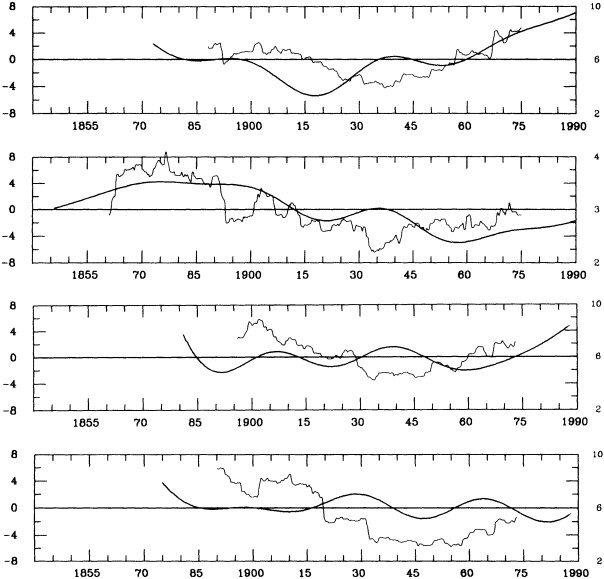

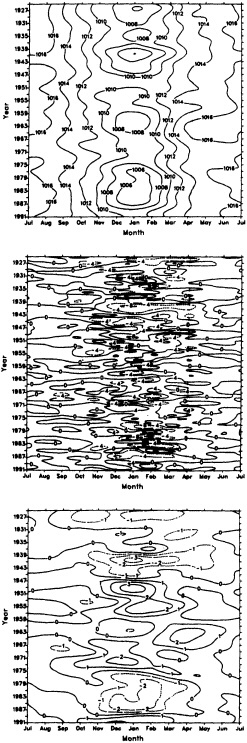

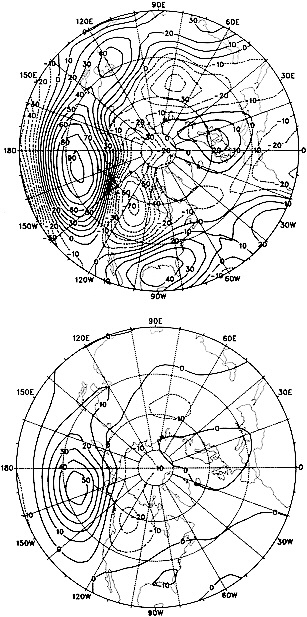

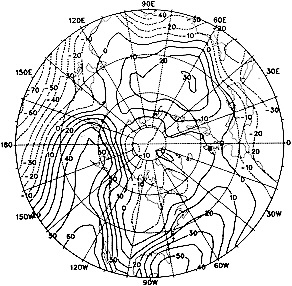

In the early 1960s Stommel and Bjerknes published studies that had little impact on the community for more than two decades but now provide focal points for scientists working on intermediate-scale climate variability. Stommel (1961) hypothesized that the general thermohaline circulation of the oceans might have multiple stable regimes. He demonstrated this hypothesis using a two-box analog model of an ocean basin. Stommel's hypothesis is supported by the recent coupled atmosphere/global-ocean general-circulation model studies of Manabe and Stouffer (1988). They obtained two statistically steady states for the meridional circulation in the Atlantic Ocean, one with and one without a vigorous thermohaline circulation. Bryan (1986) found that the transition between strong and weak mean meridional circulations in the abyssal ocean could happen in less than a century in a sector ocean model. Recently, Levitus (1989a,b) demonstrated that the deep North Atlantic hydrographic structure changed from the 1950s to the 1970s and suggested that this change was associated with deep convection (Figure 1). Together, these studies and related analytical, modeling, and observational studies have made the deep ocean circulation a candidate mechanism for, or indicator of, climate variability on the intermediate time scales.
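The multiple-regime idea can be sketched in a common nondimensional reduction of a two-box model. This is not Stommel's original formulation. The temperature contrast between a low-latitude and a high-latitude box is held fixed and scaled to 1. The variable x is the low-minus-high-latitude salinity contrast, scaled by its density effect. The overturning is q = 1 - x, fresh-water forcing E builds up x, and the overturning flushes x at rate |q|. For 0 < E < 1/4 there are two stable equilibria under identical forcing. One is thermally driven (q > 0, a THC that is "on"). The other is salinity-driven (q < 0). Which one the model reaches depends only on the initial state. All names are hypothetical.

```python
import math


def stommel_tendency(x, forcing):
    """dx/dt for the nondimensional salinity contrast x under fresh-water forcing."""
    q = 1.0 - x                   # overturning: fixed thermal driving minus haline braking
    return forcing - abs(q) * x   # forcing builds the contrast, overturning flushes it


def integrate(x0, forcing, dt=0.01, steps=20000):
    """Forward-Euler integration long enough to settle near an equilibrium."""
    x = x0
    for _ in range(steps):
        x += dt * stommel_tendency(x, forcing)
    return x


def stable_equilibria(forcing):
    """Analytic stable states, which both exist only for 0 < forcing < 1/4."""
    if not 0.0 < forcing < 0.25:
        raise ValueError("two stable states exist only for 0 < forcing < 1/4")
    thermal = (1.0 - math.sqrt(1.0 - 4.0 * forcing)) / 2.0  # q > 0: THC "on"
    haline = (1.0 + math.sqrt(1.0 + 4.0 * forcing)) / 2.0   # q < 0: salinity-driven
    return thermal, haline


if __name__ == "__main__":
    E = 0.2
    on = integrate(0.0, E)
    off = integrate(2.0, E)
    print(f"start x=0 -> x={on:.4f}, q={1.0 - on:+.4f}")
    print(f"start x=2 -> x={off:.4f}, q={1.0 - off:+.4f}")
```

The third equilibrium, (1 + sqrt(1 - 4E))/2, lies between the two stable states and is unstable. It separates the initial conditions that end up in each regime.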

In 1964, Bjerknes examined the contemporaneous fields of sea surface temperature (SST) and sea-level pressure (SLP) from the North Atlantic Ocean and noted differences in the 5-year mean climatologies of 1920-1924 and 1930-1934 (these pentads were chosen on the basis of the Azores-minus-Iceland SLP index). Bjerknes argued that these differences reflected a local climate change resulting from an interaction between the surface gyre circulation of the North Atlantic and the overlying atmosphere. He also implied that there were quantitative changes in the oceanic equator-to-pole heat transport. In retrospect, it can be seen that Bjerknes's method for inferring decadal changes in the pentads' means was inappropriate; the interannual variability of the coupled atmosphere-ocean system in the North Atlantic is large (see, e.g., Wallace et al., 1992; Kushnir, 1994), so a

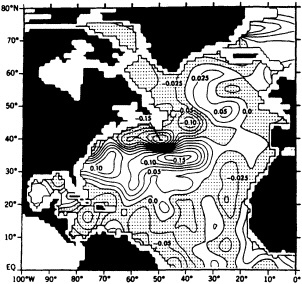

FIGURE 1

The difference in density (in kg m-3) at 500 m depth between the two pentads, 1970-74 and 1955-59. Regions with negative values are stippled and denote higher density during the 1955-59 pentad. (From Levitus, 1989a; reprinted with permission of the American Geophysical Union.)

long-term change inferred from differences in 5-year means is extremely unreliable. Nonetheless, Bjerknes's hypothesis that ocean dynamics play an important role in interdecadal variability is supported by recent studies of the decadal variability of the North Atlantic atmosphere-ocean system (e.g., Kushnir, 1992; Pan and Oort, 1983).
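The unreliability of differences between 5-year means can be quantified under a simple assumption. Suppose annual values are independent, with interannual standard deviation σ and no underlying change. The difference of two n-year means then has standard deviation σ·sqrt(2/n), about 0.63σ for pentads. The sketch checks this against a Monte Carlo estimate. Independence is an assumption; autocorrelated interannual variability would change the numbers. Function names are hypothetical.

```python
import math
import random
import statistics


def pentad_difference_std(sigma, n_years=5):
    """Std of the difference between two independent n-year means (no real change)."""
    return sigma * math.sqrt(2.0 / n_years)


def monte_carlo_difference_std(sigma, n_years=5, trials=20000, seed=1):
    """Estimate the same quantity by sampling independent Gaussian annual values."""
    rng = random.Random(seed)
    diffs = []
    for _ in range(trials):
        first = statistics.fmean(rng.gauss(0.0, sigma) for _ in range(n_years))
        second = statistics.fmean(rng.gauss(0.0, sigma) for _ in range(n_years))
        diffs.append(first - second)
    return statistics.stdev(diffs)


if __name__ == "__main__":
    print(f"analytic:    {pentad_difference_std(1.0):.3f} sigma")
    print(f"Monte Carlo: {monte_carlo_difference_std(1.0):.3f} sigma")
```

Even with no climate change at all, two pentad means typically differ by more than half the interannual standard deviation. A single pair of pentads therefore cannot by itself establish a decadal change.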

Nearly all the numerical and theoretical research on intermediate-scale climate variability has taken place during the last half-decade, for a variety of reasons. First, there is a growing demand that scientists assess and predict the anthropogenic impact on climate. Three things are essential (but not sufficient) to accomplishing this goal. The first is an accurate statement of the present climate from observations of the state variables. The second is a rigorous program that results in multiple independent forecasts of the anthropogenically forced change in climate. The third is a comprehensive inventory of the intermediate variability in the present natural climate system. That inventory lets one plan an efficient monitoring strategy for confidently assessing the accuracy of the forecast climate change against the future observed climate.

There is another reason, external to the greenhouse warming problem, why interest in intermediate-time-scale variability of the climate system has peaked sharply during the past 5 to 10 years. The TOGA and EPOCS programs of the 1980s resulted in the documentation, simulation, and skillful model prediction of the El Niño/Southern Oscillation (ENSO) phenomenon. Through these extraordinarily successful programs, scientists explicitly demonstrated for the first time that rich variability in the climate system can result solely from the interaction between the oceans and the atmosphere. More important, this period of research marked the advent of a new era. With a few notable exceptions, atmospheric scientists for the first time began to focus on the sub-monthly circulation anomalies in the troposphere, and oceanographers began to abandon the default assumption of a world ocean in a quasi-steady state.³

The research activities of the last decade also created a modest population of scientists who are actively performing basic research on both oceanic and atmospheric circulation and on the response of a climate system composed of the atmosphere coupled with the global oceans. As a result, there are now numerous studies that document coordinated interannual variability in the atmosphere-ocean system. There are also many studies in which isolated phenomena have been simulated and the essential physics documented. The scientific community now shows extraordinary interest in identifying and analyzing variability of the full climate system on decade-to-century time scales through modeling and observational studies. This interest can thus be attributed both to the research focus on interannual variability of the coupled atmosphere-ocean system and to the practical problems that have arisen in detecting an anthropogenically forced climate change.

In this paper, I discuss the constraints inherent in assessing both the actual variability of the climate system on the intermediate time scales and the physical and dynamical processes that are likely to be responsible for this variability. The methods for validating the "modes" of intermediate-scale climate variability that are produced by numerical and analytical models necessarily represent a change from the traditional modus operandi. A good example of the unique blend of modeling and observational research and monitoring efforts that is required to assess intermediate-scale variability is found in the charter for the Atlantic Climate Change Program (ACCP) of the National Oceanic and Atmospheric Administration (NOAA). I describe the ACCP and briefly review the evidence for a directly observed variability in the atmosphere/ocean/sea-ice system that has recently become a focus of the ACCP. The implications for modeling and modeling strategies are discussed, and a summary is presented at the end of the paper.

THE LIMITATIONS OF THE HISTORICAL INSTRUMENTAL RECORD

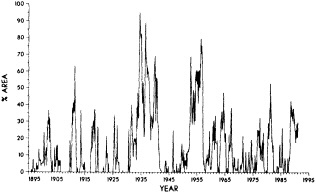

The primary limitation on the study of climate variations on the intermediate time scale is that the instrumental record for all climate variables is too short to permit the detection of these phenomena. Prior to the 1950s, the only maritime instrumental records useful for these studies are those for SST and air temperature. Although SST data are available along the major global shipping routes from about 1900 (see Pan and Oort, 1990), spatial and temporal coverage for these variables is adequate only across the North Atlantic (Figure 2). For the continental areas, potentially useful data are available for more of the climate variables as far back as the mid-1800s. These data, however, are uneven in their spatial distribution. Prior to World War II there are essentially no instrument-based data for the state of the atmosphere above the surface. For the ocean, subsurface data are limited to infrequent and isolated transects, usually across the North Atlantic. Thus, prior to the 1950s instrumental records exist for only a few key variables in isolated regions and provide only a blurred glimpse of climate variability. The data are insufficient for deducing the attendant atmospheric and oceanic circulations and heat transport, or the energy exchange between the media.
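The point about record length can be made concrete with a back-of-the-envelope calculation. The sketch below assumes, purely for illustration, that an annual-mean climate index behaves like first-order autoregressive (red) noise; under that assumption a common approximation for the number of effectively independent samples in a record of n values with lag-1 autocorrelation r1 is n(1 - r1)/(1 + r1). The function names and the value r1 = 0.8 are hypothetical and are not taken from any study cited here.

```python
def effective_sample_size(n: int, r1: float) -> float:
    """Approximate number of independent samples in a red-noise series.

    Assumes an AR(1) process with lag-1 autocorrelation r1 (an illustrative
    assumption, not a property of any particular climate record).
    """
    if not -1.0 < r1 < 1.0:
        raise ValueError("r1 must lie strictly between -1 and 1")
    return n * (1.0 - r1) / (1.0 + r1)


def cycles_sampled(record_years: float, period_years: float) -> float:
    """Number of full cycles of a given period that fit into a record."""
    return record_years / period_years


if __name__ == "__main__":
    years = 100  # roughly a century of instrumental data
    print(f"effective samples (r1=0.8): {effective_sample_size(years, 0.8):.1f}")
    for period in (10, 50, 100):
        print(f"{period:>3}-year period: {cycles_sampled(years, period):.0f} cycle(s)")
```

With these assumed numbers a century-long record holds only about eleven effectively independent annual values and at most two realizations of a 50-year oscillation.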

The post-World War II instrumental data base for the state of the atmosphere is rather complete4 over the Northern Hemisphere, especially over the continents, but it is not suitable for studying decadal climate variability in the Southern Hemisphere. A very uneven spatial and temporal record of the hydrographic and current structure of the world oceans is available for the period after World War II, but it is unlikely that the data coverage is sufficient to permit a posteriori deduction of decadal variations in the ocean climate.

FIGURE 2

The climatological data base for sea-surface temperature. Shown in (a) is the number of months in which there exists at least one observation in a 2° latitude by 2° longitude area during the decade 1920-29. A dot indicates 1-12 months (out of 120) with data, a slash 13-60 months, and a plus sign 61-120 months. Panel (b): as in (a), but for 1950-59. (From Pan and Oort, 1990; reprinted with permission of Springer-Verlag.)
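The coverage statistic plotted in Figure 2 is simple to reproduce for a single grid box. The sketch below counts the distinct months in a decade that contain at least one report and maps that count to the symbols described in the Figure 2 caption. The input format (a list of (year, month) report dates for one 2° by 2° box) and the function names are hypothetical, and leaving a box blank when it has no data is an assumption, since the caption does not say how empty boxes are drawn.

```python
def months_with_data(report_dates, decade_start):
    """Count distinct (year, month) pairs with >= 1 report in a 10-year window."""
    months = {
        (year, month)
        for year, month in report_dates
        if decade_start <= year < decade_start + 10
    }
    return len(months)


def coverage_symbol(n_months: int) -> str:
    """Map months-with-data (out of 120) to the Figure 2 plotting symbol."""
    if not 0 <= n_months <= 120:
        raise ValueError("a decade contains only 120 months")
    if n_months == 0:
        return " "  # assumption: boxes with no data are left blank
    if n_months <= 12:
        return "."
    if n_months <= 60:
        return "/"
    return "+"


if __name__ == "__main__":
    # Hypothetical reports for one box; several reports in one month count once.
    reports = [(1921, 1), (1921, 1), (1925, 7), (1929, 12), (1931, 3)]
    n = months_with_data(reports, 1920)
    print(n, repr(coverage_symbol(n)))  # 3 '.'
```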

Fundamental to understanding the coupled atmosphere-ocean system is a knowledge of how energy and constituents are exchanged between the two media. On this score, even the recent instrumental record is clearly deficient. For example, there is large uncertainty as to the annual cycle of the turbulent exchange of heat and momentum alone; estimates of the variability in these energy exchanges on the intermediate time scales would be premature.

In summary, it is not possible to discern the past variability of the climate system on the century scale from the instrumental data sources. Recent decadal variations in the atmosphere-ocean system can perhaps be deduced with some confidence from the instrumental data base, although incompletely. The large spatial gaps in the data set (especially in the subsurface oceans) introduce uncertainty in determining whether the variability is locally confined or of global extent. Because of the severe limitations of the existing instrumental record, proxy data sets will play an important role in documenting the intermediate-scale climate variability and, perhaps, in evaluating simulated climate variability (discussed below).

THE TRADITIONAL MODUS OPERANDI AND THE ATLANTIC CLIMATE CHANGE PROGRAM

The Traditional Modus Operandi

The history of atmospheric sciences and oceanography is replete with examples of community-wide intensive research activities focused on the precise documentation and analysis of specific observed phenomena that represent statistically significant perturbations from a mean state, e.g., the mid-latitude cyclone, the Quasi-biennial Oscillation (QBO), and the Gulf Stream. Major programs have also commonly had a well-observed phenomenon as their centerpiece. Examples include GATE (easterly waves, mesoscale tropical convection), POLYMODE (long-lived coherent eddies in the ocean thermocline), ERICA (rapidly deepening cyclones), CLIMAP (reconstruction of the climate of the last ice age), and the upcoming EPOCS effort (the annual cycle and the boundary layer circulation in the Pacific).5

The Tropical Ocean and Global Atmosphere (TOGA) program provides a good illustration of the traditional research strategies, and of the profound effects the limited data base will have on the modus operandi in research on the variability of climate on intermediate time scales. The ENSO phenomenon was the centerpiece for TOGA, although mid-latitude phenomena were also documented and modeled in this program. It is important to recall that prior to TOGA the ENSO was already a reasonably well-documented phenomenon, being a large-scale, large-amplitude perturbation in the atmosphere-ocean system. The emphasis of the TOGA program was on providing an understanding of how and why this climate anomaly was manifested and on assessing the predictability of the phenomenon. In contrast, for intermediate-scale climate variability the target phenomena are smaller in amplitude, are derived from only a few realizations, and are not completely defined by the historical data.

The methodologies and strategies of the research activities related to the intermediate-scale climate variability will be distinctly different from those related to interannual variability for two additional reasons: (1) the inherent limitations of the data base of directly observed climate state variables (discussed above), and (2) the constraints imposed by limited computational resources coupled with the uncertainty as to the veracity of the simulated phenomena because of the parameterization of the small-scale processes and the (still) poorly understood physics. The science plan, priorities, and ongoing activities of the ACCP and of the nascent Global Ocean-Atmosphere-Land System program (GOALS) duly reflect these constraints.

The Atlantic Climate Change Program

The ACCP was formally initiated after a workshop held at the Lamont-Doherty Earth Observatory of Columbia University in July 1989. The goals of this program are as follows:

- To determine the seasonal-to-decadal and multidecadal variability in the climate system due to interactions between the Atlantic Ocean, sea ice, and the global atmosphere using observed data, proxy data, and numerical models.

- To develop and utilize coupled ocean-atmosphere models to examine seasonal-to-decadal climate variability in and around the Atlantic Basin, and to determine the predictability of the Atlantic climate system on seasonal-to-decadal time scales.

- To observe, describe, and model the space-time variability of the large-scale circulation of the Atlantic Ocean and determine its relation to the variability of sea ice and sea surface temperature and salinity in the Atlantic Ocean on seasonal, decadal, and multidecadal time scales.

- To provide the necessary scientific background to design an observing system of the large-scale Atlantic Ocean circulation pattern, and develop a suitable Atlantic Ocean model in which the appropriate data can be assimilated to help define the mechanisms responsible for the fluctuations in Atlantic Ocean circulation.6

The ACCP is an interdisciplinary program that involves atmospheric scientists, oceanographers, and paleoclimatologists. Currently the program shows an appropriate balance between modeling efforts and analysis of the historical proxy and instrumental data. Furthermore, observational programs have been launched to help determine the link between intermediate-time-scale SST anomalies and variability in the thermohaline circulation by means of long-term monitoring of the deep Western Boundary Current. Observational studies that are ongoing or anticipated include tracer inventories, hydrologic monitoring of Fram Strait, and monitoring of the upper ocean thermal structure through the Atlantic Voluntary Observing Ships Special Observing Project and surface-drifter deployments.

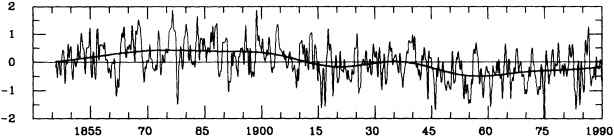

Two different patterns of variability in the North Atlantic atmosphere-ocean system have been identified in the historical data. Deser and Blackmon (1991; 1995, in this volume), using the historical observational data of sea-surface temperature, sea-level pressure, and the zonal wind over the Atlantic Basin, have demonstrated that there is a complex wintertime atmosphere-ocean interaction in the North Atlantic with a preferred time scale of about 10 years. The anatomy of this decadal variability appears to be somewhat different from that of the interannual climate anomaly in the North Atlantic documented in Wallace et al. (1992).

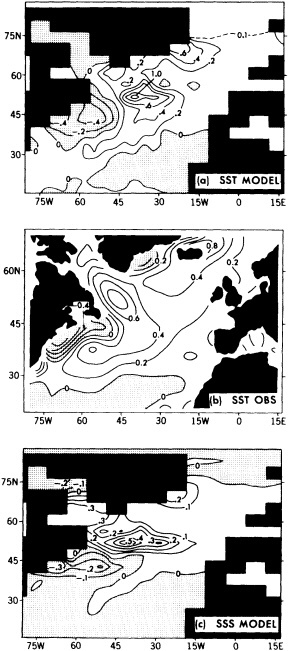

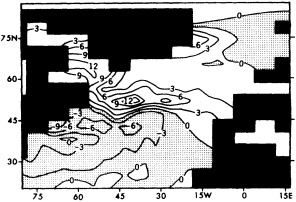

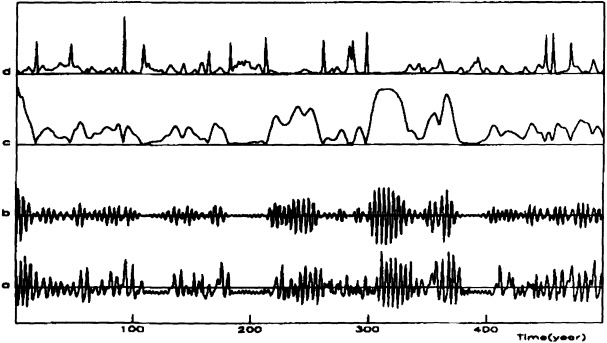

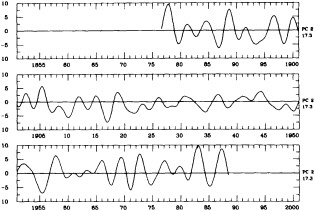

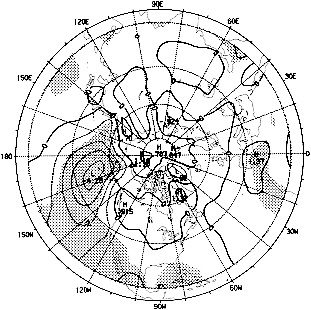

Using the same surface fields and focusing on the changes in the Atlantic atmosphere-ocean system over multidecadal periods during the last century, Kushnir (1992) found that the relationship between changes in the SST and changes in the overlying surface atmospheric circulation was significantly different from that associated with higher-frequency variability in the Atlantic climate system. In this multidecadal transition, the atmospheric circulation changes are consistent with a local quasigeostrophic response to the changes in the ocean SST (see Figure 3). The hydrographic record for the same period indicates that the SST changes are echoed in the deep ocean, where concomitant changes in salinity are found (Lazier, 1988; Levitus, 1989a,b; see also Figure 1). Thus, unlike the case for the higher-frequency "modes," evidence suggests that changes in the thermohaline circulation and sea-ice export from the Arctic Ocean are associated with this multidecadal transition.
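Figure 3 is built from an epoch comparison: the mean of one multiyear period minus the mean of another. A minimal sketch of that calculation for a single grid point follows, with a Welch t statistic as one possible (assumed) measure of how large the difference is relative to year-to-year scatter. The sign convention (earlier epoch minus later epoch) and the sample values are hypothetical; with serially correlated winters the usable degrees of freedom would be smaller than the sample sizes suggest.

```python
import math
from statistics import mean, variance


def epoch_difference(early, late):
    """Return (mean(early) - mean(late), Welch t statistic) for two epochs.

    `early` and `late` are sequences of winter-mean values at one grid point.
    Uses sample variances and treats winters as independent, which
    overstates significance if the series is autocorrelated.
    """
    diff = mean(early) - mean(late)
    std_err = math.sqrt(variance(early) / len(early) + variance(late) / len(late))
    return diff, diff / std_err


if __name__ == "__main__":
    # Hypothetical winter SST anomalies (deg C), not data from Kushnir's study.
    warm_epoch = [0.3, 0.5, 0.1, 0.4, 0.2]
    cold_epoch = [-0.2, -0.4, 0.0, -0.3, -0.1]
    d, t = epoch_difference(warm_epoch, cold_epoch)
    print(f"difference = {d:.2f} C, t = {t:.1f}")
```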

In order to identify the climate variability in and around the Atlantic basin on decade-to-century and longer time scales, ACCP-sponsored studies have been begun that will determine the extent to which the instrumental records used in the studies mentioned earlier can be augmented (e.g.,

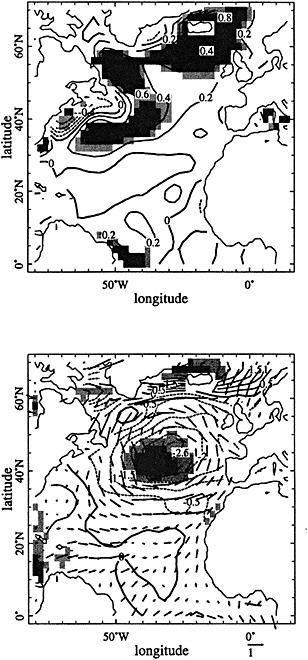

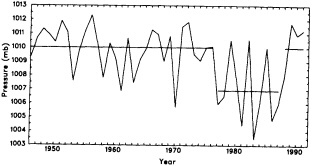

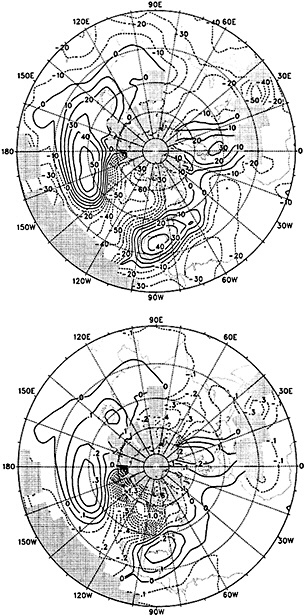

FIGURE 3

Decadal time-scale climate anomalies in the wintertime (December-April) in the North Atlantic Panel (a): the difference between the average wintertime SSTs between 1950 and 1964 and between 1970 and 1984. The former period (1950-1964) was characterized by warmer-than-normal water in the winter in the North Atlantic, the latter (1970-1984) by anomalously cold water. The contour interval is 0.2°C. Panel (b): as in (a), except for the attendant atmospheric surface variables: sea-level pressure (contour interval 0.5 mb) and vector winds (a 1.0-m/s vector is found below the panel). (From Kushnir, 1994; reprinted with permission of the American Meteorological Society.)

surface salinity and hydrographic data). Nonetheless, the state of the (Atlantic) climate system, as realized from direct measurements of the climate state variables, will remain insufficient to permit assessment of the natural climate variability on decadal and longer time scales, especially away from the earth's surface. Thus, within the ACCP there are also ongoing studies to identify sources of proxy data that will define and constrain the variability in the Atlantic climate system. These studies include an analysis of dendroclimatic and ice-core data surrounding the North Atlantic as well as an analysis of deep-sea, high-sedimentation-rate cores. Encouraging preliminary results from these studies were presented by D'Arrigo et al. (1992), Bond et al. (1992), and Keigwin and Boyle (1992) at the 1992 ACCP Principal Investigators' meeting.

The usefulness of a single proxy data source for inferring the intermediate-time-scale variability in climate or a climate state variable is often limited for two reasons. First, common proxy data are not usually available for the entire globe (e.g., coral is found only in the tropics). Thus, a proxy source, in isolation, cannot be used to differentiate local and global climate variations. Second, climatic implications from a single proxy source can be ambiguous. For example, the cambial activity of trees depends on both seasonal temperatures and precipitation; for many species of trees it is difficult to determine uniquely the relationship between these two climatic variables and the cambial activity. Many proxy climate indicators must be used to ensure consistency and remove any ambiguity in the historical climate record assembled from the proxy data, and to better assess whether the climate variability is regionally confined or global in extent. The utility of this effort is illustrated by the remarkable progress made on the climate of the last glacial maximum (CLIMAP, 1981). In this regard, the IGBP Past Global Change project (PAGES) is timely (IGBP, 1992; see also Bradley, 1991).
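The ambiguity of a single proxy, and why several proxies help, can be seen in a deliberately simplified linear setting. Suppose, as an illustrative assumption only, that two proxy anomalies respond linearly to temperature and precipitation anomalies with different sensitivities. One proxy gives one equation in two unknowns; two proxies whose sensitivities are not proportional give a solvable 2 x 2 system. The sensitivity values below are hypothetical, and real proxy calibration involves noise, nonlinearity, and seasonality that this sketch ignores.

```python
def invert_two_proxies(p1, p2, sens1, sens2):
    """Recover temperature and precipitation anomalies from two proxies.

    Assumes p_i = a_i * T + b_i * P with sens_i = (a_i, b_i), and solves
    the 2 x 2 system by Cramer's rule.
    """
    a1, b1 = sens1
    a2, b2 = sens2
    det = a1 * b2 - a2 * b1
    if abs(det) < 1e-12:
        # Proportional sensitivities: both proxies record the same mix of T and P.
        raise ValueError("T and P cannot be separated with these proxies")
    temp = (p1 * b2 - p2 * b1) / det
    precip = (a1 * p2 - a2 * p1) / det
    return temp, precip


if __name__ == "__main__":
    ring_width = (0.5, 0.3)     # hypothetical sensitivities to (T, P)
    second_proxy = (0.8, -0.1)  # hypothetical second proxy with a different mix
    # Forward model: anomalies T = 1.0 and P = 2.0 produce these proxy values.
    p1 = 0.5 * 1.0 + 0.3 * 2.0
    p2 = 0.8 * 1.0 - 0.1 * 2.0
    print(invert_two_proxies(p1, p2, ring_width, second_proxy))
```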

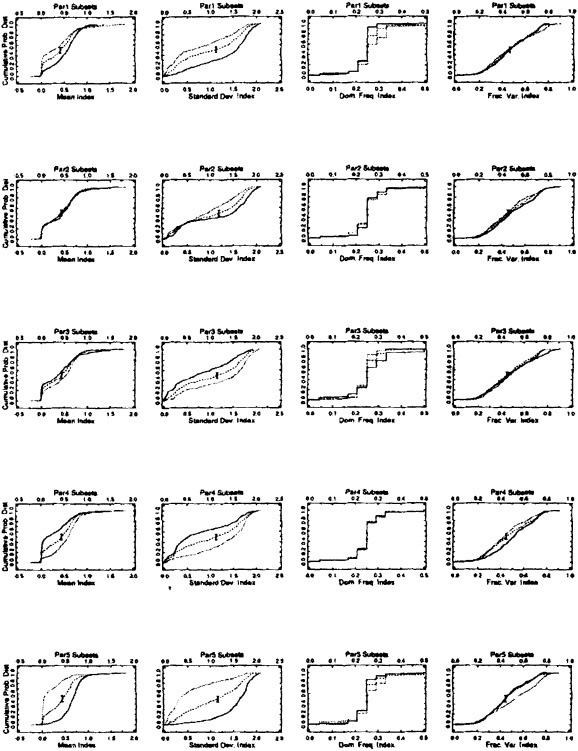

IDENTIFYING POTENTIAL CLIMATE VARIABILITY ON DECADE-TO-CENTURY TIME SCALES FROM NUMERICAL MODELS: STRATEGIES AND LIMITATIONS

Numerical models will be the primary tools by which the mechanisms responsible for decade-to-century-scale climate variability are identified. More important, these same models will frequently be the instruments that scientists use to identify target intermediate-time-scale climate phenomena, because instrumental data exist only for the last century and, as mentioned above, for only a limited domain of the climate system. For the same reasons it will often be difficult to determine whether simulated phenomena are ever realized in nature. Thus, our confidence that a simulated phenomenon could occur will result only from an a priori assessment of how accurately the model simulates many well-documented phenomena.

A model to be used for studying natural variability in the climate system on the intermediate time scales must include complete and interactive modules for the four central media: the atmosphere, the global oceans, the global terrestrial and marine biosphere, and sea ice. An important aspect of this system for the intermediate-time-scale climate studies is an accurate and complete representation of the hydrologic cycle (OCP, 1989). Climate variability models are the result of marrying the individual models for the four media, each of which has first been tested in isolation by prescribing the appropriate boundary conditions for the state of the adjacent media. (When appropriate, the flux of energy is prescribed at the boundaries.) The tests of the uncoupled models do not guarantee a good coupled climate model, but are practical first steps.

Finally, full general-circulation models (GCMs) for the atmosphere and global oceans are required to study intermediate-time-scale climate variability, because there is no evidence from the observational data that this variability is regionally confined (for example, to within a hemisphere, an ocean basin, or the near-surface ocean), as the interannual climate variations seem to be.

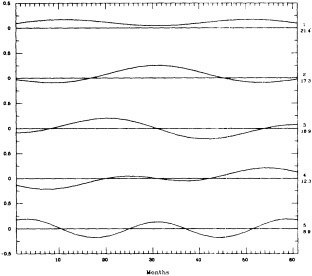

Prerequisite Constraints for the Uncoupled Modules

The foremost test of the uncoupled component modules is their ability to reproduce the seasonal cycle, and thus the annual mean state, when forced by the imposed boundary conditions. The annual cycle is an excellent test case for validating uncoupled component modules, because in most cases the annual cycle is well known for many of the climate state variables; in some cases the product moments are also reasonably well known (e.g., the meridional heat transport in the atmosphere). For the coupled atmosphere and land-surface modules, the diurnal cycle provides a second excellent test. The diurnal cycles of near-surface air temperature, moisture, clouds, and wind are well observed over land. An accurate simulation of these cycles throughout the year provides a rigorous test of the combined boundary-layer physics and surface-flux parameterizations that act to maintain the simulated climate.
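As one simple way to turn the annual-cycle test into a number, the sketch below computes the mean annual cycle (monthly climatology) of a monthly series and compares a simulated cycle with an observed one using a root-mean-square difference and a half-range amplitude. The metric choices and function names are assumptions for illustration; a real validation would also weigh phase errors, spatial patterns, and observational uncertainty.

```python
import math


def monthly_climatology(series):
    """Mean annual cycle of a monthly series that starts in January."""
    if len(series) == 0 or len(series) % 12:
        raise ValueError("series must contain a whole number of years")
    n_years = len(series) // 12
    return [sum(series[y * 12 + m] for y in range(n_years)) / n_years for m in range(12)]


def rms_difference(a, b):
    """Root-mean-square difference between two equal-length cycles."""
    return math.sqrt(sum((x - y) ** 2 for x, y in zip(a, b)) / len(a))


def half_range(cycle):
    """Half the peak-to-trough range, a crude annual-cycle amplitude."""
    return (max(cycle) - min(cycle)) / 2.0


if __name__ == "__main__":
    # Hypothetical surface temperatures (deg C): an idealized "observed" cycle
    # and a "model" cycle that is too weak and 1 degree too warm.
    obs = [10 + 8 * math.cos(2 * math.pi * (m - 6) / 12) for m in range(12)] * 3
    model = [11 + 6 * math.cos(2 * math.pi * (m - 6) / 12) for m in range(12)] * 3
    obs_clim, model_clim = monthly_climatology(obs), monthly_climatology(model)
    print(f"RMS difference: {rms_difference(model_clim, obs_clim):.2f}")
    print(f"amplitude obs {half_range(obs_clim):.1f}, model {half_range(model_clim):.1f}")
```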

The general-circulation models for the atmosphere (AGCMs) are routinely validated by comparing the simulated climatology with that observed for variables or features that include the jet structure, variation of the height of selected geopotential surfaces in the troposphere, the zonal mean distribution of temperature, zonal wind, and SLP. It is of crucial importance for climate variability, however, that the models accurately simulate the observed annual cycle of all the boundary-layer fluxes: momentum, sensible, convective, latent, and radiative fluxes at the surface, and outgoing long-wave flux at the top of the atmosphere.

The sea-ice models should be required to provide an adequate simulation of the seasonal cycle of surface temperature, sea-ice thickness, and ice advection and production—all quantities that are qualitatively known from observations (e.g., Walsh et al., 1985). The ocean models must be able to simulate the climatological annual mean circulation and hydrographic structure. In the tropics there is a significant and well-documented annual cycle in the upper-level currents and hydrography that provides additional constraints on the model. Especially important is the accurate simulation of the global SST. Until recently the SST was constrained in many ocean modeling studies by a rapid forced relaxation to a prescribed climatology.
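The effect of a rapid relaxation of SST toward climatology can be illustrated with a single mixed-layer column, assuming the relaxation enters as a Newtonian restoring term. If T' is the SST departure from the prescribed climatology, F' an imposed surface heat-flux anomaly, h the mixed-layer depth, and tau the relaxation time, then dT'/dt = F'/(rho c_p h) - T'/tau, whose equilibrium is T' = F' tau/(rho c_p h). The parameter values below (50-m depth, 10 W m^-2 anomaly, relaxation times of 30 and 365 days) are hypothetical choices, not those of any model discussed here.

```python
RHO = 1025.0   # seawater density, kg m^-3
CP = 3990.0    # specific heat of seawater, J kg^-1 K^-1
DAY = 86400.0  # seconds per day


def equilibrium_anomaly(flux_anom, tau_days, depth=50.0):
    """Steady SST departure (K) at which restoring balances a flux anomaly (W m^-2)."""
    return flux_anom * tau_days * DAY / (RHO * CP * depth)


def relaxed_sst_anomaly(flux_anom, tau_days, depth=50.0, years=5.0, dt_days=1.0):
    """Forward-Euler integration of dT'/dt = F'/(rho*cp*h) - T'/tau from T' = 0."""
    heat_capacity = RHO * CP * depth  # J m^-2 K^-1
    tau = tau_days * DAY
    dt = dt_days * DAY
    anomaly = 0.0
    for _ in range(int(years * 365 / dt_days)):
        anomaly += dt * (flux_anom / heat_capacity - anomaly / tau)
    return anomaly


if __name__ == "__main__":
    for tau_days in (30.0, 365.0):
        print(f"tau = {tau_days:>5.0f} d: equilibrium T' = "
              f"{equilibrium_anomaly(10.0, tau_days):.2f} K, "
              f"after 5 yr T' = {relaxed_sst_anomaly(10.0, tau_days):.2f} K")
```

With these assumed values the 30-day restoring holds the SST response near 0.13 K, about twelve times smaller than with a one-year relaxation time, which illustrates how strong restoring keeps the SST pinned to its prescribed climatology.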

A relaxation to a climatologically observed hydrography has also been used in and below the thermocline in many numerical studies of ocean general circulation (Hibler and Bryan, 1987; Semtner and Chervin, 1988); in some instances maintenance of the thermocline is avoided by limiting the length of integrations, so equilibrium is never achieved. This practice has been useful for short-term simulations of the upper-ocean circulation away from convective regions (e.g., Philander et al., 1987) but is clearly inappropriate for the study of climate and intermediate-scale climate fluctuations. The limited observational data indicate that the entire ocean domain can be involved in climate variations on these time scales (e.g., Levitus, 1989a), although it is not clear, for instance, whether the deep ocean is necessarily an active player or just a barometer for the changing surface processes via the resultant convection.

Independent tests of the component models should also include constraints that can be deduced from certain tracers that are well observed and whose annual cycles and mean distributions are understood. For the atmosphere, these include ⁸⁵Kr, Freons, and CO2, which have already been used to independently validate the mean cross-equatorial exchange rate of one AGCM (Tans et al., 1989). For the ocean, bomb-produced ¹⁴C can be used to assess the accuracy of the simulated Atlantic Ocean circulation and the concomitant mixing processes (Toggweiler et al., 1989). Further insights into the oceanic mixing processes and convection, both of which are parameterized in ocean models, may be obtained by comparing the Lagrangian transport in models with observed distributions of other tracers, including the chlorofluorocarbons.
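The way a tracer constrains interhemispheric exchange can be sketched with a two-box atmosphere, a standard idealization assumed here and not necessarily the approach of Tans et al. (1989). A tracer emitted only in the northern box at a constant rate S (expressed as a mixing-ratio tendency of that box) and exchanged with the southern box on a time scale tau obeys dC_N/dt = S - (C_N - C_S)/tau and dC_S/dt = (C_N - C_S)/tau. The difference D = C_N - C_S then relaxes to S tau/2, so an observed gradient and a known source give tau = 2D/S. Radioactive decay (relevant for ⁸⁵Kr) and chemical loss are ignored, and the numbers are hypothetical.

```python
def simulate_two_box(source, tau, years=20.0, dt=0.001):
    """Forward-Euler integration of a two-box tracer model starting from zero.

    source: emission into the northern box (mixing-ratio units per year).
    tau: interhemispheric exchange time (years).
    Returns (northern, southern) mixing ratios after `years`.
    """
    c_north = c_south = 0.0
    for _ in range(int(round(years / dt))):
        exchange = (c_north - c_south) / tau
        c_north += dt * (source - exchange)
        c_south += dt * exchange
    return c_north, c_south


def exchange_time_from_gradient(gradient, source):
    """Invert the steady-state relation D = S * tau / 2 for tau."""
    return 2.0 * gradient / source


if __name__ == "__main__":
    north, south = simulate_two_box(source=10.0, tau=1.0)  # hypothetical values
    gradient = north - south
    print(f"N-S difference: {gradient:.3f}")
    print(f"inferred exchange time: {exchange_time_from_gradient(gradient, 10.0):.3f} yr")
```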

Finally, there are well-observed phenomena in the atmosphere and ocean that occur on interannual time scales that can be used to help validate the models. For the atmosphere models these include the QBO and the Southern Oscillation, and for the Pacific Ocean models, the El Niño.

There is a tremendous amount of work to be done on documenting the impact of the parameterization of unresolved physics on the simulated large-scale, low-frequency climate. Recent studies have demonstrated that different parameterizations for convection in both atmosphere and ocean GCMs can result in qualitatively different mean circulations and climate variability; an example is discussed below. Similarly, the recent advances in understanding the dynamics and thermodynamics of individual clouds must be extended to yield quantitative descriptions and parameterizations of the energy and mass transport by an ensemble of clouds on the scale of an AGCM grid.

Recent Results: Overturning the Rocks

In this section I will use three examples that I believe presage the results that will be achieved during the 1990s in modeling intermediate-time-scale climate variations.

Example 1. Over the last decade, studies have been published on the response of the wintertime Northern Hemisphere atmosphere to the principal mode of the observed interannual SST anomaly in the North Pacific Ocean (e.g., Pitcher et al., 1988). In these studies, which utilized AGCMs, investigators prescribed a perpetual January insolation to ensure statistically significant results with limited computational resources. The model's response to the prescribed SST anomaly in each of these experiments was contrary to that observed (e.g., Wallace and Jiang, 1987). The polarity of the model's geopotential anomaly was independent of the polarity of the forcing anomaly. Recently, Lau and Nath (1990; hereafter LN) examined the response of the GFDL AGCM to the observed 1950-1979 SST and an annual cycle in insolation.7 Upon isolating the circulation anomalies associated with the North Pacific SST anomalies, LN found that the model anomalies were indeed consistent with those observed (i.e., a quasi-linear relationship between anomalies in SST and geopotential). Kushnir and Lau (1992) used the same model as LN and repeated the perpetual-January experiments of Pitcher et al. (1988). The results of this study were consistent with those of the earlier perpetual-January experiments, and contrary to LN and the observational data. Thus, the discrepancies between the physics of the observed atmospheric response to SST anomalies and that found in the simulations of Pitcher et al. and of Kushnir and Lau can be explained by the prescribed unrealistic (perpetual-January) forcing. The moral here is that compromises in the experimental plan that are made because of computational constraints may lead to fallacious conclusions.
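The diagnostic at the heart of this example, whether the response changes sign when the forcing does, can be expressed as a composite test: average the response over cases with positive forcing and over cases with negative forcing, and check whether the two composites have opposite signs. The sketch below is a hypothetical illustration of that idea with synthetic numbers; it is not the procedure used by Lau and Nath (1990) or Kushnir and Lau (1992), and a real analysis would also assess statistical significance.

```python
from statistics import mean


def polarity_composites(forcing, response):
    """Mean response over positive-forcing cases and over negative-forcing cases."""
    positive = [r for f, r in zip(forcing, response) if f > 0]
    negative = [r for f, r in zip(forcing, response) if f < 0]
    if not positive or not negative:
        raise ValueError("need cases of both forcing polarities")
    return mean(positive), mean(negative)


def response_flips_sign(forcing, response):
    """True if the two composites have opposite signs (a quasi-linear response)."""
    pos, neg = polarity_composites(forcing, response)
    return pos * neg < 0


if __name__ == "__main__":
    sst_anomaly = [1.0, -1.0, 0.5, -0.5, 0.8, -0.8]   # synthetic forcing
    linear = [2.0 * f for f in sst_anomaly]           # response follows polarity
    same_sign = [2.0 * abs(f) for f in sst_anomaly]   # polarity-independent response
    print(response_flips_sign(sst_anomaly, linear))     # True
    print(response_flips_sign(sst_anomaly, same_sign))  # False
```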

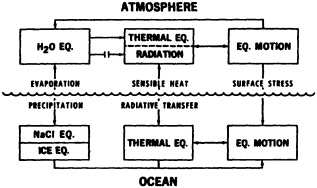

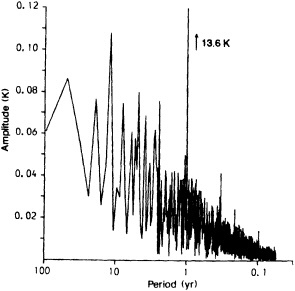

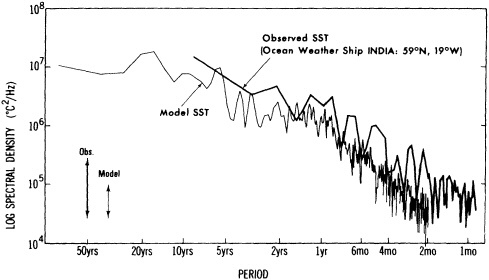

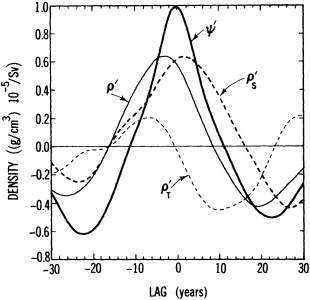

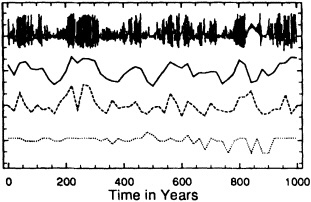

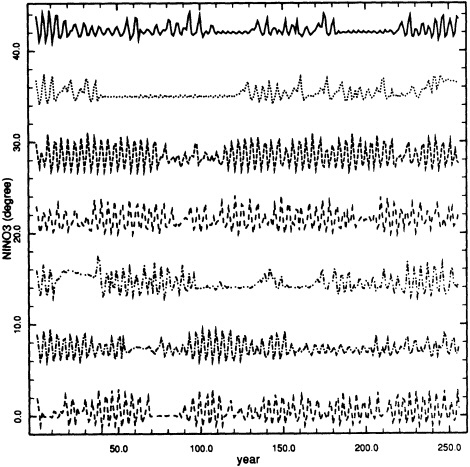

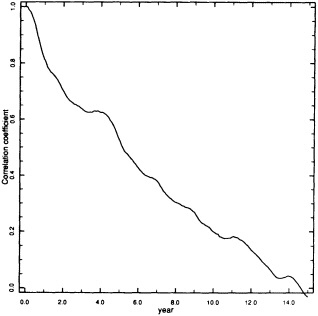

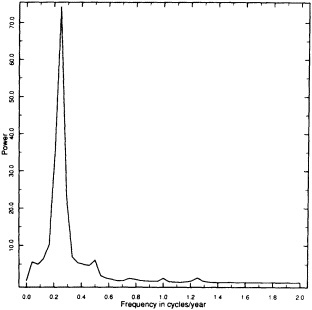

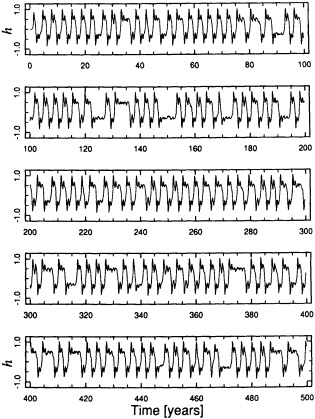

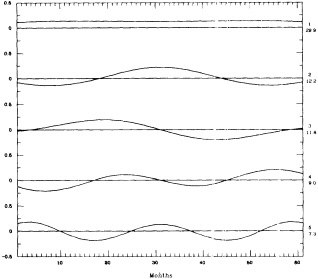

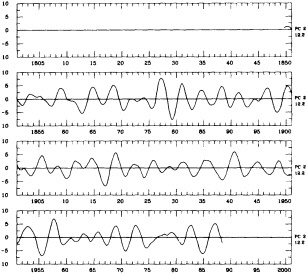

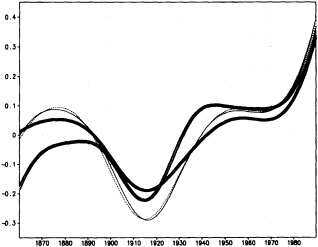

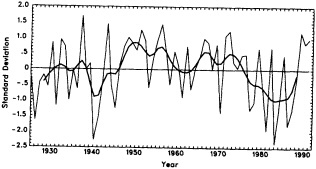

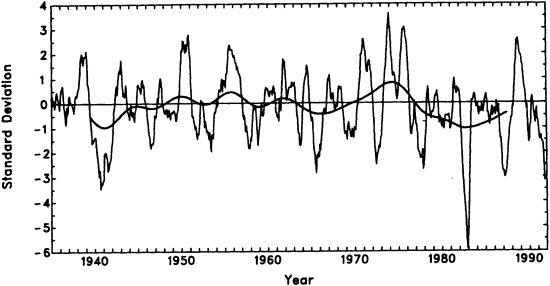

Example 2. James and James (1989) employed a primitive-equation model of the atmosphere (T21, five layers), prescribing the annual cycle as the only long-term forcing; slow variations in SST or insolation were not permitted in their experiment. In this model, the variance in the largest-scale structures of the circulation occurred on decadal or longer time scales (Figure 4). Here the moral seems to be that variability on the intermediate time scale may be internal to one or more of the principal climate media, and coincidental to any variations in either the external forcing or the surrounding media.
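A spectrum like that in Figure 4 is estimated from a model time series. The sketch below computes a raw periodogram with NumPy's real FFT and reports the period of the largest peak. The input is a synthetic 96-year series, a 12-year sinusoid plus white noise, chosen only to mimic the record length and peak period quoted in the Figure 4 caption; it is not output from the James and James (1989) model, and a raw periodogram without smoothing or significance testing is a deliberately minimal choice.

```python
import numpy as np


def periodogram(x, dt=1.0):
    """Raw periodogram of a real series sampled every `dt` time units.

    Returns positive frequencies (cycles per unit time) and power,
    with the zero-frequency (mean) term dropped.
    """
    x = np.asarray(x, dtype=float)
    x = x - x.mean()
    power = np.abs(np.fft.rfft(x)) ** 2 / x.size
    freqs = np.fft.rfftfreq(x.size, d=dt)
    return freqs[1:], power[1:]


def dominant_period(x, dt=1.0):
    """Period (same units as dt) of the largest periodogram peak."""
    freqs, power = periodogram(x, dt)
    return 1.0 / freqs[np.argmax(power)]


if __name__ == "__main__":
    rng = np.random.default_rng(0)
    years = np.arange(96)
    series = np.sin(2 * np.pi * years / 12.0) + 0.5 * rng.standard_normal(years.size)
    print(f"dominant period: {dominant_period(series):.1f} years")
```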

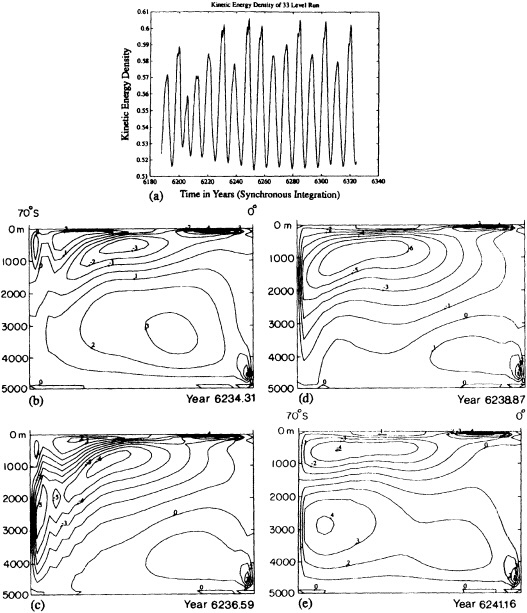

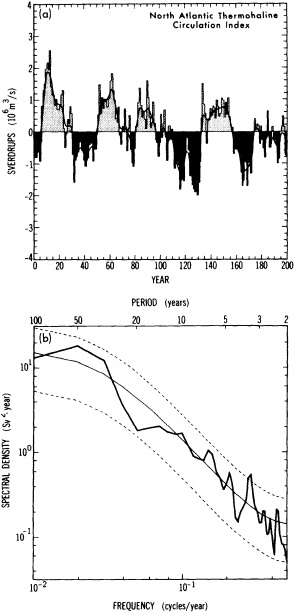

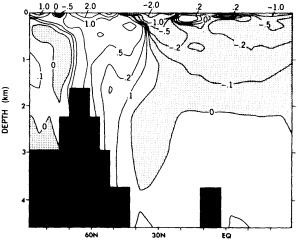

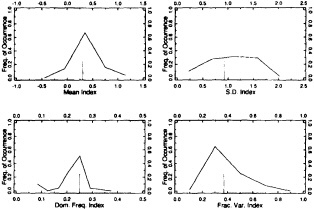

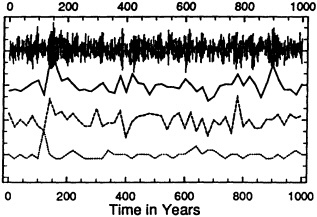

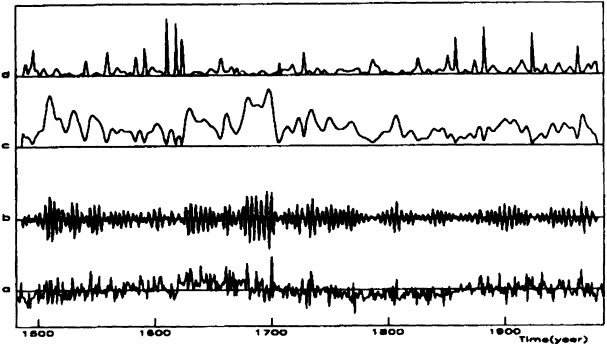

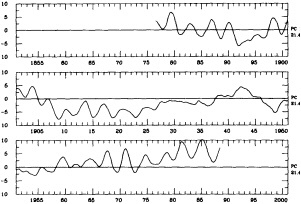

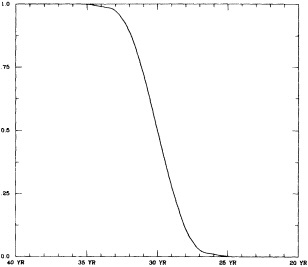

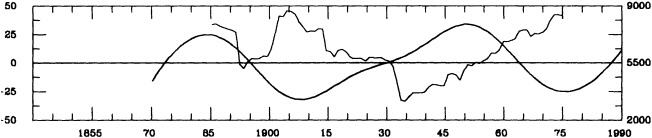

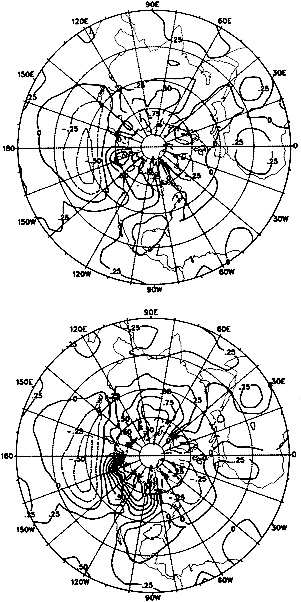

Example 3. A similar cautionary note is found in the studies of Weaver and Sarachik (1991a,b; hereafter WS). WS employed a stand-alone ocean-sector model to examine the variability in the thermohaline circulation (THC). They found that under steady mixed surface-boundary conditions (i.e., linear relaxation to a prescribed surface temperature, and a flux boundary condition on surface salinity) the meridional circulation was not steady; rather, three "climate states" were realized: a collapsed THC, a vigorous THC, and a stage with highly energetic decadal oscillations in the circulation. The decadal oscillations result from the interaction between the convection in the polar regions and the buoyancy advection in the subtropical and polar-gyre circulations (Figure 5). These oscillations are accompanied by up to a threefold change in the poleward transport of heat. Throughout the oscillation, the deep ocean essentially acts as a reservoir, and thus works to maintain the long-term mean thermocline. Eventually, the THC collapses, and the decadal oscillations cease. The WS studies clearly indicate the potential for rich and unexpected intermediate-scale variability that is internal to the ocean.
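Why steady mixed boundary conditions can support more than one circulation state is often explained with a much simpler conceptual model than the ocean-sector model used by WS: a Stommel-type two-box model. In one common nondimensional form (assumed here), temperature is held fixed by strong restoring while salinity is driven by a fixed freshwater forcing E, and the salinity contrast x (in density units, scaled by the temperature contrast) obeys dx/dt = E - |1 - x| x, with overturning strength q = 1 - x. For 0 < E < 1/4 there are two stable equilibria, a thermally driven state (q > 0) and a reversed, salinity-driven state (q < 0), separated by an unstable one. This toy model cannot produce the decadal oscillations WS found, which involve convection and gyre advection; it only illustrates the multiple-equilibrium aspect.

```python
import math


def tendency(x, e):
    """dx/dt for the nondimensional box model; x is the scaled salinity contrast."""
    return e - abs(1.0 - x) * x


def equilibria(e):
    """Steady states of dx/dt = e - |1 - x| x for e > 0, in increasing order."""
    roots = []
    if e < 0.25:
        s = math.sqrt(1.0 - 4.0 * e)
        roots += [(1.0 - s) / 2.0, (1.0 + s) / 2.0]  # thermal branch (q > 0)
    roots.append((1.0 + math.sqrt(1.0 + 4.0 * e)) / 2.0)  # haline branch (q < 0)
    return roots


def is_stable(x):
    """Linear stability: d(tendency)/dx < 0 (valid away from the kink at x = 1)."""
    slope = -1.0 + 2.0 * x if x < 1.0 else 1.0 - 2.0 * x
    return slope < 0.0


def integrate(x0, e, t_end=200.0, dt=0.01):
    """Forward-Euler time stepping from the initial salinity contrast x0."""
    x = x0
    for _ in range(int(round(t_end / dt))):
        x += dt * tendency(x, e)
    return x


if __name__ == "__main__":
    e = 0.2  # hypothetical freshwater forcing
    for x_eq in equilibria(e):
        print(f"x = {x_eq:.3f}, q = {1 - x_eq:+.3f}, stable = {is_stable(x_eq)}")
    # Different initial states settle into different stable equilibria.
    print(integrate(0.0, e), integrate(1.5, e))
```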

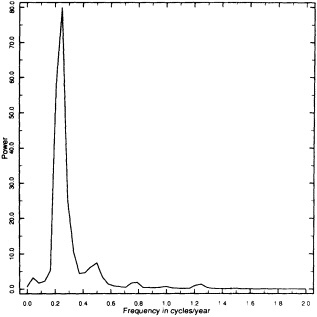

FIGURE 4

Spectrum of the mean pole-to-pole temperature difference from a 96-year integration of an atmospheric primitive-equation model. The only long-term forcing is a prescribed annual cycle in the radiative relaxation temperature; no energy exchange is allowed at the surface or the top of the atmosphere. Note the spectral peak at 12 years. (From James and James, 1989; reprinted with permission of Macmillan Magazines Ltd.)

These and additional results presented in WS also serve to illustrate the state of affairs in ocean climate modeling. Here, I will only point out that the stability of the climate states found in WS depended on the type of convective scheme used, the type of boundary condition set for fresh-water, whether synchronous or asynchronous time stepping was used, and the horizontal resolution.

Together, these three examples illustrate the importance of extensive and meticulous examination of all the uncoupled models that will be used to study the intermediate-time-scale variations in climate. Such an examination should document the following prior to constructing a full climate model for use in studying intermediate-scale climate variability: the ability of all models to simulate multiple observed phenomena, the sensitivity of the solutions to the parameterized physics, and the sensitivity of the component models to the chosen numerical methods of solution and to the shortcuts that are motivated by computational constraints (e.g., perpetual-January insolation, asynchronous time stepping).

Validating the Performance of the Coupled Models

The numerical models that will be used to study intermediate-time-scale variability in climate will necessarily allow interactions between the atmosphere and global oceans and include modules for the land-surface hydrology and cryosphere. The climate system models will be validated by comparing the simulated modes of variability with those that are indicated by a careful analysis and synthesis of the multiple, contemporaneous proxy indicators for the global climate state. Stream One of PAGES is a very ambitious program to reconstruct the global climate of the past 2000 years using the available proxy data sources collectively. The temporal resolution of this climate record is expected to be decadal or better, so the reconstruction will provide the modeling community with "target" phenomena. The data from this program, which is not yet under way, will not be available for quite some time. It is reasonable to assume, however, that the climate models least prone to yielding non-physical solutions on the intermediate time scales are the models that can accurately simulate many of the directly observed and well-documented higher-frequency phenomena in the climate system. Thus, a prerequisite for a model for intermediate-time-scale climate studies is accurate reproduction of many of the already well-observed target phenomena and features.

The same target phenomena that are appropriate for testing the stand-alone component models are also appropriate for testing the climate system model. For example, the system model should adequately simulate the observed diurnal cycle in the near-surface atmosphere over land. It also should accurately simulate the annual cycle in each medium, especially for the state variables adjacent to the media inter-

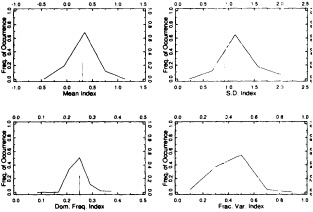

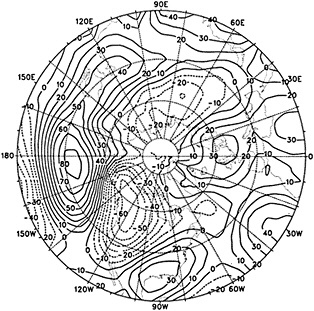

FIGURE 5

(a) The temporal variation in the kinetic energy density in an ocean-sector model, forced by "mixed" boundary conditions. The regular oscillations at 8.6 years are associated with cyclic changes in the meridional overturning circulation; the four characteristic stages are displayed in panels (b) through (e). Positive contours denote clockwise transport. (From Weaver and Sarachik, 1991b; reprinted with permission of the Canadian Meteorological and Oceanographic Society.)

faces and for the energy and mass exchange between the media. Wherever possible, both annual and diurnal cycles should be validated against those observed.

Important new constraints are available to the climate system model on the annual and especially the interannual time scales. Subtle discrepancies between the observed interfacial fields and fluxes and those simulated by the stand-alone atmosphere and ocean models are often amplified in the coupling process. This amplification leads to a coupled climate state that is qualitatively different from either of the states achieved by the two uncoupled models in isolation. These "setbacks," however, are fruitful because they often indicate a physical deficiency in a component model that, when corrected, yields a more accurate climate model. The unpublished studies by Gordon (1989), Latif et al. (1988), and Mechoso et al. (1991) provide excellent examples of how the coupling of atmosphere and ocean models can be used to identify the subtle but key processes involved in the maintenance of the present mean climate.
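A toy linear feedback model, assumed here only to make the amplification argument concrete, shows how a small uncoupled error can grow once the components are coupled. Suppose a stand-alone ocean model has an SST error b0, and in the coupled system the atmosphere turns each unit of SST error into an additional g units of SST error through its flux response. Repeated exchange gives b = b0 + g b, so the coupled error settles at b0/(1 - g) when |g| < 1. The gain values are hypothetical, and real coupled drift is neither linear nor spatially uniform.

```python
def coupled_bias(uncoupled_bias, gain, exchanges=200):
    """Iterate bias <- uncoupled_bias + gain * bias to mimic repeated coupling.

    Converges to uncoupled_bias / (1 - gain) when |gain| < 1.
    """
    if abs(gain) >= 1.0:
        raise ValueError("feedback gain must satisfy |gain| < 1 to converge")
    bias = uncoupled_bias
    for _ in range(exchanges):
        bias = uncoupled_bias + gain * bias
    return bias


if __name__ == "__main__":
    for gain in (0.2, 0.6, 0.9):
        print(f"gain {gain}: 0.5 K uncoupled error -> {coupled_bias(0.5, gain):.2f} K coupled")
```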

The observations also clearly demonstrate that certain coordinated atmosphere-ocean modes on the interannual time scale exist only because of the interaction between these media. The most robust example is ENSO, although there is increasing evidence for one or more western Northern Hemisphere coupled atmosphere-ocean modes. The absence of these unforced natural "modes" or phenomena in a climate system model can be helpful in isolating serious deficiencies in the model physics or in the formulae that govern the exchange of energy and mass between the media.

CONCLUSIONS AND DISCUSSION

Within the scientific community interest is growing in identifying and analyzing the variability in the full climate system on decade-to-century, or intermediate, time scales through modeling and observational studies. The resulting increased research activity can be attributed both to the advances in research on the interannual variability of the coupled atmosphere-ocean climate system and to the practical problems that have arisen in the detection of an anthropogenically forced climate change. The primary limitation on the study of climate variations on the intermediate time scale is that the instrumental records for many climate variables either do not exist or are too short to detect a phenomenon with any statistical measure of confidence. Proxy data for climate, therefore, will play an important role in documenting the past climate variability on the intermediate time scales and, most likely, in evaluating the simulated climate variability. This approach represents a significant change from the traditional modus operandi, wherein the models are used mainly to simulate phenomena that are already well observed. The goals and research activities supported by the ACCP illustrate these points.

Numerical models will be the primary tools by which the mechanisms responsible for decade-to-century-scale climate variability are identified and, most important, will frequently be the instruments scientists use to identify the intermediate climate phenomena. Thus, it is extremely important that the veracity of climate system models be evaluated a priori by assessing how accurately they simulate many well-documented phenomena. These "target" phenomena include the seasonal and diurnal cycles of those state variables that are well documented in nature and of the fluxes of energy and mass at the media interfaces. Also necessary is a quantitative comparison of the processes that are responsible for these cycles in the model with those that are observed. Other prerequisites for a climate system model should include its ability to simulate the robust natural interannual variability that is observed within the individual media (e.g., the QBO) and in the coupled atmosphere-ocean system.

Where are we now? At present, no climate system models include a satisfactory treatment of the cryosphere and land hydrology for the intermediate-scale climate problem. In fact, there are only two climate models that have the potential to quantitatively resolve the three-dimensional circulation of both the atmosphere and the global oceans: the Hamburg model (Cubasch et al., 1991, unpublished manuscript) and the GFDL model (Manabe et al., 1992). Both of these models require flux corrections to achieve a mean climatology that is qualitatively consistent with that observed.8 To date these and other models have been used primarily to simulate the climate of the most recent ice age and to estimate the potential for large climate changes due to an increase in the atmospheric CO2 concentration (IPCC, 1990; Delworth et al., 1995, in this chapter). These climate perturbations are extremely large in both amplitude and spatial scale by comparison with the climate variations that are anticipated—and have thus far been observed—on the intermediate time scale. In addition, the agents responsible for the glacial cycles are unlikely to be relevant to the intermediate climate problem. Thus, the success of the climate system models in reproducing geologic time-scale variations will be of little use in assessing the veracity of the intermediate-scale variability that is produced by the same model. For these and many other reasons it is unclear whether the decade-to-century-scale variability produced by any of the existing climate system models has any bearing on the variability in the true climate system.

The research activities over the last 10 years have led to incredible progress in the modeling of climate, especially on the geologic time scales. On the intermediate time scale, significant progress has also been made. Important recent results achieved using stand-alone models of the individual media and the GFDL complete climate-system model will provide a great deal of guidance in establishing the scientific priorities for the next decade. These priorities include improvements in the parameterization schemes for certain small-scale processes and the identification of key physics through extensive model-sensitivity experiments. The results of studies that address these and other central issues will be instrumental in the design of reliable models of decade-to-century-scale variability in the climate system.

ACKNOWLEDGMENTS

This work was supported by the National Science Foundation (Grant ATM 8822980) and the National Oceanic and Atmospheric Administration's Office of Global Programs and EPOCS. This is contribution number 217 to the Joint Institute for the Study of the Atmosphere and Oceans (JISAO).

Commentary on the Paper of Battisti

GERALD R. NORTH

Texas A&M University

Dr. Battisti's presentation made a broad sweep over many of the issues that we have talked about this week. He has thus given me the opportunity to touch on a few of these in relation to my own paper.

I tend to approach the question of long-time-scale change in an engineering fashion: I wonder whether we can tell from all the different records that we have whether there really is any anthropogenic forcing, and whether we are seeing any response to it. I recognize that there are many other reasons for studying these time scales, but this is certainly an important one. I find it particularly interesting that as we try to make some kind of statistical test of what we have seen in the last century, it is the frequencies just bordering on the decadal range from below for which we really need answers.

I should like to talk a little about two Hasselmann-type models, which could also be called default models. The first one is noise forcing on a mixed-layer slab model with geography; the other one is a deep-ocean extension of that, but still very crude: It distorts the spectrum somewhat at low frequencies. One of the things I noticed as we went through the week—Ed Sarachik and Bob Dickson have already mentioned it—is the failure of these models to generate intermediate water very well. That has a very important impact right in the frequency band (100 yr)⁻¹ to (10 yr)⁻¹. Also, one of the odd things about our deep-ocean model is that it cannot get information down to the thermocline area very well. The giant models adjust so quickly onto the ramp curve that I suspect they might be getting information down there too fast.
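A minimal sketch of the simplest such default model follows, under stated assumptions: a single point (no geography), a mixed layer of heat capacity C damped by a linear feedback λ, and white-noise forcing, so that C dT/dt = -λT + F(t). The temperature then behaves as red noise whose spectrum is flat below the corner frequency λ/(2πC) and falls off roughly as f⁻² above it. The function names and the nondimensional parameter values are hypothetical, chosen only for illustration, and are not taken from the models North describes.

```python
import numpy as np


def slab_spectrum(freq, heat_capacity, feedback, noise_level=1.0):
    """Lorentzian spectrum of T for C dT/dt = -lam*T + F with white-noise F.

    The overall normalization constant is omitted; only the shape matters here.
    """
    omega = 2.0 * np.pi * np.asarray(freq, dtype=float)
    return noise_level / (feedback**2 + (heat_capacity * omega) ** 2)


def simulate_slab(n_years, heat_capacity, feedback, noise_std, dt=1.0 / 12, seed=0):
    """Exact AR(1) discretization of the noise-forced slab (Ornstein-Uhlenbeck) model."""
    rng = np.random.default_rng(seed)
    rate = feedback / heat_capacity              # damping rate 1/tau, per year
    phi = np.exp(-rate * dt)                     # lag-one autocorrelation per step
    # Per-step shock size chosen so the stationary variance is noise_std**2 / (2 * lam * C).
    eps_std = (noise_std / heat_capacity) * np.sqrt((1.0 - phi**2) / (2.0 * rate))
    n_steps = int(round(n_years / dt))
    shocks = eps_std * rng.standard_normal(n_steps)
    temp = np.empty(n_steps)
    temp[0] = 0.0
    for k in range(1, n_steps):
        temp[k] = phi * temp[k - 1] + shocks[k]
    return temp


if __name__ == "__main__":
    C, lam = 4.0, 1.0                            # illustrative: e-folding time C/lam = 4 years
    f_corner = lam / (2.0 * np.pi * C)
    print(slab_spectrum([0.0, f_corner, 10 * f_corner], C, lam))  # approx. [1.0, 0.5, 0.0099]
    series = simulate_slab(5000, C, lam, noise_std=1.0)
    print(series[1200:].var(), 1.0 / (2.0 * lam * C))  # sample vs. theoretical variance
```

The exact AR(1) update is used instead of a simple Euler step so that the simulated variance matches the continuous model regardless of the time step.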

All this makes me wonder whether the kinds of things that Jim McWilliams brought up earlier might be fairly serious—for instance, when we construct our bottom topography out of stair steps. Going out in Reynolds number on that lovely bifurcation diagram that he showed is equivalent to going to higher and higher resolution. In our present models we have gone past only a couple of bifurcations, and it is hard to tell whether we are seeing fictitious oscillations in our model world that might go away if we were to go just a little bit further. I do not mean to criticize the people who are working on these models; I think they are doing what needs to be done. Consider, for instance, some of the things that Dr. Rooth and Dr. Barnett showed. I cannot help suspecting that if we were to look at the Mikolajewicz/Maier-Reimer model, we might see a peak at 300 years (recall the film loop that we viewed earlier this week), and in the Delworth et al. work we might see a peak in the 50-year range. These would, of course, have a large impact on our ability to pick out a signal or to infer how rare such an excursion might be in, say, the last century. Clearly, we have a lot to do before we can unravel these problems, so I again raise Dr. McWilliams's issue.
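One common way to represent an irregular, noise-driven oscillation of the kind North suspects is a second-order autoregressive (AR(2)) process, whose spectrum can carry a broad peak instead of the monotonic red-noise shape. The sketch below is a conceptual stand-in, not a description of any of the models mentioned: it assumes annual time steps and builds hypothetical AR(2) coefficients from a chosen damping r and nominal period P, then locates the resulting spectral peak on a frequency grid.

```python
import numpy as np


def ar2_coefficients(period, damping):
    """AR(2) coefficients whose characteristic roots are damping * exp(+/- 2*pi*i/period)."""
    if not 0.0 < damping < 1.0:
        raise ValueError("damping must lie in (0, 1) for a stationary process")
    a1 = 2.0 * damping * np.cos(2.0 * np.pi / period)
    a2 = -damping**2
    return a1, a2


def ar2_spectrum(freq, a1, a2, noise_var=1.0):
    """Spectral density of x_t = a1*x_{t-1} + a2*x_{t-2} + e_t (freq in cycles per step)."""
    z = np.exp(-2j * np.pi * np.asarray(freq, dtype=float))
    return noise_var / np.abs(1.0 - a1 * z - a2 * z**2) ** 2


def peak_period(a1, a2, n_freq=20000):
    """Period (in steps) of the largest spectral value between 1/1000 and 1/2 cycles per step."""
    freq = np.linspace(1.0 / 1000.0, 0.5, n_freq)
    return 1.0 / freq[np.argmax(ar2_spectrum(freq, a1, a2))]


if __name__ == "__main__":
    a1, a2 = ar2_coefficients(period=45.0, damping=0.95)  # hypothetical values
    print(peak_period(a1, a2))
```

For these assumed values the peak falls somewhat beyond the nominal 45-year period, because the damping shifts the spectral maximum; a weaker damping (r closer to 1) sharpens the peak and moves it toward P.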

I should also like to mention once more something that Dick Lindzen pointed out on the first day, since I think it is important but easy to miss. Up at the top of the ocean there is a "valve" that allows the radiation to go out and be absorbed. It amounts to an effective cooling coefficient, with all the feedbacks and everything that must go into it, and thus controls the spectrum and basically the sensitivity of the atmospheric model. If we have an otherwise good atmospheric model that does not do the clouds right, its sensitivity may be wrong by a factor of 2 or even 3, and that error will actually control the spectrum in the low-frequency range. It will have a rather important influence on fluctuations, even at the decadal level.
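The role of this "valve" can be written out for the single-point, noise-forced slab model sketched above. Assuming white-noise forcing with spectral density S_F, a layer of heat capacity C, and an effective cooling coefficient λ (so that the equilibrium sensitivity to a sustained forcing is 1/λ), the temperature spectrum is:

```latex
C\,\frac{dT}{dt} = -\lambda T + F(t)
\quad\Longrightarrow\quad
S_T(\omega) = \frac{S_F}{\lambda^{2} + C^{2}\omega^{2}},
\qquad
S_T(\omega) \approx
\begin{cases}
S_F/\lambda^{2}, & \omega \ll \lambda/C,\\[4pt]
S_F/(C^{2}\omega^{2}), & \omega \gg \lambda/C.
\end{cases}
```

At high frequencies the spectrum does not depend on λ at all, so an error in the cooling coefficient shows up almost entirely at low frequencies. If a model's sensitivity 1/λ is too large by a factor k, its low-frequency spectral density grows by k², so a sensitivity error of 2 or 3 becomes a factor of 4 to 9 in low-frequency variance density; the corner frequency λ/(2πC) also shifts by the factor 1/k.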

I hope that Dr. Battisti will open the discussion with some ideas about where we should be going in modeling, and particularly how fine the scales must be to get at some of the questions that we need to answer.

Discussion

BATTISTI: Explicitly examining the mechanisms and processes that yield variations in climate would take more computational power than I for one can fathom, even if we looked at only a subset of the system. I think what we should try for is determining the sensitivity of the simulated climate variability to the parameterization schemes for the unresolved physics. That sensitivity is likely to be a strong function of model resolution.

SARACHIK: You know, it seems to me that we should start concentrating on the signal rather than the noise. Some of the variability we've been talking about is undoubtedly scientifically interesting, but it's relatively local. It seems to be bounded by something on the order of one-third or one-half of a degree of temperature. My inclination is to look at longer time scales, something like 1000 years, and a signal big enough to be called climate change.

REIFSNYDER: I'd like to take philosophical issue with your statement that models are used to test hypotheses. I'd say that models are simply functional expressions of hypotheses, and any meaningful testing must involve independent data sets. Testing against data used in the specification of the model, or against general phenomena, won't tell you anything about predictive power.

BATTISTI: Well, that's true. The solution to a model is really the solution to a set of equations. But what worries me is the possibility that the results of complex, numerical GCMs will be used to build hypotheses of how model phenomena come about, without adequate attention to whether those phenomena appear in the observational or proxy databases. I'd rather see models used to test hypotheses about the mechanisms responsible for observed phenomena. That is, we should be testing our understanding of a phenomenon, not defining one.

LINDZEN: I think that our interest has traditionally been signal detection—greenhouse warming, for instance. That implicit search for something dramatic worries me. I think we should be equally concerned with the constraints on detectable phenomena provided by the data themselves.

LEVITUS: It seems to me essential that we understand decadal-scale variability better before we can go on to longer time scales or develop better models. First we have to be able to parameterize the processes better and to understand the system on shorter time scales.

WEAVER: The people who complain that this or that process hasn't been included in a model seem not to understand that you can't possibly do a systematic analysis by putting everything in at once.

RIND: Apropos of some comments by both Dave and Ed, I'd like to mention again that the NSF/NOAA ARRCC—Analysis of Rapid and Recent Climate Change—program is currently working on reconstructing the big climate changes over the past 1000 years, which includes two cold periods and one warm. We want to put together a worldwide picture of how the climate then compares with today's, and ultimately see whether models can reproduce it.

MARTINSON: Jerry, I just wanted to add that I'm glad you emphasized that it's almost impossible to evaluate the role of sea ice in long-term changes when a model has prescribed flux corrections, since in reality so much of that flux is driven by sea ice itself.

North Atlantic Interdecadal Variability in a Coupled Model1

THOMAS L. DELWORTH, SYUKURO MANABE, AND RONALD J. STOUFFER2

ABSTRACT

A fully coupled ocean-atmosphere model is shown to have irregular oscillations of the thermohaline circulation in the North Atlantic Ocean with a time scale of approximately 40 to 50 years. The fluctuations appear to be driven by density anomalies in the sinking region of the thermohaline circulation combined with much smaller density anomalies of opposite sign in the broad, rising region. Anomalies of sea surface temperature associated with this oscillation induce surface air temperature anomalies over the northern North Atlantic, the Arctic, and northwestern Europe. The spatial pattern of sea surface temperature anomalies bears an encouraging resemblance to a pattern of observed interdecadal variability in the North Atlantic.

INTRODUCTION

Substantial variability in the North Atlantic Ocean occurs on time scales of decades; this variability is thought to be associated with fluctuations in the large-scale meridional overturning (Gordon et al., 1992). This meridional overturning consists of cold, saline water sinking at high latitudes of the North Atlantic and flowing equatorward at depth, upwelling throughout the world oceans, and returning as a northward flow of warm, salty water in the upper layers of the Atlantic Ocean. This overturning largely determines the oceanic component of the northward transport of heat from the tropics to higher latitudes and is thus essential for the maintenance of climate in the North Atlantic region (Manabe and Stouffer, 1988). Fluctuations in the intensity of this overturning have the potential to affect climate (Bjerknes, 1964) in both the ocean and the atmosphere. Interdecadal fluctuations in this overturning are thus of potentially critical importance, not only for their direct impact on the climate of the North Atlantic and European regions but also because of the implications of such variability for the detection or modification of anthropogenic climate change. The presence of interdecadal variability in the coupled ocean-atmosphere system makes the detection of anthropogenic climate change more difficult.

The incompleteness of the observational record makes it difficult to study variations in this overturning and the associated processes solely on the basis of the observational data. As a complementary approach, the output from a 200-year integration of a coupled ocean-atmosphere model is used to study the stability and variability of this meridional overturning (hereafter referred to as the thermohaline circulation, or THC). The output from this model, developed at NOAA's Geophysical Fluid Dynamics Laboratory, forms the basis for the analyses presented here. Several previous modeling studies (Mikolajewicz and Maier-Reimer, 1990; Weaver and Sarachik, 1991b; Weaver et al., 1991; Winton and Sarachik, 1993) have used ocean-only models to study the variability and stability of the thermohaline circulation, in contrast to the fully coupled ocean-atmosphere model employed in the present investigation. Since the mechanisms governing the fluxes of heat and freshwater across the ocean surface differ substantially between the two types of models, the models might be expected to have different time scales for variations in the THC. While the quantitative results presented here will depend on the details of the model formulation and the physical parameterizations employed, it is hoped that the physical processes identified in this model study are robust and are responsible for interdecadal variability in the North Atlantic. The present study demonstrates that substantial variability can occur in the coupled ocean-atmosphere system on time scales of decades in the absence of external forcings such as changing concentrations of greenhouse gases.

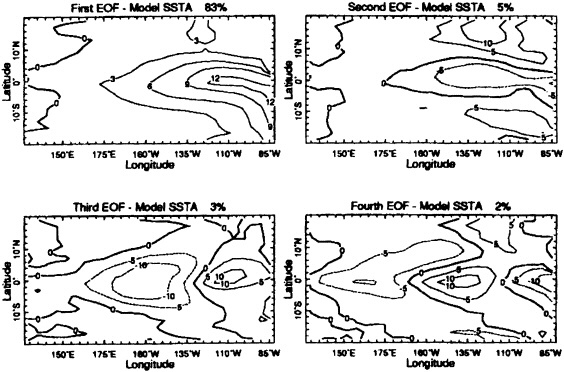

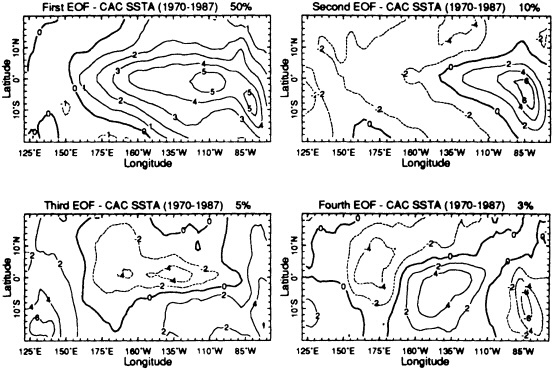

MODEL AND EXPERIMENTAL DESIGN