7

Human Factors and Human-Machine Interfaces

INTRODUCTION

New technology such as digital instrumentation and control (I&C) systems requires careful consideration of human factors and human-machine interface issues. New technologies succeed or fail based on a designer's ability to reduce incompatibilities between the characteristics of the system and the characteristics of the people who operate, maintain, and troubleshoot it (Casey, 1993). The importance of well-designed operator interfaces for reliable human performance and nuclear safety is widely acknowledged (IAEA, 1988; Moray and Huey, 1988; O'Hara, 1994). Safety depends, in part, on the extent to which the design reduces the chances of human error and enhances the chances of error recovery or safeguards against unrecovered human errors (Woods et al., 1994).

Experience in a wide variety of systems and applications suggests that the use of computer technology, computer-based interfaces, and operator aids raises important issues related to the way humans operate, troubleshoot, and maintain these systems (Casey, 1993; Sheridan, 1992; Woods et al., 1994). This experience applies to both retrofits (e.g., replacement of plant alarm annunciators) and the design of new systems (e.g., advanced plants).

Three recent studies highlight the importance of the "human factor" when incorporating computer technology in safety-critical systems. The first, a study conducted by a subcommittee of the Federal Aviation Administration (FAA, 1996), found interfaces between flight crews and modern flight deck systems to be critically important in achieving the Administration's zero-accident goal. The subcommittee noted, however, a wide range of shortcomings in designs, design processes, and certification processes for current and proposed systems. Two surveys categorizing failures in nuclear power plants that include digital subsystems (Lee, 1994; Ragheb, 1996) found that (a) human factors issues, including human-machine interface errors, constituted a "significant" category; and (b) whereas the trend in most categories was decreasing or flat over the 13-year study period, events attributable to inappropriate human actions "showed a marked increase." These two surveys are summarized in Chapter 4 of this document.

Two human-machine interaction issues frequently arise with the introduction of computer-based technology: (a) the need to address a class of design errors that persistently occur in a wide range of safety-critical applications or recur in successive designs for the same system; and (b) how to define the role and activities of the human operator with the same level of rigor and specificity as system hardware and software. Woods and his colleagues (1994) identify classic deficiencies in the design of computer-based technologies and show how these negatively impact human cognition and behavior. These include data overload, the keyhole effect, imbalances in the workload distribution among the human and computer-based team members, mode errors, and errors due to failures in increasingly coupled systems. A design sometimes manifests clumsy automation—that is, a design in which the benefits of the automation occur during light workload times and the burdens associated with automation occur at periods of peak workload or during safety- or time-critical operations (Wiener, 1989). Woods notes that design flaws result in computer systems that are strong and silent and, thus, not good team players (Sarter and Woods, 1995).

In many applications, the role and specific functions of the human operator are not rigorously specified in the design and are considered only after the hardware, software, and human interfaces have been specified (Mitchell, 1987, 1996). Human functions are then defined by default; the operator's role is to fill the gaps created by the limitations of hardware and software subsystems. Such design, or really the lack thereof, raises the question of whether the role and functions implicitly defined for the human operator(s) can in fact be performed effectively and reliably by humans. For example, are displays readable? Is information readily accessible? Is information presented at a sufficiently high level of aggregation/abstraction to support timely human decision making, or does information integration and extraction impose unacceptable workload on the human operator?

Human factors engineers and researchers are quick to note that these problems are design problems, not inherent deficiencies of the technology (Mitchell, 1987; Sheridan, 1992; Wiener, 1989; Woods, 1993). Skillful design that effectively uses emerging technology can make a system safer, more efficient, and easier to operate. If digital I&C systems are to be readily and successfully applied in nuclear power plants, however, the design and implementation must guard against common design errors and properly address the role of humans in operating and maintaining the system.

Emerging results from both the research and practitioner communities of human factors engineering provide a range of guidance, e.g., Space Station Freedom Human-Computer Interface Guidelines (NASA, 1988); Human Factors in the Design and Evaluation of Air Traffic Control Systems (Cardosi and Murphy, 1995); User Interface Guidelines for NASA Goddard Space Flight Center (NASA, 1996). The guidance is limited, however. Anthologies of guidelines primarily address low-level issues, e.g., design of knobs and dials, rather than higher-level cognitive issues that are increasingly important in computer-based applications, such as mode error or workload (Smith and Mosier, 1988). Other guidance is conceptual or formulated as features to avoid rather than characteristics that a design should embody. For example, Wiener's notion of clumsy automation suggests a way to check a design for potential problems (Wiener, 1989), whereas Billings' human-centered automation (Billings, 1991) is a timely concept that should permeate computer design. Neither concept, however, provides readily implementable design specifications. Finally, because the science and engineering basis of human factors for computer-based systems is so new, little guidance is generally applicable (Cardosi and Murphy, 1995; O'Hara, 1994). Most studies are developed and evaluated in the context of a particular application. Thus, as the nuclear industry increasingly uses digital technology, human interaction with new computer systems must be carefully designed and evaluated in the context of nuclear applications.

Statement of the Issue

At this time, there does not seem to be an agreed-upon, effective methodology for designers, owner-operators, maintainers, and regulators to assess the overall impact of computer-based, human-machine interfaces on human performance in nuclear power plants. What methodology and approach should be used to assure proper consideration of human factors and human-machine interfaces?

Control Rooms in Existing and Advanced Plants

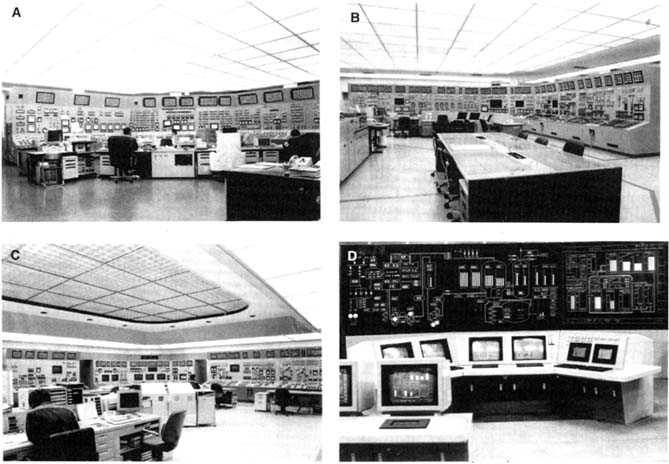

To acquire a context for the discussion that follows, consider the photographs of nuclear power plant control rooms in Figure 7-1, with plants ranging from the 1970s through the next generation plants of the late 1990s. These photographs show a typical progression of control rooms in nuclear power plants.

In early plants, controls and displays were predominantly analog and numbered in the thousands. In advanced plants, controls and displays are predominantly digital, with a control room that can be staffed, at least theoretically, by a single operator.

The photographs illustrate two important features associated with the introduction of digital systems in nuclear power plant control rooms. First is the need, in existing plants, to address the human factors issues of mixed-technology operations. That is, it is likely that, for the foreseeable future, control rooms in existing plants will combine both analog and digital displays and controls. Safety concerns and budget constraints ensure that for existing plants, digital technology will be introduced at a slow, cautious pace. This means, however, that good engineering practice evolved in analog systems is potentially compromised by the availability of digital systems. Likewise, the power and potential of digital controls and displays may be limited by the need to integrate them into a predominantly analog environment.

The second issue concerns the tremendous flexibility that digital technology offers to designers or redesigners of operator consoles and the control room as a whole. The flexibility and power of digital technology are both an asset and a challenge (Mitchell, 1996; Woods et al., 1994). Currently, the design of human-machine interaction lacks well-defined criteria to ensure that displays and controls adequately support operator requirements and ensure system safety. For example, there are no agreed-upon methods, other than subjective introspection, for measuring cognitive workload. Design guidance is predominantly offered at low levels, e.g., color, font size, ambiance (NASA, 1988; Smith and Mosier, 1988). Guidance for higher-level, cognitive issues such as ensuring that appropriate information is available, task allocation is balanced, and both operator skills and limitations are adequately addressed is either minimal, stated quite vaguely, or application-dependent.

CURRENT U.S. NUCLEAR REGULATORY COMMISSION REGULATORY POSITIONS AND PLANS

The regulatory basis for human factors and human-machine interaction in nuclear power plant control rooms is given in Title 10 CFR Part 50, Appendix A, General Design Criteria for Nuclear Power Plants (Criterion 19, Control Room), 10 CFR 50.34(f)(2)(iii), Additional TMI [Three Mile Island]-Related Requirements (on control room designs), and 10 CFR 52.47(a)(1)(ii), Contents of Applications (for standard design certification dealing with compliance with TMI requirements).

Historically, and for predominantly analog nuclear power plant control rooms, the U.S. Nuclear Regulatory Commission (USNRC) staff has used Chapter 18 (Human Factors Engineering) of the Standard Review Plan (USNRC, 1984) and NUREG-0700, Guidelines for Control Room Design Reviews (USNRC, 1981). Both of these documents provide guidance for detailed plant design reviews. For new plants, if the design is approved and a standard design certification issued, it is expected that the implementation will conform to the specifications certified in the design review. Few changes in the control room design are expected between initial design and implementation.

FIGURE 7-1 Evolution of Japanese nuclear power plant control rooms: (a) 1970s (Mihama-3 plant); (b) 1980s (Takahama-3 plant); (c) 1990s (Ohi-3 plant); (d) next generation plant. Source: Kansai Electric Power Co., Inc.

In a 1993 memorandum, the USNRC Office of Nuclear Reactor Regulation communicated its 15 research needs related to human factors, five of which concerned human performance and digital instrumentation and control: (a) effects of advanced control-display interfaces on crew workload, (b) guidance and acceptance criteria for advanced human-system interfaces, (c) effect of advanced technology on current control rooms and local control stations, (d) alarm reduction, and (e) prioritization techniques and staffing levels for advanced reactors.

In 1994, the USNRC issued NUREG-0711, Human Factors Engineering Program Review Model. Recognizing the almost continuous changes in emerging human-system interface technology, the staff acknowledged that much of the human-machine interface design for advanced plants cannot be completed before the design certification is issued. Thus, the staff concluded that it was necessary to perform a human factors engineering review of the design process, as well as the design product, in advanced reactors. NUREG-0711 (USNRC, 1994) defines a program review model for human factors engineering that includes guidance for the review of planning, preliminary analyses, and verification and validation methodologies. This model is intended to be applied to advanced reactors under Title 10 CFR Part 52.

In 1995, the USNRC issued NUREG-0700 Rev. 1, Human-System Interface Design Review Guideline, as a draft report for comment (USNRC, 1995). NUREG-0700 Rev. 1 is intended to update the review guidance provided in NUREG-0700. NUREG-0700 was developed in 1981, well before many computer-based human interface technologies were widely available, and thus the USNRC staff required guidance for USNRC reviews of advanced technologies incorporated into existing control rooms. NUREG-0700 Rev. 1 has two components: a methodology that the staff may use to review an applicant's human-machine interaction design plan and a set of detailed guidelines to review a specific implementation.

Existing Plants

As indicated above, the current guidance for incorporating advanced human-system interaction technologies in existing plants is provided by NUREG-0700 Rev. 1. It should be noted that this document is a draft report for comment. NUREG-0700 Rev. 1 is intended to complement NUREG-0800. It proposes both a methodology for reviewing the process of design of the human factors elements of control rooms and specific guidelines for evaluating a design product, i.e., a specific implementation.

New Plants

NUREG-0711 specifies a program review model for advanced plants. It has two parts: (a) a general model for the review of advanced power plant human factors, and (b) specific design guidance. The guidelines are implemented in computer form, in part to facilitate updating them as state-of-the-art knowledge, human factors practice, and human-computer interaction technology evolve.

DEVELOPMENTS IN THE U.S. NUCLEAR INDUSTRY

The U.S. nuclear industry makes some use of digital technology for nonsafety systems, e.g., feedwater control, alarms, displays, and many one-for-one replacements of meters, recorders, and displays. The indication is that, as with other process control industries and most other control systems, the U.S. nuclear industry would like to make more widespread use of digital technology in a variety of applications, including safety systems.

One perceived advantage of introducing digital technology is to enhance operator effectiveness. The committee frequently heard comments suggesting that one of the biggest advantages of the introduction of digital technology was to display more information to operators and to tailor displayed information to an operator's current needs. Digital I&C makes it much easier to integrate information along with advice in a very natural way, unlike the hard-wired independent displays of the analog age. (An example of this is the cross-plot of coolant pressure and temperature on a display with both historical and predictive abilities relative to the critical criterion, the line separating liquid from gaseous state. In earlier days the human operator had to look at separate displays of temperature and pressure and then go to a chart on the wall or a nomogram to determine whether things were in a critical state.) Despite the perceived benefits, the committee also heard comments suggesting that the U.S. nuclear industry was hesitant to attempt to incorporate additional computer technology into safety-related systems owing to licensing uncertainties.
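The pressure-temperature cross-plot described above reduces to a comparison between the measured coolant pressure and the saturation pressure at the measured temperature. The following is a minimal sketch of that comparison, not a description of any plant system. It assumes the Antoine approximation for water (coefficients commonly tabulated for roughly 99 to 374 °C) in place of the qualified steam tables an actual display would use, and the function names and sample values are hypothetical.

```python
"""Sketch: locate a coolant (temperature, pressure) point relative to the
saturation line, as an integrated digital display might."""

# Antoine coefficients for water, pressure in mmHg and temperature in deg C.
# ASSUMPTION: this approximation (roughly 99-374 deg C) stands in for the
# qualified steam tables a real plant system would use.
ANTOINE_A = 8.14019
ANTOINE_B = 1810.94
ANTOINE_C = 244.485
PA_PER_MMHG = 133.322


def saturation_pressure_mpa(temp_c: float) -> float:
    """Approximate saturation pressure of water (MPa) at temp_c (deg C)."""
    p_mmhg = 10.0 ** (ANTOINE_A - ANTOINE_B / (ANTOINE_C + temp_c))
    return p_mmhg * PA_PER_MMHG / 1.0e6


def pressure_margin_mpa(temp_c: float, pressure_mpa: float) -> float:
    """Positive when the coolant is on the liquid side of the saturation line."""
    return pressure_mpa - saturation_pressure_mpa(temp_c)


def is_subcooled(temp_c: float, pressure_mpa: float) -> bool:
    """True if the point lies above the saturation line (liquid region)."""
    return pressure_margin_mpa(temp_c, pressure_mpa) > 0.0


if __name__ == "__main__":
    # Illustrative values only, not plant data.
    for temp_c, pressure_mpa in [(300.0, 15.5), (300.0, 5.0)]:
        margin = pressure_margin_mpa(temp_c, pressure_mpa)
        state = "liquid" if is_subcooled(temp_c, pressure_mpa) else "at or past saturation"
        print(f"T={temp_c:.0f} C, P={pressure_mpa:.1f} MPa: margin {margin:+.2f} MPa ({state})")
```

Keeping the margin as a signed number, rather than only a liquid/not-liquid flag, is what would let a display plot history and project a trend toward the saturation line. That replaces the manual lookup on a wall chart or nomogram.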

DEVELOPMENTS IN THE FOREIGN NUCLEAR INDUSTRY

Foreign nuclear industries have made extensive use of digital technologies, and the control rooms of their nuclear power plants reflect extensive use of computer-based operator interfaces (White, 1994). It is important to note, however, that there are no emerging standards or sets of acceptance criteria that govern the design of human-machine interaction for such plants. For example, White (1994) notes two opposing trends in the definition of the operator's role in new advanced plant designs: in Japan and Germany, the trend is to use more automation, whereas in France the newest designs often use computer-based displays to guide plant operators.

The Halden Reactor Project of the Organization for Economic Cooperation and Development is an international effort to test and evaluate new control designs and technologies with the intent of understanding their impact on operator performance. The committee read several reports, and the USNRC research staff summarized recent studies conducted by the Halden project. The committee noted that this research is very important but at this point fairly exploratory, yielding few results that can be readily used in practical power plant applications.

DEVELOPMENTS IN OTHER SAFETY-CRITICAL INDUSTRIES

Fossil-fuel power generating plants, chemical processing, more general process control industries (e.g., textile, steel, paper), manufacturing, aerospace, aviation, and air traffic control systems all make extensive use of digital technology for operator displays, aids, and control automation. Implementation is often incremental, with improvements and refinements made gradually over the life of the design and implementation process. Some industries have developed their own industry-specific guidelines (see, e.g., Cardosi and Murphy, 1995; NASA, 1988, 1996), while others observe good human factors engineering practice.

It is important to note, however, that most industries, both nuclear and nonnuclear, strongly perceive a benefit to overall system safety and effectiveness from incorporating digital technology in complex safety-critical systems. The most striking example may be in aviation. Although there are many areas that require improvement, incorporation of digital technology in commercial aircraft is widely believed to