3

Monitoring Technologies: Research Priorities

INTRODUCTION

This chapter summarizes specific research issues confronting the major CTBT monitoring technologies and discusses strategies to enhance national monitoring capabilities through further basic and applied research. The technical challenges and operational issues differ among the technologies, but all of them share the basic functional requirements discussed in Section 2.2. Most of the research needs are discussed with respect to the monitoring challenges of detection, association, location, size estimation, and identification. The panel anticipates that new research challenges and issues will emerge as the IMS is deployed and there is experience with the analysis and use of its data. Presumably these needs will be motivated by "problem" events that defy present identification capabilities. Thus, although this report seeks to identify current areas of research priority, the panel emphasizes that a successful CTBT research program should maintain flexibility to shift emphasis and should nurture basic understanding in related areas that may provide unexpected solutions to future monitoring challenges.

3.1 SEISMOLOGY

Major Technical Issues

The CTBT seismic monitoring system will be challenged by the large number of small events that must be processed on a global basis to provide low-yield threshold monitoring of the underground and underwater environments. Although U.S. monitoring efforts will focus on certain areas of the world, those areas are still extensive. In many cases, the locations lack prior nuclear testing for direct calibration of identification; they require calibration efforts to improve location capability; and, for the most part, they have not been well instrumented seismologically. While historical arrival time observations are available from stations in many regions of the world, readily available waveform signals for determining local structures are less common. Implementation of well-established procedures for calibrating primary functions of the IMS seismic network (in conjunction with additional seismological NTM), such as event detection, association, and location, will provide a predictable level of monitoring capability.

In principle, the stated U.S. goal of high-confidence detection and identification of evasively conducted nuclear explosions of a few kilotons is achievable in limited areas of interest. In practice, doing so will require adequate numbers of appropriately located sensors, sufficient calibration of regional structures, and the development and validation of location and identification algorithms that use regional seismic waves. With the advent of the IMS and planned improvements in U.S. capabilities, many of the current data collection requirements for achieving the current national monitoring objectives will be met. However, additional research is certainly required to use the new data to meet these objectives. Given the current state of knowledge, a number of seismic events within the magnitude range of U.S. monitoring goals would not be distinguishable from nuclear explosions, even if the full IMS-NTM seismic network were in operation. Routine calibration methods will somewhat reduce the upper bound on this population of problem events in certain areas, but even then, research will be essential for significantly improving the overall capabilities of the system. The purpose of the research programs reviewed in this report is to improve monitoring capabilities to the level defined by U.S. monitoring goals.

There are several philosophies in the seismological community about how best to advance the capabilities of seismic monitoring systems, and there is extensive experience with global and regional monitoring of earthquakes and global monitoring of large nuclear explosions. Earthquake monitoring has emphasized collecting data from large numbers of stations, usually in the form of parametric data such as arrival times and amplitudes of seismic phases provided by station operators to a central processing facility. Several thousand global stations contribute data of this type to the production of bulletins and catalogs of the USGS/NEIS and the ISC (see NRC, 1995). Earthquake studies have prompted the development of many global and regional seismic velocity models for use in event location procedures. Many regional seismographic networks process short-period digital seismic waveforms for local earthquake bulletin preparation, and there has been some progress in the use of near-real-time digital seismic data for production of the USGS/NEIS bulletins. For these bulletins, the need for prompt publication is usually less than that associated with nuclear test monitoring. When there is a need for a rapid result, as in documenting the location of an earthquake disaster to assist in emergency planning, the USGS/NEIS can and does provide a preliminary location within a few minutes of the arrival of the seismic waves at distant stations. These earthquake-related activities will continue in parallel with CTBT monitoring.

In recent years, global and regional broadband networks deployed by universities and the USGS for studying and monitoring earthquakes have enabled entirely new analytical approaches, including systematic quantification of earthquake fault geometry and energy release based on analysis of waveforms. Of greatest relevance to CTBT monitoring are the quantitative approaches for event location and characterization being developed for analysis of seismic signals from small nearby earthquakes. When adequate crustal structures and seismic wave synthesis methods are available, it is possible to model complete broadband ground motions for regional events, enabling accurate source depth determination, event location and characterization, and development of waveform catalogs for efficient processing of future events (see Appendix D). The modeling may include inversion for the source moment tensor (note 19). Efforts of this type require complete understanding of the nature of all ground motions recorded by the monitoring network.

One of the core philosophical issues for seismic monitoring operations is whether it is better to use global and/or regional travel time curves, possibly with station or source region corrections, or to explicitly use models of the Earth's velocity structure and calculate the travel times and amplitudes for each source-station pair. The velocity models, which can include variable crustal and lithospheric structure, can be derived from the same data used in defining local travel time curves, but once they are determined they can also be used to model additional seismic signals that are not employed in standard event processing, such as free oscillations, surface waves, and multiple body-wave reflections. Velocity models are constantly improving on both global and regional scales and provide better approximations to the Earth with each model generation, with corresponding improvements in event location. Velocity models also have a key advantage completely lacking in travel time curves: they provide the basis for synthesizing the seismic motions expected for a specific path, as in the regional wave modeling mentioned above. The synthetic ground motions are useful for improving estimates of source depth, identifying blockage of certain phase types, and enhancing the identification of the source type. The use of travel time curves has been adequate for teleseismic monitoring of large events but may be too limited for the regional monitoring required for small events.
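As a minimal illustration of the model-based alternative, the sketch below predicts regional first-arrival times directly from an explicit velocity model rather than from a tabulated curve. It assumes a hypothetical single flat crustal layer over a uniform mantle, with source and receiver at the surface; the 35-km thickness and the 6.0 and 8.0 km/s velocities are illustrative values, not calibrated ones. Even this crude model predicts which phase (Pg or Pn) arrives first at a given distance, which bears directly on phase identification.

```python
import math

# Hypothetical one-layer crust over a uniform mantle (illustrative values only).
H_KM, V_CRUST, V_MANTLE = 35.0, 6.0, 8.0

def pg_time(dist_km, v_crust=V_CRUST):
    """Direct crustal P (Pg) travel time, surface source and receiver (s)."""
    return dist_km / v_crust

def pn_time(dist_km, h_km=H_KM, v_crust=V_CRUST, v_mantle=V_MANTLE):
    """Moho head-wave (Pn) travel time, surface source and receiver (s).
    Physically meaningful only beyond the critical distance."""
    intercept = 2.0 * h_km * math.sqrt(1.0 / v_crust**2 - 1.0 / v_mantle**2)
    return dist_km / v_mantle + intercept

def crossover_km(h_km=H_KM, v_crust=V_CRUST, v_mantle=V_MANTLE):
    """Distance beyond which Pn overtakes Pg as the first arrival (km)."""
    return 2.0 * h_km * math.sqrt((v_mantle + v_crust) / (v_mantle - v_crust))

for d in (100.0, 300.0):
    first = "Pn" if pn_time(d) < pg_time(d) else "Pg"
    print(f"{d:5.0f} km: Pg {pg_time(d):6.2f} s, Pn {pn_time(d):6.2f} s, first arrival {first}")
print(f"crossover distance: {crossover_km():.0f} km")
```

A real regional model would add layers, lateral variation, and source depth, which is where ray tracing or full waveform synthesis replaces these closed-form expressions.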

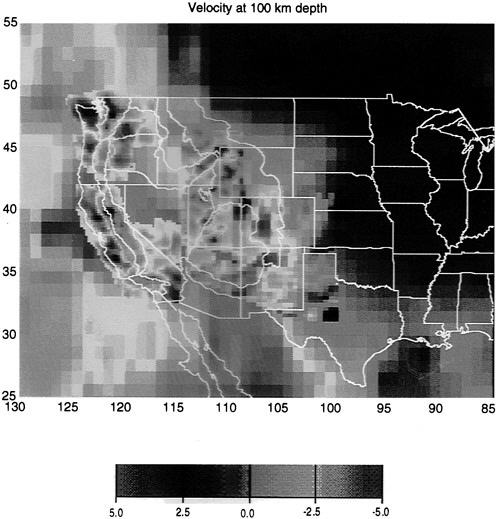

The nature of the Earth's velocity structure is such that heterogeneity exists on all scales (Figure 3.1), and at some level, interpolation of empirical travel time corrections as well as the intrinsic interpolation involved in aspherical model construction will fail to account for actual effects of Earth structure. For the teleseismic monitoring approach, in which the major phases of interest travel down into the mantle (or are relatively long-period surface waves that average over shallow structure), there is no obvious advantage to using travel time corrections rather than three-dimensional models in regions with many sources, other than perhaps the operational simplicity of the former. However, for regions with sparse source or station distributions, the velocity model can incorporate information from many independent wavetypes and paths and predict structural effects for other paths and wavetypes that cannot otherwise be calibrated directly. The confidence gained from correctly predicting the energy partitioning in the seismic signal on a given path by waveform modeling directly enhances source identification. The value of this approach is not controversial, but it is in tension with the magnitude of resources that must be invested to adequately determine the structure in extensive areas of the world.

When the entire field of seismology is considered, it is clear that the science is moving toward a three-dimensional parameterized model of Earth's material properties that will provide quite accurate predictions of seismic wave travel times for many applications (including earthquake monitoring and basic studies of Earth's composition and dynamics). One component of a long-term CTBT monitoring research program could involve commitment of resources toward the development of an improved global three-dimensional velocity model beginning with regions of interest, perhaps in partnership with the National Science Foundation (NSF; see Appendix C). Previous nuclear test monitoring research programs have supported development of reference Earth models. The operational system could be positioned for systematically updating the reference model used for locations by adopting a current three-dimensional model at this time, possibly including three-dimensional ray-tracing capabilities that will become essential as resolution of the model improves. This approach would provide a framework for including the somewhat more focused efforts of the CTBT research program, such as the pursuit of a detailed model of the crust and lithosphere in Eurasia and the Middle East, for event location and identification. Partnership with other agencies and organizations pursuing related efforts could lead to rapid progress on this goal. Another major coordinated effort could be the development of regional event bulletins complete down to a low magnitude level, such as 2.5 (achieving a global bulletin at this level would require significant enhancement of global seismic monitoring capabilities). This would require extensive coordination between the earthquake monitoring research and the CTBT monitoring communities, but it is technically viable and would provide a basis for CTBT monitoring with high confidence.
Related research issues are summarized below as specific functions of the monitoring system are considered.

Detection

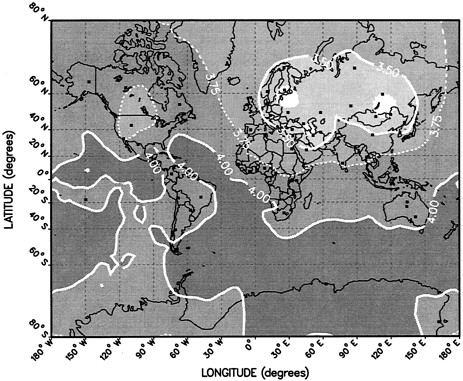

FIGURE 3.2 Detection threshold predicted for the fully deployed IMS Primary Network. The station locations are indicated as black squares on the map. Source: (Claassen, 1996)

Figure 3.2 shows the projected detection threshold of the 50-station IMS Primary Network when fully deployed (Claassen, 1996). This calculation is based on a criterion of three or more stations detecting P arrivals with a 99 per cent probability. Where available, actual station spectral noise statistics were used, but for stations that do not yet exist the noise levels were assumed to be those of low-noise stations. NTM will enhance the performance of the national monitoring system relative to these calculations in several areas of the world. This simulation, which will have to be validated by actual operations, suggests that IMS detection thresholds across Eurasia will be at or below magnitude 3.75, with some areas being as low as 3.25.
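The three-station detection criterion can be evaluated by combining per-station detection probabilities. The sketch below is only an illustration under stated assumptions: stations are treated as detecting independently; each station's probability of detecting P is modeled (hypothetically) as a normal cumulative distribution in magnitude around that station's 50 per cent threshold, with a spread of 0.2 magnitude units; and the station thresholds in the example are invented. The network threshold is then the smallest magnitude at which at least three detections occur with 99 per cent probability.

```python
import math

def station_prob(mag, threshold, sigma=0.2):
    """Assumed model: probability that one station detects P for magnitude `mag`,
    a normal CDF centered on the station's 50 per cent detection threshold."""
    return 0.5 * (1.0 + math.erf((mag - threshold) / (sigma * math.sqrt(2.0))))

def prob_at_least(probs, k):
    """P(at least k detections) for independent stations (Poisson-binomial)."""
    dist = [1.0]                      # dist[j] = probability of exactly j detections so far
    for p in probs:
        new = [0.0] * (len(dist) + 1)
        for j, q in enumerate(dist):
            new[j] += q * (1.0 - p)   # this station misses
            new[j + 1] += q * p       # this station detects
        dist = new
    return sum(dist[k:])

def network_threshold(thresholds, k=3, target=0.99, lo=0.0, hi=8.0):
    """Smallest magnitude where P(>= k detections) reaches `target`, by bisection
    (valid because the probability increases monotonically with magnitude)."""
    for _ in range(60):
        mid = 0.5 * (lo + hi)
        if prob_at_least([station_prob(mid, t) for t in thresholds], k) >= target:
            hi = mid
        else:
            lo = mid
    return hi

# Hypothetical 50 per cent thresholds of the stations that can see one source region
print(round(network_threshold([3.1, 3.3, 3.4, 3.6, 4.0]), 2))
```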

Given the small percentage (5–6 per cent) of detections ultimately associated with events in the first few years of the prototype-IDC REB, and the fact that 30 per cent of the detections in the final event list had to be added by analysts, there are clearly research issues related to improved seismic detection. Original goals for the IDC involved association of 10 and 30 per cent of detected phases from the Primary and Auxiliary stations, respectively. Many unassociated detections are actually signals from tiny local events that occur only at one station. It would not be useful to locate such small events in most regions. It may be possible to screen out such signals using the waveshape information provided by templates of past events recorded by each station. This could reduce false associations and unburden the overall algorithms for association. Three-component stations have a lower proportion of associated detections (4 per cent) than do arrays (6 per cent) in the prototype system, and further research on combining and adjusting automated detection parameters holds promise of improving the performance.
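One way to implement the template screening suggested above is normalized cross-correlation of each new single-station detection against waveforms of past local events recorded at that station. The sketch assumes pre-filtered, equally sampled traces; the function names and the 0.7 match threshold are hypothetical and would need tuning station by station.

```python
import numpy as np

def max_normalized_cc(template, trace):
    """Maximum normalized cross-correlation (Pearson r) of `template` slid along
    `trace`, and the sample offset at which it occurs."""
    t = np.asarray(template, float)
    x = np.asarray(trace, float)
    n = len(t)
    t = (t - t.mean()) / (t.std() * n)      # so dot(t, z-scored window) is Pearson r
    best, best_lag = -1.0, 0
    for lag in range(len(x) - n + 1):
        w = x[lag:lag + n]
        s = w.std()
        if s == 0.0:                        # skip dead (constant) segments
            continue
        cc = float(np.dot(t, (w - w.mean()) / s))
        if cc > best:
            best, best_lag = cc, lag
    return best, best_lag

def matches_known_local_source(templates, detection_window, threshold=0.7):
    """Flag a detection as a repeat of a known local source (hypothetical threshold)."""
    return any(max_normalized_cc(tpl, detection_window)[0] >= threshold for tpl in templates)
```

Detections flagged this way could be withheld from network association, which is the unburdening effect described in the text.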

The significant number of detections added or revised by analysts suggests room for improving detection algorithms that run automatically.

Research on enhancing the signal-to-noise ratio and improving the onset time determination has particular value. Exploration of simultaneous use of multiple detectors at a given station may result in approaches to reduce spurious detections and improve onset determinations. Practice at the prototype IDC has found that 76 per cent of the automatic detections have an onset time within 1.0 second of that picked by an analyst, whereas the goal of the IDC is 90 per cent.
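For reference, the sketch below pairs a conventional short-term-average/long-term-average (STA/LTA) energy trigger with an Akaike information criterion (AIC) onset picker, one common way to refine the onset time once a trigger has been declared. Window lengths, the trigger level of 4, and the synthetic signal are illustrative assumptions, not prototype-IDC settings.

```python
import numpy as np

def sta_lta(x, n_sta, n_lta):
    """Ratio of short-term to long-term average signal energy.
    Both windows are trailing: ratio[j] uses samples ending at index j."""
    e = np.asarray(x, float) ** 2
    c = np.concatenate(([0.0], np.cumsum(e)))   # c[i] = energy of samples 0..i-1
    ratio = np.zeros(len(e))
    i = np.arange(n_lta, len(e) + 1)             # window ends (exclusive) with a full LTA
    sta = (c[i] - c[i - n_sta]) / n_sta
    lta = (c[i] - c[i - n_lta]) / n_lta
    ratio[i - 1] = sta / np.maximum(lta, 1e-20)
    return ratio

def first_trigger(ratio, on=4.0):
    """Index of the first sample whose STA/LTA ratio reaches the trigger level."""
    idx = np.flatnonzero(ratio >= on)
    return int(idx[0]) if idx.size else None

def aic_onset(x):
    """AIC picker: split index k minimizing k*log(var(x[:k])) + (N-k-1)*log(var(x[k:]))."""
    x = np.asarray(x, float)
    n = len(x)
    best_k, best_aic = None, np.inf
    for k in range(2, n - 2):
        v1, v2 = x[:k].var(), x[k:].var()
        if v1 <= 0.0 or v2 <= 0.0:
            continue
        aic = k * np.log(v1) + (n - k - 1) * np.log(v2)
        if aic < best_aic:
            best_k, best_aic = k, aic
    return best_k

# Synthetic example: low-level noise with a signal starting at sample 1000.
rng = np.random.default_rng(0)
x = 0.1 * rng.standard_normal(2000)
x[1000:1200] += 2.0 * np.sin(2 * np.pi * 0.05 * np.arange(200))
trig = first_trigger(sta_lta(x, n_sta=20, n_lta=200))
start = trig - 300                      # refine the onset in a window around the trigger
onset = start + aic_onset(x[start:trig + 100])
print("trigger sample:", trig, "AIC onset sample:", onset)
```

The trigger fires a few samples after the true onset because the short window must fill with signal energy; the AIC step pulls the pick back toward the variance change, which is the kind of onset refinement the text calls for.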

A key detection issue is improving how overlapping signals are handled. This includes the problems of both multiple events and multiple arrivals from events. Evasion scenarios that involve masking an explosion in an earthquake or quarry blast require detection of superimposed signals. Time series analysis procedures for separating closely spaced overlapping signals with slightly different frequency content potentially can improve detection in such cases.

Perhaps the greatest room for research progress on detections involves phase identification. Only 7 per cent of teleseismic phases at Primary Network arrays were mis-identified as regional phases by the prototype IDC in December 1995, but 32 per cent of teleseismic phases from three-component stations were mis-identified. The complementary numbers of mis-identified regional phases were 8 per cent for arrays and 48 per cent (P waves) and 27 per cent (S phases) for three-component stations. Improved polarization analysis for three-component stations is needed. There is also a need for improved slowness and azimuth determinations. In the prototype system, azimuth and slowness measurements currently make up 9 per cent and 5 per cent, respectively, of the total defining parameters used in the REB, and the introduction of methods to improve these parameters will enhance both association and location procedures significantly.
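Polarization analysis of a three-component P window is commonly done by eigen-decomposition of the 3×3 covariance matrix of the north, east, and vertical traces. The sketch below returns a rectilinearity measure (near 1 for linearly polarized body waves), a back azimuth, and an apparent incidence angle. It assumes the vertical component is positive upward and that the window contains a single upgoing P wave, and it ignores free-surface distortion of the incidence angle; it is an illustration, not the procedure used at the prototype IDC.

```python
import numpy as np

def polarization(n, e, z):
    """Covariance-matrix polarization analysis of one three-component P window
    (Z positive up). Returns (rectilinearity, back_azimuth_deg, incidence_deg)."""
    cov = np.cov(np.vstack([n, e, z]).astype(float))   # rows: N, E, Z
    vals, vecs = np.linalg.eigh(cov)                     # eigenvalues in ascending order
    l3, l2, l1 = vals
    v = vecs[:, 2]                                       # principal axis (N, E, Z)
    # Eigenvector sign is arbitrary. For an upgoing P wave the motion lies along the ray,
    # so orienting the axis upward gives the propagation direction (away from the
    # source); the back azimuth is the opposite horizontal direction.
    if v[2] < 0.0:
        v = -v
    rectilinearity = 1.0 - (l2 + l3) / (2.0 * l1)
    back_azimuth = np.degrees(np.arctan2(-v[1], -v[0])) % 360.0
    incidence = np.degrees(np.arccos(np.clip(v[2], -1.0, 1.0)))  # from vertical
    return rectilinearity, back_azimuth, incidence

# Synthetic P wave from back azimuth 60 degrees, incidence 30 degrees, with weak noise.
rng = np.random.default_rng(2)
s = rng.standard_normal(400)
baz0, inc0 = np.radians(60.0), np.radians(30.0)
n = -np.cos(baz0) * np.sin(inc0) * s + 0.01 * rng.standard_normal(400)
e = -np.sin(baz0) * np.sin(inc0) * s + 0.01 * rng.standard_normal(400)
z = np.cos(inc0) * s + 0.01 * rng.standard_normal(400)
rect, baz, inc = polarization(n, e, z)
print(f"rectilinearity {rect:.3f}, back azimuth {baz:.1f}, incidence {inc:.1f}")
```

Low rectilinearity, or a back azimuth inconsistent with the apparent slowness, is the sort of evidence that helps separate teleseismic P from regional phases at a single station.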

There is relatively little experience with detectors for T-phases observed at island seismic stations, and effective algorithms must be developed for these noisy environments. Another area of research is the identification of seismo-acoustic detections generated by propagating air waves. Although these signals are produced by acoustic waves in the atmosphere, they are often called "lonesome" Lg waves because they appear as an Lg phase long after the first arrival. Consequently, they can be misinterpreted as seismic signals and wrongly associated with arrivals recorded at other seismic stations. Analysis of colocated seismic and infrasonic sensors holds promise for solving this problem.

Association

Association is an area in which recent basic research has enhanced CTBT monitoring capabilities, as newly developed Generalized Association (GA) algorithms (see discussion of association in Chapter 2) are being incorporated into routine operations of the U.S. NDC (and the prototype IDC). Active research is under way on incorporating additional information into GA methods, and several approaches have been explored for using relative phase amplitude information and other arrival properties. Use of complete waveforms is also promising, and such methods may find particular value for complex overlapping sequences of aftershocks or quarry blasts. Given the archive of waveform data for older events that will accumulate at the U.S. NDC, innovative use of previous signals as templates and master events should be explored. This is a new arena of monitoring operations, and only limited research has been conducted on such approaches. It is likely that the number of unassociated detections can be reduced by preliminary waveshape screening to recognize local events detected at only one sensor and to remove their signals from further association processing. Incorporation of regional propagation information into the GA, such as blockage patterns for a given candidate source region, using a computer knowledge base should enhance association methods, but this strategy requires further development.
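To make the idea of grid-based association concrete, the sketch below back-projects each pick to every node of a source grid, converts it to an implied origin time, and keeps the node where the most picks agree within a tolerance. It is a deliberately simplified stand-in for Generalized Association, not the GA algorithm itself: it assumes a hypothetical uniform 8 km/s velocity, a flat two-dimensional geometry, and P phases only, and it does not enforce the rule that a station contributes at most one arrival per phase.

```python
import math
from itertools import product

V_P = 8.0  # hypothetical uniform P velocity, km/s

def travel_time(src, sta):
    return math.dist(src, sta) / V_P

def grid_associate(picks, stations, grid, tol=1.0):
    """picks: list of (station_name, arrival_time_s); stations: name -> (x_km, y_km).
    Returns (count, node, origin_time, pick_indices) for the node whose implied origin
    times gather the most picks within `tol` seconds (ties: tightest cluster)."""
    best_key, best = (0, -math.inf), (0, None, None, [])
    for node in grid:
        # origin time implied by each pick if it came from this node
        origins = sorted((t - travel_time(node, stations[s]), i)
                         for i, (s, t) in enumerate(picks))
        j = 0
        for k in range(len(origins)):
            while origins[k][0] - origins[j][0] > tol:   # slide window of width tol
                j += 1
            window = origins[j:k + 1]
            key = (len(window), -(window[-1][0] - window[0][0]))
            if key > best_key:
                t0 = sum(o for o, _ in window) / len(window)
                best_key, best = key, (len(window), node, t0, sorted(i for _, i in window))
    return best

# Synthetic case: four real P picks from (200, 300) km at origin time 10 s, plus one spurious pick.
stations = {"A": (0.0, 0.0), "B": (500.0, 0.0), "C": (0.0, 500.0), "D": (500.0, 500.0)}
picks = [(s, 10.0 + travel_time((200.0, 300.0), xy)) for s, xy in stations.items()]
picks.append(("A", 12.0))
grid = [(float(x), float(y)) for x, y in product(range(0, 501, 50), repeat=2)]
print(grid_associate(picks, stations, grid))
```

Adding amplitude, azimuth, slowness, or blockage information, as the text suggests, would amount to extra consistency tests inside the inner loop.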

Location

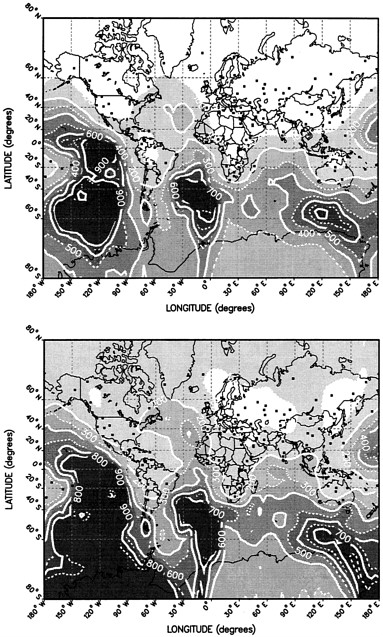

FIGURE 3.3 Estimates of location precision for the IMS Primary and Auxiliary networks for (a) magnitude 4.25 events and (b) events at the detection threshold in Figure 3.2. Neither plot includes an appraisal of uncertainty due to systematic error. The seismic stations are indicated as black squares on the map. Source: (Claassen, 1996)

Seismic event location is a key area for further research efforts because accurate locations are essential for event identification and On-Site Inspections. Formal procedures exist for assessing the precision in event location (see Appendix C), but these typically do not account for possible systematic error and hence may overestimate event location accuracy. Figure 3.3 presents a calculation of the expected distribution of the precision of seismic event locations for the fully deployed Primary and Auxiliary networks of the IMS (Claassen, 1996), both for events of magnitude 4.25 and for events at the detection threshold calculated for the Primary Network (Figure 3.2). As in the detection-level calculations, actual station noise levels were used when available, low noise levels were assumed for future Primary Network stations, and low noise levels raised by 10 dB were used for future Auxiliary Network stations. Location precision is stated as the area, in square kilometers, within which the event lies with 90 per cent confidence. Only P-wave arrival times and azimuths were used, and no extensive calibration was assumed. Location precision better than 1000 km² can be attained in most regions of the world for events at and above the detection level, but these results can be misleading because they do not allow for biases due to systematic error, which can be substantial. For example, during assessment of the first 20 months of the REB, comparison by various countries of the locations produced by their own denser national networks with those in the REB showed that the REB 90 per cent confidence ellipses contained the national network location (which is presumably more accurate) less than half of the time. It has been estimated that the location precision for events in the REB can be improved by a factor of 6 after calibration of the network.
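The area figures above follow from the standard formal error ellipse. If epicentral errors are Gaussian and unbiased with a 2×2 covariance matrix C (in km²), the 90 per cent ellipse has area π·χ²₂(0.90)·√(det C), where χ²₂(0.90) = -2 ln 0.1 ≈ 4.61. The sketch below computes that area and the fraction of events whose ellipse contains an independent reference location, the kind of coverage test described for the REB. Function names are illustrative; because systematic error is not modeled, a coverage well below 90 per cent is the signature of bias.

```python
import math
import numpy as np

# 90 per cent quantile of chi-square with 2 degrees of freedom: -2 ln(0.1), about 4.605
CHI2_2DOF_90 = -2.0 * math.log(0.10)

def ellipse_area_km2(cov):
    """Area (km^2) of the 90 per cent epicentral confidence ellipse for a 2x2
    covariance matrix in km^2. Assumes Gaussian, unbiased errors."""
    return math.pi * CHI2_2DOF_90 * math.sqrt(np.linalg.det(np.asarray(cov, float)))

def inside_ellipse(point, center, cov):
    """True if `point` falls inside the 90 per cent ellipse centered on `center`."""
    d = np.asarray(point, float) - np.asarray(center, float)
    return float(d @ np.linalg.solve(np.asarray(cov, float), d)) <= CHI2_2DOF_90

def coverage(cases):
    """Fraction of (reference_location, estimated_location, cov) cases in which the
    ellipse around the estimate contains the independent reference location."""
    return sum(inside_ellipse(ref, est, cov) for ref, est, cov in cases) / len(cases)

# Standard errors of 5 km and 4 km along the two axes
print(round(ellipse_area_km2([[25.0, 0.0], [0.0, 16.0]]), 1))
```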

Two main research strategies exist for reducing systematic error in event locations: (1) the development of regionalized travel times with reliable path calibrations for events of known location, and (2) the development of improved three-dimensional velocity models that give less biased locations. (Appendix C discusses this issue at length.) A catalog of calibration events can advance the latter strategy as well, given the difficulty of eliminating trade-offs between locations and heterogeneity in developing three-dimensional models. From the CTBT operational perspective it is not clear that even the next generation of three-dimensional models (or regionalized travel time curves) will account for the Earth's heterogeneity sufficiently to eliminate systematic location errors for either teleseismic or regional observations. Thus, even if improved velocity models are used, it is desirable to calibrate station and source corrections to account for unmodeled effects.
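The effect of station corrections on systematic location error can be illustrated with a linearized least-squares (Geiger-style) locator. The sketch assumes a hypothetical uniform 8 km/s medium in a flat two-dimensional geometry; station delays (for example, derived from ground-truth calibration events) are simply subtracted from the observed arrival times before inversion. In the synthetic example, unmodeled delays bias the epicenter, and removing them recovers the true location.

```python
import numpy as np

V = 8.0  # hypothetical uniform velocity, km/s

def locate(stations, arrivals, start, corrections=None, n_iter=50):
    """Geiger-style (iterative linearized least-squares) epicenter and origin time.
    `corrections` are station delays (s) subtracted from the observed arrival times."""
    sta = np.asarray(stations, float)
    t = np.asarray(arrivals, float)
    if corrections is not None:
        t = t - np.asarray(corrections, float)
    xy = np.asarray(start, float).copy()
    t0 = np.mean(t - np.linalg.norm(sta - xy, axis=1) / V)
    for _ in range(n_iter):
        diff = xy - sta
        d = np.linalg.norm(diff, axis=1)
        resid = t - (t0 + d / V)
        # partial derivatives of predicted arrival time w.r.t. x, y and origin time
        G = np.column_stack([diff / (d[:, None] * V), np.ones(len(d))])
        step, *_ = np.linalg.lstsq(G, resid, rcond=None)
        xy += step[:2]
        t0 += step[2]
        if np.linalg.norm(step) < 1e-10:
            break
    return xy, t0

stations = [(0.0, 0.0), (300.0, 0.0), (0.0, 300.0), (300.0, 300.0), (150.0, -200.0)]
true_xy, true_t0 = np.array([120.0, 80.0]), 5.0
delays = np.array([1.0, -1.0, 0.0, 0.0, 0.0])   # hypothetical unmodeled station delays (s)
obs = true_t0 + np.linalg.norm(np.asarray(stations) - true_xy, axis=1) / V + delays
for label, corr in (("uncorrected", None), ("corrected", delays)):
    xy, t0 = locate(stations, obs, start=(150.0, 150.0), corrections=corr)
    print(label, "epicenter error (km):", round(float(np.linalg.norm(xy - true_xy)), 3))
```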

Empirical calibration of location capabilities for a seismic network can proceed on various scales, ranging from use of a few key calibration events such as past nuclear explosions with well-known locations, to more ambitious undertakings such as development of large catalogs of events including well-located earthquakes. Some efforts along these lines are being pursued in the DOE CTBT research program. The challenge is to obtain sufficient numbers of ground truth events with well-constrained parameters. Appendix C considers a systems approach to this problem, motivated by the need for calibration of extensive regions. Quarry blasts, earthquake ruptures that visibly break the surface, aftershocks with accurate locations determined from local deployments of portable arrays, and events located by dense local seismic networks can be used for this purpose because such event locations are in some cases accurate to within 1 to 2 km. There are large uncertainties in how to interpolate calibration information from discrete source-receiver combinations, and research on the statistical nature of heterogeneity may provide guidance in this process. Such uncertainty also serves as motivation for further development of velocity models because the many data sources that can be incorporated into such models can resolve heterogeneities that are not calibrated directly by ground truth events.

A major basic and applied research effort of great importance for event location capabilities involves developing regionalized travel time curves and velocity models, particularly for the crustal phases that will be detected for small events. These regionalized models must be merged with global structures to permit simultaneous locations with regional and teleseismic signals. Determining regionalized travel time curves usually involves a combination of empirical measurements and modeling efforts, with the latter being important to ensure appropriate identification of regional phases (variations in crustal thickness and source radiation can lead to confusion about which phase is being measured). Historical databases may be valuable for determining regional travel time curves in areas where new stations are being deployed. A demonstrated research tool that assists in this effort involves systematic modeling of regional broadband seismograms for earthquakes and quarry blasts. Confidence in the adequacy of a given regional velocity structure or the associated empirical travel time curve is greatly enhanced if computer simulations demonstrate that the structural model actually accounts for the timing and relative amplitude of phases in the seismogram. By systematic modeling of broadband waveforms from larger earthquakes (for which the source can be determined by analysis of signals from multiple stations), regional Earth structures with good predictive capabilities can be determined. It is then possible either to use the velocity model to predict the times of regional phases or to use travel time curves for which the model has validated the identification of phases. This is of particular value in regions where the crustal heterogeneity (e.g., near continental margins and in mountain belts) causes the energy partitioning to change among phases; for example, some crustal paths do not allow the Lg phase to propagate, and anomalously large Sn phases may be observed instead.

Waveform modeling approaches can play a major role in determining the local velocity structures required to interpret regional phases for both event location and event identification, so there is value in further development of seismological modeling techniques that can compute synthetic ground motions for complex models. Nuclear test monitoring research programs have long supported basic development of seismic modeling capabilities because they underlie the quantification of most seismic monitoring methods. Present challenges include modeling of regional distance (to 1000 km) high-frequency crustal phases (up to 10 Hz and higher) for paths in two- and three-dimensional models of the crust. Such modeling must correctly include surface and internal boundaries that are rough, as well as both large- and small-scale volumetric heterogeneities. Current capabilities are limited, and in only a handful of situations have regional waveform complexities been quantified adequately by synthetics. New modeling approaches and faster computer technologies will be required to achieve the level of seismogram quantification for shorter-period regional seismic waves that now exists for global observations of longer-period seismic waves. In parallel with the development of new modeling capabilities is a need for improved strategies for determining characteristics of the crust based on sparse regional observations, so that realistic velocity models can be developed rather than ad hoc structures.

Other promising areas for research include methods of using complete waveform information to locate events and improved use of long-period energy. Correlations of waveforms can provide accurate relative locations for similar sources such as mining explosions. The basic idea is that rather than relying on only the relative arrival times of direct P, one can use relative arrival times of all phases in the seismogram to constrain the relative location. The potential for regional event location based on such approaches is not yet fully established, but preliminary work with waveforms from mining areas is promising. There is also potential for improving event locations using surface waves because there have been significant advances in the global maps of phase velocity heterogeneity affecting these phases. Because surface waves with periods of 5–20 seconds are valuable for estimation of source size and event identification, signal processing procedures that accurately time these arrivals (notably using phase-match filters that enhance signal-to-noise by correcting for the systematic dispersive nature of surface waves) have to be developed and will provide information useful for locating events. For applications to small events, existing phase velocity models must be improved and extended to shorter periods.
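Relative timing by waveform correlation can be sketched as follows: the delay between two recordings of similar sources is taken from the peak of their cross-correlation, refined to a fraction of a sample by fitting a parabola through the three samples at the peak. The resulting differential times are what a relative-location scheme would consume (not shown). The sketch assumes both traces share a sampling rate and are cut with comparable windows around the same phase at one station.

```python
import numpy as np

def relative_delay(reference, signal):
    """Delay (samples) of `signal` relative to `reference`, from the peak of their
    cross-correlation refined by a three-point parabolic fit."""
    ref = np.asarray(reference, float) - np.mean(reference)
    sig = np.asarray(signal, float) - np.mean(signal)
    cc = np.correlate(sig, ref, mode="full")   # cc[k + len(ref) - 1] = sum_n sig[n + k] * ref[n]
    k = int(np.argmax(cc))
    frac = 0.0
    if 0 < k < len(cc) - 1:
        y0, y1, y2 = cc[k - 1], cc[k], cc[k + 1]
        denom = y0 - 2.0 * y1 + y2
        if denom != 0.0:
            frac = 0.5 * (y0 - y2) / denom      # vertex of the fitted parabola
    return k - (len(ref) - 1) + frac

# Two Gaussian pulses, the second delayed by 3.4 samples
n = np.arange(256)
ref = np.exp(-((n - 100.0) ** 2) / 50.0)
sig = np.exp(-((n - 103.4) ** 2) / 50.0)
print(round(relative_delay(ref, sig), 2))
```

Using the whole waveform window rather than a single picked onset is what lets later phases contribute to the relative timing.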

A particularly important aspect of event location requiring further research is determination of depth for small, regionally recorded events. This parameter is of great value for identifying the source but is one of the most challenging problems for regional event monitoring. Based on experience with earthquake monitoring in densely instrumented regions of the world, accurate depth determination is not typically achievable using direct body wave arrival times alone, and more complete waveform information must be used. The complex set of reverberations that exist at regional distances makes identification of discrete seismic "depth phases" difficult, but complete waveform modeling, as well as cepstral methods (involving spectral analysis of the logarithm of the signal's spectrum) applied to entire sets of body wave arrivals, hold potential for identifying such phases. Research advances in this area potentially can eliminate many events from further analysis in the CTBT monitoring system.
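The cepstral idea can be sketched for the simplest case: a record made of a signal plus one delayed, scaled copy (as for P followed by pP) has a log amplitude spectrum with a periodic ripple, which appears as a peak in the real cepstrum at the delay. The sketch assumes a white-noise stand-in for the source pulse, a circular delay, and a single echo of either polarity; converting the delay to depth would additionally require a velocity model and take-off angle, which are not included.

```python
import numpy as np

def real_cepstrum(x):
    """Real cepstrum: inverse FFT of the logarithm of the amplitude spectrum."""
    amplitude = np.abs(np.fft.fft(np.asarray(x, float)))
    return np.fft.ifft(np.log(np.maximum(amplitude, 1e-12))).real

def echo_delay(x, min_lag, max_lag):
    """Lag (samples) of the largest cepstral peak, of either sign, in [min_lag, max_lag]."""
    c = real_cepstrum(x)
    return min_lag + int(np.argmax(np.abs(c[min_lag:max_lag + 1])))

# Synthetic record: source pulse plus one echo 40 samples later at 0.6 relative amplitude.
rng = np.random.default_rng(3)
source = rng.standard_normal(1024)        # white-noise stand-in for the source pulse
record = source + 0.6 * np.roll(source, 40)
print(echo_delay(record, 10, 400))
```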

Event location techniques that exploit synergy between various monitoring technologies are described in Section 3.6.

Size Estimation

Every event located by the IMS and NTM will have some recorded signals that can be used to estimate the strength of the source for various frequencies and wavetypes. Because the actual seismograms are retrieved from the field, it is possible to measure a variety of waveform characteristics to characterize the source strength. The standard seismic magnitude scales (see Appendix D) for short-period P waves (mb) and 20-second-period surface waves (Ms) are the principal teleseismic size estimators used for event identification and yield estimation for larger events. In December 1995, 88 per cent of the events in the REB were assigned body wave magnitudes, but only 10 per cent had surface wave magnitudes because of low surface wave amplitudes for small events and the sparseness of the network. Operational experience at the prototype IDC is establishing systematic station corrections (average deviations from well-determined mean event magnitudes) that can be applied to reduce biases in the measurements, particularly for small events with few recordings. About 34 per cent of the REB events for December 1995 were assigned a "local magnitude," which was scaled relative to mb.
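The station corrections described above can be estimated by alternating between two averages: each event's network magnitude is the mean of its corrected station magnitudes, and each station's correction is its average deviation from those network magnitudes. The sketch does this for station magnitudes already computed by whatever formula is in use (for mb, typically log10(A/T) plus a distance-depth term). Because only relative biases can be resolved, it constrains the corrections to average zero; the data layout and function names are illustrative.

```python
from collections import defaultdict
from statistics import mean

def station_corrections(readings, n_iter=20):
    """readings: event id -> {station: station magnitude}.
    Returns station -> additive correction (constrained to average zero)."""
    corr = defaultdict(float)
    for _ in range(n_iter):
        # network magnitude of each event from corrected station magnitudes
        net = {ev: mean(m - corr[st] for st, m in obs.items())
               for ev, obs in readings.items()}
        # each station's average deviation from those network magnitudes
        dev = defaultdict(list)
        for ev, obs in readings.items():
            for st, m in obs.items():
                dev[st].append(m - net[ev])
        offset = mean(mean(d) for d in dev.values())
        corr = defaultdict(float, {st: mean(d) - offset for st, d in dev.items()})
    return dict(corr)

def network_magnitude(obs, corr):
    """Mean station magnitude after removing station corrections (0 if unknown)."""
    return mean(m - corr.get(st, 0.0) for st, m in obs.items())

# Calibration events recorded by all three stations, which have biases +0.2, -0.1, -0.1
bias = {"A": 0.2, "B": -0.1, "C": -0.1}
true = {"e1": 4.0, "e2": 4.5, "e3": 3.8, "e4": 5.1}
readings = {ev: {st: mag + b for st, b in bias.items()} for ev, mag in true.items()}
corr = station_corrections(readings)
# A small event seen only at station A: the correction removes A's bias
print(round(network_magnitude({"A": 3.7}, corr), 2))
```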

In addition to station corrections, which account for systematic station-dependent biases, it is important to determine regionalized amplitude-distance curves analogous to regional travel time curves. These curves are used in the magnitude formulations and have great variations at regional distances (see Appendix D). Although range- and azimuth-dependent station corrections can absorb regional patterns, it is valuable to have a suitable regional structure for interpolation of general trends. Research is needed to establish the level of regionalization required and the nature of the regional amplitude-distance curves. As new IMS and NTM stations are deployed, each region must be calibrated and an understanding attained of the nature of the seismic wave propagation in that region (including effects such as blockage, which may prevent measurement of some phases).

The availability of complete waveform information for each event offers potential source strength estimation that exploits more of the signal than conventional seismic magnitudes. For example, complete waveform modeling can determine accurate seismic moments (measures of the overall strength of the source, which for a fault is proportional to fault area times average slip) that may be superior to seismic magnitudes because they explicitly account for fault geometry. Routine inversion of complete ground motion recordings for seismic moment and fault geometry is now conducted for all events with magnitudes larger than about 5.0 around the world by the academic community that is studying earthquake processes. Similar capabilities have been demonstrated for events with magnitudes as small as 3.5 in well-instrumented seismogenic areas (see Appendix D). These approaches are closely linked to source identification because they explicitly incorporate and solve for generalized representations of forces exerted at the source. The extent to which a sparse network such as the IMS can exploit such waveform modeling approaches is not fully established. In part, it depends on the extent to which adequate regional velocity models are determined and on their waveform prediction capabilities. Further research can establish the operational role of complete waveform analysis for source strength estimation.
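For orientation, the quantities mentioned above are related as follows. The sketch computes a scalar moment from a 3×3 moment tensor using one common convention, M0 = sqrt(ΣMij²/2), which equals the usual moment for a pure double couple; converts it to moment magnitude with the widely used relation Mw = (2/3)(log10 M0 - 9.1), with M0 in N·m; and splits the tensor into isotropic and deviatoric parts. The isotropic (volume-change) part is the component most directly tied to explosive sources, although how reliably a sparse regional network can resolve it is the open question raised in the text.

```python
import math
import numpy as np

def scalar_moment(m):
    """Scalar moment sqrt(sum(Mij^2) / 2) of a 3x3 moment tensor (same units as m).
    One common convention; it equals M0 for a pure double couple."""
    m = np.asarray(m, float)
    return math.sqrt(float(np.sum(m * m)) / 2.0)

def moment_magnitude(m0_newton_m):
    """Mw = (2/3) * (log10(M0) - 9.1), with M0 in newton-meters."""
    return (2.0 / 3.0) * (math.log10(m0_newton_m) - 9.1)

def split_isotropic(m):
    """Return (isotropic, deviatoric) parts of a 3x3 moment tensor."""
    m = np.asarray(m, float)
    iso = (np.trace(m) / 3.0) * np.eye(3)
    return iso, m - iso

# Pure double couple (Mxy = Myx) with M0 = 1e17 N·m
dc = np.array([[0.0, 1e17, 0.0], [1e17, 0.0, 0.0], [0.0, 0.0, 0.0]])
m0 = scalar_moment(dc)
print(f"M0 = {m0:.2e} N·m, Mw = {moment_magnitude(m0):.2f}")
```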

Operationally, it is usually convenient to use parametric measurements such as magnitudes. Several promising waveform measurements for regional phases can be treated parametrically. These include waveform energy measurements for short- and long-period passbands; coda magnitudes based on frequency-dependent variations of reverberations following principal seismic arrivals; and signal power measurements of Sn, Lg, and other reverberative phases at regional distances. Extension of surface wave measurements to the short-period signals (5–15 seconds) that dominate regional recordings of small events is also necessary. Research on the utility, stability, and regional variability of these source strength estimators should continue. This includes necessary efforts to characterize the effects of source depth, distance, attenuation, heterogeneous crustal structure, and recording site for each approach. This effort is warranted given the limitations of conventional mb and Ms measurements for small events recorded by sparse networks.
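One of the parametric measures listed above, band-limited waveform energy, can be sketched as follows. This is a minimal illustration assuming a single trace that has already been windowed around the phase of interest; `passband_energy` is a hypothetical helper, and a direct DFT is used for transparency where a real pipeline would use an FFT, apply a taper, remove the instrument response, and add the distance, attenuation, and site corrections discussed above.

```python
import math


def passband_energy(samples, sample_rate_hz, f_low_hz, f_high_hz):
    """Energy of `samples` between f_low_hz and f_high_hz (inclusive).

    Uses a direct DFT and Parseval's theorem, so the result is in the same
    units as sum(x**2) over the window.
    """
    n = len(samples)
    energy = 0.0
    for k in range(n // 2 + 1):  # one-sided spectrum of a real signal
        freq = k * sample_rate_hz / n
        if not (f_low_hz <= freq <= f_high_hz):
            continue
        re = sum(x * math.cos(2 * math.pi * k * i / n) for i, x in enumerate(samples))
        im = -sum(x * math.sin(2 * math.pi * k * i / n) for i, x in enumerate(samples))
        power = (re * re + im * im) / n
        # Interior bins stand for both +f and -f; DC and Nyquist appear once.
        if k == 0 or (n % 2 == 0 and k == n // 2):
            energy += power
        else:
            energy += 2.0 * power
    return energy


# Synthetic 10 s window: a 2 Hz sinusoid sampled at 20 Hz.
fs = 20.0
window = [math.sin(2 * math.pi * 2.0 * i / fs) for i in range(200)]
total = sum(x * x for x in window)
print(passband_energy(window, fs, 1.0, 3.0) / total)  # essentially all of it
print(passband_energy(window, fs, 5.0, 9.0) / total)  # essentially none
```

A magnitude-like estimator would then take the logarithm of such band energies and calibrate it regionally; that calibration is the research problem the text describes.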

Identification

Several major research areas related to seismic source identification have been considered in the preceding discussion of event location and strength estimation. Accurate event location is essential for identification, including the reliable separation of onshore and offshore events and the determination of source depth. When location alone is insufficient to identify the source, secondary waveform attributes must be relied on. For events larger than about magnitude 4.5, well-tested methods based on teleseismic data provide reliable discrimination. For small events, experience has shown that a number of ad hoc methods, often differing from region to region, can be used to distinguish explosions (e.g., mine blasting) from earthquakes. A primary area for both basic and applied research is to systematize this experience in identifying small events and apply it to the problems of CTBT monitoring. Some key areas include the following:

- Extension of mb:Ms-type discriminants to regional scales for small events: this involves development of regional surrogates for both P-wave and surface-wave magnitudes that retain the sensitivity of teleseismic discriminants to frequency, source depth, and source mechanism. Improved methods of measuring surface-wave source strength from regional signals are necessary, as mentioned above. Quantification of the regional measures by waveform modeling and source theory is needed to provide a solid physical understanding of such empirical discriminants.

- Regional S/P-type measurements (e.g., Lg/Pn, Lg/Pg, Sn/Pg) have been shown to discriminate source types well at frequencies higher than 3–5 Hz. Research is needed to establish the regional variability of such measurements and to reduce the scatter in earthquake populations. Improved path corrections, beyond standard distance curves, that account for regional crustal variability should be developed further because they appear to reduce scatter at frequencies lower than 3 Hz.

- Quarry blasts and mining events (explosions, roof collapses, rockbursts) can pose major identification challenges, and research is needed to establish the variability of these sources and the performance of proposed discriminants in a variety of areas. Ripple-fired explosions (a series of spatially distributed, time-delayed charges) can often be discriminated from other explosions and earthquakes by discrete spectral frequencies associated with the time separation between shots. The discriminant appears to be broadly applicable, although additional testing in new environments is essential. However, it does not preclude a scenario in which a mining explosion masks a nuclear test (see below).

- Systematic, complete waveform inversion for source type should be explored for regions with well-determined crustal structures, given the constraints imposed by the large spacing between seismic stations in the IMS and NTM. This can contribute directly to source depth determination and source identification. Such approaches are likely to be the key to solving challenges posed by some evasion scenarios involving masking of nuclear test signals by simultaneous quarry blasts, rockbursts, or earthquakes.

- Strategies for calibrating discriminants in various regions must be established, with procedures to correct for regional path effects being expanded. This includes systematic mapping of blockage effects and attenuation structure. It is also desirable to establish populations of quarry blast signals for each region.
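The ripple-fire discriminant mentioned in the list above rests on a simple spectral effect, sketched here under strong simplifying assumptions: identical charges, a perfectly uniform inter-shot delay, and no propagation effects. In that idealization the recorded spectrum is the single-charge spectrum multiplied by the modulation computed below, which peaks at multiples of 1/delay and has nulls between them. The shot count and delay are hypothetical; real delays are irregular, which broadens and weakens the peaks.

```python
import math


def ripple_fire_modulation(freq_hz, n_shots, delay_s):
    """Normalized spectral modulation for n_shots identical charges fired at a
    uniform delay: |sum_k exp(-2*pi*i*f*k*delay)| / n_shots.

    Equals 1.0 at multiples of 1/delay and 0.0 at multiples of
    1/(n_shots*delay) that are not also multiples of 1/delay.
    """
    re = sum(math.cos(2 * math.pi * freq_hz * k * delay_s) for k in range(n_shots))
    im = sum(math.sin(2 * math.pi * freq_hz * k * delay_s) for k in range(n_shots))
    return math.hypot(re, im) / n_shots


# Hypothetical blast: 10 charges, 100 ms apart. Scan 0.5-20 Hz for strong peaks.
peaks = [f / 2 for f in range(1, 41) if ripple_fire_modulation(f / 2, 10, 0.1) > 0.9]
print(peaks)
```

An operational discriminant would look for this regularly spaced spectral scalloping in observed regional spectra, and it is time-independent across the record, which helps distinguish it from propagation-related spectral features.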

Summary of Research Priorities Associated with Seismic Monitoring

In summary, a prioritized list of research topics in seismology that would enhance CTBT monitoring capabilities includes:

- Improved characterization and modeling of regional seismic wave propagation in diverse regions of the world.
- Improved capabilities to detect, locate, and identify small events using sparsely distributed seismic arrays.
- Theoretical and observational investigations of the full range of seismic sources.
- Development of high-resolution velocity models for regions of monitoring concern.

3.2 HYDROACOUSTICS

Major Technical Issues

The ocean is a remarkably efficient medium for the transmission of sound energy. Its deep sound channel (the SOFAR channel; see Appendix E) allows sound energy to propagate with little attenuation over global distances. Even low-level sounds, on the scale of those produced by several kilograms of TNT, can often be detected at ranges of many thousands of kilometers. Despite these attributes, the hydrophone and T-phase station network proposed for the IMS will be inadequate for accurately locating sources in all the world's oceans without ancillary data from other monitoring technologies. In certain cases, such as low-altitude explosions above the sea surface, the hydroacoustic system by itself might be incapable of providing a detection altogether or could be jammed easily. Thus, it is especially important to be able to fuse hydroacoustic data with other IMS data—seismic and infrasonic.

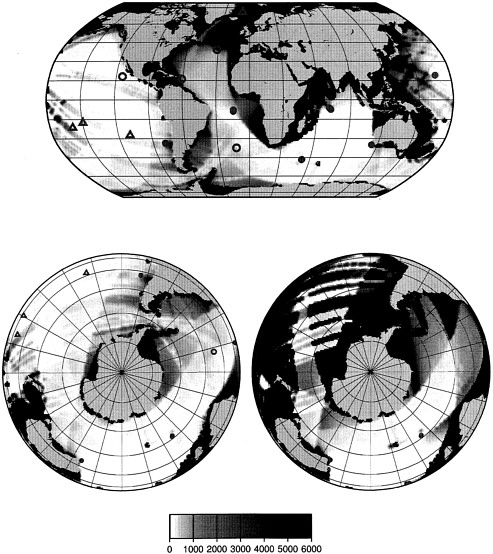

Figure 3.4 estimates the location accuracy of the IMS hydrophone network together with the ocean-island seismic stations for a 1-kiloton explosion 50 m beneath the sea surface. Model simulations show that for some areas, especially regions of high shipping density, location uncertainties can exceed 1000 km² by a substantial margin. This is a result of at least two factors: (1) locally high background noise associated with shipping masks the explosive signal, and (2) at least three independent observations of acoustic arrivals are needed to locate a source by triangulation. Given blockage by islands, seamounts, continents, and other features, this is difficult to achieve worldwide with only six hydroacoustic systems and five T-phase stations. None of the IMS hydroacoustic stations has direction-resolving capability; if available, such capability would improve location accuracy with detections on fewer sensors. In practice, the hydroacoustic data will be examined for discrimination purposes and combined with seismic data for location purposes.
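Why three arrivals are the minimum can be seen from the unknowns: a source has two horizontal coordinates plus an unknown origin time. The sketch below locates a source by grid search over trial epicenters, solving for the best origin time at each point and keeping the point with the smallest summed squared travel-time residual. It assumes a flat geometry, a single uniform sound speed of 1.48 km/s, and no blockage; the station layout and source are hypothetical. With only three arrivals, some geometries admit more than one solution, which is one reason direction information helps.

```python
import math

SOUND_SPEED_KM_S = 1.48  # assumed uniform sound-channel speed


def locate(stations_km, arrivals_s, extent_km=800.0, step_km=10.0):
    """Grid-search source location on a flat plane with unknown origin time.

    For each trial point, the least-squares origin time is the mean of
    (arrival - travel time); the point with the smallest summed squared
    residual is returned together with that origin time.
    """
    best = None
    n_steps = int(round(2 * extent_km / step_km)) + 1
    for i in range(n_steps):
        x = -extent_km + i * step_km
        for j in range(n_steps):
            y = -extent_km + j * step_km
            travel = [math.hypot(x - sx, y - sy) / SOUND_SPEED_KM_S for sx, sy in stations_km]
            origin = sum(t - tt for t, tt in zip(arrivals_s, travel)) / len(travel)
            misfit = sum((t - origin - tt) ** 2 for t, tt in zip(arrivals_s, travel))
            if best is None or misfit < best[0]:
                best = (misfit, x, y, origin)
    return best[1], best[2], best[3]


# Hypothetical geometry: three stations and a source at (200, -150) km, origin time 0 s.
stations = [(-600.0, -500.0), (700.0, -400.0), (0.0, 650.0)]
arrivals = [math.hypot(200.0 - sx, -150.0 - sy) / SOUND_SPEED_KM_S for sx, sy in stations]
print(locate(stations, arrivals))
```

Operational location would use spherical geometry, calibrated travel-time models, and pick uncertainties; the grid search is chosen here only because it makes the misfit surface explicit.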

If the detonation is above the sea surface, signal levels at stations that are not blocked by bathymetry will be substantially lower, and location uncertainties will increase. For example, calculations show that an atmospheric explosion of 1 kt at a height of 1 km above the surface will couple energy into the SOFAR channel roughly equivalent to the detonation of a 10–50 kg explosion at channel depth, which is about five orders of magnitude less than 1 kt. Empirically, the sound intensity (proportional to pressure squared) of TNT charges scales roughly as $W^{2/3}$, where $W$ is the weight of the charge. Thus, each factor of 10 decrease in charge weight reduces the effective sound pressure level by about $10\log_{10}(10^{2/3}) \approx 6.7$ dB (in practice, the factor is 7–7.5 dB), and five orders of magnitude decreases the effective source level by about 35 dB.
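The level arithmetic in the preceding paragraph can be checked directly. This sketch assumes intensity scales as $W^{n}$ and evaluates the level change in decibels; with the $W^{2/3}$ law a factor of $10^{-5}$ in charge weight gives about 33 dB, while an exponent of 0.7 (the empirical 7 dB per decade) gives the 35 dB quoted in the text.

```python
import math


def level_change_db(weight_ratio, exponent=2.0 / 3.0):
    """Change in effective source level (dB) when charge weight changes by
    `weight_ratio`, assuming intensity (pressure squared) scales as W**exponent."""
    return 10.0 * math.log10(weight_ratio ** exponent)


print(level_change_db(0.1))                  # one decade with the W**(2/3) law
print(level_change_db(1e-5))                 # five decades with the W**(2/3) law
print(level_change_db(1e-5, exponent=0.7))   # five decades at 7 dB per decade
```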

A reduction in signal-to-noise ratio of this magnitude implies that at certain receivers, signal levels will fall below the background noise and will not be detected at all. The reduction in signal-to-noise ratio will also degrade travel-time accuracy by a factor of about 50, because the standard deviation of travel-time measurements is inversely proportional to the square root of the signal-to-noise (power) ratio: a 35 dB loss is a factor of about 3000 in power, whose square root is about 55.
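The timing degradation can be computed the same way. This sketch assumes the travel-time standard deviation scales as the inverse square root of the power signal-to-noise ratio (the usual result for arrival-time estimation in white noise); it converts a decibel loss to a power ratio and takes the square root.

```python
import math


def timing_error_factor(snr_loss_db):
    """Factor by which the travel-time standard deviation grows when the power
    SNR drops by `snr_loss_db`, assuming sigma_t is proportional to 1/sqrt(SNR)."""
    return math.sqrt(10.0 ** (snr_loss_db / 10.0))


print(round(timing_error_factor(35.0), 1))  # the 35 dB case discussed above
print(timing_error_factor(20.0))            # a 20 dB loss, for comparison
```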

Even if the reduced signal from a low-level atmospheric explosion is above the background noise at a receiver, its level is comparable to many natural (e.g., volcanoes, earthquakes, microseisms generated by waves) and man-made (e.g., seismic profiling) sounds. The signals from such an explosion are, therefore, susceptible to natural noise masking and fairly simple jamming techniques.

The hydroacoustic system alone may also be incapable of detecting subsurface explosions in certain regions where bathymetric blocking exists and where the coupling of energy into the SOFAR channel is poor. In other areas, detection may be possible but localization could be problematic at best. An example might be an explosion in the Antarctic Ocean, where the SOFAR channel axis reaches the surface, causing all sound energy to be upwardly refracted and heavily scattered by the sea surface and seafloor. Further, sound generated in the Antarctic must pass through a highly variable region of circulating currents (the Antarctic Circumpolar Current), which will refract the energy in unpredictable directions, thereby degrading both detection and localization.

FIGURE 3.4 (a, top) Estimated location precision for the IMS hydroacoustic network (open circles) combined with the ocean-island seismic stations (triangles) for a 1 kt explosion 50 m below the ocean surface. Station locations are also shown in Figure 1.1a. The scale shows the uncertainty in square kilometers. The linear features of low resolution are associated with regions of high shipping density. (b, bottom) Estimated location precision for the southern oceans. The image on the left is for the combined hydroacoustic and ocean-island seismic network. The image on the right shows the performance for only the hydroacoustic system. Source: D. B. Harris, Lawrence Livermore National Laboratory, personal communication, 1997.

Comprehensive studies of the effects of bathymetric and continental blockage and of the propagation characteristics of shoaling waters must be conducted. From a technical viewpoint, the use of multiple hydrophones at hydroacoustic stations, rather than the recommended single sensor, should be considered. The incremental cost is small; the gain can be large. Furthermore, the false-alarm rate of a sparse network of single hydrophones is likely to be prohibitive. Two- or three-hydrophone systems that can provide an estimated azimuth, for example, would reduce this problem substantially.
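How a two-hydrophone station yields an azimuth can be sketched with a plane-wave approximation: the arrival-time difference across a baseline of length $d$ fixes the angle $\alpha$ between the baseline and the source direction through $\cos\alpha = -c\,\Delta t / d$. The function name, the 1.48 km/s sound speed, and the 2 km baseline are assumptions for illustration. Two mirror-image bearings always result; this is the left-right ambiguity that a third, off-baseline hydrophone or other data must resolve.

```python
import math


def bearing_candidates(delta_t_s, separation_km, baseline_azimuth_deg, speed_km_s=1.48):
    """Back-azimuths (degrees clockwise from north, pointing toward the source)
    consistent with a plane wave crossing a two-hydrophone baseline.

    delta_t_s is arrival time at sensor B minus arrival time at sensor A;
    baseline_azimuth_deg is the azimuth of the vector from A to B.
    Returns the two solutions mirrored about the baseline.
    """
    cos_alpha = -speed_km_s * delta_t_s / separation_km
    if abs(cos_alpha) > 1.0:
        raise ValueError("time difference exceeds the travel time along the baseline")
    alpha = math.degrees(math.acos(cos_alpha))
    return ((baseline_azimuth_deg + alpha) % 360.0, (baseline_azimuth_deg - alpha) % 360.0)


# Hypothetical station: sensors 2 km apart on an east-pointing baseline; a source
# to the northeast (back-azimuth 45 degrees) reaches sensor B first.
dt = -2.0 * math.cos(math.radians(45.0)) / 1.48
print(bearing_candidates(dt, 2.0, 90.0))
```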

The level of performance to be expected from the five island seismic T-phase stations needs to be determined. The use of land seismic stations to detect ocean acoustic energy that couples to the solid Earth and is transformed into elastic waves is poorly studied and little understood. For this reason, the T-phase stations' contribution to the location accuracy in Figure 3.4 is uncertain. Figure 3.4b shows that in certain regions, the T-phase stations are assumed to provide the majority of the location capability. It will therefore be important to calibrate existing sensors using artificial sources and to ensure that new installations are well calibrated.

Perhaps not a major research issue, but a general technical issue that must be addressed, is the confusion that arises as a result of nonstandard nomenclature and sound reference level standards. Because the study of T-phase signals (those that have coupled into waterborne acoustic modes and vice versa) by the seismic community is not as mature as the study of solid Earth phases, it suffers from a lack of standard nomenclature consistent with accepted seismological conventions. The effect is to confuse discussion, inhibit communication, and ultimately forestall research progress. Similarly, instrumentation and measurement standards are required to facilitate comparisons of projected performance levels and the results of observations made at widely dispersed locations. At present, for example, the hydroacoustic monitoring community refers all signal levels to 1 microvolt at the receiving hydrophone. The rest of the hydroacoustic community refers signal levels to a pressure level of 1 µPa at 1 m.
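The two conventions in the preceding paragraph are related through the hydrophone's receiving sensitivity, usually quoted in dB re 1 V/µPa. The sketch below converts a measured voltage to dB re 1 µV and to a received pressure level in dB re 1 µPa; the sensitivity and voltage are hypothetical. Referring a received level to a source level "re 1 µPa at 1 m" additionally requires a transmission-loss estimate, which this sketch does not attempt.

```python
import math


def volts_to_db_re_1v(v_rms):
    """RMS voltage in dB re 1 V."""
    return 20.0 * math.log10(v_rms)


def volts_to_db_re_1uv(v_rms):
    """RMS voltage in dB re 1 microvolt (120 dB larger than dB re 1 V)."""
    return 20.0 * math.log10(v_rms / 1.0e-6)


def received_level_db_re_1upa(v_rms, sensitivity_db_re_1v_per_upa):
    """Received sound pressure level at the hydrophone, in dB re 1 uPa.

    sensitivity_db_re_1v_per_upa is the receiving sensitivity in dB re 1 V/uPa
    (typically a large negative number)."""
    return volts_to_db_re_1v(v_rms) - sensitivity_db_re_1v_per_upa


# Hypothetical hydrophone: sensitivity -170 dB re 1 V/uPa, measured 1 mV rms.
print(volts_to_db_re_1uv(1.0e-3), received_level_db_re_1upa(1.0e-3, -170.0))
```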

Detection

The ocean and ocean bottom are as variable as the atmosphere and internal structure of the Earth. Consequently, there are regions of good energy propagation and regions where coupling of source energy into acoustic energy is relatively inefficient. In the former, detection may be relatively straightforward; in the latter, it may be impossible.

Large morphological features on the seafloor present particular difficulties for long-range detection of sources of interest. Large oceanic plateaus and chains of islands are likely to block the propagation of ocean acoustic waves. Even relatively small features such as abundant seamounts may block propagation to receivers at the frequencies typically used for hydroacoustic detection of earthquakes and explosions. A thorough search for "blind spots" should be conducted, and two- and three-dimensional propagation codes can be used to explore the effects of seafloor structures. Numerical simulations should be backed up with experimental verification, which might include the use of earthquake sources as well as seagoing expeditions to explore relevant issues. Most of the knowledge of long-range acoustic propagation is restricted to deep oceans. In such an environment, signals from very small sources in the sound channel can propagate thousands of kilometers. However, if the source is located on a continental margin or in shallow water in general, long-distance propagation of acoustic energy is seriously impeded through both surface and bottom interactions, and coupling into the sound channel for long-range propagation can be problematic. It is important to examine these effects so as to develop an understanding of shallow-water source region effects on long-range detection. Trade-offs with the generation of seismic waves should be an integral part of such studies.

Although algorithms exist to test the effects of seamounts, oceanic plateaus, and continental margins on the propagation of acoustic energy, it is essential that predictions be tested effectively against observations. Toward that end, scientists should exploit natural sources such as earthquakes and volcanoes for experimental verification. Some seagoing experiments with artificial sources may also be necessary.

Although it is common to observe underwater acoustic energy from earthquakes, volcanoes, and exploration sources at great distances, the coupling (or conversely the blockage) is poorly understood. Sound channel or waveguide propagation in the oceans is characterized by a very narrow band of phase velocities, spanning only tens of meters per second near the roughly 1.5 km/s sound speed at the channel axis. Most common sources (e.g., earthquakes, shallow ships) do not generate such phase velocities directly. In these cases, excitation of the channel depends strongly on heterogeneity and scattering, a phenomenon that is not well understood. For example, an earthquake at a depth of 300 km cannot introduce energy directly into the channel but must rely on the interaction of elastic and acoustic waves at the seafloor to introduce sound into the channel. To date, the mechanism for this transformation has been studied only at the most rudimentary level. It is important that this aspect of long-range propagation be explored in order to understand the signals and noise observed in the water column. Notably, no correlation has been found between seismic magnitude and the amplitudes observed on acoustic sensors located in the sound channel.
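Why the band of trapped phase velocities is so narrow can be illustrated with the canonical Munk sound-speed profile, an idealized model rather than a measured ocean. A ray or mode stays in the channel without touching the surface or bottom only if its phase velocity lies between the sound speed at the axis and the smaller of the surface and bottom sound speeds. The profile parameters below are the canonical Munk values; the 5000 m bottom depth is an assumption.

```python
import math


def munk_sound_speed(depth_m, axis_depth_m=1300.0, scale_m=1300.0,
                     axis_speed_ms=1500.0, eps=0.00737):
    """Canonical Munk profile: c(z) = c1 * (1 + eps * (eta + exp(-eta) - 1)),
    with eta = 2 * (z - z1) / B."""
    eta = 2.0 * (depth_m - axis_depth_m) / scale_m
    return axis_speed_ms * (1.0 + eps * (eta + math.exp(-eta) - 1.0))


axis = munk_sound_speed(1300.0)
surface = munk_sound_speed(0.0)
bottom = munk_sound_speed(5000.0)  # assumed bottom depth
ceiling = min(surface, bottom)
print(f"trapped phase velocities: {axis:.0f} to {ceiling:.0f} m/s "
      f"(a band of about {ceiling - axis:.0f} m/s)")
```

A source whose radiated phase velocities fall outside this band, such as steeply incident P waves from a deep earthquake, cannot feed the channel without scattering or conversion, which is the coupling problem described above.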

Problems of nuclear event monitoring in the ocean are exacerbated by the background of several thousand suboceanic earthquakes exceeding magnitude 4.0 per year. Furthermore, the slow acoustic propagation speed, coupled with the small number of sensors at large separations, enhances the opportunities for false alarms and incorrect locations for small events. Only through the development of robust automatic detection and noise rejection algorithms, coupled with synergy with other monitoring technologies, can the proposed IMS hydroacoustic system be useful.

Association and Location

The hydroacoustic network will rely on the association of arrivals from at least three different stations to perform cross-fixing and location of the event. This is called intra-hydroacoustic association, and it must be done successfully if the hydroacoustic network is to have utility as a stand-alone system for locating hydroacoustically coupled events, especially events that may be well coupled only in the water volume. Two-station location is, of course, possible if the left-right ambiguity can be resolved by blockages, the positions of land masses, or contributions from other data types (e.g., seismology). Hydroacoustic observations have never been integrated with seismic and infrasound data on the scale proposed for the IMS. It is expected that there will be significant value in combining and associating hydroacoustic, seismic, and infrasound phases. Successful data fusion is expected to have a significant impact on the effectiveness of the hydroacoustic and T-phase detectors because of their small number and sparse distribution.

Several questions and research issues related to hydroacoustic location are raised:

- What level of calibration is required to provide sufficient location accuracy to identify regions for debris collection? Will there be a need for more hydroacoustic stations? Preliminary analysis has shown that when only hydroacoustic data are considered, false alarms create many false associations that lead to inconclusive or erroneous locations, and this problem is greatly exacerbated by the small number of stations and the slow speed at which signals propagate in the ocean. One alternative that should be investigated is adding one or two more hydrophones to IMS stations, with a few kilometers' separation. This will permit determination of a direction or bearing that can be used to improve the intra-hydroacoustic association, localization, and false-alarm rejection without achieving a submarine tracking capability.

- Can a single station localize an event by exploiting lateral multipaths due to ocean refraction and/or reflections from bathymetric features such as islands and coastlines, thereby partly mitigating false alarms and improving the location capability of the sparse IMS hydroacoustic array? Understanding diffraction effects and wave number refraction by shoaling bathymetry may also be important for this effort.

- Is the present knowledge of ocean climatology sufficient to achieve 1000 km² location accuracy worldwide? It is believed that in large portions of the central open oceans, climatology is not a limiting factor, but in regions of high variability (e.g., major boundary currents such as the Antarctic Circumpolar Current), this is almost certainly not true.

- What is the performance of T-phase stations for detecting hydroacoustic signals, and what is their consequent contribution to the location capability of the hydrophone network?

- The IMS essentially locates events in latitude and longitude, with the initial assumption that they are at the surface. A significant research issue is to ascertain what can be done to determine event location in depth or at altitude as well (in the ocean, beneath the seafloor, or above the ocean surface), which can be an important basis for identification. In addition to research on using the spatial and temporal characteristics of hydroacoustic signals alone, combining hydroacoustic data with seismic data (for underwater and sub-ocean-bottom events) and infrasound data (for atmospheric events over the ocean) needs to be investigated.

A further research topic is the calibration of source coupling, propagation paths, and losses for specific hydroacoustic sensors using events that are identified and located by seismic sensors. Improved understanding of the coupling losses and direction changes that occur as energy is coupled from the solid Earth to water and vice versa represents a major research area that must be pursued to use T-phase data effectively at hydroacoustic stations.

Identification

In contrast with chemical and nuclear explosions on land, data on explosions at sea or at low altitudes above the sea surface (especially explosions smaller than 1–2 kt) are limited. Thus, the discriminants presently thought to be useful for distinguishing explosions from earthquakes, volcanoes, and other events at sea have not been subjected to statistically significant testing. Such testing with nuclear explosions will obviously be impossible in the future. Therefore, questions about the efficiency and robustness of present discriminants or the performance level of proposed discriminants will be difficult to evaluate, and alternative means of testing these important algorithms must be developed. At issue is whether nonnuclear, scaled testing is valid.

A similar problem is that many of the methodologies for computing explosion yield and the effective source pressure (in the linear range of propagation) rely on well-known relationships for chemical explosions. Given that the physical processes associated with nuclear explosions produce some effects different from those of chemical explosions, is this approach valid? Unfortunately, there are no good recordings of previous waterborne nuclear explosions, so this relationship must be validated with analytic or numerical techniques.
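One widely used chemical-explosion relationship of the kind referred to above is cube-root similitude for the peak shock pressure of an underwater charge, $P_{max} = K\,(W^{1/3}/R)^{\alpha}$. The sketch uses $K = 52.4$ MPa and $\alpha = 1.13$ (W in kg of TNT, R in m), values commonly quoted for TNT in the underwater-explosion literature; treat them as assumptions valid only within their calibrated range. Whether such scaling carries over to nuclear sources is exactly the open question raised in the text.

```python
def peak_shock_pressure_mpa(charge_kg_tnt, range_m, k_mpa=52.4, alpha=1.13):
    """Similitude estimate of peak shock pressure (MPa) from an underwater
    TNT charge: P = K * (W**(1/3) / R)**alpha, with W in kg and R in m.
    The constants are commonly quoted empirical values, not universal ones."""
    return k_mpa * (charge_kg_tnt ** (1.0 / 3.0) / range_m) ** alpha


# Cube-root scaling: a 1000-fold larger charge gives the same peak pressure
# at a 10-fold larger range.
print(peak_shock_pressure_mpa(1.0, 100.0))
print(peak_shock_pressure_mpa(1000.0, 1000.0))
```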

Additionally, the signature of a low-level atmospheric explosion detected by hydroacoustic sensors is not known. Existing evidence indicates that it is composed of relatively low frequencies and that the signal duration is short. Because few data or experiments exist, calculation and modeling are required.

The high-frequency content of T-phases from earthquakes appears to decay more rapidly than for explosions. It is not known if this relationship holds for small events, nor are the physical reasons for this differential decay known.

Finally, although hydroacoustic and T-phase stations cover a fairly large portion of the world where seismic stations are sparse, their ultimate performance must be understood in the context of the larger system, and the improvements to be realized by the addition of infrasonic and seismic information must be evaluated.

Summary of Research Priorities Associated with Hydroacoustic Monitoring

In summary, a prioritized list of research topics in hydroacoustics that would enhance CTBT monitoring capabilities includes:

- Improvements in source excitation theory for diverse ocean environments, particularly for earthquakes and for acoustic sources in shallow coastal waters and low-altitude environments.
- Understanding the regional variability of hydroacoustic wave propagation in oceans and coastal waters and the capability of the IMS hydroacoustic system to detect these signals.
- Improved characterization of the acoustic background in diverse ocean environments.
- Improving the ability to use the sparse IMS network for event detection, location, and identification and developing algorithms for automated operation.

3.3 INFRASONICS

Major Technical Issues

Atmospheric infrasonic detection in some respects has less complex propagation challenges than hydroacoustic or seismic detection because the medium does not contain discontinuities, such as islands, located in the propagation path. Even the heights of mountains are small compared to atmospheric propagation heights over long distances. However, the propagation of infrasound is affected strongly by rough surface scattering (scatterers are likely to be power-law distributed over a fairly broad range of wavenumbers) and by the presence of winds.

Two additional factors affect the propagation of acoustic waves in the atmosphere:

- Winds and temperature variations affect their velocity, although there is little attenuation at low frequencies. Typical atmospheric winds can have Mach numbers of 0.1 to 0.2 (about 30 to 60 m/s), which means that refraction and wind-induced changes in propagation time are important. Temperature also changes significantly with height, which in complex ways contributes to the creation of a variety of waveguides and ray paths. Furthermore, both horizontal and vertical gradients of wind speed and temperature can have important effects.

- The atmosphere is spatially complex (over scales from meters to hundreds of kilometers) and temporally dynamic (over scales from minutes to months). For slowly varying features, climatological calibrations can account for the effects of long-term trends. For rapid variations, there is a need to develop short-term statistics. Bush et al. (1989) provide evidence for the short-term variability of infrasonic propagation paths. Technical aspects of sound propagation in the atmosphere are discussed in Appendix F.
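The wind effect in the first item above is often summarized by an effective sound speed: the adiabatic sound speed $c = \sqrt{\gamma R T}$ plus the wind component along the propagation direction. The sketch evaluates it for a hypothetical 40 m/s eastward wind at 15 °C. It deliberately ignores vertical structure; in a real atmosphere it is the profile of effective sound speed with height, not a single value, that creates the waveguides mentioned above, so the travel-time difference printed here only indicates the order of magnitude.

```python
import math

GAMMA_AIR = 1.4      # ratio of specific heats for dry air
R_DRY_AIR = 287.05   # specific gas constant for dry air, J/(kg*K)


def sound_speed(temperature_k):
    """Adiabatic sound speed in dry air, c = sqrt(gamma * R * T), in m/s."""
    return math.sqrt(GAMMA_AIR * R_DRY_AIR * temperature_k)


def effective_sound_speed(temperature_k, wind_east_ms, wind_north_ms, azimuth_deg):
    """Sound speed plus the wind component along the propagation azimuth
    (degrees clockwise from north)."""
    az = math.radians(azimuth_deg)
    along_track_wind = wind_east_ms * math.sin(az) + wind_north_ms * math.cos(az)
    return sound_speed(temperature_k) + along_track_wind


# Hypothetical 40 m/s eastward wind at 15 C: propagation toward the east vs west.
downwind = effective_sound_speed(288.15, 40.0, 0.0, 90.0)
upwind = effective_sound_speed(288.15, 40.0, 0.0, 270.0)
print(f"downwind {downwind:.0f} m/s, upwind {upwind:.0f} m/s, "
      f"travel-time difference over 1000 km: {1.0e6 / upwind - 1.0e6 / downwind:.0f} s")
```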

The dynamics of the atmosphere have an even greater effect on radionuclide transport, since the same waves, eddies, shears, temperature gradients, and turbulence that complicate infrasonic propagation act over longer time periods and cause parcels of air to take complex paths that are difficult to predict. Moreover, the atmosphere is typically not laminar, and the turbulent transport fluxes of mass and momentum can be a factor of $10^5$ greater than those of a fluid described by smooth, molecular-viscosity-controlled flow.

Some basic operational issues arise for global infrasonic monitoring, characteristic of the research issues that dominated seismic monitoring in the early 1960s when global seismic networks were first being deployed for treaty monitoring purposes. However, extensive experience gained in the 1950s and 1960s indicates that infrasonic monitoring may prove to have relatively few operational limitations for CTBT monitoring. Appendix F provides background and further perspectives of technical issues in this area. From a U.S. national perspective, an assessment of research needs should consider that infrasonic monitoring of atmospheric explosions over broad ocean areas complements satellite coverage (except for cases of heavy cloud cover). Infrasound will provide synergy with seismic methods by identifying the presence of surface effects in chemical explosions and lonesome Lg waves, as discussed in Section 3.1.

Detection

The history of automatic infrasonic monitoring is limited. Consequently, a high priority will be to gain experience with the performance of automated systems for detecting continuous infrasonic signals. Many of the research areas discussed below are thus at a more basic technical level than those for the other monitoring technologies. Phase identification for atmospheric signals is also poorly understood, and reliable techniques must be developed to make detection useful. A wide range of basic problems in sensor performance, array design, sampling rate, and signal processing is associated with the detection capabilities of the infrasonic network. Given these constraints, the following subsections describe important research activities required to enhance the detection capabilities of the proposed IMS infrasound network.

Development of a Sensor with Extended Response, Temperature Stability, and High Accuracy

The development of a sensor with the required IMS operational characteristics should be a high priority. A critical goal of this work will be to reduce the noise level of the sensor from electronic and other sources (e.g., temperature-induced noise) to at least an order of magnitude below typical minimum atmospheric pressure signal levels (> 0.01 Pa). This means that the sensor noise level should be smaller than 1 mPa from peak to peak over the frequency range of interest. This goal is achievable.

Other sources of infrasonic noise (not associated with the sensor) are pressure fluctuations in the turbulent atmospheric boundary layer and the background of infrasound arising from geophysical sources. With the exception of quarry blasts, other chemical explosions, and missile launches, relatively few human activities produce sounds that could mask nuclear explosions.

Past infrasonic sensors operated effectively for years, but they were large and heavy and did not incorporate current technology. Improvements in thermal insulation techniques should enable the size and weight to be reduced while retaining excellent temperature stability.

Improved Spatial Filter Design

Until the late 1970s, noise from pressure fluctuations in the boundary layer was reduced by using a 300 m long pipe with a sensor at the midpoint. This pipe was tapered in diameter in sections, with small pipes (e.g., 1 cm diameter) at the ends and larger pipes (e.g., 5 cm diameter) at the center. Each pipe was equipped with flow resistors (330 acoustic ohms) at intervals of 2 to 3 m to couple the atmospheric pressure. These installations were difficult to construct and maintain since 200 flow ports had to be kept clean and in the correct range of flow resistance. These noise filters had dimensions smaller than the wavelengths of the nuclear signal sounds they were designed to detect (e.g., acoustic wavelengths greater than 1 km) and dimensions larger than the scales of turbulent eddies producing pressure noise. Thus, they averaged pressure fluctuations from the eddies and did not affect the acoustic waves, which appeared coherently over the spatial filter (producing improvements in the signal-to-noise ratio of typically 20 dB).
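The principle behind these spatial filters (a signal that is coherent over the filter, noise that is not) implies an idealized gain: averaging $N$ inlets with mutually uncorrelated noise reduces noise power by $N$ while leaving the signal unchanged, a gain of $10\log_{10} N$ dB. On that idealization, about 100 effectively independent inlets would give the roughly 20 dB quoted above. The inlet count is an assumption, not a figure from the design described, and real filters fall short of the ideal because nearby inlets see partially correlated eddies and the filter has its own frequency response.

```python
import math
import random


def averaging_gain_db(n_ports):
    """Ideal SNR gain (dB) from averaging n_ports inlets whose noise is mutually
    uncorrelated while the acoustic signal is identical at every inlet."""
    return 10.0 * math.log10(n_ports)


def simulated_gain_db(n_ports, n_samples=2000, seed=1):
    """Monte Carlo check: a coherent signal passes through the average unchanged,
    so the SNR gain equals the ratio of single-inlet to averaged noise power."""
    rng = random.Random(seed)
    single_power = 0.0
    averaged_power = 0.0
    for _ in range(n_samples):
        inlets = [rng.gauss(0.0, 1.0) for _ in range(n_ports)]
        single_power += inlets[0] ** 2
        averaged_power += (sum(inlets) / n_ports) ** 2
    return 10.0 * math.log10(single_power / averaged_power)


print(averaging_gain_db(100), round(simulated_gain_db(100), 1))
```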

Significant design changes in the 1980s, based on the work of Daniels (1959), Bedard (1977), and Grover (1971), allowed detection of infrasonic frequencies near 1 Hz. These designs replaced the pipes and flow resistors with porous garden irrigation hose. Hoses are sensitive to pressure fluctuations continuously along their entire length and so provide additional averaging. They were deployed in a configuration with 12 hoses (typically 8 or 16 m long) radiating outward from the sensor.

There is a continuing need to optimize the design for reducing noise at lower frequencies while ensuring that signals of interest are not affected. This is a critical area of research and operational need that would help to lower the thresholds of signal detection. The panel emphasizes that research on the efficacy of different spatial filters should always involve comparisons between natural signals and noise pressure fields using the same type of microphone for each of the spatial filter designs.

Improved Knowledge of Noise Sources in the Turbulent Boundary Layer

Knowledge of the mechanisms that produce changes in pressure in the surface boundary layer must be improved. Such knowledge can guide site selection and the optimization of spatial filter designs (currently more of an art than a science). Few data sets exist on the scales of pressure fluctuations, and these tend to be episodic and limited in scope. A recent study (Bedard et al., 1992) found that the root-mean-square (RMS) pressure noise scales with the mean wind speed raised to a power between 1 and 3, depending on local conditions.
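Estimating the wind-speed exponent at a particular site, as in the study cited above, amounts to a straight-line least-squares fit in log-log space: $\log p_{rms} = \log a + n \log u$. The sketch below implements that fit; the data are synthetic, generated with a hypothetical coefficient of 0.02 and an exponent of 2 so that the recovered values can be checked. Field data would also need to handle calm periods, where the logarithm of wind speed is undefined.

```python
import math


def fit_power_law(wind_speeds, rms_pressures):
    """Least-squares fit of log(p_rms) = log(a) + n * log(u); returns (a, n)."""
    xs = [math.log(u) for u in wind_speeds]
    ys = [math.log(p) for p in rms_pressures]
    mx = sum(xs) / len(xs)
    my = sum(ys) / len(ys)
    sxy = sum((x - mx) * (y - my) for x, y in zip(xs, ys))
    sxx = sum((x - mx) ** 2 for x in xs)
    n = sxy / sxx
    a = math.exp(my - n * mx)
    return a, n


# Synthetic site data: exponent 2 and coefficient 0.02 by construction.
speeds = [2.0, 4.0, 8.0, 16.0]
noise = [0.02 * u ** 2 for u in speeds]
print(fit_power_law(speeds, noise))
```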

Other uncertainties exist. What are the relative roles of temperature and velocity fluctuations in creating pressure noise? Can the boundary layer be modified to reduce noise? These and other questions pose important analytical and experimental research problems. The development of an omnidirectional, all-weather, static pressure probe (Nishiyama and Bedard, 1991) would permit a range of experiments not previously possible. The research payoffs again could lower detection thresholds significantly.

Improved Array Design

For infrasonic signal detection there is evidence that arrays of sensors are required to separate acoustic signals from local wind noise (often, even after reduction by spatial filters, these can have similar amplitudes). The local noise is not correlated from array sensor to sensor for a well-designed array. Arrays of individual sensors also are required for identifying distant sources since locally determined azimuth information is needed to distinguish among multiple sources. The challenge is to optimize the number of sensors and the geometry of their deployment for detection and discrimination.
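The locally determined azimuth mentioned above is commonly obtained by fitting a plane wave to arrival times across the array: $t_i = t_0 + s_x x_i + s_y y_i$, where the slowness vector $(s_x, s_y)$ gives both the back-azimuth and the trace velocity $1/|s|$. The sketch below solves this least-squares problem for three or more sensors; the four-element geometry and the arrival from back-azimuth 60° at 0.34 km/s are hypothetical, and real processing would first estimate the time differences by cross-correlation.

```python
import math


def plane_wave_fit(positions_km, arrival_times_s):
    """Least-squares plane-wave fit t_i = t0 + sx*x_i + sy*y_i (x east, y north).

    Returns (back_azimuth_deg, trace_velocity_km_s); the back-azimuth points
    from the array toward the source, clockwise from north.
    """
    n = len(positions_km)
    mx = sum(x for x, _ in positions_km) / n
    my = sum(y for _, y in positions_km) / n
    mt = sum(arrival_times_s) / n
    dx = [x - mx for x, _ in positions_km]
    dy = [y - my for _, y in positions_km]
    dt = [t - mt for t in arrival_times_s]
    sxx = sum(a * a for a in dx)
    syy = sum(b * b for b in dy)
    sxy = sum(a * b for a, b in zip(dx, dy))
    sxt = sum(a * c for a, c in zip(dx, dt))
    syt = sum(b * c for b, c in zip(dy, dt))
    det = sxx * syy - sxy * sxy
    if abs(det) < 1e-12:
        raise ValueError("sensor geometry cannot resolve a two-dimensional slowness")
    slow_x = (syy * sxt - sxy * syt) / det
    slow_y = (sxx * syt - sxy * sxt) / det
    back_azimuth = math.degrees(math.atan2(-slow_x, -slow_y)) % 360.0
    return back_azimuth, 1.0 / math.hypot(slow_x, slow_y)


# Hypothetical arrival from back-azimuth 60 degrees at 0.34 km/s trace velocity.
travel_dir = math.radians(60.0 + 180.0)  # the wave travels away from the source
sx, sy = math.sin(travel_dir) / 0.34, math.cos(travel_dir) / 0.34
positions = [(0.0, 0.0), (1.0, 0.0), (0.0, 1.0), (-1.0, -1.0)]
times = [sx * x + sy * y for x, y in positions]
print(plane_wave_fit(positions, times))
```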

Once a sparse array design has been defined it is important to explore the pros and cons of adding additional elements and to provide guidance in this regard. Considerations include

-

providing additional choices of combinations of sensor sites to use in cross-correlation, thereby increasing the probability of reducing local noise;

-

reducing side lobes through choice of number of elements and geometry;

-

increasing azimuth and phase speed resolution;

-

possibly enhancing the array with directivity options; and

-

providing means for discriminating between signal types based on spatial decorrelation, as described below (e.g., Mack and Flinn, 1971).
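Side lobes and resolution can be compared across candidate geometries by computing the array response, that is, the normalized beam power |(1/N) Σ exp(i k·r_j)|² as a function of the wavenumber offset k between the steered and arriving waves. The Python sketch below shows one simple way to do this. It assumes sensor coordinates in km, wavenumbers in rad/km, and a user-chosen radius that excludes the main lobe. The grid size and the example geometry are illustrative assumptions.

```python
import cmath
import math

def array_response(positions, kx, ky):
    """Normalized beam power |(1/N) * sum_j exp(i k . r_j)|**2 at wavenumber offset (kx, ky)."""
    total = sum(cmath.exp(1j * (kx * x + ky * y)) for x, y in positions)
    return abs(total / len(positions)) ** 2

def max_sidelobe(positions, k_max, k_exclude, n_grid=81):
    """Largest response on a square grid over [-k_max, k_max]^2, skipping |k| < k_exclude."""
    step = 2.0 * k_max / (n_grid - 1)
    best = 0.0
    for i in range(n_grid):
        for j in range(n_grid):
            kx, ky = -k_max + i * step, -k_max + j * step
            if math.hypot(kx, ky) < k_exclude:
                continue  # inside the assumed main-lobe radius
            best = max(best, array_response(positions, kx, ky))
    return best

square = [(0.0, 0.0), (1.0, 0.0), (0.0, 1.0), (1.0, 1.0)]  # hypothetical 1 km spacing
print(array_response(square, 0.0, 0.0))                    # 1.0 at zero offset
print(round(max_sidelobe(square, k_max=8.0, k_exclude=1.0), 3))
```

For this regularly spaced 2 × 2 square, the largest value outside the main lobe is close to 1. This is a grating lobe near 2π rad/km. Substituting other coordinates into `max_sidelobe` offers a quick way to compare how element count and geometry affect side-lobe levels.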

Using filled arrays of instrumentation with improved spatial filters, one can examine the correlation of the infrasonic signal across the array. In some cases, this may be an important discriminant. For example, infrasonic signals generated by ocean waves show rapid decorrelation with distance across an array (these "microbaroms" are the atmospheric analogue of microseisms, both being excited by nonlinear interaction of opposing swells in the oceans) (Donn et al., 1967). These sounds occur almost continuously during the winter months and have the potential to mask sounds from other sources (Posmentier, 1967). Because they originate from large areas of incoherent sources (either interacting oceanic gravity waves or waves impacting beaches), they decorrelate across an array much more rapidly than point sources of sound. Although microbaroms typically have periods from 3 to 6 seconds (wavelengths of 1 to 2 km), they are almost completely decorrelated over array sizes of 4 km and will surely complicate detection for arrays much smaller than this. Point sources remain correlated over arrays of 4 km size and larger.
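The microbarom wavelengths quoted above follow from λ = cT. The short check below assumes a near-surface sound speed of about 340 m/s, a value not given in the text. It also expresses a 4 km aperture as a number of wavelengths.

```python
SOUND_SPEED_KM_S = 0.34  # assumed near-surface sound speed (about 340 m/s)

def wavelength_km(period_s, c_km_s=SOUND_SPEED_KM_S):
    """Acoustic wavelength lambda = c * T, in km."""
    return c_km_s * period_s

APERTURE_KM = 4.0
for period in (3.0, 6.0):
    lam = wavelength_km(period)
    print(f"T = {period:.0f} s: wavelength {lam:.2f} km, "
          f"{APERTURE_KM / lam:.1f} wavelengths across {APERTURE_KM:.0f} km")
```

This prints wavelengths of 1.02 km and 2.04 km. A 4 km array therefore spans roughly two to four microbarom wavelengths.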

High-Frequency Sampling of Infrasound Signals

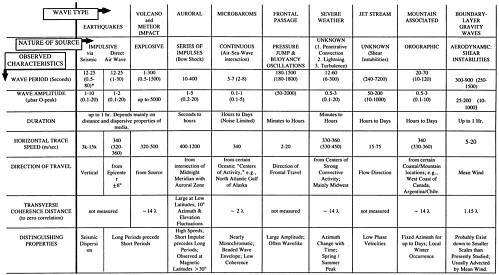

High-frequency sampling of atmospheric infrasound, between 1 and 5 Hz, provides potentially important discrimination information that will be valuable for synthesis with seismic data sets. The use of these higher frequencies is an area of continuing research. Examples of signals having significant acoustic power above 1 Hz are described below. Further details for these and other signals are presented in Table 3.1.

-

Avalanches. The infrasonic signatures of avalanches are distinctive. They usually consist of a wavetrain lasting less than 1 minute with a sharp frequency peak between 1 and 5 Hz. Large avalanches produce detectable seismic energy, and such signals could be identified by data fusion with infrasonics.

-

Quarry blasting. Quarry blasts are detected frequently. Their acoustic signatures contain higher-frequency components that can distinguish them from underground nuclear explosions.

-

Severe weather. Growing knowledge of the spectral content of infrasonic signals from severe weather suggests that there are important discriminants for identifying and distinguishing these signals from other sources. Current results concerning the relationships between infrasonic signals and storm dynamics indicate that frequencies to at least 5 Hz are important.

-

Meteors. The infrasonic signatures associated with meteors can often be distinguished from explosions based on the frequency content. Recently, a bolide was tracked over the southwestern United States using the signal above 1 Hz (ReVelle, 1995).

-

Mountain-associated waves. Airflow over mountain ranges generates infrasound, often with periods between 1 and 50 seconds; energy at frequencies above 1 Hz also occurs. The existence and character of this high-frequency energy may be valuable for identifying this class of signal, which can be detected at distances of thousands of kilometers (Larson et al., 1971; Green and Howard, 1975).

-

Volcanoes. Valuable discrimination information may also exist at higher frequencies for volcanic signals, and this should be an area for future research (Buckingham and Garces, 1996).

-

Aurora. At IMS stations at high geomagnetic latitudes, impulsive infrasonic signals with amplitudes up to 10 microbars and periods as short as 10 seconds are frequently observed on the night side of the earth. These signals are bow waves generated by the supersonic motion of large-scale auroral arcs that carry strong electrojet currents (Wilson, 1971). Because auroral bow-wave radiation is highly anisotropic, the specific arcs that generate these impulsive auroral infrasonic waves (AIW) cannot be identified by triangulation from the successive arrivals of the waves at two stations (Wilson, 1969a). However, if the source arc of an AIW passes over the infrasonic station, it produces a large, easily observed magnetic perturbation that can be used to identify the infrasonic wave as auroral in origin. Past observations in polar regions, especially by the University of Alaska, provided much useful data on these phenomena.

-

Earthquakes. Colocated seismic and acoustic arrays offer opportunities to identify seismic energy coupled from the atmosphere at frequencies greater than 1 Hz (as well as lower frequencies). Conversely, it will also be valuable to identify infrasonic signals caused by local seismic disturbances (Donn and Posmentier, 1964).

The infrasonic sample rate should be at least 20 Hz. This will ensure that the acoustic signals are completely defined and that no information important for discrimination or yield estimation is lost. This rate should be used at least during the early stages of the IMS, until it can be determined whether a lower rate can be employed. In addition, a growing number of geophysical monitoring applications are being identified that represent potentially valuable resources for other national needs (e.g., avalanche and tornado detection and warning, as well as monitoring turbulence aloft). These applications depend on data up to 5 Hz.
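The 20 Hz recommendation can be read in terms of the sampling theorem. A 20 Hz rate gives a Nyquist frequency of 10 Hz, twice the 5 Hz upper limit of interest, which leaves room for anti-alias filter roll-off. The minimal sketch below assumes ideal sampling of a pure tone. It shows that a 5 Hz component is preserved at 20 Hz but would fold to 3 Hz at a hypothetical 8 Hz rate.

```python
def nyquist_hz(sample_rate_hz):
    """Highest frequency that can be represented without aliasing."""
    return sample_rate_hz / 2.0

def apparent_frequency_hz(true_hz, sample_rate_hz):
    """Frequency at which an ideally sampled pure tone appears (folded into 0..Nyquist)."""
    f = true_hz % sample_rate_hz
    return min(f, sample_rate_hz - f)

print(nyquist_hz(20.0))                   # 10.0
print(apparent_frequency_hz(5.0, 20.0))   # 5.0, preserved
print(apparent_frequency_hz(5.0, 8.0))    # 3.0, aliased
```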

Improved Signal Processing

Array processing is required for typical infrasonic signals because large signal-to-noise ratios are the exception, occurring mainly for nearby or large geophysical events such as volcanic eruptions. Even such events, observed at long range, can have amplitudes comparable to background noise levels. On the other hand, because wind-induced pressure noise is uncorrelated from array element to array element, array processing offers a means to reduce this class of contamination. An analog cross-correlator was used for processing before the 1970s, and Young and Hoyle (1977) produced a digital version of this instrument. This technique has been applied to the processing of atmospheric gravity waves (Einaudi et al., 1989) and ocean waves.
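Cross-correlation processing can be illustrated with a generic digital sketch. The sketch computes the correlation coefficient between two sensor records over a range of lags and keeps the lag with the highest value. This is a textbook normalized cross-correlation, not a reconstruction of the Young and Hoyle (1977) instrument. The pulse shape, record length, and 7-sample delay are invented for the example.

```python
import math

def normalized_xcorr(a, b, lag):
    """Correlation coefficient between a[t] and b[t + lag] over the overlapping samples."""
    if lag >= 0:
        x, y = a[:len(a) - lag], b[lag:]
    else:
        x, y = a[-lag:], b[:len(b) + lag]
    mx, my = sum(x) / len(x), sum(y) / len(y)
    sxy = sum((u - mx) * (v - my) for u, v in zip(x, y))
    sxx = sum((u - mx) ** 2 for u in x)
    syy = sum((v - my) ** 2 for v in y)
    return sxy / math.sqrt(sxx * syy)

def best_lag(a, b, max_lag):
    """Return (lag, coefficient) maximizing the correlation for |lag| <= max_lag."""
    candidates = ((lag, normalized_xcorr(a, b, lag)) for lag in range(-max_lag, max_lag + 1))
    return max(candidates, key=lambda pair: pair[1])

# Invented example: a windowed 1 Hz pulse (20 samples/s) reaching sensor B 7 samples after sensor A.
a = [math.exp(-((t - 100) / 15.0) ** 2) * math.sin(2 * math.pi * t / 20.0) for t in range(200)]
delay = 7
b = [0.0] * delay + a[:-delay]
lag, r = best_lag(a, b, max_lag=20)
print(lag, round(r, 3))  # 7 1.0
```

Dividing the best lag by the sample rate gives the inter-sensor delay. Delays from several sensor pairs then constrain the azimuth and phase speed of the arriving wave.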

The data-adaptive Pure-State Filter (PSF) (Samson and Olson, 1981; Olson, 1983) is effective in reducing incoherent, isotropic wind noise in multivariate infrasonic array data. Non-isotropic, incoherent noise can also be eliminated from infrasonic array data by adapting the PSF characteristics to regions of the data free of signals of interest (see Olson, 1982). A wide variety of analysis techniques have been used to search for infrasonic signals in the data stream, including beam-steering, f-k analysis, and cross-correlation combined with least-squares estimators. The performance of each technique is always improved when the data are PSF-filtered before analysis (Olson et al., 1982). Increases in signal-to-noise ratio of as much as 20 dB, and increases of as much as 0.20 in the maximum inter-microphone cross-correlation coefficient, have been obtained after PSF filtering of the raw data. Further research on applying data-adaptive, frequency-domain filters to infrasonic data should be pursued to enhance the detection of low-level infrasonic signals at CTBT monitoring stations.

There are a number of methods for improving the ability to distinguish signals and to visualize results. A range of geophysical phenomena can produce false alarms, mask nuclear detonation signals, or complicate analysis. Some of these produce unique signatures that can be identified using computer algorithms. For example, avalanches contain a short-duration, fixed-frequency wavetrain (see Appendix F). One approach to improve signal processing would include the following steps: (1) produce a training set using acoustic signals from known avalanches, (2) train a neural network, and (3) perform testing to validate the network skill in identifying avalanche signals. Such research could then be expanded to all types of signals using a variety of approaches.
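The three-step approach can be sketched with the simplest possible neural network: a single logistic neuron trained by stochastic gradient descent on two features, dominant frequency and wavetrain duration. Everything here is a hypothetical stand-in. The feature scaling and learning rate are invented, and so, above all, are the synthetic "avalanche" and "other" examples. None of them is derived from recorded signals or from Appendix F.

```python
import math
import random

def sigmoid(z):
    """Numerically stable logistic function."""
    if z >= 0:
        return 1.0 / (1.0 + math.exp(-z))
    e = math.exp(z)
    return e / (1.0 + e)

def features(dominant_hz, duration_s):
    """Hypothetical feature vector, scaled to roughly unit range."""
    return [dominant_hz / 5.0, duration_s / 300.0]

def predict(weights, bias, x):
    """Probability that x is an avalanche signal under the single-neuron model."""
    return sigmoid(bias + sum(w * xi for w, xi in zip(weights, x)))

def train(samples, labels, epochs=200, lr=0.5):
    """Stochastic gradient descent on the logistic (cross-entropy) loss."""
    weights, bias = [0.0] * len(samples[0]), 0.0
    for _ in range(epochs):
        for x, y in zip(samples, labels):
            err = predict(weights, bias, x) - y  # d(loss)/d(pre-activation)
            weights = [w - lr * err * xi for w, xi in zip(weights, x)]
            bias -= lr * err
    return weights, bias

def synthetic_set(rng, n):
    """Invented labeled examples (NOT real recordings): 1 = avalanche, 0 = other."""
    xs, ys = [], []
    for _ in range(n):
        if rng.random() < 0.5:  # short wavetrain with a peak between 1 and 5 Hz
            xs.append(features(rng.uniform(1.0, 5.0), rng.uniform(10.0, 55.0)))
            ys.append(1)
        else:                   # invented low-frequency, longer-duration background
            xs.append(features(rng.uniform(0.05, 0.5), rng.uniform(5.0, 300.0)))
            ys.append(0)
    return xs, ys

rng = random.Random(42)
train_x, train_y = synthetic_set(rng, 200)  # step 1: training set
w, b = train(train_x, train_y)              # step 2: train the network
test_x, test_y = synthetic_set(rng, 100)    # step 3: validate on held-out examples
accuracy = sum((predict(w, b, x) >= 0.5) == (y == 1) for x, y in zip(test_x, test_y)) / len(test_y)
print(f"held-out accuracy: {accuracy:.2f}")
```

A real study would replace the synthetic set with features extracted from recordings of known avalanches and of other sources. Because many of those other sources, such as quarry blasts and severe weather, also have energy above 1 Hz, the two classes would likely be far less cleanly separated than in this toy example.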

Calibration and Testing

Important calibration and testing activities include the following.

-

The IMS will include sensors of a variety of designs, all intended to have the same properties in terms of operating characteristics. Because these designs will vary from country to country, methods of calibration must ensure that sensor sensitivities and frequency responses are within agreed-upon limits. Because few standards for calibrating infrasonic sensors exist, there is a need for transportable standards to perform cross-calibration between countries as well as monitoring sites.

-

Because of the nature of their design and the critical mission they perform, spatial noise reducing filters must be verified and calibrated periodically. A break in the filter hose is the equivalent of an electrical short to ground, and it is necessary to locate and fix such problems. This area of research and development will have a great impact on day-to-day operations.

-

There is a need to calibrate the array function itself at single or multiple sites. Research on controlled infrasonic sources could allow testing of array functions for detection and bearing estimation. Such sources would also provide an opportunity to evaluate array performance. Dedicated large conventional explosive tests are good candidates, but these are not possible in all areas.
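In its simplest form, cross-calibration against a transportable standard reduces to estimating the relative gain between co-located recordings. The sketch below uses a least-squares gain, g = Σ s·r / Σ r², and a hypothetical acceptance limit of ±5 percent. The test signal and the 8 percent sensitivity error are invented. A full frequency-response check would repeat the estimate in several frequency bands.

```python
import math

def relative_gain(reference, test):
    """Least-squares gain g minimizing sum((test - g * reference)**2)."""
    num = sum(r * t for r, t in zip(reference, test))
    den = sum(r * r for r in reference)
    return num / den

def within_tolerance(gain, tolerance=0.05):
    """Hypothetical acceptance rule: sensitivity within +/- tolerance of the standard."""
    return abs(gain - 1.0) <= tolerance

ref = [math.sin(2 * math.pi * 0.2 * k / 20.0) for k in range(400)]  # standard's record
dut = [1.08 * v for v in ref]  # sensor under test reading 8 percent high (invented)
g = relative_gain(ref, dut)
print(round(g, 3), within_tolerance(g))  # 1.08 False
```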

Association and Location

The process of association and location for the infrasound network can benefit from approaches established in seismology, although some modifications may be necessary. Research is needed in several areas because no global infrasound network has been operated for more than 20 years. The characteristic parameters of events of interest, such as spectral content, duration, amplitude, and correlation, require elaboration. Some of these are known from past experience, but they have not been incorporated into automated processing of infrasound data. The effects of atmospheric conditions on travel times, frequency content, duration, and bearing accuracy need to be understood. Propagation models should include the range dependence of atmospheric variables. Additional analyses of past infrasound data on nuclear explosions as a function of yield and path would be useful.
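Infrasound arrays provide a back-azimuth for each detection, and the simplest location estimate intersects the bearings from two arrays. The sketch below does this on a flat east-north plane in km. It is a minimal illustration that ignores earth curvature, wind-induced bearing deviations, and bearing uncertainty, all of which an operational locator would need to model. The station geometry is invented.

```python
import math

def triangulate(p1, az1_deg, p2, az2_deg):
    """Intersect back-azimuth rays from two arrays on a flat plane.

    Positions are (east_km, north_km); azimuths are degrees clockwise from north.
    Returns None if the bearings are (nearly) parallel or the rays meet behind a station.
    """
    d1 = (math.sin(math.radians(az1_deg)), math.cos(math.radians(az1_deg)))
    d2 = (math.sin(math.radians(az2_deg)), math.cos(math.radians(az2_deg)))
    denom = d1[0] * d2[1] - d1[1] * d2[0]  # 2-D cross product of the unit bearings
    if abs(denom) < 1e-9:
        return None
    dx, dy = p2[0] - p1[0], p2[1] - p1[1]
    t1 = (dx * d2[1] - dy * d2[0]) / denom  # distance along ray 1
    t2 = (dx * d1[1] - dy * d1[0]) / denom  # distance along ray 2
    if t1 < 0 or t2 < 0:
        return None
    return (p1[0] + t1 * d1[0], p1[1] + t1 * d1[1])

# Invented geometry: arrays 100 km apart; source actually at (50, 50) km.
print(triangulate((0.0, 0.0), 45.0, (100.0, 0.0), 315.0))
```

The call returns a point very close to (50, 50). With more than two arrays, a least-squares fit to all bearings would typically replace the exact two-ray intersection.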

Size Estimation

Past monitoring experience provides a baseline amplitude-distance relation. Improvements will come from incorporating atmospheric dynamics to account for observed signal amplitudes and, therefore, size estimates. A fresh examination of the period-yield relationship for low-yield events would contribute to improved estimates. Additional research on atmospheric signals from partially coupled events will establish a modified amplitude-distance relation as a function of coupling efficiency. However, since yield estimation is of secondary importance to CTBT verification, research in this area is not a high priority.
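For size estimation, an amplitude-distance relation is inverted to obtain yield. The sketch below assumes a generic power-law form, A = k (ηW)^a R^(−b), with a coupling factor η standing in for partial coupling. The coefficients k, a, and b are placeholders, not a published fit, and would have to come from the calibration work described above.

```python
def predicted_amplitude(yield_kt, range_km, coupling=1.0, k=1.0, a=0.5, b=1.4):
    """Hypothetical relation A = k * (coupling * W)**a * R**(-b); k, a, b are placeholders."""
    return k * (coupling * yield_kt) ** a * range_km ** (-b)

def yield_from_amplitude(amplitude, range_km, coupling=1.0, k=1.0, a=0.5, b=1.4):
    """Invert the same relation: W = (A * R**b / k)**(1/a) / coupling."""
    return (amplitude * range_km ** b / k) ** (1.0 / a) / coupling

amp = predicted_amplitude(2.0, 1000.0)
print(round(yield_from_amplitude(amp, 1000.0), 6))                # 2.0
# The same amplitude read as a partially coupled event (coupling = 0.1) implies a larger yield.
print(round(yield_from_amplitude(amp, 1000.0, coupling=0.1), 6))  # 20.0
```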

Source Identification

Infrasonic data taken at long range from an event will not differentiate a chemical explosion from a nuclear explosion. Viewed at long range, both are essentially point sources of impulsive energy release. Thus, there is a need to define unique explosion features. In parallel, the characteristics of natural and human-induced sources must be determined for discrimination purposes. These "background" sources have some known characteristics, but not all sites will see the same background. As sites become operational, their backgrounds will have to be cataloged. This will allow the rapid dismissal of some sources from further analysis.
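Once a station's backgrounds are cataloged, new detections can be screened against the catalog automatically. The sketch below dismisses a detection when both its azimuth and its peak frequency match a cataloged source within tolerance. The catalog fields, the 5-degree tolerance, and the example sources are hypothetical.

```python
def angular_difference_deg(a, b):
    """Smallest absolute difference between two azimuths, in degrees (0 to 180)."""
    d = abs(a - b) % 360.0
    return min(d, 360.0 - d)

def screen_detections(detections, catalog, tol_deg=5.0):
    """Split detections into (dismissed, retained) using a catalog of known background sources."""
    dismissed, retained = [], []
    for det in detections:
        match = None
        for src in catalog:
            if (angular_difference_deg(det["azimuth"], src["azimuth"]) <= tol_deg
                    and src["fmin"] <= det["peak_hz"] <= src["fmax"]):
                match = src
                break
        if match is None:
            retained.append(det)   # unexplained: pass on for analyst review
        else:
            dismissed.append((det, match["name"]))
    return dismissed, retained

# Hypothetical station catalog and detections.
catalog = [
    {"name": "microbaroms", "azimuth": 200.0, "fmin": 0.1, "fmax": 0.3},
    {"name": "quarry", "azimuth": 75.0, "fmin": 1.0, "fmax": 10.0},
]
detections = [
    {"azimuth": 203.0, "peak_hz": 0.2},
    {"azimuth": 74.0, "peak_hz": 3.0},
    {"azimuth": 120.0, "peak_hz": 2.0},
]
dismissed, retained = screen_detections(detections, catalog)
print([name for _, name in dismissed], retained)
```

Here the first two detections are dismissed as microbaroms and quarry activity, and only the third is retained for further analysis.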

Some past work and current research have defined signal characteristics of natural and human-induced sources, but much remains to be done. A review of past U.S. and French data from nuclear explosions in the atmosphere may provide a unique explosion "voiceprint." Focused field experiments or extended monitoring to create archival data suitable for source characterization may lead to ways to distinguish nuclear explosion signals from natural or man-made sources. These databases could also be used to examine the synergy between seismic and infrasonic technologies in studying evasion scenarios in which a chemical explosion is used to mask a nuclear explosion. These studies should be coupled with analytical or numerical investigations that predict the spectral power produced and extend the results to explore the effects of changes in source strength or size. Once such knowledge bases exist, they can be applied to eliminate such sources as potential false alarms. There should be valuable intersections between the nuclear monitoring and geophysical communities. Resources worth drawing on include past meteor, volcano, and earthquake detections, as well as documented signals from missile launches. A recent compilation of three years of array data from Antarctica and Fairbanks, Alaska (Wilson et al., 1996) is an example of a database that will be valuable for these purposes.

Attribution

Locations determined by infrasound are unlikely to be sufficiently accurate to do an immediate sample collection, but they may help guide a wide-area search in the open oceans and focus the processing of signals detected by other technologies. Attribution by infrasound would be possible in cases where the location and error estimates lie totally within a country's borders. In broad ocean areas, attribution will not be possible solely through the use of infrasound data (as is the case for seismology and hydroacoustics).
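Whether a location and its error estimate lie entirely within one country's borders can be checked geometrically. The sketch below samples points on an error ellipse and tests each one with a ray-casting point-in-polygon routine. It assumes flat km coordinates and a simple (non-self-intersecting) polygon. Because it tests only sampled boundary points, it is an approximation for irregular borders. The square "country" and the ellipse parameters are invented.

```python
import math

def point_in_polygon(x, y, poly):
    """Ray-casting test: count edge crossings of a ray from (x, y) toward +x."""
    inside = False
    n = len(poly)
    for i in range(n):
        x1, y1 = poly[i]
        x2, y2 = poly[(i + 1) % n]
        if (y1 > y) != (y2 > y):  # edge straddles the horizontal line through y
            x_cross = x1 + (y - y1) * (x2 - x1) / (y2 - y1)
            if x < x_cross:
                inside = not inside
    return inside

def ellipse_points(cx, cy, semi_major, semi_minor, strike_deg, n=72):
    """Boundary points of an error ellipse whose major axis is strike_deg clockwise from north."""
    s = math.radians(strike_deg)
    pts = []
    for k in range(n):
        t = 2.0 * math.pi * k / n
        u, v = semi_major * math.cos(t), semi_minor * math.sin(t)  # along / across major axis
        pts.append((cx + u * math.sin(s) + v * math.cos(s),
                    cy + u * math.cos(s) - v * math.sin(s)))
    return pts

def ellipse_within(poly, cx, cy, semi_major, semi_minor, strike_deg):
    """Approximate test that the whole error ellipse lies inside the polygon."""
    return all(point_in_polygon(x, y, poly)
               for x, y in ellipse_points(cx, cy, semi_major, semi_minor, strike_deg))

country = [(0.0, 0.0), (100.0, 0.0), (100.0, 100.0), (0.0, 100.0)]  # invented 100 km square
print(ellipse_within(country, 50.0, 50.0, 20.0, 10.0, 0.0))   # True
print(ellipse_within(country, 90.0, 50.0, 20.0, 10.0, 90.0))  # False: extends past x = 100 km
```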

Summary of Research Priorities Associated with Infrasound Monitoring

In summary, a prioritized list of research topics in infrasonics that would enhance CTBT monitoring capabilities includes:

-

Characterizing the global infrasound background using the new IMS network data.

-

Enhancing the capability to locate events using infrasound data.

-

Improving the design of sensors and arrays to reduce noise.

-

Analyzing signals from historical monitoring efforts.

3.4 RADIONUCLIDES