
Air Traffic Control Modeling

KATHRYN T. HEIMERMAN

The MITRE Corporation

McLean, Virginia

Abstract

This paper describes how the U.S. national airspace system (NAS) operates today and discusses anticipated changes. Examples are given of recent modeling efforts. Models help NAS stakeholders make better-informed decisions about how to safely implement agreed-upon goals for the next generation of air traffic control equipment and procedures. The models sometimes suggest to decision makers what the goals ought to be. Six fundamental modeling concepts that lie on the modeling frontier and will influence its future directions are discussed. These concepts are suggestions for future research and areas where interdisciplinary contributions would expedite advances at the frontier.

Introduction

The term modeling spans the spectrum from simple relationships to highly complicated, parallel, fast-time, constructive, "human-in-the-loop," and discrete-event computer simulations. This presentation emphasizes the frontier in modeling. Toward that end, the most exciting work in modeling and simulation is in the development of fundamental modeling concepts. This paper begins with a summary of how the national airspace system (NAS) operates today and then covers anticipated changes and gives examples of models. Then, concepts at the modeling frontier that will guide its future directions and opportunities for interdisciplinary research are discussed.

U.S. National Airspace System Operations

Both analytical and computer models are critical tools for researchers of the NAS. The reason is that if we wish to conduct tests or deploy new equipment or procedures, we cannot simply halt NAS operations. The NAS operates continuously. Nor can we simply plug in advanced prototype systems for testing during NAS operations because human lives would be at stake should anything go wrong. So we use models.

NAS airspace spans all U.S. territories and beyond the continental shelf. The NAS includes all air traffic control (ATC) and traffic management facilities and personnel as well as equipment used for communication, navigation, and surveillance, such as VHF/UHF voice transmitters and receivers, navigation beacons, weather and windshear radars, and instrument landing equipment. The Federal Aviation Administration (FAA) procures, operates, and maintains this equipment. Besides the FAA there are the system users who generate flights, including scheduled passenger and cargo carriers, business jets, the military, and general aviation (recreational and experimental aircraft). The NAS is the largest command, control, and computer system in the world.

On a typical day in the United States, over 1.5 million people fly safely aboard some 130,000 flights (Federal Aviation Administration, 1996). The United States maintains a sterling aviation safety record. In economic terms the U.S. civil aviation industry contributes about 5 percent of the annual U.S. gross domestic product, so there are also economic incentives to maintaining a safe and healthy civil aviation industry (Wilbur Smith Associates, 1995).

The FAA assures safety via certification of the people, procedures, and equipment that operate and are maintained in the civilian ATC system, and by regulation of the aviation industry. For example, the FAA inspects and certifies equipment airworthiness and skill levels of flight and maintenance crews. Regulations require that while flying under visual flight rules pilots must "see and avoid" to ensure safe separation. Safe separation means ensuring three-dimensional distance separation between all aircraft at all times.

FAA regulations similarly require that, while flying under instrument flight rules (e.g., passenger flights), pilots must adhere to an FAA-cleared flight plan. Air carrier dispatchers must maintain positive operational control of flights. Positive operational control means uninterrupted origin-to-destination surveillance, communication, and navigation services for every flight. Meanwhile, an FAA ATC specialist ensures safe separation under instrument flight rules.

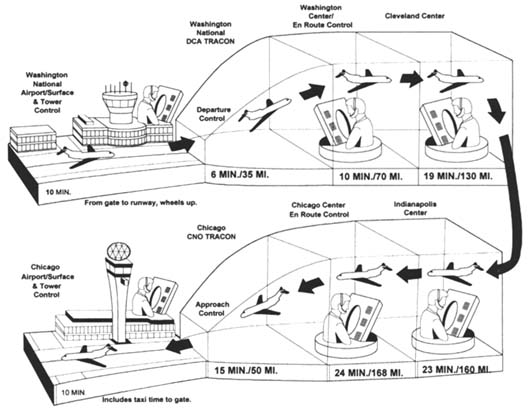

Starting from gate pushback, the phases of flight include (see Figure 1) taxi, departure, en route transit, approach, landing, taxi, and arrival at the gate (Nolan, 1994). Correspondingly, ATC control of a flight is passed through a series of controllers: ground, terminal, departure, en route, approach, terminal, and ground. While en route, a flight passes through imaginary chunks of airspace and is monitored and controlled by facilities called Air Route Traffic Control Centers or simply "centers."

One additional control facility is the ATC System Command Center. There, traffic management specialists monitor and manipulate traffic flows nationwide so that traffic demand does not overwhelm system capacity. The command center coordinates among FAA centers and air carrier dispatchers to reroute traffic around pockets of bad weather or disrupted airports. In times of extreme congestion or service disruption, specialists are authorized to impose traffic flow initiatives such as ground delay, ground stop, or miles-in-trail (instructing pilots to maintain a particular distance between leading and trailing aircraft).

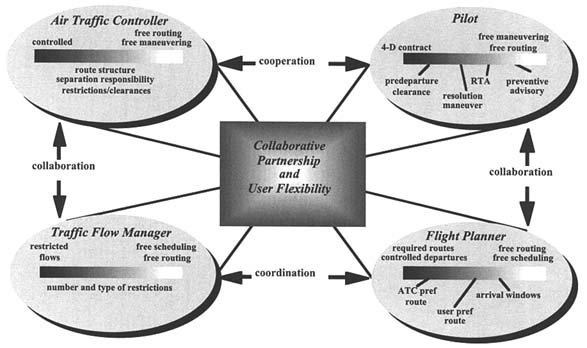

The system that has been described is how the NAS currently operates. However, economic and safety factors are driving change. Essentially, the anticipated changes are contained in the "Free Flight" concept (see Figure 2). Under Free Flight, today's system is expected to evolve toward one with distributed decision making, increased information flows, and shared responsibility. Free Flight's greater planning and trajectory flexibility is expected to reap economic benefits. To illustrate, consider that scheduled air carriers' collective profits were about $2.5 billion in 1996 (Air Transport Association, 1997). Under Free Flight, preliminary research conducted by MITRE and others indicates that scheduled air carriers may reap cost savings on the order of $1 billion annually from known, near-term communication, navigation, surveillance, and air traffic management enhancements.

Concern for safety is also driving change. The highest levels of the federal government have articulated a goal to improve safety. The reason why can be shown mathematically. U.S. passenger traffic is forecast to grow by 4 percent per year well into the next millennium (Federal Aviation Administration, 1995; Boeing Commercial Airplane Group Marketing, 1996). In addition, the scheduled air carrier accident rate has averaged 42 accidents per year over the past five years, including both fatal and non-fatal accidents (Federal Aviation Administration, 1997). Compounding the 4 percent annual traffic growth rate and applying the annual accident rate yields a doubling of the annual number of accidents by the year 2014. The only recourse to that unacceptable safety level is to decrease the accident rate by changing the NAS.
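The doubling claim can be checked with a few lines of arithmetic. The sketch below assumes that accidents per flight stay constant, so the annual accident count scales with traffic, and it assumes 1996 as the base year; the function names are illustrative only.

```python
import math

def years_to_double(annual_growth_rate: float) -> float:
    """Years needed for a quantity compounding at a fixed annual rate to double."""
    return math.log(2) / math.log(1 + annual_growth_rate)

def projected_accidents(base_per_year: float, annual_growth_rate: float, years: int) -> float:
    """Annual accident count after `years`, assuming accidents scale with traffic."""
    return base_per_year * (1 + annual_growth_rate) ** years

# 4 percent growth and 42 accidents per year are the figures quoted in the text.
doubling_time = years_to_double(0.04)             # a little under 18 years
accidents_2014 = projected_accidents(42, 0.04, 18)  # 18 years after the assumed 1996 base
print(f"doubling time: {doubling_time:.1f} years")
print(f"projected accidents per year in 2014: {accidents_2014:.0f}")
```

Under these assumptions the doubling time is a little under 18 years, which places the doubling at about 2014 when counted from the mid-1990s.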

Modeling

Since we cannot simply halt NAS operations, analytical and computer models are critical in developing, testing, and evaluating equipment and procedures that show promise for bringing future NAS goals to fruition. For example, security improvements will come from better weapons detection (Makky, 1997) and passenger screening (National Materials Advisory Board, 1996). Equipment, materials, and procedures to accomplish this are often modeled by Monte Carlo and discrete-event computer simulations. Experimental designs implemented using these models reveal, for example, ways to improve detection error rates.

MITRE has in-house, human-in-the-loop, real-time cockpit and ATC console simulators that can even simulate weather conditions. Field operational conditions are set up, and air traffic controllers and/or certificated pilots are asked to participate. Sometimes simulations run under controlled experimental conditions, but they often run under exploratory research conditions.

One very well recognized large-scale model developed at MITRE is the Detailed Policy Assessment Tool (DPAT) (MITRE, 1997). DPAT is a constructive, discrete-event, fast-time computer simulation distributed over four Sun Microsystems processors with simulation time synchronized using the Georgia Tech Time Warp product. In about 4 minutes, DPAT can simulate more than 60,000 flights among more than 500 U.S. or international airports. DPAT simulates each flight, computing trajectory, itinerary, and route, as well as runway utilization, system delay and throughput, and other statistics.

Our modeling work has spanned a spectrum of logical paradigms, including deterministic and stochastic rules, fuzzy logic, genetic algorithms, simulated annealing, and mathematical programming. The remainder of this section details those efforts.

In one case we designed algorithms to model stakeholder responses to NAS traffic flow disruptions (Heimerman, 1996). Disruptions could be caused by severe weather, unanticipated airport closure, or other reasons. Responses are decisions to delay, divert, or reroute flights. The algorithms more realistically simulate runway arrival and departure queues as NAS users and command center specialists manage traffic demand. The model also illuminates critical information flows on which decisions are based.

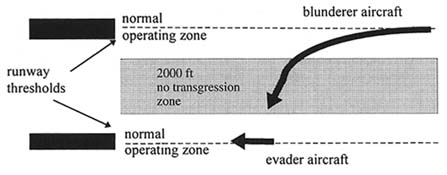

We also constructed a fast-time Monte Carlo model called the Simultaneous Instrument Approach Model. It simulates simultaneous approaches to parallel runways, where there is some probability that a blunder event could occur (see Figure 3). If the blunderer deviates enough, ATC will instruct the evader aircraft to perform a breakout maneuver and go around for a second approach. We designed and verified fuzzy logic algorithms representing the controllers' selection of one of several possible breakout maneuvers.
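The structure of such a Monte Carlo experiment can be sketched in a few lines. This is not the Simultaneous Instrument Approach Model itself: the blunder probability, the exponential deviation distribution, the breakout threshold, and all names below are hypothetical placeholders, and the fuzzy logic selection of a breakout maneuver is not represented.

```python
import random

def simulate_breakout_rate(n_approaches: int,
                           p_blunder: float,
                           mean_deviation_ft: float,
                           breakout_threshold_ft: float,
                           seed: int = 0) -> float:
    """Estimate the fraction of approaches that end in a breakout instruction.

    Each approach blunders with probability p_blunder. A blunder's lateral
    deviation is drawn from an exponential distribution (an assumption made
    only for illustration). ATC orders a breakout when the deviation exceeds
    the threshold.
    """
    rng = random.Random(seed)  # seeded so that runs are repeatable
    breakouts = 0
    for _ in range(n_approaches):
        if rng.random() < p_blunder:
            deviation = rng.expovariate(1.0 / mean_deviation_ft)
            if deviation > breakout_threshold_ft:
                breakouts += 1
    return breakouts / n_approaches

# Hypothetical inputs: 1 percent blunder probability, 500 ft mean deviation.
rate = simulate_breakout_rate(100_000, 0.01, 500.0, 500.0)
print(f"estimated breakout rate: {rate:.4f}")
```

With these placeholder inputs the estimate should sit near 0.01 × e⁻¹, which gives a quick sanity check on the sampling logic before more realistic distributions are substituted.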

Current modeling effort explores how air carrier dispatchers might respond if given access to information as envisioned under Free Flight. A secure digital data exchange computer network will allow near-real-time exchange of data that have not been shared before. Such a system ought to enable decision makers to shift their attention from mere information exchange to collaborative decision making.

FIGURE 3 Blunder event during simultaneous approaches.

Source: The MITRE Corporation.

We have developed two prototype models to explore the implications of such a hypothesis. One is a linear program that shows the economic value of information sharing. It shows that, if dispatchers are given an accurate, timely report of reduced arrival capacity at an airport, they can dispatch more economically prudent arrival streams. A second model is a set of coupled difference equations that represent an iterative competitive marketing game among air carriers. We used it to identify conditions under which one competitor dominates. A controller entity assumes strategic roles such as arbiter, referee, enforcer, or negotiator. The model exploits principles from complex adaptive systems theory and nonlinear dynamics.

To support these "data greedy" models, parameter estimates are obtained by conducting field tests. One such field test had as its purpose to examine whether datalinks that passed real-time arrival sequence information from the FAA to scheduled air carriers would improve dispatcher situational awareness, operational cost effectiveness, or collaborative problem resolution and decision making. Versions of this field test are currently under way.

Frontier and Interdisciplinary Opportunities

Six fundamental modeling concepts are driving the modeling frontier. These concepts are shaped in aviation applications by an uncompromising regard for safety. The safety constraint translates into a requirement for model credibility, which arises from the processes of verification (assuring that computer programs encode a model's conceptual design) and validation (assuring that the model reflects the real system). As one learns in graduate school, (1) a model is a simplified abstraction of the most salient features of a system and (2) the modeling process invokes a blend of mathematical relationships and art (Banks and Carson, 1984).

- Concept 1: The first fundamental concept driving the frontier is that statements (1) and (2) are coupled to the extent that the art of modeling manifests itself in the ways that different people perceive and define the terms salient features and real system. This fact wreaks havoc on the model validation process, a research area where more work should be done.

The difficulty occurs when two experienced and knowledgeable individuals cannot agree on a single description of the system to be modeled. As a consequence, there is difficulty determining not only when the modeling effort is finished, but also which of the inconsistent perceptions of reality to use for comparison during the validation process. Heated arguments can ensue, particularly where safety is involved. These matters are exacerbated by the fact that the English words modelers use to try to resolve their differences are vague relative to the absolute requirement for unambiguous computer instructions.

- Concept 2: Model validation may be additionally confounded when the system of interest is not a real system. For example, we might build a model intended to alert us to never-before-imagined system states, behaviors, processes, or boundaries. So if the model is not supposed to reflect reality, can we know when we have achieved success in modeling? Model validation is not well understood. Interested readers should see Oreskes et al. (1994).

- Concept 3: Though not required by statements (1) and (2), most students are taught a host of modeling techniques that first decompose a problem into component parts, solve those parts, and then aggregate the solutions as a solution to the larger problem. This approach is called "reductionism" and results in a pyramid of component models. This "pyramid scheme" approach is popular because it is the only one known to many modelers and because object structures in modern object-oriented programming correlate well with a system's component parts.

In fact, however, reductionism is not a productive approach to modeling those systems that are not simply the sum of their parts. The reason is that examining the components in isolation does not capture their dependencies or interactions over time. For example, in a hierarchy of descriptive variables pertinent to some system, the measurement scales at the top and bottom of the hierarchy are often quite different. Such systems are said to exhibit a nonhomogeneous resolution scale throughout their components. As a consequence, reductionist approaches do not apply. More research to supplement reductionist techniques would be helpful.

- Concept 4: Another modeling approach that needs to be examined arises from the fact that throughout the history of mathematical modeling we have proactively conserved computational time in order to generate results in a timely manner. For example, we use look-up tables of formula values or estimate functional values by a truncated Taylor series expansion. We code techniques like these into our models. However, where beneficial and in light of today's high computer speeds and memory capacities, we should reappraise previously abandoned techniques such as exhaustive searches of variable spaces and response surfaces.

- Concept 5: Modelers, as a group, are very good at writing computer code that describes physical phenomena such as six-degree-of-freedom projectile trajectories. Increasingly important, however, are models of cognitive, social, and behavioral phenomena and ways that individual behaviors cause flux in trends and paths traversed by humans collectively. Examples include dynamics such as the public good, group performance, economic influences on decision making, and consequences of political struggles.

- Concept 6: Related to the preceding point is the concept of modeling how individual people think, process information, and identify the need to reevaluate options or change behavior. Even with results from artificial intelligence, little is known about the links between a decision and the information on which a decision was based. For example, we do not understand "selective attention" in which decision makers turn their attention at points in time to the data that they wish to focus on and discard the remaining data. Social scientists could help us understand human reasoning, but that would not be enough. The question of how to encode these processes in computer programs is an additional matter that needs investigation.
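The trade-off raised in Concept 4 can be illustrated with a short sketch. It compares a truncated Taylor series with direct evaluation and then performs an exhaustive grid search of a one-variable response surface. The choice of sin(x), the quadratic test surface, and the function names are illustrative assumptions and are not drawn from any model described in this paper.

```python
import math

def sin_taylor(x: float, terms: int = 3) -> float:
    """Truncated Taylor series for sin(x) about 0: x - x^3/3! + x^5/5! - ..."""
    return sum((-1) ** k * x ** (2 * k + 1) / math.factorial(2 * k + 1)
               for k in range(terms))

def exhaustive_minimum(f, lo: float, hi: float, steps: int):
    """Evaluate f at every point of a uniform grid; return (x, f(x)) at the minimum."""
    best_x, best_val = lo, f(lo)
    for i in range(1, steps + 1):
        x = lo + (hi - lo) * i / steps
        val = f(x)
        if val < best_val:
            best_x, best_val = x, val
    return best_x, best_val

# The truncation is accurate near the expansion point and poor far from it.
for x in (0.5, 3.0):
    print(x, abs(sin_taylor(x) - math.sin(x)))

# Brute-force search of a simple response surface over [-2, 2].
print(exhaustive_minimum(lambda x: (x - 1.0) ** 2, -2.0, 2.0, 400))
```

The shortcut saves arithmetic but carries an error that grows away from the expansion point, whereas the exhaustive search costs one function evaluation per grid point and needs no approximation of the surface.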

Summary

Research providing a richer theory about these six modeling concepts would expedite advances at the frontier. Clearly, contributions could come from other disciplines. Meanwhile, the newest ATC models help NAS stakeholders make better-informed decisions about how to safely implement agreed-upon goals for the next generation of air traffic control equipment and procedures. The models are even helping us frame what the goals ought to be. This is an exciting and dynamic time for the modeling and simulation community and for civil aviation.

References

Air Transport Association. 1997. Annual Report. Washington, D.C.: Air Transport Association.

Banks, J., and J. S. Carson II. 1984. Discrete-Event System Simulation. Englewood Cliffs, N.J.: Prentice-Hall.

Boeing Commercial Airplane Group Marketing. 1996. 1996 Current Market Outlook, World Market Demand and Airplane Supply Requirements. Seattle, Wash.: The Boeing Co.

Federal Aviation Administration. 1995. FAA Aviation Forecasts, Fiscal Years 1995–2006. #FAA-APO-95-1. Washington, D.C.: U.S. Department of Transportation.

Federal Aviation Administration. 1996. Administrator's Fact Book. Washington, D.C.: Federal Aviation Administration.

Federal Aviation Administration. 1997. Aviation Safety Statistical Handbook, Vol. 5, No. 6. Washington, D.C.: Federal Aviation Administration, Safety Data Services Division.

Heimerman, K. T. 1996. Algorithms for Modeling Stakeholder Responses to NAS Disruptions. MTR96W56. McLean, Va.: The MITRE Corp.

Makky, W. H., ed. 1997. Proceedings of the Second Explosives Detection Technology Symposium and Aviation Security Technology Conference. Washington, D.C.: Federal Aviation Administration, Aviation Security R&D.

MITRE. 1997. Detailed Policy Assessment Tool (DPAT), 1997. MP97W92. McLean, Va.: The MITRE Corp.

National Materials Advisory Board. 1996. Airline Passenger Security Screening—New Technologies and Implementation Issues. Publication #NMAB482-1. Washington, D.C.: National Academy Press.

Nolan, M. S. 1994. Fundamentals of Air Traffic Control. Belmont, Calif.: Wadsworth.

Oreskes, N., et al. 1994. Verification, validation, and confirmation of numerical models in the earth sciences. Science 263(5147):641–646.

Wilbur Smith Associates. 1995. The Economic Impact of Civil Aviation on the U.S. Economy - Update 93. Prepared for the Federal Aviation Administration and Lockheed Martin. Washington, D.C.

Quadrupole Resonance Explosive Detection Systems

TIMOTHY RAYNER

Quantum Magnetics

San Diego, California

Quadrupole resonance (QR) has been demonstrated to be an effective technique for detecting the presence of energetic materials hidden in baggage and cargo. Quantum Magnetics has developed a series of explosive detection devices (EDDs) based on QR technology. The QSCAN1000 can detect the presence of two main high-explosive molecules, RDX and PETN. These two materials are the main explosive constituents of many military plastic explosives. C4 and Semtex H contain RDX; Detasheet and Semtex A and H contain PETN.

Quadrupole Resonance Analysis: Background

QR is a magnetic resonance technology that occurs as a result of the inherent electromagnetic properties of the atomic nuclei in crystalline and amorphous solids. Nuclei with nonspherical electric charge distributions possess electric quadrupole moments. QR originates from the interaction of this inherent electric quadrupole moment with the gradient of the electric field in the vicinity.

In classical terms, when an atomic quadrupolar nucleus experiences an electric field gradient from the surrounding atomic environment, different parts of the nucleus experience different electric fields. Therefore, the electric quadrupole experiences a torque that causes it to precess about the electric field gradient. This precessional motion carries with it the nuclear magnetic moment, so that a rotating magnetic field in phase with the precession can change the orientation of the nucleus with respect to the electric field gradient. After such a radio frequency magnetic field pulse, the precessing magnetization produces a detectable oscillating magnetic signal. For explosives and drug detection applications, there are three significant quadrupolar nuclear isotopes: nitrogen-14 (14N), chlorine-35 (35Cl), and chlorine-37 (37Cl).

The most significant distinguishing characteristics of a QR response are the precessional (or transition) frequencies and the relaxation times. Relaxation times are measures of the rates at which nuclei return to equilibrium after being disturbed by a radio frequency (RF) field. The types of RF pulse sequences that are appropriate for detecting QR signals are determined by the values of these relaxation times.

System Description

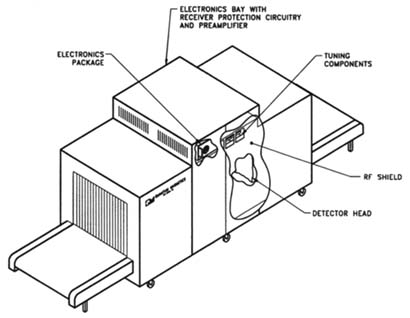

The QR scanner described here is referred to as the QSCAN1000, an EDD suitable for inspecting items up to 0.8 m (height) x 1.0 m (width) x 1.0 m (length) in size. The scanner is a conveyorized open-ended system with no encumbrances to impede the flow of baggage and is centered around a number of key components:

- A detection head that consists of a large RF coil that can be tuned over a frequency range containing the QR frequencies of plastic, sheet, and military-grade explosive compounds. RF coil tuning is completely automatic and adjusts for any baggage situation.

- An electro-magnetic interference (EMI) shield to protect the RF coil from external interference. Open access to the coil is afforded by shield tunnels that allow the scanner to operate without any door or cover while affording adequate EMI shielding.

- An electronics package containing an IBM-compatible PC chassis with four plug-in cards (the RF pulse programmer, the RF card, the analog-to-digital conversion card, and a general controller card) that responds to changes caused by different items in the detector head, an RF driver amplifier, control hardware, and system control software.

For explosive detection applications, a pulse train is applied at or near the QR frequency. The response to this train of RF pulses is captured and analyzed for the presence of the characteristic QR signal from an explosive. Two different scans are applied at different QR frequencies for two different explosives, RDX and PETN. The total scan takes less than 8 seconds to perform, equating to a throughput rate of 450 bags per hour. The scanner is shown in Figure 1.
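The throughput figure follows directly from the scan time. The sketch below assumes that bags are scanned one at a time with no additional conveyor or handling delay between scans; the function name is illustrative.

```python
def bags_per_hour(seconds_per_bag: float) -> float:
    """Throughput implied by a fixed per-bag scan time, with no gaps between bags."""
    return 3600.0 / seconds_per_bag

# An 8-second scan corresponds to 450 bags per hour; a shorter scan gives more.
print(bags_per_hour(8.0))
```

Because the scan takes less than 8 seconds, 450 bags per hour is a lower bound under this assumption.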

System Performance

At present, the QSCAN1000 scans for the presence of RDX and PETN. The major factor that dictates detection performance is the signal-to-noise

Figure 1 The QSCAN1000 EDD, showing the system's key components.

Source: Quantum Magnetics.

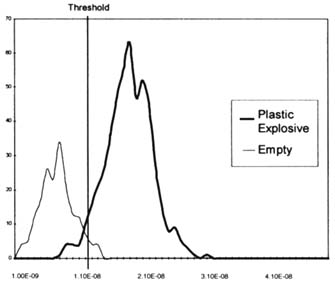

ratio (SNR) and the false alarm rate. SNR is related to a number of factors, including the efficiency of generating a QR signal and spurious noise in the system. As an example, Figure 2 shows histograms of signal amplitudes from a series of scans, with the QSCAN1000 containing explosives and not containing explosives. The figure clearly shows how the signal amplitude increases as explosives are added. Detection is based on the signal amplitude exceeding a predetermined threshold amplitude (shown as the dark line).

The scan results also can be displayed as a receiver operating characteristic (ROC) curve. The ROC curve is a convenient way to display the tradeoff between the probability of false alarm, P(FA), and the probability of detection, P(D). Figure 3 presents the same data shown in Figure 2, this time in the form of an ROC curve.
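The relationship between the amplitude histograms and the ROC curve can be made concrete by sweeping the detection threshold. In the sketch below the amplitude values are invented for illustration and are not QSCAN1000 measurements; the function name is likewise an assumption.

```python
def roc_points(no_explosive_amplitudes, explosive_amplitudes, thresholds):
    """For each threshold, return (threshold, P(FA), P(D)).

    P(FA) is the fraction of explosive-free scans whose amplitude exceeds the
    threshold; P(D) is the fraction of scans with explosives that exceed it.
    """
    points = []
    for t in thresholds:
        p_fa = sum(a > t for a in no_explosive_amplitudes) / len(no_explosive_amplitudes)
        p_d = sum(a > t for a in explosive_amplitudes) / len(explosive_amplitudes)
        points.append((t, p_fa, p_d))
    return points

# Hypothetical signal amplitudes in arbitrary units.
clean_bags = [0.1, 0.2, 0.3, 0.4, 0.5]
threat_bags = [0.4, 0.6, 0.7, 0.8, 0.9]

for t, p_fa, p_d in roc_points(clean_bags, threat_bags, [0.0, 0.35, 0.55, 1.0]):
    print(f"threshold={t:.2f}  P(FA)={p_fa:.1f}  P(D)={p_d:.1f}")
```

Raising the threshold lowers both probabilities, which is the trade-off that the ROC curve displays; the operating point is fixed by the single threshold chosen for detection.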

The dominating factor that affects the false alarm rate for the QSCAN1000 EDD is the phenomenon of magnetostrictive ringing. Certain types of metallic items when scanned can generate a QR-like signal.

Field Trials

The QSCAN1000 has been field tested at a number of locations. The first test was at Los Angeles International Airport's Terminal 7, replacing a standard in-line x-ray system in United Airlines' international baggage-handling line. This trial was the first test ever of a QR explosives screening system in an actual airport environment. During the 1-week trial, a total of 4,000 bags was scanned, at a throughput rate of approximately 600 bags per hour. Further tests were done in the United Kingdom at Manchester and Heathrow international airports. In those tests, over 6,000 bags were scanned, at a throughput rate of approximately 300 bags per hour.

In April 1996 the QSCAN1000 was tested at the Federal Aviation Administration's William J. Hughes Technical Center in Atlantic City. The QSCAN1000 was tested for detection of live explosives of various kinds and in different configurations. The tests were very successful in showing QR to be an effective screening system.

Future Work

As the development of QR technology has continued, Quantum Magnetics has embarked on a very wide ranging development schedule. The schedule includes both the technical development of QR and its introduction to different areas of airline security, such as the screening of cabin baggage.

Quantum Magnetics is currently engaged in a number of key areas of development with respect to improving the performance of QR-based explosive detection devices for aviation security applications. The detection of plastic explosives will be increased by (1) optimizing the pulse sequences used to detect the signal; (2) utilizing novel multiple frequency detection schemes to increase detection in the presence of magnetostrictive ringing; (3) investigating complex pulse shaping to increase detection bandwidth in order to mitigate deficiencies in detection caused by temperature shifts encountered in airline baggage; and (4) improving throughput with the implementation of an inductive tuning method. The program also will investigate the detection of two additional explosive materials—TNT and ammonium nitrate—and will study other related issues concerned with EMI shielding and the detection of shielded volumes.

Acknowledgments

This work was supported by the Federal Aviation Administration. I appreciate the help of Allen N. Garroway of the Naval Research Laboratory.

The Role of Nondestructive Evaluation in Life-Cycle Management

HARRY E. MARTZ

Lawrence Livermore National Laboratory

Livermore, California

Introduction

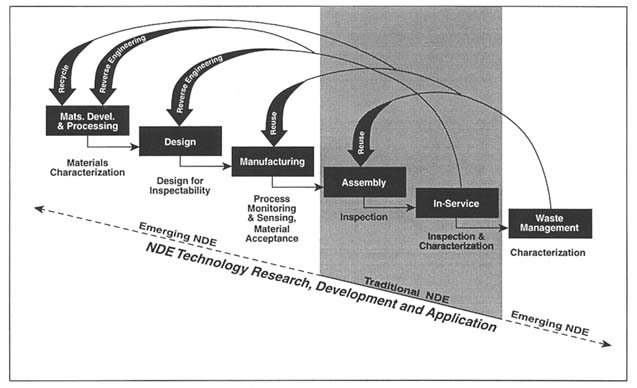

Nondestructive evaluation (NDE) is a suite of techniques that allows visualization of the external and internal structures of an object without damaging it. For example, a very common NDE technique that most people have experienced is the use of dental x-rays for cavity detection. NDE plays an even larger role in nonmedical applications. It is being integrated into the entire product life cycle (Figure 1).

Traditionally, NDE has been viewed only as an end-product inspection tool. The traditional view does not take advantage of the full economic benefit that NDE provides. NDE can ensure or improve safety, shorten the time between product conception and production, and help reduce waste. Examples include new material and process development; raw materials acceptance; process monitoring and control; finished product and in-service inspection; and retirement for cause, disposal, and reuse (Goebbels, 1994). Therefore, NDE is increasingly being used throughout the life-cycle management of products (Cordell, 1977; see also notes 1, 2, and 3). Presented here is an overview of some NDE methods and life-cycle applications of NDE.

Overview of NDE Methods

NDE draws on the expertise of a multidisciplinary team and a broad range of technologies. The Lawrence Livermore National Laboratory's (LLNL) NDE organization is an example of such a multidisciplinary team. LLNL has a team that consists of mechanical and electrical engineers, material and computer scientists, physicists, and chemists. The broad range of technologies used at LLNL includes electromagnetic (e.g., visual, x-ray radiation, infrared, microwave) and acoustic (e.g., ultrasonics and acoustic emission) measurements (see note 4). The research, development, and application of these technologies require experimental, theoretical, modeling, and signal and image processing capabilities. NDE is successful when all of these technologies and disciplines are integrated while working closely with the customer to determine the most appropriate technique(s).

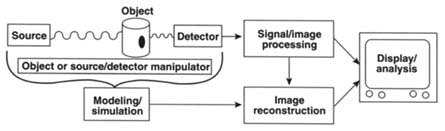

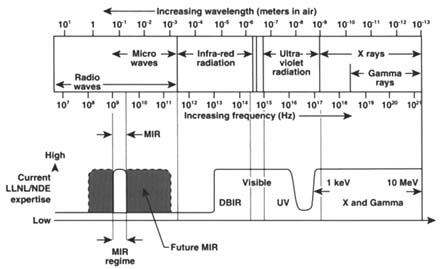

NDE techniques have a core of common components: a source (radiant energy); a detector to acquire transmitted or scattered radiation from the object; a manipulator/stage to translate, elevate, and/or rotate the object or source/detector synchronously; and a computer for control, data acquisition, processing, and analysis (Figure 2). A broad range of sources, detectors, manipulators, and computers have been used in NDE. Figure 3 provides some examples of the different electromagnetic sources used in NDE at LLNL. The next section briefly describes some NDE technologies under research and development at LLNL (see note 4).

Visual Inspection

Visual inspection is probably the oldest NDE technique. However, coupling cameras with computers and image processing greatly enhances the NDE application of visual inspection. Innovative lighting schemes provide high-contrast images allowing inspections never before possible with simple camera systems (see notes 5 and 6). Techniques range from common high-speed and time-lapse photography and streak cameras to miniature cameras and fiber-optic-based systems for access to restrictive or hazardous environments (McGarry, 1997). Surface finish, cleanliness verification, presence or absence of specific features, and dimensional measurements are typical NDE tasks performed by visible light systems (Mascio et al., 1997). Traditionally, these measurements required careful orientation of the object to avoid perspective effects. Recent stereo image processing techniques have removed the necessity of having precise part alignment (Nurre, 1996). In addition, stereo techniques are leading to reverse engineering and art-to-part applications where computer-aided design drawings can be realized directly from a scanned image of a part (Levoy, 1997). In this report I do not provide any visible light NDE examples but refer the reader to the above and other references (see note 7; see also notes 5 and 6).

X- and Gamma-Ray Imaging

FIGURE 2 Schematic of a typical NDE system configuration.

FIGURE 3 Electromagnetic spectrum showing the range of frequencies used in nondestructive evaluation at the Lawrence Livermore National Laboratory. MIR is micropower impulse radar. Source: Reprinted with permission from Lawrence Livermore National Laboratory (Mast and Azevedo, 1996).

X- and gamma-ray imaging techniques in NDE and nondestructive assay (NDA) have seen increasing use in an array of industrial, environmental, military, and medical applications8 (see also notes 1, 2, and 3). Much of this growth in recent years is attributed to the rapid development of computed tomography (CT). First used in the 1970s as a medical diagnostic tool, CT was adapted to industrial and other nonmedical purposes in the mid-1980s. Single-view (or angle) radiography hides crucial information; that is, the overlapping of object features obscures parts of an object's features and the depth of those features is unknown. CT was developed to retrieve three-dimensional (3D) information of an obscured object's features. To make a CT measurement, several radiographic images (or projections) of an object are acquired at different angles, and the information collected by the detector is processed in a computer (Azevedo, 1991; Barrett and Swindel, 1981; Herman, 1980; Kak and Slaney, 1987). The final 3D image, generated by mathematically combining the radiographic images, provides the exact locations and dimensions of external and internal features of the object. Two examples of CT are presented here; one is for improved implant design, the other for radioactive waste management.
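The idea of combining projections taken at different angles can be illustrated with a minimal sketch. It assumes a two-dimensional slice, an idealized parallel-beam geometry, and simple unfiltered backprojection; it is not the reconstruction code used at LLNL, and practical systems typically use filtered backprojection or iterative methods. The phantom, grid size, and function names are hypothetical.

```python
import math

def bin_index(x, y, theta, center):
    """Detector bin struck by the ray through pixel (x, y) at angle theta."""
    t = (x - center) * math.cos(theta) + (y - center) * math.sin(theta)
    return int(round(t + center))

def project(image, angles_deg):
    """Form one parallel-beam projection (a row of detector bins) per angle."""
    n = len(image)
    center = (n - 1) / 2.0
    sinogram = []
    for angle in angles_deg:
        theta = math.radians(angle)
        bins = [0.0] * n
        for y in range(n):
            for x in range(n):
                b = bin_index(x, y, theta, center)
                if 0 <= b < n:
                    bins[b] += image[y][x]
        sinogram.append(bins)
    return sinogram

def backproject(sinogram, angles_deg, n):
    """Smear each projection back across the slice and sum over all angles."""
    center = (n - 1) / 2.0
    recon = [[0.0] * n for _ in range(n)]
    for bins, angle in zip(sinogram, angles_deg):
        theta = math.radians(angle)
        for y in range(n):
            for x in range(n):
                b = bin_index(x, y, theta, center)
                if 0 <= b < n:
                    recon[y][x] += bins[b]
    return recon

# Hypothetical phantom: an empty 21 x 21 slice with one dense feature.
n = 21
phantom = [[0.0] * n for _ in range(n)]
phantom[6][14] = 1.0

angles = list(range(0, 180, 10))          # 18 views, 10 degrees apart
sinogram = project(phantom, angles)
recon = backproject(sinogram, angles, n)

# Locate the brightest pixel of the reconstruction as (row, col).
peak = max((recon[y][x], (y, x)) for y in range(n) for x in range(n))[1]
print("brightest reconstructed pixel (row, col):", peak)
```

No single projection fixes the position of the feature, but the summed backprojections peak at the pixel where it was placed. The streaks that surround the peak are the blur that filtering is designed to remove.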

Ultrasonic Testing

Ultrasonic NDE interrogates components with acoustic energy and can be used to determine material properties, wall thickness, and internal defects (Krautkrämer and Krautkrämer, 1990). High-frequency (~1 MHz) pulses of ultrasonic energy are radiated into the material and subsequently detected using specially designed transducers. The sound pulses are altered as they travel into and through the material as a result of attenuation, reflection, and scattering. The output pulse—the detected signal—is displayed, processed, and interpreted in terms of the internal structure of the object under investigation and based on its relation to the input pulse. Most often, ultrasonics is applied to detect thickness and to search for flaws in metals—namely, cracks and voids (see notes 1 and 2). However, ultrasonics can also be used to ascertain grain size, measure residual stress and elastic moduli, evaluate bond quality (e.g., solid-state, adhesive), and analyze surface characteristics (Krautkrämer and Krautkrämer, 1990; see also notes 1 and 2). Whenever the configuration of the object under test permits, a 2-D or 3-D image of the interior of the object can be made with reflections of the sound. An example of the use of ultrasonic testing for understanding material properties in the aging of composites is presented below.
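As a rough illustration of how a detected pulse is interpreted as a thickness, the sketch below applies the standard pulse-echo relation: thickness equals sound velocity times round-trip time, divided by two. The velocity is an assumed nominal longitudinal value for steel and the echo time is made up for the example; neither comes from the work described here.

```python
def thickness_from_echo(velocity_m_per_s, round_trip_time_s):
    """Pulse-echo thickness: the pulse crosses the wall twice, so halve the path."""
    return velocity_m_per_s * round_trip_time_s / 2.0

def wavelength(velocity_m_per_s, frequency_hz):
    """Acoustic wavelength, which limits the smallest flaw that can be resolved."""
    return velocity_m_per_s / frequency_hz

# Assumed values for illustration only.
steel_velocity = 5900.0        # m/s, nominal longitudinal velocity in steel
echo_time = 3.4e-6             # s, hypothetical back-wall echo delay
pulse_frequency = 1.0e6        # Hz, the ~1 MHz figure quoted in the text

wall_thickness = thickness_from_echo(steel_velocity, echo_time)
lam = wavelength(steel_velocity, pulse_frequency)

print(f"wall thickness: {wall_thickness * 1e3:.2f} mm")
print(f"wavelength at 1 MHz: {lam * 1e3:.1f} mm")
```

The same relation locates an internal reflector such as a crack or void: an echo that arrives before the back-wall echo indicates a flaw at the corresponding depth.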

Infrared Imaging

Infrared (IR) imaging is a global area inspection technique used for thermal NDE. IR imaging measures temperature and temperature differences to detect debonding, delamination, cracks, residual stress, metal thickness loss from corrosion, and other conditions that impact heat flow (see notes 1 and 2). Damaged materials heat and cool differently than do undamaged ones. Infrared images of flash-heated materials and structures produce temperature maps at video frame rates. Time-sequenced temperature maps are processed with computer codes developed at LLNL to reveal 3D images of flaw location, size, shape, thickness, relative depth, and percentage of metal loss for corrosion-damaged materials (Del Grande, 1996).

The dual-band infrared (DBIR) technique, developed at LLNL, is used for high-sensitivity temperature mapping to evaluate the quality of subsurface materials and structures. Concurrent use of two thermal IR bands allows separation of thermal and nonthermal IR signal components. Spatially dependent surface emissivity noise is subtracted from IR images, thereby decoupling temperature from emissivity effects. The DBIR method has been highly successful in isolating the effects of corrosion damage from those of clutter produced by IR reflectance anomalies, corrosion inhibitors, ripples, and interior insulation (Del Grande, 1996; Del Grande et al., 1997). An example for in-service inspection of aging aircraft is presented below.

Signal and Image Processing

Imaging technology and image analysis are integral parts of NDE (Russ, 1995). In the past several years the NDE organization at LLNL has assembled and developed tools that couple image processing with computational NDE algorithms (see note 4). LLNL uses MatLab, Explorer, IDL, VIEW, and VISU (the latter two are LLNL-developed image processing codes) as tools for connecting state-of-the-art computational NDE with a wide variety of signal and image processing functions. The focus areas include edge detection and image enhancement for digitized radiographs; noise reduction from electronic and radiation sources; focused wave mode calculations for ultrasonic inspections; in-depth examinations of image reconstruction techniques including modeling of radiographic imaging (Martz et al., 1997b); novel applications of image processing to IR imaging (Del Grande et al., 1995); and statistical studies of different NDE algorithms (Azevedo, 1991).

Life-Cycle Applications of NDE

This section provides several examples of how different NDE technologies are applied throughout the life cycle of different products.

Material Development for Durability of Composite Materials

For many carbon composite materials, particularly in aerospace applications, durability is a critical design parameter. Development of composites for durability is facilitated by understanding aging mechanisms. With a design lifetime of 120,000 hours (13.7 years) and a skin temperature of about 180°C, real-time durability studies of candidate materials for high-speed aircraft structures are time-consuming and very expensive. Test programs are being developed so that long-term aging can be accelerated and the design of composite materials can occur in a reasonable period of time and at lower costs. For example, two methods used to accelerate aging of composites are elevating temperatures and varying chemical compositions in test environments.

Ultrasonic NDE is currently being used to aid in the characterization of fiber composite materials for high-speed aircraft structures (Chinn et al., 1997). Using ultrasonic attenuation, LLNL has characterized a series of fiber poly-

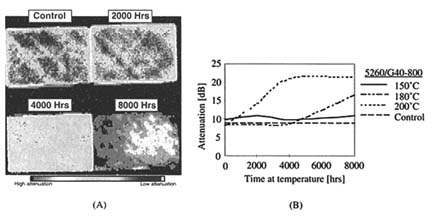

FIGURE 4 The 5260/G40-800 composite samples are aged to 8,000 hours. (A) C-scan images of samples aged at 180°C show postcuring up to 4,000 hours and extensive damage at 8,000 hours. (B) Ultrasonic attenuation of the sample increases with time and temperature. Source: Reprinted with permission from Plenum Press (Chinn et al., 1997).

mer composites aged under different temperatures, times, and chemical environments.9 Figure 4 shows the results of one type of fiber composite aged under different temperatures. The ultrasonic images show the deterioration of the sample aged at 180°C and up to 8,000 hours. The data reveal that damage at 180°C begins after 4,000 hours. Aging at 200°C causes damage after only 2,000 hours. Chemical analysis of the same series of materials confirms the damage trend that ultrasonic attenuation measurements suggest.

A comparison of accelerated aging to real-time aging is shown in Figure 5. The attenuation history of a sample aged for 2,500 hours in argon at 220°C is very similar to that of a sample aged for 20,000 hours in air at 180°C. These results suggest that ultrasonic testing is a promising tool for the further development of aircraft composite materials.
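The figures quoted in this section imply a simple acceleration factor, worked out in the short sketch below. The extrapolation to the full design lifetime assumes that the same factor would hold over the whole life, which the data presented here do not establish; the variable names are hypothetical.

```python
HOURS_PER_YEAR = 24 * 365

design_life_h = 120_000     # design lifetime quoted in the text
real_time_h = 20_000        # aging in air at 180 C
accelerated_h = 2_500       # aging in argon at 220 C with a similar attenuation history

# Ratio of real-time to accelerated exposure giving similar attenuation.
acceleration_factor = real_time_h / accelerated_h

# Design lifetime expressed in years.
design_life_years = design_life_h / HOURS_PER_YEAR

# ASSUMPTION: the factor stays constant over the entire design life.
equivalent_test_h = design_life_h / acceleration_factor

print(f"acceleration factor: {acceleration_factor:.0f}x")
print(f"design life: {design_life_years:.1f} years")
print(f"accelerated test time under the constant-factor assumption: {equivalent_test_h:.0f} h")
```

Under that assumption, a full design lifetime would correspond to roughly 15,000 hours of accelerated exposure, a little under two years of continuous testing.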

In future work, LLNL will determine and better understand the correlation of ultrasonic attenuation data and the mechanical properties of fiber composite materials. These studies, combined with destructive mechanical testing, and microstructural and chemical analyses, will improve our understanding of composite durability as a function of the aging process.

Improved Prosthetic Implant Design

Human joints are commonly replaced in cases of damage from traumatic injury, rheumatoid diseases, or osteoarthritis. Frequently, prosthetic joint implants fail and must be surgically replaced by a procedure that is far more costly and carries a higher mortality rate than the original surgery. Poor understanding of the loading applied to the implant leads to inadequate designs and ultimately to failure of the prosthetic.10

FIGURE 5 Real-time aged samples of KIII-B/IM7 composites exhibit ultrasonic attenuation characteristics over time similar to those of samples aged in an accelerated program. Shaded lines indicate estimated behavior of time periods where data are not yet available. Accelerated aging correctly predicts initial drop in attenuation caused by postcuring in the real-time aged sample. Source: Reprinted with permission from Plenum Press (Chinn et al., 1997).

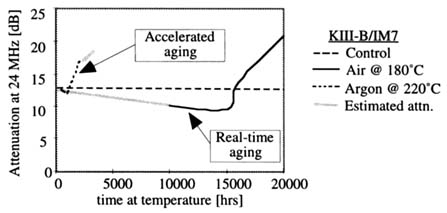

LLNL's approach to prosthetic joint design offers an opportunity to evaluate and improve joints before they are manufactured or surgically implanted. The modeling process begins with computed tomography data, which are used to develop human joint models (see Figure 6). An accurate surface description is critical to the validity of the model (Bossart and Martz, 1996). The marching cubes algorithm (Johansson and Bossart, 1997) is used to create polygonal surfaces that describe the 3D geometry of the structures identified in the scans. Each surface is converted into a 3D finite element mesh that captures its geometry (Figure 6). Boundary conditions determine initial joint angles and ligament tensions as well as joint loads. Finite element meshes are combined with boundary conditions and material models. The analysis consists of a series of computer simulations of human joint and prosthetic joint behavior. The simulations provide qualitative data in the form of scientific visualization and quantitative results such as kinematics and stress-level calculations. These calculations predict possible failure modes of the implant after it is inserted into the body.

Results from the finite element analysis are used to predict failure and to provide suggestions for improving the design. Multiple iterations of this process allow the implant designer to use analysis results to incrementally refine the model and improve the overall design. Once an implant design is agreed on, a prototype is made using computer-aided manufacturing techniques. The resulting implant can then be laboratory tested and put into clinical trials (Hollerbach and Hollister, 1996).

FIGURE 6 The process of biomechanical model development begins with a CT scan of human joints. Data are identified from the scan using a semi-automated segmentation process, shown here applied to the bones in the fingers (a), and three-dimensional surfaces are created for each identified tissue (b). The surfaces are prepared for finite element modeling in a volumetric meshing step (c). The finite element results show soft tissue deformations and stresses in the index finger ligaments and tendons (d).

Three failure modes are prevalent: kinematic, material, and bone-implant interface. Currently, LLNL is analyzing human joint models to determine the in vivo loading conditions of implants used in normal life and implant components as they interact with each other. Future research will combine the human and implant models into a single model to analyze bone-implant interface stresses, thereby addressing the third common implant failure mode.

In-Service Inspection of Aging Aircraft

Detection and quantitative evaluation of hidden corrosion have been of major importance to the Federal Aviation Administration (FAA) since the famous Aloha Airlines accident in which an older Boeing 737 lost a large portion of its fuselage skin in midair. Moreover, the U.S. Air Force (USAF) needs to extend the useful life of its existing military aircraft. Much of the problem associated with life extension is the destructive nature of undetected corrosion. Also, with reduced budgets, the USAF does not want to spend time or manpower repairing corrosion that has not reached a dangerous level or that in reality does not exist. A number of NDE methods have been tested on aircraft structures that contain hidden corrosion11 (see also note 7). They all show promise, but so far none has been shown to solve this problem.

LLNL, sponsored by the FAA, developed and demonstrated a dual-band infrared (DBIR) imaging thermography technique for corrosion detection. This technique combines a commercial dual-band IR system12 with LLNL-developed smart defect-recognition algorithms. The DBIR technique was used to demonstrate results for corrosion loss in aircraft.

At LLNL we have obtained results that agree qualitatively with eddy current measurements taken by Boeing scientists (Del Grande et al., 1997). We detected, imaged, and quantified 5 percent (0.003-inch) corrosion metal thickness loss in the outer skin of a Boeing 727 fuselage. The accuracy of these results will be verified after dismantlement of this section of the aircraft by Boeing.

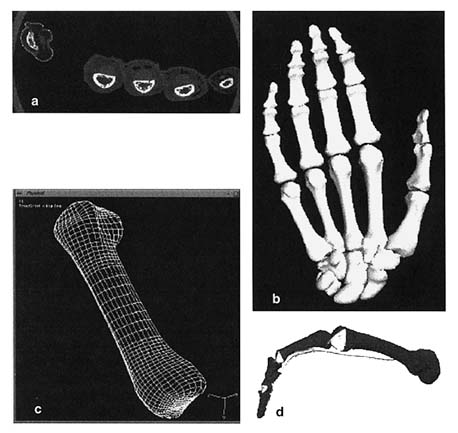

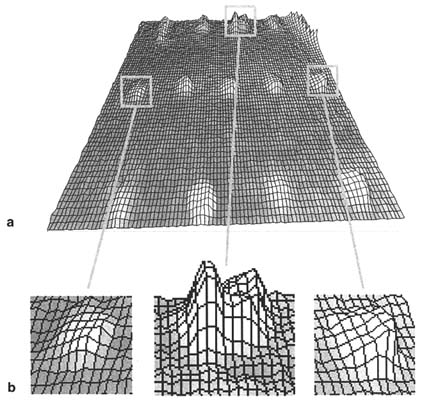

We also measured less than 10 percent skin thickness loss in a United Airlines 747 lap-splice structure scheduled for repair. This was confirmed by destructive exploratory maintenance prior to the repair. In addition to the commercial aircraft inspection activities, we measured the relative metal volume losses due to corrosion under 13 wing fasteners in a Tinker Air Force Base KC-135 wing panel (see Figure 7). Application of the smart defect-recognition algorithms with the IR imager was highly successful in isolating the effects of corrosion damage from clutter to eliminate false positives (or alarms) (Del Grande, 1996).

Our current research focus is on improving the depth resolution of IR tomography down to 20 to 40 micrometers, two orders of magnitude better than existing systems. This would greatly facilitate interpretation of DBIR temperature, thermal inertia, and heat capacity maps that detect, image, and quantify aircraft corrosion with few or no false alarms.

FIGURE 7 Results of infrared imaging of corroded wing fasteners on a military aircraft (KC-135). Contour maps of processed thermal data quantify the relative metal-loss volume from intergranular corrosion. In (a) damage varies under 13 wing-panel fasteners as shown. In (b) magnified views of 3 selected fasteners reveal, from left to right, slight, substantial, and moderate metal loss (corrosion) under the aircraft fasteners.

Gamma-Ray Nondestructive Assay for Waste Management

Before drums of radioactive or mixed (radioactive and hazardous) waste can be properly stored or disposed of, the contents must be known. Hazardous and "nonconforming" materials (such as free liquids and pressurized containers) must be identified, and radioactive sources and strengths must be determined. Opening drums for examination is expensive mainly because of the safety precautions that must be taken. Current nondestructive methods of characterizing waste in sealed drums are often inaccurate and cannot identify nonconforming materials.13 Additional NDE and NDA techniques are being developed at LLNL (Decman et al., 1996; Roberson et al., 1995a) and elsewhere (see note 11) to analyze closed waste drums accurately and quantitatively.

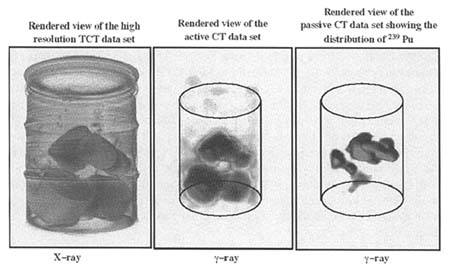

At LLNL we have developed two systems to characterize waste drums. One system uses real-time radiography and CT to nondestructively inspect waste drums (Roberson et al., 1995a). The other uses active and passive computed tomography (A&PCT), a comprehensive and accurate gamma-ray NDA method that can identify all detectable radioisotopes present in a container and measure their activity (Decman et al., 1996). A&PCT may be the only technology that can certify when radioactive or mixed wastes are below the transuranic (TRU) waste threshold, determine if they meet regulations for low-level waste, and quantify TRU wastes for final disposal. Projected minimum-detectable concentrations are expected to be lower than those obtainable with a segmented gamma-ray scanner, one method currently used by the U.S. Department of Energy.

Several tests have been made of A&PCT technology on 55-gallon TRU waste drums at LLNL (see Figure 8), Rocky Flats Environmental Technology Site (RFETS), and Idaho National Engineering Laboratory (INEL).14 These drums contained smaller containers with solidified chemical wastes and low-density combustible matrices at LLNL and RFETS, respectively. At INEL, lead-lined drums containing combustibles and a very dense sludge drum were characterized. In all cases the plutonium content of the drums ranged from 1 to 70 grams. At LLNL we are measuring the performance of the A&PCT system using controlled experiments of well-known mock-waste drums (Camp et al., 1994; Decman et al., 1996). Results show that the A&PCT technology can determine radioactivity with an accuracy, or closeness to the true value, of approximately 30 percent and a precision, or how reproducible the result is, to better than 5 percent (Martz et al., 1997a).
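The distinction between accuracy and precision drawn above can be made concrete with a small sketch. The replicate assay values and the known plutonium mass below are invented for illustration and are not A&PCT results; accuracy is taken as the relative bias of the mean, and precision as the relative standard deviation of repeated measurements.

```python
import statistics

def accuracy_and_precision(measurements, true_value):
    """Return (relative bias in percent, relative standard deviation in percent)."""
    mean = statistics.mean(measurements)
    bias_pct = 100.0 * (mean - true_value) / true_value
    rsd_pct = 100.0 * statistics.stdev(measurements) / mean
    return bias_pct, rsd_pct

# HYPOTHETICAL data: five repeated assays of a mock drum with a known 10.0 g loading.
known_mass_g = 10.0
replicate_assays_g = [12.6, 13.0, 13.4, 12.8, 13.2]

bias_pct, rsd_pct = accuracy_and_precision(replicate_assays_g, known_mass_g)
print(f"accuracy (bias of the mean): {bias_pct:.1f} percent")
print(f"precision (relative std. dev.): {rsd_pct:.1f} percent")
```

In this made-up case the assays agree closely with one another yet sit well above the true value, which is the pattern described by a precision better than 5 percent alongside an accuracy of about 30 percent.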

Perhaps the most important future development for this technology is to improve the system's throughput. Characterizing a drum currently takes about 1 to 2 days using a single-detector scanner. At LLNL we have designs for upgrading this scanner to multiple detectors for throughputs estimated to be on the order of a few hours per drum (Roberson et al., 1997). Additional research and development efforts include improving the accuracy of the system and developing self-absorption correction methods.

Summary

This paper provides an overview of some common NDE methods and several examples for the use of different NDE techniques throughout the life cycle of a product. NDE techniques are being used to help determine material properties, design new implants, extend the service life of aircraft, and help dispose of radioactive waste in a safe manner. It is the opinion of this author and others that the NDE community needs to work more closely with end users in the life cycle of a product to better incorporate NDE techniques. The NDE community needs to highlight the importance of NDE in the entire life-cycle process of a product by showing real cost savings to the manufacturing community.

FIGURE 8 Representative three-dimensionally rendered CT images of an LLNL transuranic-waste drum. (left) High-spatial (2-mm voxels) with no energy resolution x-ray CT image at 4 MeV reveals the relative attenuation caused by the waste matrix. (middle) Low-spatial (50-mm voxels) with high-energy resolution active gamma-ray CT image at 411 keV of the same drum reveals the quantitative attenuation caused by the waste matrix. (right) Low-spatial (50-mm voxels) with high-energy resolution passive CT image at 414 keV reveals the location and distribution of radioactive 239Pu in the drum. A&PCT was used to determine that this drum contained 3 g of weapons-grade Pu.

Source: Reprinted with permission from Lawrence Livermore National Laboratory (Roberson et al., 1995b).

Future Work

All NDE techniques have limitations. Some limitations are imposed by physical constraints, while others can be overcome by developing new NDE system components. Examples include brighter sources, detectors with higher spatial and contrast resolution and greater efficiency, higher-precision manipulators/stages, better image reconstruction, and signal and image processing algorithms running on faster computers.

Acknowledgments

I want to thank the principal investigators of the projects highlighted in this paper: Diane Chinn (composites durability); Karin Hollerbach and Elaine Ashby (biomechanics and implant analysis); Nancy Del Grande (aircraft inspection); Dwight (Skip) Perkins (visual inspection and S&IP overview); and Graham Thomas (ultrasonic testing overview). I also thank Toby Cordell, Jerry Haskins, and Graham Thomas for several discussions on the application of NDE throughout the life cycle of a product and my secretary, Jane DeAnda, for helping me edit this paper. The LLNL work described here was performed under the auspices of the U.S. Department of Energy, contract no. W-7405-ENG-48.

References

Azevedo, S. G. 1991. Model-Based Computed Tomography for Nondestructive Evaluation. Ph.D. dissertation. Lawrence Livermore National Laboratory, Livermore, Calif., UCRL-LR-106884, March.

Barrett, H. H., and W. Swindel. 1981. Radiological Imaging: Theory of Image Formation, Detection, and Processing, vols. 1 and 2. New York: Academic Press.

Bernardi, R. T., and H. E. Martz, Jr. 1995. Nuclear waste drum characterization with 2 MeV X-ray and gamma-ray tomography. Proceedings of the SPIE's 1995 International Symposium on Optical Science, Engineering, and Instrumentation (Vol. 2519), San Diego, Calif., July 13–14.

Bossart, P. L., and H. E. Martz. 1996. Visualization software in research environments. Submitted to Engineering Tools for Life-Cycle NDE, Fifth Annual Research Symposium, Norfolk, Va., April, UCRL-JC-122795 Ext. Abs., Lawrence Livermore National Laboratory, Livermore, Calif., March.

Camp, D. C., J. Pickering, and H. E. Martz. 1994. Design and construction of a 208-L drum containing representative LLNL transuranic and low-level wastes. Proceedings of the Nondestructive Assay and Nondestructive Examination Waste Characterization Conference, Pocatello, Idaho, February 14–16.

Chinn, D. J., P. F. Durbin, G. H. Thomas, and S. E. Groves. 1997. Tracking accelerated aging of composites with ultrasonic attenuation measurements. Pp. 1893–1898 in Proceedings of Review of Progress in Quantitative Nondestructive Evaluation, Vol. 16B, D. O. Thompson and D. E. Chimenti, eds. New York: Plenum Press.

Cordell, T. M. 1997. NDE: A full-spectrum technology. Paper presented at the Review of Progress in Quantitative Nondestructive Evaluation, University of San Diego, Calif., July 27-August 1.

Decman, D. J., H. E. Martz, G. P. Roberson, and E. Johansson. 1996. NDA via Gamma-ray Active and Passive Computed Tomography. Mixed Waste Focus Area Final Report. Lawrence Livermore National Laboratory, Livermore, Calif., UCRL-ID-125303, November.

Del Grande, N. K., K. W. Dolan, P. F. Durbin, and D. E. Perkins. 1995. Emissivity-Corrected Infrared Method for Imaging Anomalous Structural Heat Flows. Patent 5,444,241, August 22.

Del Grande, N. K. 1996. Dual band infrared computed tomography: Searching for hidden defects. Science & Technology Review. Lawrence Livermore National Laboratory, Livermore, Calif., UCRL-52000-96-5, May.

Del Grande, N. K., P. F. Durbin, and D. E. Perkins. 1997. Dual-band infrared computed tomography for quantifying aircraft corrosion damage. Presented at the First Joint DOD/FAA/NASA Conference on Aging Aircraft, Ogden, Utah, July 8–10.

Goebbels, K. 1994. Materials Characterization for Process Control and Product Conformity. Boca Raton, Fla.: CRC Press.

Herman, G. T. 1980. Image Reconstruction from Projections: The Fundamentals of Computerized Tomography. New York: Academic Press.

Hollerbach, K., and A. Hollister. 1996. Computerized prosthetic modeling. Biomechanics (September):31–38.

Johansson, E. J., and P. L. Bossart. 1997. Advanced 3-D imaging technologies. Nondestructive Evaluation, H. E. Martz, ed. Livermore, Calif.: Lawrence Livermore National Laboratory, UCRL-ID-125476, February.

Kak, A. C., and M. Slaney. 1987. Principles of Computerized Tomographic Imaging. New York: IEEE Press.

Krautkrämer, J., and H. Krautkrämer. 1990. Ultrasonic Testing of Materials. Berlin: Springer-Verlag.

Levoy, M. 1997. Digitizing the shape and appearance of three-dimensional objects. Pp. 37–46 in Frontiers of Engineering: Reports on Leading Edge Engineering from the 1996 NAE Symposium on Frontiers of Engineering. Washington, D.C.: National Academy Press.

Martz, H. E., D. J. Decman, G. P. Roberson, and F. Lévai. 1997a. Gamma-ray scanner systems for nondestructive assay of heterogenous waste barrels. Presented and accepted for publication at the IAEA-sponsored Symposium on International Safeguards, Vienna, Austria, October 13–17.

Martz, H. E., C. Logan, J. Haskins, E. Johansson, D. Perkins, J. M. Hernández, D. Schneberk, and K. Dolan. 1997b. Nondestructive Computed Tomography for Pit Inspections. Livermore, Calif.: Lawrence Livermore National Laboratory, UCRL-ID-126257, February.

Mascio, L. N., C. M. Logan, and H. E. Martz. 1997. Automated defect detection for large laser optics. Nondestructive Evaluation, H. E. Martz, ed. Livermore, Calif.: Lawrence Livermore National Laboratory, UCRL-ID-125476, February.

Mast, J. E., and S. G. Azevedo. 1996. Applications of micropower impulse radar to nondestructive evaluation. Nondestructive Evaluation, H. E. Martz, ed. Livermore, Calif.: Lawrence Livermore National Laboratory, UCRL-ID-122241, February.

McGarry, G. 1997. Digital measuring borescope system. Paper presented at the Review of Progress in Quantitative Nondestructive Evaluation, University of San Diego, San Diego, July 27-August 1.

Nurre, J. H. 1996. Tailoring surface fit to three dimensional human head scan data. Proceedings of SPIE Symposium on Electronic Imaging: Science and Technology, San Jose, Calif., January.

Roberson, G. P., H. E. Martz, J. Haskins, and D. J. Decman. 1995a. Waste characterization activities at the Lawrence Livermore National Laboratory. Pp. 966–971 in Nuclear Materials Management, INMM, 36th Annual Meeting Proceedings, Palm Desert, Calif., July 9–12.

Roberson, G. P., D. J. Decman, H. E. Martz, E. R. Keto, and E. J. Johansson. 1995b. Nondestructive assay of TRU waste using gamma-ray active and passive computed tomography. Pp. 73–84 in Proceedings of the Nondestructive Assay and Nondestructive Examination Waste Characterization Conference, Salt Lake City, Utah, October 24–26.

Roberson, G. P., H. E. Martz, D. C. Camp, D. J. Decman, and E. J. Johansson. 1997. Preliminary A&PCT Multiple Detector Design, Upgrade of a Single HPGe Detector A&PCT System to Multiple Detectors. Livermore, Calif.: Lawrence Livermore National Laboratory, UCRL-ID-128052, June.

Russ, J. C. 1995. The Image Processing Handbook, 2d ed. Boca Raton, Fla.: CRC Press.

NOTES

5. See IS&T/SPIE's Symposium on Electronic Imaging: Science & Technology, January 28-February 2, 1996, San Jose, Calif.

6. For further information, see papers from the SPIE International Symposium on Optical Science, Engineering, and Instrumentation, July 27-August 1, 1997, San Diego, Calif.

9. This work is being performed under a cooperative research and development agreement with Boeing.

10. For further information, see papers by J. Fouke, F. Guilak, M. C. H. van der Meulen, and A. A. Edidin in the Biomechanics section of this book.

11. The First Joint DOD/FAA/NASA Conference on Aging Aircraft, July 8-10, 1997, Ogden, Utah.

Challenges of Probabilistic Risk Analysis

VICKI M. BIER

University of Wisconsin-Madison

Madison, Wisconsin

The ever-increasing power of technology creates the potential for catastrophic accidents. Because such accidents are rare, though, the database on them is too small for conventional statistics to yield meaningful results. Therefore, sophisticated probabilistic risk analysis (PRA) techniques are critical in estimating the frequency of accidents in complex engineered systems such as nuclear power, aviation, aerospace, and chemical processing.

The approach used in PRA is to model a system in terms of its components, stopping where substantial amounts of data are available for most if not all of the key components. Data are used to estimate component failure rates, and these estimates are then aggregated according to the PRA model to derive an estimate of accident frequency. The accuracy of the resulting estimate will depend on the accuracy of the PRA model itself, but there are good reasons to believe that the accuracy of PRA models has improved over time.

The failure rate estimates needed as input are generally obtained by using Bayesian statistics, owing to the sparsity of data even at the component level. Bayesian methods provide a rigorous way of combining prior knowledge (expressed in the form of "prior distributions") with observed data to obtain "posterior distributions." A posterior distribution expresses the remaining uncertainty about a failure rate after observing the data. The posteriors for component failure rates are then propagated through the PRA model to yield a distribution for accident frequency.
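A minimal sketch of this update-and-propagate procedure follows. It assumes a gamma prior on each failure rate with Poisson-distributed failure counts, a common conjugate choice in which the posterior is again a gamma distribution with the observed failures added to the shape and the exposure time added to the rate parameter. The two components, their priors, the operating data, and the rule that the system fails if either component fails are all hypothetical stand-ins for a real PRA model.

```python
import random

def gamma_posterior(shape, rate, failures, exposure_hours):
    """Conjugate update: gamma(shape, rate) prior plus Poisson failure data."""
    return shape + failures, rate + exposure_hours

# HYPOTHETICAL priors and operating experience for two components.
pump_post = gamma_posterior(0.5, 10_000.0, failures=1, exposure_hours=50_000.0)
valve_post = gamma_posterior(0.5, 20_000.0, failures=0, exposure_hours=30_000.0)

rng = random.Random(0)   # fixed seed so the sketch is reproducible

def sample_rate(posterior):
    """Draw one failure rate (per hour) from a gamma posterior."""
    shape, rate = posterior
    # random.gammavariate takes a scale parameter, the reciprocal of the rate.
    return rng.gammavariate(shape, 1.0 / rate)

# ASSUMED system model: failure of either component fails the system,
# so the system failure rate is the sum of the component rates.
samples = sorted(sample_rate(pump_post) + sample_rate(valve_post)
                 for _ in range(20_000))

mc_mean = sum(samples) / len(samples)
p05 = samples[int(0.05 * len(samples))]
p95 = samples[int(0.95 * len(samples))]

print(f"mean system failure rate: {mc_mean:.2e} per hour")
print(f"90 percent interval: {p05:.2e} to {p95:.2e} per hour")
```

The output is a distribution rather than a single number; the spread between the 5th and 95th percentiles reflects how little the sparse data constrain the component rates.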

Two major challenges of PRA are (1) the reliance on subjective judgment and (2) the difficulty of accounting for human performance in PRA. These issues are discussed below.

Subjectivity

PRAs generally result in distributions for accident frequencies, and these distributions are based extensively on subjective judgment (i.e., expert opinion), both in structuring the PRA model itself and in quantifying prior distributions for component failure rates. It is now generally accepted that the uncertainties in PRA results are not an artifact of PRA but are characteristic of low-frequency, high-consequence events. Explicitly recognizing these uncertainties should lead to better decisions; however, the subjectivity of PRA results poses larger problems.

The use of subjective probability distributions in making individual decisions is theoretically well founded. However, the situation is more complex for societal decisions, which pose significant policy and technical questions.

Policy Questions

The subjectivity of PRA results has been partially responsible for delays in implementing risk-based approaches to regulation. Regulators recognize that PRA can make it possible to achieve lower risks than the current body of regulations at no greater cost. However, because of the complexity of the facilities being analyzed and of the resulting models, regulators are dependent on risk analyses performed by facility owners/operators, and even validating a PRA is a costly undertaking. Ignoring the possibility of deliberate misrepresentations, the different incentives of a regulator and a licensee (combined with the subjectivity of PRA models) create ample opportunity for results to be "shaded" favorably to licensees.

Taking advantage of the opportunity for risk reduction posed by PRA requires careful attention to regulatory incentives and disincentives. In particular, licensees must have incentives to openly disclose information that may support increased or unfavorable risk estimates. Otherwise, licensees whose PRAs reveal unfavorable results will be unlikely to share those results with regulators, and licensees may be discouraged from upgrading their existing PRAs. These issues are currently being addressed by the U.S. Nuclear Regulatory Commission (NRC), which recently formulated draft regulatory guides for risk-informed decision making.

Technical Questions

In addition to policy questions, reliance on subjective judgment also poses interesting technical questions. In particular, PRA practitioners have sometimes treated the subjectivity of their inputs somewhat cavalierly. Significant guidance exists regarding the elicitation of subjective prior distributions, but this guidance is costly to apply, especially when prior distributions are needed for dozens of uncertain quantities. Therefore, it would be desirable to develop less resource-intensive default methods for choosing prior distributions for use in PRA.

Similar work has been done in other fields, with attention focused on so-called robust or reference priors. The idea is to let the database speak for itself as much as possible and to avoid selecting priors that may have unduly large influences on the posteriors. While this approach may not work well in PRA because of data sparsity, it would at least seem worthwhile to identify families of priors that are likely to yield unreasonable posteriors. Such research could lead to improved guidance for PRA practitioners and improved credibility of PRA estimates.
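The influence a prior can have when data are sparse is easy to see in a small numerical sketch. It assumes gamma priors with Poisson failure data, for which the posterior mean is (shape + failures) / (rate + exposure); the two priors and both data sets are hypothetical and chosen only to contrast a sparse case with a data-rich one.

```python
def posterior_mean(shape, rate, failures, exposure_hours):
    """Posterior mean failure rate for a gamma prior and Poisson failure data."""
    return (shape + failures) / (rate + exposure_hours)

# Two HYPOTHETICAL priors as (shape, rate in hours).
priors = {
    "pessimistic": (0.5, 1_000.0),     # prior mean 5e-4 per hour
    "optimistic": (2.0, 100_000.0),    # prior mean 2e-5 per hour
}

def spread(failures, exposure_hours):
    """Ratio of largest to smallest posterior mean across the candidate priors."""
    means = [posterior_mean(s, r, failures, exposure_hours)
             for s, r in priors.values()]
    return max(means) / min(means)

# Sparse data: no failures in 1,000 hours.
ratio_sparse = spread(failures=0, exposure_hours=1_000.0)

# Abundant data: 10 failures in 1,000,000 hours.
ratio_rich = spread(failures=10, exposure_hours=1_000_000.0)

print(f"sparse data: posterior means differ by a factor of {ratio_sparse:.1f}")
print(f"abundant data: posterior means differ by a factor of {ratio_rich:.2f}")
```

With abundant data the two analysts nearly agree, whereas with sparse data the choice of prior largely determines the answer, which is why default or screening methods for priors would be useful in PRA.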

Human Error and Human Performance

Another challenge to the accuracy of PRA is the difficulty of predicting human behavior. In this discussion I will distinguish between human error per se and the effects of organizational factors. Both topics are being addressed by the University of Wisconsin-Madison Center for Human Performance in Complex Systems, which is supported by several major high-technology companies and the NRC.

Human Error

Many large industrial accidents, including those at Three Mile Island and Chernobyl, were caused in part by human errors. Hence, it is natural to wonder whether such errors are adequately incorporated into PRA. Human errors are conventionally divided into errors of omission and those of commission. Errors of omission are relatively straightforward to model, since they can be explicitly enumerated based on the procedures to be performed.

Errors of commission have historically been considered extremely difficult to analyze, because of the infinite variety of possible human actions. More recently, it has been recognized that the vast majority of commission errors fall into a few simple categories. Barring sabotage or insanity, people are unlikely to undertake actions that seem unreasonable at the time. Therefore, most errors of commission reflect factors such as shortcuts, competing goals, or misdiagnoses. While these causes are harder to analyze than errors of omission, the recognition that most errors of commission have a rational basis makes them amenable to analysis, and there have been several pilot studies incorporating this approach.

Once the relevant human errors have been identified, their probabilities must be estimated. Progress has been hindered both by the fact that psychologists do not yet know enough about the factors contributing to human error and by the tendency for PRA practitioners to prefer simple engineering-style models of human performance. While engineers are known for their willingness to make assumptions in order to get the job done, more empirical knowledge of human error would contribute to better assumptions.

Organizational Factors

Another issue of concern is the effect of organizational factors on risk. At least for U.S. commercial nuclear power plants, corporate culture has as much effect on risk as plant design. Some such influences are implicitly taken into account in current PRAs (e.g., in plant-specific data), but it is unclear how risk will change if practices change. Moreover, organizational factors may also have numerous unmodeled influences on risk.

These issues are difficult to analyze in part because we cannot as yet even reliably quantify corporate culture, let alone identify features conducive to good performance. Despite these difficulties, the PRA community has recently begun to address organizational factors, and the NRC is currently funding research in this area.

Summary

The state of the art of PRA offers many promising research areas. Interestingly, the engineering basis of PRA seems better established than the input required from other fields. For example, although Bayesian statistical theory is well established, there is room for more work on the implications of alternative prior distributions.

More importantly, insights gained from PRA, and the necessity of safely managing complex hazardous systems, should inform the research agendas of social scientists. For example, in the real world errors need not reflect mistakes, but rather may represent people performing well under suboptimal conditions. Thus, broader definitions of "error" and greater attention to context would make some psychological research more relevant. Questions also remain in organizational behavior. For example, clearly both democratic/participatory and autocratic/hierarchical management styles can work well under the right circumstances, but the ingredients needed to make either style work effectively are not yet known. Such issues are often not prominent on the research agendas of social scientists, but I believe there is room for basic social science research with significant practical benefits.

Today, PRA is being productively applied to a variety of engineering technologies and is being used more extensively in the regulatory process. Since PRA is here to stay, it is time to develop closer ties with other fields. PRA practitioners stand to learn a lot from related areas of research. Moreover, the practical orientation of PRA can yield insights into the most important issues in high-hazard industries and can contribute to more relevant research agendas in other fields.
