11

Information Warfare: A Structural Perspective

INTRODUCTION

What would be the impact of a leaflet dropped by a psychological operations unit into enemy territory? Would the number who surrender increase? As the amount of information available via satellite increases, will regimental staff personnel be more or less likely to notice critical changes in the tactical situation? Questions such as these are central to the information warfare context. As the amount and quality of information increase and the ability to deliver specific information to specific others improves, such questions will become more important. A better understanding of how individuals and units cope with changes in the quantity, quality, and type of information is crucial if U.S. forces are to maintain information dominance.

Information warfare, sometimes referred to as information operations, can be thought of as any type of conflict that involves the manipulation, degradation, denial, or destruction of information. Libicki (1995:7) identifies seven types of information warfare. Many aspects of information warfare identified by Libicki and others are concerned with technology issues, such as how to detect technically whether the integrity of a database has been compromised and how to keep the networks linking computers from becoming unstable. While all these aspects of vulnerability are important, they are not the focus here. In this chapter, information warfare is examined more narrowly, from the perspective of human behavior and human processing of information. Thus the ideas discussed here bear on three aspects of information warfare identified by Libicki: (1) command and control warfare, (2) intelligence-based warfare, and (3) psychological warfare. From the perspective of this chapter, information warfare has to do with those processes that affect the decisions of the commander, staff, opposing commander, or civilians, their ability to make decisions, and their confidence in those decisions by affecting what information is available and when.

This chapter focuses, then, on the human or social side of information warfare. Factors that affect the way people gather, disseminate, and process information are central to this discussion. These processes are so general that they apply regardless of whether one is concerned with command and control warfare, intelligence-based warfare, or psychological warfare. Thus, rather than describing the various types of information warfare (which is done well by Libicki), this chapter addresses the social and psychological processes that cut across those types. Clearly, many of the factors discussed in earlier chapters, such as attention, memory, learning, and decision making, must be considered when modeling the impact of information warfare on individuals and units. In addition, three other aspects of human behavior are relevant: the structure of the unit (the way people are connected together), information diffusion, and belief formation. The discussion here concentrates on these three aspects. These are the aspects that will need to be integrated into existing models of information warfare and existing estimates of vulnerability if these models and estimates are to better reflect human behavior.

Consider the role played by these three aspects (structure, diffusion, and belief formation) in the area of information dominance. Information dominance has to do with maintaining superiority in the ability to collect, process, use, interpret, and disseminate information. Essentially, the way the commander acts in a particular situation, or indeed the way any individual acts, depends on the information characteristics of the situation, including what other individuals are present, what they know, what they believe, and how they interact. For example, in joint operations, commanders may have staffs with whom they have had only limited interactions. Vice Admiral Metcalf (1986) addresses this issue in discussing the Grenada Rescue Operation. He refers repeatedly to the importance of the Nelsonian principle ("know your commanders") and of having a staff that has been trained to work together. He stresses the importance of understanding what others know and developing a sense of trust. In general, individuals are more likely to trust and/or act on information (including orders) if it comes from the "right" source. For example, Admiral Train notes that when he became the commander of the Sixth Fleet, he asked the incumbent, who believed the Beirut evacuation plans were inadequate, why he had not spoken up sooner. The incumbent replied that since he thought the evacuation order came from a valid authority, he was reluctant to object. Additionally, during missions, as crises come to the fore, the amount of information involved can escalate. The resulting information overload leads people to find ways of curtailing the information to which they attend. One means of doing so is to use one's interaction network (the web of trusted advisors) to decide what information is most important. All of these factors point to the essential role of social structure, the connections among personnel, in the diffusion of information and the development of an individual's ideas, attitudes, and beliefs.

Being able to measure, monitor, and model these information networks is important in evaluating weaknesses and strengths in a personnel system from an information warfare perspective. Understanding how information diffuses within and between groups, how individuals acquire information, and how their beliefs and decisions change as a function of the information available to them, the people with whom they interact, and the decisions and beliefs of friends and foes is critical in a variety of military contexts, including information warfare, coalition management, and intelligence. Models of information warfare that can be used for examining alternative strategies (for defense and offense) can build upon models of information diffusion and belief formation. Similarly, models of information diffusion and belief formation can be used to help address questions such as who should get what information, how much information is needed, and who should filter the information. In this chapter, formal models of information diffusion and belief formation are discussed.

Individuals' decisions, perceptions, attitudes, and beliefs are a function of the information they know. Individuals learn the vast majority of what they know during interactions with others (i.e., vicariously), rather than through direct experience. Processes of communication, information diffusion, and learning are key to understanding individual and group behavior. These means of gathering information include both direct interactions with a specific person, such as talking with someone, and indirect interactions, such as reading a memorandum or monitoring a radar screen. Factors that affect these interactions in turn affect what information individuals learn, what attitudes and beliefs they hold, and what decisions they make. These factors fall roughly into two categories: structural and technological. Structural factors are those that influence who interacts with whom. Technological factors are those that influence the mode of interaction—face-to-face, one-to-many, e-mail, phone, and so forth. This chapter focuses primarily on the role of structural factors and existing models of the way they influence information diffusion and belief formation. We note in passing that there is also a great deal of work on the technological factors associated with information warfare; however, such concerns are not the focus here.

Collectively, the information diffusion and belief formation models and the empirical studies on which they are based suggest that an individual or group can control the attitudes, beliefs, and decisions of others by controlling the order in which the others receive information and the individuals from whom they receive it and by fostering the idea that others share the same beliefs. Social control does not require that information be hidden or that people be prevented from accessing it. It may be sufficient simply to make certain topics taboo so that people think they know each other's beliefs and spend little time discussing them. Or, to turn this argument on its head, educational programs designed to provide individuals with all the facts are not guaranteed to change people's beliefs. Research in this area suggests that in general, once an individual learns others' beliefs and concludes that they are the object of widespread social agreement, he/she will continue to hold those beliefs, despite repeated education to the contrary, until his/her perception of others' beliefs changes.

The next two sections examine the modeling of information diffusion and belief formation, respectively. These are followed by a discussion of the role of communications technology in the diffusion of information and the formation of beliefs. The final section presents conclusions and goals in the area of information warfare.

MODELS OF INFORMATION DIFFUSION

Branscomb (1994:1) argues that "in virtually all societies, control of and access to information became instruments of power, so much so that information came to be bought, sold, and bartered by those who recognized its value." However, the ability of individuals to access information, to recognize its value, and to buy, sell, or barter it depends on their position in their social network. This simple fact, that the social or organizational networks in which individuals are embedded are a major determinant of information diffusion, has long been recognized (Rogers, 1983). Numerous empirical studies point to the importance of structure in providing, or preventing, access to specific information. Nonetheless, most models of information diffusion (and of belief formation) do not take structure into account.

Social or organizational structure can be thought of as the pattern of relationships in social networks. There are several ways of characterizing this pattern. One common approach is to think in terms of the set of groups of which an individual is currently or was recently a member (e.g., teams, clubs, project groups). The individual has access to information through each group.

A second common approach is to think in terms of the individual's set of relationships or ties to specific others, such as who gives advice to whom, who reports to whom, and who is friends with whom. A familiar organizational structure is the command and control (C2) or command, control, communications, and intelligence (C3I) architecture of a unit (see also Chapter 10). In most social or organizational structures, multiple types of ties link individuals (see, e.g., Sampson, 1968, and Roethlisberger and Dickson, 1939). This phenomenon is referred to as multiplexity (White et al., 1976). For example, in the C3I architecture of a unit, there are many structures, including the command structure, the communication structure, the resource access structure, and the task structure. Different types of ties within the organization can be used to access different types of information.
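
Multiplexity can be made concrete with a small data structure. The following Python sketch is an illustration only, not a model from the literature cited here: it stores one layer of ties per tie type, so the same pair of people can be linked by a command tie, a communication tie, or both, and a query can be restricted to the ties that carry a given kind of information. The class name `MultiplexNetwork` and the role labels in the usage example are hypothetical.

```python
from collections import defaultdict


class MultiplexNetwork:
    """Hypothetical multiplex network: one directed tie layer per tie type."""

    def __init__(self):
        # tie type -> person -> set of people that person is tied to
        self.layers = defaultdict(lambda: defaultdict(set))

    def add_tie(self, tie_type, source, target):
        self.layers[tie_type][source].add(target)

    def contacts(self, person, tie_type):
        """People reached from `person` through one tie type only."""
        layer = self.layers.get(tie_type, {})
        return set(layer.get(person, set()))

    def all_contacts(self, person):
        """People reached from `person` through any tie type."""
        reached = set()
        for layer in self.layers.values():
            reached |= layer.get(person, set())
        return reached


# Usage with hypothetical roles: the commanding officer (CO) commands the
# executive officer (XO) but also talks directly to the intelligence officer (S2).
net = MultiplexNetwork()
net.add_tie("command", "CO", "XO")
net.add_tie("communication", "CO", "S2")
net.add_tie("communication", "XO", "S2")
print(net.contacts("CO", "command"))   # {'XO'}
print(sorted(net.all_contacts("CO")))  # ['S2', 'XO']
```

Restricting a query to one layer corresponds to asking what a person can learn through, say, the command structure alone, as opposed to the informal communication structure.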

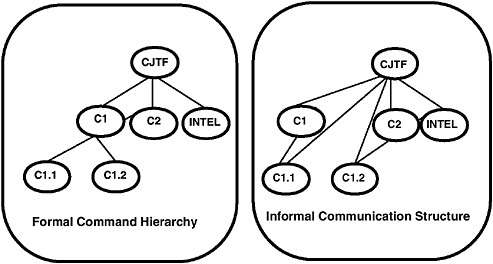

A common way of representing social structure is as a network. For example, Figure 11.1 shows two illustrative structures. The structure on the left is known as a formal structure, or organization chart; it is characterized by a pattern

FIGURE 11.1 Illustrative structures as networks.

of command or reporting ties. The structure on the right is known as an informal structure; it is characterized by a pattern of ties among individuals based on who communicates with whom. When social or organizational structures are represented as a network, the nodes can be groups, teams, individuals, organizations, subunits, resources, tasks, events, or some combination of these. The ties can be based on any type of linkage, such as economic, advice, friendship, command, control, access, trust, or allied.

The study of social networks is a scientific discipline focused on examining social structure as a network and understanding its impact on individual, group, organizational, and community behavior (Wellman and Berkowitz, 1988). Most models combine the formal and informal structures, and in many empirical studies, the informal subsumes the formal. A large number of measures of structure and of the individual's position within the structure have been developed (Scott, 1981; Wellman, 1991; Wasserman and Faust, 1994). Empirical studies of information diffusion have demonstrated the utility of this approach and the value of many of these measures for understanding the diffusion process (Coleman et al., 1966; Burt, 1973, 1980; Valente, 1995; Morris, 1994; Carley and Wendt, 1991). These studies suggest that what information individuals have, what decisions they make, what beliefs they hold, and how strongly they hold those beliefs are all affected by their position (peripheral or central) in the network, the number of other individuals with whom they communicate, and the strength of the relationships with those other individuals. Some empirical research suggests that each individual has an internal threshold for accepting or acting on new information that depends on the type of information and possibly on individual psychological traits, such as the need for acceptance (Valente, 1995). This threshold can be interpreted as the number of surrounding others who need to accept or act on a piece of information before the individual in question does so. However, much of the work on thresholds is more descriptive than prescriptive.
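
The threshold interpretation above can be illustrated with a simple synchronous simulation. In this sketch (an assumption-laden illustration, not Valente's own model), each person adopts once the number of his or her neighbors who have already adopted reaches that person's threshold; a person whose threshold exceeds his or her number of contacts never adopts. The function name and the example network are hypothetical.

```python
def threshold_diffusion(neighbors, thresholds, seeds, max_steps=100):
    """Synchronous threshold model (illustrative sketch).

    neighbors:  {person: list of people he or she observes}
    thresholds: {person: number of adopting neighbors needed to adopt}
    seeds:      people who have adopted at the start
    Returns the set of adopters once no one else changes (or after max_steps).
    """
    adopted = set(seeds)
    for _ in range(max_steps):
        newly = {
            p
            for p, contacts in neighbors.items()
            if p not in adopted
            and sum(q in adopted for q in contacts) >= thresholds[p]
        }
        if not newly:
            break
        adopted |= newly
    return adopted


# Hypothetical four-person unit: a and d each talk to b and c; b and c talk
# to everyone. Only a adopts at the outset.
neighbors = {"a": ["b", "c"], "b": ["a", "c", "d"],
             "c": ["a", "b", "d"], "d": ["b", "c"]}
thresholds = {"a": 1, "b": 1, "c": 2, "d": 2}
print(sorted(threshold_diffusion(neighbors, thresholds, {"a"})))
# ['a', 'b', 'c', 'd']  (b adopts, then c, then d)
```

Raising d's threshold to 3 stops the diffusion at a, b, and c, because d has only two contacts; the same seed and network can thus yield different outcomes depending solely on individual thresholds.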

There is almost no work, either empirical or modeling, that directly links network and psychological factors. The first empirical attempts at making such a link include the work of Kilduff and Krackhardt (1994) and Hollenbeck et al. (1995b). In terms of modeling, Carley's (1990, 1991a) constructural model links cognition (in terms of information) and structure but does not consider other aspects of human psychology.

While many researchers acknowledge the importance of social structure to information diffusion (Katz, 1961; Rapoport, 1953), there are relatively few models of how the social structure affects the diffusion process (Rogers, 1983:25). Empirical studies have shown that differential levels of ties within and between groups, the strength of ties among individuals, and the level of integration all affect information diffusion (see, for example, Burt, 1973, 1980; Coleman et al., 1966; Granovetter, 1973, 1974; and Lin and Burt, 1975).

There are several computational models of information diffusion. Most such models are limited in one or more of the following ways: structural effects are completely ignored, the social or organizational structure is treated as static and unchanging, only a single piece of information diffuses at a time, or the role of communications technology is not considered. These limitations certainly decrease the overall realism of these models. However, it is not known whether these limitations are critical with regard to using these models in an information warfare context.

Early diffusion models were based on a contagion model, in which everyone who came into contact with new information learned it. Following are brief descriptions of three current models: (1) the controversial information model, (2) the constructural model, and (3) the dynamic social impact model. These models are less limited than those based on the contagion model. Each is also capable of being combined with belief formation models such as those discussed in the next section.
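
For contrast with the models that follow, a pure contagion model can be written as a breadth-first traversal of the contact network: every contact with an informed person transmits the information, so the only structural quantity that matters is distance from the original sources. The sketch below is a generic illustration; the function name and example network are hypothetical.

```python
from collections import deque


def contagion(neighbors, seeds):
    """Pure contagion: anyone who contacts an informed person learns the
    information. Returns {person: step at which he or she learned it};
    people unreachable from the seeds never learn it and are omitted."""
    learned_at = {s: 0 for s in seeds}
    frontier = deque(seeds)
    while frontier:
        p = frontier.popleft()
        for q in neighbors.get(p, []):
            if q not in learned_at:
                learned_at[q] = learned_at[p] + 1
                frontier.append(q)
    return learned_at


# Hypothetical chain a-b-c plus an isolated person e.
neighbors = {"a": ["b"], "b": ["a", "c"], "c": ["b"], "e": []}
print(contagion(neighbors, ["a"]))  # {'a': 0, 'b': 1, 'c': 2}
```

Because no one ever declines information in this model, it cannot represent thresholds, competing beliefs, or structure that changes as people learn, which are the features the three models below add.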

The Controversial Information Model

The controversial information model (Krackhardt, forthcoming) is based on a sociobiological mathematical model of information diffusion developed by Boorman and Levitt (1980). This model of information diffusion applies only to the diffusion of "controversial" innovations or beliefs. A controversial innovation or belief is one whose value (and subsequent adoption) is socially and not rationally determined; that is, there is no exogenous superior or inferior quality to the innovation or belief that determines its eventual adoption. Thus, whether an individual adopts the innovation or belief depends not just on what he/she knows, but also on whether others also adopt it.

In this model, there are two competing innovations or beliefs. Each individual can adopt or hold only one of the two at a time. Individuals are subdivided into a set of groups, each of which has a prespecified pattern of connection with other groups. Individuals and the innovations or beliefs to which they subscribe can move between groups, but only along the prespecified connections. Whether individuals remain loyal to an innovation or belief depends on whether they encounter other individuals who subscribe to it. The particular pattern in which groups are connected, the rate of movement of individuals between groups, the size of the groups, the likelihood of individuals changing the innovations or beliefs to which they subscribe as they encounter others, and the initial distribution of innovations and beliefs across groups all influence the rate at which a particular innovation or belief will be adopted and which innovation or belief will dominate.

Using a computational version of this model, Krackhardt (forthcoming) examines the conditions under which an innovation may come to dominate an organization. The results from Krackhardt's model parallel the theoretical results obtained by Boorman and Levitt (1980):

- In a large undifferentiated organization, no controversial innovation can survive unless a large proportion of the organization's members adopt it at the outset.
- There are structured conditions under which even a very small minority of innovators can take over a large organization.
- Once an innovation has taken hold across the organization, it is virtually impossible for the preinnovation state to recover dominance, even if it begins with the same structural conditions the innovators enjoyed.

Contrary to intuition and some of the literature on innovation, the conditions that lead to unexpected adoption across an entire organization are enhanced by the following factors:

- A high level of viscosity (that is, a low degree of free movement of and interaction among people throughout the organization)
- The initial group of innovators being located at the periphery of the organization, not at the center

The controversial information model has several key features. First, the impact of various structures on information diffusion and the resultant distribution of beliefs within groups can be examined. For example, the model could be used to explore different C3I structures. Second, two competing messages or beliefs diffuse simultaneously; they are treated as being in opposition, so that an individual cannot hold both simultaneously. A limitation of this model, however, is that the structure itself (the pattern of groups) is predefined and does not change dynamically over time. Thus this model is most applicable to examining information diffusion in the short run.
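
The mechanism described in this section can be sketched as a small stochastic simulation. This is a loose illustration under stated assumptions, not Krackhardt's or Boorman and Levitt's formulation: beliefs are labeled "A" and "B"; on each step each person moves to a randomly chosen connected group with probability `move_rate`, then meets one random member of his or her current group and adopts that member's belief with probability `switch_prob`. A low `move_rate` plays the role of high viscosity. All names and parameters are hypothetical.

```python
import random


def simulate_controversial(groups, links, steps, move_rate, switch_prob, seed=0):
    """Loose sketch of two competing beliefs spreading among linked groups.

    groups: {group name: list of beliefs, "A" or "B", one per member}
    links:  {group name: list of groups its members may move to}
    Returns a new {group name: list of beliefs}; the input is not modified.
    """
    rng = random.Random(seed)
    groups = {g: list(members) for g, members in groups.items()}
    for _ in range(steps):
        # Movement: people leave only along the prespecified connections.
        moved = {g: [] for g in groups}
        for g, members in groups.items():
            for belief in members:
                if links.get(g) and rng.random() < move_rate:
                    moved[rng.choice(links[g])].append(belief)
                else:
                    moved[g].append(belief)
        groups = moved
        # Encounters: each person meets one other member of the same group
        # and adopts that member's belief with probability switch_prob.
        for members in groups.values():
            if len(members) < 2:
                continue
            before = list(members)
            for i in range(len(members)):
                j = rng.randrange(len(before) - 1)
                if j >= i:
                    j += 1  # skip person i so no one meets himself or herself
                if rng.random() < switch_prob:
                    members[i] = before[j]
    return groups


def share(groups, belief):
    """Fraction of the whole population holding `belief`."""
    everyone = [b for members in groups.values() for b in members]
    return everyone.count(belief) / len(everyone)


# Hypothetical core/periphery organization with a small minority of
# "B" innovators starting at the periphery.
start = {"core": ["A"] * 20, "edge": ["B"] * 5}
links = {"core": ["edge"], "edge": ["core"]}
result = simulate_controversial(start, links, steps=50,
                                move_rate=0.05, switch_prob=0.2, seed=1)
print(share(result, "B"))
```

Varying `move_rate`, the pattern in `links`, group sizes, and where the minority starts is the kind of experiment the results listed above describe; this toy version is not calibrated to reproduce those results.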

The Constructural Model

According to the constructural model, both the individual cognitive world and the sociocultural world are continuously constructed and reconstructed as individuals move concurrently through a cycle of action, adaptation, and motivation. During this process, not only does the sociocultural environment change, but social structure and culture coevolve in synchrony.

Carley (1991a) defines the following primary assumptions in describing the constructural model:

- individuals are continuously engaged in acquiring and communicating information
- what individuals know influences their choices of interaction partners
- an individual's behavior is a function of his or her current knowledge

According to the basic formulation (Carley, 1990, 1991a, 1995a; Kaufer and Carley, 1993), individuals engage in a fundamental interaction-shared knowledge cycle. During the action phase of the cycle, individuals can communicate to and receive information from any source. During the adaptation phase, individuals can learn, augment their knowledge, alter their beliefs, and as a result cognitively reposition themselves in the sociocultural environment. During the motivation phase, individuals select a potential interaction partner or artifact.
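
One way to sketch this interaction-shared knowledge cycle is shown below. In the spirit of the model, knowledge is a set of undifferentiated facts and partners are chosen according to how much knowledge they share; the specific choices here (partner weight equal to the number of shared facts plus one, and one randomly chosen fact communicated per interaction) are our assumptions, not Carley's published equations. The function name is hypothetical, and at least two individuals are required.

```python
import random


def constructural_step(knowledge, rng):
    """One interaction-shared knowledge cycle for everyone (illustrative).

    knowledge: list of sets of fact ids, one set per individual (updated in
    place). Requires at least two individuals.
    """
    snapshot = [set(k) for k in knowledge]  # everyone acts on the same state
    n = len(knowledge)
    for i in range(n):
        others = [j for j in range(n) if j != i]
        # Motivation: choose a partner, weighted by shared facts; the +1
        # lets individuals with nothing in common still meet occasionally.
        weights = [len(snapshot[i] & snapshot[j]) + 1 for j in others]
        partner = rng.choices(others, weights=weights, k=1)[0]
        # Action: the partner communicates one fact it knows, if any.
        if snapshot[partner]:
            fact = rng.choice(sorted(snapshot[partner]))
            # Adaptation: the fact is added to the individual's knowledge.
            knowledge[i].add(fact)
    return knowledge


# Usage: three individuals with partly overlapping knowledge.
rng = random.Random(0)
knowledge = [{1, 2}, {2, 3}, {4}]
for _ in range(20):
    constructural_step(knowledge, rng)
print(knowledge)
```

Because partner choice depends on shared knowledge and shared knowledge changes with every exchange, who interacts with whom and what each person knows evolve together, which is the coevolution the next paragraph describes.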

The constructural model suggests that the concurrent actions of individuals necessarily lead to the coevolution of social structure and culture. Further, the impact of any individual on the sociocultural environment is affected by that individual's integration into the society, both structurally and culturally, and the individual in turn impacts the sociocultural environment by engaging others in exchanges of information. Thus, for example, the rapidity with which a new idea diffuses is a function of the innovator's position in the sociocultural environment, and that position is in turn affected by the diffusion of the new idea. A consequence is that different sociocultural configurations may facilitate or hinder information diffusion and consensus formation. Because communications technologies affect the properties of the actor and hence the way the actor can engage others in the exchange of information, they influence which sociocultural configurations best facilitate information diffusion and consensus formation.

In the constructural model, beliefs and attitudes mediate one's interpersonal relationships through a process of "social adjustment" (Smith et al., 1956; Smith, 1973), and social structure (the initial social organization that dictates who will interact with whom) affects what attitudes and beliefs the individual holds (Heider, 1946), as well as other behavior (White et al., 1976; Burt, 1982). It follows from this model that if those with whom the individual interacts hold an erroneous belief, the individual can become convinced of that belief despite factual evidence to the contrary and will in turn persuade others of its validity.

The constructural model has several key features. First, the diffusion of novel information through different social and organizational structures can be examined. These structures can, though they need not, change dynamically over time. Second, multiple messages (many more than two) can diffuse simultaneously. In this case, the messages compete for the individual's attention. These messages are not valued and so do not reflect beliefs. Thus, in contrast with the controversial information model, the individuals in the constructural model can hold all messages simultaneously. Beliefs are calculated on the basis of what messages are held. Third, some types of communications technologies can be represented within this model as actors with special communicative properties or as alterations in the way individuals interact. A limitation of this model, however, is that the messages or pieces of information are undifferentiated. Thus, content or type of information cannot influence action, nor can individuals choose to communicate a specific piece of information in preference to another.

Dynamic Social Impact Theory

Latane's dynamic social impact theory (Latane, 1996; Huguet and Latane, 1996) suggests that individuals who interact with and influence each other can produce organized patterns at the group or unit level that serve as a communicable representation identifiable by others. A key feature of this model is that individuals tend to be most influenced by those who are physically nearby. Thus spatial factors that influence who interacts with whom can result in locally consistent patterns of shared attitudes, meaning, and beliefs. Empirical evidence supports the idea that through interaction over time, group members become more alike, and their attitudes and beliefs become correlated (Latane and Bourgeois, 1996). Further, empirical evidence confirms that the closer individuals are physically, the more frequent are the interactions they recall. Results suggest that the relationship between distance and interaction frequency may be described by an inverse power law with a slope of -1 (Latane et al., 1995). Latane (1996) uses an evolutionary approach to suggest how communication can lead to changes in attitude as individuals develop cognitive bundles of information that then become distributed through the social space.
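
Written out, an inverse power law with a slope of -1 on log-log axes says that recalled interaction frequency f falls in proportion to distance d; here k is a scaling constant (the notation is ours, not Latane et al.'s):

```latex
f(d) = k\,d^{-1}
\quad\Longleftrightarrow\quad
\log f(d) = \log k - \log d
```

Under this relation, doubling the distance halves the expected frequency, since f(2d) = k/(2d) = f(d)/2.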

A simplified version of the associated simulation model can be characterized as a modified game of artificial life. Individuals are laid out spatially on a grid and can interact with those nearest (e.g., those to the north, south, west, and east). They begin with one of two competing beliefs (or messages or attitudes) that diffuse simultaneously and are treated as being in opposition so that an individual cannot hold both simultaneously. Initially, beliefs may be distributed randomly. Over time, however, individuals come to hold beliefs similar to those held by others near them. A limitation of this model is that the social structure is typically defined as the set of physically proximal neighbors in some type of city-block pattern. Therefore, all individuals typically have the same number of others with whom they can interact. An approach similar to that of Latane is taken in the work on A-Life by Epstein and Axtell (1997).
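
The simplified simulation can be sketched as follows. Beliefs are coded 0 and 1; on each step every cell adopts the majority belief among itself and its north, south, east, and west neighbors. The grid wraps around at the edges so that, as in the city-block pattern described above, every individual has the same number of neighbors. Counting the cell's own belief in the vote, the wraparound, and all function names are our assumptions; published versions of the model may differ in detail.

```python
import random


def random_grid(size, seed=0):
    """Hypothetical initializer: beliefs 0/1 assigned at random."""
    rng = random.Random(seed)
    return [[rng.randint(0, 1) for _ in range(size)] for _ in range(size)]


def social_impact(grid, steps):
    """Each step, every cell adopts the majority belief among itself and its
    N/S/E/W neighbors. The grid wraps around (a torus), so every cell has
    exactly four neighbors and five votes, and ties cannot occur."""
    size = len(grid)
    for _ in range(steps):
        new = [[0] * size for _ in range(size)]
        for r in range(size):
            for c in range(size):
                ones = grid[r][c]
                for dr, dc in ((-1, 0), (1, 0), (0, -1), (0, 1)):
                    ones += grid[(r + dr) % size][(c + dc) % size]
                new[r][c] = 1 if ones >= 3 else 0
        grid = new
    return grid


def agreement(grid):
    """Fraction of east and south neighbor pairs that share a belief."""
    size = len(grid)
    same = total = 0
    for r in range(size):
        for c in range(size):
            for dr, dc in ((1, 0), (0, 1)):
                same += grid[r][c] == grid[(r + dr) % size][(c + dc) % size]
                total += 1
    return same / total


start = random_grid(20, seed=3)
print(agreement(start), agreement(social_impact(start, 10)))
```

Comparing `agreement` before and after a run is one simple way to check whether locally consistent clusters of belief have formed. An isolated dissenter surrounded by the opposite belief is converted in one step, while a contiguous band of like-minded neighbors can persist.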

MODELS OF BELIEF FORMATION

Models and theories of belief (and attitude) formation range from those that focus on social processes to those that focus almost exclusively on psychological processes. Four lines of research in this area are discussed below: mathematical models of belief formation in response to message passing, belief networks, social information processing models, and transactive memory.

Mathematical Models Focusing on Information Passing

Much of the social psychology work on belief formation focuses on how message, message content, and sender attributes affect beliefs. Two important theoretical traditions in this respect are reinforcement theory (Fishbein and Ajzen, 1975; Hunter et al., 1984) and information processing theory (Hovland and Pritzker, 1957; Anderson and Hovland, 1957; Anderson, 1959; Anderson, 1964; Anderson, 1971; Hunter et al., 1984). In contrast with these social-psychological models, social network models of belief formation focus on how the individual's position in the social network and the beliefs of other group members influence the individual's beliefs. These social network models are often referred to as models of social influence.

Empirical and theoretical research on belief formation leads to a large number of findings or predictions, some of which conflict. For example, one perspective, supported by some empirical evidence, holds that people adopt beliefs that meet particular psychological (often emotional) needs (Katz, 1960; Herek, 1987); hence erroneous beliefs might be held because they reduce stress or increase feelings of self-esteem. Stability of beliefs would be attributable, at least in part, to emotional stability. In contrast, the symbolic interactionist perspective suggests that the stability of social structures promotes stability of self-image and hence a resistance to change in beliefs, attitudes, and behaviors (Stryker, 1980; Stryker and Serpe, 1982; Serpe, 1987; Serpe, 1988). There is some empirical evidence to support this perspective as well. One implication of this perspective, drawn from the models, is that erroneous beliefs would be held if the social structure were such that interactions reinforced those beliefs. To be sure, much of the work in this area has also looked at the content of the message and whether the message coming from the social structure was positive or negative. However, the point here is that a great deal of the literature also focuses almost exclusively on the structure (e.g., social context, who talks to whom) and its impact on attitudes independent of the content of specific messages. Attitude reinforcement theory suggests that erroneous beliefs persist only if they are extreme (Fishbein and Ajzen, 1975; Ajzen and Fishbein, 1980; Hunter et al., 1984). Information processing theories suggest that erroneous beliefs persist only if the most recent information supports an erroneous conclusion (Hovland and Pritzker, 1957; Anderson and Hovland, 1957; Anderson, 1959; Anderson, 1964).

Both reinforcement theory and information processing theory model the individual as receiving a sequence of messages about a topic. In these models, the individual adjusts his or her belief about the topic on the basis of each new message. A message is modeled as the information the individual learns during an interaction (i.e., either a fact or someone's belief). These theories differ, however, in the way the adjustment process is modeled. Reinforcement theorists and information processing theorists generally do not distinguish among types of messages, nor do they postulate that different types of messages will affect the individual's attitude in different ways.

Reinforcement theorists argue that the change in belief caused by a message is in the direction of the message (Hunter et al., 1984). Hunter et al. (1984) formulate Fishbein's model as a model of belief change by essentially stipulating that individuals' beliefs about an object change as they receive new messages, each of which alters an underlying belief about the presence of an attribute for that object. In this model, there is a gradual change in these underlying beliefs as new messages are received. Thus positive information leads to a more positive belief, negative information leads to a more negative belief, and neutral information has no effect. The individual's belief at a particular time is simply a weighted sum of the messages he or she has received. Various models of this ilk differ in whether the weights are a function of the individual, the message, the particular attribute, or some combination of these.
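
In symbols (our notation, not Hunter et al.'s), let m_k be the k-th message received, coded positive, negative, or zero for a neutral message, let w_k be its nonnegative weight, and let the initial belief be b_0 = 0. The reinforcement model described above is then:

```latex
b_t = \sum_{k=1}^{t} w_k\, m_k = b_{t-1} + w_t\, m_t, \qquad w_t \ge 0
```

Because each message enters only through its own sign and weight, a positive message can only raise the belief, a negative message can only lower it, and a neutral message (m_t = 0) leaves it unchanged.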

In contrast to reinforcement models, information processing models argue that the individual receives a sequence of messages and adjusts his or her belief in the direction of the discrepancy between the message and the current belief (Hunter et al., 1984). The various information processing models differ in whether the weights that determine the extent to which individuals shift their beliefs are a linear or nonlinear function of the individual, the message, or some combination of the two. In contrast with reinforcement theory, however, these weights are not a function of the individual's belief or the underlying beliefs (see, for example, Hovland and Pritzker, 1957; Anderson, 1959; Anderson, 1964; Anderson, 1971). Thus in these models, positive information may lead to a less positive belief if the message is less positive than the current belief.
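
The contrast between the two update rules can be seen in a few lines of Python. Beliefs and messages are assumed to lie on a common scale from -1 (most negative) to +1 (most positive), with 0 neutral, and a single fixed weight is used; both assumptions, and the function names, are for illustration only.

```python
def reinforcement_update(belief, message, weight):
    """Reinforcement: the belief shifts in the direction of the message."""
    return belief + weight * message


def discrepancy_update(belief, message, weight):
    """Information processing: the belief shifts toward the message, in
    proportion to the gap between the message and the current belief."""
    return belief + weight * (message - belief)


# A mildly positive message reaching someone with a strongly positive belief.
belief, message, weight = 0.8, 0.3, 0.5
print(round(reinforcement_update(belief, message, weight), 2))  # 0.95
print(round(discrepancy_update(belief, message, weight), 2))    # 0.55
```

Under the reinforcement rule the positive message makes the belief more positive; under the discrepancy rule the same message makes it less positive because the message is less positive than the current belief. A neutral message (0) leaves the belief unchanged under the first rule but pulls it toward neutral under the second.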

Numerous empirical studies have suggested that more established beliefs are more difficult to change (Cantril, 1946; Anderson and Hovland, 1957; Danes et al., 1984). In addition, Danes et al. (1984:216), in an empirical study controlling for both the amount of information already known by the individual and the extremity of the belief, found that "beliefs based on a large amount of information are more resistant to change" regardless of their level of extremity, and that extreme beliefs based on little information are less resistant to change than extreme beliefs based on much information. Thus extreme beliefs will be resistant to change to the extent that they are those for which the individual has the most information. A large number of empirical studies (see Hunter et al., 1984) have found support for the discrepancy hypothesis of information processing theory (Whittaker, 1967; Insko, 1967; Kiesler et al., 1969). A detailed analysis of these empirical results, however, leads to the following conclusions: (1) extreme beliefs, unless associated with more information, are generally more affected by contradictory information; (2) neutral messages may or may not lead to a belief change, but if they do, the change is typically that predicted by a discrepancy model; and (3) belief shifts are in the direction of the message for non-neutral messages. Thus these results provide basic support for the idea that there is a negative correlation between belief shift and current belief regardless of message content, as predicted by information processing theory, but not for the idea that messages supporting an extreme belief will evoke a belief shift in the opposite direction.
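
The effect of accumulated information reported by Danes et al. can be illustrated, under an assumption of our own, by letting the weight in a discrepancy update shrink as information accumulates. With weight 1/(n + 1), where n is the number of messages already received, the belief is simply the running average of all messages, so a belief resting on many messages moves less in response to the same contradictory message. This is an illustrative choice with a hypothetical function name, not the model fitted by Danes et al.

```python
def experience_weighted_update(belief, n_received, message):
    """Shift toward the message with weight 1/(n_received + 1); returns the
    new belief and the updated message count. Starting from n_received=0,
    this makes the belief the running mean of all messages received."""
    weight = 1.0 / (n_received + 1)
    return belief + weight * (message - belief), n_received + 1


# Two people with the same extreme belief (0.9) who meet the same strongly
# contradictory message (-1.0); one belief rests on 1 message, the other on 9.
few, _ = experience_weighted_update(0.9, 1, -1.0)
many, _ = experience_weighted_update(0.9, 9, -1.0)
print(round(few, 2), round(many, 2))  # -0.05 0.71
```

The belief based on a single message is nearly reversed, while the equally extreme belief based on nine messages shifts much less.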

Both the reinforcement models and the information processing models are astructural; that is, they do not consider the individual's position in the underlying social or organizational structure. This is not the case with social network models of belief. Certainly, the idea that beliefs are a function of both individuals' social positions and their mental models of the world is not new, and indeed reflects ideas of researchers such as James (1890), Cooley (1902), Mead (1934), and Festinger (1954). Numerous studies have provided ample empirical evidence that social pressure, in terms of what the individual thinks others believe and normative considerations, affects an individual's attitudes (e.g., Molm, 1978; Humphrey et al., 1988). However, formal mathematical treatments of this idea are rare. In a sense, the information diffusion models discussed above fall in the category of social network models. There also exists another class of models—referred to as social influence models—that ignore the diffusion of information and focus only on the construction of beliefs after information has diffused.

Belief Networks

One of the most promising approaches to understanding information warfare and unit-level behavior is to use hybrid models that combine models of agency with models of the social or communication network. By way of example, consider the formation of a unit-level belief.

Within units, beliefs and decision-making power are distributed across the members, who are linked together through the C3I architecture. One way to model belief formation and its impact on unit-level decision making is to treat the entire unit as an extremely large belief network. In that network, the different individuals act, in part, as nodes or collections of nodes. This image, however, is not accurate. Viewing the entire unit as a single belief network assumes that the connections within and between individuals are essentially the same. Moreover, it does not allow for the reduction in data that occurs when individuals interact. Such reductions occur when, for example, one individual paraphrases, forgets, interprets, or dismisses as unimportant comments made by others. Nor does this approach allow for the extrapolations of data that can occur when individuals interact; for example, individuals can decide to promote their own ideas rather than the group consensus.

An alternative approach is to model each subunit or group member as a belief network and to model the connections among these members as a communication or social network. In this approach, each subunit or individual takes in and processes information, alters its beliefs, and generates some decision or belief as output. These subunits or individuals, as nodes in the social network, then communicate this reduced and processed information (their decisions or beliefs) to others. This is a hybrid model that combines organizational and cognitive models: information is reduced and transformed within nodes and delayed by communication constraints between nodes. Such a model can combine the power of social network analysis to evaluate aspects of communication and hierarchy with the power of belief networks to evaluate aspects of individual information processing. In effect, the social network makes the belief networks dynamic.
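The hybrid idea can be sketched in a few dozen lines. The sketch rests on several illustrative assumptions:

- Each node stands in for a belief network but is reduced to a single number.
- Every node gives its own evidence and each peer report equal weight.
- Reports take one time step to arrive.

The class and function names, the equal weighting, and the one-step delay are all hypothetical.

```python
from statistics import mean


class Node:
    """A unit member (or subunit). A real model would use a full belief
    network here; this stand-in reduces everything it knows to one number."""

    def __init__(self, name, evidence):
        self.name = name
        self.evidence = list(evidence)  # private observations
        self.inbox = []                 # peer reports delivered but not yet read
        self.belief = mean(self.evidence)

    def process(self):
        # Reduction within the node: own evidence and all unread reports
        # collapse into a single belief (equal weights, by assumption).
        self.belief = mean([mean(self.evidence)] + self.inbox)
        self.inbox = []


def simulate(nodes, links, steps):
    """links maps a node name to the names it reports to (e.g. the C3I graph).
    A report sent during one step is read only in the next (communication delay)."""
    by_name = {n.name: n for n in nodes}
    for _ in range(steps):
        # Each node sends its current belief, not its raw evidence.
        outgoing = [(dst, n.belief) for n in nodes for dst in links.get(n.name, [])]
        for n in nodes:
            n.process()
        for dst, value in outgoing:
            by_name[dst].inbox.append(value)
    return {n.name: round(n.belief, 3) for n in nodes}


def make_unit():
    return [Node("scout", [1.0]), Node("analyst", [0.0]), Node("commander", [0.5])]


links = {"scout": ["analyst"], "analyst": ["commander"]}
for steps in (1, 2, 4):
    print(steps, simulate(make_unit(), links, steps))
```

The commander never sees the scout's observation directly. It sees only the analyst's processed report, one step late. This combination of reduction within nodes and delay between them is what distinguishes this hybrid from a single unit-wide belief network.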

Social Information Processing

Numerous researchers have discussed and examined empirically the social processes that affect individuals' attitudes and behaviors in organizational units. This work has led to a number of theories about the way individuals process and use social information, including social comparison theory (Festinger, 1954), social learning theory (Bandura, 1977), social information processing theory (Salancik and Pfeffer, 1978), and social influence theory (Friedkin and Johnsen, 1990). The earliest and most influential of these was social comparison theory. Festinger (1954) argues that individuals observe and compare themselves with others and, if they see themselves as similar to those others, alter their attitudes accordingly. Salancik and Pfeffer (1978) argue, on the basis of both theory and empirical evidence, that individuals acting for their organizational units base their decisions not only on the information available about the problem, but also on what they know about other people and how those people see the problem. Numerous researchers have extended this point. For example, social network theorists argue that part of this social information is the set of relevant others, as determined by the social network in which the individual is embedded (Krackhardt and Brass, 1994). Friedkin and Johnsen (1990) extend these views as part of social influence theory and argue that individuals change their attitudes based on the attitudes of those with whom they are in direct contact. Their work includes a formal mathematical model of this process.
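The social influence model mentioned above is often written in the form y(t+1) = AWy(t) + (I - A)y(0), where:

- W is a row-normalized matrix of interpersonal influence;
- A is a diagonal matrix of susceptibilities, each between 0 and 1;
- y(0) holds each person's initial attitude.

The sketch below simply iterates that equation. The three-person network and the susceptibility values are hypothetical.

```python
def friedkin_johnsen(W, susceptibility, y0, steps=200):
    """Iterate y(t+1) = A W y(t) + (I - A) y(0).
    W: row-normalized influence weights (list of rows).
    susceptibility: diagonal of A, each in [0, 1]; 0 = never moves from y(0).
    y0: initial attitudes."""
    n = len(y0)
    y = list(y0)
    for _ in range(steps):
        y = [
            susceptibility[i] * sum(W[i][j] * y[j] for j in range(n))
            + (1 - susceptibility[i]) * y0[i]
            for i in range(n)
        ]
    return y


# Hypothetical three-person chain: member 0 is fully anchored at +1;
# members 1 and 2 start at 0 and are fairly susceptible to influence.
W = [[0.5, 0.5, 0.0],
     [0.25, 0.5, 0.25],
     [0.0, 0.5, 0.5]]
A = [0.0, 0.8, 0.8]
y0 = [1.0, 0.0, 0.0]
print([round(v, 3) for v in friedkin_johnsen(W, A, y0)])
```

Members 1 and 2 settle between their own initial view and the anchored member's view rather than reaching full consensus. The (I - A) term keeps pulling each of them back toward y(0).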

Currently, measures and ideas drawn from the social network literature are increasingly being used as the basis for operationalizing models of structural processes that influence belief and attitude formation (e.g., Rice and Aydin, 1991; Fulk, 1993; Shah, 1995). With the exception of the models discussed in the previous section, the processes by which individuals are influenced by others are generally not specified completely at both the structural and cognitive levels. Current models usually posit a process by which individuals interact with a small group of others. A typical model is the following (e.g., Rice and Aydin, 1991; Fulk, 1993):

$$y = aWy + Xb + e$$

where:

y is a vector of the attitudes or beliefs of self and others on some issue

X is a matrix of exogenous factors

W is a weighting matrix denoting who interacts with or has influence on whom

a is a constant

b is a vector of individualized weights

e is a vector of error terms

Models differ in the way attitudes are combined to determine how the group of others affects the individual. More specifically, models differ dramatically in the way they construct the W matrix.
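Setting the error term e to zero, the expected attitudes solve y = aWy + Xb, that is, y = (I - aW)^{-1}Xb. The sketch below builds W by row-normalizing a who-talks-to-whom adjacency matrix. That is only one of the many possible constructions of W. It then solves for y by fixed-point iteration, which converges when |a| < 1 and W is row-normalized. The network, the single exogenous factor, and the parameter values are hypothetical.

```python
def row_normalize(adj):
    """One common construction of W: scale each row of a who-talks-to-whom
    adjacency matrix to sum to 1 (rows with no ties stay all zero)."""
    W = []
    for row in adj:
        total = sum(row)
        W.append([v / total if total else 0.0 for v in row])
    return W


def expected_attitudes(W, a, X, b, iters=500):
    """Solve y = aWy + Xb (error term e set to zero) by fixed-point iteration.
    Converges when |a| < 1 and W is row-normalized."""
    n = len(W)
    xb = [sum(X[i][k] * b[k] for k in range(len(b))) for i in range(n)]
    y = list(xb)
    for _ in range(iters):
        y = [a * sum(W[i][j] * y[j] for j in range(n)) + xb[i] for i in range(n)]
    return y


# Hypothetical three-person chain 0 - 1 - 2; only person 0 is exposed to the
# single exogenous factor (say, a leaflet), with weight b = [1.0].
adj = [[0, 1, 0],
       [1, 0, 1],
       [0, 1, 0]]
X = [[1.0], [0.0], [0.0]]
b = [1.0]
print([round(v, 3) for v in expected_attitudes(row_normalize(adj), 0.5, X, b)])  # → [1.167, 0.333, 0.167]
```

With a = 0 the network term vanishes, and only person 0 is affected. With a = 0.5, the exposure spills over to persons 1 and 2 through W, and feedback amplifies person 0's own attitude. Swapping in a different construction of W changes these spillovers, which is where the models differ.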

Transactive Memory

Transactive memory (Wegner, 1995; Wegner et al., 1991) refers to the ability of groups to have a memory system exceeding that of the individuals in the group. The idea is that knowledge is stored as much in the connections among individuals as in the individuals. Wegner's model of transactive memory is based on the metaphor of human memory as a computer system. He argues that factors such as directory updating, information allocation, and coordination of retrieval that are relevant in linking computers together are also relevant in linking the memories of individuals together into a group or unit-level memory.
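Wegner's three computer-system factors can be illustrated with a toy group memory. This is a hypothetical illustration, not Wegner's model:

- Directory updating: the directory records who is responsible for each topic.
- Information allocation: new facts go to that member or, for a new topic, to the least-loaded member.
- Coordination of retrieval: queries go to whoever the directory names.

```python
class TransactiveGroup:
    """Toy unit-level memory (hypothetical). Each member stores some facts;
    the shared directory records which member is responsible for which topic."""

    def __init__(self, members):
        self.memories = {m: {} for m in members}  # member -> {topic: fact}
        self.directory = {}                       # topic -> responsible member

    def allocate(self, topic, fact):
        # Information allocation: a new topic goes to the least-loaded member
        # (ties broken by listing order), and the directory is updated.
        if topic not in self.directory:
            owner = min(self.memories, key=lambda m: len(self.memories[m]))
            self.directory[topic] = owner
        # Known topics go to the member already responsible for them.
        self.memories[self.directory[topic]][topic] = fact

    def retrieve(self, topic):
        # Coordination of retrieval: ask the member the directory points to.
        owner = self.directory.get(topic)
        return None if owner is None else self.memories[owner].get(topic)


unit = TransactiveGroup(["A", "B"])
unit.allocate("enemy_positions", "grid 41")
unit.allocate("fuel_status", "60 percent")
unit.allocate("enemy_positions", "grid 42")  # update routed back to A
print(unit.directory)                    # → {'enemy_positions': 'A', 'fuel_status': 'B'}
print(unit.retrieve("enemy_positions"))  # → grid 42
```

In this toy, no member holds both facts, yet the unit can answer both queries. The knowledge of who knows what lives in the directory, so losing the directory, rather than losing any one member, is what breaks retrieval.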

Empirical research suggests that the memory of natural groups is better than the memory of assigned groups even when all individuals involved know the same things (even for groups larger than dyads; see Moreland [forthcoming]). The implication is that for a group, knowledge of who knows what is as important as knowledge of the task. Transactive knowledge can improve group performance (Moreland et al., 1996). There is even a small computational model of transactive memory; however, both the theoretical reasoning and the model have thus far been applied primarily to dyads (two people).

ROLE OF COMMUNICATIONS TECHNOLOGY

The literature on information diffusion demonstrates the role of social structure (and, to a lesser extent, culture) in effecting change through information diffusion, but it ignores the role of communications technology. The dominant underlying model of communication in this work is one-to-one, face-to-face communication. The literature on belief formation, particularly the formal models, also ignores communications technology. Conversely, the literature on communications technology largely ignores the role of the extant social structure and the processes of belief formation. Instead, much of it focuses on technological features and usage (Enos, 1990; Rice and Case, 1983; Sproull and Kiesler, 1986), the psychological and social-psychological consequences of the technology (Eisenstein, 1979; Freeman, 1984; Goody, 1968; Kiesler et al., 1984; Rice, 1984), or historical accounts of its development (Innis, 1951; de Sola Pool, 1977; Reynolds and Wilson, 1968). Admittedly, a common question is whether communications technology will replace or enhance existing networks or social structures (Thorngren, 1977). There is also an abundance of predictions and evidence regarding changes to social structure due to the technology. For example, it has been posited that print made the professions possible by enabling regular and rapid contact (Bledstein, 1976) and that electronic communication increases connectedness and decreases isolation (Hiltz and Turoff, 1978). Nevertheless, research on communications technology, information diffusion, and belief formation has remained largely disassociated. One exception is the work on community structures by Wellman and colleagues (Haythornthwaite et al., 1995; Wellman et al., 1996). Another is the work by Rice and colleagues (for a review, see Rice, 1994). Mathematical and computational modelers are currently seeking to close this gap.

Empirical research has demonstrated that various communications technologies can have profound social and even psychological consequences (see, for example, Price, 1965; Rice, 1984; Sproull and Kiesler, 1991). Such consequences are dependent on various features of the technology. One salient feature of many technologies is that they enable mass or one-to-many communication. Another salient feature of many communications technologies, such as books and videotapes, is that they enable an individual's ideas to remain preserved over time and to be communicated without the individual being present, thus allowing communication at great geographical and temporal distances (Kaufer and Carley, 1993). In this sense, the technology enables the creation of artifacts that can themselves serve as interaction partners.

The few formal models that have attempted to incorporate aspects of communications technology have taken one of two approaches. The first models the technology by characterizing alterations in the communication channels (e.g., rate of information flow, number of others with whom one can simultaneously interact). This approach is used in the VDT model of Levitt et al. (1994). The second approach models the artifacts or artificial agents created by the technology, such as books, telegrams, and e-mail messages, and allows individuals to interact with them. This approach is used in the constructural model of Carley (1990, 1991a).
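The two approaches can be contrasted in a small sketch, which does not reproduce either VDT or the constructural model. In the first approach, technology is just a channel parameter: how many others a sender can reach per time step. In the second, technology creates an artifact object that stores a message and can be read by anyone at any later time, with no author present. All names and values here are hypothetical.

```python
import math


def steps_to_reach(n_receivers, capacity):
    """Approach 1: technology as a change in the channel. `capacity` is how
    many others one sender can address per time step (1 = face to face)."""
    return math.ceil(n_receivers / capacity)


class Artifact:
    """Approach 2: technology as a new kind of agent. A book, telegram, or
    e-mail message keeps its author's message and can be read directly."""

    def __init__(self, author, message):
        self.author = author
        self.message = message
        self.readers = []  # (reader, time step) pairs

    def read(self, reader, step):
        # The author need not be present: any reader, at any later step.
        self.readers.append((reader, step))
        return self.message


print(steps_to_reach(12, capacity=1), steps_to_reach(12, capacity=4))  # → 12 3
memo = Artifact(author="S3", message="bridge at grid 41 is out")
print(memo.read("new_XO", step=50))
```

The first approach changes only how fast a message spreads. The second adds a new interaction partner that carries a message across geographical and temporal distance.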

CONCLUSIONS AND GOALS

The argument that the individual who receives a message changes his or her attitude toward the source of the message as a function of the message is made by most communication theories (for a review, see Hunter et al., 1984), including reinforcement theory (Rosenberg, 1956; Fishbein, 1965; Fishbein and Ajzen, 1974; Fishbein and Ajzen, 1975; Ajzen and Fishbein, 1980); information processing theory (Hovland and Pritzker, 1957; Anderson and Hovland, 1957; Anderson, 1959; Anderson, 1964; Anderson, 1971); social judgment theory (Sherif and Hovland, 1961; Sherif et al., 1965); and affective consistency theories, such as dissonance theory (Newcomb, 1953; Festinger, 1957), balance theory (Heider, 1946; Heider, 1958), congruity theory (Osgood and Tannenbaum, 1955; Osgood et al., 1957), and affect control theory (Heise, 1977, 1979, 1987). The typical argument is that messages have emotive content and so provide emotional support or punishment; thus individuals adjust their attitude toward the source in order to enhance the level of support or decrease the level of punishment, or because they agree with the source, or some combination of these. Regardless of the specific argument, it follows from such theories that changes in both belief and attitude toward the source (1) should be systematically related and (2) will under most conditions be either positively or negatively correlated, and that change in attitude toward the source is a function only of the message, the individual's current belief, and the individual's current attitude toward the source. In other words, there is a cycle in which beliefs and attitudes change as a function of what information is communicated to the individual by whom, and with whom the individual interacts as these beliefs and attitudes change.
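The cycle described above can be illustrated with two coupled update rules. These rules are hypothetical and do not reproduce any one of the theories cited:

- Belief moves toward a message in proportion to how positively the source is regarded.
- Attitude toward the source rises when the message is close to the current belief and falls when it is far.

Under these rules, attitude change depends only on the message, the current belief, and the current attitude.

```python
def exchange(belief, attitude, message, rate=0.5, tolerance=0.5):
    """One message from a source.
    belief:   the receiver's belief, on a -1..1 scale.
    attitude: the receiver's attitude toward the source, on a 0..1 scale.
    Both new values are computed from the current belief and attitude."""
    gap = message - belief
    new_belief = belief + rate * attitude * gap              # liked sources persuade more
    new_attitude = attitude + rate * (tolerance - abs(gap))  # agreement raises liking
    new_attitude = min(1.0, max(0.0, new_attitude))
    return new_belief, new_attitude


# A source keeps sending a strongly contradictory message (-1.0).
belief, attitude = 0.2, 0.5
for step in range(4):
    belief, attitude = exchange(belief, attitude, message=-1.0)
    print(step, round(belief, 4), round(attitude, 4))
```

The source's early messages shift the belief, but each disagreement lowers the attitude toward the source. Once that attitude reaches zero, further messages have no effect. Changes in belief and in attitude toward the source are therefore systematically related, as the theories above predict.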

The strength of the diffusion models discussed in this chapter is that they focus on the dynamic by which information or beliefs are exchanged and on the resultant change in the underlying social network. By and large, however, the diffusion models are relatively weak in representing belief formation. In contrast, the strength of the belief formation and social influence models is their ability to accurately capture changes in beliefs. Their weakness is that they fail to represent change in the underlying social structure of who interacts with whom. The next step is to combine these approaches into a model that captures both structural change and belief change.
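The sketch below shows what such a combined model might look like. It uses a swapped-in bounded-confidence rule that is not drawn from the models discussed here. At each step, ties between people whose beliefs differ by more than a threshold are dropped (structural change). Each person then averages their belief with those of their remaining contacts (belief change). The threshold and the four-person chain are hypothetical.

```python
def coevolve(beliefs, ties, threshold, steps):
    """beliefs: list of floats; ties: set of frozenset({i, j}) pairs.
    Each step: (1) structural change, dropping ties whose endpoints disagree
    by more than `threshold`; (2) belief change, each person averaging their
    belief with the beliefs of their remaining contacts."""
    beliefs = list(beliefs)
    ties = set(ties)
    for _ in range(steps):
        ties = {t for t in ties if abs(beliefs[min(t)] - beliefs[max(t)]) <= threshold}
        new = []
        for i, b in enumerate(beliefs):
            contacts = [j for t in ties if i in t for j in t if j != i]
            new.append(sum([b] + [beliefs[j] for j in contacts]) / (1 + len(contacts)))
        beliefs = new
    return beliefs, ties


chain = {frozenset({0, 1}), frozenset({1, 2}), frozenset({2, 3})}
start = [0.0, 0.2, 0.8, 1.0]
print(coevolve(start, chain, threshold=0.3, steps=5))    # tie 1-2 breaks: two camps
print(coevolve(start, chain, threshold=1.0, steps=200))  # all ties kept: consensus
```

With a tight threshold, the network splits and two belief camps persist. With a loose one, the structure stays intact and the group converges. Neither a pure diffusion model nor a pure belief model would show this interaction.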

A cautionary note is also in order. Beliefs are typically more complex than the models described herein would suggest. For example, a single affective dimension is often not sufficient to capture a true belief (Bagozzi and Burnkrant, 1979; Schlegel and DiTecco, 1982). Factual evidence is not necessarily additive. Some people's beliefs may matter more to the individual than those of others (Humphrey et al., 1988), and the specific content of a message may affect its credibility. Adding such features to models like those described here would increase the models' realism and might enable even finer-grained predictions. These and other possible alterations to the models therefore warrant examination.

Finally, this chapter opened with examples of questions that are typical of an information warfare context. None of the current military models can even begin to address questions such as these. The nonmilitary models discussed in this chapter provide some basic concepts that could be used to address such questions, and each has at its core a simple mechanism that could be utilized in the military context. Each, however, would require significant alteration for this purpose.

Short-Term Goals

-

Augment existing models so that information about who communicates with or reports to whom and when is traceable and can be stored for use by other programs. Doing so would make it possible to add a module for tracking the impact of various information warfare strategies.

-

Incorporate social network measures of the positions of communicators and their mental models. Such measures are necessary for models of information warfare and information diffusion based on structural and psychological features of personnel.

-

Gather and evaluate structural information about who sends what to whom and when from war games. Given the ability to capture structural information routinely, it would be possible to begin systematically evaluating potential weak spots in existing C3I structures from an information warfare perspective. This information would also provide a basis for validating computational models in this area.

-

Develop visual aids for displaying the communication network or C3I structure being evaluated from an information warfare perspective. Such aids might rely on intelligent agent techniques for displaying graphs. None of the current models of diffusion, network formation, or vulnerability assessment across personnel have adequate visualization capabilities. While there are a few visualization tools for network data, they are not adequate, particularly for networks that evolve or have multiple types of nodes or relations. The capability to visualize these networks and the flow of information (both good and bad) would provide an important decision aid.

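The first two goals can be sketched together. Suppose a simulation or war game writes every message to a log of (time, sender, receiver, content) records. Network measures of each communicator's position can then be computed from that log afterward, and the log can be replayed over any time window. The record format and function names below are hypothetical. In-degree and out-degree serve only as the simplest positional measures; betweenness or other measures would be natural additions.

```python
from collections import namedtuple

# Hypothetical record format for a traceable communication log.
Message = namedtuple("Message", ["time", "sender", "receiver", "content"])


def degree_measures(log):
    """Return {person: (out_degree, in_degree)}, counting distinct partners."""
    sends_to, hears_from = {}, {}
    for m in log:
        sends_to.setdefault(m.sender, set()).add(m.receiver)
        hears_from.setdefault(m.receiver, set()).add(m.sender)
    people = set(sends_to) | set(hears_from)
    return {p: (len(sends_to.get(p, ())), len(hears_from.get(p, ()))) for p in people}


def window(log, start, end):
    """Messages sent in [start, end): the network as it stood in that interval."""
    return [m for m in log if start <= m.time < end]


log = [
    Message(1, "S2", "CO", "enemy armor sighted"),
    Message(2, "S3", "CO", "bridge out"),
    Message(3, "CO", "XO", "reroute column"),
    Message(4, "XO", "CO", "acknowledged"),
]
print(sorted(degree_measures(log).items()))
print(len(window(log, 1, 3)))  # → 2
```

Here every report flows into CO, whose high in-degree relative to everyone else may mark it as a potential weak spot from an information warfare perspective.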

Intermediate-Term Goals

-

Utilize multidisciplinary teams to develop prototype information warfare decision aids incorporating elements of both information diffusion and belief formation models. Efforts to develop such decision aids would illuminate the important military context issues that must be addressed. The development teams should include researchers in the areas of multiagent modeling, social networks, the social psychology of belief or attitude formation, and possibly graph algorithms. Such a multidisciplinary approach is necessary to avoid the reinvention of measures and methods already developed in the areas of social psychology and social networks.

-

Develop and validate models that combine a social-psychological model of belief formation with a social network model of information diffusion. Explore how to model an individual decision maker's belief and decision as a function of the source of the knowledge (from where and from whom). Such an analysis would be a way of moving away from a focus on moderators to a model based on a more complete understanding of belief formation. Such a model could be used to show conditions under which the concurrent exchange of information between individuals and the consequent change in their beliefs result in the formation or persistence of erroneous beliefs and so lead to decision errors. Even a simple model of this type would be useful in the information warfare context for locating potential weak spots in different C3 architectures. As noted, one of the reasons a model combining information diffusion and belief formation models is critical for the information warfare context is that commanders may alter their surrounding network on the basis of incoming information. For example, suppose the incoming information is ambiguous, misleading, contradictory, or simply less detailed than that desired. In the face of such uncertainty, different commanders might respond differently: some might respond by increasing their capacity to get more information; others might act like Napoleon and deal with uncertainty by "reducing the amount of information needed to perform at any given level" (Van Creveld, 1985:146).
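Even a very simple model shows how the concurrent exchange of information can entrench an erroneous belief. The sketch below uses repeated weighted averaging, a DeGroot-style rule swapped in for illustration. The network is a hypothetical three-person chain. One member, say one relying on a deceptive source, holds a wrong belief (1.0 where the truth is 0.0) and gives no weight to anyone else. Because the other members keep averaging, the whole chain drifts to the erroneous value.

```python
def degroot(W, beliefs, steps):
    """Repeated averaging: each person's new belief is the W-weighted mean
    of the current beliefs of the people they listen to (rows of W sum to 1)."""
    n = len(beliefs)
    for _ in range(steps):
        beliefs = [sum(W[i][j] * beliefs[j] for j in range(n)) for i in range(n)]
    return beliefs


# Truth is 0.0. Member 2 holds the erroneous value 1.0 and listens only to itself.
W = [[0.5, 0.5, 0.0],
     [0.25, 0.5, 0.25],
     [0.0, 0.0, 1.0]]
start = [0.0, 0.0, 1.0]
for steps in (1, 5, 50):
    print(steps, [round(b, 3) for b in degroot(W, start, steps)])
```

Changing who listens to member 2, for example by setting W[1][2] to zero and renormalizing row 1, is the kind of architectural variation such a model could be used to compare when locating weak spots.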

Long-Term Goals

-

Support basic research on when specific beliefs will be held and specific decisions will be made. Current research can say only whether beliefs, the rate of decision making, the confidence in decisions, and the likelihood of erroneous decisions are high or low. It needs to move to statements about which specific beliefs will be held or which decisions will be made. Basic research is needed toward the development and validation of models that combine detailed models of information technology, message content, belief formation, and the underlying social and organizational structure, and that are detailed enough to give specific predictions. In particular, emphasis should be placed on message content and its impact on the commander's mental model and resultant decisions. Controlling what information is available can affect intelligence decisions. However, without a thorough understanding of the role of cultural barriers, trust, and language barriers, detailed predictions about the impact of specific messages are not possible.

-

Support basic research on converting moderator functions into models of how people cope with novel and extensive information.