Risk-Assessment Practices for Pesticides

The California Department of Pesticide Regulation (DPR) conducts risk assessments as part of its mission to protect human health and the environment by regulating pesticide sales and use. Premarket evaluation of pesticide products that have been approved by the US Environmental Protection Agency (EPA) is used to determine whether a product can be used safely in California. The evaluation is based on EPA standards for registration and studies required by state statutes. The state may register a pesticide product without further assessment, implement restrictions on the use of the product that are more stringent than federal standards, or even deny registration. The premarket evaluation sometimes identifies the need for a more comprehensive risk assessment to support decisions about registering a product in California. Risk assessments are also undertaken by DPR during the re-evaluation of a registered pesticide and if a substantial health hazard resulting from exposure to a pesticide is identified (DPR 2011).

Risk assessments identify potential health hazards posed by active ingredients (AIs), characterize dose–response relationships, and evaluate exposure data to characterize potential risks to agricultural workers and the public. Over the last decade, advances in science and technical analysis have led to improvements in the risk-assessment process that make assessments more rigorous, transparent, and useful to risk managers. This chapter first reviews National Research Council reports that have made key recommendations for improving the practice of risk assessment; specific aspects of DPR’s assessments are evaluated in the context of those recommendations. DPR’s risk-assessment practices are then reviewed more generally to determine whether they reflect best practices and are optimized for the needs of DPR’s risk managers.

BEST PRACTICES IN RISK ASSESSMENT

Lessons from the Silver Book

In 2009, a National Research Council committee issued an influential report, Science and Decisions: Advancing Risk Assessment (the “Silver Book”), which was developed in response to a request from EPA. The report offers substantial guidance on improving the scientific status of risk assessments and on increasing their utility for decision-making. A number of EPA efforts to implement the recommendations found in the Silver Book are under way (EPA 2014), and DPR has asked the present committee to offer guidance on some of the ways in which those recommendations might be used to enhance its program.

The Silver Book reaffirms most of the risk-assessment principles and concepts first elucidated in the National Research Council’s 1983 report Risk Assessment in the Federal Government: Managing the Process (the “Red Book”): the framework within which risk assessments are to be conducted, the need for inference assumptions (defaults) when data and basic knowledge are lacking, the importance of guidelines that specify the types of scientific evidence and default assumptions that will be used in the conduct of risk assessments, and the important distinctions between risk assessment and risk management. The Silver Book builds on those principles and concepts to offer detailed guidance on scientific improvements in risk assessments, some of which can be implemented in the near term and some of which will require substantial study before they can be implemented. The Silver Book also focuses on improving the usefulness of risk assessments.

Improvements in Risk-Assessment Guidelines

Risk-assessment guidelines typically set forth and explain the types of scientific evidence to be relied on in assessing risk and offer guidance on how the evidence is to be collected, organized, and evaluated. Guidelines are necessary to justify and make explicit the specific assumptions that will be used to deal with uncertainties and data gaps, the various forms of extrapolation beyond the data needed to complete risk assessments, and how such issues as population and life-stage variability are to be handled. DPR provided the committee with the guidance documents that it has developed (see Appendix B) and indicated that its guidance on uncertainty factors and calculation of reference values (DPR 2011) is undergoing revision.

Extrapolation models and uncertainty factors, typically referred to as default assumptions, are derived on the basis of both scientific and policy considerations. The Silver Book makes a distinction between explicit defaults and “missing defaults” (implicit assumptions that have become ingrained in risk-assessment practice), and it recommends more explicit treatment of the missing defaults. The Red Book (NRC 1983) and later National Research Council risk-assessment publications, including the Silver Book, have emphasized that the selection of defaults for risk assessment involves policy choices that are of a different kind from those involved in risk management. Thus, the selection of default assumptions is based, to the extent possible, on current scientific understanding; in the absence of complete understanding, a “science-policy” choice is introduced to select the assumptions to be routinely used. The guideline-development process should be explicit with respect to the selections.

Two other principles related to the selection of defaults might be addressed in guidelines (see Chapter 6 of the Silver Book):

• The specification of defaults to be used in risk assessments ensures consistency among assessments and minimizes opportunities for inappropriate manipulation of risk assessments to achieve desired risk-management outcomes.

• Guidelines typically allow departures from default assumptions if compelling scientific data show the inapplicability, in specific cases, of a standard default. In such cases, the data are used rather than the standard defaults. Departures in specific cases should be scientifically justified.

DPR assessments often refer to “weight-of-evidence” (WOE) analyses as the basis of selecting specific uncertainty factors (from a variety of possible factors), but in many cases it is not obvious how the analyses led to the selection of the uncertainty factors. DPR’s guidance on default uncertainty factors (DPR 2011) is relatively brief and consists mostly of tables that list default values. Current efforts by DPR to revise its guidelines present an opportunity to provide better justification for default assumptions and more explicit guidance on the factors to be considered in selecting uncertainty factors and extrapolation models. That will help to ensure consistency between risk assessments and provide greater transparency.

On some occasions DPR has departed from its usual defaults, sometimes to use science-based data that have become available in specific cases and sometimes for reasons that are less clear. The committee recommends that DPR not only elucidate more clearly the bases of its defaults but also clarify the criteria to be used for departures from the usual defaults. Without such departure criteria, there is a great risk that the risk-assessment process will appear to be arbitrary and to be driven by risk-management objectives. Other technical refinements in risk-assessment guidelines are recommended in Appendix C.

Improving the Utility of Risk Assessment

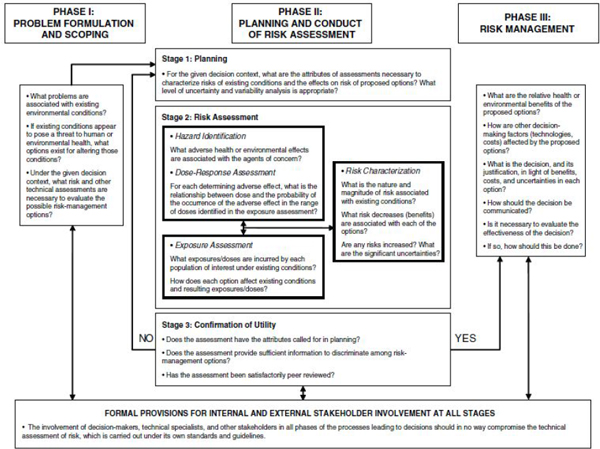

The Silver Book’s recommendations for improving the usefulness of risk assessment for decision-making might be the most important for enhancing DPR’s program because many of the recommendations depend on the uses to which risk assessments will be put. Chapter 8 of the Silver Book is devoted to the subject of risk assessment and decision-making and sets out a framework that maximizes the utility of risk assessments (see Figure 3-1 of the present report). The framework encompasses three phases: Phase I involves problem formulation and scoping, Phase II encompasses planning and conduct of risk assessment, and Phase III involves risk management. EPA efforts to adopt the Silver Book’s recommendations offer useful examples and practical guidance (see EPA 2014).

Phase I: Problem Formulation and Scoping

Phase I places emphasis on “problem formulation and the simultaneous (and recursive) identification of risk-management options and identification of the types of technical analyses, including risk assessments, that will be necessary to evaluate and discriminate among the options” (NRC 2009, p. 247). Developing the problem formulation with input from risk managers would allow risk-assessment results to be better understood in the proper framework for risk management. DPR’s decisions are fewer in number and less variable in type than those of EPA. The decision types could be formulated as the various problems that DPR is asked to solve, and risk-management options (interventions) to address the problems can be identified (see example questions posed in the box under Phase I of Figure 3-1). Within the statutes under which DPR operates, it might be possible to develop a general catalog of the decisions and options that is understood by DPR staff and stakeholders. Such a catalog would help to ensure that the decisions to be made and the options for addressing them are clearly set forth and that a consistent set of considerations is applied to each problem to be addressed. The results of the problem-formulation and scoping process are two products: “a conceptual model that explicitly identifies the stressors, sources, receptors, exposure pathways, and potential adverse human health effects that the risk assessment will evaluate and an analysis plan (or work plan) that outlines the analytic and interpretive approaches to be used in the risk assessment” (NRC 2009, p. 77). They are used in Phase II for planning risk assessments to discriminate between options. Unusual decision-making circumstances that are not covered in the general cases might arise, and they might require new planning, scoping, and problem formulation, but having a general catalog of decisions and options as a starting point could greatly increase program efficiency and transparency.
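The two Phase I products quoted above can be pictured as simple records. The following Python sketch is only an illustration under stated assumptions: the class names, field names, helper function, and example entries are hypothetical and are not drawn from any DPR or National Research Council template.

```python
from dataclasses import dataclass, field
from typing import List


@dataclass
class ConceptualModel:
    """Elements that a conceptual model is expected to identify (NRC 2009, p. 77)."""
    stressors: List[str]
    sources: List[str]
    receptors: List[str]
    exposure_pathways: List[str]
    health_effects: List[str]


@dataclass
class AnalysisPlan:
    """Analytic and interpretive approaches planned for the assessment."""
    decision_question: str
    options_to_compare: List[str]
    analytic_approaches: List[str] = field(default_factory=list)


def missing_elements(model: ConceptualModel) -> List[str]:
    """Return the names of conceptual-model elements that are still empty."""
    return [name for name, value in vars(model).items() if not value]


# Hypothetical entries, for illustration only.
model = ConceptualModel(
    stressors=["fumigant X"],
    sources=["soil fumigation"],
    receptors=["bystanders", "applicators"],
    exposure_pathways=["inhalation of ambient air"],
    health_effects=[],  # not yet specified
)
plan = AnalysisPlan(
    decision_question="Which option adequately reduces bystander exposure?",
    options_to_compare=["current use conditions", "larger buffer zone"],
)
print(missing_elements(model))
```

A completeness check of this kind is one way a general catalog of decisions and options might be applied consistently, although the actual content of such a catalog would be for DPR to define.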

Phase II: Planning and Conduct of Risk Assessment

Phase II sets the stage for deciding what data and technical analyses are required to provide the information necessary to address the specified problems. A conceptual model and an analysis plan (or work plan) are used to guide the process. Typically, the risk assessment to be undertaken is focused first on an existing situation and then on the effects of the various possible interventions on the existing risks.1,2 Risk reductions achieved with various risk-management options are thus the key results of Phase II of the risk-assessment process. The framework for risk assessment presented as Stage 2 in Figure 3-1 is identical with the Red Book framework (NRC 1983), and the same “rules” apply to its implementation (it is performed under specific risk-assessment guidelines and is free from interference by risk managers). Analyzing risk reductions associated with various risk-management options is solely a risk-assessment activity, and it is important that it be designed to provide risk managers with the information that they need to make good decisions. The latter is not possible without the type of planning called for in Phase II.

______________

1If an existing situation is seen to pose no important risks, no further analysis or action is necessary.

2Interventions typically affect human exposures, not other elements of the risk-assessment process.

FIGURE 3-1 A framework for risk-based decision-making that maximizes the utility of risk assessment. Source: NRC (2009, p. 11).

The committee emphasizes that the involvement of risk managers and other stakeholders in Phase II planning is not an inappropriate introduction of risk managers into the risk-assessment process. The involvement of risk managers should be focused on what needs to be evaluated to support good decisions, not on how the evaluation is to be done or what the outcomes should be.

Two other aspects of Phase II will be important for DPR to consider. First, risk assessments can be performed at various levels of technical sophistication, ranging from relatively simple “screening-level” assessments involving minimal data and modeling to highly detailed probabilistic assessments. Not all problems require the same level of risk assessment, and the first step of Phase II involves specifying the level of analysis appropriate for the problem under evaluation. Risk assessment is not an end in itself; its sole use is to support decision-making, so the assessment should always be “fit for purpose”.

Second, an important element of Phase II involves a check of the risk assessment to ensure that it has produced the information necessary to address the decision at hand (see Stage 3 in Figure 3-1). This check is performed before the assessment is moved into Phase III, the risk-management process.

Phase III: Risk Management

Risk assessment provides the critical information for decision-making. There may be circumstances in which other types of technical information (such as the relative effectiveness of various pesticides for plant protection and benefit–cost considerations) are also required for decisions (see box under Phase III in Figure 3-1), but health protection is of overriding importance. Thus, decision documents should be clear about how the risk-assessment results and the uncertainties associated with them are used to make the ultimate decision. The risk-management process must be completely transparent. Communication of risk decisions also needs to be clear and appropriate for the intended audience of stakeholders. Decisions do not automatically flow from the technical analyses, especially inasmuch as all such analyses contain uncertainties. Responsible risk management requires clear exposition of the path from risk assessment and other relevant technical analysis to the ultimate decision. The committee found that DPR’s risk-appraisal step is a highly valuable aid to the management process in this regard.

The committee emphasizes that risk managers are not to alter risk-assessment results. If the managers find the results not useful in some way, it is an indication that the important Phase I process of planning and scoping has failed. The proper course, if such circumstances arise, is to return to Phase I planning and the development of a more useful risk assessment.

Stakeholder Involvement

The Silver Book emphasizes the importance of stakeholder involvement in all phases of the decision framework (see box at the bottom of Figure 3-1). The involvement of stakeholders—including technical specialists, risk managers, and affected parties—is important to ensure the efficiency and relevance of the analyses undertaken to support decisions. The transparency of the entire process (all three phases) is essential, and DPR should take steps to ensure that there are no exceptions to this requirement. The agency might consider developing explicit guidance on how stakeholders can be involved (for example, see Chapter 3 of EPA 2014).

Scientific Improvements in Risk Assessment—Near Term

Two sets of recommendations in the Silver Book appear already to have an important presence in DPR’s risk-assessment practices. One concerns treatment of uncertainty and variability, and the second concerns the use of defaults. The committee’s evaluation of DPR’s current practices in those issues is discussed later. Here, the committee emphasizes additional features of the treatment of uncertainty and variability that might enhance DPR’s program further.

The Silver Book emphasizes the need to ensure that the level of uncertainty analysis is appropriate to the problem under evaluation. Uncertainty can be discussed simply at a purely qualitative level or, at the other extreme, probabilistically. It is a principle that analysis of risk and uncertainty should be carried out at a level of detail and sophistication appropriate to the problem at hand, and the appropriate level should be specified before the analysis is undertaken. (See Chapter 4 of the Silver Book for guidance on the “design” of risk assessments.) One goal of such recommendations is to avoid wasting time and resources on unnecessary technical analysis. It is not clear that DPR’s current approach to uncertainty analysis conforms to those principles.

The Silver Book makes a number of recommendations for improving the selection and use of defaults, which DPR should consider in updating its guidance documents. For example, the agency should begin developing explicit defaults to use in place of missing defaults, such as defaults for human variation in susceptibility to cancer and for risks to susceptible subpopulations (during early life or other life stages). Guidance documents should provide clear rationales for the basis of all the defaults. The Silver Book also advocates that standards and criteria for departing from defaults be developed. Such a system would include an “evidentiary standard” for considering alternative assumptions in relation to defaults and establishing criteria for gauging whether an alternative model has met the evidentiary standard.

Scientific Improvements in Risk Assessment—Long Term

Chapter 5 of the Silver Book (“Toward a Unified Approach to Dose–Response Assessment”) recommends a move toward quantitative measures of risk for all end points. It advocates that the approach include (NRC 2009, p. 9)

use of a spectrum of data from human, animal, mechanistic, and other relevant studies; a probabilistic characterization of risk; explicit consideration of human heterogeneity (including age, sex, and health status) for both cancer and noncancer end points; characterization (through distributions to the extent possible) of the most important uncertainties for cancer and noncancer end points; evaluation of background exposure and susceptibility; use of probabilistic distributions instead of uncertainty factors when possible; and characterization of sensitive populations.

Those are far-reaching recommendations, and their general implementation will require many years of study and research. Relevant efforts are under way in EPA and the California Environmental Protection Agency (CalEPA) Office of Environmental Health Hazard Assessment (OEHHA).

The current approach to risk assessment for all noncancer end points involves the establishment of toxicity reference doses (RfDs), reference concentrations (RfCs), or their equivalents. Those values are not expressions of risk, except in a qualitative sense (exposures at or below the values are likely to pose little risk to health). Even more problematic is the fact that their use in decision-making provides no guidance on the probabilities of adverse effects at higher or lower doses or concentrations. In contrast, cancer risks are generally expressed as probabilities, so it is possible to quantify risks and how risk declines as exposures decline (in association with various possible risk-management interventions). Given a robust database on which to base the quantitative model, this type of risk information is highly useful for decision-making. The use of RfDs or RfCs is not helpful in that respect, because they are “bright lines” and offer little flexibility for decision-making.3 Implementing the approach set forth in the Silver Book will lead to quantitative expressions of risk for all toxic end points, whether toxicity occurs by a threshold or nonthreshold mode of action, although the requirement for quantitative expressions of risk is decided during Phase II planning (specifying the level of analysis appropriate for the problem under evaluation).

DPR develops margin-of-exposure (MOE) estimates in its risk-characterization documents for noncancer end points. The MOE is the magnitude by which a toxicity value (such as a no-observed-effect level) exceeds the estimated exposure dose. The larger the MOE, the smaller the estimated risk. Risk assessments are based on developing MOEs for existing conditions of exposure and showing how they increase with various interventions. Although MOEs are not quantitative risk measures, they provide somewhat better guidance for risk managers than does the inflexible use of RfDs and RfCs. DPR also estimates RfDs and RfCs and calculates air concentrations at specified risk levels so that pesticides can be evaluated as possible toxic air contaminants.
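The MOE arithmetic described above can be sketched in a few lines of Python. This is a minimal illustration, not DPR’s procedure: the function name, the no-observed-effect level, and the exposure doses for each option are hypothetical values chosen only to show how MOEs increase as interventions reduce exposure.

```python
def margin_of_exposure(toxicity_value: float, exposure_dose: float) -> float:
    """MOE = toxicity value (e.g., a NOEL) divided by the estimated exposure dose.

    Both arguments must be expressed in the same units (e.g., mg/kg-day).
    """
    if toxicity_value <= 0 or exposure_dose <= 0:
        raise ValueError("toxicity value and exposure dose must be positive")
    return toxicity_value / exposure_dose


# Hypothetical numbers, for illustration only (mg/kg-day).
noel = 1.0
exposures = {
    "existing conditions": 0.05,
    "with protective equipment": 0.01,
    "with equipment and longer re-entry interval": 0.004,
}

moes = {option: margin_of_exposure(noel, dose) for option, dose in exposures.items()}
for option, moe in moes.items():
    print(f"{option}: MOE = {moe:.0f}")
```

Because the toxicity value is held fixed, each reduction in estimated exposure raises the MOE proportionally, which is what allows the options to be ranked against one another.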

At the same time, the standard defaults for uncertainty factors can be invoked for comparison with MOEs, and analysis can focus on identifying the ranges of MOEs that are clearly inadequate to achieve health protection, probably at least what is needed to achieve health protection, and probably adequate to achieve health protection but with some degree of uncertainty. For many decisions, a system of comparing risks associated with various possible interventions in this fashion will be useful. And for many important DPR decisions, comparing the benefits (risk reductions) of different management options is essential. Implementation of the approach described here clearly and transparently and with adequate peer review can improve decision-making.
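The comparison between MOEs and default uncertainty factors can be written compactly. The notation is introduced here for illustration and is not taken from DPR guidance: POD is the point of departure (such as a no-observed-effect level), E is the estimated exposure dose, and UF is the composite uncertainty factor.

```latex
\[
\mathrm{MOE} = \frac{\mathrm{POD}}{E},
\qquad
\mathrm{RfD} = \frac{\mathrm{POD}}{\mathrm{UF}}
\quad\Longrightarrow\quad
\frac{\mathrm{MOE}}{\mathrm{UF}} = \frac{\mathrm{RfD}}{E}.
\]
```

When both quantities are derived from the same point of departure, an MOE at or above the composite uncertainty factor is therefore equivalent to an exposure at or below the RfD. The ratio on either side of the last equality also shows by how much an exposure falls short of or exceeds that benchmark, which a simple pass or fail comparison with an RfD does not convey.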

Another major topic addressed by the Silver Book is cumulative risk assessment, which is the characterization of the combined risks to health posed by multiple agents or stressors. Consideration is given not only to exposure issues, such as aggregate exposure to the same chemical or exposure to multiple chemicals, but to nonchemical stressors, inherent vulnerabilities, population variability, and other effects on disease outcomes. Recommendations were made for collecting information on those issues in the near term and long term in recognition that more work is necessary to improve the methods of performing cumulative risk assessment. A National Research Council report (NRC 2008) on phthalates addresses issues of dose–response assessments in the context of simultaneous exposures to multiple stressors.

EPA has performed cumulative risk assessments of five groups of pesticides: organophosphates, N-methyl carbamates, triazines, chloroacetanilides, and pyrethrins/pyrethroids. Those pesticides were grouped on the basis of their common modes of action. The extent to which DPR has considered such cumulative risk assessments is unclear, but the agency has collected some data that would be relevant to informing them; for example, exposure data on multiple pesticides are available from DPR’s air-monitoring and residue-monitoring programs for pesticides. As stressed in the Silver Book, problem formulation is critical for determining the level of complexity and quantification that would be needed for a cumulative risk assessment in light of the decision context.

Lessons from Other National Research Council Reports

National Research Council reports published after the Silver Book provide more detailed guidance on how to address issues raised in it. Most notably, recommendations for improving the

______________

3It is often stated that these values are not truly “bright lines”, but they are used as though they were, and risk managers cannot treat them in any other way without being accused of manipulating risk-assessment outcomes.

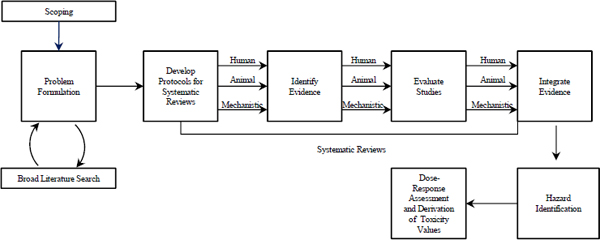

hazard identification and dose–response analyses of chemicals may be found in Review of the Environmental Protection Agency’s Draft IRIS Assessment of Formaldehyde (NRC 2011) and Review of EPA’s Integrated Risk Information System (IRIS) Process (NRC 2014). Chapter 7 of the formaldehyde report outlined a “roadmap to revision” of future IRIS documents, which included recommendations for making improvements in four broad categories: descriptions of methods and criteria for selecting studies, approaches to evaluating critical studies, weight-of-evidence analyses, and justification of modeling approaches (NRC 2011). The later report on the IRIS process expands on those recommendations, emphasizing the need to incorporate systematic review principles into the process (see Figure 3-2). The key factor is that the questions to be addressed and the methods by which the scientific evidence will be identified, analyzed, and integrated to answer the questions are set forth in advance of performing the assessment. The goal is to provide an objective analysis through a transparent and standardized approach that allows understanding of how decisions are made at each step of the process (NRC 2014).

CALIFORNIA’S RISK-ASSESSMENT PRACTICES

The committee reviewed DPR’s risk-assessment guidance documents and three examples of recent pesticide risk assessments—of carbaryl, chloropicrin, and methyl iodide—for the purpose of understanding the procedures and practices of DPR (see Appendix B for a list of documents reviewed). The committee’s observations in this section are related less to the scientific content and technical conduct of the assessments than to the procedures, assumptions, and guidelines that largely determine the direction and outcome of the assessments. Technical recommendations are provided in Appendix C. How the risk assessments are used and understood by risk managers, decision-makers, and stakeholders was of particular interest. Those factors necessarily impinge on DPR’s risk-management and communication processes. It was not within the committee’s charge to review those aspects of DPR’s responsibilities, but improving the integrity and usefulness of DPR’s risk assessments ultimately must involve a consideration of the entire risk-analysis framework used by DPR to integrate risk assessment, risk management, and risk communication.

FIGURE 3-2 Systematic review in the context of EPA’s Integrated Risk Information System process. Public input and peer review are integral parts of the process although they are not specifically noted in the figure. Source: NRC 2014 (p. 110).

Strengths

In the risk-characterization documents reviewed by the committee (DPR 2010a,b, 2012), the quality, thoroughness, and depth of the scientific effort put forth by DPR staff are evident. The documents reflect established practices and current trends in regulatory risk assessment as evidenced by several state-of-the-art approaches used to assess dose–response relationships and to model exposure. DPR uses several assets to develop and validate its risk assessments, primarily its collection of California-specific data on exposure, such as data obtained through its Pesticide Use Reporting program, Toxic Air Contaminants Program, and Pesticide Illness Surveillance Program. There is commendable transparency in reporting how hazard identification, exposure assessment, and risk characterization are performed for the assessments. However, it is sometimes less evident why a given procedural approach or assumption is used (see discussion later in this chapter). The risk-appraisal chapters are a particularly good feature of DPR’s risk-characterization documents and can be further strengthened to lend integrity to the risk findings by including an even more robust discussion of critical assumptions and inconsistencies with other agencies.

Potential Improvements

Problem Formulation

DPR’s risk-characterization documents have yet to include a formal problem-formulation component that clearly elaborates the purpose and approach of the assessment as recommended in the Silver Book (NRC 2009). As discussed earlier in this chapter, developing the problem formulation in conjunction with risk managers would allow risk-assessment results to be understood better in the proper framework for risk management. The problem should be formulated in a way that addresses the decision-making context in which the risk findings are to be applied. Many of the committee’s concerns about improving the risk-assessment process would be lessened if a problem-formulation description were developed for each assessment and included a conceptual model and analysis plan. There should continue to be separation between risk assessors and managers with respect to influence on the outcome of an assessment, but joint planning in the early stages of the process to identify and formulate the needs of the risk manager is likely to improve both the relevance and the efficiency of the assessment.

Hazard Identification

In the risk-characterization documents reviewed by the committee, the hazard-identification section often describes extensive post hoc analysis of studies. Independent reanalysis of data is an important aspect of study review that may identify end points otherwise overlooked in the original study and that may additionally help to resolve questionable findings. Post hoc analyses, however, must be conducted with caution or avoided because they might involve inferences that extend beyond the original intent of a study and the scope of problem formulation. When conducted, reanalysis of data should show a dose–response relationship, reproducibility, association with relevant effects that reflect the original study intent (for example, clinical signs or histopathology), differences outside the normal range of variation, and biologic plausibility (Doull et al. 2007). Adherence to such criteria was not uniformly evident in the analyses reviewed by the committee; this might reflect a lack of clear guidance for post hoc analysis of otherwise compliant studies. That can lead to the use of an indicator of exposure (such as ocular irritation in humans or respiration-rate effects in human or animal studies) that has an ambiguous biologic relationship to a toxicologic response (e.g., DPR 2012).

In addition to toxicologic end points, an indicator of exposure, such as ocular irritation, might have important application as a risk-management tool to guard against toxicologically significant exposures. Chloropicrin (DPR 2012) is a case in point. It may be used as a fumigant or as a warning agent added to other pesticide formulations to serve as an indicator of a potentially hazardous exposure to a formulation. It is important for the purposes of risk management and risk communication that the distinction in applying an exposure end point vs a toxicity end point be clear. Otherwise, the public may come to believe that evidence of exposure (ocular irritation or odor detection) represents toxicity. DPR should endeavor to make the nature of the end point clear and to distinguish risk options to prevent misinterpretation.

Recursive evaluation that involves iterating between end-point selection and calculation of an RfC is an inappropriate means of data analysis that favors sensitivity in end-point selection over uncertainty in the findings. In the example of chloropicrin, DPR (2012) analyzed a nonguideline human study with benchmark-concentration methods to identify points of departure for ocular irritation and increased nitric oxide in nasal air. Ocular irritation was the more sensitive effect and had lower uncertainty associated with its RfC. However, DPR chose to use the lower RfC derived on the basis of increased nitric oxide in nasal air even though it was less sensitive than ocular irritation and was associated with greater uncertainty. DPR should consider how to communicate the balance of risk vs uncertainty in the available data and analysis so that risk managers can make clearly informed decisions.
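Because a reference concentration is a point of departure divided by a composite uncertainty factor, a less sensitive end point can still yield the lower RfC when its uncertainty factor is larger. The Python sketch below illustrates that arithmetic. All names and numbers are hypothetical and are not the values used in DPR (2012).

```python
def reference_concentration(point_of_departure: float, uncertainty_factor: float) -> float:
    """RfC = point of departure / composite uncertainty factor."""
    if point_of_departure <= 0 or uncertainty_factor <= 0:
        raise ValueError("inputs must be positive")
    return point_of_departure / uncertainty_factor


# Hypothetical end points: (point of departure in ppb, composite uncertainty factor).
end_points = {
    "end point A (more sensitive, less uncertain)": (30.0, 3.0),
    "end point B (less sensitive, more uncertain)": (60.0, 30.0),
}

rfcs = {name: reference_concentration(pod, uf) for name, (pod, uf) in end_points.items()}
lowest = min(rfcs, key=rfcs.get)

for name, rfc in rfcs.items():
    print(f"{name}: RfC = {rfc:g} ppb")
print("Lowest RfC comes from:", lowest)
```

In this illustration the lower RfC is driven by the size of the uncertainty factor rather than by the sensitivity of the effect, which is the kind of trade-off that would need to be communicated to risk managers.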

Carcinogenicity risk assessments should use the most up-to-date guidance and be based on a WOE approach. Use of statistical reanalysis and trend analysis should conform to accepted practice and terminology, such as that of the International Agency for Research on Cancer or EPA (2005).

Exposure Assessment

DPR considers several types of potential exposure scenarios for pesticides, such as occupational exposure (for example, in application and harvesting), industrial exposure, residential exposure, and other general-population exposures (for example, through diet and ambient air). Seven guidance documents on how DPR conducts its exposure assessments were provided (see Appendix B). Four of the documents are undergoing revision, including the master guidance document Guidance for the Preparation of Human Pesticide Exposure Assessment Documents. The agency noted that exposure data are often lacking, so it must use assumptions and judgments.

DPR has valuable data generated by its air-monitoring network and California-specific worker and residential information, residue data, and pesticide use and sales information. Those important datasets should be the main focus of the risk-assessment process conducted by DPR. Examples of California-specific data noted by the committee include exposure to multiple pesticides common in tank mixtures in the state, high-end seasonal or migrant-worker exposure scenarios, and frequent meteorologic conditions relevant to fumigant applications. When such information is absent or insufficient, the exposure assumptions and approaches used by EPA or other regulatory authorities may be appropriate as surrogate data.

The rationale for some exposure assumptions used by DPR needs to be clearly explained. Such exposure terms as high end, worst case, and maximum realistic exposure are used throughout documents, sometimes interchangeably and often without definition. Calculation of the probability of occurrence for use in exposure estimates would communicate the degree of conservatism of the exposure assumptions better and would inform regulatory decision-making better. Even when guidance is available, transparency is sometimes lacking. For instance, characterization of exposure of bystanders to airborne contaminants often refers to a 95th percentile exposure estimate (e.g., DPR 2012). The actual guidance is for only one aspect of the exposure estimate (the source concentration) and prescribes an approach that often results in a value greater than the 95th percentile (Frank 2009). Several further assumptions used to calculate the exposure of at-risk persons push well beyond the 95th percentile and postulate a series of circumstances that may be individually plausible but collectively are implausible. Improved guidance and problem formulation that describe exposure calculations, probabilities, and definitions would be useful in this regard.
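The way stacked high-end assumptions move an estimate beyond its nominal percentile can be illustrated with a short calculation. The sketch below is not DPR's method. It assumes, purely for illustration, that exposure is the product of independent lognormal inputs and that each input is fixed at its 95th percentile; the function name and the log-scale standard deviations are hypothetical.

```python
from math import sqrt
from statistics import NormalDist

def combined_percentile(log_sds, input_percentile=0.95):
    """Percentile of the output distribution reached when every
    independent lognormal input is fixed at `input_percentile`.

    `log_sds` holds the log-scale standard deviation of each input
    (hypothetical values; the medians cancel out of the result).
    """
    z = NormalDist().inv_cdf(input_percentile)
    # The log of the product is normal with a root-sum-of-squares spread,
    # but the stacked point estimate sits z * sum(log_sds) above the median.
    z_combined = z * sum(log_sds) / sqrt(sum(s * s for s in log_sds))
    return NormalDist().cdf(z_combined)

for n in (1, 2, 3, 4):
    p = combined_percentile([0.5] * n)
    print(f"{n} input(s) at the 95th percentile -> output percentile {100 * p:.2f}")
```

Under these assumptions, each additional input set at its 95th percentile pushes the combined estimate further into the upper tail, which is why reporting the probability of the combined scenario is more informative than labeling it a 95th percentile estimate.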

The committee reviewed DPR’s dietary risk assessment of carbaryl (DPR 2010a). DPR relied primarily on the US Department of Agriculture Continuing Survey of Food Intakes by Individuals (CSFII) to determine food-consumption patterns in the general public. Although the dataset provided national estimates, it might not be representative of the consumption of fruits and vegetables by California residents. (Data collection for CSFII has been discontinued.) To provide more representative dietary exposure assessments, it would be preferable to use California-specific consumption data to the extent possible, either by collecting data or by finding other sources of data. DPR will have to continue to rely on national surveys and models, so it is important for it to stay current with scientific developments and workgroups for exposure modeling, such as the updated Dietary Exposure Evaluation Model—Food Commodity Intake Database/Calendex model. For occupational scenarios, DPR relies primarily on the Pesticide Handler Exposure Database (PHED). Although PHED is standard in occupational-pesticide risk assessment, it is constrained by outdated assumptions. DPR should consider evaluating the utility of the data in the Agricultural Handler Exposure Task Force database to inform its exposure assessments.

DPR has adopted advanced methods of physical modeling to characterize exposure of workers and residential bystanders on the basis of data from application-site mass flux studies (in the case of agricultural fumigation) and site monitoring (in the case of structural fumigation). The models are often applied in such a way as to establish worst-case exposures. In the committee’s review of the chloropicrin document (DPR 2012), examples of frequent application of worst-case assumptions included scaling to maximum theoretical flux, using default worst-case weather, fixing the wind rose to expose people continuously throughout an exposure interval, and fixing the position of an exposed person throughout the duration of exposure. To place worst-case exposure outcomes into context, DPR might consider including comparative exposure calculations that are based on more realistic or “typical” exposure scenarios. Including such comparisons would provide risk managers with useful information regarding options for mitigation when worst-case exposure outcomes yield evidence that warrants concern in risk characterization. There is an additional opportunity to use the same modeling approaches in fully stochastic modeling to account comprehensively for available information, such as actual use-rate statistics from pesticide-use reporting databases, the wind rose of distribution of the pesticide off-source, the variation in key weather period driving off-source movement, and the movements and duration of exposure of workers and residents in the immediate vicinity of application sites.
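The contrast between a deterministic worst case and a stochastic treatment of the same inputs can be sketched as follows. This is a toy illustration rather than DPR's dispersion modeling: the multiplicative exposure index, the four input distributions, and all parameter values are assumptions chosen only to show the mechanics.

```python
import math
import random
from statistics import median, quantiles

LOG_SD = 0.6  # hypothetical log-scale spread of the weather-driven dispersion term

def simulate_exposures(n=20000, seed=1):
    """Draw a relative (unitless) exposure index from hypothetical input distributions."""
    rng = random.Random(seed)
    results = []
    for _ in range(n):
        flux = rng.triangular(0.2, 1.0, 0.5)          # fraction of maximum theoretical flux
        dispersion = rng.lognormvariate(0.0, LOG_SD)  # relative concentration per unit flux
        downwind = rng.uniform(0.05, 0.6)             # fraction of the interval with wind toward the person
        present = rng.uniform(0.3, 1.0)               # fraction of the interval the person is at the location
        results.append(flux * dispersion * downwind * present)
    return results

samples = simulate_exposures()
p95 = quantiles(samples, n=20)[-1]  # last of 19 cut points is the 95th percentile

# Deterministic worst case: maximum flux, 95th-percentile weather,
# wind fixed toward the person, and the person fixed in place.
worst_case = 1.0 * math.exp(1.645 * LOG_SD) * 1.0 * 1.0
share_above = sum(x > worst_case for x in samples) / len(samples)

print(f"typical (median) index: {median(samples):.3f}")
print(f"95th percentile index:  {p95:.3f}")
print(f"worst-case index:       {worst_case:.3f}")
print(f"share of simulated cases above worst case: {share_above:.4f}")
```

Reporting the median, an upper percentile, and the worst case side by side gives a risk manager the kind of context the committee describes. In practice the input distributions would be drawn from pesticide-use reports, weather records, and activity data instead of the placeholders used here.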

Risk Characterization

DPR makes a number of conservative assumptions and decisions in the performance of its risk assessments. The conflation of a series of conservative assumptions and estimates regarding health effects thresholds and exposures and the application of uncertainty factors can result in scenarios that are well in excess of worst-case exposure even if each individual estimate in itself is scientifically defensible. There appears to be a tendency for that to occur in the DPR assessments, at least in the three documents reviewed by the committee (DPR 2010a,b, 2012). Clarification of the outcomes of these worst-case scenarios can be achieved through comparison to a base case. Risk assessors serve the interests of risk managers best if they also provide a reasonably central view of the level of risk (for instance, a typical-exposure scenario) with an estimate of the range of uncertainty that would indicate the potential for greater risk in more serious situations.

An option would be the more consistent use of stochastic forecasts. Alternatively, information on markets, uses, and demographics can more specifically describe the at-risk people and the uses, markets, and operations that contribute most to risk to help to ascertain the degree to which the combination of various assumptions leads to plausible risk scenarios.

Consistency in the Department of Pesticide Regulation’s Risk Assessments

Although the risk assessments performed by DPR demonstrate the use of established risk-assessment methods, their application is sometimes inconsistent and could be strengthened with clearer guidance that is strictly adhered to. For example, in establishing hazard and regulatory end points, a benchmark dose (BMD) and a lower 95% confidence limit of the benchmark dose (BMDL) are sometimes calculated and used by DPR as relevant points of departure. However, the benchmark response level chosen for the BMD and the BMDL varies from case to case. The risk-characterization document on chloropicrin uses 1%, 2.5%, 5%, and 10% response levels (DPR 2012). That can lead to inconsistency in end-point comparisons between different critical effects. In addition, caution should be used in extrapolating far below the observed range of data because it introduces uncertainty into the assessment. The approaches used are clearly within the bounds of DPR’s recommended practices (DPR 2004a,b); however, for uniformity in comparisons among studies and for better understanding of end-point selection, it is advisable that when BMD approaches are used they be applied to all representative studies in a class. There are reasons for the differences in selection of models and thresholds, but the Silver Book points to the difficulty of using results of such analyses given the use of different models and model assumptions to characterize the model uncertainty associated with the BMD approach. DPR’s rationale for selecting models is sometimes stated as seeking a more health-protective outcome, which is a judgment with an obscure scientific basis. In fact, there is a tendency in DPR’s risk-characterization documents to intermix application of uncertainty factors (a quantifiable assumption based on accepted practice) and health-protective assumptions. 
Clear guidance and documentation as to the approaches to and assumptions regarding such determinations would improve consistency and increase the integrity of the assessment outcome. For instance, given that DPR now has over a decade of experience working within its guidance for BMD modeling, a revision and clarification of the guidance may be warranted.
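The sensitivity of a point of departure to the chosen benchmark response level can be shown with a simple closed-form case. The sketch below assumes a quantal-linear (one-hit) dose-response model with extra risk 1 - exp(-slope × dose), which is only one of many models used in BMD analysis. The slope value and function name are hypothetical, and no BMDL is computed because that requires fitting a model to actual study data.

```python
from math import log

def bmd_quantal_linear(slope, bmr):
    """Dose at which extra risk equals `bmr` when
    P(d) = p0 + (1 - p0) * (1 - exp(-slope * d))."""
    return -log(1.0 - bmr) / slope

slope = 2.0  # hypothetical slope, per unit dose
bmds = {bmr: bmd_quantal_linear(slope, bmr) for bmr in (0.01, 0.025, 0.05, 0.10)}
for bmr, bmd in bmds.items():
    print(f"BMR {100 * bmr:>4.1f}% -> BMD {bmd:.4f}")

ratio = bmds[0.10] / bmds[0.01]
print(f"BMD at 10% response / BMD at 1% response = {ratio:.1f}")
```

In this model the BMD is nearly proportional to the response level, so end points evaluated at 1% and at 10% are not directly comparable unless the difference in response level is taken into account.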

DPR has a number of internal and external sources of risk-assessment guidance, but no overarching framework that instructs the DPR assessor as to the appropriate use and application of specific methods and guidelines appears to be available. Such a framework would help to ensure consistency among the risk-characterization documents. It is important that the most up-to-date guidance documents be used and that deviations from them be documented and justified. The dates on DPR’s risk-assessment guidance documents (see Appendix B) indicate that they have not been updated regularly and so do not reflect changes that might be necessary in response to recommendations of the Silver Book, EPA, or other resources. Examples of DPR deviations from EPA guidelines include those in study selection, in the use of BMD approaches, and in the WOE approach to evaluate carcinogenicity.

Relationship with the US Environmental Protection Agency

The requirements and procedures for the risk-assessment process in general appear to be similar between DPR and EPA, and the technical barriers to collaboration in risk assessment seem minimal. Although there are formal collaborative processes, it was not clear to the committee how extensively they were used and how effective they were. In the risk assessments considered by the committee, there appeared to be a recent tendency to “second-guess” EPA’s risk assessments and to find somewhat higher levels of risk. That can preclude harmonization later when DPR’s assessments are provided to EPA for comment. There is a clear need to understand differences between DPR and EPA outcomes with respect to risk assessments of the same pesticide.

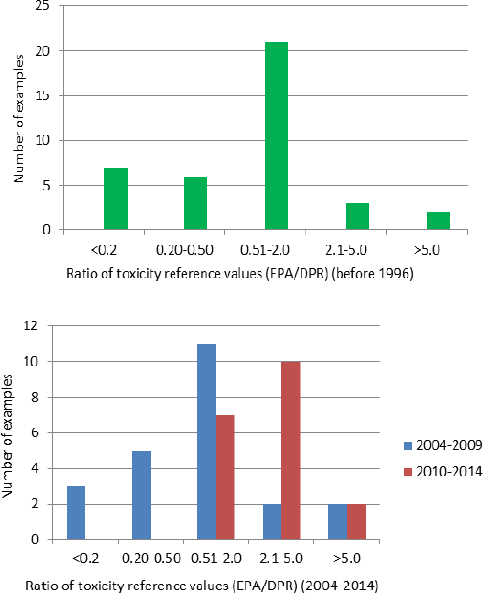

Reference values from historical (before 1996) and recent (2004–2014) pesticide-toxicity evaluations by DPR and EPA are compared in Figure 3-3. The data indicate a trend in DPR’s estimating higher levels of toxicity than EPA for the same compound in more recent years. In 1996, CalEPA compared the reference values estimated by DPR and EPA for 39 pesticide AIs (RAAC 1996). It found that DPR selected the same values as EPA for 10 pesticides, lower values (higher toxicities) for nine, and higher values (lower toxicities) for 20. The top histogram of Figure 3-3 shows the distribution of differences in chronic reference values estimated by the two agencies. A difference of a factor of 5 or more was found for 10 pesticides. In seven of those cases, DPR’s reference value was higher than that derived by EPA; this indicates an estimate of potency lower than that of EPA for the end point in question. DPR estimated values that were lower than EPA’s by more than a factor of 2 only 13% of the time. Thus, the two agencies agreed within narrow limits most of the time, and DPR rarely concluded that the toxicity of an AI was much higher than judged by EPA.

FIGURE 3-3 Comparison of reference values derived by EPA and DPR for the same active ingredients over three periods: before 1996, in 2004–2009, and in 2010–2014. The results in the upper panel compare chronic reference values for 39 active ingredients (RAAC 1996). The data in the lower panel reflect multiple comparisons of reference values for each of 11 active ingredients, including acute, subchronic, and chronic durations and different routes of exposure (see Appendix D).

DPR provided the committee with a comparison of 11 of the most recently completed pesticide assessments, which spanned 2004–2014 (G. Patterson, DPR, personal communication, July 1, 2014).4 In the six assessments completed during 2004–2009 (see bottom histogram of Figure 3-3), DPR agreed with EPA within reasonably narrow limits in many cases and in some cases calculated reference values that were higher than those established by EPA. DPR estimated reference values that were lower than EPA’s by more than a factor of 2 only 17% of the time. This percentage is similar to that found in the comparison of older pesticide assessments. However, in the five assessments completed during 2010–2014, DPR disagreed with EPA more often than it agreed, and in each of these cases its reference values were lower than those of EPA (ratios greater than 1); this indicates a higher estimate of potency than made by EPA. Although the number of samples for the comparisons is small, it raises the question of whether there has been a recent divergence between DPR and EPA in toxicity assessments. That possibility is supported by the observation of similar trends in the cancer characterization and potency estimates derived by the two agencies for the same 11 AIs and in DPR’s risk-characterization documents for methyl iodide (DPR 2010b)5 and chloropicrin (DPR 2012).6 In the assessments conducted before 2010 (see Appendix D), DPR disagreed with EPA on the carcinogenicity and potency estimates in only one of the six cases; in the one case, DPR considered methidathion to be a carcinogen whereas EPA did not. In the assessments conducted since 2010, DPR disagreed with EPA in four of seven cases, either judging that the AI should be treated as a carcinogen (methyl iodide, chloropicrin, and simazine) in contrast with EPA or calculating a significantly higher cancer potency (by more than a factor of 10 for carbaryl) when the agencies agreed on the carcinogenic nature of the pesticide. 
Box 3-1 examines DPR’s cancer risk assessment for carbaryl in comparison with those of other relevant organizations. DPR was the only one of six organizations that found an unacceptable cancer risk associated with dietary exposure to carbaryl, primarily because it estimated the highest cancer potency.
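The ratio convention used in these comparisons (EPA value divided by DPR value, so that a ratio greater than 1 means that DPR derived the lower reference value and therefore the higher estimate of toxicity) can be made explicit in a few lines. The sketch below is illustrative only; the function name, the factor-of-2 default, and the reference values are hypothetical and are not taken from Appendix D.

```python
def compare_reference_values(epa_value, dpr_value, factor=2.0):
    """Classify a pair of reference values by the ratio EPA / DPR."""
    ratio = epa_value / dpr_value
    if ratio > factor:
        label = "DPR lower (higher estimated toxicity)"
    elif ratio < 1.0 / factor:
        label = "DPR higher (lower estimated toxicity)"
    else:
        label = f"agree within a factor of {factor:g}"
    return ratio, label

# Hypothetical reference values in mg/kg-day, for illustration only.
pairs = {
    "pesticide A": (0.010, 0.010),
    "pesticide B": (0.010, 0.003),
    "pesticide C": (0.002, 0.015),
}
for name, (epa, dpr) in pairs.items():
    ratio, label = compare_reference_values(epa, dpr)
    print(f"{name}: EPA/DPR = {ratio:.2f} -> {label}")
```

Stating the direction of the ratio and the agreement threshold alongside each comparison would make tallies such as "lower by more than a factor of 2" easier to reproduce.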

Overall, DPR has generally estimated reference values within a factor of 5 of those established by EPA and rarely estimated toxicity more than a factor of 2 above EPA’s estimate. The magnitude of such differences lies within the normal bounds of uncertainty inherent in such assessments and raises the question of whether EPA’s reference values and cancer potencies could be satisfactory for use by DPR in many risk assessments unless DPR has new toxicology studies that were not considered by EPA (which does not appear to be the case in the 11 recent examples) or has compelling evidence to question EPA’s estimates. Accepting EPA assessments as a default practice in the absence of a clear and pressing rationale for repeating the hazard identification and dose–response characterizations could save large amounts of staff effort and improve efficiency. There should rarely be a need to conduct an independent assessment. The Silver Book states that “the goal of timeliness is as important as (sometimes more important than) the goal of a precise risk estimate” (NRC 2009, p. 20). For the purposes of the present report, a recommendation to rely on EPA’s hazard identification and dose–response estimates to a greater extent does not equate to relying on the overall risk estimates inasmuch as exposure must also be considered; there may be important California-specific exposure scenarios that differ from those for the general US population used by EPA.

Although scientists can disagree on scientific interpretation, the consideration of general factors (such as definitions of terms, application of uncertainty factors, approaches for identifying a threshold, and default parameters for exposure calculations) may become largely a matter of policy. However, the general factors should be similar more often than dissimilar when risk assessments are undertaken by recognized regulatory authorities. Genuine differences in toxicity assessments (even small differences in magnitude) should not be minimized in the interests of harmonization between regulatory agencies, but consideration should be given to the benefits of harmonizing approaches among DPR and sister agencies, especially EPA, in terms of such factors as conservation of regulatory resources, enhanced public confidence in the risk-assessment process, possible interstate trade barriers, and the availability to California growers of pesticides that are used by growers in other states. When harmonization is impossible because of policy issues, the reasons for the differing approaches must be clearly elaborated and defended. In several instances, risk appraisals clearly point out the differences but do not elaborate on why the differences exist and why they were intractable.

______________

4The assessments of methyl iodide and chloropicrin were completed in 2010 and 2012, respectively, but were excluded from DPR’s submission because they were among the example assessments being reviewed in depth by the committee.

5DPR estimated a Q* factor of 0.2–3.2 × 10⁻² for methyl iodide, but EPA judged it not to be a carcinogen.

6DPR estimated a Q* factor of 1.6 × 10⁻² for chloropicrin, but EPA judged it not to be a carcinogen.

BOX 3-1 Cancer Assessments of Carbaryl Performed by Different Organizations

The committee examined the cancer risk assessments of carbaryl by DPR (2010a), the International Programme on Chemical Safety (IPCS 2001), EPA (Fort 2003, 2007), the European Food Safety Authority (EFSA 2006), the Australian Pesticides and Veterinary Medicines Authority (APVMA 2007), and Health Canada (2009). It found that although all the organizations used the same study as the basis for calculating cancer potency and agreed on the critical end point derived from the study, they derived different estimates of cancer risk. DPR, EPA, and Health Canada used nonthreshold linear extrapolations to derive Q* cancer potency factors. The differences in the values appear to be due in part to judgments about whether to include the high-dose data from the study and whether to combine findings on hemangiosarcomas with those on hemangiomas. DPR excluded the high-dose data and combined the tumor types to derive a Q* value of 9.72 × 10⁻³ (mg/kg-day)⁻¹, which is about 11 times higher than that derived by EPA, 8.75 × 10⁻⁴ (mg/kg-day)⁻¹; the latter included the high-dose data and considered only hemangiosarcomas. Like DPR, Health Canada excluded the high-dose data and combined the data on hemangiosarcomas and hemangiomas but calculated a Q* value (1.08 × 10⁻³) that is only slightly above that of EPA. On the basis of those results, only DPR concluded that dietary exposure to carbaryl carries an unacceptable cancer risk (about 3 × 10⁻⁶). The reasons for the discrepancies in Q* values and resulting judgments on the acceptability of the cancer risk are not obvious, because the processes used by EPA and Health Canada are not fully explained, but the differences reveal a lack of consensus and considerable uncertainty in this element of the risk assessment.

The International Programme on Chemical Safety, the European Food Safety Authority, and the Australian Pesticides and Veterinary Medicines Authority used a different (threshold) approach to estimate cancer risk that was based in part on their conclusion that the WOE did not indicate that carbaryl is genotoxic. An acceptable daily intake (ADI) of 0.008 mg/kg-day, which was based on a safety factor of 2,000, was determined by each of those agencies. The highest chronic dietary exposure of various groups to carbaryl estimated by DPR under California conditions is 0.000379 mg/kg-day, which is less than 5% of the ADI and indicates no basis for concern if this alternative approach had been used. Thus, of the six agencies included in this comparison, only DPR calculated a cancer potency that leads to the conclusion that dietary carbaryl presents an unacceptable risk.

The committee points out the discrepancies not to suggest that DPR’s conclusions fall outside the reasonable bounds of scientific analysis (in fact, DPR has carefully justified its conclusions) but to illustrate DPR’s tendency toward conservatism. They also raise a question about whether any attempts at harmonization between EPA and DPR were made. Finally, despite DPR’s discussion of sources of uncertainty, its summary and conclusions do not indicate the considerable level of uncertainty and the degree of confidence that DPR has in its conclusion about the existence of an unacceptable cancer risk.
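The comparisons quoted in Box 3-1 can be checked with simple arithmetic. The sketch below uses only the Q* values, the ADI, and the exposure estimate stated in the box; the variable names are illustrative, and the Q* units are taken to be per mg/kg-day, as is conventional for cancer potency factors.

```python
# Cancer potency factors (Q*) reported in Box 3-1, per mg/kg-day.
q_star = {
    "DPR": 9.72e-3,
    "EPA": 8.75e-4,
    "Health Canada": 1.08e-3,
}
for agency, value in q_star.items():
    print(f"{agency}: Q* is {value / q_star['EPA']:.1f} times the EPA value")

adi = 0.008              # mg/kg-day, threshold-based acceptable daily intake
max_exposure = 0.000379  # mg/kg-day, highest chronic dietary exposure estimated by DPR
fraction_of_adi = max_exposure / adi
print(f"Highest exposure is {100 * fraction_of_adi:.1f}% of the ADI")
```

The calculation reproduces the roughly elevenfold difference between the DPR and EPA potency estimates and confirms that the highest exposure estimate is below 5% of the ADI.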

Productivity and Efficiency of the Department of Pesticide Regulation’s Risk-Assessment Process

Although DPR’s risk-assessment methods are technically advanced, the resulting time and effort expended by the agency’s personnel on independent assessments of single compounds are probably not sustainable in view of the current workload, including the required re-evaluation of high-priority AIs. DPR needs to consider the important aspect of timeliness of its risk-assessment process with respect to health and safety. DPR provided the committee with the timelines for completion of its risk-characterization and exposure-assessment documents for select AIs completed in 2000–2013. Twelve completed risk-characterization documents were listed for those years. The typical amount of time to complete the assessments was 6–10 years; the shortest took 2 years (DEET), and the longest took 19 years (azinphosmethyl). Three more documents were in the final stage of completion (submitted to the assistant director of DPR in 2014); the amount of time it took for them to reach this stage was 7–16 years (G. Patterson, DPR, personal communication, July 1, 2014). Staff turnover, competing responsibilities of risk assessors, and problems in coordinating assignments have contributed to the length of time needed to complete some assessments. Of the 11 most recently completed assessments, the committee noted that a few were of AIs that had no active registrations or uses at the time of completion (for example, methamidophos and methyl parathion).

DPR could substantially streamline its process and achieve greater productivity by relying on external-agency information (primarily from EPA’s Office of Pesticide Programs) for some components of the risk assessment to avoid duplication of effort. For example, in some cases, hazard identification and dose–response analysis conducted by EPA appear to be conducted in parallel, and not collaboratively, with DPR. Whether performing an independent risk assessment is the best use of DPR’s resources to ensure occupational and public health protection is unclear. If the agencies are reviewing the same studies and using the same or similar guidelines, the hazard findings should be similar, and it might be possible for DPR to leverage the hazard identifications developed by EPA. DPR might well consider how pesticide risk assessments in other states (such as New York and Florida7) are conducted in the context of resource constraints and leverage information on pesticides that have been evaluated by other regulatory authorities. If statutory requirements in California dictate specific health-protective approaches, they need to be highlighted on the basis of policy, and assessments by other regulatory authorities would need to be adjusted in keeping with those clearly stated policies. If DPR is able to rely on EPA’s assessments, it could direct more resources to California-specific needs, such as better characterization of exposure scenarios and estimates, when it lacks the ability to use assessments from other regulatory authorities or national estimates are inappropriate. In addition, DPR could potentially complete the assessments of more pesticides each year.

When DPR produces its own independent risk-characterization documents, efforts should be made to streamline the documents and the internal reviewing procedures. DPR’s risk-characterization documents often repeat information within and among assessments, such as hazard identification from an earlier assessment. That poses a problem for both timely conduct and communication of risk assessments. Internal processes in DPR need to be established to limit repetitive information and staff time spent on generation and review of sections. It is the committee’s judgment that the current redundancy in risk assessments conducted by DPR, in conjunction with limited resources and workflow constraints, is affecting DPR’s ability to complete risk assessments in a timely manner. Streamlining the scope of and approach to conducting risk assessments would present opportunities to refine the agency’s priority-setting process as well (see Chapter 2).

______________

7New York and Florida used to perform their own pesticide risk assessments but now rely on EPA’s risk assessments (A. Lawyer 2014).

Furthermore, DPR could cease work on a risk-characterization document if the registration status and use of the pesticide have changed to such an extent that public and occupational health risks are no longer of concern. That would allow DPR to refocus on currently used pesticides and pesticides of emerging concern.

On the basis of its review of three relatively recent risk-characterization documents, the committee found DPR risk assessments to be comprehensive, clear, and technically sound. The documents reflect that DPR staff is attempting to follow best practices in risk assessment and making a particularly valuable contribution in state-specific exposure assessments. However, the committee was struck by what appears to be an enormous duplication of effort in DPR’s conduct of toxicologic assessments of individual pesticides independently of EPA. The magnitude of differences in reference values estimated by the agencies for the same compound generally falls within the normal bounds of uncertainty. Although mechanisms are in place for collaboration between the two agencies, it appeared that harmonization is not regularly achieved. In the committee’s judgment, there are considerable benefits to harmonizing approaches among DPR, EPA, OEHHA, and other relevant agencies. The exposure assessments performed by DPR provide the greatest benefit when they introduce state-specific considerations into the risk-assessment process.

Recommendation: DPR should review the legislative mandates under which it works to determine whether an independent comprehensive evaluation of pesticides is required in every case in which a risk assessment is performed. If no new and compelling toxicology data have been generated since an EPA assessment was conducted and if there is no reason to believe that the EPA assessment is seriously flawed, DPR could rely on EPA’s assessment to a greater extent. If the legislation allows, DPR should collaborate with EPA on its pesticide risk assessments and then rely on EPA’s hazard identification, dose–response assessment, and derivation of reference values as a starting point for its own evaluations and focus its efforts on collecting California-specific exposure data, which will help in tailoring the risk assessments to the state’s needs. Some data might be obtained from other groups or researchers that are collecting exposure information in the state, but the agency may still be required to collect its own data.

When independent assessment is warranted, it will be important for DPR risk assessors to keep abreast of the risk-assessment recommendations outlined in recent National Research Council reports and relevant guidance developed by other agencies, particularly EPA, on how the recommendations can be implemented.

Recommendations:

• DPR should undertake a careful review of the framework presented in Chapter 8 of the Silver Book (NRC 2009) and the practical guidance in EPA (2014) and NRC (2014) for improving risk assessments. The review should include collaboration between risk assessors and managers to ensure a common understanding of the definitions, principles, and steps of the risk-assessment process, including stakeholder involvement.

• DPR should incorporate problem formulation and other relevant elements recommended in the Silver Book into its risk-assessment process. An important consideration is that risk managers should be involved in the problem-formulation stage so that risk assessments can be designed to address the decisions that need to be made by the managers and other stakeholders. Consideration should be given to whether a general set of problems and risk-management options could be formulated to use as a starting point in problem formulation.

• DPR should update its risk-assessment guidance documents regularly to reflect the most current risk-assessment practices. The guidance documents could draw from the work of EPA, OEHHA, and other relevant agencies; this could help to standardize and streamline reviews and evaluation approaches and promote consistency among the assessment teams and contributors. It might be useful for DPR to develop an overarching framework for considering and applying the various guidance documents on which it relies to ensure consistency between risk assessments and to aid new risk-assessment staff.

• DPR should update its guidance on defaults and begin developing explicit guidance on the inclusion of missing defaults, such as defaults for human variation in susceptibility to cancer and for risks to susceptible subpopulations (during early life and other stages). Guidance should also be developed on when departures from defaults may be justified.

• DPR should ensure that risk-management documents arising from its risk appraisals discuss explicitly how an appraisal informed a decision and describe the uncertainties associated with the assessment. A useful resource is draft guidance from EPA (2014), which discusses four principles (transparency, clarity, consistency, and reasonableness) to ensure the usefulness of information in the risk-characterization step to risk managers.

• In the long term, DPR should monitor (and perhaps participate in) the activities of EPA and OEHHA in developing guidance on unified approaches to performing quantitative risk assessments for cancer and noncancer end points and in performing cumulative risk assessments. DPR scientists should stay abreast of current trends in exposure assessment, perhaps by having opportunities for specialized training, participation in scientific conferences, and engagement with workgroups and task forces that advance the science of exposure assessment.

APVMA (Australian Pesticides and Veterinary Medicines Authority). 2007. The Reconsideration of Registrations of Products Containing Carbaryl and Their Approved Associated Labels, Part 1: Uses of Carbaryl in Home Garden, Home Veterinary, Poultry and Domestic Situations, Final Review Report and Regulatory Decision. Volume 2: Technical Reports. Australian Pesticides and Veterinary Medicines Authority, Canberra, Australia. January 2007 [online]. Available: http://apvma.gov.au/sites/default/files/carbaryl-phase-6-review_finding_pt1vol2__0.pdf [accessed August 22, 2014].

Doull, J., D. Gaylor, H.A. Greim, D.P. Lovell, B. Lynch, and I.C. Munro. 2007. Report of an expert panel on the reanalysis of the 90-day study conducted by Monsanto in support of the safety of a genetically modified corn variety (MON 863). Food Chem. Toxicol. 45(11):2073-2085.

DPR (Department of Pesticide Regulation). 2004a. Process for Human Health Risk Assessment Prioritization and Initiation. Department of Pesticide Regulation, California Environmental Protection Agency. July 1, 2004 [online]. Available: http://www.cdpr.ca.gov/docs/risk/raprocess.pdf [accessed February 12, 2014].

DPR (Department of Pesticide Regulation). 2004b. Guidance for Benchmark Dose (BMD) Approach – Continuous Data. DPR MT-2. Department of Pesticide Regulation, California Environmental Protection Agency [online]. Available: http://www.cdpr.ca.gov/docs/risk/bmdcont.pdf [accessed October 9, 2014].

DPR (Department of Pesticide Regulation). 2010a. Carbaryl (1-naphthyl methylcarbamate) Dietary Risk Characterization Document. Medical Toxicology Branch, Department of Pesticide Regulation, California Environmental Protection Agency. May 13, 2010 [online]. Available: http://www.cdpr.ca.gov/docs/risk/rcd/carbaryl.pdf [accessed January 10, 2014].

DPR (Department of Pesticide Regulation). 2010b. Methyl Iodide (Iodomethane) Risk Characterization Document for Inhalation Exposure, Volume I, Health Risk Assessment. Medical Toxicology Branch, Department of Pesticide Regulation, California Environmental Protection Agency. February 2010 [online]. Available: http://www.cdpr.ca.gov/docs/risk/mei/mei_vol1_hra_final.pdf [accessed January 10, 2014].

DPR (Department of Pesticide Regulation). 2011. DPR Risk Assessment Guidance. September 22, 2011.

DPR (Department of Pesticide Regulation). 2012. Chloropicrin Risk Characterization Document. Medical Toxicology Branch, Department of Pesticide Regulation, California Environmental Protection Agency. November 14, 2012 [online]. Available: http://www.cdpr.ca.gov/docs/risk/rcd/chloropicrinrisk_2012.pdf [accessed January 10, 2014].

EFSA (European Food Safety Authority). 2006. Conclusion Regarding the Peer Review of the Pesticide Risk Assessment of the Active Substance Carbaryl. Finalized 12 May 2006. EFSA Scientific Report 80:1-71 [online]. Available: http://www.efsa.europa.eu/en/efsajournal/doc/80r.pdf [accessed August 20, 2014].

EPA (US Environmental Protection Agency). 2005. Guidelines for Carcinogen Risk Assessment. EPA/630/P-03/001B. Risk Assessment Forum, US Environmental Protection Agency, Washington, DC. March 2005 [online]. Available: http://www.epa.gov/ttnatw01/cancer_guidelines_final_3-25-05.pdf [accessed October 7, 2014].

EPA (US Environmental Protection Agency). 2014. Framework for Human Health Risk Assessment to Inform Decision Making. EPA/100/R-14/001. April 2014 [online]. Available: http://www.epa.gov/raf/frameworkhhra.htm [accessed 2014].

Fort, F.A. 2003. Carbaryl (Chemical ID No. 056801/List A Reregistration Case No. 0080). Revised Dietary Exposure Analysis Including Acute Probabilistic Water Analysis for the HED Revised Human Health Risk Assessment. DP Barcode D288479. Memorandum from Felecia A. Fort, Chemist, to Jeffrey Dawson, Chemist, Reregistration Branch 1, Health Effects Division, Office of Prevention, Pesticides and Toxic Substances, US Environmental Protection Agency, Washington, DC. March 18, 2003.

Fort, F.A. 2007. Carbaryl Acute, Probabilistic Aggregate Dietary (Food and Drinking Water) Exposure and Risk Assessments for the Reregistration Eligibility Decision. Memorandum from Felecia A. Fort, Chemist, Reregistration Branch 1, Health Effects Division, to C. Scheltema, Chemical Review Manager, Reregistration Branch III, Special Review and Reregistration Division, Office of Prevention, Pesticides and Toxic Substances, US Environmental Protection Agency, Washington, DC. June 27, 2007.

Frank, J.P. 2009. Method for Calculating Short-Term Exposure Estimates. HSM-09004 Memorandum to Worker Health and Safety Branch Staff from Joseph P. Frank, Senior Toxicologist, Department of Pesticide Regulation, Sacramento, CA. February 13, 2009 [online]. Available: http://www.cdpr.ca.gov/docs/whs/memo/hsm09004.pdf [accessed February 3, 2014].

Health Canada. 2009. Proposed Re-evaluation Decision: Carbaryl. PRVD2009-14. Health Canada, Ottawa, Ontario [online]. Available: http://pesticidetruths.com/toc/pest-control-products-health-canada-epa/ [accessed August 20, 2014].

IPCS (International Programme on Chemical Safety). 2001. Pesticide Residues in Food 2001. Toxicological Evaluations. Carbaryl (Addendum) [online]. Available: http://www.inchem.org/documents/jmpr/jmpmono/2001pr02.htm [accessed August 22, 2014].

Lawyer, A. 2014. Registrants’ Perspectives. Presentation at the Second Meeting on Review of the Risk Assessment Process for Pesticides in the California EPA’s Department of Pesticide Regulation, May 20, 2014, Washington, DC.

NRC (National Research Council). 1983. Risk Assessment in the Federal Government: Managing the Process. Washington, DC: National Academy Press.

NRC (National Research Council). 2008. Phthalates and Cumulative Risk Assessment: The Tasks Ahead. Washington, DC: The National Academies Press.

NRC (National Research Council). 2009. Science and Decisions: Advancing Risk Assessment. Washington, DC: The National Academies Press.

NRC (National Research Council). 2011. Review of the Environmental Protection Agency’s Draft IRIS Assessment of Formaldehyde. Washington, DC: The National Academies Press.

NRC (National Research Council). 2014. Review of EPA’s Integrated Risk Information System (IRIS) Process. Washington, DC: The National Academies Press.

RAAC (Risk Assessment Advisory Committee). 1996. A Review of the California Environmental Protection Agency’s Risk Assessment Practices, Policies, and Guidelines. October 1996 [online]. Available: http://oehha.ca.gov/risk/pdf/RAACreport.pdf [accessed June 17, 2014].