Appendix C

Clinical Evaluation Tools

CONCUSSION ASSESSMENT TOOLS

Glasgow Coma Scale

Originally developed by Teasdale and Jennett (1974), the Glasgow Coma Scale (GCS) (see Table C-1) is a scoring scale for eye opening, motor, and verbal responses that can be administered to athletes on the field to objectively measure their level of consciousness. A score is assigned to each response type for a combined total score of 3 to 15 (with 15 being normal). An initial score of less than 5 is associated with an 80 percent chance of a lasting vegetative state or death. An initial score of greater than 11 is associated with a 90 percent chance of complete recovery (Teasdale and Jennett, 1974). Because most concussed individuals score 14 or 15 on the 15-point scale, its primary use in evaluating individuals for sports-related concussions is to rule out more severe brain injury and to help determine which athletes need immediate medical attention (Dziemianowicz et al., 2012).
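The combined score described above can be sketched in a few lines of Python. This is only an illustration of the arithmetic: the function name `gcs_total` and the `GCS_RANGES` lookup are hypothetical, and the point ranges are those listed in Table C-1.

```python
# Valid point ranges per response type, as listed in Table C-1.
GCS_RANGES = {"eye": (1, 4), "motor": (1, 6), "verbal": (1, 5)}


def gcs_total(eye: int, motor: int, verbal: int) -> int:
    """Return the combined GCS score (3 to 15) from the three component scores."""
    scores = {"eye": eye, "motor": motor, "verbal": verbal}
    for name, value in scores.items():
        low, high = GCS_RANGES[name]
        if not low <= value <= high:
            raise ValueError(f"{name} score must be between {low} and {high}, got {value}")
    return eye + motor + verbal


# Hypothetical athlete: eyes open spontaneously, obeys commands, converses but is confused.
print(gcs_total(eye=4, motor=6, verbal=4))  # 14
```

A total of 14, as in this hypothetical case, falls in the range that the text notes is typical of concussed individuals.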

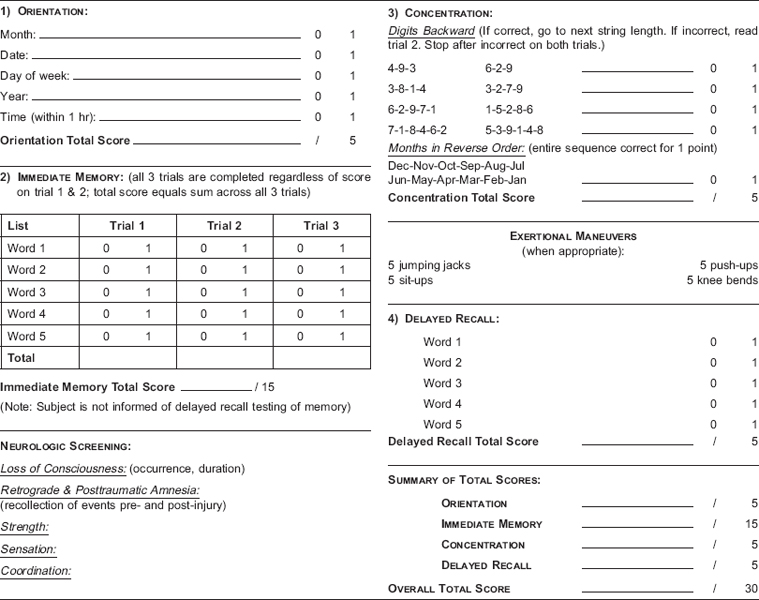

Standardized Assessment of Concussion

The Standardized Assessment of Concussion (SAC) (see Figure C-1) provides immediate sideline mental status assessment of athletes who may have incurred a concussion (Barr and McCrea, 2001; McCrea et al., 1998, 2000). The test contains questions designed to assess athletes’ orientation, immediate memory, concentration, and delayed memory. It also includes an exertion test and brief neurological evaluation. The SAC takes approximately 5 minutes to administer and does not require a neuropsychologist to evaluate test scores. The test is scored out of 30 with a mean score of 26.6 (McCrea et al., 1996).

TABLE C-1 Glasgow Coma Scale

| Response Type | Response | Points |
| --- | --- | --- |
| Eye Opening | Spontaneous: Eyes open, not necessarily aware | 4 |
| | To speech: Nonspecific response, not necessarily to command | 3 |
| | To pain: Pain from sternum/limb/supraorbital pressure | 2 |
| | None: Even to supraorbital pressure | 1 |
| Motor Response | Obeys commands: Follows simple commands | 6 |
| | Localizes pain: Arm attempts to remove supraorbital/chest pressure | 5 |
| | Withdrawal: Arm withdraws to pain, shoulder abducts | 4 |
| | Flexor response: Withdrawal response or assumption of hemiplegic posture | 3 |
| | Extension: Shoulder adducted and shoulder and forearm internally rotated | 2 |
| | None: To any pain; limbs remain flaccid | 1 |
| Verbal Response | Oriented: Converses and oriented | 5 |
| | Confused: Converses but confused, disoriented | 4 |
| | Inappropriate: Intelligible, no sustained sentences | 3 |
| | Incomprehensible: Moans/groans, no speech | 2 |
| | None: No verbalization of any type | 1 |

Studies have found the SAC to have good sensitivity and specificity (McCrea, 2001; McCrea et al., 2003), making it a useful tool for identifying the presence of concussion (Giza et al., 2013). Significant differences in scores have been reported for males and females in healthy young athletes (9 to 14 years of age), suggesting the need for separate norms for males and females in this age group (Valovich McLeod et al., 2006) as well as in high school athletes (Barr, 2003).

Sport Concussion Assessment Tool 3

The Sport Concussion Assessment Tool 3 (SCAT3) is a concussion evaluation tool designed for individuals 13 years and older. Due to its demonstrated utility, the SAC has been incorporated into this tool, which also includes the GCS, modified Maddocks questions (Maddocks et al., 1995), a neck evaluation and balance assessment, and a yes/no symptom checklist as well as information on the mechanism of injury and background information, including learning disabilities, attention deficit hyperactivity disorder, and history of concussion, headaches, migraines, depression, and anxiety (McCrory et al., 2013c). The precursor SCAT2 had been standardized as an easy-to-use tool with adequate psychometric properties for identifying concussions within the first 7 days (Barr and McCrea, 2001). The SCAT3 was developed from the original SCAT to help in making return-to-play decisions (McCrory et al., 2009, 2013b). This concussion evaluation tool can be used on the sideline or in the health care provider’s office. The SCAT3 takes approximately 15 to 20 minutes to complete.

FIGURE C-1 Standardized assessment of concussion.

SOURCE: McCrea, 2001, Table 2, p. 2276.

Because the SCAT3 was recently published (McCrory et al., 2013a), normative data and concussion cutoff scores are not yet available. However, a recent study to determine baseline values of the SCAT2 in normal male and female high school athletes found a high error rate on the concentration portion of the assessment in non-concussed athletes, suggesting the need for baseline testing in order to understand post-injury results (Jinguji et al., 2012). The study also showed significant sex differences, with females scoring higher on the balance, immediate memory, and concentration components of the assessment.

Findings similar to those of Jinguji and colleagues (2012) were reported in a study of youth ice hockey players who demonstrated an average total score of 86.9 out of 100 points (Blake et al., 2012). In the largest assessment of the SAC/SCAT2, Valovich McLeod and colleagues (2012) assessed 1,134 high school students. Male high school athletes and male and female ninth graders were found to have significantly lower SAC and total SCAT2 scores than did female athletes and upperclassmen, respectively (Valovich McLeod et al., 2012). A self-reported history of previous concussion¹ did not have a significant effect on SAC scores, but it did affect the symptom and total SCAT2 scores. The authors recommended baseline assessments in order to understand post-injury results for individual athletes. Schneider and colleagues (2010) tested more than 4,000 youth hockey players with the original SCAT and reported baseline scores showing absolute differences with age and sex. However, because no parametric statistics were provided, the significance of the observed differences is not known.

The Child SCAT3 is a newly developed concussion evaluation tool designed for children ages 5 to 12 years (McCrory et al., 2013a). It is similar to the SCAT3 except that tests such as the SAC and Maddocks questions are age appropriate for younger children. The Child SCAT3 includes versions of the SAC and Maddocks questions, the GCS, a medical history completed by the parent, child and parent concussion symptom scales, neck evaluation, and balance assessment. As is the case with the SCAT3 for adults, the Child SCAT3 has yet to be validated, so no normative data are available, nor are there concussion cutoff scores.

Military Acute Concussion Evaluation

The military currently employs the Military Acute Concussion Evaluation (MACE) tool for concussion screening and initial evaluation (DVBIC, 2012). The first section of the MACE collects data regarding the nature of the concussive event and the signs and symptoms of concussion. The second “examination” portion of the MACE is a version of the SAC. The MACE was first employed in Iraq for determining concussions in theater (French et al., 2008). Coldren and colleagues (2010) examined concussed and control U.S. Army soldiers who were administered the MACE 12 hours after their injury. The researchers concluded that the MACE lacks the sensitivity and specificity necessary to determine a concussive event 12 hours post injury. However, a recent study by Kennedy and colleagues (2012) indicated that the MACE can be effective in serial concussion evaluation if originally administered within 6 hours of the injury.

____________________________________

¹ Information on the time elapsed since the previous concussions was not reported.

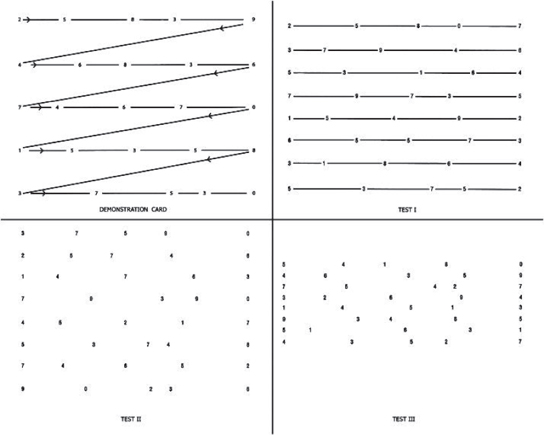

King-Devick Test

The King-Devick test is designed to assess saccadic eye movements, measuring the speed of rapid number naming as well as errors made by the athlete, with the goal of detecting impairments of eye movement, attention, and language as well as impairments in other areas that would be indicative of suboptimal brain function (Galetta et al., 2011a). The King-Devick test includes a demonstration and three test cards with rows of single-digit numbers that are read aloud from left to right (see Figure C-2). The participant is asked to read the numbers as quickly as possible without making any errors. The administrator records the total time to complete the three cards and the total number of errors made during the test. The results are compared to a personal baseline. The King-Devick test usually takes approximately 2 minutes to complete (King-Devick, 2013).

Studies of the King-Devick test involve 10 or fewer concussed athletes (Galetta et al., 2011a,b, 2013; King et al., 2012, 2013), which is too small a sample size to determine the test’s effectiveness in evaluating a concussion. Currently, there is not enough evidence to determine whether the test is effective in diagnosing or monitoring a concussion (Giza et al., 2013).

FIGURE C-2 Demonstration and test cards for King-Devick (K-D) test.

SOURCE: King-Devick, 2013.

Clinical Reaction Time Test

Eckner and colleagues (2010) have developed a simple tool for measuring clinical reaction time (RTclin). The test involves a systematic approach to dropping a weighted stick that is calibrated to reflect speed of reaction for catching it. The athlete holds his or her hand around, but not touching, a rubber piece at the bottom of the stick, then the test administrator drops the stick, and the athlete catches it on the way down. Several studies document the initial development of this tool and demonstrate its concurrent and predictive validity (Eckner et al., 2010, 2011a,b).

A 2010 pilot study established convergent validity of RTclin with the CogSport simple reaction time measure (Eckner et al., 2010). The Pearson correlation for 68 of the 94 athletes who completed both RTclin and CogSport was .445 (p < .001). The other 26 athletes did not meet the validity criteria on CogSport. For those individuals, the correlations between the two tests were nonsignificant. A 2011 study looked at the test-retest reliability of RTclin at a 1-year interval as well as the same reliability statistic for CogSport reaction time (Eckner et al., 2011a). The researchers used a two-way random effects analysis of variance model for intraclass correlation coefficient analysis for each test. This means that each subject was assessed by each rater, and the raters were randomly selected. The RTclin intraclass correlation coefficient was above .60. One might argue that a two-way mixed effects analysis should be used, which would actually increase the coefficient. There was also significant improvement in the absolute reaction times from time one to time two for the RTclin but not for CogSport (here the CogSport analysis only included valid responders).
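To illustrate why the choice of model matters, the sketch below computes two single-measure intraclass correlation coefficients from the same two-way analysis of variance. It assumes the Shrout and Fleiss forms usually labeled ICC(2,1) (two-way random effects, absolute agreement) and ICC(3,1) (two-way mixed effects, consistency). The study's exact variant is not stated in the text, and the function name and the reaction times are hypothetical.

```python
def icc_two_way(data):
    """Return (icc_random, icc_mixed) for a subjects-by-sessions table.

    data: list of rows, one per subject, each holding one score per session.
    icc_random follows the ICC(2,1) form; icc_mixed follows the ICC(3,1) form.
    """
    n = len(data)      # number of subjects
    k = len(data[0])   # number of sessions (or raters)
    grand = sum(sum(row) for row in data) / (n * k)
    row_means = [sum(row) / k for row in data]
    col_means = [sum(row[j] for row in data) / n for j in range(k)]

    # Sums of squares for subjects, sessions, and residual error.
    ss_rows = k * sum((m - grand) ** 2 for m in row_means)
    ss_cols = n * sum((m - grand) ** 2 for m in col_means)
    ss_total = sum((x - grand) ** 2 for row in data for x in row)
    ss_error = ss_total - ss_rows - ss_cols

    ms_rows = ss_rows / (n - 1)
    ms_cols = ss_cols / (k - 1)
    ms_error = ss_error / ((n - 1) * (k - 1))

    icc_random = (ms_rows - ms_error) / (
        ms_rows + (k - 1) * ms_error + k * (ms_cols - ms_error) / n
    )
    icc_mixed = (ms_rows - ms_error) / (ms_rows + (k - 1) * ms_error)
    return icc_random, icc_mixed


# Hypothetical reaction times (ms) for five athletes at time one and time two,
# with a systematic improvement at time two.
times = [[210, 198], [225, 215], [190, 185], [240, 224], [205, 199]]
icc_random, icc_mixed = icc_two_way(times)
print(f"random effects: {icc_random:.2f}, mixed effects: {icc_mixed:.2f}")
```

Under these assumptions the random effects form penalizes a systematic shift between sessions and the consistency form does not. That is one way to read the remark that a mixed effects analysis would increase the coefficient when scores improve from time one to time two.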

Eckner and colleagues (2013) have also assessed the diagnostic utility of the RTclin. They compared 28 concussed athletes to 28 controls. Concussed athletes were tested within 48 hours of injury and a control was selected at the same time interval. Post-injury tests were compared to baseline scores and reliable change indices were calculated using the control group means and standard error of difference from the two time-points. Using a 60 percent confidence interval (one-tailed significance), the authors calculated sensitivity at 79 percent and specificity at 61 percent for a score difference of –3. Thirty-three of the 56 athletes obtained this score; of the 33, 22 were concussed and 11 were not, and therefore were misidentified. Adjusting the cutoff score to a difference of zero seconds improved specificity somewhat at a small cost in sensitivity (75 percent sensitivity; 68 percent specificity). This corresponded to a 68 percent (one-sided) confidence interval. Of note, more stringent cutoff values lowered sensitivity but increased specificity, meaning that improvements in scores beyond 11 points increased the probability that the athlete did not have a concussion, which can be useful clinical information.
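The sensitivity and specificity reported for the cutoff of –3 can be reproduced from the counts given in the text. The short Python sketch below is only a worked check of that arithmetic; the function name is hypothetical, and the false-negative and true-negative counts are derived from the stated group sizes rather than quoted from the study.

```python
def sensitivity_specificity(true_pos, false_neg, true_neg, false_pos):
    """Return (sensitivity, specificity) from the four cells of a 2x2 table."""
    sensitivity = true_pos / (true_pos + false_neg)
    specificity = true_neg / (true_neg + false_pos)
    return sensitivity, specificity


concussed, controls = 28, 28        # group sizes stated in the text
true_pos = 22                       # concussed athletes who met the cutoff
false_pos = 11                      # controls who met the cutoff (misidentified)
false_neg = concussed - true_pos    # derived: 6 concussed athletes missed
true_neg = controls - false_pos     # derived: 17 controls correctly cleared

sens, spec = sensitivity_specificity(true_pos, false_neg, true_neg, false_pos)
print(f"sensitivity = {sens:.0%}, specificity = {spec:.0%}")
# sensitivity = 79%, specificity = 61%
```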

The RTclin is a simple-to-use and low-cost test of reaction time. The initial test of reliability at 1-year intervals is promising, and the diagnostic statistics indicate adequate utility. Further independent validation is needed, and it would be valuable to determine the increase in diagnostic efficiency if the test were combined with other tools because multimodal diagnostic test batteries have been recommended in the literature.

BALANCE TESTS

Balance Error Scoring System

The Balance Error Scoring System (BESS) is a quantifiable version of a modified Romberg test for balance (Guskiewicz, 2001; Riemann et al., 1999). It measures postural stability or balance and consists of six stances, three on a firm surface and the same three stances on an unstable (medium density foam) surface (Guskiewicz, 2001; Riemann et al., 1999). All stances are done with the athlete’s eyes closed and with his or her hands on the iliac crests for 20 seconds. The three stances are: feet shoulder width apart, a tandem stance (one foot in front of the other), and a single-leg stance on the person’s nondominant leg (Guskiewicz, 2001; Riemann et al., 1999). For every error made (lifting hands off the iliac crests, opening the eyes, stepping, stumbling, or falling, moving the hip into more than 30 degrees of flexion or abduction, lifting the forefoot or heel, or remaining out of the testing position for more than 5 seconds), 1 point is assessed. The higher the score, the worse the athlete has performed.
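The scoring rule described above (1 point per error, summed over six trials) can be sketched as follows. The stance and surface labels, the function name, and the error counts are hypothetical. Any per-trial maximum that a published protocol might apply is not described here and is therefore omitted.

```python
STANCES = ("double_leg", "tandem", "single_leg")
SURFACES = ("firm", "foam")


def bess_total(errors):
    """Sum the error counts over all six surface/stance trials.

    errors: dict mapping (surface, stance) to the number of errors observed
    during that 20-second trial. A higher total means worse performance.
    """
    total = 0
    for surface in SURFACES:
        for stance in STANCES:
            count = errors[(surface, stance)]
            if count < 0:
                raise ValueError("error counts cannot be negative")
            total += count
    return total


# Hypothetical error counts for one athlete.
observed = {
    ("firm", "double_leg"): 0,
    ("firm", "tandem"): 1,
    ("firm", "single_leg"): 3,
    ("foam", "double_leg"): 1,
    ("foam", "tandem"): 3,
    ("foam", "single_leg"): 6,
}
print(bess_total(observed))  # 14
```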

The BESS test has very good test-retest reliability (0.87 to 0.97 intraclass correlations) (Riemann et al., 1999). The test’s sensitivity for diagnosis is 0.34 to 0.64, which is considered low to moderate, while its specificity is high (0.91) (Giza et al., 2013). Using the BESS test in conjunction with the SAC and a graded symptom checklist increases the sensitivity (Giza et al., 2013). The BESS has only been found to be useful within the first 2 days following injury (Giza et al., 2013; McCrea et al., 2003).

Sensory Organization Test

The Sensory Organization Test (SOT) uses six sensory conditions to objectively identify abnormalities in the patient’s use of somatosensory, visual, and vestibular systems to maintain postural control. The test conditions systematically eliminate useful visual and proprioceptive information in order to assess the patient’s vestibular balance control and adaptive responses of the central nervous system (NeuroCom, 2013). Broglio and colleagues (2008) examined the sensitivity and specificity of the SOT using the reliable change technique. A baseline and one follow-up assessment were performed on healthy and concussed young adults. Post-injury assessment in the concussed group occurred within 24 hours of diagnosis. An evaluation for change on one or more SOT variables resulted in the highest combined sensitivity (57 percent) and specificity (80 percent) at the 75 percent confidence interval. The low sensitivity of the SOT suggests the need to use additional evaluation tools to improve identification of individuals with concussion.
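The reliable change technique mentioned above can be sketched in general terms. The version below makes several assumptions that are not taken from the cited studies: it corrects for the mean change seen in healthy controls, uses the standard deviation of control-group change scores as the standard error of the difference, applies a one-tailed critical value, and treats lower scores as worse. All names and numbers are hypothetical.

```python
from statistics import NormalDist, mean, stdev


def reliable_change(baseline, follow_up, control_changes, confidence=0.75):
    """Return (rci, declined) for one individual.

    control_changes: follow-up minus baseline scores from healthy controls,
    used here both to remove the average retest shift and to estimate the
    standard error of the difference (an assumption of this sketch).
    """
    practice_effect = mean(control_changes)
    se_diff = stdev(control_changes)
    rci = ((follow_up - baseline) - practice_effect) / se_diff
    # One-tailed critical value for the chosen confidence level.
    z_crit = NormalDist().inv_cdf(confidence)
    return rci, rci < -z_crit


# Hypothetical control change scores and one hypothetical injured individual.
controls = [1, 3, 2, 0, 4]
rci, declined = reliable_change(baseline=80, follow_up=75, control_changes=controls)
print(f"RCI = {rci:.2f}, reliable decline: {declined}")
```

Raising the confidence level makes the cutoff more stringent. This is the kind of trade-off between sensitivity and specificity that the reported figures reflect.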

SYMPTOM SCALES

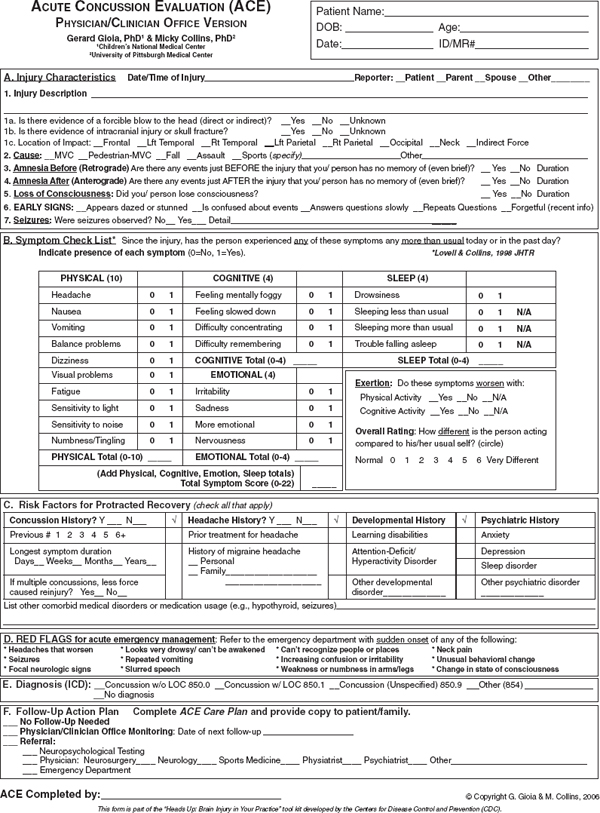

Acute Concussion Evaluation

The Acute Concussion Evaluation (ACE) tool is a physician/clinician form used to evaluate individuals for a concussion (see Figure C-3; Gioia and Collins, 2006; Gioia et al., 2008a). The form consists of questions about the presence of concussion characteristics (e.g., loss of consciousness, amnesia), 22 concussion symptoms, and risk factors that might predict prolonged recovery (e.g., a history of concussion) (Gioia et al., 2008a). The ACE can be used serially to track symptom recovery over time to help inform clinical management decisions (Gioia et al., 2008a).

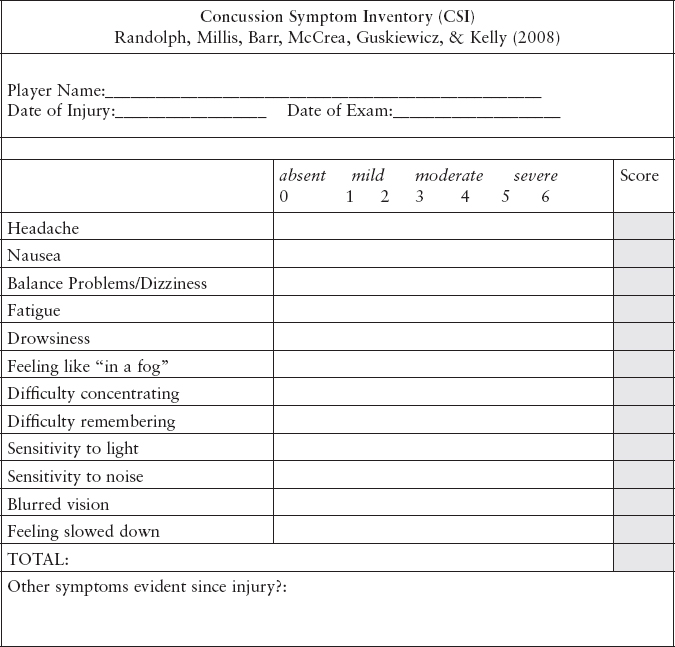

Concussion Symptom Inventory

The Concussion Symptom Inventory (CSI) (see Figure C-4) is a derived symptom scale designed specifically for tracking recovery. Randolph and colleagues (2009) analyzed a large set of data from existing scales obtained from three separate case-control studies. Through a series of analyses they eliminated overlapping items that were found to be insensitive to concussion. They collected baseline data from symptom checklists, including a total of 27 symptom variables from a total of 16,350 high school and college athletes. Follow-up data were obtained from 641 athletes who subsequently incurred a concussion. Symptom checklists were administered at baseline (pre-season), immediately post concussion, postgame, and at 1, 3, and 5 days following injury. Effect-size analyses resulted in the retention of only 12 of the 27 variables. Receiver-operating characteristic analyses (non-parametric approach) were used to confirm that the reduction in items did not reduce sensitivity or specificity (area under the curve at day 1 post injury = 0.867). Because the inventory has a limited set of symptoms, Randolph and colleagues note the need for a complete symptom inventory for other problems associated with concussion.

FIGURE C-4 Concussion symptom inventory.

SOURCE: Randolph et al., 2009, Appendix, p. 227.
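A non-parametric area under the receiver-operating characteristic curve, of the kind referred to in the Concussion Symptom Inventory analysis above, can be computed directly from pairwise comparisons. The sketch below uses the standard rank-based definition (the probability that a randomly chosen injured athlete scores higher than a randomly chosen control, counting ties as one half). It is not necessarily the exact procedure used by Randolph and colleagues, and the symptom totals are hypothetical.

```python
def auc_nonparametric(injured_scores, control_scores):
    """Area under the ROC curve from pairwise comparisons of two groups."""
    wins = 0.0
    for injured in injured_scores:
        for control in control_scores:
            if injured > control:
                wins += 1.0
            elif injured == control:
                wins += 0.5  # ties count as half
    return wins / (len(injured_scores) * len(control_scores))


# Hypothetical symptom totals at day 1 post injury.
injured = [12, 20, 8, 15]
controls = [2, 5, 8, 0]
print(auc_nonparametric(injured, controls))  # 0.96875
```

An area of 0.5 would mean the scale separates the groups no better than chance, and 1.0 would mean perfect separation.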

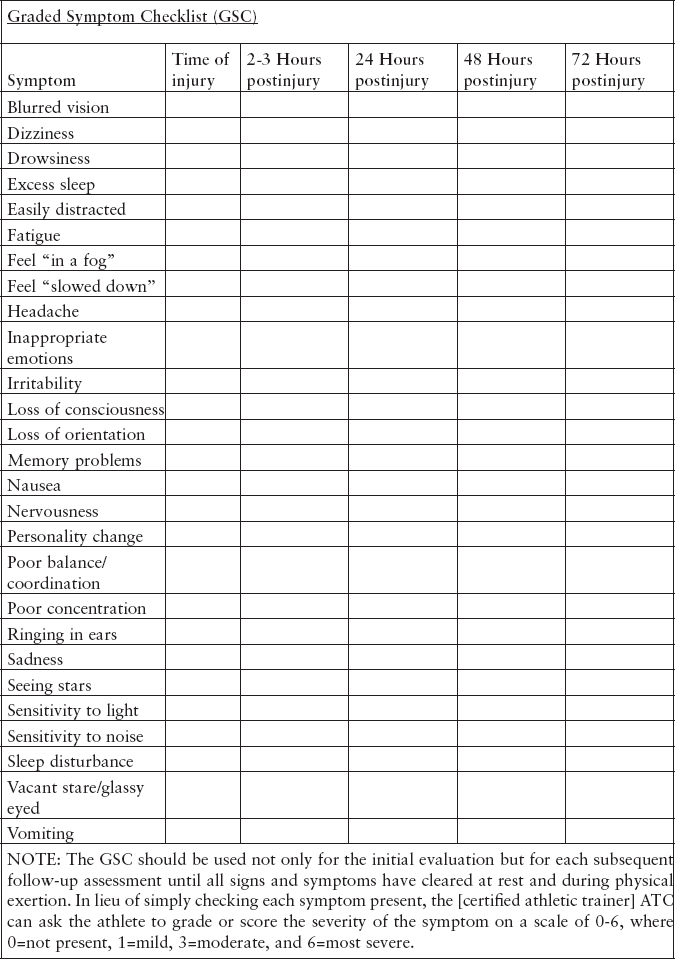

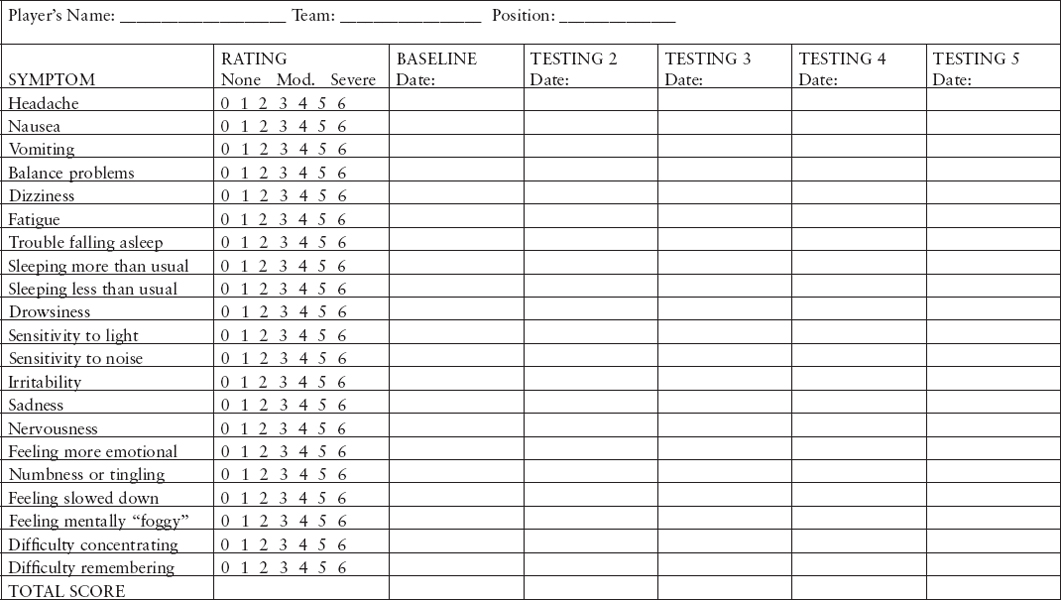

Graded Symptom Checklist and Graded Symptom Scale

The Graded Symptom Checklist (GSC) (see Figure C-5) and Graded Symptom Scale (GSS) are self-report measures of concussion symptoms derived from the longer Head Injury Scale (Janusz et al., 2012). The symptoms are rated on their severity. The evidence is much stronger to support the use of such self-report symptom measures in youth ages 13 and older. Test-retest reliability has not been reported, but a three factor solution (cognitive, somatic, neurobehavioral) has been reported, although a better solution contained only nine items (Piland et al., 2006). Evidence of convergent validity includes parallel recovery on the GSC and measures of balance and neurocognitive function and correlation with the presence of posttraumatic headaches (McCrea et al., 2003; Register-Mihalik et al., 2007), and discriminant validity between higher and lower impact force (McCaffrey et al., 2007).

Health and Behavior Inventory

The Health and Behavior Inventory (HBI) is a 20-item instrument, with parent and child self-report forms, that has been validated on youth ages 8 to 15 years and their parents (Ayr et al., 2009; Janusz et al., 2012). Test-retest reliability has not been reported. A factor structure was reported (cognitive, somatic, and emotional) that is consistent across parent and child reports and both at baseline and at 3-month follow-up. Parent and child reports are moderately inter-correlated, internal consistency has been reported, and there is evidence of both convergent (correlation with quality of life, family burden, educational and social difficulties) and discriminant (from those with non-head orthopedic injuries, moderate to severe traumatic brain injury) validity. Finally, the measure shows reliable change in 8- to 15-year-olds with mild traumatic brain injury (mTBI) followed over 12 months, and these increases in symptomatology relative to orthopedic controls were related to injury characteristics, abnormalities on neuroimaging, the need for educational intervention, and pediatric quality of life (Yeates et al., 2012).

Post-Concussion Symptom Inventory

The Post-Concussion Symptom Inventory (PCSI) has self-report forms for youth ages 5 to 7 years (13 items), 8 to 12 years (25 items), and 13 to 18 years (26 items) as well as reports for parents and teachers (26 items). The forms focus on symptoms in the cognitive, emotional, sleep, and physical domains. Interrater reliability on the child reports was moderate to high (r’s ranging from 0.62 to 0.84) (Schneider and Gioia, 2007; Vaughan et al., 2008), as was internal consistency for all three reports (Gioia et al., 2008b; Vaughan et al., 2008). There was moderate agreement among the reporters (r=0.4 to r=0.5) in one of the two studies (see Gioia et al., 2009, Table 2), and there was evidence of predictive and discriminant validity (Diver et al., 2007; Vaughan et al., 2008). It would appear that the strongest data for adolescents support the use of the Post-Concussion Symptom Scale (PCSS) (discussed in the following section) and, for children and adolescents, the HBI or the PCSI.

Post-Concussion Symptom Scale

The PCSS is a 21-item self-report measure that records symptom severity using a 7-point Likert scale of severity (see Figure C-6; Lovell and Collins, 1998). The measure has not been studied in youth under the age of 11. In contrast to several of the other measures, evidence of moderate test-retest reliability has been reported (pre- to post-season intraclass correlation was 0.55; test-retest, r=0.65) (Iverson et al., 2003), and the scale is able to detect reliable change (Iverson et al., 2003). Recently, a revised factor structure has been reported in adolescents ranging from ages 13 to 22 years, which showed, post concussion, a four factor solution—cognitive-fatigue-migraine, affective, somatic, and sleep—and higher scores in female than in male participants (Kontos et al., 2012). The PCSS is able to discriminate between concussed and non-concussed athletes (Echemendia et al., 2001; Field et al., 2003; Iverson et al., 2003; Lovell et al., 2006; Schatz et al., 2006); shows greater abnormalities in those with multiple concussions (Collins et al., 1999); and shows convergent validity with measures of neurocognitive performance and regional hyper-activation on functional magnetic resonance imaging during a working memory task (Collins et al., 2003; Pardini et al., 2010). Although a consistent relationship between neuropsychological outcomes and the PCSS has been reported, persistent neurocognitive abnormalities have been reported even in “asymptomatic” athletes who have recovered clinically and on the basis of their scores on the PCSS (Fazio et al., 2007).

Rivermead Post-Concussion Symptoms Questionnaire

The Rivermead Post-Concussion Symptoms Questionnaire (RPCSQ) is a 16-item self-report measure of symptom severity that asks individuals to compare the presence and severity of symptoms they have experienced within the past 24 hours relative to their experience of the same symptoms prior to the injury. One study showed discriminant validity between concussed and non-concussed youth ages 12 and under, but no reliability or factor structure was reported (Gagnon et al., 2005). In adolescents, high internal consistency but low test-retest reliability has been reported (Iverson and Gaetz, 2004) as well as strong discriminant validity between concussed and non-concussed youth (Wilde et al., 2008).

FIGURE C-6 Post-concussion scale.

NOTE: More recent versions of this instrument include “visual problems” in the list of symptoms rated.

SOURCE: Lovell and Collins, 1998, Figure 1, p. 20.

COMPUTERIZED NEUROCOGNITIVE TESTS

Automated Neuropsychological Assessment Metrics

Automated Neuropsychological Assessment Metrics (ANAM) is a computer-based neuropsychological assessment tool designed to detect the accuracy and efficiency of cognitive processing in a variety of situations (Levinson and Reeves, 1997). ANAM measures attention, concentration, reaction time, memory, processing speed, and decision making (Cernich et al., 2007). Test administration takes approximately 20 minutes.

Recently ANAM has published normative data for sex and age using more than 100,000 military service members 17 to 65 years of age (Vincent et al., 2012). Researchers have also investigated the sensitivity, validity, and reliability of the ANAM test battery. Levinson and Reeves (1997) investigated the sensitivity of ANAM in traumatic brain injury patients who were classified as marginally impaired (n=8), mildly impaired (n=7), or moderately impaired (n=7). The ANAM test battery was administered on two occasions separated by a 2- to 3-month interval in order to examine its ability to classify the patients by comparing accuracy and efficiency scores on the tests to appropriate normative data. At the first administration, the efficiency scores on the ANAM classified 91 percent of the patients into groups accurately; 100 percent classification was obtained in the second administration when the mild and moderately impaired patients were combined as one group. At the second administration, the efficiency scores accurately classified 86.36 percent of the patients. This study revealed the sensitivity of ANAM in distinguishing the severity of traumatic brain injury.

Bleiberg and colleagues (2000) investigated the construct validity of ANAM by examining the relationship between ANAM and a set of traditional clinical neuropsychological tests (Trail Making Test Part B, Consonant Trigrams total score, Paced Auditory Serial Addition test, the Hopkins Verbal Learning test, and the Stroop Color and Word test). The strongest correlations for mathematical processing, Sternberg memory procedure, and spatial processing were found with the Paced Auditory Serial Addition test (0.663, 0.447, and 0.327, respectively). The strongest correlation for matching to sample was found with Trail Making Test Part B (–0.497). This study indicated good construct validity between ANAM and traditional neuropsychological measures.

The ANAM sports medicine battery is a specialized subset designed for serial concussion testing. The ANAM sports medicine battery assesses attention, mental flexibility, cognitive processing efficiency, arousal and fatigue level, learning, and recall and memory (Reeves et al., 2007). Segalowitz and colleagues (2007) examined the test-retest reliability of ANAM sports medicine battery in a group of 29 adolescents. The researchers administered ANAM twice during the same time of day over a 1-week interval. The highest intra-class correlations were reported in matching to sample (0.72), followed by continuous performance test (0.65), mathematical processing (0.61), code substitution (0.58), simple reaction time 2 (0.47), and simple reaction time (0.44). The highest Pearson correlation coefficient was reported in code substitution (0.81), followed by matching to sample (0.72), continuous performance test (0.70), code substitution delayed (0.67), simple reaction time 2 (0.50), and simple reaction time (0.48). The results suggest that there is variability of test-retest reliability for individual subtests of ANAM.

Another study assessed the reliability of the ANAM sports medicine battery through test-retest methods using a military sample (Cernich et al., 2007). The average test-retest interval for this study was 166.5 days. Results revealed a wide range of intra-class correlations for each subtest with the highest being in mathematical processing (0.87), followed by matching to sample (0.66), spatial processing (0.60), continuous performance test (0.58), Sternberg memory procedure (0.48), and simple reaction time (0.38). Both of these studies suggest that there are low intraclass correlation values for reaction time subtest scores (Cernich et al., 2007; Segalowitz et al., 2007).

As with all computerized neuropsychological test batteries, it is important to determine whether multiple test sessions result in practice effects. Eonta and colleagues (2011) examined practice effects of ANAM in two groups of military personnel. In the first study, 38 U.S. Marines were administered four tests back-to-back on 1 day and on consecutive days. In the second study, 21 New Zealand military personnel were administered the ANAM test battery eight times across 5 days. Individuals demonstrated practice effects on five of six subtests in the two studies.

Bryan and Hernandez (2012) examined military service members who presented with mTBI to a military clinic in Iraq. The findings of the study indicated that on five out of the six ANAM subtests, a larger proportion of mTBI patients than control patients without TBI demonstrated significant declines in speed at 2 days post injury. The one exception was accuracy, which showed no difference between the mTBI and the control groups.

Finally, Coldren and colleagues (2012) examined concussed U.S. Army soldiers at 72 hours and 10 days post injury using the ANAM test battery. Concussed soldiers showed impairment at 72 hours compared to a control group; however, at 10 days the concussed group showed no significant impairments on the ANAM subtests when compared to the control group. Although the researchers concluded that the ANAM lacks utility as a diagnostic or screening tool 10 days following a concussion, they did not report whether the concussed soldiers were also asymptomatic and reporting a normal clinical exam. Thus, more research is warranted to determine whether the ANAM test battery is effective in the long-term assessment of concussed patients.

CogSport/AXON

CogSport is a computerized neuropsychological test battery developed by CogState that measures psychomotor function, speed of processing, visual attention, vigilance, and verbal and visual learning and memory (Falleti et al., 2006). The battery employs a series of eight “card games” to examine cognitive function including simple reaction time, complex reaction time, and one-back and continuous learning. Axon Sports is a recently launched company within CogState that has developed the Computerized Cognitive Assessment Tool (CCAT). Like CogSport, the CCAT employs a “card game” to evaluate cognitive domains, including processing speed, attention, learning, and working memory. This test specifically examines reaction time and accuracy.

Collie and colleagues (2003) determined the reliability of CogSport by calculating intraclass correlation coefficients on serial data collected in 60 healthy youth volunteers at intervals of 1 hour and 1 week. In the same study, construct validity was determined by calculating intraclass correlation coefficients between CogSport and performance on traditional paper-and-pencil assessment tools (the Digit Symbol Substitution test and the Trail Making Test Part B) in 240 professional athletes competing in the Australian Football League. The results indicated high to very high intraclass correlations in CogSport speed indices at intervals of 1 hour and 1 week (0.69 to 0.90), while CogSport accuracy indices displayed lower and more variable intraclass correlations (0.08 to 0.51). Correlations between CogSport and the Digit Symbol Substitution test were highest for decision making (r=0.86), followed by working memory speed (0.76), psychomotor speed (0.50), and learning speed (0.42). Smaller and less variable correlation coefficients between CogSport and the Trail Making Test Part B were observed, with the highest in psychomotor speed (0.44), followed by decision making (0.34), working memory speed (0.33), and learning speed (0.23).

Broglio and colleagues (2007) examined the test-retest reliability of three computerized neuropsychological testing batteries: Immediate Post-Concussion Assessment and Cognitive Testing (ImPACT), Concussion Resolution Index (CRI), and Concussion Sentinel, which is an earlier version of CogSport. In this study, these computerized neuropsychological test batteries were administered to 73 participants successively (the order of administration was not specified) on three occasions: at baseline, and at 45 days and 50 days after baseline. Based on seven neuropsychological tests, Concussion Sentinel develops five output scores: reaction time, decision making, matching, attention, and working memory. When baseline and day 45 were compared, the highest intraclass correlation was observed for working memory (0.65), followed by reaction time (0.60), decision making (0.56), attention (0.43), and matching (0.23). When days 45 and 50 were compared, the highest correlation was reported for matching (0.66), followed by working memory (0.64), decision making (0.62), reaction time (0.55), and attention (0.39). However, the effect on the participants’ performance of successively administering three neuropsychological testing batteries was identified as a possible methodological flaw, potentially contributing to the low intraclass correlation scores.

Makdissi and colleagues (2010) conducted a prospective study that tracked the recovery of 78 concussed male Australian football players using the Axon Sport CCAT and traditional paper-and-pencil tests (the Digit Symbol Substitution Test and the Trail Making Test Part B). Although concussion-associated symptoms lasted an average of 48.6 hours (95 percent CI, 39.5-57.7 hours), and cognitive deficits on the traditional paper-and-pencil tests had for the most part resolved at 7 days post injury, 17.9 percent of the athletes still demonstrated significant cognitive decline on the Axon Sport CCAT. This study implied that the Axon Sport CCAT has greater sensitivity to cognitive impairment following concussion than the Digit Symbol Substitution Test and the Trail Making Test Part B. Because Axon Sport is a new computerized neuropsychological test battery, more research is warranted to determine whether it is effective in assessing concussion outcomes.

Concussion Resolution Index

The CRI, developed by HeadMinder, Inc., is a Web-based computerized neuropsychological assessment battery composed of six subtests: reaction time, cued reaction time, visual recognition 1 and 2, animal decoding, and symbol scanning (Erlanger et al., 2003). Symbol scanning measures simple and complex reaction time, visual scanning, and psychomotor speed. These six subtests form three CRI indices: the Psychomotor Speed Index, the Simple Reaction Time Index, and the Complex Reaction Time Index (Erlanger et al., 2003). In addition to cognitive testing, the CRI collects demographic information, medical history, concussion history, and a symptom report.

Concurrent validity was established using traditional neuropsychological paper-and-pencil tests. Correlations for the CRI Psychomotor Speed Index were 0.66 for the Symbol Digit Modalities Test, 0.60 and 0.57 for the Grooved Pegboard Test dominant and nondominant hand, respectively, and 0.58 for the WAIS-III Symbol Search subtest (Erlanger et al., 2001). Correlations for the CRI Complex Reaction Time Index were 0.59 and 0.70 for the Grooved Pegboard Test dominant and nondominant hand, respectively (Erlanger et al., 2001). Correlations for the CRI Simple Reaction Time Index were 0.56 for Trail Making Test Part A, and 0.46 and 0.60 for the Grooved Pegboard Test dominant and nondominant hand, respectively (Erlanger et al., 2001).

In the previously mentioned study by Broglio and colleagues (2007), test-retest reliability was also examined for the CRI at baseline and at 45 and 50 days post baseline. The CRI demonstrated extremely low intraclass correlations for the Simple Reaction Time Error score: 0.15 for baseline to day 45 and 0.03 for day 45 to day 50. The correlations for simple reaction time were 0.65 (baseline to day 45) and 0.36 (day 45 to day 50), and for complex reaction time were 0.43 (baseline to day 45) and 0.66 (day 45 to day 50); the correlations for the complex reaction time error score were 0.26 (baseline to day 45) and 0.46 (day 45 to day 50), and for the processing speed index were 0.66 (baseline to day 45) and 0.58 (day 45 to day 50). These low correlations may be due to the three neuropsychological testing batteries being administered during one session and to the lack of counterbalancing of these three computerized test batteries.

Erlanger and colleagues (2003) reported that test-retest reliability for a 2-week interval was 0.82 for psychomotor speed, 0.70 for simple reaction time, and 0.68 for complex reaction time. Another study also examined the sensitivity of the CRI in detecting changes between baseline and post-injury testing, and it found that the CRI had a sensitivity of 77 percent in identifying a concussion (Erlanger et al., 2001). Thus, the CRI was found to be a valid method of identifying changes in psychomotor speed, reaction time, and processing speed after a sports-related concussion.

Immediate Post-Concussion Assessment and Cognitive Testing

ImPACT is an online computerized neuropsychological test battery composed of three general sections. First, athletes input their demographic and descriptive information by following instructions on a series of screens. The demographic section includes sport participation history, history of alcohol and drug use, learning disabilities, attention deficit hyperactivity disorder, major neurological disorders, and history of previous concussion. Next, the athletes self-report any of 22 listed concussion symptoms, which they rate using a 7-point Likert scale. The third section consists of six neuropsychological test modules that evaluate the subject’s attention processes, verbal recognition memory, visual working memory, visual processing speed, reaction time, numerical sequencing ability, and learning.

Schatz and colleagues (2006) examined the diagnostic utility of the composite scores and the PCSS of the ImPACT in a group of 72 concussed athletes and 66 non-concussed athletes. All athletes were administered a baseline test and all concussed athletes were tested within 72 hours of incurring a concussion. Approximately 82 percent of the participants in the concussion group and 89 percent of the participants in the control group were correctly classified. This indicates that the sensitivity of ImPACT was 81.9 percent and the specificity was 89.4 percent.
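The link between the classification rates and the reported sensitivity and specificity can be checked with a short calculation. The Python sketch below is illustrative only: the counts of 59 correctly classified athletes in each group are inferred from the reported percentages and group sizes (72 and 66), not taken from the text, and the function name is hypothetical.

```python
def sensitivity_specificity(true_pos, false_neg, true_neg, false_pos):
    """Return (sensitivity, specificity) as fractions between 0 and 1."""
    # Sensitivity: share of truly concussed athletes classified as concussed.
    sensitivity = true_pos / (true_pos + false_neg)
    # Specificity: share of non-concussed athletes classified as non-concussed.
    specificity = true_neg / (true_neg + false_pos)
    return sensitivity, specificity


# Assumed counts, inferred from the reported percentages:
# 59 of 72 concussed athletes and 59 of 66 controls correctly classified.
sens, spec = sensitivity_specificity(
    true_pos=59, false_neg=13, true_neg=59, false_pos=7
)
print(f"sensitivity = {sens:.1%}, specificity = {spec:.1%}")
# prints: sensitivity = 81.9%, specificity = 89.4%
```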

To examine the construct validity of ImPACT, Maerlender and colleagues (2010) compared the scores on the ImPACT test battery to a neuropsychological test battery and experimental cognitive measures in 54 healthy male athletes. The neuropsychological test battery included the California Verbal Learning Test, the Brief Visual Memory Test, the Delis-Kaplan Executive Function System, the Grooved Pegboard, the Paced Auditory Serial Attention Test, the Beck Depression Inventory, the Spielberger State-Trait Anxiety Questionnaire, and the Word Memory Test. The experimental cognitive measures included the N-back and the verbal continuous memory task. The following scores were generated: neuropsychological verbal memory score, neuropsychological working memory score, neuropsychological visual memory score, neuropsychological processing speed score, neuropsychological attention score, neuropsychological reaction time score, neuropsychological motor score, and neuropsychological impulse control score. The results indicated significant correlations between neuropsychological domains and all ImPACT domain scores except the impulse control factor. The ImPACT verbal memory score correlated with neuropsychological verbal memory (r=0.40, p=0.00) and visual memory (r=0.44, p=0.01); the ImPACT visual memory score correlated with neuropsychological visual memory (r=0.59, p=0.00); and the ImPACT visual motor processing speed and reaction time scores correlated with neuropsychological working memory (r=0.39, p=0.00 and r=-0.31, p=0.02), neuropsychological processing speed (r=0.41, p=0.00 and r=-0.37, p=0.01), and neuropsychological reaction time (r=0.34, p=0.00 and r=-0.39, p=0.00). It must be noted that the neuropsychological domain scores for motor, attention, and impulse control were not correlated with any ImPACT composite scores. Overall, the results suggest that the cognitive domains represented by ImPACT have good construct validity with standard neuropsychological tests that are sensitive to cognitive functions associated with mTBI.

Allen and Gfeller (2011) also found good concurrent validity between ImPACT scores and a battery of paper-and-pencil neuropsychological tests. Specifically, 100 college students completed the traditional paper-and-pencil test battery used by the National Football League and the ImPACT test in a counterbalanced order. Five factors explained 69 percent of the variance in the ImPACT test battery, and the authors suggested that ImPACT has good concurrent validity.

Although ImPACT has been reported to have good sensitivity, specificity, and construct validity, its test-retest reliability has been shown to be somewhat inconsistent. Iverson and colleagues (2003) examined the test-retest reliability over a 7-day time span using a sample of 56 non-concussed adolescents and young adults (29 males and 27 females with an average age of 17.6 years). The Pearson test-retest correlation coefficients and probable ranges of measurement error for the composite scores were: verbal memory=0.70 (6.83 pts), visual memory=0.67 (10.59 pts), reaction time=0.79 (0.05 sec), processing speed=0.89 (3.89 pts), and post-concussion scale=0.65 (7.17 pts). There was a significant difference between the first test and the 7-day retest on the processing speed composite (p < 0.003), with 68 percent of the sample performing better on the 7-day retest than at the first test session.

In the 2007 study by Broglio and colleagues, the ImPACT intraclass correlations ranged from 0.23 to 0.39 (baseline to day 45) and 0.39 to 0.61 (day 45 to day 50) (Broglio et al., 2007). The correlations for each composite score were: verbal memory (0.23 for baseline to day 45, and 0.40 for day 45 to day 50), visual memory (0.32 and 0.39, respectively), motor processing speed (0.38 and 0.61, respectively), and reaction time (0.39 and 0.51, respectively). However, as noted above, the successive administration of three test batteries in one session is a methodological limitation of this study that may have contributed to the low intraclass correlation values.

Miller and colleagues (2007) conducted a test-retest study over a longer time period (4 months) with in-season athletes. The researchers administered a series of ImPACT tests to 58 non-concussed Division III football players during pre-season (before the first full-pads practice), at midseason (6 weeks into the season), and during post-season (within 2 weeks of the last game). The results indicated no significant differences in verbal memory (p=0.06) or in processing speed (p=0.05) over the three testing occasions. However, the scores for visual memory (p=0.04) and reaction time (p=0.04) showed significant improvement as the season progressed. Even though these differences were statistically significant at the p level of 0.05, when an 80 percent confidence interval was used, the ImPACT results could be interpreted as stable over a 4-month time period in football players. The test-retest reliability of ImPACT has also been examined over even longer time periods.

Elbin and colleagues (2011) investigated the 1-year test-retest reliability of the online version of ImPACT using baseline data from 369 high school varsity athletes. The researchers administered the two ImPACT tests approximately 1 year apart, as required by the participants’ respective athletic programs. Results indicated that motor processing speed was the most stable composite score with an intraclass correlation of 0.85, followed by reaction time (0.76), visual memory (0.70), verbal memory (0.62), and PCSS (0.57).

The test-retest study of ImPACT with the longest elapsed time was conducted by Schatz (2010), who tested 95 college athletes over a 2-year interval. Motor processing speed was the most stable composite score over those 2 years with an intraclass correlation of 0.74, followed by reaction time (0.68), visual memory (0.65), and verbal memory (0.46). Even though the correlation for verbal memory did not reach the “acceptable” threshold (0.60), with the use of regression-based methods none of the participants’ scores showed significant change. Furthermore, reliable change indices revealed that only a small percentage of participants (0 to 3 percent) showed significant change. This study suggests that college athletes’ cognitive performance remains stable over a 2-year time period. In addition, the ImPACT test battery has been shown to have good psychometric properties.
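A reliable change index expresses an individual's retest-minus-baseline difference in units of the standard error of that difference. The Python sketch below uses one common formulation (standard error of measurement derived from the baseline standard deviation and the test-retest reliability); whether Schatz (2010) used exactly this formulation or this cutoff is an assumption. The reliability of 0.46 is the verbal memory value reported above, while the scores and the standard deviation of 10 points are hypothetical.

```python
import math


def reliable_change_index(baseline, retest, sd_baseline, reliability):
    """Reliable change index under one common formulation (an assumption here).

    SEM     = SD * sqrt(1 - r)   standard error of measurement
    SE_diff = sqrt(2) * SEM      standard error of the difference score
    RCI     = (retest - baseline) / SE_diff
    """
    sem = sd_baseline * math.sqrt(1.0 - reliability)
    se_diff = math.sqrt(2.0) * sem
    return (retest - baseline) / se_diff


def is_reliable_change(rci, z_crit=1.96):
    """Flag a change as reliable at roughly the 95 percent level (assumed cutoff)."""
    return abs(rci) > z_crit


# Hypothetical athlete: verbal memory composite of 85 at baseline and 80 at retest,
# with an assumed baseline SD of 10 points and the reported reliability of 0.46.
rci = reliable_change_index(baseline=85, retest=80, sd_baseline=10, reliability=0.46)
print(f"RCI = {rci:.2f}, reliable change: {is_reliable_change(rci)}")
```

Under these assumed values, a lower reliability widens the standard error of the difference, so a 5-point drop is not flagged as a reliable change.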

In a small sample of non-athlete college students (n=30), Schatz and Putz (2006) administered three computerized batteries along with select paper-and-pencil tests, counterbalanced over three 40-minute testing sessions. The results showed shared correlations between all the computer-based tests in the domain of processing speed, and between select tests in the domains of simple and choice reaction time. Little shared variance was seen in the domain of memory, although external criterion measures were lacking in this area. Of the test measures used, ImPACT shared the most consistent correlations with the other two computer-based measures as well as with all external criteria except for internal correlations in the domain of memory. However, the authors were clear about the limitations in sample size and the lack of a clinical population as well as the other limitations of the study, and they cautioned against considering this a complete evaluative study. The study does, however, provide some framework for understanding the concurrent validity of these tools.

Previous concurrent validity studies indicated good validity when compared to individual tests. The convergent construct validity of ImPACT was good compared to a full battery of neuropsychological tests (Maerlender et al., 2010). Using a factor analytic approach, Allen and Gfeller (2011) also found good concurrent validity between ImPACT scores and a battery of paper-and-pencil neuropsychological tests. However, there were differences in factor structure between the paper-and-pencil battery and the ImPACT battery, suggesting differences in “coverage” of neuropsychological constructs.

REFERENCES

Allen, B. J., and J. D. Gfeller. 2011. The Immediate Post-Concussion Assessment and Cognitive Testing battery and traditional neuropsychological measures: A construct and concurrent validity study. Brain Injury 25(2):179-191.

Ayr, L. K., K. O. Yeates, H. G. Taylor, and M. Browne. 2009. Dimensions of postconcussive symptoms in children with mild traumatic brain injuries. Journal of the International Neuropsychological Society 15(1):19-30.

Barr, W. B. 2003. Neuropsychological testing of high school athletes: Preliminary norms and test-retest indices. Archives of Clinical Neuropsychology 18:91-101.

Barr, W. B., and M. McCrea. 2001. Sensitivity and specificity of standardized neurocognitive testing immediately following sports concussion. Journal of the International Neuropsychological Society 7(6):693-702.

Blake, T. A., K. A. Taylor, K. Y. Woollings, K. J. Schneider, J. Kang, W. H. Meeuwisse, and C. A. Emery. 2012. Sport Concussion Assessment Tool, Version 2, normative values and test-retest reliability in elite youth ice hockey. [Abstract.] Clinical Journal of Sport Medicine 22(3):307.

Bleiberg, J., R. L. Kane, D. L. Reeves, W. S. Garmoe, and E. Halpern. 2000. Factor analysis of computerized and traditional tests used in mild brain injury research. Clinical Neuropsychologist 14(3):287-294.

Broglio, S. P., M. S. Ferrara, S. N. Macciocchi, T. A. Baumgartner, and R. Elliott. 2007. Test-retest reliability of computerized concussion assessment programs. Journal of Athletic Training 42(4):509-514.

Broglio, S. P., M. S. Ferrara, K. Sopiarz, and M. S. Kelly. 2008. Reliable change of the Sensory Organization Test. Clinical Journal of Sport Medicine 18(2):148-154.

Bryan, C., and A. M. Hernandez. 2012. Magnitudes of decline on Automated Neuropsychological Assessment Metrics subtest scores relative to predeployment baseline performance among service members evaluated for traumatic brain injury in Iraq. Journal of Head Trauma Rehabilitation 27(1):45-54.

Cernich, A., D. Reeves, W. Y. Sun, and J. Bleiberg. 2007. Automated Neuropsychological Assessment Metrics sports medicine battery. Archives of Clinical Neuropsychology 22(1):S101-S114.

Coldren, R. L., M. P. Kelly, R. V. Parish, M. Dretsch, and M. L. Russell. 2010. Evaluation of the Military Acute Concussion Evaluation for use in combat operations more than 12 hours after injury. Military Medicine 175(7):477-481.

Coldren, R. L., M. L. Russell, R. V. Parish, M. Dretsch, and M. P. Kelly. 2012. The ANAM lacks utility as a diagnostic or screening tool for concussion more than 10 days following injury. Military Medicine 177(2):179-183.

Collie, A., P. Maruff, M. Makdissi, P. McCrory, M. McStephen, and D. Darby. 2003. CogSport: Reliability and correlation with conventional cognitive tests used in postconcussion medical evaluations. Clinical Journal of Sport Medicine 13(1):28-32.

Collins, M. W., S. H. Grindel, M. R. Lovell, D. E. Dede, D. J. Moser, B. R. Phalin, S. Nogle, M. Wasik, D. Cordry, K. M. Daugherty, S. F. Sears, G. Nicolette, P. Indelicato, and D. B. McKeag. 1999. Relationship between concussion and neuropsychological performance in college football players. JAMA 282(10):964-970.

Collins, M. W., G. L. Iverson, M. R. Lovell, D. B. McKeag, J. Norwig, and J. Maroon. 2003. On-field predictors of neuropsychological and symptom deficit following sports-related concussion. Clinical Journal of Sport Medicine 13(4):222-229.

Diver, T., G. Gioia, and S. Anderson. 2007. Discordance of symptom report across clinical and control groups with respect to parent and child. Journal of the International Neuropsychological Society 13(Suppl 1):63. [Poster presentation to the Annual Meeting of the International Neuropsychological Society, Portland, OR.]

DVBIC (Defense and Veterans Brain Injury Center). 2012. Military Acute Concussion Evaluation. https://www.jsomonline.org/TBI/MACE_Revised_2012.pdf (accessed August 23, 2013).

Dziemianowicz, M., M. P. Kirschen, B. A. Pukenas, E. Laudano, L. J. Balcer, and S. L. Galetta. 2012. Sports-related concussion testing. Current Neurology and Neuroscience Reports 12(5):547-559.

Echemendia, R. J., M. Putukian, R. S. Mackin, L. Julian, and N. Shoss. 2001. Neuropsychological test performance prior to and following sports-related mild traumatic brain injury. Clinical Journal of Sport Medicine 11(1):23-31.

Eckner, J. T., J. S. Kutcher, and J. K. Richardson. 2010. Pilot evaluation of a novel clinical test of reaction time in National Collegiate Athletic Association Division I football players. Journal of Athletic Training 45(4):327-332.

Eckner, J. T., J. S. Kutcher, and J. K. Richardson. 2011a. Between-seasons test-retest reliability of clinically measured reaction time in National Collegiate Athletic Association Division I athletes. Journal of Athletic Training 46(4):409-414.

Eckner, J. T., J. S. Kutcher, and J. K. Richardson. 2011b. Effect of concussion on clinically measured reaction time in nine NCAA Division I collegiate athletes: A preliminary study. PM&R 3(3):212-218.

Eckner, J. T., J. S. Kutcher, S. P. Broglio, and J. K. Richardson. 2013. Effect of sport-related concussion on clinically measured simple reaction time. British Journal of Sports Medicine. Published online first: January 11, doi:10.1136/bjsports-2012-091579.

Elbin, R. J., P. Schatz, and T. Covassin. 2011. One-year test-retest reliability of the online version of ImPACT in high school athletes. American Journal of Sports Medicine 39(11):2319-2324.

Eonta, S. E., W. Carr, J. J. McArdle, J. M. Kain, C. Tate, N. J. Wesensten, J. N. Norris, T. J. Balkin, and G. H. Kamimori. 2011. Automated Neuropsychological Assessment Metrics: Repeated assessment with two military samples. Aviation, Space, and Environmental Medicine 82(1):34-39.

Erlanger, D., E. Saliba, J. Barth, J. Almquist, W. Webright, and J. Freeman. 2001. Monitoring resolution of postconcussion symptoms in athletes: Preliminary results of a Web-based neuropsychological test protocol. Journal of Athletic Training 36(3):280-287.

Erlanger, D., D. Feldman, K. Kutner, T. Kaushik, H. Kroger, J. Festa, J. Barth, J. Freeman, and D. Broshek. 2003. Development and validation of a web-based neuropsychological test protocol for sports-related return-to-play decision-making. Archives of Clinical Neuropsychology 18(3):293-316.

Falleti, M. G., P. Maruff, A. Collie, and D. G. Darby. 2006. Practice effects associated with the repeated assessment of cognitive function using the CogState battery at 10-minute, one week and one month test-retest intervals. Journal of Clinical and Experimental Neuropsychology 28(7):1095-1112.

Fazio, V. C., M. R. Lovell, J. E. Pardini, and M. W. Collins. 2007. The relation between post concussion symptoms and neurocognitive performance in concussed athletes. NeuroRehabilitation 22:207-216.

Field, M., M. W. Collins, M. R. Lovell, and J. Maroon. 2003. Does age play a role in recovery from sports-related concussion? A comparison of high school and collegiate athletes. Journal of Pediatrics 142(5):546-553.

French, L., M. McCrea, and M. Baggett. 2008. The Military Acute Concussion Evaluation (MACE). Journal of Special Operations Medicine 8(1):68-77.

Gagnon, I., B. Swaine, D. Friedman, and R. Forget. 2005. Exploring children’s self-efficacy related to physical activity performance after a mild traumatic brain injury. Journal of Head Trauma Rehabilitation 20(5):436-449.

Galetta, K. M., J. Barrett, M. Allen, F. Madda, D. Delicata, A. T. Tennant, C. C. Branas, M. G. Maguire, L. V. Messner, S. Devick, S. L. Galetta, and L. J. Balcer. 2011a. The King-Devick test as a determinant of head trauma and concussion in boxers and MMA fighters. Neurology 76(17):1456-1462.

Galetta, K. M., L. E. Brandes, K. Maki, M. S. Dziemianowicz, E. Laudano, M. Allen, K. Lawler, B. Sennett, D. Wiebe, S. Devick, L. V. Messner, S. L. Galetta, and L. J. Balcer. 2011b. The King-Devick test and sports-related concussion: Study of a rapid visual screening tool in a collegiate cohort. Journal of the Neurological Sciences 309(1-2):34-39.

Galetta, M. S., K. M. Galetta, J. McCrossin, J. A. Wilson, S. Moster, S. L. Galetta, L. J. Balcer, G. W. Dorshimer, and C. L. Master. 2013. Saccades and memory: Baseline associations of the King-Devick and SCAT2 SAC tests in professional ice hockey players. Journal of the Neurological Sciences 328(1-2):28-31.

Gioia, G., and M. Collins. 2006. Acute Concussion Evaluation (ACE): Physician/Clinician Office Version. http://www.cdc.gov/concussion/headsup/pdf/ace-a.pdf (accessed August 23, 2013).

Gioia, G., M. Collins, and P. K. Isquith. 2008a. Improving identification and diagnosis of mild traumatic brain injury with evidence: Psychometric support for the Acute Concussion Evaluation. Journal of Head Trauma Rehabilitation 23(4):230-242.

Gioia, G., J. Janusz, P. Isquith, and D. Vincent. 2008b. Psychometric properties of the parent and teacher Post-Concussion Symptom Inventory (PCSI) for children and adolescents. [Abstract.] Journal of the International Neuropsychological Society 14(Suppl 1):204.

Gioia, G. A., J. C. Schneider, C. G. Vaughan, and P. K. Isquith. 2009. Which symptom assessments and approaches are uniquely appropriate for paediatric concussion? British Journal of Sports Medicine 43(Suppl 1):i13-i22.

Giza, C. C., J. S. Kutcher, S. Ashwal, J. Barth, T. S. D. Getchius, G. A. Gioia, G. S. Gronseth, K. Guskiewicz, S. Mandel, G. Manley, D. B. McKeag, D. J. Thurman, and R. Zafonte. 2013. Evidence-Based Guideline Update: Evaluation and Management of Concussion in Sports. Report of the Guideline Development Subcommittee of the American Academy of Neurology. American Academy of Neurology.

Guskiewicz, K. M. 2001. Postural stability assessment following concussion: One piece of the puzzle. Clinical Journal of Sport Medicine 11(3):182-189.

Guskiewicz, K. M., S. L. Bruce, R. C. Cantu, M. S. Ferrara, J. P. Kelly, M. McCrea, M. Putukian, and T. C. Valovich McLeod. 2004. National Athletic Trainers’ Association position statement: Management of sport-related concussion. Journal of Athletic Training 39(3):280-297.

Iverson, G. L., and M. Gaetz. 2004. Practical consideration for interpreting change following brain injury. In Traumatic Brain Injury in Sports: An International Neuropsychological Perspective, edited by M. R. Lovell, R. J. Echemendia, J. T. Barth, and M. W. Collins. Exton, PA: Swets & Zeitlinger. Pp. 323-356.

Iverson, G. L., M. R. Lovell, and M. W. Collins. 2003. Interpreting change in ImPACT following sport concussion. Clinical Neuropsychologist 17(4):460-467.

Janusz, J. A., M. D. Sady, and G. A. Gioia. 2012. Postconcussion symptom assessment. In Mild Traumatic Brain Injury in Children and Adolescents: From Basic Science to Clinical Management, edited by M. W. Kirkwood and K. O. Yeates. New York: Guilford Press. Pp. 241-263.

Jinguji, T. M., V. Bompadre, K. G. Harmon, E. K. Satchell, K. Gilbert, J. Wild, and J. F. Eary. 2012. Sport Concussion Assessment Tool-2: Baseline values for high school athletes. British Journal of Sports Medicine 46(5):365-370.

Kennedy, C., E. J. Porter, S. Chee, J. Moore, J. Barth, and K. Stuessi. 2012. Return to combat duty after concussive blast injury. Archives of Clinical Neuropsychology 27(8):817-827.

King, D., T. Clark, and C. Gissane. 2012. Use of a rapid visual screening tool for the assessment of concussion in amateur rugby league: A pilot study. Journal of the Neurological Sciences 320(1-2):16-21.

King, D., M. Brughelli, P. Hume, and C. Gissane. 2013. Concussions in amateur rugby union identified with the use of a rapid visual screening tool. Journal of the Neurological Sciences 326(1):59-63.

King-Devick. 2013. King-Devick Test. http://kingdevicktest.com (accessed August 23, 2013).

Kontos, A. P., R. J. Elbin, P. Schatz, T. Covassin, L. Henry, J. Pardini, and M. W. Collins. 2012. A revised factor structure for the Post-Concussion Symptom Scale: Baseline and postconcussion factors. American Journal of Sports Medicine 40(10):2375-2384.

Levinson, D. M., and D. L. Reeves. 1997. Monitoring recovery from traumatic brain injury using Automated Neuropsychological Assessment Metrics (ANAM V1.0). Archives of Clinical Neuropsychology 12(2):155-166.

Lovell, M. R., and M. W. Collins. 1998. Neuropsychological assessment of the college football player. Journal of Head Trauma Rehabilitation 13(2):9-26.

Lovell, M. R., G. L. Iverson, M. W. Collins, K. Podell, K. M. Johnston, D. Pardini, J. Pardini, J. Norwig, and J. C. Maroon. 2006. Measurement of symptoms following sports-related concussion: Reliability and normative data for the Post-Concussion Scale. Applied Neuropsychology 13(3):166-174.

Maddocks, D. L., G. D. Dicker, and M. M. Saling. 1995. The assessment of orientation following concussion in athletes. Clinical Journal of Sport Medicine 5(1):32-33.

Maerlender, A., L. Flashman, A. Kessler, S. Kumbhani, R. Greenwald, T. Tosteson, and T. McAllister. 2010. Examination of the construct validity of ImPACT™ computerized test, traditional, and experimental neuropsychological measures. Clinical Neuropsychologist 24(8):1309-1325.

Makdissi, M., D. Darby, P. Maruff, A. Ugoni, P. Brukner, and P. R. McCrory. 2010. Natural history of concussion in sport: Markers of severity and implications for management. American Journal of Sports Medicine 38(3):464-471.

McCaffrey, M. A., J. P. Mihalik, D. H. Crowell, E. W. Shields, and K. M. Guskiewicz. 2007. Measurement of head impacts in collegiate football players: Clinical measures of concussion after high- and low-magnitude impacts. Neurosurgery 61(6):1236-1243.

McCrea, M. 2001. Standardized mental status testing on the sideline after sport-related concussion. Journal of Athletic Training 36(3):274-279.

McCrea, M., J. Kelly, and C. Randolph. 1996. Standardized Assessment of Concussion (SAC): Manual for Administration, Scoring and Interpretation. Waukesha, WI: CNS Inc.

McCrea, M., J. P. Kelly, C. Randolph, J. Kluge, E. Bartolic, G. Finn, and B. Baxter. 1998. Standardized Assessment of Concussion (SAC): On-site mental status evaluation of the athlete. Journal of Head Trauma Rehabilitation 13(2):27-35.

McCrea, M., J. P. Kelly, and C. Randolph. 2000. Standardized Assessment of Concussion (SAC): Manual for Administration, Scoring and Interpretation, Second edition. Waukesha, WI: CNS Inc.

McCrea, M., K. M. Guskiewicz, S. W. Marshall, W. Barr, C. Randolph, R. C. Cantu, J. A. Onate, J. Yang, and J. P. Kelly. 2003. Acute effects and recovery time following concussion in collegiate football players: The NCAA Concussion Study. JAMA 290(19):2556-2563.

McCrory, P., W. Meeuwisse, K. Johnston, J. Dvořák, M. Aubry, M. Molloy, and R. Cantu. 2009. Consensus statement on concussion in sport: The 3rd International Conference on Concussion in Sport held in Zurich, November 2008. British Journal of Sports Medicine 43(Suppl 1):i76-i84.

McCrory, P., W. H. Meeuwisse, M. Aubry, B. Cantu, J. Dvořák, R. J. Echemendia, L. Engebretsen, K. Johnston, J. S. Kutcher, M. Raftery, A. Sills, B. W. Benson, G. A. Davis, R. G. Ellenbogen, K. Guskiewicz, S. A. Herring, G. L. Iverson, B. D. Jordan, J. Kissick, M. McCrea, A. S. McIntosh, D. Maddocks, M. Makdissi, L. Purcell, M. Putukian, K. Schneider, C. H. Tator, and M. Turner. 2013a. Child-SCAT3. British Journal of Sports Medicine 47(5):263-266.

McCrory, P., W. H. Meeuwisse, M. Aubry, B. Cantu, J. Dvořák, R. J. Echemendia, L. Engebretsen, K. Johnston, J. S. Kutcher, M. Raftery, A. Sills, B. W. Benson, G. A. Davis, R. G. Ellenbogen, K. Guskiewicz, S. A. Herring, G. L. Iverson, B. D. Jordan, J. Kissick, M. McCrea, A. S. McIntosh, D. Maddocks, M. Makdissi, L. Purcell, M. Putukian, K. Schneider, C. H. Tator, and M. Turner. 2013b. Consensus statement on concussion in sport: The 4th International Conference on Concussion in Sport held in Zurich, November 2012. British Journal of Sports Medicine 47(5):250-258.

McCrory, P., W. H. Meeuwisse, M. Aubry, B. Cantu, J. Dvořák, R. J. Echemendia, L. Engebretsen, K. Johnston, J. S. Kutcher, M. Raftery, A. Sills, B. W. Benson, G. A. Davis, R. G. Ellenbogen, K. Guskiewicz, S. A. Herring, G. L. Iverson, B. D. Jordan, J. Kissick, M. McCrea, A. S. McIntosh, D. Maddocks, M. Makdissi, L. Purcell, M. Putukian, K. Schneider, C. H. Tator, and M. Turner. 2013c. SCAT3. British Journal of Sports Medicine 47(5):259-262.

Miller, J. R., G. J. Adamson, M. M. Pink, and J. C. Sweet. 2007. Comparison of preseason, midseason, and postseason neurocognitive scores in uninjured collegiate football players. American Journal of Sports Medicine 35(8):1284-1288.

NeuroCom. 2013. Sensory Organization Test. http://www.resourcesonbalance.com/neurocom/protocols/sensoryImpairment/SOT.aspx (accessed August 23, 2013).

Pardini, J. E., D. A. Pardini, J. T. Becker, K. L. Dunfee, W. F. Eddy, M. R. Lovell, and J. S. Welling. 2010. Postconcussive symptoms are associated with compensatory cortical recruitment during a working memory task. Neurosurgery 67(4):1020-1027; discussion 1027-1028.

Piland, S. G., R. W. Motl, K. M. Guskiewicz, M. McCrea, and M. S. Ferrara. 2006. Structural validity of a self-report concussion-related symptom scale. Medicine and Science in Sports and Exercise 38(1):27-32.

Randolph, C., S. Millis, W. B. Barr, M. McCrea, K. M. Guskiewicz, T. A. Hammeke, and J. P. Kelly. 2009. Concussion Symptom Inventory: An empirically-derived scale for monitoring resolution of symptoms following sports-related concussion. Archives of Clinical Neuropsychology 24(3):219-229.

Reeves, D. L., K. P. Winter, J. Bleiberg, and R. L. Kane. 2007. ANAM® genogram: Historical perspectives, description, and current endeavors. Archives of Clinical Neuropsychology 22(Suppl 1):S15-S37.

Register-Mihalik, J., K. M. Guskiewicz, J. D. Mann, and E. W. Shields. 2007. The effects of headache on clinical measures of neurocognitive function. Clinical Journal of Sport Medicine 17(4):282-288.

Riemann, B. L., K. M. Guskiewicz, and E. W. Shields. 1999. Relationship between clinical and forceplate measures of postural stability. Journal of Sport Rehabilitation 8(2):71-82.

Schatz, P. 2010. Long-term test-retest reliability of baseline cognitive assessments using ImPACT. American Journal of Sports Medicine 38(1):47-53.

Schatz, P., and B. O. Putz. 2006. Cross-validation of measures used for computer-based assessment of concussion. Applied Neuropsychology 13(3):151-159.

Schatz, P., J. Pardini, M. R. Lovell, M. W. Collins, and K. Podell. 2006. Sensitivity and specificity of the ImPACT Test Battery for concussion in athletes. Archives of Clinical Neuropsychology 21(1):91-99.

Schneider, J., and G. Gioia. 2007. Psychometric properties of the Post-Concussion Symptom Inventory (PCSI) in school age children. [Abstract.] Developmental Neurorehabilitation 10(4):282.

Schneider, K. J., C. A. Emery, J. Kang, G. M. Schneider, and W. H. Meeuwisse. 2010. Examining Sport Concussion Assessment Tool ratings for male and female youth hockey players with and without a history of concussion. British Journal of Sports Medicine 44(15):1112-1117.

Segalowitz, S., P. Mahaney, D. Santesso, L. MacGregor, J. Dywan, and B. Willer. 2007. Retest reliability in adolescents of a computerized neuropsychological battery used to assess recovery from concussion. NeuroRehabilitation 22(3):243-251.

Teasdale, G., and B. Jennett. 1974. Assessment of coma and impaired consciousness: A practical scale. Lancet 2(7872):81-84.

Valovich McLeod, T. C., W. B. Barr, M. McCrea, and K. M. Guskiewicz. 2006. Psychometric and measurement properties of concussion assessment tools in youth sports. Journal of Athletic Training 41(4):399-408.

Valovich McLeod, T. C., R. C. Bay, K. C. Lam, and A. Chhabra. 2012. Representative baseline values on the Sport Concussion Assessment Tool 2 (SCAT2) in adolescent athletes vary by gender, grade, and concussion history. American Journal of Sports Medicine 40(4):927-933.

Vaughan, C., G. A. Gioia, and D. Vincent. 2008. Initial examination of self-reported postconcussion symptoms in normal and mTBI children ages 5 to 12. [Abstract.] Journal of the International Neuropsychological Society 14(Suppl 1):207.

Vincent, A. S., T. Roebuck-Spencer, K. Gilliland, and R. Schlegel. 2012. Automated Neuropsychological Assessment Metrics (v4) Traumatic Brain Injury Battery: Military normative data. Military Medicine 177(3):256-269.

Wilde, E. A., S. R. McCauley, J. V. Hunter, E. D. Bigler, Z. Chu, Z. J. Wang, G. R. Hanten, M. Troyanskaya, R. Yallampalli, X. Li, J. Chia, and H. S. Levin. 2008. Diffusion tensor imaging of acute mild traumatic brain injury in adolescents. Neurology 70(12):948-955.

Yeates, K. O., E. Kaizar, J. Rusin, B. Bangert, A. Dietrich, K. Nuss, M. Wright, and H. G. Taylor. 2012. Reliable change in postconcussive symptoms and its functional consequences among children with mild traumatic brain injury. Archives of Pediatrics and Adolescent Medicine 166(7):615-622.