In Chapter 4, we focused on assessments that are used as a part of classroom instructional activities. In this chapter we turn to assessments that are distinct from classroom instruction and used to monitor or audit student learning over time. We refer to them as “monitoring assessments” or “external assessments.”1 They can be used to answer a range of important questions about student learning, such as: How much have the students in a certain school or school system learned over the course of a year? How does achievement in one school system compare with achievement in another? Is one instructional technique or curricular program more effective than another? What are the effects of a particular policy measure, such as reduction in class size? Table 5-1 shows examples of the variety of questions that monitoring assessments may be designed to answer at different levels of the education system.

The tasks used in assessments designed for monitoring purposes need to have the same basic characteristics as those for classroom assessments (discussed in Chapter 4) in order to align with the Next Generation Science Standards (NGSS): they will need to address the progressive nature of learning, include multiple components that reflect three-dimensional science learning, and include an interpretive system for the evaluation of a range of student products. In addition, assessments for monitoring need to be designed so that they can be given to large numbers of students, are sufficiently standardized to support the intended monitoring purpose (which may involve high-stakes decisions about students, teachers, or schools), cover an appropriate breadth of the NGSS, and are cost-effective.

___________

1External assessments (sometimes referred to as large-scale assessments) are designed or selected outside of the classroom, such as by districts, states, countries, or international bodies, and are typically used to audit or monitor learning.

TABLE 5-1 Questions Answered by Monitoring Assessments

| Types of Inferences | Individual Students | Schools or Districts | Policy Monitoring | Program Evaluation |
|---|---|---|---|---|
| Criterion-referenced | Have individual students demonstrated adequate performance in science? | Have schools demonstrated adequate performance in science this year? | How many students in state X have demonstrated proficiency in science? | Has program X increased the proportion of students who are proficient? |
| Longitudinal and comparative across time | Have individual students demonstrated growth across years in science? | Has the mean performance for the district grown across years? How does this year’s performance compare to last year’s? | How does this year’s performance compare to last year’s? | Have students in program X increased in proficiency across several years? |
| Comparative across groups | How does this student compare to others in the school/state? | How does school/district X compare to school/district Y? | How many students in different states have demonstrated proficiency in science? | Is program X more effective in certain subgroups? |

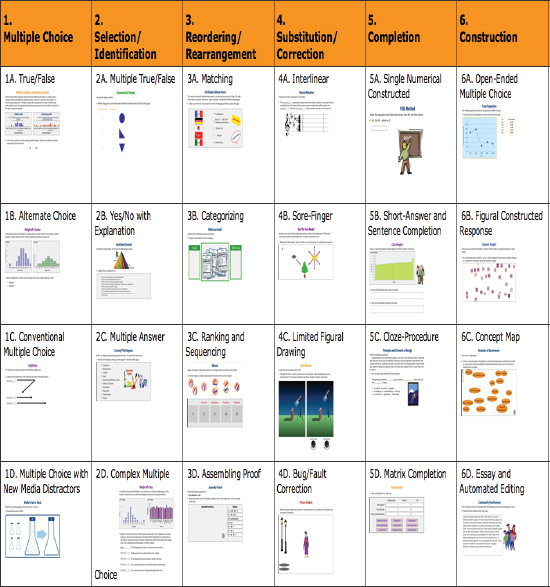

The measurement field has considerable experience in developing assessments that meet some of the monitoring functions shown in Table 5-1. In science, such assessments are typically composed predominantly of multiple-choice and short-answer, constructed-response questions. However, the sorts of items likely to be useful for adequately measuring the NGSS performance expectations—extended constructed-response questions and performance tasks—have historically posed challenges when used in assessment programs intended for system monitoring.

In this chapter we explore strategies for developing assessments of the NGSS that can be used for monitoring purposes. We begin with a brief look at currently used assessments, considering them in light of the NGSS. We next discuss the challenges of using performance tasks in assessments intended for administration on a large scale, such as a district, a state, or the national level, and we revisit the lessons learned from other attempts to do so. We then offer suggestions for approaches to using these types of tasks to provide monitoring data that are aligned with the NGSS, and we highlight examples of tasks and situations that can be used to provide appropriate forms of evidence, as well as some of the ways in which advances in measurement technology can support this work.

CURRENT SCIENCE MONITORING ASSESSMENTS

In the United States, the data currently used to answer monitoring-related questions about science learning are predominantly obtained through assessments that use two types of test administration (or data collection) strategies. The first is a fixed-form test, in which, on a given testing occasion, all students take the same form2 of the test. The science assessments used by states to comply with the No Child Left Behind Act (NCLB) are examples of this test administration strategy: each public school student at the tested grade level in a given state takes the full test. According to NCLB requirements, these tests are given to all students in the state at least once in each of three grade spans (K-5, 6-8, 9-12). Fixed-form tests of all students (census tests) are designed to yield individual-level scores, which are used to address the questions about student-level performance shown in the first column of Table 5-1 (above). The scores are also aggregated as needed to provide information for the monitoring questions about school-, district-, and state-level performance shown in the three right-hand columns.

The second type of test administration strategy makes use of matrix sampling, which is used when the primary interest is group- or population-level estimates (e.g., for schools, districts, states, or the nation), rather than individual-level estimates. No individual student takes the full set of items and tasks. Instead, each of the tasks is completed by a sample of students that is sufficiently representative to yield valid and reliable scores for schools, states, or the nation. This method makes it possible to gather data on a larger and more representative collection of items or tasks for a given topic than any one student could be expected to complete in the time allocated for testing. In some applications, all students from a school or district are tested (with different parts of the whole test). In other applications, only some students are sampled for testing, but in sufficient number and representativeness that the results will provide an accurate estimate of how the entire school or district would perform.
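The core bookkeeping of matrix sampling can be illustrated with a small sketch. It assumes hypothetical item blocks that are rotated ("spiraled") across students so that each student takes only one block, with items scored 0/1. A group-level mean for every block is then estimated from only the students who took that block. The block labels, the rotation rule, and the scores are invented for illustration and do not describe NAEP or any other operational program.

```python
from collections import defaultdict
from statistics import mean


def assign_blocks(student_ids, blocks):
    """Rotate item blocks across students so each student takes exactly one block."""
    return {sid: blocks[i % len(blocks)] for i, sid in enumerate(student_ids)}


def block_means(assignment, scores):
    """Estimate the group mean on each block from the students who took it.

    scores maps student id -> list of 0/1 item scores on that student's block.
    No student sees every block, so no full individual score is produced.
    """
    by_block = defaultdict(list)
    for sid, block in assignment.items():
        by_block[block].append(mean(scores[sid]))
    return {block: mean(vals) for block, vals in by_block.items()}


# Hypothetical data: 6 students, 3 blocks, so each block is taken by 2 students.
students = ["s1", "s2", "s3", "s4", "s5", "s6"]
assignment = assign_blocks(students, ["A", "B", "C"])
scores = {
    "s1": [1, 1, 0], "s2": [1, 0, 0], "s3": [0, 0, 1],
    "s4": [1, 1, 1], "s5": [1, 0, 1], "s6": [0, 1, 1],
}
print(assignment)
print(block_means(assignment, scores))
```

The group as a whole answers every block, which is what permits broad content coverage, but each student answers only a third of the items, which is why this design does not yield individual scores.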

___________

2A test form is a set of assessment questions typically given to one or more students as part of an assessment administration.

The best-known example in the United States of an assessment that makes use of a matrix-sampling approach is the National Assessment of Educational Progress (NAEP), known as the “Nation’s Report Card.” NAEP is given to representative samples of 4th, 8th, and 12th graders, with the academic subjects and grade levels that are assessed varying from year to year.3 The assessment uses matrix sampling of items to cover the full spectrum of each content framework (e.g., the NAEP science framework) in the allotted administration time. The matrix-sampling approach used by NAEP allows reporting of group-level scores (including demographic subgroups) for the nation, individual states, and a few large urban districts, but the design does not support reporting of individual-level or school-level scores. Thus, NAEP can provide data to answer some of the monitoring questions listed in Table 5-1, but not the questions in the first or fourth columns. Matrix-sampling approaches have not generally been possible in the context of state testing in the last decade because of the requirements of NCLB for individual student reporting. When individual student results are not required, matrix sampling is a powerful and relatively straightforward option.

These two types of administration strategies for external assessments can be combined to answer different monitoring questions about student learning. When the questions require scores for individuals, generally all students are tested with fixed or comparable test forms. But when group-level scores will suffice, a matrix-sampling approach can be used. Both approaches can be combined in a single test: for example, a test could include both a fixed-form component for estimating individual performance and a matrix-sampled component that is used to estimate a fuller range of performance at the school level. This design was used by several states prior to the implementation of NCLB, including Massachusetts, Maine, and Wyoming (see descriptions in National Research Council, 2010; Hamilton et al., 2002). In such hybrid designs, the fixed or common portion of the test is substantial enough to support individual estimates, while each student also takes one of multiple matrix forms to ensure broad coverage at the school level.
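A hybrid design of this kind can be sketched as a set of test forms that share one common block and differ in a rotating matrix block. In the hypothetical sketch below, the block labels and the rotation rule are assumptions for illustration: individual estimates would rest on the common block that every student takes, while the matrix blocks spread additional content across students for school-level summaries.

```python
from dataclasses import dataclass


@dataclass
class Form:
    common: str   # block taken by every student (supports individual estimates)
    matrix: str   # rotating block (broadens coverage at the school level)


def build_forms(common_block, matrix_blocks):
    """Build one form per matrix block; every form shares the same common block."""
    return [Form(common_block, m) for m in matrix_blocks]


def assign_forms(student_ids, forms):
    """Rotate forms across students so each matrix block is taken equally often."""
    return {sid: forms[i % len(forms)] for i, sid in enumerate(student_ids)}


forms = build_forms("COMMON", ["M1", "M2", "M3", "M4"])
assignment = assign_forms([f"s{i}" for i in range(8)], forms)

# Count how many students take each matrix block (8 students / 4 blocks = 2 each).
counts = {}
for form in assignment.values():
    counts[form.matrix] = counts.get(form.matrix, 0) + 1
print(counts)
```

The design choice is a tradeoff: the larger the common block, the better the individual estimates; the more matrix blocks, the broader the school-level coverage within a fixed testing time.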

The science tests that are currently used for monitoring purposes are not suitable to evaluate progress in meeting the performance expectations in the NGSS, for two reasons. First, the NGSS have only recently been published, so the current tests are not aligned with them in terms of content and the focus on practices. Second, the current monitoring tests do not use the types of tasks that will be needed to assess three-dimensional science learning. As we discuss in Chapters 3 and 4, assessing three-dimensional science learning will require examining the way students perform scientific and engineering practices and apply crosscutting concepts while they are engaged with disciplinary core ideas.

___________

3The schedule for NAEP test administrations is available at http://www.nagb.org/naep/assessment-schedule.html [November 2013].

Currently, some state science assessments include the types of questions that could be used for assessing three-dimensional learning (e.g., questions that make use of technology to present simulations or those that require extended constructed responses), but most rely predominantly on multiple-choice questions that are not designed to do so. In most cases, the items assess either factual knowledge rather than the application of core ideas, or aspects of inquiry that are largely decoupled from core ideas. They do not use the types of multicomponent tasks that examine students’ performance of scientific and engineering practices in the context of disciplinary core ideas and crosscutting concepts, nor do they use tasks that reflect the connected use of different scientific practices in the context of interconnected disciplinary ideas and crosscutting concepts. Similarly, NAEP’s science assessment uses some constructed-response questions, but these also are not designed to measure three-dimensional science learning. In 2009, NAEP administered a new type of science assessment that made use of interactive computer and hands-on tasks. These task formats are closer to what is required for measuring the NGSS performance expectations (see discussion below), but they are not yet aligned with the NGSS. Consequently, current external assessments cannot readily be used for monitoring students’ progress in meeting the NGSS performance expectations.

We note, however, that NAEP is not a static assessment program. It periodically undertakes major revisions to the framework used to guide the processes of assessment design and task development. NAEP is also increasingly incorporating technology as a key aspect of task design and assessment of student performance. The next revision of the NAEP science framework may bring it into closer alignment with the framework and the NGSS. Thus, the NAEP science assessment might ultimately constitute an effective way to monitor the overall progress of science teaching and learning in America’s classrooms in ways consistent with implementation of the framework and the NGSS.

INCLUDING PERFORMANCE TASKS IN MONITORING ASSESSMENTS

Implementation of the NGSS provides an opportunity to expand the ways in which science assessment is designed and implemented in the United States and the ways in which data are collected to address the monitoring questions shown in Table 5-1. We see two primary challenges to taking advantage of this opportunity. One is to design assessment tasks so that they measure the NGSS performance expectations. The other is to determine strategies for assembling these tasks into assessments that can be administered in ways that produce scores that are valid, reliable, and fair and meet the particular technical measurement requirements necessary to support an intended monitoring purpose.

Measurement and Implementation Issues

In Chapter 3, we note that the selection and development of assessment tasks should be guided by the constructs to be assessed and the best ways of eliciting evidence about a student’s proficiency relative to that construct. The NGSS performance expectations emphasize the importance of providing students the opportunity to demonstrate their proficiencies in both science content and practices. Ideally, evidence of those proficiencies would be based on observations of students actually engaging in scientific and engineering practices relative to disciplinary core ideas. In the measurement field, these types of assessment tasks are typically performance based and include questions that require students to construct or supply an answer, produce a product, or perform an activity. Most of the tasks we discuss in Chapters 2, 3, and 4 are examples of performance tasks.

Performance tasks can be and have been designed to work well in a classroom setting to help guide instructional decision making. For several reasons, they have been less frequently used in the context of monitoring assessments administered on a large scale.

First, monitoring assessments are typically designed to cover a much broader domain than tests used in classroom settings. When the goal is to assess an entire year or more of student learning, it is difficult to obtain a broad enough sampling of an individual student’s achievement using performance tasks: because each performance task takes considerable time to complete, fewer tasks can be administered in the available testing time, and with fewer tasks, there is less opportunity to fully represent the domain of interest.

Second, the reliability, or generalizability, of the resulting scores can be problematic. Generalizability refers to the extent to which a student’s test scores reflect a stable or consistent construct rather than error, and therefore support a valid inference about the student’s proficiency with respect to the domain being tested. Obtaining reliable individual scores requires that students each take multiple performance tasks, but administering enough tasks to obtain the desired reliability often creates feasibility problems in terms of the cost and time for testing. Careful task and test design (described below) can help address this issue.
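Generalizability theory makes the link between the number of tasks and score reliability concrete. For the simplest design, in which every student completes the same n_t tasks (a persons × tasks design), the relative generalizability coefficient is σ²_p / (σ²_p + σ²_pt,e / n_t), where σ²_p is the variance of true differences between students and σ²_pt,e is the student-by-task interaction plus residual error. The sketch below uses made-up variance components, chosen only so that task-specific variation is large relative to true differences between students; they are not estimates from any study cited in this chapter.

```python
def g_coefficient(var_person, var_residual, n_tasks):
    """Relative G coefficient for a persons x tasks design.

    var_person   : variance of students' universe (true) scores
    var_residual : person-by-task interaction plus residual error variance
    n_tasks      : number of tasks each student completes
    """
    return var_person / (var_person + var_residual / n_tasks)


# Hypothetical variance components: task-specific variation is twice as
# large as true differences between students.
VAR_P, VAR_PT_E = 1.0, 2.0
for n in (1, 2, 4, 8, 18):
    print(n, round(g_coefficient(VAR_P, VAR_PT_E, n), 3))
```

Under these assumed components, a single task yields a coefficient of about 0.33, eight tasks are needed to reach 0.80, and eighteen to reach 0.90. Averaging over more tasks shrinks only the task-specific term, which is why reliable individual scores from performance tasks alone are costly.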

Third, some of the monitoring purposes shown in Table 5-1 (in the second row) require comparisons across time. When the goal is to examine performance across time, the assessment conditions and tasks need to be comparable across the two testing occasions. If the goal is to compare the performance of this year’s students with that of last year’s students, the two groups of students should be required to respond to the same set of tasks or a different but equivalent set of tasks (equivalent in terms of difficulty and content coverage). This requirement presents a challenge for assessments using performance tasks, since such tasks generally cannot be reused because they are based on situations that are often highly memorable.4 And, once they are given, they are usually treated as publicly available.5 Another option for comparison across time is to give a second group of students a different set of tasks and use statistical equating methods to adjust for differences in the difficulty of the tasks so that the scores can be placed on the same scale.6 However, most equating designs rely on the reuse of some tasks or items, and the problem of equating assessments that rely solely on performance tasks has not yet been solved. Some assessment programs that include both performance tasks and other sorts of items use the items that are not performance based to equate different test forms, but this approach is not ideal: the two types of tasks may actually measure somewhat different constructs, so studies are needed to explore when such equating would likely yield accurate results.
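To illustrate what placing scores “on the same scale” means, the sketch below applies linear equating under a random-groups design, one of the simplest textbook methods. If two randomly equivalent groups take forms X and Y, a score x on form X is mapped to the form-Y score with the same standardized position: μ_Y + (σ_Y / σ_X)(x − μ_X). The score lists are invented, and operational programs use more elaborate methods (see the references in footnote 6); this is only a sketch of the basic idea.

```python
from statistics import mean, pstdev


def linear_equate(x, scores_x, scores_y):
    """Map raw score x on form X onto the form-Y scale (random-groups design).

    Assumes the two groups are randomly equivalent, so differences between
    their score distributions are attributed to differences between forms.
    """
    mu_x, sd_x = mean(scores_x), pstdev(scores_x)
    mu_y, sd_y = mean(scores_y), pstdev(scores_y)
    return mu_y + (sd_y / sd_x) * (x - mu_x)


# Invented data: form X appears harder than form Y (lower mean, same spread).
form_x = [4, 6, 8, 10, 12]
form_y = [6, 8, 10, 12, 14]
print(linear_equate(8, form_x, form_y))    # 10.0
print(linear_equate(12, form_x, form_y))   # 14.0
```

Because the two invented distributions differ only in their means, equating here is a two-point shift; if the forms also spread scores differently, the slope σ_Y / σ_X would differ from 1. The method depends entirely on the groups being equivalent, which is one reason designs that reuse tasks are generally preferred.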

Fourth, scoring performance tasks is a challenge. As we discuss in Chapter 3, performance tasks are typically scored using a rubric that lays out criteria for assigning scores. The rubric describes the features of students’ responses required for each score and usually includes examples of student work at each scoring level. Most performance tasks are currently scored by humans who are trained to apply the criteria. Although computer-based scoring algorithms are increasingly in use, they are not generally used for content-based tasks (see, e.g., Bennett and Bejar, 1998; Braun et al., 2006; Nehm and Härtig, 2011; Williamson et al., 2006, 2012). When humans do the scoring, their variability in applying the criteria introduces judgment uncertainty. Using multiple scorers for each response reduces this uncertainty, but it adds to the time and cost required for scoring.

___________

4That is, test takers may talk about them after the test is completed and share them with each other and their teachers. This exposes the questions and allows other students to practice for them or similar tasks, potentially in ways that affect the ability of the task to measure the intended construct.

5For similar reasons, it can be difficult to field test these kinds of items.

6For a full discussion of equating methods, which is beyond the scope of this report, see Kolen and Brennan (2004) or Holland and Dorans (2006).
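One common way of using multiple scorers can be sketched as follows: two raters score each response independently; if their ratings agree or differ by no more than a set tolerance, the two are averaged; otherwise the response is routed to a third, adjudicating rater. The one-point tolerance and the rule of reporting the adjudicator’s score are assumptions for illustration, since programs set their own resolution rules.

```python
def resolve(r1, r2, tolerance=1, adjudicator=None):
    """Combine two independent rubric scores for one response.

    - If the ratings differ by no more than `tolerance`, report their mean.
    - Otherwise a third (adjudicating) rating is required: report it if it
      is supplied, else return None to flag the response for adjudication.
    """
    if abs(r1 - r2) <= tolerance:
        return (r1 + r2) / 2
    return adjudicator


print(resolve(3, 3))                 # 3.0
print(resolve(2, 3))                 # 2.5
print(resolve(1, 4))                 # None (flag for adjudication)
print(resolve(1, 4, adjudicator=3))  # 3
```

Every second rating, and every adjudication triggered by a discrepancy, is an added scoring cost; the share of flagged responses is one practical indicator of how much judgment uncertainty a rubric leaves.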

This particular form of uncertainty does not affect multiple-choice items, but they are subject to uncertainty because of guessing, something that is much less likely to affect performance tasks. To deal with these issues, a combination of response types could be used, including some that require demonstrations, some that require short constructed responses, and some that use a selected-response format. Selected-response formats, particularly multiple-choice questions, have often been criticized as being useful only for assessing low-level knowledge and skills. But this criticism refers primarily to isolated multiple-choice questions that are poorly related to an overall assessment design. (Examples include questions that are not related to a well-developed construct map in the construct-modeling approach or not based on the claims and inferences in an evidence-centered design approach; see Chapter 3.) With a small set of contextually linked items that are closely related to an assessment design, the difference between well-produced selected-response items and open-ended items may not be substantial. Using a combination of response types can help to minimize concerns associated with using only performance tasks on assessments intended for monitoring purposes.
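The size of the guessing problem can be gauged with a simple probability model. Assuming (for illustration only) that a student who knows none of the answers guesses independently on each of 10 hypothetical four-option items, the number correct follows a binomial distribution with success probability 1/4. The sketch computes the expected number correct and the chance of reaching a given score by guessing alone.

```python
from math import comb


def guess_distribution(n_items, n_options):
    """P(number correct = k), k = 0..n_items, when every answer is a blind guess."""
    p = 1 / n_options
    return [comb(n_items, k) * p**k * (1 - p) ** (n_items - k)
            for k in range(n_items + 1)]


def prob_at_least(n_items, n_options, k_min):
    """Chance of scoring k_min or more by guessing alone."""
    return sum(guess_distribution(n_items, n_options)[k_min:])


N_ITEMS, N_OPTIONS = 10, 4
print("expected number correct:", N_ITEMS / N_OPTIONS)   # 2.5
print("P(5 or more correct):", round(prob_at_least(N_ITEMS, N_OPTIONS, 5), 3))
```

On a short multiple-choice test, chance alone therefore moves scores noticeably; using more items, or items whose options are linked to a common context, reduces this source of error.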

Despite the various measurement and implementation challenges discussed above, a number of assessment programs have made use of performance tasks and portfolios7 of student work. Some were quite successful and are ongoing, and some experienced difficulties that led to their discontinuation. In considering options for assessing the NGSS performance expectations for monitoring purposes, we began by reviewing assessment programs that have made use of performance tasks, as well as those that have used portfolios. At the state level, Kentucky, Vermont, and Maryland implemented such assessment programs in the late 1980s and early 1990s.

In 1990, Kentucky adopted an assessment for students in grades 4, 8, and 11 that included three types of questions: multiple-choice and short essay questions, performance tasks that required students to solve practical and applied problems, and portfolios in writing and mathematics in which students presented the best examples of their classroom work for a school year. Assessments were given in seven areas: reading, writing, social science, science, math, arts and humanities, and practical living/vocational studies. Scores were reported for individual students.

___________

7A portfolio is a collection of work, often with personal commentary or self-analysis, that is assembled over time as a cumulative record of accomplishment (see Hamilton et al., 2009). A portfolio can be either standardized or nonstandardized: in a standardized portfolio, the materials are developed in response to specific guidelines; in a nonstandardized portfolio, the students and teachers are free to choose what to include.

In 1988, Vermont implemented a statewide assessment in mathematics and writing for students in grades 4 and 8 that included two parts: a portfolio component and uniform subject-matter tests. For the portfolio, the tasks were not standardized: teachers and students were given unconstrained choice in selecting the products to include. The portfolios were complemented by subject-matter tests that were standardized and consisted of a variety of item types. Scores were reported for individual students.

The Maryland School Performance Assessment System (MSPAP) was implemented in 1991. It assessed reading, writing, language usage, mathematics, science, and social sciences in grades 3, 5, and 8. All of the tasks were performance based, including some that required short-answer responses and others that required complex, multistage responses to data, experiences, or text. Some of the activities integrated skills from several subject areas, some were hands-on tasks involving the use of equipment, and some were accompanied by preassessment activities that were not scored. The MSPAP used a matrix-sampling approach: that is, the items were sampled so that each student took only a portion of the exam in each subject. The sampling design allowed for the reporting of scores for schools but not for individual students.

These assessment programs were ambitious, innovative responses to calls for education reform. They made use of assessment approaches that were then cutting edge for the measurement field. They were discontinued for many reasons, including technical measurement problems, practical reasons (e.g., the costs of the assessments and the time they took to administer), and the imposition of the accountability requirements of NCLB (see Chapter 1), which they could not readily satisfy.8

___________

8A thorough analysis of the experiences in these states is beyond the scope of this report, but there have been several studies. For Kentucky, see Hambleton et al. (1995) and Catterall et al. (1998). For Vermont, see Koretz et al. (1992a,b, 1993a,b,c, 1994). For Maryland, see Hambleton et al. (2000), Ferrara (2009), and Yen and Ferrara (1997). Hamilton et al. (2009) provides an overview of all three of these programs. Hill and DePascale (2003) have pointed out that some critics of these programs failed to distinguish between the reliability of student-level scores and school-level scores. For purposes of school-level reporting, the technical quality of some of these assessments appears to have been better than generally assumed.

Other programs that use performance tasks are ongoing. At the state level, the science portion of the New England Common Assessment Program (NECAP) includes a performance component to assess inquiry skills, along with questions that rely on other formats. The state assessments in New York include laboratory tasks that students complete in the classroom and that are scored by teachers. NAEP routinely uses extended constructed-response questions, and in 2009 conducted a special science assessment that focused on hands-on tasks and computer simulations. The Program for International Student Assessment (PISA) includes constructed-response tasks that require analysis and applications of knowledge to novel problems or contexts. Portfolios are currently used as part of the Advanced Placement (AP) examination in studio art.

Beyond the K-12 level, the Collegiate Learning Assessment makes use of performance tasks and analytic writing tasks. For advanced teacher certification, the National Board for Professional Teaching Standards uses an assessment composed of two parts—a portfolio and a 1-day exam given at an assessment center.9 The portfolio requires teachers to accumulate work samples over the course of a school year according to a specific set of instructions. The assessment center exam consists of constructed-response questions that measure the teacher’s content and pedagogical knowledge. The portfolio and constructed responses are scored centrally by teachers who are specially trained.

The U.S. Medical Licensing Examination uses a performance-based assessment (called the Clinical Skills Assessment) as part of the series of exams required for medical licensure. The performance component is an assessment of clinical skills in which prospective physicians have to gather information from simulated patients, perform physical examinations, and communicate their findings to patients and colleagues.10 Information from this assessment is considered along with scores from a traditional paper-and-pencil test of clinical skills in making licensing decisions.

Implications for Assessment of the NGSS

The experiences to date suggest strategies for addressing the technical challenges posed by the use of performance tasks in assessments designed for monitoring. In particular, much has been written about the procedures that lead to high-quality performance assessment and portfolios (see, e.g., Baker, 1994; Baxter and Glaser, 1998; Dietel, 1993; Dunbar et al., 1991; Hamilton et al., 2009; Koretz et al., 1994; Pecheone et al., 2010; Shavelson et al., 1993; Stiggins, 1987). This large body of work has produced important findings, particularly on scoring processes and score reliability.

___________

9For details, see http://www.nbpts.org [June 2013].

10The assessment is done using “standardized patients” who are actors trained to serve as patients and to rate prospective physicians’ clinical skills: for details, see http://www.usmle.org/step-2-cs/ [November 2013].

With regard to the scoring process, particularly human scoring, strategies that can yield acceptable levels of interrater reliability include the following:

- use of standardized tasks that are designed with a clear idea of what constitutes poor and good performance;

- clear scoring rubrics that minimize the degree to which raters must make inferences as they apply the criteria to student work and that include several samples of student responses for each score level;

- involvement of raters who have significant knowledge of the skills being measured and the rating criteria being applied; and

- thorough training for raters, combined with procedures for monitoring their accuracy and guiding them in making corrections when inaccuracies are found.
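Interrater reliability is often summarized by exact agreement and by Cohen’s kappa, which discounts the agreement two raters would reach by chance given how often each uses each score point. The minimal sketch below computes both for two raters who scored the same ten responses on a 0-3 rubric; the ratings are invented.

```python
from collections import Counter


def exact_agreement(a, b):
    """Proportion of responses given identical scores by both raters."""
    return sum(x == y for x, y in zip(a, b)) / len(a)


def cohens_kappa(a, b):
    """Cohen's kappa for two raters scoring the same set of responses."""
    n = len(a)
    p_obs = exact_agreement(a, b)
    count_a, count_b = Counter(a), Counter(b)
    # Chance agreement: for each score category, the product of the two
    # raters' marginal proportions, summed over categories.
    p_exp = sum(count_a[c] * count_b[c] for c in set(a) | set(b)) / (n * n)
    return (p_obs - p_exp) / (1 - p_exp)


rater1 = [0, 1, 2, 2, 3, 1, 2, 0, 3, 2]
rater2 = [0, 1, 2, 1, 3, 1, 2, 1, 3, 2]
print(exact_agreement(rater1, rater2))          # 0.8
print(round(cohens_kappa(rater1, rater2), 3))
```

Here the raters agree on 8 of 10 responses, but because they would agree on about a quarter of responses by chance, kappa is lower than 0.8. Tracking such statistics during scoring is one way to monitor rater accuracy and decide when retraining is needed.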

With regard to score generalizability (i.e., the extent to which the score results for one set of tasks generalize to performance on another set of tasks), studies show that a moderate to large number of performance tasks is needed to produce scores that are sufficiently reliable to support high-stakes judgments about students (Shavelson et al., 1993; Dunbar et al., 1991; Linn et al., 1996).11 Student performance can vary substantially among tasks because of unique features of the tasks and the interaction of those features with students’ knowledge and experience. For example, in a study on the use of hands-on performance tasks in science with 5th- and 6th-grade students, Stecher and Klein (1997) found that three 45- to 50-minute class periods were needed to yield a score reliability of 0.80.12 For the mathematics portfolio used in Vermont, Klein et al. (1995) estimated that as many as 25 pieces of student work would have been needed to produce a score reliable enough to support high-stakes decisions about individual students. However, it should be noted that Vermont’s portfolio system was designed to support school accountability determinations, and work by Hill and DePascale (2003) demonstrated that reliability levels that might cause concern at the individual level can still support school-level determinations. We note that this difficulty is not unique to assessments that rely on performance tasks: a test composed of only a small number of multiple-choice questions would also not produce high score reliability, nor would it be representative of a construct domain as defined by the NGSS. Research suggests that a well-designed set of tasks using multiple response formats could yield higher levels of score reliability than exclusive reliance on a small set of performance tasks (see, e.g., Wilson and Wang, 1995).

___________

11When test results are used to make important, high-stakes decisions about students, a reliability of 0.90 or greater is typically considered appropriate.

12The reader is referred to the actual article for details about the performance tasks used in this study.
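The classical Spearman-Brown prophecy formula gives a rough sense of what the 0.90 standard in footnote 11 would demand. If a test with reliability ρ is lengthened by a factor k using parallel material, its projected reliability is kρ / (1 + (k − 1)ρ); solving for k gives the lengthening needed to reach a target reliability. The sketch treats Stecher and Klein’s three class periods (reliability 0.80) as the starting test and assumes that added periods behave like parallel tasks, a strong assumption for performance tasks, so the result is an illustration rather than a finding.

```python
def spearman_brown(rho, k):
    """Projected reliability when test length is multiplied by k."""
    return k * rho / (1 + (k - 1) * rho)


def length_factor(rho, target):
    """Factor by which test length must grow to move reliability from rho to target."""
    return target * (1 - rho) / (rho * (1 - target))


factor = length_factor(0.80, 0.90)
print(round(factor, 2))         # 2.25
print(round(3 * factor, 2))     # 6.75 class periods, under the stated assumptions
```

Under these assumptions, moving from 0.80 to 0.90 would require more than doubling the testing time, to roughly seven class periods of hands-on tasks, which illustrates the cost problem described above.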

The measurement field has not yet fully solved the challenge of equating the scores from two or more assessments relying on performance tasks, but some strategies are available (see, e.g., Draney and Wilson, 2008). As noted above, some assessment programs, such as the College Board’s AP exams, use a combination of item types, including some multiple-choice questions (which can generally be reused); these can assist with equating, provided they are designed with reference to the same or similar performance expectations. Other assessment programs use a strategy of “pre-equating” by administering all of the tasks to randomly equivalent groups of students, possibly students in another state (for details, see Pecheone and Stahl, n.d., p. 23). Another strategy is to develop a large number of performance tasks, publicly release all of them, and then sample from them for each test administration. More recently, researchers have tried to develop task shells or templates to guide the development of tasks that are comparable but vary in particular details, so that the shells can be reused. This procedure has been suggested for the revised AP examination in biology, for which task models have been developed by applying evidence-centered design principles (see Huff et al., 2012). As with the NGSS, this exam requires students to demonstrate their knowledge through applying a set of science practices.
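The idea of a task shell can be sketched as a data structure: a template that holds the targeted practice and core idea fixed while slots for surface details vary from one task instance to the next. Everything in the sketch below (the class and field names, the prompt wording, and the slot values) is hypothetical and is not drawn from the AP biology task models or any other program.

```python
from dataclasses import dataclass
from itertools import product
from string import Template


@dataclass
class TaskShell:
    practice: str      # targeted science practice (held constant across variants)
    core_idea: str     # targeted disciplinary core idea (held constant)
    prompt: Template   # prompt text with $slots for surface details
    slots: dict        # slot name -> list of permissible values

    def instances(self):
        """Generate every task variant permitted by the shell's slot values."""
        names = list(self.slots)
        for values in product(*(self.slots[name] for name in names)):
            yield self.prompt.substitute(dict(zip(names, values)))


shell = TaskShell(
    practice="Analyzing and interpreting data",
    core_idea="Ecosystems: interactions, energy, and dynamics",
    prompt=Template("A population of $organism changed after $event. "
                    "Use the data table to explain the change."),
    slots={"organism": ["rabbits", "deer"],
           "event": ["a drought", "the arrival of a predator"]},
)
for task in shell.instances():
    print(task)
```

Because the practice, core idea, and (in a fuller version) the scoring rubric stay fixed while surface features vary, variants are intended to be comparable; whether they are in fact equally difficult is an empirical question that field testing or equating would still have to answer.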

There is no doubt that developing assessments that include performance tasks and that can be used to monitor students’ performance with respect to the NGSS will be challenging, but prior research and development efforts, combined with lessons learned from prior and current operational programs, suggest some strategies for addressing the technical challenges. New methods will be needed, drawing on both existing and new approaches. Technology offers additional options, such as the use of simulations or external data sets and built-in data analysis tools, as well as flexible translation and accommodation tools. But technology also adds its own set of new equity challenges. In this section we propose design options and examples that we think are likely to prove fruitful, although some will need further development and research before they can be fully implemented and applied in any high-stakes environment. The approaches we suggest are based on several assumptions about adequate assessment of the NGSS for monitoring purposes.

It will not be possible to cover all of the performance expectations for a given grade (or grade band) during a typical single testing session of 60-90 minutes. To obtain a sufficient estimate of a single student’s proficiency with the performance expectations, multiple testing sessions would be necessary. Even with multiple testing sessions, however, assessments designed for monitoring purposes alone cannot fully cover the NGSS performance expectations for a given grade within a reasonable testing time and cost. Moreover, some performance expectations will be difficult to assess using tasks that are not tied directly to a school’s curriculum and that can be completed in 90 minutes or less. Thus, our first assumption is that such assessments will need to include a combination of tasks given at a time mandated by the state or district (on-demand assessment components) and tasks given at a time that fits the instructional sequence in the classroom (classroom-embedded assessment components).

Second, we assume that assessments used for monitoring purposes, like assessments used for instructional support in classrooms, will include multiple types of tasks. That is, we assume that the individual tasks that compose a monitoring assessment will include varied formats: some that require actual demonstrations of practices, some that make use of short and extended constructed responses, and some that use carefully designed selected-response questions. The use of multiple components will help to cover the performance expectations more completely than any assessment that uses only one format.

We recognize that the approaches we suggest for gathering assessment information may not yield the level of comparability of results that educators, policy makers, researchers, and other users of assessment data have been accustomed to, particularly at the individual student level. Thus, our third assumption is that developing assessments that validly measure the NGSS is more important than achieving strict comparability. There are tradeoffs to be considered. Traditional approaches that have been shown to produce comparable results, which rely heavily on selected-response items, are not likely to be adequate for assessing the full breadth and depth of the NGSS performance expectations, particularly in assessing students’ proficiency with the application of the scientific and engineering practices in the context of disciplinary core ideas. The new approaches that we propose for consideration (see below) involve hybrid designs employing performance tasks that may not yield strictly comparable results, which will make it difficult to make some of the comparisons required for certain monitoring purposes.13 We assume that users will need to accept different conceptualizations and degrees of comparability in order to properly assess the NGSS.14

Fourth, we assume that the use of technology can address some of the challenges discussed above (and below). For example, technology can be useful in scoring multiple aspects of students’ responses on performance tasks, and technology-enhanced questions (e.g., those using simulations or data display tools) can be useful, if not essential, in designing more efficient ways for students to demonstrate their proficiency in engaging in some of the science practices. Nevertheless, technology alone is unlikely to solve problems of score reliability or of equating, among other challenges.

Finally, we assume that matrix sampling will be an important tool in the design of assessments for monitoring purposes to ensure that there is proper coverage of the broad domain of the NGSS. Matrix sampling as a design principle may be extremely important even when individual scores are needed as part of the monitoring process. This assumption includes hybrid designs in which all students respond to the same core set of tasks that are mixed with matrix-sampled tasks to ensure representativeness of the NGSS for monitoring inferences about student learning at higher levels of aggregation (see the second, third, and fourth columns in Table 5-1, above).
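To make the hybrid idea concrete, here is a minimal Python sketch, our own illustration rather than an operational design from any program discussed in this chapter. It gives every student the same core tasks and then spirals matrix-sampled blocks across students in rotation; the function name and the task labels are hypothetical.

```python
from itertools import cycle


def hybrid_matrix_assignment(students, core_tasks, matrix_blocks):
    """Build one test form per student for a hybrid matrix-sampling design.

    Every student receives the same core tasks (supporting individual
    scores), followed by one matrix-sampled block. Blocks are spiraled,
    i.e. handed out in rotation, so each block reaches about the same
    number of students and the blocks together cover the wider domain.
    """
    rotation = cycle(matrix_blocks)
    return {
        student: list(core_tasks) + list(next(rotation))
        for student in students
    }


if __name__ == "__main__":
    # Hypothetical labels: C = core task, M = matrix-sampled task.
    students = [f"S{i}" for i in range(1, 7)]
    core = ["C1", "C2"]
    blocks = [["M1", "M2"], ["M3", "M4"], ["M5", "M6"]]
    for student, form in hybrid_matrix_assignment(students, core, blocks).items():
        print(student, form)
```

With two core tasks and three two-task blocks spread over six students, each student answers four tasks while the group as a whole covers all eight. A real design would likely also need to balance block order and position effects, which this sketch ignores.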

___________

13A useful discussion of issues related to comparability can be found in Gong and DePascale (2013).

14We note that in the United States comparability is frequently based on a statistical (psychometric) concept; in other countries, comparability relies on a balance between psychometric evidence and evidence derived from assessment design information and professional judgment (i.e., expert judgment as to commonality across assessments in terms of the breadth, depth, and format of coverage). Examples include the United Kingdom system of assessment at the high school level and the functions served by its monitoring body, the Office of Qualifications and Examinations Regulation (Ofqual), which works to ensure comparability across different examination programs that are all tied to the same curricular frameworks. See http://ofqual.gov.uk/how-we-regulate/ [November 2013].

With these assumptions in mind, we suggest two broad classes of design options. The first involves the use of on-demand assessment components and the second makes use of classroom-embedded assessment components. For each class, we provide a general description of options, illustrating the options with one or more operational assessment programs. For selected cases, we also provide examples of the types of performance tasks that might be used as part of the design option. It should be noted that our two general classes of design options are not being presented as an either-or contrast. Rather, they should be seen as options that might be creatively and selectively combined, with varying weighting, to produce a monitoring assessment that appropriately and adequately reflects the depth and breadth of the NGSS.

On-Demand Assessment Components

As noted above, one component of a monitoring system could include an on-demand assessment that might be administered in one or more sessions toward the end of a given academic year. Such an assessment would be designed to cover multiple aspects of the NGSS and might typically be composed of mixed-item formats with either written constructed responses or performance tasks or both.

Mixed-Item Formats with Written Responses

A mixed-item format containing multiple-choice and short and extended constructed-response questions characterizes certain monitoring assessments. As an example, we can consider the revised AP assessment for biology (College Board, 2011; Huff et al., 2010; Wood, 2009). Though administered on a large scale, the tests for AP courses are aligned to a centrally developed curriculum, the AP framework, which is also used to develop instructional materials for the course (College Board, 2011). Most AP courses are for 1 year, and students take a 3-hour exam at the end of the course. (Students are also allowed to take the exam without having taken the associated course.) Scores on the exam can be used to obtain college credit, as well as to meet high school graduation requirements.

Using the complementary processes of “backwards design” (Wiggins and McTighe, 2005) and evidence-centered design (see Chapter 3), a curriculum framework was developed for biology organized in terms of disciplinary big ideas, enduring understandings, and supporting knowledge, as well as a set of seven science practices. This structure parallels that of the core ideas and science practices in the K-12 framework. The AP biology curriculum framework focuses on the integration, or in the College Board’s terminology, “fusion,” of core scientific ideas with scientific practice in much the same way as the NGSS performance expectations. As advocated in the K-12 science framework (see National Research Council, 2012a) and realized in the NGSS, a set of performance expectations, or learning objectives, was defined for the biology discipline. Learning objectives articulate what students should know and be able to do, and they are stated in the form of claims, such as “the student is able to construct explanations of the mechanisms and structural features of cells that allow organisms to capture, store or use free energy” (learning objective 2.5). Each learning objective is designed to help teachers integrate science practices with specific content and to provide them with information about how students will be expected to demonstrate their knowledge and abilities (College Board, 2013a, p. 7). Learning objectives guide instruction and also serve as a guide for developing the assessment questions, since they constitute the claim components in the College Board system for AP assessment development. Through the use of evidence-centered design, sets of claim-evidence pairs were elaborated in biology that guide development of assessment tasks for the new AP biology exam.

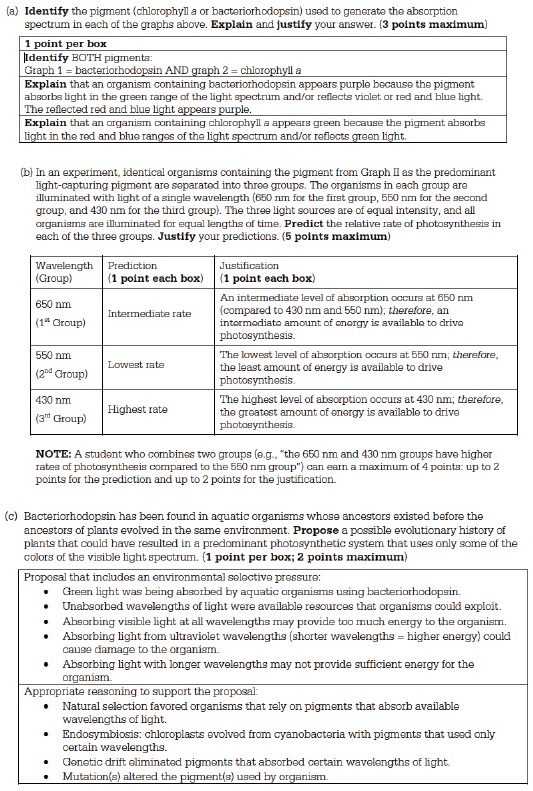

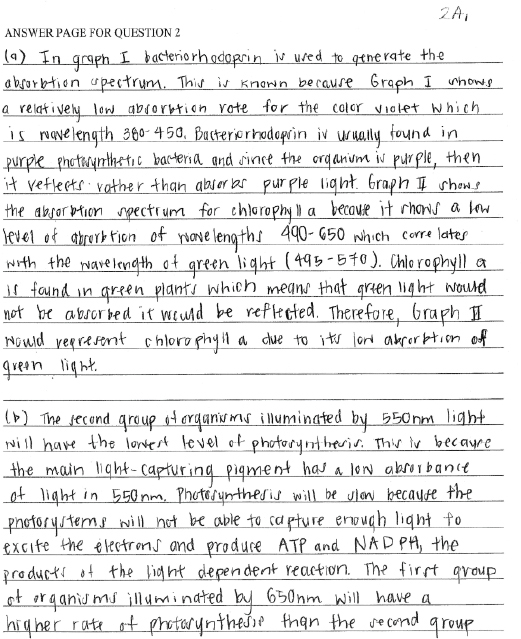

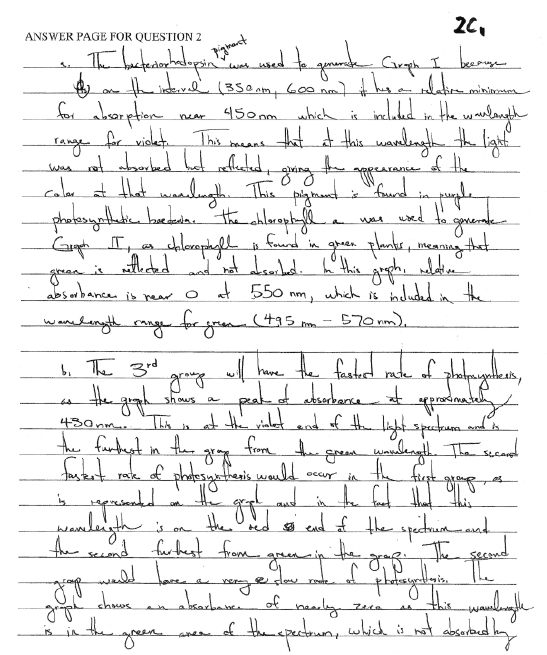

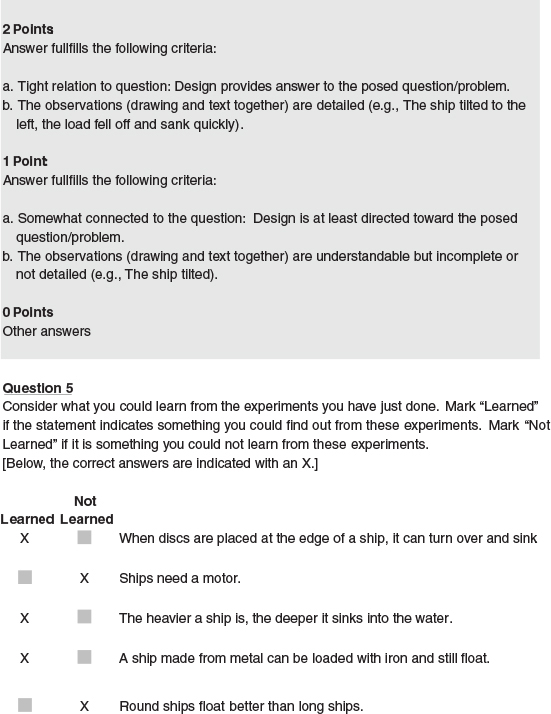

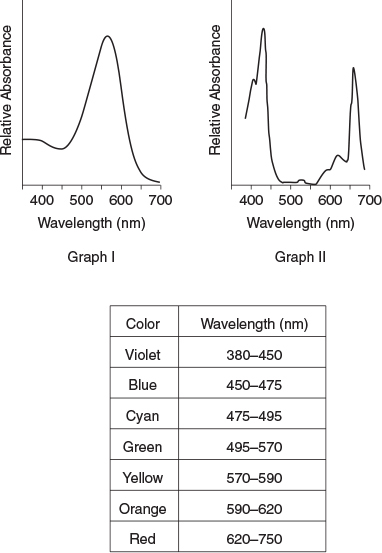

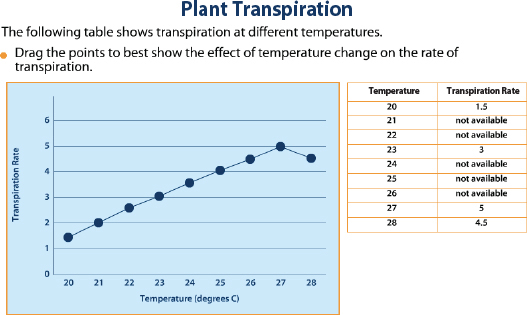

Assessment Task Example 9, Photosynthesis and Plant Evolution: An example task from the new AP biology assessment demonstrates the use of a mixed-item format with written responses. As shown in Figure 5-1, this task makes use of both multiple-choice questions and free-response questions. The latter include both short-answer and extended constructed responses. It was given as part of a set of eight free-response questions (six short-answer questions and two extended constructed-response questions) during a testing session that lasted 90 minutes. The instructions to students suggested that this question would require 22 minutes to answer.

The example task has multiple components in which students make use of data in two graphs and a table to respond to questions about light absorption. It asks students to work with scientific theory and evidence to explain how the processes of natural selection and evolution could have resulted in different photosynthetic organisms that absorb light in different ranges of the visible light spectrum. Students were asked to use experimental data (absorption spectra) to identify two different photosynthetic pigments and to explain how the data support their identification. Students were then presented with a description of an experiment for investigating how the wavelength of available light affects the rate of photosynthesis in autotrophic organisms. Students were asked to predict the relative rates of photosynthesis in three treatment groups, each exposed to a different wavelength of light, and to justify their prediction using their knowledge and understanding about the transfer of energy in photosynthesis. Finally, students were asked to propose a possible evolutionary history of plants by connecting differences in resource availability with different selective pressures that drive the process of evolution through natural selection.

FIGURE 5-1 AP biology example.

NOTE: See text for discussion.

SOURCE: College Board (2013a, p. 4). Reprinted with permission.

Collectively, the multiple components in this task are designed to provide evidence relevant to the nine learning objectives, which are shown in Box 5-1. The

BOX 5-1

LEARNING OBJECTIVES (LO) FOR SAMPLE AP BIOLOGY QUESTION

LO 1.12

The student is able to connect scientific evidence from many scientific disciplines to support the modern concept of evolution.

LO 1.13

The student is able to construct and/or justify mathematical models, diagrams or simulations that represent processes of biological evolution.

LO 1.2

The student is able to analyze data related to questions of speciation and extinction throughout the Earth’s history.

LO 1.25

The student is able to describe a model that represents evolution within a population.

LO 2.24

The student is able to analyze data to identify possible patterns and relationships between a biotic or abiotic factor and a biological system (cells, organisms, populations, communities, or ecosystems).

LO 2.5

The student is able to construct explanations of the mechanisms and structural features of cells that allow organisms to capture, store or use free energy.

LO 4.4

The student is able to make a prediction about the interactions of subcellular organelles.

LO 4.5

The student is able to construct explanations based on scientific evidence as to how interactions of subcellular structures provide essential functions.

LO 4.6

The student is able to use representations and models to analyze situations qualitatively to describe how interactions of subcellular structures, which possess specialized functions, provide essential functions.

SOURCE: College Board (2011). Reprinted with permission.

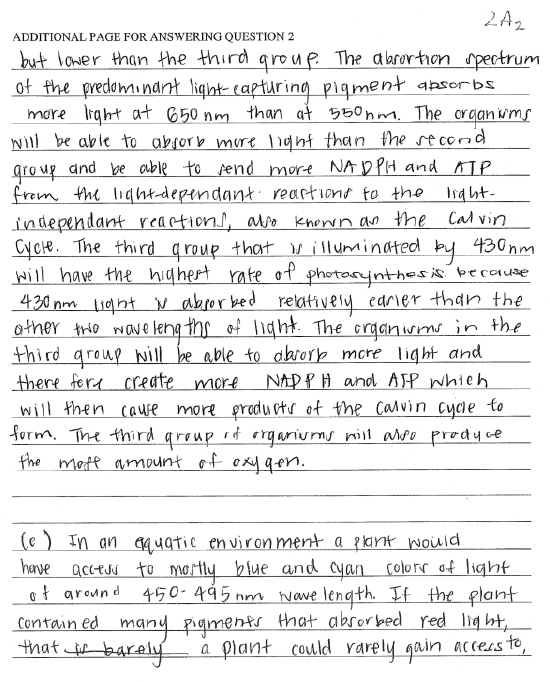

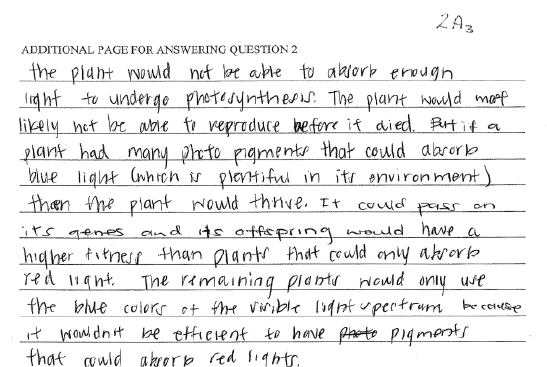

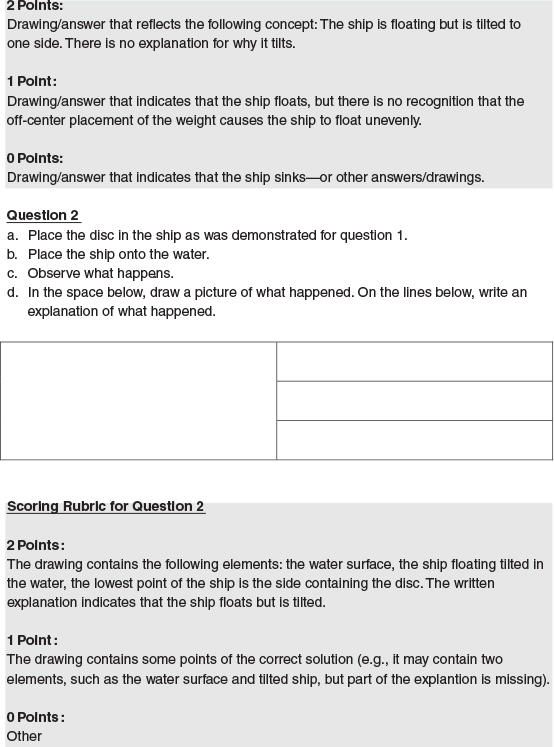

task has a total point value of 10 and each component of the task (a, b, c) has an associated scoring rubric (see Figure 5-2). Note that in the case of responses that require an explanation or justification, the scoring rubric includes examples of the acceptable evidence in the written responses. Figure 5-3 shows two different student responses to this task: one in which the student earned all 10 possible points and one in which the student earned 6 points (3 points for Part a; 3 points for Part b; and 0 points for Part c).15

Mixed-Item Formats with Performance Tasks

Two current assessment programs use a mixed-item format with performance tasks. Both assessments are designed to measure inquiry skills as envisioned in the science standards that predate the new science framework and the NGSS. Thus, they are not fully aligned with the NGSS performance expectations. We highlight these two assessments not because of the specific kinds of questions that they use, but because the assessments require that students demonstrate science practices and interpret the results.

One assessment is the science component of the New England Common Assessment Program (NECAP), used by New Hampshire, Rhode Island, and Vermont, and given to students in grades 4 and 8. The assessment includes three types of items: multiple-choice questions, short constructed-response questions, and performance tasks. The performance-based tasks present students with a research question. Students work in groups to conduct an investigation in order to gather the data they need to address the research question and then work individually to prepare their own written responses to the assessment questions.16

A second example is the statewide science assessment administered to the 4th and 8th grades in New York. The assessment includes both multiple-choice and performance tasks. For the performance part of the assessment, the classroom teacher sets up stations in the classroom according to specific instructions in the assessment manual. Students rotate from station to station to perform the task, record data from the experiment or demonstration, and answer specific questions.17 In addition to these state programs, it is worth considering an international example of how a performance task can be included in a monitoring assessment.

___________

15Additional examples of student responses to this task, as well as examples of the other tasks, their scoring rubrics, and sample student responses, on the constructed-response section of the May 2013 exam can be found at http://apcentral.collegeboard.com/apc/members/exam/exam_information/1996.html [November 2013].

16Examples of questions are available at http://www.ride.ri.gov/InstructionAssessment/Assessment/NECAPAssessment/NECAPReleasedItems/tabid/426/LiveAccId/15470/Default.aspx [August 2013].

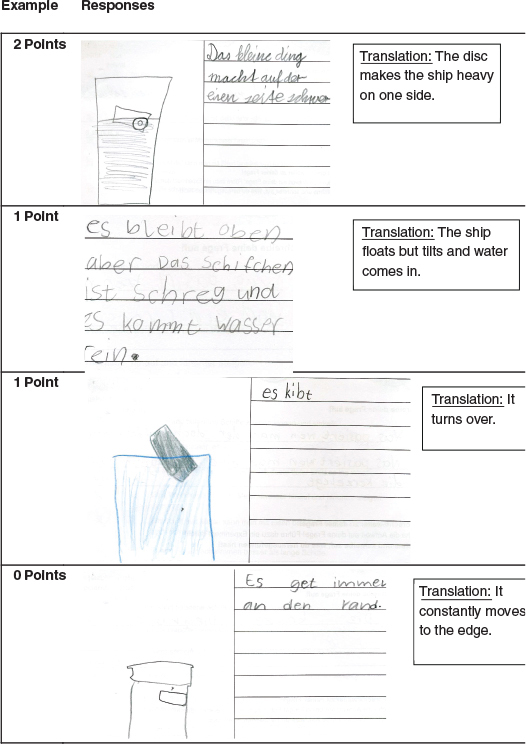

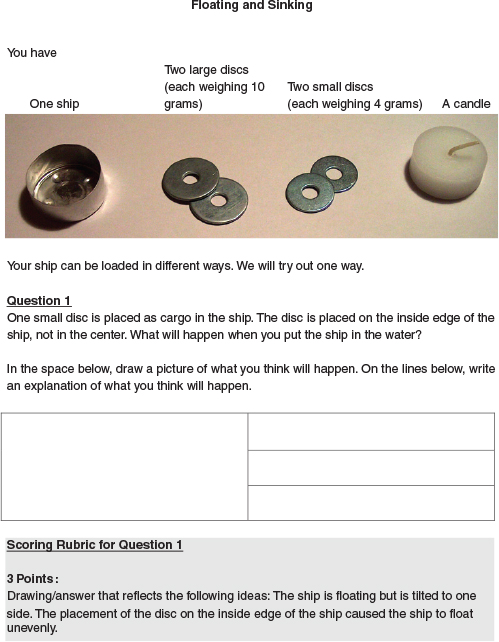

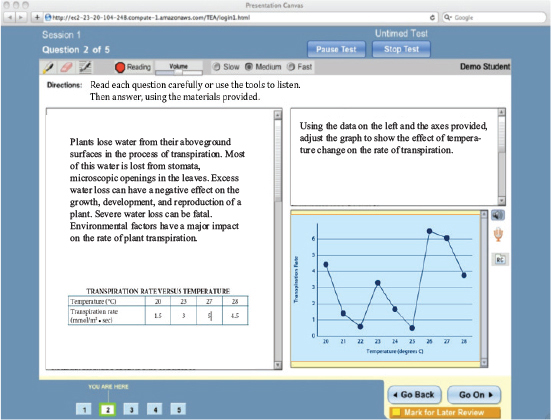

Assessment Task Example 10, Floating and Sinking: To create standards for science education in Switzerland, a framework was designed that is similar to the U.S. framework. Assessments aligned with the Swiss framework were developed and administered to samples of students in order to obtain empirical data for specifying the standards. Like the U.S. framework, the Swiss framework defined three dimensions of science education (which it called skills, domains, and levels) and emphasized the idea of three-dimensional science learning. The domain dimension includes eight themes central to science, technology, society, and the environment (e.g., motion, force and energy; structures and changes of matter; ecosystems). The skills dimension covers scientific skills similar to the scientific practices listed in the U.S. framework. For each skill, several subskills are specified. For the skill “to ask questions and to investigate,” five subskills are defined: (1) to look at phenomena more attentively, to explore more precisely, to observe, to describe, and to compare; (2) to raise questions, problems, and hypotheses; (3) to choose and apply suitable tools, instruments, and materials; (4) to conduct investigations, analyses, and experiments; and (5) to reflect on results and examination methods (see Labudde et al., 2012).

To collect evidence about student competence with respect to the framework, Swiss officials identified a set of experts to develop assessments. From the outset, this group emphasized that traditional approaches to assessment (e.g., paper-and-pencil questions assessing factual knowledge and simplistic understandings) would not be sufficient for evaluating the integrated learning reflected by the combinations of domains and skills in the framework. As a result, the group decided to follow the example of the Trends in International Mathematics and Science Study (TIMSS), which (in its 1995 iteration) included an add-on study that used performance tasks to assess students’ inquiry skills in 21 countries (Harmon et al., 1997). One of the performance tasks used for defining standards in science education in Switzerland is shown in Figure 5-4; for use in this report, it has been translated from German to English.

___________

17Examples are available at http://www.nysedregents.org/Grade4/Science/home.html and http://www.nysedregents.org/Grade8/Science/home.html [August 2013].

FIGURE 5-4 Sample performance-based task.

NOTES: The English translations of the three example answers are as follows: “the little boat is heavy on the one side” (code 2); “it remains on the top of the water, but the little boat is inclined and water is coming in” (code 1, drawing on the left); and “it tilts over” (code 1, drawing on the right).

SOURCE: Labudde et al. (2012). Copyright by the author; used with permission.

This task was one of several designed for use with students in 2nd grade. As part of the data collection activities, the tasks were given to 593 students; each student responded to two tasks and was given 30 minutes per task. The task was designed primarily to assess the student’s skills in asking questions and investigating (more specifically, to look at phenomena more attentively, to explore more precisely, to observe, to describe, and to compare), within the domain of “motion, force and energy”: for this task, the focus was on floating and sinking, or buoyancy in different contexts. The full task consisted of eight questions. Some of the questions involved placing grapes in water; other questions involved loading weights in a small “ship” and placing it in water. Figure 5-4 shows an excerpt from the portion of the task that involves the ship (see Table 1-1 for the specific disciplinary core ideas, scientific practices, and crosscutting concepts assessed).

In the excerpt shown in the figure, the first two activities ask students to observe a weighted ship floating on water and to describe their observations. Students were given a cup half full of water, a small ship, four metal discs (two large discs and two small discs), and a candle.18 Students were instructed to (1) place the metal discs in the ship so they rested on the inside edge of the ship (i.e., off center); (2) place the ship into the water; (3) observe what happens; and (4) draw and describe in writing what they observed. The test proctor read the instructions out loud to the students and demonstrated how the discs should be placed in the ship and how the ship should be put into the water. The task included two additional activities. For one, students were asked to formulate a question and carry out an experiment to answer it. In the final section of the task, students were asked a series of questions about the type of information that could be learned from the experiments in the tasks. The figure shows the rubric and scoring criteria for the open-ended questions and the answer key for the final question. Sample responses are shown for the second activity.

As can be seen from this example, the task is about buoyancy, but it does not focus on assessing students’ knowledge about what objects float (or do not float) or why objects float (or do not float). It also does not focus on students’ general skill in observing a phenomenon and describing everything that has been observed. Instead, students had to recognize that the phenomenon to observe is about floating and sinking: more specifically, that when weights are placed off center in the ship, they cause the ship to float at an inclined angle or even to sink. Moreover, they were expected to recognize the way in which the off-center load will cause the ship to tilt in the water. The task was specifically focused on the integration of students’ knowledge about floating and sinking with their skill in observing and describing the key information. And the scoring criteria were directed at assessing students’ ability to observe a phenomenon based on what they know about the phenomenon (i.e., what characteristics are important and how these characteristics are related to each other). Thus, the task provides an example of a set of questions that emphasize the integration of core ideas, crosscutting concepts, and practices.

___________

18The candle could be used for other questions in the task.

Design of Performance Events

Drawing from the two state assessment program examples and the international assessment task example, we envision that this type of assessment, which we refer to as a “performance event,” would be composed of a set of tasks that center on a major science question. The task set could include assessment questions that use a variety of formats, such as some selected-response or short-answer questions and some constructed-response questions, all of which lead to producing an extended response for a complex performance task. The short-answer questions would help students work through the steps involved in completing the task set. (See below for a discussion of ways to use technological approaches to design, administer, and score performance events.)

Each of the performance events could be designed to yield outcome scores based on the different formats: a performance task, short constructed-response tasks, and short-answer and selected-response questions. Each of these would be related to one or two practices, core ideas, or crosscutting concepts. A performance event would be administered over 2 to 3 days of class time. The first day could be spent on setting up the problem and answering most or all of the short- and long-answer constructed-response questions. This session could be timed (or untimed). The subsequent day(s) would be spent conducting the laboratory (or other investigation) and writing up the results.

Ideally, three or four of these performance assessments would be administered during an academic year, which would allow the task sets to cover a wide range of topics. The use of multiple items and multiple response types would help to address the reliability concerns that are often associated with the scores reported for performance tasks (see Dunbar et al., 1991). To manage implementation, such assessments could be administered during different “testing windows” during the spring or throughout the school year.
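One standard way to see why adding tasks helps is the classical Spearman-Brown prophecy formula, which projects the reliability of a score averaged over n parallel tasks from the reliability of a single task. The report does not itself invoke this formula; the sketch below uses it only as an illustration, and the single-task reliability of 0.5 is a hypothetical value, not a figure from any program described here.

```python
def spearman_brown(single_task_reliability: float, n_tasks: int) -> float:
    """Projected reliability of a score averaged over n_tasks parallel tasks.

    Classical Spearman-Brown prophecy formula:
        rho_n = n * rho / (1 + (n - 1) * rho)
    It assumes the tasks are parallel (same true score, equal error variance),
    which performance tasks often are not, so treat results as rough.
    """
    if not 0.0 <= single_task_reliability <= 1.0:
        raise ValueError("reliability must lie in [0, 1]")
    if n_tasks < 1:
        raise ValueError("n_tasks must be at least 1")
    r = single_task_reliability
    return n_tasks * r / (1 + (n_tasks - 1) * r)


if __name__ == "__main__":
    # Hypothetical single-task reliability of 0.5; not a figure from the report.
    for n in (1, 2, 3, 4):
        print(n, round(spearman_brown(0.5, n), 2))
```

Under these assumptions the projection rises from 0.50 for one event to 0.75 for three and 0.80 for four. Because students often perform quite differently from one performance task to another, such figures are best read as a rough approximation of the direction of the effect, not as a forecast.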

Use of multiple task sets also opens up other design possibilities, such as using a hybrid task sampling design (discussed above) in which all students at a grade level receive one common performance task, and the other tasks are given to different groups of students using matrix sampling. This design allows the common performance task to be used as a link for the matrix tasks so that student scores could be based on all of the tasks they complete. This design has the shortcoming of focusing the link among all the tasks on one particular task—thus opening up the linkage quality to weaknesses due to the specifics of that task. A better design would be to use all the tasks as linking tasks, varying the common task across many classrooms. Although there are many advantages to matrix-sampling approaches, identifying the appropriate matrix design will take careful consideration. For example, unless all the performance tasks are computer-based, the logistical and student-time burden of administering multiple tasks in the same classroom could be prohibitive. There are also risks associated with using all the tasks in an assessment in each classroom, such as security and memorability, which could limit the reuse of the tasks for subsequent assessments.19
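The alternative of using every task as a linking task can be sketched as a simple cyclic design: each classroom receives a short run of consecutive tasks from a circular pool, starting at a different task, so neighboring classrooms share tasks and no single task carries the whole linkage. This is one illustrative design among many, written by us; the function name and labels are hypothetical, and it ignores the security, memorability, and logistical constraints noted above.

```python
def cyclic_linking_design(classrooms, tasks, tasks_per_class):
    """Assign each classroom a run of consecutive tasks from a circular pool.

    Classroom i starts at task i (wrapping around). With at least two tasks
    per classroom and at least as many classrooms as tasks, every task is
    shared by two or more classrooms and can serve as a link, instead of
    the whole linkage resting on a single common task.
    """
    n = len(tasks)
    if not 1 <= tasks_per_class <= n:
        raise ValueError("tasks_per_class must be between 1 and len(tasks)")
    return {
        room: [tasks[(i + j) % n] for j in range(tasks_per_class)]
        for i, room in enumerate(classrooms)
    }


if __name__ == "__main__":
    # Hypothetical labels: four classrooms (R) sharing a pool of four tasks (T).
    design = cyclic_linking_design(["R1", "R2", "R3", "R4"],
                                   ["T1", "T2", "T3", "T4"], 2)
    for room, assigned in design.items():
        print(room, assigned)
```

In this small example each task appears in exactly two classrooms, and the overlaps chain all four classrooms together, which is the property that lets every task contribute to linking.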

The assessment strategies discussed above have varying degrees of overlap with the assessment plans that are currently in place for mathematics and language arts in the two Race to the Top Assessment Program consortia, the Partnership for Assessment of Readiness for College and Careers and the Smarter Balanced Assessment Consortium (see Chapter 1). Both are planning to use a mixed model with both performance tasks and computer-based selected-response and constructed-response tasks (K-12 Center at Educational Testing Service, 2013). The different task types will be separated in time with respect to administration, and in most grades the total testing time will be 2 or more hours.

Classroom-Embedded Assessment Components

As noted above, one component of a monitoring system could involve classroom-embedded tasks and performances that might be administered at different times in a given academic year so as to align with the completion of major units of instruction. These instructional units and assessments would be targeted at various sets of standards, such as those associated with one or more core ideas in the life sciences. Such a classroom-embedded assessment would be designed to cover more selective aspects of the NGSS and would be composed of tasks that require written constructed responses, performance activities, or both. We discuss three options that involve the use of classroom-embedded assessment activities: replacement units, collections of performance tasks, and portfolios of work samples and projects.

___________

19This format can also be viewed in terms of “replacement units”: see discussion below.

Replacement Units

Replacement units are curricular units that have been approved centrally (by the state or district) and made available to schools. They cover material or concepts that are already part of the curriculum, but they teach the material in a way that addresses the NGSS and promotes deeper learning. They are not intended to add topics to the existing curriculum, but rather to replace existing units in a way that is educative for teachers and students. The idea of replacement units builds from Marion and Shepard (2010).

Given the huge curricular, instructional, and assessment challenges associated with implementing the NGSS, replacement units would be designed to serve locally as meaningful examples that build capacity to implement the NGSS and to provide evidence of student performance on the NGSS. The end-of-unit standardized assessment in the replacement unit would include performance tasks and perhaps short constructed-response tasks that could be used to provide data for monitoring student performance. The assessments could be scored locally by teachers, or a central or regional scoring mechanism could be devised.

The units could be designed, for instance, by state consortia, regional labs, commercial vendors, or other groups of educators and subject-matter experts around a high-priority topic for a given grade level. Each replacement unit would include instructional supports for educators, formative assessment probes, and end-of-unit assessments. The supports embedded in the replacement units would serve as a useful model for trying to improve classroom assessment practices at a relatively large scale. In addition, the end-of-unit assessments, although not necessarily useful for short-term formative purposes, may serve other instructional purposes, such as informing instruction for future students or planning changes to instruction or curriculum for current students after the unit has been completed.

Collections of Performance Tasks

A second option would be for a state or district (or its contractors) to design standardized performance tasks that would be made available for teachers to use at designated points in curriculum programs. Classroom teachers could be trained to score these tasks, or student products could be submitted to the district or state and scored centrally. Results would be aggregated at the school, district, or state level to support monitoring purposes.

This option builds on an approach that was until recently used in Queensland, Australia, called the Queensland Comparable Assessment Tasks (QCATs). The QCAT consists of performance tasks in English, mathematics, and science that are administered in grades 4, 6, and 9. They are designed to engage students in solving meaningful problems. The structure of the Queensland system gives schools and teachers more control over assessment decisions than is currently the case in the United States. Schools have the option of using either centrally devised QCATs, which have been developed by the Queensland Studies Authority (QSA), with common requirements and parameters and graded according to a common guide, or school-devised tasks, which are developed by schools in accord with QSA design specifications.

The QCATs are not on-demand tests (i.e., not given at a time determined by the state); schools are given a period of 3-4 months to administer, score, and submit the scores to the QSA. The scores are used for low-stakes purposes.20 Individual student scores are provided to teachers, students, and parents for instructional improvement purposes. Aggregate school-level scores are reported to the QSA, but they are not used to compare the performance of students in one school with the performance of students in other schools. The scores are considered to be unsuitable for making comparisons across schools (see Queensland Studies Authority, 2010b, p. 19). Teachers make decisions about administration times (one, two, or more testing sessions) and when during the administration period to give the assessments, and they participate in the scoring process.

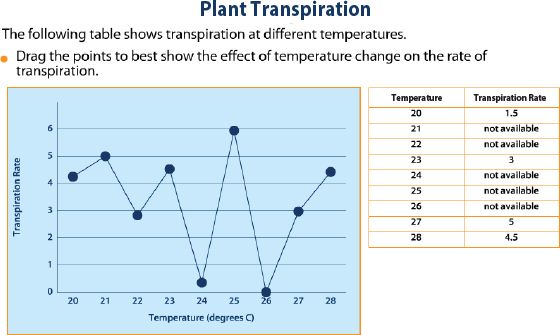

Assessment Task Example 11, Plate Tectonics: An example of a performance task that might be used for monitoring purposes is one that was administered in a classroom after students had covered major aspects of the earth and space science standards. It is taken from a program for middle school children in the United States that provided professional development based on A Framework for K-12 Science Education: Practices, Crosscutting Concepts, and Core Ideas (National Research Council, 2012a) and training in the use of curriculum materials aligned to the framework. It was designed and tested as part of an evaluation of a set of curriculum materials and associated professional development.21

___________

20Low-stakes tests are those that do not directly affect a decision about any student or teacher.

The task was given to middle school students studying a unit on plate tectonics and large-scale system interactions (similar to one of the disciplinary core ideas in the NGSS). The assessment targets two performance expectations linked to that disciplinary core idea. The task, part of a longer assessment designed to be completed in two class periods, is one of several designed to be given in the course of a unit of study. The task asks students to construct models of geologic processes to explain what happens over hot spots or at plate boundaries that leads to the formation of volcanoes. The students are given these instructions:

- Draw a model of volcano formation at a hot spot using arrows to show movement in the model. Be sure to label all parts of your model.

- Use your model to explain what happens with the plate and what happens at the hot spot when a volcano forms.

- Draw a model to show the side view (cross-section) of volcano formation near a plate boundary (at a subduction zone or divergent boundary). Be sure to label all parts of your model.

- Use your model to explain what happens when a volcano forms near a plate boundary.

In parts A and B of the task, students are expected to construct a model of a volcano forming over a hot spot using drawings and scientific labels, and they are to use this model to explain that hot spot volcanoes are formed when a plate moves over a stationary plume of magma or mantle material. In parts C and D, students are expected to construct a model of a volcano forming at a plate boundary using drawings and scientific labels and then use this model to explain volcano formation at either a subduction zone or divergent boundary.

The developers drew on research on learning progressions to articulate the constructs to be assessed. The team developed a construct map (a diagram of

___________

21Although the task was designed as part of the evaluation, it is nevertheless an example of a way to assess students’ proficiency with performance expectations like those in the NGSS. The question being addressed in the evaluation was whether the professional development is more effective when the curriculum materials are included than when they are not. Teachers in a “treatment” condition received professional development and materials needed to implement Project-Based Inquiry Science, a comprehensive, 3-year middle school science curriculum. The research team used evidence from the task discussed in this report, in combination with other evidence, to evaluate the integrated program of professional development and curriculum.

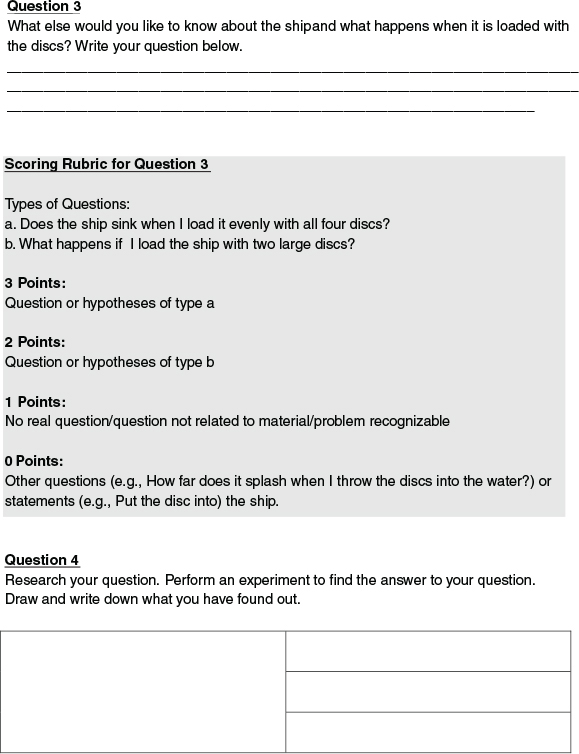

TABLE 5-2 Scoring Rubric for Task on Volcano Formation

| Score Point | Descriptor B | |

| +1 | The explanation states or drawing clearly shows that a volcano forms when magma from the hot spot rises and breaks through the crust. | |

| +1 | The explanation states or drawing clearly shows that the hot spot in the mantle stays in the same place and/or states that the crust/plate moves over it. | |

| 0 | Missing or response that cannot be interpreted. | |

thinking and understanding in a particular area; see Chapter 3) that identified disciplinary core ideas and key science practices targeted in the unit, which was based on research on how students learn about the dynamics of Earth’s interior (Gobert, 2000, 2005; Gobert and Clement, 1999) and on research on learning progressions related to constructing and using models (Schwarz et al., 2009).

The scoring rubric in Table 5-2 shows how the task yields evidence related to the two performance expectations. (The developers noted that the task could also be used to generate evidence of student understanding of the crosscutting concepts of pattern and scale, although that aspect is not covered in this rubric.) The rubric was designed to address both the middle school performance expectations and the range of student responses observed in a field test of the task. Field testing verified that students’ responses could include explanations of the kind the researchers expected (Kennedy, 2012a,b).

Scores on the component sections of the task set were used to produce a single overall score (the individual parts of the item are not independent, so the task does not generate usable subscores). Taken together, the components demonstrate the “completeness” of a student’s skill and knowledge in constructing models to explain how volcanoes form. To earn a top score for parts A and B, students must not only label the key parts of their models (crust, plates, magma, and mantle) and use arrows to show the mechanism involved, but also explain or clearly show how volcanoes form over a hot spot.

Figure 5-5 illustrates two students’ different levels of performance on parts A and B. The drawing on the left received a combined score of 4 points (of a possible 5) for constructing a model because it includes labels for the mantle, magma, crust, volcano, and hot spot. Arrows show the movement of the crust, and the student has written a claim below the drawing: “The hot spot allows magma to move up into the crust where it forms a volcano.” The drawing includes the correct labels, shows some direction in the movement of the crust, and mentions magma moving up and penetrating the crust to form a volcano. However, the student did not write or draw about the plate moving across the hot spot while the hot spot stays in the same place, so the model is incomplete.

FIGURE 5-5 Two student responses to task on volcano formation.

SOURCE: SRI International (2013). Reprinted with permission.

The drawing on the right received only 1 point for parts A and B. It shows a volcano with magma and lava rising up, accompanied by the claim, “The magma pushes through the crust and goes up and erupts.” The drawing does not show anything related to a hot spot, but the student earned partial credit for stating that rising magma pushes up through the crust, causing an eruption.
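The additive scoring described above can be illustrated with a short Python sketch. It is hypothetical and goes beyond what the source specifies: Table 5-2 gives only the two Part B criteria, so the sketch treats Part A (the drawn model) as a single rater-assigned score and assumes it is worth 3 points, a figure inferred only from the stated combined maximum of 5. All names (`Response`, `score_part_b`, `combined_score`) are illustrative, and the Part A scores for the two Figure 5-5 responses are likewise inferred from their reported totals.

```python
from dataclasses import dataclass, field

# Part B criteria, paraphrased from Table 5-2; each one present earns +1.
MAGMA_RISES = "magma from the hot spot rises and breaks through the crust"
PLATE_MOVES = "hot spot stays in place and/or the plate moves over it"
PART_B_CRITERIA = (MAGMA_RISES, PLATE_MOVES)

# ASSUMPTION: Part A (the drawn model) is worth 3 points. The source states only
# that parts A and B together are worth 5; Table 5-2 shows the 2 Part B points.
PART_A_MAX = 3
COMBINED_MAX = PART_A_MAX + len(PART_B_CRITERIA)


@dataclass
class Response:
    """One student's response to parts A and B as judged by a rater (hypothetical)."""
    part_a_score: int                                  # rater's score for the model
    part_b_evidence: set = field(default_factory=set)  # criteria the rater saw


def score_part_b(evidence: set) -> int:
    """+1 per Table 5-2 criterion present; a missing or uninterpretable response scores 0."""
    return sum(1 for criterion in PART_B_CRITERIA if criterion in evidence)


def combined_score(response: Response) -> int:
    """Single overall score for parts A and B, with no separate subscores."""
    if not 0 <= response.part_a_score <= PART_A_MAX:
        raise ValueError(f"Part A score must be between 0 and {PART_A_MAX}")
    return response.part_a_score + score_part_b(response.part_b_evidence)


# The two Figure 5-5 responses (Part A scores inferred from the reported totals).
left = Response(part_a_score=3, part_b_evidence={MAGMA_RISES})
right = Response(part_a_score=0, part_b_evidence={MAGMA_RISES})
print(combined_score(left), "of", COMBINED_MAX)   # 4 of 5
print(combined_score(right), "of", COMBINED_MAX)  # 1 of 5
```

Returning one combined number, rather than separate model and explanation subscores, mirrors the point that the parts of the task are not independent.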

A score on this task contributes one piece of evidence related to the performance expectations. A similar rubric is used to score parts C and D. These scores are combined with those on other tasks, given on other days, to provide evidence of student learning for the entire unit. No attempt is made to generate separate scores for the practice (developing models) and the knowledge because the model is a part of the way students are representing their knowledge in response to the task: these two aspects of practice and knowledge are not separable.

Portfolio of Work Samples and Projects

A third option for classroom-embedded assessments would be for a state or district to provide criteria and specifications for a set of performance tasks to be completed and assembled as work samples at set times during the year. The tasks might include assignments completed during the school day, homework assignments, or both. The state or local school system would determine the scoring rubric and criteria for the work samples. Classroom teachers could be trained to score the samples, or the portfolios could be submitted to the district or state and scored centrally.

An alternative or complement to specifying a set of performance tasks as a work sample would be for a state or district to provide specifications for students to complete one or more projects. This approach is used internationally in Hong Kong; Queensland and Victoria, Australia; New Zealand; and Singapore. In these programs, the work project is a component of the examination system. The projects require students to investigate problems and design solutions, conduct research, analyze data, write extended papers, and deliver oral presentations describing their results. Some tasks also include collaboration among students in both the investigations and the presentations (Darling-Hammond et al., 2013).

Maintaining the Quality of Classroom-Embedded Components

The options described above for classroom administration as part of a monitoring assessment program introduce the possibility of local (district or school) control over certain aspects of the assessments, such as developing the assessments and involving teachers in administering them or scoring the results. For these approaches to work in a monitoring context, procedures are needed to ensure that the assessments are developed, administered, and scored as intended and that they meet high technical standards. If the results are to be used to make comparisons across classrooms, schools, or districts, strategies are also needed to ensure that the assessments are conducted in a standardized way that supports such comparisons. Therefore, the system has to include techniques for standardizing or auditing results across classrooms, schools, and districts, as well as for auditing the quality of locally administered assessments.

Several models suggest possible ways to design quality control measures. One example is Kentucky’s portfolio program for writing, in which the portfolios are used to provide documentation for the state’s program review.22 In Wyoming, starting officially in 2003, a “body of evidence system” was used in place of a more typical end-of-school exit exam. The state articulated design principles for the assessments and allowed districts to create the measures by which students would demonstrate their mastery of graduation requirements. The quality of the district-level assessments was monitored through a peer review process, using reviewers from all of the districts in the state (see National Research Council, 2003, pp. 30-32).

___________

22In Kentucky, the state-mandated program review is a systematic method of analyzing the components of an instructional program. In writing, the portfolios are used, not to generate student scores, but as part of an evaluation of classroom practices. For details, see http://education.ky.gov/curriculum/pgmrev/Pages/default.aspx [November 2013].

Several research programs have explored “teacher moderation” methods. Moderation is a set of processes designed to ensure that assessment results (for the courses that are required for graduation or for any other high-stakes decision) match the requirements of the syllabus. The aim of moderation is comparability: students who take the same subject in different schools or with different teachers, and who attain the same standards through assessment programs on a common syllabus, should be recognized as being at the same level of achievement. This does not imply that two students recognized as being at the same level of achievement have had exactly the same collection of experiences or have achieved equally in any one aspect of the course; rather, it means that they have, on balance, reached the same broad standards.

One example is the Berkeley Evaluation and Assessment Research Center, where moderation is used not only in assessments of student understanding in science and mathematics, but also in the design of curriculum systems, educational programs, and teacher professional development.23 Two international programs that use moderation, the Queensland program and the International Baccalaureate (IB) Program, are described in the rest of this section. The New Zealand Quality Assurance system provides another example.24

Example: Queensland Approach

Queensland uses a system referred to as “externally moderated school-based assessment” for its senior-level subject exams given in grades 11 and 12.25 There are several essential components of the system:

- syllabuses that clearly describe the content and achievement standards,

- contextualized exemplar assessment instruments,

- samples of student work annotated to explain how they represent different standards,

- consensus through teacher discussions on the quality of the assessment instruments and the standards of student work,

- professional development of teachers, and

- an organizational infrastructure encompassing an independent authority to oversee the system.

___________

23For details, see Draney and Wilson (2008), Wilson and Draney (2002), and Hoskens and Wilson (2001).

24For details, see http://www.k12center.org/rsc/pdf/s3_mackrell_%20new_zealand_ncea.pdf [November 2013].

25The description of the system in Queensland is drawn from two documents: School-Based Assessment (Queensland Studies Authority, 2010b) and Developing the Enabling Context for School-Based Assessment in Queensland, Australia (Allen, 2012).

Assessment is determined in the classroom: school assessment programs include opportunities to determine the nature of students’ learning and then to provide appropriate feedback or intervention. This practice is referred to as “authentic pedagogy.” In it, teachers do not teach and then hand over the assessment that “counts” to external experts who judge what the students have learned; rather, authentic pedagogy occurs when the act of teaching involves placing high-stakes judgments in the hands of teachers.

The system requires a partnership between the Queensland Studies Authority (QSA) and the school.

The QSA

- is set up by legislation;

- is independent from the government;

- is funded by government;

- provides students with certification;

- sets the curriculum framework (or syllabus) for each subject within which schools develop their courses of study;

- sets and operates procedures required to ensure sufficient comparability of subject results across the state; and

- designs, develops, and administers a test of generic skills (the Queensland Core Skills Test) with the primary purpose of generating information about groups of students (not individuals).

For each core subject (e.g., English, mathematics, the sciences, history):

- The central authority sets the curriculum framework.

- The school determines the details of the program of study in this subject, including the intended program of assessment (the work program).

- The central authority approves the work program as meeting the requirements of the syllabus, including the assessment that will be used to determine the final result against standards defined in the syllabus.

- The school delivers the work program.

- The school provides to the central authority samples of its decision making about the levels of achievement for each of a small number of students on two occasions during the course (once in year 11 and once in year 12), with additional information, if required, at the end of year 12.

- Through its district and state panels, the central authority reviews the adequacy of the school’s decision making about student levels of achievement on three occasions (once in year 11 and twice in year 12). Such reviews may lead to recommendations to the school for changes in its decisions.

- The central authority certifies students’ achievement in a subject when it is satisfied that the standards required by the syllabus for that subject have been applied by the school to the work of students in that subject.
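The review step in the list above, in which the school submits sample judgments and the panels check them, can be pictured as comparing school-assigned and panel-assigned levels of achievement for the sampled students. The Python sketch below is a hypothetical illustration, not the QSA's procedure: the five-level scale, the `Judgment` record, the `review_sample` function, and the sample data are all assumptions made for the example.

```python
from dataclasses import dataclass

# ASSUMPTION: an ordered five-level achievement scale, used only for illustration.
LEVELS = ("Very Limited", "Limited", "Sound", "High", "Very High")


@dataclass(frozen=True)
class Judgment:
    """A school's and a review panel's level for one sampled student (hypothetical)."""
    student_id: str
    school_level: str
    panel_level: str


def review_sample(sample):
    """Return the agreement rate and the cases a panel might raise with the school."""
    discrepancies = []
    for j in sample:
        # Signed gap on the ordered scale: positive means the school rated higher.
        gap = LEVELS.index(j.school_level) - LEVELS.index(j.panel_level)
        if gap != 0:
            discrepancies.append((j.student_id, gap))
    agreement = 1 - len(discrepancies) / len(sample) if sample else 1.0
    return agreement, discrepancies


# Hypothetical sample submitted by one school.
sample = [
    Judgment("S1", "High", "High"),
    Judgment("S2", "Very High", "High"),
    Judgment("S3", "Sound", "Sound"),
    Judgment("S4", "Limited", "Sound"),
]
rate, flagged = review_sample(sample)
print(f"agreement: {rate:.0%}")  # agreement: 50%
for student, gap in flagged:
    direction = "above" if gap > 0 else "below"
    print(f"{student}: school level {abs(gap)} step(s) {direction} panel")
```

As described in the text, a disagreement leads to a recommendation to the school rather than an automatic change; the sketch only identifies which sampled judgments a panel might raise.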