When Jessica Smay1 took a position teaching geosciences and astronomy at San Jose City College in California in 2006, she was already persuaded of the value of interactive instruction. In her previous teaching job at South Suburban College, a community college in Illinois, she had attended a Center for Astronomy Education workshop and had started implementing ConcepTests. She had also begun to develop lecture tutorials in geosciences with her colleague Karen Kortz and was using them in her classes. With her move to a new community college with a highly diverse student body, Smay wanted to fine-tune some of her teaching strategies.

One approach Smay used to determine how well her students were learning was simply to listen to students’ reasoning as they discussed a clicker question with a partner or worked in small groups on a tutorial. Smay would “walk around the classroom and see how the students were talking about or answering the questions—their thought processes,” she says. If a student seemed confused, “I would say, ‘How did you get this answer?’ and they would talk me through it.” Based on these discussions, Smay realized that in some cases she was expecting her students to “make too big of a leap” in their progression toward more accurate understanding, so she would revise a learning activity to provide students with more scaffolding. Some of the student misconceptions that emerged during these discussions also helped her to design better ConcepTests.

In a student-centered undergraduate classroom, many of the learning activities themselves are a form of assessment that provides instructors with richer information about students’ understanding than they could obtain from traditional assessments and lecture-based instruction. While this is just one of several sources of assessment data in student-centered classes, it can be a valuable one, according to Edward Price,2 a physics professor at California State University San Marcos. “Very early in my experience as an instructor, I would often wonder what students were thinking, what they were getting from what we were doing in class. Other than just the ‘nodding head’ index of how many of them were asleep, you don’t really know in a large lecture class.” When Price began using ConcepTests, he found that the answers students gave to these questions and the discussions they had about them provided him with the immediate feedback he craved.

________________

1 Interview, May 21, 2013.

As you gain experience with research-based instruction, you will likely find that the same traditional tests—whether end-of-chapter textbook quizzes or the questions typically found on midterms or finals—do not adequately measure the kinds of conceptual understanding you want your students to develop. Student-centered approaches to teaching and learning call for different methods of assessment.

Student-centered instruction may also necessitate new ways of using technology. While technologies, ranging from clickers to interactive simulations of scientific processes, open up additional possibilities for instruction, their effectiveness hinges on how well they are implemented and whether they are aligned with sound pedagogy.

This chapter addresses three issues that often arise in the early stages of designing an effective, research-based approach to teaching undergraduate science and engineering.3 The first is the appropriate use of assessment, which is an essential part of research-based instruction. The second is the effective use of technology, which has become commonplace in science and engineering classrooms. A third issue, the redesign of classroom spaces to support active learning, is by no means a prerequisite; many instructors have implemented research-based strategies quite well in whatever learning spaces are available, from large traditional lecture halls to smaller rooms with fixed desks. This issue is included here to show what can be done when changing the learning space is an option.

________________

2 Interview, August 23, 2013.

3 Much of the information in this chapter is drawn from the 2012 National Research Council report on discipline-based education research; see, in particular, Chapter 4 of that report for more information about assessment of conceptual understanding; and Chapter 6 for more about technology and redesign of learning spaces.

Assessment and Course Evaluation

In a research-based science or engineering classroom, assessment serves several critical purposes for both instructors and students that go beyond the need to determine course grades. The most immediate purpose for instructors, and one that will help you with implementing the ideas in this book, is to obtain frequent feedback about what students know, how well they are learning, and where they are having difficulties. You can then use this feedback to modify your teaching to enhance student learning. The literature on assessment often refers to this as formative assessment. Formative assessments also serve an important purpose for students by providing them with information they can use to gauge their own learning and adjust how they study.

Summative assessments, which evaluate students’ performance against a standard or benchmark at the end of a unit, at midterm, or at the end of a semester, continue to have a place in research-based instruction. They can tell you how students have progressed in their learning and can be used to determine students’ grades. In addition, summative assessments can help you evaluate the effectiveness of your course design and determine which aspects to adjust in future iterations of the course.

Roles of assessment in a research-based course

Effective formative assessments conducted during the course of classroom instruction can make students’ thinking “visible” to the instructor and the students themselves, notes the National Research Council (NRC) report Knowing What Students Know: The Science and Design of Educational Assessment (National Research Council, 2001, p. 4). They can reveal students’ preconceptions and help both instructors and students monitor students’ progress from a naïve to a more expert-like understanding. In a research-based classroom, formative assessments are not quizzes that students can ace by memorizing material the night before; rather, they should provide students with opportunities to revise and improve their thinking and help teachers identify problems with learning (National Research Council, 2001).

Rick Moog, a chemistry professor at Franklin & Marshall College, came to understand the various roles of assessment in the 1990s, when he began developing the group learning activities that would later evolve into Process Oriented Guided Inquiry Learning (POGIL).

But How Did You Arrive at That Answer?

ASSESSMENT IN AN ACTIVITY-BASED CLASSROOM

Rick Moog in his POGIL chemistry classroom.

Rick Moog,a the executive director of The POGIL Project (see Chapter 4 for a description), had already begun experimenting with ways to make his lectures more interactive when he attended a workshop on student-centered learning and assessment. After the workshop, he was determined to move away from lecturing in his general chemistry class and have students learn by doing group activities. And this, he realized, would require a different approach to assessment. “Historically, at least in my experience, instruction in science and math has really emphasized being able to generate correct answers to certain kinds of questions,” he says. He attributes this to the nature of exams and the traditional approach to instruction—“which is to tell students what you want them to tell you back.” But in a classroom that stresses process skills, critical thinking, problem solving, group work, and self-assessment, he realized he needed an assessment approach that was compatible with these goals. Moog explains:

Most people, if you provide them simultaneously with formative feedback and evaluative feedback, pretty much ignore the formative feedback and focus only on summative feedback. For example, if a student hands in a lab report and you write all these comments on it about things they need to improve and how they could improve them, and then you write B+ at the top, many students only look at the B+. They either are or are not satisfied, but regardless, they put it in their book bag, and the next lab report you get from them has all the same issues…. If you want your students to actually get better at something, you need to find ways to provide them with constructive formative assessment that is independent of summative assessment.

________________

a Except where noted, the information in this case study comes from an interview with Richard Moog, May 1, 2013.

Moog says this insight changed his approach to assessment. In POGIL classrooms, he explains, how a student arrives at an answer is at least as important as generating the correct answer. In his tests, he includes some traditional questions, such as asking students to calculate the pH of a particular solution. But he also includes questions that ask students to mark whether a particular statement is true or false and explain their reasoning. Students receive no points for a correct true or false answer; instead, credit is based solely on their explanation of their reasoning. In these explanations, students are expected to discuss what information they used to arrive at the answer and how they analyzed the statement. It’s not uncommon, he says, for students to write out their reasoning and then go back and cross out or erase their original true or false answer and replace it with the opposite one.

In addition, Moog notes, the activities that students do in his POGIL classes are themselves a type of formative assessment. He starts each 80-minute class with a 5-minute quiz to check how well students understand the material they studied in the last class, after which he reviews any points that are unclear. Students then work in small groups on a learning activity while the instructor facilitates. To explore the concept of atomic number, for example, students analyze diagrams of atoms that identify the element and show the number and location of the protons, neutrons, and electrons in each atom. Students then answer a series of questions that guide them toward recognizing that all of the atoms with the same number of protons are identified as the same element—and that this number corresponds with the number on the periodic table for that element (Moog et al., 2006). Time is set aside at the end of each activity for students to reflect on and summarize what they have learned. Throughout this process of group work, debate, discussion, and reflection, both the students and the instructor receive feedback that helps them gauge students’ level of understanding.

As the Moog example shows, such research-based activities as group project work and students’ writings and reflections function as formative assessment as well as learning exercises. And as the Smay example at the beginning of the chapter illustrates, instructors can get rapid formative feedback simply by listening to student discussions during group work, such as the Peer Instruction component of a clicker question or a Think-Pair-Share activity done without clickers. With this type of formative feedback, instructors can adjust their teaching on the spot to clarify misunderstandings or address student questions. A study of geosciences classes that used ConcepTests and Peer Instruction to provide immediate feedback found a substantial improvement in students’ scores on the Geoscience Concept Inventory (McConnell et al., 2006). A similar approach that used Peer Instruction with clicker questions in four introductory computer science courses reduced the student failure rate by 61 percent (Porter, Bailey-Lee, and Simon, 2013).

Something as low-tech as a whiteboard can be a useful formative assessment tool, says Eric Brewe,4 a physics instructor at Florida International University (FIU). By walking around and looking at students’ collaborative work on whiteboards, the instructor can quickly see how the groups are doing. “Without even talking to them, you can see what they’re thinking. And sometimes you look at a whiteboard and see you need to step in and guide them in a different direction,” he explains.

The importance of alignment

Various studies and guides to instructional design emphasize the importance of considering assessment in conjunction with content (or curriculum) and instruction (or pedagogy) (see, for example, Streveler, Smith, and Pilotte, 2012; Wiggins and McTighe, 2005). Aligning these three essential components of content, assessment, and instruction can serve as the backbone for designing or redesigning a course. The first step in the alignment process, write Streveler, Smith, and Pilotte, is to develop learning goals for what students should know and be able to do, which they see as “the activity that links content with assessment” (2012, p. 15). The next step, they propose, is to determine what constitutes acceptable evidence that students have met the learning goals—the assessment element—in the form of both summative and formative assessments. The final step is to decide on instructional strategies that will support the kind of learning embodied in the learning goals.

________________

4 Interview, April 16, 2013.

The NRC report Knowing What Students Know recommends that assessment be grounded in a scientifically credible model of how students learn—in particular, how they represent knowledge and develop expertise in a domain, such as a particular science discipline or engineering (National Research Council, 2001). This model can guide the choice of assessment tasks that will prompt students to say, do, or create something that demonstrates important knowledge and skills.

Instructors often include questions on their assessments that elicit the kinds of conceptual understanding and thinking skills they want students to develop in their classes. “If you believe it’s important for students to learn concepts in an introductory course, then you better also test them on that,” says David Sokoloff,5 a physics professor and discipline-based education research (DBER) scholar at the University of Oregon. “You don’t often test them on concepts by giving them problems straight out of the textbook. You have to include conceptual questions on the test you use to grade them.” Students get so accustomed to solving problems by memorizing algorithms that if a professor puts a question on the exam that deviates only slightly from the homework problems, “students will write that you were unfair because the problems on the exam were different,” he notes. Sokoloff uses a combination of open-ended questions and multiple-choice “conceptual evaluations” with several incorrect “distractor” responses based on research about common student misunderstandings.

In his physics classes at FIU, Eric Brewe6 also espouses the principle that “assessment should reflect what you’re teaching.” In addition to including questions about content that prospective physics majors would be expected to learn, he assesses how students use and interpret models or representational tools. And because he teaches an integrated laboratory and lecture course, he also asks exam questions that require students to use some type of lab apparatus.

________________

5 Interview, July 10, 2013.

6 Interview, April 16, 2013.

Methods of assessment in research-based courses

A wide range of assessment methods are compatible with research-based approaches to teaching and learning. You don’t need to design your own assessments; many research-validated instruments or items are readily available through resource centers, universities, professional organizations, and other sources. In deciding which assessment methods to use, you should consider how well the assessment aligns with your learning goals (as discussed above), which specific aspects of learning and teaching you are trying to measure, and how you intend to use the results. Assessment methods will vary depending on purpose.

Because different types of assessment have various strengths and weaknesses, researchers suggest using multiple forms of assessment, rather than relying on a single form, to obtain the richest information about students’ learning (National Research Council, 2001). For example, if you are using a validated multiple-choice instrument to measure conceptual knowledge, you might supplement that with an assessment that requires students to write about and reflect on their learning to probe other aspects of their understanding and to encourage metacognition.

Steven Pollock and his colleagues in the physics education research group at the University of Colorado Boulder have employed different assessment methods at different stages of transforming several upper-division physics courses (Pollock et al., 2011). In the course design stage, they use student observations and surveys, analyses of student work, and interviews with previous instructors of the courses to investigate common student difficulties and determine what to teach. For formative classroom assessments, they use clicker questions and tutorials to pinpoint and address student difficulties. At the end of the course, they use a standard validated post-test and faculty interviews to assess student learning and evaluate the impact of the changes.

The sections that follow describe some of the most common assessment methods used in research-based courses.

Assessments of conceptual understanding

Improving students’ conceptual understanding is a primary goal of research-based practice. Several research-validated tools have been developed to assess students’ initial level of understanding and to measure their learning after instruction. Two common types are concept inventories and ConcepTests.

Concept inventories assess students’ understanding of the core concepts of a discipline and are often administered as pre- and post-tests. Results of these inventories can also be used to compare learning gains across different sections or courses. Typically these inventories use a multiple-choice format, but they differ from traditional multiple-choice tests in that the questions and answers—including the distractor responses—have been developed through research on common student misunderstandings and erroneous ideas.
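The chapter does not prescribe a particular way to compute learning gains from pre- and post-test results. One measure widely used in physics education research is the normalized gain: the improvement a class actually achieved divided by the improvement that was possible. The sketch below illustrates that calculation under stated assumptions; the function name, the section labels, and the scores are all hypothetical and are not data from any study cited here.

```python
def normalized_gain(pre: float, post: float, max_score: float = 100.0) -> float:
    """Fraction of the possible improvement that was actually achieved.

    pre and post are class-average scores on the same inventory,
    expressed on a scale from 0 to max_score.
    """
    if pre >= max_score:
        raise ValueError("pre-test score leaves no room for improvement")
    return (post - pre) / (max_score - pre)


# Hypothetical class averages (pre-test, post-test) for two sections.
sections = {
    "Section A": (40.0, 70.0),
    "Section B": (40.0, 52.0),
}

for name, (pre, post) in sections.items():
    gain = normalized_gain(pre, post)
    print(f"{name}: raw gain = {post - pre:.1f} points, normalized gain = {gain:.2f}")
```

Dividing by the room left for improvement allows sections that start from different pre-test averages to be compared on a common scale. As with any single number, it is best read alongside the other forms of evidence discussed in this chapter.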

The widely used Force Concept Inventory (FCI) in physics is an early example of this type of assessment (Hestenes, Wells, and Swackhamer, 1992). The FCI consists of multiple-choice questions about the concept of force, which is central to understanding Newtonian mechanics, and many of the incorrect responses (distractors) are based on commonsense beliefs about these topics. Concept inventories have also been developed for other science disciplines and for engineering. These inventories can be used in large or small classes and with a range of students, which makes them useful for various types of comparative research.

The inventories vary in terms of their sophistication and validation methods, and the best ones have been validated in many instructional contexts. Like all multiple-choice tests, concept inventories address a relatively coarse level of knowledge and provide no guarantee that a student who answers such a question correctly understands the concept, as noted in Chapter 4 of the 2012 NRC report on DBER. Thus, users should be attentive to the specific purposes for which a particular inventory has been designed and should use the right assessment tool for the job.

ConcepTests, discussed in Chapters 2 and 4, are short formative assessments of a single concept.

Student writing as formative assessment

Many science and engineering instructors use short writing assignments to assess students’ understanding and to develop their metacognitive skills. The reading reflections and the “muddiest points” reflections described in Chapter 3 are two examples. Other possibilities include writing “prompts” that require students to articulate their thinking in depth about a thought-provoking question or apply what they have learned to a real-world situation; and writing “one-minute papers” in which students identify the most important thing they learned and a point that remains unclear to them. These and other types of writing assignments can be done in a few minutes and can reveal different information about student learning than a multiple-choice question would.

Ed Prather7 of the Center for Astronomy Education at the University of Arizona is a proponent of short, in-class writing assignments as a way to intellectually engage students and gather highly discriminating information that instructors can use to revise their teaching. In some cases, he says, instructors who thought their teaching was going well based on student responses to clicker questions are “in shock about how difficult it seems to be for their students to articulate a complete and coherent answer” to a writing prompt on the topic of the clicker question. Here are a few examples of prompts for five-minute writing assignments that Prather uses in his classes and offers in his professional development workshops on interactive learning (Prather, 2010):

- Explain how light from the Sun and light from Earth’s surface interact with the atmosphere to produce the Greenhouse Effect.

- What three science discoveries made during the past 150 years have made the greatest impact on mankind’s prosperity and quality of life? Explain the reasoning for your choices.

- What about the enterprise of science makes it different than business?

________________

7 Interview, April 29, 2013.

Erin Dolan,8 a biology professor at the University of Georgia, often requires students to write about case studies of realistic situations in her biochemistry classes. “If you ask [students] to write something and they have to think about what they’ve written, they often recognize when they don’t understand something,” she explains, and they will ask for help to resolve their misunderstanding. For example, Dolan provides students with data from a case study of a patient with a biochemically based disorder and asks the students to explain in writing why the patient is experiencing particular symptoms, what might be causing them, and how they used the data to reach their conclusion. Her exams include similar types of open-ended questions based on case studies.

The time required to grade writing assignments and essay questions can be a deterrent to using them in large classes. Some instructors have relied on calibrated peer grading as a way to overcome this obstacle, particularly for low-stakes assessments in introductory courses; grading each other’s written responses can also be a learning experience for students (see, for example, Freeman and Parks, 2010).

Assessments of group work

Because students often work in groups in research-based classes, some instructors have incorporated a group dimension into their assessments. These approaches require students to direct their collaborative skills toward an end result that “counts”—just as professionals collaborate on work products—and enable instructors to assess collaborative skills as well as individual knowledge. As students work together on assessment questions or problems, they must defend their reasoning and listen to others, so the assessment itself becomes a group learning activity.

Group assessment can be challenging. Smith (1998) and many others recommend including assessments of individual as well as group performance, even in a cooperative learning environment. In addition, grading assessments on a curve, in which students are graded relative to the performance of others and grades are assigned to fit into a predetermined distribution, does not align well with the spirit of cooperative learning.

Instructors have developed various approaches for combining group and individual forms of assessment in the same course. A popular one is the “two-stage” exam, which students first do individually and then redo collaboratively.

________________

8 Interview, July 2, 2013.

Two-stage exams are relatively easy to implement, can benefit learning, and are consistent with collaborative approaches to learning (Yuretich et al., 2001).

Mark Leckie and Richard Yuretich were early innovators of the two-stage exam approach in their geosciences courses at the University of Massachusetts Amherst (see Box 5.1).
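The scoring rule that Box 5.1 reports for these exams (75 percent individual, 25 percent group, with the group part dropped when it would lower a student's grade) can be expressed as a short calculation. The following is a minimal sketch, not the instructors' own grading procedure: the function name and the example scores are hypothetical, and it assumes that "not counted" means the grade falls back to the individual score alone.

```python
def two_stage_score(individual: float, group: float,
                    individual_weight: float = 0.75) -> float:
    """Combine the individual and group parts of a two-stage exam.

    Both scores are on the same scale (for example, 0 to 100).
    """
    combined = individual_weight * individual + (1 - individual_weight) * group
    # Assumption: if the group part would lower the grade, it is ignored
    # and the individual score stands on its own.
    return max(individual, combined)


print(two_stage_score(80, 96))   # group part raises the grade
print(two_stage_score(90, 70))   # group part would lower the grade, so it is dropped
print(two_stage_score(40, 100))  # a perfect group score cannot rescue a weak individual part
```

Under this rule the group phase can only help, which is consistent with the "win-win" design described in the box, while the 75 percent weight keeps most of the grade tied to individual preparation.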

BOX 5.1 TWO-STAGE EXAMS—ASSESSMENTS THAT PRODUCE LEARNING

How does an instructor balance the need for individually graded performance with the philosophy of cooperative learning? Geosciences professors Mark Leckie and Richard Yureticha at UMass Amherst have come up with a method that Leckie describes as “very, very successful” and Yuretich calls the “number one” most effective piece of their course. Since the late 1990s, the instructors, who teach an oceanography course built on findings from research, have administered two-stage exams (Yuretich et al., 2001). These exams, which consist of about 25 multiple-choice questions, are given after each unit, or five times during the semester, in addition to a comprehensive final exam. (See Chapter 1 for a description of Yuretich’s approach to pedagogy.)

The procedure goes like this: The students take the exam twice during a 75-minute class. The first time they take it individually. Then they turn in their answer sheets but keep the exam itself. The students are issued new answer sheets, and they retake the same exam “open book, open notes, and talking with their neighbors,” says Leckie. “The whole room erupts in conversation. It’s really kind of satisfying to watch them engage with each other. If you think about it, it becomes an active learning environment where they’re discussing and debating and trying to convince each other of what the right answer is.” While the few students who might be reluctant to “give away” their answers to others are not required to talk during this phase, most students welcome the opportunity. “I’ll never forget the first time we did it; people were exiting the room and thanking you for an exam,” says Leckie.

Seventy-five percent of a student’s grade is based on the individual part of the exam, and 25 percent on the group part. To encourage collaboration and open discussion during the group phase of the exam, a score on the group part is not counted if it lowers a student’s grade (Yuretich et al., 2001). The scoring is designed to be a “win-win,” Leckie explains. Typically, the group score bumps up a student’s grade a bit. “People learn that they can’t not study for a test and take it the second time and get 100 percent,” says Leckie.

By engaging students in active learning and critical thinking during the exam, the cooperative format has “increased the value of the exams as a learning experience,” according to a study by Yuretich and his colleagues (2001, p. 115). This study included comments from students to illustrate how students prepare for the exam and negotiate their answers during the group part (p. 116):

- “In one case, studying involved simulating the group process of the exam in the home: ‘I study with … five other people … we get in a big group and discuss it because we’re going to be doing that in class anyway, and I benefit from that.’”

- “Students stated that they will change their answer on the group part of the exam ‘usually because of peer pressure, but sometimes someone will give an explanation that sounds correct since they back it up with a scientific explanation.’”

- “one student’s group ‘[went] over each question, and it’s never just the answer is A, it’s always: well, no, I disagree, why is it A?’”

When Leckie and Yuretich give presentations around the country, they realize how the idea of a cooperative exam has caught on elsewhere. “I’ve always been interested to hear a junior faculty member describing for me this really fascinating two-stage exam she’s tried,” says Leckie. “It’s fun to hear it come back to you.”

________________

a Except where noted, the information in this case study comes from interviews with Mark Leckie, March 22, 2013, and Richard Yuretich, April 4, 2013.

Other assessment methods

Many other assessment techniques are compatible with research-based approaches to teaching and learning. The examples that follow illustrate just some of the possibilities.

In her biology classes at Michigan State University, Diane Ebert-May9 bases her exam questions on daily learning objectives. The exams are aligned with the types of activities students do in class, such as analyzing real data, developing models, and structuring arguments based on evidence. One question intended to assess students’ understanding of the dynamics of the carbon cycle—the sequence of events by which carbon is exchanged among Earth’s biosphere, geosphere, hydrosphere, and atmosphere—presents students with the following scenario (Ebert-May, Batzli, and Lim, 2003):

Grandma Johnson had very sentimental feelings toward Johnson Canyon, Utah, where she and her late husband had honeymooned long ago. Because of these feelings, when she died she requested to be buried under a creosote bush in the canyon. Describe below the path of a carbon atom from Grandma Johnson’s remains, to inside the leg muscle of a coyote. Be as detailed as you can be about the various molecular forms that the carbon atom might be in as it travels from Grandma Johnson to the coyote. NOTE: The coyote does not dig up and consume any part of Grandma Johnson’s remains.

To answer the question correctly, students must “trace carbon from organic sources in Grandma Johnson, through cellular respiration by decomposers and into the atmosphere as carbon dioxide, into plants via photosynthesis and biosynthesis, to herbivores via digestion and biosynthesis that eat the plants, and finally to the coyote, which consumes an herbivore” (D’Avanzo et al., n.d.). The question is also useful for identifying students’ misconceptions about carbon cycling in an ecosphere.

________________

9 Interview, April 17, 2013.

Robin Wright10 and her University of Minnesota colleagues who teach active learning biology courses based on the Student-Centered Active Learning Environment with Upside-down Pedagogies (SCALE-UP) model (see Chapter 4) “try in every way to represent the authentic work of the discipline” in their classes, and this extends to assessment. The “culminating experience” for each class, says Wright, is a poster session in which students present and discuss their team projects, which they have worked on for several weeks. In this type of authentic assessment, students demonstrate their mastery of essential knowledge and skills by performing a task of the sort that scientists do in their professional life. Students in Wright’s active learning courses also do take-home exams that consist of just a few essay questions. Students can raise their grade on these exams by doing a reflection piece in which they analyze their strengths and weaknesses, consider the sources of information they used to answer questions, and develop a strategy for improvement.

In chemistry, researchers have developed a Metacognitive Activities Inventory (MCAI) to assess students’ use of metacognitive strategies in problem solving (Sandi-Urena et al., 2011). Data from this type of assessment can be used to determine interventions that are attuned to students’ metacognitive level.

________________

10 Interview, April 12, 2013.

In addition to assessing academic learning and cognitive processes, some instructors also assess the affective domain—students’ attitudes, beliefs, and expectations—which can influence their motivation to study science or engineering and their performance in these disciplines. An awareness of these characteristics can help instructors adjust their teaching to improve student learning, reduce attrition, and keep students in the science, technology, engineering, and mathematics (STEM) pipeline (McConnell and van der Hoeven Kraft, 2011). Several validated instruments are available to measure aspects of the affective domain.

Kaatje Kraft,11 formerly a geology instructor at Arizona’s Mesa Community College who currently teaches at Whatcom Community College in Washington, is one of more than a dozen instructors across the country who are participating in the Geoscience Affective Research NETwork (GARNET), a National Science Foundation (NSF)-funded effort to study the attitudes and motivation of students in introductory geology classes as a way to improve their learning. Kraft shares individualized pre- and post-responses from the GARNET assessments with her students and encourages them to use these data to reflect on how their motivation, self-regulation, and related characteristics affect their learning.

You should not feel limited by these examples. Many science and engineering instructors have adapted ideas from the general literature on assessment, such as the numerous techniques for classroom formative assessment suggested by Angelo and Cross (1993) and the general principles articulated in Knowing What Students Know (National Research Council, 2001). Additional assessment ideas are available in the 2012 NRC report on DBER and from professional networks and curriculum websites, such as On the Cutting Edge. The most important considerations are to choose assessments that are aligned with learning objectives and that engage authentic scientific or engineering thinking.

Evaluating the impact of instructional changes

The main reason for adopting research-based instructional practices is to improve students’ learning and academic success. To determine whether you are meeting this goal, you need to assess the impact of any changes you are making, just as you would in a research study in your discipline. As discussed in Chapter 2, evaluating your teaching does not require you to do formal DBER studies, but it does mean analyzing some type of assessment data over time.

________________

11 Interview, June 13, 2013.

“You need to know what the results of your current practices are in terms of student learning, and you need to be able to compare them with what your reformed practices are. That was crucial to us. Otherwise it’s all anecdote.”

—John Belcher,

Massachusetts Institute of Technology

This type of evaluation of your courses can serve several purposes. It can indicate how well the reforms you have undertaken are working and reveal areas for future adjustments. It can help convince your department head and colleagues that your approaches are more effective than traditional instruction. It can provide evidence you can share with your students to explain why you are asking them to do certain things and how they are benefiting. And perhaps most importantly, it can convince you that the effort you’ve invested in reforming your teaching is worthwhile.

When John Belcher12 began the Technology-Enabled Active Learning (TEAL) project in his physics classes at the Massachusetts Institute of Technology (MIT), he had already seen earlier teaching innovations come and go—in some cases because they lacked sufficient evidence to demonstrate their effectiveness to colleagues and to students who complained about the changes. Belcher recognized that having good assessment data could help increase the staying power of an innovation like TEAL, which uses media-rich technology to help students visualize and hypothesize about conceptual models of electromagnetic phenomena (Dori and Belcher, 2005). Students in TEAL classes conduct desktop experiments and engage in other types of active learning in a specially designed studio space.

As part of the TEAL project, which began in 2000, Belcher and his colleague Yehudit Judy Dori, who was then on sabbatical from Technion–Israel Institute of Technology, developed pre- and post-tests to measure students’ conceptual understanding and determine the effectiveness of the visualizations and experiments. The TEAL students showed significantly higher gains in conceptual understanding of the subject matter than their peers in a control group (Dori and Belcher, 2005).

________________

12 Interview, July 9, 2013.

These assessment data were instrumental to TEAL’s survival, says Belcher. When TEAL first made the transition in 2003 from a small-scale pilot to a large-scale project affecting nearly 600 introductory students per semester, the shift caused some organizational upheaval, and additional faculty had to be trained. “We got a lot of pushback from students,” says Belcher. “If I hadn’t had a lot of quantitative numbers in terms of assessment, I think we would have died that first year. Someone would say, ‘They don’t like it,’ and I would say, ‘I know they don’t like it, but they’re learning twice as much.’ And I had reasonable proof that this was the case; it wasn’t just my anecdotal feeling. I think that carried us through the first couple of years.”

Belcher advises other practitioners who are undertaking major revisions in teaching to emphasize assessment in the early years. “You need to know what the results of your current practices are in terms of student learning, and you need to be able to compare them with what your reformed practices are. That was crucial to us. Otherwise it’s all anecdote—it would be my anecdote against other people’s anecdote[s].”

Many of the assessments described earlier in this chapter can be used to evaluate the impact of instruction as well as to assess individual students’ learning. For example, an instructor might compare the post-tests of a group of students taught through a research-based approach with a control group of traditionally taught students.
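As a rough illustration of what such a comparison might look like, the sketch below computes two common summary statistics for pre- and post-test data: a class-average normalized gain (the fraction of the possible improvement that a class achieved) and a standardized mean difference between the post-test scores of two groups. The scores are invented for illustration, the function names are hypothetical, and this is not necessarily the analysis used in any study cited in this chapter. A real evaluation would also need to check that the two groups were comparable to begin with.

```python
from statistics import mean, stdev


def normalized_gain(pre, post, max_score=100.0):
    """Class-average normalized gain: (mean post - mean pre) / (max - mean pre)."""
    pre_avg, post_avg = mean(pre), mean(post)
    return (post_avg - pre_avg) / (max_score - pre_avg)


def cohens_d(group_a, group_b):
    """Standardized mean difference between two groups, using a pooled standard deviation."""
    n_a, n_b = len(group_a), len(group_b)
    pooled_var = (
        (n_a - 1) * stdev(group_a) ** 2 + (n_b - 1) * stdev(group_b) ** 2
    ) / (n_a + n_b - 2)
    return (mean(group_a) - mean(group_b)) / pooled_var ** 0.5


# Hypothetical percent-correct scores, invented purely for illustration.
reformed_pre = [30, 40, 35, 45, 50]
reformed_post = [70, 75, 65, 80, 85]
control_pre = [32, 38, 36, 44, 50]
control_post = [50, 55, 48, 60, 62]

gain_reformed = normalized_gain(reformed_pre, reformed_post)
gain_control = normalized_gain(control_pre, control_post)
effect = cohens_d(reformed_post, control_post)

print(f"Normalized gain, reformed section: {gain_reformed:.2f}")
print(f"Normalized gain, control section:  {gain_control:.2f}")
print(f"Effect size on post-test (Cohen's d): {effect:.2f}")
```

With only a handful of students per group, numbers like these are suggestive at best. The point of the sketch is that even simple, consistently collected data can replace anecdote with a comparison.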

Finally, as the 2012 NRC report on DBER emphasizes, DBER is a young and growing field. Some instructors who begin by assessing the impact of instructional reforms in their own classrooms may find this a stimulating area of scholarship and may choose to develop their expertise by not only using DBER, but also conducting DBER. Those who are so inclined will find many opportunities for contributions from new scholars.

Technologies for teaching and learning have particular relevance to science and engineering education. Becoming adept at using the technological tools of a discipline is part of the practice of science and engineering. In addition, technologies for learning, when used well, can advance research-based instruction through their capacity to engage students, facilitate interaction, and enable students to use hands-on approaches to explore scientific phenomena. Many technological tools have been expressly developed or adapted to suit the needs of research-based instruction. These range from the relatively simple, like clickers, to the more sophisticated, like interactive computer-based simulations.

The 2012 NRC report on DBER concludes that technologies for learning hold promise for improving undergraduate science and engineering but with this important proviso:

Research on the use of various learning technologies suggests that technology can enhance students’ learning, retention of knowledge, and attitudes about science learning. However, the presence of learning technologies alone does not improve outcomes. Instead, those outcomes appear to depend on how the technology is used. (p. 137)

Findings from research and advice from experienced practitioners about effective and ineffective uses of technologies can guide instructors’ decisions about when and how to use these tools.

Learning goals drive technology choices

Before deciding whether and how to use technology in a course and which technologies to use, you need a clear set of learning goals and good assessments. You can then consider how technology can assist in meeting these goals and measuring students’ progress. This point became clear to Edward Price, a physics professor at California State University San Marcos (CSUSM), as he experimented with a variety of technologies for teaching and learning physics.

“Don’t Erase That Whiteboard” and Other Lessons from Teaching with Technology

Clickers, whiteboards, videos, simulations, tablet PCs, and photo-sharing websites—Edward Pricea has tried these technologies and others in his physics classes at CSUSM. In the process, he has come to understand how various technologies affect classroom interactions and which tools work best with his teaching approach and student learning objectives.

“You have to have your own pedagogical goals and other goals for engaging the students, and that should drive your use of technology; it shouldn’t be the other way around,” says Price. “If you have some cool, neat toy it has to serve some purpose.”

With this basic principle in mind, Price has changed how he uses technology over time. Price and several colleagues have developed a research-based, active learning curriculum called Learning Physics for larger enrollment classes taught in lecture halls (Price et al., 2013a). The curriculum incorporates videos of experiments and hands-on activities that students can perform in groups on small, lecture-hall desks. The pedagogy is designed to enable students to develop a deep understanding of such concepts as the conservation of energy and Newton’s laws, as well as an understanding of important aspects of scientific thinking and the nature of science.

An earlier version of this curriculum used videos of experiments shot on the CSUSM campus but did not include a hands-on component. In many ways, the videos were successful, says Price. They took less time than hands-on experiments and they had some powerful features, such as allowing instructors to put two processes side by side to facilitate direct comparisons or to use a time-lapse feature for processes that took a long time. “But at the end of the day, doing experiments is an important practice in science. We wanted students to have an opportunity to do that for themselves,” he says.

In later versions of the curriculum, students watch videos of experiments for about half of the class period and do hands-on experiments for the other half. Videos are used for experiments that are not practical to do in a large class, such as those that require expensive or complicated equipment or take up too much time or desk space. Hands-on experiments are used to teach concepts that students can study with inexpensive materials in a reasonable time and to give them practice in interpreting observations.

Students conduct the experiments, which take from 5 to 15 minutes, in groups of three or four. For example, as part of a unit on magnetism, students float various materials on a styrofoam disk in a bowl of water and see if a magnet reacts to them and whether the interactions are different for a rubbed and an unrubbed magnet. To aid students in setting up the experiments properly without wasting time, the instructors provide short videos or photos of what the setup should look like.

Clickers also play a role in Price’s classes. After students have watched a video or a simulation, they discuss the outcome with a neighbor, and then they vote on their conclusions with clickers. When students conduct an experiment, they answer clicker questions as a way to establish a consensus about their results. “That kind of mirrors how things work in science, where you establish a consensus,” says Price. “Students appreciate that because they have some uncertainty about whether the outcome observed is the one we intended them to see. The whole-class vote and discussion gives them a lot more confidence in doing the experiments.”

________________

a Except where noted, the information in this case study comes from an interview with Edward Price, August 23, 2013.

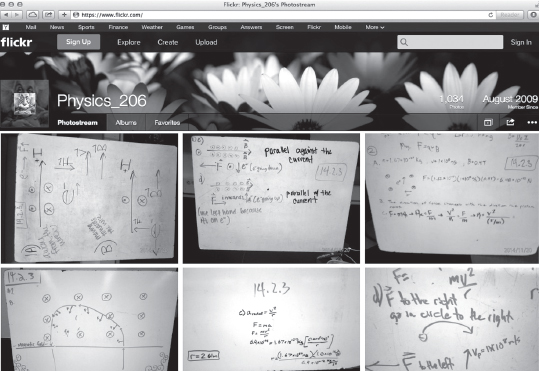

Students in Price’s courses often use whiteboards to brainstorm, solve problems, and present results to the rest of the class (Price et al., 2011). The low-tech whiteboard has several features that make it a suitable collaborative workspace. “It’s big, it’s very approachable, it’s easy to start with—I joke that the whiteboard always boots up,” says Price. The work that students display on whiteboards is a valuable record of their thinking, but this record is lost when the whiteboard is erased. Price and his colleagues hit upon a technological solution to this problem, which they tried out in an introductory physics course for biology majors. Using wireless-enabled digital cameras, they created an archive of students’ work on the photo-sharing website Flickr.com. With Flickr, images can be organized into hierarchical collections that match the course structure. Flickr also supports tags and comments, which enable students, instructors, or learning assistants to discuss or ask questions about an image (Price et al., 2011).

“The availability of the photo archive changed the class culture in an unintended but productive way,” write the researchers (Price et al., 2011, p. 427). “Before Flickr was used, whiteboards were seldom corrected during the class discussions. Errors were pointed out, but there was typically no reason to mark them, especially since the whiteboards would be erased when the class moved on to a new topic.” After the whiteboards were archived on Flickr, however, students began correcting their whiteboards in class so that the photo would preserve an accurate solution. Whether this behavior stemmed from a desire to have a useful and clear photo archive or from an effort to avoid embarrassment, the act of photographing the whiteboards “initiated a final round of instructor feedback and student revision that presumably helps students consolidate their understandings.” This process of students correcting their solutions is another example of how technology can restructure a classroom.

In some of their courses, Price and his colleagues have used tablet computers and Ubiquitous Presenter software as an alternative to whiteboards (Price et al., 2011). With these technologies, students can write on the tablet screen as they would on a whiteboard and then send their work to the instructor, who can preview, project, and annotate submissions from any of the groups in the class. The instructor can also create and write on lecture slides. The student submissions, instructor slides, and annotations are automatically archived and can be reviewed on a website. Students have made extensive use of this Web archive, and in an end-of-semester survey, 75 percent of students reported that access to other students’ work was useful or very useful (Price et al., 2011). The instructors note, however, that these benefits must be weighed against the expense and technological complexity of the approach and the small size of the tablet screen compared with a whiteboard.

Example of Flickr whiteboard photo archive.

Learning is a social process, says Price, and introducing new technology or changing the technology can impact that social process. The experience with the photo archive of students’ whiteboard work illustrates this. He suggests that instructors think beyond what a particular technology enables them to do and also consider “How is it going to restructure the roles of the different people in the classroom? How is it going to change the social interactions?”

In Price’s classroom, one technology that has transformed the students’ and instructor’s roles is Calibrated Peer Review (CPR), a Web-based tool developed at the University of California, Los Angeles, that enables students to learn and apply a structured process for evaluating their own and their peers’ written work. In a class of 100 students, it is difficult for the instructor to read and grade writing assignments with any frequency. But with CPR, Price can assign homework in which students must write explanations of the scientific phenomena they have studied in class. Students write a response to a prompt in the CPR system. Then they use instructor-prepared questions to evaluate sample responses and receive feedback on their evaluations, which allows them to “calibrate” their evaluation skills. Students then score their peers’ work. “As the instructor, my role shifts to developing these materials but with less of an emphasis on grading them,” he explains. “The students’ role changes dramatically. They’re put in the position of having to become expert enough to evaluate other people’s work. It’s a really different kind of expectation.” Price and his colleagues have independently analyzed the validity of the CPR tool as used with the Learning Physics curriculum and found that students’ scores for their peers’ essays correlate very closely with the instructors’ own expert evaluations of the same work (Price et al., 2013b).
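One simple way to check whether peer scores track expert scores in your own course is to compute a correlation between the two sets of scores for the same essays. The sketch below does this with a Pearson correlation coefficient. The scores are invented, the function name is hypothetical, and this is not a description of how Price and colleagues carried out their analysis or of how CPR computes anything internally.

```python
from statistics import mean


def pearson_r(xs, ys):
    """Pearson correlation coefficient between two equal-length lists of scores."""
    if len(xs) != len(ys) or len(xs) < 2:
        raise ValueError("need two lists of equal length with at least two scores")
    mean_x, mean_y = mean(xs), mean(ys)
    covariation = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    spread_x = sum((x - mean_x) ** 2 for x in xs) ** 0.5
    spread_y = sum((y - mean_y) ** 2 for y in ys) ** 0.5
    if spread_x == 0 or spread_y == 0:
        raise ValueError("correlation is undefined when all scores in a list are equal")
    return covariation / (spread_x * spread_y)


# Hypothetical scores for the same six essays, invented for illustration.
peer_scores = [6.0, 7.5, 8.0, 5.5, 9.0, 7.0]
instructor_scores = [6.5, 7.0, 8.5, 5.0, 9.0, 7.5]

r = pearson_r(peer_scores, instructor_scores)
print(f"Correlation between peer and instructor scores: {r:.2f}")
```

A value near 1 would suggest that students rank essays much as the instructor does. It would not by itself show that the two sets of scores agree in absolute terms, so a comparison of average scores is a useful companion check.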

According to an evaluation by Price and colleagues (2013a), students taught with the Learning Physics curriculum learned significant physics content and developed more expert-like views about science and learning science. Their performance on a conceptual content assessment was similar to that of comparison students taught with an inquiry-based curriculum developed for smaller enrollment classes that met for more hours per week. Moreover, Learning Physics students outperformed the comparison group on an end-of-semester written explanation.

If you want to gradually integrate learning technologies into your courses, you might take some of these tips from Price’s experience:

- Basic technologies like clickers—or even a whiteboard—have a place in a research-based, high-tech classroom.

- Just because a technology is new or visually impressive does not mean it will serve the purpose of increasing student interaction.

- Some technologies may prove to be more successful than others in achieving your teaching and learning goals. If a certain technology turns out to be less effective than you hoped, you can abandon it, revise its application, or try something else.

- Technologies can change how students interact with each other and with the content of your course.

- After you gain experience with various technologies, you may see possibilities for adapting new technologies to meet specific learning needs in your course.

- You need to weigh the costs of a technology against its benefits for student learning. Technologies that are expensive, time-consuming, or complicated to use may not be worth the cost or effort.

Clickers

Using clicker questions is often the first step that instructors take toward a more interactive style of teaching. But “a clicker is a technology, not a pedagogy,” as pointed out by Alex Rudolph,13 a physics and astronomy professor at California State Polytechnic University, Pomona, and an experienced clicker user.

Clickers in and of themselves do not transform learning. Their efficacy depends on how they are used. Many instructors who maintain that they are implementing research-based strategies because they have integrated some clicker questions into their lectures are omitting important elements, such as peer discussion or sufficiently challenging questions.

When used properly, clickers have certain advantages. They make it possible for instructors to obtain rapid feedback for themselves and their students. They allow instructors to collect an answer to a question from every student and hold each student accountable for participating. They can tell instructors when students are disengaged or confused and why this has happened, and can help instructors immediately fix the situation. Good clicker questions can generate more discussion and questions from a much wider range of students than occur in a traditional lecture. When clickers are implemented correctly, students are more engaged and learn much more of the content covered. Students will overwhelmingly support their use and say they help their learning (Wieman et al., 2008).

________________

13 Interview, August 20, 2013.

“The most compelling evidence on clicker use shows that learning gains are associated only with applications that challenge students conceptually and incorporate socially mediated learning techniques,” concludes the 2012 NRC report on DBER (p. 124). Examples include posing formative assessment questions at higher cognitive levels and having students discuss their responses in groups before the correct answer is revealed.

A guide on effective clicker use developed by the Science Education Initiative at the University of Colorado Boulder and the University of British Columbia contains numerous suggestions, including the advice paraphrased below (Wieman et al., 2008, p. 2).

- Have a clear idea of the goals to be achieved with clickers, and design questions to improve student engagement and interactions with each other and the instructor.

- Focus questions on particularly important concepts. Use questions that have multiple plausible answers and will reveal student confusion and generate spirited discussion.

- Take care that clicker questions are not too easy. Students learn more from challenging questions and often learn the most from questions they get wrong.

- Give students time to think about the clicker question on their own and then discuss with their peers.

- Listen in on the student discussions about clicker questions in order to understand how students think, and address student misconceptions on the spot.

Jacob Smith,14 a student at Cal Poly Pomona, was exposed to clickers for the first time in Rudolph’s physics class. The clicker questions and ensuing discussions “had the whole class engaged,” he says. “I learned different ways [that] people’s thought processes worked—how they would develop their idea and why they believed it was correct.” Formulating an explanation to convince another student who disagreed with him “helped me engage my mind to better grasp each concept,” he adds.

________________

14 Interview, August 23, 2013.

Research by Michelle Smith and colleagues (2009) has found that the peer discussion process enhances students’ understanding even when none of the students in a group initially knows the correct answer. A representative comment from a student in the study by Smith and colleagues clarifies how this works: “Often when talking through the questions, the group can figure out the questions without originally knowing the answer, and the answer almost always sticks better that way because we talked through it instead of just hearing the answer.” In addition, the authors note, students develop communication and metacognitive skills when they have to explain their reasoning to a peer.

Conversations around clicker questions can cause students to confront their misconceptions and realize they have to adjust their thinking, says Derek Bruff,15 director of the Center for Teaching at Vanderbilt University. “That idea of collective cognitive dissonance is exciting,” he adds. A common faculty error is to use clicker questions that are too easy, he points out; all that does is “ensure at some minimal level the students were awake.” Another common failing occurs when a large percentage of students answer incorrectly and the instructor quickly gives the correct answer and moves on; a better approach is to give students time to discuss and rethink their answers, and then to follow up with additional clarifications if necessary.

Simulations, animations, and interactive demonstrations

Other popular learning technologies used in science and engineering courses include simulations, animations, and interactive demonstrations. These tools can help students visualize, represent, and understand scientific phenomena or engineering design problems. While more research is needed on their educational efficacy and the conditions under which they are effective, expert practitioners around the country have studied and used these tools and found them to be valuable teaching and learning aids.

________________

15 Interview, April 29, 2013.

One research-validated example of this type of tool is the suite of Interactive Lecture Demonstrations developed by David Sokoloff and Ron Thornton (discussed in Chapter 3). Another example is the collection of PhET (Physics Education Technology) simulations developed at the University of Colorado Boulder (see Box 5.2).

In chemistry, Michael Abraham and John Gelder (n.d.) at the University of Oklahoma have developed interactive Web-based simulations of molecular structures and processes, along with materials for using these simulations as part of an inquiry-based instructional approach. For example, students can use a Web-based simulation of Boyle’s law to observe the activity of molecules in a gas sample and collect data and answer questions about the relationship between gas pressure and volume. Abraham, Varghese, and Tang (2010) have also studied the influence of animated and static visualizations on conceptual understanding and found that two- and three-dimensional animations of molecular structures and processes appear to improve student learning of stereochemistry, which concerns the spatial arrangement of atoms within molecules.

Numerous other simulations, animations, demonstrations, and videos are available for use in research-based science and engineering classrooms. Here are some issues to consider when using these types of tools:

- Interactive simulations are flexible enough to be used in a variety of class settings, but they are most effective when they are used in ways that encourage students to predict and discuss possible outcomes and propose “what if” scenarios, or that allow students to explore the simulation for themselves.

- Simulations that are interactive are particularly well suited to research-based instruction because they enable students to develop and test their conceptual understanding by changing different variables.

- Well-designed simulations have some advantages over real-life experiments because they can be designed to help students see a phenomenon in the way that experts do. They can also require less class time, fewer materials, and less space to carry out.

- Simulations and interactive demonstrations can be ineffective for learning if an instructor’s demonstration omits opportunities for student predictions, discussion, and suggestions, or if an instructor discourages student exploration by being too prescriptive about what to do.

BOX 5.2 STUDENTS BUILD CONCEPTUAL UNDERSTANDING WITH A “SANDBOX” OF COMPUTER-BASED SIMULATIONS

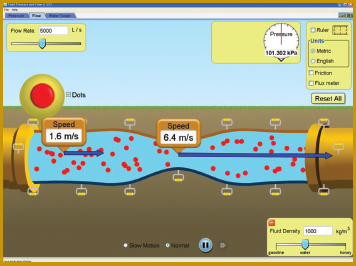

A student scrutinizes an image on a computer screen that simulates fluid running through a pipe. What will happen to the velocity of the fluid if a section of the pipe is constricted from 2 meters to 1 meter? Using the mouse, the student narrows a section of the pipe and sees that the velocity of the flow increases through the narrower passage. She calculates the change in pressure between the 2-meter and the 1-meter diameter and writes down the answer. Now, what will happen if the density of the fluid is changed from that of water to that of honey?a

PhET simulation.

This is one of dozens of PhET simulations developed at Colorado. These interactive simulations enable students to make connections between real-life physical phenomena and the underlying scientific concepts. The PhET simulations are based on research findings about how students learn in science disciplines and have been extensively tested and evaluated for educational effectiveness, usability, and student engagement (University of Colorado Boulder, 2013).
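The pipe scenario that opens this box can also be worked through numerically. The sketch below assumes ideal, incompressible flow in a horizontal pipe, so that the continuity equation and Bernoulli’s equation apply. The inlet velocity and the density of honey are assumed values chosen for illustration, and the function names are hypothetical. This is not the model used inside the PhET simulation, and because real honey is highly viscous, the ideal-flow result for honey is only a crude first estimate.

```python
import math


def pipe_area(diameter_m):
    """Cross-sectional area of a circular pipe, in square meters."""
    return math.pi * (diameter_m / 2) ** 2


def constricted_velocity(v_wide, d_wide, d_narrow):
    """Continuity for incompressible flow: A_wide * v_wide = A_narrow * v_narrow."""
    return v_wide * pipe_area(d_wide) / pipe_area(d_narrow)


def pressure_drop(density, v_wide, v_narrow):
    """Bernoulli for a horizontal pipe: P_wide - P_narrow = 0.5 * rho * (v_narrow^2 - v_wide^2)."""
    return 0.5 * density * (v_narrow ** 2 - v_wide ** 2)


WATER_DENSITY = 1000.0  # kg/m^3
HONEY_DENSITY = 1420.0  # kg/m^3, approximate value assumed for illustration

v_wide = 1.0  # m/s in the 2-meter section (assumed inlet velocity)
v_narrow = constricted_velocity(v_wide, d_wide=2.0, d_narrow=1.0)

drop_water = pressure_drop(WATER_DENSITY, v_wide, v_narrow)
drop_honey = pressure_drop(HONEY_DENSITY, v_wide, v_narrow)

print(f"Velocity in the 1-meter section: {v_narrow:.1f} m/s")
print(f"Pressure drop for water: {drop_water:.0f} Pa")
print(f"Pressure drop for honey (ideal-flow estimate): {drop_honey:.0f} Pa")
```

Under these assumptions, halving the diameter cuts the area to one quarter, so the velocity in the narrow section does not depend on the fluid’s density, while the pressure drop scales in proportion to it.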

The first group of simulations was developed for physics, but simulations have since been added in other science disciplines and for the elementary through undergraduate levels. As of early 2014, 128 simulations were available for free on the Web.

“An important goal for these simulations is to help students connect the science to the world around them. We try to embed it in the context of something familiar,” says Kathy Perkins,b a Colorado physics professor who directs the PhET project. “Students can get engaged in scientist-like explorations to discover and build up the main concepts behind a particular topic.”

The PhET simulations include appealing graphics, and students can manipulate certain variables with the easy-to-use controls. The simulations encourage students to explore quantitative changes through measurement instruments such as rulers, stop-watches, voltmeters, and thermometers. “As the students work with the simulation, they can be asking their own questions, and the simulation will help them answer those questions by what it shows,” Perkins explains.

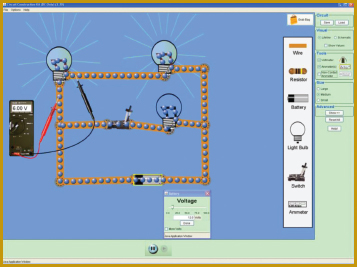

Another simulation allows users to build circuits involving lifelike resistors, lightbulbs, wires, batteries, and switches.c They can measure voltages and currents with realistic meters and see lightbulbs lighting up. Unlike real-life experiments, the PhET simulations show visual representations of the models that experts use to understand phenomena that are invisible to the naked eye—in this case, the flow of electrons around the circuit and how their velocity changes in response to changes in the circuit (Wieman and Perkins, 2005). One of the common student misconceptions about circuits is that “students think that current is used up in the circuit—that it starts out large and gets used up, and there’s zero current at the end,” Perkins notes. “But showing the electrons flowing around helps to mitigate that difficulty because they can see that the current isn’t changing; they see the electrons are not slowing down, they are not losing them.”

________________

a See http://phet.colorado.edu/en/simulation/fluid-pressure-and-flow.

b Interview, June 18, 2013.

c See http://phet.colorado.edu/en/simulation/circuit-construction-kit-dc.

Circuit simulation.

This ability to replicate expert-like perception is one of the advantages that a carefully designed computer simulation has over a real-life experiment, write Wieman and Perkins (2005). In a simulation, certain important features of a scientific phenomenon can be enhanced while peripheral features that would distract students in a real experiment can be hidden; and time scales and other features can be adjusted to point students to the desired perception. In addition, using an existing simulation often takes less preparation time than assembling the materials for a traditional experiment.

The simulations can be integrated into class instruction in various ways, says Perkins. She often recommends that instructors start by allowing students to explore the simulation on their own for a few minutes, which helps students gain some familiarity with how it works and generate their own questions. Then the instructor can move into a guided activity with the simulation–“not telling students what to touch and do exactly, but asking open-ended questions where the simulation is providing the ‘sandbox’ in which to explore these questions,” Perkins says.

Integrating the simulations with interactive teaching techniques is preferable to simply using them as a visual demonstration during a lecture, in Perkins’s view. When the simulations are used in a lecture, Perkins suggests that instructors first have students predict the outcome and discuss their predictions before showing them the simulation. This might be done through a clicker question, such as asking students to predict, vote on, and discuss what will happen to the brightness of one of the lightbulbs in a circuit if a certain switch is closed. After the instructor demonstrates the simulation, students can then propose changes in certain variables–for example, “‘What if you add another battery? What if you flip that battery around? What if those are in parallel instead of in series?’ I call that whole class inquiry,” says Perkins, “when you’re operating the simulation up front but the questions are coming from the students.” Alternatively, instructors in a large lecture class could use the simulations as tutorials that students do on their own laptops or in groups.

In smaller classrooms, simulations can form the basis of collaborative student activities, says Perkins. For example, for the PhET simulation on projectile motion, the instructor might challenge the students to explore all the ways in which they can make the projectile go farther. Simulations can also be used for pre-lab learning or in the labs themselves. “At [the University of Colorado] we have circuit labs with the simulations and real-world equipment in the same lab, where students will be going back and forth.”

Simulations can also be used ineffectively, Perkins cautions. “One of the things we see that really short circuits the learning from the simulations is when you give students really explicit directions about what to set up”–in other words, when they are used “in a cookbook style.”

Other computer- and Web-based technologies

Computers and Web-based technologies are essential to the practice of science and engineering, and their applications to undergraduate education in these disciplines are innumerable. The following examples highlight a few of the ways in which computer technologies are being used to enhance teaching and learning, facilitate student-teacher and peer-to-peer interaction, and encourage students to take greater responsibility for their own learning.

Workshop Physics, a research-validated approach developed at Dickinson College by Priscilla Laws and her colleagues, teaches calculus-based introductory physics without formal lectures (Dickinson College, 2004). Instead, students learn collaboratively by conducting activities and observations entirely in the laboratory, using the latest computer technology. The process encourages students “to make predictions, discuss their predictions with each other, test their predictions by doing real activities, and then draw conclusions,” says Laws.16 In addition to directly observing real phenomena, students in Workshop Physics use computers to collect, graphically display, analyze, and model real data with greater speed and efficiency than they otherwise could. Recently, Laws and a group of colleagues have created and are evaluating Web-based, interactive video vignettes that demonstrate physics topics like Newton’s third law.17

In many undergraduate geosciences courses, students are using Google Earth to support hands-on projects, create maps and models, measure features, organize geospatial data, and accomplish many other purposes (Science Education Resource Center, 2013).

Some science and engineering instructors and students are using blogs, social media, and other common Web resources to promote student interaction and learning. John Pollard at the University of Arizona has created a YouTube “Chemical Thinking” channel on which he posts videos he made to explain general chemistry concepts.18 Facebook groups are also an important part of Pollard’s class, says student Courtney Collingwood,19 who took the course in 2013. “People will post, and Pollard or Talanquer will answer. There’s a lot of opportunity for help if people are willing to ask,” she says.

Students in Eric Brewe’s introductory modeling physics course at FIU decided to put together a course “textbook” in the form of a class wiki as a study resource.20 Students took turns doing the wiki page for each class session, and other students could make comments, additions, and suggestions.

________________

16 Interview, July 30, 2013.

17 See http://ivv.rit.edu.

18 See http://www.youtube.com/user/CHEMXXl.

19 Interview, April 24, 2013.

Many instructors vacillate about adopting student-centered instruction because they are not sure it can be done in an auditorium with fixed seats or other standard classrooms designed for large enrollment courses. But this is more a problem of mindset than of physical constraints, as illustrated by the case study of Scott Freeman’s biology class in Chapter 1 and other examples in the preceding chapters. Many DBER studies directly address the viability of using student-centered approaches in large classes, and the evidence emerging from these studies has been quite positive. In addition, clickers, computer-based simulations, and other technologies have been particularly useful in facilitating interaction in large courses, as the previous discussion makes clear.

That said, some instructors, departments, and institutions have designed or redesigned learning spaces that are particularly suitable for interaction, group work, project-based learning, and other research-based approaches. These redesigns are typically accompanied by dramatic changes in instruction, including reductions in the amount of lecturing and the integration of lecture and laboratory courses. These models for redesigning learning spaces are particularly worth considering when an institution is planning a remodeling or new construction program.

Early examples of redesigning learning spaces in conjunction with reforms in pedagogy include the Workshop Physics approach described above and Studio Physics, an integrated lecture/laboratory model developed at Rensselaer Polytechnic Institute in 1993. The best-known example is the SCALE-UP approach, which as of 2013 was being implemented at 250 sites in the United States, including North Carolina State University, MIT, the University of Minnesota, Old Dominion University, and many other institutions. While the motive for redesigning the learning space at many of these sites was to promote more active learning in courses of 100 or more students, the approach has also been used in smaller settings of 50 students or fewer. The SCALE-UP model is described in Chapter 4; what follows in Box 5.3 is a discussion of how institutions adopting this approach have transformed the classroom environment.

________________

20 Interview, April 16, 2013.

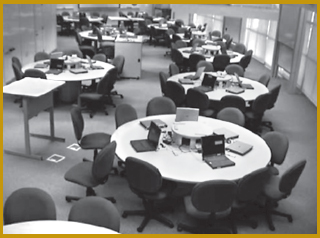

BOX 5.3 FORM FOLLOWS FUNCTION IN TRANSFORMED SPACES FOR INTERACTIVE LEARNING

At NC State, a pioneer of the SCALE-UP model, the redesigned classroom space is an obvious departure from the standard lecture hall. The room holds roughly 100 students. The students sit at 11 round tables arranged banquet-style, each large enough to accommodate 9 students working in teams of 3. Everything about the room’s design—from the three networked laptops, whiteboards, and lab equipment on every table to the strategically placed larger whiteboards and computer screens that afford every student a view—is intended to maximize collaboration and hands-on work among students and interaction between students and faculty.

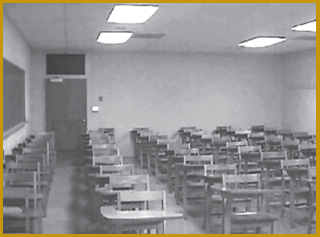

Phase II classroom seating 55 before renovation.

Robert Beichner,a an NC State physics professor who was frustrated with the stadium seating, wooden chairs, and wobbly paddle-shaped desks in his institution’s lecture halls, approached his department head about designing an optimum space for the types of research-based strategies he wanted to use. He was told that if he could find matching funds, the department would provide the furniture. He obtained grant money from NSF and the U.S. Department of Education’s former Fund for the Improvement of Postsecondary Education (FIPSE) program and found a space on campus that could be remodeled.

Phase II SCALE-UP classroom seating 54 after renovation.

The transformed learning space sent an obvious signal to students that teaching and learning in SCALE-UP classes would be different. “[Students] know how a lecture hall is supposed to be used—you essentially sit and write down notes,” says Beichner. “When you walk into a space that’s different, your expectations are violated.”

Phase III classroom seating 99 students.

Beichner recognizes that institutional issues, including funding constraints, competition for space allocation, and scheduling, make this type of renovation difficult to undertake. If an institution is willing to commit to this approach, he recommends that the faculty involved be specific about what they want and closely monitor the remodeling process. Often the people who do the work are not accustomed to faculty being particular about such details as the size of the tables or the placement of projection screens and ceiling lights, but these types of design features affect learning, Beichner says.

________________

a Interview, March 26, 2013.

Robert Beichner believes his role in a SCALE-UP classroom is to listen to students and guide their thinking.

At the University of Minnesota, another institution that has adopted or adapted the SCALE-UP approach, the biology department’s plan to transform a course using an active learning approach coincided with the Office of Classroom Management’s plan to remodel a learning space. The biology department offered to combine one of its classrooms with an adjacent room controlled by Classroom Management in order to create a larger classroom patterned after NC State’s SCALE-UP design, says Minnesota biology professor Robin Wright.b The result was the creation of the university’s first remodeled active learning classroom. The success of this first classroom helped to convince her university to incorporate 17 additional active learning classrooms in a new campus building.

In Minnesota’s active learning classrooms, “the space invites” student peer-to-peer discussion and innovative instructional approaches, says Wright. The feedback on the redesigned rooms has been positive among faculty members who have taught there, she reports. “Generally, faculty said they never want to teach any other way.”

SCALE-UP classrooms are also equipped with computer-based simulations and many other technological supports for project-based learning. And what does Beichner consider the most important technology in the room? “The round tables.”

________________

b Interview, April 12, 2013.

While these types of redesigned classroom spaces can facilitate active learning, they are expensive and may not always be feasible. If the opportunity for remodeling or construction arises, then a department or institution should give thought to research on learning in their design. But if the opportunity is not there, a redesigned classroom is by no means necessary to realize the benefits of research-based instruction. As Colorado professor Steve Pollock21 notes, instructors can adopt “transformed pedagogy in old-fashioned classrooms.”

This chapter has offered ideas for appropriately assessing learning and teaching, using technology effectively, and redesigning classroom spaces when that option is available. These and other aspects of research-based instruction are likely to present challenges, but these challenges need not derail you. They can be tackled head-on, as discussed in Chapter 6.

Resources and Further Reading

Discipline-Based Education Research: Understanding and Improving Learning in Undergraduate Science and Engineering (National Research Council, 2012)

Chapter 6: Instructional Strategies

Interactive physics simulations http://phet.colorado.edu

Interactive video vignettes in physics http://ivv.rit.edu

Knowing What Students Know: The Science and Design of Educational Assessment (National Research Council, 2001)

Student-Centered Active Learning Environment with Upside-down Pedagogies (SCALE-UP) http://scaleup.ncsu.edu

YouTube “Chemical Thinking” channel http://www.youtube.com/user/CHEMXXl

________________

21 Interview, April 25, 2013.