Evolving Methods in Evaluation Science

Important Points Made by the Speakers

- Realist methods are one way to understand the mechanisms that generate outcomes and the relationship between the outcomes and the context.

- Innovative methods can make it possible to gather evaluation data in situations that would not have been amenable to analysis in the past.

- Nonexperimental, observational, and mixed methods can provide valuable evaluation information.

Many technologies, techniques, and approaches are available in evaluation science. The presenters at this concurrent session examined some of these approaches, including long-standing principles of evaluation science that have continuously evolved as well as newly introduced approaches. The speakers focused on their potential application in evaluating complex initiatives and interventions in complex systems.

USE OF REALIST METHODS TO EVALUATE COMPLEX INTERVENTIONS AND SYSTEMS

There are two types of realist methods, said Geoff Wong, senior lecturer in primary care at Queen Mary, University of London. The first, called realist evaluation, is theory-driven evaluation in which the evaluator generates data and conducts primary research. The other, realist synthesis, which Wong said is derived from realist evaluation, is a form of systematic literature review that can be considered secondary research. In realist synthesis, the goal is less to determine whether an intervention works than to explain and understand how and why it works (Pawson, 2013).

Most large interventions have multiple interacting components that tend not to act in a linear fashion. "You can put a vast amount of effort in at one end and not necessarily get back your return" on the final desired outcome or the proximal outcomes, said Wong. Interventions are also highly dependent on the context in which they take place and subject to the variability that comes from relying on people, from the targets of the intervention to those on the ground delivering it. As an example, he cited his experience with large smoking cessation programs, which deal with a wide range of smokers who differ in their motivations to stop smoking, their strategies for kicking the habit, and their responses both to particular interventions and to failing any one attempt to quit. In addition, those delivering the intervention have different personal approaches, further complicating the context of the intervention.

In their potential to address this complexity, realist methods differ from other methods, Wong said, by starting with an ontology based on critical realism that attempts to identify, understand, and explain causation through generative mechanisms. The idea, he said, is that interventions themselves do not necessarily cause outcomes. Instead, outcomes are caused by what he called mechanisms. "Mechanisms are a causal process, a driving force." For example, letting go of an object does not make it fall; gravity does. Letting go of the object is the intervention, but gravity is the mechanism. One key concept is that mechanisms can be hidden, yet they are real and can be used even though they cannot be seen or touched. "None of us have ever seen gravity, but we are able to see the effects of gravity," he said. Mechanisms are also context dependent: an object dropped on the moon would fall more slowly than an object dropped on earth.

Wong noted that these concepts make it possible to develop a logic of analysis built around mechanisms. Middle-range theories, which are close enough to the data to be testable, can then explain the relationships among outcome, context, and mechanism.
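Realist evaluators often express middle-range theories as context-mechanism-outcome (CMO) configurations. As a minimal sketch, assuming a simplified representation in which a mechanism is triggered only when every contextual condition hypothesized for it is present, such configurations could be recorded and queried as follows. The class name, function name, and smoking cessation configurations are hypothetical illustrations, not taken from Wong's presentation.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class CMOConfiguration:
    """One hypothetical context-mechanism-outcome statement from a middle-range theory."""
    context: frozenset  # conditions assumed necessary to trigger the mechanism
    mechanism: str      # the hidden causal process (e.g., a shift in motivation)
    outcome: str        # the outcome the mechanism is expected to generate


def expected_outcomes(configs, observed_context):
    """Return outcomes whose triggering context is fully present in observed_context."""
    return [c.outcome for c in configs if c.context <= observed_context]


# Hypothetical configurations for a smoking cessation program (illustrative only).
theory = [
    CMOConfiguration(frozenset({"high motivation", "regular counselling"}),
                     "sustained commitment", "quit attempt maintained"),
    CMOConfiguration(frozenset({"low motivation"}),
                     "perceived pressure", "disengagement from program"),
]

print(expected_outcomes(theory, {"high motivation", "regular counselling", "urban clinic"}))
```

Keeping context as an explicit field, rather than a variable to be controlled away, mirrors the realist view that context may be what triggers a mechanism.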

Realist methods also differ from other methods in their epistemology. "How do we know something is knowledge?" Wong asked. Knowledge should be judged by assessing the processes and assumptions by which it is produced, he said. He likened this to detective work, which starts with outcomes and works backward to understand the cause. "Theories are right because they are, for example, coherent, plausible, and repeatedly successful." From the ontological perspective of a realist, the world is stratified: there are layers that give the world depth, with mechanisms operating at a layer that may not be immediately observable. As a result, Wong said, controlling for context may be neither possible nor desirable because doing so "may in fact be stripping out the thing that is an important trigger to the mechanism."

Wong finished his remarks by noting that some grounding in the philosophy of science helps in understanding the basis of realist methods and how best to apply them. Quality reporting standards and training materials for realist synthesis are available on the web, and there is a discussion group for anyone interested in realist research, he said.1

INNOVATIVE DESIGNS FOR COMPLEX QUESTIONS

Emmanuela Gakidou, professor of global health and director of education and training at the Institute for Health Metrics and Evaluation at the University of Washington, discussed three complex interventions that she and her colleagues are evaluating using innovative designs. The first project, Salud Mesoamerica 2015, is a 5-year public–private partnership with multiple funders that aims to improve health indicators for the poorest quintile of people living in Mesoamerica (the Mexican state of Chiapas plus all of Central America), using results-based financing to implement the intervention. The objectives of the evaluation, Gakidou explained, are to assess whether countries are reaching the initiative's targets, as agreed between each country and the Inter-American Development Bank, the project's managing organization, and to evaluate the impact of the specific components of all interventions in each country.

The evaluation design includes a baseline measurement before the intervention and three follow-up measurements over the course of the 5-year project, with intervention and control groups in most countries. To deal with the complexity of the project, Gakidou and her colleagues are using what they consider to be innovative approaches to measurement: sampling high-risk populations so that the sample is large enough to support inferences at the end of the project, capturing data electronically, checking data quality during data entry, and evaluating project implementation. Another innovation, she noted, is linking information about the health facilities where households receive care with information from household surveys, providing a flow of information between the supply side and the demand side for these communities. "We also do a lot of health facility observations and medical record review information extraction from charts, so we don't just rely on qualitative assessments in the facilities," she said. For example, the evaluation is using dried blood spot analysis to measure immunity status in children rather than relying only on immunization status reports from the facilities.

1 More information is available at http://www.ramsesproject.org (accessed April 8, 2014).

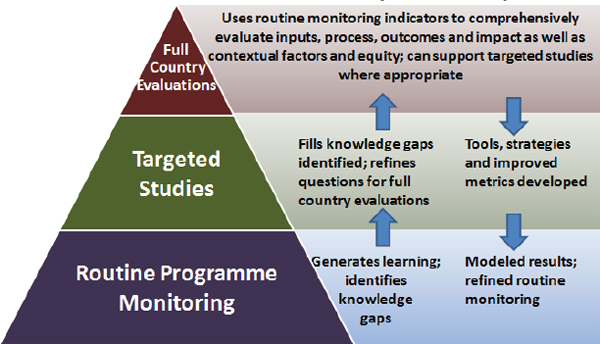

Next, Gakidou described the Global Alliance for Vaccines and Immunization (GAVI) full-country evaluation. The goal of this evaluation is to understand and quantify the barriers to and the drivers of immunization program improvement, including GAVI’s contribution, in five countries: Bangladesh, India, Mozambique, Uganda, and Zambia. It is a 4-year prospective evaluation that started in 2013 with a $16 million budget, and it is using monitoring indicators to comprehensively evaluate inputs, process, outcomes, and impacts as well as contextual factors and equity (see Figure 9-1). Given that GAVI is not the only agency working on immunization in these five countries, the evaluation will attempt to look at the whole immunization system and GAVI’s role in that system. The evaluation is also designed to answer several more specific questions about GAVI’s contribution to immunization rates and, ultimately, to reductions in child mortality.

FIGURE 9-1 The GAVI Full Country Evaluation sought to understand and quantify the barriers to and drivers of immunization program improvement through routine program monitoring, targeted studies, and full country evaluations, as presented by Gakidou.

SOURCE: Printed by courtesy of the GAVI Alliance, 2010.

The GAVI evaluation uses a mixed methods approach to analyze quantitative and qualitative data from sources including process evaluation, resource tracking, facility surveys, household surveys, verbal autopsies, vaccine effectiveness assessments, small area analyses, and impact analyses. The evaluation is using existing data and, as Gakidou explained, "we're supplementing that with what we call smart primary data collection, where we piggyback on existing programs like the DHS [Demographic and Health Surveys] and oversample some populations or add additional questionnaires for subsampling some populations." She observed that this approach reduces costs for the evaluation while taking advantage of other ongoing, large-scale activities.
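Oversampling gives an evaluation enough observations in a small subgroup, but estimates for the whole population then need design weights equal to the inverse of each person's selection probability. The sketch below assumes simple random sampling within each stratum and uses hypothetical stratum sizes and function names; surveys such as the DHS use more elaborate weighting, so this illustrates the principle only.

```python
def weighted_prevalence(strata):
    """Population prevalence from stratified samples using inverse-probability design weights.

    Each stratum is a tuple (population_size, sample_size, cases), assuming simple
    random sampling within the stratum. A sampled person's design weight is
    population_size / sample_size, the inverse of their selection probability.
    """
    weighted_cases = 0.0
    weighted_total = 0.0
    for population_size, sample_size, cases in strata:
        weight = population_size / sample_size
        weighted_cases += weight * cases
        weighted_total += weight * sample_size
    return weighted_cases / weighted_total


def unweighted_prevalence(strata):
    """Naive pooled prevalence that ignores the oversampling."""
    return sum(s[2] for s in strata) / sum(s[1] for s in strata)


# Hypothetical strata: (population size, sample size, people with the outcome).
strata = [
    (900_000, 1_000, 200),  # general population, sampled at 1 in 900
    (100_000, 1_000, 500),  # high-risk group, oversampled at 1 in 100
]

print(f"unweighted: {unweighted_prevalence(strata):.2f}")  # prints unweighted: 0.35
print(f"weighted:   {weighted_prevalence(strata):.2f}")    # prints weighted:   0.23
```

With these hypothetical numbers, pooling the sample overstates the outcome because the oversampled high-risk stratum makes up half the sample but only a tenth of the population.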

Finally, Gakidou discussed the Malaria Control Policy Assessment, an evaluation funded by the Bill & Melinda Gates Foundation that is designed to determine how much of the reduction in child mortality seen in Zambia over the past two decades is a result of scaled-up malaria control interventions. She explained that in addition to malaria control efforts (distribution of insecticide-treated bed nets and indoor residual spraying), there has been an expansion of efforts to prevent mother-to-child transmission of HIV through the U.S. President's Emergency Plan for AIDS Relief (PEPFAR), a transformation and scale-up of immunization programs combined with the development of a new pentavalent vaccine, the introduction of child health weeks, and other health interventions new to Zambia since the mid-1990s. Contextual factors also have been at work, including economic growth and huge improvements in the education of women of reproductive age. "What we're trying to figure out is if the rate of decrease in child mortality accelerated as a result of malaria control interventions, and if so, by how much," said Gakidou.

The first step in the evaluation has been to estimate trends at the district level for all quantities of interest between 1990 and 2010. Next, the evaluation will conduct causal attribution analyses using a number of variables that address the entire range of health interventions, along with a composite measure of sociodemographic factors. Gakidou noted that there are variables she would have liked to add to this analysis, including both malaria control and other health-related interventions that are critically linked to child mortality, but the data are not available. Noting that "we're a fairly quantitative institute where I work," she said that her team tested dozens of models, ranging from linear models to structural equation modeling, along with many combinations of interventions using random effects, fixed effects, and other approaches.

Currently, she and her colleagues favor a linear model with bootstrapping that includes distribution of insecticide-treated bed nets and indoor residual spraying, seven other key health interventions, and a composite measure of non-health factors. In this model, none of the interventions stands out as significant on its own. "You can imagine with so many things going on, it's really difficult to figure out what actually is leading to the reduction of under-5 mortality," Gakidou said. "In some ways it's not fair for somebody to ask me what has been the contribution of the malaria control scale-up to child mortality, and in some ways it's my responsibility as a quantitative analyst to say that, given this graph, I really can't tell you." A counterfactual analysis suggested that if none of these interventions had been instituted, under-five child mortality in Zambia would have been 13 percent higher in 2010, which Gakidou characterized as "a remarkable reduction and acceleration in the pace of child mortality reduction."
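A linear model with bootstrapped confidence intervals and a counterfactual prediction can be sketched as follows. This is a simplified illustration on synthetic, hypothetical district-level data with only two malaria interventions and one composite non-health index, not the model Gakidou's team used; the variable names and coefficients are assumptions. Uncertainty comes from refitting ordinary least squares on rows resampled with replacement, and the counterfactual sets intervention coverage to zero and compares mean predicted mortality with the mean fitted value.

```python
import numpy as np

rng = np.random.default_rng(42)

# Hypothetical district-year data (synthetic, for illustration only).
n = 200
itn = rng.uniform(0, 1, n)   # insecticide-treated bed net coverage (share of households)
irs = rng.uniform(0, 1, n)   # indoor residual spraying coverage
ses = rng.normal(0, 1, n)    # composite non-health (sociodemographic) index
mortality = 150 - 20 * itn - 10 * irs - 15 * ses + rng.normal(0, 10, n)  # per 1,000 live births

X = np.column_stack([np.ones(n), itn, irs, ses])


def fit_ols(X, y):
    """Ordinary least squares coefficients via numpy's least-squares solver."""
    beta, *_ = np.linalg.lstsq(X, y, rcond=None)
    return beta


def bootstrap_ci(X, y, rng, n_boot=1000, alpha=0.05):
    """Percentile bootstrap intervals: refit on rows resampled with replacement."""
    draws = np.empty((n_boot, X.shape[1]))
    for b in range(n_boot):
        idx = rng.integers(0, len(y), len(y))
        draws[b] = fit_ols(X[idx], y[idx])
    lower = np.percentile(draws, 100 * alpha / 2, axis=0)
    upper = np.percentile(draws, 100 * (1 - alpha / 2), axis=0)
    return lower, upper


beta = fit_ols(X, mortality)
lower, upper = bootstrap_ci(X, mortality, rng)

# Counterfactual: same districts, with both malaria interventions switched off.
X_cf = X.copy()
X_cf[:, 1:3] = 0.0
fitted = X @ beta
counterfactual = X_cf @ beta
pct_higher = 100 * (counterfactual.mean() - fitted.mean()) / fitted.mean()

for name, b, lo, hi in zip(["intercept", "itn", "irs", "ses"], beta, lower, upper):
    print(f"{name:>9}: {b:7.2f}  95% CI [{lo:7.2f}, {hi:7.2f}]")
print(f"Counterfactual mortality is {pct_higher:.1f}% higher than fitted")
```

When coverage variables are strongly correlated with one another, as they can be when several interventions scale up at the same time, the bootstrap intervals for individual coefficients widen. That is one way a model can fail to single out any one intervention even when the combined counterfactual difference is clear.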

In closing, Gakidou said that one implication of the studies she discussed is that for broad, complex global health evaluations, retrospective evaluations are always limited by available data. “You can’t go in and design your own study to answer the question, and what this means is that sometimes you can’t actually answer the question you set out to answer, but you still learn a lot of valuable information along the way,” she remarked. “So, as an evaluator, my pitch is that large global health programs and initiatives need to build in evaluation from the beginning, like the study on Mesoamerica that I was referring to, because the evidence base on what works and what does not work urgently needs to be expanded in our field.”

COMPARATIVE SYSTEMS ANALYSIS: METHODOLOGICAL CHALLENGES AND LESSONS FROM EDUCATION RESEARCH

Education research over the past 20 years in developing countries has often been criticized by donors for being fragmented, small scale, noncumulative, and methodologically flawed, as well as for being politically motivated, researcher driven, and of limited relevance for policy and practice, said Caine Rolleston, lecturer in education and international development at the Institute of Education, University of London. This criticism, he explained, has led to an increasing emphasis on what is considered to be the “gold standard” of experimental methods—the randomized controlled trial—but there is also a need for better evaluation of education systems through nonexperimental, observational, and mixed methods.

As an example, he discussed the Young Lives survey, a longitudinal study of 12,000 children born in two cohorts in Ethiopia, India, Peru, and Vietnam that was originally designed to look at childhood poverty.2 In 2010, when the younger cohort of children reached age 10, Rolleston and his colleagues started including school surveys that focused on math and literacy and measured progress in learning over time. They also looked at school and teacher effectiveness using a longitudinal, within-school design, and included both the index children and their class peers to get a balanced sample of children at the school and class levels.

Rolleston noted that despite a large number of studies of the effects of observable school inputs, there is little consistent evidence of what works in terms of individual school inputs. "Not only that, but those effects that are consistently significant are pretty much the most obvious ones and don't offer a huge amount of guidance for additional programs and projects," he said. "That's partly because of the large differences in context, but also because of the very large number of variables that are included in school effectiveness studies." Despite the poor consistency of these findings, however, there are large differences in the effectiveness of education systems. "It seems that those are bound up with different kinds of system characteristics, political economy characteristics, and bundles of inputs that vary in inconsistent ways across countries," Rolleston said.

2 More information is available at http://www.younglives.org.uk (accessed April 8, 2014).

Student performance data from the four countries in the Young Lives survey show clear differences in learning levels among the four systems. Between ages 7 and 14, for example, children in India make less progress in math than do children in Vietnam. Although the explanatory variables show inconsistent patterns, two factors stood out: all teachers in Vietnam had received formal teacher training, while 16.5 percent of teachers in India had not, and nearly a third of students in India reported that their teacher was often absent, while teacher absenteeism in Vietnam was exceedingly rare. "But assessing school quality in comparative terms between two systems is quite complex, because what you really need is to be able to measure the value added over time," said Rolleston. "To do that you need to be able to separate the effects of pupils' backgrounds from their prior attainment, which requires a longitudinal design, repeated measures of test scores at the school level, and linked data between teachers, schools, and pupil backgrounds." Although such designs are difficult to achieve, Rolleston and his team used them successfully for value-added analysis and found large differences between cross-sectional and longitudinal estimates of effects.
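The contrast between cross-sectional and value-added estimates can be illustrated with a minimal sketch on synthetic data, assuming a simple linear value-added specification in which the current score depends on prior attainment, pupil background, and the school attended. The two-school setup, variable names, and all numbers are hypothetical, not drawn from Young Lives. By construction, school B enrolls more advantaged pupils but adds less learning than school A.

```python
import numpy as np

rng = np.random.default_rng(7)

# Hypothetical pupils in two schools (synthetic data, illustration only).
n = 500
school_b = np.repeat([0, 1], n)                          # 0 = school A, 1 = school B
background = rng.normal(0, 1, 2 * n) + 1.0 * school_b    # school B pupils more advantaged
prior = 50 + 8 * background + rng.normal(0, 5, 2 * n)    # score in the first test round
true_value_added = np.where(school_b == 1, 2.0, 6.0)     # school A adds more learning
current = prior + true_value_added + 2 * background + rng.normal(0, 5, 2 * n)


def ols(X, y):
    """Ordinary least squares coefficients."""
    beta, *_ = np.linalg.lstsq(X, y, rcond=None)
    return beta


ones = np.ones(2 * n)
# Cross-sectional: compare current scores only (coefficient on the school B indicator).
cross_sectional = ols(np.column_stack([ones, school_b]), current)[1]
# Value-added: condition on prior attainment and pupil background.
value_added = ols(np.column_stack([ones, school_b, prior, background]), current)[1]

print(f"School B minus school A, cross-sectional: {cross_sectional:+.1f}")
print(f"School B minus school A, value-added:     {value_added:+.1f}")
```

Because school B's pupils start ahead, the cross-sectional comparison favors school B, while conditioning on prior scores and background reverses the sign; detecting that kind of divergence requires repeated, linked measurements of the same pupils.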

Another challenge in developing countries is to develop context-appropriate measures of educational performance. Rolleston and his colleagues used a package of assessments, including teacher tests and a progressive, linked pupil achievement test in core subjects, to provide relevant and robust measures of learning over time, along with measures of skills such as school engagement and self-confidence. They then scaled the results using latent trait models so that change over time could be compared consistently. Performing value-added analysis on test results, he noted, requires an understanding of both school and teacher quality as well as methodological rigor in constructing the assessments. "This is not only a technical issue, but one very much about the relevance of the tests," Rolleston stated, adding that designing relevant learning metrics in developing countries "has been extremely demanding." In Ethiopia, for example, not only is the literacy level low, but there are eight linguistic groups and languages of instruction. It is also necessary to balance national curricula and expectations with international norms in literacy and numeracy.
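Latent trait models place pupils and test items on a common scale, so that scores from different test rounds can be compared once the rounds are linked through shared anchor items. The sketch below assumes the simplest such model, the Rasch (one-parameter logistic) model, with item difficulties already calibrated onto the linked scale; the difficulties and function names are hypothetical, and this is not the Young Lives scaling procedure. Ability is estimated by maximum likelihood using Newton-Raphson steps.

```python
import math


def rasch_probability(theta, difficulty):
    """Probability of a correct answer under the Rasch model."""
    return 1.0 / (1.0 + math.exp(-(theta - difficulty)))


def estimate_ability(responses, difficulties, max_iter=50, tol=1e-10):
    """Maximum-likelihood ability (theta) with item difficulties held fixed.

    responses are 0/1 item scores; difficulties are on the linked scale.
    All-correct or all-incorrect patterns have no finite estimate.
    """
    if sum(responses) in (0, len(responses)):
        raise ValueError("no finite MLE for all-correct or all-incorrect responses")
    theta = 0.0
    for _ in range(max_iter):
        probs = [rasch_probability(theta, b) for b in difficulties]
        score = sum(x - p for x, p in zip(responses, probs))  # first derivative of log-likelihood
        information = sum(p * (1.0 - p) for p in probs)       # minus the second derivative
        step = score / information
        theta += step
        if abs(step) < tol:
            break
    return theta


# Hypothetical linked rounds: items with difficulty 0.0 and 0.5 appear in both as anchors.
round1_difficulties = [-1.0, -0.5, 0.0, 0.5]
round2_difficulties = [0.0, 0.5, 1.0, 1.5]
responses = [1, 1, 0, 0]  # same raw score (2 of 4) in each round

theta1 = estimate_ability(responses, round1_difficulties)
theta2 = estimate_ability(responses, round2_difficulties)
print(f"round 1: {theta1:.2f}, round 2: {theta2:.2f}, growth: {theta2 - theta1:.2f}")
```

The pupil answers two of four items correctly in both rounds, but because the round 2 items are harder on the linked scale, the estimated ability rises between rounds; a raw-score comparison would show no progress.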

When the analysis of the four countries was complete, Rolleston and his colleagues were able to answer the question posed by the donor: What is it about Vietnam's education system that enables it to be more effective over time? "The general lesson is that it's an equity-oriented, centralized public school system," said Rolleston. Greater equity in the public school system is linked to higher performance for the majority of pupils. To achieve equity, Vietnam emphasizes fundamental or minimum school quality, which, he added, "means that the least advantaged pupils and the most disadvantaged areas do not suffer from as extreme a disadvantage as they do in the other countries in our study." Other important factors behind Vietnam's higher levels of student performance are greater standardization of curricula and textbooks, which are more closely matched to pupils' learning levels and abilities, a commitment to mastery by all pupils, and the use of regular assessments.

In closing, Rolleston said that, in education, data on learning metrics in developing countries are still inadequate, that there are few rigorous assessments of students’ learning performance, and that a robust longitudinal study design is needed to assess school quality. Context is also critically important to the development of a theory of change, he added.

OTHER TOPICS RAISED IN DISCUSSION

During the discussion period, the presenters took up the question of whether sophisticated statistics are needed to identify the effect of an intervention in a complex setting. Gakidou answered that choices made in study design and data collection can reduce the need for such statistical analyses. However, the links between an intervention and its outcomes need to be clearly drawn in order to understand causal mechanisms.

Wong pointed out that interventions, not just people and settings, are heterogeneous; they are better seen as a family of interventions. Evaluations then seek to understand why these heterogeneous inputs produce a certain set of outcomes, and the causal mechanisms, which are more universal, become the focus.

Rolleston expressed interest in the application of realist evaluation to educational interventions, despite the complexities of doing so. For example, such an approach could produce insights on causal mechanisms in education that go beyond statistical associations. “Any teacher can explain to you why a particular textbook is better or worse than another. You don’t need a statistical analysis for that.” Wong agreed, pointing out that realist methods can help explain patterns observed in data.