Developing the Evaluation Design and Selecting Methods

Important Points Made by the Speakers

- Evaluations of complex initiatives require trade-offs in developing the evaluation design and choosing the methods.

- Evaluations of complex initiatives are well served by the use of a logic model, theory of change, results chain, impact pathway, or other framework to describe how the program is intended to create change and to identify potential unintended results.

- It is important to design the evaluation and interpret findings in the context of the environments in which initiatives are implemented and in the context of interrelated activities both within the same initiative and carried out by other stakeholders.

- Building measurement into the management of programming can be a powerful way of tracking causation.

- Standardization of data collection and analysis when feasible can improve comparability within and across evaluations.

- Despite guidance and resources that are available, many complex evaluations still fail to follow good practices.

Designing an evaluation involves many challenges and trade-offs. The members of the workshop’s second panel discussed designing evaluations to understand not only whether an effect was achieved but also how and why. They also spoke of the importance of strategically thinking through different options for data collection and analysis methods and how those methods can be matched to the aims and questions of an evaluation, the available data, and the feasibility of implementing the methods with appropriate rigor. Finally, the panelists addressed ways of recognizing, understanding, and grappling with the complexity of an initiative being evaluated and of the contexts in which an initiative is implemented.

INSTITUTE OF MEDICINE EVALUATION OF PEPFAR

As was mentioned during the first panel, Congress has twice mandated that the IOM conduct an independent, external evaluation of the effects of PEPFAR. Deborah Rugg, a member of the IOM committee for the second independent evaluation of PEPFAR, explained that this most recent evaluation attempted to assess PEPFAR’s contribution to the HIV response in partner countries and globally since the inception of the initiative in 2003. Thus, the task for the IOM was to design and then conduct an impact evaluation of a complex dynamic initiative with a wide range of supported activities and a global reach. The resulting evaluation was conducted over 4 years with an extensive planning phase followed by an intensive implementation phase. The evaluation was carried out by a volunteer expert committee for each phase, along with IOM staff and consultants with relevant expertise. The evaluation was comprehensive in terms of the overall program, but it was not an evaluation of country-specific programs, specific partners, or specific agencies. Rugg noted that the evaluation relied on a framework of contribution to impact rather than attribution.

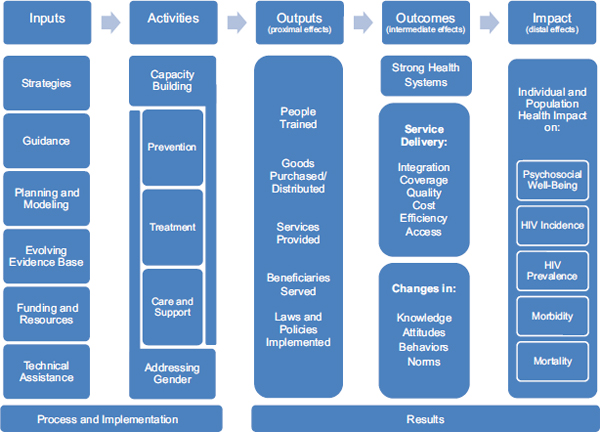

A critical part of the early evaluation planning process was to carry out an evaluability assessment, which included the interpretation of the congressional mandate, the development of evaluation questions, and a mapping exercise to determine what data were available globally at the headquarters level and in the countries. As another critical and parallel part of the early planning process, the committee also explored the feasibility of various designs and methods that the IOM might use in its evaluation, ultimately deciding on a conceptual framework and developing a program impact pathway (see Figure 4-1). “This conceptual framework was then simplified in order to cover the diversity of programs, to communicate to a variety of audiences, and to be something that all of the technical areas could use to organize the committee’s activity,” explained Rugg.

FIGURE 4-1 The program impact pathway for the Institute of Medicine’s Second Evaluation of PEPFAR (2009–2013), as presented by Rugg.

SOURCE: IOM, 2013.

The conceptual framework included the various inputs into the PEPFAR initiative, such as the considerable financial and technical assistance resources going into the PEPFAR initiative as well as the strategies, guidance, and planning activities that were significant in developing and implementing the initiative. Rugg noted that the committee also viewed PEPFAR’s use of the evolving scientific evidence base as an input. In developing this framework, the IOM committee examined PEPFAR’s capacity-building activities; the three areas of prevention, treatment, and care and support; and efforts to address gender equality in the initiative. The committee then looked at basic outcomes and tried to understand the impacts of PEPFAR in terms of proximal, intermediate, and distal effects.

“The outputs that we considered to be primary were outputs such as people trained, goods purchased and distributed, services provided, beneficiaries served, and the laws and policies that were designed and implemented,” said Rugg. She explained that the basic outcomes for the evaluation were in the areas of strengthening health systems; specific service delivery areas focusing on the integration of services and the coverage, quality, cost, efficiency, and access to services; and looking at any resulting changes in knowledge, attitude, behavior, and norms of the beneficiaries as well as providers. Seeking to respond to the congressional mandate, the IOM committee focused on key impact areas that would allow it to comment on individual and population health impact, including psychosocial well-being, HIV incidence, HIV prevalence, morbidity, and mortality.

The end result was a hybrid evaluation design that was retrospective and cross-sectional in nature and included time trend data and time series analyses. This design had different in-depth approaches and different topical areas, which Rugg characterized as a nested design. It also used a mixed methods approach. “This mixed methods approach included complementary data from different data sources and used both qualitative and quantitative data in order to balance the limitations of each other,” she said. The quality and rigor of the causal contribution analysis were improved through a triangulation approach among the different types of data and different analyses. When combined with the program impact pathway, this design provided a solid basis to help determine not just whether PEPFAR was affecting health outcomes but how and why.

In reflecting on the basic design, Rugg said that the value of a “whole of initiative” approach was that its findings could be interpreted in the context of the obvious interrelatedness of all the different activities conducted by PEPFAR. “We have long since moved beyond a time when interventions are isolated and single interventions have the effect we seek,” said Rugg. “They are necessarily interrelated and interdependent.”

The trade-offs associated with this design were the necessary duration of the evaluation and the limited data collection and depth of analysis in any one area of activity. “We could not drill down because of our focus at the higher, whole initiative level,” she explained. The “whole of initiative” approach also meant trade-offs in evaluation use in terms of the lack of country-specific findings described previously in Chapter 3, but she noted that the candor achieved by not identifying countries was critical to the quality and credibility of the data.

Rugg closed her comments by offering some advice for future evaluation options of this now mature initiative. Rather than conducting a single periodic evaluation to assess the impact of the entire initiative, it might be more strategic to create a portfolio of external evaluations, with each evaluation focused on a more narrow, high-priority, complex area of the initiative (e.g., interventions to increase women’s access to services or activities to strengthen public health laboratories) that could be completed in a shorter time frame. In addition, she advised that dissemination activities for these hypothetical evaluations focus on specific interpretations and applications of messages that could be tailored to specific countries, providing an opportunity for dialog without compromising the evaluation design in a way that would sacrifice candor and credibility.

Why is evaluating impact important to the Global Fund? Daniel Low-Beer, head of impact, results, and evaluation at the Global Fund, explained that the core purpose of the Global Fund is to achieve a sustainable and significant contribution to the reduction of infections, illness, and death. To realize this goal, it has developed a new strategy of investing specifically for impact in terms of lives saved, infections averted, and meeting the Millennium Development Goals of reducing child mortality, improving maternal health, and combating HIV/AIDS, malaria, and tuberculosis. Today, a key part of the Global Fund’s programming is to invest more strategically for impact and to promote prioritization through its management focus.

The definitions of impact that the Global Fund uses for its evaluation have two components: assessing final disease outcomes and impact, and assessing contribution and causation along the results chain. For the first component at the epidemiological stage, Low-Beer explained, the evaluation looks at two primary questions. The first asks if there has been a change in disease mortality and morbidity or incidence and prevalence and if that change has been positive or negative. The second asks if there has been a change in outcomes, positive or negative. In terms of the second component of impact, contribution and causation, the evaluation asks if interventions, as well as other competing explanations such as deforestation and climate change, contributed to and resulted in these impacts, both positive and negative. To enable the evaluation of impact, the Global Fund has established a $10 million fund for investing in data infrastructures in target countries to enable rigorous analysis; disaggregation of data by time, person, and place; and inclusion of comparison groups where feasible.

Low-Beer said that the Global Fund’s technical committee is seeking to establish an independent yet practical approach to evaluation that can be integrated into the way the Global Fund makes grants and develops policies. Its approach to impact reviews is to:

- involve partners and build on in-country evaluation programs;

- make the evaluations periodic so they occur at regular intervals coordinated with in-country evaluations;

- use a plausibility design to provide evidence of impact, both positive and negative, while taking into account nonprogram influences;

- build country platforms that build on national systems and include program reviews; and

- produce practical results and recommendations for grant management, grant renewal, and reprogramming.

In essence, said Low-Beer, the Global Fund has shifted its focus and funding away from a single overarching evaluation once every 5 years to investing in a more continuous form of evaluation: “from a 5-year evaluation to this challenge of 5 years of rolling evaluations.” By the end of 2013, all key components of this new strategy were operational, and the Global Fund is now supporting 21 in-country program reviews that are part of its new funding model. Four thematic reviews on cross-cutting areas have been launched along with 17 data quality assessments that were agreed to by its partners and general managers in 10 countries. At the time of the workshop, the Global Fund’s new funding model had been launched in Myanmar and Zimbabwe, and both Cambodia and Thailand had developed refocused national strategies. Some of the thematic reviews had already led to new grants, said Low-Beer, including a regional grant to evaluate artemisinin resistance that was based on one of the evaluations by the technical evaluations group. According to Low-Beer, a key issue is to determine the best way to use country program reviews to develop better programming and to improve grant making.

Low-Beer then described two examples to illustrate why it is important to establish impact up front in the Global Fund’s programming. The first involved HIV prevention in Thailand, where the grant had been achieving many of its programmatic targets. “But when we looked at epidemiological trends, we saw high levels of HIV among injection drug users, increasing levels among men who have sex with men and male sex workers, and stable but not decreasing levels in female sex workers,” said Low-Beer. These data show that while programmatic performance was good, impact was limited. Further review of the data suggested that it should be possible to increase coverage by focusing on the 27 provinces that accounted for 70 percent of new HIV infections, to use a network approach to deliver the packaged services to those who are most at risk, and to use innovative and robust monitoring approaches to support the delivery of HIV prevention services.

In Cambodia, the Global Fund put its HIV, tuberculosis, and malaria reviews together to identify common components of value. These reviews found that malaria deaths dropped by 80 percent and that this drop was linked to specific investments in the Global Fund’s portfolio, such as the $5 million to $10 million spent on community workers and the scale-up of treatments and bed net use; in addition, there was a 45 percent decline in tuberculosis prevalence related to Global Fund grants. However, noted Low-Beer, the review found that HIV prevention efforts were stagnant despite successful high coverage of antiretroviral therapy.

One of the strengths of this approach is that it builds measurement into management of programming and the way the Global Fund invests in its grants. “The causative framework that relies on time, person, and place has been quite powerful,” said Low-Beer. One of the current drawbacks of this approach is the variability in the quality of the program reviews, an issue that will take 2–3 years and investments by the Global Fund to address. This approach also requires strong political backing, both at the country and Global Fund level, to turn recommendations for national programs into grants that focus on impact. Low-Beer added that this approach works well only in countries where additional investments are made in country evaluation agendas and where there are trials, studies, and operational research. He also noted that these reviews use a series of questions that have been defined by a technical evaluation group and that start with impacts and outcomes. The reviews include funding for an independent consultant to ensure they are run independently.

AFFORDABLE MEDICINES FACILITY–MALARIA ASSESSMENT

The Affordable Medicines Facility–malaria (AMFm) was established to address problems with access to artemisinin-based combination therapies (ACTs) for malaria, the highly effective and recommended treatment for this disease. Catherine Goodman, senior lecturer in health economics and policy in the Department of Global Health and Development at the London School of Hygiene and Tropical Medicine, explained that, despite free or highly subsidized public-sector availability of ACTs, access through the public sector remains poor. As a result, many customers use less effective antimalarials or use artemisinin as a single agent, which could exacerbate the development of artemisinin resistance. To address these problems, the Global Fund created AMFm with the twin goals of contributing to malaria mortality reduction and delaying the development of artemisinin resistance by increasing the availability, affordability, market share, and use of quality-assured ACTs.

AMFm comprises three elements, said Goodman. First, the program negotiates with ACT manufacturers to reduce drug costs in both the public and private sectors. Second, the program provides a large buyer subsidy at the top of the global supply chain. Third, the program supports a range of in-country interventions to ensure effective scale up. AMFm was first implemented as eight national-scale pilot programs that enabled the participating countries to purchase ACTs from approved manufacturers at the subsidized, negotiated price. Within each country, the drugs were distributed through the standard public- or private-sector distribution chain, which means that the program has no control over where the drugs go within a given country.

Turning to the design of the AMFm evaluation, Goodman noted that because the intervention occurs on a national scale, control areas within the country were not possible. Instead, the evaluation relied on a pre- and post-test design with baseline and endpoint assessments. The key primary source of data was outlet surveys, which she explained are nationally representative surveys conducted at baseline and endline. The baseline surveys took place before the intervention began. The endline, which varied by country, was 6 to 15 months after the first subsidized drugs had arrived in the country. She and her colleagues surveyed all outlets that could possibly supply antimalarials. “We are looking at total market approach in the evaluation,” said Goodman. Outlets included public and private health facilities, pharmacies and drug stores, general stores that stock antimalarials, or community health workers or vendors. “Whoever had antimalarials, we visited them.”

To measure ACT availability, price, and market share, the survey used a cluster sampling approach stratified by urban and rural areas; it also used a sample size calculation based on detecting a 20 percentage point change in quality-assured ACT availability. For data on use of ACTs, the evaluators required household survey information, but as Goodman explained, “It was decided not to fund specific household surveys for this study … largely due to cost considerations.” Use of ACTs was certainly considered an important outcome, but several ongoing household surveys collect data on fever treatment, and the evaluation team hoped to use the data from these surveys. In the end, she added, the evaluation only had appropriately timed data from those types of surveys for five of the eight pilots. “The use data was somewhat incomplete,” she said.
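As an illustration of what a sample size calculation of this kind can look like, the sketch below applies the standard two-proportion formula and inflates the result by a design effect to allow for cluster sampling. It is not the calculation used in the AMFm evaluation: the baseline availability of 30 percent, the 5 percent significance level, the 80 percent power, the design effect of 2, and the function name `outlets_per_round` are all assumptions chosen for the example.

```python
import math
from statistics import NormalDist


def outlets_per_round(p_baseline, p_endline, alpha=0.05, power=0.80, design_effect=1.0):
    """Outlets needed in each survey round to detect a change in a proportion.

    Standard two-proportion sample size formula; every default is an
    illustrative assumption, not a value reported for the AMFm evaluation.
    """
    z_alpha = NormalDist().inv_cdf(1 - alpha / 2)  # two-sided significance level
    z_beta = NormalDist().inv_cdf(power)           # desired statistical power
    p_bar = (p_baseline + p_endline) / 2
    numerator = (
        z_alpha * math.sqrt(2 * p_bar * (1 - p_bar))
        + z_beta * math.sqrt(
            p_baseline * (1 - p_baseline) + p_endline * (1 - p_endline)
        )
    ) ** 2
    n_simple_random = numerator / (p_endline - p_baseline) ** 2
    # Cluster samples carry less information per outlet than a simple random
    # sample, so the requirement is multiplied by the assumed design effect.
    return math.ceil(n_simple_random * design_effect)


if __name__ == "__main__":
    # Hypothetical scenario: availability rising from 30% to 50%,
    # that is, a 20 percentage point change.
    print(outlets_per_round(0.30, 0.50, design_effect=2.0))
```

Under these assumptions, a larger design effect or a smaller detectable change both raise the number of outlets required, which is one reason the choice of a 20 percentage point threshold has direct cost implications for a survey.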

The next component of the evaluation design relied on the availability of careful documentation of the process of AMFm implementation and the context of the implementation in specific countries, such as the exact settings of the implementation and other activities that were occurring at the time of implementation. The evaluation also included a few extra studies, including one that looked specifically at distribution and use in remote areas of Ghana and Kenya and the role of the AMFm logo.

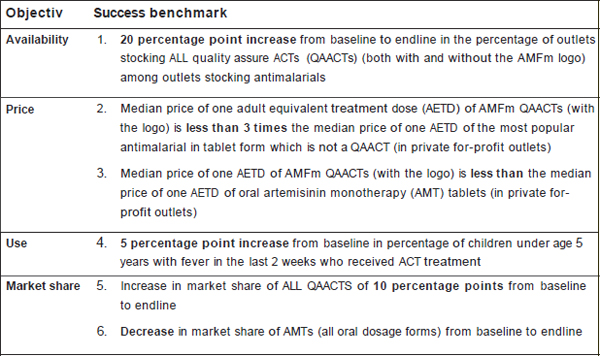

Goodman noted that once the evaluation was in progress, the Global Fund Secretariat contracted with an independent group from the University of California, San Francisco, to develop recommendations for success metrics that would be reasonable to expect 1 year after the effective start date of AMFm (see Figure 4-2). “When we were doing the evaluation, what we were actually testing was not whether there had been a significant change over time but whether countries had significantly exceeded the success metric and whether we could be confident that they had met these targets,” said Goodman.
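The distinction Goodman draws, between testing for any change over time and testing whether a benchmark was exceeded, can be sketched as a one-sided test of an endline proportion against a fixed target. The code below is a simplified illustration only and is not the evaluation team's actual analysis: the one-sample z-test, the design effect adjustment, the function name `exceeds_benchmark`, and all of the numbers are assumptions.

```python
import math
from statistics import NormalDist


def exceeds_benchmark(p_observed, n_outlets, benchmark, design_effect=1.0, alpha=0.05):
    """One-sided z-test of the null hypothesis: true proportion <= benchmark.

    Returns the z statistic, the one-sided p-value, and whether the benchmark
    is judged to have been significantly exceeded at level alpha.
    """
    # Shrink the sample to its effective size to allow for cluster sampling.
    effective_n = n_outlets / design_effect
    standard_error = math.sqrt(benchmark * (1 - benchmark) / effective_n)
    z = (p_observed - benchmark) / standard_error
    p_value = 1 - NormalDist().cdf(z)
    return z, p_value, p_value < alpha


if __name__ == "__main__":
    # Hypothetical endline result: 70% availability among 400 outlets,
    # compared with an assumed success metric of 60%.
    z, p_value, exceeded = exceeds_benchmark(0.70, 400, 0.60, design_effect=2.0)
    print(f"z = {z:.2f}, one-sided p = {p_value:.4f}, exceeded = {exceeded}")
```

Framed this way, a country can show a large and statistically significant improvement over baseline and still fail the test if its endline level sits at or only slightly above the benchmark.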

FIGURE 4-2 Success metrics for AMFm evaluation.

SOURCE: Goodman, 2014.

Goodman then highlighted a number of strengths and limitations of the AMFm evaluation. One of the strengths was that the evaluation was conducted in all eight operational pilot projects, which represented a wide range of contexts. The primary outlet survey data were from nationally representative surveys and drew on well-developed methods being used by the ACTwatch monitoring program (more information is available at http://www.actwatch.info; accessed April 10, 2014). The evaluation also had careful standardization of data collection and analysis across the pilots, and although actual data collection was done by different agencies, not by the evaluation team, the evaluation team was involved in quality assurance throughout the data collection process. While there were no controls for this evaluation, the team was able to assess plausibility using the carefully documented information on process and context, and it was able to conduct its evaluation independently.

Regarding limitations of the evaluation, Goodman listed the short timeline for the evaluation between AMFm implementation and the endline outlet survey in some countries as a challenging issue, along with the need to rely on secondary household survey data to assess one of the key outcomes. While the evaluation did cover eight countries, that is still a limited number, so extrapolation to countries with different antimalarial markets needs to be done with caution. Also, a number of areas were beyond the scope of this evaluation, such as patient adherence to ACT, the prevalence of counterfeit drugs, and targeting by parasitemia status—that is, whether the patients receiving ACT actually had malaria.

One of the biggest concerns, said Goodman, was the fact that there were no comparators for the evaluation. One possible methodological solution was that, while it was not possible to create comparison areas within the pilot settings, it might have been possible to create comparison areas in other countries. The evaluation team debated this approach and concluded that the challenges of choosing comparison countries were too great for this evaluation. “There are so many big differences in context and in the implementation of other malaria control strategies,” said Goodman. These differences between pilot AMFm countries and potential comparison countries raised important questions. “Are we trying to compare with the status quo in other countries? Are we trying to compare with other countries that have implemented other strategies, like community health workers, for instance? It is quite difficult to know how to go about those comparisons even if they were valid.”

She noted, too, there is a selection bias in terms of meeting the criteria to be an AMFm pilot country and the challenge of finding additional comparison countries that matched those same criteria. There was also a concern about the potential flow of drugs across national borders and other ways in which the AMFm pilot in some countries might have led to effects in other nonpilot countries—as well as the fact that AMFm may have had a role in shaping the global market for relatively low-cost ACTs, which could have influenced the market as a whole and the cost of production. “One cannot be absolutely sure what the counterfactual results would have been in the absence of AMFm,” she noted. Finally, collecting sufficient comparator data in other countries would have been costly.

THE SEARCH FOR GOOD PRACTICE IN COMPLEX EVALUATIONS

In the final presentation of this panel, Elliot Stern, emeritus professor of evaluation research at Lancaster University and visiting professor at Bristol University, discussed some of the lessons he has learned about good practices in evaluation design during his career and his experiences reviewing evaluations, sitting on quality assurance bodies, and helping to draw up terms of reference. He said, “What is amazing, despite all the guidance and debates and conferences like this and the investments that have taken place, is how difficult it is in truly complex areas to find good practice.” For example, even in major evaluations commissioned by major bodies, evaluation questions can be absent or poorly formulated, context is often ignored, and there is poor use of theory even when there is an acknowledged need for explanations of how and why. Other shortcomings that he noted include poor stakeholder engagement, which is often associated with weak construct validity; the continued application of Humean designs that look for the single cause of a single effect even after recognizing that there are multiple causes with multiple effects; weaknesses in the bases for causal claims; and poor integration of multiple methods.

To illustrate the challenges of finding good practices for evaluating complex programs, Stern discussed evaluations of the Consultative Group on International Agricultural Research (CGIAR) Natural Resource Management Research (NRM-R) programs, and in particular, the Research Program on Aquatic Agricultural Systems being run in the Lake Victoria area, the Mekong Delta, and the Coral Triangle. The NRM-R, explained Stern, runs multileveled, multilocation interventions operating at the farm, landscape, regional, and global levels. It combines participatory and technological interventions in ways designed to change the behavior of individuals, households, institutions, and markets, as well as to change policies. NRM-R deploys research and local tacit knowledge through action research, and it engages policy makers, scientists, and community partners to collaboratively plan for change that will in the end strengthen natural resource management in the target region.

Looking at the goals of the Research Program on Aquatic Agricultural Systems, Stern characterized them as generic and difficult to pin down. “We are talking about sustainable productivity gains for system-dependent households, improved markets and services for the poor and vulnerable, strengthened resilience and adaptive capacity, reduced gender disparities in access to and control of resources and decision making, and improvements in policy and institutions to support pro-poor, gender-equitable, and sustainable development,” he said. Evaluating outcomes for these goals is challenging without reducing them to specific activities, but doing so “involves a trade-off between being able to say something about the program as opposed to being able to say something about a particular scheme in a particular area,” said Stern.

One of the building blocks of a successful evaluation is developing an adequate theory of change. Doing so requires identifying the critical links in program planning, implementation, and delivery and identifying critical conditions, assumptions, and supporting factors. It is also necessary to identify rivals to the “official” program theory, not simply develop an evaluation that has what Stern called a “confirmatory bias” resulting from a design that evaluates a program from the perspective of how it is supposed to work rather than how a program actually is working. Another component of an adequate theory of change is a means of assessing the contexts of program implementation. In terms of causality, it is important to remember that most programs do not cause results singlehandedly; rather, they make a difference or contribute to results. Rarely, said Stern, is a program both necessary and sufficient by itself to produce positive results without other supporting factors. Using fish farming as an example, the initiative components could include start-up funding, provision of fingerlings, low-cost fish food, and advice on improved fish farming techniques. Supporting factors could be an adequate number of farmers initially convinced to try fish farming and an adequate market for fish produced over and above a family’s consumption. In this case, the initiative factors made a difference, but were more likely to do so if the supporting factors were also present.

The evaluation questions have important implications concerning design choices, yet too often evaluators place insufficient emphasis on how these questions are formulated, Stern said. Asking if an observed change can be attributed to the intervention requires a counterfactual design; asking how an intervention makes a difference is difficult to answer without theory; asking whether an intervention will work elsewhere requires some consideration of contexts and mechanisms.

The other major building block for developing a good evaluation design involves understanding the attributes of a program and designing in a way that will account for them. One of the attributes of the NRM-R program, for example, is that it recognizes that ecosystems mediate social and ecological systems. As a result, multidisciplinary knowledge and theory need to be used when designing an evaluation. In addition, the lack of market-based coordination of resource use by stakeholders means that an evaluation design will have to account for the likelihood of conflicts and will need methods to evaluate conflicts and collective action. The presence of level-specific effects in a multilevel program highlights the importance of nested designs that may require different theories and methods at each level, which creates the challenge of achieving vertical integration between levels. Uncertain and extended change trajectories, in which markets change rapidly but landscapes change over decades, require the use of iterative rather than fixed designs accompanied by extended longitudinal modeling. Stern noted, too, that because systems integration involves trade-offs, the use of game theory and modeling may be needed to capture both trade-offs and holistic factors that might be having an impact.

“The key message that I have been trying to emphasize is not only that design is important, but if you want quality evaluations, you have to invest in it,” Stern said in closing. “We suggest that up to 20 percent of the budget available for evaluation ought to be invested in good design work. That does not mean sitting and writing a proposal, but it does mean revisiting the design issues over time. The more complex the program, the more design matters—and it takes time. If you get the logic of description, explanation, and causal inference right, methods follow more easily.”

OTHER TOPICS RAISED IN DISCUSSION

To start the discussion, moderator and workshop planning committee member Kara Hanson, who is professor of health system economics at the London School of Hygiene and Tropical Medicine, asked the panelists to speak briefly about (1) how they conceived of context in designing their evaluation and (2) the methodological approaches that exist to understand the effects of context, particularly for complex interventions. Goodman replied that she and her colleagues tried to collect data on context within each of the countries being studied. “For instance, what is the antimalarial market like in a given country and how has that affected how AMFm is rolled out,” she said. “If there has been a change in one of the key outcomes, is there anything else that is plausibly responsible for this apart from AMFm? We tried to look at all of those things mainly through qualitative and some quantitative data toward the endline.”

The Global Fund, said Low-Beer, takes an open approach to causation that considers alternative hypotheses involving context as opposed to looking just at whether an individual intervention produces an observed effect. In addition, he and his colleagues often start with impacts and outcomes and then work back along the causal chain to try to identify other hypotheses of change that could be dependent on context. Stern added, “There is an overall question of the object of evaluation, which defines the context. If you take a realist ontology where you are actually looking at mechanisms in context and being able to understand why things work in one place and not in another, that inevitably drives you toward trying to understand how is it that the context has made the difference. To some extent, the rediscovery of context can occur much later in the train of events. It can occur when you get to the stage that you have this puzzling data. And it may only be then that the nature of that context might be revealed.”

In the PEPFAR evaluation, said Rugg, the issue of context came up in the early phases of the design. She explained that a conceptual model was developed that embedded the PEPFAR operations in the context of many other factors in each country. Then, in the implementation phase, the evaluators looked at a variety of indicators that were compared across countries to give a contextual background of the environment in which PEPFAR was operating. The significant qualitative data collection component also explored contextual issues in the countries that were visited. Rugg added that what is equally important but rarely considered concerning context is the influence of individuals and charismatic leaders on a program’s success or failure.

In response to a question from Sangeeta Mookherji from George Washington University about whether the panelists were thinking about supporting factors as part of context, Hanson noted and Stern agreed that the field needs some clarification in the language to communicate such issues clearly. Stern added, “It is quite different to talk about nonprogram things and those things that we can influence and the much more causative specific contextual or supporting factors that might affect a particular change in a particular place.”

A discussion about stakeholder involvement in planning and reviewing evaluations, prompted by a question from Carlo Carugi of the Global Environment Facility, highlighted a variety of reasons why it may be useful to involve stakeholders at different stages of the evaluation process. Rugg noted that the PEPFAR evaluation team had opportunities to engage with staff from PEPFAR and Congress to understand the intent of the mandate, as well as to discuss the strategic plan for the evaluation after the publication of a planning phase report, when there was also an opportunity for public engagement. Bridget Kelly, one of the two IOM study co-directors for the evaluation, added that an operational planning phase included two pilot country visits, which in addition to being data collection trips were also an opportunity to elicit that kind of stakeholder understanding of how things operate in practice, to learn what kind of data requests would be feasible and timely, and to pilot tools for primary data collection.

Goodman said that the AMFm team held a meeting of the pilot countries after producing a first draft of their report to debate the results, and during this meeting at least some of the countries were, as she put it, “not feeling happy with the way the evaluation was framed for their country.” She added that the international malaria community was brought in at the design stage as part of the advisory team that supported the development of the evaluation design.

Finally, in response to a question from Ruth Levine, director of the Global Development and Population Program at the William and Flora Hewlett Foundation, about why evaluations break what she called “Evaluation 101 rules,” Stern singled out overambitious and underfunded terms of reference for evaluations, poor governance, and insufficient experience on the part of those conducting evaluations.