6

Evidence Integration for Hazard Identification

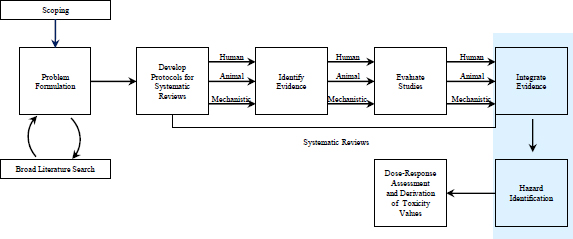

Hazard identification is a well-recognized term in the risk-assessment field and was codified in the 1983 NRC report Risk Assessment in the Federal Government: Managing the Process (NRC 1983). In the present report, hazard identification is understood to answer the qualitative scientific question, Does exposure to chemical X cause outcome Y in humans? Evidence integration is understood to be the process of combining different kinds of evidence relevant to hazard identification. In a typical assessment developed for the Integrated Risk Information System (IRIS), for example, evidence integration might involve observational epidemiologic studies, experimental studies of animals and possibly humans, in vitro mechanistic studies, and perhaps other mechanistic knowledge. If the answer to the qualitative question for a given outcome is affirmative, the US Environmental Protection Agency (EPA) produces a quantitative estimate of toxicity by using selected studies to characterize the dose-response relationship with some estimate of uncertainty to yield a reference dose (RfD), a reference concentration (RfC), or a unit risk value for the given outcome. This chapter focuses on the qualitative question of hazard identification (that is, the hazard-identification process, see Figure 6-1). Chapter 7 considers the quantitative process that follows hazard identification.

In this chapter, the committee first discusses some concerns about current terminology, next addresses the kinds of evidence that must be combined, and then outlines some organizing principles for integrating evidence. A review of the approach that EPA has recently taken and its responsiveness to the recommendations of the NRC formaldehyde report (NRC 2011) follows. Options for integrating evidence are then discussed with a focus first on qualitative approaches and then on quantitative approaches. The final section of the chapter provides the committee’s findings and recommendations, which are offered in light of consideration of how EPA might best increase transparency and implement a process that is feasible within its time and resource constraints and that is ultimately scientifically defensible.

FIGURE 6-1 The IRIS process; the hazard-identification process is highlighted. The committee views public input and peer review as integral parts of the IRIS process, although they are not specifically noted in the figure.

An early challenge faced by this committee was to determine how the phrase weight of evidence (WOE) is used by EPA and others. The term is often used by EPA in the context of a WOE “narrative.” In the case of a carcinogenic risk assessment, the narrative consists of a short summary that “explains what is known about an agent’s human carcinogenic potential and the conditions that characterize its expression” (EPA 2011). In EPA’s Guidelines for Carcinogen Risk Assessment, the WOE narrative “explains the kinds of evidence available and how they fit together in drawing conclusions, and it points out significant issues/strengths/limitations of the data and conclusions” (EPA 2005, p. 1-12). Current guidelines for evidence integration are given in Section 5 of the preamble for IRIS toxicological reviews, and guidelines for writing a WOE narrative are given specifically in Section 5.5 (EPA 2013a, pp. B-5 to B-9). Guidance has also been provided in some of the outcome-specific guidelines (for example, EPA 2005).

Rhomberg et al. (2013), in a review article surveying best practices for WOE frameworks or analyses, describe WOE as encompassing all of causal inference. They state that “in the broadest sense, almost all of scientific inference about the existence and nature of general causal processes entails WOE evaluation” (Rhomberg et al. 2013, p. 755). They then describe the wide array of meanings attached to the phrases systematic review and weight of evidence as follows:

Some terms are used differently in different frameworks. In particular, to some practitioners, the term “systematic review” refers specifically to the systematic assembly of evidence (for example, by using explicit inclusion and exclusion criteria or by using standard tabulation and study-by-study quality evaluation), while “weight of evidence” refers to the subsequent integration and interpretation of these assembled selected studies/data as they are brought to bear on the causal questions of interest. To others, “systematic review” refers to the whole process from data assembly through evaluation, interpretation, and drawing of conclusions; for still others, this whole suite of processes is subsumed under WoE. …when we refer to “WoE frameworks,” we mean approaches that have been developed for taking the process all the way from scoping of the assessment and initial identification of relevant studies through the drawing of appropriate conclusions.

The present committee found that the phrase weight of evidence has become far too vague as used in practice today and thus is of little scientific use. In some accounts, it is characterized as an oversimplified balance scale on which evidence supporting hazard is placed on one side and evidence refuting hazard on the other. That analogy neglects to account for the total weight on either side (that is, the scope of evidence available) and captures only where the balance stands. Others characterize WOE as a single scale on which different kinds of evidence have different weights. For example, a single human study with low risk of bias might be considered as providing the same evidential weight as three well-conducted animal studies. The weights might be adjusted according to the quality of the study design. This analogy neglects to account for the “weight for” vs the “weight against” hazard.
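The limitation of each analogy can be made concrete with a small sketch. It is purely illustrative and is not a method used by the committee or by EPA: the `Study` type, the labels, and the numeric weights are hypothetical, with the 3:1 ratio borrowed from the example above only for the sake of arithmetic.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Study:
    kind: str              # hypothetical label: "human" or "animal"
    supports_hazard: bool  # True if the study supports hazard


# Assumed weights, echoing the example in the text: one human study with
# low risk of bias counts as much as three well-conducted animal studies.
WEIGHTS = {"human": 3.0, "animal": 1.0}


def balance_scale(studies):
    """Balance-scale view: net tilt (weight for minus weight against)."""
    return sum(
        WEIGHTS[s.kind] * (1 if s.supports_hazard else -1) for s in studies
    )


def single_scale(studies):
    """Single-scale view: total weight of evidence, ignoring direction."""
    return sum(WEIGHTS[s.kind] for s in studies)


# A sparse evidence base and a rich but mixed one.
sparse = [Study("animal", True)]
rich_mixed = [Study("human", True), Study("human", False), Study("animal", True)]
# A rich evidence base that uniformly refutes hazard.
rich_against = [Study("human", False), Study("human", False), Study("animal", False)]

# Same tilt, very different scope of evidence.
print(balance_scale(sparse), balance_scale(rich_mixed))
# Same total weight, opposite implications for hazard.
print(single_scale(rich_mixed), single_scale(rich_against))
```

Under these assumed weights, the balance-scale summary cannot tell the sparse evidence base from the rich one, and the single-scale summary cannot tell mixed evidence from evidence that uniformly refutes hazard. The numeric weights themselves have no empirical basis and serve only to show what each summary discards.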

Perhaps the overall idea of the WOE for hazard should combine both characterizations. It is evident, however, that its use in the literature and by scientific agencies, including EPA, is vague and varied. The present committee found the phrase evidence integration to be more useful and more descriptive of what is done at this point in an IRIS assessment—that is, IRIS assessments must come to a judgment about whether a chemical is hazardous to human health and must do so by integrating a variety of evidence. In this chapter, therefore, the committee uses the phrase evidence integration to refer to the process that occurs after assessment of all the individual lines of evidence (see Figure 6-1).

As described in previous chapters, the committee uses the phrase systematic review to describe a process that ends before evidence integration and hazard identification (Figure 6-1). After hazard identification, the IRIS process turns to dose-response assessment and derivation of toxicity values. By defining systematic review as a process that ends before hazard identification, the committee is not implying that the process by which IRIS conducts hazard identification and dose-response assessment is or should be nonsystematic; it simply ensures that the committee’s use of the phrase systematic review is clear and consistent with current literature.

Finally, the committee makes a distinction between data and evidence. Although it is common to use the two somewhat interchangeably, they are not synonymous. As the report Ethical and Scientific Issues in Studying the Safety of Approved Drugs (IOM 2012, p. 122) states:

The Compact Oxford English Dictionary [Oxford Dictionaries 2011] defines data as “facts and statistics collected together for reference or analysis” and evidence as “the available body of facts or information indicating whether a belief or proposition is true.”

Data become evidence for or against a claim of hazard only after some sort of statistical or scientific inference.

EVALUATING STRENGTHS AND WEAKNESSES OF EVIDENCE

As discussed in Chapter 3, evidence on hazard can come from human studies, animal studies, mechanistic studies, background knowledge, and a host of other sources. Each source has its relative strengths and weaknesses, and Table 6-1 highlights some of the important ones. In using integrative approaches, those considering the evidence should take the strengths and weaknesses of each source into account.

ORGANIZING PRINCIPLES FOR INTEGRATING EVIDENCE

One challenge that EPA and other regulatory agencies face when attempting to establish guidelines for integrating evidence is that the amount and quality of the various types of evidence can vary substantially from one chemical to another. For example, a small number of environmental contaminants—such as arsenic, dioxins, polychlorinated biphenyls, and formaldehyde—have extensive human data, often from relatively well-designed cohort studies, substantial animal data from several animal models, and mechanistic information. On a larger number of chemicals, there are few or no high-quality human data, but there are a small number of animal studies and some in vitro mechanistic studies. For the great majority of the chemicals in the environment that might cause harm, however, there are virtually no human or animal data, although there might be some scientific knowledge relevant to a chemical’s potential toxicity or putative mechanism (often inferred from structurally similar compounds).1

That variation in the evidence base invites different organizing principles by which evidence could be combined into a single judgment. One option is to organize the evidence around potential mechanisms by which a chemical might cause harm. As models of chemical action improve, it might become possible to predict the toxicity of a chemical reasonably accurately merely by using sophisticated models of its interaction with human cells and tissues. As it is clearly infeasible to generate human or animal data on the more than 80,000 chemicals in commercial use in the United States, that approach might be the only option for the great majority of chemicals, and it is the approach proposed in the NRC report Toxicity Testing in the 21st Century: A Vision and a Strategy (NRC 2007). In fact, EPA’s strategic plan for evaluating chemical toxicity provides a framework for the agency to incorporate the new scientific paradigm into future toxicity-testing and risk-assessment practices (EPA 2009).

_____________________________

1As noted in Chapter 3, the committee is using the term mechanism of action (or mechanism) in this report rather than mode of action simply for ease of reading; it recognizes that these terms can have different meanings.

| Source of Uncertainty | Strength | Weakness |
| --- | --- | --- |
| Interspecies extrapolations | HE: Not applicable, because not needed. | HE: Not applicable, because not needed. |
| | EA: Can use multiple species, and this provides a broad understanding of species differences. | EA: Inherent weakness when interspecies extrapolation from animals to humans is required. |
| | MECH: Can identify cellular, biochemical, and molecular pathways that are similar or different in humans and the test species and thus lend strength to the veracity of the extrapolation. | MECH: For a given chemical, multiple mechanisms might be involved in a given end point, and it might not be evident how different mechanisms interact in different species to cause the adverse outcome. |
| Intraspecies extrapolation | HE: Often able to study effects in heterogeneous populations. | HE: Many studies involve occupational cohorts, which do not reflect the general population. |
| | EA: Effects seen during different life stages (such as pregnancy and lactation) can be evaluated. Use of transgenic animals can provide important mechanistic data. | EA: Often rely on a few strains in which animal genetics, life stage, diet, and initial health state are controlled. |
| | MECH: Observed differences between strains of a common test species (such as Fisher 344 rats and Sprague-Dawley rats) might be readily explained by different pathways. Comparison with human in vitro mechanistic data might allow better selection of the most appropriate animal model for predicting human response. | MECH: Putative mechanism of the adverse outcome might not be known, and mechanistic data might not reveal the basis of differences within a species. |
| High-dose to low-dose extrapolation | HE: Often better suited for considering actual range of population exposures. | HE: Occupational exposure is often higher than that seen in the general human population. |
| | EA: Wide range of exposures is possible, and this allows better estimation of quantitative dose-response relationships. | EA: Exposures used are often orders of magnitude higher than those seen in the general human population. |
| | MECH: Dose-related differences in ADME properties and pharmacodynamic processes might be used to adjust for differences in rate of response between high and low doses. | MECH: The ultimate molecular target for toxicity might not be known at low or high doses, so mechanism might not accurately predict high-dose to low-dose extrapolations. |
| Acute to chronic extrapolation (temporal considerations) | HE: Might closely mimic exposure durations seen in the general population. | HE: Occupational exposure durations are often shorter (years vs lifetime; 8 hr/day vs 24 hr/day) than those seen in the general human population. |
| | EA: Wide range of exposure durations is possible. | EA: Highly dependent on study design. |
| | MECH: Provides invaluable information on whether a product or effect can accumulate on repeated exposure and whether repair pathways or adaptive responses can lead to outcomes that are significantly different between single and repeated exposures. | MECH: If mechanism differs between acute or chronic response, the information on one might not be informative of the other. |
| Route-to-route extrapolation | HE: Often involve route of exposure relevant to the general human population. | HE: Data might be available on only one route of exposure. |
| | EA: Can involve route of exposure relevant to the general human population. | EA: Often uses an exposure method that requires extrapolation of data (for example, diet to drinking water). |
| | MECH: Pharmacokinetic differences (ADME, PBPK) might facilitate more accurate identification of target-tissue dose from different exposure pathways. | MECH: Mechanism might be tissue-specific and therefore route-dependent as the route determines the initially exposed tissue. |
| Other considerations | HE: Can evaluate cumulative exposures and health effects. | HE: Long lag time to identify some effects. Increased potential for exposure and outcome misclassification and confounding. Variable cost. |
| | EA: Shorter animal lifespans allow for more rapid evaluation of hazards. Reduced misclassification of exposures and outcomes. Allows examination of full spectrum of toxic effects. | EA: Multiple extrapolations required. Variable cost. |
| | MECH: Conservation of fundamental biologic pathways (such as cell-cycle regulations, apoptosis, and basic organ-system physiology) might allow quick and inexpensive identification of potential adverse effects of a new chemical in the absence of human or animal in vivo data. | MECH: Identification of relevant pathways in producing the toxic response might be difficult because of the lack of understanding of pathobiologic processes. |

Organizing evidence around mechanism for chemicals on which only some human or animal data are available, however, seems inappropriate. Consider the Food and Drug Administration (FDA) and drug safety. If FDA were required to organize drug safety around mechanism, it would be nearly impossible to regulate many important drugs because the mechanism is often not understood, even for drugs that have been studied extensively. For example, it is known on the basis of randomized clinical trials that estrogen plus progestin therapy causes myocardial infarctions even though the mechanism is not understood (Rossouw et al. 2002). Randomized clinical trials are so successful partly because they bypass the need for mechanistic information and provide an indication of efficacy. Similarly, epidemiologic studies that identify unintended effects are often credible because explanations of an observed association other than a causal effect are implausible. For example, the associations between statins and muscle damage and between thalidomide and birth defects are widely accepted as causal; mechanistic information played a minor role, if any, in the determination. The history of science is replete with solid causal conclusions in advance of solid mechanistic understanding.

A second option is to organize the case for hazard around the kinds of evidence either actually or potentially available. Different kinds of evidence have more or less direct relevance to the determination of hazard and can often be indirectly relevant by virtue of bearing on the relevance of other kinds of evidence. As discussed previously, each major type of evidence has inherent strengths and weaknesses, and the three major lines of research used by the IRIS program produce complementary findings. For example, mechanistic knowledge can often be informative about the relevance of animal-model data, as exemplified in the approaches of EPA and the International Agency for Research on Cancer (IARC).

In considering which kind of evidence is more or less important in driving a conclusion about hazard, human studies are historically taken to be more important than animal studies. For example, the EPA guidelines for cancer risk assessment state that classification of a chemical as a human carcinogen is reached when there is “convincing epidemiologic evidence of a causal association between human exposure and cancer” (EPA 2005, p. 2-54; emphasis added). According to the guidelines, the determination can be made irrespective of the strength of the animal data. In other words, in cases in which extensive human data strongly support a causal association between exposure and disease and the studies are judged to have a relatively low risk of bias, the human evidence can outweigh animal and other evidence, no matter what it is. Furthermore, a judgment of “carcinogenic to humans” can be justified when human studies show only an association (not a causal association) if they are buttressed by extensive animal evidence and mechanistic evidence that support a conclusion of causation (EPA 2005, p. 2-54).

When human data are nonexistent, are mixed, or consistently show no association and an animal study finds a positive association, the importance of mechanistic data is increased. Fundamental toxicologic questions related to dose, exposure route, exposure duration, timing of exposure, pharmacokinetics, pharmacodynamics, and mechanisms would then play an even more important role in determining the relevance of positive in vivo animal data, especially when the human data are negative or inconclusive.

A final option for organizing evidence integration might be called an alternative interpretation, which Rhomberg et al. (2013, p. 755) argue is desirable and will improve transparency:

A WoE evaluation is only useful and applicable to constructive scientific debate if the logic behind it is made clear; with that, it is often necessary to take the reader through alternative interpretations of the data so that the various interpretations can be compared logically. This approach does not eliminate the need for scientific judgment, and often may not lead to a definitive choice of one interpretation over the other, but it will clearly lay out the logic for how one weighs the evidence for and against each interpretation. Only in this way is it possible to have constructive scientific debate about potential causality that is focused on an organized, logical “weighing” of the evidence.

The alternative interpretations are implied to arise from different potential mechanisms, but the committee does not view mechanisms as central to this sort of organizing framework. Rather, the pattern of human, animal, and mechanistic evidence analyzed might be explained in various ways. For example, if human data show little association between exposure and disease but animal data provide consistent evidence of toxicity, one explanation might be that the chemical is toxic to animals but not to humans because of some difference in metabolic response. Another explanation might be that the human data were consistently underpowered statistically, rife with measurement difficulties, or taken from populations exposed to low doses of the chemical. The organizing principle for integrating the evidence in this case would be to consider each explanation and describe the evidence (of any type) that supports it and refutes it.

Whether one organizes evidence around mechanism, kinds of evidence, or alternative interpretations, it has to be integrated into a single judgment on hazard. Integrating evidence rationally requires an implicit or explicit set of guidelines. The guidelines for integration are often called a framework, which is defined as a clear process or a clear set of guidelines for evidence integration. Such frameworks range from ones that involve a rigid, algorithmic integration process to ones that provide loose guidelines and allow experts substantial freedom in applying them.

It seems impossible and undesirable to build a scientifically defensible framework in which evidence is integrated in a completely explicit, fixed, and predefined recipe or algorithm. There are no empirical data on the basis of which fixed weighting schemes are more likely to produce true answers than other schemes, and getting such data is far off. Furthermore, substantial expert judgment in making categorizations according to such schemes is unavoidable. On the other hand, simply putting a group of experts into a room and asking them to consider the evidence in its totality and to emerge with a decision seems equally undesirable and endangers transparency. To ensure transparency, it seems desirable to have an articulated framework within which to consider the relevance of different evidence to the causal question of hazard identification. Various options for evidence integration are considered further below.

Common considerations (or quasicriteria) used in many frameworks in which various bodies of evidence must be integrated to reach a causal decision are the “Hill criteria for causality,” a set of guidelines first articulated by Austin Bradford Hill in 1965 to deal with the problem of integrating evidence on environmental exposure and disease, particularly with respect to smoking and lung cancer. EPA states that “in general, IRIS assessments integrate evidence in the context of Hill (1965)” (EPA 2013a, p. 13). Hill’s guidelines are meant as considerations in assessing the move from association to causation (causal association). They include strength of association, consistency, specificity, temporality, biologic gradient, plausibility, coherence, experimental evidence, and analogy. The Hill criteria are widely regarded as useful (Glass et al. 2013) and, as noted in the IRIS preamble, explicitly constitute the basis on which EPA should evaluate the overall evidence on each effect (EPA 2013a, Appendix B, p. B-5).

The Hill guidelines, however, are by no means rigid guides to reaching “the truth.” Rothman and Greenland (2005) used a series of examples to illustrate why the Hill criteria cannot be taken as either necessary or sufficient conditions for an association to be raised to a causal association. They provide counterexamples to each of Hill’s criteria, some from the very example (smoking) that Hill considered in his 1965 article. For example, they note that although the association between smoking and cardiovascular disease is comparatively weak, as is the association between second-hand smoke and lung cancer, both relationships are now considered causal (Rothman and Greenland 2005). They further note that examples of strong associations that are not causal also abound, such as birth order and Down syndrome. There are many examples of causal inference in which there is no known mechanism. Therefore, although the guidelines can usefully inform an evidence-integration narrative, Rothman and Greenland caution against using the Hill guidelines as “checklist criteria,” a warning that the present committee considers appropriate.

The 2011 NRC formaldehyde report made several recommendations for evidence integration in IRIS assessments (see Box 6-1). As in the other recommendations, there is an emphasis on transparency and standardization of approach.

The draft preamble (EPA 2013a, Appendix B) and the draft handbook (EPA 2013a, Appendix F) contain the most recent guidelines on evidence integration for IRIS assessments. Whereas the preamble and the handbook provide reasonably extensive guidelines on evidence integration within evidence streams, the preamble does not provide guidelines for evidence integration among evidence streams (only what hazard descriptors should be used), and instructions for evidence integration have yet to be written for the handbook. Therefore, this section discusses the guidelines that EPA has outlined and how evidence integration has been carried out and described in recent IRIS assessments of methanol and benzo[a]pyrene (EPA 2013b,c). Potential revisions that EPA might want to consider are provided. The committee recognizes that the methanol and benzo[a]pyrene assessments do not reflect all changes that EPA has made or plans to make to the IRIS process in response to the recommendations in the NRC formaldehyde report.

Two guiding principles are apparent in the committee’s review of the current IRIS process. First, as of fall 2013, EPA still relies on a guided expert judgment process (discussed below). EPA (2013a, p. 14) states that hazard identification requires a critical weighing of the available evidence, but this process “is not to be interpreted as a simple tallying of the number of positive and negative studies” (EPA 2002, p. 4-12). EPA (2013a, p. 14) further states that “hazards are identified by an informed, expert evaluation and integration of the human, animal, and mechanistic evidence streams.” Second, overall conclusions regarding causality are to be reached and justified according to the Hill criteria (EPA 2013a).

BOX 6-1

• Strengthened, more integrative and more transparent discussions of weight of evidence are needed. The discussions would benefit from more rigorous and systematic coverage of the various determinants of weight of evidence, such as consistency.

• Review use of existing weight of evidence guidelines.

• Standardize approach to using weight of evidence guidelines.

• Conduct agency workshops on approaches to implementing weight of evidence guidelines.

• Develop uniform language to describe strength of evidence on noncancer effects.

• Expand and harmonize the approach for characterizing uncertainty and variability.

• To the extent possible, unify consideration of outcomes around common modes of action rather than considering multiple outcomes separately.

Source: NRC 2011, pp. 152, 165.

Section 5 of the IRIS preamble articulates guidelines for “evaluating the overall evidence of each effect” (EPA 2013a, Appendix B). Rather than giving an explicit process for evaluating the overall evidence, the preamble states that “causal inference involves scientific judgment, and the considerations are nuanced and complex” (EPA 2013a, p. B-5). It also describes evidence integration within each kind of evidence stream—evidence in humans, evidence in animals, and mechanistic data to identify adverse outcome pathways and mechanisms of action—before combining different kinds of evidence. For evidence in humans, IRIS assessments are to “select a standard descriptor” from among the following (EPA 2013a, p. B-6):

• “Sufficient epidemiologic evidence of an association consistent with causation.”

• “Suggestive epidemiologic evidence of an association consistent with causation.”

• “Inadequate epidemiologic evidence to infer a causal association.”

• “Epidemiologic evidence consistent with no causal association.”

No detailed process is suggested for arriving at a classification other than relying on expert judgment that is based on the aspects listed above. A subset of the Hill guidelines is offered as relevant for integrating the evidence in animals. For integration of mechanistic evidence, IRIS assessments are to consider the following three questions (EPA 2013a, pp. B-7 to B-8):

1. “Is the hypothesized mode of action sufficiently supported in test animals?”

2. “Is the hypothesized mode of action relevant to humans?”

3. “Which populations or lifestages can be particularly susceptible to the hypothesized mode of action?”

For overall evidence integration, an IRIS assessment must answer the causal question, “Does the agent cause the adverse effect?” (EPA 2013a, p. B-8). It then must summarize the overall evidence with a “narrative that integrates the evidence pertinent to causation” (EPA 2013a, p. B-8). The narrative should target a qualitative categorization, and two examples are offered in the IRIS preamble. The first is taken directly from the EPA guidelines for carcinogen risk assessment (EPA 2005, see Table 6-2). The second is taken from EPA’s integrated science assessments for the criteria pollutants (EPA 2010, see Table 6-3).

In summary, the draft IRIS preamble (EPA 2013a, Appendix B) gives guidelines as to what considerations ought to inform the experts’ integration of human, animal, and mechanistic evidence, and it gives extensive guidance on the qualitative categorization that the experts should use, but it articulates no systematic process by which the experts are to come to a conclusion. The draft handbook (EPA 2013a, Appendix F) gives extensive guidelines for synthesizing evidence within each stream but no guidelines for integrating evidence among streams. The guidelines and the summary descriptors offered for epidemiologic and other studies are reasonable, and similar ones have been used in many other organizations that have similar aims and problems, such as IARC and the National Toxicology Program (NTP).

Draft IRIS Assessment of Methanol

A recent IRIS assessment of methanol (EPA 2013b) includes a section (Section 4.6, Synthesis of Major Noncancer Effects) that provides a summary of the dose-related effects that have been observed after subchronic or chronic methanol exposure. EPA (2013b, p. 4-77) provides the following conclusion in the summary:

Taking all of these findings into consideration reinforces the conclusion that the most appropriate endpoints for use in the derivation of an inhalation RfC for methanol are associated with developmental neurotoxicity and developmental toxicity. Among an array of findings indicating developmental neurotoxicity and developmental malformations and anomalies that have been observed in the fetuses and pups of exposed dams, an increase in the incidence of cervical ribs of gestationally exposed mice (Rogers et al., 1993b) and a decrease in the brain weights of gestationally and lactationally exposed rats (NEDO, 1987) appear to be the most robust and most sensitive effects.

TABLE 6-2 Categories of Carcinogenicity

| Category | Conditions |
| --- | --- |
| Carcinogenic to humans | There is convincing epidemiologic evidence of a causal association (that is, there is reasonable confidence that the association cannot be fully explained by chance, bias, or confounding); or there is strong human evidence of cancer or its precursors, extensive animal evidence, identification of key precursor events in animals, and strong evidence that they are anticipated to occur in humans. |
| Likely to be carcinogenic to humans | The evidence demonstrates a potential hazard to humans but does not meet the criteria for carcinogenic. There may be a plausible association in humans, multiple positive results in animals, or a combination of human, animal, or other experimental evidence. |
| Suggestive evidence of carcinogenic potential | The evidence raises concern for effects in humans but is not sufficient for a stronger conclusion. This descriptor covers a range of evidence, from a positive result in the only available study to a single positive result in an extensive database that includes negative results in other species. |
| Inadequate information to assess carcinogenic potential | No other descriptors apply. Conflicting evidence can be classified as inadequate information if all positive results are opposed by negative studies of equal quality in the same sex and strain. Differing results, however, can be classified as suggestive evidence or as likely to be carcinogenic. |
| Not likely to be carcinogenic to humans | There is robust evidence for concluding that there is no basis for concern. There may be no effects in both sexes of at least two appropriate animal species; positive animal results and strong, consistent evidence that each mode of action in animals does not operate in humans; or convincing evidence that effects are not likely by a particular exposure route or below a defined dose. |

Source: EPA 2013a, pp. B-8 to B-9.

TABLE 6-3 Categories of Evidential Weight for Causality

| Category | Conditions |
|---|---|
| Causal relationship | Sufficient evidence to conclude that there is a causal relationship. Observational studies cannot be explained by plausible alternatives, or they are supported by other lines of evidence, for example, animal studies or mechanistic information. |
| Likely to be a causal relationship | Sufficient evidence that a causal relationship is likely, but important uncertainties remain. For example, observational studies show an association but co-exposures are difficult to address or other lines of evidence are limited or inconsistent; or multiple animal studies from different laboratories demonstrate effects and there are limited or no human data. |
| Suggestive of a causal relationship | At least one high-quality epidemiologic study shows an association but other studies are inconsistent. |
| Inadequate to infer a causal relationship | The studies do not permit a conclusion regarding the presence or absence of an association. |
| Not likely to be a causal relationship | Several adequate studies, covering the full range of human exposure and considering susceptible populations, are mutually consistent in not showing an effect at any level of exposure. |

Source: EPA 2013a, p. B-9.

EPA goes on to use those and other studies to develop candidate RfCs. Although the discussion often provides details concerning the decision-making process used by EPA with more transparency than previous IRIS assessments, what remains somewhat lacking is an explicit description of the integrative approach used by EPA to combine data streams.

More specifically, the report notes that informative human studies of methanol are limited to acute exposures, but “the relatively small amount of data for subchronic, chronic, or in utero human exposures are inconclusive. However, a number of reproductive, developmental, subchronic, and chronic toxicity studies have been conducted in mice, rats, and monkeys” (EPA 2013b, p. xxiv). The report also notes, however, that the “enzymes responsible for metabolizing methanol are different in rodents and primates” (EPA 2013b, p. xxii), but then remarks that several PBPK models have been developed to account for these differences. Even though reproductive and developmental end points are identified as hazards in humans, the report notes that there is “insufficient evidence to determine if the primate fetus is more sensitive or less sensitive than rodents to the developmental or reproductive effects of methanol” (EPA 2013b, p. xxv). Interspecies differences are clearly important for methanol. Some central nervous system toxicities, such as blindness, have been observed in humans but not in rodents; the differences are most likely due to species differences in the rate of elimination of formic acid that is formed by the oxidation of methanol. Section 4.7 of the IRIS assessment includes a discussion of noncancer mechanisms and the uncertainties in how such mechanisms are shared between humans and rodents. It ultimately concludes by saying that “the effects observed in rodents are considered relevant for the assessment of human health” (EPA 2013b, p. xxvi).

The narrative is informative, detailed, and accessible. The issues are clear, but the narrative does not include any systematic discussion of evidence integration that uses the Hill criteria or any others, such as the ones listed in Table 6-3. Although the interspecies evidence is complicated (and in this case crucial), the overall evidence-integration statement is as follows (EPA 2013b, p. xxv):

Taken together, however, the NEDO (1987) rat study and the Burbacher et al. (2004a; 2004b; 1999a; 1999b) monkey study suggest that prenatal exposure to methanol can result in adverse effects on developmental neurology pathology and function, which can be exacerbated by continued postnatal exposure.

Draft IRIS Assessment of Benzo[a]pyrene

In August 2013, EPA released the draft Toxicological Review of Benzo[a]pyrene (EPA 2013c). The draft assessment shows that the IRIS program has taken several additional steps toward addressing the recommendations in the 2011 NRC formaldehyde report. In the executive summary, EPA concludes that benzo[a]pyrene is carcinogenic; that noncarcinogenic effects might include developmental, reproductive, and immunological effects; that animal studies clearly demonstrate these effects; and that human studies show associations between DNA adducts that are biomarkers of exposure and these effects. “Overall, the human studies report developmental and reproductive effects that are generally analogous to those observed in animals, and provide qualitative, supportive evidence for hazards associated with benzo[a]pyrene exposure” (EPA 2013c, p. xxxiii).

In Section 1 (Hazard Identification) of the IRIS assessment, an accessible and detailed narrative describes the human, animal, and mechanistic evidence on developmental, reproductive, and immunotoxicity. In Section 1.2, an explicit narrative describes the evidence on noncancer effects (1.2.1) and then on cancer (1.2.2). For noncancer outcomes, the Hill criteria are not mentioned, nor is there a qualitative categorization for any end point of the sort described in the preamble. Yet the narrative is clear and describes the evidence in a way that roughly matches the conditions given in Table 6-3. Section 1.2.2, which describes the evidence on carcinogenicity, is extremely clear and follows closely EPA’s 2005 Guidelines for Carcinogen Risk Assessment. It includes separate assessments of the human, animal, and mechanistic evidence according to those guidelines and includes evidence tables (for example, Table 1-18, p. 1-87) that connect evidential categorization with the supporting studies.

In summary, EPA is clearly moving toward implementing the recommendations of the NRC formaldehyde report but needs to continue to improve the narratives for noncancer outcomes to bring them into line with the preamble or the narrative for carcinogenicity. The two draft assessments that the committee reviewed are not consistent in their approach.

Evaluation of Agency Response

The purpose of this section was to assess how the IRIS process for evidence integration has evolved in response to the recommendations in the NRC formaldehyde report. First, the committee discussed how the guidelines for evidence integration have evolved. A preamble has been created that broadly describes how evidence-integration narratives might be structured to follow the Hill criteria. The committee discusses in more detail in the following section options for improving the guidelines included in such a preamble.

The recent IRIS assessments for methanol and benzo[a]pyrene include the new preamble, and in both cases, the evidence-integration narrative for cancer and noncancer outcomes is clear and informative. In both cases, however, the narrative for noncancer outcomes does not follow the guidelines as given in the preamble or other recent IRIS guidelines. In future assessments, EPA might either change the guidelines to follow more closely the kinds of narratives given for methanol and benzo[a]pyrene or ensure that the narratives more closely follow the guidelines included.

In this section, the committee describes several options for improving the IRIS process for evidence integration. The options are divided into qualitative approaches and quantitative approaches. A qualitative approach is a process whose output is a categorization of the overall evidence, for example, “the evidence suggests that it is likely that chemical X is immunotoxic.” A quantitative approach is a process that produces a quantitative output, for example, “the evidence suggests that there is at least a 75% chance that chemical X is immunotoxic.”

Qualitative Approaches for Integrating Evidence

Several approaches have been taken by the scientific and regulatory communities for integrating diverse evidence for hazard identification. Most commonly, the target is a qualitative categorization of the overall evidence as to whether an agent is a health hazard, that is, Can it cause cancer or some other adverse outcome, and with what degree of certainty can the judgment as to causation be made? For example, the 2004 Surgeon General’s report The Health Consequences of Smoking (DHHS 2004) and the 2006 report of the Institute of Medicine (IOM) Committee on Asbestos: Selected Health Effects (IOM 2006) use a four-level categorization for the overall strength of evidence on causation: “sufficient to infer a causal relationship,” “suggestive but not sufficient to infer a causal relationship,” “inadequate to infer the presence or absence of a causal relationship,” and “suggestive of no causal relationship.” Tables 6-2 and 6-3 provide qualitative categorizations used by EPA.

There are several methods for making a qualitative categorization of the evidence on hazard. One method can be described generally as guided expert judgment, of which there are many varieties. An alternative method for reaching a qualitative categorization of evidence can be described as a structured process. Those processes are described in the following sections.

Guided Expert Judgment

Recently, the IRIS program has undergone several organizational changes that should improve the expertise available to develop assessments. EPA has created disciplinary workgroups that have expertise in epidemiology, reproductive and developmental toxicity, neurotoxicity, toxicokinetic modeling, and biostatistics. Multiple disciplinary workgroups contribute to each assessment, and each disciplinary workgroup reviews the studies and develops the conclusions regarding all assessments on which there are studies in its field. The new workflow is meant to ensure that all sections of an assessment are developed by appropriate experts, and use of the workgroups contributes to quality control and consistency among assessments. The workgroups have been initially formed by drawing on expertise available in EPA’s National Center for Environmental Assessment. A long-term goal is to broaden the expertise available to the IRIS program by including other scientists from within and outside EPA. In addition, EPA has established a Systematic Review Implementation Group whose primary purpose is to coordinate the implementation of systematic review in the IRIS program. Evidence integration done internally by EPA experts is (or can be) an extremely efficient method for arriving at sensible conclusions. The process in which EPA experts integrate evidence via a set of guidelines through some form of internal deliberation, however, does not lend itself in any obvious way to being transparent or reproducible.

If EPA wants to achieve more transparency within the basic process of evidence integration that it now follows, one option is to expand its current practice by following the IARC model. IARC recruits a working group of experts on a particular substance from epidemiology (cancer in humans), toxicology (cancer in experimental animals), mechanistic or biologic disciplines, and exposure science. A two-stage process is then used by which the experts in each field are asked to come to consensus on a qualitative categorization of the evidence in their field (except exposure). In the second stage, the full and diverse group of experts is asked to integrate their group findings into an overall judgment of a chemical’s carcinogenicity (IARC 2006; Meek et al. 2007). IARC has a general scheme for bringing together the human and animal data and for modifying the resulting classification on the basis of the findings of mechanistic research.

The overall IARC process and the judgments required are guided by an extensive preamble (IARC 2011), but the process relies on expert judgment rather than on a structured approach to weighing and combining evidence. Experts who have acknowledged conflicts of interest are not allowed on the panels but can be present to observe without participating in formal deliberations, although on occasion they might be asked to respond to specific questions. According to the IARC (2011) guidelines,

In the spirit of transparency, Observers with relevant scientific credentials are welcome to attend IARC Monographs meetings. Observers can play a valuable role in ensuring that all published information and scientific perspectives are considered. The chair may grant Observers an opportunity to speak, generally after they have observed a discussion. Observers do not serve as meeting chair or subgroup chair, draft any part of a Monograph, or participate in the evaluations.

Thus, it is consistent with IARC that EPA could allow a wide variety of experts at least to observe the deliberations, including experts who have a potential conflict of interest and those representing key commercial or government concerns. Another option would be for EPA to provide more systematic requirements for the write-up and involve targeted scientific review of that part of the assessment.

Structured Processes

One approach for using a structured process to integrate evidence is the Grading of Recommendations Assessment, Development and Evaluation (GRADE) system, which is being used with increasing frequency in the development of clinical-practice guidelines and health-technology assessments (Guyatt et al. 2011a,b,c,d,e). A version of GRADE is also being used by NTP in its Office of Health Assessment and Translation to make judgments about whether a chemical is hazardous to humans (NTP 2013). GRADE is a system for rating the quality of evidence and the strength of recommendations. The quality of the evidence on each outcome being assessed is evaluated according to the following approach: evidence is downgraded if there is risk of bias, inconsistency, indirectness, imprecision, or publication bias in or among studies; and evidence is upgraded if there is a large effect size, a dose-response relationship, or elimination of all plausible confounding. The strength of the recommendations is rated on the basis of the evidence evaluation. The highest-quality, most upgraded evidence results in a strong recommendation, whereas the lowest-quality evidence results in a weak recommendation.

The main advantage of GRADE over other qualitative approaches for integrating evidence is that the judgments made to evaluate and integrate bodies of evidence are systematic and transparent. In practice, consensus approaches or multiple coders working independently are used to assess each GRADE criterion. The reasons for each conclusion or recommendation are then clearly summarized. The final product of a GRADE assessment is a qualitative, tabular summary of the evidence with a quality rating (0+ to 4+) for each outcome.
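The downgrading and upgrading logic described above can be pictured as a simple tally. The following Python sketch is only an illustration under stated assumptions: the function name `grade_rating`, the one-step adjustment per factor, and the starting values are hypothetical and are not taken from GRADE, which relies on judgment rather than arithmetic. Only the factor names and the 0-to-4 scale come from the text.

```python
# Factors named in the text. The scoring rule itself is a hypothetical
# simplification for illustration, not the GRADE procedure.
DOWNGRADE_FACTORS = {
    "risk_of_bias", "inconsistency", "indirectness",
    "imprecision", "publication_bias",
}
UPGRADE_FACTORS = {
    "large_effect", "dose_response", "no_plausible_confounding",
}


def grade_rating(start, concerns, strengths):
    """Return a quality rating on the 0..4 scale.

    start     -- assumed initial rating for the body of evidence
    concerns  -- downgrade factors judged to be present
    strengths -- upgrade factors judged to be present
    Each factor moves the rating by one step (an assumption).
    """
    concerns, strengths = set(concerns), set(strengths)
    unknown = (concerns - DOWNGRADE_FACTORS) | (strengths - UPGRADE_FACTORS)
    if unknown:
        raise ValueError(f"unrecognized factors: {sorted(unknown)}")
    score = start - len(concerns) + len(strengths)
    return max(0, min(4, score))  # clamp to the 0+ to 4+ range


if __name__ == "__main__":
    # Hypothetical body of observational evidence starting at 2.
    print(grade_rating(2, ["imprecision"], ["large_effect", "dose_response"]))
```

The value of such a tally lies less in the number than in the record it forces: each factor that moved the rating is named explicitly, which is the transparency benefit the text attributes to structured approaches.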

GRADE criteria for assessing the quality of evidence are closely aligned with the Hill criteria for establishing causality (Schünemann et al. 2011). The strength of association assessed with Hill is accounted for by upgrading or downgrading the evidence according to the specific GRADE criteria. Upgrading reflects scientific confidence that a causal relationship exists. The Hill criterion for strength of association means that the stronger the association, the more confidence in causation.2 As shown in Table 6-4, the Hill criteria and the GRADE approach consider inconsistency of the evidence, indirectness of evidence, the magnitude of the effect, and the dose-response relationship. The Hill criteria require a prospective temporal relationship between the exposure and outcome and note that experimental evidence strengthens causation. Similarly, the GRADE criteria give greater weight to randomized clinical trials, although such studies are rarely available for environmental chemicals. Notable exceptions are some irritant gases and a few metals (such as trivalent chromium, cobalt, and selenium) and organic chemicals (such as perchlorate) that have been used or investigated for medicinal purposes.

In developing recommendations for clinical-practice guidelines, GRADE is usually applied to randomized clinical trials (of efficacy of clinical interventions) and observational studies (of harm). However, a GRADE-like approach has recently been applied to the integration of evidence from different data streams for the assessment of chemical hazards. Woodruff and Sutton (2011) have proposed the Navigation Guide, which specifies the study question, selects the evidence, rates the quality of individual studies, and rates the quality of evidence and the strength of a recommendation. That final step uses a GRADE-like approach to rate the quality of the overall body of evidence (including human, animal, and in vitro studies) on the basis of a priori and clearly stated criteria and integrates the quality assessment with information about exposure to develop a recommendation. NTP has adopted a similar approach for combining evidence from different data streams (NTP 2013). NTP develops a confidence rating for the body of evidence on a particular outcome by considering the strengths and weaknesses of the entire collection of studies that are relevant for a particular question. As in GRADE, the initial confidence in a recommendation is determined by the strength of the study design for assessing causality independently of the risk of bias in an individual study. However, unlike GRADE, which rates randomized controlled trials with higher confidence than epidemiologic studies, the NTP approach determines the initial confidence on the basis of whether the exposure to the substance is controlled, data indicate that the exposure precedes development of the outcome, individual level (not population aggregate) data are used to assess the outcome, and the study uses a comparison group (NTP 2013). Thus, randomized controlled trials meet the first criterion, and epidemiologic studies are distinguished by how well they meet the remaining three criteria; prospective cohort studies, for example, start at a higher level of confidence than case-control studies. See Table 6-4 for a comparison of the various structured approaches.

_____________________________

2This statement assumes that all important confounding factors have been controlled. Strong associations in poorly controlled studies are unreliable, and such associations might become weaker after adjustment for confounding factors.

| Hill | GRADE | Navigation Guide | NTP | |

| Downgrading confidence or weakening recommendation | ||||

| Risk of bias | X | X | X | |

| Inconsistency | X | X | X | X |

| Indirectnessa | X | Xb | X | X |

| Imprecision | X | X | X | |

| Publication bias | X | X | X | |

| Financially conflicted sources of funding | Xc | X | ||

| Upgrading confidence or strengthening recommendation | ||||

| Large effect | X | X | X | X |

| Dose-response relationshipd | X | X | X | X |

| No plausible confounding | X | X | X | |

| Cross-species, population, or study consistency | X | |||

| Serious or rare end points, such as teratogenicity | X | Xb | ||

aIndirectness is the extent to which a study directly addresses the study question (Higgins and Green 2011). Indirectness might arise from the lack of a direct comparison or if some restriction of the study limits generalizability.

bIncludes Hill criteria of specificity, biologic plausibility, and coherence.

cRated under “other.”

dA formal dose-response assessment is typically performed, depending on the outcome of the hazard identification. However, at this stage, a potential dose-response relationship provides evidence of a hazard and should be used in a hazard-identification process.

Structured assessments like GRADE are useful primarily as a means of systematically documenting the judgments made in evaluating the evidence. This kind of documentation might enhance transparency to the extent that it tracks the details of how the evidence was assessed. The committee emphasizes that structured assessments like GRADE formalize and organize but do not replace expert judgment. Although the idea of adopting a structured-assessment process to enhance transparency is commendable, there is some risk that imposing excessively formal criteria for describing and evaluating evidence could slow the process and produce more complex output without improving the quality of decisions. The criteria in GRADE were developed for the assessment of evidence from clinical studies and might not always be appropriate for evaluating the effects of environmental chemicals. Thus, if EPA decides to adopt a GRADE-like approach, it should take care to customize it to the needs of IRIS, perhaps along the lines currently being developed at NTP.

Quantitative Approaches to Integrating Evidence

In each approach above, evidence is integrated with reliance on expert judgment and the output is qualitative. Although a structured process can use several quasi-formal rules for integrating evidence of different types, the rules are based essentially on scientific intuition and experience in a given domain. In many settings, integrating the evidence requires estimating a number or a set of numbers that can summarize the information obtained from various sources. For example, in the context of IRIS assessments, one needs to estimate the magnitude of harm potentially caused by a chemical and the uncertainty of the estimate.

A number of quantitative approaches can be used for hazard identification. Three approaches are meta-analysis, probabilistic bias analysis, and Bayesian analysis.3 In the case of meta-analysis and probabilistic bias analysis, the natural targets of the analyses are not qualitative yes-no questions but rather quantitative estimates of an effect size. In both cases, however, the key question is whether the effect size can reasonably be inferred to be different from zero and not negligible. If so, one can conclude hazard. If not, there is not adequate evidence to conclude hazard, but there might be evidence that suggests hazard. Bayesian models can be used to produce quantitative judgments, for example, “There is at least a 60% chance that chemical X is a human carcinogen.” Quantitative judgments are easily converted into qualitative categorical judgments as shown, for example, in Table 6-5. The committee emphasizes that the numbers provided in the table are arbitrary and are meant only as illustration. They are not taken from an existing source, nor do they reflect any recommendation by the committee.

Meta-analysis and probabilistic bias analysis, as they are typically carried out, produce effect-size estimates and confidence intervals around them. Converting an estimate and its accompanying confidence interval into a quantitative judgment about hazard is not as straightforward as it is in a Bayesian analysis, but it can be done. A vast literature and excellent textbooks are devoted to each approach. Here, a brief discussion of the methods and their relative advantages and disadvantages is provided. See Appendix C for a primer on the Bayesian approach.

Meta-Analysis

Meta-analysis is a broad term that encompasses statistical methods of combining data from similar studies. Typically, meta-analysis is used to estimate the effects of an exposure on the risk of an outcome. In its simplest form, a meta-analysis combines the effect estimates from several studies into a single weighted estimate that is accompanied by a 95% confidence interval that reflects the pooled data.

The primary goal of a meta-analysis is to integrate rigorously a set of similar studies with respect to a single estimate of the size of an effect and to the uncertainty due to random error. In fixed-effect meta-analysis, investigators assume that all studies are estimating a common causal effect, and the pooled estimate is simply a more precise estimate of the common effect. In random-effects meta-analysis, investigators assume sizable variation in effect size among studies, and the pooled estimate summarizes the mean of the distribution of the individual estimates of effect size. In both cases, investigators are not required to have information or hypotheses about the magnitude of systematic biases. Expert knowledge about the causal mechanisms by which exposure or other variables affect the outcome also is not required. However, it is worth noting that meta-analysis does not correct for or “fix” biases; indeed, it is possible for all studies in a meta-analysis to be biased in the same direction because of confounding or selection effects.
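The difference between the two pooling models can be made concrete with a short Python sketch. It assumes inverse-variance weighting for the fixed-effect estimate and the DerSimonian-Laird moment estimator for the between-study variance in the random-effects estimate; neither estimator is named in this chapter, and they are used here only as common, simple choices. The function names are hypothetical, and the study estimates in the usage example are invented log relative risks rather than data from any assessment.

```python
import math


def fixed_effect(estimates, std_errors):
    """Inverse-variance pooled estimate and its standard error."""
    weights = [1.0 / se ** 2 for se in std_errors]
    total = sum(weights)
    pooled = sum(w * y for w, y in zip(weights, estimates)) / total
    return pooled, math.sqrt(1.0 / total)


def random_effects(estimates, std_errors):
    """DerSimonian-Laird pooled estimate, standard error, and tau^2."""
    weights = [1.0 / se ** 2 for se in std_errors]
    total = sum(weights)
    pooled_fe, _ = fixed_effect(estimates, std_errors)
    # Cochran's Q measures dispersion of study estimates around the pooled value.
    q = sum(w * (y - pooled_fe) ** 2 for w, y in zip(weights, estimates))
    df = len(estimates) - 1
    c = total - sum(w ** 2 for w in weights) / total
    tau2 = max(0.0, (q - df) / c) if c > 0 else 0.0
    # Between-study variance is added to each study's sampling variance.
    star = [1.0 / (se ** 2 + tau2) for se in std_errors]
    pooled = sum(w * y for w, y in zip(star, estimates)) / sum(star)
    return pooled, math.sqrt(1.0 / sum(star)), tau2


def ci95(estimate, std_error):
    """Normal-approximation 95% confidence interval."""
    return estimate - 1.96 * std_error, estimate + 1.96 * std_error


if __name__ == "__main__":
    log_rr = [0.10, 0.30, 0.55]   # hypothetical log relative risks
    se = [0.10, 0.15, 0.20]       # hypothetical standard errors
    fe, fe_se = fixed_effect(log_rr, se)
    re, re_se, tau2 = random_effects(log_rr, se)
    print("fixed :", math.exp(fe), [math.exp(v) for v in ci95(fe, fe_se)])
    print("random:", math.exp(re), [math.exp(v) for v in ci95(re, re_se)])
```

Neither interval accounts for systematic bias. Both reflect only random error, which is the limitation the paragraph above points out.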

Meta-analysis is typically used as a technique to combine the results of similar randomized clinical trials, but it can be applied to results of epidemiologic studies. Meta-regression (Greenland and O’Rourke 2001) allows pooling of data from epidemiologic studies with some unexplained heterogeneity, and Kaizar (2005, 2011) and Roetzheim et al. (2012) improve on meta-regression for situations in which data are available from randomized clinical trials and epidemiologic studies. Bayesian methods are also used to conduct meta-analyses and are commonly used in network meta-analyses in which many agents are compared simultaneously (Cipriani et al. 2009).

_____________________________

3Both meta-analysis and probabilistic bias analysis can be done in a Bayesian framework.

TABLE 6-5 Example Conversion of Quantitative Output to Qualitative Categorical Judgments

| Chance that Chemical X is a Carcinogen | Categorical Judgment |

| > 90% | Carcinogenic in humans |

| ≤ 90% to > 75% | Likely to be carcinogenic in humans |

| ≤ 75% to > 50% | Suggestive evidence of carcinogenicity |

| ≤ 50% to > 5% | Inadequate information |

| ≤ 5% | Not likely to be carcinogenic in humans |
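The conversion in Table 6-5 amounts to a threshold lookup. The Python sketch below encodes the table's cutoffs, which the committee describes as arbitrary and illustrative; the function name `categorize` is hypothetical.

```python
def categorize(probability):
    """Map a probability of carcinogenicity to a Table 6-5 category.

    The thresholds are the illustrative values from Table 6-5 and
    carry no regulatory meaning.
    """
    if not 0.0 <= probability <= 1.0:
        raise ValueError("probability must lie between 0 and 1")
    if probability > 0.90:
        return "Carcinogenic in humans"
    if probability > 0.75:
        return "Likely to be carcinogenic in humans"
    if probability > 0.50:
        return "Suggestive evidence of carcinogenicity"
    if probability > 0.05:
        return "Inadequate information"
    return "Not likely to be carcinogenic in humans"


if __name__ == "__main__":
    for p in (0.95, 0.90, 0.60, 0.30, 0.05):
        print(p, "->", categorize(p))
```

Because each band is closed at its upper bound, a probability of exactly 90% falls in the "likely" category rather than the "carcinogenic" one.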

Although meta-analytic methods have generated extensive discussion (see, for example, Berlin and Chalmers 1988; Dickersin and Berlin 1992; Berlin and Antman 1994; Greenland 1994; Stram 1996; Stroup et al. 2000; Higgins et al. 2009), they can be useful when there are similar studies on the same question. For example, the 2006 IOM Committee on Asbestos and Selected Cancers (IOM 2006) did a quantitative meta-analysis on asbestos and cancer risk and presented an overall estimate that was derived from the combination of the estimates from the individual studies for each cancer type.

Probabilistic Bias Analysis

In all studies that seek to estimate causal effects, there are two broad sources of uncertainty: systematic bias and random error from sampling. In the famous poll that predicted that Thomas Dewey had beaten Harry Truman in the 1948 presidential election, there was systematic bias related to the sampling and the external validity of the survey; it was a telephone poll, telephone ownership was not ubiquitous at that time, and telephone ownership was heavily skewed toward Dewey supporters. The systematic bias was severe enough to dwarf uncertainty that was due to sample variability. There is still some systematic bias in modern presidential polls, but it is much smaller. When poll results are reported as accurate to within ± 3%, this number represents only variation in the reported number due to sampling variability (random error); it does not include systematic bias. Similarly, the confidence intervals in meta-analysis reflect only uncertainty that is due to random error from sampling. However, the possible presence of systematic bias due to various types of bias discussed in Chapter 5 can be another important source of uncertainty around effect estimates. The uncertainty that is due to systematic bias is well recognized by investigators and is usually a central part of the discussion section of scientific articles.

Methods collectively referred to as quantitative or probabilistic bias analysis produce intervals around the effect estimate that integrate uncertainty that is due to random and systematic sources. If empirical data on the direction and magnitude of systematic biases are unavailable, investigators need to use their expert knowledge to make quantitative assumptions about systematic bias. See the excellent books by Lash et al. (2009) and Rosenbaum (2010) for details.
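A minimal Monte Carlo sketch in Python shows how such an interval can combine the two sources of uncertainty. It makes several assumptions that are not from this chapter: the net systematic bias acts additively on the log relative risk, the analyst's belief about that bias is a normal distribution with a stated mean and standard deviation, and random error is also normal on the log scale. The function name and all numeric inputs are hypothetical.

```python
import math
import random


def bias_adjusted_interval(log_rr, se, bias_mean, bias_sd,
                           draws=20000, seed=0):
    """Simulate a 95% interval reflecting random and systematic error.

    log_rr    -- observed log relative risk
    se        -- its standard error (random error only)
    bias_mean -- assumed mean of the bias on the log scale
    bias_sd   -- assumed standard deviation of that bias
    Returns (lower, median, upper) on the relative-risk scale.
    """
    rng = random.Random(seed)
    samples = []
    for _ in range(draws):
        random_error = rng.gauss(0.0, se)
        log_bias = rng.gauss(bias_mean, bias_sd)
        # observed = true + bias, so a plausible true value is observed - bias,
        # perturbed by random sampling error.
        samples.append(log_rr - log_bias + random_error)
    samples.sort()
    lower = samples[int(0.025 * draws)]
    median = samples[draws // 2]
    upper = samples[int(0.975 * draws) - 1]
    return math.exp(lower), math.exp(median), math.exp(upper)


if __name__ == "__main__":
    observed = math.log(1.5)  # hypothetical observed relative risk of 1.5
    print(bias_adjusted_interval(observed, 0.10, 0.0, 0.20))
```

With a nonzero `bias_sd`, the simulated interval is wider than the conventional confidence interval, which mirrors the point made with the polling example: the reported margin of error understates total uncertainty when systematic bias is ignored.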

The Bayesian Approach

Whether the uncertainty in a meta-analysis includes only random sampling error or also includes systematic bias, it is still limited to combining statistical evidence from similar studies into a single statistical estimate of effect size. A technique for combining all the available evidence into a single judgment needs to accommodate human studies, animal studies, and mechanistic analyses. One approach for doing so is to build a Bayesian model (Berry and Stangl 1996; Peters et al. 2005; Kadane 2011). The Bayesian approach has been used extensively in evaluating clinical data and in regulatory decision-making (Etzioni and Kadane 1995; Parmigiani 2002; Kadane 2005; DuMouchel 2012) and has several general advantages and disadvantages.

Regarding advantages, the Bayesian model is built to calculate, on the basis of prior knowledge and new data, how likely a hypothesis is to be true or false. It provides an opportunity to include as much rigor in constructing a formal model of evidence integration and uncertainty as one wants, and it comes with a type of theoretical guarantee. If experts are not dogmatic and agree on the fundamental design of a model and update their opinions with a Bayesian model, their opinions will eventually converge.

Because it supports the explicit modeling of all types of uncertainty, not only uncertainty due to sampling variability, a Bayesian model can help to identify the specific gaps in knowledge that make a large difference in overall uncertainty. For example, one might learn from a Bayesian model that measurement error of exposure in a series of epidemiologic studies produces far more uncertainty in a final estimate of toxicity than does uncertainty related to cross-species (rodent to human) extrapolation.

Regarding disadvantages, building a Bayesian model requires the elicitation and modeling of expert opinion. Although a large literature exists on elicitation (see, for example, Chaloner 1996; Kadane and Wolfson 1998), it requires expertise that is not typically possessed by a biostatistician or epidemiologist.

Overall, the Bayesian approach is being adopted by a growing number of scientists and regulatory agencies. For example, the IOM report Ethical and Scientific Issues in Studying the Safety of Approved Drugs endorses the Bayesian approach as providing “decision-makers with useful quantitative assessments of evidence” (IOM 2012, p. 159). FDA’s Center for Devices and Radiological Health has published explicit guidelines on using Bayesian methods in regulatory decision-making (FDA 2010), and they are used increasingly in legal settings (Kadane and Terrin 1997; Perlin et al. 2009; Woodworth and Kadane 2010).

In the Bayesian approach, probability is typically treated as a degree of belief. Any proposition (that is, any statement that is either true or false) can be given a degree of belief, including a proposition regarding hazard, that is, that a chemical causes some sort of specific human harm, such as lung cancer or heart disease. If “H” notates a proposition about hazard (for example, exposure to methanol causes blindness), then “~H” notates the opposite (exposure to methanol does not cause blindness).4 Before seeing any evidence, one might ask a scientist to express his or her “prior” degree of belief in H. Scientist A might say that H is 75% likely, and this would translate to PA(H) = 0.75. Scientist B might say that H is only 40% likely, and this would translate to PB(H) = 0.4. In the Bayesian approach, the goal is to compute the “posterior probability” of H after seeing evidence E, which is notated as P(H | E). If E favored H, the two scientists’ posterior probabilities might be closer together than their priors were: PA(H | E) = 0.85 and PB(H | E) = 0.65.
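The updating step described above can be sketched with Bayes' rule in odds form. The priors below are the ones quoted in the text; the likelihood ratio of 3 is a hypothetical value chosen only for illustration, so the posteriors it produces differ from the illustrative values quoted above. The function name `update` is likewise an assumption of this sketch.

```python
def update(prior: float, likelihood_ratio: float) -> float:
    """Return P(H | E) given P(H) and LR = P(E | H) / P(E | ~H)."""
    prior_odds = prior / (1.0 - prior)
    posterior_odds = prior_odds * likelihood_ratio
    return posterior_odds / (1.0 + posterior_odds)


# Priors from the text; LR is a hypothetical strength of evidence favoring H.
LR = 3.0
belief_a, belief_b = 0.75, 0.40
gaps = [abs(belief_a - belief_b)]

# Apply five independent pieces of evidence, each with the same LR.
for step in range(1, 6):
    belief_a = update(belief_a, LR)
    belief_b = update(belief_b, LR)
    gaps.append(abs(belief_a - belief_b))
    print(f"after E{step}: A={belief_a:.3f}  B={belief_b:.3f}  gap={gaps[-1]:.3f}")
```

Under these assumptions the gap between the two scientists shrinks with every update, which is a small-scale instance of the convergence property mentioned earlier. If the two scientists disagreed about the likelihood ratio itself, convergence would not be guaranteed by this sketch.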

In hazard identification, which is essentially a qualitative yes-no answer to a causal question, one would use the Bayesian approach to assess the probability of hazard, that is, the degree of belief in a causal proposition, after seeing all the evidence (human, animal, and mechanistic). In dose-response estimation, the target is not a yes-no proposition but rather a more complicated quantity: What is the quantitative dependence of disease response on dose? In the simplest possible case, the relationship might be linear, so that the extra disease burden that one could expect with one extra unit of dose exposure can be expressed with a single parameter: Disease = β0 + (β1 × Dose). In this case, the parameter β1 expresses the dose-response relationship. If β1 is 0, there is no effect. If β1 is large, there is a large effect. In a Bayesian analysis for β1, the output would be P(β1 | E), that is, a probability distribution over all the possible values of β1 when one has seen the evidence E.

_____________________________

4One complication with this approach is that it forces one to collapse all degrees of causation into a single yes-no proposition. It treats the distinction between a cause that has an extremely small effect and no effect at all in the same way as the distinction between a cause that has a substantial effect and no effect at all. One solution is to let H stand for a proposition, such as that chemical X is an appreciable or substantial or meaningful cause of harm Y, where the term appreciable, substantial, or meaningful would have to be defined. If one equates an effect-size interval, such as greater than 0.1, with the idea of appreciable, a Bayesian analysis can also quantify the probability that a chemical X is an appreciable cause of harm Y.

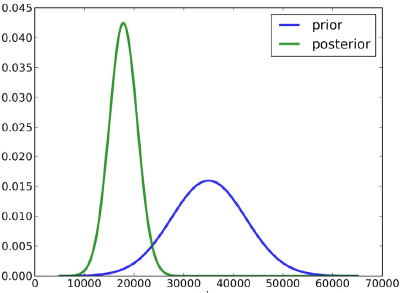

In Figure 6-2, for example, β1 is shown to range from 0 to 70,000. In the blue “prior” distribution, the mode is about 35,000, and the distribution is wide, demonstrating considerable uncertainty. In the green “posterior” distribution, the mode is below 20,000, and the distribution is much narrower, representing a reduction in uncertainty. Chapter 7 discusses a Bayesian approach to dose-response estimation and its attendant uncertainty in more detail. In the present chapter, the discussion is restricted to a Bayesian approach to hazard identification, which involves a yes-no proposition: Does chemical X cause outcome Y?
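As a minimal sketch of how a prior on β1 narrows into a posterior, the following uses a conjugate normal update under strong simplifying assumptions: β0 is taken to be 0, the noise standard deviation is assumed known, and the prior on β1 is normal. All numbers and function names are hypothetical and are not the values plotted in Figure 6-2. The last step computes the probability that β1 exceeds a threshold, in the spirit of the "appreciable cause" idea in footnote 4.

```python
import math


def posterior_slope(prior_mean, prior_sd, doses, responses, noise_sd):
    """Normal-normal update for beta1 in Disease = beta1 * Dose + noise."""
    prior_prec = 1.0 / prior_sd ** 2
    data_prec = sum(d * d for d in doses) / noise_sd ** 2
    post_prec = prior_prec + data_prec
    weighted_data = sum(d * r for d, r in zip(doses, responses)) / noise_sd ** 2
    post_mean = (prior_prec * prior_mean + weighted_data) / post_prec
    return post_mean, math.sqrt(1.0 / post_prec)


def prob_exceeds(mean, sd, threshold):
    """P(beta1 > threshold) when beta1 is normal with the given mean and sd."""
    z = (threshold - mean) / sd
    return 0.5 * (1.0 - math.erf(z / math.sqrt(2.0)))


# Hypothetical prior and data (arbitrary units).
prior_mean, prior_sd = 0.5, 0.5
doses = [1.0, 2.0, 3.0, 4.0]
responses = [0.3, 0.5, 1.0, 1.1]

post_mean, post_sd = posterior_slope(prior_mean, prior_sd, doses, responses, noise_sd=0.2)
p_appreciable = prob_exceeds(post_mean, post_sd, threshold=0.1)
print(f"prior sd={prior_sd:.3f}, posterior mean={post_mean:.3f}, posterior sd={post_sd:.3f}")
print(f"P(beta1 > 0.1 | E) = {p_appreciable:.4f}")
```

The posterior standard deviation is smaller than the prior standard deviation whenever any data are observed, which corresponds to the narrowing from the "prior" to the "posterior" curve described above.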

To combine evidence from disparate studies, a Bayesian approach needs to model the likelihood of data or evidence from different kinds of studies, given the hypothesis that a chemical is hazardous to humans. The approach must explicitly model the relevance that each kind of evidence has to the overall question of human hazard and how much uncertainty accompanies the modeling assumptions that allow us to relate disparate studies to the common target of human hazard. For example, if one in vivo animal study shows that a chemical poses a hazard, it is relevant to the question of human health only insofar as the animal model for this chemical and this outcome is relevant to humans. Almost every IRIS assessment that involves animal data must deal with the question of whether the animal model is relevant to humans. Rather than incorporate expert opinion about this question informally, a Bayesian hierarchical model can explicitly incorporate data from previous studies about cross-species relevance or mechanistic similarity and use them to derive overall estimates and uncertainties. In the early 1980s, for example, DuMouchel and Harris (1983) showed how to combine human and animal studies of radium toxicity to derive the evidential signal of animal studies of plutonium toxicity in terms of how it bears on the target: the toxicity of plutonium to humans. More recent work by Jones et al. (2009) and Peters et al. (2005) shows how to combine epidemiologic and toxicologic evidence in a Bayesian model. The report Biological Effects of Ionizing Radiation (BEIR) IV (NRC 1988), which sought to estimate the carcinogenicity of plutonium in humans, adopted a Bayesian approach, which included an uncertainty analysis that incorporated the variability in the ratios of relative carcinogenicities of different radionuclides among species. 
That analysis revealed that although there were few human data on plutonium, they could be combined with animal data to estimate carcinogenicity in humans effectively.
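One simple way to see how uncertainty about animal-model relevance changes the weight of a positive animal study is a two-component mixture. This is an illustrative sketch only, not the hierarchical model of DuMouchel and Harris (1983) or of BEIR IV; every probability and name below is a hypothetical assumption. With probability `relevance` the animal model tracks human hazard; otherwise a positive finding is equally likely whether or not H is true.

```python
def animal_likelihood_ratio(relevance, p_pos_if_hazard, p_pos_if_no_hazard, p_pos_if_irrelevant):
    """LR of a positive animal study for human hazard H, mixing over relevance."""
    numerator = relevance * p_pos_if_hazard + (1.0 - relevance) * p_pos_if_irrelevant
    denominator = relevance * p_pos_if_no_hazard + (1.0 - relevance) * p_pos_if_irrelevant
    return numerator / denominator


def posterior(prior, likelihood_ratio):
    """Bayes' rule in odds form."""
    odds = prior / (1.0 - prior) * likelihood_ratio
    return odds / (1.0 + odds)


# Hypothetical inputs: prior belief in human hazard and study characteristics.
prior_h = 0.30
results = []
for relevance in (0.0, 0.25, 0.5, 0.75, 1.0):
    lr = animal_likelihood_ratio(relevance, 0.8, 0.1, 0.3)
    results.append((relevance, lr, posterior(prior_h, lr)))
    print(f"relevance={relevance:.2f}  LR={lr:.2f}  P(H | positive animal study)={results[-1][2]:.3f}")
```

When relevance is 0 the animal study leaves the prior unchanged, and as relevance approaches 1 the study carries its full evidential weight. A fuller analysis would place a distribution on the relevance term and estimate it from data on related chemicals and species rather than fixing it by hand.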

In the IRIS assessment of methanol, uncertainty about animal-model relevance plays a large role. Studies show species differences in the rates at which rodents and humans metabolize methanol into formic acid, which produces acidosis and causes lasting CNS damage. Those interspecies differences could be explicitly modeled in a Bayesian model, and the uncertainty estimate would incorporate them.

There is similarly uncertainty about the relationship between adult humans, infant humans, and rodents in how they metabolize methanol. Adult humans primarily use alcohol dehydrogenase (ADH1), whereas rodents use ADH1 and catalase to metabolize methanol. It is not known whether infant humans, like rodents, use catalase to metabolize methanol. The uncertainty about methanol metabolism could be included in a Bayesian model, and its effect on overall uncertainty could be computed by incorporating the relevance of rodent studies to developmental toxicity.

Uncertainty in human studies is equally amenable to a Bayesian analysis. Models can explicitly include uncertainty about unmeasured confounding, about measurement error in exposure, and about any other risk of bias in an epidemiologic study.
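As a rough sketch of how uncertainty about bias can be folded into an epidemiologic estimate, the following treats the observed log relative risk as normally distributed and subtracts a normally distributed bias term on the log scale, so that the variances add. The observed relative risk, its standard error, and the bias prior are all hypothetical, and the normal approximation is an assumption of this sketch rather than a method prescribed in the text.

```python
import math


def normal_cdf(z):
    """Standard normal cumulative distribution function."""
    return 0.5 * (1.0 + math.erf(z / math.sqrt(2.0)))


def bias_adjusted(obs_rr, se_log_rr, bias_log_mean, bias_log_sd):
    """Mean and sd of the bias-adjusted log relative risk."""
    adj_mean = math.log(obs_rr) - bias_log_mean
    adj_sd = math.sqrt(se_log_rr ** 2 + bias_log_sd ** 2)
    return adj_mean, adj_sd


# Hypothetical study result and hypothetical prior on confounding bias.
obs_rr, se = 1.5, 0.15
naive_p = normal_cdf(math.log(obs_rr) / se)  # P(RR > 1), sampling error only
adj_mean, adj_sd = bias_adjusted(obs_rr, se, bias_log_mean=0.10, bias_log_sd=0.20)
adj_p = normal_cdf(adj_mean / adj_sd)  # P(RR > 1), sampling error plus bias

print(f"sampling error only: sd={se:.3f}, P(RR > 1)={naive_p:.3f}")
print(f"with bias prior:     sd={adj_sd:.3f}, P(RR > 1)={adj_p:.3f}")
```

With these inputs, acknowledging possible confounding both shifts the estimate toward the null and widens its uncertainty, so the probability that the true relative risk exceeds 1 is lower than the sampling-error-only figure.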

In principle, Bayesian methods provide a quantitative framework for combining theoretical understanding and evidence from human, animal, and mechanistic studies with data to update model-parameter estimates or the probability that a particular hypothesis is true. Although the Bayesian approach is growing in popularity in many scientific arenas, it is still not perceived as being widely applicable or widely used in public health, partly because the computational demands imposed by the method were prohibitive a decade ago. There also have been many conceptual misunderstandings regarding its subjective nature, and reliably eliciting expert knowledge and converting it into model parameters is difficult and takes special expertise. The computational worries have largely been resolved. Enormous computational advances have taken place over the last 15 years, and several software platforms are available for carrying out sophisticated Bayesian modeling (for example, BUGS5). Eliciting expert opinion is time-consuming and in some cases difficult, but there is now a considerable literature on how it should be done and a considerable number of cases in which it has been done successfully (Chaloner 1996; Kadane and Wolfson 1998; Hiance et al. 2009; Kuhnert et al. 2010).

Quantitative models for integrating evidence are powerful tools that can answer a wide array of scientific questions. Their obvious downside is that model misspecification at any level can result in incorrect inferences. Nevertheless, they make rigorous what other techniques have to make heuristic, and they force scientists to make their assumptions explicit in ways that less formal methods do not.

Comparison of Quantitative Methods

Meta-analysis is appropriate for situations in which there are a number of similar statistical studies involving experiments on humans or animals or similar epidemiologic studies. Probabilistic bias analysis is appropriate when the risk of bias in observational studies is substantial, and there is information that makes estimating or at least bounding such bias feasible. A Bayesian analysis seems appropriate when the stakes are high and when the uncertainty is substantial, especially when the evidence is to some degree inconsistent. For example, when a chemical is fairly common in the environment and might have serious health effects and the relevant evidence is difficult to integrate because human studies show little or no association and animal studies show toxicity, a Bayesian analysis can help to weight the evidence provided by both study types and characterize uncertainty appropriately.

A Template for the Evidence-Integration Narrative

No matter what method is used to integrate the different kinds of evidence available for an IRIS assessment, using a template for the evidence-integration narrative could help to make IRIS assessments more transparent. In particular, an evidence-integration narrative can make clear EPA’s view on the strength of the case for or against a specific hazard when all the available evidence is taken into account.

Rather than organize the narrative around a checklist of criteria, such as the Hill criteria, EPA might consider organizing the narrative as an argument for or against hazard on the basis of available evidence. The argument should be qualified by explicitly considering alternative hypotheses, uncertainty, and gaps in knowledge. Elements of the Hill criteria will undoubtedly find their way into such arguments and might even help to organize some of the discussion supporting the argument, but they need not be required topics in every evidence narrative.

If the narrative is organized around types of evidence, it might begin by considering the conclusions supported by the human evidence and then consider how the available animal evidence confirms, does not support, or is irrelevant to the conclusions. Mechanistic evidence, if available, should be used in the discussion of the animal evidence to determine whether the animal evidence is relevant to the claim about human hazard. Gaps in knowledge and important uncertainties should be explicitly included.

Both the benzo[a]pyrene and methanol draft IRIS assessments contain narratives that mostly satisfy that sort of template. Both build a case for a variety of different cancer and noncancer end points and leave the reader with a clear sense of the evidence available that is relevant to the end points and thus the strength of the case for each end point. Where the narratives are particularly effective, they explain specifically how different strands of evidence connect. For example, the assessment of methanol notes that CNS toxicity has been observed in humans but not in rodents and then explains that differences in the rates at which humans and rodents eliminate formic acid account for the apparent evidential discrepancy. What is missing and might be desirable is a more systematic discussion of gaps in knowledge and gaps in the evidence.

Finding: Critical considerations in evaluating a method for integrating a diverse body of evidence for hazard identification are whether the method can be made transparent, whether it can be feasibly implemented under the sorts of resource constraints evident in today’s funding environment, and whether it is scientifically defensible.

Recommendation: EPA should continue to improve its evidence-integration process incrementally and enhance the transparency of its process. It should either maintain its current guided-expert-judgment process but make its application more transparent or adopt a structured (or GRADE-like) process for evaluating evidence and rating recommendations along the lines that NTP has taken. If EPA does move to a structured evidence-integration process, it should combine resources with NTP to leverage the intellectual resources and scientific experience in both organizations. The committee does not offer a preference but suggests that EPA consider which approach best fits its plans for the IRIS process.

Finding: Quantitative approaches to integrating evidence will be increasingly needed by and useful to EPA.

Recommendation: EPA should expand its ability to perform quantitative modeling of evidence integration; in particular, it should develop the capacity to do Bayesian modeling of chemical hazards. That technique could be helpful in modeling assumptions about the relevance of a variety of animal models to each other and to humans, in incorporating mechanistic knowledge to model the relevance of animal models to humans and the relevance of human data for similar but distinct chemicals, and in providing a general framework within which to update scientific knowledge rationally as new data become available. The committee emphasizes that the capacity for quantitative modeling should be developed in parallel with improvements in existing IRIS evidence-integration procedures and that IRIS assessments should not be delayed while this capacity is being developed.

Finding: EPA has instituted procedures to improve transparency, but additional gains can be achieved in this arena. For example, the draft IRIS preamble provided to the committee states that “to make clear how much the epidemiologic evidence contributes to the overall weight of the evidence, the assessment may select a standard descriptor to characterize the epidemiologic evidence of association between exposure to the agent and occurrence of a health effect” (EPA 2013a, p. B-6). A set of descriptor statements was provided, but they were not used in the recent IRIS draft assessments of methanol and benzo[a]pyrene.

Recommendation: EPA should develop templates for structured narrative justifications of the evidence-integration process and conclusion. The premises and structure of the argument for or against a chemical’s posing a hazard should be made as explicit as possible, should be connected explicitly to evidence tables produced in previous stages of the IRIS process, and should consider all lines of evidence (human, animal, and mechanistic) used to reach major conclusions.