9

Funding and Evaluation of Team Science

Organizations that fund and evaluate team science face a unique set of challenges related to the opportunities and complexities presented by the seven features of team science first introduced in Chapter 1. Funding science teams and larger groups is different from funding individuals, and the differences increase when teams and groups include features such as large size, the deep knowledge integration of interdisciplinary or transdisciplinary projects, or geographic dispersion. Evaluating such complex teams and groups at every phase, from proposals to the progress of funded teams or groups to project outcomes, can be challenging. It requires an understanding of how teams or groups conduct science that leaders and staff members of science funding organizations may lack. Recognizing this problem, the National Science Foundation (NSF) commissioned the current study to enhance its own understanding of how best to fund, evaluate, and manage team science, as well as to inform the broader scientific community (Marzullo, 2013). The National Cancer Institute supports the new field of the science of team science for similar reasons, including to clarify the outcomes of its investments in large science groups (e.g., research centers) and to increase understanding within the scientific community of how best to support and manage team science (Croyle, 2008, 2012). In addition, a federal Trans-agency Subcommittee on Collaboration and Team Science1 was launched in 2013 with the goal of advancing science by helping researchers put in place the infrastructure and processes needed to facilitate success in team-based science.

This chapter looks in turn at the funding and evaluation of team science. The final section presents conclusions and recommendations.

A range of organizations fund team science. Examples of funders include (1) federal agencies, (2) private foundations and individual philanthropists, (3) corporations, (4) academic institutions that provide seed money or infrastructure, and (5) nonprofit organizations that obtain funding from private donors and/or the general public and use it to fund team science research (e.g., Stand Up to Cancer2). At a time of constrained public spending, alternative sources of funding become increasingly important to maintain the scientific enterprise. Additionally, a plurality of sources can potentially help to balance tensions between, for example, supporting an individual scientist to establish novel areas of research without “strings attached” versus more directed programmatic funding focusing on a specific research area (OECD, 2011). The wide range of funders and the evolving nature of their roles introduce many avenues through which funders can support and facilitate team science. In this section, we describe how funders can influence the conduct and support of team-based research, including a discussion of the broader context for the ways priorities are set.

Federal Funding for Team Science

Federal funding for team science has increased greatly over the past four decades. For example, agencies are increasingly providing funding to projects overseen by more than one principal investigator (PI). At NSF, the number of awards to projects with multiple PIs increased from fiscal year 2003 to fiscal year 2012, while the number of awards to individual PIs remained steady (National Science Foundation, 2013). At the National Institutes of Health (NIH), the number of multiple PI grants grew from 3 in 2006 (the first year such grants were awarded) to 1,098 in 2013, or 15–20 percent of all major grants funded (Stipelman et al., 2014). Agencies also

________________

1 The subcommittee is part of the Social, Economic, and Workforce Implications of IT and IT Workforce Development Coordinating Group within the National Information Technology Research and Development Program of the National Technology Council in the Executive Office of the President. See http://www.nitrd.gov/nitrdgroups/index.php?title=Social,_Economic,_and_Workforce_Implications_of_IT_and_IT_Workforce_Development_Coordinating_Group(SEW_CG)#title [May 2015].

2For more information, see http://www.standup2cancer.org/what_is_su2c [May 2015].

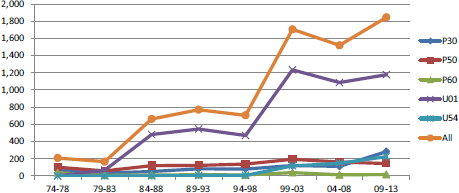

have increased their funding of research centers, which typically include multiple, related research projects that may be interdisciplinary and may involve industry or other stakeholders. For example, beginning in 1985 with a single center program, called the Engineering Research Centers, NSF created six more center programs over the following decade. By fiscal 2011, NSF invested nearly $298 million in these seven center programs, supporting 107 centers and engaging scientists at approximately 2,200 universities (National Science Foundation, 2012). At NIH, there were very few center grants until the mid-1980s, but the number of these grants to research centers and more loosely linked networks has increased steadily since then, as shown in Figure 9-1.

Prioritizing Research Topics and Approaches

Public and private funders work closely with both the scientific community and policy makers to establish research priorities and approaches. Federal agencies that fund research are led and staffed by scientists, convene scientific advisory bodies (e.g., the Department of Energy’s High Energy Physics Advisory Panel), and allocate funding through peer review by panels of scientists. Major new federal research programs often involve years of engagement and discussion among funding agencies, the scientific community, policy makers, and other stakeholders. For example, the 1990 congressional mandate for the U.S. Global Change Research Program emerged from an array of “bottom-up” research projects initiated by scientists (Shaman et al., 2013). According to Braun (1998, p. 808), if they are strategic, then “funding agencies are in a good position to balance demands from both the political and scientific sides.”

FIGURE 9-1 Number of commonly awarded National Institutes of Health Center/Network Grants by mechanism over time.

NOTES:

P30 = Center Core Grants

P50 = Specialized Center

P60 = Comprehensive Center

U01 = Research Project Cooperative Agreement

U54 = Specialized Center Cooperative Agreement

SOURCE: Unpublished data provided by the National Institutes of Health.

Through this collaborative process of setting research priorities, federal agencies have increasingly supported team science approaches in recent years (see further discussion below). Nevertheless, some scientists fear that increased public funding of large groups of scientists focusing on particular topics transfers too much control of research topics, approaches, and goals away from the scientific community and to bureaucrats (e.g., Petsko, 2009). Such views reflect the traditional role of individual investigators and professional societies (most of which are discipline based) in setting research agendas through publications, meetings, annual conferences, and peer review panels. However, as the number of scientific specializations increases and the public and policy makers seek solutions to scientific and societal problems, the scientific enterprise can benefit when funders look across disciplines or individual studies within a discipline to see the “big picture” of research needs and opportunities. Critiques of the peer review processes used in awarding research grants as too conservative (National Institutes of Health, 2007; Nature, 2007; Alberts et al., 2014) reinforce the potential benefit if funders consider whether new research areas need to be stimulated. In some instances, if scientists continue to focus on already well-explored problems or approaches that hold limited potential to add to existing scientific knowledge, then funders may need to set new directions and priorities (Braun, 1998). Furthermore, given the historically individual- and discipline-based incentive structure of academia and scientific journals, funders are positioned to provide incentives for alternatives to these approaches.

The Growing Role of Private Funders

Individual philanthropists and private foundations are beginning to play a larger role in establishing research priorities, and the continued debate regarding how much funders should influence the directions of science extends to these private entities. A policy analyst at the American Association for the Advancement of Science recently commented: “For better or for worse, the practice of science in the 21st century is becoming shaped less by national priorities or by peer review groups and more by the particular preferences of individuals with huge amounts of money” (Broad, 2014).

Philanthropic giving influences scientific research through investments such as establishing new institutes or providing funds through universities. Private foundations and wealthy individuals contribute an estimated $7 billion per year to research conducted at U.S. universities, with a strong emphasis on translational medical research (Murray, 2012). They typically target particular scientific areas or address specific societal problems, prioritizing research topics narrowly, rather than in broad strategic ways.

The growth of private funding raises questions for federal policy makers and research funding agencies. One concern is that scientists funded by philanthropists with particular research agendas, who also sit on funding agency advisory panels and peer review panels, may have a significant influence over the priorities set by federal funding agencies. Another is that wealthy individuals may ignore important fields of science that lie outside their direct interests.

Funding Models, Funding Mechanisms, and Organizational Structures

Whether funding individual or team science, once funders establish research needs and priorities, they develop funding models and mechanisms to address the identified needs. Funding organizations differ widely in their models and mechanisms of funding (e.g., Stokols et al., 2010). Funding models are generic approaches to funding science (e.g., grants, prizes, donations), while funding mechanisms are specifically targeted incarnations of funding models. For example, NIH P50 is a specific type of funding mechanism to support research centers, and the Google Lunar XPrize is a specific competition inviting private teams to land a robot safely on the surface of the moon. Some funders are experimenting with “open” funding mechanisms. For example, the Open Source Science Project (2008–2014) used a web-based microfinancing approach to support individual or team research, and the Harvard Medical School used an open funding mechanism to generate research topics on Type 1 diabetes (Guinan, Boudreau, and Lakhani, 2013).

As noted in previous chapters, these various funding mechanisms may support various organizational structures for team science (Hall et al., 2012c), ranging from small science teams to global networks. The amount of funding often dictates the magnitude and, thereby, complexity of the organizational structure; a worldwide research network requires more resources than a university research center, which requires more resources than a single research project. In addition, science teams or larger groups may be funded by multiple public and private sources. For example, the NSF investment in Science and Technology Centers discussed in the previous chapter is multiplied by funding from industry and universities, while the Koch Center for Integrative Cancer Research at Massachusetts Institute of Technology combines private donations with university funding and federal support as a National Cancer Institute-designated Cancer Research Center. In addition to important differences in how team science is funded, there is wide variation in how the research funding can be used. Common expenses include academic salaries, student tuition, equipment, materials, and space. Some budgets permit funds for training (e.g., cross-training for interdisciplinary teams), core units (e.g., administrative or statistical support), discretionary developmental projects (e.g., small mid-course pilot projects), travel for collaborators, and conference attendance. These variations in how funding can be used have important implications for team science funding, raising questions about how and when funders might provide support for:

- Planning or meeting grants to support the developmental phases of team science, which provide an incubator space to generate or advance new cross-disciplinary ideas (National Research Council, 2008; Hall et al., 2012a, 2012c).

- Travel funds to enable geographically dispersed teams to meet face-to-face, which can enhance communication and trust (National Research Council, 2008; Gehlert et al., 2014), as discussed in Chapter 7.

- Developmental or pilot project funds to provide flexible support for just-in-time innovations or new integrative ideas that emerge during larger collaborative projects (Hall et al., 2012a; Vogel et al., 2014).

- Professional development funds, which can be used to promote the early development of collaborations and facilitate team processes that enhance effectiveness (see Chapter 5).

- Flexible funds for leaders of team science projects to allow them to make “real-time” adjustments for projects as project needs unfold. For example, leaders might be allowed to move funds between subprojects, adjust the timing of funding plans, and/or provide incentives and rewards for successful team research (National Cancer Institute, 2012).

Agencies often use public announcements, referred to as Funding Opportunity Announcements (FOAs), Program Solicitations, or Program Announcements, to emphasize scientific priorities and influence the particular approaches used to implement those priorities. Additionally, these announcements delineate the type of mechanism and describe the intended organizational structure for supporting that approach. Language in the FOAs can encourage or stipulate particular approaches for conducting science (e.g., interdisciplinary, transdisciplinary, translational) and organizational configurations (e.g., centers or teams; see Table 9-1). For example, the program solicitation for NSF’s CyberSEES Program states, “Due to this program’s focus on interdisciplinary, collaborative research, a minimum of two collaborating investigators (PIs/Co-PIs) is required” (National Science Foundation, 2014a).

However, agency leaders and staff experience a tension between providing clear guidance (which may become too prescriptive) and encouraging flexible responses from scientists, based on their particular research contexts and capabilities. In addition, agency employees sometimes lack understanding of team science processes and outcomes. As a result, they sometimes develop public announcements that include vague language about the type of collaboration and the level of knowledge integration they seek in the desired research3 (see Table 9-1). Announcements may lack sufficient guidance to facilitate interaction (e.g., by specifying the timing and frequency of in-person or virtual meetings or the inclusion of professional development plans). If the funder is soliciting interdisciplinary or transdisciplinary proposals, then these announcements may lack sufficient guidance to facilitate the deep knowledge integration that is required to carry out such research.

When funders do clearly articulate their goals for team science, they provide signals to scientists and institutions, which can in turn help facilitate culture change in the broader scientific community. For example, an earlier National Academies study reported that many scientists would like research universities to recognize and reward interdisciplinary research (National Academy of Sciences, National Academy of Engineering, and Institute of Medicine, 2005). In response to signals from NIH, the promotion and tenure guidelines for the University of Virginia School of Medicine support such recognition and reward. The guidelines include the statement, “The NIH roadmap for patient-oriented research endorsed team science and established the expectation of expertise for interdisciplinary investigation and collaboration” (Hall et al., 2013). This language reflects the medical school’s effort to align its institutional rewards and incentives with team-based approaches to conducting science and highlights the important role that funding agencies can play in influencing the scientific community.

Funders evaluate science teams and larger groups throughout the evolution of a research endeavor, beginning with the proposal review, then during the active research project, and finally following the end of the formal grant period.

________________

3 Professional leadership development to increase agency employees’ understanding of team science, as recommended in Chapter 6, could help improve the clarity of communication in research solicitations involving team science.

TABLE 9-1 Examples of Federal Research Funding Announcements That Support Team Science

| Agency | FOA/Program Solicitation Number | Program Name | Type of Mechanism | Organizational Structure | Funds | Examples of Language Related to Team Science |
| --- | --- | --- | --- | --- | --- | --- |
| NSF | NSF 07-558 | Engineering Virtual Organization Grants | Standard grant | Seed money to create engineering virtual organization. | Small; $2M total for ~10–15 awards | “EVOs extend beyond small collaborations and individual departments or institutions to encompass wide-ranging, geographically dispersed activities and groups.” |
| DoE | DE-FOA-0000919 | Collaborative Research in Support of GOAmazon Campaign Science | Research grant award | Projects will be affiliated with the multilateral campaign. Investigators are expected to coordinate their research with other investigators and with representatives of the ARM Climate Research Facility. | Small; $2.3M total for ~6–8 awards ($50k–350k per award) | “Emphasis on collaboration; those involved must be truly collaborative in the conduct of research, including definition of goals, approach, and work plan.” |
| NSF | NSF 13-500 | Cyber-Enabled Sustainability Science and Engineering (CyberSEES) | Standard grant | Team must include at least two investigators from distinct disciplines. | Small to Medium; $12M total for ~12–20 awards | “Team composition must be synergistic and interdisciplinary”; “focus on interdisciplinary, collaborative research; a minimum of two collaborating investigators (PIs/Co-PIs) working in different disciplines is required.” |
| NSF | NSF 12-011 | CREATIV: Creative Research Award for Transformative Interdisciplinary Ventures | New grant mechanism for special projects | Any NSF support topic area: interdisciplinary, high-risk, novel, potentially transformative. | Medium; up to $1M total, up to 5 years | “Must integrate across multiple disciplines;” “the proposal must identify and justify how the project is interdisciplinary;” “encourage cross-disciplinary science;” “break down any disciplinary barriers;” “proposals must be interdisciplinary.” |
| NIH | RFA-AG-14-004 | Roybal Centers for Translational Research on Aging | P30 | Center organized around thematic area; includes translational research activities, pilot projects, cores, and coordination center (optional). | Medium; $3.9M/year, ~8–12 awards | “To galvanize scientists at several academic institutions;” “of particular interest are projects that incorporate approaches from emerging interdisciplinary areas of behavioral and social science, including behavioral economics; the social, behavioral, cognitive and affective neurosciences; neuroeconomics; behavior genetics and genomics; and social network analysis.” |
| NASA | NASA ROSES A.11, NNH13ZDA001NOVWST | Ocean Vector Winds Science Team | Standard grant | Research that requires vector wind and backscatter measurements provided by QuikSCAT and the combined QuikSCAT/Midori-2 SeaWinds scatterometers. | $4.5M/year, expected ~25 awards | “Oceanographic, meteorological, climate, and/or interdisciplinary research.” |

NOTES:

DoE = U.S. Department of Energy

FOA = Funding Opportunity Announcement

NASA = National Aeronautics and Space Administration

NSF = National Science Foundation

RFA = Request for Applications

Proposal Review

Once funders have mechanisms in place to support team science, they must solicit and facilitate the review of proposals submitted for funding. Sometimes this review process involves internal review by program officers, but more often it involves peer review by experts in the field of study (Holbrook, 2010). There are a number of challenges that arise when reviewing team science proposals, especially when the research is interdisciplinary in nature. Challenges include issues such as composition of review panels and needed scientific expertise (Holbrook, 2013). To adequately review an interdisciplinary or transdisciplinary proposal, funders need to identify and recruit reviewers with expertise in the range of disciplines and methods included (Perper, 1989). It is often not sufficient, however, to have the specific expertise related to the elements of a proposal, as individuals with specialized expertise may not have sufficient breadth of knowledge or perspective to evaluate the integration and interaction of disciplinary or methodological contributions of an interdisciplinary proposal.

This may be particularly relevant in the case of agencies such as NIH, where reviewers have become increasingly junior (Alberts et al., 2014; Nature, 2014). Less experienced reviewers especially need review criteria to be clear, including what is being judged and how quality is defined (Holbrook and Frodeman, 2011; also see National Science Foundation, 2011, for a description of the agency’s merit review criteria).

In a recent empirical study of the grant proposal process at a leading research university, Boudreau et al. (2014) lent support to the view that peer reviewers may be too conservative. The authors found that members of peer review panels systematically gave lower scores to research proposals closer to their own areas of expertise and to highly novel research proposals. They suggested that, if funders wish to support novel research, then they should prime reviewers with information about the need for and value of novel research approaches in advance of the review meeting. A related concern is that some reviewers from individual disciplines may be biased against interdisciplinary research, potentially complicating the evaluation of the science itself (Holbrook, 2013). NSF’s Workshop on Interdisciplinary Standards for Systematic Qualitative Research (Lamont and White, 2005) produced an approach for establishing review criteria that could be applied to interdisciplinary research more broadly. Furthermore, review panels for some cross-disciplinary translational research, such as the patient-centered outcomes research funded by the congressionally mandated Patient-Centered Outcomes Research Institute, include non-scientist reviewers (at least two on each review panel). Such stakeholders are included to “help ensure the research . . . reflects the interests and views of patients” (Patient-Centered Outcomes Research Institute, 2014). The creation of the institute and inclusion of these stakeholders is an indication that patient advocacy groups are influencing biomedical research and health care practice (Epstein, 2011).

A number of additional issues can arise in the process of reviewing proposals. For example, involving many institutions may strengthen a given team science project (e.g., by bringing more resources or perspectives to the project), but this can potentially create a bias in favor of having more institutions. Reviewers may rate proposals including multiple institutions more favorably than those including fewer institutions, rather than basing their ratings entirely on scientific merit (Cummings and Kiesler, 2007). In some cases, moreover, reviewers from an institution included in a proposal must excuse themselves from review in order to avoid conflict of interest (e.g., in NIH and NSF panel reviews); the larger the proposed science group, the higher the likelihood that review members will need to leave the room. As a result, with larger and more complex projects, relatively fewer panel reviewers will remain in the room to judge the proposals. Such complications have prompted changes in agency policies for managing conflict-of-interest issues in the peer review process. For example, NIH (2011) issued a revised review policy based on “the increasingly multi-disciplinary and collaborative nature of biomedical and behavioral research.”

As discussed in the previous chapter, larger and more complex projects are also at greater risk for collaborative challenges after funding, yet there are typically no sections of the grant application devoted to describing management or collaboration plans. Review criteria are typically focused on the technical and scientific merit of the application, and not the potential of the team to collaborate effectively. The Trans-agency Subcommittee on Collaboration and Team Science mentioned above believes that including collaboration plans in proposals will help ensure that the needed infrastructure and processes are in place. The subcommittee has engaged in a series of workshops and projects specifically to develop guidance for (a) researchers, including key components to consider when developing collaboration/management plans; (b) agencies, including potential language for program officers to use when soliciting collaboration plans from investigators or guidance to researchers; and (c) reviewers, including evaluation criteria for reviewers of collaboration plans submitted by investigators as part of a funding proposal.

Team charters typically outline a team’s direction, role, and operational processes, whereas agreements or contracts outline specific terms that multiple parties formally or informally establish verbally or in writing. The use of charters and agreements for addressing specific collaborative factors such as conflict, communication, and leadership has been discussed in the literature (e.g., Shrum, Genuth, and Chompalov, 2007; Bennett, Gadlin, and Levine-Finley, 2010; Asencio et al., 2012; Bennett and Gadlin, 2012; Kozlowski and Bell, 2012). Importantly, as noted in Chapter 7, Mathieu and Rapp (2009) showed that the use of charters increased team performance, and that the quality of the charter mattered. In a study by Shrum, Genuth, and Chompalov (2007), the greater the number of participants, teams, and organizations included in a large, multi-institutional research project, the more frequently formal contracts were used. Although two-thirds of the collaborations studied by Shrum, Genuth, and Chompalov (2007) used some form of formal contract, the contracts were often very specific (e.g., to specify roles and assignments or rules for reporting developments within/outside of the collaboration) or were not drawn up until the end of the project.

Collaboration plans, as described here, build upon the goals of charters and agreements/contracts, but provide a broader framework to help address the breadth of issues outlined in this report. The plans include the use of charters, agreements, and contracts to achieve specific objectives. A study (Woolley et al., 2008) examining the influence of collaboration planning demonstrated that (p. 367) “team analytic work is accomplished most effectively when teams include task-relevant experts and the team explicitly explores strategies for coordinating and integrating members’ work.” The authors found that high task expertise in the absence of explicit collaborative planning actually decreased team performance.

This report has highlighted evidence related to factors at many levels that influence the effectiveness of team science. The primary goal of collaboration plans is to engage teams and groups in formally considering the various relevant factors that may influence their effectiveness and deliberately and explicitly planning actions that can help maximize their effectiveness and research productivity. Collaboration plans can serve to provide a framework for systematically considering the primary domains covered in this report. Federal agencies have begun requiring plans such as data management plans (e.g., NSF4) or leadership plans (e.g., NIH5), which contain elements of collaboration plans. However, these required plans are designed for more specific purposes or for specific mechanisms.

Emerging guidelines for broader collaboration plans, developed by the trans-agency subcommittee, would require proposals to address 10 key aspects of the proposed project: (1) Rationale for Team Approach and Team Configuration; (2) Collaboration Readiness (at the individual, team, and institutional levels); (3) Technological Readiness; (4) Team Functioning; (5) Communication and Coordination; (6) Leadership, Management, and Administration; (7) Conflict Prevention and Management; (8) Training; (9) Quality Improvement Activities; and (10) Budget/Resource Allocation (Hall, Crowston, and Vogel, 2014). Collaboration plans should vary in relation to the size and complexity of the scientific endeavor and take into account unique circumstances of the proposed team or group. The goal is to effectively collaborate to more rapidly advance science.

________________

4 See http://www.nsf.gov/bio/pubs/BIODMP061511.pdf [May 2015].

5 See http://grants.nih.gov/grants/guide/notice-files/NOT-OD-07-017.html [May 2015].

Program Evaluation

Evaluation approaches include formative evaluation, which provides ongoing feedback for project improvement (D. Gray, 2008; Vogel et al., 2014), and retrospective summative evaluation, which provides lessons for enhancing future programs (e.g., Institute of Medicine, 2013; Vogel et al., 2014). Public or private funders may require one or both types of evaluation as a condition of funding (Vogel et al., 2014) or conduct or commission evaluations on an ad hoc basis (e.g., Chubin et al., 2009). The complexities introduced by team-based research need to be considered when developing a comprehensive evaluation plan. However, a recent review of more than 60 evaluations of NIH center and network projects from the past three decades found that, although a majority of evaluation studies included some type of evaluation of the research process, this important dimension was often represented by either a single variable or a limited set of variables that were not linked to one another or to program outcomes in any conceptually meaningful way (The Madrillon Group, 2010).

Improvement-Oriented Approaches

Improvement-oriented or formative evaluation aims to enhance the ongoing management and conduct of a project by providing feedback to support learning and improvement (e.g., D. Gray, 2008; The Madrillon Group, 2010). This can be done in a number of different ways, including embedding evaluators within the team or group (e.g., D. Gray, 2008), engaging team science researchers to study the projects (e.g., Cummings and Kiesler, 2007), and collaborating with science of team science scholars or evaluators at a federal agency (e.g., Porter et al., 2007; The Madrillon Group, 2010; Hall et al., 2012b). For larger and longer-duration projects, especially university-based research centers, it is not unusual for a funding agency to conduct a site visit in which program officers visit the principal investigators (PIs) and have in-person discussions with project participants. Site visits allow funders to learn about the people involved in the projects, the research being conducted, and any barriers or hurdles being encountered.

Outcome-Oriented Approaches

Increased funding of team science has raised questions within the scientific community about the effectiveness of team approaches relative to more traditional, solo science, which has put pressure on funders to demonstrate the value of their investments through summative evaluation of outcomes (Croyle, 2008, 2012). Whether conducted as a case study or to compare what the project has achieved with a known benchmark or standard, summative evaluation can provide valuable information to funders and other stakeholders in the scientific community (Scriven, 1967). However, evaluating the outcomes of team science projects can be difficult, as discussed in Chapter 2. For example, the goals of small teams may entail the creation and dissemination of new scientific knowledge, but the goals of larger groups may include translation of scientific knowledge into new technologies, policies, and/or community interventions. Thus, the first step toward evaluating outcomes is to clearly specify all desired outcomes from the beginning. For example, if translation is a desired outcome, then Funding Opportunity Announcements (FOAs) could provide examples of outputs from research projects that synthesize and translate research findings into formats useful for a variety of stakeholder groups; such outputs might include written briefs or informational videos for use in clinical practice or new product development.

A summative evaluation can be completed by researchers themselves (e.g., through a final report or published journal article), by program evaluators contracted by funding agencies, by internal agency staff in collaboration with grantees, or by team science researchers. In all cases, the purpose is to establish lessons learned for the development and implementation of subsequent science teams, larger groups, or research programs (Hall et al., 2012b; Vogel et al., 2014). There are many dimensions to choose from when conducting an evaluation of team science outcomes, including identifying or developing metrics of outputs (e.g., publications, citations, training; see Wagner et al., 2011 for a discussion of interdisciplinary metrics), and identifying the intended targets of these outputs (research findings may be targeted to academics, business, or the general public; see Jordan, 2010, 2013). In addition, the evaluator must consider the type of innovation sought by the project (e.g., incremental or small improvements vs. radical or discontinuous leaps; see Mote, Jordan, and Hage, 2007), the time frame (e.g., short-term vs. long-term outcomes), and the type of intended long-term impact (e.g., science indicators; see Feller, Gamota, and Valdez, 2003). Evaluators can also use a range of methods to judge how successful particular team science projects have been, such as citation analysis and the use of quasi-experimental comparison samples and research designs (Hall et al., 2012b).
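As an illustration only, the comparison-sample approach mentioned above might be sketched as follows. The citation counts are invented placeholders, not data from any evaluation, and the function names are hypothetical. The sketch uses a simple permutation test, which is one of several ways to ask whether a difference in mean citations between team projects and a comparison sample could plausibly arise by chance. A real quasi-experimental design would also need to match or adjust for field, project size, funding level, and time since publication.

```python
import random
from statistics import mean


def mean_difference(team, comparison):
    """Mean citations of team projects minus mean of the comparison sample."""
    return mean(team) - mean(comparison)


def permutation_p_value(team, comparison, n_permutations=10_000, seed=0):
    """Two-sided permutation test for the difference in mean citations.

    Group labels are shuffled repeatedly; the p-value is the share of
    shuffles whose absolute mean difference is at least as large as the
    observed one (with the usual +1 correction).
    """
    rng = random.Random(seed)
    observed = abs(mean_difference(team, comparison))
    pooled = list(team) + list(comparison)
    n_team = len(team)
    extreme = 0
    for _ in range(n_permutations):
        rng.shuffle(pooled)
        shuffled_diff = mean(pooled[:n_team]) - mean(pooled[n_team:])
        if abs(shuffled_diff) >= observed:
            extreme += 1
    return (extreme + 1) / (n_permutations + 1)


# Hypothetical citation counts per project (placeholders, not real data).
team_citations = [12, 30, 7, 45, 22]
comparison_citations = [9, 14, 5, 20, 11]

difference = mean_difference(team_citations, comparison_citations)
p_value = permutation_p_value(team_citations, comparison_citations)
print(f"difference in mean citations: {difference:.1f}")
print(f"permutation p-value: {p_value:.3f}")
```

With samples this small the test has little power, which is itself a reminder of why evaluators often combine citation analysis with other methods and outcome measures.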

As discussed in Chapter 2, evaluators have tended to rely on publication data (bibliometrics) as metrics of the outputs and outcomes of team science. While funders and evaluators recognize the need for new metrics to capture broader impacts, such as improvements in public health (Trochim et al., 2008), developing methodologically and fiscally feasible metrics has proven difficult (see Chapters 2 and 3). Thorough evaluation is further hampered because data are unavailable for a range of programs and projects. In addition, little research to date has used experimental designs that compare team science approaches or interventions6 with control groups to identify impacts.

The recent development of “altmetrics” provides helpful data that may be used to improve evaluation of team science projects (Priem, 2013; Sample, 2013). In 2010, a group of scientists called for consideration of all products of research grants rather than just peer-reviewed publications, including sharing of raw data and self-published results on the web and through social media; they also called for development of “crowdsourced” automated metrics tied to the products, such as reach of Twitter posts or blog views (Priem et al., 2010). The new movement already has had some effects, as NSF has changed the language of required biosketches to include products such as datasets, software, patents, and copyrights. Piwowar (2013) contended that altmetrics give a fuller picture of how the products of scientific research have influenced conversation, thought, and behavior.

As emphasized in this report, it is important to evaluate team science processes and to study how these processes relate to research outcomes and impacts in order to understand potential mediators and moderators of successful team science outcomes. By doing so, funders can contribute to the knowledge needed to develop evidence-based support for team science. In addition, studies can examine not only the relationships between outcomes and particular funding mechanisms (Druss and Marcus, 2005; Hall et al., 2012b) but also the relationships between outcomes and measures of team processes (e.g., The Madrillon Group, 2010; Stipelman et al., 2010), thereby increasing the knowledge base and enhancing funders’ ability to support team science.

In a time of federal budget constraints, funding agencies are becoming increasingly aware of the potential advantages of using systematic and scientific approaches to managing and administering their programs, setting priorities, and allocating funds. The Office of Management and Budget in the Executive Office of the President (2013) released a government-wide memo that calls for using evidence and innovation to improve government performance.

________________

6 As noted in Chapter 6, an ongoing study by Salazar and colleagues uses an experimental design to test interventions designed to facilitate knowledge integration in interdisciplinary and transdisciplinary projects (see http://www.nsf.gov/awardsearch/showAward?AWD_ID=1262745&HistoricalAwards=false [May 2015]).

The memo emphasizes (p. 3) “high-quality, low-cost evaluations and rapid, iterative experiments” and the use of “innovative outcome-focused grant designs.” Agencies have begun responding to this message. For instance, a recent report by NIH (2013) summarized and recommended:

. . . ways to strengthen NIH’s ability to identify and assess the outcomes of its work so that NIH can more effectively determine the value of its activities, communicate the results of studies assessing value, ensure continued accountability, and further strengthen processes for setting priorities and allocating funds.

The Office of Management and Budget memo and the NIH report highlight the need for the development of more evidence-based strategies to facilitate and support team science. The science of team science community is well poised to help address these issues.

SUMMARY, CONCLUSIONS, AND RECOMMENDATIONS

Many public and private organizations fund and evaluate team science. Public and private funders typically use a collaborative process to set research priorities, engaging with the scientific community, policy makers, and other stakeholders. Hence, they are well positioned to work with the scientific culture to support those who want to undertake team science. When soliciting proposals for team science, federal agency staff members sometimes write funding announcements that are vague about the type and level of collaboration being sought. At the same time, the peer review process used to evaluate proposals typically focuses on technical and scientific merit, not on the potential of the team to collaborate effectively. Including collaboration plans in proposals, along with guidance to reviewers about how to evaluate such plans, would help ensure that projects include infrastructure and processes that enhance team science effectiveness. The committee’s review of research and practice on funding and evaluation of team science in this chapter raises several important unanswered questions, which are discussed in Chapter 10.

CONCLUSION. Public and private funders are in a position to foster a culture within the scientific community that supports those who want to undertake team science, not only through funding, but also through white papers, training workshops, and other approaches.

RECOMMENDATION 7: Funders should work with the scientific community to encourage the development and implementation of new collaborative models, such as research networks and consortia; new team science incentives, such as academic rewards for team-based research (see Recommendation #6); and resources (e.g., online repositories of information on improving the effectiveness of team science and training modules).

CONCLUSION. Funding agencies are inconsistent in balancing their focus on scientific merit with their consideration of how teams and larger groups are going to execute the work (collaborative merit). The Funding Opportunity Announcements they use to solicit team science proposals often include vague language about the type of collaboration and the level of knowledge integration they seek in proposed research.

RECOMMENDATION 8: Funders should require proposals for team-based research to present collaboration plans and provide guidance to scientists for the inclusion of these plans in their proposals, as well as guidance and criteria for reviewers’ evaluation of these plans. Funders should also require authors of proposals for interdisciplinary or transdisciplinary research projects to specify how they will integrate disciplinary perspectives and methods throughout the life of the research project.