The “moving parts” of a decadal survey are described in Chapter 1. This chapter describes how a typical decadal survey works and the variations that have developed as planetary science, Earth science and applications from space, and solar and space physics (heliophysics)—with their different scientific goals, methods, and cultures—have joined astronomy and astrophysics in developing their community’s consensus on science goals and a program for achieving them. Different parts of the decadal process are discussed, including how a recommended program of activities is produced for the following 10 years.

COMMON FACTORS AND DIFFERENCES BETWEEN DISCIPLINES

Common Threads

Although decadal surveys have many discipline-specific science goals and objectives, this committee recognized during its deliberations a number of common themes that apply to all surveys.

First and foremost, each survey needs to engage its science community across a broad range of specific disciplines in order to identify the most important science questions and goals for the coming decade. Typically, concurrent with the beginning of the decadal process, the community is engaged to provide white papers on specific science goals and the tools (facilities, missions, capabilities, and associated activities) that should be pursued in the coming decade. The disciplinary or thematic panels of the decadal survey function as a conduit channeling ideas from the community at large to the decadal survey committee. The panels synthesize and prioritize community input in their panel reports. How the panels and committees interact, and the fate of those panel reports, vary between disciplines, as discussed below.

Another common theme is that all surveys have a much broader range of science and mission goals than can be implemented within resource constraints. The decadal survey committee assumes, or is given an outline of, the budget profile for the coming decade, but that budget may or may not match future fiscal reality. All surveys have faced the difficult task of distilling a wide range of possibilities down to a high-priority science program that fits within the projected budget profile and is balanced in terms of science areas, basic research versus societal needs, mission/facility scale, technology development versus infrastructure, and many more such choices. Each survey may consider alternative scenarios and develop decision rules to provide resilience to inevitable changes in expected funding.

As discussed in the “Mission Definition and Formulation” section below, a common difficulty encountered in surveys is that the short development timeframe limits the time available for detailed mission and facility concept studies. Similarly, all survey committees have considered the impact of cost overruns by large missions on overall program health and balance. Many must also consider the impacts of long-term operational costs for missions and facilities that live well beyond their original design lifetime. Finally, of concern to all disciplines is ensuring the continuity of the research and analysis and technology development programs that foster community stability and the development of the next generation of scientists and engineers.1

Once the decadal survey is released, all disciplines need to engage their science communities to support the survey with a unified voice; however, there are sometimes vocal minorities who believe that their science has not received the priority it deserves. This is why it is critically important that decadal surveys widely sample their community’s ideas and opinions at the beginning of the process (see Chapter 1, “Community Input and Engagement”). The community’s perception of a “fair hearing for all comers” is essential: equality of opportunity must be assured where equality of outcome is not. The survey report, and in particular the panel reports, record the paths by which the top science priorities and favored implementation strategies emerged from the process. By thoroughly laying out the rationale for these choices, the survey can convey to the community at large why they prevailed over other possibilities. To be successful with program managers, politicians, the Office of Management and Budget (OMB), the Office of Science and Technology Policy (OSTP), and other stakeholders, the decadal survey report needs to be seen as a consensus document that is not circumvented by “special interests” pleading their priorities to their representatives in Congress and/or the administration.

Differences in Survey Structure

Prominent distinctions between decadal surveys reflect differences in their disciplines, which influence the number of panels and their areas of focus, the interfaces between the committee and panels, the structure and roles of the committee versus the panels, how panel reports are provided to the survey committee for deliberation, and whether or not the panel reports are included in the final survey.2

In some communities, implementation is not separable from science goals, while in others the science goal takes precedence and might be implemented in a variety of ways. The former is exemplified by a facility that is capable of supporting multiple users addressing very different science goals, for example, the Hubble Space Telescope, for which users with a huge range of projects apply for observing time. In the latter case, with the focus on a science goal, multiple competitive proposals might be solicited for an identified science target (e.g., NASA’s New Frontiers line of medium-class, principal-investigator (PI)-led planetary science missions). In still other cases, measurements for multiple science goals may require multiple platforms or a system of measurements (e.g., upstream and downstream in the solar wind), suggesting a recommended program rather than a series of isolated projects.

How each community defines its metrics for cutting-edge science also varies; for example, the survey may address fundamental questions with new technology or methods, focus on specific targets or measurement objectives (by wavelength or energy, location in space or in the solar system), or determine that the “answer” requires a long-term sustained observation of slowly changing processes (e.g., changes in the oceans and climate).

Some Discipline-Specific Differences

Earth Science and Applications from Space

As noted by its survey co-chair, Berrien Moore, the implementation of the first Earth science and applications from space decadal survey3 (Earth2007) faced many unique challenges, in particular how to create an integrated set of observations and missions for Earth system science and applications.4 With an emphasis on Earth system science, panels were organized thematically rather than by discipline. The survey had to balance societal needs and benefits against fundamental advances in scientific knowledge in a heavily politicized environment, at least with regard to climate change.5 In addition, because this discipline engages many federal agencies beyond NASA (in particular the National Oceanic and Atmospheric Administration [NOAA] and the U.S. Geological Survey, each of which has its own mission), data may be applicable across a broad range of agencies without clear lines of financial or operational responsibility. Earth2007 was the first in the most recent series of reports from the four disciplines considered in this report. However, because it was completed before the enactment of the 2008 NASA Authorization Act, Earth2007 did not include the independent cost evaluations that were required for all subsequent surveys.

_______________

1 A more complete discussion of the research and analysis and technology development programs can be found in Chapter 3.

2 National Research Council (NRC), Lessons Learned in Decadal Planning in Space Science: Summary of a Workshop, The National Academies Press, Washington, D.C., 2013, pp. 6-8, 17.

3 NRC, Earth Science and Applications from Space: National Imperatives for the Next Decade and Beyond, The National Academies Press, Washington, D.C., 2007.

Astronomy and Astrophysics

The 2010 astronomy and astrophysics decadal survey6 (Astro2010) had the largest and arguably most complicated survey structure. Its survey committee included an executive committee and three subcommittees. Science and implementation strategies were separated into science frontiers panels (SFPs) and program prioritization panels (PPPs), and there were six infrastructure study groups (ISGs) that were populated by unpaid consultants rather than appointees of the Academies.7 The priorities in the SFP reports were first fed into the PPPs, whose reports, along with the SFP reports, subsequently went to the survey committee for final integration into the survey report.8 The working papers generated by the ISGs were shared with the PPPs and the survey committee but were not published. There is an especially strong synergy and codependence between orbiting and ground-based telescopes, which requires a degree of coordination between the National Science Foundation (NSF) and NASA. This discipline also addresses important questions in fundamental physics, which is part of the reason the Department of Energy (DOE) has an important role as a stakeholder. Interactions among federal investment, private philanthropy, and academic institutional assets are crucial in this discipline and often strongly affect survey discussions.

Planetary Science

Because of the nature of the science, the 2011 planetary science decadal survey (Planetary2011) divided the discipline by solar system target or object class (e.g., inner planets, small bodies, giant planets) and by how specific targets contribute to human knowledge of the current state and evolution of the solar system as a whole. Science and mission ideas that emerged during the survey were prioritized at the panel level and then integrated across the solar system by the survey committee. Because of the orbits of planets and small bodies (e.g., comets and near-Earth objects [NEOs]) relative to Earth, most solar system targets have inflexible or limited launch windows that impose hard schedule constraints with associated budget implications. The availability of launch windows was one deciding factor when considering whether Uranus or Neptune should have priority in the 2012-2023 decade.9 The potential for long cruise phases before the start of data collection also means that missions may have significant financial impacts across multiple decades and can pose unique challenges for retaining scientific and engineering continuity from launch to arrival at the destination. This discipline has also provided explicit decision rules, but it is perhaps too early to assess their ultimate effectiveness.10

_______________

4 NRC, Lessons Learned in Decadal Planning in Space Science, 2013, p. 14.

5 NRC, Lessons Learned in Decadal Planning in Space Science, 2013, pp. 18, 27-28.

6 NRC, New Worlds, New Horizons in Astronomy and Astrophysics, The National Academies Press, Washington, D.C., 2010.

7 Activities of the National Research Council are now referred to as activities of the National Academies of Sciences, Engineering, and Medicine.

8 NRC, Lessons Learned in Decadal Planning in Space Science, 2013, Figure 2.1 and p. 8.

9 NRC, Vision and Voyages for Planetary Science in the Decade 2012-2023, The National Academies Press, Washington, D.C., 2011, pp. 7-35.

10 NRC, Lessons Learned in Decadal Planning in Space Science, 2013, p. 15.

Solar and Space Physics (Heliophysics)

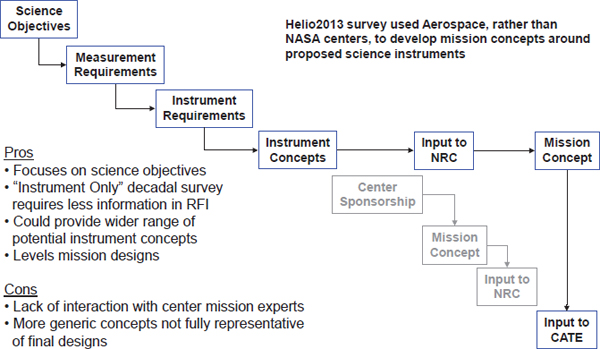

The 2013 solar and space physics (heliophysics) decadal survey (Helio2013) explicitly did not “grandfather” prior mission recommendations from the previous decadal survey, but it was later asked by the NASA program manager to consider Solar Probe Plus, with cost triggers and decision rules in the event of major programmatic changes. As Daniel Baker, chair of Helio2013, the last of the four surveys in this round, noted, the committee benefited from seeing the challenges of implementing the programs of the other surveys and chose to focus on achievable, smaller-scale activities.11 The survey also used working groups that considered crosscutting issues relevant to all the panels. The statement of task for the survey, however, explicitly excluded the development of “specific mission or project design/implementation concepts,”12 which made it difficult to cost “science objectives” through the required cost and technical evaluation (CATE) process and led to the development of more notional mission concepts.13

Each community draws from the lessons learned and best practices of its earlier decadal surveys, and from the experiences of other disciplines that use the decadal survey process.

Lesson Learned: There is no “one-size-fits-all” approach to a decadal survey. Each discipline has its own cultural heritage and scientific goals that cannot be mapped directly onto those of any other group.

Lesson Learned: The presently used process for conducting decadal surveys is able to accommodate these differences in the four disciplines and achieve the goal of community consensus on science goals and the activities that are required to achieve them.

Best Practice: Because the disciplines are so different, clear articulation of their unique aspects is useful to readers of the survey reports who might expect “uniformity in approach” across the surveys.

MISSION DEFINITION AND FORMULATION

Where Do Missions Come From?

The primary role of a decadal survey is to provide an overview and assessment of the current state of knowledge in a specific discipline, identify and prioritize the top-level science questions for the coming decade, and map these questions into a balanced implementation strategy that could be accomplished within projected budgets. Each decadal survey identifies a small set of overarching science questions or goals that drive the entire program; these often change little from decade to decade. Underlying each question or goal are a number of more detailed questions and objectives that can change significantly from decade to decade as new discoveries are made and prior objectives are achieved through missions or other activities. Science priorities can also change if technology developments are able to advance high-priority science that was previously beyond reach.

Because of the scientific breadth of each discipline, and the complexities and interrelationships of the science, it is not straightforward to move from the identification of high-priority science goals to an optimized program of missions, facilities, and associated activities that maximizes the science, maintains balance across the program, and fits within anticipated budgets. For the purposes of this discussion, the committee uses the term mission to apply to space missions, including multi-platform observing systems and ground-based facilities.

Mission concepts have been derived from a variety of sources, including the following:

• Concepts that had been studied during prior decadal surveys but had either not been selected or not survived Phase A study;

• Concepts that had been studied by a community group or NASA center but had not been officially part of a previous decadal survey process;

• New concepts that were brought to the decadal survey either in white papers from across the community or in presentations by community groups (such as the analysis and assessment groups [AGs] within planetary science) or by senior members of the community with a broad perspective on the field;14 and

• Novel concepts that evolved from discussions during meetings of the decadal survey panels.

_______________

11 Ibid.

12 NRC, Solar and Space Physics: A Science for a Technological Society, The National Academies Press, Washington, D.C., 2013, p. xii.

13 NRC, Lessons Learned in Decadal Planning in Space Science, 2013, p. 16.

Before discussing more specific aspects of missions, it is useful to review their place in the decadal process. Regardless of their origin, all missions are discussed in detail by the panels before selections are made for further study, cost evaluation, and/or implementation recommendations. The panels then (typically) develop a notional program for that part of the discipline. These programs are passed to the survey committee, whose primary responsibility is to edit and blend this input into a coherent set of science goals, and the means to achieve them, across the discipline. In defining the strategy for the coming decade, each survey pays attention to a balance of activities, including missions, research and analysis, supporting facilities, technology development for the decade and beyond, and education and training. The implementation strategy for each decadal survey strives to be robust and achievable within appropriate timelines and budgets.

Best Practice: A solicitation process to gather community input via white papers, together with invited presentations by community groups and field leaders, broadens survey participation and ensures that there is an opportunity to consider important activities for the coming decade. This approach recognizes emerging opportunities and innovative approaches to program implementation that may not be readily apparent to members of the survey committee and panels.

How Are Missions Formulated?

Within and among the four disciplinary areas in the NASA Science Mission Directorate (SMD), there are differences in mission classes, in the treatment of cost-capped missions (see Box 2.1) versus performance-driven missions, in science-focused versus time-series campaigns (notably in Earth science and space weather), in the time from launch to science phase, and in the availability of supporting resources and infrastructure. NSF faces analogous challenges for certain large-scale facilities. Not surprisingly, each decadal survey has followed a different approach in determining which concepts would go forward for study and in prioritizing the subsequent mission concepts, a complicated process that is described in detail in the following section.

Despite the differences in approach for the various decadal surveys, there are clear parallels in how mission concepts, large-scale facilities, and observing system infrastructure are defined, prioritized, and implemented between the disciplines. Priority science goals that are defined within each of the decadal surveys are used to develop and refine mission concepts, facilities, and infrastructure that optimize science return while minimizing mission cost and risk.

In general, concepts for lower-cost NASA missions in each of the disciplines (e.g., Discovery or Explorer-class missions) are not defined or studied in detail within the decadal survey process. Instead, surveys typically recommend that such missions address priority decadal survey science goals and objectives while leaving the implementation details to the agencies, proposers, and/or mission teams.

For higher-cost missions, both cost-capped (generally PI-led) missions and performance-driven missions (see the section “High-Profile NASA Missions”), more detailed studies are generally required to ensure a viable decadal science strategy based on a full understanding of the costs and risks associated with the recommended program. To support a realistic cost evaluation, a sufficient fidelity in mission definition is needed to identify sources of risk that could lead to cost growth, which could affect that committee’s prioritization and recommendation. The extent

_______________

14 For all decadal surveys, community input has been solicited on both science questions and implementation strategies for the coming decade. While community input can address the full spectrum of implementation activities, a significant percentage of community input is focused on particular space mission concepts.

BOX 2.1

Cost-Capped Missions in NASA’s Science Mission Directorate

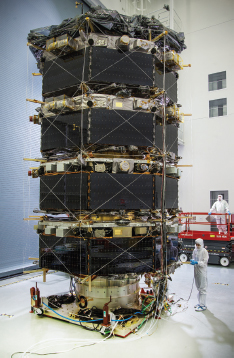

The Heliophysics Division supports the Heliophysics Explorer Program of competed principal-investigator (PI)-led small and medium Explorer-class missions ($50 million-$300 million), as well as directed missions in the Solar Terrestrial Probes Program (center-led but recommended for PI-led/cost-capped management; see Figure 2.1.1) and the Living with a Star Program, with a mix of medium ($300 million-$600 million) and large (>$600 million) missions.

The Earth Sciences Division supports competed Venture-class missions (space missions, hosted instruments, and suborbital campaigns with varying cost caps under $150 million that are managed by the Earth System Science Pathfinder Program) and directed systematic missions of various sizes (both cost-capped and performance-driven).

The Planetary Science Division supports the Discovery Program of smaller PI-led, competed missions (<$500 million); the New Frontiers Program of medium-sized PI-led competed missions ($500 million-$1 billion); and high-profile missions that are center-led, directed—i.e., non-competed—missions.

The Astrophysics Division supports the Astrophysics Explorer Program of small (<$120 million) and medium ($120 million-$180 million) missions, as well as the Physics of the Cosmos Program, the Cosmic Origins Program, and the Exoplanet Exploration Program, each with a mix of medium and large (>$1 billion) missions.

In addition, each of the divisions can support smaller Missions of Opportunity (MOOs), suborbital missions (balloons, sounding rockets, etc.) and cube satellites.

FIGURE 2.1.1 View of the fully stacked Magnetospheric Multiscale Mission (MMS) prior to its launch in March 2015. MMS is the fourth spacecraft in NASA’s Solar-Terrestrial Probe program. SOURCE: Courtesy of NASA/Chris Gunn.

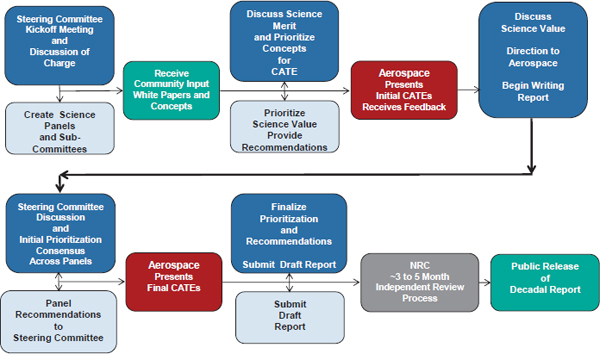

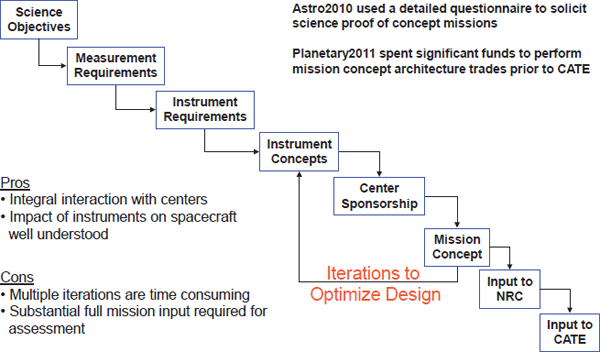

to which mission concepts are developed prior to versus during the decadal survey has varied between disciplines. For Astro2010, for example, the agencies sponsored detailed mission-concept studies that were fed directly into the decadal survey process for evaluation and prioritization. However, in Helio2013, mission concepts were developed within the decadal survey process based on proposed instrument concepts. Figures 2.2 and 2.3 from the “Cost and Technical Evaluation: The CATE Process” section (below) show the evolution of mission concepts were used in Astro2010 and Helio2013, respectively.

Best Practice: The practice within decadal surveys of not defining specific NASA mission concepts for lower-cost and competed missions, yet recommending that such missions address priority decadal survey goals and objectives, allows flexibility to leverage innovative implementation approaches.

How Is Mission Affordability Assessed?

Assessing the affordability of recommended missions has been a challenge for decadal surveys. The cost-risk assessment process adopted by recent decadal surveys is described below (see the section “Cost and Technical Evaluation: The CATE Process”; the specific application of the CATE process for recent surveys is described in Appendix B). The intent of the process is to ensure that mission costs considered during the prioritization process are credible and associated risks are well understood.

The fidelity of cost evaluations depends strongly on the robustness of the mission design. Unfortunately, the decadal survey timescale and budget are limited, and mission studies can consume considerable portions of both. This restricts the total number of studies that can be performed prior to mission prioritization and drafting of the decadal survey. Given these time constraints, decisions on whether or not to study particular mission concepts may even have to be made before the priority science questions and goals have been identified. Such situations should be avoided.

Best Practice: A two-step CATE process that allows more concepts to remain in consideration in the early stages of the survey includes a faster, cruder “cost box” analysis for a longer list of candidate concepts. This would be followed by a detailed CATE for candidates for the final program that require more detailed assessment due to their cost, complexity, risk, or importance to the community.
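To make the two-step funnel concrete, here is a minimal Python sketch. It is purely illustrative: the `Concept` record, the cost-box edges, the budget ceiling, the risk flag, and the rule that triggers a detailed CATE are all hypothetical assumptions chosen for the example, not procedures or figures taken from any survey.

```python
from dataclasses import dataclass


@dataclass
class Concept:
    """Hypothetical candidate concept with a rough "cost box" (in $ millions)."""
    name: str
    cost_low: float   # lower edge of the rough cost box (illustrative value)
    cost_high: float  # upper edge of the rough cost box (illustrative value)
    high_risk: bool   # hypothetical flag for complexity or technical risk


def cost_box_screen(concepts, ceiling):
    """Step 1 (fast, crude): keep every concept whose cost box could fit under the ceiling."""
    return [c for c in concepts if c.cost_low <= ceiling]


def needs_detailed_cate(concept, large_threshold):
    """Assumed trigger for step 2: expensive or risky concepts get a full CATE."""
    return concept.cost_high >= large_threshold or concept.high_risk


def two_step_cate(concepts, ceiling, large_threshold):
    """Return (names sent to detailed CATE, names assessed by cost box only)."""
    survivors = cost_box_screen(concepts, ceiling)
    detailed = [c.name for c in survivors if needs_detailed_cate(c, large_threshold)]
    box_only = [c.name for c in survivors if not needs_detailed_cate(c, large_threshold)]
    return detailed, box_only


# Illustrative candidates only; the numbers are invented for this example.
candidates = [
    Concept("A", 300, 450, False),
    Concept("B", 900, 1600, False),
    Concept("C", 400, 700, True),
    Concept("D", 2500, 4000, True),
]
detailed, box_only = two_step_cate(candidates, ceiling=2000, large_threshold=1000)
print(detailed)  # ['B', 'C']
print(box_only)  # ['A']
```

Keeping the first step deliberately permissive (a concept survives if even the low end of its cost box fits) is one way to keep more concepts in play early, reserving the costlier detailed evaluation for the smaller set that warrants it.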

Robust cost evaluation of mission concepts requires fairly detailed mission definition. The template process is to (1) start with the science goals, (2) trace them through science implementation—specific investigations and measurements, (3) identify or invent a candidate instrument or suite of instruments, and (4) develop a mission point design. Seeking a more accurate cost evaluation drives a tendency to over-specify instrument suites and missions. A negative consequence is that program directors and potential mission principal investigators can feel overly constrained in developing alternative mission concepts that might more efficiently address survey goals.

Lesson Learned: The tendency to over-define mission concepts in pursuit of more accurate cost evaluation can stifle creative approaches to addressing survey goals.

Best Practice: Decadal surveys can present their implementation strategies as reference missions—that is, a credible hardware configuration that can achieve the science goals and is sufficiently defined for robust cost evaluation—instead of blueprints for detailed implementation.

Best Practice: It is desirable that the survey committee determine, as early in the process as possible, how robust a mission concept needs to be to provide sufficient cost certainty. An example is an ambitious mission where the survey committee needs to know—with reasonable confidence—that a mission team will be able to propose a credible design that meets science requirements and fits within the cost cap for the mission class.

Care is needed to prevent decadal survey discussions from interfering with ongoing competitions. For example, during Planetary2011, competition for the third mission in the New Frontiers program was under way. Ultimately, NASA selected three mission concepts for Phase A studies. The relevant decadal survey panels discussed all three, although cost assessments were not performed. In one case, a panel determined that a particular mission concept did not warrant consideration as a high-priority mission for the coming decade; this information was not shared with NASA so as not to interfere with the ongoing competition.

Lesson Learned: Because each of the disciplines defines and develops missions differently, there can be no uniform approach to dealing with mission concepts from prior decadal surveys, mission concepts that are under competition, or missions in Phase A study. Nonetheless, it is highly desirable for a survey committee to decide early on how to deal with such situations and to communicate this to the panels.

SCIENCE AND MISSION PRIORITIZATION

In the process of constructing a recommended program for a particular discipline, a decadal survey “must provide a compelling science narrative that communicates the importance and value of the science.”15

Prioritization is an essential part of producing a strategic program that is feasible, executable, and sustainable over a decade-long horizon. The decadal survey prioritization process assimilates science goals, missions, facilities, observing systems, infrastructure, human capital, and public-benefit considerations into a programmatic vision that is coherent and integrated, with well-articulated goals and well-defined metrics.

It is in the prioritization process where “scientific aspirations—what we would like to do—come face-to-face with what we can do.”16 To accomplish this, decadal surveys must identify and weigh a number of goals, values, and challenges related to initiatives that could advance the highest-priority science programs. Some examples of these criteria are as follows:

• Is the proposed science transformational and/or fundamental, or is it incremental?

• Is the science primarily explorative, exploiting new technology or techniques to search for new kinds of celestial sources and/or phenomena that have been previously beyond reach?

• Is it practical, within state-of-the-art technology, or does it require a substantial advancement from present capabilities?

• What is the breadth of the science to be achieved? Will its accomplishment have effects across the discipline, across multiple disciplines, across agencies, or around the world?

• What is the complexity of execution? Are multiple new technologies or system capabilities required? Are there substantial dependencies on infrastructure and/or workforce or a complex relationship among international partners?

• How will the execution of the program, including the scale of effort and potential risk, affect portfolio balance? Are substantial benefits to other science themes or disciplines likely?

• What is the return on investment? Is it simply science per dollar, or does it extend to national priorities or leadership?

• Are there other aspects of the activity that could affect its priority—for example, operational issues, links to human exploration, interagency and international cooperation, and the interests of other stakeholders?

• Would there be societal benefits or other impacts? Will the science notably serve the public interest, contribute to national imperatives, advance education or workforce development, or help build or retain areas of excellence?

It is clear from this incomplete list that prioritization is a very complicated process that involves the simultaneous assessment of very different qualities and characteristics, the ultimate “apples versus oranges versus plums versus grapes” comparison. The choice is multidimensional and asymmetric, with pros and cons that may be correlated or anti-correlated. This is why the process is so difficult and so powerful, and why doing it well is key to the success of a decadal survey.

_______________

15 NRC, Lessons Learned in Decadal Planning in Space Science, 2013, p. 3.

16 NRC, Lessons Learned in Decadal Planning in Space Science, 2013, p. 33.

TABLE 2.1 Prioritization Criteria Used by Various Decadal Surveys

| Survey | Broad Prioritization Criteria for Notional Mission Concepts, Measurement Systems, and Capabilities |
|---|---|
| Earth Sciences and Applications from Space (Earth2007) | |
| Astronomy and Astrophysics (Astro2010) | |
| Planetary Sciences (Planetary2011) | |
| Solar and Space Physics (Helio2013) | |

What Do Decadal Surveys Prioritize?

A decadal survey committee’s key role is in the prioritization of science goals and objectives. To accomplish these science goals, the decadal survey considers missions, facilities, observing systems, and associated activities that are judged to be necessary for making the key observations and measurements.

The terms missions and facilities may capture the major elements of the decadal programs for astronomy and astrophysics and for planetary science, even though a single mission or facility may serve the needs of completely independent science programs. However, these words do not fully capture the richness of the observational tools needed for both Earth science and solar and space physics, where comprehensive understanding of diverse and coupled physical domains requires a coordinated observing network comprising distributed sensors, satellites, observatories, and laboratories. Elements of a decadal program that together form an array capable of multiple types of measurements are referred to as an observing system. This distinction is crucial, especially in discussions of prioritization.

For example, Earth2007 considered the possibility of placing a variety of instruments on single platforms in the same orbit, identifying multiple uses for similar or identical measurements, flying platforms/instruments in formation, and many other options. These coordinated elements provide a framework for the Earth information system to address “recognized national needs for Earth system science research and applications to benefit society.”17 Similarly, solar and space physics prioritization criteria (Table 2.1) include consideration of the relevance of measurement and data requirements to societal issues (space weather impacts and solar and terrestrial climate system measurements), timing relative to the solar cycle, exploitation of unique critical vantage points, and the availability of other missions delivering complementary data. Helio2013 explicitly discussed how “NASA’s existing heliophysics flight missions and NSF’s ground-based facilities form a network of observing platforms that operate simultaneously to investigate the solar system. This array can be thought of as a single observatory—the Heliophysics Systems Observatory.”18

_______________

17 NRC, Earth Science and Applications from Space, 2007, pp. 27-28.

How Decadal Surveys Prioritize: The Roles of Committees and Panels

The prioritization process varies by discipline owing to different scientific methods and cultures. As discussed in Chapter 1, all decadal survey committees have used panels to organize and to winnow community inputs in order to guide the early steps of prioritization. As noted at the 2012 workshop, most survey committees delegate the formulation of key science priorities to supporting panels.19 A variety of criteria and techniques have been used by the four SMD disciplines to prioritize science, observing systems, and supporting activities.

Earth Science and Applications from Space

The Earth2007 panels used guideline criteria to assess how each proposed concept would contribute to the following:

• The most important scientific questions facing Earth sciences today (scientific merit, discovery, exploration),

• Applications and policy making (societal benefits), and

• The long-term observational record of Earth.

The Earth2007 panels also examined and assigned priority based on (1) complementarities with other observational systems, including planned national and international systems; (2) technical readiness and risk mitigation strategies; and (3) affordability with respect to the entire envisaged portfolio.20

The panels received more than 200 white papers outlining ideas or plans for missions. Individual panels discussed and winnowed community proposals and introduced ideas of their own to develop a program; the list of potential missions was much longer than could conceivably be implemented.

Individual Earth2007 panels had direct representation on the survey committee—panel chairs were also members of the survey committee. After the first round of panel prioritization, the survey committee met with the panels to look for synergies among the various individual proposals. Panels were able to consider various kinds of observing systems. This complex process enabled the survey committee to develop and optimize a suite of missions as components of an observing system, instead of having to simply choose among the priorities of individual panels.

A further objective of the Earth2007 prioritization process was to achieve a robust, integrated program—one that would not crumble if one or several of the prioritized missions were removed or delayed or if the mission list evolved to accommodate changing needs. The survey committee underscored the importance of maintaining a robust program over any particular mission on the list, stating, “It is the range of observations that must be protected rather than the individual missions themselves.”21 This strategy would also facilitate augmentation or enhancement of the program should additional resources become available beyond those planned for by the survey.

The Earth2007 report called for the U.S. government to renew its investment in Earth-observing systems, with specific recommendations concerning long-term observations and a listing of 17 recommended “new measurement” missions, organized into phases, for implementation by NASA and NOAA.22

Solar and Space Physics (Heliophysics)

Helio2013 developed a process for prioritizing science and observing systems that was driven by the overarching scientific goal of advancing understanding of the Sun and its interactions with Earth and the interstellar medium. A particular focus was placed on practical applications, such as the science needed to reliably forecast disruptive space-weather disturbances that threaten the economy and technology infrastructure. Three guiding principles for prioritization in the solar and space physics program were articulated: transformational science requires study of the Sun, Earth, and heliosphere as a coupled system; understanding the system requires measurable progress toward achieving major goals in each subdiscipline during the decade; and a successful program requires an effective mix of all program elements—theory, modeling, analysis, and technological innovation, as well as large, medium, and small missions and facilities. The survey committee, working in concert with panel chairs, developed four key science goals and a “discipline vision” that would help scientists to understand our home in the solar system, to predict the changing space environment and its societal impact, and to explore space to reveal universal physical processes.

_______________

18 NRC, Solar and Space Physics, 2013, p. 4.

19 NRC, Lessons Learned in Decadal Planning in Space Science, 2013, p. 8.

20 NRC, Earth Science and Applications from Space, 2007, p. 7.

21 NRC, Earth Science and Applications from Space, 2007, p. 7.

22 NRC, Earth Science and Applications from Space, 2007, pp. 8-9.

Helio2013 solicited white papers on science topics and questions, but not for mission concepts. Nearly 300 white papers were received, and these—together with the relevant NASA roadmaps—were given to three panels organized by science theme (see Box 1.7). The panels developed detailed scientific “imperatives” that were then traced into concepts for reference missions. Eventually, the survey committee requested each of the three panels to identify its highest-ranked mission concepts for additional study by a design team.23 Prioritization criteria included scientific merit, relevance to societal issues, technical readiness, and timing relative to the solar cycle or other missions.

The survey committee integrated scientific inputs, assessments, and priorities from the panels to provide an overall prioritization for new ground- and space-based initiatives. After completing the prolonged program that was already under way, the top recommendations called for modest investment in a number of innovative and effective scientific research activities called the “Diversify, Realize, Integrate, Venture, Educate” (DRIVE) initiative, followed by an increase in the cadence of competitively selected Explorer missions. Prioritization of new initiatives was heavily influenced by the Aerospace Corporation’s CATE assessment (described below), because only a few viable choices were identified.

Planetary Science

Planetary2011 identified three priority themes for the coming decade that crosscut the planetary sciences:

• Building new worlds—understanding solar system beginnings;

• Planetary habitats—searching for the requirements of life; and

• Workings of solar systems—revealing planetary processes through time.

Planetary2011 used four criteria for selecting and prioritizing missions:

• Science return per dollar;

• Programmatic balance—striving to achieve an appropriate balance among mission targets across the solar system and an appropriate mix of small, medium, and large missions;

• Technological readiness; and

• Availability of trajectory opportunities within the 2013 to 2022 time period.

Although planetary science is “destination oriented,” priorities for spacecraft missions to the Moon, Mars, and other solar system bodies were treated in a unified manner with no predetermined “set asides” for specific bodies,24 a major departure from the 2003 planetary science decadal survey.25

As described at the 2012 workshop, Planetary2011 used a multistep process to develop priorities for both science goals and reference missions.26 This involved continuous communication and feedback between the panels and the survey committee. The panels developed science questions based on input received from external sources (such as white papers generated by individuals and like-minded members of the relevant scientific communities), plus internal deliberations. The survey committee then integrated the science questions from across the panels. Next, the panels developed concepts for reference missions to address these science questions. Selected mission concepts were forwarded from the panels, via the survey committee, to leading mission design centers to assess their technical feasibility. Each center-based, mission-concept design team included at least one panel member who acted as the “science champion”—that is, the panel member charged to advocate on behalf of the mission’s science goals. The individual panels used the results of the concept design studies to inform their ranking of the most promising mission concepts.27

_______________

23 NRC, Lessons Learned in Decadal Planning in Space Science, 2013, p. 37.

24 NRC, Vision and Voyages, 2011, pp. 9-10.

25 NRC, New Frontiers in the Solar System: An Integrated Exploration Strategy, The National Academies Press, Washington, D.C., 2003.

26 NRC, Lessons Learned in Decadal Planning in Space Science, 2013.

Planetary2011 finalized a set of recommended missions intended to achieve the highest-priority science identified by the planetary science community and the panels within the projected budget resources.28

Astronomy and Astrophysics

Astro2010 used a model for prioritization that separated exploration of science goals from identification and ranking of concepts for missions, facilities, and associated activities. The survey committee’s deliberations were informed by five SFPs and four PPPs. Because the two types of panels worked sequentially, there was minimal cross talk between them during their deliberations.29 The decadal survey committee accepted community input using mechanisms similar to those of other disciplines, including a series of requests for information (RFIs) addressed to the community. Once key scientific priorities were defined, the SFPs were disbanded, and their goals were passed on to the PPPs, which were charged with identifying and evaluating missions, facilities, and supporting activities, as described in the RFIs, that could make progress on the science priorities. This “science first” structure was intended to avoid “picking specific science goals because they were what a preselected mission was good at doing.”30

An important aspect of Astro2010—highlighted at the 2012 workshop—was that the SFPs and PPPs were supplemented by six informal (i.e., not appointed by the Academies) infrastructure study groups addressing a broad range of topics, including education and public outreach, international and private partnerships, computation and data handling, and the demographics of the astronomical community.31 This combination of formal panels and informal study groups worked well because it provided ample mechanisms for community input with low barriers for participation.

Astro2010 selected and ranked the highest-priority science objectives, as informed by the SFPs, and the concepts for missions and facilities to meet these objectives, from the PPPs. The survey committee’s recommended program included not only missions and facilities but also programs at NSF (mid-scale innovations program) and NASA (Explorer Program augmentation) that are competitively selected. The prioritized science goals identified by Astro2010 have been used by proposers to validate the importance of their projects, drawing on the independent science prioritization made by the survey.

The Astro2010 list of prioritized missions, facilities, programs, and supporting activities was intended to guide NASA, NSF, and the Department of Energy in implementing the recommended program. The decadal survey report emphasized that optimizing the implementation of the survey’s decadal aspirations is the responsibility of agency managers.32

_______________

27 NRC, Lessons Learned in Decadal Planning in Space Science, 2013, p. 37.

28 NRC, Vision and Voyages, 2011, p. 26.

29 NRC, New Worlds, New Horizons, 2010, pp. xvii-xx.

30 NRC, Lessons Learned in Decadal Planning in Space Science, 2013, p. 36.

31 NRC, Lessons Learned in Decadal Planning in Space Science, 2013, pp. 7-8.

32 NRC, New Worlds, New Horizons, 2010, p. 4.

Synergies Across Disciplines and the Changing Face of Research

Decadal survey committees have a responsibility to look broadly over the discipline priorities identified by supporting panels for synergies and connections. Astro2010 recognized that “the sociology of astronomy has also changed [resulting in a field today that] is more collaborative, more international, and more interdisciplinary.”33 For large ground-based facilities, the committee highlighted the synergy between the Large Synoptic Survey Telescope (LSST) and the Giant Segmented Mirror Telescope, noting that each “would be greatly enhanced by the existence of the other, and the omission of either would be a significant loss of scientific capability.”34 Astro2010 also stressed the need for both NSF’s LSST and NASA’s James Webb Space Telescope (JWST) to advance key, discipline-wide, science questions. As noted at the 2012 workshop, an “astronomy decadal survey that addressed only science questions and leading implementation issues for NASA would give short shrift to the interests of NSF,”35 and concomitantly to other agencies and stakeholders.

Similarly, NSF’s Daniel K. Inouye Solar Telescope (DKIST, formerly the Advanced Technology Solar Telescope) is recognized as a key strategic element of the Heliophysics System Observatory that supports NASA’s Heliophysics program and all of solar and space physics. Helio2013 repeatedly emphasized that the ground-based assets of NSF “provide essential global synoptic perspective and complement space-based measurements of the solar and space physics system” to enable frontier research.36 The survey also recommended the new, crosscutting DRIVE initiative as a focus for low-cost, high-impact ways to accomplish the science goals of the survey and integrate the recommendations across the agencies. The prioritizations of Helio2013 reflect the economic environment; they were made in the context of recommendations from other science areas and were intended to address operational needs as well. However, as noted at the 2012 workshop, decadal surveys should be mindful of “the principle agency and organizations that will receive the recommendations and define the scope of their responsibilities.”37

Interactions between discipline panels may also help identify initial synergies and potential priorities for science and missions/facilities that cut across the discipline. While it is exclusively the job of the survey committee to integrate panel priorities into a cohesive strategic plan, cooperation among panels may lead to more effective and/or affordable implementation of the program. A critique voiced at the workshop was that certain surveys adopted a posture that inhibited such conversations and led to narrow “stove-piping” of mission concepts,38 a situation that might be avoided through advance planning for inter-panel and panel-committee interaction.

A continual theme in the 2012 workshop discourse was that there were distinct differences in “how each decadal [survey] evaluated missions and science objectives for prioritization.”39 Agencies recognize that each decadal survey has adopted a discipline-specific strategy for developing a prioritized, executable science program. Questions of how to balance the prioritization of science and missions were discussed at length throughout the workshop.40 With the exception of Astro2010, decadal surveys have used panels that prioritized science and missions together, and in Planetary2011, in particular, this process was augmented by contemporaneous involvement by the survey committee itself. Table 2.1 lists some of the criteria used by survey committees and panels, as articulated during the 2012 workshop discussions.41

Different disciplines prioritize using different approaches and techniques. Common across disciplines is a tendency for survey committees to provide a broad set of prioritization criteria to guide the work of the panels, which individually have a sharper focus.

_______________

33 NRC, New Worlds, New Horizons, 2010, p. 80.

34 NRC, New Worlds, New Horizons, 2010, p. 95.

35 NRC, Lessons Learned in Decadal Planning in Space Science, 2013, p. 41.

36 NRC, Solar and Space Physics, 2013, p. 5.

37 NRC, Lessons Learned in Decadal Planning in Space Science, 2013, p. 25.

38 NRC, Lessons Learned in Decadal Planning in Space Science, 2013, p. 20.

39 NRC, Lessons Learned in Decadal Planning in Space Science, 2013, p. 16.

40 NRC, Lessons Learned in Decadal Planning in Space Science, 2013, p. 16.

41 NRC, Lessons Learned in Decadal Planning in Space Science, 2013, p. 37.

Lesson Learned: Although science goals may not be explicitly ranked, science priorities drive the rankings of missions, facilities, programs, and other activities.

Lesson Learned: Experience indicates that survey prioritization puts a high value on programmatic balance across missions and facilities and is also attentive to the need for long-term continuity in certain observational data.

Lesson Learned: Technical readiness and affordability can often influence science prioritization of missions and facilities. High-priority science may be deferred if needed technology is immature and there is a significant risk of cost growth that would affect both scope and schedule.

Survey prioritization implicitly recognizes the importance of strengthening the nation’s workforce in space sciences and engineering. Workshop participants noted, “The space science community and NASA need to maintain core competencies within the workforce on how to carry out large-scale missions.”42 The science programs carried out by agencies crucially depend on the recently graduated scientists and engineers who will maintain and improve the mature technologies and pioneer new ones. A long hiatus in one part of a discipline can lead to decay in critical scientific and technological expertise. All disciplines need the opportunity to make progress during a decade in order to preserve their capability to create the next generation of instrumentation.

Workshop participants noted that for Earth2007 and Helio2013, the implementation of the decadal survey’s recommended program relied on the cooperation of multiple agencies, and they expressed great concern that science priorities can be compromised if components are lost or data go uncollected.

In addition, tightly specified mission recommendations can be a problem for NASA in developing implementation plans. NASA has internal constraints (NASA centers, workforce issues, etc.) and is subject to congressional and executive branch decisions. Furthermore, new technologies and innovative approaches may produce a more effective mission, as can international and interagency cooperation. Therefore, a potential option for decadal surveys is to choose and describe reference missions (see the section “Suggested Changes in the Prioritization Process” below) that are judged capable of carrying out the science, while encouraging agencies to follow, first and foremost, the science objectives of the prioritized missions.

Lesson Learned: The potential for international collaboration, interagency cooperation, and inclusion of the private sector impacts science and mission prioritization across all disciplines.

As noted at the 2012 workshop, comprehensive sampling of community opinions—using the best available practices for gathering and curating community input (e.g., solicitation of white papers, town hall meetings, committee and panel meetings at major research centers, and the use of broadly representative panels)—has been woven into the fabric of all decadal surveys.43 Digesting and incorporating this input is an essential part of assessing community consensus and of initiating the survey’s prioritization process.

Best Practice: Establishing a community-wide consensus is arguably the most important goal of a decadal survey, and community “buy-in” to the decadal survey’s process is crucial. Community trust in the decadal survey process depends on a clear understanding of the prioritization methodology used by the survey committee and its supporting panels.

Challenges to Science and Mission Prioritization Processes

Clarity of task from sponsoring agencies and substantial involvement of the community are essential components in the process. The success of future decadal surveys lies in part with the following:

_______________

42 NRC, Lessons Learned in Decadal Planning in Space Science, 2013, p. 59.

43 NRC, Lessons Learned in Decadal Planning in Space Science, 2013, pp. 5, 8, 17, 20, 26, 34, 36-37, 49, and 75.

• Clarifying that discussions and recommendations by the panels are an important element in the “traceability” of the survey process, but only the decadal survey committee’s recommendations “count”;

• Recognizing that “buy-in” to the decadal process by all participating agencies (and/or divisions within agencies, as appropriate) is essential, because carrying out national programs can require more than one agency;

• Minimizing the uncertainty in budgetary planning envelopes that decadal surveys use to construct a recommended program;

• Executing “CATE-like” assessment of affordability and technical difficulty; and

• Communicating effectively with the community.

DECADAL SURVEY PRIORITIES AS INPUTS TO AGENCY GOALS AND OBJECTIVES

The science goals and objectives developed by each of the decadal surveys are an integral part of NASA’s strategic planning process. Other agencies also use decadal surveys as part of their strategic planning process to varying degrees. While NASA must consider broader issues beyond the scope of the decadal surveys, such as facilitation of and synergy with the human space program, there exists a clear traceability between decadal recommendations and NASA strategic goals and objectives and the science goals of the SMD divisions, as delineated in Appendix B of NASA’s 2014 Science Plan.44 Clearly, decadal survey science goals support NASA’s strategic goals to (1) expand the frontiers of knowledge, capability, and opportunity in space (Heliophysics, Planetary Science, Astrophysics, and Earth Science divisions) and (2) advance understanding of Earth and develop technologies to improve the quality of life on our home planet (Earth Science and Heliophysics divisions). Not surprisingly, a strong synergy remains between the science goals of each SMD division and the science goals of each decadal survey discipline.

SUGGESTED CHANGES IN THE PRIORITIZATION PROCESS

“Science Only” Decadal Surveys?

In a panel discussion at the 2012 workshop, NASA SMD division directors or their representatives suggested that decadal surveys focus on science prioritization, with less attention to missions, facilities, observing systems, and the means to accomplish them. Their concern was that surveys are becoming ever more prescriptive in their recommendations of specific architectures for missions, perhaps because surveys are trying too hard to optimize the recommended science program in the face of tighter budget constraints. Specific mission architectures can put NASA SMD in a “straitjacket” with respect to evolving capabilities and budgets, the division directors noted, and they may conflict with other programmatic, governmental, and societal priorities that the agency must accommodate while executing the decadal program.

Science priorities evolve slowly: decadal surveys—with their 10-year cadence—show a high level of correlation between the science priorities from one survey to the next. However, an implementation strategy may change over the decade due to new technical capabilities or instability in available budgets. There is some sense, then, to focusing decadal surveys on science, leaving implementation largely in the hands of the agencies.

However, the strong consensus of participants of the 2012 workshop was that prioritizing science alone—without including “missions” that juxtapose “what we want to do” with the critical “what we can do”—is not a good idea and probably not possible. The committee suggests that, in order to attend to the legitimate concerns of NASA SMD, future decadal surveys can choose to describe “reference missions” rather than explicitly recommend specific point designs for direct implementation. Reference missions are implementation architectures that are judged capable of addressing specific science goals. NASA would then have the flexibility it needs to optimize a reference mission and craft an implementation strategy that is more capable and/or more affordable and, thus, satisfy NASA’s broader interests and constraints. Through the stewardship process following a survey, community scientists can work with NASA to rescope, adjust, or innovate to accomplish the survey’s science objectives.

_______________

44 NASA 2014 Science Plan, reprinted in Appendix A.

While this reference mission approach might be suitable for many recommended missions, certain high-profile missions (discussed in Chapter 3) can be mature concepts that have been refined and optimized over years of design studies and may indeed be intended as explicit recommendations for implementation. Decadal survey reports are well advised to explicitly note when they are discussing a reference mission and when they are not. In other words, survey reports need to signal when they are discussing a notional reference mission capable of achieving science goals X, Y, and Z and when they are discussing a specific implementation of a mission that achieves science goals X, Y, and Z. The New Frontiers mission candidates discussed in Planetary2011 are, for example, reference missions.45 The Jupiter Europa Orbiter, discussed in the same document, is a specific implementation of a mission designed to conduct scientific observations of Europa from an orbital vantage point (Figure B.5).46 Successful prioritization of science objectives in a decadal survey requires a clear understanding of the scope, scale, and feasibility of missions that could reach these objectives.

Lesson Learned: It is important that decadal surveys explicitly note which proposed missions are reference missions—i.e., subject to further development—versus those intended as explicit implementation recommendations based on mature and well-refined concepts.

As noted above in the section “Mission Formulation and Development,” decadal surveys can utilize reference missions in their science prioritization processes as “existence proofs”—i.e., demonstrations that spacecraft missions addressing specific science goals are feasible—that allow continued development of missions to best achieve a survey’s science goals and serve the interests of all stakeholders.

A Two-Phase Decadal Survey Process?

The necessity of including mission concepts in the science prioritization process raises the question of whether the science prioritization might be undertaken to some degree before a survey begins. The committee was asked to consider this option for future surveys.

Planetary2011, Earth2007, and Helio2013 all chose a process that specifically prioritizes science and missions together. In contrast, the SFPs of Astro2010 identified high-priority science questions independently of missions, although they stopped short of actually ranking them within or across the subdisciplines. This process put a strain on the schedule for completing Astro2010 within the allotted 2-year period. Adding a “time out” to inform the community of the results of science prioritization, as has been suggested to help proposers of missions and facilities, is, in the committee’s opinion, impractical in terms of schedule and budget. This motivates consideration of what the committee’s statement of task calls a “two-phase approach”—prioritizing the science and then informing the community in a separate process that occurs before the survey begins.

It is clear that a pre-survey process cannot be on the scale of the decadal survey itself, so wide community involvement would take the form of white papers and community meetings that might serve as input to the appropriate standing committee of the Academies, or to a specially tasked committee run by the Academies’ Space Studies Board (SSB), in collaboration with other boards as appropriate. It would be challenging for such a review committee to represent the entire community and to prioritize across the discipline, something achievable only by a survey committee (with broad community representation and the help of panels). The best outcome would likely be a list of the 10 to 20 highest priorities for the different themes of the discipline, weighted equally by elements of the community.

This is, indeed, what resulted from a “science only—no missions considered” process in Astro2010. Five SFPs framed 4 questions each for their subdiscipline, adding 1 or 2 “discovery areas.” The result was a list of 20 questions and 6 discovery areas: there was no attempt to prioritize across these—that job was left for the survey committee, with input from the panels. This crucial next step required consideration of the means to carry out the science—the missions and facilities. It is not obvious that the availability of such a long, unprioritized list would have helped mission proposers to better “tune” to the community’s science goals, whether it came in the middle of the survey or in an organized pre-survey process.

_______________

45 NRC, Vision and Voyages, 2011, pp. 266-268, 243-246, 339, 344-347, and 352.

46 NRC, Vision and Voyages, 2011, pp. 243-246 and 345.

Given the richness of science in these NASA SMD disciplines and the demonstrated difficulty for a group of scientists to prioritize within their subdisciplines, the committee concludes that it is not possible—based on science alone—for any group to prioritize across their discipline. Are missions to Mars more important than visiting the icy moons of Jupiter? Is the discovery of Earth-like planets around nearby stars more interesting than a full understanding of how stars are born or when the first galaxies appeared? Is the heating of the Sun’s corona a more urgent area of inquiry than the effect of the solar wind on Earth’s atmosphere? Considering the capabilities of realistic candidate missions helps decadal survey committees choose which science programs should have the highest priority, based on where the greatest progress can be made. Otherwise, the decadal survey task would be difficult at best.

Updating Science Priorities Before a Survey Begins

An alternative approach would be to exploit the variety of existing advisory committees and regular community activities in the discipline under consideration. The community can be encouraged, and activities structured, to look at the evolution of science goals from the previous survey. The SSB standing committees and the midterm reviews of the decadal surveys could look for new science developments that indicate a shift of priorities in advance of the next survey. For example, evidence for an accelerating universe came to light in the middle of the Astro2000 process; the change in the science priorities of cosmology happened in between surveys.

Society meetings could include public forums to discuss the impact of important discoveries on the next survey. NASA could ask the “AGs” (e.g., the Mars Exploration Program Analysis Group) to contribute to the discussion.47 The process of developing NASA roadmaps leads to development of new mission concepts to address new and topical science questions. To digest all of this, a modestly sized pre-survey science committee, perhaps run by the Academies on behalf of the relevant agencies, could prepare a report that synthesizes, from these activities and its own assessments, which areas of science might be added or promoted (or removed or demoted) among the discipline’s long-term science goals. This report, although stopping short of prioritizing science, would help lay the foundation for the work of the next survey.

Moreover, the period between decadal surveys is an ideal time to work on mission concepts and to bring them up to a minimal standard of development. Similar thinking may apply to issues of international collaboration (see Chapter 4). International activities between surveys, including some sponsored by the agencies, could help prepare for collaborative missions proposed to decadal surveys or to, for example, the European Space Agency’s (ESA’s) process for selecting the medium- and large-class missions in its Cosmic Vision strategic plan.48 The period before a survey begins is, of course, also an opportunity to explore new interagency collaborations. The Astrophysics Division of SMD recently proposed just such an activity to prepare for Astro2020. Using the time between surveys to update the science and formulate possible missions is arguably the best way to achieve the goals of a two-phase process without prolonging the decadal survey process.

Lesson Learned: The community has many means, especially between decadal surveys, to address the evolution of science in the discipline. This forms the basis of a two-phase process without splitting the decadal survey process itself into two.

Best Practice: Agencies, committees of the Academies, community workshops and meetings, and white papers can contribute to pre-survey science priority identification as preparation for, and a valuable contribution to, the next survey. These activities can also spur early development, evaluation, and maturation of concepts for new missions for potential priorities well in advance of the survey itself.

_______________

47 Additional information about MEPAG and the other analysis and assessment groups can be found at Lunar and Planetary Institute, “NASA Advisory, Analysis and Assessment Groups and Resources,” last updated November 18, 2014, http://www.lpi.usra.edu/analysis/.

48 European Space Agency, Cosmic Vision: Space Science for Europe 2015-2025, BR-247, ESA Publications Division, Noordwijk, The Netherlands, 2005, http://www.esa.int/esapub/br/br247/br247.pdf.

AGENCY FEEDBACK DURING THE DECADAL: THE “2-YEAR BLACKOUT PROBLEM”

Generally, decadal survey recommendations are developed using a budget profile for the coming decade that is based on current and historical budget trends provided by NASA’s SMD divisions and NSF’s Astronomical Sciences Division (AST). Other federal agencies provide similar budget profiles to the survey committees. While a survey is being formulated, OMB’s formulation of the president’s budget request for the coming year, and of notional budgets for the following 4 years, may change NASA’s previous notional “out-year” budgets. Such changes may result in substantial variance from the budget profile assumed in developing the survey’s recommendations. Often, NASA, NSF, and other agencies cannot share budget (and budget-related planning) information because of OMB embargoes. The net effect can be that a survey’s recommendations are inconsistent with the budget available when the decadal report is released. In addition, because the Academies cannot share the status of the survey’s recommendations while committee deliberations are ongoing, the recommendations cannot be used to communicate the science community’s planning to NASA or other agencies for use in formulating arguments to OMB for future funding.

There are several possible mitigation strategies to this issue, parts of which have already been tested in prior decadal surveys, including the following:

1. Utilize decision rules that can accommodate significant but reasonable deviations from the projected budget. Such decision rules should allow for both increases and decreases in the budget and provide guidance for maintenance of program balance (see Chapter 3). For example, when the budget of NASA’s Planetary Science Division was cut from $1.5 billion to $1.2 billion just as Planetary2011 was released, its decision rules were sufficient to accommodate such cuts and maintain the overall viability of the decadal survey recommendations.

2. Keep the duration of the decadal survey process as short as reasonably possible so that the recommended program aligns as closely as possible with the current fiscal environment. The 18 to 24 months required to complete the many activities of a survey committee, including input from the community, panel meetings, and survey deliberation, is unlikely to be shortened significantly. However, some pre-work with the scientific community and its representative groups before the official start of a survey could help the survey finish in the minimum possible time. Such pre-work could reduce the pressure on a survey to define and refine concepts for study very early in its discussions. A recent policy announcement by the director of NASA’s Astrophysics Division aims to do just this.49

3. Agencies can continue to engage the Academies’ standing committees while the decadal survey is in process, even if at a reduced frequency. OMB will not release budget information to the Academies prior to official release of the president’s budget, nor will the Academies share the deliberations while the survey is in progress; however, there are many other science-related issues that can and should be productively discussed during the approximately 2-year period of the decadal survey’s preparation so that both the agencies and communities remain situationally aware. This also enables the agencies to request and receive advice in the interim, should it be needed to respond to emergent and/or time-sensitive opportunities. Continued engagement during the decadal survey preparation period ensures the advisory infrastructure remains in place to effectively steward the new decadal immediately upon its release.

Although the “2-year blackout problem” creates a degree of uncertainty for the implementation of the decadal surveys, the existing process is designed to accommodate modest levels of change to ensure that the decadal survey recommendations remain appropriate and valid for the decade in which they are to be implemented.

_______________

49 For details see “Planning for the 2020 Decadal Survey: An Astrophysics Division White Paper,” January 4, 2015, a NASA Astrophysics Division Strategic Planning Document available at http://science.nasa.gov/astrophysics/documents/.

Sidestepping the Budgetary Blackout Problem?

The committee thinks there is another approach that could minimize the effect of this blackout period. Decadal surveys are concerned with budgets over the next 10 years, so updates on the next one or two budgets are not central to the task. Rather, it is the projected out-year budgets that are important, and they are, by nature, even more uncertain. A different approach to setting anticipated funding levels, one separated from year-to-year expectations and fulfillment, would be to use the previous budgets of the particular NASA or NSF division, averaged over some number of years preceding the survey, as a “baseline” budget for the survey program. A flat budget, perhaps with a yearly adjustment for inflation, could be the starting point for planning a program, with possible “up” and “down” adjustments assumed to provide a budget range that would envelop future fluctuations in the division budgets.50
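The baseline idea above amounts to simple arithmetic. The Python sketch below is one hypothetical reading of it, not a method the committee specifies: it averages the most recent years of a division's budget into a flat baseline, applies an assumed yearly inflation adjustment, and brackets that nominal line with symmetric "down" and "up" envelopes. The function name `baseline_envelope`, the averaging window, the 2 percent inflation rate, the 10 percent swing, and the sample budget history are all illustrative assumptions.

```python
from statistics import mean


def baseline_envelope(past_budgets, window=5, years=10, inflation=0.02, swing=0.10):
    """Return (year, down, nominal, up) rows for a hypothetical planning envelope.

    past_budgets: historical division budgets, oldest first (any currency unit).
    window: how many of the most recent years to average into the baseline.
    inflation: assumed yearly adjustment applied to the flat baseline.
    swing: assumed fractional "up"/"down" adjustment around the nominal line.
    """
    if window < 1 or len(past_budgets) < window:
        raise ValueError("need at least `window` years of budget history")
    if not 0 <= swing < 1:
        raise ValueError("swing must be a fraction in [0, 1)")

    # Flat baseline: the average of the most recent `window` budgets.
    baseline = mean(past_budgets[-window:])

    rows = []
    for year in range(years):
        # Grow the flat baseline by the assumed inflation rate each year.
        nominal = baseline * (1 + inflation) ** year
        # Bracket the nominal line with symmetric down/up envelopes.
        rows.append((year, nominal * (1 - swing), nominal, nominal * (1 + swing)))
    return rows


if __name__ == "__main__":
    # Illustrative numbers only (billions of dollars), not real division budgets.
    history = [1.45, 1.50, 1.40, 1.35, 1.30, 1.25]
    for year, down, nominal, up in baseline_envelope(history, window=5, years=3):
        print(f"year {year}: down {down:.3f}  nominal {nominal:.3f}  up {up:.3f}")
```

A symmetric percentage swing is the simplest choice; a survey could just as well assume asymmetric up and down adjustments, or size them from the historical variability of the division's budgets.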

COST AND TECHNICAL EVALUATION: THE CATE PROCESS

In the 2008 NASA Authorization Act, Congress mandated a “lifecycle cost and technical readiness” review of proposed NASA projects.51 Such reviews have become an important part of the decadal survey process. This section provides a detailed account of how this process has been used and how it has evolved as a critical tool in constructing an executable and affordable program for the highest-priority science.

NASA has a proud history of executing space missions in all four divisions of its Science Mission Directorate. These missions are often technically challenging and highly innovative, and they have consistently delivered scientific discoveries, fresh understanding of phenomena, and durable measurements that go far beyond their original scope. There have been few failures in launch, deployment, instrumentation, operation, or communication, and few cases in which the scientific challenge was underestimated.

Yet space science is an intrinsically risky and expensive business. In a few well-publicized cases, mission costs have risen by large factors, usually because the technical challenge or true cost was underestimated, and/or because budget cuts led to extended construction profiles that substantially increased costs and delayed launches. Contingency for such occurrences has rarely been part of the planning, because those involved face an insidious disincentive to include it in their proposals, especially for the most ambitious missions. A number of cost overruns, most notably those of JWST and the Mars Science Laboratory’s Curiosity rover, raised the question: does the decadal process need a dimension of technical feasibility and cost evaluation? A 2006 report of the Academies drew the following conclusion:

Major missions in space and Earth science are being executed at costs well in excess of the costs estimated at the time when the missions were recommended in the National Research Council’s decadal surveys for their disciplines. Consequently, the orderly planning process that has served the space and Earth science communities well has been disrupted, and the balance among large, medium, and small missions has been difficult to maintain.52

In response to this concern, the report made the following recommendation:

NASA should undertake independent, systematic, and comprehensive evaluations of the cost-to-complete of each of its space and Earth science missions that are under development, for the purpose of determining the adequacy of budget and schedule.53

An extended discussion of cost estimates and technology readiness of candidate missions took place at the Academies’ 2006 workshop “Decadal Science Strategy Surveys.” Some workshop participants agreed that cost and technology readiness evaluations conducted independently of NASA estimates add value to decadal surveys. It was also suggested that uniform cost-estimating methods should be used within a given survey to facilitate cost comparisons among initiatives.54

_______________

50 Colleen Hartman presented a version of this idea at the 2012 workshop. In discussing the difficulties of decadal program planning in the context of budget uncertainties, she imagined a “three worlds” approach, using the colorful terms “heavenly” or “evil” to describe the better and worse budgets that the survey might plan for, above and below the “nominal” expectations of a flat budget.

51 Congress of the United States, National Aeronautics and Space Administration Authorization Act of 2008, Public Law 110-422, Section 1104b, October 15, 2008.

52 NRC, An Assessment of Balance in NASA’s Science Programs, The National Academies Press, Washington, D.C., 2006, p. 32.

53 NRC, An Assessment of Balance in NASA’s Science Programs, 2006, p. 33.

With this guidance in hand, and prompted by congressional language inserted in the formulation of the fiscal year (FY) 2007 budget, NASA and DOE asked the Academies to prepare a report reviewing NASA’s Beyond Einstein Program.55 Specifically, the report was to assess the five Beyond Einstein missions and recommend a first mission for development and launch, utilizing a program funding “wedge” that would start in 2009. The report assessed five mission areas using criteria that addressed both potential scientific impact and technical readiness. The study committee considered the realism of preliminary technology and management plans as well as cost estimates. Criteria used by the committee included plans for the maturity of critical mission technology, technical performance margins, schedule margins, risk mitigation plans, and estimated costs versus independent probable cost estimates.

Coming out of the Beyond Einstein Program Advisory Committee and the reports and workshops noted above, it was consistently observed that previous decadal surveys significantly underappreciated mission costs and difficulty. This was noticed by Congress, and in 2008, the NASA Authorization Act, codifying the decadal surveys, mandated that the Academies “include independent estimates of the life cycle costs and technical readiness of missions assessed in the decadal survey wherever possible.”56 In response to this congressional mandate, the Academies chose an independent contractor, the Aerospace Corporation, to assist in this task. Earth2007 was already complete at that time, so starting with Astro2010, the Academies devised a cost and technical evaluation (CATE) process in partnership with the Aerospace Corporation. The intention was to pay particular attention to assessing the risks associated with proposed missions. Experience gained in Astro2010 was used to improve CATE in the subsequent planetary science and heliophysics surveys.57

Purpose of CATE