3

A Decision Framework for NASA Earth Science Continuity Measurements

NASA Earth Science Division (ESD) has established evaluation processes for proposals submitted in response to NASA Research Announcements (NRAs) and Earth Venture Instrument and mission Announcements of Opportunity (AOs). For both NRAs and AOs, the NASA evaluation relies on subjective ratings by experts of a set of evaluation factors. As a complement to the NRA and AO processes, this chapter describes a methodology for quantifying the value of extending the duration of a particular space-borne measurement, a necessary first step in developing a framework for prioritizing among similar competing continuity measurements. The committee’s framework, which is proposed for consideration by NASA ESD, uses a simple scoring system to characterize technical and managerial options. Chapter 4 provides examples of applications of the methodology.

Within NASA, choosing among Earth science continuity measurements competing for funding naturally involves weighing risks and benefits under uncertain technical and financial conditions. Development of approaches to rational decision-making under such conditions has a rich history of academic inquiry and provides important insights into the decision process (see Box 3.1). As a first step toward a NASA decision-making framework, the committee focused on methods to evaluate measurement choices. This evaluation step provides the critical foundation for approaches to measurement selection, a second step not covered in this report.

The required elements for a useful decision-making framework are (1) a set of key characteristics suitable for discriminating among measurements; (2) a method for evaluating the measurement characteristics; and (3) a method for rating a measurement based on evaluation of its characteristics (described in Sections 3.2 and 3.3, below). In recognition of the challenges of measurement selection, the committee has sought to avoid being overly prescriptive with inflexible schemes that may also incorrectly weight, or even omit, characteristics. Instead, the committee emphasized defining a framework that is firmly founded on a small, robust set of key characteristics, but retains substantial methodological flexibility with regard to the evaluation of characteristics and rating of measurements.

Framework development follows from the definition of measurement continuity given in Chapter 2. According to that definition, continuity exists only when the quality of the measurement is maintained over a required time period and spatial domain. Maintaining quality over an extended period necessarily incurs cost. Accordingly, the affordability metric (A) of achieving measurement continuity will clearly be a prime concern in NASA’s decision making. Of similar importance, however, is the expected scientific or societal benefit metric (B) of the considered measurement. Just as economic cost-benefit analysis attempts to summarize the value of funding a particular project or endeavor, a value-centered framework, summarized by a value metric (V), can effectively distinguish among the relevant Earth measurements, as follows:

V = f(B, A)

BOX 3.1

Decision Theory and the Committee’s Framework

In its effort to develop a decision framework for NASA continuity measurements, the committee benefited from the insights of past theoretical inquiry into the decision process and practical application of decision theories to administrative and business decision-making. As articulated in the works of Simon (1977), Brim et al. (1962), and Mintzberg et al. (1976), an organizational decision process can be divided into a set of distinct phases that generally proceed from problem identification, to designing solutions, to evaluating solutions, to choosing between solutions. In the following sections of Chapter 3, the reader will recognize within the committee’s recommended framework a first step that is focused on problem identification and subsequent steps that focus on evaluating proposed solutions. The final step of choosing between solutions has been an important focus of classical decision theory (Mintzberg et al., 1976). Proposed criteria for decision making under uncertainty or ignorance1 fall broadly into high-payoff/high-risk and acceptable-payoff/risk-averse categories. The choice of a particular selection strategy for use with the recommended continuity measurement evaluation approaches is, in the committee’s opinion, best left to NASA decision makers. Accordingly, no attempt is made to apply such selection strategies in this report.

______________

1 Peterson explains that in decision theory, everyday terms such as risk, ignorance, and uncertainty are used as technical terms with precise meanings. In decisions under risk, the decision maker knows the probability of the possible outcomes, whereas in decisions under ignorance, the probabilities are either unknown or non-existent. Uncertainty is either used as a synonym for ignorance or as a broader term referring to both risk and ignorance (Peterson, 2009, pp. 5-6).

Finding: A value-based approach can enable more objective decisions regarding continuity measurements.

Recommendation: NASA’s Earth Science Division should establish a value-based decision approach that includes clear evaluation methods for the recommended framework characteristics and well-defined summary methods leading to a value assessment.
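The report deliberately leaves the form of the value function open. As a minimal sketch of how a value relationship between V, B, and A might be computed and used for ranking, the Python example below assumes, purely for illustration, that benefit B is already a dimensionless score between 0 and 1 and that affordability A is the annual cost expressed as a fraction of the relevant NASA budget. The ratio form in `benefit_per_budget_fraction` is a hypothetical choice, not the committee's, so the function is passed in as a parameter and can be swapped out. The candidate names and numbers are invented.

```python
from dataclasses import dataclass
from typing import Callable


@dataclass(frozen=True)
class Candidate:
    """Hypothetical continuity measurement with precomputed framework metrics."""
    name: str
    benefit: float        # B: assumed dimensionless score in [0, 1]
    affordability: float  # A: assumed annual cost as a fraction of the budget


def benefit_per_budget_fraction(benefit: float, affordability: float) -> float:
    """Illustrative value function (an assumption): benefit per unit of budget fraction."""
    if affordability <= 0:
        raise ValueError("affordability (cost fraction) must be positive")
    return benefit / affordability


def rank_by_value(
    candidates: list[Candidate],
    value_fn: Callable[[float, float], float] = benefit_per_budget_fraction,
) -> list[tuple[str, float]]:
    """Return (name, V) pairs sorted from highest to lowest value."""
    scored = [(c.name, value_fn(c.benefit, c.affordability)) for c in candidates]
    return sorted(scored, key=lambda pair: pair[1], reverse=True)


if __name__ == "__main__":
    candidates = [
        Candidate("measurement X", benefit=0.60, affordability=0.020),
        Candidate("measurement Y", benefit=0.45, affordability=0.010),
    ]
    for name, value in rank_by_value(candidates):
        print(f"{name}: V = {value:.1f}")
```

In this invented example, measurement Y ranks ahead of X despite its lower benefit because it costs half as much; a different choice of `value_fn` (for example, one that also imposes a minimum benefit threshold) could reverse that ordering, which is why the sketch keeps the function pluggable.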

3.1.1 Quantified Earth Science Objectives

A quantitative determination of the value of a measurement can only be accomplished in the context of a quantifiable objective. Accordingly, the starting point for the committee’s recommended framework is identification of a relatively small set (i.e., tens) of quantified objectives that are key to addressing the highest-priority, societally relevant scientific goals.

The committee envisions NASA ESD establishing a small set of quantified objectives from the same sources that inform the development of its program plan, notably, the scientific community-consensus priorities expressed in National Research Council (NRC) decadal surveys1 and guidance from the executive and congressional branches. Congressionally mandated midterm assessments of the decadal survey afford an additional opportunity for community evaluation of the objectives. Continuity of an established data set will compete with proposed new measurements as well as multi-measurement “intensives,” campaigns that may be mounted to, for example, gain a detailed understanding of a particular climate process. The latter proposals should be defined through an objective that could then be evaluated via the committee’s proposed framework or whatever similar quantitative, open, and objective evaluation ESD establishes for continuity measurements.

______________

1 The 2007 Earth science decadal survey (NRC, 2007) highlighted the following emerging regional and global challenges, each of which can be mapped to particular quantified objectives: changing ice sheets and sea level; large-scale and persistent shifts in precipitation and water availability; transcontinental air pollution; shifts in ecosystem structure and function in response to climate change; human health and climate; and extreme events including severe storms, heat waves, earthquakes, and volcanic eruptions.

Setting as goals the deeper understanding of the science underlying each of these decadal survey-identified challenges, the committee envisions NASA being able to identify a finite number of quantified objectives for each goal, as well as identifying the highest priority among them. The objectives should provide critical leverage against the identified goals; such objectives will typically focus on challenges with the greatest uncertainty. Objectives may address, for example, causal attribution, process connections among key geophysical variables, or future projections. As implied by its name, it is essential that an objective be framed quantitatively so that the degree of contribution of a single measurement, or set of measurements, can be evaluated for that objective. Representative examples of quantified objectives for likely important global change science goals are given in Box 3.2.

Finding: The starting point for a framework that discriminates among competing continuity-relevant measurements is the identification of quantified science objectives.

Recommendation: Proposed space-based continuity measurements should be evaluated in the context of the quantified science objectives that they are addressing.

As stated in Chapter 1, the committee chose to illustrate the framework with science objectives and not societal-benefit objectives, primarily because of the perceived difficulty in adequately comparing large numbers of possible applications. However, the recommended continuity framework is, in principle, applicable to cases where the quantified objective in Earth science is replaced by a quantified objective in Earth applications. A methodology for identifying and assessing such objectives would enable the use of the framework for prioritizing measurements with respect to societal-benefit applications.2

Finding: Quantified objectives in Earth applications can also be a starting point for the recommended framework, if suitably developed.

Recommendation: NASA should initiate studies to identify and assess quantified objectives in Earth applications related to high-priority, societal-benefit areas.

3.2 FRAMEWORK CHARACTERISTIC: BENEFIT3

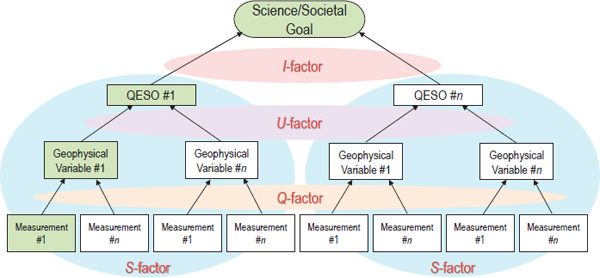

Through analysis of the continuity examples given in Chapter 2, the committee has identified four key characteristics to define the benefit metric (B) of a measurement proposed in pursuit of a quantified objective:

- The scientific importance of achieving an objective (importance I),

- The utility of a geophysical variable record for achieving an objective (utility U),

- The quality of a measurement for providing the desired geophysical variable record (quality Q), and

- The success probability of achieving the measurement and its associated geophysical variable record (success probability S).

______________

2 For an example of how societal applications might be incorporated into a value framework, see Pellec-Dairon (2012).

3 In this report, the term “benefit” is used in relationship to a specific measurement, not the scientific goal per se. Accordingly, as stated above, a measurement is seen as having benefit with respect to achieving a particular quantified objective.

BOX 3.2

Quantified Objectives

The committee’s proposed quantitative decision framework is organized around the evaluation of candidate measurements and their contributions to a particular quantified objective(s) in Earth science. A well-formulated quantified objective is

- Directly relevant to achieving an overarching science goal of NASA’s Earth Science Division;

- Presented in such a way that the measurements, their characteristics (spatial, temporal resolution) and their calibration (uncertainty and repeatability), and other requirements are traceable to the overarching science goal; and

- Expressed in a way that allows an analytical assessment of the importance of the objective to an Earth science goal and the utility of the targeted geophysical variable(s) for meeting the science objective.

The following are sample quantified objectives for continuity measurements in Earth system science. It is important to recognize that this list is meant for illustration purposes only; it is not a complete list, and the entries are in no particular order.

1. Narrow the Intergovernmental Panel on Climate Change Fifth Assessment (IPCC AR5) uncertainty in equilibrium climate sensitivity (ECS) (1.5 to 6°C at 90% confidence) by a factor of 2. ECS is defined as the long-term global temperature change for a radiative forcing equal to a doubling of carbon dioxide (Myhre et al., 2013). Uncertainty in climate sensitivity is one of the major sources of uncertainty in future economic impacts of climate change (Myhre et al., 2013; SCC, 2010), and for a given forcing, most climate change impacts scale with climate sensitivity.

2. Detect decadal change in the effective climate radiative forcing (ERF) to better than 0.05 W m⁻² (1σ). To understand the decade-to-decade warming as observed, it is critical to know the ERF for that decade, and particularly how it has changed compared to the previous decade. The recent slowdown in the rate of increase in global mean surface air temperature (see Flato et al., 2013, Box 9.2) has raised questions in the public/policy arena about the scientific understanding of climate change.

3. Determine the rate of global mean sea level rise to ±1 mm per year per decade (1σ). Sea level is increasing, rising at an average rate of 2.0 mm per year between 1970 and 2010. The rate estimated for the period of 1993-2010 increased to 3.2 mm per year (Church et al., 2013). From these estimates, the acceleration of the rate of sea level rise is about 1 mm per year per decade. We must be able to determine the current acceleration of sea level at this level with a high degree of confidence to make timely projections.

4. Identify the land carbon sink and quantify this globally to ±1.0 Pg C per year aggregating from the 1° × 1° scale. Currently, the atmospheric O₂/N₂ ratio and the change in atmospheric δ¹³C indicate a global land carbon sink of 2.9 ± 0.8 Pg C per year (1σ) (Ciais et al., 2013; Le Quéré et al., 2014). Because land vegetation removes one quarter of the carbon emitted to the atmosphere, we must be able to determine the locations of and mechanisms for land carbon uptake. This can be achieved by employing satellite data coupled to numerical process models at the 1° × 1° scale over multiple annual cycles.

5. Determine the change in ocean heat storage within 0.1 W m⁻² per decade (1σ). Over 90 percent of the recent heat from global warming is stored in the ocean (Rhein et al., 2013). Observation of ocean heat storage is key to understanding the heat budget of the planet and thus to predicting future climate. The uptake of heat by the ocean is estimated to be 0.5-1 W m⁻² (Loeb et al., 2012; Trenberth and Fasullo, 2010). Detection of its change by 10-20 percent per decade is essential.

6. Determine changes in ice sheet mass balance within 15 Gt/yr per decade, or 1.5 Gt/yr² (1σ). Ice sheets are losing mass at an accelerating rate of 300 Gt/yr per decade, or 30 Gt/yr². Detecting changes at the 5 percent level is essential for understanding the interactions of ice sheets and climate at the regional level and for improving projections from numerical models.
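The numerical targets in objectives 3, 5, and 6 follow from simple arithmetic on the rates quoted with them, and the short sketch below reproduces those consistency checks. For sea level it assumes, as a simplification not stated in the objective, that each average rate applies at the midpoint of its period, so the acceleration is the rate difference divided by the separation of the two midpoints.

```python
def acceleration_per_decade(rate_1, period_1, rate_2, period_2):
    """Change in a mean rate per decade, assuming each rate applies at its period midpoint."""
    mid_1 = sum(period_1) / 2
    mid_2 = sum(period_2) / 2
    return (rate_2 - rate_1) / (mid_2 - mid_1) * 10


# Objective 3: 2.0 mm/yr over 1970-2010 versus 3.2 mm/yr over 1993-2010.
sea_level_accel = acceleration_per_decade(2.0, (1970, 2010), 3.2, (1993, 2010))

# Objective 5: detecting a 10-20 percent change in an uptake of 0.5-1 W m^-2.
ocean_target_from_upper = 0.10 * 1.0  # 10 percent of the upper estimate
ocean_target_from_lower = 0.20 * 0.5  # 20 percent of the lower estimate

# Objective 6: 300 Gt/yr per decade expressed per year, then 5 percent of it.
ice_accel_per_yr2 = 300 / 10
ice_target_per_yr2 = 0.05 * ice_accel_per_yr2
ice_target_per_decade = ice_target_per_yr2 * 10

print(f"sea level acceleration ~ {sea_level_accel:.2f} mm/yr per decade")
print(f"ocean heat target ~ {ocean_target_from_upper:.2f} to {ocean_target_from_lower:.2f} W m^-2 per decade")
print(f"ice sheet target ~ {ice_target_per_yr2:.1f} Gt/yr^2 = {ice_target_per_decade:.0f} Gt/yr per decade")
```

Both ends of the 10-20 percent range for ocean heat land on the same 0.1 W m⁻² per decade target, and the midpoint assumption yields a sea level acceleration of about 1.04 mm per year per decade, consistent with the "about 1 mm per year per decade" stated in objective 3.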

The relationship between the framework characteristics and a measurement/quantified objective pair is illustrated in Figure 3.1.4 This leads to the following general relationship for the benefit of the measurement in terms of its importance, utility, quality, and success probability:

B = f(I, U, Q, S).
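The report does not fix the form of the benefit function either. One simple possibility, sketched below as an assumption rather than the committee's method, treats each characteristic as a score in [0, 1] and multiplies them. Under that form a near-zero score on any one characteristic (for example, a geophysical variable of little utility to the objective) drives the benefit toward zero, and the success probability S acts directly as the "derating" factor described in the success-probability discussion. The scores in the usage example are hypothetical.

```python
import math


def benefit(importance: float, utility: float, quality: float, success: float) -> float:
    """Hypothetical multiplicative benefit B = I * U * Q * S, each factor in [0, 1]."""
    factors = {
        "importance": importance,
        "utility": utility,
        "quality": quality,
        "success": success,
    }
    for name, value in factors.items():
        if not 0.0 <= value <= 1.0:
            raise ValueError(f"{name} must lie in [0, 1], got {value}")
    return math.prod(factors.values())


if __name__ == "__main__":
    # Hypothetical scores for one measurement/quantified objective pair.
    print(f"B = {benefit(importance=0.9, utility=0.8, quality=0.7, success=0.85):.4f}")
```

A weighted sum is the obvious alternative; one reason to prefer a product in a sketch like this is that a weighted sum would let a high importance score compensate for a measurement that contributes almost nothing to the objective.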

Additional cross-cutting factors potentially impact both benefit and affordability. Examples include the ability to leverage other measurement opportunities in pursuit of the science objective and the resilience of a geophysical variable record to unexpected degradation (or gaps) in the measurement quality. The definitions of the four characteristics of benefit are given in the following subsections, where the relationships between these characteristics and value are further explored.

3.2.1 Benefit: Importance

The importance of a continuity measurement ultimately relates to the importance that NASA and the scientific community attach to the science goal that the measurement addresses. Within the framework, importance is directly related to the scientific or societal benefit of achieving a quantified objective. The primary method for gauging importance is through science community consensus as expressed in documents such as the decadal survey.

Recommendation: NASA, which is anticipated to be a principal sponsor of the next decadal survey in Earth science and applications from space, might task the decadal survey committee with the identification, and possible prioritization, of the quantified Earth science objectives associated with the recommended science goals.

3.2.2 Benefit: Utility

The utility metric gauges the contribution that an intended geophysical variable record makes to a specified quantified science objective. On one end of the utility spectrum are cases where only a single geophysical variable is needed to achieve a quantified objective. On the other end of the spectrum are cases where the considered geophysical variable is but one of many needed for addressing an objective. Over this range, the committee ascribes a higher utility rating to geophysical variables that provide essential contributions to objectives and a lower utility rating to geophysical variables that make indirect/minor contributions.5

It is important to clearly distinguish between the utility and quality characteristics. Utility represents the value of an optimal or full quality measurement to the objective. Quality is an independent factor that represents how well a proposed measurement meets the uncertainty required for the objective. Another way to state this is that utility is the relevance of a full quality measurement to the objective, while quality is the uncertainty of the measurement relative to the objective requirement.

A number of methods can be used to gauge the utility of a given geophysical variable record (see Chapter 4 for examples). For instance, Observing System Simulation Experiments (OSSEs), while not yet sufficiently mature to be used as formal tools for most objectives, can provide important insights on the utility of geophysical variable records.6 In particular, OSSE analyses performed on the impacts of various geophysical variables for achieving an objective can greatly inform a relative utility rating of the geophysical variables.

Utility can also be gauged by the relative uncertainty of different components of the quantified objective, with a higher utility rating being attached to geophysical variables that address the highest-uncertainty objective components. Examples of this approach would include the very different levels of uncertainty for feedback components of climate sensitivity or components of anthropogenic radiative forcing (see Section 4.1 and Appendix C for examples).

______________

4 Note: The extension of the simple single measurement/quantified objective pair framework depicted in Figure 3.1 to a multitude of benefits is discussed below in Section 3.7. Such an extension is conceptually straightforward, although elucidating all possible objectives of interest may be impossible. This problem can be made tractable if the science community can successfully identify the top tier of objectives.

5 The proposed framework will give high value to an ancillary measurement(s) required to achieve a quantified objective.

6 For additional information about OSSEs, see, for example, Masutani et al. (2010).

FIGURE 3.1 A schematic representation of the relationship between benefit metric (B) factors and key measurement-related terms and characteristics defined in this study: importance (I), utility (U), quality (Q), and success probability (S). The shaded areas denote the specific connections between framework factors and the appropriate terms. In particular, the I-factor connects an important science/societal goal with one or more quantified Earth science objectives (QESOs). The U-factor relates the utility of a particular geophysical variable record to achieving a quantified objective. The Q-factor ties together a needed geophysical variable record with the quality of a proposed continuity measurement (and the instrument specific to that measurement). Finally, the S-factor broadly connects, through a probability of success analysis, a quantified objective with a geophysical data record and its associated measurements. Evaluation of benefit is accomplished for specific measurement, geophysical variable, objective, and science goal sets (green boxes illustrate an example set for evaluation).
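One way to make the relative-uncertainty approach to utility concrete is sketched below. It assumes, for illustration only, that the objective's total uncertainty is the quadrature sum of independent component uncertainties, and it assigns each component (and so the geophysical variable that constrains it) a utility weight equal to its share of the total variance. The component names and 1σ values are placeholders in arbitrary units, not values from Section 4.1 or Appendix C.

```python
def variance_share_utility(component_sigmas: dict[str, float]) -> dict[str, float]:
    """Utility weight per component = its variance / total variance (assumes independence)."""
    variances = {name: sigma ** 2 for name, sigma in component_sigmas.items()}
    total = sum(variances.values())
    if total <= 0:
        raise ValueError("at least one component must have nonzero uncertainty")
    return {name: var / total for name, var in variances.items()}


if __name__ == "__main__":
    # Hypothetical 1-sigma uncertainties of three objective components (arbitrary units).
    sigmas = {"component A": 0.40, "component B": 0.20, "component C": 0.10}
    weights = variance_share_utility(sigmas)
    for name, weight in sorted(weights.items(), key=lambda kv: -kv[1]):
        print(f"{name}: utility weight {weight:.2f}")
```

Because the weights scale with variance rather than with 1σ, a component whose uncertainty is twice another's receives four times the weight, which sharpens the preference for variables that address the largest uncertainty terms.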

In the near term, utility may be a more subjective metric given the current limited application of OSSEs for most objectives. In the future, utility metrics can become more quantitative using OSSEs and priorities based on relative uncertainties in addressing the objective. Ultimately, a full Bayesian approach to the quantification of utility is desirable. There is an extensive literature on the use of such approaches for the verification of complex system models such as the climate system (NRC, 2012a). Box 3.3 provides a discussion of the application of a Bayesian approach to the utility and quality characteristics.

Finding: The benefit of a measurement is valued by the degree of contribution that the derived geophysical variable record makes to a targeted quantified objective.

Recommendation: NASA should foster a consistent methodology to evaluate the utility of geophysical variables for achieving quantified science objectives. Such a methodology could also inform the deliberations of the Earth science and applications from space decadal survey committee.

3.2.3 Benefit: Quality

The quality metric plays a decisive role in determining when a measurement should be collected for durations longer than the typical lifetimes of single satellite missions. This metric derives directly from the measurement characteristics discussed in the definition of continuity in Chapter 2: instrument calibration uncertainty, repeatability, continuity (time and space sampling), and data systems and delivery for climate variables (algorithms, reprocessing, and availability). The goal or requirement for the quality metric is based on the objectives discussed in Section 3.3.

BOX 3.3

Bayesian Methodologies for Evaluation of the Framework Characteristics

The committee recommends a blend of qualitative and quantitative approaches to the key characteristics of utility and quality. This recommendation is based on current capabilities and understanding as discussed in Chapter 3. Many, if not most, quantified objectives involve the use of measurements to observe the consequences of Earth system processes, to validate the complex physical models of the Earth system used to predict these processes, and to determine the uncertainty of the predictions of past, current, and future Earth system behavior.

The National Research Council (NRC) report Assessing the Reliability of Complex Models: Mathematical and Statistical Foundations for Verification, Validation, and Uncertainty Quantification (NRC, 2012a; hereafter ARCM) is particularly relevant to the value framework of the current report. ARCM considered a wide range of complex models, from aeronautics to combustion to Earth system models. In most cases, the report found that a Bayesian statistical approach can be used to relate the uncertainty in complex models to uncertainty in observations (quality in the current report) and to the relationship between key model parameters and observations (utility in the current report).

As described in ARCM (p. 61), the Bayesian formalism leverages measurements to provide “a set of so-called posterior probability densities of the parameters, describing updated uncertainty in the model parameters.” The relationship between a set of measurements and the model parameters can be determined using Observing System Simulation Experiments (OSSEs; e.g., Liu et al., 2014; Feldman et al., 2011). Constructing a posterior probability distribution requires a large set of OSSEs using a wide range of Earth system models, such as those in Phase 5 of the Coupled Model Intercomparison Project (CMIP5) (Taylor et al., 2012), as well as a range of uncertain parameterization values explored in Perturbed Physics Ensembles (Murphy et al., 2004; Stainforth et al., 2005; Smith et al., 2009). A large ensemble of Perturbed Physics OSSEs could, in principle, provide a more quantitative estimate of the utility characteristic consistent with the Bayesian formulation above.

A full Bayesian approach to the value characteristics for continuity in this report would be an ideal long-term quantitative strategy, but practical challenges, as discussed in ARCM, may limit its full application. These challenges arise from the large number of parameters and measurements that would be used for many quantified objectives (see examples in the appendixes of this report). Further, Earth system models are expensive to run, and data volumes from hundreds to thousands of full satellite OSSE simulations are at the edge of current computational capabilities. For some objectives, nonlinear changes such as abrupt ocean circulation changes or ice sheet mass loss, as well as very low probability events such as weather extremes, would present additional challenges (NRC, 2012a).

Given these difficulties, the current report can take only a first step toward a Bayesian statistical evaluation for the framework (primarily for the quantified quality and success probability examples in Chapter 4 and the appendixes). As NASA OSSE capability develops, more quantitative utility measures can be added to the framework.
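The simplest instance of the Bayesian update described in Box 3.3 is a single model parameter with a Gaussian prior, constrained by one measurement with Gaussian error through a linear relationship. The sketch below uses that textbook conjugate linear-Gaussian case, not the perturbed-physics OSSE ensembles that ARCM describes, to show how measurement quality (the observation's 1σ uncertainty) controls how far the posterior uncertainty narrows. The `sensitivity` argument is a hypothetical stand-in for the model-to-observation relationship an OSSE would supply, and all numbers are invented.

```python
import math


def gaussian_posterior(prior_mean, prior_sd, obs_value, obs_sd, sensitivity=1.0):
    """Posterior mean and 1-sigma for theta, given y = sensitivity * theta + noise.

    Assumes a Gaussian prior N(prior_mean, prior_sd**2) and Gaussian measurement
    noise N(0, obs_sd**2); this is the standard conjugate linear-Gaussian update.
    """
    prior_precision = 1.0 / prior_sd ** 2
    obs_precision = sensitivity ** 2 / obs_sd ** 2
    post_var = 1.0 / (prior_precision + obs_precision)
    post_mean = post_var * (prior_mean * prior_precision
                            + sensitivity * obs_value / obs_sd ** 2)
    return post_mean, math.sqrt(post_var)


if __name__ == "__main__":
    # Hypothetical prior on a model parameter and a hypothetical observed value.
    prior_mean, prior_sd, observed = 1.0, 0.4, 1.2
    for obs_sd in (0.8, 0.4, 0.1):  # poorer to better measurement quality
        mean, sd = gaussian_posterior(prior_mean, prior_sd, observed, obs_sd)
        print(f"obs 1-sigma {obs_sd:.1f}: posterior {mean:.3f} +/- {sd:.3f}, "
              f"uncertainty narrowed by {prior_sd / sd:.1f}x")
```

In this toy setting the observation's 1σ plays the role of quality and `sensitivity` loosely plays the role of utility: both enter only through the observation precision `sensitivity**2 / obs_sd**2`, so a poorly constrained or weakly sensitive measurement leaves the posterior close to the prior.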

A number of critical factors define quality. One is the uncertainty and repeatability of the measurement’s absolute calibration relative to the quantified science objective requirements. Another factor is the impact of a data gap (see Section 3.4.2, below). For measurements that achieve the quantified objective’s uncertainty and/or repeatability requirements, the impact of a data gap must be quantitatively assessed with respect to the lengthening of the time needed to detect a change. The difference in quality of a measurement with, versus without, a gap is determined by the magnitude of the increase in the time needed to detect a change in the presence of a gap: the smaller the increase, the smaller the impact on the measurement’s quality. The difference in quality of the climate record with and without continuity of the proposed measurement provides input for continuity decision making. If the difference in the quality metric is small, the continuity observation priority will be low; if the difference is large, the continuity observation priority will be high.
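To illustrate how a gap can lengthen the time needed to detect a change, the sketch below makes several simplifying assumptions that are not from the report: annual means with independent (white) noise of standard deviation `sigma`, a linear trend fitted by ordinary least squares, and detection once the trend exceeds twice its standard error. It compares a record with no gap, a record with a gap across which calibration is known, and a record with a gap across which an unknown calibration offset between instruments must be fitted, which is what a lack of overlap implies. Real climate records have autocorrelated noise and calibration drift, and the trend and noise values used here are hypothetical.

```python
import numpy as np


def trend_standard_error(years, sigma, step_year=None):
    """OLS standard error of a linear trend in annual means with white noise.

    If step_year is given, an unknown calibration offset is fitted for all
    years >= step_year (a gap with no overlap between instruments).
    """
    t = np.asarray(years, dtype=float)
    columns = [np.ones_like(t), t - t.mean()]
    if step_year is not None:
        columns.append((t >= step_year).astype(float))
    design = np.column_stack(columns)
    if np.linalg.matrix_rank(design) < design.shape[1]:
        return np.inf  # not yet enough data on both sides of the gap
    covariance = sigma ** 2 * np.linalg.inv(design.T @ design)
    return float(np.sqrt(covariance[1, 1]))


def years_to_detect(trend, sigma, gap=None, unknown_offset=False,
                    threshold=2.0, max_years=200):
    """Smallest record length (years, gap included) with |trend| / SE >= threshold."""
    for n in range(3, max_years + 1):
        years = np.arange(n)
        step_year = None
        if gap is not None:
            start, length = gap
            years = years[(years < start) | (years >= start + length)]
            if unknown_offset:
                step_year = start + length
        if years.size < 3:
            continue
        if abs(trend) / trend_standard_error(years, sigma, step_year) >= threshold:
            return n
    return None


if __name__ == "__main__":
    trend, sigma = 0.1, 1.0  # hypothetical trend per year and interannual noise
    print("no gap:", years_to_detect(trend, sigma))
    print("2-yr gap, known calibration:", years_to_detect(trend, sigma, gap=(10, 2)))
    print("2-yr gap, unknown offset:",
          years_to_detect(trend, sigma, gap=(10, 2), unknown_offset=True))
```

With these hypothetical inputs the script reports 17, 18, and 28 years. The gap alone costs about a year, but the unknown offset forces the trend to be estimated within each segment separately, discarding the information that links them; under this toy model, that is the quantitative sense in which overlap preserves quality.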

While there are numerous ways to evaluate quality in the context of continuity measurements, a useful metric is expected to differ between continuity required for short-term operational use (weather prediction, agricultural crop monitoring, hazard warnings) and continuity required for longer-term science objectives, such as those related to global climate change.7 Examples for assessing quality are given in Chapter 4.

Finding: Assessing the quality characteristic of a particular continuity measurement requires knowledge of (1) the measurement’s combined standard uncertainty, which derives from the instrument’s calibration uncertainty, repeatability, time and space sampling, and data systems and delivery for climate variables (algorithms, reprocessing, and availability), and (2) the consequences of data gaps for the relevant quantified science objective(s).

Recommendation: The committee recommends that NASA be responsible for refining the assessment approach for the quality characteristic.

3.2.4 Benefit: Success Probability

The success probability metric S is defined as the probability that the measurement being proposed for continuity will successfully meet the goal of extending the specified geophysical record. This metric accounts for such things as the measurement’s resilience to gaps (discussed in more detail below) and the possibility of leveraging national and international partners for needed measurements. The success probability metric is also meant to capture issues that would affect the ability of the proposed measurement to meet the scientific or societal goal, to the extent that those issues are not covered in the affordability metric. From a decision-theory perspective, S can be seen to address uncertainty in the decision process by “derating” the maximum potential benefit for known risks to measurement quality. Factors that should be included in deriving S include the risks of the instrument development itself, as well as risks of the associated algorithms needed to achieve the predicted accuracy; an example derivation is given in Chapter 4.

The quality characteristic, through the definition of the calibration uncertainty, and the success probability characteristic are both affected by the impact of gaps and their probability of occurrence. For observations where overlap is critical to maintaining higher quality, gaps will affect the quality rating with and without continuity, as well as the success probability, through the risk of a gap occurring. Measurements whose calibration is sufficiently certain to meet global change requirements will have a high quality rating, and their success probability rating will also be high because data gaps have smaller impacts.8 As a result, gap impact on quality, gap risk of occurrence, and gap effects on observing system costs can all be accounted for in the current framework.

Finding: Success probability is assessed by evaluating the maturity of the measurement instrumentation and algorithms, the risk-posture of the mission implementation approach, the resilience of the geophysical variable record to measurement gaps, and the degree to which alternate approaches, including those from national and international partners, can provide acceptable bridging measurements.
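Chapter 4 gives the committee's example derivation of S. As a separate, minimal sketch that assumes the individual risks are independent, S could be formed as the product of the probabilities that the instrument and the retrieval algorithms perform as required, multiplied by the probability that the record survives any gap: either no gap occurs, or a gap occurs and an acceptable bridging measurement (for example, from a national or international partner) is available. The function name and all probabilities are hypothetical.

```python
def success_probability(p_instrument, p_algorithm, p_gap, p_bridge):
    """Hypothetical S assuming independent risks.

    p_instrument: probability the instrument meets its requirements on orbit
    p_algorithm:  probability the algorithms achieve the predicted accuracy
    p_gap:        probability that a gap in the record occurs
    p_bridge:     probability that an acceptable bridging measurement covers a gap
    """
    for p in (p_instrument, p_algorithm, p_gap, p_bridge):
        if not 0.0 <= p <= 1.0:
            raise ValueError("probabilities must lie in [0, 1]")
    p_record_survives_gap = (1.0 - p_gap) + p_gap * p_bridge
    return p_instrument * p_algorithm * p_record_survives_gap


if __name__ == "__main__":
    # Same hypothetical instrument, with and without a likely partner bridge.
    print(f"weak bridging:   S = {success_probability(0.9, 0.95, 0.2, 0.5):.4f}")
    print(f"strong bridging: S = {success_probability(0.9, 0.95, 0.2, 0.9):.4f}")
```

The gap term shows how two items in the Finding (resilience to gaps and partner bridging) interact: a likely partner bridge raises S most for records whose gap probability is high.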

______________

7 The committee notes that the quality requirements for measurements related to climate change quantified objectives will tend to be most stringent at global scale and less stringent at zonal or regional scale. Instrument accuracy and repeatability will therefore be driven by global average analysis, as in the examples in this report. However, the committee’s analysis framework can be used at any spatial scale required by the objective. In general, anthropogenic climate change signals appear first in global averages, since natural variability typically decreases with increasing spatial averaging. Natural variability at zonal and regional scales can be larger than in global averages by factors of 3 (zonal) or 10 (regional) (e.g., Wielicki et al., 2013).

8 Note that those instruments which make accurate measurements (i.e., with calibration uncertainty sufficient to meet the quantified objective) could be launched less often since gaps for short time periods are unlikely to degrade the fidelity of the long-term record: this could translate to significant cost savings in the affordability metric.
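A short calculation illustrates why the larger natural variability at zonal and regional scales noted in footnote 7 delays detection. Assuming, for illustration only, annual means with white noise of standard deviation σ and an ordinary-least-squares linear trend ω, the trend's standard error over n years is σ·sqrt(12 / (n(n² − 1))), or roughly σ·sqrt(12 / n³). Requiring ω divided by that standard error to reach a fixed threshold k then gives n ≈ (12 k² σ² / ω²)^(1/3), so detection time scales as σ^(2/3). The sketch evaluates that scaling for the factors of 3 and 10 quoted in the footnote; autocorrelated variability would change these factors.

```python
def detection_time_ratio(variability_factor: float) -> float:
    """Ratio of detection times when noise grows by variability_factor (n ~ sigma**(2/3))."""
    if variability_factor <= 0:
        raise ValueError("variability_factor must be positive")
    return variability_factor ** (2.0 / 3.0)


if __name__ == "__main__":
    for label, factor in (("zonal", 3.0), ("regional", 10.0)):
        print(f"{label}: ~{detection_time_ratio(factor):.1f}x longer to detect than global")
```

Under these assumptions a factor of 3 in variability lengthens detection time by about 2.1 times, and a factor of 10 by about 4.6 times, which is consistent with the footnote's point that climate change signals emerge first in global averages.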

3.3 FRAMEWORK CHARACTERISTIC: AFFORDABILITY

Affordability is the cost per year of continuing the prescribed measurement for a specified time period with the required quality, relative to the total budget that NASA has allocated for all satellite measurements.

To achieve the required quality, the measurement must have the uncertainty, repeatability, time and space coverage, and reduction algorithms to meet the scientific requirements. The cost is the full funding needed to make the observations and produce the measurement for a finite length of time: it includes instrument development; space platform accommodation; launch; on-orbit collection; validation; algorithm implementation; science team contributions; and data algorithm (re)processing and testing facilities, archiving, and distribution. Should multiple overlapping measurements be prescribed to preclude gaps to achieve the needed repeatability of the measurement over the specified time, they are also part of the cost.9 Included as well are additional factors that reduce risk, such as advancing the instrument technology readiness level (TRL) and auxiliary observations, should they be needed, to implement the algorithms that produce the measurements. To the extent that factors such as TRL, gap mitigation, validation, and algorithm maturity development are included in the measurement cost, they may enhance the reliability of the associated success probability estimate of the measurement. Thus, cost shares a number of cross-cutting characteristics with utility, quality, and success probability that must be quantified and implemented in the value metric.
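Affordability, as defined above, is an annual cost relative to a budget. The sketch below shows that bookkeeping with hypothetical cost categories drawn from the list above and invented dollar figures. The optional partner contribution reflects the point, made in footnote 9, that a partner's launch of a similar instrument at no cost to NASA improves the affordability of the NASA effort. How the resulting fraction would be converted into a rating is left open here, as it is in the text; the class and field names are illustrative only.

```python
from dataclasses import dataclass, field


@dataclass
class ContinuityCost:
    """Hypothetical life-cycle cost breakdown (e.g., in $M) for one continuity measurement."""
    record_years: float
    components: dict[str, float] = field(default_factory=dict)
    partner_contribution: float = 0.0  # portion covered by other sponsors at no cost to NASA

    def nasa_total(self) -> float:
        return max(sum(self.components.values()) - self.partner_contribution, 0.0)

    def annual_cost(self) -> float:
        if self.record_years <= 0:
            raise ValueError("record_years must be positive")
        return self.nasa_total() / self.record_years

    def affordability(self, annual_budget: float) -> float:
        """Annual cost as a fraction of the annual budget for all satellite measurements."""
        if annual_budget <= 0:
            raise ValueError("annual_budget must be positive")
        return self.annual_cost() / annual_budget


if __name__ == "__main__":
    cost = ContinuityCost(
        record_years=10,
        components={
            "instrument development": 150,
            "platform accommodation and launch": 120,
            "on-orbit operations": 50,
            "validation and algorithms": 40,
            "science team": 25,
            "processing, archiving, distribution": 35,
            "overlap mission to preclude gaps": 80,
        },
    )
    print(f"A = {cost.affordability(annual_budget=2000):.3f}")
    cost.partner_contribution = 100  # hypothetical partner-supplied share
    print(f"A with partner = {cost.affordability(annual_budget=2000):.3f}")
```

Keeping each category explicit makes it easy to see when a risk-reduction item (such as the overlap mission) raises cost while also improving the success probability, which is the cross-cutting interaction the paragraph above describes.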

Finding: Assessing affordability requires comprehensive cost analysis from measurement to geophysical variable record and includes risk mitigation.

Recommendation: NASA should extend its current mission cost tools to address the continuity-measurement costs needed for the decision framework.

The committee identified two cross-cutting issues, leveraging and gap risk, that do not fit easily into any one of the aforementioned framework criteria (measurement calibration uncertainty, repeatability, time and space sampling, and data systems and delivery for climate variables, i.e., algorithms, reprocessing, and availability) but instead apply to multiple criteria.

Leveraging is a factor that the framework takes into account through the success probability and affordability of a proposed measurement. It can be an important consideration for many proposed measurements. For example, collaboration with international partners can demonstrate broad acceptance in the global community of a measurement’s importance, and it has the potential to reduce the required contribution of each partner. In a 1998 NRC report, a joint committee of the Space Studies Board and the European Space Science Committee identified the following elements as essential to successful international cooperation in space research missions (NRC, 1998, p. 3):

- Scientific support: compelling scientific justification of a mission and strong support from the scientific community. All partners need to recognize that international cooperative efforts should not be entered into solely because they are international in scope.

- Historical foundation: partners have a common scientific heritage that provides a basis of cooperation and a context within which a mission fits.

- Shared goals and objectives for international cooperation that go beyond the objectives of scientists to include those of the engineers and others involved in a joint mission.

- Clearly defined responsibilities and a clear understanding of how they are to be shared among the partners, a clear management scheme with a well-defined interface between the parties, and efficient communication.

- Sound plan for data access and distribution: a well-organized and agreed-upon process for data calibration, validation, access, and distribution.

- Sense of partnership that nurtures mutual respect and confidence among participants.

- Beneficial characteristics: successful missions have had at least one (but usually more) of the following characteristics:

  - Unique and complementary capabilities offered by each international partner;

  - Contributions made by each partner that are considered vital for the mission;

  - Significant net cost reductions for each partner, which can be documented rigorously, leading to favorable cost-benefit ratios;

  - International scientific and political context and impetus; and

  - Synergistic effects and cross-fertilization or benefit.

- Recognition of importance of reviews: periodic monitoring of mission goals and execution to ensure that missions are timely, efficient, and prepared to respond to unforeseen problems.

______________

9 The impact of interagency and international collaboration on cost can be accounted for in the committee’s proposed decision framework. Consider the following three cases: (1) NASA and Sponsor A are flying similar instruments and coordinate their launches to provide an extended data record; (2) Sponsor A is operating instrument A to measure variable A, and NASA launches instrument B into a similar orbit to measure variable B, which can be combined with variable A to produce variable C; and (3) Sponsor A is developing an instrument with various channels or frequencies to measure variable A, and NASA offers to provide some funds for additional channels/frequencies to measure variable B. Case 3 would appear to be unlikely, especially with a foreign partner, because of risk and the restrictions imposed by International Traffic in Arms Regulations, although there have been notable exceptions (e.g., Jason and GRACE). For Case 2, the collaboration between Sponsor A and NASA will be reflected in changes in the scoring of the quality metric (rather than in adjustments to affordability). Finally, the framework treats Case 1 the same way it treats gaps in the data record: as noted above, if multiple overlapping measurements are prescribed to preclude gaps and achieve the needed repeatability over the specified time, they are also part of the cost. In Case 1, Sponsor A’s launch of a similar instrument (at no cost to NASA) will be reflected in the framework by the assignment of a higher affordability rating for the NASA effort.

Leveraging can also occur in collaborations among U.S. federal agencies and in joint programs such as the U.S. Geological Survey-NASA partnership for Landsat.10 Finally, it is important to consider not only leverage opportunities for space-based missions, but also interagency or international collaborations involving space- and in situ-based instruments.

Early in its discussions, the committee included “gap risk” as an independent characteristic in the value framework. It rapidly became clear, however, that gap risk affects many of the other characteristics in the value framework and, therefore, should be addressed as part of those factors.

First, the occurrence of a gap can increase the uncertainty and decrease the repeatability of a geophysical variable record (Loeb et al., 2009; NRC, 2013) and therefore affect the quality characteristic for that record. The primary effect on quality arises from discontinuities between successive measurements in a long-term geophysical variable record when the absolute calibration uncertainty of the measurements is not small enough to bridge the gap. Another quality impact can occur if time-space gaps in a perfectly calibrated satellite measurement record (usually over several years) miss the key variability needed to define a geophysical variable record (e.g., volcanic eruptions, or the ability to average over internal natural variability such as the El Niño-Southern Oscillation).

Second, the statistical likelihood of a data gap depends on instrument and spacecraft reliability design and on launch schedules, as well as on the existing instruments and their age in orbit. All of these observing-system design factors will affect the success probability of achieving a geophysical variable record of the desired quality (Loeb et al., 2009).

Third, the strategy to avoid gaps will involve instrument and spacecraft reliability and launch schedules, which in turn drive cost and the associated affordability characteristic. For these reasons, a careful gap risk analysis is required as part of the value analysis, but gap risk must be considered within three of the characteristics (quality, success probability, and affordability) rather than treated as a single factor.
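The dependence of gap risk on reliability, instrument age, and launch schedule can be sketched quantitatively under explicit assumptions. The example below is not the committee’s method: it models instrument reliability with a Weibull survival function (a swapped-in choice; a shape parameter greater than 1 represents wear-out, so an older instrument is less likely to survive the same interval), computes the operating time at a given reliability level such as the 85% mentioned in Box 3.4, and treats a gap as occurring if the current instrument fails before its replacement launches or if that launch fails. All function names and parameter values are hypothetical.

```python
import math


def weibull_survival(t: float, scale: float, shape: float, age: float = 0.0) -> float:
    """Probability that an instrument already `age` years in orbit survives `t` more years.

    Assumes Weibull reliability R(t) = exp(-(t / scale) ** shape); the conditional
    survival is R(age + t) / R(age). With shape > 1, failure risk grows with age.
    """
    return math.exp((age / scale) ** shape - ((age + t) / scale) ** shape)


def years_at_reliability(target: float, scale: float, shape: float) -> float:
    """Operating time at which a new instrument's reliability drops to `target` (e.g., 0.85)."""
    return scale * (-math.log(target)) ** (1.0 / shape)


def gap_probability(years_to_replacement: float, existing_age: float,
                    scale: float, shape: float, launch_success: float) -> float:
    """Simplified gap risk: a gap occurs if the existing instrument fails before the
    replacement launches, or if the replacement launch fails (no re-flight modeled)."""
    survives = weibull_survival(years_to_replacement, scale, shape, existing_age)
    return 1.0 - survives * launch_success


# Hypothetical parameters: characteristic life 10 yr, wear-out shape 2, 95% launch success.
print(round(years_at_reliability(0.85, scale=10.0, shape=2.0), 2))  # 4.03
for years in (1.0, 3.0):
    p_gap = gap_probability(years, existing_age=5.0, scale=10.0, shape=2.0, launch_success=0.95)
    print(f"replacement in {years:.0f} yr: P(gap) = {p_gap:.2f}")
```

With these illustrative numbers, delaying the replacement from 1 to 3 years raises the gap probability from about 0.15 to about 0.36. This is the trade-off that links gap risk to success probability (the likelihood of an unbroken record) and to affordability (the cost of launching earlier or flying overlapping units).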

______________

10 Factors influencing the success of these collaborations are reviewed in detail in (NRC, 2011).

The key framework characteristics are defined in the preceding sections. In the following sections and in Chapter 4, the committee discusses possible approaches for quantitatively evaluating framework characteristics and for calculating value based on functional relationships between the characteristics. Regardless of the approach taken for judging the value of a continuity measurement, a uniform set of information is required for the recommended framework to be applied successfully. The committee envisions that future NASA discussions of proposed continuity measurements will use well-established guidelines for submitting advocacy information, in analogy with current ROSES (Research Opportunities in Space and Earth Sciences) and AO solicitations. An example of such a guideline for continuity measurements is shown in Box 3.4.

3.6 DETERMINING CONTINUITY MEASUREMENT VALUE

Having identified the key value characteristics in the previous sections, the committee sought a robust approach for rating the value of a continuity measurement based on evaluations of those characteristics. To be useful, the framework must successfully differentiate among the hundred or more climate-related geophysical variables of interest (e.g., there are approximately 50 Global Climate Observing System (GCOS)-established essential climate variables11), and also among the larger number of instrument data records that can potentially provide measurements of the sought-after geophysical variables. Ideally, the chosen approach would involve rigorous analytical evaluation methods that yield precise quantitative ratings.

Among the many rating approaches considered, the committee identified two as having particular merit. The first approach, similar to that used by NASA for evaluating proposals submitted in response to NRAs or Earth Venture AOs, relies on subjective ratings by experts of a set of evaluation factors (see Box 3.5). NRA evaluations focus on programmatic relevance, intrinsic merit, and cost realism; AO evaluations focus on scientific merit, scientific implementation merit and feasibility, and the technical, management, and cost feasibility (including cost risk) of the mission implementation. A continuity measurement, by contrast, would be evaluated using questions related to the key value characteristics, namely:

- Does it address an important scientific objective requiring continuity? (Importance)

- Will it contribute substantially to the objective? (Utility)

- Does it have sufficient quality to contribute to the objective? (Quality)

- Can the quality be readily obtained and maintained? (Success Probability)

- Is it affordable within the available NASA budget? (Affordability)

As in the NRA and AO cases, the committee envisions an overall continuity measurement value rating derived from a summary analysis of the individual evaluations. A five-level summary rating system (see Table 3.1) should provide sufficient discrimination for NASA to divide proposals into “selectable” and “not selectable” categories and to identify the fraction of proposals to fund (Table 3.2). For continuity measurements, which involve a substantially larger cost commitment and hence a smaller fraction of supportable proposals, the need for greater discrimination among proposals is apparent. Several approaches can increase discrimination, including increasing the number of rating levels for the evaluation criteria, setting a higher threshold for the top rating category, giving higher weights to the more readily quantifiable evaluation criteria (e.g., cost), or sequencing the criteria evaluations so that they progressively winnow the measurement candidates (e.g., a series of elimination gates).
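The “series of elimination gates” idea can be sketched as sequential filters, where each gate sees only the candidates that survived the previous one. The gate order, thresholds, field names, and candidate ratings below are all hypothetical; the committee does not prescribe specific gates.

```python
from typing import Callable, NamedTuple


class Candidate(NamedTuple):
    """Hypothetical summary ratings for one proposed continuity measurement."""
    name: str
    importance: int      # subjective rating, 1-5
    quality: float       # fraction of required record obtained, 0-1
    success: float       # probability of success, 0-1
    annual_cost: float   # illustrative $M per year


Gate = tuple[str, Callable[[Candidate], bool]]


def apply_gates(candidates: list[Candidate], gates: list[Gate]) -> list[Candidate]:
    """Apply elimination gates in sequence; each gate only sees the previous gate's survivors."""
    survivors = list(candidates)
    for label, passes in gates:
        survivors = [c for c in survivors if passes(c)]
        print(f"after {label} gate: {[c.name for c in survivors]}")
    return survivors


# Illustrative candidates and thresholds; none are from the report.
candidates = [
    Candidate("A", importance=5, quality=0.90, success=0.80, annual_cost=60.0),
    Candidate("B", importance=3, quality=0.95, success=0.90, annual_cost=40.0),
    Candidate("C", importance=5, quality=0.50, success=0.90, annual_cost=30.0),
    Candidate("D", importance=4, quality=0.85, success=0.60, annual_cost=50.0),
    Candidate("E", importance=4, quality=0.90, success=0.85, annual_cost=95.0),
    Candidate("F", importance=4, quality=0.82, success=0.90, annual_cost=45.0),
]
gates: list[Gate] = [
    ("importance", lambda c: c.importance >= 4),
    ("quality", lambda c: c.quality >= 0.8),
    ("success", lambda c: c.success >= 0.7),
    ("cost", lambda c: c.annual_cost <= 70.0),
]
shortlist = apply_gates(candidates, gates)  # final survivors: A and F
```

Putting the most defensible criterion first (here, importance) keeps later, more effortful evaluations focused on a smaller set; reordering the gates does not change the final shortlist for fixed thresholds, but it does change how much evaluation effort each stage requires.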

______________

11 The goal of the Global Climate Observing System (GCOS) is to provide comprehensive information on the total climate system, involving a multidisciplinary range of physical, chemical, and biological properties and atmospheric, oceanic, hydrological, cryospheric, and terrestrial processes (GCOS, “About GCOS,” https://www.wmo.int/pages/prog/gcos/index.php?name=AboutGCOS, accessed April 6, 2015). GCOS identifies essential climate variables (ECVs) that are both currently feasible for global implementation and are required to support the work of the Intergovernmental Panel on Climate Change and the UN Framework Convention on Climate Change. See GCOS (2010). The 50 GCOS ECVs are listed at GCOS, “GCOS Essential Climate Variables,” http://www.wmo.int/pages/prog/gcos/index.php?name=EssentialClimateVariables, accessed April 6, 2015.

BOX 3.4

Guidelines for Continuity Measurement Framework Input

1. Identify the quantified objective(s) the proposed measurement under construction addresses (see Box 3.2).

2. Describe the importance of the quantified objective to a high-priority, societally relevant science goal.

   a. The description should be short and referenced but understandable to a broader audience.

   b. Provide a perspective on how the objective fits within the broader scientific issues of understanding global change.

   c. Provide a perspective on how the objective benefits society, beyond the science.

3. Explain the utility of the measured geophysical variable(s) to achieving the quantified objective.

   a. Explanation of the geophysical variables to be provided by the mission/measurement(s).

   b. Description of the utility of these variables in terms of the relative fraction1 they contribute to answering the objective.

   c. A list of auxiliary data required to deliver the proposed measurement(s), but not part of the proposed mission, delineated by program and instrument.

4. Detail the quality of the measurement relative to that needed for the quantified objective.

   a. Assess the quality of the proposed measurement against the requirements of the objective.

   b. Include, inter alia, instrument calibration uncertainty, repeatability, time and space sampling, and data systems and delivery for climate variables (algorithms, reprocessing, and availability) of all data products.

   c. Assess the ability to satisfy the objective both with and without the proposed observation(s).

5. Discuss the success probability of achieving the measurement.

   a. Provide an assessment of the heritage and maturity of proposed instruments and data algorithms.

   b. Assess the likelihood of leveraging similar or complementary non-NASA measurements.

   c. Provide a quantitative analysis of the risk of a gap in the measurement and the effect of that gap on the quality of the long-term record and its ability to remain useful in meeting the objective.

6. Provide an estimate of the affordability of the measurement.

   a. Estimate the total cost of the proposed observation(s).

   b. Include the expected years of record on orbit at reasonable levels (e.g., 85%) of reliability.

   c. Include additional costs to mitigate unacceptable risks of measurement gaps.

______________

1 Regarding item 3b and “relative fraction”: In this report, the committee notes that its evaluation methods for the importance and utility characteristics are subjective; however, it recommends (in Chapter 3) that the utility ratings of all observations needed by the quantified objective sum to 1.0. This allows the framework to account for some observations being more important than others, while avoiding a “check the box” process that merely counts the number of observation sources without considering their relative importance. It also normalizes the utility ratings of all objectives to the same numerical scale, thereby allowing an “apples-to-apples” comparison. The report also shows a path toward more rigorous and objective future analysis of utility using the Bayesian framework discussed in Box 3.3.
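The recommendation that the utility ratings of all observations needed by one quantified objective sum to 1.0 amounts to normalizing relative contributions. The sketch below assumes experts first supply unnormalized, non-negative relative weights; the function name, observation names, and weights are hypothetical.

```python
def normalize_utilities(raw_weights: dict[str, float]) -> dict[str, float]:
    """Rescale relative contributions so the utilities of all observations needed by one
    quantified objective sum to 1.0."""
    if any(w < 0 for w in raw_weights.values()):
        raise ValueError("relative contributions cannot be negative")
    total = sum(raw_weights.values())
    if total == 0:
        raise ValueError("at least one observation must contribute to the objective")
    return {name: w / total for name, w in raw_weights.items()}


# Hypothetical expert judgments: observation_A contributes twice as much as each of the others.
raw = {"observation_A": 2.0, "observation_B": 1.0, "observation_C": 1.0}
utilities = normalize_utilities(raw)
print(utilities)  # {'observation_A': 0.5, 'observation_B': 0.25, 'observation_C': 0.25}
```

Because the utilities always sum to 1.0, adding a fourth minor observation dilutes the others rather than inflating the objective’s total, which is what prevents a simple count of observation sources from driving the rating.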

BOX 3.5

Suggested Evaluation Factors for Continuity Measurement Proposals

Unless otherwise specified by NASA, the principal elements (of approximately equal weight) considered in evaluating a continuity measurement are the importance of the scientific objective being addressed (importance), the contribution of the measurement to the science objective (utility), the quality of the measurement for addressing the objective (quality), the likelihood that the measurement can be developed and maintained (success probability), and the affordability of the continued measurement within the NASA budget (affordability).

- Evaluation of a measurement’s importance considers documented community priorities for science goals and quantified science objectives.

- Evaluation of a measurement’s utility includes consideration of all of the key geophysical variables, and their relative contributions, for addressing a quantified objective.

- Evaluation of a measurement’s quality includes consideration of its uncertainty, repeatability, time and space sampling, and data algorithm characteristics relative to that required for achieving a targeted scientific objective.

- Evaluation of the measurement’s success probability includes consideration of the heritage and maturity of the proposed instrument and its associated data algorithms, the likelihood of leveraging similar or complementary measurements, and the likelihood of data gaps that would adversely affect the quality of the measurement.

- Evaluation of the affordability of a proposed continuity measurement includes consideration of the total cost of developing, producing, and maintaining the sought-after data record. The impacts of gap mitigation on cost are included in this consideration.

TABLE 3.1 Adjectival “Value” Scale for Proposed Continuity Measurements Versus Framework Rating

| Rating | Description | Analytical Method Score (from Chapter 3), V = I × U × Q × S × A |
|---|---|---|
| Poor | A continuity measurement of low value that provides little benefit regardless of affordability. | 0 - 5 |
| Fair | A continuity measurement that provides value but with neither the benefit nor the affordability characteristic above average. | 6 - 10 |
| Good | A continuity measurement of moderate value with only one of the benefit and affordability characteristics above average. | 11 - 15 |
| Very Good | A continuity measurement of high value with above-average benefit and affordability characteristics. | 16 - 20 |
| Excellent | A continuity measurement of exceptional value that provides maximum benefit and is highly affordable. | 21 - 25 |

TABLE 3.2 Comparison of Summary Evaluation Methods

| Evaluation Metric | Subjective Method Ratings | Analytical Method Scores (from Chapter 3) |
|---|---|---|
| Importance (I) | Low, Moderate, High, Very High, Highest | 1 - 5 |
| Utility (U) | Low, Moderate, High, Very High, Highest | 0 - 1 |
| Quality (Q) | Low, Moderate, High, Very High, Highest | 0 - 1 |
| Success probability (S) | Low, Moderate, High, Very High, Highest | 0 - 1 |
| Affordability (A) | Low, Moderate, High, Very High, Highest | 1 - 5 |
| Overall value (V) | Poor, Fair, Good, Very Good, Excellent | 0 - 25 |

A second rating approach considered by the committee adheres more closely to a typical “cost-benefit” analysis. Its potential advantage is greater reliance on well-prescribed quantitative analysis and less reliance on subjective evaluation. A simple form of this approach is12

V = B × A = (I × U × Q × S) × A

Successful implementation of this approach requires determining the relative weights of the benefit and affordability terms and defining the rating scales of the individual benefit terms in a way that maintains the relative weights of B and A. A self-consistent method would be to (1) assign rating scales (e.g., 1 to 5) to the importance and affordability terms,13 which then set the desired relative weights of B and A, and (2) define the utility, quality, and success probability rating scales in terms of percentages (expressed as fractions from 0 to 1, as in Table 3.2). In this formulation, the rating of B can be understood as follows:

B = I (maximum potential benefit) × U × Q × S (percentage of maximum benefit realized),

where

I = importance of the quantified objective = maximum potential benefit,

U = percentage of the quantified objective achieved by obtaining the targeted geophysical variable record,

Q = percentage of the required geophysical variable record obtained by the proposed measurement, and

S = probability that the proposed measurement will be successfully achieved.
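The cost-benefit formulation V = B × A = (I × U × Q × S) × A can be computed directly once the five ratings are in hand. The sketch below enforces the scales of Table 3.2 (I and A from 1 to 5, never zero, consistent with footnote 13; U, Q, and S as fractions from 0 to 1) and maps V onto the adjectival scale of Table 3.1. Reading Table 3.1’s integer bands as upper-inclusive limits on a continuous V is an assumption, as are the function names and the example ratings.

```python
def benefit(importance: float, utility: float, quality: float, success: float) -> float:
    """B = I x U x Q x S: maximum potential benefit I scaled by the fraction realized."""
    if not 1 <= importance <= 5:
        raise ValueError("importance is rated from 1 to 5 (never zero)")
    for label, x in (("utility", utility), ("quality", quality), ("success", success)):
        if not 0.0 <= x <= 1.0:
            raise ValueError(f"{label} must be a fraction between 0 and 1")
    return importance * utility * quality * success


def value(importance: float, utility: float, quality: float, success: float,
          affordability: float) -> float:
    """V = B x A with A rated 1-5, so V falls between 0 and 25."""
    if not 1 <= affordability <= 5:
        raise ValueError("affordability is rated from 1 to 5 (never zero)")
    return benefit(importance, utility, quality, success) * affordability


# Assumption: Table 3.1's integer bands are read as upper-inclusive limits on a continuous V.
RATING_BANDS = [(5.0, "Poor"), (10.0, "Fair"), (15.0, "Good"), (20.0, "Very Good"), (25.0, "Excellent")]


def adjectival_rating(v: float) -> str:
    """Map a value score to the adjectival scale of Table 3.1."""
    for upper, label in RATING_BANDS:
        if v <= upper:
            return label
    raise ValueError("V cannot exceed 25")


# Hypothetical ratings for one measurement/objective pair.
v = value(importance=5, utility=0.8, quality=0.9, success=0.9, affordability=4)
print(f"V = {v:.2f} ({adjectival_rating(v)})")  # V = 12.96 (Good)
```

Because U, Q, and S are fractions, they can only scale down the maximum potential benefit set by I, so the relative weight of benefit versus affordability is controlled entirely by the shared 1 to 5 scales of I and A.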

In Chapter 4, the committee describes its examination of various methods for defining and quantifying characteristic ratings and for calculating the cost-benefit value. Not surprisingly, the committee’s examination revealed the inherent challenges in moving from subjective to analytical evaluations. As a result, the committee explored a hybrid approach that combines subjective ratings for I, semi-analytical ratings for U and S, and analytical ratings for Q and A.

Finding: A number of potentially useful methods exist for prioritizing among continuity measurements.

Recommendation: NASA should establish a value-based decision approach that includes clear evaluation methods for the recommended framework characteristics and well-defined summary methods leading to value assessment.

3.7 EXTENDING THE FRAMEWORK BEYOND SINGLE CONTINUITY MEASUREMENT/QUANTIFIED OBJECTIVE PAIRS

As described above, the decision framework is designed to assess a single continuity measurement for a single quantified objective. As such, it illustrates a general approach to comparing different objective/continuity measurement pairs. In deciding whether to pursue a particular continuity measurement, NASA managers may seek answers to some additional questions, such as the following:

1. How does the value of a continuity measurement compare to that of a new measurement?

2. For any single objective, which measurement (or set of measurements) provides the most value?

3. What is the total value of a single measurement relative to all objectives of interest?

______________

12 The committee debated at length the choice of framework characteristics; the objective was to derive a minimal set of largely independent characteristics that would provide meaningful evaluations of proposed continuity measurements. The committee recognizes that the factors are not completely independent in a statistical sense. For example, success probability and affordability are related, but the relationship between them is sufficiently complex that it was necessary to retain both in the framework. NASA’s ability to “buy down” risk (i.e., increase S by decreasing A) is not easily quantified for complex technologies; similarly, accounting for the strategic plans of other national and international partners, a difficult problem, is easier to handle in a framework with separate success and affordability factors. Retaining success probability as a separate characteristic also makes it easier to treat uncertainty in the decision process.

13 The committee does not assign a zero value to I or A because no measurement would be under consideration if it had no importance, and A would be zero only in the case of infinite cost.

All three questions are relevant when considering how best to address the highest-level objectives identified by the science community (i.e., decadal survey recommendations). Addressing question 1 requires that objectives be defined at a relatively high level of scientific inquiry so as to accommodate consideration of new measurements. In particular, a new measurement is justified either by a new objective that is not amenable to the existing observing systems or by some significant weakness apparent in the current system that addresses an established objective. In the context of an established objective, the need for a new measurement may arise from an improvement in utility, quality, or cost in meeting the objective. Given an appropriate objective, a new measurement would be handled within the decision framework in a manner identical to that of an existing measurement.

Questions 2 and 3 can be readily addressed within the recommended framework by applying the methodology repeatedly to each of the measurements contributing to a single objective or to each of the objectives pertaining to a single measurement, respectively. For question 3, however, evaluating the total value of a measurement requires the development of an appropriate summary methodology.
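Repeated application of the framework can be sketched as a table of value scores keyed by (objective, measurement) pair. Question 2 then becomes a selection over one objective’s entries, and question 3 an aggregation over one measurement’s entries. Because the appropriate summary method for question 3 remains to be developed, the simple summation below is only a placeholder assumption; the names and scores are hypothetical.

```python
# Hypothetical value scores V (0-25) from applying the framework once per
# (objective, measurement) pair; names and numbers are illustrative only.
pair_values: dict[tuple[str, str], float] = {
    ("objective_1", "measurement_X"): 14.0,
    ("objective_1", "measurement_Y"): 9.5,
    ("objective_2", "measurement_X"): 6.0,
    ("objective_2", "measurement_Z"): 11.0,
}


def best_measurement_for(objective: str, values: dict[tuple[str, str], float]) -> tuple[str, float]:
    """Question 2: compare every measurement evaluated against one objective and keep the best."""
    scores = {m: v for (o, m), v in values.items() if o == objective}
    if not scores:
        raise KeyError(f"no measurements evaluated for {objective}")
    best = max(scores, key=scores.get)
    return best, scores[best]


def total_value_of(measurement: str, values: dict[tuple[str, str], float]) -> float:
    """Question 3: placeholder summary that sums V over every objective the measurement serves.
    Simple summation is an assumption; the appropriate summary method is still to be developed."""
    return sum(v for (o, m), v in values.items() if m == measurement)


print(best_measurement_for("objective_1", pair_values))  # ('measurement_X', 14.0)
print(total_value_of("measurement_X", pair_values))      # 20.0
```

Summation rewards measurements that serve many objectives, which may or may not be the intended behavior. A weighted or capped aggregate could be substituted without changing the per-pair evaluations.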

Brim, Jr., O.G., D.C. Glass, D.E. Lavin, and N. Goodman. 1962. Personality and Decision Processes: Studies in the Social Psychology of Thinking. Stanford University Press, Calif.

Church, J.A., P.U. Clark, A. Cazenave, J.M. Gregory, S. Jevrejeva, A. Levermann, M.A. Merrifield, et al. 2013. Sea Level Change. Chapter 13 in Climate Change 2013: The Physical Science Basis. Contribution of Working Group I to the Fifth Assessment Report of the Intergovernmental Panel on Climate Change (Stocker, T.F., D. Qin, G.-K. Plattner, M. Tignor, S.K. Allen, J. Boschung, A. Nauels, Y. Xia, V. Bex, and P.M. Midgley, eds.). Cambridge University Press, Cambridge, U.K., and New York, N.Y.

Ciais, P., C. Sabine, G. Bala, L. Bopp, V. Brovkin, J. Canadell, A. Chhabra, et al. 2013. Carbon and Other Biogeochemical Cycles. Chapter 6 in Climate Change 2013: The Physical Science Basis. Contribution of Working Group I to the Fifth Assessment Report of the Intergovernmental Panel on Climate Change (Stocker, T.F., D. Qin, G.-K. Plattner, M. Tignor, S.K. Allen, J. Boschung, A. Nauels, Y. Xia, V. Bex, and P.M. Midgley, eds.). Cambridge University Press, Cambridge, U.K., and New York, N.Y.

Feldman, D.R., C.A. Algieri, J.R. Ong, and W.D. Collins. 2011. CLARREO shortwave observing system simulation experiments of the twenty-first century: Simulator design and implementation. Journal of Geophysical Research 116(D10):107.

Flato, G., J. Marotzke, B. Abiodun, P. Braconnot, S.C. Chou, W. Collins, P. Cox, et al. 2013. Evaluation of Climate Models. Chapter 9 in Climate Change 2013: The Physical Science Basis. Contribution of Working Group I to the Fifth Assessment Report of the Intergovernmental Panel on Climate Change (Stocker, T.F., D. Qin, G.-K. Plattner, M. Tignor, S.K. Allen, J. Boschung, A. Nauels, Y. Xia, V. Bex, and P.M. Midgley, eds.). Cambridge University Press, Cambridge, U.K., and New York, N.Y.

GCOS (Global Climate Observing System). 2010. Implementation Plan for the Global Observing System for Climate in Support of the UNFCCC (2010 Update), GCOS-138 (GOOS-184, GTOS-76, WMO-TD/No.1523). World Meteorological Organization, Geneva, Switzerland. August. http://www.gosic.org/content/about-gcos-ecv-data-access-matrix.

Le Quéré, C., R. Moriarty, R.M. Andrew, G.P. Peters, P. Ciais, P. Friedlingstein, et al. 2014. Global carbon budget 2014. Earth System Science Data Discussions 6:1-90.

Liu, J., K.W. Bowman, M. Lee, D.K. Henze, N. Bousserez, H. Brix, G.J. Collatz, D. Menemenlis, L. Ott, S. Pawson, D. Jones, and R. Nassar. 2014. Carbon monitoring system flux estimation and attribution: Impact of ACOS-GOSAT XCO2 sampling on the inference of terrestrial biospheric sources and sinks. Tellus 66.

Loeb, N.G., B.A. Wielicki, T. Wong, and P.A. Parker. 2009. Impact of data gaps on satellite broadband radiation records. Journal of Geophysical Research 114:D11109.

Loeb, N., J.M. Lyman, G.C. Johnson, R.P. Allan, D.R. Doelling, T. Wong, B.J. Soden, and G.L. Stephens. 2012. Observed changes in top-of-the-atmosphere radiation and upper-ocean heating consistent within uncertainty. Nature Geoscience 5:110-113.

Masutani, M., J.S. Woolen, S.J. Lord, G.D. Emmitt, T.J. Kleespies, S.A. Wood, S. Greco, et al. 2010. Observing system simulation experiments at the National Centers for Environmental Prediction. Journal of Geophysical Research 115(D7).

Mintzberg, H., D. Raisinghani, and A. Théorêt. 1976. The structure of “unstructured” decision processes. Administrative Science Quarterly 21:246-275.

Murphy, J.M., D.M.H. Sexton, D.N. Barnett, G.S. Jones, M.J. Webb, M. Collins, and D.A. Stainforth. 2004. Quantification of modelling uncertainties in a large ensemble of climate change simulations. Nature 430(7001):768-772.

Myhre, G., D. Shindell, F.-M. Bréon, W. Collins, J. Fuglestvedt, and J. Huang. 2013. Anthropogenic and Natural Radiative Forcing. Chapter 8 in Climate Change 2013: The Physical Science Basis. Contribution of Working Group I to the Fifth Assessment Report of the Intergovernmental Panel on Climate Change (Stocker, T.F., D. Qin, G.-K. Plattner, M. Tignor, S.K. Allen, J. Boschung, A. Nauels, Y. Xia, V. Bex, and P.M. Midgley, eds.). Cambridge University Press, Cambridge, U.K., and New York, N.Y.

NRC (National Research Council). 1998. U.S.-European Collaboration in Space Science. National Academy Press, Washington, D.C.

NRC. 2007. Earth Science and Applications from Space: National Imperatives for the Next Decade and Beyond. The National Academies Press, Washington, D.C.

NRC. 2011. Assessment of Impediments to Interagency Collaboration on Space and Earth Science Missions. The National Academies Press, Washington, D.C.

NRC. 2012a. Assessing the Reliability of Complex Models: Mathematical and Statistical Foundations for Verification, Validation, and Uncertainty Quantification. The National Academies Press, Washington, D.C.

NRC. 2012b. Earth Science and Applications from Space: A Midterm Assessment of NASA’s Implementation of the Decadal Survey. The National Academies Press, Washington, D.C.

NRC. 2013. Review of NOAA Working Group Report on Maintaining the Continuation of Long-Term Satellite Total Irradiance Observations. The National Academies Press, Washington, D.C.

Pellec-Dairon, M.L. 2012. From chains to platforms: valuing remote sensing data for environmental management. Proceedings of Toulouse Space Show.

Peterson, M. 2009. An Introduction to Decision Theory, 1st Edition. Cambridge University Press, New York, N.Y.

Rhein, M., S.R. Rintoul, S. Aoki, E. Campos, D. Chambers, R.A. Feely, S. Gulev, et al. 2013. Observations: Ocean. Chapter 3 in Climate Change 2013: The Physical Science Basis. Contribution of Working Group I to the Fifth Assessment Report of the Intergovernmental Panel on Climate Change (Stocker, T.F., D. Qin, G.-K. Plattner, M. Tignor, S.K. Allen, J. Boschung, A. Nauels, Y. Xia, V. Bex, and P.M. Midgley, eds.). Cambridge University Press, Cambridge, U.K., and New York, N.Y.

SCC (Interagency Working Group on Social Cost of Carbon). 2010. Social cost of carbon for regulatory impact analysis under executive order 12866. Washington, D.C. Appendix 15a. http://www.epa.gov/otaq/climate/regulations/scc-tsd.pdf.

Simon, H. 1977. The New Science of Management Decision. Prentice Hall, Englewood Cliffs, N.J.

Smith, R., C. Tebaldi, D. Nychka, and L. Mearns. 2009. Bayesian modeling of uncertainty in ensembles of climate models. Journal of the American Statistical Association 104(485):97-116.

Stainforth, D.A., T. Aina, C. Christensen, M. Collins, N. Faull, D.J. Frame, J.A. Kettleborough, et al. 2005. Uncertainty in predictions of the climate response to rising levels of greenhouse gases. Nature 433(7025):403-406.

Taylor, K.E., R.J. Stouffer, and G.A. Meehl. 2012. An Overview of CMIP5 and the experiment design. Bulletin of the American Meteorological Society 93:485-498.

Trenberth, K.E., and J.T. Fasullo. 2010. Tracking Earth’s energy. Science 328:316-317.

Wielicki, B.A., D.F. Young, M.G. Mlynczak, K.J. Thome, S. Leroy, J. Corliss, J.G. Anderson, et al. 2013. Climate Absolute Radiance and Refractivity Observatory (CLARREO): Achieving climate change absolute accuracy in orbit. Bulletin of the American Meteorological Society 94:1519-1539.