CHAPTER FIVE

Evaluation to Refine Goals and Demonstrate Effectiveness

Measure what is measurable, and make measurable what is not so.

—Galileo

Evaluation is required for creating effective communication. It is needed to determine audience needs and interests, to test communication approaches, and to evaluate impact.

Many communication events are initiated because of a chemist’s desire to increase public awareness, appreciation, or understanding of chemistry. How does the chemist know if such events are effective? Evaluation can determine effectiveness and can be performed to varying degrees. A chemist will first need to decide whether the best evaluation option for an event is a simple, one-time, on-site assessment of participant responses or a more in-depth, time-intensive programmatic assessment conducted by a third-party expert. Fortunately, there is a body of research on evaluation, and many resources are available to help organizers select an evaluation type that fits their needs and conduct the evaluation. This chapter provides an overview of the research on evaluation and guidance for performing evaluations in informal settings.1 It addresses evaluation appropriate for large installations in museums as well as for small, one-time activities. The process of evaluation may seem overwhelming. However, although the elements of an evaluation are similar regardless of the event, its scope and intricacy will scale with the complexity and goals of the chemistry communication event.

___________________

1 This chapter draws extensively from Communicating Chemistry in Informal Environments: Evaluating Chemistry Outreach Experiences (Michalchik, 2013). As part of its formal information-gathering process, the committee commissioned this paper, which provides an extensive review of social science theory on evaluation for informal science learning.

WHAT IS EVALUATION?

Evaluation is a set of techniques used to judge the effectiveness or quality of an event; improve its effectiveness; and inform decisions about its design, development, and implementation (NRC, 2010). Evaluation can occur before, during, and after an activity. Three stages of evaluation are front end, formative, and summative (Michalchik, 2013; Box 5-1).

WHY EVALUATE?

Evaluation, if begun at the outset of planning, can make communication events more effective at meeting their intended goals. As described by Michalchik (2013), evaluation enables chemists organizing an activity to learn about intended participants, receive advance feedback about communication design, and determine whether the goals and outcomes are met. Widespread use of evaluation would help chemistry communication meet the four goals listed in Chapter 2, as well as other goals.

A well-designed evaluation typically improves the quality of an experience by helping better define goals, identify important milestones and indicators of success, and support ongoing improvements. The information generated during an evaluation is useful to others seeking to learn from their colleagues’ experiences. In addition, evaluation can provide evidence of value to funders, potential partners, and other stakeholders. Reports from evaluations can also inform efforts to replicate or broaden the scale of a communication effort.

Evaluation provides valuable information to funders and other stakeholders that support communication. Funders may require evaluation. For example, the National Science Foundation (NSF) requires all proposals to its Advancing Informal Science Learning (AISL) program to include an evaluation plan and to demonstrate that a professional evaluator will be involved from the early stages in the project’s conception. Specifically, the evaluation plan must do the following:

[E]mphasize the coherence between the proposal goals and the evidence of meeting such goals. It must be appropriate to the type, scope, and scale of the proposed project. It is strongly encouraged that the plans include front end, formative, and remedial/iterative evaluation, as appropriate to achieving the projects’ goals. (NSF, 2014, p. 10)

Beyond AISL, NSF requires that all grant proposals, including proposals for research in chemistry, describe the broader impacts of the research on society. As noted previously, some researchers meet this requirement by including communication events in the proposal, although they are not required to include an evaluation plan. Nevertheless, evaluation should be a part of every chemist’s toolkit for communication, whether required or not, because it is helpful at each phase of designing and conducting communication. Although evaluation is often conducted by trained professionals using specialized techniques, anyone can use basic evaluative approaches to inform the design and development of communication activities and to learn about their impact.

OVERARCHING CONSIDERATIONS

The data described in Chapter 3 revealed that chemistry communication events in informal environments vary greatly in objectives, activities, content, and participants. Thus, evaluation plans can vary greatly in depth and scope, but efforts to evaluate even diverse activities follow an approach defined by research and by professional practice in the evaluation of informal science learning (Bonney et al., 2011; NRC, 2009). This section describes the elements of that approach and presents some guidelines for evaluation.

Evaluation planning should begin in parallel with defining the goals and desired outcomes of the communication event. This planning includes developing evaluation questions, indicators, and measures; selecting appropriate methods to gather information about the indicators; using the methods to gather, analyze, and interpret the data; and applying findings to inform the design or revision of a current event, or to inform the development of future events. Some steps of evaluation may seem daunting or excessive, especially for chemists planning one-time communication activities on their own, but the requirements and scope of an evaluation will vary depending on the activity and often will not be arduous. Incorporating evaluation from the beginning can help the organizer identify clear goals. Understanding and applying the general principles of evaluation can improve events, whether the organizer is an individual chemist or a chemistry department partnering with a science center on a large-scale communication event.

The most important consideration in developing an evaluation plan is how best to serve the needs of the communication event given the available time, budget, personnel, and other issues of capacity. Tailoring expectations of what can be accomplished in an evaluation is a critical and often challenging part of developing an evaluation. Efforts to follow a lockstep set of procedures or to employ plug-and-play evaluation tools may lead to frustration if they do not yield data relevant to the event. Communication goals, the evaluation plan, and the selected outcome measures must be aligned to conduct a meaningful evaluation of an experience.

DEVELOPING AN EVALUATION PLAN

Planning for evaluation is different from, but related to, planning the communication event itself. Evaluation planning integrates a clear understanding of the intent and context of the communication event with the purposes of the evaluation. When working with a professional evaluator, a preliminary step is to give the evaluator background information about the project so that the evaluation work is grounded in the necessary context.

Project Goals and Outcomes

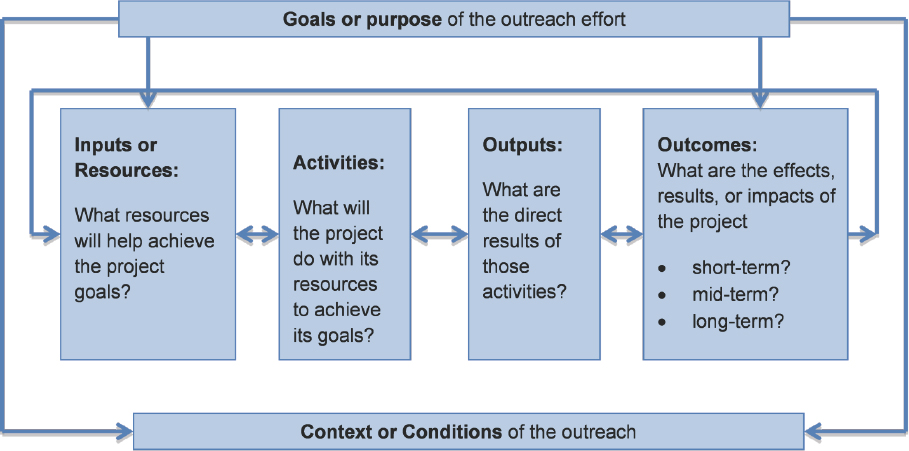

The first step in designing and evaluating an effective chemistry communication project is to specify its intended goals and outcomes. A project without clearly defined goals and anticipated outcomes cannot be evaluated. An approach used by professional evaluators is to describe in visual form a project’s rationale using a logic model (Figure 5-1).

A logic model specifies the stages in a project to describe how the project works. It defines and shows the relationships between the project’s inputs, activities, outputs, and outcomes as defined in Box 5-2. Although it may be relatively easy to specify the communication event (e.g., a hands-on chemistry experiment in a museum exhibit) and the desired output (e.g., a large percentage of museum visitors will choose to conduct the experiment), it can be more challenging to identify the desired outcomes—what the participants will get out of a given experience. In the logic model, the outcomes are often described as short term, occurring within a few years of the event; midterm, occurring 4 to 7 years after the activity; or long term, occurring many years after an event has commenced.

Communication events are often designed to encourage long-term impacts, such as future science participation or additional learning through subsequent experiences. They may also aim to achieve shorter-term outcomes. Although included in logic models, long-term outcomes are rarely measured because of their complexity and long time horizon (Bonney et al., 2011; NRC, 2009). However, some funders value evaluation approaches that can demon-

SOURCE: Michalchik, 2013.

strate the long-term impact of science communication. For example, NSF requires that funding proposals for its AISL program include not only measures of learning outcomes for the target participants, but also the intended long-term impacts on science, technology, engineering, and mathematics (STEM) learning.

A logic model should be dynamic. Any element—inputs, activities, outputs, or outcomes—can change as the project develops. The changes should be reflected in the model. Logic models should also be based on careful alignment between the activities (the nature of the experience for participants), the outputs, and the expected outcomes. For example, if a project promotes playful exploration of materials and substances, the project should not be expected to lead to skill in arguing based on evidence (see Bonney et al., 2011, p. 48, for further examples).
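For organizers who keep planning notes electronically, the alignment check described above can be sketched in a few lines of Python. This is a minimal illustration under stated assumptions, not a tool from the source: the `Outcome` and `LogicModel` classes, their field names, and the example entries are all hypothetical. The check simply flags any outcome that no listed activity is expected to produce, such as expecting skill in evidence-based argument from playful exploration.

```python
from __future__ import annotations

from dataclasses import dataclass, field

# Hypothetical labels for the short-term, midterm, and long-term horizons
HORIZONS = {"short", "mid", "long"}


@dataclass
class Outcome:
    description: str
    horizon: str  # one of HORIZONS
    # Names of activities in the model expected to produce this outcome
    supported_by: list[str] = field(default_factory=list)

    def __post_init__(self) -> None:
        if self.horizon not in HORIZONS:
            raise ValueError(f"unknown horizon: {self.horizon!r}")


@dataclass
class LogicModel:
    inputs: list[str]
    activities: list[str]
    outputs: list[str]
    outcomes: list[Outcome]

    def unsupported_outcomes(self) -> list[str]:
        """Return outcomes not linked to any activity listed in the model."""
        planned = set(self.activities)
        return [
            o.description
            for o in self.outcomes
            if not any(name in planned for name in o.supported_by)
        ]


# Hypothetical model for a hands-on exploration station
model = LogicModel(
    inputs=["volunteer chemists", "materials budget"],
    activities=["playful exploration of materials"],
    outputs=["number of visitors who try the station"],
    outcomes=[
        Outcome("interest in materials chemistry", "short",
                ["playful exploration of materials"]),
        Outcome("skill in arguing from evidence", "mid"),
    ],
)

print(model.unsupported_outcomes())  # ['skill in arguing from evidence']
```

Because a logic model should change as the project develops, the same check can be rerun each time an activity or outcome is added or revised.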

Frameworks to Guide Outcome Development

Outcomes should be realistic, achievable, and measurable. Two evaluation frameworks developed through research and practice in informal science learning (Friedman, 2008; NRC, 2009) provide guidance in developing outcomes that meet these criteria. Each framework provides an organized way of thinking about the desired outcomes of an experience.

As noted previously, the 2009 National Research Council (NRC) report Learning Science in Informal Environments details the first framework: six interrelated strands of science learning that can “serve as a conceptual tool” for both designing and evaluating informal learning experiences. The strands encompass learning processes and outcomes and can be used for any type of evaluation: front end, formative, or summative. The report presented the set of strands as an ideal that informal learners might achieve through lifelong participation in multiple events. Most chemistry communication events cannot advance all of the strands. Communication events vary in duration and participation. Participants vary in their knowledge and interests. These variations should be considered when selecting and evaluating desired outcomes. For example, a short-term activity, such as a talk on water chemistry in a community affected by a chemical spill, might focus on engaging town citizens in the topic (Strand 1) and fostering a sense of identity as people who use and affect science (Strand 6). Its evaluation should therefore focus on Strands 1 and 6. A longer-term program, such as a selective summer chemistry camp for high school students, might focus on achieving gains in all six strands.

The second framework, the Framework for Evaluating Impacts of Informal Science Education Projects (Friedman, 2008), was designed to enable more systematic reporting of the impacts of NSF-funded informal science learning projects, as indicated by the summative evaluations of individual projects. NSF made use of this framework a requirement for all proposals. The Friedman framework is well known to professional evaluators and has been used for 7 years to gather and analyze data in NSF’s informal science education Online Project Monitoring System (Allen and Bonney, 2011). Designed for use in summative evaluation, the framework organizes the outcomes of informal science learning into categories that roughly parallel the six strands of science learning in the NRC’s 2009 report (as shown in Table 5-1).

TABLE 5-1 Participant Outcomes as Goals of Chemistry Communication Experiences

| Six Strands of Science Learning (NRC, 2009) | Framework for Evaluating Impacts of Informal Science Education Projects (Friedman, 2008) |
|---|---|
| Strand 1: Develop interest in science, including a positive attitude toward or excitement about science and the predisposition to reengage in science and science learning. | |
| Strand 2: Understand science. | |
| Strand 3: Engage in scientific reasoning. This includes developing scientific skills such as asking questions, exploring, and experimenting. | |
| Strand 4: Reflect on science. This involves understanding how scientific knowledge is constructed and how the learner constructs it. It is critical for an informed citizenry. | |
| Strand 5: Engage in scientific practices. This includes participating in scientific activities and learning practices with others, using scientific language and tools. | |
| Strand 6: Think about themselves as science learners and develop identities as people who know about, use, and sometimes contribute to science. | |

NOTE: STEM = science, technology, engineering, and mathematics.

A comparison of NRC (2009) and Friedman (2008) suggests that integrating the two frameworks would make it easier to compare, test, and aggregate the outcomes of different informal learning experiences (Allen and Bonney, 2011). However, integration presents several challenges. First, the breadth of each set of categories makes aggregation challenging. Second, the Friedman framework meets NSF’s need to assess the impact of its AISL program on society as a whole, but the NRC framework places emphasis on the learning process within individuals. Third, each framework includes outcomes that are not clearly distinguished from other, closely related outcomes. Nevertheless, the elements of both frameworks can aid chemists who are considering the desired outcomes of an event.

Evaluation Questions, Indicators, and Measures

Evaluation is driven by questions (aimed at the event planners) that focus on the intended outcomes. The evaluation questions should establish both what is and what is not to be evaluated and can be used as a tool for structuring the evaluation as a whole. Each evaluation question depends on project goals, the purposes of the evaluation, the features of the project to be evaluated, and stakeholder interests. For example, if the desired outcome is an enhanced interest in chemistry, then measurement of chemistry content knowledge following the event would not be relevant to the goal (though it might be a familiar area to assess for individuals who work in a formal education environment).

To be useful, evaluation questions must be answerable. For instance, if it is not possible to contact the participants of a broadcast media presentation on chemistry, then the evaluation questions should not address participants’ behavior, attitudes, or knowledge. Evaluation questions should be developed for different stages of the project and should reflect different ways that evaluation can inform each stage. A list of example evaluation questions that could be adapted to a range of specific projects is provided in Box 5-3. The evaluation questions serve multiple purposes: assisting in targeting the important outcomes of a project and helping determine if the project’s design and implementation are effective.

After establishing project goals, outcomes, and clearly articulated evaluation questions, the next task is to develop indicators. Indicators are measured when performing the evaluation. They provide evidence related to the targeted outcomes for participants. Indicators should directly align with the outcomes and should be clear and measurable—in the same way that a good evaluation question must be answerable. For instance, if the project intends to increase participants’ content knowledge, the indicator should focus on knowledge gains rather than interest or engagement (Bonney et al., 2011). In addition to formulating indicators, evaluators identify measurement tactics to gather data related to the indicators. For example, if the intended outcome of a public chemistry demonstration is to increase participants’ interest and engagement in chemistry, measurement might include any or all of the following:

- counting the number of participants;2

- recording the length of time participants stay;

- observing participants’ facial expressions and degree of attentiveness;

- logging the types of questions participants ask of the presenter;

- collecting participants’ descriptions of why they attended, what they liked, what they learned that was new, and what they might do next or differently based on their experience; and

- identifying any unexpected activities.3

Many indicators will be quantifiable, and others will be qualitative, providing insight into the value, meaning, or import of a participant’s communication experience.

___________________

2 Care should be taken with this and other indicators that could result in overestimation of interest in chemistry. For example, individuals attending an activity may not have a specific interest in the chemistry being presented but rather in the overarching topic.

3 There is value in watching for emergent behaviors that may not have been anticipated when planning the activity and goals. For instance, if the goal was that participants would imagine a new product that is based on the chemistry they are experiencing, but they also debate the societal value of such a product during the activity, then this ought to be noted in the evaluation.
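Several of the quantifiable indicators listed above can be tallied with a few lines of code once observations are recorded. The sketch below is illustrative only: the record fields (`minutes_present`, `question_type`) and the sample data are hypothetical. It uses the median for time spent, on the assumption that a few very long visits could distort an average. As footnote 2 cautions, a raw count of participants may overstate interest in chemistry itself.

```python
from collections import Counter
from statistics import median

# Hypothetical observation log: one record per participant observed at a
# public chemistry demonstration. question_type is None if no question was asked.
observations = [
    {"minutes_present": 12, "question_type": "how it works"},
    {"minutes_present": 3, "question_type": None},
    {"minutes_present": 8, "question_type": "safety"},
    {"minutes_present": 15, "question_type": "how it works"},
]


def summarize(records):
    """Summarize head count, time spent, and types of questions asked."""
    return {
        "participants": len(records),
        "median_minutes": median(r["minutes_present"] for r in records),
        "question_types": Counter(
            r["question_type"] for r in records if r["question_type"]
        ),
    }


summary = summarize(observations)
print(summary["participants"], summary["median_minutes"])  # 4 10.0
print(summary["question_types"].most_common())
```

Qualitative indicators, such as participants' descriptions of why they attended, still need to be read and interpreted; a tally like this only complements them.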

METHODOLOGY: DESIGNING AND CONDUCTING THE EVALUATION

Balancing Evaluation Approaches

Organizers of communication activities and professional evaluators have successfully used a diverse range of indicators and measurement methods to examine outputs and outcomes in informal science settings. Using a balance of evaluation approaches supports a primary goal of evaluation: to improve the effectiveness of an experience. Summative evaluations of outcomes are undoubtedly important, but they should not overshadow front-end and formative evaluations. On the continuum between front-end evaluation (to become familiar with participants’ knowledge, interests, and attitudes) and summative evaluation (to measure outcomes) are myriad opportunities for formative evaluation to reconsider, adjust, and fine-tune a communication effort. Premature attempts to assess a project’s summative outcomes can be meaningless or, worse, can cut short the opportunity to use formative evaluation to continue or improve a promising event.

Evaluation Design

Evaluation design is the manner in which an evaluation is structured to collect data to answer the questions about a communication event’s intended outcomes. A range of evaluation designs can be employed, including pre-post designs, which compare participant outcomes before and after the experience; designs that use control groups to provide more causal evidence; and designs that use mixed methods. For example, a pre-post design for a public chemistry demonstration might query the participants before and after the experience to objectively ascertain whether and how the experience influenced attitudes about chemistry. The most appropriate evaluation design for a communication event is project dependent. Evaluation design is shaped by the questions about outcomes, available resources, stakeholder expectations, and the appropriateness of the evaluation to the communication event.
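A minimal sketch of how paired pre-post responses might be summarized, assuming each participant rated interest in chemistry on a hypothetical 1-to-5 Likert item before and after a demonstration, and that the two responses can be matched by participant. Because Likert ratings are ordinal, the sketch reports the median change and the share of participants whose rating rose or fell alongside the mean. It describes change only; without a comparison group it cannot show that the experience caused the change.

```python
from statistics import mean, median


def pre_post_summary(pre, post):
    """Summarize paired before/after ratings, one pair per participant."""
    if len(pre) != len(post) or not pre:
        raise ValueError("need non-empty, equal-length lists paired by participant")
    changes = [after - before for before, after in zip(pre, post)]
    n = len(changes)
    return {
        "n": n,
        "mean_change": mean(changes),
        "median_change": median(changes),
        "share_increased": sum(c > 0 for c in changes) / n,
        "share_decreased": sum(c < 0 for c in changes) / n,
    }


# Hypothetical 1-5 interest ratings from five participants, listed in the same order
pre = [2, 3, 3, 4, 2]
post = [4, 3, 4, 4, 3]

result = pre_post_summary(pre, post)
print(result["median_change"], result["share_increased"])  # 1 0.6
```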

Evaluation Considerations for Informal Environments

Evaluation in informal environments is feasible, but it often requires different techniques than those used in formal settings.

One factor that differs between the two settings is access to the participants. Participants in informal environments (other than school groups) attend the activity by choice and cannot be required to take a survey or be tested as they could in a classroom setting. For example, participants in a chemistry demonstration at a shopping mall may not want to devote time to being interviewed or responding to a questionnaire after the demonstration. Attendees at a forum might not wish to remain after the program to provide feedback. Informal event evaluators often offer an incentive, such as a discount coupon, to increase participation in a follow-up interview.

Broadcast or Web-based media can facilitate summative evaluation in informal environments. For example, flyers with a web link to evaluation questions can be given to participants, to encourage them to provide feedback. Web-based evaluation has logistical issues to address, such as keeping web links up to date and encouraging a response. Overall, summative evaluation of participants in informal science learning events requires planning, persistence, and sometimes luck (NRC, 2009).

Another factor to consider is the indeterminate nature of how participants might benefit from a communication event they attend voluntarily. People engage in informal science experiences for many reasons: they were brought by friends, attracted by an intriguing tagline, or looking for new knowledge, or they stumbled into it by accident. Similarly, people draw value from informal learning experiences in different ways. In addition, life experiences, societal contexts, and cultural values all influence a participant’s retrospective assessment of the experience. Defining a single learning-related outcome that applies to all participants is therefore difficult (Allen and Bonney, 2012; Friedman, 2008; NRC, 2009). For this reason, evaluations might include open-ended questions to seek unanticipated outcomes. For example, the evaluation of a calculus exhibit at the Science Museum of Minnesota revealed that the exhibit evoked powerful memories of mathematics from visitors’ school days (Gyllenhaal, 2006). Although the exhibit designers had not established “evoking memories” as an intended outcome, the evaluators’ use of loosely structured interviews and their flexible approach to analyzing the results allowed them to uncover and document this unexpected outcome.
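Open-ended responses are read and coded by people; once codes have been assigned, separating anticipated themes from unanticipated ones is a simple tally. In this hypothetical sketch, the anticipated themes and the coded responses are invented for illustration (one code loosely mirrors the calculus exhibit example), and each theme is counted at most once per respondent.

```python
from collections import Counter

# Themes the organizers anticipated when setting goals (hypothetical)
ANTICIPATED = {"interest", "new knowledge", "enjoyment"}

# Codes a human reviewer assigned to each participant's open-ended answer (hypothetical)
coded_responses = [
    ["interest", "enjoyment"],
    ["memories of school"],
    ["new knowledge", "memories of school", "memories of school"],
    ["interest"],
]


def theme_counts(coded, anticipated):
    """Count respondents mentioning each theme and separate out unanticipated ones."""
    # set(codes) ensures a theme is counted once per respondent
    counts = Counter(code for codes in coded for code in set(codes))
    unexpected = {code: n for code, n in counts.items() if code not in anticipated}
    return counts, unexpected


counts, unexpected = theme_counts(coded_responses, ANTICIPATED)
print(unexpected)  # {'memories of school': 2}
```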

In informal environments, it might be difficult to employ uniform evaluation approaches, such as standardized assessments, without sacrificing a participant’s freedom of choice. It might be difficult to identify individual, as opposed to shared, outcomes. It is often impractical to use an experimental design in which participants are compared with a control group of similar individuals who did not participate. This makes it difficult for summative evaluations to conclusively attribute specific outcomes to specific communication experiences, but less intrusive methods can still provide useful information. In addition, the relatively new field of informal science evaluation is exploring promising methods to address these difficulties and strengthen future evaluations (Bonney et al., 2011).

DATA COLLECTION

Data collection methods should be determined after developing the targeted outcomes, evaluation questions, indicators, and evaluation design. When planning how to collect data for each indicator, consider the following questions (Bonney et al., 2011, p. 53):

- Who are the intended participants, and what specific information do you hope to get from them?

- What method of data collection is best suited to obtain the needed information from these participants?

- When will the information be collected, and by whom?
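One way to keep the answers to these three questions together is a small planning record for each indicator, with a check for entries left blank. This is a hypothetical structure, not a template from Bonney et al. (2011); the class name, field names, and example entries are assumptions for illustration.

```python
from dataclasses import dataclass, fields


@dataclass
class CollectionPlan:
    indicator: str
    participants: str  # who, and what information is sought from them
    method: str        # how the information will be collected
    timing: str        # when it will be collected
    collector: str     # who will collect it


def incomplete(plans):
    """Return (indicator, [blank fields]) for every plan with missing entries."""
    gaps = []
    for plan in plans:
        missing = [f.name for f in fields(plan) if not getattr(plan, f.name).strip()]
        if missing:
            gaps.append((plan.indicator, missing))
    return gaps


# Hypothetical plans for a public demonstration; the first has no collector yet
plans = [
    CollectionPlan("time spent at demonstration", "all visitors who stop",
                   "timed observation", "during the event", ""),
    CollectionPlan("reasons for attending", "adult visitors",
                   "two-question exit survey", "as visitors leave", "volunteer chemist"),
]

print(incomplete(plans))  # [('time spent at demonstration', ['collector'])]
```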

Based on the consideration of such questions, professional evaluators and researchers have successfully used various assessment methods to measure each of the six informal science learning outcomes identified by the NRC (2009). Interest and engagement in science (Strand 1) are often measured through self-reporting in interviews and questionnaires. For example, evaluators for the Fusion Science Theater in Madison, Wisconsin, gave participants a questionnaire before and after the performance, asking them to use Likert scales to indicate their interest in science and their confidence in their ability to learn science. The confidence ratings revealed that attending the performance had a large positive impact on the overall outcomes.

Understanding of science knowledge (Strand 2) has been measured through analysis of participant conversations, think-aloud protocols (for example, having a visitor talk into a microphone while touring an exhibition), and postexperience measures, including self-reporting questionnaires, interviews, and focus groups.4 Additional methods include asking participants to demonstrate their learning by producing an artifact, such as a concept map or drawing, and conducting email or phone interviews weeks or even years after the event.

Engaging in scientific reasoning (Strand 3) is typically measured as a learning process (i.e., how people learn) rather than as a content-driven process (i.e., what people learn). Researchers and evaluators have measured the learning process using audio and video recordings of participants, which are analyzed for evidence of skills, along with self-reports of skills. Reflecting on science (Strand 4) involves understanding how scientific knowledge is constructed and how the learner constructs it. Although such understanding is critical for an informed citizenry, a potential goal of chemistry communication, it is difficult to measure.
Nevertheless, evaluators for DragonflyTV, a PBS program for children,5 found an approach to evaluate whether watching the show changed children’s appreciation for and understanding of scientific inquiry. They provided an opportunity before and after viewing an episode for children to rank the importance of aspects of inquiry (such as the importance of keeping some variables constant across trials and of recording results); the results showed notable gains after children viewed the show.
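Ranking data of the kind collected for DragonflyTV can be summarized by comparing each aspect's mean rank before and after viewing. A minimal sketch, assuming each child ranks the same set of aspects with 1 as most important; the aspect labels beyond the two named above, and all of the rankings, are hypothetical. A lower mean rank after viewing means an aspect was judged more important.

```python
from statistics import mean


def mean_ranks(before, after):
    """Map each aspect to (mean rank before, mean rank after); 1 = most important."""
    aspects = before[0].keys()
    return {
        a: (mean(r[a] for r in before), mean(r[a] for r in after))
        for a in aspects
    }


# Hypothetical rankings from two children; "getting the answer quickly" is an invented distractor
before = [
    {"keeping variables constant": 3, "recording results": 2, "getting the answer quickly": 1},
    {"keeping variables constant": 2, "recording results": 3, "getting the answer quickly": 1},
]
after = [
    {"keeping variables constant": 1, "recording results": 2, "getting the answer quickly": 3},
    {"keeping variables constant": 1, "recording results": 3, "getting the answer quickly": 2},
]

for aspect, (pre, post) in mean_ranks(before, after).items():
    print(f"{aspect}: {pre} -> {post}")
```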

As these examples illustrate, the participants in the communication experience are the primary source of data for most evaluations. The assessment instruments used to collect data should be appropriate to the participants in terms of language, culture, age, background, and other potential barriers to communication. Direct involvement of human subjects in data collection may require clearance in advance from an institutional review board.

___________________

4 Note that pre- and posttests of science skills might result in feelings of failure and discourage future participation in science activities.

5 See http://pbskids.org/dragonflytv [accessed February 2016] for details.

Evaluation data related to the indicators is collected through a variety of traditional methods, such as observations, interviews, questionnaires, and even tests. Other forms of data can be collected as well, including artifacts that participants create during their experience (e.g., drawings, constructions, photos, and videos), specialized tools such as concept maps, and behaviors, such as how people move through a museum exhibit, how long they spend engaging in an activity, or behaviors tracked using web analytics. The data should be collected in ways appropriate to the participants and the setting. As an example, with some participants, creative variations, such as having participants place sticky notes on a wall or drop balls in a bucket to represent their opinions, might generate better results than a standard survey. Appendix A provides sample questionnaires and sources for other evaluation instruments.

Aligning Data Collection with Outcomes

A logic model (see Figure 5-1) helps define the measurement and data collection strategies that are most likely to provide information related to the outcomes. Table 5-2 illustrates this alignment, presenting a range of chemistry communication events organized by outcome, scale of effort, and types of setting and activity. The table suggests measurement and data collection strategies that might provide evidence of whether, and to what extent, participants achieved the intended outcomes, and hence whether the project succeeded. Sometimes the measurement approach focuses on a countable number (e.g., people attending a presentation, questions answered correctly on an assessment), whereas sometimes it requires interpretation (e.g., facial expressions while watching a performance, comments about why a website was attractive and valuable). Note that rates of participation are generally indicative of how attractive an experience is to participants, and that one can infer levels of interest from participants “voting with their feet”—choosing to engage or not.
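Participation rates of this kind can be computed directly from simple tallies. A minimal sketch, assuming a volunteer counts, for each station, how many people passed within view and how many of them stopped to engage; the station names and counts are hypothetical. As with head counts, a high rate shows that an experience attracted people, not necessarily that it raised their interest in chemistry.

```python
def attraction_rates(tallies):
    """tallies maps station -> (number who stopped, number who passed within view).

    Returns the fraction of passersby who chose to engage at each station.
    Stations with no recorded passersby are skipped to avoid dividing by zero.
    """
    return {
        station: stopped / passed
        for station, (stopped, passed) in tallies.items()
        if passed > 0
    }


# Hypothetical tallies from one afternoon
tallies = {
    "color-change demonstration": (45, 60),
    "periodic table poster": (6, 60),
    "closed station": (0, 0),
}

print(attraction_rates(tallies))
# {'color-change demonstration': 0.75, 'periodic table poster': 0.1}
```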

In evaluations, data collection instruments should be designed to ensure their validity (do they measure what they purport to measure?) and reliability (are the measurements stable and consistent?). Although most chemists who are evaluating communication efforts will not have the resources or the need to rigorously validate their instruments, it is important to be aware that data quality and conclusions are directly related to instrument quality. At a minimum, instruments should be tailored to the target outcomes of the event and pilot-tested with friends or family to ensure that they are measuring what they are intended to measure.
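For a questionnaire that combines several items meant to measure the same thing (for example, several Likert items on interest in chemistry), one widely used indicator of internal consistency, a single aspect of reliability, is Cronbach's alpha: alpha = k/(k - 1) × (1 - sum of item variances / variance of total scores), where k is the number of items. It is offered here as an illustration rather than a method the chapter prescribes. The sketch assumes complete numeric responses; with the small samples typical of pilot tests, the estimate is rough.

```python
from statistics import pvariance


def cronbach_alpha(responses):
    """Cronbach's alpha for a list of respondents, each a list of k item scores.

    alpha = k / (k - 1) * (1 - sum of item variances / variance of total scores)
    Population variances are used throughout; the n factors cancel in the ratio.
    """
    k = len(responses[0])
    if k < 2:
        raise ValueError("alpha needs at least two items")
    if any(len(r) != k for r in responses):
        raise ValueError("every respondent must answer every item")
    item_variance = sum(pvariance(item) for item in zip(*responses))
    total_variance = pvariance([sum(r) for r in responses])
    if total_variance == 0:
        raise ValueError("total scores do not vary; alpha is undefined")
    return k / (k - 1) * (1 - item_variance / total_variance)


# Hypothetical pilot data: three respondents, two interest items on a 1-5 scale
pilot = [[2, 3], [4, 5], [3, 3]]
print(round(cronbach_alpha(pilot), 3))  # 0.923
```

Values closer to 1 indicate items that vary together across respondents; a low value suggests the items may not be measuring a single construct and are worth revisiting before the event.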

Professional evaluations also take steps to minimize sources of bias in the data. Individual chemists evaluating one-time communication activities will probably not have the resources to control for bias to the same degree as professional evaluators. Moreover, for many communication activities or programs, some biases might not pose problems to the extent they do in

TABLE 5-2 Possible Measurement Approaches for Different Outcomes and Types of Communication Activities

| Activity type / Intended outcomes for participants | Engagement, interest, enjoyment (Strand 1) | Understanding of content (Strand 2) | Intentions toward future involvement or activity (Strand 6) | Impact on behavior and attitudes (Strands 1 and 6) |
|---|---|---|---|---|
| Individual or small events | | | | |
| Public presentations, demonstrations, and drop-in events | Length of time present, level of attentiveness, facial expression, questioning and other forms of participation, responses in brief surveys or interviews regarding why they participated, what they liked, etc. | Questions asked, verbal responses or hands raised in response to questions, responses on brief surveys regarding what they learned | Information seeking (e.g., taking brochures, filling out interest cards, liking a Facebook page), verbal responses, responses on brief surveys regarding what they might do differently | Follow-on interviews or surveys regarding discussions with others (e.g., dinner conversations), posts (e.g., Twitter), information seeking, signing up for programs |
| Websites, videos, broadcasts, and other media-based resources | Data analytics, posts and comments, responses on linked surveys or online forums regarding why they participated, what they liked, etc. | Data analytics, posts and comments, responses on linked surveys regarding what they learned | Participant information seeking, registering on sites, liking pages or posts, reposting online, responses on brief surveys regarding what they might do differently | Follow-on interviews or surveys regarding postexperience activities, reposts (e.g., Twitter), information seeking, registering for sites |
| Involvement with an after-school program, museum-based program, or ongoing public forum | Participant level of involvement (e.g., choosing “chem club” over “outdoor time” in an after-school setting), active participation in questioning and other scientific practices, responses in brief surveys or interviews regarding why they participate, what they like, etc. | Observations of participants engaging in questioning and other scientific practices with inquiry orientation, verbal responses to questions, responses on brief surveys at end of program regarding what they learned | Information seeking, verbal descriptions of plans or ambitions, responses on brief surveys regarding what they might do differently based on participation | Follow-on interviews or surveys regarding behaviors and attitudes specific to the communication goals, appropriate attitudinal assessments from ATIS or other sources |
| Broader, systematic communication efforts | | | | |
| Public programming and performances | Responses in brief surveys or interviews regarding why they participated, what they liked, etc., appropriate science interest assessments from ATIS | Content knowledge assessments such as the one used for The Amazing Nano Brothers Juggling Show | Participant information seeking, verbal descriptions of plans or ambitions, responses on brief surveys regarding what they might do differently based on participation | Follow-on interviews or surveys regarding behaviors and attitudes specific to the communication goals, appropriate attitudinal assessments from ATIS or other sources |
| Ongoing programming in after-school programs, museums, or public settings | Responses in surveys or interviews regarding why they participated, what they liked, etc., appropriate science interest assessments from ATIS | Content knowledge assessments carefully aligned with the experiences and objectives of the programming | Responses in surveys or interviews regarding choice of activities, courses, or careers, appropriate science attitudinal assessments from ATIS or other sources | Follow-on interviews or surveys regarding behaviors and attitudes specific to the communication goals, appropriate attitudinal and behavioral assessments from ATIS or other sources |

NOTE: ATIS, Assessment Tools in Informal Science.

SOURCE: Michalchik, 2013.

larger-scale or professional evaluations. However, it is important to be aware of the ways that bias might be introduced, because bias can affect the results of the evaluation and any adjustments made in response to those results. Some forms of bias that are relevant to chemists and organizations conducting communication events include the following:

- The social desirability factor. Participants, like all people, tend to tell a friendly interlocutor what the interlocutor wants to hear. Questions can be worded to avoid leading participants toward what they perceive as the “correct” answer.

- Asking questions that only some participants can answer, depending on age, social circumstances, or other factors. For example, a younger child with little experience talking to strangers may say nothing about an entertaining presentation, yet her silence should not be construed as a lack of interest or appreciation relative to an exuberant sibling who carries on about how much fun she had.

- Sampling bias that is introduced when the only participants interviewed are ones who volunteer or show enthusiasm for the experience. Those who opt not to participate might have considerably different views of the experience. In a related vein, a low response rate to a questionnaire sent after an activity might indicate bias in who chose to respond (e.g., people who loved the experience or those who disliked it so much that they wanted to explain why).

In professional evaluations, data analysis begins with a focus on each individual data source (e.g., tabulating responses of parent surveys [one data source] and, separately, coding behaviors in field notes of observations of children [another data source]). Then, the evaluators synthesize information across data sources to answer each evaluation question. Decisions about how to analyze and synthesize data are complex; the bases for interpretation may be as well. A strong evaluation, however, justifies these decisions and makes the reasons for particular representations transparent, linking the art of evaluation to its science.

Evaluations of most chemistry communication events, particularly single activities conducted by individual chemists, are likely to be more straightforward. Most will have only one source of data (e.g., an opinion survey, interviews, or observations) and relatively small numbers of responses to tabulate.

Regardless of its complexity, the analysis process clarifies the evaluation’s limitations and often reveals unexpected or serendipitous findings. The analysis phase is also the time to address the implications of the results, deriving helpful recommendations for those involved and, in some cases, for others engaged in similar efforts.

REPORTING AND USING THE EVALUATION

Writing up the evaluation is critical to ensure that it meets its intended purpose: to improve the effectiveness of the chemistry communication experience. Because evaluation findings inform recommendations and decisions rather than dictate them, the findings must be interpreted and reported in ways that are helpful to the project designers, the funders who require evaluation, or other stakeholders. For example, a chemist engaged in a one-time communication activity may conduct a front-end evaluation to learn more about the intended participants’ interests and concerns. The information gathered should be synthesized and interpreted in a written report to guide the further design of the activity. Similarly, a chemist involved in an ongoing communication program may conduct a formative evaluation, and these findings should be summarized in writing to inform improvements in the program.

EXAMPLES OF CHEMISTRY COMMUNICATION EVALUATION

The communication efforts described in the following two cases illustrate how different the challenges of evaluating chemistry communication can be. Anyone new to evaluation of chemistry communication initiatives is encouraged to study examples available at informalscience.org and elsewhere. Appendix A suggests sources for additional information.

The Periodic Table of Videos

Starting with an initial 5-week project to create a short video on each of the 118 elements, the Periodic Table of Videos (PTOV) burgeoned into a highly popular YouTube channel (http://www.youtube.com/periodicvideos) hosting hundreds of professionally produced videos. The postings feature working chemists in their academic settings who share candid insights into their intellectual pursuits and professional lives. The creators, Brady Haran and Martyn Poliakoff, a journalist and a chemist, respectively, at the University of Nottingham, were convinced by the raw number of hits, the positive feedback, and other indicators that their work was worthwhile. Nevertheless, they wondered publicly “how to measure the impact of chemistry on the small screen” (2011). A platform like YouTube comes with a built-in set of web analytics regarding viewership, but it also presents a distinct set of challenges for examining impact.

Haran and Poliakoff described their uncertainty regarding the quantitative analytics they derived from YouTube. While they recognized that the sheer number of views is meaningful (“a video with 425,000 views is clearly more popular than one with 7,000 views” [2011, p. 181]), they noted such issues as the following:

- One hundred views could represent one person watching the video repeatedly from different computers or mobile devices, whereas one view could represent a teacher showing it once to hundreds of students.

- Age and gender profiles rely on data that viewers provide, perhaps inaccurately.

- The meaning of geographical data, based on IP addresses, is unclear; for example, how should researchers interpret a temporary surge in viewing in a single country?

- Subscriber numbers indicate participants with a deeper level of interest, and comparisons with the subscriber base of another science channel, or a football club, provide a relative sense of PTOV’s popularity, but what does it mean when people unsubscribe?

- How much do YouTube promotions of featured videos skew the data?

- Do high levels of “likes” mean that PTOV is “converting” viewers or merely “preaching to the choir”? Although few in number, what do “dislikes” mean?

The comments posted by viewers watching the videos revealed some of the nuances of their experiences and gave Haran and Poliakoff “more useful information about impact” (2011, p. 181). The authors attempted to interpret the comments quantitatively, asking whether an increase in the number of comments was a result of the PTOV itself or a reflection of a general trend. They also tried to create an index of the impact of videos based on the number of comments generated, which proved impractical. The authors additionally used a “word cloud” to analyze the comments, but concluded that this approach merely confirmed the obvious: viewers enjoyed the programs.

Haran and Poliakoff ultimately found it most “reliable” to read and interpret viewers’ comments qualitatively. They identified and examined online interactions between viewers, a number of which occurred without participation from the program’s producers. They also studied the email messages and letters sent to them that described how the PTOV affected the viewer, categorizing them into two primary groups:

- adults who were enjoying science for the first time, despite bad experiences with the subject as students in school, and

- high school students finding their interest and aptitude in science “awakened” by experiencing an approach different from studying chemistry texts and solving problems.

They concluded that, absent a proper market survey, “these comments are probably the most accurate indicators of impact and they are certainly the most rewarding to all of those involved in making the videos” (Haran and Poliakoff, 2011, p. 182). They also concluded that, despite the difficulties of measuring impact, there is a large market for well-presented chemistry and that room remains in cyberspace for high-quality science communication.

The Amazing Nano Brothers Juggling Show

The Amazing Nano Brothers Juggling Show is a live theater performance that dramatizes the nanoscale world (atoms, molecules, nanoscale forces, and scanning probe microscopy) using juggling and storytelling in an entertaining manner. The Strategic Projects Group at the Museum of Science in Boston created the show and offers it as a live performance as well as in a DVD set on nanotechnology. The Goodman Research Group conducted an evaluation to examine the effectiveness of the show in increasing participant knowledge of and interest in nanoscale science, engineering, and technology (Schechter et al., 2010). The museum had previously collected comment cards from the participants that showed they enjoyed the program. The Goodman researchers focused on what the participants learned and how much they engaged with the content.

The evaluation analyzed data collected in spring 2010 from three different groups. The evaluators surveyed 131 children (ages 6 to 12) either before or after one of several performances using an age-appropriate survey. They also surveyed 223 teens and adults either before or after a show and interviewed 10 middle school teachers who saw it on a field trip with their classes. The surveys took about 5 minutes to complete, and participants were given a rub-on tattoo in appreciation. Up to half of the families attending each show participated. On the days that the surveys were administered, printed performance programs, which reinforce show content, were not handed out. Example survey items are shown in Box 5-4.

The preshow survey involved approaching individual attendees and asking them to participate in a brief survey about the show. Those who agreed were given forms and pencils, which were collected just before the show started. At other shows, a cast member announced an invitation to participate in a postshow survey during the show’s finale. The surveys were handed out to attendees at their seats and then collected at the theater exits.

Surveys received from attendees outside the target age groups were excluded. Also, because few teens attended the performances and took the survey, only data from participants over 18 years of age were included in the analysis; the teacher interviews provided the only data regarding the 13-year-old middle schoolers who attended. The teacher interviews were conducted as follow-up discussions on a date after the performance. They lasted 15 to 20 minutes and included questions about the teachers’ perceptions of their students’ knowledge and attitudes before, during, and after the performance. For example, teachers were asked: “Could you please tell me what would be the ‘tagline’ for your class’s experience at the show?” and “Was the show especially good at getting across any particular concepts or insights that students had not been exposed to or that they had had difficulty grasping before seeing the show?”

On average, children’s scores increased by 18 percent from pre- to postperformance, with significant increases in knowledge for half the content items in the survey. Adults’ scores also increased notably from pre- to postshow survey, with significant increases in five of the content items. The Goodman researchers also found that the show was both captivating and informative for participants of all ages. Adults found the show highly educational, and teachers found that it reinforced classroom lessons and correlated well with science standards. Sections of the show combined theatrical techniques that engrossed the participants and heightened their learning potential, and the juggling was successful for teaching children, teens, and adults about the structure, movement, and manipulation of atoms. For the teens and adults already familiar with basic nanoscience concepts, the performance deepened their understanding.

SUMMARY

Evaluation can seem intimidating at first. Chemists conducting communication events should draw from this chapter as much or as little as necessary to assist in evaluating their projects. Simple evaluation techniques may be appropriate for a small-scale communication activity, but it may be preferable to collaborate with a professional evaluator or knowledgeable colleague when evaluating larger-scale, extended events. The primary purpose of evaluation is to gather and analyze participant data that will help the events (both the current event and future iterations) achieve their intended outcomes. Because evaluation is evidence based, carrying out at least some evaluation is more likely to lead to effective communication than not employing evaluation at all.

REFERENCES

Allen, S., and R. Bonney. 2012. The NRC and NSF Frameworks for Characterizing Learning in Informal Settings: Comparisons and Possibilities for Integration. Paper prepared for the NRC Summit on Assessment of Informal and After School Science Learning. Available at http://sites.nationalacademies.org/DBASSE/BOSE/DBASSE_080109 [accessed August 2014].

Bonney, R., K. Ellenbogen, L. Goodyear, R. Hellenga, J. Luke, M. Marcussen, S. Palmquist, T. Phillips, L. Russel, S. Traill, and S. Yalowitz. 2011. Principal investigator’s guide: Managing evaluation in informal STEM education projects. Washington, DC: Center for the Advancement of Informal Science Education and Association of Science-Technology Centers. Available at http://informalscience.org/evaluation/evaluation-resources/pi-guide [accessed August 2014].

Friedman, A., ed. 2008. Framework for evaluating impacts of informal science education. March 12. Washington, DC: National Science Foundation. Available at http://informalscience.org/documents/Eval_Framework.pdf [accessed August 2014].

Gyllenhaal, E.D. 2006. Memories of math: Visitors’ experiences in an exhibit about calculus. Curator 49(3):345-364. Available at http://onlinelibrary.wiley.com/doi/10.1111/j.2151-6952.2006.tb00228.x/pdf [accessed August 2014].

Haran, B., and M. Poliakoff. 2011. How to measure the impact of chemistry on the small screen. Nature Chemistry 3:180-183.

Michalchik, V. 2013. Communicating Chemistry in Informal Environments: Evaluating Chemistry Outreach Experiences. Paper prepared for the NRC Committee on Communicating Chemistry in Informal Environments.

NRC (National Research Council). 2009. Learning science in informal environments: People, places, and pursuits, edited by P. Bell, B. Lewenstein, A.W. Shouse, and M.A. Feder. Washington, DC: The National Academies Press.

NRC. 2010. Surrounded by science: Learning science in informal environments. Washington, DC: The National Academies Press.

NSF (National Science Foundation). 2014. Advancing informal STEM learning, program solicitation. Available at http://www.nsf.gov/pubs/2014/nsf14555/nsf14555.pdf [accessed September 2014].

Schechter, R., M. Priedeman, and I. Goodman. 2010. The Amazing Nano Brothers Juggling Show: Outcomes evaluation. Available at http://www.grginc.com/documents/GRGMOSReportFINAL10-15.pdf [accessed August 2014].

Ucko, D. 2012. The science of science communication. Informal Learning Review 114:23. Available at http://www.museumsplusmore.com/pdf_files/Science-of-Science-Communication.pdf [accessed August 2014].

ADDITIONAL RESOURCES

AEA (American Evaluation Association). 2004. Guiding principles for evaluators. Fairhaven, MA: AEA. Available at http://www.eval.org/publications/guidingprinciples.asp [accessed June 12, 2015].

AEA. 2011. Public statement on cultural competence in evaluation. Fairhaven, MA: AEA. See www.eval.org.

Western Michigan University. 2011. Evaluation checklists. Available at http://www.wmich.edu/evalctr/checklists [accessed June 12, 2015].

Yarbrough, D.B., L.M. Shulha, R.K. Hopson, and F.A. Caruthers. 2011. Program evaluation standards: A guide for evaluators and evaluation users, 3rd edition. The Joint Committee on Standards for Educational Evaluation. Thousand Oaks, CA: Sage Publications.