Appendix B

Commissioned Paper:

The Consortium as Experiment

David Lopatto

Grinnell College

Introduction

The 2012 PCAST report to the president cited the “need to increase the number of students who receive undergraduate STEM degrees by about 34% annually over current rates” (PCAST, 2012). The report asserts that the principal mechanism for producing these additional STEM graduates is reducing attrition from undergraduate science programs, and that this reduction may be achieved by a number of reforms, including replacing standard science laboratory courses with discovery-based research courses. The latter assertion leads naturally to the theme of the current convocation on integrating discovery-based research into the undergraduate curriculum. My intended contribution to this discussion is a result of my work in the assessment of student learning in undergraduate science and in particular my familiarity with a successful discovery-based program, the Genomics Education Partnership. I hope to offer some observations regarding the research agenda that might be formulated to assess the impact of course-based undergraduate research programs.

A CURE as a “complex package”

The hypothesis that a course-based undergraduate research experience (CURE) is a vehicle for retaining science students is based on the observation that an undergraduate research experience (URE) is the model for a successful science educational experience (Lopatto, 2003, 2004; Seymour, et al., 2004). Thus, the simplest definition of a CURE is a URE-like experience occurring within a scheduled science course. This definition requires us to say something about UREs in general, and about how the CURE outcomes might be shown to resemble URE outcomes.

My research concerning student learning outcomes from undergraduate research experiences began about 15 years ago when my college won an NSF award for the integration of science research and education. The history of how this initial award propelled a research program on student learning is described in Lopatto (2010). The brief version is that I was privileged to collaborate with Dr. Elaine Seymour and her colleagues to conduct a mixed-methodology study of the benefits of the URE experience at four colleges. Seymour (see Seymour, et al., 2004) took a qualitative, interview approach to gathering data, while I arranged quantitative surveys for students (for example, Lopatto, 2003). Our results triangulated well (Table B-1). It seemed that a successful URE provided a wide range of skill learning, professional preparation, and personal development. This knowledge informed the development of a new survey, the Survey of Undergraduate Research Experiences (SURE) (supported by the Howard Hughes Medical Institute), which became widely used as an assessment tool for UREs. The heart of the SURE is a list of 21 learning benefits, which students evaluate for gain on a scale of 1 to 5. The benchmark data, drawn from over 100 institutions and programs, replicates very well from year to year (Lopatto, 2004; 2007), providing a credible baseline against which classroom undergraduate research outcomes may be measured. When demand grew for a similar instrument to assess student learning in CUREs, a new survey evolved from the SURE called the Classroom Undergraduate Research Experience (CURE) survey. The CURE survey retained the evaluation of learning benefits used in the SURE, permitting a comparison of the CURE and URE benefits.

TABLE B-1. A summary of the categories of benefits following from a URE found by Seymour, et al. (2004, left) and by Lopatto (2010, right). The categories on the left emerged from a coding of statements made by students during interviews. The categories on the right emerged from an exploratory factor analysis of numerical survey data.

| Based on Seymour et al. (2004) | Based on Lopatto (2010) |

| Personal/professional | Personal development |

| Thinking and working like a scientist | Knowledge synthesis |

| Skills | Interaction and communication skills |

| Clarification, confirmation and refinement of career/education paths | Professional development |

| Enhanced career/graduate school preparation | Professional advancement |

| Changes in attitudes toward learning and working as a researcher | Interaction and communication skills; Responsibility |

| Other benefits | |

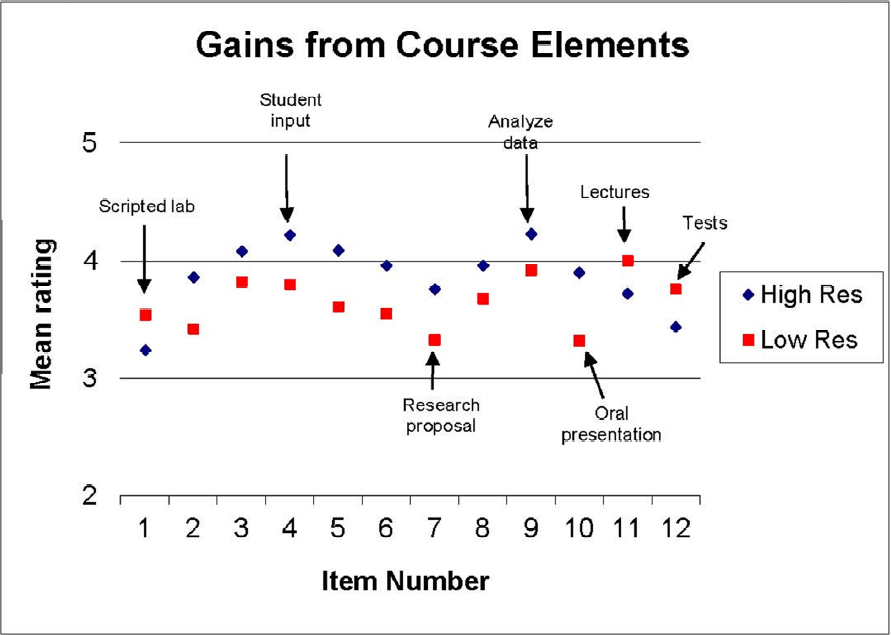

The CURE survey was not based on an a priori definition of a CURE. Instead, course instructors were asked to fill out a brief form to indicate what course activities they stressed. Some of the activities were classic—reading a textbook, taking a test—while others reflected discovery-based learning. Analyzing the instructor data, I found that a combination of 5 items constituted a rough scale for indexing a CURE:

- The course has a lab or project where no one knows the outcome.

- The course has a project in which the students have some input into the research process.

- The course has a project entirely of student design.

- The students become responsible for a part of the project.

- The students critique the work of other students.

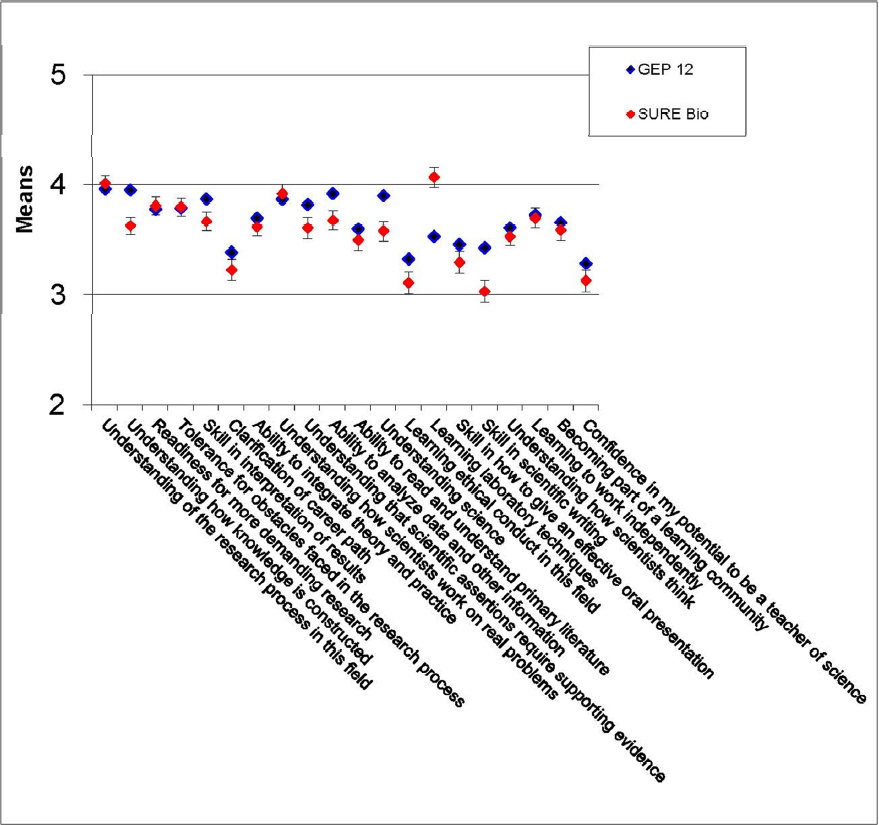

Using the numerical ratings of the instructor as a grouping variable, I performed a “median split” of the courses. The median split identified a group of high-scoring “high research-like” courses (in other words, CUREs) and a group of low-scoring “low research-like” courses (in other words, traditional “cookbook” science lab courses). Later, on the CURE survey, students evaluated their learning gains from the above course elements. The results are shown in Figure B-1. The student data reflects the observation that students learn based on what teachers teach. Beyond that observation, however, the students who participated in CUREs also evaluated the 20 learning gain items in a pattern similar to students in UREs (Figure B-2). The simplest argument that the CURE is successful is that the outcomes of the program are comparable to the outcomes observed following the URE experience. Lopatto (2008), for example, presented comparative results from the Genomics Education Partnership program and a group of summer undergraduate researchers. The self-reported gains from the experiences were similar. But while a CURE may resemble a URE in outcomes, the CURE may not resemble a URE as a process. The CURE may have many more local constraints imposed by the nature of the institution, its undergraduate science program, and the characteristics of the student body. These constraints limit the generalizability of any reported success of a CURE program at a particular college or university. The question remains as to how to establish a more generalized model of a successful CURE.
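The median split described above can be illustrated with a minimal sketch. It assumes each instructor rates the five research-like course elements on a 1 to 5 scale and that the ratings are summed into a single index; the rating scale, the course names, the values, and the rule for courses that fall exactly on the median are all hypothetical choices made for illustration, not details of the CURE survey itself.

```python
from statistics import median

# Hypothetical instructor ratings (1-5) on the five research-like course
# elements. Course names and values are invented for illustration only.
instructor_ratings = {
    "course_a": [5, 4, 3, 5, 4],
    "course_b": [1, 2, 1, 2, 1],
    "course_c": [4, 4, 2, 5, 3],
    "course_d": [2, 1, 1, 3, 2],
}


def median_split(ratings):
    """Label each course 'high' or 'low' research-like by its summed rating."""
    totals = {course: sum(items) for course, items in ratings.items()}
    cutoff = median(totals.values())
    # Assumption: a course whose total equals the median goes to the 'low' group.
    groups = {
        course: ("high" if total > cutoff else "low")
        for course, total in totals.items()
    }
    return groups, cutoff


groups, cutoff = median_split(instructor_ratings)
print(cutoff)
print(groups)
```

Student-reported learning gains could then be summarized separately for the "high" and "low" groups. A median split discards information about how far each course sits from the cutoff, so it is best read as a rough grouping device rather than a precise measurement.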

The methodologist Donald Campbell wrote extensively on the issue of generalizability, or external validity (Campbell, 1969, 1982, 1986). He observed that a successful program at one institution expresses “local molar causal validity” (Campbell, 1986). Local molar causal validity is “a first crucial issue and starting point for other validity questions.” This sort of validity has to do with the question, “did this complex treatment package make a real difference in this unique application at this particular place and time?” In other words, before we get down to the work of parsing the components of a successful CURE, do we have evidence that the “complex package” is successful in effecting desirable outcomes? I believe the answer is clearly “yes,” and I refer readers to the extensive body of evidence (see, for example, Auchincloss, et al., 2014; Linn, et al., 2015; Trosset et al., 2008; as well as issues of the CUR Quarterly or any teaching journal in your preferred discipline). Once we become convinced that the complex package leads to desired outcomes, then research questions become more precise (Beckman and Hensel, 2009). Researchers strive to measure the relative contribution of independent variables, the interactions among these variables, and the nature of mediating and moderating variables that influence outcomes. This research, in turn, leads to attempts to model the CURE experience (e.g., Corwin et al., 2015). Concurrently, researchers create and improve instruments that may measure the components of the CURE experience (e.g., Hanauer and Dolan, 2014; Laursen, 2015).

Research objective: efficacy versus effectiveness

As research on CURE experiences proceeds, however, we might pause to consider the strategic goal of a research agenda dedicated to understanding the CURE. There is a distinction to be made between analyzing components of a CURE that might affect its success and components that do affect its success. The first case, demonstrating what might, all things being equal, affect a CURE, typically results from laboratory studies that isolate a variable as much as possible to establish the internal validity of the relationship between the independent variable and the target outcome (Mook, 1983). The second case, demonstrating what does affect a CURE, typically results from field research (Cook and Campbell, 1979). The distinction is found in psychology, where, for example, researchers attempt to study the success of psychotherapy and the comparative success of particular therapies. Therapy, like education, is an applied field aimed at changing human behavior. As research on therapy advanced, a distinction grew between two methodological approaches, called “efficacy” and “effectiveness.” Efficacy refers to the outcome of randomized clinical trials of therapies, that is, controlled experiments. Effectiveness refers to the success of a therapy in actual clinical practice (Chambless and Hollon, 1998). Efficacy studies emphasize internal validity, i.e., the effect of treatments in controlled settings to minimize confounding variables, while effectiveness studies emphasize ecological validity, i.e., the effect of treatments in a genuine, relatively uncontrolled, applied setting. The lesson for CURE research is that these two research paths do not always converge. While the tough-minded scientist may feel that only efficacious practices should be employed, practitioners argue for “what works,” even if the approach does not fare well in controlled experiments. Treatments that show promise in controlled experiments may not have an effect in the open environment of the undergraduate program, while effective components of the CURE may evaporate in the laboratory. As a result, researchers may become frustrated on finding that standard experimental methods may produce an inconsistent, probabilistic picture of those variables that contribute to the success of a CURE.

The problem may go deeper than the fallibility of research design or statistical analysis. Our attempts to understand the nature of the CURE experience may yield only probabilistic outcomes because the nature of the CURE experience is such that it yields only probabilistic outcomes (Brunswik, 1943, 1952). There may be no single package of components in a CURE that guarantees success for the target outcome, no one best way.

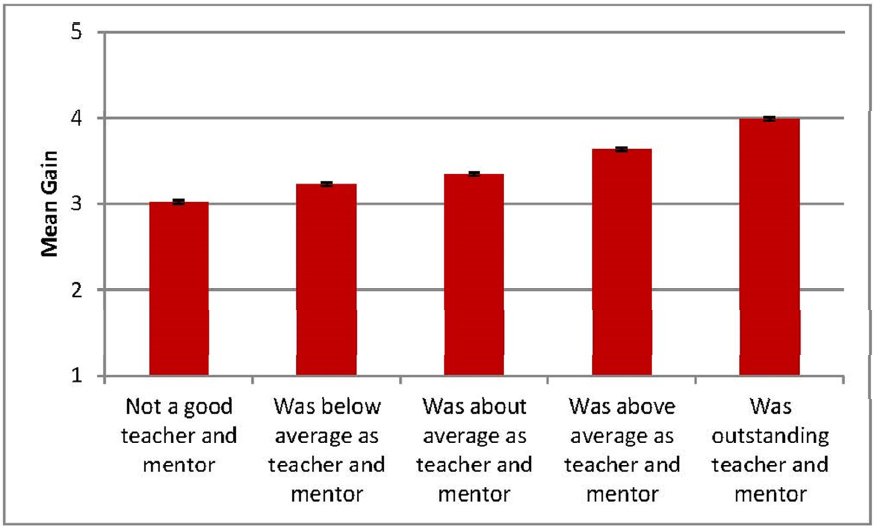

We can further illustrate the possible frustration that may occur if CURE researchers insist on following a path toward efficacy rather than effectiveness. Recalling again the analogy to psychology’s attempt to understand the nature of psychotherapy, it should be noted that therapy studies are routinely confounded by the influence of “nonspecifics,” confounds that are difficult to measure. In a therapy setting, the chief candidate for a nonspecific is the therapist. Therapists may impress a client as warm or cold, experienced or inexperienced. They may inspire confidence that therapy will be productive and so produce placebo effects. Experienced and charismatic therapists may help their clients regardless of the therapy type they employ. The parallel case of the nonspecific in CURE research is the course instructor. If CUREs are to be employed across institutions to help produce the 1 million additional STEM graduates that PCAST recommends, then course instructors will loom as a major nonspecific in any model of the CURE. Some models of the URE and CURE explicitly include the influence of research supervisors and course instructors under the concept of “mentor” (Linn, et al., 2015; Lopatto, 2010). I have no doubt that effective mentoring is a strong determinant of student success in undergraduate research experiences (Figure B-3). Despite a plethora of training opportunities and “how to” manuals (e.g., Handelsman, et al., 2005; Merkel and Baker, 2002; NRC, 1997), mentoring remains one of the least controlled variables influencing undergraduate science education experiences. The course instructor, together with her team, which may include graduate or undergraduate teaching assistants, serves the role of mentor in the CURE experience. In my experience with programs that are conducted across institutions of higher learning (Lopatto et al., 2008, 2014), I have been impressed with the level of talent and dedication that course instructors bring to their task. For example, the Genomics Education Partnership currently includes faculty from over 100 institutions, united in their determination to bring genomics into the undergraduate biology curriculum. My intuition is that the ubiquity of the GEP lies in part with the nonspecific influence of course instructors. The course instructor, like the research mentor, is the adversary of experimental control. The instructor engages in a continuous transaction with students that involves ongoing formative assessment, remediation, and fine tuning of the CURE program: in short, the ongoing confounding influence that experimental methodology forbids. I suspect that the efforts of the instructor to ameliorate any problems encountered by students will never be eliminated from the study of CUREs, nor should they be. The effectiveness of the CURE experience, however influenced by the talented instructor, will continue to challenge efficacy studies of CURE component variables. Effectiveness, rather than efficacy, may emerge as the more important and possibly even primary objective of CURE investigations.

External validity and reference populations

External validity, or generalization, depends on our ability to define reference populations of students to which a research outcome might generalize. Ordinarily we assume a fairly homogenous population from which samples should be drawn for research. We assume that the best argument for a representative sample is random selection. I suggest that in the study of CUREs we should turn more of our attention to the definition of populations, which in turn may inform our concerns about random samples. In the study of CURE programs, the research settings (otherwise called colleges and universities) are not homogenous enough to constitute a reference population. Despite efforts to bundle institutions with Carnegie classifications or U.S. News lists, institutions differ on too many variables to be regarded as one homogenous population. The lack of a reference population obviates the need for random selection of institutions for a CURE program. Instead, a promising tactic for replicating a program is to select multiple sites, intending to take advantage of the inherent diversity of these sites, and to demonstrate the effectiveness of a CURE program despite this diversity (Cook and Campbell, 1979). This approach supports the consortium model of CURE programs, in which willing participating institutions join one or more CUREs that have some programmatic uniformity across a group of campuses (Jordan, et al., 2014; Shaffer, et al., 2010). For example, the initial demonstration of the success of the SEA-PHAGES program for first-year students was obtained by studying a consortium including 30 research universities, 18 master’s institutions, 22 baccalaureate colleges, and 3 associate degree granting colleges. 
The success of the Genomics Education Partnership was studied with a consortium that included schools with small and large student populations, residential and commuter schools, schools with predominantly traditional students and others with a high proportion of non-traditional students, as well as schools with greater than average populations of first-generation and minority students. During various efforts to study the success of the Partnership (Shaffer, et al., 2010; Shaffer, et al., 2014) we investigated the institutional characteristics of the members, including public versus private institution, total enrollment, residential versus commuter school, etc., and failed to find a significant influence of institutional characteristics on student self-reported learning gains, attitudes toward science, or content test scores. We concluded that the program had a robust effect across institutions, but did not argue that the institutions were representative of the domain of higher education.

A second discussion of reference populations in CURE research concerns the population of humans experiencing CUREs (undergraduates). While some students are involuntary participants in a science course (e.g., through specific degree requirements), I am interested in those students who start down the path toward a science degree. It is generally recognized that these students are non-randomly selected (via tests, grade point averages, or applications) and self-selected (via their willingness to engage in the study of at least one of the sciences). Further departures from randomness result from specific attempts to study the effect of CUREs in retaining nontraditional students or students from underrepresented groups in the STEM pipeline. Regardless of how they are selected, all undergraduate students are “nested” in their institutions, complicating any attempt to study the interactions between student characteristics and program components. There is, however, one characteristic that students in CUREs share, namely, that they are ostensibly within the STEM community, and therefore at risk of attrition. Risk for attrition may be the only dimension that defines a reference population for STEM students. While programs that may attract other students to science are of great interest, attraction and attrition may be treated as different concepts, and CUREs that reduce attrition may include effective characteristics that do not generalize to the programs designed for attraction. In other words, the objection that research on CURE programs is flawed because the research participants are non-randomly willing to engage with STEM programs is not a serious one, so long as the results are applied to other students engaged with STEM programs.

Related to the discussion of sampling from reference populations is the issue of assigning research participants into treatment or comparison groups. The lack of random assignment of participants to groups may lead to the conclusion that no valuable information may be gleaned from non-randomly assigned comparison groups, but according to Cook and Campbell (1979) that conclusion is not correct. Nonequivalent control groups may yield comparative information about outcome variables. The challenge for the researcher is to explicitly identify the “rival hypotheses” that may invalidate a comparison. For example, if a course using an innovative math pedagogy yields higher math test scores than a course using traditional math pedagogy, then a critic might argue that the difference was due to an initial between-group difference in previous math achievement. This specific threat to the credibility of the result may be met by an inspection of the participants’ history, such as standardized test scores.
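The inspection of participants’ history mentioned above can be sketched as a check of baseline equivalence. The example below is a minimal sketch, assuming prior standardized test scores are available for both course sections; the scores are invented, and the choice of a standardized mean difference (Cohen’s d with a pooled standard deviation) is one common option among several rather than a method prescribed by Cook and Campbell.

```python
from math import sqrt
from statistics import mean, variance


def standardized_mean_difference(group_a, group_b):
    """Cohen's d: difference in means divided by the pooled standard deviation."""
    n_a, n_b = len(group_a), len(group_b)
    pooled_variance = (
        (n_a - 1) * variance(group_a) + (n_b - 1) * variance(group_b)
    ) / (n_a + n_b - 2)
    return (mean(group_a) - mean(group_b)) / sqrt(pooled_variance)


# Hypothetical prior standardized math scores for the two course sections.
innovative_section = [610, 640, 590, 660, 620]
traditional_section = [600, 650, 580, 670, 610]

d = standardized_mean_difference(innovative_section, traditional_section)
print(round(d, 3))
```

A value of d near zero suggests that the groups began at similar levels of prior achievement, which weakens that particular rival hypothesis. It does not establish that the groups were equivalent on other, unmeasured characteristics.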

Tactics for variable selection

The components of the URE and CURE models consist of configurations of independent variables, mediating and moderating variables, and first order and second order outcomes. One comprehensive model (Auchincloss, et al., 2014) includes a depiction showing 3 contexts by 9 activities by 24 short- and long-term outcomes. Another approach is to explicate sets of dimensions that might characterize a CURE. For example, Beckman and Hensel (2009) identify 8 sets of dimensions (e.g., student process centered versus outcome, product centered).

There are analytical tactics to reduce this complexity. For example, we could take the goal of the PCAST report faithfully and specify that the chief outcome variable is achievement of a bachelor’s or associate’s degree in a STEM field. This outcome is relatively easy to measure and serves as a proxy for a series of behaviors in which the student must have engaged on the path to the degree. But most researchers are not satisfied with this approach, which reveals nothing about dispositional variables such as persistence and scientific identity that may index a longer-term effect. While I agree that there are more interesting outcomes of the CURE than simply graduation in STEM, I think it necessary to raise a caution about placing our faith in dispositional constructs, i.e., those that imply personality formation or life span development. I will attempt to clarify this view with reference to two widely discussed student characteristics: persistence and scientific identity.

Persistence, for example, is a widely used term variously described as either the behavior of a student within a CURE experience or the continuation of the student’s journey from one science course to the next, perhaps then extending to a URE and an application to a STEM graduate program. The first difficulty with this term is its negative connotation. We tend to talk about persistent problems or persistent illnesses, not persistent happiness or enjoyment. To describe a student as persisting in the CURE experience suggests a dogged, determined worker who is conscientious enough to slog his way through the course but not very happy about it. Better descriptors of the effortful behavior of the student within the CURE experience might be conscientiousness, intrinsic motivation, or creativity. Persistent behavior may include troubleshooting a malfunctioning instrument, repeating a procedure, or continuing to work independently beyond expectations. If persistent behavior within a CURE experience is of interest, then unobtrusive observations of how much time a student spends on the project beyond what is required or how often the student raises procedural questions may provide an index of the behavior.

Persistence is also used to describe the longer engagement with the journey toward a STEM degree and beyond. It might more accurately be named commitment. By using persistence to describe both a within-course behavior and a following-course behavior, we may be confusing the relationship between persistence and the benefits of longer engagement with undergraduate science. Within a CURE, longer instructional time is positively correlated with student-reported learning gains (Shaffer, et al., 2014). A CURE experience also increases the percentage of students who register for the next course in the discipline (Jordan et al., 2014). A decision to make a longer-term commitment to the science education path, however, is vulnerable to the developmental pressures described by psychologist Daniel Levinson (1978). Levinson, studying the development of young people, wrote that “A young person has two primary yet antithetical tasks…to keep his options open, avoid strong commitments and maximize the alternatives…versus…to create a stable life structure…and make something of his life.” While some writers suggest that the development of scientific behaviors emerges only after several semesters of a URE (Linn, et al., 2015), the prolonged commitment to such programs will inevitably be challenged by attrition. Several years ago I had the opportunity to assist a consortium of mathematics faculty with a program that included two consecutive summers of undergraduate research (Hoke and Gentile, 2008). Intuiting that students might leave the program over the two-year period, we asked them about their commitment in both the first and second year. Some students dropped out of the program the second year. Table B-2 shows the comments from LURE (Long-Term Undergraduate Research Experience) students made at two times in the program. The comments from students who were initially excited about the program, but later found that they could not continue, illustrate the increasing pressures that result in attrition over a long program. Many of their reasons for not participating in the second year are reasonable, and reflect the “options open” versus “stable life structure” described by Levinson.

TABLE B-2. Comments made by students in the summer after their first year of LURE (left column) and after withdrawing from the program (right column). Each row represents the comments from the same student. The question in 2007 was “Are you looking forward to continuing research over the next year?” The comments in 2008 were solicited by two prompts, including “Students sometimes discontinue participation in a program for reasons unrelated to the program, including health, finances, family, etc.” and “Students sometimes discontinue participation because something more attractive has come along, such as travel, other research opportunities, or other career opportunities.” [Lopatto, D. (2009). Long-term Undergraduate Research Experiences (LURE): The experience of students who completed the program and those who did not. Unpublished document.]

| Comments in the summer of 2007 | Comments in the spring/summer of 2008 |

| I am not doing research over the next year because research just is not something I enjoy that much. | To sit for the CPA exam in Virginia, I have to have 150 credit hours and I did not want to take another year of school, so I am taking classes over the summer to fulfill this requirement. Also, I decided that being in a math research program wouldn’t have as much impact on a future career in business. It might help me to get a job, but other than that, I don’t think knowing how to analyze different situations using math will be helpful. |

| Yes, certainly – I’ve had a fantastic time this summer in research. | Another research program was my primary cause for not participating in a LURE project this summer. Although I found LURE to be a stimulating and interesting experience, I wanted to broaden my summer research experiences to include some of my other interests. |

| Definitely. | This summer, I’m working for DRS Technologies in St. Louis. It’s an internship program where we get to work on important but not “mission critical” projects in teams. Like the LURE program, there is a lot of exploration and discovery involved, but the setting is much more professional, formal, and business-oriented. I realized after a couple weeks that I very much prefer informal academic settings, but I’m still glad I took this opportunity because, in my opinion, self-discovery is better earlier rather than later. |

| I would be, but I don’t believe I will. Next summer I will likely be moving on to other endeavors, and the upcoming semester will prove to be very trying for me. | Mathematics is not my intended field of study. If I were a mathematics major, I would likely have remained in the program. However, I felt it was necessary to keep my options open with regards to my actual field of study, and I was not able to commit to the program for a second year. |

| Yes I am looking forward to continuing this research over the next year. | I received an actuarial internship at an insurance company and I figured I should take that since it is directly related to what I will be doing after college. |

| No. Although I enjoyed this experience during the summer, I’ve come to realize that this is not something I want to do for the rest of my life. | I got the opportunity to do research in computer science during this summer, which is related to my honors thesis. |

| No, I am not continuing the research experience next year. I am not in love with math so I do not want to pursue math research since it is not one of my passions. | I wasn’t sure what I was going to do this summer at the time that I made my decision, but I figured I would find something more attractive and interesting to me. I figured that my major would be Chemistry or Biochemistry and if I continued with undergraduate research (not even for this summer necessarily), I would want to conduct it in one of these areas. So I figured that if I was going to do research, doing math and chemistry research might be distracting or overwhelming. I just wanted to focus on one area and I’m more interested in chemistry. |

| My project was able to be completed in one summer, so I will not be continuing with the research. | I went into LURE thinking that I might want to do something with mathematics in the future and realized that this was not something that I would enjoy long term. |

| I am looking forward to continuing the research next school year, but not next summer. I hope to widen my summer experiences, and possibly do research in another field, or try and find a company to do an internship with. | Two members of my family were both having health problems this spring and I needed to be home this summer to help them out around the house. |

The formation of a stable life structure might be another way of saying that young people desire to form an identity. The term has a long and varied history in psychology and sociology. The URE/CURE research literature hypothesizes a construct, scientific identity, which serves as a mediator between learning experiences and commitment to a science career. This identity variable is both an outcome of experience and an influence on motivation. Professional identity formation may be facilitated by participation in a learning community (Graham, et al., 2013). This enhanced science identity may be related to a sense of ownership of a research project or to a sense of belonging to a science community (Corwin, et al., 2015). Despite its current widespread use, I feel that we should use the term identity sparingly. I think we mean the term to signal a complex of behaviors related to doing science. These behaviors need an environment that will allow for their expression. The display of the scientific identity, for example, is constrained by other dynamics in the life of the student. Most undergraduates experience either a prescribed general education curriculum or course selection in other disciplines that stems from a personal or imposed (e.g., by parents) desire to broaden their education. These students may find that behaving as a scientist is a suboptimal strategy for succeeding in the humanities, art, or social science. These students may learn to be adaptive, that is, to behave as scientists, humanists, etc. in response to situational cues. Although scientific identity is suggested as related to career persistence (Corwin, et al., 2015), there are life-span influences that may require the abandonment of this identity, including failure to achieve a graduate degree, failure to find a professional position, failure to win grants, etc., moments that may favor adaptive behavior over scientific identity.

Of course, one might hypothesize that even in the absence of curricular or professional signs of scientific identity, students who experienced CURE or URE programs continue to think like scientists. A few years ago my colleague Carol Trosset and I had the opportunity to conduct a survey of science alumnae at a liberal arts college (Lopatto and Trosset, 2008). We categorized the respondents as having had a URE experience or not while undergraduates, and then posed questions regarding their use of their science education after graduation. The responses were varied. Some respondents pursued a career in science while some did not. While some respondents could articulate a relation between their undergraduate science education and their later lives, I recall two comments that I think illustrate the difficulty of using the term scientific identity. One respondent, reflecting on her situation 5 years after graduation, reported “after completing my MS degree, I decided I was tired of working in the sciences and wanted to do something completely different.” Another respondent wrote, “I was at a wonderful Ph.D. program…with the path of many, many career options ahead…but I had been apart from my husband for years and wanted to be with him and have children. My science education argued for BOTH cases…I know the biological downsides to waiting to have kids, but I also had an appreciation for the stats: If I left, I’d probably never finish my degree.” It seems that the respondent’s scientific identity was conditioned on situations, rather than a permanent source of motivation to do science.

If we continue to think about the establishment of a scientific identity as a disposition or personality variable, then the door is open to the study of other identities as well. Gender and race loom large as identities that may interact with the formation of a scientific identity. A student who has experienced the life of a White male in the United States may enter college with a different identity than a student who identifies as a Hispanic female. The White male student may be far less actively engaged with considering or defending his identity than the Hispanic female, who may face challenges to her identity through microaggressions (Harwood, et al., 2012) or stereotype threats (Steele, 1997). Her effort to establish a scientific identity among her other identities may involve more cognitive and emotional energy than his. The study of how a person navigates among identities is termed “intersectionality” (Cole, 2009). The challenge of intersectionality complicates the formation of a scientific identity in ways that have not been fully studied.

The consortium as (quasi-) experiment

By a consortium I mean a group of programs or institutions organized around a common set of activities, in the present context to promote undergraduate science education. I leave it to others to point out the possible economic and resource advantages of a consortium over an unaffiliated collection of programs. My interest is in discussing the advantages for research on CUREs afforded by the consortium over a piecemeal inspection of programs at individual institutions.

According to Campbell, a quasi-experiment is an experiment that has “treatments, outcome measures, and experimental units, but does not use random assignment to create comparisons from which treatment-caused change is inferred. Instead, the comparisons depend on nonequivalent groups that differ from each other in many ways other than the presence of a treatment whose effects are being tested…In a sense, quasi-experiments require making explicit the irrelevant causal forces hidden within the ceteris paribus [other things being equal] of random assignment” (Cook and Campbell, 1979; see also Campbell and Stanley, 1966). Many published accounts of CURE programs qualify as quasi-experiments; however, studies of a single institutional program lack the richness of information that is provided by the study of a CURE over multiple settings. One program that has yielded rich information about the nature and impact of a CURE is the Genomics Education Partnership.

The Genomics Education Partnership was founded by Prof. Sarah Elgin and originally supported by the Howard Hughes Medical Institute (Lopatto, et al., 2008). Several descriptions of the program are in print, so rather than compose another I quote from the article:

The GEP is a consortium in which more than 100 colleges and universities (mostly primarily undergraduate institutions, or PUIs) have joined with Washington University in St. Louis (WUSTL) with the goal of providing undergraduates with a research experience in genomics (see http://gep.wustl.edu). The GEP is investigating the evolution of the Muller F element, a region of the Drosophila genome that exhibits both heterochromatic and euchromatic properties, and the evolution of the F element genes. Undergraduates are involved in both finishing (improving the quality of draft sequence) and annotating (creating hand-curated gene models based on all available evidence, mapping repeats, and identifying other features) designated regions of the Drosophila genome. They work on 40-kb “projects,” which, after quality control checks, are reassembled to generate large domains for analysis. GEP materials have been adapted to many different settings, from a short module in a first genetics course to the core of a semester-long laboratory course to an “independent study” research course. A common student assessment is carried out using the central website. Pre/post-course quizzes demonstrate that GEP students do indeed improve their knowledge of genes and genomes through their research (Shaffer et al., 2010, 2014). Post-course survey results from 2008 and 2010–2012 on science attitudes are consistent and show an overall pattern and numerical scores very similar to those of students in a dedicated summer research program (Lopatto, 2007; Lopatto et al., 2008; see especially Shaffer et al., 2014). All student projects are completed at least twice independently, and a reconciliation process is carried out by experienced students working at WUSTL during the summer. Student annotations are deposited in GenBank and form the core of our scientific publications, which analyze the reassembled regions as a whole (e.g., Leung et al., 2010). 
A paper based on comparative analysis of the F element of four Drosophila species, now in preparation, will have more than 1000 student and faculty coauthors. Thus, by both pedagogical and scientific measures, the GEP appears to have assembled a group of faculty who each has successfully developed a CURE on his or her campus. (Lopatto, et al., 2014, 713)

The original GEP description (Lopatto, et al., 2008) used the phrase “original research experiences within the framework of course curricula.” This phrase is a reasonable working definition of the CURE. Undergraduate research experiences have been defined by several authors (Lopatto, 2003, 2007); the key constraint on the CURE compared to the URE is time. Whereas a URE may include 10 weeks of dedicated summer research, a CURE must somehow fit in the time allotted for a course that might cover 14 weeks (more, if the course continues over several terms, e.g., Jordan et al., 2014; less in other academic schedules), and the CURE competes for the student’s attention with other programs with which the student interacts concurrently.

The GEP includes a large sample of institutions varying on the dimensions of size, student body, etc. as mentioned earlier. The full force of this large consortium is not to parse the institutional characteristics in a search for interactions with treatment, but rather to make the argument that the program has an effect despite the error introduced by the varying institutional characteristics. The treatment is a complex package including workshops for faculty, workshops for teaching assistants, a central website with shared curriculum and a wiki for sharing additional information, and a central support system provided by the staff at Washington University in St. Louis (see Lopatto, et al., 2014). The treatment is administered locally by one or more instructors, who determine the “dose” in the sense of how much instructional time is devoted to genomics. While no randomly assigned control group for comparisons is possible, nonequivalent control groups provided some basis for comparisons.

Measurement of outcomes

If we consider only the succinct text of the PCAST report, the minimal outcome we need be concerned about as practitioners is the increase in the number of STEM degrees. Researchers of the CURE programs, however, are often interested in more than the awarding of degrees. They endeavor to create instruments that yield information about students’ experiences and learning gains. To increase the credibility of individual instruments, methodologists pursue the path of classical test theory, in which validity and reliability of the instrument are demonstrated through correlational procedures. The advice stemming from the study of quasi-experiments calls for multi-operationism, that is, the use of multiple measures that may converge on a credible finding (Cook and Campbell, 1979). The assumption of multi-operationism is that measures that ostensibly tap into the same construct should correlate or converge on the same “signal”, the effect of the construct under study; however, just as important to the assumption is that two measures that share the same “signal” do not share the same “noise”, that is, that their sources of error should be different. For example, a survey eliciting student ratings of a CURE experience may correlate with a measure of student lab attendance. To the extent that they do not correlate perfectly, the sources of error for the survey may be that students misinterpret survey questions or have reading difficulties, while for attendance students may have non-voluntary absences due to illness. This independence of errors makes any correlation between the measures more meaningful.
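The signal-and-noise argument can be illustrated with a small simulation. The sketch below is not drawn from GEP data; the variable names (`survey`, `attendance`), the unit-variance error terms, and the sample size are assumptions chosen only to show why independence of errors matters. When two measures share no signal but do share a source of error, they correlate anyway, so a correlation is credible evidence of the construct only when the errors are independent.

```python
import math
import random


def pearson_r(xs, ys):
    """Pearson correlation coefficient of two equal-length sequences."""
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    sxx = sum((x - mean_x) ** 2 for x in xs)
    syy = sum((y - mean_y) ** 2 for y in ys)
    return sxy / math.sqrt(sxx * syy)


def simulate(signal_sd, shared_noise_sd, n=5000, seed=0):
    """Correlate two hypothetical measures of the same students.

    Each measure = common signal + shared error + its own independent error.
    All distributions and scales here are illustrative assumptions.
    """
    rng = random.Random(seed)
    survey, attendance = [], []
    for _ in range(n):
        signal = rng.gauss(0, signal_sd)        # the construct under study
        shared = rng.gauss(0, shared_noise_sd)  # error common to both measures
        survey.append(signal + shared + rng.gauss(0, 1))      # independent error
        attendance.append(signal + shared + rng.gauss(0, 1))  # independent error
    return pearson_r(survey, attendance)


r_signal = simulate(signal_sd=1.0, shared_noise_sd=0.0)    # real signal, independent errors
r_null = simulate(signal_sd=0.0, shared_noise_sd=0.0)      # no signal, independent errors
r_spurious = simulate(signal_sd=0.0, shared_noise_sd=1.0)  # no signal, shared errors
```

In this sketch the first and third cases both produce a clearly positive correlation, but only the first reflects the construct; the second case, with no signal and independent errors, yields a correlation near zero.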

Multi-operational evidence in the GEP

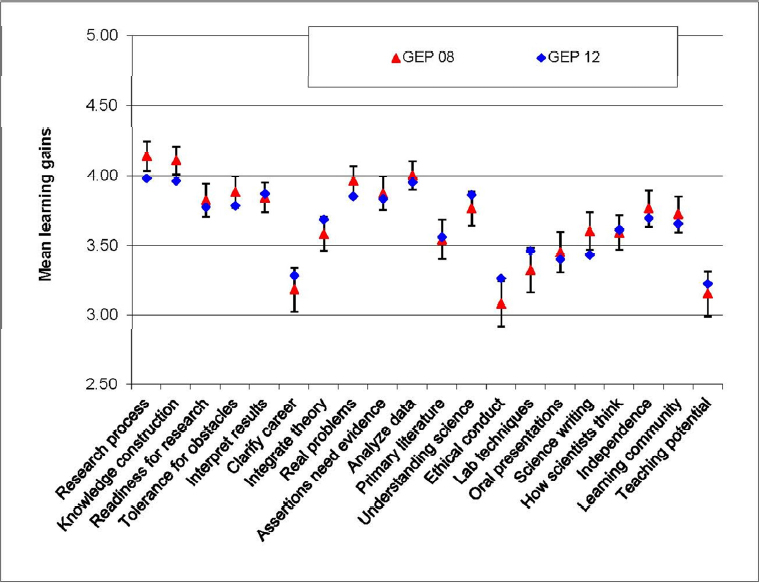

The multi-operational approach to measurement serves to reassure us that a finding is credible. For the GEP, multiple measures indicate that the class-based URE (i.e., the CURE) is in fact a URE experience. One source of evidence is the recognition that genuine research contributions are emerging from the program. The GEP has generated published research papers (e.g., Leung, et al. 2010; Leung, et al. 2015). A second source of evidence is the report of the GEP students framed against a benchmark of similar reports from students who completed a dedicated summer research experience (URE). Such a comparison is possible because the version of the post-course survey used by the GEP employed a set of 20 learning gain items used in the Survey of Undergraduate Research Experiences (SURE; Lopatto, 2004). The comparative results are shown in Figure B-2. Generally, the GEP students report learning gains comparable to those reported by the URE students. In addition to the close proximity of the mean scores, the pattern of results, i.e., how the means compare within a group, appears similar for the GEP and URE students. This similarity has proven reliable (Figure B-4). Additionally, every year qualitative comments are recruited from GEP students that comport well with the quantitative measures.

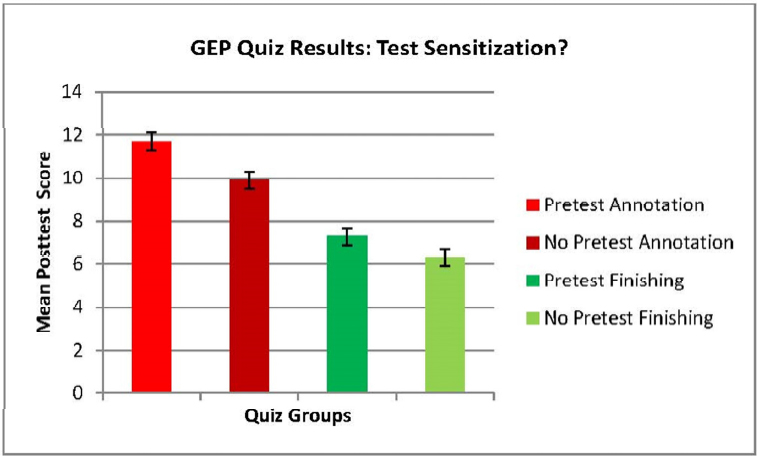

GEP students become acquainted with genomics practices such as annotation and finishing (see above). The group of instructors who are GEP members have designed quizzes to measure gains in these two knowledge areas. The quizzes are given pre- and post-course. At first glance, any increase from pre- to posttest in esoteric knowledge might be sufficient to convince us that learning has occurred. However, the quasi-experimental approach encourages us to think about rival hypotheses that might account for the gain. In the course of the GEP implementation, three nonequivalent control groups emerged. Two of these permitted us to discard two rival hypotheses, namely test sensitization and maturation, that compete with the view that students were learning not only about annotation and finishing, but more deeply about genes and genomes. Test sensitization is the hypothesis that students will improve their posttest scores by virtue of having seen the test before as the pretest. When the GEP first introduced the annotation and finishing quizzes, we used the same test for pre- and posttest. By chance some of the GEP students across institutions did not complete the pretest but did complete the posttest. I compared the posttest scores for students who saw the pretest with scores from students who did not. The results are shown in Figure B-5. It appears that test sensitization did affect posttest scores. In response, the GEP instructors created two equivalent-form tests so that the pretest is not identical to the posttest. (Students are randomly assigned to one or the other for their pretest, and receive the other for their posttest.) This “equivalent forms” approach has eliminated the test sensitization confound.
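The equivalent-forms assignment described above can be sketched in a few lines. This is a minimal illustration, not the GEP's actual procedure: the form labels "A" and "B", the student identifiers, and the function name `assign_forms` are hypothetical, and an independent coin flip per student is assumed as the randomization method.

```python
import random


def assign_forms(student_ids, seed=None):
    """Map each student to a (pretest_form, posttest_form) pair.

    The labels "A" and "B" are placeholders for the two equivalent forms.
    """
    rng = random.Random(seed)
    assignment = {}
    for student in student_ids:
        pre = rng.choice(["A", "B"])       # coin flip for the pretest form
        post = "B" if pre == "A" else "A"  # posttest is always the other form
        assignment[student] = (pre, post)
    return assignment


# Hypothetical roster, for illustration only.
forms = assign_forms(["s01", "s02", "s03", "s04", "s05", "s06"], seed=42)
```

Because no student sees the same form twice, a pre-to-post gain cannot be attributed to familiarity with specific items, and because the order of forms is randomized, any difference in difficulty between the forms is spread across both testing occasions.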

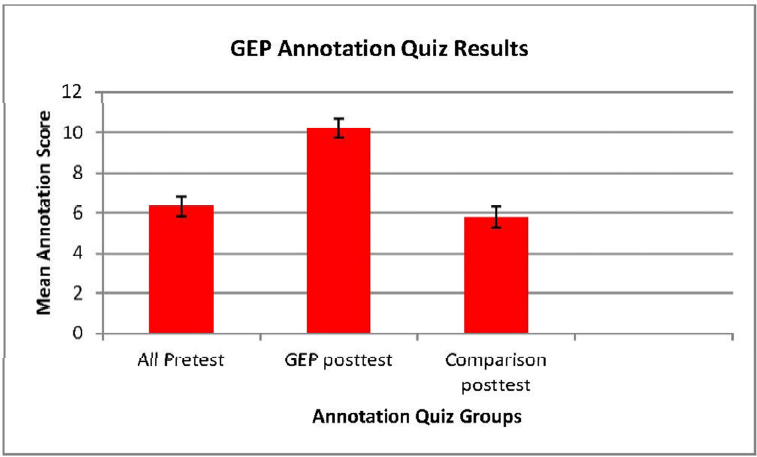

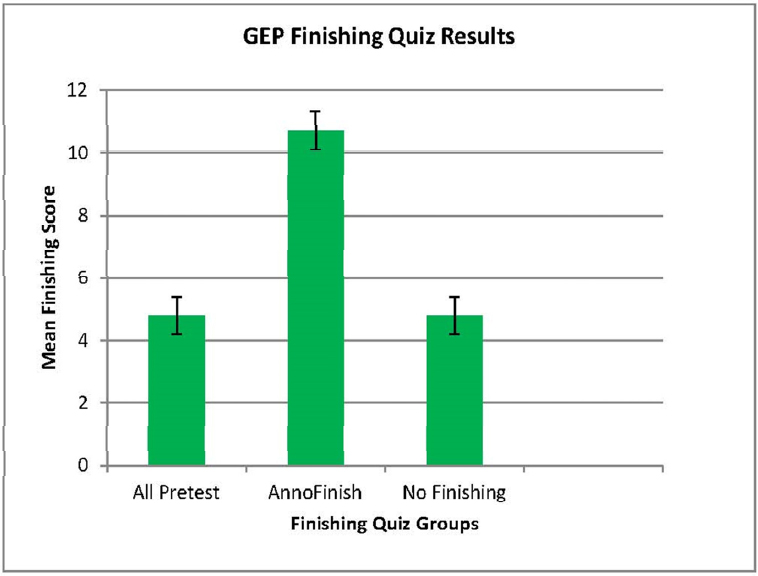

Maturation is the hypothesis that performance on a test improves simply because the student aged, matured, or profited from nonspecific life experiences. If maturation holds as a rival hypothesis, then we lose our trust in pretest-posttest gains. For that reason, GEP instructors volunteered to recruit non-GEP students from biology courses to take the annotation and finishing quizzes. These nonequivalent controls were exposed to biological knowledge during the semester. They presumably aged and matured at the same rate as the GEP students. Their test performance is shown in Figures B-6 and B-7. The figures illustrate that the GEP students’ posttest mean was greater than both their own pretest mean and the posttest mean of the nonequivalent comparison group.
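One way to put a number on the comparison between GEP students and a nonequivalent comparison group is a two-sample test that does not assume equal variances. The sketch below uses Welch's t statistic, which is my choice for illustration and is not necessarily the analysis behind the figures; the quiz scores are invented and the group names are hypothetical.

```python
import math
import statistics


def welch_t(group_a, group_b):
    """Return (t, df) for Welch's unequal-variance t-test of mean(a) - mean(b)."""
    n_a, n_b = len(group_a), len(group_b)
    var_a = statistics.variance(group_a)  # sample variance (n - 1 denominator)
    var_b = statistics.variance(group_b)
    se_sq_a, se_sq_b = var_a / n_a, var_b / n_b
    t = (statistics.mean(group_a) - statistics.mean(group_b)) / math.sqrt(
        se_sq_a + se_sq_b
    )
    # Welch-Satterthwaite approximation to the degrees of freedom.
    df = (se_sq_a + se_sq_b) ** 2 / (
        se_sq_a ** 2 / (n_a - 1) + se_sq_b ** 2 / (n_b - 1)
    )
    return t, df


# Hypothetical quiz scores, invented for illustration only.
gep_posttest = [14, 16, 15, 17, 13, 18, 16, 15]
control_posttest = [10, 12, 9, 11, 13, 10, 12, 11]

t_stat, df = welch_t(gep_posttest, control_posttest)
```

A large positive `t_stat` would indicate that the GEP posttest mean exceeds the comparison group's mean by more than sampling error would suggest. Because the groups are nonequivalent, such a result weakens the maturation hypothesis without ruling out other pre-existing differences between the groups.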

In addition, Figure B-7 shows the third quasi-control group. Instruction on annotation and instruction on finishing are separable, with many more GEP sites teaching annotation alone than teaching both annotation and finishing. To better understand the added value of instruction in finishing, the scores on the finishing quiz were examined for students who had explicit training in both annotation and finishing (the “annofinish” group) compared to students who had only annotation instruction (the “no finishing” group). The results show a higher mean score for students who had finishing instruction and research participation in this area, and argue against any general gains in knowledge by virtue of instruction and research in annotation.

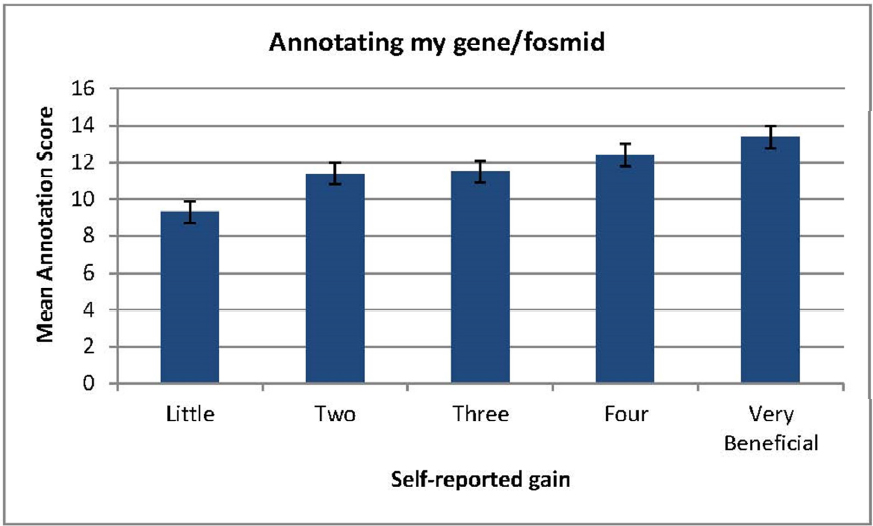

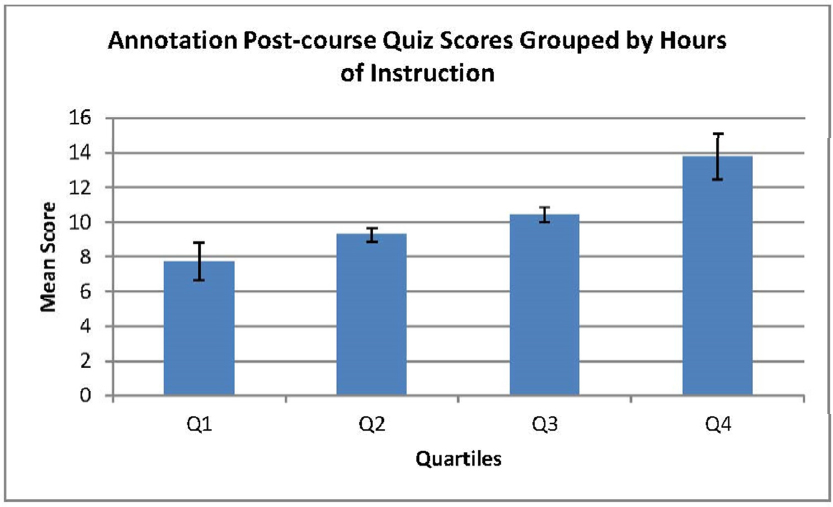

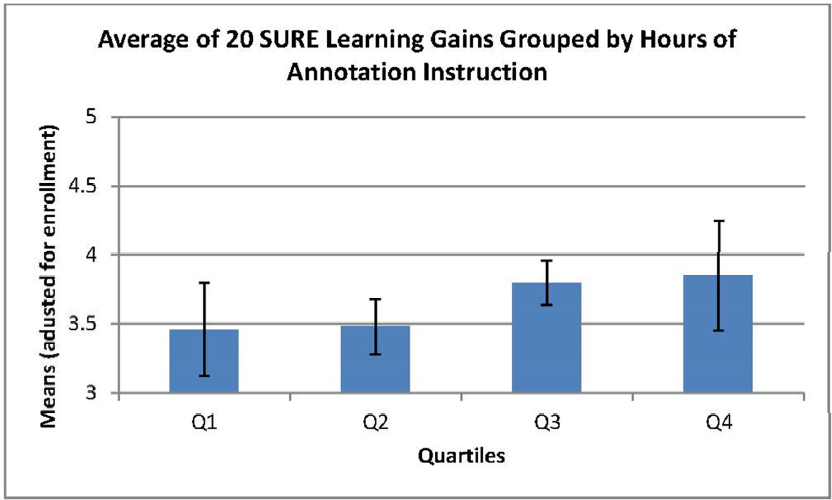

Taking the multi-operational approach, we explored the relationship between quiz scores as a measure of gains in content knowledge and student self-report of learning gains. The student survey used for the GEP includes both the items mentioned above as part of the SURE survey and items specifically related to features of the research project. We found that the relationship between quiz scores and self-report depended on how specific the survey question was to the course activity (for example, Figure B-8). Where a survey item was specific to a course activity, the correlation between the quiz score and the item was in the range of 0.2 to 0.3 (statistically different from 0, modest in magnitude); more general survey items did not correlate with quiz scores. Both quiz scores and survey scores were sensitive to “dose” effects of instruction (Figures B-9 and B-10). A fuller presentation of this relationship is found in Shaffer, et al. (2014).
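The phrase “statistically different from 0, modest in magnitude” can be unpacked with the standard t test for a Pearson correlation. The sketch below is illustrative only: the sample size `n_students` is a hypothetical value, not the number of GEP respondents, and the function names are my own.

```python
import math


def correlation_t(r, n):
    """t statistic for testing H0: rho = 0, given Pearson r from n pairs."""
    return r * math.sqrt(n - 2) / math.sqrt(1 - r ** 2)


def shared_variance(r):
    """Proportion of variance the two measures have in common (r squared)."""
    return r ** 2


n_students = 402  # hypothetical sample size, chosen for round arithmetic

t_low = correlation_t(0.2, n_students)
t_high = correlation_t(0.3, n_students)
```

With these assumed inputs, r = 0.2 gives a t of about 4.08 on 400 degrees of freedom, well beyond conventional thresholds for significance, yet the two measures share only 4 percent of their variance. A correlation can therefore be reliably nonzero in a large sample and still leave most of the variation in each measure unexplained by the other.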

The effectiveness of the features that characterize the GEP as a consortium (faculty training, teaching assistant training, shared curriculum, a central support system provided by Washington University in St. Louis, and diverse faculty implementation strategies on different campuses) is indirectly validated by the student data. The multi-operational approach suggests that data collected from another group of “subjects,” the GEP faculty, would provide some convergent validation to the argument that the consortium is a successful CURE program. We undertook to recruit faculty information and reported strong evidence for the key role of the central support system (Lopatto, et al., 2014) via both structured survey items and qualitative responses to prompts.

Does the success of the GEP generalize to reference populations of institutions and students? As discussed above, it is not clear that a homogeneous reference population of institutions exists. The GEP demonstrates a CURE that affects student learning despite the differences among institutional members. The next step in the search for generality would be to replicate the effect with a new institution. The ease of replication is an additional attractive feature of the consortium as experiment: a candidate for replication need only join the consortium.

Multi-operationism—what is related?

Recent literature suggests many domains of outcomes that could be affected by CUREs (Corwin, et al., 2015), as well as several directions for future measurement (Laursen, 2015). Going forward, it will be useful to sort out which outcome variables serve as key indicators of CURE success, and which outcome variables may not correlate with each other despite their equal significance. In other words, in mapping the outcomes of the CURE, should we adopt a one-to-many mapping or a many-to-one mapping? A one-to-many mapping would consist of finding a key indicator of CURE success and then concluding that other indicators shown to be correlated with the key are also present. For example, if it can be shown that “ownership” (Hanauer and Dolan, 2014) routinely correlates with characteristics such as interest in the field or the ability to work independently, we may be able to offer a simplified assessment program to CURE practitioners focusing on the ownership measure. On the other hand, we may discover that two commonly measured outcomes, such as content knowledge test scores and student self-reports, correlate poorly in a CURE program. In the GEP assessment (Shaffer et al., 2010) we found evidence of correlations between a content test of the practice of annotation in genomics and student self-reports of knowledge gain. These relationships were modest, however, and confined to self-report items that specifically asked about content learning. More global items, such as those related to self-confidence or working independently, did not relate to content test scores.

One may well wonder if content knowledge has a significant relationship with longer term commitment to a STEM career. Jordan and her colleagues created a test of biological concepts to be administered both to students in an experimental SEA PHAGES program and to controls. The intent of the testing was to demonstrate that the mean scores for the two groups would not differ, thus resisting the criticism that involvement in a CURE program might interfere with learning course content, and indeed they did not (Jordan, et al., 2014). If the students in the SEA PHAGES program go on to contribute to the increase of 1,000,000 degrees in STEM demanded by the PCAST report, it will not be due to the inferior or superior acquisition of content knowledge.

Ultimately, the selection of appropriate measures for assessing the success of CURE programs depends on the objectives of the programs. The minimal PCAST objective, yielding more bachelor’s or associate’s degrees in the STEM fields, might be sufficiently assessed through grades and registration patterns. If, as I suspect many CURE researchers believe, the CURE program is an occasion for shaping scientific motives and ways of thinking that may result in an interest in STEM fields beyond just graduation, then we should acknowledge the importance of self-report measures. Some 20 or so years ago, conversations regarding assessment of student learning generally described a dichotomy between “direct” and “indirect” measures of learning. Direct measures consisted of content and procedural learning as measured by local tests or standardized tests such as the GRE (Graduate Record Exam) or the CLA (Collegiate Learning Assessment). Descriptions of experience given by students through surveys, interviews, and focus groups were said to be indirect measures of learning, and were treated with some suspicion because student responses could be boastful, cautious, or merely delusional. But, as I have suggested (Lopatto, 2007), the question we need to ask is, the direct measure of what?

In the current research the student respondent was promised anonymity, precluding the matching of student survey responses with information from other sources. Beyond the tactical difficulties of identifying student responses or recruiting observations from supervisors, however, the challenge of validity is complicated by the concept of the “direct measure.” Within the standard science curriculum, a direct measure is often equated with an exam or laboratory exercise in which the student demonstrates memory for and skill in the use of the disciplinary information taught by an instructor. Other measures, such as the student’s self-reflection, are considered “indirect.” Skeptical of indirect measures of course behavior, researchers often demand that the indirect measure be validated with the direct measure. Within the undergraduate research experience, however, there are learning and experience goals that may be most directly measured by student report. Estimates of personal development, including tolerance for obstacles, readiness for more research, and self-confidence, are best made by the person who has direct access to these estimates. Estimates of the student’s likelihood to continue with science education and a science career can only be forecasts, and the person best positioned to make the forecast is the student. Some of the most desirable outcomes of an undergraduate research experience, including maturity, positive attitude toward science, and an intention to continue in the field, are most directly measured by student report. In short, the requirement for a direct measure needs to be clarified by posing the question, “The direct measure of what?”

If we wish to know if an undergraduate science major plans to go on to graduate school, we need to ask her. Her future in graduate school will not be predicted by content or critical thinking scores if she does not intend to submit an application. I am convinced that the study of student motives and attitudes, best known, however imperfectly, through direct report by the student, will be crucial to understanding the impact of any undergraduate pedagogy. The psychologist Kurt Lewin (1943) asserted that behavior depends on the sum of the person’s experience, which he called the psychological field, at the time of the behavior. The psychological field consists of the influence of the past and future on the person in the present time. An undergraduate in a CURE program will respond in a way affected by his or her past experience, his or her present experience of the CURE, and his or her expectations of the future, as he or she views them at that time. The most direct means to knowing these is to ask.

The thrust of this article has been commentary salted with data for the purpose of suggesting a research strategy for the study of CURE effectiveness. The magnitude of the goal set out in the PCAST report requires that we move toward a research strategy that yields results to facilitate change. As presented above, I have argued that the main features of this strategy should be to emphasize the effectiveness of teaching and learning practices in the learning environment, to distinguish between attrition and attraction of potential science graduates, to avoid describing student success in dispositional terms, and to understand the advantages of a multi-operational approach to research in a consortium. Using the scope and diversity of the science education consortium, we should be able to discover the commonalities and contrasts in practice that will expand successful undergraduate science education.