5

Incidence and Consequences

Science has the potential to address some of the most important problems in society and for that to happen, scientists have to be trusted by society and they have to be able to trust each others’ work. If we are seen as just another special interest group that are doing whatever it takes to advance our careers and that the work is not necessarily reliable, it’s tremendously damaging for all of society because we need to be able to rely on science.

—Ferric Fang quoted by Jha (2012)

Synopsis: Research misconduct and detrimental research practices constitute serious threats to science in the United States and around the world. The incidence of research misconduct is tracked by official statistics, survey results, and analysis of retractions, and all of these indicators have shown increases over time. However, as there are no definitive data, it is difficult to say precisely what the incidence of misconduct is per grant or per paper and to determine trends. It is possible to say that while research misconduct is unusual, it is not rare. A variety of detrimental research practices appear to be tolerated, at least in the fields and disciplines that have been studied. Both research misconduct and detrimental research practices impose significant costs on the research enterprise. Particular cases of misconduct have also negatively affected society at large. The phenomenon of irreproducibility, which has attracted increasing attention during the course of this study, illustrates the negative impacts of detrimental research practices (DRPs), although this is a complex phenomenon and specifying the role of DRPs in irreproducibility will require additional research. Examining specific cases shows that tolerance for DRPs at the level of laboratories, institutions, sponsors, and journals enables misconduct and leads to delays in uncovering it. In addition, some DRPs are committed either directly or through inadequate practices by research institutions and journals, not just by individual researchers and research groups.

THE INCIDENCE OF RESEARCH MISCONDUCT AND DETRIMENTAL RESEARCH PRACTICES

The Responsible Science report (NAS-NAE-IOM, 1992) found that “existing data are inadequate to draw accurate conclusions about the incidence of misconduct in science or about questionable research practices.” The report pointed out that “the number of confirmed cases of misconduct is low compared to the level of research activity in the United States,” but that there might be significant underreporting, and that “every case of misconduct in science is serious and requires attention.”

In recent years, a regular flow of high-profile cases of fabrication, falsification, and plagiarism (FFP) has been covered in the media. These have come from countries around the world, and they have been notable due to the prominence of the researchers involved, the importance of the work shown to be false or unreliable, the scale of the transgression in terms of, say, the number of papers to be retracted, or some combination of these factors. A particular trend has been the emergence of “serial misconduct”—cases of careers built on fabrication involving up to a hundred or more publications. A few examples taken from the past few years:

- In 2012, Harvard psychologist Marc Hauser, who gained prominence for his groundbreaking work on the origins of cognition and morality, was found by the Office of Research Integrity (ORI) of the Department of Health and Human Services (HHS) to have falsified and fabricated data and methods in six federally funded studies (Carpenter, 2012).

- In 2012 the University of Connecticut found that cardiovascular researcher Dipak Das fabricated or falsified data 145 times in his work on resveratrol (Science, 2012).

- As of September 2012, 28 papers authored by biochemist Hyung-In Moon of Dong-A University in South Korea had been retracted as a result of suspicions that he supplied reviewer suggestions to journals with e-mail addresses that actually went to him (Fischman, 2012).

- In 2012 the Japanese Society of Anesthesiologists released a report on the work of Toho University of Medicine faculty member Yoshitaka Fujii, concluding that he had fabricated data in 172 papers (JSA, 2012).

Over the past several decades, as federal agencies and research institutions have had to address research misconduct more frequently and institute formal policies, more information has become available about the incidence and significance of research misconduct. Information on the incidence of research misconduct, defined as FFP, is available in the reports of the National Science Foundation’s Office of Inspector General (NSF-OIG) and ORI.

In the case of NSF-OIG, misconduct findings have undergone a notable increase in recent years. In its semiannual reports to Congress, NSF reported just 1 finding of misconduct in 2003, 2 in 2004, and 6 in 2005, compared with 17 findings in 2012, 14 in 2013, and 22 in 2014. A rate of 16 findings per year represents less than two hundredths of a percent of the new awards NSF makes. A large proportion of NSF’s research misconduct findings are for plagiarism (e.g., 18 of 22 in 2014). The number of research misconduct allegations made to NSF-OIG annually has more than tripled over the past decade (Mervis, 2013).

Research misconduct findings by ORI have shown less of an upward trend in the past decade, with 12 findings in 2003, 8 in both 2004 and 2005, 14 in 2012, 12 in 2013, and 13 in 2014. The majority of HHS’s research misconduct findings are for fabrication or falsification. As with NSF-OIG, the number of allegations made to ORI has increased significantly, going from 240 in 2011 to 423 in 2012 (ORI, 2013). Just as statistics on arrests or convictions will tend to undercount the number of crimes actually committed, the statistics on research misconduct findings will tend to undercount the actual incidence (Steneck, 2006).

In addition to these official statistics, a number of surveys of researchers regarding their practices have been undertaken in recent years. For example, a survey of research psychologists found that between a quarter and a half of the respondents admitted to having engaged in such practices as “failing to report all of a study’s conditions,” “selectively reporting studies that ‘worked,’” and “reporting an unexpected finding as having been predicted from the start” (John et al., 2012). In an earlier survey of scientists funded by the National Institutes of Health (NIH), less than 1 percent of respondents self-reported engaging in falsification of data and less than 2 percent admitted to plagiarism, but more than 10 percent admitted to engaging in practices such as “inappropriately assigning authorship credit” or “withholding details of methodology or results in papers or proposals” (Martinson et al., 2005).

Similarly, a meta-analysis of researcher surveys indicates that the incidence of FFP is somewhat higher than the official statistics indicate, with about 2 percent of researchers admitting to fabricating or falsifying data at least once, and more than 14 percent aware of colleagues having done so (Fanelli, 2009). Survey reports on misconduct by colleagues might be inflated by multiple researchers reporting the same incidents; one of the surveys attempted to avoid this by not including more than one researcher from a given department and found that 7.4 percent of respondents had observed misconduct committed by colleagues (Titus et al., 2008). At the same time, the narrower group of respondents would not be expected to know about all cases of misconduct among colleagues, making this a conservative estimate. The same meta-analysis showed that actions discussed in Chapter 4 as examples of detrimental research practices (DRPs) are relatively common. A survey on violations of research regulations—including human subjects protection violations as well as research misconduct—was sent to all comprehensive doctoral institutions and medical schools in the United States and yielded responses from 66 percent (DuBois et al., 2013a). The results reinforce the federal agency data cited above showing a significant rise in allegations—96 percent of the responding institutions had undertaken an investigation in the preceding year, with the modal number being 3 to 5 per year.

Determining the incidence of plagiarism and related trends faces some particular barriers. The difficulty in defining plagiarism continues to be an obstacle. While plagiarism detection software has recently grown in popularity, text matches are not necessarily plagiarized (Wager, 2014). Text matches may occur for a variety of reasons, including copublication, legal republication, common phrases, and multiple versions of a publication (Wager, 2014). However, there are indications that the overall level of plagiarism in legitimate biomedical journals peaked at some point in the last decade and has been declining since as the use of plagiarism detection software by journals has become widespread (Reich, 2010a). Despite the likely decline in incidence, differences persist between journals in how they respond to plagiarism allegations (Long et al., 2009). The appearance of a large number of journals that appear to have little concern about publishing copied or duplicated work, many of which operate under an author-pays, open-access business model, has created a new channel for plagiarized papers to be published (Grens, 2013a).

Other recent research has examined retractions of scientific articles in journals (Fang et al., 2012; Grieneisen and Zhang, 2012; Steen et al., 2013). Articles may be retracted for a number of reasons, including unintentional errors on the part of authors or publishers as well as research misconduct. One recent analysis that focused on articles contained in the PubMed database found that more than two-thirds of retractions were due to misconduct defined as FFP (Fang et al., 2012). Another analysis that examined retractions of articles in a variety of databases that collectively covered all disciplines between 1980 and 2011 found that 17 percent of the 3,631 retractions in which a cause was identified were due to data fabrication or falsification, and 22 percent were due to plagiarism (Grieneisen and Zhang, 2012). This research also found that there are more retractions in certain disciplines than would be expected based on their representation in the overall research literature (e.g., biomedicine, chemistry, and life sciences) and that other disciplines are underrepresented in terms of retractions (e.g., engineering, physics, and social sciences) (Grieneisen and Zhang, 2012).

These analyses have also found a sharp increase in the number of retractions over time, particularly over the past decade or so. Although the increase in the number of articles published annually is a contributing factor, the rate of retraction is also increasing. For example, an analysis of papers in the PubMed database found that the number of retractions has increased tenfold in recent years, while the total number of papers has only increased by 44 percent (Van Noorden, 2011). As with other statistics cited here, there are reasons to be cautious about using the number and rate of retractions as proxies for the incidence of misconduct or error. One analysis suggests that the barriers both to publishing flawed work and to retracting articles have been lowered over time (Steen et al., 2013). Retraction rates, particularly at the country and disciplinary level, can be skewed by the serial misconduct cases mentioned above, where a researcher has fabricated or falsified data underlying tens of articles (Grieneisen and Zhang, 2012). On the one hand, retracted papers still represent only a small proportion of the overall literature, and formal retractions have only gradually become a standard practice in recent decades. On the other hand, some evidence suggests that many fraudulent papers are never retracted (Couzin and Unger, 2006) and all of the admittedly imperfect proxy measures for the incidence of research misconduct have displayed significant increases in recent years.
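The PubMed comparison above can be turned into a rough growth factor for the retraction rate itself. The following is a minimal sketch that uses only the two figures quoted from Van Noorden (2011); the variable names are illustrative and the result is a back-of-the-envelope ratio, not a figure reported by that analysis.

```python
# Growth factors taken from the PubMed comparison cited above (Van Noorden, 2011).
retraction_growth = 10.0  # the number of retractions increased tenfold
paper_growth = 1.44       # the total number of papers increased by 44 percent

# The retraction rate is retractions divided by papers,
# so its growth factor is the ratio of the two growth factors.
rate_growth = retraction_growth / paper_growth

print(f"Retraction rate grew roughly {rate_growth:.1f}-fold")
```

Under these figures, most of the tenfold rise in retractions reflects a higher rate per paper rather than growth in the literature.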

COSTS AND CONSEQUENCES

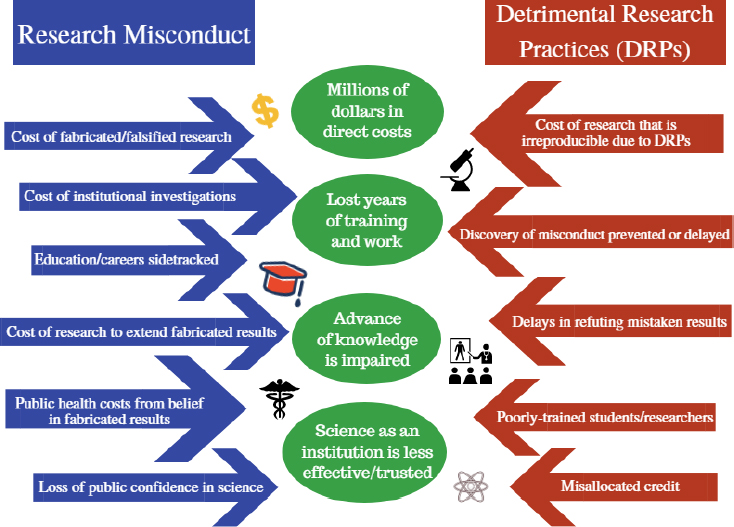

Research misconduct and DRPs constitute failures to uphold the values of science. Even if they had no wider consequences, it would be vital to prevent and address them. However, a variety of costs and consequences can be conceptualized even if they are difficult to quantify or measure precisely. The costs of research misconduct and DRPs can be broken down into (1) damage to the individuals, (2) reputational costs to the employer of the transgressor and the journal that published the work, (3) direct financial costs, (4) broader social costs, and (5) opportunity costs associated with categories 1 through 4. Figure 5-1 illustrates these costs.

Examples of the many individual costs of research misconduct and DRPs are wasted efforts of researchers who trusted a fabricated paper and did work to build on it, damage done to innocent collaborators (including graduate students and postdocs experiencing career turmoil after misconduct committed by a supervisor or colleague is uncovered), time and energy associated with misconduct inquiries and investigations on the part of committee members and staff, wasted time by editors and reviewers, the damaged careers of the perpetrators themselves, and any retaliation or other negative repercussions suffered by good-faith whistleblowers or informants. One measure of the wasted efforts of later researchers is the extent to which papers based on fabricated data continue to be cited, which happens surprisingly often even after they are retracted (Neale et al., 2007). To give one example, in the 1990s the Geological Survey of India and Panjab University found that paleontologist Viswa Jit Gupta had fabricated and falsified data on fossil discoveries over more than 20 years (Jayaraman, 1994). Articles citing Gupta’s work are still cited, illustrating that the task of correcting the scientific record can become a long-term undertaking.

Reputational costs include the losses in prestige experienced by research institutions employing the author of a fabricated or falsified paper and by the journals publishing it.

Direct financial costs are borne by a number of stakeholders. Costs can include the funds provided by federal or private sponsors spent on fabricated or falsified research, the expense of investigating an allegation borne by the institution, and any additional funds that the institution pays to settle civil litigation connected with the misconduct. There have been efforts to directly measure the costs of research misconduct in particular cases or groups of cases. For example, a 2014 analysis found that direct NIH funding for 149 articles retracted due to research misconduct between 1990 and 2012 totaled $58 million, far less than 1 percent of NIH’s budget over that period (Stern et al., 2014). This method of analysis has limitations, since the research underlying articles is often supported by multiple sources and funding may not be cited. Funding for an additional 142 articles retracted due to misconduct over that period could not be tracked completely (Stern et al., 2014). Extrapolating the average grant amount associated with the 43 retracted articles supported only by cited NIH grants ($425,073) to the entire set of 291 retracted articles would yield a total of $123.7 million, “which might be considered an estimate of the total NIH funds directly spent on known biomedical research retracted due to misconduct over the past 20 years” (Stern et al., 2014). Adding up all the grants that contributed in any way to papers retracted due to misconduct over those 20 years, which the authors point out would overstate the costs of misconduct, totals $1.67 billion in actual funds and $2.32 billion in 2012 dollars (Stern et al., 2014). This analysis only looks at cases where an investigation has been completed and findings of misconduct have been made.
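The extrapolation described above is simple arithmetic and can be checked directly. The sketch below uses only the figures quoted from Stern et al. (2014); the variable names are illustrative, and the calculation inherits the limitation already noted, namely that it assumes the average for the 43 articles supported only by cited NIH grants applies to every retracted article.

```python
# Figures quoted above from Stern et al. (2014).
avg_grant_per_article = 425_073   # average NIH grant amount for the 43 NIH-only articles
articles_fully_tracked = 149      # retracted articles with direct NIH funding identified
articles_partly_tracked = 142     # retracted articles whose funding could not be tracked completely

total_articles = articles_fully_tracked + articles_partly_tracked

# Apply the per-article average to the entire set of retracted articles.
extrapolated_total = avg_grant_per_article * total_articles

print(f"Articles retracted due to misconduct: {total_articles}")
print(f"Extrapolated NIH funding: ${extrapolated_total / 1e6:.1f} million")
```

The result matches the $123.7 million quoted in the text, which sits between the $58 million tracked directly and the $1.67 billion upper bound obtained by counting every contributing grant.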

In another example of an effort to estimate the direct costs of funding for research that is fabricated or falsified, a report by the U.S. Department of Interior’s Office of Inspector General on a scientific integrity incident at a U.S. Geological Survey laboratory states that research and assessment projects totaling $108 million in funding between 2008 and 2014 were affected by erroneous data produced by one individual researcher (DOI-OIG, 2016). Further analysis would be needed to determine the specifics of how these projects were affected and the actual costs of wasted effort and any work that had to be redone.

The reproducibility problem will be discussed in more detail below, but if the conclusions being drawn by some researchers in this area are anywhere close to correct, billions of dollars of public and private support for research might not be producing reliable knowledge (Ioannidis, 2005). The cost of research misconduct investigations should also be considered. One analysis has estimated the typical cost of an investigation for an institution to be $520,000 (Michalek et al., 2010). If this amount is extrapolated as the average for the 217 investigations reported to ORI during a year prior to the analysis, it would imply that an annual total of $110 million is spent by institutions on misconduct investigations involving HHS-funded research.
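The investigation-cost extrapolation above can be reproduced in a few lines. This is a minimal sketch using only the two figures quoted from Michalek et al. (2010) and ORI; it assumes, as the text does, that the single estimated cost is typical of every investigation, and the variable names are illustrative.

```python
# Figures quoted above.
cost_per_investigation = 520_000  # estimated typical cost to an institution (Michalek et al., 2010)
investigations_per_year = 217     # investigations reported to ORI in one year

annual_total = cost_per_investigation * investigations_per_year

print(f"Implied annual cost: ${annual_total / 1e6:.1f} million")
```

The product is about $113 million, which the text rounds to $110 million.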

One area where the broader social costs of research misconduct are apparent from specific historical cases is in biomedical research. For example, research characterized by misconduct and DRPs funded by the tobacco companies very likely delayed the issuance of a warning on smoking and health by the U.S. Surgeon General. One expert estimates that if the warning had come out in 1959 rather than 1964, gains from an earlier decline in smoking in the form of increased life expectancy would have totaled $27 billion (Gardner, 2006). In another case, the fraudulent work of anesthesiologist Scott Reuben of Baystate Medical Center in Massachusetts played a large role in shaping treatments in that area over the years (White et al., 2009). This raises the possibility that deaths and other adverse events occurred due to administering treatments developed on the basis of fraudulent work, and it has necessitated researchers going back over the literature to see what findings can be salvaged and what experiments need to be redone (White et al., 2009). The case of Don Poldermans of the Erasmus Medical Center in the Netherlands has caused similar damage, although Poldermans denies having fabricated his work and the Erasmus Medical Center report has not been made public (Chopra and Eagle, 2012). The work of Poldermans and his collaborators in the area of perioperative use of beta blockers and statins informed clinical practice all over the world. The appropriate patient treatments are now highly uncertain (Bouri et al., 2013).

The case of Andrew Wakefield’s finding of a possible causal link between the measles, mumps, and rubella vaccine and autism might also be considered in this context (see Appendix D for a more detailed write-up of this case). Wakefield was later removed from the United Kingdom’s medical register due to professional misconduct committed while performing this research, and is alleged to have falsified data (Godlee et al., 2011; Triggle, 2010; UK GMC, 2010). The costs to society include an ongoing public controversy in multiple countries, public health costs, and even deaths due to a rise in cases of measles. It is not possible to determine the effect of Wakefield’s work on decreased vaccination rates and resulting outbreaks with any precision. Cases such as these may also sow broader mistrust of researchers in society.

To the extent that fabricated papers impede drug and treatment development by leading researchers down the wrong track, they also impose financial costs on companies and public health costs on society.

The Reproducibility Problem, Research Misconduct, and Detrimental Research Practices

Meta-analyses of research on particular research questions and even entire fields have produced new insights on the reliability of research. Apparently high rates of irreproducibility of research results in fields such as preclinical biomedical research and social psychology have been discovered and discussed over the past decade (Ioannidis, 2005; OSC, 2015). Issues related to reproducibility began to receive more general attention starting with a cover story that appeared in the Economist in October 2013 (Economist, 2013). The President’s Council of Advisors on Science and Technology devoted a significant amount of its January 2014 meeting to a discussion of the issue (McNaull, 2014). The journal Nature has set up an archive of articles on challenges in irreproducible research (Nature, 2015b). The reproducibility problem has gained wide attention and recognition as a major issue in science.

Reproducibility can be conceptualized or defined in several ways, depending on the discipline or context. It is possible to replicate some work by “using precisely the same methods and materials” to independently collect data, which would require the original work to be presented in “sufficient detail to allow replication or reanalysis” (Freedman et al., 2015). For example, it should be straightforward to replicate a chemical reaction if the amounts of the chemicals to be combined and other conditions such as pressure and temperature are specified precisely. Observations of many natural phenomena cannot be replicated exactly, but precise descriptions of a given phenomenon and the analytical methods used will allow others to validate the conclusions drawn by observing and analyzing similar phenomena. Likewise, clinical trials cannot be replicated exactly, even if the same dosage of a given pharmaceutical is tested on the same number of research subjects with similar characteristics, since the population being tested is different. However, if a drug has an actual, measurable therapeutic effect across a given population of subjects, the effect should be observed when the drug is administered to a similar population. In fields where replication through the independent collection and analysis of data is difficult or impossible for cost or other reasons, a different standard for reproducibility might be used, in which data and the computer code used to analyze them are made available to others for validation (Peng, 2011).

The failure to reproduce research results may be due to a number of factors. This is a nonexhaustive list:

- One or more independent variables affecting the results were not characterized or measured in the original work;

- One or more errors were made in setting up the experiment, data collection or recording, or data analysis in the original work;

- Reporting of the experimental procedures, data obtained, analytical methods, or other aspects of the original work were incomplete;

- The data in the original work were correctly obtained and recorded, but the reported results constituted a false positive or false negative;

- Data in the original work were fabricated or falsified;

- One or more errors were made in setting up the experiment, data collection or recording, or data analysis in an effort to reproduce the work.

In the normal progress of research, a certain level of irreproducibility is to be expected. If irreproducibility is due to unknown variables, knowledge advances when these are characterized and understood through further work. A certain level of error and false positives is compatible with a healthy field. Past a certain point, efforts to eliminate all of the possible factors that cause irreproducibility would be prohibitively expensive (Freedman et al., 2015).

However, concerns have been raised in recent years as irreproducibility rates of 50 percent or more have been estimated in certain fields. This is a far higher level than what might be considered healthy and implies that a significant fraction of effort in some fields is not advancing knowledge. In clinical research, for example, the prevalence of studies with relatively few participants, the reporting of effects that are small by statistical measures, a high number of tested relationships, greater flexibility in study design, the involvement of researchers with personal financial interests, and the popularity of a topic are correlated with the incidence of false positive results. According to a widely discussed analysis, systematic biases led to false positive findings in half or more published studies (Ioannidis, 2005). In addition, false claims may continue to be cited at a high rate, even after subsequent published studies have refuted them (Rekdal, 2014; Tatsioni et al., 2007). The low quality of preclinical research has been identified as a significant factor in the high failure rate of clinical trials in oncology. A recent effort to replicate 53 landmark preclinical studies in hematology and oncology was successful for only 6 articles (Begley and Ellis, 2012).

Lack of reproducibility has also become a significant issue in psychology. A large-scale effort to replicate 100 results published in psychology journals found that the mean effect size of the replications was about half of what was reported in the original articles, and that while 97 of the original articles reported significant results, only 36 of the replications did (OSC, 2015).

Contemporary concerns about reproducibility arise in several forms (Academy of Medical Sciences et al., 2015). One relates to the well-known bias toward reporting and publishing positive results on the part of researchers, the “file drawer problem” (Rosenthal, 1979). The measured effect in a study is the combination of any real effect plus random variability. Studies where random variability augments the real effect have high formal statistical significance. They are more likely to be submitted to and accepted by journals than work where random variability diminishes the real effect and statistical significance is not achieved. This bias can create a situation in which false-positive results are overrepresented in the published literature, particularly for research with small cohorts, even when the data are correct and the effects are real. When random variability augments an effect in a primary study, it is not likely to do so to the same extent in a replication study. This is an example of the well-known statistical phenomenon of regression to the mean. In some fields, it may be difficult or impossible to quantitatively predict the size of the effect or determine the cohort size likely to produce results that are statistically significant.
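The selection effect described above can be illustrated with a small simulation. The sketch below is a deliberately simplified model and not an analysis from any of the cited studies: every study measures the same real effect with known unit variance, a study is "published" only if its z statistic exceeds 1.96, and each published study is then replicated once. The function name, the effect size of 0.2, the cohort size of 20, and the seed are all hypothetical choices made for illustration.

```python
import random
import statistics

def simulate(true_effect=0.2, n=20, studies=20_000, seed=0):
    """Simulate selective publication followed by one replication per published study."""
    rng = random.Random(seed)
    se = 1 / n ** 0.5  # standard error of a sample mean when the standard deviation is 1
    published, replications = [], []
    for _ in range(studies):
        # Measured effect = real effect + random variability.
        observed = rng.gauss(true_effect, se)
        # Only results that reach statistical significance are "published".
        if observed / se > 1.96:
            published.append(observed)
            # The replication draws fresh random variability around the same real effect.
            replications.append(rng.gauss(true_effect, se))
    share_published = len(published) / studies
    return statistics.mean(published), statistics.mean(replications), share_published

published_mean, replication_mean, share_published = simulate()
print(f"Share of studies published: {share_published:.2f}")
print(f"Mean published effect:      {published_mean:.2f}")
print(f"Mean replication effect:    {replication_mean:.2f}")
```

In this toy model the published effects are, on average, well above the real effect, while the replications fall back toward it. That is regression to the mean, and it arises here without any error or misconduct in the individual studies.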

Experts have argued that the incentive structures in many modern research environments exacerbate this problem (Nosek et al., 2012). High-pressure research environments, poor publication practices, and funding patterns that create perverse incentives are presumed to be contributing factors (Alberts et al., 2014). These issues are explored in more detail in Chapter 6. An extreme form of positive results bias is seen in the practice of “p-hacking,” in which a dataset is searched or analyzed for a statistically significant relationship, to which a theory of causality is then attached (Academy of Medical Sciences et al., 2015). In general, caution is required when hypotheses are formulated after data have been collected; arcane hypotheses with marginal significance should be regarded with great suspicion. Statistical methods for testing multiple previously defined hypotheses at the same time with a dataset are available (Benjamini and Hochberg, 1995).
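One of the multiple-testing methods referred to above is the step-up procedure of Benjamini and Hochberg (1995), which controls the false discovery rate. The following is a minimal sketch of that procedure in plain Python; the function name and the list of p-values are hypothetical and chosen only to show how the correction differs from testing each hypothesis at the 0.05 level on its own.

```python
def benjamini_hochberg(p_values, q=0.05):
    """Return a list of booleans marking which hypotheses are rejected at FDR level q."""
    m = len(p_values)
    # Indices of the p-values in ascending order.
    order = sorted(range(m), key=lambda i: p_values[i])
    # Find the largest rank k whose p-value satisfies p_(k) <= (k / m) * q.
    max_rank = 0
    for rank, idx in enumerate(order, start=1):
        if p_values[idx] <= rank / m * q:
            max_rank = rank
    # Reject every hypothesis whose rank is at or below that k.
    rejected = [False] * m
    for rank, idx in enumerate(order, start=1):
        if rank <= max_rank:
            rejected[idx] = True
    return rejected

# Hypothetical p-values from ten relationships tested on the same dataset.
p_values = [0.001, 0.008, 0.039, 0.041, 0.042, 0.060, 0.074, 0.205, 0.212, 0.216]

naive = [p < 0.05 for p in p_values]
corrected = benjamini_hochberg(p_values)

print(f"Significant without correction: {sum(naive)}")
print(f"Significant after correction:   {sum(corrected)}")
```

With these illustrative values, five relationships pass an uncorrected 0.05 threshold but only two survive the correction, which is the kind of discipline that searching a dataset for any significant relationship lacks.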

Another set of reproducibility concerns arises from flaws in study design and planning (Academy of Medical Sciences et al., 2015). An experimental design may be flawed to the point where it cannot be expected to produce reliable results, or the sample size may be too small to reliably confirm a statistically significant effect, leaving the study “underpowered” (Academy of Medical Sciences et al., 2015).
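What "underpowered" means can be made concrete with an approximate power calculation. The sketch below uses a normal approximation for a two-sided comparison of two equal-sized groups; the function name, the standardized effect size of 0.5, and the group sizes are hypothetical, and an exact calculation based on the t distribution would give slightly different numbers.

```python
from statistics import NormalDist

def approx_power(effect_size, n_per_group, alpha=0.05):
    """Approximate power of a two-sided, two-group comparison (normal approximation)."""
    z = NormalDist()
    z_crit = z.inv_cdf(1 - alpha / 2)
    # Expected z statistic when the standardized effect is real.
    shift = effect_size * (n_per_group / 2) ** 0.5
    # Probability that the test statistic lands in either rejection region.
    return z.cdf(shift - z_crit) + z.cdf(-shift - z_crit)

for n in (10, 20, 64, 100):
    print(f"n per group = {n:3d}: power is about {approx_power(0.5, n):.2f}")
```

Under these assumptions a study with 20 subjects per group would detect a real, medium-sized effect only about a third of the time, so a significant result from such a study is a weak basis for expecting a successful replication.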

Other sources of error also figure into the discussion of reproducibility. The growing dependence of many fields of research on information technology and computational science, particularly in areas such as data analysis and simulation, is one potential source of error (Donoho et al., 2008). If data and the code used to analyze the data are not made available, the results cannot be validated through reanalysis. Another source of error that has become problematic in biomedical research is the lack of validation of certain reagents, including antibodies, cell lines, and animal models (GBSI, 2015). The widespread misidentification of cell lines is a specific example (Nature, 2015b).

To what extent are research misconduct and DRPs implicated in the reproducibility problem? There is still much to be learned about reproducibility, both in general and in specific fields. While results based on data fabrication and falsification would certainly be irreproducible, they would constitute only a small part of the reproducibility problem being faced in fields such as biomedical research and psychology. Certain DRPs, such as misleading statistical analysis that falls short of falsification and the practice of p-hacking, are a direct cause of irreproducibility. Other DRPs, such as failing to share data and code, make replication and validation of results difficult or impossible and are therefore part of the reproducibility problem. Inattentive supervision of postdocs and other junior researchers and failure to catch obvious errors is another DRP that underlies some lack of reproducibility. In addition, tolerance for DRPs that cause or exacerbate the reproducibility problem on the part of journals, research institutions, and sponsors can make it more difficult to uncover research misconduct, as discussed below.

Regarding the costs of irreproducibility, one recent analysis puts forward an estimate, intended to be used as a starting point for debate, of $28 billion per year spent in the United States on “research that cannot be replicated” in preclinical biomedical research alone (Freedman et al., 2015). This figure is not based on a cost analysis but was created by applying a 50 percent rate of irreproducibility, around the lower bound of estimates generated by recent studies, to the total amount of preclinical biomedical research performed in the United States. The uncertainty surrounding this estimate points to the need to better quantify the costs and causes of the reproducibility problem in specific fields and across the research enterprise.
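Because the $28 billion figure is a rate multiplied by a spending total, its sensitivity to the assumed rate is easy to show. In the sketch below, the total is back-calculated from the two numbers quoted above and is not a figure taken from Freedman et al. (2015); the alternative rates of 25 and 75 percent are hypothetical and included only to show how the estimate scales.

```python
# Figures quoted above from Freedman et al. (2015).
estimate_billion = 28.0  # estimated annual spending on research that cannot be replicated
assumed_rate = 0.50      # irreproducibility rate applied in that estimate

# Total preclinical spending implied by those two numbers (derived, not quoted).
implied_total_billion = estimate_billion / assumed_rate

# Hypothetical alternative rates, to show how strongly the estimate depends on the rate.
for rate in (0.25, 0.50, 0.75):
    cost = implied_total_billion * rate
    print(f"Rate {rate:.0%}: about ${cost:.0f} billion per year")
```

The estimate moves in direct proportion to the assumed rate, which is why better field-specific measurements of irreproducibility matter for any cost figure.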

In addition to the direct financial costs, results that are irreproducible due to DRPs have some indirect costs that are similar in type to those that are incurred due to research misconduct, such as delays in rejecting and confirming key results, the time and effort of the researchers involved, and the time and effort of those seeking to build on false results. Chapter 7 will explore how DRPs can be more effectively uncovered and addressed.

Connections Between Detrimental Research Practices and Research Misconduct

Developments in social psychology demonstrate a link between a field’s tolerance for DRPs and delays in discovering significant cases of fabrication and falsification. Social psychology has received scrutiny recently due to a string of high-profile misconduct cases and doubts about the reliability of key results. In 2011, concerns were raised about the work of noted Dutch researcher Diederik Stapel, and a subsequent investigation by the three universities where he studied and worked found that he had fabricated data in 55 publications over many years (Levelt et al., 2012).

The Stapel investigation report enumerates a long list of DRPs that were used by Stapel and his coauthors and that appear to have been widely tolerated in the social psychology research culture. These include a variety of practices reflecting verification bias, such as repeating an experiment that has failed to produce the expected statistically significant result with minor changes in conditions (changes that would not be expected to affect the result) until statistically significant results are attained, then reporting only those results. Often, incorrect or incomplete information about research procedures was provided in the publication. Statistical errors that reflected a lack of understanding of elementary statistics were common.

Perhaps the most alarming finding in the Stapel investigation report is the failure of coauthors, editors, and reviewers of leading social psychology journals and others in the field to note infeasible experiments or impossible results. Indeed, reviewers often reportedly encouraged DRPs in the service of “telling an interesting, elegant, concise and compelling story” (Levelt et al., 2012). The report concludes that “there are certain aspects of the discipline itself that should be deemed undesirable or even incorrect from the perspective of academic standards and scientific integrity.”

Daniel Kahneman, who won the 2002 Nobel Memorial Prize in Economic Sciences for his work on the psychology of decision making, challenged social psychologists in a 2012 e-mail message about research on priming, the phenomenon where exposure to a stimulus increases sensitivity to a later stimulus:

For all these reasons, right or wrong, your field is now the poster child for doubts about the integrity of psychological research. Your problem is not with the few people who have actively challenged the validity of some priming results. It is with the much larger population of colleagues who in the past accepted your surprising results as facts when they were published. These people have now attached a question mark to the field, and it is your responsibility to remove it. (Kahneman, 2012)

Estimating a Range of Financial Costs of Research Misconduct and DRPs

From this discussion and the existing evidence, it is possible to develop a reasonable range of the estimated costs borne by the research enterprise and the broader society due to research misconduct and DRPs. For example, the analysis discussed above estimated that confirmed cases of research misconduct directly affected about one-tenth of one percent of NIH extramural funding over the 1992-2012 period, implying an annual total of about $30 million for one agency if this relationship were to continue going forward. To this, one could add the cost to other federal agencies and the private sector of supporting work that is confirmed to be falsified or fabricated, the cost to all funders of supporting falsified or fabricated work that is never investigated, and the indirect costs of supporting research to extend this fraudulent work. The cost of institutional investigations is estimated at $110 million per year (Michalek et al., 2010). Taking these various costs into account, a total of several hundred million dollars a year would be a reasonable, conservative estimate of the direct financial costs of research misconduct.
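The components quantified in this paragraph can be tallied as a rough check. This is a sketch under stated assumptions, not a cost model: the variable names are hypothetical, only the two dollar figures given in the text are summed, and the remaining components are listed without values because the text does not quantify them.

```python
# Figures stated in the text, in millions of dollars per year.
quantified_costs_million = {
    "NIH extramural funding affected by confirmed misconduct": 30,
    "institutional investigations (Michalek et al., 2010)": 110,
}

# Components the text names but does not quantify; no values are assumed here.
unquantified_costs = [
    "confirmed misconduct funded by other federal agencies and the private sector",
    "falsified or fabricated work that is never investigated",
    "research undertaken to extend fraudulent work",
]

quantified_subtotal_million = sum(quantified_costs_million.values())

# One-tenth of one percent is 1 part in 1,000, so $30 million per year implies
# an NIH extramural base of roughly $30 billion (derived here, not stated in the text).
SHARE_DENOMINATOR = 1000
implied_nih_base_million = 30 * SHARE_DENOMINATOR

print(f"quantified subtotal: ${quantified_subtotal_million} million per year")
print(f"implied NIH extramural base: ${implied_nih_base_million} million per year")
print(f"components left unquantified: {len(unquantified_costs)}")
```

The quantified components alone come to $140 million per year. The estimate of several hundred million dollars therefore rests on the judgment that the unquantified components add substantially to that subtotal.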

Indirect costs such as those arising from negative public health impacts that fabricated and falsified research contribute to, discussed above, should also be included. The historical case of the tobacco industry and the more recent case of vaccines illustrate that these costs may run into the millions or even billions of dollars over a period of years in particular cases when the public is misinformed on important health issues. The costs of years of incorrect treatment given to thousands or even millions of patients and the costs of accumulating new knowledge to develop correct treatments, as illustrated by the Reuben and Poldermans cases, should also be considered.

DRPs also impose costs on the research enterprise. The financial costs of DRPs in the form of funding for research that does not produce reliable knowledge may be even larger than the analogous costs of research misconduct. There is much still to be learned about irreproducibility in research, including the extent to which DRPs are implicated and how significant a problem it is in fields other than those where it is being actively examined, such as biomedical research and social psychology.

Another consideration is the international nature of costs and consequences. This discussion focuses on the costs and consequences for the United States, but the Wakefield case shows that misconduct or DRPs committed elsewhere in the world can impose significant costs on U.S. patients and communities. The reverse is true as well.

Clearly, the costs of research misconduct and DRPs are currently difficult to estimate. From the above discussion, and taking into account estimates of several categories of costs, several hundred million dollars in annual costs within the United States is a reasonable lower bound, and the total may be as high as several billion dollars.